Network dynamics underlying OFF responses in the auditory cortex

Abstract

Across sensory systems, complex spatio-temporal patterns of neural activity arise following the onset (ON) and offset (OFF) of stimuli. While ON responses have been widely studied, the mechanisms generating OFF responses in cortical areas have so far not been fully elucidated. We examine here the hypothesis that OFF responses are single-cell signatures of recurrent interactions at the network level. To test this hypothesis, we performed population analyses of two-photon calcium recordings in the auditory cortex of awake mice listening to auditory stimuli, and compared them to linear single-cell and network models. While the single-cell model explained some prominent features of the data, it could not capture the structure across stimuli and trials. In contrast, the network model accounted for the low-dimensional organization of population responses and their global structure across stimuli, where distinct stimuli activated mostly orthogonal dimensions in the neural state-space.

Introduction

Neural responses within the sensory cortices are inherently transient. In the auditory cortex (AC) even the simplest stimulus, for instance a pure tone, evokes neural responses that strongly vary in time following the onset and offset of the stimulus. A number of past studies have reported a prevalence of ON- compared to OFF-responsive neurons in different auditory areas (Phillips et al., 2002; Luo et al., 2008; Fu et al., 2010; Pollak and Bodenhamer, 1981). As a result, the transient onset component has long been considered the dominant feature of auditory responses and has been extensively studied across the auditory pathway (Liu et al., 2019b; Kuwada and Batra, 1999; Grothe et al., 1992; Guo and Burkard, 2002; Yu et al., 2004; Heil, 1997a; Heil, 1997b), with respect to its neurophysiological basis and perceptual meaning (Phillips et al., 2002). In parallel, because OFF-responsive neurons are less evident in anaesthetized animals, cortical OFF responses have received comparatively less attention. Yet, OFF responses have been observed in awake animals throughout the auditory pathway, and in the mouse AC they arise in 30–70% of the responsive neurons (Scholl et al., 2010; Keller et al., 2018; Joachimsthaler et al., 2014; Liu et al., 2019a; Sollini et al., 2018).

While the generation of ON responses has been attributed to neural mechanisms based on short-term adaptation, most likely inherited from the auditory nerve fibers (Phillips et al., 2002; Smith and Brachman, 1980; Smith and Brachman, 1982), the mechanisms that generate OFF responses are more diverse and seem to be area-specific (Xu et al., 2014; Kopp-Scheinpflug et al., 2018). In subcortical regions, neurons in the dorsal cochlear nucleus and in the superior paraolivary nucleus of the brainstem nuclei may generate OFF responses by post-inhibitory rebound, a synaptic mechanism in which a neuron emits one or more spikes following the cessation of a prolonged hyperpolarizing current (Suga, 1964; Hancock and Voigt, 1999; Kopp-Scheinpflug et al., 2011). In the midbrain inferior colliculus and in the medial geniculate body of the thalamus, OFF responses appear to be mediated by excitatory inputs from upstream areas and potentially boosted by a post-inhibitory facilitation mechanism (Kasai et al., 2012; Vater et al., 1992; Yu et al., 2004; He, 2003). Unlike in subcortical areas, OFF responses in AC do not appear to be driven by hyperpolarizing inputs during the presentation of the stimulus, since synaptic inhibition has been found to be only transient with respect to the stimulus duration (Qin et al., 2007; Scholl et al., 2010). The precise cellular or network mechanisms underlying transient OFF responses in cortical areas therefore remain to be fully elucidated.

Previous studies investigating the transient responses in the auditory system mostly adopted a single-neuron perspective (Henry, 1985; Scholl et al., 2010; Qin et al., 2007; He, 2002; Wang et al., 2005; Wang, 2007). However, in recent years, population approaches to neural data have proven valuable for understanding the role of transient dynamics in various cortical areas (Buonomano and Maass, 2009; Remington et al., 2018; Saxena and Cunningham, 2019). Work in the olfactory system has shown that ON and OFF responses encode the stimulus identity in the dynamical patterns of activity across the neural population (Mazor and Laurent, 2005; Stopfer et al., 2003; Broome et al., 2006; Friedrich and Laurent, 2001; Saha et al., 2017). In motor and premotor cortex, transient responses during movement execution form complex population trajectories (Churchland and Shenoy, 2007; Churchland et al., 2010) that have been hypothesized to be generated by a mechanism based on recurrent network dynamics (Shenoy et al., 2011; Hennequin et al., 2014; Sussillo et al., 2015; Stroud et al., 2018). In the AC, previous work has suggested a central role of the neural dynamics across large populations for the coding of different auditory features (Deneux et al., 2016; Saha et al., 2017; Lim et al., 2016), yet how these dynamics are generated remains an open question.

Leveraging the observation that the AC constitutes a network of neurons connected in a recurrent fashion (Linden and Schreiner, 2003; Oswald and Reyes, 2008; Oswald et al., 2009; Barbour and Callaway, 2008; Bizley et al., 2015), in this study we test the hypothesis that transient OFF responses are generated by a recurrent network mechanism broadly analogous to the motor cortex (Churchland et al., 2006; Hennequin et al., 2014). We first analyzed OFF responses evoked by multiple auditory stimuli in large neural populations recorded using calcium imaging in the mouse AC (Deneux et al., 2016). These analyses identified three prominent features of the auditory cortical data: (i) OFF responses correspond to transiently amplified trajectories of population activity; (ii) for each stimulus, the corresponding trajectory explores a low-dimensional subspace; and (iii) responses to different stimuli lie mostly in orthogonal subspaces. We then determined to what extent these features can be accounted for by a linear single-cell or network model. We show that the single-cell model can reproduce the first two features of population dynamics in response to individual stimuli, but cannot capture the structure across stimuli and single trials. In contrast, the network model accounts for all three features. Identifying the mechanisms responsible for these features led to additional predictions that we verified on the data.

Results

ON/OFF responses in AC reflect transiently amplified population dynamics

We analyzed the population responses of 2343 cells from the AC of three awake mice recorded using calcium imaging techniques (data from Deneux et al., 2016). The neurons were recorded while the mice passively listened to randomized presentations of different auditory stimuli. In this study we consider a total of 16 stimuli, consisting of two groups of intensity modulated UP- or DOWN-ramping sounds. In each group, there were stimuli with different frequency content (either 8 kHz pure tones or white noise [WN] sounds), different durations (1 or 2 s) and different intensity slopes (either 50–85 dB or 60–85 dB and reversed, see Table 1 and Materials and methods, Section 'The data set').

Table 1. Stimuli set.

| Stimulus | Direction | Frequency | Duration (s) | Modulation (dB) |
|---|---|---|---|---|
| 1 | UP | 8 kHz | 1 | 50–85 |
| 2 | UP | 8 kHz | 1 | 60–85 |
| 3 | UP | 8 kHz | 2 | 50–85 |
| 4 | UP | 8 kHz | 2 | 60–85 |
| 5 | UP | WN | 1 | 50–85 |
| 6 | UP | WN | 1 | 60–85 |
| 7 | UP | WN | 2 | 50–85 |
| 8 | UP | WN | 2 | 60–85 |
| 9 | DOWN | 8 kHz | 1 | 85–50 |
| 10 | DOWN | 8 kHz | 1 | 85–60 |
| 11 | DOWN | 8 kHz | 2 | 85–50 |
| 12 | DOWN | 8 kHz | 2 | 85–60 |
| 13 | DOWN | WN | 1 | 85–50 |
| 14 | DOWN | WN | 1 | 85–60 |
| 15 | DOWN | WN | 2 | 85–50 |
| 16 | DOWN | WN | 2 | 85–60 |

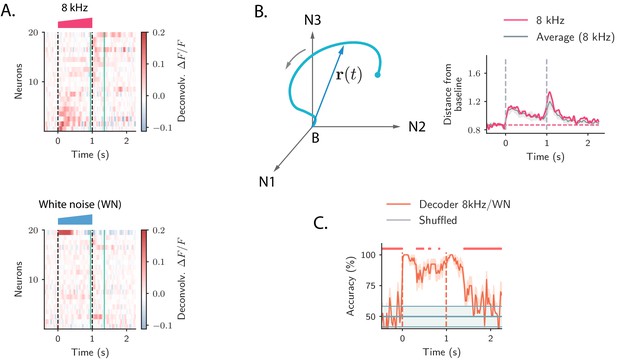

We first illustrate the responses of single cells to the presentation of different auditory stimuli, focusing on the periods following the onset and offset of the stimulus. The activity of individual neurons to different stimuli was highly heterogeneous. In response to a single stimulus, we found individual neurons that were strongly active only during the onset of the stimulus (ON responses), or only during the offset (OFF responses), while other neurons in the population responded to both stimulus onset and offset, consistent with previous analyses (Deneux et al., 2016). Importantly, across stimuli some neurons in the population exhibited ON and/or OFF responses only when specific stimuli were presented, showing stimulus-selectivity of transient responses, while others strongly responded at the onset and/or at the offset of multiple stimuli (Figure 1A).

Strong transient ON and OFF responses in auditory cortex of mice passively listening to different sounds.

(A) Top: deconvolved calcium signals averaged over 20 trials showing the activity (estimated firing rate) of 20 out of 2343 neurons in response to an 8 kHz 1 s UP-ramp with intensity range 60–85 dB. We selected neurons with high signal-to-noise ratios (ratio between the peak activity during ON/OFF responses and the standard deviation of the activity before stimulus presentation). Neurons were ordered according to the difference between peak activity during ON and OFF response epochs. Bottom: activity of the same neurons as in the top panel in response to a white noise (WN) sound with the same duration and intensity profile. In all panels dashed lines indicate onset and offset of the stimulus, and green solid lines show the temporal region where OFF responses were analyzed (from 50 ms before stimulus offset to 300 ms after stimulus offset). (B) Left: cartoon showing the OFF response to one stimulus as a neural trajectory in the state space, where each coordinate represents the firing rate of one neuron (with respect to the baseline B). The length of the dashed line represents the distance between the population activity vector $\mathbf{r}$ and its baseline firing rate $\mathbf{r}_B$, that is, $\|\mathbf{r} - \mathbf{r}_B\|$. Right: the red trace shows the distance from baseline computed for the population response to the 8 kHz sound in A. The gray trace shows the distance from baseline averaged over the 8 kHz sounds of 1 s duration (four stimuli). The gray shading represents ±1 standard deviation. The dashed horizontal line shows the average level of the distance before stimulus presentation (even if baseline-subtracted responses were used, a value of the norm different from zero is expected because of noise in the spontaneous activity before stimulus onset). (C) Accuracy of stimulus classification between 8 kHz and WN UP-ramping sounds over time based on single trials (20 trials). The decoder is computed at each time step (spaced by ∼50 ms) and accuracy is computed using leave-one-out cross-validation. Orange trace: average classification accuracy over the cross-validation folds. Orange shaded area corresponds to ±1 standard error. The same process is repeated after shuffling stimulus labels across trials at each time step (chance level). Chance level is represented by the gray trace and shading, corresponding to its average and ±1 std computed over time. The red markers on the top indicate the time points where the average classification accuracy is significantly lower than the average accuracy during the ON transient response (two-tailed t-test).

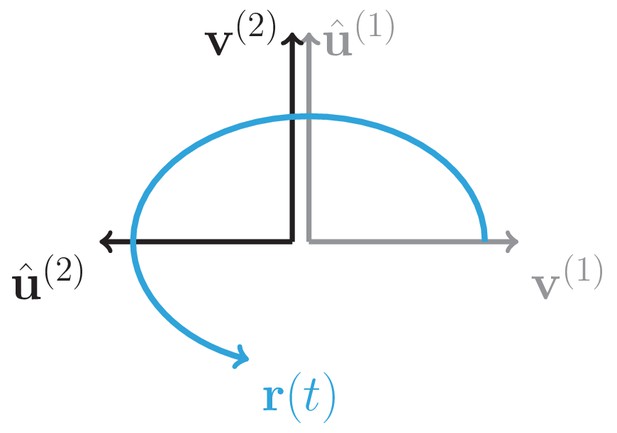

Because of the intrinsic heterogeneity of single-cell responses, we examined the structure of the transient ON and OFF responses to different stimuli using a population approach (Buonomano and Maass, 2009; Saxena and Cunningham, 2019). The temporal dynamics of the collective response of all the neurons in the population can be represented as a sequence of states in a high-dimensional state space, in which the i-th coordinate corresponds to the (baseline-subtracted) firing activity of the i-th neuron in the population. At each time point, the population response is described by a population activity vector which draws a neural trajectory in the state space (Figure 1B left panel).

To quantify the strength of the population transient ON and OFF responses, we computed the distance of the population activity vector from its baseline firing level (average firing rate before stimulus presentation), corresponding to the norm of the population activity vector (Mazor and Laurent, 2005). This revealed that the distance from baseline computed during ON and OFF responses was larger than the distance computed for the state at the end of stimulus presentation (Figure 1B right panel). We refer to this feature of the population transient dynamics as the transient amplification of ON and OFF responses.
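The distance-from-baseline measure can be illustrated with a minimal sketch. It runs on synthetic data, not the recordings analyzed here; the function name `distance_from_baseline`, the array layout (neurons × time bins), and the toy transient are assumptions made for illustration only.

```python
import numpy as np

def distance_from_baseline(rates, baseline_mask):
    """Norm of the baseline-subtracted population activity vector at each time bin.

    rates: array (n_neurons, n_timepoints) of estimated firing rates.
    baseline_mask: boolean array (n_timepoints,) marking pre-stimulus bins.
    """
    # Baseline = average rate of each neuron before stimulus presentation.
    baseline = rates[:, baseline_mask].mean(axis=1, keepdims=True)
    # Euclidean norm across neurons, one value per time bin.
    return np.linalg.norm(rates - baseline, axis=0)

# Toy population (hypothetical numbers): noisy baseline plus a brief transient.
rng = np.random.default_rng(0)
n_neurons, n_time = 50, 40
rates = 1.0 + 0.05 * rng.standard_normal((n_neurons, n_time))
rates[:, 20:25] += 2.0  # transient deviation shared by all neurons

baseline_mask = np.zeros(n_time, dtype=bool)
baseline_mask[:10] = True
dist = distance_from_baseline(rates, baseline_mask)
```

Even in the pre-stimulus bins the distance is not exactly zero, because noise in the spontaneous activity contributes to the norm, as noted in the caption of Figure 1B.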

To examine what the transient amplification of ON and OFF responses implies in terms of stimulus decoding, we trained a simple decoder to classify pairs of stimuli that differed in their frequency content, but had the same intensity modulation and duration. We found that the classification accuracy was highest during the transient phases corresponding to ON and OFF responses, while it decreased at the end of stimulus presentation (Figure 1C). This result revealed a robust encoding of the stimulus features during ON and OFF responses, as previously found in the locust olfactory system (Mazor and Laurent, 2005; Saha et al., 2017).
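A time-resolved decoding analysis of this kind can be sketched as follows. The decoder type is not specified in this section, so the sketch swaps in a nearest-centroid classifier as a stand-in; the function name `loo_accuracy`, the trial counts, and the synthetic single-trial data are all assumptions. The function would be called once per time bin.

```python
import numpy as np

def loo_accuracy(trials_a, trials_b):
    """Leave-one-out accuracy of a nearest-centroid classifier at one time bin.

    trials_a, trials_b: arrays (n_trials, n_neurons), single-trial population
    vectors for two stimuli. The nearest-centroid rule is an illustrative
    stand-in, not necessarily the decoder used in the study.
    """
    X = np.vstack([trials_a, trials_b])
    y = np.concatenate([np.zeros(len(trials_a), dtype=int),
                        np.ones(len(trials_b), dtype=int)])
    correct = 0
    for i in range(len(X)):
        keep = np.arange(len(X)) != i          # hold out trial i
        X_train, y_train = X[keep], y[keep]
        centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in (0, 1)])
        pred = np.argmin(np.linalg.norm(centroids - X[i], axis=1))
        correct += int(pred == y[i])
    return correct / len(X)

# Synthetic single-trial data (hypothetical): 20 trials per stimulus, 30 neurons.
rng = np.random.default_rng(1)
trials_8khz = rng.standard_normal((20, 30))
trials_wn = rng.standard_normal((20, 30)) + 3.0   # well-separated mean response
acc_separated = loo_accuracy(trials_8khz, trials_wn)

# Chance-level control: same procedure after shuffling the stimulus labels.
pooled = rng.permutation(np.vstack([trials_8khz, trials_wn]))
acc_shuffled = loo_accuracy(pooled[:20], pooled[20:])
```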

OFF responses rely on orthogonal low-dimensional subspaces

To further explore the structure of the neural trajectories associated with the population OFF responses to different stimuli, we analyzed neural activity using dimensionality reduction techniques (Cunningham and Yu, 2014). We focused specifically on responses within the period starting 50 ms before stimulus offset to 300 ms after stimulus offset.

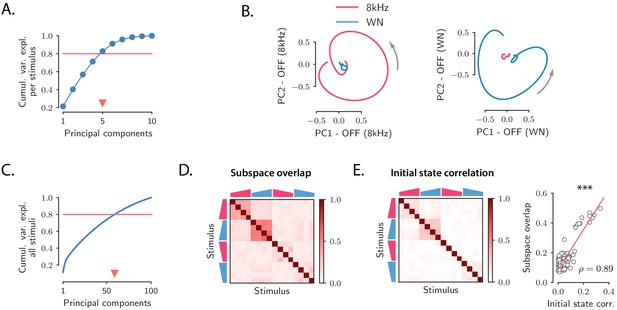

By performing principal component analysis (PCA) independently for the responses to individual stimuli, we found that the dynamics during the transient OFF responses to individual stimuli explored only about five dimensions, as 80% of the variance of the OFF responses to individual stimuli was explained on average by the first five principal components (Figure 2A; see Figure 2—figure supplement 1 for cross-validated controls; note that, given the temporal resolution, the maximal dimensionality explaining 80% of the variance of the responses to individual stimuli was 9). The projection of the low-dimensional OFF responses to each stimulus onto the first two principal components revealed circular activity patterns, where the population vector smoothly rotated between the two dominant dimensions (Figure 2B).
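The dimensionality estimate (number of principal components needed to reach 80% of the variance) can be sketched as below. The sketch uses synthetic data; the function name `n_components_for_variance`, the centering over time, and the toy response built from three sinusoidal temporal patterns are assumptions.

```python
import numpy as np

def n_components_for_variance(response, threshold=0.8):
    """Number of PCs needed to explain `threshold` of the response variance.

    response: array (n_neurons, n_timepoints) for one stimulus.
    Each neuron's trace is centered over time before the decomposition
    (an assumption of this sketch).
    """
    centered = response - response.mean(axis=1, keepdims=True)
    singular_values = np.linalg.svd(centered, compute_uv=False)
    variance = singular_values ** 2
    cumulative = np.cumsum(variance) / variance.sum()
    # First index at which the cumulative fraction reaches the threshold.
    n_pcs = int(np.searchsorted(cumulative, threshold) + 1)
    return n_pcs, cumulative

# Toy response: three orthogonal temporal patterns with amplitudes 3, 2, 1,
# embedded along three orthonormal directions of a 100-neuron state space.
rng = np.random.default_rng(2)
n_neurons, n_time = 100, 30
t = np.arange(n_time)
temporal = np.stack([np.sin(2 * np.pi * k * t / n_time) for k in (1, 2, 3)])
directions, _ = np.linalg.qr(rng.standard_normal((n_neurons, 3)))
response = directions @ (np.array([3.0, 2.0, 1.0])[:, None] * temporal)

n_pcs, cumulative = n_components_for_variance(response)
```

In this toy case the three components carry variance in the ratio 9:4:1, so the first two together exceed 80% and `n_pcs` is 2.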

Low-dimensional structure of population OFF responses.

(A) Cumulative variance explained for OFF responses to individual stimuli, as a function of the number of principal components. The blue trace shows the cumulative variance averaged across all 16 stimuli. Error bars are smaller than the symbol size. The triangular marker indicates the number of PCs explaining 80% (red line) of the total response variance for individual stimuli. (B) Left: projection of the population OFF response to the 8 kHz and white noise (WN) sounds on the first two principal components computed for the OFF response to the 8 kHz sound. Right: projection of both responses onto the first two principal components computed for the OFF response to the WN sound. PCA was performed on the period from −50 ms to 300 ms with respect to stimulus offset. We plot the response from 50 ms before stimulus offset to the end of the trial duration. (C) Cumulative variance explained for the OFF responses to all 16 stimuli together as a function of the number of principal components. The triangular marker indicates the number of PCs explaining 80% of the total response variance for all stimuli. (D) Overlap between the subspaces defined by the first five principal components of the OFF responses corresponding to pairs of stimuli. The overlap is measured by the cosine of the principal angle between these subspaces (see Materials and methods, Section 'Correlations between OFF response subspaces'). (E) Left: correlation matrix between the initial population activity states at the end of stimulus presentation (50 ms before offset) for each pair of stimuli. Right: linear correlation between subspace overlaps (D) and the overlap between initial states (E left panel) for each stimulus pair. The component of the dynamics along the corresponding initial states was subtracted before computing the subspace overlaps.

A central observation revealed by the dimensionality reduction analysis was that the OFF response trajectories relative to stimuli with different frequency content spanned orthogonal low-dimensional subspaces. For instance, the response to the 8 kHz sound was poorly represented on the plane defined by the two principal components of the response to the WN sound (Figure 2B), and vice versa, showing that they evolved in distinct subspaces. To quantify the relationship between the subspaces spanned by the OFF responses to different stimuli, we proceeded as follows. We first computed the first five principal components of the (baseline-subtracted) OFF response to each individual stimulus s. Therefore, for each stimulus s these dimensions define a five-dimensional subspace. Then, for each pair of stimuli, we computed the relative orientation of the corresponding pair of subspaces, measured by the subspace overlap (Figure 2D; see Materials and methods, Section 'Correlations between OFF response subspaces').
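The subspace comparison can be sketched numerically. The sketch takes the overlap to be the cosine of the smallest principal angle, computed as the largest singular value of the product of the two orthonormal bases; that reading, the helper names `top_pcs` and `subspace_overlap`, and the synthetic responses are assumptions made for illustration.

```python
import numpy as np

def top_pcs(response, k=5):
    """Orthonormal basis (n_neurons, k) of the first k principal components."""
    centered = response - response.mean(axis=1, keepdims=True)
    u, _, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :k]

def subspace_overlap(basis_a, basis_b):
    """Cosine of the smallest principal angle between two subspaces.

    basis_a, basis_b: arrays with orthonormal columns spanning each subspace.
    The singular values of basis_a.T @ basis_b are the cosines of the
    principal angles; the largest one is returned.
    """
    cosines = np.linalg.svd(basis_a.T @ basis_b, compute_uv=False)
    return float(min(cosines[0], 1.0))  # clip rounding error above 1

# Two synthetic responses confined to mutually orthogonal 5-D subspaces.
rng = np.random.default_rng(3)
q, _ = np.linalg.qr(rng.standard_normal((100, 10)))
response_a = q[:, :5] @ rng.standard_normal((5, 30))
response_b = q[:, 5:] @ rng.standard_normal((5, 30))

basis_a, basis_b = top_pcs(response_a), top_pcs(response_b)
overlap_ab = subspace_overlap(basis_a, basis_b)   # close to 0: orthogonal subspaces
overlap_aa = subspace_overlap(basis_a, basis_a)   # close to 1: identical subspaces
```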

This approach revealed an interesting structure between the OFF response subspaces for different stimuli (Figure 2D and Figure 2—figure supplement 2). Stimuli with different frequency content evoked in general non-overlapping OFF responses reflected in low values of the subspace overlap. Two clusters of correlated OFF responses emerged, corresponding to the 8 kHz UP-ramps and WN UP-ramps of different durations and intensity. Surprisingly, DOWN-ramps evoked OFF responses that were less correlated than UP-ramps, even for sounds with the same frequency content.

The fact that most of the stimuli evoked non-overlapping OFF responses is reflected in the number of principal components that explain 80% of the variance for all OFF responses considered together, which is around 60 (Figure 2C and Figure 2—figure supplement 1). This number is in fact close to the number of dominant components of the joint response to all 16 stimuli (see Table 1) that we would expect if the responses to individual stimuli evolved on uncorrelated subspaces (given by #PC per stimulus × #stimuli ≈80). Notably this implies that while the OFF responses to individual stimuli span low-dimensional subspaces, the joint response across stimuli shows high-dimensional structure.

We finally examined to what extent the structure observed between the OFF response trajectories to different stimuli (Figure 2D) could be predicted from the structure of the population activity states reached at the end of stimulus presentation, corresponding to the initial states $\mathbf{r}_0^{(s)}$ of the OFF responses. Remarkably, we found that the initial states exhibited structure across stimuli that matched well the structure of OFF response dynamics (Figure 2E left panel and Figure 2—figure supplement 4), even though the component along the initial states was subtracted from the corresponding OFF response trajectories (Figure 2E right panel and Figure 2—figure supplement 4). This suggests that initial states contribute to determining the subsequent dynamics of the OFF responses.

Single-cell model for OFF responses

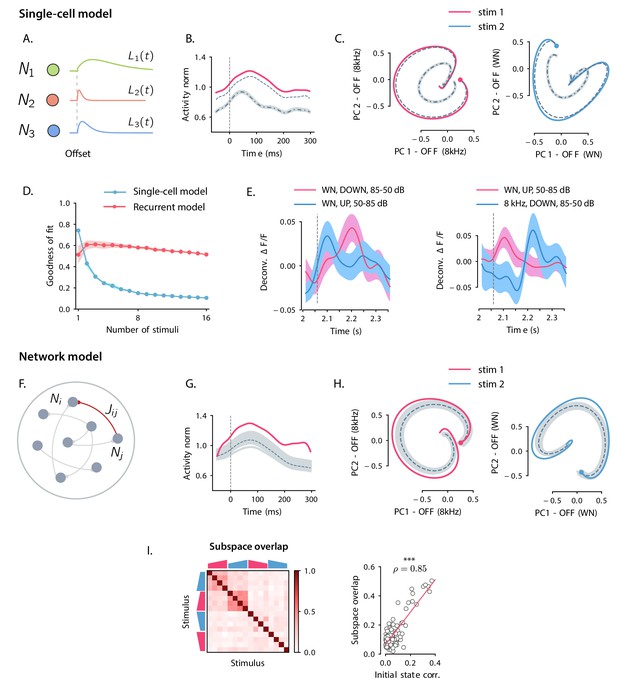

Our analyses of auditory cortical data identified three prominent features of population dynamics: (i) OFF responses correspond to transiently amplified trajectories; (ii) responses to individual stimuli explore low-dimensional subspaces; (iii) responses to different stimuli lie in largely orthogonal subspaces. We next examined to what extent these three features could be accounted for by a single-cell mechanism for OFF response generation.

The AC is not the first stage where OFF responses arise. Robust OFF responses are found throughout the auditory pathway, and in subcortical areas the generation of OFF responses most likely relies on mechanisms that depend on the interaction between the inhibitory and excitatory synaptic inputs to single cells (e.g. post-inhibitory rebound or facilitation, see Kopp-Scheinpflug et al., 2018). To examine the possibility that OFF responses in AC are consistent with a similar single-cell mechanism, we considered a simplified, linear model that could be directly fit to calcium recordings in the AC. Adapting previously used models (Anderson and Linden, 2016; Meyer et al., 2016), we assumed that the cells received no external input after stimulus offset, and that the response of neuron i after stimulus offset is specified by a characteristic linear filter, which describes the cell’s intrinsic response generated by intracellular or synaptic mechanisms (Figure 3A). We moreover assumed that the shape of this temporal response is set by intrinsic properties, and is therefore identical across different stimuli. In the model, each stimulus modulates the response of a single neuron linearly depending on its activity at stimulus offset, so that the (baseline-subtracted) OFF response of neuron i to stimulus s is written as:

$$
r_i^{(s)}(t) = r_{0,i}^{(s)} \, L_i(t), \tag{1}
$$

where $L_i(t)$ is the characteristic linear filter of neuron i and $r_{0,i}^{(s)}$ is the modulation factor for neuron i and stimulus s. We estimated the single-cell responses $L_i(t)$ from the data by fitting basis functions to the responses of neurons, after normalizing them by the modulation factors, using linear regression (see Materials and methods, Section 'Single-cell model for OFF response generation'). We first fitted the single-cell model to responses to a single stimulus, and then increased the number of stimuli.
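A basis-function fit of this kind can be sketched as follows, for a model in which each neuron's OFF response is a stimulus-specific modulation factor multiplied by a stimulus-independent temporal filter. The Gaussian basis, the joint least-squares formulation over stimuli, and all function names are assumptions of this sketch; the actual procedure is described in Materials and methods.

```python
import numpy as np

def gaussian_basis(t, n_basis=6, width=None):
    """Matrix (n_timepoints, n_basis) of Gaussian bumps tiling the time axis."""
    centers = np.linspace(t[0], t[-1], n_basis)
    width = width or (centers[1] - centers[0])
    return np.exp(-0.5 * ((t[:, None] - centers[None, :]) / width) ** 2)

def fit_single_cell(responses, r0, basis):
    """Least-squares fit of one temporal filter per neuron, shared across stimuli.

    responses: (n_stimuli, n_neurons, n_timepoints) baseline-subtracted responses.
    r0: (n_stimuli, n_neurons) modulation factors.
    Returns weights (n_neurons, n_basis); the filter of neuron i is basis @ weights[i].
    """
    n_stimuli, n_neurons, _ = responses.shape
    weights = np.zeros((n_neurons, basis.shape[1]))
    for i in range(n_neurons):
        # Stack stimuli: each block of the design matrix is the basis scaled by r0.
        design = np.concatenate([r0[s, i] * basis for s in range(n_stimuli)], axis=0)
        target = np.concatenate([responses[s, i] for s in range(n_stimuli)])
        weights[i] = np.linalg.lstsq(design, target, rcond=None)[0]
    return weights

def predict_single_cell(r0, basis, weights):
    """Model responses (n_stimuli, n_neurons, n_timepoints)."""
    filters = weights @ basis.T
    return r0[:, :, None] * filters[None, :, :]

# Synthetic check: data generated by the model itself are fitted exactly.
rng = np.random.default_rng(4)
t = np.linspace(0.0, 0.35, 20)
basis = gaussian_basis(t)
true_weights = rng.standard_normal((4, basis.shape[1]))
r0 = rng.uniform(0.5, 2.0, size=(3, 4))
responses = predict_single_cell(r0, basis, true_weights)

weights = fit_single_cell(responses, r0, basis)
prediction = predict_single_cell(r0, basis, weights)
```

Because the filter of each neuron is shared across stimuli, such a model can only rescale a neuron's temporal profile from one stimulus to the next; it cannot change its shape.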

Comparison between single-cell and network models for OFF response generation.

(A) Cartoon illustrating the single-cell model defined in Equation (1). The activity of each unit is described by a characteristic temporal response (colored traces). Across stimuli, only the relative activation of the single units changes, while the temporal shape of the responses of each unit does not vary. (B) Distance from baseline of the population activity vector during the OFF response to one example stimulus (red trace; same stimulus as in C left panel). The dashed vertical line indicates the time of stimulus offset. Gray dashed lines correspond to the norms of the population activity vectors obtained from fitting the single-cell model respectively to the OFF response to a single stimulus (dashed line), and simultaneously to the OFF responses to two stimuli (dash-dotted line; in this example, the two fitted stimuli are the ones considered in panel C). In light gray we plot the norm of 100 realizations of the fitted OFF response by simulating different initial states distributed in the vicinity of the fitted initial state (shown only for the simultaneous fit of two stimuli for clarity). Note that in the single-cell model fit (see Materials and methods, Section 'Fitting the single-cell model'), the fitted initial condition can substantially deviate from the initial condition taken from the data. (C) Colored traces: projection of the population OFF responses to two distinct example stimuli (same stimuli as in Figure 2B) onto the first two principal components of either stimulus. As in panel B, gray dashed and dash-dotted traces correspond to the projection of the OFF responses obtained when fitting the single-cell model to one or two stimuli at once. (D) Goodness of fit (as quantified by the coefficient of determination $R^2$) computed by fitting the single-cell model (blue trace) and the network model (red trace) to the calcium activity data, as a function of the number of stimuli included in the fit. 
Both traces show the cross-validated value of the goodness of fit (10-fold cross-validation in the time domain). Error bars represent the standard deviation over multiple subsamplings of a fixed number of stimuli (reported on the abscissa). Prior to fitting, for each subsampling of stimuli, we reduced the dimensionality of the responses using PCA, and kept the dominant dimensions that accounted for 90% of the variance. (E) Examples of the OFF responses to distinct stimuli of two different auditory cortical neurons (each panel corresponds to a different neuron). The same neuron exhibits different temporal response profiles for different stimuli, a feature consistent with the network model (see Figure 6A,D), but not with the single-cell model. (F) Illustration of the recurrent network model. The variables defining the state of the network are the (baseline-subtracted) firing rates of the units, denoted by $r_i(t)$. The strength of the connection from unit j to unit i is denoted by $J_{ij}$. (G) Distance from baseline of the population activity vector during the OFF response to one example stimulus (red trace; same stimulus as in C left panel). Gray traces correspond to the norms of the population activity vectors obtained from fitting the network model to the OFF response to a single stimulus and generated using the fitted connectivity matrix. The initial conditions were chosen in a neighborhood of the population activity vector 50 ms before sound offset. 100 traces corresponding to 100 random choices of the initial condition are shown. Dashed trace: average distance from baseline. (H) Colored traces: projection of the population OFF responses to two different stimuli (same stimuli as in Figure 2B) on the first two principal components. Gray traces: projections of multiple trajectories generated by the network model using the connectivity matrix fitted to the individual stimuli as in G. The initial condition is indicated with a dot. 100 traces are shown. 
Dashed trace: projection of the average trajectory. (I) Left: overlap between the subspaces corresponding to OFF response trajectories to pairs of stimuli (as in Figure 2D) generated using the connectivity matrix fitted to all stimuli at once. Right: linear correlation between subspace overlaps and the overlaps between the initial states for each stimulus pair computed using the trajectories obtained by the fitted network model. The component of the dynamics along the corresponding initial states was subtracted before computing the subspace overlaps.

The single-cell model accounted well for the first two features observed in the data: (i) because the single-cell responses are in general non-monotonic, the distance from baseline of the population activity vector displayed amplified dynamics (Figure 3B); (ii) at the population level, the trajectories generated by the single-cell model spanned low-dimensional subspaces of at least two dimensions (Figure 3C) and provided excellent fits to the trajectories in the data when the response to a single stimulus was considered (Figure 3C). However, we found that the single-cell model could not account for the structure of responses across multiple stimuli. Indeed, although fitting the model to OFF responses to a single stimulus led to an excellent match with auditory cortical data (as measured by the coefficient of determination $R^2$), increasing the number of simultaneously fitted stimuli led to increasing deviations from the data (Figure 3B,C), and strongly decreased the goodness of fit (Figure 3D). When fitting all stimuli at once, the goodness of fit was extremely poor, and therefore the single-cell model could not provide useful information about the third feature of the data, the structure of subspaces spanned in response to different stimuli. A simple explanation for this inability to capture structure across stimuli is that in the single-cell model the temporal shape of the response of each neuron is the same across all stimuli, while this was not the case in the data (Figure 3E).

Network model for OFF responses

We contrasted the single-cell model with a network model that generated OFF responses through collective interactions between neurons. Specifically, we studied a network of recurrently coupled linear rate units with time evolution given by:

$$
\dot{r}_i(t) = -r_i(t) + \sum_{j=1}^{N} J_{ij} \, r_j(t). \tag{2}
$$

The quantity $r_i(t)$ represents the deviation of the activity of unit i from its baseline firing rate, while $J_{ij}$ denotes the effective strength of the connection from neuron j to neuron i (Figure 3F). As in the single-cell model, we assumed that the network received no external input after stimulus offset. The only effect of the preceding stimulus was to set the initial pattern of activity of the network at $t = 0$. The internal recurrent dynamics then fully determined the subsequent evolution of the activity. Each stimulus s was thus represented by an initial state $\mathbf{r}_0^{(s)}$ that was mapped onto a trajectory of activity $\mathbf{r}^{(s)}(t)$. We focused on the dynamics of the network following stimulus offset, which we represent as $t = 0$.
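How a stimulus-specific initial state is mapped onto an OFF trajectory can be illustrated with a short simulation. The sketch assumes the standard linear rate form dr/dt = -r + J r with time in units of the unit time constant (the form consistent with the stability condition that eigenvalues of the connectivity have real parts below unity). The two-unit feedforward connectivity, the weight `w`, and the fixed-step Runge-Kutta integrator are illustrative choices, not the fitted model.

```python
import numpy as np

def simulate_linear_network(J, r0, t_max=5.0, dt=0.01):
    """Integrate dr/dt = -r + J r from r(0) = r0 with fixed-step RK4.

    Returns times (n_steps + 1,) and the trajectory (n_steps + 1, n_units).
    """
    A = J - np.eye(J.shape[0])
    n_steps = int(round(t_max / dt))
    traj = np.empty((n_steps + 1, len(r0)))
    traj[0] = r0
    for k in range(n_steps):
        r = traj[k]
        k1 = A @ r
        k2 = A @ (r + 0.5 * dt * k1)
        k3 = A @ (r + 0.5 * dt * k2)
        k4 = A @ (r + dt * k3)
        traj[k + 1] = r + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    times = np.arange(n_steps + 1) * dt
    return times, traj

# Hypothetical two-unit network: unit 2 drives unit 1 with weight w, no feedback.
w = 6.0
J = np.array([[0.0, w],
              [0.0, 0.0]])
r0 = np.array([0.0, 1.0])          # initial state set by the preceding stimulus

times, traj = simulate_linear_network(J, r0)
norm = np.linalg.norm(traj, axis=1)  # distance from baseline over time
```

For this connectivity the solution is known in closed form, r1(t) = w t exp(-t) and r2(t) = exp(-t), so the distance from baseline first grows above its initial value and then decays, although no external input is present after t = 0.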

To quantify to what extent recurrent interactions could account for auditory cortical dynamics, we fitted the network model to OFF responses using linear regression (see Materials and methods, Section 'Fitting the network model'). For each stimulus, the pattern of initial activity was taken from the data, and the connectivity matrix was fitted to the responses to a progressively increasing number of stimuli.
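The idea of recovering a connectivity matrix from trajectories by linear regression can be sketched as follows. The sketch again assumes dynamics of the form dr/dt = -r + J r, estimates time derivatives by finite differences, and uses ordinary least squares; the study's own fitting procedure (see Materials and methods) may differ, for example in regularization and dimensionality reduction. All names and the synthetic ground-truth network are hypothetical.

```python
import numpy as np

def simulate(J, r0, times):
    """Exact solution of dr/dt = -r + J r, assuming J - I is diagonalizable."""
    evals, evecs = np.linalg.eig(J - np.eye(len(r0)))
    coeffs = np.linalg.solve(evecs, r0.astype(complex))
    modes = np.exp(np.outer(evals, times)) * coeffs[:, None]
    return np.real(evecs @ modes)                     # (n_units, n_timepoints)

def fit_connectivity(trajectories, dt):
    """Least-squares estimate of J from a list of (n_units, n_timepoints) arrays."""
    states = np.concatenate(trajectories, axis=1)
    derivs = np.concatenate(
        [np.gradient(x, dt, axis=1, edge_order=2) for x in trajectories], axis=1)
    # dr/dt = (J - I) r  is solved for (J - I) in the least-squares sense.
    a_transposed = np.linalg.lstsq(states.T, derivs.T, rcond=None)[0]
    return a_transposed.T + np.eye(states.shape[0])

# Synthetic ground truth: random connectivity and one initial state per "stimulus".
rng = np.random.default_rng(5)
n_units, n_stimuli, dt = 5, 8, 0.01
J_true = 0.5 * rng.standard_normal((n_units, n_units)) / np.sqrt(n_units)
times = np.arange(0.0, 2.0, dt)
trajectories = [simulate(J_true, rng.standard_normal(n_units), times)
                for _ in range(n_stimuli)]

J_fit = fit_connectivity(trajectories, dt)
max_error = np.max(np.abs(J_fit - J_true))
```

Pooling trajectories from several initial states matters here: a single trajectory may not explore enough directions of the state space to constrain every entry of the connectivity matrix.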

Qualitatively, we found that the fitted network model reproduced well the first two features of the data, transient amplification and low-dimensional population trajectories (Figure 3G,H). Quantitatively, we evaluated the goodness of fit by computing the coefficient of determination $R^2$. While the goodness of fit of the network model was lower than that of the single-cell model when fitting the response to a single stimulus, for the network model the goodness of fit remained consistently high as the number of simultaneously fitted stimuli was increased, including when fitted on the responses to all stimuli (Figure 3D). Computing the goodness of fit by taking into account the number of parameters of the network and single-cell models led to the same conclusion (Figure 3—figure supplement 1). When fitted to all stimuli at once, the fitted network model captured the structure of the subspace overlaps (Figure 3I left panel) and its correlation with the structure of initial conditions (Figure 3I right panel). In contrast to the single-cell model, the network model therefore accounted well for the third feature of the data, the structure of responses across stimuli. This can be explained by the fact that, in the network model, the temporal OFF responses of single cells can in general differ across stimuli (see Figure 6A,D), similar to the activity in the AC (Figure 3E).

Testing the network mechanisms of OFF responses on the data

Having found that the fitted network model reproduced the three main features of the data, we next analyzed this model to identify the mechanisms responsible for each feature. The identified mechanisms provided new predictions that we tested on the effective connectivity matrix obtained from the fit to the data.

Transiently amplified OFF responses

The first feature of the data was that the dynamics were transiently amplified, i.e. the OFF responses first deviated from baseline before eventually converging to it. A preliminary requirement to reproduce this feature is that dynamics are stable, that is, eventually decay to baseline following any initial state. This requirement leads to the usual condition that the eigenvalues of the connectivity matrix $\mathbf{J}$ have real parts less than unity. Provided this basic requirement is met, during the transient dynamics from the initial state at stimulus offset, the distance from baseline can either monotonically decrease, or transiently increase before eventually decreasing. To generate the transient increase, the connectivity matrix needs to belong to the class of non-normal matrices (Trefethen and Embree, 2005; Murphy and Miller, 2009; Goldman, 2009; Hennequin et al., 2012; see Materials and methods, Section 'Normal and non-normal connectivity matrices'), but this is not a sufficient condition. More specifically, the largest eigenvalue of the symmetric part of the connectivity, defined by $\mathbf{J}_S = (\mathbf{J} + \mathbf{J}^T)/2$, needs to be larger than unity, while the initial state $\mathbf{r}_0$ needs to belong to a subset of amplified patterns (Bondanelli and Ostojic, 2020; see Materials and methods, Section 'Sufficient condition for amplified OFF responses').
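The role of the symmetric part can be made explicit with a short calculation. It is given here as a sketch, under the assumption that the network obeys the linear rate dynamics $\dot{\mathbf{r}} = -\mathbf{r} + \mathbf{J}\mathbf{r}$; the symbols $\mathbf{J}_S = (\mathbf{J} + \mathbf{J}^T)/2$ and $\mathbf{J}_A = (\mathbf{J} - \mathbf{J}^T)/2$ for the symmetric and antisymmetric parts are notation chosen for this illustration. Differentiating the squared distance from baseline gives

$$
\frac{d}{dt}\|\mathbf{r}\|^2 = 2\,\mathbf{r}^T\dot{\mathbf{r}} = 2\,\mathbf{r}^T(\mathbf{J} - \mathbf{I})\,\mathbf{r} = 2\,\mathbf{r}^T(\mathbf{J}_S - \mathbf{I})\,\mathbf{r},
$$

where the last step uses $\mathbf{r}^T\mathbf{J}_A\mathbf{r} = 0$ for any antisymmetric matrix. The norm can therefore increase at a given time only if $\mathbf{r}^T\mathbf{J}_S\mathbf{r} > \|\mathbf{r}\|^2$, which requires

$$
\lambda_{\max}(\mathbf{J}_S) > 1 .
$$

For a normal matrix the eigenvalues of $\mathbf{J}_S$ are the real parts of the eigenvalues of $\mathbf{J}$, so stability already implies $\lambda_{\max}(\mathbf{J}_S) < 1$ and the norm decays monotonically. This is why amplification requires non-normal connectivity, and why non-normality alone is not enough.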

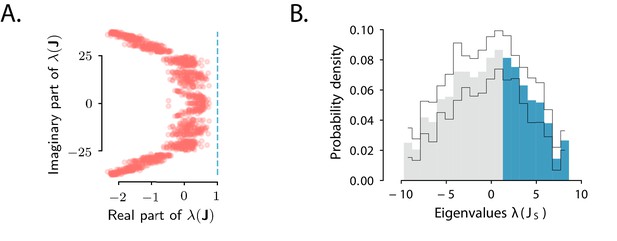

To test the predictions derived from the condition for amplified transient responses, that is, that $\mathbf{J}$ belongs to a specific subset of non-normal matrices, we examined the spectra of the full connectivity $\mathbf{J}$ and of its symmetric part $\mathbf{J}_S$ (Bondanelli and Ostojic, 2020). The eigenvalues of the fitted connectivity matrix had real parts smaller than one, indicating stable dynamics, and large imaginary parts (Figure 4A). Our theoretical criterion predicted that for OFF responses to be amplified, the spectrum of the symmetric part must have at least one eigenvalue larger than unity. Consistent with this prediction, we found that the symmetric part of the connectivity indeed had a large number of eigenvalues larger than one (Figure 4B) and could therefore produce amplified responses (Figure 3G).

Spectra of the connectivity matrix of the fitted network model.

(A) Eigenvalues of the connectivity matrix $\mathbf{J}$ obtained by fitting the network model to the population OFF responses to all 1 s stimuli at once. The dashed line marks the stability boundary given by $\mathrm{Re}(\lambda) = 1$. (B) Probability density distribution of the eigenvalues of the symmetric part of the effective connectivity, $\mathbf{J}_S$. Eigenvalues larger than unity ($\lambda(\mathbf{J}_S) > 1$; highlighted in blue) determine strongly non-normal dynamics. In A and B the total dimensionality of the responses was set to 100. The fitting was performed on 20 bootstrap subsamplings (with replacement) of 80% of neurons out of the total population. Each bootstrap subsampling resulted in a set of 100 eigenvalues. In panel A we plotted the eigenvalues of $\mathbf{J}$ obtained across all subsamplings. In panel B the thin black lines indicate the standard deviation of the eigenvalue probability density across subsamplings. In panels A and B, the temporal window considered for the fit was extended from 350 ms to 600 ms, to include the decay to zero baseline of the OFF responses. The extension of the temporal window was possible only for the 1 s long stimuli (n=8), since the length of the temporal window following stimulus offset for the 2 s stimuli was limited by the length of the neural recordings.

Low-dimensionality of OFF response trajectories

The second feature of auditory cortical data was that each stimulus generated a population response embedded in an approximately five-dimensional subspace of the full state space. We hypothesized that low-dimensional responses in the fitted network model originated from a low-rank structure in the connectivity (Mastrogiuseppe and Ostojic, 2018), implying that the connectivity matrix could be approximated in terms of $R \ll N$ modes, that is, as

$$\mathbf{J} = \mathbf{u}^{(1)}\mathbf{v}^{(1)T} + \mathbf{u}^{(2)}\mathbf{v}^{(2)T} + \ldots + \mathbf{u}^{(R)}\mathbf{v}^{(R)T}, \tag{3}$$

where each mode was specified by two $N$-dimensional vectors $\mathbf{u}^{(r)}$ and $\mathbf{v}^{(r)}$, which we term the right and left connectivity patterns respectively (Mastrogiuseppe and Ostojic, 2018). This set of connectivity patterns is uniquely defined from the singular value decomposition (SVD) of the connectivity matrix (see Materials and methods, Section 'Low-dimensional dynamics').
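As an illustration of how such patterns can be read off a connectivity matrix, the sketch below truncates the SVD of a synthetic matrix. It assumes that the singular values are absorbed into the vectors u (so that the vectors v have unit norm); the function name `low_rank_modes` and the synthetic matrix are hypothetical.

```python
import numpy as np

def low_rank_modes(J, R):
    """Return (U, V) such that J is approximated by U @ V.T using the R leading singular modes.

    Columns of U are the vectors u^(r), scaled by the singular values (an assumption);
    columns of V are the unit-norm vectors v^(r).
    """
    left, s, right_t = np.linalg.svd(J, full_matrices=False)
    U = left[:, :R] * s[:R]   # scale each retained column by its singular value
    V = right_t[:R, :].T
    return U, V

rng = np.random.default_rng(0)
N, R = 50, 4
# Synthetic connectivity: exactly rank R, plus a small full-rank perturbation.
J_true = rng.standard_normal((N, R)) @ rng.standard_normal((R, N)) / N
J_noisy = J_true + 1e-3 * rng.standard_normal((N, N))

U, V = low_rank_modes(J_noisy, R)
rel_err = np.linalg.norm(U @ V.T - J_true) / np.linalg.norm(J_true)
```

Note the naming: the vectors u are called right connectivity patterns in the text, although they are built from what NumPy returns as the first (left) factor of the SVD.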

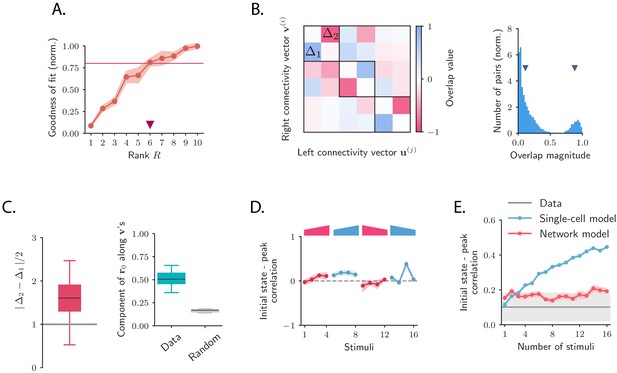

To test the low-rank hypothesis, we re-fitted the network model while constraining the rank $R$ of the connectivity matrix (Figure 5A, Figure 5—figure supplement 1, Figure 5—figure supplement 2; see Materials and methods, Section 'Fitting the network model'). Progressively increasing the rank $R$, we found that $R = 6$ was sufficient to capture more than 80% of the variance explained by the network model when fitting the responses to individual stimuli (Figure 5A).
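A rank-constrained fit of this kind can be sketched with reduced-rank ridge regression. The code below is a generic textbook version, not necessarily the exact procedure of the Methods: ridge regression is solved on augmented data and the solution is projected onto the leading singular directions of the fitted values. In the setting of the paper, `X` would hold population states and `Y` targets derived from their time derivative; here both are synthetic stand-ins, and `lam` and the function names are hypothetical.

```python
import numpy as np

def reduced_rank_ridge(X, Y, rank, lam=0.0):
    """Minimise ||Y - X B||^2 + lam ||B||^2 subject to rank(B) <= rank."""
    n_features = X.shape[1]
    # Ridge regression written as ordinary least squares on augmented data.
    X_aug = np.vstack([X, np.sqrt(lam) * np.eye(n_features)])
    Y_aug = np.vstack([Y, np.zeros((n_features, Y.shape[1]))])
    B_full, *_ = np.linalg.lstsq(X_aug, Y_aug, rcond=None)
    # Project onto the leading right singular vectors of the fitted values.
    _, _, Vt = np.linalg.svd(X_aug @ B_full, full_matrices=False)
    projector = Vt[:rank].T @ Vt[:rank]
    return B_full @ projector

def r_squared(Y, Y_hat):
    """Coefficient of determination pooled over all output dimensions."""
    return 1.0 - np.sum((Y - Y_hat) ** 2) / np.sum((Y - Y.mean(axis=0)) ** 2)

rng = np.random.default_rng(3)
T, N, true_rank = 500, 20, 2
J_true = rng.standard_normal((N, true_rank)) @ rng.standard_normal((true_rank, N)) / N
X = rng.standard_normal((T, N))                           # synthetic "states"
Y = X @ J_true.T + 0.01 * rng.standard_normal((T, N))     # synthetic "targets"

B1 = reduced_rank_ridge(X, Y, rank=1, lam=1e-3)
B2 = reduced_rank_ridge(X, Y, rank=2, lam=1e-3)
r2_rank1 = r_squared(Y, X @ B1)
r2_rank2 = r_squared(Y, X @ B2)
```

Sweeping `rank` and plotting the resulting goodness of fit, normalized by the unconstrained value, gives a curve analogous in spirit to Figure 5A.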

Low-dimensional structure of the dynamics of OFF responses to individual stimuli.

(A) Goodness of fit (coefficient of determination) as a function of the rank $R$ of the fitted network, normalized by the goodness of fit computed using ordinary least squares ridge regression. The shaded area represents the standard deviation across individual stimuli. For $R = 6$, reduced-rank regression captures more than 80% (red solid line) of the variance explained by ordinary least squares regression. (B) Left: overlap matrix $\mathbf{J}_{ov}$ consisting of the overlaps between left and right connectivity patterns of the connectivity fitted on one example stimulus. The color code shows strong (and opposite in sign) correlation values between left and right connectivity patterns within pairs of nearby modes. Weak coupling is instead present between different rank-2 channels (highlighted by dark boxes). Right: histogram of the absolute values of the correlation between right and left connectivity patterns, across all stimuli, and across 20 random subsamplings of 80% of the neurons in the population for each stimulus. Left and right connectivity vectors are weakly correlated, except for the pairs corresponding to the off-diagonal couplings within each rank-2 channel. The two markers indicate the average values of the correlations within each group. When fitting individual stimuli the rank parameter and the number of principal components are set respectively to 6 and 100. (C) Left: absolute value of the difference between $\Delta_1$ and $\Delta_2$ (see panel B) divided by 2, across stimuli. For a rank-R channel (here $R = 6$) comprising $R/2$ rank-2 channels, the maximum difference across the rank-2 channels is considered. Large values of this difference indicate that the dynamics of the corresponding rank-R channel are amplified. Right: component of the initial state on the left connectivity vectors $\mathbf{v}^{(r)}$ (see Equation (75)), obtained from the connectivity fitted to individual stimuli (in green). 
The component of the initial condition along the left connectivity vectors (green box) is larger than the component of random vectors along the same vectors (gray box). In both panels the boxplots show the distributions across all stimuli of the corresponding average values over 20 random subsamplings of 80% of the neurons. The rank parameter and the number of principal components are the same as in B. (D) For each stimulus, we show the correlation between the state at the end of stimulus presentation and the state at the peak of the OFF response, defined as the time of maximum distance from baseline. Error bars represent the standard deviation computed over 2000 bootstrap subsamplings of 50% of the neurons in the population (2343 neurons). (E) Correlation between initial state and peak state obtained from fitting the single-cell (in green) and network models (in red) to a progressively increasing number of stimuli. For each fitted response the peak state is defined as the population vector at the time of maximum distance from baseline of the original response. The colored shaded areas correspond to the standard error over 100 subsamplings of a fixed number of stimuli (reported on the abscissa) out of 16 stimuli. For each subsampling of stimuli, the correlation is computed for one random stimulus.

The dynamics in the obtained low-rank network model are therefore fully specified by a set of patterns over the neural population: patterns corresponding to initial states $\mathbf{r}_0^{(s)}$ determined by the stimuli, and patterns corresponding to connectivity vectors $\mathbf{u}^{(r)}$ and $\mathbf{v}^{(r)}$ for $r = 1, \ldots, R$ that determine the network dynamics. Recurrent neural networks based on such connectivity directly generate low-dimensional dynamics: for a given stimulus s the trajectory of the dynamics lies in the subspace spanned by the initial state $\mathbf{r}_0^{(s)}$ and the set of right connectivity patterns $\{\mathbf{u}^{(r)}\}_{r=1,\ldots,R}$ (Mastrogiuseppe and Ostojic, 2018; Bondanelli and Ostojic, 2020; Schuessler et al., 2020; Beiran et al., 2020). More specifically, the transient dynamics can be written as (see Materials and methods, Section 'Low-dimensional dynamics')

$$\mathbf{r}(t) = e^{-t}\left[\mathbf{r}_0 + \mathbf{U}\,\mathbf{J}_{ov}^{-1}\left(e^{t\mathbf{J}_{ov}} - \mathbf{I}\right)\mathbf{V}^T\mathbf{r}_0\right], \tag{4}$$

where $\mathbf{U}$ and $\mathbf{V}$ are matrices that contain respectively the right and left connectivity vectors as columns, and $\mathbf{J}_{ov} = \mathbf{V}^T\mathbf{U}$ is the matrix of overlaps between left and right connectivity vectors. This overlap matrix therefore fully determines the dynamics in the network (see Materials and methods, Section 'Low-dimensional dynamics').
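The role of the overlap matrix can be checked numerically. The sketch below assumes dynamics dr/dt = -r + J r with J = U V^T and an invertible overlap matrix V^T U, and compares the full N-dimensional solution with a closed form that only involves the small R×R overlap matrix. The helper `expm` is a simple scaling-and-squaring routine written for this example; all sizes and names are hypothetical.

```python
import numpy as np

def expm(A, squarings=10, terms=12):
    """Matrix exponential via scaling and squaring with a truncated Taylor series."""
    A_scaled = A / (2.0 ** squarings)
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for k in range(1, terms + 1):
        term = term @ A_scaled / k
        result = result + term
    for _ in range(squarings):
        result = result @ result
    return result

def low_rank_response(U, V, r0, t):
    """r(t) = exp(-t) * [r0 + U J_ov^{-1} (exp(t J_ov) - I) V^T r0], with J_ov = V^T U."""
    J_ov = V.T @ U
    R = J_ov.shape[0]
    kappa = np.linalg.solve(J_ov, (expm(t * J_ov) - np.eye(R)) @ (V.T @ r0))
    return np.exp(-t) * (r0 + U @ kappa)

rng = np.random.default_rng(0)
N, R, t = 40, 4, 1.5
U = rng.standard_normal((N, R))
V = rng.standard_normal((N, R)) / np.sqrt(N)
r0 = rng.standard_normal(N)

J = U @ V.T
r_full = expm(t * (J - np.eye(N))) @ r0   # full N-dimensional solution
r_low = low_rank_response(U, V, r0, t)    # solution through the R x R overlap matrix

# The state stays in the span of r0 and the columns of U.
basis, _ = np.linalg.qr(np.column_stack([r0, U]))
residual = r_full - basis @ (basis.T @ r_full)
```

The closed form follows from the identity J^k = U (V^T U)^(k-1) V^T for k ≥ 1, which collapses the power series of the matrix exponential onto the overlap matrix.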

Inspecting the overlap matrix $\mathbf{J}_{ov}$ obtained from the fitted connectivity matrix revealed a clear structure, where left and right vectors were strongly correlated within pairs, but uncorrelated between different pairs (Figure 5B left panel). This structure effectively defined a series of rank-2 channels that were orthogonal to each other. Within individual rank-2 channels, strong correlations were observed only across the two modes (e.g. between $\mathbf{u}^{(1)}$ and $\mathbf{v}^{(2)}$, $\mathbf{u}^{(2)}$ and $\mathbf{v}^{(1)}$, etc.; Figure 5B), so that the connectivity matrix corresponding to each rank-2 channel can be written as

$$\mathbf{J}_2 = \Delta_1\,\mathbf{v}^{(2)}\mathbf{v}^{(1)T} - \Delta_2\,\mathbf{v}^{(1)}\mathbf{v}^{(2)T}, \tag{5}$$

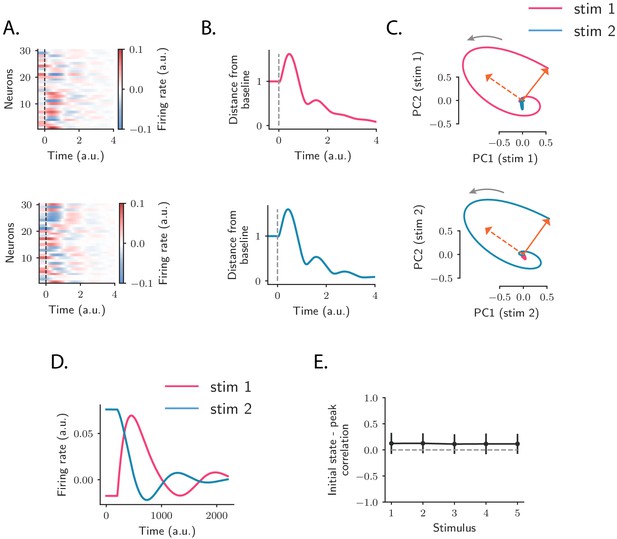

where $\Delta_1$ and $\Delta_2$ are two scalar values. Rank-2 matrices of this type have purely imaginary eigenvalues given by $\pm i\sqrt{\Delta_1\Delta_2}$, reflecting the strong imaginary components in the eigenspectrum of the full matrix (Figure 4A). These imaginary eigenvalues lead to strongly rotational dynamics in the plane defined by $\mathbf{u}^{(1)}$ and $\mathbf{u}^{(2)}$ (Figure 6C; see Materials and methods, Section 'Dynamics of a low-rank rotational channel'), qualitatively similar to the low-dimensional dynamics in the data (Figure 2B). Such rotational dynamics however do not necessarily imply transient amplification. In fact, a rank-2 connectivity matrix as in Equation (5) generates amplified dynamics only if two conditions are satisfied (Figure 6B; see Materials and methods, Section 'Dynamics of a low-rank rotational channel'): (i) the difference $|\Delta_2 - \Delta_1|/2$ is greater than unity; (ii) the initial state $\mathbf{r}_0$ overlaps strongly with the left connectivity patterns $\mathbf{v}^{(r)}$. A direct consequence of these constraints is that when dynamics are transiently amplified, the population activity vector at the peak of the transient dynamics lies along a direction that is largely orthogonal to the initial state at stimulus offset (Figure 6C,E, see Materials and methods, Section 'Correlation between initial and peak state').
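These properties can be verified on a single synthetic channel. The sketch below assumes one form of rank-2 rotational connectivity that is consistent with the description above, J = Δ1 v2 v1ᵀ − Δ2 v1 v2ᵀ with orthonormal v1 and v2, and dynamics dr/dt = -r + J r integrated with forward Euler. The values Δ1 = 1 and Δ2 = 7, the network size and the function names are hypothetical.

```python
import numpy as np

def rank2_channel(v1, v2, d1, d2):
    """Assumed rotational channel: J = d1 * v2 v1^T - d2 * v1 v2^T."""
    return d1 * np.outer(v2, v1) - d2 * np.outer(v1, v2)

def simulate(J, r0, dt=1e-3, t_max=3.0):
    """Forward-Euler integration of dr/dt = -r + J r; returns an array (time, units)."""
    r = np.array(r0, dtype=float)
    traj = [r.copy()]
    for _ in range(int(round(t_max / dt))):
        r = r + dt * (-r + J @ r)
        traj.append(r.copy())
    return np.array(traj)

rng = np.random.default_rng(1)
N = 50
Q, _ = np.linalg.qr(rng.standard_normal((N, 2)))   # two orthonormal patterns
v1, v2 = Q[:, 0], Q[:, 1]

d1, d2 = 1.0, 7.0
J = rank2_channel(v1, v2, d1, d2)
eigs = np.linalg.eigvals(J)
sym_max = np.max(np.linalg.eigvalsh(0.5 * (J + J.T)))

# Initial state along the left pattern paired with the longer right vector.
traj = simulate(J, v2)
norms = np.linalg.norm(traj, axis=1)
peak_state = traj[np.argmax(norms)]
cos_init_peak = peak_state @ v2 / np.linalg.norm(peak_state)

# Balanced channel (d1 == d2): rotation without amplification.
norms_balanced = np.linalg.norm(simulate(rank2_channel(v1, v2, 3.0, 3.0), v2), axis=1)
```

With these values the non-zero eigenvalues are purely imaginary, the largest eigenvalue of the symmetric part equals |Δ2 − Δ1|/2 = 3, the distance from baseline transiently exceeds its initial value, and the peak state is close to orthogonal to the initial state. In the balanced case the trajectory rotates but its norm only decays.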

Population OFF responses in a network model with low-rank rotational structure.

(A) Single-unit OFF responses to two distinct stimuli generated by orthogonal rank-2 rotational channels (see Materials and methods, Section 'Dynamics of a low-rank rotational channel'). The two stimuli were modeled as two different activity states and at the end of stimulus presentation (). We simulated a network of 1000 units. The connectivity consisted of the sum of rank-2 rotational channels of the form given by Equation (5, 6) ( in Equation (6) and , for all ). Here the two stimuli were chosen along two orthogonal vectors and . Dashed lines indicate the time of stimulus offset. (B) Distance from baseline of the population activity vector during the OFF responses to the two stimuli in A. For each stimulus, the amplitude of the offset responses (quantified by the peak value of the distance from baseline) is controlled by the difference between the lengths of the right connectivity vectors of each rank-2 channel, that is, . (C) Projection of the population OFF responses to the two stimuli onto the first two principal components of either stimuli. The projections of the vectors and (resp. and ) on the subspace defined by the first two principal components of stimulus 1 (resp. 2) are shown respectively as solid and dashed arrows. The subspaces defined by the vector pairs , and , are mutually orthogonal, so that the OFF responses to stimuli 1 and 2 evolve in orthogonal dimensions. (D) Firing rate of one example neuron in response to two distinct stimuli: in the recurrent network model the time course of the activity of one unit is not necessarily the same across stimuli. (E) Correlation between the initial state (i.e. the state at the end of stimulus presentation) and the state at the peak of the OFF responses, for five example stimuli. Error bars represent the standard deviation computed over 100 bootstrap subsamplings of 50% of the units in the population.

The theoretical analyses of the model therefore provide a new set of predictions that we directly tested on the connectivity fitted to the data. We first computed the differences $|\Delta_2 - \Delta_1|/2$ for each channel using the SVD of the fitted matrix $\mathbf{J}$, and found that they were sufficiently large to amplify the dynamics within each rank-$R$ channel (Figure 5C left panel). We next examined for each stimulus the component of the initial state $\mathbf{r}_0$ on the $\mathbf{v}^{(r)}$'s and found that it was significantly larger than the component on the $\mathbf{v}^{(r)}$'s of a random vector, across all stimuli (Figure 5C right panel). Finally, computing the correlation between the peak state and the initial state at the end of stimulus presentation, we found that this correlation took values lower than the values predicted by chance for almost all stimuli, consistent with the prediction of the network model (Figure 5D, Figure 5—figure supplement 3). The single-cell model instead predicts stronger correlations between initial and peak states, in clear conflict with the data (Figure 5E).

Orthogonal OFF response trajectories across stimuli

The third feature observed in the data was that population responses evoked by different stimuli were orthogonal for many of the considered stimuli. Orthogonal trajectories for different stimuli can be reproduced in the model under the conditions that (i) initial patterns of activity corresponding to different stimuli are orthogonal, (ii) the connectivity matrix is partitioned into a set of orthogonal low-rank terms, each individually leading to transiently amplified dynamics, and (iii) each stimulus activates one of the low-rank terms. Altogether, for $P$ stimuli leading to orthogonal responses, the connectivity matrix can be partitioned in $P$ different groups of modes:

$$\mathbf{J} = \mathbf{J}^{(1)} + \mathbf{J}^{(2)} + \ldots + \mathbf{J}^{(P)}, \tag{6}$$

with

$$\mathbf{J}^{(s)} = \sum_{r=1}^{R_s} \mathbf{u}^{(r;s)}\mathbf{v}^{(r;s)T}, \tag{7}$$

where the vectors $\mathbf{v}^{(r;s)}$ have unit norm. The set of modes indexed by s corresponds to the s-th stimulus, so that the pattern of initial activity evoked by stimulus s overlaps only with those modes. Moreover, modes corresponding to different groups are orthogonal, and generate dynamics that span orthogonal subspaces (Figure 2D). Each term in Equation (6) can therefore be interpreted as an independent transient coding channel that can be determined from the OFF-response to stimulus s alone.

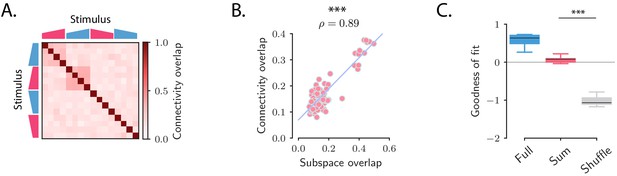

We therefore examined whether the fitted connectivity $\mathbf{J}$ consisted of independent low-rank coding channels (Equation (6)), a constraint that would generate orthogonal responses to different stimuli as observed in the data. If $\mathbf{J}$ consisted of fully independent channels as postulated in Equation (6), then it could be equivalently estimated by fitting the recurrent model to the OFF responses to each stimulus s independently, leading to one matrix $\mathbf{J}^{(s)}$ for each stimulus. The hypothesis of fully independent channels then implies that (i) the connectivity vectors for the different connectivity matrices $\mathbf{J}^{(s)}$ are mutually orthogonal, (ii) the full connectivity matrix can be reconstructed by summing the matrices $\mathbf{J}^{(s)}$ estimated for individual stimuli. To test these two predictions, we estimated the connectivity matrices $\mathbf{J}^{(s)}$ (with a fixed rank parameter for each stimulus). We then computed the overlaps between the connectivity vectors corresponding to different pairs of stimuli (see Materials and methods, Section 'Analysis of the transient channels') and compared them to the overlaps between the subspaces spanned in response to the same pairs of stimuli (see Figure 2D). We found a close match between the two quantities (Figure 7A,B): pairs of stimuli with strong subspace overlaps corresponded to high connectivity overlaps, and pairs of stimuli with low subspace overlaps corresponded to low connectivity overlaps. This indicated that some of the stimuli, but not all, corresponded to orthogonal coding channels. We further reconstructed the OFF responses using the matrix $\mathbf{J}_{\mathrm{Sum}} = \sum_s \mathbf{J}^{(s)}$. We then compared the goodness of fit computed using the matrix fitted to all stimuli at once, $\mathbf{J}_{\mathrm{Full}}$, the matrix $\mathbf{J}_{\mathrm{Sum}}$, and multiple controls where the elements of the matrix $\mathbf{J}_{\mathrm{Sum}}$ were randomly shuffled. While the fraction of variance explained when using the matrix $\mathbf{J}_{\mathrm{Sum}}$ was necessarily lower than the one computed using $\mathbf{J}_{\mathrm{Full}}$, the model with connectivity $\mathbf{J}_{\mathrm{Sum}}$ yielded values of the goodness of fit significantly higher than the ones obtained from the shuffles (Figure 7C). These results together indicated that the full matrix $\mathbf{J}_{\mathrm{Full}}$ can indeed be approximated by the sum of the low-rank channels represented by the $\mathbf{J}^{(s)}$.
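The idealized case of fully independent channels can be simulated directly. The sketch below builds a connectivity as a sum of rank-2 rotational channels defined on mutually orthogonal vector pairs (using the same assumed channel form J = Δ1 v2 v1ᵀ − Δ2 v1 v2ᵀ), and quantifies the overlap between the response subspaces with a simple measure, the mean squared cosine of the principal angles. This overlap measure, the uncentered PCA and all parameter values are assumptions made for illustration and may differ from the definitions in the Methods.

```python
import numpy as np

def euler_response(J, r0, dt=1e-3, n_steps=3000):
    """Forward-Euler integration of dr/dt = -r + J r; returns an array (time, units)."""
    r = np.array(r0, dtype=float)
    traj = [r.copy()]
    for _ in range(n_steps):
        r = r + dt * (-r + J @ r)
        traj.append(r.copy())
    return np.array(traj)

def response_subspace(traj, n_dims=2):
    """Orthonormal basis (units x n_dims) of the leading principal directions (uncentered)."""
    _, _, Vt = np.linalg.svd(traj, full_matrices=False)
    return Vt[:n_dims].T

def subspace_overlap(Q1, Q2):
    """Mean squared cosine of the principal angles: 0 for orthogonal, 1 for identical subspaces."""
    return np.sum((Q1.T @ Q2) ** 2) / Q1.shape[1]

rng = np.random.default_rng(2)
N, P = 60, 3                                   # units, number of stimuli/channels
Q, _ = np.linalg.qr(rng.standard_normal((N, 2 * P)))

channels = []
for s in range(P):
    v1, v2 = Q[:, 2 * s], Q[:, 2 * s + 1]
    channels.append(1.0 * np.outer(v2, v1) - 7.0 * np.outer(v1, v2))
J_sum = sum(channels)                          # sum of independent channels

initial_states = [Q[:, 2 * s + 1] for s in range(P)]
bases = [response_subspace(euler_response(J_sum, r0)) for r0 in initial_states]
overlaps = np.array([[subspace_overlap(a, b) for b in bases] for a in bases])

# The response to stimulus 0 depends only on its own channel.
same_response = np.allclose(euler_response(J_sum, initial_states[0]),
                            euler_response(channels[0], initial_states[0]))
```

In this idealized setting the overlap matrix is the identity. The fitted data depart from this limit, since only some pairs of stimuli correspond to orthogonal channels.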

Structure of the transient channels across stimuli.

(A) Overlaps between the transient channels corresponding to individual stimuli (connectivity overlaps), as quantified by the overlap between the right connectivity vectors of the connectivities fitted to each individual stimulus s (see Materials and methods, Section 'Analysis of the transient channels'). The ridge and rank parameters, and the number of principal components have been respectively set to , and (the choice of maximized the coefficient of determination between the connectivity overlaps, A, and the subspace overlaps, Figure 2D). (B) Scatterplot showing the relationship between the subspace overlaps (see Figure 2D) and connectivity overlaps (panel A) for each pair of stimuli. (C) Goodness of fit computed by predicting the population OFF responses using the connectivity (Full) or the connectivity given by the sum of the individual channels (Sum). These values are compared with the goodness of fit obtained by shuffling the elements of the matrix (Shuffle). Box-and-whisker plots show the distributions of the goodness of fit computed for each cross-validation partition (10-fold). For the ridge and rank parameters, and the number of principal components were set to , and (Figure 5—figure supplement 1).

Amplification of single-trial fluctuations

So far we examined the activity obtained by averaging for each neuron the response to the same stimulus over multiple trials. We next turned to trial-to-trial variability of simultaneously recorded neurons and compared the structure of the variability in the data with the network and single-cell models. Specifically, we examined the variance of the activity along a direction $\mathbf{z}$ of state-space (Figure 8A; Hennequin et al., 2012), defined as:

$$\mathrm{var}(\mathbf{z}; t) = \left\langle \left[\mathbf{z}^T\mathbf{r}(t) - \left\langle \mathbf{z}^T\mathbf{r}(t) \right\rangle\right]^2 \right\rangle, \tag{8}$$

where $\langle\cdot\rangle$ denotes the averaging across trials. We compared the variance along the direction $\mathbf{r}_{\mathrm{ampl}}$ corresponding to the maximum amplification (distance from baseline) of the trial-averaged dynamics with variance along a random direction $\mathbf{r}_{\mathrm{rand}}$.
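The variance along a direction is straightforward to compute from single-trial data. The sketch below assumes an array of shape (trials, time, neurons) and normalizes the direction to unit length (an assumption). The synthetic data, in which a shared trial-by-trial gain scales a transient response along one direction, are purely illustrative.

```python
import numpy as np

def variance_along(trials, z):
    """Across-trial variance of the projection on z at each time point.

    trials: array (n_trials, n_time, n_neurons); z: direction (n_neurons,).
    """
    z = z / np.linalg.norm(z)
    proj = trials @ z                                  # (n_trials, n_time)
    return np.mean((proj - proj.mean(axis=0)) ** 2, axis=0)

rng = np.random.default_rng(5)
n_trials, n_time, N = 100, 50, 200
a = rng.standard_normal(N)
a /= np.linalg.norm(a)                                 # stand-in for the amplified direction
z_rand = rng.standard_normal(N)                        # random direction

gain = rng.standard_normal((n_trials, 1, 1))           # one shared fluctuation per trial
envelope = np.sin(np.linspace(0.0, np.pi, n_time))[None, :, None]  # transient time course
trials = gain * envelope * a + 0.05 * rng.standard_normal((n_trials, n_time, N))

var_ampl = variance_along(trials, a)
var_rand = variance_along(trials, z_rand)
```

In this synthetic example the variance rises and falls along the chosen direction, while it stays close to the noise floor along a random direction.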

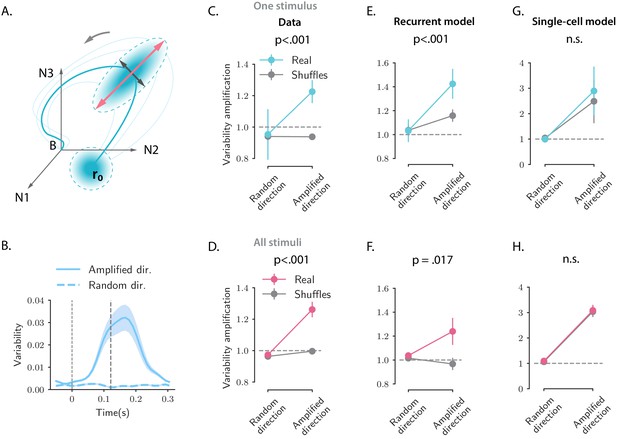

Structure of trial-to-trial variability generated by the network and single-cell models, compared to the auditory cortical data.

(A) Cartoon illustrating the structure of single-trial population responses along different directions in the state-space. The thick and thin blue lines represent respectively the trial-averaged and single-trial responses. Single-trial responses start in the vicinity of the trial-averaged activity . Both the network and single-cell mechanisms dynamically shape the structure of the single-trial responses, here represented as graded blue ellipses. The red and black lines represent respectively the amplified and random directions considered in the analyses. (B) Time course of the variability computed along the amplified direction (solid trace) and along a random direction (dashed trace) for one example stimulus and one example session (287 simultaneously recorded neurons). In this and all the subsequent panels the amplified direction is defined as the population activity vector at the time when the norm of the trial-averaged activity of the pseudo-population pooled over sessions and animals reaches its maximum value (thick dashed line). Thin dashed lines denote stimulus offset. Shaded areas correspond to the standard deviation computed over 20 bootstrap subsamplings of 19 trials out of 20. (C, E, and G) Variability amplification (VA) computed for the amplified and random directions on the calcium activity data (panel C), on trajectories generated using the network model (panel E) and the single-cell model (panel G); (see Materials and methods, Section 'Single-trial analysis of population OFF responses'), for one example stimulus (same as in B). The network and single-cell models were first fitted to the trial-averaged responses to individual stimuli, independently across all recordings sessions (13 sessions, 180 ± 72 neurons). 
100 single-trial responses were then generated by simulating the fitted models on 100 initial conditions drawn from a random distribution with mean and covariance matrix equal to the covariance matrix computed from the single-trial initial states of the original responses (across neurons for the single-cell model, across PC dimensions for the recurrent model). Results did not change by drawing the initial conditions from a distribution with mean and isotropic covariance matrix (i.e. proportional to the identity matrix, as assumed for the theoretical analysis in Materials and methods, Section 'Single-trial analysis of population OFF responses'). In the three panels, the values of VA were computed over 50 subsamplings of 90% of the cells (or PC dimensions for the recurrent model) and 50 shuffles. Error bars represent the standard deviation over multiple subsamplings, after averaging over all sessions and shuffles. Significance levels were evaluated by first computing the difference in VA between amplified and random directions () and then computing the p-value on the difference between and across subsamplings (two-sided independent t-test). For the network model, the VA is higher for the amplified direction than for a random direction, and this effect is significantly stronger for the real than for the shuffled responses. Instead, for the single-cell model the values of the VA computed on the real responses are not significantly different from the ones computed on the shuffled responses. (D, F, and H) Values of the VA computed as in panels C, E, and G pooled across all 16 stimuli. Error bars represent the standard error across stimuli. Significance levels were evaluated computing the p-value on the difference between and across stimuli (two-sided Wilcoxon signed-rank test). The fits of the network and single-cell models of panels E, G, F, and H were generated using ridge regression ( for both models).

Inspecting the variability during the OFF-responses in the AC data revealed two prominent features: (i) fluctuations across trials are amplified along the same direction of state-space as trial-averaged dynamics, but not along random directions (Figure 8B,C,D); (ii) cancelling cross-correlations by shuffling trial labels independently for each cell strongly reduces the amplification of the variance (Figure 8C,D). This second feature demonstrates that the amplification of variance is due to noise-correlations across neurons that have been previously reported in the AC (Rothschild et al., 2010). Indeed the variance along a direction $\mathbf{z}$ at time $t$ can be expressed as

$$\mathrm{var}(\mathbf{z}; t) = \mathbf{z}^T\mathbf{C}(t)\,\mathbf{z}, \tag{9}$$

where $\mathbf{C}(t)$ represents the covariance matrix of the population activity at time $t$. Shuffling trial labels independently for each cell effectively cancels the off-diagonal elements of the covariance matrix and leaves only the diagonal elements that correspond to single-neuron variances. The comparison between simultaneous and shuffled data (Figure 8C,D) demonstrates that an increase in single-neuron variance is not sufficient to explain the total increase in variance along the amplified direction $\mathbf{r}_{\mathrm{ampl}}$.
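The effect of shuffling can be reproduced on synthetic data at a single time point. The sketch below is an illustration under simple assumptions (one shared fluctuation plus independent private noise per neuron); the function name and all values are hypothetical.

```python
import numpy as np

def shuffle_trials_per_neuron(X, rng):
    """Independently permute the trial labels of each neuron. X: (n_trials, n_neurons)."""
    shuffled = np.empty_like(X)
    for i in range(X.shape[1]):
        shuffled[:, i] = rng.permutation(X[:, i])
    return shuffled

rng = np.random.default_rng(4)
n_trials, N = 2000, 30
shared = rng.standard_normal((n_trials, 1))            # shared fluctuation: noise correlations
X = shared @ np.ones((1, N)) + rng.standard_normal((n_trials, N))
z = np.ones(N) / np.sqrt(N)                            # unit-norm direction

C = np.cov(X, rowvar=False, bias=True)                 # population covariance at this time point
var_direct = np.var(X @ z)                             # variance of the projection
var_quadratic = z @ C @ z                              # same quantity written as z^T C z

X_shuffled = shuffle_trials_per_neuron(X, rng)
C_shuffled = np.cov(X_shuffled, rowvar=False, bias=True)
var_shuffled = z @ C_shuffled @ z                      # off-diagonal terms largely cancelled
var_diag_only = z @ np.diag(np.diag(C)) @ z            # single-neuron variances only
```

Shuffling leaves each single-neuron variance unchanged but removes most of the covariance between neurons, so the variance along `z` drops to roughly the value obtained from the diagonal of the covariance matrix alone.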

Simulations of the fitted models and a mathematical analysis show that the network model reproduces both features of trial-to-trial variability observed in the data (Figure 8E,F), based on a minimal assumption that variability originates from independent noise in the initial condition at stimulus offset (see Materials and methods, Section 'Structure of single-trial responses in the network model'). Surprisingly, the single-cell model also reproduces the first feature, the amplification of fluctuations along the same direction of state-space as trial-averaged dynamics, although in that model the different neurons are not coupled and noise-correlations are absent (Figure 8G,H). Instead, the single-cell model fails to capture the second feature, as it produces amplified variability in both simultaneous and shuffled activity. This demonstrates that the amplification of variability in the single-cell model is due to an amplification of single-cell variances, that is, the diagonal elements of the covariance matrix (see Materials and methods, Section 'Structure of single-trial responses in the single-cell model'). Somewhat counter-intuitively, the disagreement between the single-cell model and the data is not directly due to the lack of noise-correlations in that model, but due to the fact that the single-cell model does not accurately predict the variance of single-neuron activity during the OFF-responses, and therefore fails to capture variance in shuffled activity.

In summary, the network model accounts better than the single-cell model for the structure of single-trial fluctuations present in the AC data.

Discussion

Adopting a population approach, we showed that strong OFF responses observed in auditory cortical neurons form transiently amplified trajectories that encode individual stimuli within low-dimensional subspaces. A geometrical analysis revealed a clear structure in the relative orientation of these subspaces, where subspaces corresponding to different auditory stimuli were largely orthogonal to each other. We found that a simple, linear single-neuron model captures well the average response to individual stimuli, but not the structure across stimuli and across individual trials. In contrast, we showed that a simple, linear recurrent network model accounts for all the properties of the population OFF responses, notably their global structure across multiple stimuli and the fluctuations across single trials. Our results therefore argue for a potential role of network interactions in shaping OFF responses in AC. Ultimately, future work could combine both single-cell and network mechanisms in a network model with more complex intrinsic properties of individual neurons (Beiran and Ostojic, 2019; Muscinelli et al., 2019).

In this study, we focused specifically on the responses following stimulus offset. Strong transients during stimulus onset display similar transient coding properties (Mazor and Laurent, 2005) and could be generated by the same network mechanism as we propose for the OFF responses. However, during ON-transients, a number of additional mechanisms are likely to play a role, in particular single-cell adaptation, synaptic depression, or feed-forward inhibition. Indeed, recent work has shown that ON and OFF trajectories elicited by a stimulus are orthogonal to each other in the state-space (Saha et al., 2017), and this was also the case in our data set (Figure 2—figure supplement 3). Linear network models instead produce ON and OFF responses that are necessarily correlated and cannot account for the observed orthogonality of ON and OFF responses for a given stimulus. Distinct ON and OFF response dynamics could also result from intrinsic nonlinearities known to play a role in the AC (Calhoun and Schreiner, 1998; Rotman et al., 2001; Sahani and Linden, 2003; Machens et al., 2004; Williamson et al., 2016; Deneux et al., 2016).

A major assumption of both the single-cell and network models we considered is that the AC does not receive external inputs after the auditory stimulus is removed. Indeed, the observation that the structure of the dynamics across stimuli could be predicted by the structure of their initial states (Figure 2D,E and Figure 2—figure supplement 4) indicates that autonomous dynamics at least partly shape the OFF responses. The comparisons between data and models moreover show that autonomous dynamics are in principle sufficient to reproduce a number of features of OFF responses in the absence of any external drive. However, neurons in the AC do receive direct afferents from the medial geniculate body of the thalamus, and indirect input from upstream regions of the auditory pathway, where strong OFF responses have been observed. Thus, in principle, OFF responses observed in AC could be at least partly inherited from upstream auditory regions (Kopp-Scheinpflug et al., 2018). Disentangling the contributions of upstream inputs and recurrent dynamics is challenging if one has access only to auditory cortical activity (but see Seely et al., 2016 for an interesting computational approach). Ultimately, the origin of OFF responses in AC needs to be addressed by comparing responses between related areas, an approach recently adopted in the context of motor cortical dynamics (Lara et al., 2018). A direct prediction of our model is that the inactivation of recurrent excitation in auditory cortical areas should weaken OFF responses (Li et al., 2013). However, recurrency in the auditory system is not present only within the cortex but also between different areas along the pathway (Ito and Malmierca, 2018; Winer et al., 1998; Lee et al., 2011). Therefore OFF responses could be generated at a higher level of recurrency and might not be abolished by inactivation of AC.

The dimensionality of the activity in large populations of neurons in the mammalian cortex is currently the subject of debate. A number of studies have found that neural activity explores low-dimensional subspaces during a variety of simple behavioral tasks (Gao et al., 2017). In contrast, a recent study in the visual cortex has shown that the response of large populations of neurons to a large number of visual stimuli is instead high-dimensional (Stringer et al., 2019). Our results provide a potential way of reconciling these two sets of observations. We find that the population OFF responses evoked by individual auditory stimuli are typically low-dimensional, but lie in orthogonal spaces, so that the dimensionality of the responses increases when considering an increasing number of stimuli. Note that in contrast to Stringer et al., 2019, we focused here on the temporal dynamics of the population response. The dimensionality of these dynamics is in particular limited by the temporal resolution of the recordings (Figure 2—figure supplement 1).

The analyses we performed in this study were directly inspired by an analogy between OFF responses in the sensory areas and neural activity in the motor cortices (Churchland and Shenoy, 2007; Churchland et al., 2010). Starting at movement onset, single-neuron activity recorded in motor areas exhibits strongly transient and multiphasic firing lasting a few hundreds of milliseconds. Population-level dynamics alternate between at least two dimensions, shaping neural trajectories that appear to rotate in the state-space. These results have been interpreted as signatures of an underlying dynamical system implemented by recurrent network dynamics (Churchland et al., 2012; Shenoy et al., 2011), where the population state at movement onset provides the initial condition able to generate the transient dynamics used for movement generation. Computational models have explored this hypothesis (Sussillo et al., 2015; Hennequin et al., 2014; Stroud et al., 2018) and showed that the complex transient dynamics observed in motor cortex can be generated in network models with strong recurrent excitation balanced by fine-tuned inhibition (Hennequin et al., 2014). Surprisingly, fitting a linear recurrent network model to auditory cortical data, we found that the arrangement of the eigenvalues of the connectivity matrix was qualitatively similar to the spectrum of this class of networks (Hennequin et al., 2014), suggesting that a common mechanism might account for the responses observed in both areas.

The perceptual significance of OFF responses in the auditory pathway is still a matter of ongoing research. Single-cell OFF responses observed in the auditory and visual pathways have been postulated to form the basis of duration selectivity (Brand et al., 2000; Alluri et al., 2016; He, 2002; Aubie et al., 2009; Duysens et al., 1996). In the auditory brainstem and cortex, OFF responses of single neurons exhibit tuning in the frequency-intensity domain, and their receptive field has been reported to be complementary to the receptive field of ON responses (Henry, 1985; Scholl et al., 2010). The complementary spatial organization of ON and OFF receptive fields may result from two distinct sets of synaptic inputs to cortical neurons (Scholl et al., 2010), and has been postulated to form the basis for selectivity to higher-order stimulus features in single cells, such as frequency-modulated (FM) sounds (Sollini et al., 2018) and amplitude-modulated (AM) sounds (Deneux et al., 2016), both important features of natural sounds (Sollini et al., 2018; Nelken et al., 1999). Complementary tuning has also been observed between cortical ON and OFF responses to binaural localization cues, suggesting OFF responses may contribute to the encoding of sound-source location or motion (Hartley et al., 2011). At the population level, the proposed mechanism for OFF response generation may provide the basis for encoding complex sequences of sounds. Seminal work in the olfactory system has shown that sequences of odors evoked specific transient trajectories that depend on the history of the stimulation (Broome et al., 2006; Buonomano and Maass, 2009). Similarly, within our framework, different combinations of sounds could bring the activity at stimulus offset to different regions of the state-space, setting the initial condition for the subsequent OFF responses. If the initial conditions corresponding to different sequences are associated with distinct transient coding channels, different sound sequences would evoke transient trajectories along distinct dimensions during the OFF responses, therefore supporting the robust encoding of complex sound sequences.

Materials and methods

Data analysis

The data set

Neural recordings

Neural data was recorded and described in previous work (Deneux et al., 2016). We analyzed the activity of 2343 neurons in mouse AC recorded using two-photon calcium imaging while mice were passively listening to different sounds. Each sound was presented 20 times. Data included recordings from three mice across 13 different sessions. Neural recordings in the three mice comprised respectively 1251, 636, and 456 neurons. Recordings in different sessions were performed at different depths, typically with a 50 µm difference (never less than 20 µm). Since the soma diameter is ∼15 µm, this ensured that different cells were recorded in different sessions. We analyzed the trial-averaged activity of a pseudo-population of neurons built by pooling neural activity across all recording sessions and all animals. The raw calcium traces (imaging done at 31.5 frames per second) were smoothed using a Gaussian kernel with standard deviation ms. We then subtracted the baseline firing activity (i.e. the average neural activity before stimulus presentation) from the activity of each neuron, and used the baseline-subtracted neural activity for the analyses.
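
The smoothing and baseline-subtraction steps described above can be sketched as follows. This is a minimal illustration rather than the pipeline actually used: the function name, the edge padding, the truncation of the kernel at four standard deviations, and the argument `n_baseline_frames` (the number of pre-stimulus frames) are all assumptions, and the kernel width is left as a parameter.

```python
import numpy as np

def smooth_and_subtract_baseline(traces, frame_rate_hz, sigma_ms, n_baseline_frames):
    """Smooth calcium traces in time and subtract the pre-stimulus baseline.

    traces: array of shape (n_neurons, n_frames).
    sigma_ms: standard deviation of the Gaussian kernel, in milliseconds.
    n_baseline_frames: number of initial frames preceding the stimulus (assumed).
    """
    # Convert the kernel width from milliseconds to frames.
    sigma_frames = sigma_ms * frame_rate_hz / 1000.0
    half_width = int(np.ceil(4 * sigma_frames))  # truncate at 4 sigma (assumption)
    offsets = np.arange(-half_width, half_width + 1)
    kernel = np.exp(-0.5 * (offsets / sigma_frames) ** 2)
    kernel /= kernel.sum()  # unit area, so constant signals are preserved

    # Pad with edge values so the output has as many frames as the input.
    padded = np.pad(traces, ((0, 0), (half_width, half_width)), mode="edge")
    smoothed = np.stack([np.convolve(row, kernel, mode="valid") for row in padded])

    # Baseline: average activity before stimulus presentation, per neuron.
    baseline = smoothed[:, :n_baseline_frames].mean(axis=1, keepdims=True)
    return smoothed - baseline
```

Each neuron is processed independently, so the same function applies to single-trial or trial-averaged traces.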

The stimuli set

The stimuli consisted of a randomized presentation of 16 different sounds, 8 UP-ramping sounds, and 8 DOWN-ramping sounds. For each type, sounds had different frequency content (either 8 kHz or WN), different durations (1 or 2 s), and different combinations of onset and offset intensity levels (for UP-ramps either 50–85 dB or 60–85 dB, while for DOWN-ramps 85–50 dB or 85–60 dB). The descriptions of the stimuli are summarized in Table 1.

Decoding analysis

To assess the accuracy of stimulus discrimination (8 kHz vs. WN sound) on single trials, we trained and tested a linear discriminant classifier (Bishop, 2006) using leave-one-out cross-validation. For each trial, the pseudo-population activity vectors (pooled across sessions and animals) were constructed in 50 ms time bins. At each time bin we used 19 out of 20 trials for the training set, and tested the trained decoder on the remaining trial. The classification accuracy was computed as the average fraction of correctly classified stimuli over all 20 cross-validation folds.

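At each time $t$ the decoder for classification between stimuli $s_1$ and $s_2$ was trained using the trial-averaged pseudo-population vectors $\mathbf{c}_{1t}$ and $\mathbf{c}_{2t}$. These vectors defined the decoder $\mathbf{w}_t$ and the bias term $b_t$ as:

$$\mathbf{w}_t = \mathbf{c}_{1t} - \mathbf{c}_{2t}, \qquad b_t = \frac{1}{2}\,\mathbf{w}_t^{T}\left(\mathbf{c}_{1t} + \mathbf{c}_{2t}\right). \tag{10}$$

A given population vector $\mathbf{x}$ was classified as either stimulus $s_1$ or stimulus $s_2$ according to the value of the function $y(\mathbf{x}) = \mathbf{w}_t^{T}\mathbf{x} - b_t$:

$$\mathbf{x} \mapsto \begin{cases} s_1 & \text{if } y(\mathbf{x}) > 0, \\ s_2 & \text{if } y(\mathbf{x}) < 0. \end{cases} \tag{11}$$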

Random performance was evaluated by training and testing the classifier using cross-validation on surrogate data sets built by shuffling stimulus single-trial labels at each time bin. We performed the same analysis using more sophisticated decoder algorithms, that is, Linear Discriminant Analysis (LDA), Quadratic Discriminant Analysis (QDA), and C-SVM decoders. For none of these decoder algorithms was the cross-validated accuracy substantially better than the naive classifier reported in Figure 1C (and for the QDA algorithm the accuracy was substantially worse; not shown).
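
The leave-one-out procedure can be sketched for a single time bin as below. The sketch assumes a nearest-class-mean form of the linear decoder (weights given by the difference of the two class means, bias at their midpoint); the function name and the array layout are hypothetical.

```python
import numpy as np

def loo_decoding_accuracy(trials_s1, trials_s2):
    """Leave-one-out accuracy of a class-mean linear decoder at one time bin.

    trials_s1, trials_s2: arrays of shape (n_trials, n_neurons) holding the
    single-trial pseudo-population vectors for the two stimuli.
    """
    n_trials = trials_s1.shape[0]
    n_correct = 0
    for k in range(n_trials):
        keep = np.arange(n_trials) != k          # training trials for this fold
        c1 = trials_s1[keep].mean(axis=0)        # class means from training data
        c2 = trials_s2[keep].mean(axis=0)
        w = c1 - c2                              # decoder (assumed form)
        b = w @ (c1 + c2) / 2.0                  # bias: projection of the midpoint
        # Test on the held-out trial of each stimulus.
        n_correct += int(w @ trials_s1[k] - b > 0)
        n_correct += int(w @ trials_s2[k] - b < 0)
    return n_correct / (2 * n_trials)
```

Chance level can be estimated with the same function by shuffling the stimulus labels of the single trials before calling it.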

Principal component analysis

To perform PCA on the population responses to $C$ stimuli $s_1, \dots, s_C$ we considered the matrix $\mathbf{X} \in \mathbb{R}^{N \times TC}$, where $N$ is the number of neurons and $T$ is the number of time steps considered. The matrix $\mathbf{X}$ contained the population OFF responses to the stimuli $s_1, \dots, s_C$, centered around the mean over times and stimuli. If we denote by $\lambda_i$ the $i$-th eigenvalue of the covariance matrix $\mathbf{X}\mathbf{X}^{T}/(TC)$, the percentage of variance explained by the $i$-th principal component is given by:

$$\mathrm{VAR}(i) = 100 \times \frac{\lambda_i}{\sum_{j=1}^{N} \lambda_j}, \tag{12}$$

while the cumulative percentage of variance explained by the first $M$ principal components (Figure 2A,C) is given by:

$$\mathrm{CUMVAR}(M) = 100 \times \frac{\sum_{i=1}^{M} \lambda_i}{\sum_{j=1}^{N} \lambda_j}. \tag{13}$$

In Figure 2A, for each stimulus $s$ we consider the matrix $\mathbf{X}^{(s)} \in \mathbb{R}^{N \times T}$ containing the population OFF responses to stimulus $s$. We computed the cumulative variance explained as a function of the number of principal components $M$ and then averaged over all stimuli.
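
As an illustration, the explained-variance computation can be sketched with a singular value decomposition. The function name and the neurons-by-samples layout are assumptions; the squared singular values of the centered data matrix are proportional to the eigenvalues of its covariance matrix, so the normalization constant cancels in the ratio.

```python
import numpy as np

def variance_explained(X):
    """Percentage of variance explained per principal component, and cumulative.

    X: array of shape (n_neurons, n_samples), where samples are time steps
    (possibly concatenated across stimuli).
    """
    # Center each neuron around its mean over samples.
    Xc = X - X.mean(axis=1, keepdims=True)
    # Squared singular values are proportional to the covariance eigenvalues.
    singular_values = np.linalg.svd(Xc, compute_uv=False)
    eigenvalues = singular_values ** 2
    var = 100.0 * eigenvalues / eigenvalues.sum()
    return var, np.cumsum(var)
```

For the per-stimulus curves, the function would be called once per stimulus and the cumulative curves averaged afterwards.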

Cross-validated PCA

We used cross-validation to estimate the variance of OFF responses to individual stimuli and across stimuli attributable to the stimulus-related component, discarding the component due to trial-to-trial variability (Figure 2—figure supplement 1). Specifically, we applied to the calcium activity data the method of cross-validated PCA (cvPCA) developed in Stringer et al., 2019. This method provides an unbiased estimate of the stimulus-related variance by computing the covariance between a training and a test set of responses (e.g. two different trials or two different sets of trials) to an identical collection of stimuli. Let $\mathbf{X}^{(\mathrm{train})}$ and $\mathbf{X}^{(\mathrm{test})}$ be the $N \times TC$ matrices containing the mean-centered responses to the same $C$ stimuli. We consider the training and test responses to be two distinct trials. We first perform ordinary PCA on the training responses $\mathbf{X}^{(\mathrm{train})}$ and find the principal components $\mathbf{u}_i$ ($i = 1, \dots, N$). We then evaluate the cross-validated PCA spectrum $\{\lambda_i\}$ as:

$$\lambda_i = \frac{1}{TC}\left(\mathbf{u}_i^{T}\mathbf{X}^{(\mathrm{train})}\right)\left(\mathbf{u}_i^{T}\mathbf{X}^{(\mathrm{test})}\right)^{T}. \tag{14}$$

We repeat the procedure for all pairs of trials $(m, n)$ with $m \neq n$ and average the result over pairs. The cross-validated cumulative variance is finally computed as in Equation (13).
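
A minimal sketch of the cvPCA spectrum for one train/test pair is given below. The function name is hypothetical, and the normalization by the number of samples is an assumption; it does not affect the cumulative variance, which is a ratio.

```python
import numpy as np

def cvpca_spectrum(X_train, X_test):
    """Cross-validated PCA spectrum for one pair of train/test responses.

    X_train, X_test: mean-centered arrays of shape (n_neurons, n_samples)
    containing responses to the same stimuli on two different trials.
    """
    # Principal components (columns of U) are computed on the training data only.
    U, _, _ = np.linalg.svd(X_train, full_matrices=False)
    proj_train = U.T @ X_train
    proj_test = U.T @ X_test
    # Covariance between train and test projections along each component.
    return np.sum(proj_train * proj_test, axis=1) / X_train.shape[1]
```

Averaging the returned spectrum over all ordered pairs of distinct trials gives the estimate described in the text. Individual entries can be negative when a component carries mostly trial-to-trial noise.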

Correlations between OFF response subspaces

To quantify the degree of correlation between pairs of OFF responses corresponding to two different stimuli, termed subspace overlap, we computed the cosine of the principal angle between the corresponding low-dimensional subspaces (Figure 2D, Figure 3I). In general, the principal angle $\theta_P$ between two subspaces $U$ and $V$ corresponds to the smallest angle between any pair of vectors in $U$ and $V$ respectively, and it is defined by (Bjorck and Golub, 1973; Knyazev and Argentati, 2002):

$$\theta_P = \min_{\mathbf{u} \in U,\ \mathbf{v} \in V} \arccos\left(\frac{|\mathbf{u}^{T}\mathbf{v}|}{\|\mathbf{u}\|\,\|\mathbf{v}\|}\right). \tag{15}$$

To compute the correlations between the OFF responses to stimuli $s_1$ and $s_2$ we first identified the first $K$ principal components of the response to stimulus $s_1$ and organized them in a $N \times K$ matrix $\mathbf{Q}(s_1)$. We repeated this for stimulus $s_2$, which yielded a matrix $\mathbf{Q}(s_2)$. Therefore the columns of $\mathbf{Q}(s_1)$ and $\mathbf{Q}(s_2)$ define the two subspaces on which the responses to stimuli $s_1$ and $s_2$ live. The cosine of the principal angle between these two subspaces is given by (Bjorck and Golub, 1973; Knyazev and Argentati, 2002):

$$\cos\theta_P(s_1, s_2) = \sigma_{\max}\left(\mathbf{Q}(s_1)^{T}\mathbf{Q}(s_2)\right), \tag{16}$$

where $\sigma_{\max}(\mathbf{A})$ denotes the largest singular value of a matrix $\mathbf{A}$. We note that this procedure directly relates to canonical correlation analysis (CCA; see Hotelling, 1936; Uurtio et al., 2018). In particular the cosine of the first principal angle corresponds to the first canonical correlation between the subspaces spanned by the columns of $\mathbf{Q}(s_1)$ and $\mathbf{Q}(s_2)$ (Golub and Zha, 1992; Bjorck and Golub, 1973).
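
The subspace overlap can be sketched as follows. The function names and the number of retained components `k` are illustrative assumptions; the overlap is computed as the largest singular value of the product of the two orthonormal bases, which equals the cosine of the smallest principal angle.

```python
import numpy as np

def top_principal_components(X, k):
    """Orthonormal basis (n_neurons, k) of the top-k PCs of X (n_neurons, n_time)."""
    Xc = X - X.mean(axis=1, keepdims=True)
    U, _, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :k]

def subspace_overlap(X1, X2, k):
    """Cosine of the smallest principal angle between the top-k PC subspaces."""
    Q1 = top_principal_components(X1, k)
    Q2 = top_principal_components(X2, k)
    # Singular values of Q1^T Q2 are the cosines of the principal angles;
    # numpy returns them in descending order.
    return np.linalg.svd(Q1.T @ Q2, compute_uv=False)[0]
```

An overlap of 1 means the two subspaces share at least one direction, while an overlap of 0 means they are orthogonal.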

Controls for subspace overlaps and initial state-peak correlations

In this section we describe the controls that we used to evaluate the statistical significance of the measures of orthogonality between subspaces spanned by neural activity during OFF responses to different stimuli (Figure 2D, Figure 3I), and between initial and peak activity vectors for a single stimulus (Figure 5D). We assessed the significance of the orthogonality between OFF response subspaces across stimuli using two separate controls, which aim at testing for two distinct null hypotheses (Figure 2D, Figure 3I, Figure 2—figure supplement 2). The first hypothesis is that small subspace overlaps (i.e. low correlations) between OFF responses to different stimuli may be due to the high number of dimensions of the state-space in which they are embedded. To test for this hypothesis we compared the subspace overlap computed on the trial-averaged activity with the subspace overlaps computed on the trial-averaged activity where the stimulus labels had been shuffled across trials for each pair of stimuli. We shuffled stimulus labels multiple times, resulting in one value of the subspace overlap for each shuffle. For each pair of stimuli, significance levels were then computed as the fraction of shuffles for which the subspace overlap was lower than the subspace overlap for the real data (lower tail test; Figure 2—figure supplement 2A).

Alternatively, small subspace overlaps could be an artifact of the trial-to-trial variability present in the calcium activity data. In fact, for a single stimulus, maximum values of the correlation between two different trials were of the order of 0.2 (Deneux et al., 2016). To test for the possibility that small subspace overlaps may be due to trial-to-trial variability, for each pair of stimuli we computed the values of the subspace overlaps by computing the trial-averaged activity on only half of the trials (10 trials), subsampling the set of 10 trials multiple times for each stimulus. This yielded a set of values $\{\cos\theta_n(s_1, s_2)\}$, where $s_1$ and $s_2$ are the specific stimuli considered and $n = 1, \dots, N_{\mathrm{shuffle}}$. We then computed the subspace overlaps between the trial-averaged responses to the same stimulus, but averaged over two different sets of 10 trials each, over multiple permutations of the trials, resulting in a set of values $\{\cos\theta_n(s)\}$, where $s$ is the specific stimulus considered and $n = 1, \dots, N_{\mathrm{shuffle}}$. For each pair of stimuli $s_1$ and $s_2$, significance levels were computed using a two-tailed t-test and taking the maximum between the p-values given by $p\left(\cos\theta_n(s_1, s_2), \cos\theta_n(s_1)\right)$ and $p\left(\cos\theta_n(s_1, s_2), \cos\theta_n(s_2)\right)$ for those stimulus pairs for which $\overline{\cos\theta}(s_1, s_2) < \overline{\cos\theta}(s_1)$ and $\overline{\cos\theta}(s_1, s_2) < \overline{\cos\theta}(s_2)$, where the bar symbol indicates the mean over shuffles (Figure 2—figure supplement 2B).

The same null hypotheses were used to test the significance of the orthogonality between initial state and peak state for individual stimuli (Figure 5D, Figure 5—figure supplement 3). A procedure analogous to the one outlined above was employed. Here, instead of the subspace overlaps, the quantity of interest is the correlation (scalar product) between the initial and peak state. For the first control, shuffled data were obtained by shuffling the labels ‘initial state’ and ‘peak state’ across trials. Significance levels were evaluated as outlined above for the first control (Figure 5—figure supplement 3A). To test for the second hypothesis, we computed correlations between activity vectors defined at the same time point, but averaged over two different sets of 10 trials each. For each trial permutation we computed these correlations for all time points belonging to the OFF response (35 time points) and averaged over time points. Significance levels were then assessed as outlined above for the second control (Figure 5—figure supplement 3B).
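
The first control for subspace overlaps (shuffling stimulus labels across trials) can be sketched as below. This is an illustrative reading of the procedure, not the original analysis code: the function names, the array layout, the number of retained components `k`, and the random seed are assumptions.

```python
import numpy as np

def overlap(X1, X2, k):
    """Cosine of the smallest principal angle between top-k PC subspaces."""
    def pcs(X):
        Xc = X - X.mean(axis=1, keepdims=True)
        return np.linalg.svd(Xc, full_matrices=False)[0][:, :k]
    return np.linalg.svd(pcs(X1).T @ pcs(X2), compute_uv=False)[0]

def shuffle_control(trials_s1, trials_s2, k, n_shuffles, seed=0):
    """Label-shuffling control for the overlap between two stimuli.

    trials_s1, trials_s2: arrays of shape (n_trials, n_neurons, n_time).
    Returns the overlap on real data, the shuffled overlaps, and the fraction
    of shuffles whose overlap is lower than the real one (lower tail).
    """
    rng = np.random.default_rng(seed)
    n1 = trials_s1.shape[0]
    pooled = np.concatenate([trials_s1, trials_s2], axis=0)
    real = overlap(trials_s1.mean(axis=0), trials_s2.mean(axis=0), k)
    shuffled = np.empty(n_shuffles)
    for n in range(n_shuffles):
        # Reassign trials to the two stimulus labels at random.
        perm = rng.permutation(pooled.shape[0])
        group_a = pooled[perm[:n1]].mean(axis=0)
        group_b = pooled[perm[n1:]].mean(axis=0)
        shuffled[n] = overlap(group_a, group_b, k)
    p_value = np.mean(shuffled < real)
    return real, shuffled, p_value
```

The second control would follow the same pattern, replacing the label shuffle with repeated random splits of the trials of each stimulus into two halves.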

Single-cell model for OFF response generation

In the next section we describe the procedure used to fit the single-cell model to the auditory cortical OFF responses. We consider the single-cell model given by Equation (1), where $r_{0,i}^{(s)}$ represents the initial state of the response of unit $i$ to stimulus $s$. Without loss of generality we assume that the dynamic range of the temporal filters, defined as $\max_t L_i(t) - \min_t L_i(t)$, is equal to unity, so that $r_{0,i}^{(s)}$ represents the firing rate range of neuron $i$ for stimulus $s$. If that was not true, i.e. if the single-neuron responses were given by $r_i^{(s)}(t) = \tilde{r}_{0,i}^{(s)}\,\tilde{L}_i(t)$ with $\alpha_i = \max_t \tilde{L}_i(t) - \min_t \tilde{L}_i(t) \neq 1$, we could always write the responses as $r_i^{(s)}(t) = r_{0,i}^{(s)} L_i(t)$, where $r_{0,i}^{(s)} = \alpha_i\,\tilde{r}_{0,i}^{(s)}$ and $L_i(t) = \tilde{L}_i(t)/\alpha_i$, formally equivalent to Equation (1).

Fitting the single-cell model

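To fit the single-cell OFF responses $r_i^{(s)}(t)$, we expressed the single-cell responses on a set of basis functions (Pillow et al., 2008):

$$r_i^{(s)}(t) = \sum_{j=1}^{N_{\mathrm{basis}}} a_{ij}^{(s)} f_j(t), \tag{17}$$

where the shape and the number $N_{\mathrm{basis}}$ of basis functions are predetermined. We choose the functions $f_j(t)$ to be Gaussian functions centered around a value $\bar{t}_j$ and with a given width $w_j$, that is, $f_j(t) = \exp\left(-(t - \bar{t}_j)^2 / 2 w_j^2\right)$. The problem then consists in finding the coefficients $a_{ij}^{(s)}$ that best approximate Equation (17). By dividing the left- and right-hand side of Equation (17) by the firing rate range $r_{0,i}^{(s)}$ we obtain:

$$\hat{r}_i^{(s)}(t) \equiv \frac{r_i^{(s)}(t)}{r_{0,i}^{(s)}} = \sum_{j=1}^{N_{\mathrm{basis}}} \frac{a_{ij}^{(s)}}{r_{0,i}^{(s)}}\, f_j(t). \tag{18}$$

In general the coefficients $a_{ij}^{(s)}/r_{0,i}^{(s)}$ could be fitted independently for each stimulus. However, the single-cell model assumes that these coefficients do not change across stimuli (see Equation (1)). Therefore, to find the stimulus-independent coefficients $a_{ij}$ that best approximate Equation (18) across a given set of stimuli, we minimize the mean squared error given by:

$$\mathrm{MSE} = \frac{1}{NTC} \sum_{s=1}^{C} \sum_{i=1}^{N} \sum_{t=1}^{T} \left(\hat{r}_i^{(s)}(t) - \sum_{j=1}^{N_{\mathrm{basis}}} a_{ij} f_j(t)\right)^{2}. \tag{19}$$

The minimization of the mean squared error can be solved using linear regression techniques. Suppose we want to fit the population responses to $C$ different stimuli simultaneously. Let $\mathbf{R}^{(C)}$ be the matrix of size $N \times TC$ obtained by concatenating the $N \times T$ matrices with entries $\hat{r}_i^{(s)}(t)$ ($i = 1, \dots, N$, $t = 1, \dots, T$, $s = 1, \dots, C$) corresponding to the normalized responses to the $C$ stimuli. Let $\mathbf{F}^{(C)}$ be the $N_{\mathrm{basis}} \times TC$ matrix obtained by concatenating $C$ times the $N_{\mathrm{basis}} \times T$ matrix with entries $f_j(t)$. Let $\mathbf{A}$ be the $N \times N_{\mathrm{basis}}$ matrix given by $A_{ij} = a_{ij}$. Then Equation (18) can be written as:

$$\mathbf{R}^{(C)} = \mathbf{A}\,\mathbf{F}^{(C)}, \tag{20}$$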

which can be solved using linear regression.

In order to avoid normalizing by very small values, we fit only the most responding neurons in the population, as quantified by their firing rate range. We set for all , and we set the number of basis functions to , corresponding to the minimal number of basis functions sufficient to reach the highest cross-validated goodness of fit.
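
The regression step can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, all basis functions share one width, and the firing rate range is taken as the difference between the maximum and minimum of each response over time, which matches the model only when response amplitudes are positive.

```python
import numpy as np

def gaussian_basis(t, centers, width):
    """Gaussian basis functions evaluated on t; returns shape (n_basis, T)."""
    return np.exp(-0.5 * ((t[None, :] - centers[:, None]) / width) ** 2)

def fit_single_cell_model(responses, t, centers, width):
    """Fit stimulus-independent basis coefficients to normalized responses.

    responses: array of shape (C, N, T), OFF responses of N neurons to C stimuli.
    Returns A of shape (N, n_basis) and the per-stimulus firing rate range (C, N).
    """
    C = responses.shape[0]
    # Firing rate range of each neuron for each stimulus (assumed definition).
    rate_range = responses.max(axis=2) - responses.min(axis=2)
    normalized = responses / rate_range[:, :, None]
    # Concatenate stimuli along time: R has shape (N, C*T).
    R = np.concatenate(list(normalized), axis=1)
    # Repeat the basis matrix once per stimulus: shape (n_basis, C*T).
    F_all = np.tile(gaussian_basis(t, centers, width), (1, C))
    # Least squares for R = A F_all, solved in transposed form.
    A = np.linalg.lstsq(F_all.T, R.T, rcond=None)[0].T
    return A, rate_range
```

Neurons with a very small firing rate range should be excluded before calling the function, since the normalization divides by that range.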

The network model

We consider a recurrent network model of $N$ randomly coupled linear rate units. Each unit $i$ is described by the time-dependent variable $r_i(t)$, which represents the difference between the firing rate of neuron $i$ at time $t$ and its baseline firing level. The equation governing the temporal dynamics of the network reads: