Binocular rivalry reveals an out-of-equilibrium neural dynamics suited for decision-making

Abstract

In ambiguous or conflicting sensory situations, perception is often ‘multistable’ in that it perpetually changes at irregular intervals, shifting abruptly between distinct alternatives. The interval statistics of these alternations exhibit quasi-universal characteristics, suggesting a general mechanism. Using binocular rivalry, we show that many aspects of this perceptual dynamics are reproduced by a hierarchical model operating out of equilibrium. The constitutive elements of this model idealize the metastability of cortical networks. Independent elements accumulate visual evidence at one level, while groups of coupled elements compete for dominance at another level. As soon as one group dominates perception, feedback inhibition suppresses supporting evidence. Previously unreported features in the serial dependencies of perceptual alternations compellingly corroborate this mechanism. Moreover, the proposed out-of-equilibrium dynamics satisfies normative constraints of continuous decision-making. Thus, multistable perception may reflect decision-making in a volatile world: integrating evidence over space and time, choosing categorically between hypotheses, while concurrently evaluating alternatives.

Introduction

In deducing the likely physical causes of sensations, perception goes beyond the immediate sensory evidence and draws heavily on context and prior experience (von Helmholtz, 1867; Barlow et al., 1972; Gregory, 1980; Rock, 1983). Numerous illusions in visual, auditory, and tactile perception – all subjectively compelling, but objectively false – attest to this extrapolation beyond the evidence. In natural settings, perception explores alternative plausible causes of sensory evidence by active readjustment of sensors (‘active perception,’ Mirza et al., 2016; Yang et al., 2018; Parr and Friston, 2017a). In general, perception is thought to actively select plausible explanatory hypotheses, to predict the sensory evidence expected for each hypothesis from prior experience, and to compare these predictions with the observed sensory evidence at multiple levels of scale or abstraction (‘analysis by synthesis,’ ‘predictive coding,’ ‘hierarchical Bayesian inference,’ Yuille and Kersten, 2006; Rao and Ballard, 1999; Parr and Friston, 2017b; Pezzulo et al., 2018). Active inference engages the entire hierarchy of cortical areas involved in sensory processing, including both feedforward and feedback projections (Bar, 2009; Larkum, 2013; Shipp, 2016; Funamizu et al., 2016; Parr et al., 2019).

The dynamics of active inference becomes experimentally observable when perceptual illusions are ‘multistable’ (Leopold and Logothetis, 1999). In numerous ambiguous or conflicting situations, phenomenal experience switches at irregular intervals between discrete alternatives, even though the sensory scene is stable (Necker, 2009; Wheatstone, 1838; Rubin, 1958; Attneave, 1971; Ramachandran and Anstis, 2016; Pressnitzer and Hupe, 2006; Schwartz et al., 2012). Multistable illusions are enormously diverse, involving visibility or audibility, perceptual grouping, visual depth or motion, and many kinds of sensory scenes, from schematic to naturalistic. Average switching rates differ greatly and range over at least two orders of magnitude (Cao et al., 2016), depending on sensory scene, perceptual grouping (Wertheimer, 1912; Koffka, 1935; Ternus, 1926), continuous or intermittent presentation (Leopold and Logothetis, 2002; Maier et al., 2003), attentional condition (Pastukhov and Braun, 2007), individual observer (Pastukhov et al., 2013c; Denham et al., 2018; Brascamp et al., 2019), and many other factors.

In spite of this diversity, the stochastic properties of multistable phenomena appear to be quasi-universal, suggesting that the underlying mechanisms may be general. Firstly, average dominance duration depends in a characteristic and counterintuitive manner on the strength of dominant and suppressed evidence (‘Levelt’s propositions I–IV,’ Levelt, 1965; Brascamp et al., 2006; Klink et al., 2016; Kang, 2009; Brascamp et al., 2015; Moreno-Bote et al., 2010). Secondly, the statistical distribution of dominance durations shows a stereotypical shape, resembling a gamma distribution with shape parameter (‘scaling property,’ Cao et al., 2016; Fox and Herrmann, 1967; Blake et al., 1971; Borsellino et al., 1972; Walker, 1975; De Marco et al., 1977; Murata et al., 2003; Brascamp et al., 2005; Pastukhov and Braun, 2007; Denham et al., 2018; Darki and Rankin, 2021). Thirdly, the durations of successive dominance periods are correlated positively, over at least two or three periods (Fox and Herrmann, 1967; Walker, 1975; Van Ee, 2005; Denham et al., 2018).

Here, we show that these quasi-universal characteristics are comprehensively and quantitatively reproduced, indeed guaranteed, by an interacting hierarchy of birth-death processes operating out of equilibrium. While the proposed mechanism combines some of the key features of previous models, it far surpasses their explanatory power.

Several possible mechanisms have been proposed for perceptual dominance, the triggering of reversals, and the stochastic timing of reversals. That a single, coherent interpretation typically dominates phenomenal experience is thought to reflect competition (explicit or implicit) at the level of explanatory hypotheses (e.g., Dayan, 1998), sensory inputs (e.g., Lehky, 1988), or both (e.g., Wilson, 2003). That a dominant interpretation is occasionally supplanted by a distinct alternative has been attributed to fatigue processes (e.g., neural adaptation, synaptic depression, Laing and Chow, 2002), spontaneous fluctuations (‘noise,’ e.g., Wilson, 2007; Kim et al., 2006), stochastic sampling (e.g., Schrater and Sundareswara, 2006), or combinations of these (e.g., adaptation and noise, Shpiro et al., 2009; Seely and Chow, 2011; Pastukhov et al., 2013c). The characteristic stochasticity (gamma-like distribution) of dominance durations has been attributed to Poisson counting processes (e.g., birth-death processes, Taylor and Aldridge, 1974; Gigante et al., 2009; Cao et al., 2016) or stochastic accumulation of discrete samples (Murata et al., 2003; Schrater and Sundareswara, 2006; Sundareswara and Schrater, 2008; Weilnhammer et al., 2017).

‘Dynamical’ models combining competition, adaptation, and noise successfully capture the characteristic dependence of dominance durations on input strength (‘Levelt’s propositions’) (Laing and Chow, 2002; Wilson, 2007; Ashwin and Aureliu, 2010), especially when inputs are normalized (Moreno-Bote et al., 2007; Moreno-Bote et al., 2010; Cohen et al., 2019), and when the dynamics emphasize noise (Shpiro et al., 2009; Seely and Chow, 2011; Pastukhov et al., 2013c). However, such models do not preserve distribution shape over the full range of input strengths (Cao et al., 2016; Cohen et al., 2019). On the other hand, ‘sampling’ models based on discrete random processes preserve distribution shape (Taylor and Aldridge, 1974; Murata et al., 2003; Schrater and Sundareswara, 2006; Sundareswara and Schrater, 2008; Cao et al., 2016; Weilnhammer et al., 2017), but fail to reproduce the dependence on input strength. Neither type of model accounts for the sequential dependence of dominance durations (Laing and Chow, 2002).

Here, we reconcile ‘dynamical’ and ‘sampling’ approaches to multistable perception, extending an earlier effort (Gigante et al., 2009). Importantly, every part of the proposed mechanism appears to be justified normatively in that it may serve to optimize perceptual choices in a general behavioral situation, namely, continuous inference in uncertain and volatile environments (Bogacz, 2007; Veliz-Cuba et al., 2016). We propose that sensory inputs are represented by birth-death processes in order to accumulate sensory information over time and in a format suited for Bayesian inference (Ma et al., 2006; Pouget et al., 2013). Further, we suggest that explanatory hypotheses are evaluated competitively, with a hypothesis attaining dominance (over phenomenal experience) when its support exceeds the alternatives by a certain finite amount, consistent with optimal decision-making between multiple alternatives (Bogacz, 2007). Finally, we assume that a dominant hypothesis suppresses its supporting evidence, as required by ‘predictive coding’ implementations of hierarchical Bayesian inference (Pearl, 1988; Rao and Ballard, 1999; Hohwy et al., 2008). In contrast to many previous models, we do not require local mechanisms of fatigue, adaptation, or decay.

Based on these assumptions, the proposed mechanism reproduces dependence on input strength, as well as distribution of dominance durations and positive sequential dependence. Additionally, it predicts novel and unsuspected dynamical features confirmed by experiment.

Results

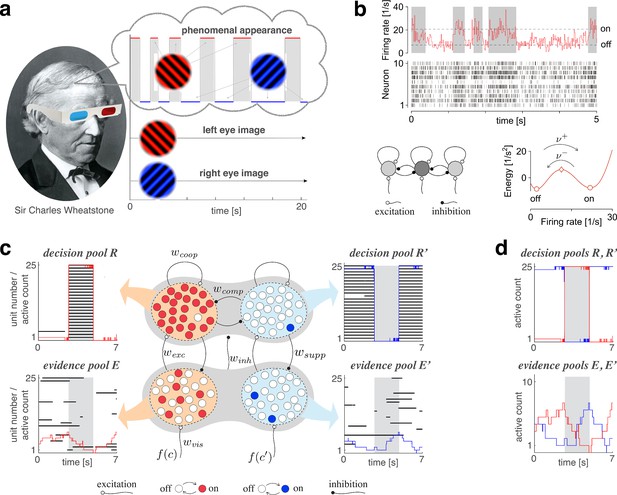

Below we introduce each component of the mechanism and its possible normative justification, before describing out-of-equilibrium dynamics resulting from the interaction of all components. Subsequently, we compare model predictions with multistable perception of human observers, specifically, the dominance statistics of binocular rivalry (BR) at various combinations of left- and right-eye contrasts (Figure 1a).

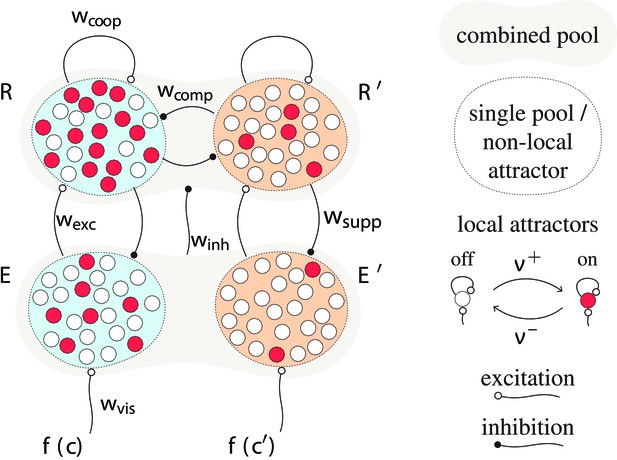

Proposed mechanism of binocular rivalry.

(a) When the left and right eyes see incompatible images in the visual field, phenomenal appearance reverses at irregular intervals, sometimes being dominated by one image and sometimes by the other (gray and white regions). Sir Charles Wheatstone studied this multistable percept with a mirror stereoscope (not as shown!). (b) Spiking neural network implementation of a ‘local attractor.’ An assembly of 150 neurons (schematic, dark gray circle) interacts competitively with multiple other assemblies (light gray circles). Population activity of the assembly explores an effective energy landscape (right) with two distinct steady states (circles), separated by a ridge (diamond). Driven by noise, activity transitions occasionally between ‘on’ and ‘off’ states (bottom), with transition rates depending sensitively on external input to the assembly (not shown). Here, . Spike raster shows 10 representative neurons. (c) Nested attractor dynamics (central schematic) that quantitatively reproduces the dynamics of binocular rivalry (left and right columns). Independently bistable variables (‘local attractors,’ small circles) respond probabilistically to input, transitioning stochastically between on- and off-states (red/blue and white, respectively). The entire system comprises four pools, with 25 variables each, linked by excitatory and inhibitory projections. Phenomenal appearance is decided by competition between decision pools and forming ‘non-local attractors’ (cross-inhibition and self-excitation ). Visual input and accumulates, respectively, in evidence pools and and propagates to decision pools (feedforward selective excitation and indiscriminate inhibition ). Decision pools suppress associated evidence pools (feedback selective suppression ). 
The time course of the number of active variables (active count) is shown for decision pools (top left and right) and evidence pools (bottom left and right), representing the left eye (red traces) and the right eye image (blue traces). The state of individual variables (black horizontal traces in left and middle columns) and of perceptual dominance (gray and white regions) is also shown. In decision pools, almost all variables become active (black trace) or inactive (no trace) simultaneously. In evidence pools, only a small fraction of variables is active at any given time. (d) Fractional activity dynamics of decision pools and (top, red and blue traces) and evidence pools and (bottom, red and blue traces). Reversals of phenomenal appearance are also indicated (gray and white regions).

Hierarchical dynamics

Bistable assemblies: ‘local attractors’

As operative units of sensory representation, we postulate neuronal assemblies with bistable ‘attractor’ dynamics. Effectively, assembly activity moves in an energy landscape with two distinct quasi-stable states – dubbed ‘on’ and ‘off’ – separated by a ridge (Figure 1b). Driven by noise, assembly activity mostly remains near one quasi-stable state (‘on’ or ‘off’), but occasionally ‘escapes’ to the other state (Kramers, 1940; Hanggi et al., 1990; Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012; Huang and Doiron, 2017).

An important feature of ‘attractor’ dynamics is that the energy of quasi-stable states depends sensitively on external input. Net positive input destabilizes (i.e., raises the potential of) the ‘off’ state and stabilizes (i.e., lowers the potential of) the ‘on’ state. Transition rates are even more sensitive to external input as they depend approximately exponentially on the height of the energy ridge (‘activation energy’).

Figure 1b illustrates ‘attractor’ dynamics for an assembly of 150 spiking neurons with activity levels of approximately and per neuron in the ‘off’ and ‘on’ states, respectively. Full details are provided in Appendix 1, section Metastable population dynamics, and Appendix 1—figure 2.

Binary stochastic variables

Our model is independent of neural details and relies exclusively on an idealized description of ‘attractor’ dynamics. Specifically, we reduce bistable assemblies to discretely stochastic, binary activity variables , which activate and inactivate with Poisson rates and , respectively. These rates vary exponentially and anti-symmetrically with increments or decrements of activation energy :

where and are baseline potential and baseline rate, respectively, and where the input-dependent part varies linearly with input , with synaptic coupling constant (see Appendix 1, section Metastable population dynamics and Appendix 1—figure 2e).
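The exponential, anti-symmetric dependence of the two rates on activation energy can be illustrated with a minimal sketch. The functional form used here (rates equal to a baseline rate times `exp(+du/2)` and `exp(-du/2)`, with `du = u0 + w * s`) is an assumption chosen to match the verbal description above; the function name and default values are hypothetical.

```python
import math

def transition_rates(s, w=1.0, u0=-1.0, nu=1.0):
    """Activation and inactivation rates of one bistable variable.

    Assumed form (illustrative, not quoted from the text): the activation
    energy is du = u0 + w * s, and the two Poisson rates are
    nu * exp(+du / 2) and nu * exp(-du / 2).
    """
    du = u0 + w * s                        # baseline potential plus input-dependent part
    nu_plus = nu * math.exp(+du / 2.0)     # rate of 'off' -> 'on' transitions
    nu_minus = nu * math.exp(-du / 2.0)    # rate of 'on' -> 'off' transitions
    return nu_plus, nu_minus
```

Under this assumption, the product of the two rates stays fixed at `nu ** 2` while their ratio grows as `exp(du)`, so a modest change in input shifts the balance between activation and inactivation strongly.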

Pool of binary variables

An extended network, containing individually bistable assemblies with shared input , reduces to a ‘pool’ of binary activity variables with identical rates . Although all variables are independently stochastic, they are coupled through their shared input . The number of active variables or, equivalently, the active fraction =, forms a discretely stochastic process (‘birth-death’ or ‘Ehrenfest’ process; Karlin and McGregor, 1965).
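A pool of this kind can be simulated exactly with a standard Gillespie scheme, as sketched below. The function name, arguments, and the choice of algorithm are illustrative assumptions; the sketch only presumes that all variables in the pool share the same activation and inactivation rates.

```python
import random

def simulate_pool(n_units, nu_plus, nu_minus, t_end, n_active=0, seed=0):
    """Gillespie simulation of the active count in a pool of independent
    two-state variables with shared rates nu_plus (off -> on) and
    nu_minus (on -> off). Returns event times and active counts."""
    rng = random.Random(seed)
    t = 0.0
    times, counts = [0.0], [n_active]
    while True:
        birth = nu_plus * (n_units - n_active)   # total rate of activations
        death = nu_minus * n_active              # total rate of inactivations
        total = birth + death
        if total <= 0.0:                         # nothing can happen any more
            break
        t += rng.expovariate(total)              # waiting time to the next event
        if t > t_end:
            break
        # choose activation or inactivation in proportion to its rate
        n_active += 1 if rng.random() < birth / total else -1
        times.append(t)
        counts.append(n_active)
    return times, counts
```

For example, `simulate_pool(25, 1.0, 3.0, t_end=200.0)` returns a trajectory whose active count changes by one unit per event and fluctuates around a quarter of the pool, since each variable is then active with probability `nu_plus / (nu_plus + nu_minus)`.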

Relaxation dynamics

While activity develops discretely and stochastically according to Equation 5 (Materials and methods), its expectation develops continuously and deterministically,

relaxing with characteristic time towards asymptotic value . As rates change with input (Equation 1), we can define the functions and (see Materials and methods). Characteristic time is longest for small input and shortens for larger positive or negative input . The asymptotic value ranges over the interval (0, 1) and varies sigmoidally with input , reaching half-activation for .
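The relaxation of the expected activity can be made concrete with a short sketch. For independent two-state variables, the expected active fraction obeys a linear rate equation, so the characteristic time is the inverse of the summed rates and the asymptotic value is the activation rate divided by the summed rates. The exponential rate form and all names below are assumptions for illustration.

```python
import math

def relaxation(du, nu=1.0):
    """Characteristic time and asymptotic activity for activation energy du,
    assuming rates nu * exp(+du / 2) and nu * exp(-du / 2)."""
    nu_plus = nu * math.exp(+du / 2.0)
    nu_minus = nu * math.exp(-du / 2.0)
    tau = 1.0 / (nu_plus + nu_minus)        # longest at du = 0
    x_inf = nu_plus / (nu_plus + nu_minus)  # sigmoidal in du, equals 0.5 at du = 0
    return tau, x_inf

def expected_activity(t, x0, du, nu=1.0):
    """Expected active fraction at time t, starting from x0 at t = 0."""
    tau, x_inf = relaxation(du, nu)
    return x_inf + (x0 - x_inf) * math.exp(-t / tau)
```

With these assumptions, `relaxation(0.0)` gives the longest characteristic time and half-activation, while activation energies of equal magnitude and opposite sign give equal characteristic times and asymptotic values that sum to one.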

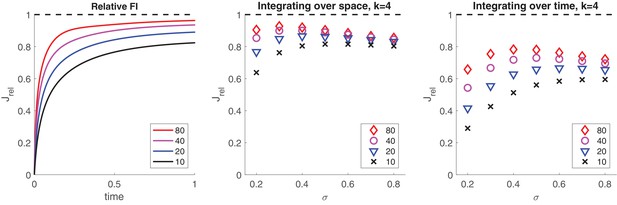

Quality of representation

Pools of bistable variables belong to a class of neural representations particularly suited for Bayesian integration of sensory information (Beck et al., 2008; Pouget et al., 2013). In general, summation of activity is equivalent to optimal integration of information, provided that response variability is Poisson-like, and response tuning differs only multiplicatively (Ma et al., 2006; Ma et al., 2008). Pools of bistable variables closely approximate these properties (see Appendix 1, section Quality of representation: Suitability for inference).

The representational accuracy of even a comparatively small number of bistable variables can be surprisingly high. For example, if normally distributed inputs drive the activity of initially inactive pools of bistable variables, pools as used in the present model (, ) readily capture 90% of the Fisher information (see Appendix 1, section Quality of representation: Integration of noisy samples).

Conflicting evidence

Any model of BR must represent the conflicting evidence from both eyes (e.g., different visual orientations), which supports alternative perceptual hypotheses (e.g., distinct grating patterns). We assume that conflicting evidence accumulates in two separate pools of bistable variables, and , (‘evidence pools,’ Figure 1c). Fractional activations and develop stochastically following Equation 5 (Materials and methods). Transition rates and vary exponentially with activation energy (Equation 1), with baseline potential and baseline rate . The variable components of activation energy, and , are synaptically modulated by image contrasts, and :

where is a coupling constant and is a monotonic function of image contrast (see Materials and methods).

Competing hypotheses: ‘non-local attractors’

Once evidence for, and against, alternative perceptual hypotheses (e.g., distinct grating patterns) has been accumulated, reaching a decision requires a sensitive and reliable mechanism for identifying the best supported hypothesis and amplifying the result into a categorical read-out. Such a winner-take-all decision (Koch and Ullman, 1985) is readily accomplished by a dynamical version of biased competition (Deco and Rolls, 2005; Wang, 2002; Deco et al., 2007; Wang, 2008).

We assume that alternative perceptual hypotheses are represented by two further pools of bistable variables, and , forming two ‘non-local attractors’ (‘decision pools,’ Figure 1c). Similar to previous models of decision-making and attentional selection (Deco and Rolls, 2005; Wang, 2002; Deco et al., 2007; Wang, 2008), we postulate recurrent excitation within pools, but recurrent inhibition between pools, to obtain a ‘winner-take-all’ dynamics. Importantly, we assume that ‘evidence pools’ project to ‘decision pools’ not only in the form of selective excitation (targeted at the corresponding decision pool), but also in the form of indiscriminate inhibition (targeting both decision pools), as suggested previously (Ditterich et al., 2003; Bogacz et al., 2006).

Specifically, fractional activations and develop stochastically according to Equation 5 (Materials and methods). Transition rates and vary exponentially with activation energy (Equation 1), with baseline difference and baseline rate . The variable components of activation energy, and , are synaptically modulated by evidence and decision activities:

where coupling constants , , , reflect feedforward excitation, feedforward inhibition, lateral cooperation within decision pools, and lateral competition between decision pools, respectively.

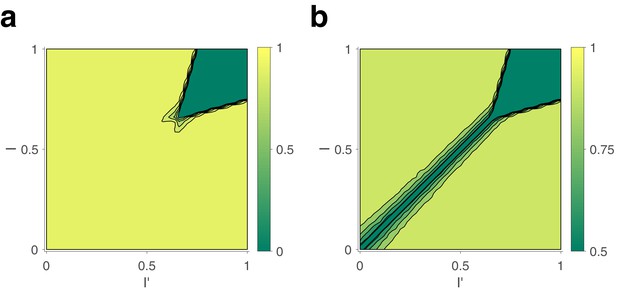

This biased competition circuit expresses a categorical decision by either raising towards unity (and lowering towards zero) or vice versa. The choice is random when visual input is ambiguous, , but becomes deterministic with growing input bias . This probabilistic sensitivity to input bias is reliable and robust under arbitrary initial conditions of , , and (see Appendix 1, section Categorical choice with Appendix 1—figure 3).

Feedback suppression

Finally, we assume feedback suppression, with each decision pool selectively targeting the corresponding evidence pool. A functional motivation for this systematic bias against the currently dominant appearance is given below. Its effects are to curtail dominance durations and to ensure that reversals occur from time to time. Specifically, we modify Equation 3 to

where is a coupling constant.

Previous models of BR (Dayan, 1998; Hohwy et al., 2008) have justified selective feedback suppression of the evidence supporting a winning hypothesis in terms of ‘predictive coding’ and ‘hierarchical Bayesian inference’ (Rao and Ballard, 1999; Lee and Mumford, 2003). An alternative normative justification is that, in volatile environments, where the sensory situation changes frequently (‘volatility prior’), optimal inference requires an exponentially growing bias against evidence for the most likely hypothesis (Veliz-Cuba et al., 2016). Note that feedback suppression applies selectively to evidence for a winning hypothesis and is thus materially different from visual adaptation (Wark et al., 2009), which applies indiscriminately to all evidence present.
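The wiring of the four pools described so far can be summarized in a minimal simulation sketch. The linear forms of the activation energies are assumptions inferred from the verbal description (visual drive and feedback suppression for evidence pools; feedforward excitation, indiscriminate feedforward inhibition, cooperation, and competition for decision pools). All parameter values are hypothetical placeholders rather than fitted values, and no claim is made that they reproduce the reported alternation statistics.

```python
import math
import random

# Hypothetical placeholder values, not the fitted model parameters.
PARAMS = dict(n=25, nu_e=1.0, nu_r=5.0, u_e=-1.0, u_r=-1.0, w_vis=1.0,
              w_exc=4.0, w_inh=2.0, w_coop=4.0, w_comp=4.0, w_supp=3.0)

def energies(e1, e2, r1, r2, f1, f2, p):
    """Assumed activation energies from fractional activities of evidence
    pools (e1, e2) and decision pools (r1, r2); f1, f2 are visual drives."""
    du_e1 = p["u_e"] + p["w_vis"] * f1 - p["w_supp"] * r1   # feedback suppression
    du_e2 = p["u_e"] + p["w_vis"] * f2 - p["w_supp"] * r2
    common = p["u_r"] - p["w_inh"] * (e1 + e2)              # indiscriminate inhibition
    du_r1 = common + p["w_exc"] * e1 + p["w_coop"] * r1 - p["w_comp"] * r2
    du_r2 = common + p["w_exc"] * e2 + p["w_coop"] * r2 - p["w_comp"] * r1
    return du_e1, du_e2, du_r1, du_r2

def simulate(f1, f2, t_end, p=PARAMS, seed=0):
    """Gillespie simulation; returns a list of (time, (e1, e2, r1, r2) counts)."""
    rng = random.Random(seed)
    n = p["n"]
    counts = [0, 0, 0, 0]
    base = [p["nu_e"], p["nu_e"], p["nu_r"], p["nu_r"]]
    t, trace = 0.0, [(0.0, tuple(counts))]
    while True:
        du = energies(*(c / n for c in counts), f1, f2, p)
        channels = []
        for k in range(4):
            # even index: activation in pool k, odd index: inactivation in pool k
            channels.append(base[k] * math.exp(+du[k] / 2.0) * (n - counts[k]))
            channels.append(base[k] * math.exp(-du[k] / 2.0) * counts[k])
        t += rng.expovariate(sum(channels))
        if t > t_end:
            return trace
        idx = rng.choices(range(8), weights=channels)[0]
        counts[idx // 2] += 1 if idx % 2 == 0 else -1
        trace.append((t, tuple(counts)))
```

A call such as `simulate(1.0, 1.0, t_end=5.0)` yields the joint trajectory of all four active counts. Whether and how often dominance alternates depends on the placeholder parameters, which would need to be fitted as described in Materials and methods.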

Reversal dynamics

A representative example of the joint dynamics of evidence and decision pools is illustrated in Figure 1c,d, both at the level of pool activities , , , , and at the level of individual bistable variables . The top row shows decision pools and , with instantaneous active counts, and and active/inactive states of individual variables . The bottom row shows evidence pools and , with instantaneous active counts, and and active/inactive states of individual variables . Only a small fraction of evidence variables is active at any one time.

Phenomenal appearance reverses when the differential activity of evidence pools, and , contradicts sufficiently strongly the differential activity of decision pools, and , such that the steady state of decision pools is destabilized (see further below and Figure 4). As soon as the reversal has been effected at the decision level, feedback suppression lifts from the newly non-dominant evidence and descends upon the newly dominant evidence. Due to this asymmetric suppression, the newly non-dominant evidence recovers, whereas the newly dominant evidence habituates. This opponent dynamics progresses, past the point of equality , until differential evidence activity once again contradicts differential decision activity . Whereas the activity of decision pools varies in phase (or counterphase) with perceptual appearance, the activity of evidence pools changes in quarterphase (or negative quarterphase) with perceptual appearance (e.g., Figures 1c,d,2a), consistent with some previous models (Gigante et al., 2009; Albert et al., 2017; Weilnhammer et al., 2017).

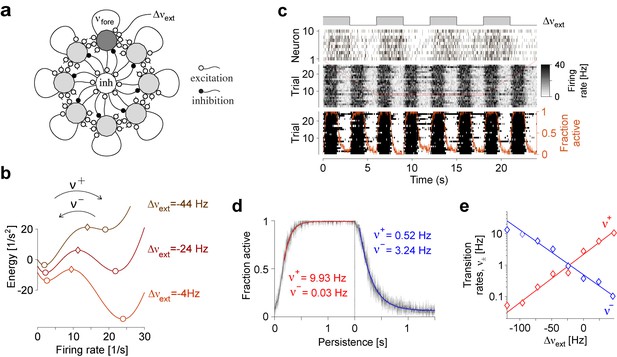

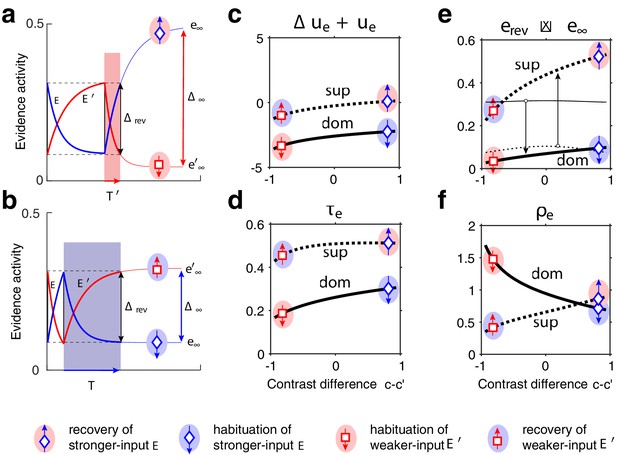

Joint dynamics of evidence habituation and recovery.

Exponential development of evidence activities is governed by input-dependent asymptotic values and characteristic times. (a) Fractional activities (blue traces) and (red traces) of evidence pools and , respectively, over several dominance periods for unequal stimulus contrast (). Stochastic reversals of finite system ( units per pool, left) and deterministic reversals of infinite system (, right). Perceptual dominance (decision activity) is indicated along the upper margin (red or blue stripe). Dominant evidence habituates (dom), and non-dominant evidence recovers (sup), until evidence contradicts perception sufficiently (black vertical lines) to trigger a reversal (gray and white regions). (b) Development of stronger-input evidence (blue) and weaker-input evidence (red) over two successive dominance periods (). Activities recover, or habituate, exponentially until reversal threshold is reached. Thin curves extrapolate to the respective asymptotic values, and . Dominance durations depend on distance and on characteristic times and . Left: incrementing non-dominant evidence (dashed curve) raises upper asymptotic value and shortens dominance by . Right: incrementing dominant evidence (dashed curve) raises lower asymptotic value and lengthens dominance by . (c) Increasing asymptotic activity difference accelerates the development of differential activity and curtails dominance periods , (and vice versa). As the dependence is hyperbolic, any change to disproportionately affects longer dominance periods. If , then (and vice versa).

Binocular rivalry

To compare predictions of the model described above to experimental observations, we measured spontaneous reversals of BR for different combinations of image contrast. BR is a particularly well-studied instance of multistable perception (Wheatstone, 1838; Diaz-Caneja, 1928; Levelt, 1965; Leopold and Logothetis, 1999; Brascamp et al., 2015). When conflicting images are presented to each eye (e.g., by means of a mirror stereoscope or of colored glasses, see Materials and methods), the phenomenal appearance reverses from time to time between the two images (Figure 1a). Importantly, the perceptual conflict involves also representations of coherent (binocular) patterns and is not restricted to eye-specific (monocular) representations (Logothetis et al., 1996; Kovács et al., 1996; Bonneh et al., 2001; Blake and Logothetis, 2002).

Specifically, our experimental observations established reversal sequences for combinations of image contrast, . During any given dominance period, is the contrast of the phenomenally dominant image and the contrast of the other, phenomenally suppressed image (see Materials and methods). We analyzed these observations in terms of mean dominance durations , higher moments and of the distribution of dominance durations, and sequential correlation of successive dominance durations.

Additional aspects of serial dependence are discussed further below.

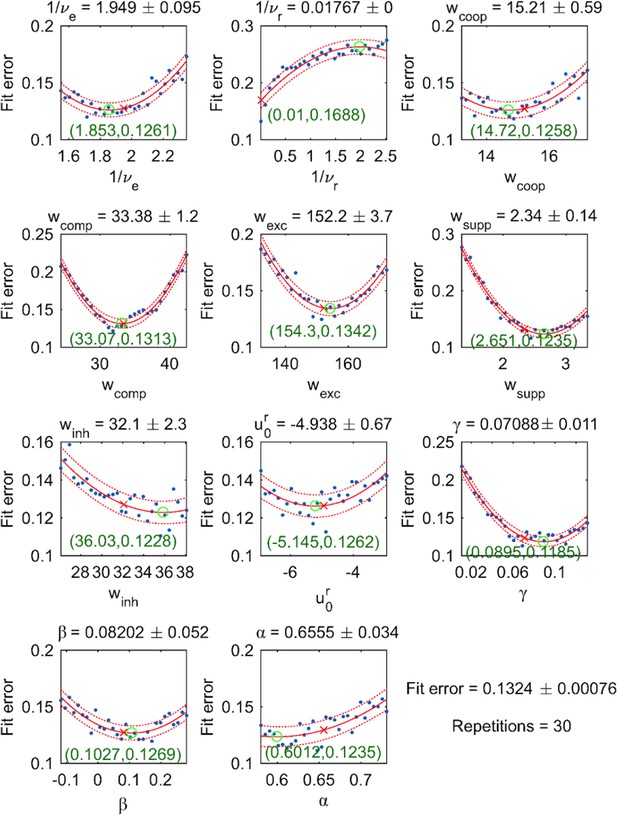

As described in Materials and methods, we fitted 11 model parameters to reproduce observations with more than 50 degrees of freedom: mean dominance durations , coefficients of variation , one value of skewness , and one correlation coefficient . The latter two values were obtained by averaging over contrast combinations and rounding. Importantly, minimization of the fit error, by random sampling of parameter space with a stochastic gradient descent, resulted in a three-dimensional manifold of suboptimal solutions. This revealed a high degree of redundancy among the 11 model parameters (see Materials and methods). Accordingly, we estimate that the effective number of degrees of freedom needed to reproduce the desired out-of-equilibrium dynamics was between 3 and 4. Model predictions and experimental observations are juxtaposed in Figures 3 and 4.

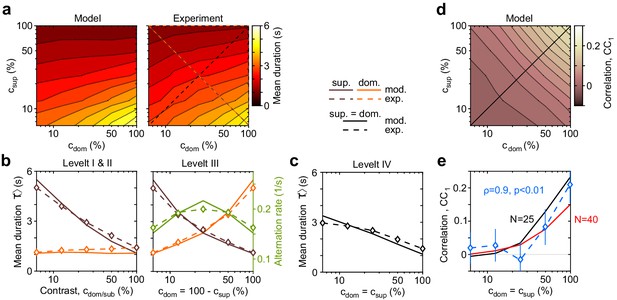

Dependence of mean dominance duration on dominant and suppressed image contrast (‘Levelt’s propositions’).

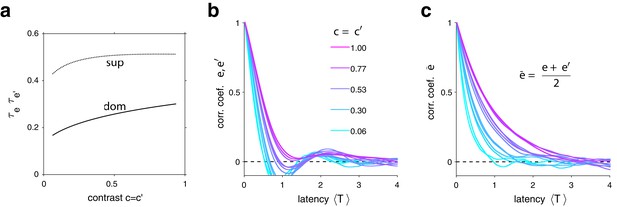

(a) Mean dominance duration (color scale), as a function of dominant contrast and suppressed contrast , in model (left) and experiment (right). (b) Model prediction (solid traces) and experimental observation (dashed traces and symbols) compared. Levelt I and II: weak increase of with when (red traces and symbols), and strong decrease with when (brown traces and symbols). Levelt III: symmetric increase of with (orange traces and symbols) and decrease with (brown traces and symbols), when . Alternation rate (green traces and symbols) peaks at equidominance and decreases symmetrically to either side. (c) Levelt IV: decrease of with image contrast, when . (d) Predicted dependence of sequential correlation (color scale) on and . (e) Model prediction (black trace, ) and experimental observation (blue trace and symbols, mean ± SEM, Spearman’s rank correlation ρ), when . Also shown is a second model prediction (red trace, ).

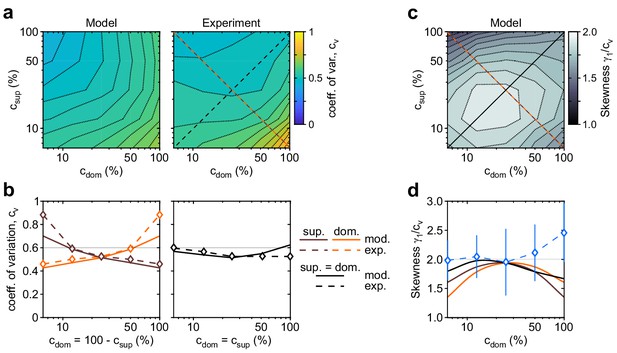

Shape of dominance distribution depends only weakly on image contrast (‘scaling property’).

Distribution shape is parametrized by coefficient of variation cv and relative skewness . (a) Coefficient of variation cv (color scale), as a function of dominant contrast and suppressed contrast , in model (left) and experiment (right). (b) Model prediction (solid traces) and experimental observation (dashed traces and symbols) compared. Left: increase of cv with (red traces and symbols), and symmetric decrease with (brown traces and symbols), when . Right: weak dependence when (black traces and symbols). (c) Predicted dependence of relative skewness (gray scale) on and . (d) Model prediction (solid traces), when (black) and (orange and brown) and experimental observation when (blue dashed trace and symbols, mean ± SEM).

The complex and asymmetric dependence of mean dominance durations on image contrast – aptly summarized by Levelt’s ‘propositions’ I to IV (Levelt, 1965; Brascamp et al., 2015) – is fully reproduced by the model (Figure 3). Here, we use the updated definition of Brascamp et al., 2015: increasing the contrast of one image increases the fraction of time during which this image dominates appearance (‘predominance,’ Levelt I). Counterintuitively, this is due more to shortening dominance of the unchanged image than to lengthening dominance of the changed image (Levelt II, Figure 3b, left panel). Mean dominance durations grow (and alternation rates decline) symmetrically around equal predominance as contrast difference increases (Levelt III, Figure 3b, right panel). Mean dominance durations shorten when both image contrasts increase (Levelt IV, Figure 3c).

Successive dominance durations are typically correlated positively (Fox and Herrmann, 1967; Walker, 1975; Pastukhov et al., 2013c). Averaging over all contrast combinations, observed and fitted correlation coefficients were comparable with (mean and standard deviation). Unexpectedly, both observed and fitted correlation coefficients increased systematically with image contrast (, ), growing from at to at (Figure 3e, blue symbols). It is important to note that this dependence was not fitted. Rather, this previously unreported dependence constitutes a model prediction that is confirmed by observation.
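One way to estimate the sequential correlation of a reversal sequence is a rank correlation between each dominance duration and the next. The sketch below is an illustrative assumption about how such an estimate could be computed, not the analysis code used here; ties receive ordinal rather than averaged ranks, which is adequate for continuous durations.

```python
import math

def ranks(values):
    """Ordinal ranks (0 = smallest); ties are broken by position."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0.0] * len(values)
    for rank, idx in enumerate(order):
        result[idx] = float(rank)
    return result

def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def serial_correlation(durations, lag=1):
    """Rank correlation between durations separated by `lag` periods."""
    return pearson(ranks(durations[:-lag]), ranks(durations[lag:]))
```

Applied to a list of successive dominance durations, `serial_correlation(durations)` returns a value between -1 and 1, with positive values indicating that long periods tend to be followed by long periods.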

The distribution of dominance durations typically takes a characteristic shape (Cao et al., 2016; Fox and Herrmann, 1967; Blake et al., 1971; Borsellino et al., 1972; Walker, 1975; De Marco et al., 1977; Murata et al., 2003; Brascamp et al., 2005; Pastukhov and Braun, 2007; Denham et al., 2018), approximating a gamma distribution with shape parameter , or coefficient of variation . The fitted model fully reproduces this ‘scaling property’ (Figure 4). The observed coefficient of variation remained in the range for nearly all contrast combinations (Figure 4b). Unexpectedly, both observed and fitted values increased above, or decreased below, this range at extreme contrast combinations (Figure 4b, left panel). Along the main diagonal , where observed values had smaller error bars, both observed and fitted values of skewness were and thus approximated a gamma distribution (Figure 4d, blue symbols).
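The link between gamma shape, coefficient of variation, and skewness can be checked numerically. For a gamma distribution with shape parameter `r`, the coefficient of variation is `1 / sqrt(r)` and the skewness is `2 / sqrt(r)`, so their ratio equals 2 for any `r`. Identifying this ratio with the relative skewness used here is an assumption, as are the function names in this sketch.

```python
import math
import random

def shape_statistics(durations):
    """Sample coefficient of variation and skewness of a list of durations."""
    n = len(durations)
    mean = sum(durations) / n
    m2 = sum((d - mean) ** 2 for d in durations) / n   # second central moment
    m3 = sum((d - mean) ** 3 for d in durations) / n   # third central moment
    cv = math.sqrt(m2) / mean
    skewness = m3 / m2 ** 1.5
    return cv, skewness

def gamma_shape_statistics(r):
    """Theoretical coefficient of variation and skewness for gamma shape r."""
    return 1.0 / math.sqrt(r), 2.0 / math.sqrt(r)

# Example: compare sample statistics of simulated gamma durations with theory.
rng = random.Random(0)
samples = [rng.gammavariate(4.0, 1.0) for _ in range(20000)]
sample_cv, sample_skewness = shape_statistics(samples)
theory_cv, theory_skewness = gamma_shape_statistics(4.0)
```

Because the ratio of skewness to coefficient of variation does not depend on `r` or on the time scale, it offers a convenient test of gamma-like shape across contrast conditions with very different mean durations.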

Specific contribution of evidence and decision levels

What are the reasons for the surprising success of the model in reproducing universal characteristics of multistable phenomena, including the counterintuitive input dependence (‘Levelt’s propositions’), the stereotypical distribution shape (‘scaling property’), and the positive sequential correlation (as detailed in Figures 3 and 4)? Which level of model dynamics is responsible for reproducing different aspects of BR dynamics?

Below, we describe the specific contributions of different model components. Specifically, we show that the evidence level of the model reproduces ‘Levelt’s propositions I–III’ and the ‘scaling property,’ whereas the decision level reproduces ‘Levelt’s proposition IV.’ A non-trivial interaction between evidence and decision levels reproduces serial dependencies. Additionally, we show that this interaction predicts further aspects of serial dependencies – such as sensitivity to image contrast – that were not reported previously, but are confirmed by our experimental observations.

Levelt’s propositions I, II, and III

The characteristic input dependence of average dominance durations emerges in two steps (as in Gigante et al., 2009). First, inputs and feedback suppression shape the birth-death dynamics of evidence pools and (by setting disparate transition rates , following Equation 3’ and Equation 1). Second, this sets in motion two opponent developments (habituation of dominant evidence activity and recovery of non-dominant evidence activity, both following Equation 2) that jointly determine dominance duration.

To elucidate this mechanism, it is helpful to consider the limit of large pools () and its deterministic dynamics (Figure 2), which corresponds to the average stochastic dynamics. In this limit, periods of dominant evidence or start and end at the same levels ( and ), because reversal thresholds are the same for evidence difference and (see section Levelt IV below).

The rates at which evidence habituates or recovers depend, in the first instance, on asymptotic levels and (Equation 1 and 2, Figure 2b and Appendix 1—figure 4). In general, dominance durations depend on distance between asymptotic levels: the further apart these are, the faster the development and the shorter the duration. As feedback suppression inverts the sign of the opponent developments, dominant evidence decreases (habituates) while non-dominant evidence increases (recovers). Due to this inversion, is roughly proportional to . It follows that the distance is smaller and the reversal dynamics slower when dominant input is stronger, and vice versa. It further follows that incrementing one input (and raising the corresponding asymptotic level) speeds up recovery or slows down habituation, shortening or lengthening periods of non-dominance and dominance, respectively (Levelt I).

In the second instance, rates of habituation or recovery depend on characteristic times and (Equation 1 and 2). When these rates are unequal, dominance durations depend more sensitively on the slower process. This is why dominance durations depend more sensitively on non-dominant input (Levelt II): recovery of non-dominant evidence is generally slower than habituation of dominant evidence, independently of which input is weaker or stronger. The reason is that the respective effects of characteristic times and and asymptotic levels and are synergistic for weaker-input evidence (in both directions), whereas they are antagonistic for stronger-input evidence (see Appendix 1, section Deterministic dynamics: Evidence pools and Appendix 1—figure 4).
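The role of asymptotic levels and characteristic times can be illustrated with a minimal sketch. It assumes a pool of independent two-state units with transition rates that stay constant during a dominance period, so that the mean active fraction relaxes exponentially. The function names and rate values are hypothetical and are not taken from Equations 1 and 2:

```python
import math

def relaxation_parameters(rate_on, rate_off):
    """Asymptotic active fraction and characteristic time of a pool of
    independent two-state units (assumption: constant rates)."""
    total = rate_on + rate_off
    return rate_on / total, 1.0 / total

def mean_activity(x0, rate_on, rate_off, t):
    """Mean active fraction at time t, starting from fraction x0."""
    x_inf, tau = relaxation_parameters(rate_on, rate_off)
    return x_inf + (x0 - x_inf) * math.exp(-t / tau)

def time_to_reach(x0, x_target, rate_on, rate_off):
    """Time for the mean activity to move from x0 to x_target.
    Returns infinity if the target is not between x0 and the asymptote."""
    x_inf, tau = relaxation_parameters(rate_on, rate_off)
    if x0 == x_inf:
        return 0.0 if x_target == x0 else math.inf
    ratio = (x_target - x_inf) / (x0 - x_inf)
    if not 0.0 < ratio <= 1.0:
        return math.inf
    return -tau * math.log(ratio)

# Same characteristic time (tau = 1), different asymptotic levels.
far = time_to_reach(0.8, 0.5, rate_on=0.1, rate_off=0.9)   # asymptote 0.1
near = time_to_reach(0.8, 0.5, rate_on=0.4, rate_off=0.6)  # asymptote 0.4
```

In this toy setting, habituation from 0.8 to 0.5 takes less time when the asymptotic level lies further from the starting level (`far < near`), and the target is never reached when the asymptote lies on the wrong side of it.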

In general, dominance durations depend hyperbolically on (Figure 2c and Equation 7 in Appendix 1). Dominance durations become infinite (and reversals cease) when falls below the reversal threshold . This hyperbolic dependence is also why alternation rate peaks at equidominance (Levelt III): increasing the difference between inputs always lengthens longer durations more than it shortens shorter durations, thus lowering alternation rate.
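Why a hyperbolic dependence implies a peak of alternation rate at equidominance can be seen in a toy calculation. The form below is an illustrative assumption, not Equation 7 of Appendix 1: $a$, $\delta$, and $c$ are hypothetical symbols for a common drive, an input imbalance, and a time constant, with $0 \le \delta < a$:

```latex
\begin{aligned}
T_1 &= \frac{c}{a-\delta}, \qquad T_2 = \frac{c}{a+\delta},\\[4pt]
T_1 - \frac{c}{a} &= \frac{c\,\delta}{a\,(a-\delta)} \;\ge\; \frac{c\,\delta}{a\,(a+\delta)} = \frac{c}{a} - T_2,\\[4pt]
\text{alternation rate} &= \frac{2}{T_1+T_2} = \frac{a^{2}-\delta^{2}}{c\,a}.
\end{aligned}
```

Under this assumption, any imbalance $\delta > 0$ lengthens the longer duration more than it shortens the shorter one, the alternation rate is maximal at $\delta = 0$, and $T_1$ diverges as $\delta \to a$.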

Distribution of dominance durations

For all combinations of image contrast, the mechanism accurately predicts the experimentally observed distributions of dominance durations. This is owed to the stochastic activity of pools of bistable variables.

Firstly, dominance distributions retain nearly the same shape, even though average durations vary more than threefold with image contrast (see also Appendix 1—figure 6a,b). This ‘scaling property’ is due to the Poisson-like variability of birth-death processes (see Appendix 1, section Stochastic dynamics). Generally, when a stochastic accumulation approaches threshold, the rates of both accumulation and dispersion of activity affect the distribution of first-passage-times (Cao et al., 2014; Cao et al., 2016). In the special case of Poisson-like variability, the two rates vary proportionally and preserve distribution shape (see also Appendix 1—figure 6c,d).

Secondly, predicted distributions approximate gamma distributions with scale factor . As shown previously (Cao et al., 2014; Cao et al., 2016), this is due to birth-death processes accumulating activity within a narrow range (i.e., evidence difference ). In this low-threshold regime, the first-passage-times of birth-death processes are both highly variable and gamma distributed, consistent with experimental observations.

Thirdly, the predicted variability (coefficients of variation) of dominance periods varies along the axis, being larger for longer than for shorter dominance durations (Figure 4a,b). The reason is that stochastic development becomes noise-dominated. For longer durations, stronger-input evidence habituates rapidly into a regime where random fluctuations gain importance (see also Appendix 1—figure 4a,b).
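The low-threshold regime can be explored with a toy Gillespie simulation of a single birth-death pool crossing a small threshold. This is a sketch under simplifying assumptions (one pool, constant rates, no competition or feedback), not the full two-level model. All parameter values and names are hypothetical:

```python
import random
import statistics

def first_passage_time(n_units, threshold, rate_on, rate_off, rng):
    """Time until `threshold` of `n_units` two-state units are active,
    starting from zero active units (exact stochastic simulation)."""
    n_active, t = 0, 0.0
    while n_active < threshold:
        birth = (n_units - n_active) * rate_on   # inactive units switching on
        death = n_active * rate_off              # active units switching off
        total = birth + death
        t += rng.expovariate(total)              # waiting time to next event
        if rng.random() < birth / total:
            n_active += 1
        else:
            n_active -= 1
    return t

def passage_statistics(n_trials=2000, n_units=25, threshold=4,
                       rate_on=0.1, rate_off=0.1, seed=0):
    """Mean and coefficient of variation of first-passage times."""
    rng = random.Random(seed)
    times = [first_passage_time(n_units, threshold, rate_on, rate_off, rng)
             for _ in range(n_trials)]
    mean = statistics.fmean(times)
    return mean, statistics.stdev(times) / mean

mean_slow, cv_slow = passage_statistics(rate_on=0.1, rate_off=0.1)
mean_fast, cv_fast = passage_statistics(rate_on=0.2, rate_off=0.2)
```

Scaling both rates by the same factor changes the mean passage time but leaves the coefficient of variation unchanged, which is the trivial limiting case of shape preservation. The scaling property discussed above is the stronger statement that distribution shape is approximately preserved when inputs, and hence rates, change with image contrast.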

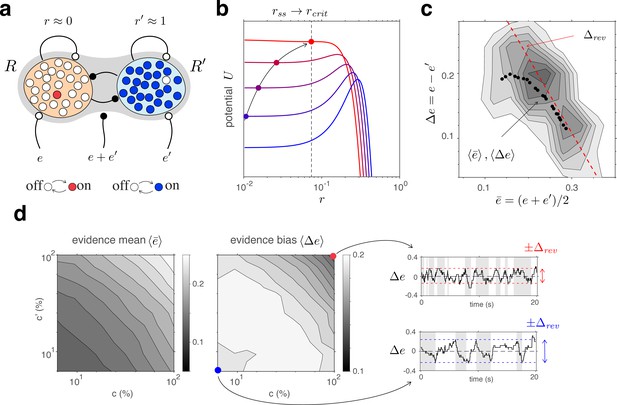

Levelt’s proposition IV

The model accurately predicts how dominance durations shorten with higher image contrast (Levelt IV). Surprisingly, this reflects the dynamics of decision pools and (Figure 5).

Competitive dynamics of decision pools ensures Levelt IV.

(a) The joint stable state of decision pools (here and ) can be destabilized by sufficiently contradictory evidence, . (b) Effective potential (colored curves) and steady states (colored dots) for different levels of contradictory input, . Increasing destabilizes the steady state and shifts rightward (curved arrow). The critical value (dotted vertical line), at which the steady state turns unstable, is reached when reaches the reversal threshold . At this point, a reversal ensues with and . (c) The reversal threshold diminishes with combined evidence . In the deterministic limit, decreases linearly with (dashed red line). In the stochastic system, the average evidence bias at the time of reversals decreases similarly with the average evidence mean (black dots). Actual values of at the time of reversals are distributed around these average values (gray shading). (d) Average evidence mean (left) and average evidence bias (middle) at the time of reversals as a function of image contrast and . Decrease of average evidence bias with contrast shortens dominance durations (Levelt IV). At low contrast (blue dot), higher reversal thresholds result in less frequent reversals (bottom right, gray and white regions) whereas, at high contrast (red dot), lower reversal thresholds lead to more frequent reversals (top right).

Here again it is helpful to consider the deterministic limit of large pools (). In this limit, a dominant decision state is destabilized when a contradictory evidence difference exceeds a certain threshold value (Figure 5b and Appendix 1, section Deterministic dynamics: Decision pools). Due to the combined effect of excitatory and inhibitory feedforward projections, and (Equation 4 and Figure 5a), this average reversal threshold decreases with mean evidence activity . Simulations of the fully stochastic model () confirm this analysis (Figure 5c). As average evidence activity increases with image contrast, the average evidence bias at the time of reversals decreases, resulting in shorter dominance periods (Figure 5d).

Serial dependence

The proposed mechanism predicts positive correlations between successive dominance durations, a well-known characteristic of multistable phenomena (Fox and Herrmann, 1967; Walker, 1975; Van Ee, 2005; Denham et al., 2018). In addition, it predicts further aspects of serial dependence not reported previously.

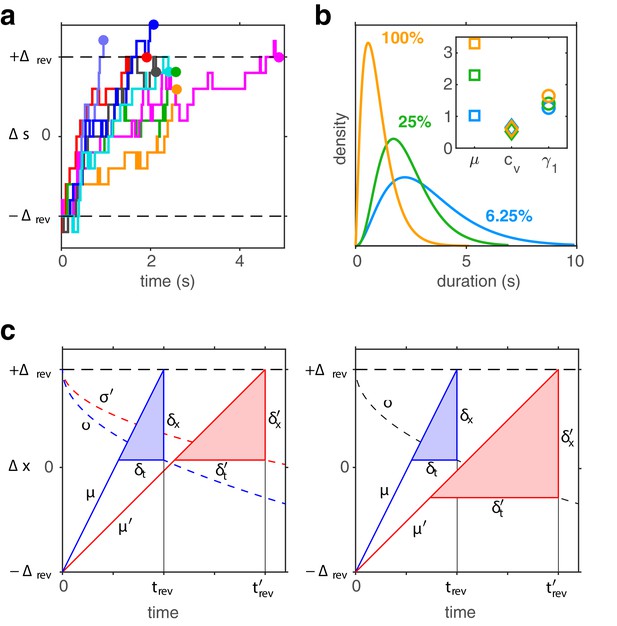

In both model and experimental observations, a long dominance period tends to be followed by another long period, and a short dominance period by another short period (Figure 6). In the model, this is due to mean evidence activity fluctuating stochastically above and below its long-term average. The autocorrelation time of these fluctuations increases monotonically with image contrast and, for high contrast, spans multiple dominance periods (see Appendix 1, section Characteristic times and Appendix 1—figure 7). Note that these fluctuations diminish as the number of bistable variables increases and vanish in the deterministic limit of large pools.

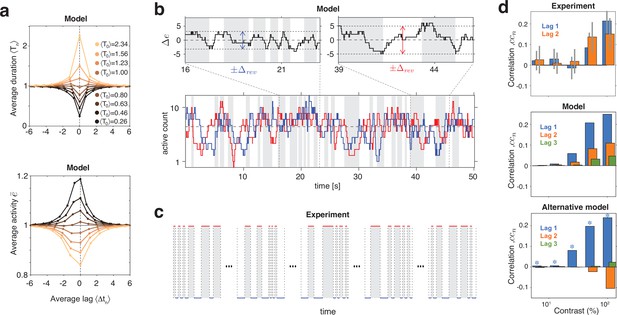

Serial dependency predicted by model and confirmed by experimental observations.

(a) Conditional expectation of dominance duration (top) and of average mean evidence activity, (bottom), in model simulations with maximal stimulus contrast (). Dominance periods T0 were grouped into octiles, from longest (yellow) to shortest (black). For each octile, the average duration of preceding and following dominance periods, as well as the average mean evidence activity at the end of each period, is shown. All times in multiples of the overall average duration, , and activities in multiples of the overall average activity . (b) Example reversal sequence from model. Bottom: stochastic development of evidence activities and (red and blue traces), with large, joint fluctuations raising or lowering mean activity above or below long-term average (dashed line). Top left: episode with above average, lower , and shorter dominance periods. Top right: episode with below average, higher , and longer dominance durations. (c) Examples of reversal sequences from human observers ( and ). (d) Positive lagged correlations predicted by model (mean, middle) and confirmed by experimental observations (mean ± std, top). Alternative model (Laing and Chow, 2002) with adaptation and noise (mean, bottom), fitted to reproduce the values of , cv, , and predicted by the present model (blue stars).

Crucially, fluctuations of mean evidence modulate both reversal threshold and dominance durations , as illustrated in Figure 6a,b. To obtain Figure 6a, dominance durations were grouped into quantiles and the average duration of each quantile was compared to the conditional expectation of preceding and following durations (upper graph). For the same quantiles (compare color coding), average evidence activity was compared to the conditional expectation at the end of preceding and following periods (lower graph). Both the inverse relation between and and the autocorrelation over multiple dominance periods are evident.
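The quantile analysis described above can be sketched as follows. This is a minimal illustration that assumes a single uninterrupted sequence of dominance durations and considers only the immediately preceding and following periods. The function name and the handling of quantile edges are choices made here, not the analysis code behind Figure 6a:

```python
import numpy as np

def conditional_neighbors(durations, n_groups=8):
    """Group each duration T0 into quantiles (octiles by default) and return,
    per group, the mean preceding, central, and following duration,
    all in multiples of the overall mean duration."""
    d = np.asarray(durations, dtype=float)
    d = d / d.mean()
    prev, center, nxt = d[:-2], d[1:-1], d[2:]
    edges = np.quantile(center, np.linspace(0.0, 1.0, n_groups + 1))
    # Index of the quantile bin each central duration falls into.
    group = np.clip(np.searchsorted(edges, center, side="right") - 1,
                    0, n_groups - 1)
    rows = [(prev[group == g].mean(),
             center[group == g].mean(),
             nxt[group == g].mean()) for g in range(n_groups)]
    return np.array(rows)  # shape (n_groups, 3), shortest group first
```

If serial dependence is positive, the first and third columns increase with the second, so that groups with long central durations also have longer-than-average neighbors.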

This source of serial dependency – comparatively slow fluctuations of and – predicts several qualitative characteristics not reported previously and now confirmed by experimental observations. First, sequential correlations are predicted (and observed) to be strictly positive at all lags (next period, one-after-next period, and so on) (Figure 6d). In other words, it predicts that several successive dominance periods are shorter (or longer) than average.

Second, due to the contrast dependence of autocorrelation time, sequential correlations are predicted (and observed) to increase with image contrast (Figure 6d). The experimentally observed degree of contrast dependence is broadly consistent with pool sizes between and (black and red curves in Figure 3e). Larger pools with hundreds of bistable variables do not express the observed dependence on contrast (not shown).

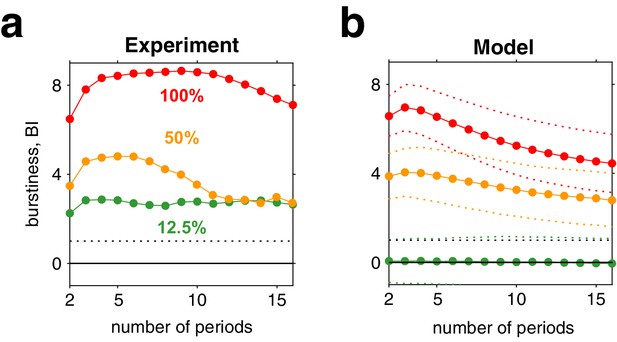

Third, for high image contrast, reversal sequences are predicted (and observed) to contain extended episodes with dominance periods that are short or extended episodes with periods that are long (Figure 6c). When quantified in terms of a ‘burstiness index,’ the degree of inhomogeneity in predicted and observed reversal sequences is comparable (see Appendix 1, section Burstiness and Appendix 1—figure 8).

Many previous models of BR (e.g., Laing and Chow, 2002) postulated selective adaptation of competing representations to account for serial dependency. However, selective adaptation is an opponent process that favors positive correlations between different dominance periods, but negative correlations between same dominance periods. To demonstrate this point, we fitted such a model to reproduce our experimental observations (, , , and ) for five image contrasts . As expected, the alternative model predicts negative correlations for same dominance periods (Figure 6d, right panel), contrary to what is observed.
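The distinction between correlations of different and same dominance periods can be made concrete with lagged correlations of a reversal sequence. In a strictly alternating sequence, odd lags compare periods of different percepts and even lags compare periods of the same percept. The helper below is a hypothetical sketch, not the authors' analysis code:

```python
import numpy as np

def lagged_correlations(durations, max_lag=4):
    """Pearson correlation between durations separated by 1..max_lag
    reversals. Odd lags pair different percepts, even lags the same percept
    (assumption: percepts alternate strictly)."""
    d = np.asarray(durations, dtype=float)
    return [float(np.corrcoef(d[:-lag], d[lag:])[0, 1])
            for lag in range(1, max_lag + 1)]

# Caricature of an opponent process: long and short periods alternate.
opponent = np.tile([1.0, 3.0], 50)
opponent_corr = lagged_correlations(opponent, max_lag=2)
```

In this caricature the sign of the correlation alternates with lag parity, whereas slow fluctuations shared by both percepts would produce positive correlations at all lags.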

Discussion

We have shown that many well-known features of BR are reproduced, and indeed guaranteed, by a particular dynamical mechanism. Specifically, this mechanism reproduces the counterintuitive input dependence of dominance durations (‘Levelt’s propositions’), the stereotypical shape of dominance distributions (‘scaling property’), and the positive sequential correlation of dominance periods. The explanatory power of the proposed mechanism is considerably higher than that of previous models. Indeed, the observations explained exhibited more effective degrees of freedom (approximately 14) than the mechanism itself (between 3 and 4).

The proposed mechanism is biophysically plausible in terms of the out-of-equilibrium dynamics of a modular and hierarchical network of spiking neurons (see also further below). Individual modules idealize the input dependence of attractor transitions in assemblies of spiking neurons. All synaptic effects superimpose linearly, consistent with extended mean-field theory for neuronal networks (Amit and Brunel, 1997; Van Vreeswijk and Sompolinski, 1996). The interaction between ‘rivaling’ sets of modules (‘pools’) results in divisive normalization, which is consistent with many cortical models (Carandini and Heeger, 2011; Miller, 2016).

It has long been suspected that multistable phenomena in visual, auditory, and tactile perception may share a similar mechanistic origin. As the features of BR explained here are in fact universal features of multistable phenomena in different modalities, we hypothesize that similar out-of-equilibrium dynamics of modular networks may underlie all multistable phenomena in all sensory modalities. In other words, we hypothesize that this may be a general mechanism operating in many perceptual representations.

Dynamical mechanism

Two principal alternatives have been considered for the dynamical mechanism of perceptual decision-making: drift-diffusion models (Luce, 1986; Ratcliff and Smith, 2004) and recurrent network models (Wang, 2008; Wang, 2012). The mechanism proposed here combines both alternatives: at its evidence level, sensory information is integrated, over both space and time, by ‘local attractors’ in a discrete version of a drift-diffusion process. At its decision level, the population dynamics of a recurrent network implements a winner-take-all competition between ‘non-local attractors.’ Together, the two levels form a ‘nested attractor’ system (Braun and Mattia, 2010) operating perpetually out of equilibrium.

A recurrent network with strong competition typically ‘normalizes’ individual responses relative to the total response (Miller, 2016). Divisive normalization is considered a canonical cortical computation (Carandini and Heeger, 2011), for which multiple rationales can be found. Here, divisive normalization is augmented by indiscriminate feedforward inhibition. This combination ensures that decision activity rapidly and reliably categorizes differential input strength, largely independently of total input strength.

Another key feature of the proposed mechanism is that a ‘dominant’ decision pool applies feedback suppression to the associated evidence pool. Selective suppression of evidence for a winning hypothesis features in computational theories of ‘hierarchical inference’ (Rao and Ballard, 1999; Lee and Mumford, 2003; Parr and Friston, 2017b; Pezzulo et al., 2018), as well as in accounts of multistable perception inspired by such theories (Dayan, 1998; Hohwy et al., 2008; Weilnhammer et al., 2017). A normative reason for feedback suppression arises during continuous inference in uncertain and volatile environments, where the accumulation of sensory information is ongoing and cannot be restricted to appropriate intervals (Veliz-Cuba et al., 2016). Here, optimal change detection requires an exponentially rising bias against evidence for the most likely state, ensuring that even weak changes are detected, albeit with some delay.

The pivotal feature of the proposed mechanism is its pools of bistable variables or ‘local attractors.’ Encoding sensory inputs in terms of persistent ‘activations’ of local attractor assemblies (rather than in terms of transient neuronal spikes) creates an intrinsically retentive representation: sites that respond are also sites that retain information (for a limited time). Our results are consistent with a few tens of bistable variables in each pool. In the proposed mechanism, differential activity of two pools accumulates evidence against the dominant appearance until a threshold is reached and a reversal ensues (see also Barniv and Nelken, 2015; Nguyen et al., 2020). Conceivably, this discrete non-equilibrium dynamics might instantiate a variational principle of inference such as ‘maximum caliber’ (Pressé et al., 2013; Dixit et al., 2018).

Emergent features

The components of the proposed mechanism interact to guarantee the statistical features that characterize BR and other multistable phenomena. Discretely stochastic accumulation of differential evidence against the dominant appearance ensures sensitivity of dominance durations to non-dominant input. It also ensures the invariance of relative variability (‘scaling property’) and gamma-like distribution shape of dominance durations. Due to a non-trivial interaction with the competitive decision, discretely stochastic fluctuations of evidence-level activity express themselves in a serial dependency of dominance durations. Several features of this dependency were unexpected and not reported previously, for example, the sensitivity to image contrast and the ‘burstiness’ of dominance reversals (i.e., extended episodes in which dominance periods are consistently longer or shorter than average). The fact that these predictions are confirmed by our experimental observations provides further support for the proposed mechanism.

Relation to previous models

How does the proposed mechanism compare to previous ‘dynamical’ models of multistable phenomena? It is of similar complexity to previous minimal models (Laing and Chow, 2002; Wilson, 2007; Moreno-Bote et al., 2010) in that it assumes four state variables at two dynamical levels, one slow (accumulation) and one fast (winner-take-all competition). It differs in reversing their ordering: visual input impinges first on the slow level, which then drives the fast level. It also differs in that stochasticity dominates the slow dynamics (as suggested by van Ee, 2009), not the fast dynamics. However, the most fundamental difference is discreteness (pools of bistable variables), which shapes all key dynamical properties.

Unlike many previous models (e.g., Laing and Chow, 2002; Wilson, 2007; Moreno-Bote et al., 2007; Moreno-Bote et al., 2010; Cohen et al., 2019), the proposed mechanism does not include adaptation (stimulation-driven weakening of evidence), but a phenomenologically similar feedback suppression (perception-driven weakening of evidence). Evidence from perceptual aftereffects supports the existence of both stimulation- and perception-driven adaptation, albeit at different levels of representation. Aftereffects in the perception of simple visual features – such as orientation, spatial frequency, or direction of motion (Blake and Fox, 1974; Lehmkuhle and Fox, 1975; Wade and Wenderoth, 1978) – are driven by stimulation rather than by perceived dominance, whereas aftereffects in complex features – such as spiral motion, subjective contours, rotation in depth (Wiesenfelder and Blake, 1990; Van der Zwan and Wenderoth, 1994; Pastukhov et al., 2014a) – typically depend on perceived dominance. Several experimental observations related to BR have been attributed to stimulation-driven adaptation (e.g., negative priming, flash suppression, generalized flash suppression; Tsuchiya et al., 2006). The extent to which a perception-driven adaptation could also explain these observations remains an open question for future work.

Multistable perception induces a positive priming or ‘sensory memory’ (Pearson and Clifford, 2005; Pastukhov and Braun, 2008; Pastukhov et al., 2013a), which can stabilize a dominant appearance during intermittent presentation (Leopold et al., 2003; Maier et al., 2003; Sandberg et al., 2014). This positive priming exhibits rather different characteristics (e.g., shape-, size- and motion-specificity, inducement period, persistence period) than the negative priming/adaptation of rivaling representations (de Jong et al., 2012; Pastukhov et al., 2013a; Pastukhov and Braun, 2013b; Pastukhov et al., 2014a; Pastukhov et al., 2014b; Pastukhov, 2016). To our mind, this evidence suggests that sensory memory is mediated by additional levels of representation and not by self-stabilization of rivaling representations, as has been suggested (Noest et al., 2007; Leptourgos, 2020). To incorporate sensory memory, the present model would have to be extended to include three hierarchical levels (evidence, decision, and memory), as previously proposed by Gigante et al., 2009.

BR arises within local regions of the visual field, measuring approximately to in the fovea (Leopold, 1997; Logothetis, 1998). No rivalry ensues when the stimulated locations in the left and right eye are more distant from each other. The computational model presented here encompasses only one such local region, and therefore cannot reproduce spatially extended phenomena such as piecemeal rivalry (Blake et al., 1992) or traveling waves (Wilson et al., 2001). To account for these phenomena, the visual field would have to be tiled with replicant models linked by grouping interactions (Knapen et al., 2007; Bressloff and Webber, 2012).

A particularly intriguing previous model (Wilson, 2003) postulated a hierarchy with competing and adapting representations in eight state variables at two separate levels, one lower (monocular) and another higher (binocular) level. This ‘stacked’ architecture could explain the fascinating experimental observation that one image can continue to dominate (dominance durations ) even when images are rapidly swapped between eyes (period ) (Kovács et al., 1996; Logothetis et al., 1996). We expect that our hierarchical model could also account for this phenomenon if it were to be replicated at two successive levels. It is tempting to speculate that such ‘stacking’ might have a normative justification in that it might subserve hierarchical inference (Yuille and Kersten, 2006; Hohwy et al., 2008; Friston, 2010).

Another previous model (Li et al., 2017) used a hierarchy with 24 state variables at three separate levels to show that a stabilizing influence of selective visual attention could also explain slow rivalry when images are swapped rapidly. Additionally, this rather complex model reproduced the main features of Levelt’s propositions, but did not consider the scaling property or sequential dependency. The model shared some of the key features of the present model (divisive inhibition, differential excitation-inhibition), but added a multiplicative attentional modulation. As the present model already incorporates the ‘biased competition’ that is widely thought to underlie selective attention (Sabine and Ungerleider, 2000; Reynolds and Heeger, 2009), we expect that it could reproduce attentional effects by means of additive modulations.

Continuous inference

The notion that multistable phenomena such as BR reflect active exploration of explanatory hypotheses for sensory evidence has a venerable history (von Helmholtz, 1867; Barlow et al., 1972; Gregory, 1980; Leopold and Logothetis, 1999). The mechanism proposed here is in keeping with that notion: higher-level ‘explanations’ compete for control (‘dominance’) of phenomenal appearance in terms of their correspondence to lower-level ‘evidence.’ An ‘explanation’ takes control if its correspondence is sufficiently superior to that of rival ‘explanations.’ The greater the superiority, the longer control is retained. Eventually, alternative ‘explanations’ seize control, if only briefly. This manner of operation is also consistent with computational theories of ‘analysis by synthesis’ or ‘hierarchical inference,’ although there are many differences in detail (Rao and Ballard, 1999; Parr and Friston, 2017b; Pezzulo et al., 2018).

Interacting with an uncertain and volatile world necessitates continuous and concurrent evaluation of sensory evidence and selection of motor action (Cisek and Kalaska, 2010; Gold and Stocker, 2017). Multistable phenomena exemplify continuous decision-making without external prompting (Braun and Mattia, 2010). Sensory decision-making has been studied extensively, mostly in episodic choice tasks, and the neural circuits and activity dynamics underlying episodic decision-making – including representations of potential choices, sensory evidence, and behavioral goals – have been traced in detail (Cisek and Kalaska, 2010; Gold and Shadlen, 2007; Wang, 2012; Krug, 2020). Interestingly, there seems to be substantial overlap between choice representations in decision-making and in multistable situations (Braun and Mattia, 2010).

Continuous inference has been studied extensively in auditory streaming paradigms (Winkler et al., 2012; Denham et al., 2014). The auditory system seems to continually update expectations for sound patterns on the basis of recent experience. Compatible patterns are grouped together in auditory awareness, and incompatible patterns result in spontaneous reversals between alternatives. Many aspects of this rich phenomenology are reproduced by computational models driven by some kind of ‘prediction error’ (Mill et al., 2013). The dynamics of two recent auditory models (Barniv and Nelken, 2015; Nguyen et al., 2020) are rather similar to the model presented here: while one sound pattern dominates awareness, evidence against this pattern is accumulated at a subliminal level.

Relation to neural substrate

What might be the neural basis of the bistable variables/‘local attractors’ proposed here? Ongoing activity in sensory cortex appears to be low-dimensional, in the sense that the activity of neurons with similar response properties varies concomitantly (‘shared variability,’ ‘noise correlations’; Ponce-Alvarez et al., 2012; Mazzucato et al., 2015; Engel et al., 2016; Rich and Wallis, 2016; Mazzucato et al., 2019). This shared variability reflects the spatial clustering of intracortical connectivity (Muir and Douglas, 2011; Okun et al., 2015; Cossell et al., 2015; Lee et al., 2016; Rosenbaum et al., 2017) and unfolds over moderately slow time scales (in the range of to both in primates and rodents; Ponce-Alvarez et al., 2012; Mazzucato et al., 2015; Cui et al., 2016; Engel et al., 2016; Rich and Wallis, 2016; Mazzucato et al., 2019).

Possible dynamical origins of shared and moderately slow variability have been studied extensively in theory and simulation (for reviews, see Miller, 2016; Huang and Doiron, 2017; La Camera et al., 2019). Networks with weakly clustered connectivity (e.g., 3% rewiring) can express a metastable attractor dynamics with moderately long time scales (Litwin-Kumar and Doiron, 2012; Doiron and Litwin-Kumar, 2014; Schaub et al., 2015; Rosenbaum et al., 2017). In a metastable dynamics, individual (connectivity-defined) clusters transition spontaneously between distinct and quasi-stationary activity levels (‘attractor states’) (Tsuda, 2001; Stern et al., 2014).

Evidence for metastable attractor dynamics in cortical activity is accumulating steadily (Mattia et al., 2013; Mazzucato et al., 2015; Rich and Wallis, 2016; Engel et al., 2016; Marcos et al., 2019; Mazzucato et al., 2019). Distinct activity states with exponentially distributed durations have been reported in sensory cortex (Mazzucato et al., 2015; Engel et al., 2016), consistent with noise-driven escape transitions (Doiron and Litwin-Kumar, 2014; Huang and Doiron, 2017). And several reports are consistent with external input modulating cortical activity mostly indirectly, via the rate of state transitions (Fiser et al., 2004; Churchland et al., 2010; Mazzucato et al., 2015; Engel et al., 2016; Mazzucato et al., 2019).

The proposed mechanism assumes bistable variables with noise-driven escape transitions, with transition rates modulated exponentially by external synaptic drive. Following previous work (Cao et al., 2016), we show this to be an accurate reduction of the population dynamics of metastable networks of spiking neurons.
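A consequence of exponentially modulated escape rates can be sketched as follows. The symmetric form used here, with activation and inactivation rates scaled by `exp(+drive)` and `exp(-drive)`, is an assumption made for illustration and may differ from the exact parameterization in the model equations. `base_rate` and `drive` are hypothetical names:

```python
import math

def escape_rates(drive, base_rate=1.0):
    """Activation and inactivation rates of one bistable variable,
    assuming symmetric exponential modulation by the synaptic drive."""
    rate_on = base_rate * math.exp(drive)
    rate_off = base_rate * math.exp(-drive)
    return rate_on, rate_off

def steady_state_activation(drive, base_rate=1.0):
    """Long-run probability that the variable is in its active state."""
    rate_on, rate_off = escape_rates(drive, base_rate)
    return rate_on / (rate_on + rate_off)
```

Under this assumption the steady-state activation probability is a logistic function of the drive, `1 / (1 + exp(-2 * drive))`, independent of `base_rate`, while the characteristic time `1 / (rate_on + rate_off)` is longest at zero drive.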

Unfortunately, the spatial structure of the ‘shared variability’ or ‘noise correlations’ in cortical activity described above is poorly understood. However, we estimate that the cortical representation of our rivaling display involves approximately and of cortical surface in cortical areas V1 and V4, respectively (Winawer and Witthoft, 2015; Winawer and Benson, 2021). Accordingly, in each of these two cortical areas, the neural representation of rivaling stimulation can comfortably accommodate several thousand recurrent local assemblies, each capable of expressing independent collective dynamics (i.e., ‘classic columns’ comprising several ‘minicolumns’ with distinct stimulus selectivity; Nieuwenhuys, 1994; Kaas, 2012). Thus, our model assumes that the representation of two rivaling images engages approximately 1–2% of the available number of recurrent local assemblies.

Neurophysiological correlates of BR

Neurophysiological correlates of BR have been studied extensively, often by comparing reversals of phenomenal appearance during binocular stimulation with physical alternation (PA) of monocular stimulation (e.g., Leopold and Logothetis, 1996; Scheinberg and Logothetis, 1997; Logothetis, 1998; Wilke et al., 2006; Aura et al., 2008; Keliris et al., 2010; Panagiotaropoulos et al., 2012; Bahmani et al., 2014; Xu et al., 2016; Kapoor et al., 2020; Dwarakanath et al., 2020). At higher cortical levels, such as inferior temporal cortex (Scheinberg and Logothetis, 1997) or prefrontal cortex (Panagiotaropoulos et al., 2012; Kapoor et al., 2020; Dwarakanath et al., 2020), BR and PA elicit broadly comparable neurophysiological responses that mirror perceptual appearance. Specifically, activity crosses its average level at the time of each reversal, roughly in phase with perceptual appearance (Scheinberg and Logothetis, 1997; Kapoor et al., 2020). In primary visual cortex (area V1), where many neurons are dominated by input from one eye, neurophysiological correlates of BR and PA diverge in an interesting way: whereas modulation of spiking activity is weaker during BR than PA (Leopold and Logothetis, 1996; Logothetis, 1998; Wilke et al., 2006; Aura et al., 2008; Keliris et al., 2010), measures thought to record dendritic inputs are modulated comparably under both conditions (Aura et al., 2008; Keliris et al., 2010; Bahmani et al., 2014; Yang et al., 2015; Xu et al., 2016). A stronger divergence is observed at an intermediate cortical level (visual area V4), where neurons respond to both eyes. Whereas some units modulate their spiking activity comparably during BR and PA (i.e., increased activity when preferred stimulus becomes dominant), other units exhibit the opposite modulation during BR (i.e., reduced activity when preferred stimulus gains dominance) (Leopold and Logothetis, 1996; Logothetis, 1998; Wilke et al., 2006). 
Importantly, at this intermediate cortical level, activity crosses its average level well before and after each reversal (Leopold and Logothetis, 1996; Logothetis, 1998), roughly in quarter phase with perceptual appearance.

Some of these neurophysiological observations are directly interpretable in terms of the model proposed here. Specifically, activity modulation at higher cortical levels (inferotemporal cortex, prefrontal cortex) could correspond to ‘decision activity,’ predicted to vary in phase with perceptual appearance. Similarly, activity modulation at intermediate cortical levels (area V4) could correspond to ‘evidence activity,’ which is predicted to vary in quarter phase with perceptual appearance. This identification would also be consistent with the neurophysiological evidence for attractor dynamics in columns of area V4 (Engel et al., 2016). The subpopulation of area V4 with opposite modulation could mediate feedback suppression from decision levels. If so, our model would predict this subpopulation to vary in counterphase with perceptual appearance. Finally, the fascinating interactions observed within primary visual cortex (area V1) are well beyond the scope of our simple model. Presumably, a ‘stacked’ model with two successive levels of competitive interactions at monocular and binocular levels of representation (Wilson, 2003; Li et al., 2017) would be required to account for these phenomena.

Conclusion

As multistable phenomena and their characteristics are ubiquitous in visual, auditory, and tactile perception, the mechanism we propose may form a general part of sensory processing. It bridges neural, perceptual, and normative levels of description and potentially offers a ‘comprehensive task-performing model’ (Kriegeskorte and Douglas, 2018) for sensory decision-making.

Materials and methods

Psychophysics

Six practiced observers participated in the experiment (four males, two females). Informed consent, and consent to publish, was obtained from all observers, and ethical approval Z22/16 was obtained from the Ethics Commission of the Faculty of Medicine of the Otto-von-Guericke University, Magdeburg. Stimuli were displayed on an LCD screen (EIZO ColorEdge CG303W, resolution pixels, viewing distance was 104 cm, single pixel subtended , refresh rate 60 Hz) and were viewed through a mirror stereoscope, with viewing position being stabilized by chin and head rests. Display luminance was gamma-corrected and average luminance was .

Two grayscale circular orthogonally oriented gratings ( and ) were presented foveally to each eye. Gratings had a diameter of , spatial period . To avoid a sharp outer edge, grating contrast was modulated with a Gaussian envelope (inner radius , ). Tilt and phase of gratings were randomized for each block. Five contrast levels were used: 6.25, 12.5, 25, 50, and 100%. Contrast of each grating was systematically manipulated, so that each contrast pair was presented in two blocks (50 blocks in total). Blocks were long and separated by a compulsory 1 min break. Observers reported on the tilt of the visible grating by continuously pressing one of two arrow keys. They were instructed to press only during exclusive visibility of one of the gratings, so that mixed percepts were indicated by neither key being pressed (25% of total presentation time). To facilitate binocular fusion, gratings were surrounded by a dichoptically presented square frame (outer size 9.8°, inner size 2.8°).

Dominance periods of ‘clear visibility’ were extracted in sequence from the final of each block and the mean linear trend was subtracted from all values. Values from the initial were discarded. To make the dominance periods of different observers comparable, values were rescaled by the ratio of the all-condition-all-observer average () and the all-condition average of each observer (). Finally, dominance periods from symmetric conditions with were combined into a single category , where () was the contrast viewed by the dominant (suppressed) eye. The number of observed dominance periods ranged from 900 to 1700 per contrast combination ().

For the dominance periods observed in each condition, first, second, and third central moments were computed, as well as coefficient of variation and skewness relative to coefficient of variation:

The expected standard error of the mean for distribution moments is 2% for the mean, 3% for the coefficient of variation, and 12% for skewness relative to coefficient of variation, assuming 1000 gamma-distributed samples.
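As a minimal sketch of the statistics named above (not the authors' analysis code), the following Python function computes the mean, the coefficient of variation, and the skewness relative to the coefficient of variation from a list of dominance durations. The function name and the dictionary keys are illustrative assumptions, and the central moments are computed as population (biased) estimates.

```python
import math

def dominance_statistics(durations):
    """Mean, coefficient of variation, and skewness relative to c_V.

    Hypothetical helper: central moments are population estimates.
    """
    n = len(durations)
    mean = sum(durations) / n
    # Second and third central moments
    m2 = sum((t - mean) ** 2 for t in durations) / n
    m3 = sum((t - mean) ** 3 for t in durations) / n
    cv = math.sqrt(m2) / mean      # coefficient of variation
    skew = m3 / m2 ** 1.5          # standardized skewness
    return {"mean": mean, "cv": cv, "skew_over_cv": skew / cv}

stats = dominance_statistics([1.0, 1.0, 4.0])
```

For gamma-distributed durations, skewness relative to the coefficient of variation equals 2 regardless of the shape parameter, which makes this ratio a convenient summary of distribution shape.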

Coefficients of sequential correlations were computed from pairs of periods with opposite dominance (first and next: ‘lag’ ), pairs of periods with same dominance (first and next but one: ‘lag’ ), and so on,

where and are mean duration and mean square duration, respectively. The expected standard deviation of the coefficient of correlation is 0.03, assuming 1000 gamma-distributed samples.
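The correlation coefficient can be sketched as follows, assuming the usual normalized form: the mean product of durations separated by a given lag, minus the squared mean duration, divided by the difference between mean square duration and squared mean duration. This form is an assumption consistent with the quantities named in the text; the function name is hypothetical.

```python
def sequential_correlation(durations, lag):
    """Correlation between dominance periods separated by `lag`.

    Assumed form: (<T_i * T_{i+lag}> - <T>^2) / (<T^2> - <T>^2).
    Lag 1 pairs periods of opposite dominance, lag 2 pairs periods
    of the same dominance, and so on.
    """
    n_all = len(durations)
    mean = sum(durations) / n_all
    mean_sq = sum(t * t for t in durations) / n_all
    n_pairs = n_all - lag
    cross = sum(durations[i] * durations[i + lag] for i in range(n_pairs)) / n_pairs
    return (cross - mean ** 2) / (mean_sq - mean ** 2)

# A strictly alternating sequence is anticorrelated at lag 1
# and perfectly correlated at lag 2.
sequence = [1.0, 3.0, 1.0, 3.0, 1.0, 3.0]
c1 = sequential_correlation(sequence, 1)
c2 = sequential_correlation(sequence, 2)
```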

To analyze ‘burstiness,’ we adapted a statistical measure used in neurophysiology (Compte et al., 2003). First, sequences of dominance periods were divided into all possible subsets of successive periods and mean durations computed for each subset. Second, heterogeneity was assessed by computing, for each size , the coefficient of variation cV over mean durations, compared to the mean and variance of the corresponding coefficient of variation for randomly shuffled sequences of dominance periods. Specifically, a ‘burstiness index’ was defined for each subset size as

where is the coefficient of variation over subsets of size and where and are, respectively, mean and mean square of the coefficients of variation from shuffled sequences.
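One way to read this procedure is sketched below. It assumes that ‘all possible subsets of successive periods’ means sliding windows of a fixed size, and that the index is the observed coefficient of variation minus the shuffled mean, divided by the shuffled standard deviation (a z-score). Both readings, the function names, and the number of shuffles are assumptions rather than the authors' implementation.

```python
import math
import random

def coefficient_of_variation(values):
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return math.sqrt(var) / mean

def window_means(durations, size):
    """Mean duration over every window of `size` successive periods."""
    return [sum(durations[i:i + size]) / size
            for i in range(len(durations) - size + 1)]

def burstiness_index(durations, size, n_shuffles=200, seed=0):
    """Z-score of the observed c_V against shuffled sequences (assumed form)."""
    rng = random.Random(seed)
    observed = coefficient_of_variation(window_means(durations, size))
    work = list(durations)
    shuffled = []
    for _ in range(n_shuffles):
        rng.shuffle(work)  # destroys serial order, keeps the distribution
        shuffled.append(coefficient_of_variation(window_means(work, size)))
    mean = sum(shuffled) / n_shuffles
    mean_sq = sum(c * c for c in shuffled) / n_shuffles
    return (observed - mean) / math.sqrt(mean_sq - mean ** 2)

# Durations clustered into runs of short and long periods
bursty = ([1.0] * 20 + [3.0] * 20) * 2
index = burstiness_index(bursty, 5)
```

A positive index indicates that window means vary more than expected from the shuffled sequences, that is, short and long periods tend to cluster.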

Model

The proposed mechanism for BR dynamics relies on discrete stochastic processes (‘birth-death’ or generalized Ehrenfest processes). Bistable variables transition between active and inactive states with time-varying Poisson rates (activation) and (inactivation). Two ‘evidence pools’ of such variables, and , represent two kinds of visual evidence (e.g., for two visual orientations), whereas two ‘decision pools,’ and , represent alternative perceptual hypotheses (e.g., two grating patterns) (see also Appendix 1—figure 1). Thus, the instantaneous dynamical state is represented by four active counts or, equivalently, by four active fractions .

The development of pool activity over time is described by a master equation for the probability of the number of active variables.

For constant , the distribution is binomial at all times (Karlin and McGregor, 1965; van Kampen, 1981). The time development of the number of active units in pool is an inhomogeneous Ehrenfest process and corresponds to the count of activations, minus the count of deactivations,

where is a discrete random variable drawn from a binomial distribution with trial number and success probability .
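The update described above can be sketched as a discrete-time simulation of a single pool. This is a minimal illustration, assuming that the success probability of each binomial draw is the transition rate multiplied by a small time step; the rate values, the time step, and the function names are hypothetical, while the pool size of 25 follows the parameter table.

```python
import random

def binomial(rng, trials, p):
    """Draw from a binomial distribution by summing Bernoulli trials."""
    return sum(rng.random() < p for _ in range(trials))

def ehrenfest_step(n_active, pool_size, rate_on, rate_off, dt, rng):
    """One step: add activations of inactive units, subtract deactivations.

    Assumes rate * dt << 1 so that it can serve as a probability.
    """
    activations = binomial(rng, pool_size - n_active, rate_on * dt)
    deactivations = binomial(rng, n_active, rate_off * dt)
    return n_active + activations - deactivations

def simulate(pool_size=25, rate_on=1.0, rate_off=3.0, dt=0.01,
             steps=20000, seed=1):
    rng = random.Random(seed)
    n_active = 0
    trace = []
    for _ in range(steps):
        n_active = ehrenfest_step(n_active, pool_size, rate_on, rate_off, dt, rng)
        trace.append(n_active)
    return trace

trace = simulate()
```

With constant rates, the active fraction relaxes toward rate_on / (rate_on + rate_off), so the long-run mean count in this example should be close to 25 × 0.25 = 6.25.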

All variables of a pool have identical transition rates, which depend exponentially on the ‘potential difference’ between states, with an input-dependent component and a baseline component :

where and are baseline rates and and baseline components. The input-dependent components of effective potentials are modulated linearly by synaptic couplings

Visual inputs are and , respectively, where

is a monotonically increasing, logarithmic function of image contrast, with parameter γ.
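To make the exponential rate dependence and the contrast nonlinearity concrete, the sketch below uses two assumed functional forms that are consistent with the description but are not taken from the text: activation and inactivation rates that scale as exp(+Δu/2) and exp(−Δu/2) with the potential difference Δu, and a contrast function ln(1 + c/γ) / ln(1 + 1/γ), normalized to 1 at full contrast. All function names are hypothetical; the numerical values in the usage lines are taken from the parameter table.

```python
import math

def visual_input(contrast, gamma=0.071):
    """Assumed logarithmic contrast function, increasing from 0 to 1."""
    return math.log(1 + contrast / gamma) / math.log(1 + 1 / gamma)

def transition_rates(u_input, u_baseline, baseline_rate):
    """Assumed anti-symmetric exponential dependence on the potential difference."""
    delta_u = u_input + u_baseline
    rate_on = baseline_rate * math.exp(+delta_u / 2)
    rate_off = baseline_rate * math.exp(-delta_u / 2)
    return rate_on, rate_off

def steady_state_fraction(rate_on, rate_off):
    """Active fraction reached under constant rates."""
    return rate_on / (rate_on + rate_off)

# Evidence pool driven only by visual input at full contrast
# (feedback suppression is ignored in this illustration).
w_vis, u0_evidence, nu_evidence = 1.780, -1.65, 1 / 1.95
on, off = transition_rates(w_vis * visual_input(1.0), u0_evidence, nu_evidence)
fraction = steady_state_fraction(on, off)
```

Under these assumptions, the steady-state active fraction is a logistic function of the potential difference, 1 / (1 + exp(−Δu)), which is why linear changes in input produce graded, saturating changes in pool activity.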

Degrees of freedom

The proposed mechanism has 11 independent parameters – 6 synaptic couplings, 2 baseline rates, 2 baseline potentials, 1 contrast nonlinearity – which were fitted to experimental observations. A 12th parameter – pool size – remained fixed.

| Symbol | Description | Value |
|---|---|---|
| N | Pool size | 25 |
| 1/ve | Baseline rate, evidence | 1.95 ± 0.10 s |
| 1/vr | Baseline rate, decision | 0.018 ± 0.010 s |
|  | Baseline potential, evidence | -1.65 ± 0.24 |
|  | Baseline potential, decision | -4.94 ± 0.67 |
| wvis | Visual input coupling | 1.780 ± 0.092 |
| wexc | Feedforward excitation | 152.2 ± 3.7 |
| winh | Feedforward inhibition | 32.10 ± 2.3 |
| wcomp | Lateral competition | 33.4 ± 1.2 |
| wcoop | Lateral cooperation | 15.21 ± 0.59 |
| wsupp | Feedback suppression | 2.34 ± 0.14 |
| γ | Contrast nonlinearity | 0.071 ± 0.011 |

Fitting procedure

The experimental dataset consisted of two 5 × 5 arrays for mean and coefficient of variation , plus two scalar values for skewness and correlation coefficient . The two scalar values corresponded to the (rounded) average values observed over the 5 × 5 combinations of image contrast. In other words, the fitting procedure prescribed contrast dependencies for the first two distribution moments, but not for correlation coefficients.

The fit error was computed as a weighted sum of relative errors

with weighting emphasizing distribution moments.
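A weighted sum of relative errors could be computed along the following lines. The weights shown are placeholders (the actual weighting is not reproduced here), normalizing by the sum of weights is an assumption, and the function names and data values are hypothetical.

```python
def relative_error(model, observed):
    """Mean absolute relative deviation between model and observation."""
    pairs = list(zip(model, observed))
    return sum(abs(m - o) / abs(o) for m, o in pairs) / len(pairs)

def weighted_fit_error(model, observed, weights):
    """Weighted average of per-observable relative errors (assumed normalization)."""
    total_weight = sum(weights.values())
    weighted = sum(w * relative_error(model[key], observed[key])
                   for key, w in weights.items())
    return weighted / total_weight

# Placeholder values: two contrast conditions for the mean, one for c_V.
observed = {"mean": [2.0, 4.0], "cv": [0.5]}
model = {"mean": [2.2, 3.6], "cv": [0.6]}
weights = {"mean": 3.0, "cv": 1.0}  # hypothetical weighting
error = weighted_fit_error(model, observed, weights)
```

In this toy case the relative errors are 10% for the mean and 20% for the coefficient of variation, giving a weighted error of 12.5%.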

Approximately 400 minimization runs were performed, starting from random initial configurations of model parameters. For the optimal parameter set, the resulting fit error for the mean observer dataset was approximately 13%. More specifically, the fit errors for mean dominance , coefficient of variation , relative skewness , and correlation coefficients and were 9.8, 7.9, 8.7, 70, and 46%, respectively. Here, fit errors for relative skewness and correlation coefficients were computed for the isocontrast conditions, where experimental observations were least noisy.

To confirm that the resulting fit was indeed optimal and could not be further improved, we studied the behavior of the fit error in the vicinity of the optimal parameter set. For each parameter , 30 values were picked in the direct vicinity of the optimal parameter (Appendix 1—figure 9). The resulting scatter plot of value pairs and fit error was approximated by a quadratic function, which provided 95% confidence intervals for . For all parameters except , the estimated quadratic function was convex and the coefficient of the Hessian matrix associated with the fit error was positive. Additionally, the estimated extremum of each parabola was close to the corresponding optimal parameter, confirming that the parameter set was indeed optimal (Appendix 1—figure 9).
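The quadratic approximation can be illustrated with an ordinary least-squares parabola fit, from which convexity and the location of the extremum follow directly. This sketch covers only the fit itself, not the confidence intervals, and uses synthetic noise-free data; the function name and all numerical values are illustrative assumptions.

```python
def fit_parabola(xs, ys):
    """Least-squares fit of y = a*x^2 + b*x + c; returns (a, b, c)."""
    # Power sums entering the normal equations
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    # Augmented matrix for the unknowns (a, b, c)
    m = [[s[4], s[3], s[2], t[2]],
         [s[3], s[2], s[1], t[1]],
         [s[2], s[1], s[0], t[0]]]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(3):
            if row != col:
                factor = m[row][col] / m[col][col]
                m[row] = [v - factor * p for v, p in zip(m[row], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))

# Synthetic example: 30 parameter values around a hypothetical optimum of 1.78
xs = [1.5 + 0.02 * i for i in range(30)]
ys = [3.0 * (x - 1.78) ** 2 + 0.13 for x in xs]
a, b, c = fit_parabola(xs, ys)
is_convex = a > 0          # positive curvature indicates a minimum
extremum = -b / (2 * a)    # location of the parabola's vertex
```

A positive leading coefficient together with an extremum near the fitted parameter value corresponds to the two checks described in the text.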

To minimize fit error, we repeated stochastic gradient descent from randomly chosen initial parameters. Interestingly, the ensemble of suboptimal solutions found by this procedure populated a low-dimensional manifold of the parameter space in which three principal components accounted for 95% of the positional variance. Thus, models that reproduce experimental observations with varying degrees of freedom exhibit only 3–4 effective degrees of freedom. We surmise that this is due, on the one hand, to the severe constraints imposed by our model architecture (e.g., discrete elements, exponential input dependence of transition rates) and, on the other hand, to the requirement that the dynamical operating regime behaves as a relaxation oscillator.

In support of this interpretation, we note that our 5 × 5 experimental measurements of and were accurately described by ‘quadric surfaces’ () with six coefficients each. Together with the two further measurements of and , our experimental observations accordingly exhibited approximately effective degrees of freedom. This number was sufficient to constrain the 3–4 dimensional manifold of parameters, where the model operated as a relaxation oscillator with a particular dynamics, specifically, a slow-fast dynamics associated, respectively, with the accumulation and reversal phases of BR.

Alternative model

As an alternative model (Laing and Chow, 2002), a combination of competition, adaptation, and image-contrast-dependent noise was fitted to reproduce four 5 × 5 arrays for mean , coefficient of variation , skewness , and correlation coefficient . Fit error was computed as the average of relative errors

For purposes of comparison, a weighted fit error with weighting was computed, as well.

The model comprised four state variables and independent colored noise:

where is a nonlinear activation function and is white noise.

Additionally, both input and noise amplitude were assumed to depend nonlinearly on image contrast :

This coupling between input and noise amplitude served to stabilize the shape of dominance distributions over different image contrasts (‘scaling property’).

Parameters for competition β = 10, activity time constant , noise time constant , and activation function were fixed. Parameters for adaptation strength , adaptation time constant , contrast dependence of input , , and contrast dependence of noise amplitude , were explored within the ranges indicated.

The best fit (determined with a genetic algorithm) was as follows: , , , , , . The fit errors for mean dominance , coefficient of variation , skewness , and correlation coefficient were, respectively, 11.3, 8.3, 20, and 55%. The fit error for correlation coefficient was 180% (because the model predicted negative values). The combined average for , , and was 13.2%. The fit error obtained with weighting was 16.4%.

For Figure 6d, the alternative model was fitted only to observations at equal image contrast, : mean dominance , coefficient of variation , skewness , and correlation coefficient . The combined average fit error for , , and was 11.2%. The combined average for all four observables was 22%.

Spiking network simulation

To illustrate a possible neural realization of ‘local attractors,’ we simulated a competitive network with eight identical assemblies of excitatory and inhibitory neurons, which collectively expresses a spontaneous and metastable dynamics (Mattia et al., 2013). One assembly (denoted as ‘foreground’) comprised 150 excitatory leaky-integrate-and-fire neurons, which were weakly coupled to the 1050 excitatory neurons of the other assemblies (denoted as ‘background’), as well as 300 inhibitory neurons. Note that background assemblies are not strictly necessary and are included only for the sake of verisimilitude. The connection probability between any two neurons was . Excitatory synaptic efficacy between neurons in the same assembly and in two different assemblies was and , respectively. Inhibitory synaptic efficacy was , and the efficacy of excitatory synapses onto inhibitory neurons was . Finally, ‘foreground’ neurons, ‘background’ neurons, and inhibitory neurons each received independent Poisson spike trains of , and , respectively. Other settings were as in Mattia et al., 2013. As a result of these settings, ‘foreground’ activity transitioned spontaneously between an ‘off’ state of approximately and an ‘on’ state of approximately .

Appendix 1

Model schematics

Proposed mechanism of binocular rivalry dynamics (schematic).

Bistable variables are represented by white (inactive) or red (active) circles. Four pools, each with variables, are shown: two evidence pools and , with active counts and , and two decision pools, and , with active counts and . Excitatory and inhibitory synaptic couplings include selective feedforward excitation , indiscriminate feedforward inhibition , recurrent excitation , and mutual inhibition of decision pools, as well as selective feedback suppression of evidence pools. Visual input to evidence pools and is a function of image contrast and .

Metastable attractor dynamics

Metastable dynamics of spiking neural network.

(a) Eight assemblies of excitatory neurons (schematic, light and dark gray disks) and one pool of inhibitory neurons (white disc) interact competitively with recurrent random connectivity. We focus on one ‘foreground’ assembly (dark gray), with firing rate and selective external input . (b) ‘Foreground’ activity explores an effective energy landscape with two distinct steady states (circles), separated by ridge points (diamonds). As this landscape changes with external input , transition rates between ‘on’ and ‘off’ states also change with external input. (c) Simulation to establish transition rates of foreground assembly. External input is stepped periodically between and . Spiking activity of 10 representative excitatory neurons in a single trial, population activity over 25 trials, thresholded population activity over 25 trials, and activation probability (fraction of ‘on’ states). (d) Relaxation dynamics in response to step change of , with ‘on’ transitions (left) and ‘off’ transitions (right). (e) Average state transition rates vary anti-symmetrically and exponentially with external input: and (red and blue lines).