By Mark Patterson (@marknpatterson)

Our goal at eLife is to be a platform for research communication that encourages and recognises the most responsible behaviours in science, but to achieve that there is a pressing need to reform practices around research assessment. Towards that end we recently collaborated with several journal publishers (PLOS, EMBO, Royal Society, Springer Nature and AAAS) and researchers (Stephen Curry and Vincent Larivière) to compare the citation distributions that underpin impact factor calculations in various journals. The motivation for the project was to show how little value the impact factor has as a tool for research assessment, and the results have been made available as a preprint at bioRxiv.

The key points are:

- Citation distributions are broad, ranging from zero to more than 100 citations per article in many journals.

- Distributions are skewed and overlapping, with the majority of articles receiving modest numbers of citations in the 0-25 range.

- The journal impact factor, which attempts to estimate the ‘average’ number of citations an article in a journal receives, therefore provides little information about the likely significance of any given article in that journal, regardless of the journal's impact factor.
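The impact factor is, roughly, a mean: the citations received in a given year by a journal's items from the previous two years, divided by the number of citable items published in those two years. A mean is a poor summary of a skewed distribution, and the short Python sketch below illustrates why. The citation counts are purely hypothetical, chosen only to mimic the shape described above; they are not data from the study.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles in one journal (illustrative only,
# not taken from the study): most articles are cited modestly, one very heavily.
citations = [0, 1, 2, 3, 4, 5, 6, 8, 10, 111]

avg = mean(citations)      # an impact-factor-style "average" per article
mid = median(citations)    # what a typical article actually receives
below = sum(c < avg for c in citations) / len(citations)  # share cited less than the mean

print(f"mean = {avg:.1f}, median = {mid}, share below mean = {below:.0%}")
# prints: mean = 15.0, median = 4.5, share below mean = 90%
```

A single highly cited article pulls the mean to more than three times the median, so nine of the ten articles fall below the "average" that the journal-level figure would suggest.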

These features of citation distributions and impact factors are quite well known, and yet impact factors are frequently used as proxies for research quality in the evaluation of research and researchers. By sharing the data behind the impact factor, the authors of this study hope to raise awareness of its limitations and to encourage journals that promote their impact factors to publish their citation distributions as well.

The focus on impact factors in research evaluation is unhealthy for science because it has led to extreme levels of competition to publish in high-impact-factor journals. Such competition can encourage behaviours (such as exaggeration of claims, selective reporting of data, and worse) that undermine the integrity of published work and adversely affect the conduct of science. These concerns have been noted, for example, in studies of scientific culture (Nuffield Council on Bioethics Report on the Culture of Scientific Research in the UK) and reproducibility (Academy of Medical Sciences Report on Reproducibility and Reliability of Biomedical Research).

At the same time, scientists now have a wealth of possibilities to share their findings, data and resources so that others can build on their work. To take much greater advantage of these possibilities, initiatives such as DORA and the Leiden Manifesto have called for approaches to research evaluation that focus on a rich collection of scientific accomplishments and move away from impact factors and journal names. Highlighting the deficiencies of impact-factor-based assessment is one way to encourage this shift towards more holistic and meaningful approaches to research assessment.

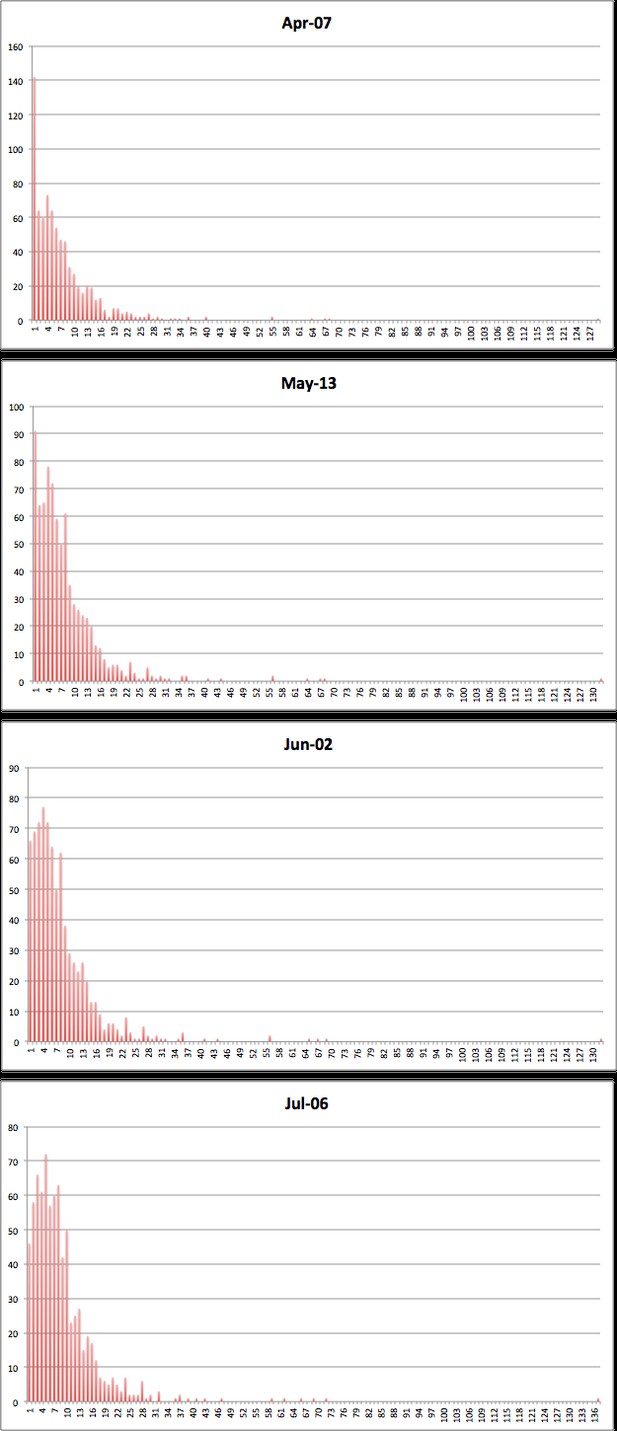

At eLife, our position has been neither to use the impact factor to promote the journal nor to try to influence it in any way, although it is possible to do so. However, one observation specific to the eLife citation distribution was that the Thomson Reuters Web of Science database was missing many citations that could be identified using other searches. It is important that databases such as this give an accurate view of the citation network surrounding any given article. Thomson Reuters is working to correct the eLife data, as can be seen in the four distributions shown below, which were generated at different times over the past few months. We will update this post when Thomson Reuters has completed the data cleanup.

Large numbers of missing citations were also found for some of the other journals in our analysis, which led to a further recommendation: we encourage all publishers to make their reference lists publicly available, so that the data can be studied openly and deficiencies can be understood and corrected. Crossref provides a very straightforward way to do this, as discussed in a recent blog post.

We were delighted to collaborate with such a diverse and prominent group of journals to highlight the limitations of the impact factor in research evaluation. We hope that other journals that do promote the impact factor will follow suit and share the underlying citation data. Most important of all, we hope that administrators and researchers involved in the assessment of science will base their judgments on the merits of specific scientific findings and achievements.