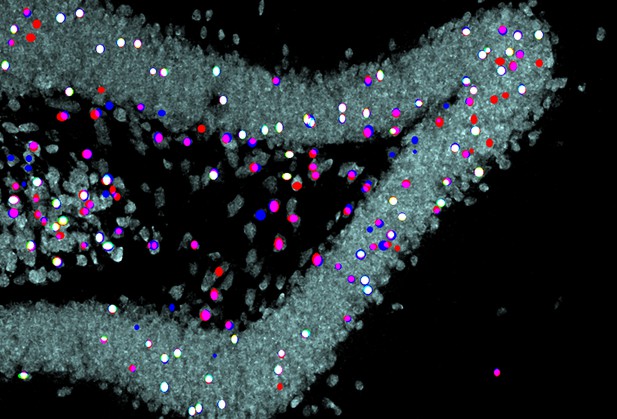

An image of a mouse brain in which dots mark the somewhat subjective annotations of three human experts, shown in red, blue and green. Image credit: Dennis Segebarth and Robert Blum (CC BY 4.0)

Research in biology generates many image datasets, mostly from microscopy. These images have to be analyzed, and much of this analysis relies on a human expert looking at the images and manually annotating features. Image datasets are often large, and human annotation can be subjective, so automating image analysis is highly desirable. This is where machine learning approaches, such as deep learning, have proven to be useful. Before deep learning algorithms can work, they first have to be ‘trained’ on a dataset that has been annotated by human experts. The algorithms learn from this training dataset which features are relevant and can then look for these features in other image data.

However, because these models try to mimic the annotation behavior presented to them during training as closely as possible, they can also reproduce an expert’s subjectivity. Segebarth, Griebel et al. asked whether this was the case, whether it affected the outcome of the image data analysis, and whether the problem could be avoided when using deep learning to analyze imaging datasets.

For this research, Segebarth, Griebel et al. used microscopy images of mouse brain sections in which a protein called cFOS had been labeled with a fluorescent tag. This protein typically controls the rate at which DNA information is copied into RNA, leading to the production of proteins. Its activity can be influenced experimentally by exposing mice to behavioral tests. This experimental manipulation therefore provides a reference against which the results of deep learning-based image analyses can be evaluated.

First, the fluorescent images were interpreted manually by a group of human experts. Their results were then used to train a large variety of deep learning models. Models were trained either on the results of an individual expert or on the results pooled from all experts. The latter approach produced a consensus model, a deep learning model that learned from the personal annotation preferences of all experts. This made it possible to test whether training a model on multiple experts reduces the risk of subjectivity. Because the training of deep learning models involves random elements, Segebarth, Griebel et al. also tested whether combining the predictions from multiple models in a so-called model ensemble improves the consistency of the analyses. For evaluation, the annotations of the deep learning models were compared to those of the human experts, to ensure that the results were not influenced by the subjective behavior of one person. Finally, the results of all bioimage annotations were compared to the experimental results from analyzing the mice’s behaviors, in order to check whether the models were able to detect the behavioral effect on cFOS.
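The two ideas in this paragraph, pooling the annotations of several experts and combining the predictions of several models, can be illustrated with a small sketch. It is not the procedure used by Segebarth, Griebel et al.: the summary does not say how annotations were pooled, so the pixel-wise majority vote, the averaging of model probabilities, the 0.5 threshold, and the function names `consensus_mask` and `ensemble_mask` are all assumptions made for illustration, and the arrays are toy data rather than real annotations.

```python
import numpy as np


def consensus_mask(expert_masks, min_votes=None):
    """Pool binary masks from several experts with a pixel-wise vote.

    Assumption: a pixel counts as annotated when a strict majority of
    experts marked it. The original study may have pooled differently.
    """
    stack = np.stack([np.asarray(m, dtype=bool) for m in expert_masks])
    if min_votes is None:
        min_votes = stack.shape[0] // 2 + 1  # strict majority
    return stack.sum(axis=0) >= min_votes


def ensemble_mask(model_probabilities, threshold=0.5):
    """Average per-pixel probabilities from several models, then threshold.

    Assumption: each model outputs a probability map of the same shape.
    """
    mean_probability = np.mean(np.stack(model_probabilities), axis=0)
    return mean_probability >= threshold


# Toy annotations from three hypothetical experts (1 = feature present).
expert_a = np.array([[1, 1, 0], [0, 0, 0]])
expert_b = np.array([[1, 0, 0], [0, 1, 0]])
expert_c = np.array([[1, 1, 0], [0, 0, 1]])
consensus = consensus_mask([expert_a, expert_b, expert_c])

# Toy probability maps from three hypothetical trained models.
model_1 = np.array([[0.9, 0.4], [0.2, 0.6]])
model_2 = np.array([[0.8, 0.7], [0.1, 0.3]])
model_3 = np.array([[0.7, 0.3], [0.3, 0.5]])
ensemble = ensemble_mask([model_1, model_2, model_3])

print(consensus.astype(int))
print(ensemble.astype(int))
```

In this toy case, pixels marked by only one expert drop out of the consensus, and a pixel that a single model scores highly is not kept once the three models are averaged. Both steps dampen the influence of any one annotator or any one training run.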

Segebarth, Griebel et al. concluded that combining the knowledge of multiple experts reduces the subjectivity of bioimage annotation by deep learning algorithms. Combining such consensus information in a group of deep learning models improves the quality of bioimage analysis, so that the results are reliable, transparent and less subjective.