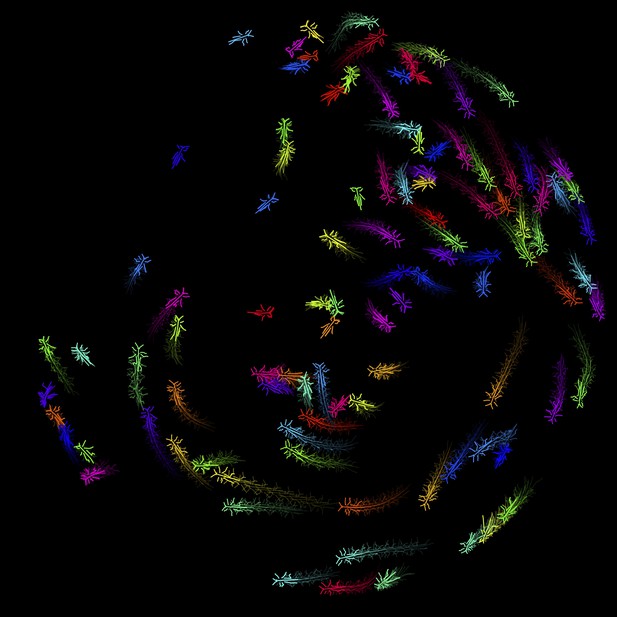

Posture data for a swarm of 100 locusts collectively marching in a circular arena. Image credit: Jake Graving (CC BY 4.0)

Studying animal behavior can reveal how animals make decisions based on what they sense in their environment, but measuring behavior can be difficult and time-consuming. Computer programs that measure and analyze animal movement have made these studies faster and easier to complete. These tools have also made more advanced behavioral experiments possible, which have yielded new insights about how the brain organizes behavior.

Recently, scientists have started using new machine learning tools called deep neural networks to measure animal behavior. These tools learn to measure animal posture – the positions of an animal’s body parts in space – directly from real data, such as images or videos, without being explicitly programmed with instructions to perform the task. This allows deep learning algorithms to automatically track the locations of specific animal body parts in videos faster and more accurately than previous techniques. This ability to learn from images also removes the need to attach physical markers to animals, which may alter their natural behavior.

Now, Graving et al. have created a new deep learning toolkit for measuring animal behavior that combines components from previous tools with the latest advances in computer science. They found that simple modifications to how the algorithms are built and trained can greatly improve their performance. For example, adding connections between the layers of artificial ‘neurons’ in the deep neural network, and training the algorithm to learn the full geometry of the body – by drawing lines between body parts – both enhance its accuracy. With these changes, the new toolkit can measure an animal’s pose from previously unseen images with high speed and accuracy, after being trained on just 100 examples. Graving et al. tested their model on videos of fruit flies, zebras and locusts, and found that, after training, it was able to accurately track the animals’ movements. The new toolkit has an easy-to-use software interface and is freely available for other scientists to use and build on.
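For readers who want to see what "drawing lines between body parts" could look like as training data, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the toolkit's own code: the function names, the three-part "animal", the image size and the blur width `sigma` are all hypothetical. Each body part becomes a blurred spot on its own map, and each pair of connected body parts becomes a blurred line on a further map, so that a network trained to reproduce these maps has to learn how the parts fit together.

```python
import math

def point_segment_distance(px, py, ax, ay, bx, by):
    """Distance from point (px, py) to the segment from (ax, ay) to (bx, by)."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project the point onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def gaussian(distance, sigma):
    """Confidence that falls smoothly from 1 to 0 as distance grows."""
    return math.exp(-(distance ** 2) / (2.0 * sigma ** 2))

def make_training_targets(keypoints, skeleton, height, width, sigma=1.5):
    """Return one confidence map per body part, then one per skeleton line.

    Each map is a list of rows, indexed as map[y][x].
    """
    maps = []
    # Blurred spots marking each body part.
    for (kx, ky) in keypoints:
        maps.append([[gaussian(math.hypot(x - kx, y - ky), sigma)
                      for x in range(width)] for y in range(height)])
    # Blurred lines joining connected body parts.
    for (i, j) in skeleton:
        (ax, ay), (bx, by) = keypoints[i], keypoints[j]
        maps.append([[gaussian(point_segment_distance(x, y, ax, ay, bx, by), sigma)
                      for x in range(width)] for y in range(height)])
    return maps

# Hypothetical animal with three labelled parts, given as (x, y) pixels.
keypoints = [(4.0, 4.0), (10.0, 4.0), (16.0, 8.0)]  # head, thorax, abdomen
skeleton = [(0, 1), (1, 2)]                          # head-thorax, thorax-abdomen
targets = make_training_targets(keypoints, skeleton, height=12, width=20)
```

In this sketch, `targets` holds five maps: three for the body parts and two for the lines between them. A pixel halfway between the head and thorax scores low on the head map but high on the head-thorax line map, which is the extra geometric information the text describes.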

The new toolkit may help scientists in many fields, including neuroscience and psychology, as well as computer scientists. For example, companies like Google and Apple use similar algorithms to recognize gestures, so making those algorithms faster and more efficient may make them more suitable for mobile devices like smartphones or virtual-reality headsets. Other possible applications include diagnosing and tracking injuries or movement-related diseases in humans and livestock.