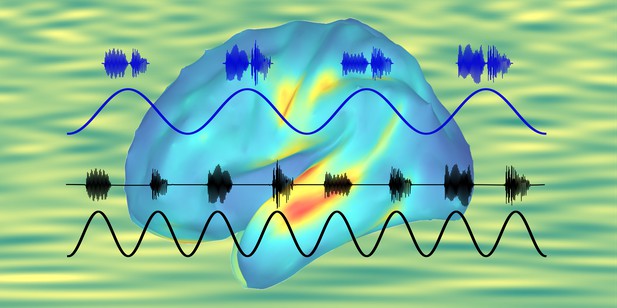

The brain can apply rules to segment a speech sequence into chunks. Brainwaves can synchronize to both the rhythm of speech and the rhythm of chunks formed in the mind. Image credit: Nai Ding and Peiqing Jin (CC BY 4.0)

From digital personal assistants like Siri and Alexa to customer service chatbots, computers are slowly learning to talk to us. But as anyone who has interacted with them will appreciate, the results are often imperfect.

Each time we speak or write, we use grammatical rules to combine words in a specific order. These rules enable us to produce new sentences that we have never seen or heard before, and to understand the sentences of others. But computer scientists adopt a different strategy when training computers to use language. Instead of grammar, they provide the computers with vast numbers of example sentences and phrases. The computers then use this input to calculate how likely one word is to follow another in a given context. "The sky is blue" is more common than "the sky is green", for example.

But is it possible that the human brain also uses this approach? When we listen to speech, the brain shows patterns of activity that correspond to units such as sentences. But previous research has been unable to tell whether the brain is using grammatical rules to recognise sentences, or whether it relies on a probability-based approach like a computer.

Using a simple artificial language, Jin et al. have now managed to tease apart these alternatives. Healthy volunteers listened to lists of words while lying inside a brain scanner. The volunteers had to group the words into pairs, otherwise known as chunks, by following various rules that simulated the grammatical rules present in natural languages. Crucially, the volunteers’ brain activity tracked the chunks – which differed depending on which rule had been applied – rather than the individual words. This suggests that the brain processes speech using abstract rules instead of word probabilities.

While computers are now much better at processing language than they used to be, they still perform worse than people. Understanding how the human brain solves this task could ultimately help to improve the performance of personal digital assistants.