By Paul Shannon, Head of Technology

Continuum is the platform that powers eLife 2.0. The first question I’m often asked is: “how much does it cost?”. The short answer: “it’s free”. Continuum is available as free open-source software and licensed under an MIT licence, meaning that you can use it as you wish, even for commercial purposes. However, like any software, even though its ticket price may be zero, there are still running, maintenance and people costs involved.

The more granular nature of the services in Continuum means that costs can be easily broken down with regard to the scale, diversity and needs of any publisher. There are three main aspects to the costs of running eLife 2.0 on Continuum: the cost of the computers (or instances) that run the software, the cost of the bandwidth that people use to access those computers and the cost of the people used to ensure those computers work as expected 24 hours a day, seven days a week.

In common with other systems, Continuum also needs to be set up and adapted to every publisher's needs to allow them to do more with everything they publish – there are options for both internal development teams and for using a digital agency for that. We’ll look at setup costs in a future post, since we’re currently improving the developer experience to help teams get up and running quickly.

Compute and bandwidth

We use Amazon Web Services (AWS) for hosting Continuum and the total monthly bill for running the live services and bandwidth is around $1,710.

This can be broken down as follows, and also includes pro-rata costs for reserved instances (i.e. we pay upfront for 44 instances to get a 40% reduction in cost, but share those out between running live services, development machines and data science activities).

| Description | Service | Live services (per month) |
|---|---|---|
| Computing (on demand) | EC2 | $400.00 |
| Computing (predictive) | EC2 reserved instances | $100.00 |
| Database | RDS | $500.00 |
| Content delivery | CloudFront | $500.00 |
| Storage | S3 | $100.00 |
| Data transfer | Data transfer | $30.00 |
| Caching | ElastiCache | $40.00 |
| Messaging and orchestration | Other (SQS, SWF, etc.) | $40.00 |
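As a quick check on the arithmetic, the rows of the table can be summed and each service expressed as a share of the bill. This is only a sketch using the rounded figures above; the dictionary keys are labels taken from the table, not AWS identifiers.

```python
# Monthly AWS costs for eLife's live services in US dollars, copied from the table.
monthly_costs = {
    "EC2 (on demand)": 400.00,
    "EC2 reserved instances": 100.00,
    "RDS": 500.00,
    "CloudFront": 500.00,
    "S3": 100.00,
    "Data transfer": 30.00,
    "ElastiCache": 40.00,
    "Other (SQS, SWF, etc.)": 40.00,
}

total = sum(monthly_costs.values())


def share(service: str) -> float:
    """Return one service's percentage share of the total monthly bill."""
    return 100 * monthly_costs[service] / total


# Print the services from most to least expensive, then the total.
for service, cost in sorted(monthly_costs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{service:<24} ${cost:>8.2f}  {share(service):5.1f}%")
print(f"{'Total':<24} ${total:>8.2f}")
```

The total matches the figure of around $1,710 quoted above, with the database and content delivery together making up more than half of it.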

You’re probably now wondering what you get for that outlay. Other than the flexibility and agility that running your own publishing platform provides, those computers manage to publish around 120 articles per month and that bandwidth helps serve 500,000 visitors per month too. We handle peaks in excess of 300 requests per minute on eLife, with an average of 200 – that equates to roughly 100 simultaneous visitors on the site. Our highest peak was just over 1,000 concurrent visitors (when the Homo naledi discovery was first published on eLife), so we have load tested the site to 10 times that amount and performance remained acceptable.
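For a rough sense of unit cost, the monthly bill can be divided by the article and visitor numbers. This is a simplification of my own rather than a figure we track: it attributes the whole bill to each measure in turn, even though the same infrastructure serves both.

```python
MONTHLY_BILL_USD = 1710        # approximate monthly AWS bill quoted in this post
ARTICLES_PER_MONTH = 120       # articles published per month
VISITORS_PER_MONTH = 500_000   # visitors served per month
PEAK_CONCURRENT_VISITORS = 1_000

# Whole bill attributed to publishing, then to serving visitors (not additive).
cost_per_article = MONTHLY_BILL_USD / ARTICLES_PER_MONTH
cost_per_thousand_visitors = MONTHLY_BILL_USD / (VISITORS_PER_MONTH / 1000)

# The load test target was 10 times the highest observed peak.
load_test_target = 10 * PEAK_CONCURRENT_VISITORS

print(f"Hosting cost per article:        ${cost_per_article:.2f}")
print(f"Hosting cost per 1,000 visitors: ${cost_per_thousand_visitors:.2f}")
print(f"Load test target (concurrent):   {load_test_target:,}")
```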

Compute

We over-provision the number of machines we use for two reasons:

- Redundancy – we try to eliminate single points of failure in our live services. There are at least two machines running key services and all databases have a standby copy, ready to go, in a different part of Amazon’s data centre. This makes deployment of new features safer and more seamless as one machine can be updated while another continues to serve traffic. This is especially important for us as we deploy changes up to five times per day.

- Low-touch scaling – we don’t want the journal website to degrade in performance when we have a spike in visitors, which is unpredictable. While we could build complex elastic scaling into the platform, we’ve opted for simplicity for now: additional machines. Since computers are cheaper than people (and don’t need to rest), this means midnight spikes are coped with and we can all sleep soundly.

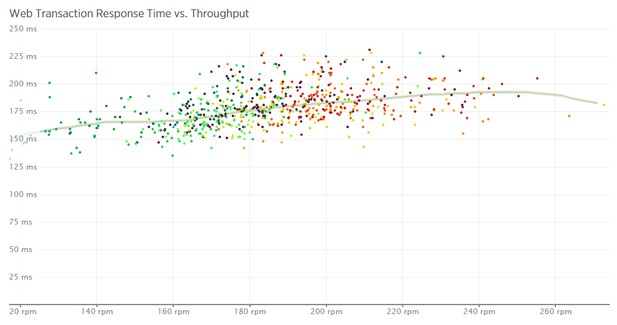

As you’ll see in the more detailed section later, although we have spare capacity we still only take the smallest and least expensive types of machines from Amazon for most of the services. You can see how the response time only increases slightly when the number of requests increases:

Our scalability monitoring graph, with requests per minute (rpm) versus response time (milliseconds).

Bandwidth

With Continuum and eLife 2.0, we have taken care to justify each byte, meaning that every new library, stylesheet or piece of site furniture is evaluated as to whether the potential benefit is likely to outweigh the performance cost. This approach, coupled with the use of progressive enhancement, helps to reduce the amount of bandwidth needed to download the pages and their assets. For example, today’s homepage downloads completely with around 1 MB of data used. Contrast this with other popular journals’ homepages that average over 1.7 MB, with one example downloading 11.2 MB for every fresh visitor to the site.
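To see why page weight matters at our scale, here is a back-of-the-envelope sketch of monthly homepage transfer. It assumes the page sizes are megabytes, that every one of the 500,000 monthly visitors makes exactly one uncached homepage load, and that 1 GB is 1,000 MB; real traffic is more varied, so treat the results as illustrative only.

```python
VISITORS_PER_MONTH = 500_000  # monthly visitors quoted earlier in this post


def monthly_transfer_gb(page_weight_mb: float, page_loads: int = VISITORS_PER_MONTH) -> float:
    """Data transferred per month in GB, assuming one fresh load of the page per visit."""
    return page_weight_mb * page_loads / 1000


# Page weights in MB taken from the paragraph above.
page_weights = {
    "eLife homepage": 1.0,
    "average of other journals": 1.7,
    "heaviest example": 11.2,
}

for label, weight_mb in page_weights.items():
    print(f"{label:<28} {monthly_transfer_gb(weight_mb):>8,.0f} GB per month")
```

Under those assumptions the difference between a 1 MB and a 1.7 MB homepage is roughly 350 GB of CDN transfer every month.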

Most of the data transfer cost is in the use of CloudFront, Amazon’s content delivery network (CDN) service. CloudFront stores cached copies of our web pages and related files, including the figure and PDF assets, and we pay for the amount of data that is transferred to our visitors’ respective browsers. This is a common way of charging for CDN services, and is a cost most hosting options will have to bear. Following good practices to minimise the size of pages, images and related files therefore improves the user experience and saves on the cost of hosting too.

People

There are now five full-time developers working in the eLife Technology and Development team, along with myself, our Head of Product and Data Scientist. We don’t spend much time maintaining the system, though. Instead, we outsource that work to the digital consultancy that helped us initially build Continuum. We reserve 10 hours of time from their development team every month – but we rarely use it, as the system is stable and the quality-driven software practices we followed when building the system mean that actual software defects are rare and are quick to resolve if they do occur. There are often minor changes needed to the system, however, when new types of data are encountered, and this is the most common type of work conducted under the banner of ‘maintenance’.

Our developers do get involved when there are incidents, but spend most of their time improving or adding features to Continuum. We are happy to speak to anyone who wants their team to contribute code to Continuum, so please get in touch if you are interested. Incidents the team have encountered are usually a result of the evolution of the system with new features rather than major errors in the system.

I’ll not detail the cost of the software consultancy we work with here, since it will be very variable depending on location. If you need a comparison though, you should look for a software developer with skills in PHP or Python, and basic knowledge of AWS, with availability of between five and 10 hours a month depending on your volumes.

Calculating your own costs

Since Continuum was launched, we have spoken to people interested in the platform for publishing content for a broad range of reasons: from departments in educational institutions wanting to self-publish their findings to continent-wide archives; and from small societies to the world’s largest publishers. This is why we have specifically architected Continuum to be scalable, and when we talk about scalability we mean scaling down just as much as scaling up. We’re also aware that not everyone will want all of the services Continuum can offer, so I’ll be as granular as possible in the detail of the ‘computer instance types’ and services we use so that you can consider your own use case.

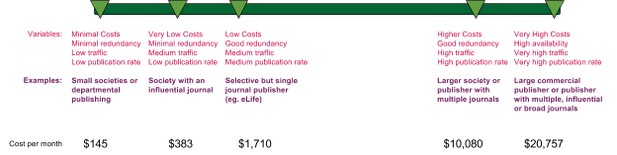

For those who want a quick estimate, let’s explore some different scenarios.

Simple calculator

The variables that will most influence the costs with Continuum are the number of visitors, number of articles published per month and the levels of resiliency you’d like to build into your hosting infrastructure. For eLife, these values are:

- Visitors: 500,000

- Articles published: 120

- Resilience level: good

Having more visitors will increase bandwidth usage in a proportionate way, and compute power will increase in a more stepped way. Publishing more content will also increase compute power needs, as well as the amount of storage/messaging needed.

I’ve calculated a few examples, based on proportionate growth with each of the variables and by estimating the compute power needed for each. I’ve assumed that bandwidth grows linearly with visitors and storage/messaging grows linearly with publications. Here is a summary of the variables and estimated costs for each potential use case:

You can see the data table and the values used for estimates in this simple calculation spreadsheet. Try copying the sheet to perform calculations for your own use case. Let us know if you create a real-world example for yourself – I’d be interested to publish updates to this model in the future.
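The scaling assumptions described above can also be sketched in a few lines of Python. This is not the spreadsheet itself: the grouping of AWS line items into bandwidth, storage/messaging and compute is my own assumption for illustration, and because compute grows in steps rather than smoothly, the hypothetical `compute_factor` parameter is left for you to choose.

```python
# eLife baseline, using the monthly figures from the cost table in this post.
# Grouping of line items (an assumption made for this sketch):
#   bandwidth         = CloudFront + data transfer
#   storage/messaging = S3 + SQS, SWF, etc.
#   compute           = EC2 + reserved instances + RDS + ElastiCache
BASELINE = {
    "visitors": 500_000,
    "articles": 120,
    "bandwidth_usd": 500.0 + 30.0,
    "storage_messaging_usd": 100.0 + 40.0,
    "compute_usd": 400.0 + 100.0 + 500.0 + 40.0,
}


def estimate_monthly_cost(visitors: int, articles: int, compute_factor: float = 1.0) -> float:
    """Estimate a monthly hosting bill in USD relative to the eLife baseline.

    Bandwidth scales linearly with visitors and storage/messaging scales
    linearly with articles. Compute is multiplied by a caller-chosen factor
    (for example 0.25 for a minimal setup, 2.0 for a much larger one).
    """
    bandwidth = BASELINE["bandwidth_usd"] * visitors / BASELINE["visitors"]
    storage = BASELINE["storage_messaging_usd"] * articles / BASELINE["articles"]
    compute = BASELINE["compute_usd"] * compute_factor
    return round(bandwidth + storage + compute, 2)


print(estimate_monthly_cost(500_000, 120))                     # the eLife baseline
print(estimate_monthly_cost(50_000, 12, compute_factor=0.25))  # a much smaller journal
```

The second call is a made-up scenario, not one of the use cases in the spreadsheet; substitute your own visitor and article numbers.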

One variable not included here is the people cost. Keeping any website running smoothly will require some sporadic effort, so you cannot remove that cost completely, even for a minimal setup, as it still needs to be available 24 hours a day. Similarly, a highly available, fault-tolerant system supporting millions of visitors is likely to need different skills or more people, so the variability is hard to predict. The cost should still be considered in your own evaluation.

Ways to save costs

As mentioned, we run eLife on infrastructure with resilience in mind. It can be run on far fewer compute instances, with databases that only have one copy (and regular backups for disaster recovery), and you could try to reduce bandwidth costs by using a cached CDN.

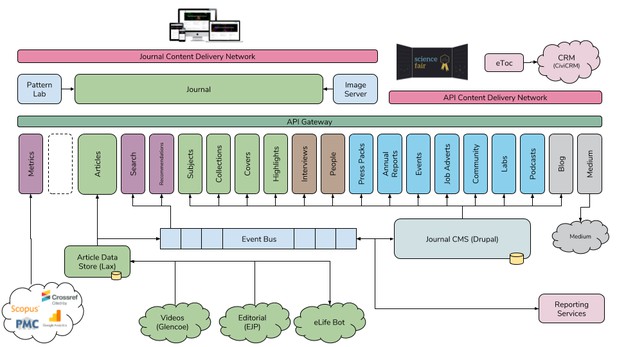

Running multiple services on a single instance is possible with the current architecture:

The eLife 2.0 architecture.

It would be best to segregate services based on their usage type, so we wouldn’t recommend combining two services that require heavy disk access (like the Image Server and Journal CMS). You could also minimise the number of services you use by not having Recommendations or only using Journal CMS for basic list administration to minimise its footprint.

To explore the costs of running Continuum in greater detail, particularly for Heads of Technology, CTOs, CIOs and Lead Developers, the sections below cover the instance types and specific AWS services we use.

Detailed costs

To get started, AWS provides a useful calculator to predict the costs of using its services, but you could also run Continuum on your own hardware or in other popular cloud providers like Google Cloud Platform or Microsoft Azure (which also provide calculators). Note that the Continuum scripts for automated deployment are currently designed for AWS, but we are working on using containers (with Docker) in the future that should ease deployment to any infrastructure.

Details of EC2 instances

Here is a table of the actual instances running eLife right now, grouped by instance size:

| Name | Instance | Description |
|---|---|---|
| iiif--prod--1, iiif--prod--2 | t2.medium | Image server. Resizes, caches and serves images using IIIF standard. Image source is kept on S3 and resized versions are locally cached using large, fast attached storage on these two instances. |
| search--prod--1 | t2.medium | Search server. Provides ordered and filtered lists to homepage and subject pages and also powers the search tool. |
| recommendations--prod--1 | t2.medium | Recommendations. Provides related articles for the sidebar and related content at the end of an article. |
| elife-bot--prod | t2.medium | Publishing automation. Orchestrates and processes jobs for publishing, including submitting to Crossref, transforming JATS and moving files. |
| medium--prod--1 | t2.micro | Medium post. Provides a conduit to medium.com for our magazine content. |
| redirects--prod--1 | t2.nano | Redirection. Provides a quick service to map from other eLife domains like e-lifesciences.org. |
| journal--prod--1, journal--prod--2, journal--prod--3 | t2.small | Journal website. This app serves the visitors and requests data from all other services. It calls lots of other APIs but caches those calls heavily. |
| elife-metrics--prod--1 | t2.small | Metrics. Gathers metrics nightly and then exposes them via an API for views, downloads and citations. |
| journal-cms--prod--1 | t2.small | Journal CMS. Provides authoring tools for non-scholarly content and ways to administer lists, collections, images and the home page carousel. Only serves JSON or images and not HTML. |
| pattern-library--prod--1 | t2.small | Pattern library. Contains the CSS and JS for the front end, but is not required to be run in production as the assets are added to the journal in the codebase. |
| api-gateway--prod--1 | t2.small | API gateway. Redirects requests on the root domain name to individual APIs on separate machines. |
| lax--prod | t2.small | Article data store. Stores and provides an API for all scholarly content. |
| elife-dashboard--prod | t2.small | Publishing dashboard. Gives information on the current status and condition of unpublished articles and allows scheduling and publishing. |
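If you want to price a similar fleet yourself, one approach is to count the instances by type and multiply by hourly prices that you look up for your own region. The sketch below encodes the table above; the `monthly_on_demand_cost` helper and its `hours_per_month` default of 730 are my own illustrative choices, and no real AWS prices are included because they vary by region and over time.

```python
from collections import Counter

# Instance name -> instance type, as listed in the table above.
instances = {
    "iiif--prod--1": "t2.medium",
    "iiif--prod--2": "t2.medium",
    "search--prod--1": "t2.medium",
    "recommendations--prod--1": "t2.medium",
    "elife-bot--prod": "t2.medium",
    "medium--prod--1": "t2.micro",
    "redirects--prod--1": "t2.nano",
    "journal--prod--1": "t2.small",
    "journal--prod--2": "t2.small",
    "journal--prod--3": "t2.small",
    "elife-metrics--prod--1": "t2.small",
    "journal-cms--prod--1": "t2.small",
    "pattern-library--prod--1": "t2.small",
    "api-gateway--prod--1": "t2.small",
    "lax--prod": "t2.small",
    "elife-dashboard--prod": "t2.small",
}

# Number of instances of each type.
counts = Counter(instances.values())


def monthly_on_demand_cost(hourly_prices: dict, hours_per_month: float = 730) -> float:
    """Sum the monthly cost of the fleet for caller-supplied hourly prices (USD)."""
    return sum(hourly_prices[kind] * n * hours_per_month for kind, n in counts.items())


for kind, n in sorted(counts.items()):
    print(f"{kind:<10} x {n}")
```

Pass a dictionary such as `{"t2.nano": ..., "t2.micro": ..., "t2.small": ..., "t2.medium": ...}` with current prices from the AWS pricing pages to get a monthly estimate before any reserved-instance discount.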

Details of database costs

In addition to the compute instances, we also have instances powering databases using the AWS RDS service. This service takes some of the administration out of running a production database, such as hardware provisioning, database setup, patching and backups. It also provides a multi-availability zone copy of our database for the purposes of redundancy. We use PostgreSQL where possible for Continuum, but also support different databases for other applications including CRM, data science and reporting.

| Name | Instance | DB type | Description |
|---|---|---|---|
| elife-dashboard-prod | db.t2.small | PostgreSQL | Stores scheduling and user interaction data for the publishing dashboard |
| elife-metrics-prod | db.t2.small | PostgreSQL | Provides a relational version of the harvested metrics data for use in the metrics API |
| journal-cms-prod | db.t2.medium | PostgreSQL | The database behind the Drupal instance that powers the journal CMS |
| lax-prod | db.t2.small | PostgreSQL | The article data store – contains all article data in JSON format and related metadata |

Other costs

There are other services that contribute to the cost that are worthy of note.

Caching is handled by the CDN at the most external level, but we also have caching layers between services, most notably between the journal web app and the search service using Redis, provided by the AWS ElastiCache service.
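To illustrate the idea of a caching layer between two services, here is a minimal sketch of a time-limited cache placed in front of a slow call. It is not Continuum's code: a plain Python dictionary stands in for Redis, and the `TTLCache` class, its `get_or_fetch` method and the `slow_search` function are all hypothetical names invented for this example.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """A tiny in-memory cache whose entries expire after a fixed time to live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expiry time, value)

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        """Return the cached value for key, calling fetch() only on a miss or expiry."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = fetch()
        self._store[key] = (now + self._ttl, value)
        return value


def slow_search(query: str) -> list:
    """Hypothetical stand-in for a request from the journal app to the search service."""
    return [f"result for {query}"]


cache = TTLCache(ttl_seconds=300)
first = cache.get_or_fetch("search:cell biology", lambda: slow_search("cell biology"))
second = cache.get_or_fetch("search:cell biology", lambda: slow_search("cell biology"))
print(first == second)  # the second call is answered from the cache
```

A shared cache such as Redis adds the benefit that several web servers can reuse the same cached responses, which a per-process dictionary cannot do.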

Communication between services that use an event-driven architecture is handled through SQS and SNS. These, although at a negligible cost, could have an impact on high-throughput journals.

The Amazon Simple Workflow Service is a very cheap yet effective service that provides orchestration of jobs and tasks in the ‘eLife bot’, the part of the platform that does publishing tasks. It basically runs a number of small Python scripts and controls the flow, so could be replaced with more queues and listeners. For now, however, this works very well.
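As a sketch of what "more queues and listeners" could look like, the example below chains a few steps together with one queue between each pair and one listener thread per step. It uses only Python's standard library rather than SWF or SQS, and the step names and the `run_workflow` function are hypothetical, loosely based on the publishing tasks mentioned in this post.

```python
import queue
import threading


def listener(inbox: queue.Queue, outbox: queue.Queue, name: str, handler) -> None:
    """Take jobs from inbox, run one step on each, and pass them on to outbox."""
    while True:
        job = inbox.get()
        if job is None:        # sentinel: no more jobs, tell the next listener too
            outbox.put(None)
            break
        handler(job)
        job["completed"].append(name)
        outbox.put(job)


def run_workflow(article_ids, steps):
    """Run every article through each (name, handler) step in order."""
    queues = [queue.Queue() for _ in range(len(steps) + 1)]
    threads = [
        threading.Thread(target=listener, args=(queues[i], queues[i + 1], name, handler))
        for i, (name, handler) in enumerate(steps)
    ]
    for thread in threads:
        thread.start()
    for article_id in article_ids:
        queues[0].put({"article_id": article_id, "completed": []})
    queues[0].put(None)

    # Collect finished jobs from the last queue until the sentinel arrives.
    results = []
    while (job := queues[-1].get()) is not None:
        results.append(job)
    for thread in threads:
        thread.join()
    return results


# Hypothetical steps; the handlers do nothing here beyond being called.
steps = [
    ("transform_jats", lambda job: None),
    ("move_files", lambda job: None),
    ("submit_to_crossref", lambda job: None),
]
for finished in run_workflow(["article-1", "article-2"], steps):
    print(finished["article_id"], finished["completed"])
```

What a managed workflow service adds over this kind of hand-rolled chain is durable state, retries and visibility into where each job is, which is why the current setup works well for us.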

Next steps

If you’d like to know more, or want more detail on how we host the infrastructure for Continuum, then please get in touch directly by emailing me at: p.shannon@elifesciences.org.

We’ll endeavour to make our data more accurate and timely by utilising cost tagging in AWS, so that we can expose figures directly from AWS Cost Explorer for those interested in future running costs as we scale. Unfortunately, cost tags have to be added prospectively and we didn’t do that at launch, so we will have to wait for more accurate numbers.

This blogpost is cross-posted on Medium.
