Learning the specific quality of taste reinforcement in larval Drosophila

Figures

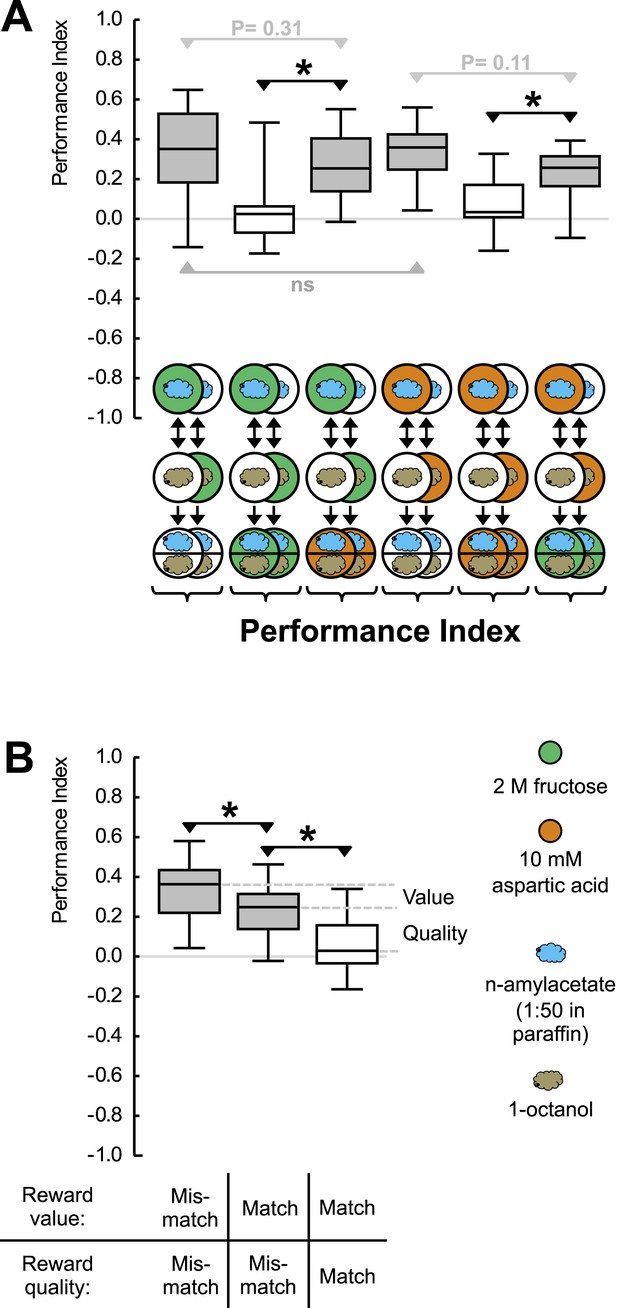

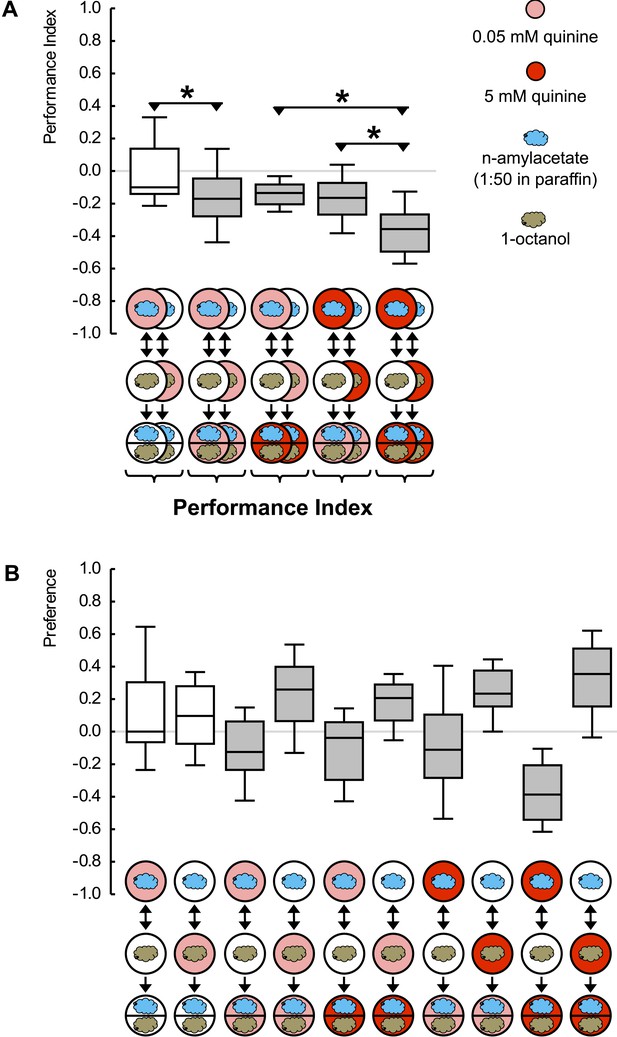

Reward processing by quality and value.

(A) Larvae are trained to associate one of two odors with either 2 M fructose or 10 mM aspartic acid as reward. Subsequently, they are tested for their choice between the two odors, in the absence or in the presence of either substrate. For example, in the left-most panel, a group of larvae is first (upper row) exposed to n-amyl acetate (blue cloud) together with fructose (green circle), and subsequently (middle row) to 1-octanol (gold cloud) without any tastant (white circle). After three cycles of such training, larvae are given the choice between n-amyl acetate and 1-octanol in the absence of any tastant (lower row). A second group of larvae is trained reciprocally, that is, 1-octanol is paired with fructose (second column from left, partially hidden). For the other panels, procedures are analogous. Aspartic acid is indicated by brown circles. (B) Data from (A), pooled for the groups tested on pure agarose (‘Mismatch’ in both value and quality), in the presence of the respective other reward, or in the presence of the training reward. Learned search behavior towards the reward-associated odor is abolished in the presence of the training reward because both reward value and reward quality in the testing situation are as sought-for (‘Match’ in both cases). Search remains partially intact in the presence of the other quality of reward, because reward value is as sought-for (‘Match’) but reward quality is not (‘Mismatch’). Please note that value-memory is apparently weaker than memory for reward quality, and is revealed only when pooling across tastants. Sample sizes: 15–19. Shaded boxes indicate a difference from chance at p < 0.05/6 (A) or p < 0.05/3 (B) (one-sample sign-tests); asterisks indicate pairwise differences between groups at p < 0.05/3 (A) or p < 0.05/2 (B) (Mann–Whitney U-tests).
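The thresholds quoted in this legend (for example p < 0.05/6) are Bonferroni-corrected significance levels applied to one-sample sign-tests against chance. The following is a minimal sketch of that procedure in Python, not the authors' analysis code. The function names and the example scores are hypothetical and serve only to illustrate the arithmetic.

```python
from math import comb

def sign_test_p(scores, chance=0.0):
    """Two-sided exact one-sample sign test of `scores` against `chance`."""
    above = sum(s > chance for s in scores)
    below = sum(s < chance for s in scores)
    n = above + below  # values equal to chance are dropped
    if n == 0:
        return 1.0
    k = min(above, below)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

def bonferroni_alpha(alpha, n_comparisons):
    """Per-test significance level after Bonferroni correction."""
    return alpha / n_comparisons

# Hypothetical performance indices (NOT data from the study)
example_scores = [0.31, 0.12, 0.25, -0.05, 0.40, 0.18, 0.22, 0.09,
                  0.27, 0.15, 0.33, 0.05, 0.19, 0.28, 0.11]

p = sign_test_p(example_scores)
significant = p < bonferroni_alpha(0.05, 6)  # six tests, as in panel (A)
print(f"p = {p:.6f}, significant after correction: {significant}")
```

Dividing the significance level by the number of tests keeps the family-wise error rate at or below 0.05, which is why the divisor changes between panels with different numbers of groups.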

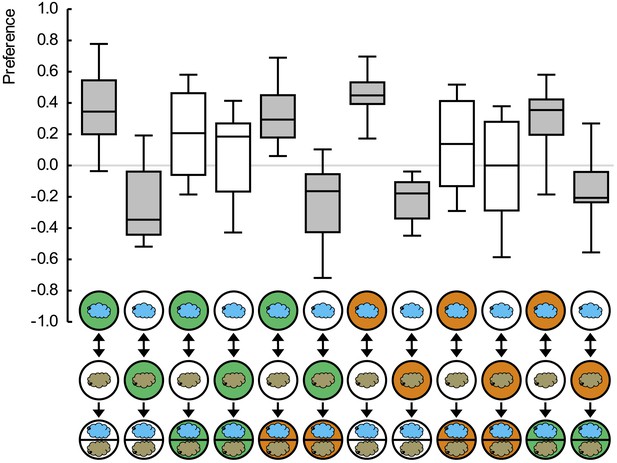

Preference scores for the reciprocally trained groups of Figure 1.

Sample sizes: 15–19. Shaded boxes indicate pairwise differences between groups (p < 0.05/6, Mann–Whitney U-tests). For a detailed description of the sketches, see legend of Figure 1.

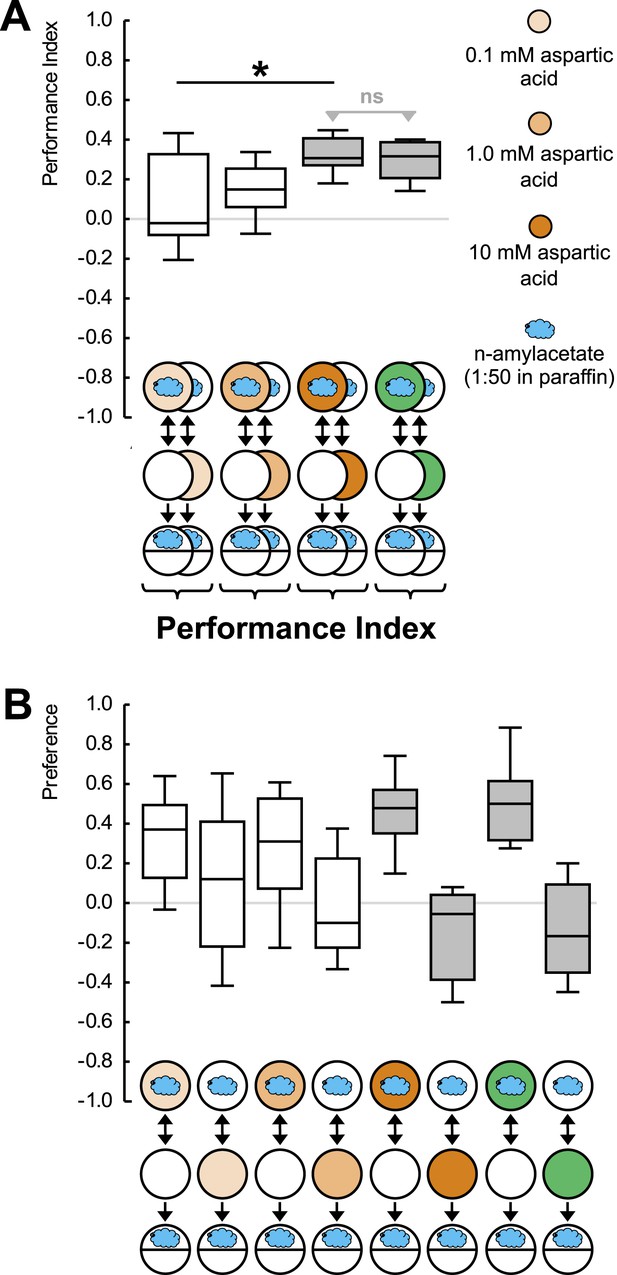

(A, B) Larvae are trained in a one-odor version of the learning paradigm, using different concentrations of aspartic acid as reward.

(A) Raising the aspartic acid concentration increases the associative performance index. At the highest concentration used, levels of learned behavior do not differ from those obtained with 2 M fructose as reward. (B) Preference scores underlying the associative performance indices in (A). Sample sizes: 11–17. Shaded boxes in (A) indicate difference from chance (p < 0.05/4, one-sample sign-tests), in (B) pairwise differences between groups (p < 0.05/4, Mann–Whitney U-tests). Asterisk: difference between groups (p < 0.05, Kruskal–Wallis test). For a detailed description of the sketches, see legend of Figure 1.

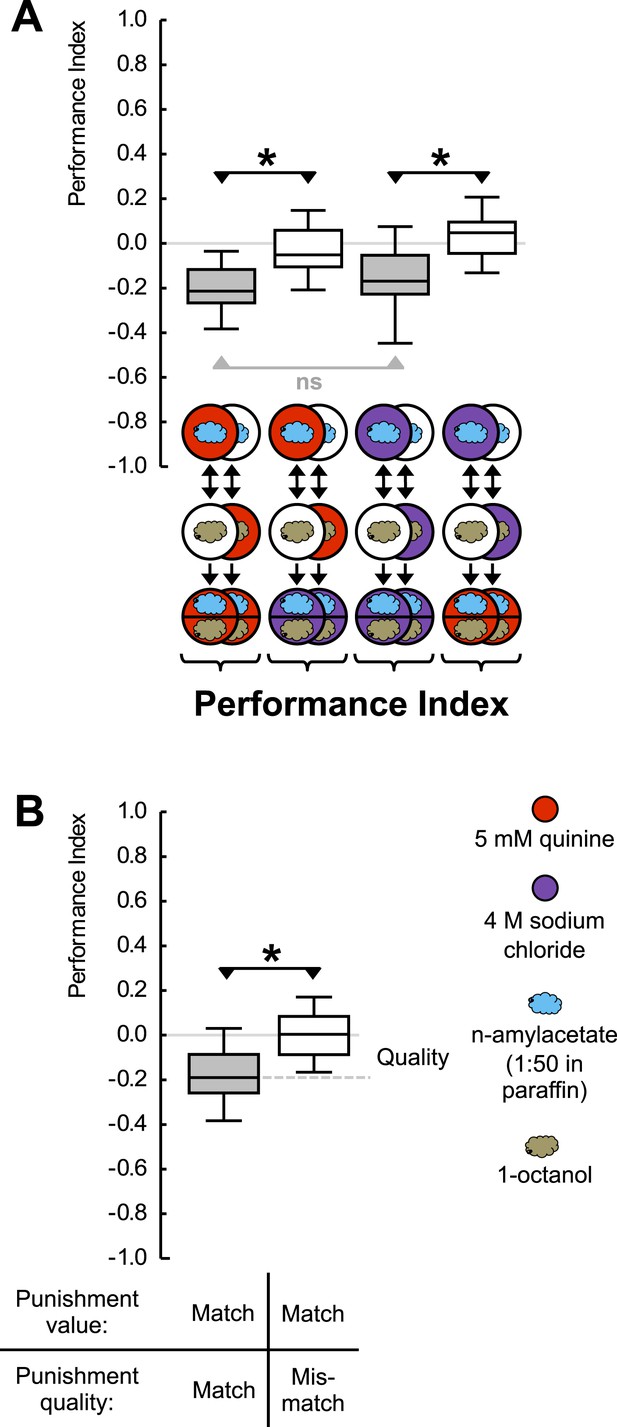

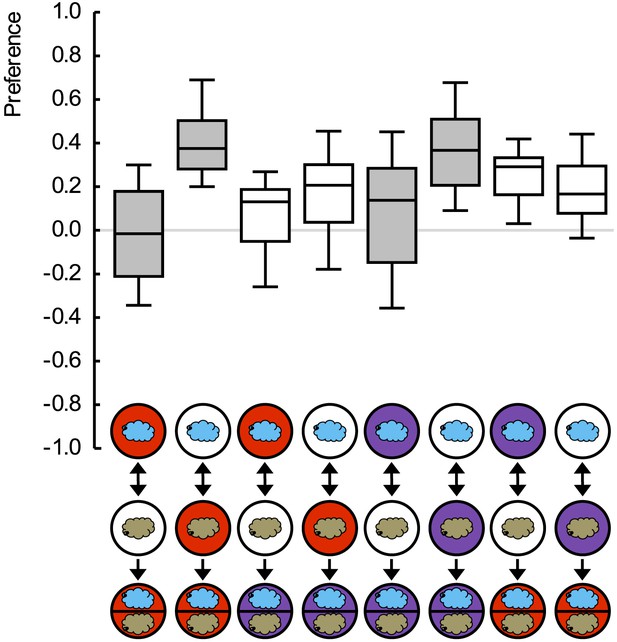

Punishment processing by quality.

(A) Larvae are trained to associate one of two odors with either 5 mM quinine (red circle) or 4 M sodium chloride (purple circle) as punishment and are then tested for their choice between the two odors in the presence of either substrate. The larvae show learned escape from the punishment-associated odor only if the punishment present during the test matches the training punishment in quality. (B) Data from (A) combined according to ‘Match’ or ‘Mismatch’ between test- and training-punishment. We note that value-memory would reveal itself by negative scores upon a match of punishment value despite a mismatch in punishment quality, which is not observed (right-hand box plot, based on the second and fourth box plots from A). Sample sizes: 25–32. Shaded boxes indicate a difference from chance at p < 0.05/4 (A) or p < 0.05/2 (B) (one-sample sign-tests); asterisks indicate pairwise differences between groups at p < 0.05/3 (A) or p < 0.05 (B) (Mann–Whitney U-tests). For a detailed description of the sketches, see legend of Figure 1.

Quinine memory includes quinine strength.

(A) Larvae are trained to associate one of two odors with a quinine punishment, using either a low (light red) or a high (dark red) concentration of quinine. Learned escape behavior is expressed if the testing conditions are as ‘bad’ as the training-punishment, or worse. In other words, neither the value of the training-punishment alone nor the value of the testing situation alone determines the level of learned behavior; a comparison of the two does. (B) Preference scores underlying the associative performance indices in (A). Sample sizes: 22–23. Shaded boxes in (A) indicate p < 0.05/5 from chance (one-sample sign-tests), in (B) pairwise differences between groups at p < 0.05/5 (Mann–Whitney U-tests). Asterisks in (A) indicate pairwise differences between groups at p < 0.05/3 (Mann–Whitney U-tests). For a detailed description of the sketches, see legend of Figure 1.
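The comparison rule stated in this legend can be written as a one-line predicate. The sketch below is only an illustration of that rule. The ordinal ranks and the function name are hypothetical, and the ranks stand in for "low" and "high" without representing the concentrations used in the experiment.

```python
# Ordinal "badness" ranks; illustrative labels, not measured concentrations.
BADNESS = {"low quinine": 1, "high quinine": 2}

def learned_escape_expected(training, test):
    """True if the test situation is as 'bad' as the training punishment, or worse."""
    return BADNESS[test] >= BADNESS[training]

# Enumerate all training/test combinations of the two concentrations
predictions = {
    (training, test): learned_escape_expected(training, test)
    for training in BADNESS
    for test in BADNESS
}

for (training, test), expected in predictions.items():
    print(f"trained: {training:12s} tested: {test:12s} escape expected: {expected}")
```

The informative cases are the two mixed ones: training with the high concentration and testing on the low one predicts no learned escape, whereas the reverse combination predicts escape. Neither the training value nor the test value alone would produce this asymmetry.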

Preference scores for the reciprocally trained groups of Figure 2.

Sample sizes: 25–32. Shaded boxes indicate differences between groups (p < 0.05/4, Mann–Whitney U-tests). For a detailed description of the sketches, see legend of Figure 1.

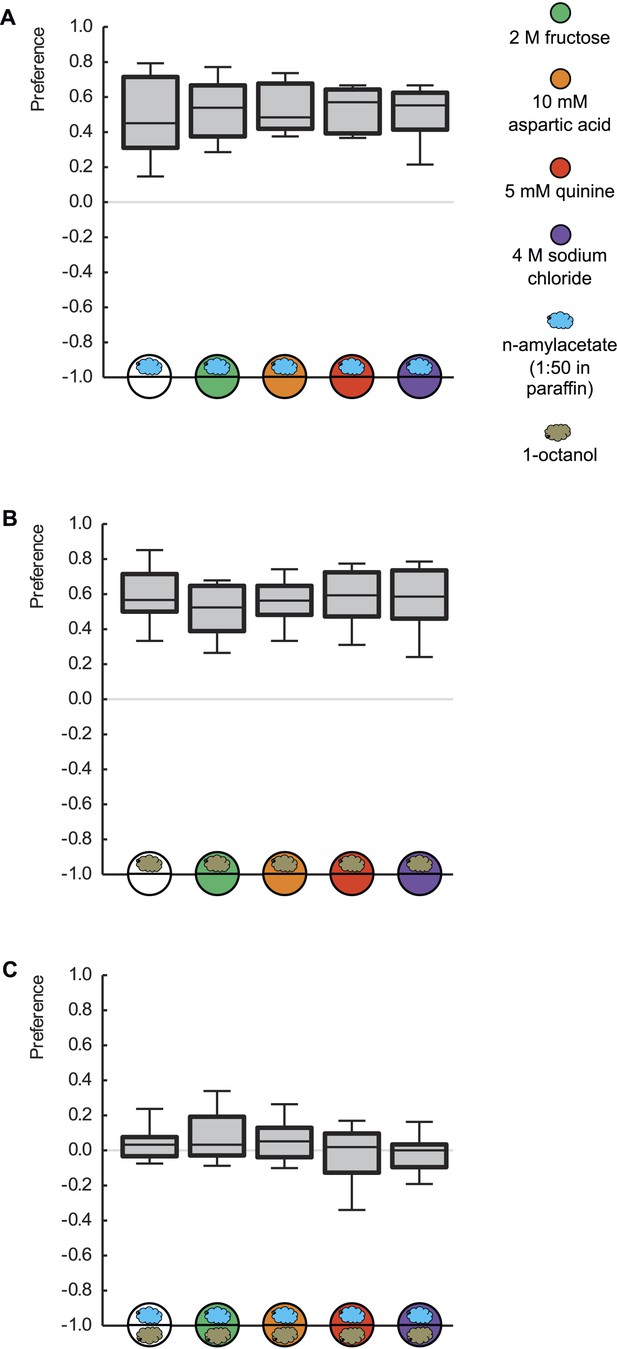

Innate odor preference is not influenced by taste processing.

Larvae are tested for their olfactory preference regarding (A) n-amyl acetate (blue cloud), (B) 1-octanol (gold cloud), or (C) for their choice between n-amyl acetate and 1-octanol. This is done in the presence of pure agarose (white circle), 2 M fructose (green circle), 10 mM aspartic acid (brown circle), 5 mM quinine (red circle), or 4 M sodium chloride (purple circle). We find no differences in odor preferences across different substrates (p > 0.05, Kruskal–Wallis tests). Sample sizes: 20–26.

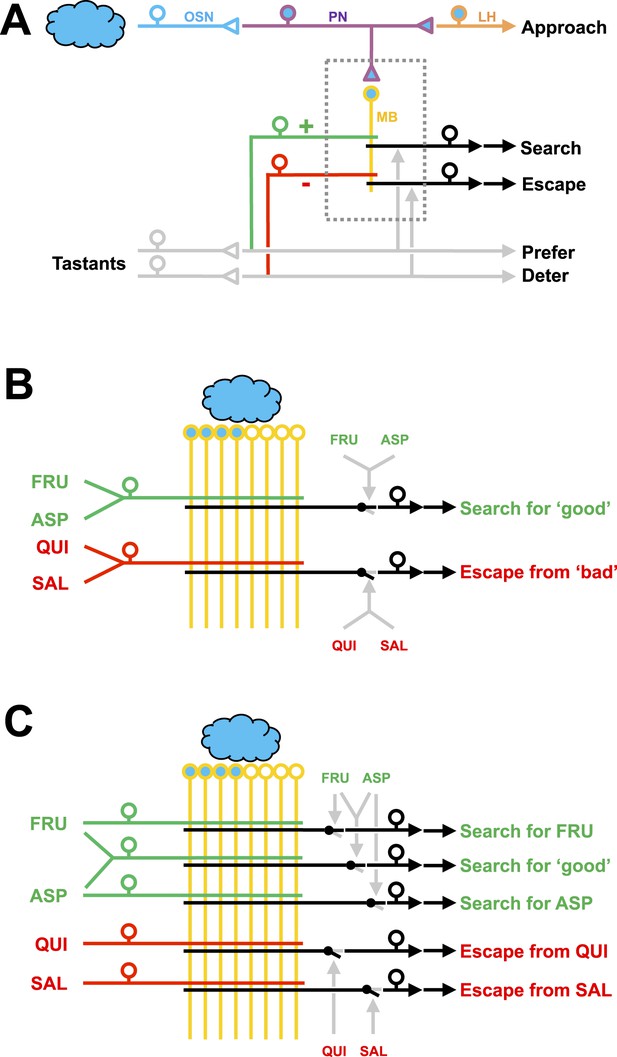

Working hypotheses of reinforcement processing by value-only or by value and quality in larval Drosophila.

(A) Simplified overview (based on e.g., Heisenberg, 2003; Perisse et al., 2013). Odors are coded combinatorially across the olfactory sensory neurons (OSN, blue). In the antennal lobe, these sensory neurons signal towards local interneurons (not shown) and projection neurons (PN, deep blue). Projection neurons have two target areas, the lateral horn (LH, orange) mediating innate approach, and the mushroom body (MB, yellow). Reinforcement signals (green and red for appetitive and aversive reinforcement, respectively) from the gustatory system reach the mushroom body, leading to associative memory traces in simultaneously activated mushroom body neurons. In the present analysis, this sketch focuses selectively on five broad classes of chemosensory behavior, namely innate odor approach, learned odor search and escape, as well as appetitive and aversive innate gustatory behavior. The boxed region is displayed in detail in (B–C). The break in the connection between mushroom body output and behavior is intended to acknowledge that mushroom body output is probably not in itself sufficient as a (pre-)motor signal but rather exerts a modulatory effect on weighting between behavioral options (Schleyer et al., 2013; Menzel, 2014; Aso et al., 2014). (B) Reinforcement processing by value (based on e.g., Heisenberg, 2003; Schleyer et al., 2011; Perisse et al., 2013): a reward neuron sums input from fructose and aspartic acid pathways and thus establishes a memory allowing for learned search for ‘good’. In a functionally separate compartment, a punishment neuron summing quinine and salt signals likewise establishes a memory trace for learned escape from ‘bad’. This scenario cannot account for quality-of-reinforcement memory. (C) Reinforcement processing by both value and quality: in addition to a common, value-specific appetitive memory, fructose and aspartic acid drive discrete reward signals leading to discrete memory traces in at least functionally distinct compartments of the Kenyon cells, which can be independently turned into learned search. For aversive memory, there may be only quality-specific punishment signals. This scenario is in accordance with the present data.
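The difference between scenarios (B) and (C) for appetitive memory can be made concrete with a toy sketch. It is not a model from the study; it assumes that a memory component keeps driving learned search only while the test situation fails to provide what that component seeks. The function, scenario labels, and component names are hypothetical.

```python
REWARDS = {"fructose", "aspartic acid"}

def search_components(training_reward, test_substrate, scenario):
    """Return the memory components that still drive learned search at test.

    scenario: "value-only" (B) or "value+quality" (C); labels are hypothetical.
    """
    value_match = test_substrate in REWARDS            # something 'good' is present
    quality_match = test_substrate == training_reward  # the same tastant is present
    components = []
    if not value_match:
        components.append("value")
    if scenario == "value+quality" and not quality_match:
        components.append("quality")
    return components

for scenario in ("value-only", "value+quality"):
    for test_substrate in ("agarose", "aspartic acid", "fructose"):
        remaining = search_components("fructose", test_substrate, scenario)
        print(f"{scenario:14s} test on {test_substrate:14s} -> {remaining}")
```

Under the value-only scenario, testing on the other reward leaves no component active, so learned search would be abolished. Under the value-and-quality scenario, the quality component remains active in that case, which corresponds to the partially intact search described for the mismatched reward.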