Integration of head and body orientations in the macaque superior temporal sulcus is stronger for upright bodies

Figures

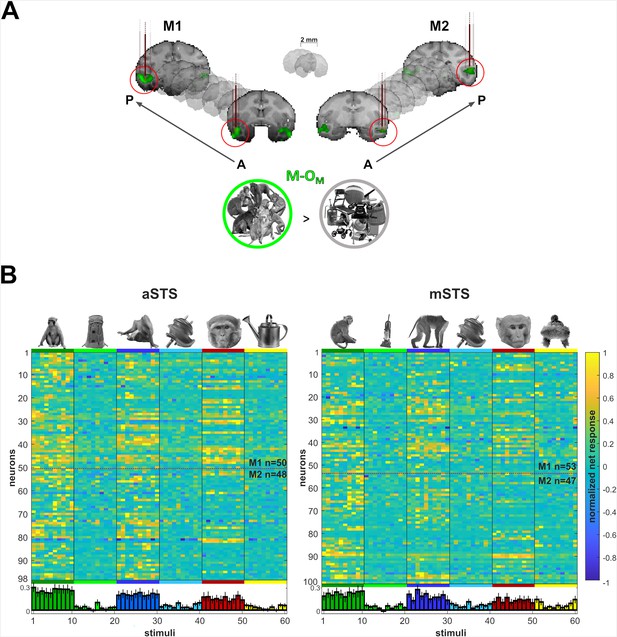

Recording locations and stimulus selectivity.

(A) Targeted patches (red circles) shown in the native space of M1 (left) and M2 (right) on 2 mm coronal slices. Brown vertical lines illustrate penetrations. Monkey patches were defined by the contrast monkeys > monkey control objects (M-OM; p < 0.05 FWE). (B) Normalized net responses in the Selectivity test (experiment 1) in the anterior superior temporal sulcus (aSTS) (left) and mid superior temporal sulcus (mSTS) (right). Rows represent neurons from M1 and M2 (separated by a dotted line). Columns correspond to the stimuli, color-coded per category with a bar and one example image. Below, bars in corresponding colors show the mean normalized net response to each stimulus (with 95% confidence intervals; 1000 resamplings). n indicates the number of neurons.

Monkey avatar stimuli for the Head–body Orientation test (experiment 1).

(A) Sixty-four combinations of head and body orientations for the sitting (P1, left) and the standing pose (P2, right). Body orientation varies by row and head orientation varies by column. Different colors group the eight avatar orientations of four head–body angles. (B) Example stimuli of P1 and P2 in the monkey-centered (MC, left) and the head-centered (HC, right) conditions, with zero angle between head and body. (C) Headless bodies (B) and heads (H) for the zero and straight angle head–body configurations, showing the frontal, back, and two lateral views for P1 and P2. Images are shown for MC (left) and HC (right) conditions and were positioned to match their corresponding avatars in (A). The location of the red fixation target is indicated in (B) and (C).

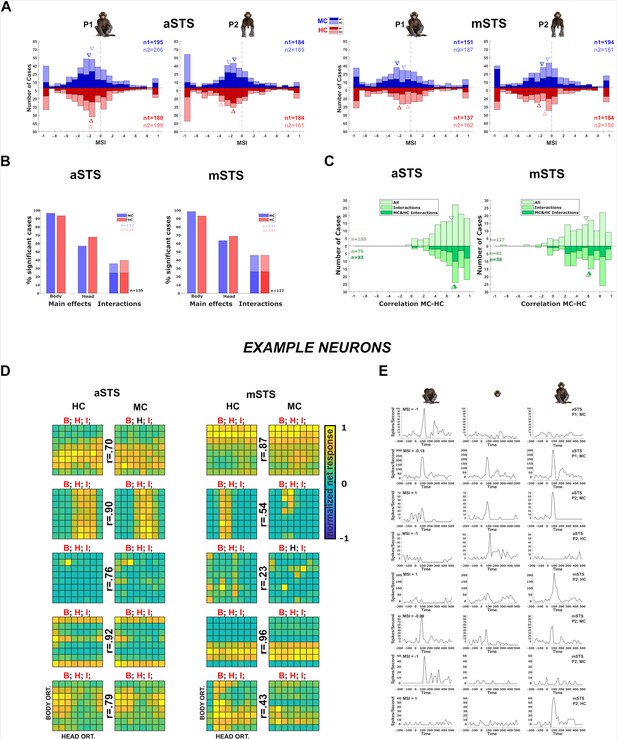

Neuronal responses in the Head–body Orientation test (experiment 1).

(A) Distribution of the monkey-sum index (MSI) computed from the responses for all combinations of orientation, pose (P1 and P2), and centering (monkey-centered (MC), blue; head-centered (HC), red) in the anterior superior temporal sulcus (aSTS) (upper panel) and mid superior temporal sulcus (mSTS) (lower panel). Data for M1 and M2, and medians (triangles), are shown in different hues (n1 = number of cases for M1; n2 = number of cases for M2). (B) Significant main effects and interactions of the factors body orientation and head orientation, for P1 and P2 in the MC (blue) and HC (red) conditions, in aSTS (left) and mSTS (right). Data are pooled across both subjects. Cases with a significant interaction in both MC and HC are highlighted in darker blue and red (number of cases (n) in black). (C) Distribution of the correlation coefficients between responses to the head–body configurations in the MC and HC conditions in aSTS (left) and mSTS (right). Data from both subjects and poses were pooled. Correlation coefficients for all cases are shown above the x-axis. Below the x-axis, the light green bars represent the correlation coefficients for cases with a significant interaction in either MC or HC, while the dark green bars indicate the coefficients for cases with a significant interaction in both MC and HC. N and medians (triangles) are shown in corresponding colors. (D) Normalized net responses of example neurons to the 64 combinations of head and body orientations in aSTS (left) and mSTS (right) for the MC and HC conditions. Body orientation (ORT) varies by row and head orientation varies by column, from 0° to 315°. Main effects of body (B) and head (H) orientation and their interaction (I) are shown for each neuron and centering condition (significant ones in red; see (B) for details), together with the correlation coefficient r between responses to the head–body configurations in the MC and HC conditions.
(E) Net responses of example neurons to bodies, heads, and the corresponding monkey configurations with MSI values of 1, –1, or the median MSI. Data for P1 and P2, MC and HC, and aSTS and mSTS (curves smoothed with the MATLAB function interp1).

Correlation of head- and body-orientation selectivity between the sitting (P1) and standing (P2) poses (experiment 1).

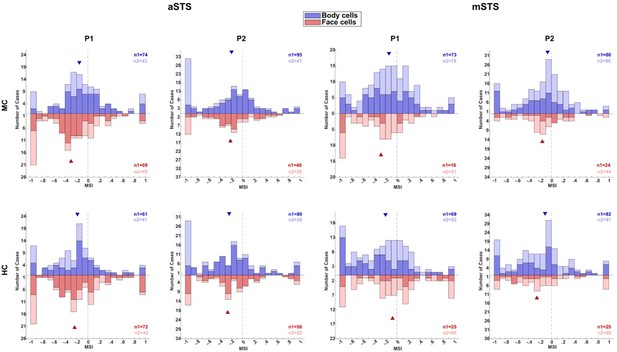

We computed the correlation across the 64 head–body orientation conditions between P1 and P2 for those neurons that were tested with both poses and showed a significant response to both poses (split-plot ANOVA). This was done for the head-centered (HC) and monkey-centered (MC) tests of experiment 1, for each monkey and region. Note that not all neurons were tested with both poses (because isolation of the single unit was not maintained across both tests or the monkey stopped working), and not all neurons recorded in both tests responded significantly to both poses, which is not unexpected since these neurons can be pose selective. The distribution of the Pearson correlation coefficients of the neurons with a significant response in both tests is shown for each region and centering condition (n1 and n2 indicate the number of neurons for subjects M1 and M2, respectively). The data of the two subjects are shown in different colors. The median correlation coefficient (arrow) was significantly larger than zero for each region, monkey, and centering condition; the p-values of Wilcoxon tests of whether the median differed from zero are indicated (p1 = p-value for M1; p2 = p-value for M2).
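The analysis above reduces to one Pearson correlation per neuron followed by a one-sample test on the resulting distribution. A minimal sketch, assuming NumPy and SciPy and simulated data (the function name `pose_correlations` and the layout of 64 flattened body × head conditions are hypothetical, not the authors' code), could look like this:

```python
import numpy as np
from scipy.stats import wilcoxon

def pose_correlations(resp_p1, resp_p2):
    """Pearson r between each neuron's 64-condition profile for P1 and for P2.

    resp_p1, resp_p2: (n_neurons, 64) mean net responses; rows are the same neurons,
    columns are the 8 body x 8 head orientation conditions (hypothetical layout).
    """
    a = resp_p1 - resp_p1.mean(axis=1, keepdims=True)
    b = resp_p2 - resp_p2.mean(axis=1, keepdims=True)
    return (a * b).sum(axis=1) / np.sqrt((a ** 2).sum(axis=1) * (b ** 2).sum(axis=1))

# Simulated neurons whose tuning is partly shared between the two poses
rng = np.random.default_rng(0)
shared = rng.normal(size=(30, 64))
p1 = shared + 0.5 * rng.normal(size=(30, 64))
p2 = shared + 0.5 * rng.normal(size=(30, 64))

r = pose_correlations(p1, p2)
result = wilcoxon(r)  # two-sided signed-rank test against a median of zero
print(f"median r = {np.median(r):.2f}, p = {result.pvalue:.2g}")
```

In the study this would be repeated separately for each region, monkey, and centering condition.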

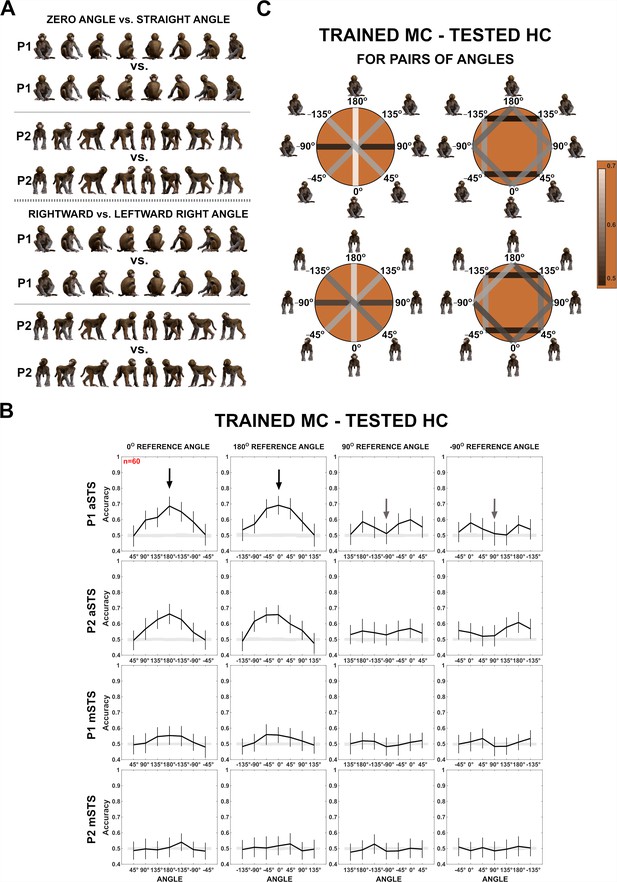

Decoding of head–body angles from neuronal responses in the Head–body Orientation test (experiment 1).

(A) Head–body configurations with zero and straight angles (black arrows in B) and leftward and rightward right angles of head and body (gray arrows in B), of P1 and P2, at eight avatar orientations. (B) Mean decoding accuracies for the head–body configurations with zero, straight, leftward, and rightward right angles versus other head–body angles as indicated, in the anterior superior temporal sulcus (aSTS) (top two panels) and mid superior temporal sulcus (mSTS) (bottom two panels), for poses P1 and P2. N indicates the number of neurons. Results are for monkey-centered (MC) training and head-centered (HC) testing. Responses to the eight orientations of a configuration (rows in A) were pooled when training the classifier. Error bars show standard deviations across resamplings. The shaded area indicates the null distribution (obtained by permuting stimulus labels). (C) Decoding accuracies for different head–body angle pairs in aSTS, for P1 (top) and P2 (bottom). Decoded pairs are connected with lines, with decoding accuracies indicated by color. Training was performed after pooling the eight orientations of each head–body configuration, defined by the angle between head and body. Results are from MC training and HC testing. Avatars are shown for one orientation of each head–body angle configuration.
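The cross-condition scheme (train on MC, test on HC, the eight orientations pooled under one label, chance level from label permutation) can be sketched in a few lines. This is an illustration only: it uses NumPy with a nearest-class-mean classifier as a simple stand-in for the linear SVM, simulated pseudo-population data, and a hypothetical response gain of 0.8 to mimic a change between centering conditions.

```python
import numpy as np

def fit_centroids(X, y):
    """Class means of the training pseudo-population vectors."""
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])

def predict(model, X):
    """Assign each test vector to the nearest class mean (Euclidean distance)."""
    classes, centroids = model
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[d.argmin(axis=1)]

def cross_condition_accuracy(X_train, y_train, X_test, y_test):
    return np.mean(predict(fit_centroids(X_train, y_train), X_test) == y_test)

def permutation_null(X_train, y_train, X_test, y_test, n_perm=200, seed=0):
    """Chance distribution obtained by shuffling the training labels."""
    rng = np.random.default_rng(seed)
    return np.array([cross_condition_accuracy(X_train, rng.permutation(y_train), X_test, y_test)
                     for _ in range(n_perm)])

# Simulated data: 2 head-body angles (zero vs straight) x 8 orientations x 10 trials
rng = np.random.default_rng(1)
n_neurons, n_ort, n_trials = 40, 8, 10
angle_pattern = rng.normal(size=(2, n_neurons))
ort_pattern = rng.normal(size=(n_ort, n_neurons))

def simulate(gain):
    X, y = [], []
    for angle in range(2):
        for ort in range(n_ort):  # all eight orientations share one angle label
            mean = gain * (angle_pattern[angle] + ort_pattern[ort])
            X.append(mean + rng.normal(size=(n_trials, n_neurons)))
            y += [angle] * n_trials
    return np.vstack(X), np.array(y)

X_mc, y_mc = simulate(gain=1.0)  # training set ("MC")
X_hc, y_hc = simulate(gain=0.8)  # test set ("HC"), hypothetical response change
acc = cross_condition_accuracy(X_mc, y_mc, X_hc, y_hc)
null = permutation_null(X_mc, y_mc, X_hc, y_hc)
print(f"accuracy {acc:.2f}; null 95th percentile {np.quantile(null, 0.95):.2f}")
```

Swapping in a linear SVM would only change `fit_centroids` and `predict`; the train/test split and the permutation null stay the same.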

Decoding of head–body angles in the Head–body Orientation test (experiment 1).

(A) Decoding accuracies for the head–body configurations with zero, straight, leftward, and rightward right angles versus other head–body angles, in the anterior superior temporal sulcus (aSTS) (top two panels) and mid superior temporal sulcus (mSTS) (bottom two panels), for P1 and P2; n indicates the number of neurons. Results are for head-centered (HC) training and monkey-centered (MC) testing. Same conventions as Figure 4. (B) Same as (A), but with MC training and MC testing. (C) Same as (A), but with HC training and HC testing. (D) Decoding accuracies for different head–body angle pairs in aSTS, for P1 (top) and P2 (bottom). Decoded pairs are connected with lines, with decoding accuracies indicated by color. Results are from HC training and MC testing (see Figure 4 for MC training and HC testing; same conventions apply).

Neuron-dropping analysis (experiment 1).

We attempted to relate the tuning for body and head orientation to the decoding of the head–body orientation angle. This requires identifying the neurons that contribute to the decoding of head–body orientation angles. For that, we employed a neuron-dropping analysis, similar to Chiang et al. (Chiang FK, Wallis JD, Rich EL. Cognitive strategies shift information from single neurons to populations in prefrontal cortex. Neuron. 2022 Feb 16;110(4):709–721), to assess the positive (or negative) contribution of each neuron to the decoding performance. We performed cross-validated linear support vector machine (SVM) decoding N times (N = 60, the number of neurons in the sample), each time leaving out a different neuron (using N − 1 neurons; 2000 resamplings of pseudo-population vectors). We then ranked the decoding accuracies from highest to lowest, identifying the ‘worst’ (rank 1) to the ‘best’ (rank N) neurons: the worst neuron is the one whose removal yields the highest accuracy. Next, we conducted N decodings, incrementally increasing the number of included neurons from 1 to N, starting with the worst-ranked neuron (rank 1) and sequentially adding the next ones (rank 2, rank 3, etc.). This analysis focused on zero versus straight angle decoding in the anterior superior temporal sulcus (aSTS), as it yielded the highest accuracy. We applied it when training on monkey-centered (MC) and testing on head-centered (HC) responses, for each pose (P1 and P2). Plotting accuracy as a function of the number of included neurons suggested that fewer than half of the neurons contributed positively to decoding (A). We examined the tuning for head and body orientation of the 10 ‘best’ neurons (B). Both subjects (M1, M2) contributed neurons to the 10 best. For half or more of these, the two-way ANOVA showed a significant interaction; these are indicated in red in (B). For each neuron of experiment 1, we also performed a one-way ANOVA with head–body orientation angle as the factor. To do that, we pooled, across the 64 head–body orientation conditions, all trials that had the same head–body orientation angle.
For each region, we computed the percentage of neurons (required to be responsive in the tested condition) for which this one-way ANOVA was significant, separately for each pose, centering condition, and monkey subject. These percentages were low but larger than the expected 5% (Type I error rate), with a median of 16.5% (range: 3–23%) in aSTS and 8% in the mid superior temporal sulcus (mSTS) (range: 0–19%). A higher percentage of the 10 best neurons for each pose (indicated by * in (B)) showed a significant one-way ANOVA for angle (P1, MC: 50% (95% confidence interval (CI): 19–81%); P1, HC: 70% (CI: 35–93%); P2, MC: 70% (CI: 35–93%); P2, HC: 50% (CI: 19–81%)). These percentages were significantly higher than expected for a random sample from the population of neurons for each pose–centering combination (expected percentages, in the same order as above: 16%, 13%, 16%, and 10%; all outside the CI). Thus, for at least half of the ‘best’ neurons, the response differed significantly among the head–body orientation angles at the single-neuron level. Nonetheless, the tuning profiles were quite diverse, suggesting population coding of head–body orientation angle.
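The neuron-dropping procedure can be outlined as follows. This sketch assumes NumPy and simulated data in which only neurons 0 to 2 carry information, and it swaps in a 5-fold cross-validated nearest-class-mean classifier for the linear SVM with pseudo-population resampling used in the study; `cv_accuracy` and `neuron_dropping` are hypothetical helpers.

```python
import numpy as np

def cv_accuracy(X, y, n_folds=5, seed=0):
    """k-fold cross-validated nearest-class-mean accuracy (stand-in for a linear SVM)."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    correct = 0
    for test_idx in folds:
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        classes = np.unique(y[train])
        centroids = np.stack([X[train & (y == c)].mean(axis=0) for c in classes])
        d = ((X[test_idx][:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        correct += np.sum(classes[d.argmin(axis=1)] == y[test_idx])
    return correct / len(y)

def neuron_dropping(X, y):
    """Rank neurons by leave-one-out accuracy, then add them back worst-first."""
    n = X.shape[1]
    drop_acc = np.array([cv_accuracy(np.delete(X, i, axis=1), y) for i in range(n)])
    # Highest accuracy without a neuron = least useful neuron = rank 1 ('worst')
    order = np.argsort(-drop_acc, kind="stable")
    curve = np.array([cv_accuracy(X[:, order[:k]], y) for k in range(1, n + 1)])
    return order, curve

rng = np.random.default_rng(2)
y = np.repeat([0, 1], 150)
X = rng.normal(size=(300, 10))
X[y == 1, :3] += 2.0  # only neurons 0-2 carry the simulated signal
order, curve = neuron_dropping(X, y)
print("best three neurons:", order[-3:], "accuracy with all:", curve[-1])
```

Plotting `curve` against the number of included neurons gives the kind of curve described for panel (A); the last entries of `order` are the ‘best’ neurons.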

Decoding of head and body orientation (experiment 1).

We employed the responses to the avatars of experiment 1, using the same sample of neurons for which we decoded the head–body orientation angle. To decode head orientation, trials with identical head orientation were given the same label, irrespective of body orientation; for this, we employed only responses in the head-centered condition. To decode body orientation, trials with identical body orientation had the same label, irrespective of head orientation, and we employed only responses in the body-centered condition. The decoding was performed separately for each pose (P1 and P2) and region. We decoded the responses of 20 neurons (10 randomly sampled from each monkey for each of 1000 resamplings), 40 neurons (20 per monkey), or 60 neurons (30 per monkey); we did not go beyond 60 neurons since this sample yielded close-to-ceiling performance for body orientation decoding. For each pose, body orientation decoding was worse for the anterior superior temporal sulcus (aSTS) than for the mid superior temporal sulcus (mSTS), although this difference reached significance only for P1 and for the 40-neuron sample of P2 (p < 0.025; two-tailed test; same procedure as used for testing the significance of the decoding of whole-body orientation for upright vs. inverted avatars (experiment 3)). Head orientation decoding was significantly worse for aSTS than for mSTS.
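Relabeling the same 64 conditions by head orientation or by body orientation, and drawing equal numbers of neurons from each monkey per resampling, could be implemented as below. This is a NumPy sketch: the 45° orientation grid follows the 0° to 315° range given for the example neurons, while the helper names and the monkey sample sizes are hypothetical.

```python
import numpy as np

ORIENTATIONS = np.arange(0, 360, 45)  # 0°, 45°, ..., 315°

def condition_labels():
    """Body and head orientation of the 64 conditions (body varies by row, head by column)."""
    body, head = np.meshgrid(ORIENTATIONS, ORIENTATIONS, indexing="ij")
    return body.ravel(), head.ravel()

def sample_neurons(monkey_ids, n_per_monkey, rng):
    """Indices of n_per_monkey neurons drawn without replacement from each monkey."""
    picks = [rng.choice(np.flatnonzero(monkey_ids == m), n_per_monkey, replace=False)
             for m in np.unique(monkey_ids)]
    return np.concatenate(picks)

body_label, head_label = condition_labels()
# Head-orientation decoding: conditions sharing head_label get the same class,
# whatever their body orientation (and vice versa for body_label).
monkey_ids = np.array(["M1"] * 35 + ["M2"] * 40)  # hypothetical neuron pool
idx = sample_neurons(monkey_ids, 10, np.random.default_rng(0))  # one 20-neuron draw
```

Repeating `sample_neurons` with a fresh draw for each of the resamplings keeps both subjects equally represented in every pseudo-population.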

Decoding of head–body angles across orientations (experiment 1).

(A) Head–body configurations with zero and straight angles, and leftward and rightward right angles, for P1 and P2, split into two sets of four orientations (‘+’ and ‘x’) for training and testing. (B) Decoding accuracies for the head–body configurations with zero, straight, leftward, and rightward right angles in the anterior superior temporal sulcus (aSTS), for P1 (top) and P2 (bottom); n indicates the number of neurons. Results are for monkey-centered (MC) training and head-centered (HC) testing, using four orientations for training and the other four for testing. Panels 1 and 3: classifier trained on ‘+’ orientations and tested on ‘x’ orientations; panels 2 and 4: classifier trained on ‘x’ orientations and tested on ‘+’ orientations. Same conventions as Figure 4.
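The train/test split by orientation set can be expressed as two boolean masks over trials. The assignment of orientations to the ‘+’ set (0°, 90°, 180°, 270°, which includes the lateral ±90° views mentioned for Figure 5A) and to the ‘x’ set (45°, 135°, 225°, 315°) is an assumption of this NumPy sketch, as are the names used.

```python
import numpy as np

PLUS = (0, 90, 180, 270)     # assumed '+' set; includes the lateral views at 90° and -90° (270°)
CROSS = (45, 135, 225, 315)  # assumed 'x' set

def split_by_orientation(orientations, train_set, test_set):
    """Boolean masks selecting training and test trials by whole-avatar orientation."""
    o = np.asarray(orientations) % 360  # maps -90° to 270°
    return np.isin(o, train_set), np.isin(o, test_set)

trial_orientation = np.repeat(np.arange(0, 360, 45), 5)  # 5 hypothetical trials per orientation
train_mask, test_mask = split_by_orientation(trial_orientation, PLUS, CROSS)
```

Calling the function with the two sets swapped gives the reverse generalization (train on ‘x’, test on ‘+’).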

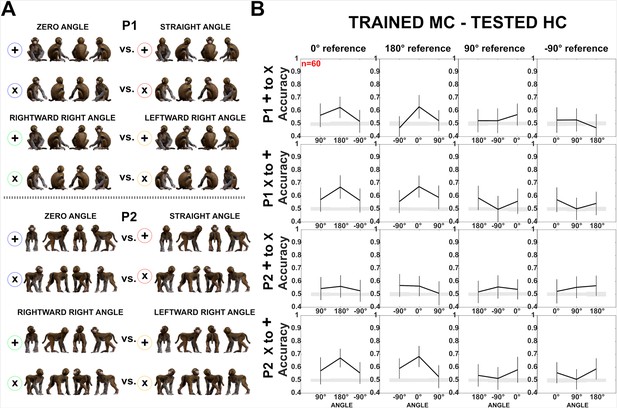

Decoding of head–body angles across orientations (experiment 1).

Same conventions as Figure 5, except that head-centered (HC) responses were employed for training and monkey-centered (MC) responses for testing. (A) Head–body configurations with zero and straight angles, and leftward and rightward right angles, for P1 and P2, split into two sets of four orientations for training and testing. (B) Decoding accuracies for HC training and MC testing. Note the difference in accuracy for P2 when training and testing on ‘+’ and ‘x’ orientations in the two centering conditions (see Figure 5 for MC-to-HC training and testing). This interaction between generalization across orientation and centering for P2 is likely related to the larger variation in head eccentricity when the orientation and centering of P2 change. To decode the angle between the head and the body, the head needs to be processed. For the two lateral views (90° and –90° orientation of the angle pairs) of the MC condition of P2, which are included in the ‘+’ orientations (Figure 5A), the head is at a larger eccentricity than for the ‘x’ orientations. This difference in head eccentricity is further enlarged when training is performed with the MC ‘+’ orientations and testing with the HC ‘x’ orientations, yielding low generalization performance. The same holds for the opposite generalization condition, in which training is with the HC ‘x’ orientations and testing is with the MC ‘+’ orientations of P2. In the other two conditions (MC ‘x’ trained, HC ‘+’ tested; HC ‘+’ trained, MC ‘x’ tested), the differences in head eccentricity between the trained and tested conditions are smaller, yielding better generalization, similar to that for P1, at least for the zero versus straight angles.

Dissimilarity matrices of neural responses for head and body orientations (experiment 1).

The color-coded dissimilarity values are correlation distances (1 − Pearson correlation) between neural response patterns for all body (top panels) and head (bottom panels) orientations of poses P1 and P2 (left and right panels, respectively) in the monkey-centered (MC) and head-centered (HC) conditions. Data are from the same anterior superior temporal sulcus (aSTS) neurons employed in the decoding of head–body angles in experiment 1. Correlations were computed using normalized firing rates, averaging across head orientations when correlating body orientations and across body orientations when correlating head orientations. Yellow indicates higher dissimilarity.
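The dissimilarity matrices can be sketched as correlation distances between averaged population vectors. In this NumPy sketch the array layout (neurons × body orientation × head orientation), the function name, and the random data are assumptions.

```python
import numpy as np

def orientation_rdm(resp, average_over):
    """Correlation-distance matrix (1 - Pearson r) between orientation population vectors.

    resp: (n_neurons, 8 body orientations, 8 head orientations) normalized rates.
    average_over="head" gives the body-orientation matrix, "body" the head-orientation one.
    """
    axis = {"head": 2, "body": 1}[average_over]
    patterns = resp.mean(axis=axis)       # (n_neurons, 8)
    return 1.0 - np.corrcoef(patterns.T)  # rows of patterns.T are orientations

rng = np.random.default_rng(0)
resp = rng.random((50, 8, 8))  # simulated normalized responses
rdm_body = orientation_rdm(resp, average_over="head")
rdm_head = orientation_rdm(resp, average_over="body")
```

Values range from 0 (identical population patterns) to 2 (perfectly anti-correlated patterns).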

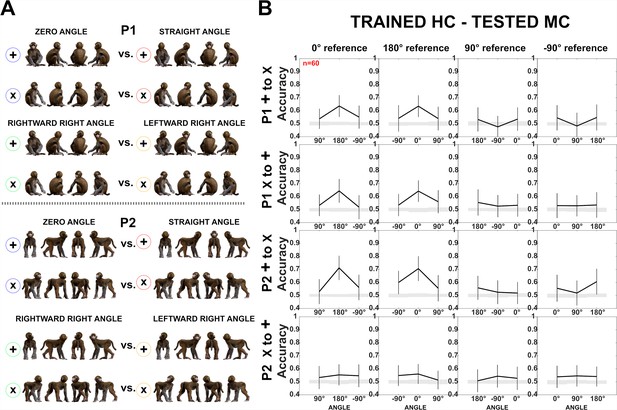

Decoding of head–body angles in the Sum versus Configuration test (experiment 2).

(A) Stimuli of experiment 2: heads and bodies presented at four orientations (frontal, back, and the two lateral views; B+H), along with all head–body configurations (M). Images were monkey-centered, and the isolated heads and bodies were positioned to match their corresponding avatars. The red square indicates the fixation target. (B) Decoding accuracies for head–body configurations (M) and head–body sums (B+H) with zero, straight, leftward, and rightward right angles of head and body (classifier trained after pooling the four orientations shown in (A)), for the anterior superior temporal sulcus (aSTS) and mid superior temporal sulcus (mSTS); n indicates the number of units. Same conventions as Figure 4.

Decoding of head–body angles for head- and body-selective anterior superior temporal sulcus (aSTS) units (experiment 2).

Our sample of units contains a mixture of units selective for heads and/or bodies. To assess whether head/body selectivity matters for the decoding of head–body orientation angle, we subdivided our aSTS units into groups based on their head/body selectivity. We performed two analyses, using distinct definitions of head/body selectivity. (A) For each aSTS unit, we averaged the mean net response to the 16 heads (differing in location and orientation) and to the 4 bodies (differing in orientation), and computed a Head/body Selectivity Index (HBI): (mean response to head – mean response to body)/(abs(mean response to head) + abs(mean response to body)), which ranges from –1 (response to the bodies only) to 1 (response to the heads only). The HBI was computed for those aSTS units (n = 359) that responded to either the heads or the bodies (Materials and methods). Then, we decoded the head–body orientation angles, using the same procedure as described in the main text, for aSTS units with HBI < –0.33 (n = 115; top panels) and those with HBI > 0.33 (n = 179; bottom panels). The decoding accuracies for head–body configurations with zero, straight, leftward, and rightward right angles between the head and the body for the body-selective (top panels) and head-selective (bottom panels) aSTS units are shown using the same conventions as in Figure 6 of the main text. Error bars show standard deviations across resamplings; dotted horizontal lines mark the null distribution. Both groups showed a higher classification accuracy for the zero versus straight angles than for the leftward versus rightward angles, showing that body- and head-selective units have similar decoding patterns of head–body orientation angle. (B) Because our stimuli depicted a single identity (shown at different orientations), we also employed a second definition of head/body selectivity: instead of averaging the responses across the images of a category, we took the maximum response for each category to compute the HBI.
Dividing the units into two groups, HBI < −0.33 (n = 67; top panels) and HBI > 0.33 (n = 184; bottom panels), again showed a similar pattern of head–body orientation angle decoding, although this pattern was less pronounced for the body-selective units defined based on the maximum response. Overall, these analyses suggest that head- and body-selective aSTS units show, as a population, a similar decoding bias for zero versus straight angles, and both confuse the leftward and rightward angles.
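Both HBI definitions and the ±0.33 split can be written compactly. A NumPy sketch follows; the function name and the toy responses are hypothetical, while the formula, the 16 heads and 4 bodies, and the thresholds come from the text above.

```python
import numpy as np

def head_body_index(head_resp, body_resp, use_max=False):
    """HBI = (head - body) / (|head| + |body|), from -1 (bodies only) to 1 (heads only).

    head_resp: (n_units, 16) net responses to heads; body_resp: (n_units, 4) to bodies.
    use_max=False averages within each category (A); True takes the maximum (B).
    Units with zero response to both categories would give NaN.
    """
    agg = np.max if use_max else np.mean
    h, b = agg(head_resp, axis=1), agg(body_resp, axis=1)
    return (h - b) / (np.abs(h) + np.abs(b))

head = np.array([[10.0] * 16, [0.0] * 16, [3.0] * 16])
body = np.array([[0.0] * 4, [5.0] * 4, [1.0] * 4])
hbi = head_body_index(head, body)
body_selective = hbi < -0.33
head_selective = hbi > 0.33
```

The maximum-based variant can flip a unit's classification: a unit with one strong head response and weak responses to the other heads can look body-selective on means but head-selective on maxima.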

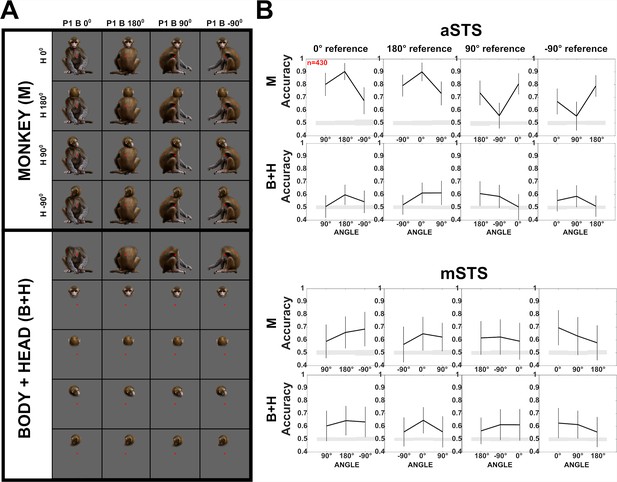

Effect of head–body configuration inversion (experiment 3).

(A) Stimuli of the head–body configurations of P1 with zero and straight angles, and leftward and rightward right angles, at eight orientations, upright and inverted. (B) Decoding accuracies for the head–body configurations with zero, straight, leftward, and rightward right angles in the anterior superior temporal sulcus (aSTS), for poses P1 (top panels) and P2 (bottom panels); n indicates the number of units. Solid and dotted lines represent data for upright and inverted configurations, respectively. Same conventions as Figure 4. (C) Responses to the preferred orientation of upright or inverted head–body configurations for aSTS and the mid superior temporal sulcus (mSTS), for P1 and P2 (M1, dark green; M2, light green), for the monkey-responsive units. Medians (diamonds) and the number of units per subject (M1: n1; M2: n2) are shown in corresponding colors. p-values of Wilcoxon signed-rank tests (data pooled across subjects) testing the difference in response between upright and inverted configurations are shown (red: significant).
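The comparison in panel (C) can be sketched as a paired signed-rank test on each unit's response at its preferred orientation. This sketch assumes NumPy and SciPy, simulated units, and that the preferred orientation is chosen separately for upright and inverted stimuli; the caption does not state this explicitly, and the function name is hypothetical.

```python
import numpy as np
from scipy.stats import wilcoxon

def inversion_effect(upright, inverted):
    """Compare responses at the preferred orientation for upright vs inverted stimuli.

    upright, inverted: (n_units, 8) mean net responses across the eight orientations.
    The preferred orientation is taken separately for each condition (an assumption).
    """
    up_pref = upright.max(axis=1)
    inv_pref = inverted.max(axis=1)
    test = wilcoxon(up_pref, inv_pref)  # paired, two-sided signed-rank test
    return np.median(up_pref), np.median(inv_pref), test.pvalue

rng = np.random.default_rng(3)
upright = rng.uniform(5, 20, size=(40, 8))                         # simulated units
inverted = 0.7 * upright + rng.normal(0, 0.5, size=upright.shape)  # weaker when inverted
med_up, med_inv, p = inversion_effect(upright, inverted)
```

Pooling across subjects, as in the caption, amounts to concatenating the units of M1 and M2 before calling the function.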

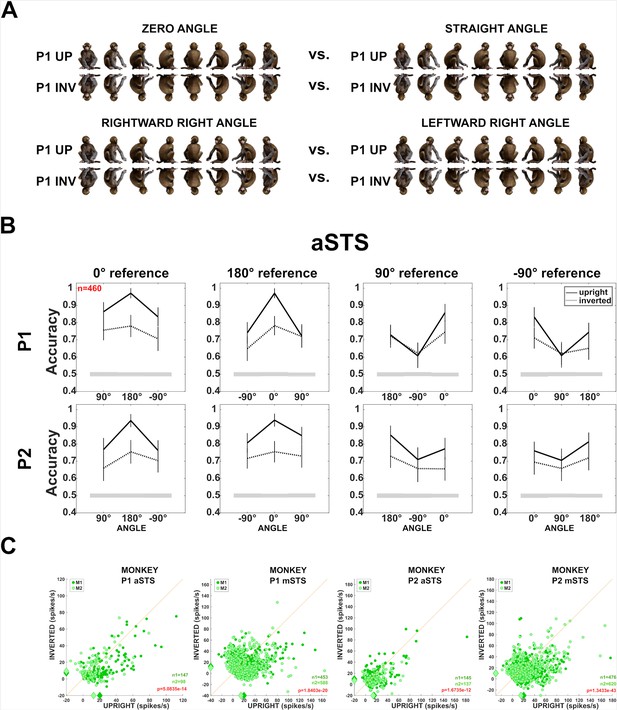

Monkey avatar stimuli for the Upright versus Inverted test (experiment 3).

(A) Combinations of head and body orientations used for P1 (left panel) and P2 (right panel) of the avatar, shown on a gray background matching the one used in the experiment (the same applies to the backgrounds in (B) and (C)). We presented head–body configurations with zero, straight, leftward, and rightward right angles of the head and the body at eight whole-monkey orientations, both upright and inverted. (B) Example stimuli of P1 and P2 from the upright (left panel) and inverted (right panel) conditions, with zero angle between head and body, for the eight avatar orientations. A small red square represents the fixation target. (C) Headless bodies (B) and heads (H) for zero and straight angle configurations of the frontal, back, and two lateral views of P1 and P2. Heads and bodies are from the upright (left panel) and inverted (right panel) conditions and were positioned to match their corresponding avatars in (A). A small red square represents the fixation target.

Population PSTHs of anterior superior temporal sulcus (aSTS) and mid superior temporal sulcus (mSTS) units recorded in the Upright versus Inverted test (experiment 3).

Population PSTHs (20 ms bin width) for aSTS and mSTS (top and bottom panels) units displayed for M1 and M2 (left and right panels) and P1 and P2. Responses are shown for upright (solid lines) and inverted (dotted lines) heads, headless bodies, and head–body configurations with zero (in blue) and straight (in red) angles of the head and the body. Averaged responses are for the preferred orientation of responsive units. Shaded areas represent 95% confidence intervals (bootstrapping units; 1000 resamplings), and n denotes the number of responsive units for each subject, pose, and region. The stimulus duration is indicated by the black horizontal line (0 is stimulus onset).
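A population PSTH with a unit-bootstrap confidence band, as described above, could be computed as follows. The 20 ms bins, the 1000 resamplings, and resampling over units come from the caption; the percentile bootstrap, the spike-time format, and the function names are assumptions of this NumPy sketch.

```python
import numpy as np

def unit_psth(trial_spikes, t_start, t_stop, bin_ms=20.0):
    """Trial-averaged firing rate (spikes/s) of one unit in fixed-width bins.

    trial_spikes: list of arrays of spike times (ms) relative to stimulus onset.
    """
    edges = np.arange(t_start, t_stop + bin_ms, bin_ms)
    counts = np.mean([np.histogram(spikes, bins=edges)[0] for spikes in trial_spikes], axis=0)
    return edges[:-1], counts / (bin_ms / 1000.0)

def population_psth_ci(psths, n_boot=1000, seed=0):
    """Mean PSTH over units with a percentile 95% CI from resampling units with replacement."""
    rng = np.random.default_rng(seed)
    n_units = psths.shape[0]
    boots = np.stack([psths[rng.integers(0, n_units, n_units)].mean(axis=0)
                      for _ in range(n_boot)])
    return psths.mean(axis=0), np.percentile(boots, 2.5, axis=0), np.percentile(boots, 97.5, axis=0)

# Two hypothetical trials: spikes at 5 and 15 ms, then one spike at 25 ms
t, rate = unit_psth([np.array([5.0, 15.0]), np.array([25.0])], t_start=0, t_stop=40)
```

Stacking the `unit_psth` output of all responsive units (at each unit's preferred orientation) into a units × bins array gives the input to `population_psth_ci`.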

Inversion effect of head- and body-responsive anterior superior temporal sulcus (aSTS) and mid superior temporal sulcus (mSTS) units (experiment 3).

Responses to the preferred orientation of upright or inverted head–body configurations for P1 and P2, for units that responded significantly to an upright or inverted head (red) or headless body (blue). Subjects M1 and M2 are represented by dark and light red or blue, respectively. Medians (diamonds) and the number of units per subject (n1 and n2) are shown in the corresponding color. p-values in red indicate significantly higher responses to the upright than to the inverted configurations for each pose and region (Wilcoxon signed-rank tests on data pooled across subjects).

Tables

| aSTS (centering) | Subject | P1 | P2 |
|---|---|---|---|
| MC | M1 | 15% (n = 34) | 9% (n = 33) |
| MC | M2 | 17% (n = 35) | 23% (n = 35) |
| HC | M1 | 16% (n = 32) | 3% (n = 33) |
| HC | M2 | 11% (n = 36) | 17% (n = 35) |
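The confidence intervals reported for the 10 best neurons (19–81% for 5 of 10, 35–93% for 7 of 10) match exact Clopper–Pearson binomial intervals, and the expected percentages (16%, 13%, 16%, 10%) match n-weighted averages of the two subjects' aSTS percentages. The text states neither method, so this pure-Python sketch is a consistency check under those assumptions, not the authors' code; the helper names are hypothetical.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))

def bisect_root(f, lo=0.0, hi=1.0, iters=100):
    """Root of a monotone function f on [lo, hi], assuming f(lo) and f(hi) differ in sign."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def clopper_pearson(k, n, alpha=0.05):
    """Exact two-sided (1 - alpha) binomial confidence interval for k successes in n."""
    lower = 0.0 if k == 0 else bisect_root(lambda p: (1 - binom_cdf(k - 1, n, p)) - alpha / 2)
    upper = 1.0 if k == n else bisect_root(lambda p: binom_cdf(k, n, p) - alpha / 2)
    return lower, upper

# aSTS table entries as (percentage, n) for M1 and M2
table = {
    ("P1", "MC"): [(15, 34), (17, 35)],
    ("P1", "HC"): [(16, 32), (11, 36)],
    ("P2", "MC"): [(9, 33), (23, 35)],
    ("P2", "HC"): [(3, 33), (17, 35)],
}
best10_significant = {("P1", "MC"): 5, ("P1", "HC"): 7, ("P2", "MC"): 7, ("P2", "HC"): 5}

for key, rows in table.items():
    expected = sum(pct * n for pct, n in rows) / sum(n for _, n in rows)
    lo, hi = clopper_pearson(best10_significant[key], 10)
    outside = not (lo * 100 <= expected <= hi * 100)
    print(key, f"expected {expected:.0f}%", f"CI {lo:.0%}-{hi:.0%}", "outside CI" if outside else "inside CI")
```

Because the table percentages are rounded, the recomputed expected values are approximate, but each falls below the lower CI bound, in line with the text.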