Adaptive coding for dynamic sensory inference

Figures

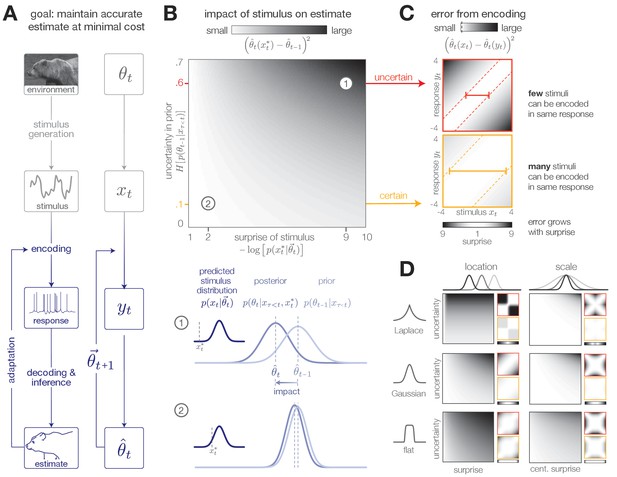

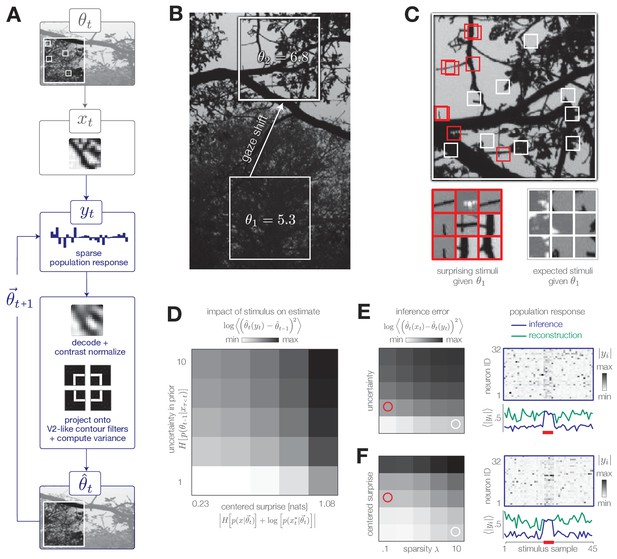

Surprise and uncertainty determine the impact of incoming stimuli for efficient inference.

(A) We consider a framework in which a sensory system infers the state of a dynamic environment at minimal metabolic cost. The state of the environment () is signaled by sensory stimuli () that are encoded in neural responses (). To infer this state, the system must decode stimuli from neural responses and use them to update an internal model of the environment (consisting of an estimate and a prediction ). This internal model can then be used to adapt the encoding at earlier stages. (The image of the bear was taken from the Berkeley Segmentation Dataset, Martin et al., 2001). (B) Incoming stimuli can have varying impact on the observer’s estimate of the environmental state depending on the relationship between the observer’s uncertainty and the surprise of the stimulus (heatmap). We use the example of Bayesian estimation of the mean of a stationary Gaussian distribution (Murphy, 2007) to demonstrate that when the observer is uncertain (wide prior ) and the stimulus is surprising ( falls on the edge of the distribution ), the stimulus has high impact and causes a large shift in the posterior (schematic (1)). In contrast, when the observer is certain and the stimulus is expected, the stimulus has a small impact on the observer’s estimate (schematic (2)). We quantify impact by the squared difference between the estimate before and after incorporating the stimulus (Materials and methods). (Computed using , for which impact spans the interval [0,0.7]). (C) When the observer is certain, a large number of stimuli can be mapped onto the same neural response without inducing error into the observer’s estimate (orange panel). When the observer is uncertain, the same mapping from stimulus to response induces higher error (red panel). Error is highest when mapping a surprising stimulus onto an expected neural response, or vice versa. We quantify error by the squared difference between the estimate constructed with the stimulus versus the response (Materials and methods). 
Shown for uncertainty values of 0.1 (orange) and 0.6 (red). Pairs of colored dotted lines superimposed on the heatmap indicate contours of constant error tolerance (whose value is also marked by the vertical dotted line in the colorbar). Colored horizontal bars indicate the set of stimuli that can be mapped to the same neural response with an error less than . (D) Qualitatively similar results to those shown in panels B-C are observed for estimating the location and scale of a stationary generalized Gaussian distribution. Stimuli have a larger impact on the observer’s estimate when the observer is uncertain and when stimuli are unexpected (quantified by surprise in the case of location estimation, and centered surprise in the case of scale estimation; see main text). The error induced by mapping a stimulus onto a response grows with the surprise of the stimulus. For the case of scale estimation, this error is symmetric to exchanging and , because positive and negative deviations from the mean (taken here to be 0) exert similar influence on the estimation of scale. Results are computed using (location) and (scale) and are displayed over the same ranges of uncertainty ([0,0.7]), surprise/centered surprise (Yu et al., 2015; Roddey et al., 2000), and stimulus/response ([−4,4]) as in panels B-C. Heatmaps of impact are individually scaled for each stimulus distribution relative to their minimum and maximum values; heatmaps of encoding error are scaled relative to the minimum and maximum error across both uncertainty values for a given stimulus distribution. See Figure 1—figure supplement 2 for numerical values of color scale.
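The impact and encoding-error measures described in panels B-C can be illustrated with a minimal sketch, assuming a Gaussian prior over the mean, a known unit observation variance, and a single incoming stimulus. The function names and the `noise_var` parameter are illustrative assumptions, and the prior variance stands in here for the observer's uncertainty; this is not the exact computation used to generate the heatmaps.

```python
def posterior_mean(prior_mean, prior_var, x, noise_var=1.0):
    """Posterior mean of a Gaussian mean after observing one sample x.

    Assumes a Gaussian prior N(prior_mean, prior_var) and a known
    observation variance noise_var (an assumption of this sketch).
    """
    gain = prior_var / (prior_var + noise_var)  # wide prior -> larger gain
    return prior_mean + gain * (x - prior_mean)


def impact(prior_mean, prior_var, x, noise_var=1.0):
    """Squared difference between the estimate before and after seeing x."""
    return (posterior_mean(prior_mean, prior_var, x, noise_var) - prior_mean) ** 2


def encoding_error(prior_mean, prior_var, x, y, noise_var=1.0):
    """Squared difference between estimates built from stimulus x vs. response y."""
    from_stimulus = posterior_mean(prior_mean, prior_var, x, noise_var)
    from_response = posterior_mean(prior_mean, prior_var, y, noise_var)
    return (from_stimulus - from_response) ** 2
```

Under these assumptions, the same stimulus shifts the estimate more when the prior is wide, and replacing a stimulus with a nearby response costs less when the prior is narrow, which matches the qualitative pattern described in the caption.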

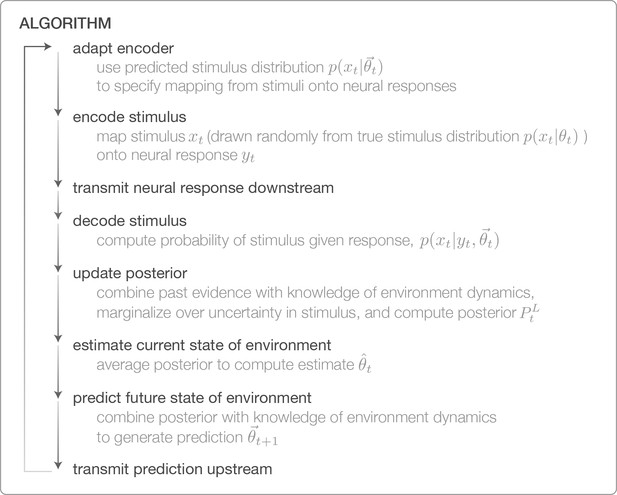

Algorithm for performing Bayesian inference with adaptively encoded stimuli.

https://doi.org/10.7554/eLife.32055.003

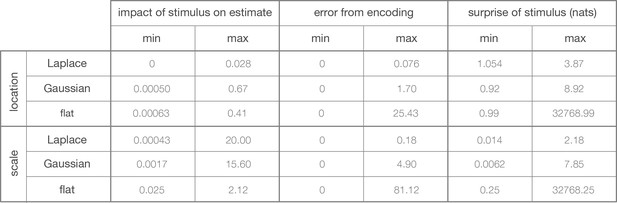

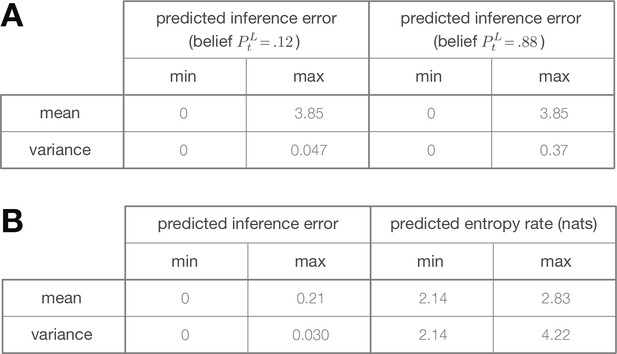

Minimum and maximum values of the color ranges shown in Figure 1B–D.

https://doi.org/10.7554/eLife.32055.004

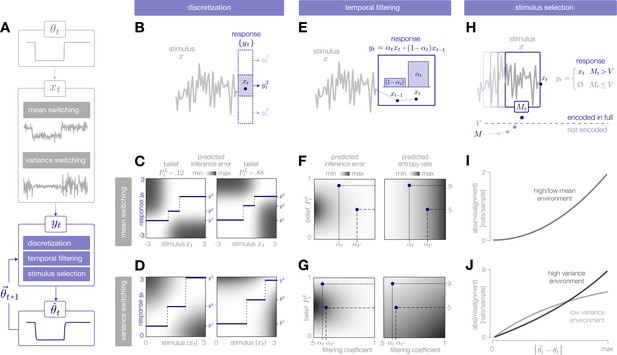

Adaptive encoding schemes.

(A) We consider a specific implementation of our general framework in which an environmental state switches between two values with fixed probability. This state parameterizes the mean or variance of a Gaussian stimulus distribution. Stimuli are drawn from this distribution and encoded in neural responses . We consider three encoding schemes that perform discretization (panels B-D), temporal filtering (panels E-G), or stimulus selection (panels H-J) on incoming stimuli. (B) (Schematic) At each timestep, an incoming stimulus (black dot) is mapped onto a discrete neural response level (solid blue rectangle) chosen from a set (dotted rectangles). (C–D) The predicted inference error induced by mapping a stimulus onto a neural response varies as a function of the observer’s belief about the state of the environment (shown for , left column; , right column). At each timestep, the optimal response levels (solid lines) are chosen to minimize this error when averaged over the predicted stimulus distribution. See Figure 2—figure supplement 1A for numerical values of color scale. (E) (Schematic) At each timestep, incoming stimuli are combined via a linear filter with a coefficient . (F–G) The average predicted inference error (left column) depends on the filter coefficient and on the observer’s belief about the state of the environment. At each timestep, the optimal filter coefficient (blue dot) is found by balancing error and entropy given a prediction of the environmental state ( and are shown for and , respectively). See Figure 2—figure supplement 1B for numerical values of color scale. (H) (Schematic) At each timestep, the encoder computes the misalignment between the predicted and measured surprise of incoming stimuli. If the misalignment exceeds a threshold , the stimulus is encoded with perfect fidelity; otherwise, the stimulus is not encoded. 
(I–J) The misalignment signal (computed here analytically; see Materials and methods) depends on the relationship between the predicted and true state of the environment. When the mean is changing over time (panel I), the misalignment depends only on the absolute difference between the true and predicted mean. When the variance is changing over time (panel J), the misalignment also depends on the true variance of the environment.
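As a rough illustration of the temporal filtering scheme in panel E, the sketch below assumes a first-order recursive filter in which each response mixes the current stimulus with the previous response. The exact filter form, the function name `temporal_filter`, and the choice to initialize the response at the first stimulus are assumptions of this sketch rather than details stated in the caption; the coefficient is also held fixed here, whereas the caption describes re-optimizing it at each timestep.

```python
def temporal_filter(stimuli, alpha):
    """Filter a stimulus sequence with a single coefficient alpha in [0, 1].

    alpha = 0 passes each stimulus through unchanged; alpha = 1 holds the
    response at its initial value. Intermediate values smooth the input.
    """
    responses = []
    y = stimuli[0]  # assumed initial condition
    for x in stimuli:
        y = (1.0 - alpha) * x + alpha * y
        responses.append(y)
    return responses
```

Larger coefficients produce smoother, more temporally correlated responses, which lowers the cost of encoding at the expense of fidelity.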

Minimum and maximum values of the color ranges shown in Figure 2.

Each entry reports the minimum or maximum value of (A) the predicted inference error induced by discretization (as shown in Figure 2C–D), or (B) the predicted inference error or predicted entropy rate induced by filtering (as shown in Figure 2F–G).

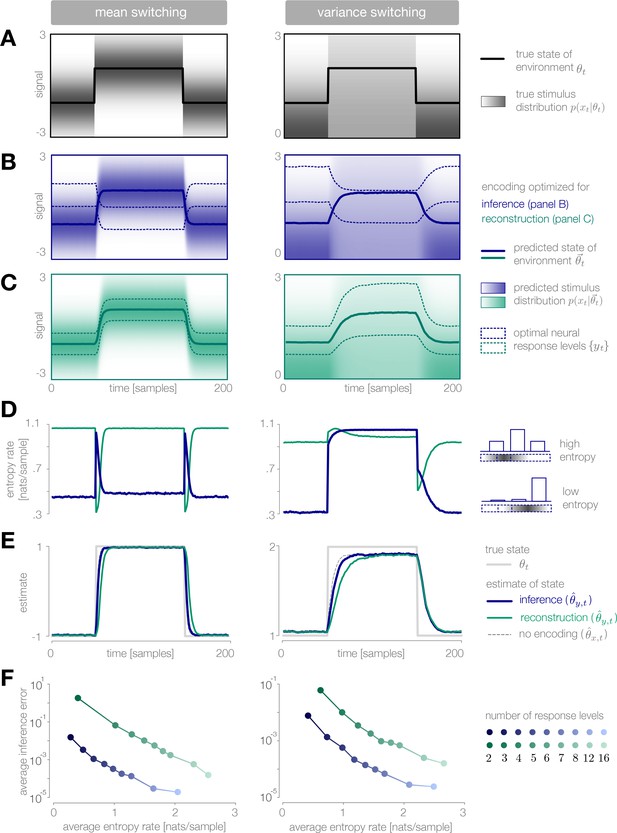

Dynamic inference with optimally-adapted response levels.

(A) We consider a probe environment in which a state (solid line) switches between two values at fixed time intervals. This state parametrizes the mean (left) or the variance (right) of a Gaussian stimulus distribution (heatmap). (B, C) Optimal response levels (dotted lines) are chosen to minimize error in inference (blue) or stimulus reconstruction (green) based on the predicted stimulus distribution (heatmap). Results are shown for three response levels. All probability distributions in panels A-C are scaled to the same range, . (B) Response levels optimized for inference devote higher resolution (narrower levels) to stimuli that are surprising given the current prediction of the environment. (C) Response levels optimized for stimulus reconstruction devote higher resolution to stimuli that are likely. (D) The entropy rate of the encoding is found by partitioning the true stimulus distribution (heatmap in panel A) based on the optimal response levels (dotted lines in panels B-C). Abrupt changes in the environment induce large changes in entropy rate that are symmetric for mean estimation (left) but asymmetric for variance estimation (right). Apparent differences in the baseline entropy rate for low- versus high-mean states arise from numerical instabilities. (E) Encoding induces error in the estimate . Errors are larger if the encoding is optimized for stimulus reconstruction than for inference. The error induced by upward and downward switches is symmetric for mean estimation (left) but asymmetric for variance estimation (right). In the latter case, errors are larger when inferring upward switches in variance. (F) Increasing the number of response levels decreases the average inference error but increases the cost of encoding. Across all numbers of response levels, an encoding optimized for inference (blue) achieves lower error at lower cost than an encoding optimized for stimulus reconstruction (green). 
All results in panels A-C and E are averaged over 500 cycles of the probe environment. Results in panel D were computed using the average response levels shown in panels B-C. Results in panel F were determined by computing time-averages of the results in panels D-E.

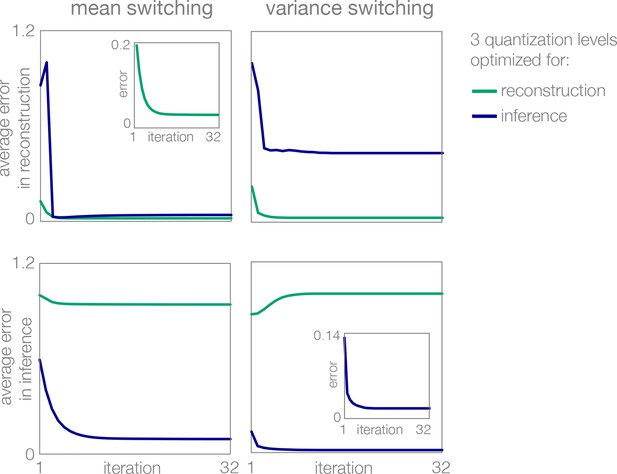

Learning of optimal response levels with Lloyd’s algorithm.

Optimization of three response levels (quantization levels) for reconstruction (green) differs from optimization for inference (blue). Regardless of the error function (distortion measure), the algorithm converges to an optimum.
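A minimal sketch of Lloyd's algorithm for scalar stimuli is given below. It uses the squared-error distortion, so it corresponds to the reconstruction case only; the inference-based error function is not reproduced here. The function names, the quantile initialization, and the fixed iteration count are assumptions made for illustration.

```python
import random


def lloyd_1d(samples, n_levels=3, n_iter=50):
    """Learn n_levels quantization levels that reduce mean squared error."""
    xs = sorted(samples)
    # Initialize the levels at evenly spaced sample quantiles (an assumption).
    levels = [xs[int((i + 0.5) * len(xs) / n_levels)] for i in range(n_levels)]
    for _ in range(n_iter):
        # Assignment step: map every sample to its nearest level.
        clusters = [[] for _ in levels]
        for x in xs:
            k = min(range(n_levels), key=lambda i: (x - levels[i]) ** 2)
            clusters[k].append(x)
        # Update step: move each level to the mean of its assigned samples.
        levels = [sum(c) / len(c) if c else lv for c, lv in zip(clusters, levels)]
    return levels


def mean_distortion(samples, levels):
    """Average squared error after mapping each sample to its nearest level."""
    return sum(min((x - lv) ** 2 for lv in levels) for x in samples) / len(samples)


if __name__ == "__main__":
    rng = random.Random(0)
    data = [rng.gauss(0.0, 1.0) for _ in range(2000)]
    print(lloyd_1d(data))
```

Swapping the squared error for a different distortion measure changes both the assignment rule and the update rule, which is where an inference-based objective would enter.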

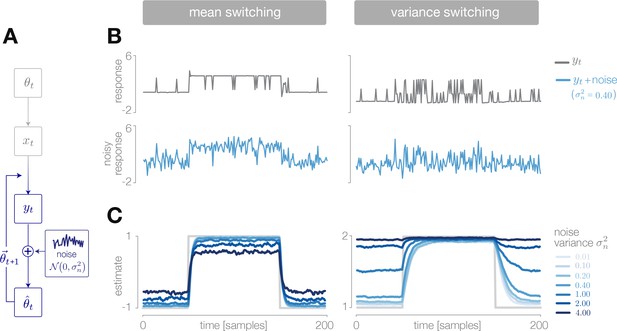

Deviations from optimal inference due to transmission noise.

(A) We simulated the noisy transmission of stimulus representations to downstream areas by injecting additive Gaussian noise into the response of a discretizing encoder. (B) The output response of the encoder is a discretized version of the incoming stimulus (upper row, gray). The observer receives a noisy version of the response (lower row, blue). (C) The estimate is robust at low noise levels but degrades significantly at high noise levels. Results are averaged over 500 cycles of the probe environment.
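The noise-injection procedure in panels A-B can be sketched as follows, assuming a fixed set of response levels and nearest-level discretization. The function names, the example levels, and the noise standard deviation are hypothetical; in the simulations described above, the response levels are adapted over time rather than fixed.

```python
import random


def discretize(x, levels):
    """Map a stimulus onto the nearest response level."""
    return min(levels, key=lambda lv: abs(x - lv))


def noisy_transmission(stimuli, levels, noise_sd, rng):
    """Discretize each stimulus, then add Gaussian noise to the response."""
    return [discretize(x, levels) + rng.gauss(0.0, noise_sd) for x in stimuli]


if __name__ == "__main__":
    rng = random.Random(0)
    stimuli = [rng.gauss(0.0, 1.0) for _ in range(5)]
    print(noisy_transmission(stimuli, [-1.0, 0.0, 1.0], 0.2, rng))
```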

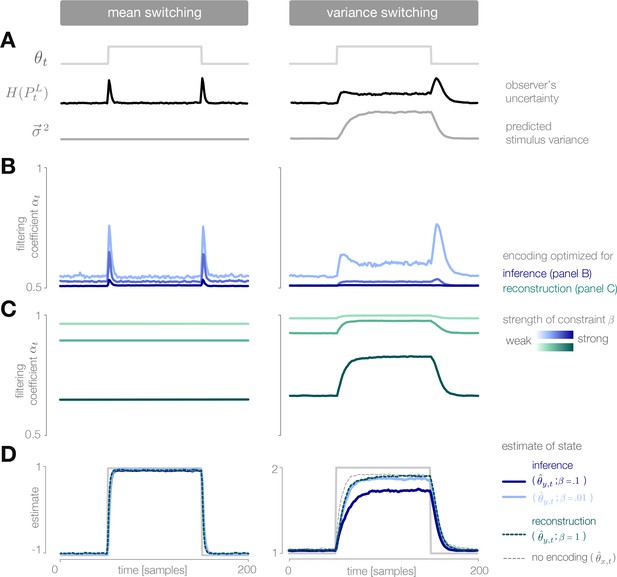

Dynamic inference with optimally-adapted temporal filters.

(A) The observer’s uncertainty () is largest when the environment is changing. The predicted stimulus variance (a proxy for both the predicted magnitude of the stimulus distribution, and the predicted surprise of incoming stimuli) is constant in a mean-switching environment (left) but variable in a variance-switching environment (right) (computed using a filter coefficient optimized for inference with a weak entropy constraint, corresponding to the lightest blue curves in panel B). (B, C) Optimal values of the filter coefficient are chosen at each timestep to minimize error in inference (blue) or stimulus reconstruction (green), subject to a constraint on predicted entropy. Darker colors indicate stronger constraints. (B) Filters optimized for inference devote high fidelity at times when the observer is uncertain and stimuli are predicted to be surprising. Shown for (left) and (right). (C) Filters optimized for reconstruction devote fidelity at times when the magnitude of the stimulus is predicted to be high. Shown for . (D) Filtering induces error into the estimate . Strong filtering has minimal impact on mean estimation (left), but induces large errors in the estimation of high variances (right). All results in panels A-D are averaged over 800 cycles of the probe environment.

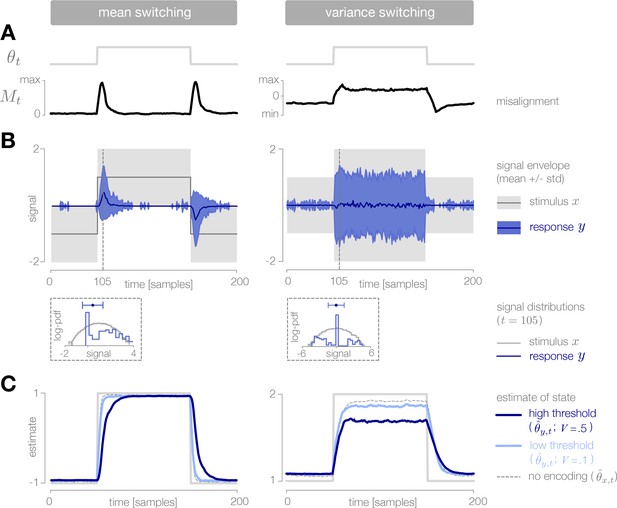

Dynamic inference with stimulus selection.

(A) When the environment is changing, the observer’s prediction is misaligned with the state of the environment. When this misalignment is large, stimuli are transmitted in full (). When this misalignment falls below a threshold , stimuli are not transmitted at all (). (B) The distribution of encoded stimuli changes over time, as can be seen by comparing the envelope of the stimulus distribution (gray) with the envelope of the neural responses (blue). Left: When the mean of the stimulus distribution changes abruptly, a large proportion of stimuli are encoded, and the mean of the neural response (blue line) approaches the mean of the stimulus distribution (black line). At times when the mean of the stimulus distribution is stable, very few stimuli are encoded, and the mean of the neural response drops to zero. Right: When the variance is low, very few stimuli are encoded. When the variance increases, the average surprise of incoming stimuli increases, and a large proportion of stimuli are encoded. The envelope of the neural response expands and approaches the envelope of the stimulus distribution. Insets: At times when the environment is changing (shown for ), the distribution of responses (blue) is sparser than the distribution of stimuli (gray), due to the large proportion of stimuli that are not encoded (indicated by the large peak in probability mass at ). Shown for . (C) Higher thresholds slow the observer’s detection of changes in the mean (left), and cause the observer to underestimate high variances (right). Threshold values are scaled relative to the maximum analytical value of the misalignment signal in the mean- and variance-switching environment (shown in Figure 2I and J, respectively). Results in panels B and C are averaged over 800 cycles of the probe environment.
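The thresholding rule in panel A can be illustrated with a simplified sketch. It assumes a Gaussian predicted stimulus distribution and defines misalignment for a single stimulus as its surprise minus the expected surprise (the entropy) of the predicted distribution. This single-stimulus, signed definition and all function names are assumptions of the sketch; the misalignment signal used in the paper is defined in Materials and methods and may differ.

```python
import math


def surprise(x, mean, var):
    """Negative log-probability of x under a Gaussian N(mean, var)."""
    return 0.5 * math.log(2.0 * math.pi * var) + (x - mean) ** 2 / (2.0 * var)


def predicted_surprise(var):
    """Expected surprise (differential entropy) of a Gaussian with variance var."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)


def select_stimulus(x, pred_mean, pred_var, threshold):
    """Transmit x in full if its misalignment exceeds the threshold, else send 0."""
    misalignment = surprise(x, pred_mean, pred_var) - predicted_surprise(pred_var)
    return x if misalignment > threshold else 0.0
```

With this rule, stimuli close to the predicted mean are suppressed, while stimuli far in the tails of the predicted distribution pass through unchanged.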

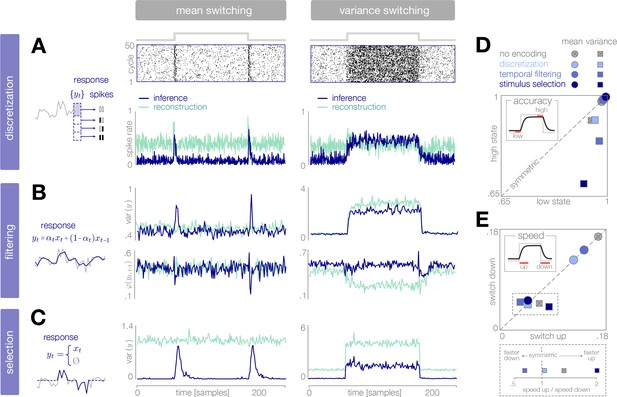

Dynamical signatures of adaptive coding schemes.

(A–C) We simulate the output of each encoder to repeated cycles of the probe environment. In the case of discretization (panel A), we use a simple entropy coding procedure to map optimal response levels onto spike patterns, as shown by the spike rasters. In the case of temporal filtering (panel B) and stimulus selection (panel C), we measure properties of the response . When encodings are optimized for inference (dark blue traces), abrupt changes in the mean of the stimulus distribution (panels A-C, left) are followed by transient increases in spike rate (discretization, panel A) and response variability (filtering, panel B; stimulus selection, panel C). In the case of temporal filtering, these changes are additionally marked by decreases in the temporal correlation of the response. In contrast, the response properties of encoders optimized for stimulus reconstruction (light green traces) remain more constant over time. Abrupt changes in variance (panels A-C, right) are marked by changes in baseline response properties. Responses show transient deviations away from baseline when encodings are optimized for inference, but remain fixed at baseline when encodings are optimized for reconstruction. In all cases, encodings optimized for inference maintain lower baseline firing rates, lower baseline variability, and higher baseline correlation than encodings optimized for stimulus reconstruction. Spike rates (panel A) are averaged over 500 cycles of the probe environment. Response variability (panels B-C) is computed at each timepoint across 800 cycles of the probe environment. Temporal correlation (panel B) is computed between consecutive timepoints across 800 cycles of the probe environment. (D–E) Encoding schemes impact both the accuracy (panel D) and speed (panel E) of inference. In all cases, the dynamics of inference are symmetric for changes in mean (points lie along the diagonal) but asymmetric for changes in variance (points lie off the diagonal). 
Encodings decrease the accuracy of estimating high-variance states (panel D), and they alter the speed of responding to changes in both mean and variance. The response to upward versus downward switches (dotted box) separates encoding schemes based on whether they are faster (right of dotted vertical line) or slower (left of dotted vertical line) to respond to increases versus decreases in variance. Speed and accuracy are measured from the trial-averaged trajectories of shown in Figure 3E, Figure 4D (), and Figure 5C () (Materials and methods).

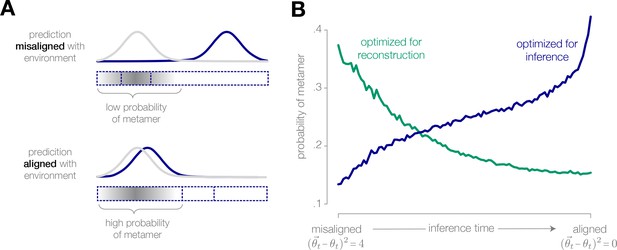

Physically different stimuli become indistinguishable to an adapted system optimized for inference.

(A) (Schematic) If the observer’s prediction is misaligned with the true state of the environment, the stimuli that are most likely to occur in the environment are mapped onto different neural responses. The probability of a metamer—defined by the set of stimuli that give rise to the same neural response—is low. Conversely, when the prediction is closely aligned with the environment, the probability of a metamer is high. (B) When an encoding is optimized for inference, the probability of a metamer increases as the observer’s prediction aligns with the environment, because an increasing fraction of stimuli are mapped onto the same neural response. The opposite trend is observed when the encoding is optimized for stimulus reconstruction (illustrated for a discretized encoder with eight response levels operating in a mean-switching environment).

Model inference task with natural stimuli.

(A) We model a simple task of inferring the variance of local curvature in a region of an image. The system encodes randomly drawn image patches that model saccadic fixations. Individual image patches are encoded in sparse population activity via V1-like receptive fields (see Figure 7—figure supplement 1). Image patches are then decoded from the population activity, contrast-normalized, and projected onto V2-like curvature filters. The observer computes the variance of these filter outputs. (B) After a gaze shift from an area of low curvature (bottom square, ) to an area of high curvature (top square, ), the observer must update its estimate of local curvature. (C) Image patches that are surprising given the observer’s estimate (red) have larger variance in curvature, while expected patches (white) have low variance in curvature. Frames of highly overlapping patches were slightly shifted for display purposes. (D) Individual image patches have a large impact on the observer’s estimate when the observer is uncertain and when image patches have high centered surprise, analogous to the behavior observed in simple model environments (see Figure 1B). Shown for . Impact spans the interval [0, 34.12]. (E) The observer can exploit its uncertainty to adapt the sparsity of the sensory encoding (heatmap; blue trace). When the observer is certain (white marker), population activity can be significantly reduced without changing the inference error. Increases in uncertainty (red marker) result in bursts of activity (red bar). An encoder optimized for constant reconstruction error produces activity that remains constant over time (green trace). Inference error spans the interval [0, 2.22]. (F) The observer can similarly exploit the predicted surprise of incoming stimuli to reduce population activity when stimuli are expected. Inference error spans the interval [0, 1.57].

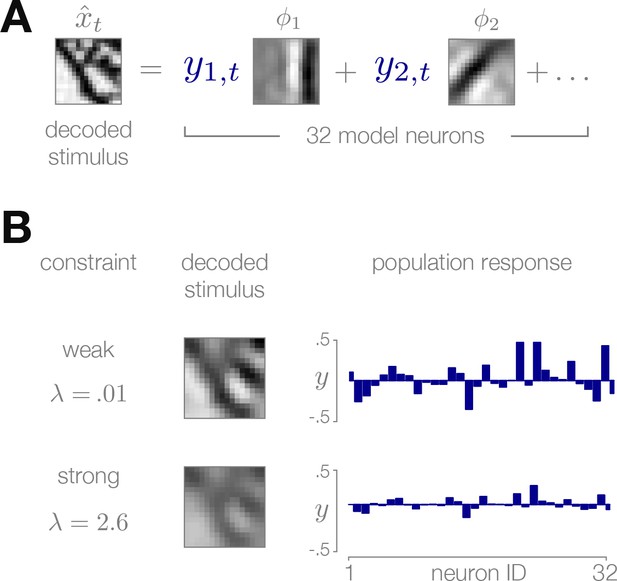

Sparse coding model of natural image patches.

(A) Each reconstructed image patch is represented as a linear combination of basis functions multiplied by sparse coefficients . (B) Small values of the sparsity constraint (upper row) result in a more accurate reconstruction of the stimulus, but require a stronger population response to encode. Large values of (bottom row) result in a weaker population response at the cost of decreased quality of the stimulus reconstruction.
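The trade-off in panel B can be sketched with a small sparse coding example. It assumes an L1 penalty on the coefficients and uses iterative soft thresholding (ISTA) to find them; the paper's actual optimization procedure is not specified in this caption, so the solver, step size, function names, and toy basis below are all assumptions.

```python
import math


def soft_threshold(v, t):
    """Shrink v toward zero by t, setting small values exactly to zero."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def reconstruct(basis, coeffs):
    """Linear combination of basis vectors weighted by the coefficients."""
    n = len(basis[0])
    return [sum(a * phi[j] for a, phi in zip(coeffs, basis)) for j in range(n)]


def sparse_code(x, basis, lam, step=0.1, n_iter=500):
    """Approximately minimize 0.5*||x - reconstruction||^2 + lam*||coeffs||_1.

    The step size must not exceed the inverse of the largest eigenvalue of
    the basis Gram matrix; 0.1 is safe for the toy basis used below.
    """
    coeffs = [0.0] * len(basis)
    for _ in range(n_iter):
        recon = reconstruct(basis, coeffs)
        residual = [xj - rj for xj, rj in zip(x, recon)]
        grads = [sum(r * p for r, p in zip(residual, phi)) for phi in basis]
        coeffs = [soft_threshold(a + step * g, step * lam)
                  for a, g in zip(coeffs, grads)]
    return coeffs


if __name__ == "__main__":
    toy_basis = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]  # unit-norm, overcomplete
    patch = [1.2, 1.6]
    for lam in (0.01, 10.0):
        print(lam, sparse_code(patch, toy_basis, lam))
```

A small penalty yields an accurate reconstruction with larger total coefficient magnitude, while a large penalty drives the coefficients to zero and degrades the reconstruction.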

Additional files

Transparent reporting form

- https://doi.org/10.7554/eLife.32055.016