Real-time experimental control using network-based parallel processing

Figures

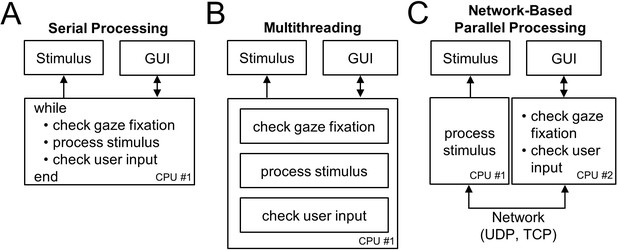

Experimental control frameworks.

(A) Serial processing: All processes are executed serially in a while-loop. (B) Multithreading: A single CPU executes multiple processes in parallel on different threads. (C) Network-based parallel processing: Multiple processes are executed in parallel on different CPUs coordinated over a network. Individual processes in CPU #2 can be implemented serially or using multithreading. Arrows indicate the direction of information flow.

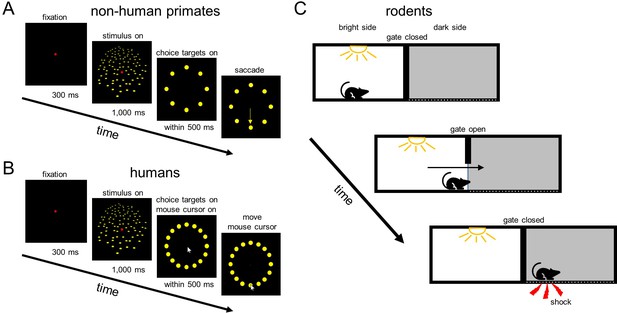

Behavioral tasks.

(A) Application 1: Neural basis of 3D vision in non-human primates. A monkey fixated a target (red) at the screen center for 300 ms. A planar surface was then presented at one of eight tilts for 1000 ms (one eye’s view is shown) while fixation was maintained. The plane and fixation target then disappeared, and eight choice targets corresponding to the possible tilts appeared. A liquid reward was provided for a saccade (yellow arrow) to the target in the direction that the plane was nearest. (B) Application 2: 3D vision in humans. The task was similar to the monkey task, but there were sixteen tilts/targets, and choices were reported using the cursor of a computer mouse that appeared at the end of the stimulus presentation. In A and B, dot sizes and numbers differ from those in the actual experiments. (C) Application 3: Passive avoidance task in mice. A mouse was placed in a bright room that was separated from a dark room by a gate. After the gate was lifted, the mouse entered the dark room. This was automatically detected by the GUI, which triggered an electric shock through the floor of the dark room.

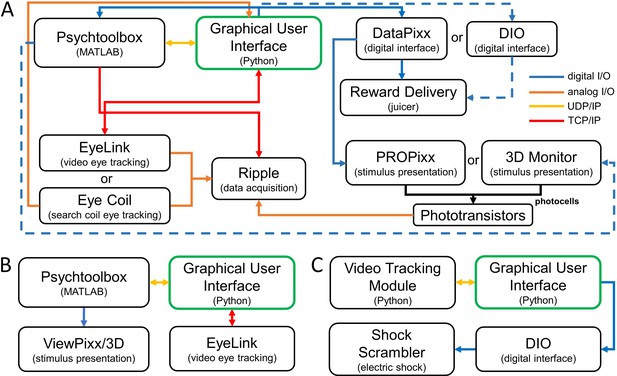

Three system configurations using the Real-Time Experimental Control with Graphical User Interface (REC-GUI) framework.

Experimental control and monitoring are achieved using a GUI (green box) that coordinates components such as: (i) stimulus rendering and presentation, (ii) external input devices, (iii) external output devices, and (iv) data acquisition. Arrows indicate the direction of information flow. (A) Application 1: Neural basis of 3D vision in non-human primates. This application implements a gaze-contingent vision experiment with neuronal recordings. Dashed lines show an alternative communication pathway. (B) Application 2: 3D vision in humans. This application implements a gaze-contingent vision experiment without external devices for data acquisition or reward delivery. (C) Application 3: Passive avoidance task in mice. This application uses a video tracking module and external shock scrambler to automate behavioral training and assessment.

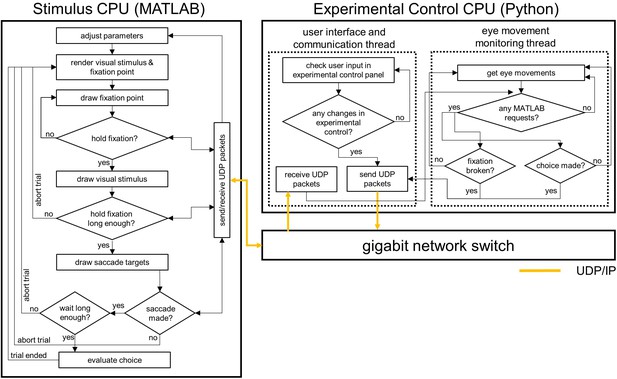

Experimental routine and communication flowchart between the stimulus CPU and experimental control CPU showing the exchange of parameters for stimulus rendering and behavioral control for Application 1.

Arrows indicate the direction of information flow.

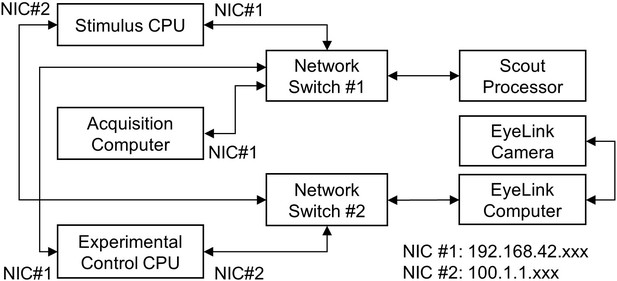

Network configuration for Application 1: Neural basis of 3D vision in non-human primates.

The Scout Processor and EyeLink have non-configurable IP addresses, so two network switches and multiple network interface cards (NICs) are required to route different subgroups of IP addresses to the stimulus and experimental control CPUs. Arrows indicate the direction of information flow.

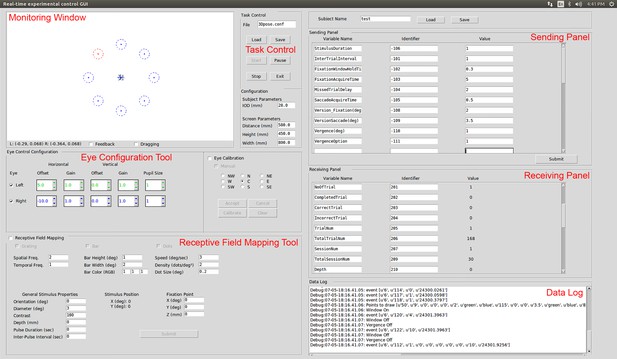

Graphical user interface.

The GUI is a customizable experimental control panel, configured here for vision experiments. The upper left corner is a monitoring window, showing a scaled depiction of the visual display. Fixation windows and eye position markers are at the center. Eight choice windows for Application 1 are also shown (correct choice in red, distractors in blue). Below the monitoring window are eye configuration and receptive field mapping tools, which can be substituted for other experiment-specific tools. Task control for starting, pausing, or stopping a protocol is at the center top, along with subject- and system-specific configuration parameters. The sending panel in the upper right allows the experimenter to modify task parameters in real time. The receiving panel below that is used to display information about the current stimulus and experiment progress. The lower right panel shows a data log.

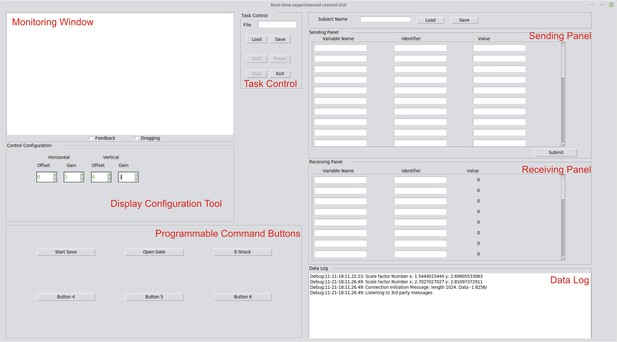

GUI configured with programmable command buttons for Application 3.

The display configuration tool controls the monitoring window panel. The programmable command buttons execute user-defined callback routines.

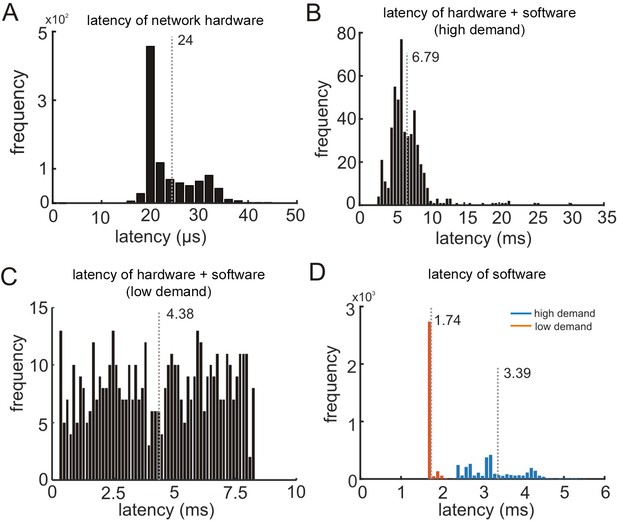

System performance.

(A) Latency introduced by the network hardware (N = 1000 pings). (B) Overall system performance measured using the round-trip latency of UDP packets between the experimental control (GUI) and stimulus (MATLAB) CPUs with complex stimulus rendering routines (‘high demand’ on the system; N = 500 round-trip packet pairs). (C) Same as B without the stimulus rendering routines (‘low demand’ on the system; N = 500). (D) Duration of the main while-loop in the stimulus CPU with complex stimulus rendering routines (blue bars) or without (orange bars), N = 3000 iterations each. Vertical gray dotted lines mark mean durations.
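The round-trip measurement described in panels B and C can be illustrated with a minimal sketch. It assumes a simple echo arrangement on the loopback interface: a thread stands in for the stimulus CPU and returns each UDP packet to the sender, and the sender times each exchange. The function names (`run_echo_server`, `measure_round_trips`), the packet contents, and the timeout are hypothetical and are not taken from the REC-GUI code.

```python
import socket
import threading
import time


def run_echo_server(sock, n_packets):
    """Echo each datagram back to its sender (stand-in for the stimulus CPU)."""
    try:
        for _ in range(n_packets):
            data, addr = sock.recvfrom(1024)
            sock.sendto(data, addr)
    except OSError:
        # Timeout or closed socket: stop echoing.
        return


def measure_round_trips(n_packets=50, host="127.0.0.1"):
    """Return a list of UDP round-trip latencies in milliseconds."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.settimeout(2.0)
    server.bind((host, 0))  # port 0 lets the OS choose a free port
    server_addr = server.getsockname()
    thread = threading.Thread(
        target=run_echo_server, args=(server, n_packets), daemon=True
    )
    thread.start()

    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client.settimeout(2.0)
    latencies_ms = []
    try:
        for i in range(n_packets):
            payload = str(i).encode()
            t0 = time.perf_counter()
            client.sendto(payload, server_addr)
            reply, _ = client.recvfrom(1024)
            t1 = time.perf_counter()
            if reply == payload:  # only count matched packet pairs
                latencies_ms.append((t1 - t0) * 1000.0)
    finally:
        client.close()
        thread.join(timeout=3.0)
        server.close()
    return latencies_ms


if __name__ == "__main__":
    samples = measure_round_trips(100)
    print(f"mean round trip: {sum(samples) / len(samples):.3f} ms")
```

On a loopback interface the values reflect only operating-system overhead. Latencies between two physical machines would also include the network hardware latency shown in panel A and any processing time on the echoing side.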

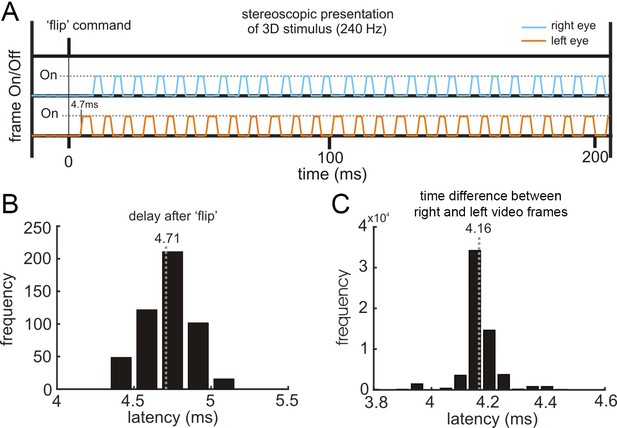

Quantifying the fidelity of external device control.

(A) Right and left eye frame signals measured on the screen. The anti-phase rise and fall of the two signals indicates that they are temporally synchronized. (B) Latency between the initial flip command in MATLAB and the appearance of the stimulus (N = 500 trials). (C) Time differences between the two eyes’ frames peak at 4.16 ms, indicating that the intended 240 Hz stimulus presentation was reliably achieved (N = 500 trials). Voltage traces were sampled at 30 kHz. Mean times are indicated by vertical gray dotted lines.
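The relationship between the 240 Hz presentation rate and the 4.16 ms peak in panel C is a matter of simple arithmetic, sketched below. The function name `frame_interval_ms` is illustrative only. The per-eye rate in the last line assumes that left and right eye frames strictly alternate, as the anti-phase signals in panel A suggest.

```python
def frame_interval_ms(refresh_hz):
    """Duration of one frame in milliseconds at the given refresh rate."""
    return 1000.0 / refresh_hz


interval = frame_interval_ms(240.0)  # about 4.17 ms between successive frames
per_eye_hz = 240.0 / 2.0             # assumption: frames alternate between the eyes
print(f"{interval:.2f} ms per frame, {per_eye_hz:.0f} Hz per eye")
```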

Application 1: Neural basis of 3D vision in non-human primates.

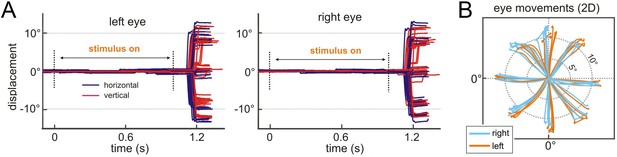

Verifying behavior-contingent experimental control. (A) Left and right eye traces as a function of time during the 3D orientation discrimination task, aligned to the stimulus onset (N = 34 trials). The traces end when the choice was detected by the GUI. Horizontal and vertical components of the eye movements are shown in purple and red, respectively. Version was enforced using a 2° window. Vergence was enforced using a 1° window (data not shown). (B) Two-dimensional (2D) eye movement traces shown from stimulus onset until the choice was detected by the GUI (same data as in A).

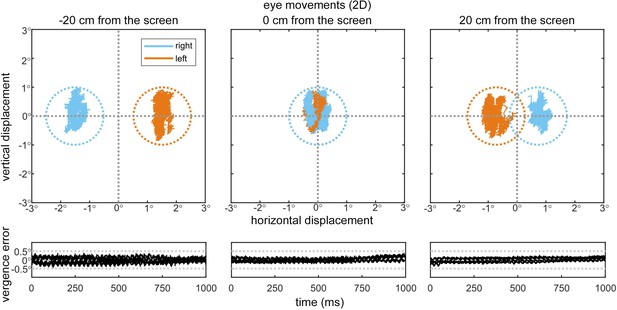

Enforcement of version and vergence during fixation at different depths.

Stereoscopically rendered targets were presented at three depths relative to the screen. Fixation was held on a target for 1 s. Top row shows eye traces while fixation was held at each depth (N = 4 trials per depth). Dotted circles show the 2° version windows. Bottom row shows vergence error. Gray dotted lines show the 1° vergence window.
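One way to express the version and vergence checks is sketched below. It uses the common definitions of version as the average of the two eyes' positions and vergence as the difference between their horizontal positions. Several points are assumptions rather than details from the source: the window sizes are treated as full widths (so the permitted error is half the window), the version window is circular, and the function name `fixation_ok` and its arguments are hypothetical.

```python
import math


def fixation_ok(left, right, target, target_vergence_deg,
                version_window_deg=2.0, vergence_window_deg=1.0):
    """Return True if both version and vergence fall inside their windows.

    left, right, target: (horizontal, vertical) positions in degrees.
    target_vergence_deg: vergence angle required to fixate the target depth.
    """
    # Version: conjugate eye position, taken as the mean of the two eyes.
    version_x = (left[0] + right[0]) / 2.0
    version_y = (left[1] + right[1]) / 2.0
    version_err = math.hypot(version_x - target[0], version_y - target[1])

    # Vergence: difference between the horizontal positions of the two eyes.
    vergence = left[0] - right[0]
    vergence_err = abs(vergence - target_vergence_deg)

    # Assumption: window sizes are full widths, so compare against half.
    return (version_err <= version_window_deg / 2.0
            and vergence_err <= vergence_window_deg / 2.0)


# Both eyes near a target at screen depth: inside both windows.
print(fixation_ok((0.1, 0.0), (-0.1, 0.0), (0.0, 0.0), 0.0))
```

Checking the two quantities separately means that a fixation at the correct screen location but the wrong depth fails the test, which is the behavior a depth-varying fixation task requires.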

Application 2: 3D vision in humans.

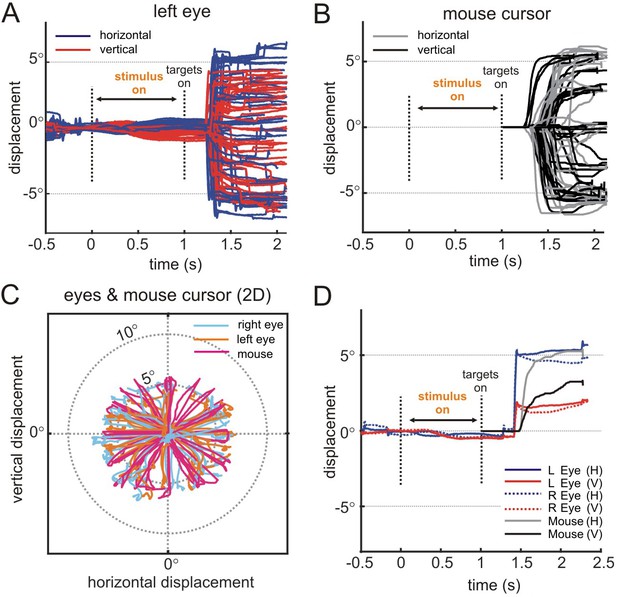

Verifying behavior-contingent experimental control. (A) Left eye traces as a function of time during the 3D orientation discrimination task, aligned to the stimulus onset (N = 34 trials). The traces end when the choice (mouse click on a target) was detected by the GUI. Horizontal and vertical components of the eye movements are shown in purple and red, respectively. Version was enforced using a 3° window. Vergence was enforced using a 2° window (data not shown). (B) Computer mouse cursor traces for the same trials. The cursor appeared at the location of the fixation target at the end of the stimulus presentation. (C) 2D eye and mouse cursor traces ending when the choice was detected by the GUI. (D) Movement of both eyes and the mouse cursor for a single trial. Note that the movement of the mouse cursor was delayed relative to the movement of the eyes but caught up shortly after movement initiation.

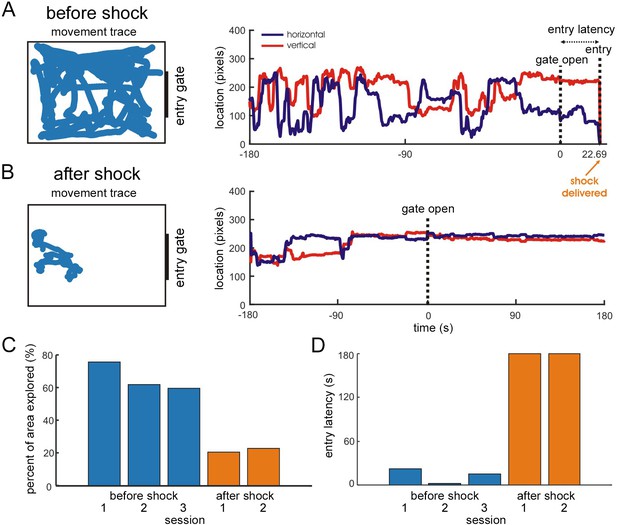

Application 3: Passive avoidance task in mice.

Verifying automated behavior-contingent experimental control. (A,B) The left column shows the movement of a mouse (blue trace) within the bright room before (A) and after (B) foot shock. The right column shows the movement as a function of time, and marks the gate opening. In A, the mouse’s entry into the dark room and the foot shock are also marked. (C) The percentage of the bright room explored by the mouse before (blue bars) and after (orange bars) foot shock. The mouse explored much less area after the shock. (D) Time to enter the dark room after the gate was opened. Before the foot shock (blue bars), the mouse quickly entered the dark room. After the foot shock (orange bars), the mouse never entered the dark room (sessions were ended after 3 minutes).
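A measure like the percentage of the room explored in panel C could be computed from a tracked trajectory in several ways. The sketch below shows one possibility, not necessarily the method used for the figure: the room is divided into a grid and the fraction of cells the animal visited is reported. The function name `percent_explored`, the grid resolution, and the room dimensions are all hypothetical.

```python
def percent_explored(track, room_width, room_height, n_cols=10, n_rows=10):
    """Percentage of grid cells visited by a trajectory of (x, y) positions."""
    visited = set()
    for x, y in track:
        # Ignore tracking samples that fall outside the room.
        if 0 <= x <= room_width and 0 <= y <= room_height:
            col = min(int(x / room_width * n_cols), n_cols - 1)
            row = min(int(y / room_height * n_rows), n_rows - 1)
            visited.add((row, col))
    return 100.0 * len(visited) / (n_cols * n_rows)


# Three samples that fall in two distinct cells of a 10 x 10 grid.
track = [(0.5, 0.5), (1.5, 0.5), (0.6, 0.4)]
print(percent_explored(track, room_width=10.0, room_height=10.0))
```

The result depends on the grid resolution, so comparisons between sessions are only meaningful when the same grid is used for both.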

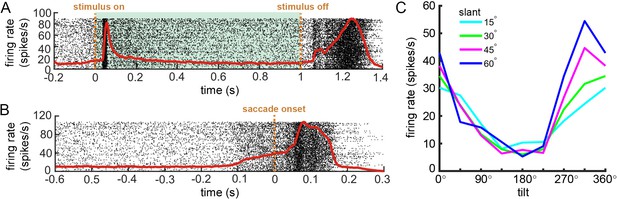

Temporal alignment of action potentials to stimulus-related and behavioral events.

(A) Raster plot showing spike times aligned to the stimulus onset (N = 545 trials). Shaded region marks the stimulus duration. (B) Raster plot showing spike times aligned to the saccade onset. Each row is a different trial, and each dot marks a single action potential. Red curves are spike density functions. (C) 3D surface orientation tuning. Each curve shows tilt tuning at a fixed slant.
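The alignment and smoothing behind raster plots and spike density functions can be sketched as follows. Spike times are re-expressed relative to each event (stimulus or saccade onset) to form the raster rows, and the rows are then smoothed with a kernel and averaged across trials. The Gaussian kernel, its 20 ms width, the analysis window, and the function names are assumptions made for illustration. The caption does not specify how the curves were computed.

```python
import math


def align_spikes(spike_times, event_times, window=(-0.5, 1.5)):
    """Return one list of event-relative spike times (s) per event (raster rows)."""
    rows = []
    for ev in event_times:
        rows.append([t - ev for t in spike_times
                     if window[0] <= t - ev <= window[1]])
    return rows


def spike_density(rows, t_grid, sigma=0.02):
    """Trial-averaged firing rate (spikes/s) from a Gaussian kernel of width sigma."""
    if not rows:
        return [0.0 for _ in t_grid]
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    density = []
    for t in t_grid:
        total = sum(math.exp(-0.5 * ((t - s) / sigma) ** 2)
                    for row in rows for s in row)
        density.append(norm * total / len(rows))
    return density


# Two events (e.g. stimulus onsets) and three spikes on a shared clock.
rows = align_spikes([1.0, 1.2, 5.1], [1.0, 5.0], window=(-0.5, 0.5))
rate = spike_density(rows, [0.0, 0.1, 0.2])
print(rows, rate)
```

Accurate alignment of this kind depends on the spike times and event times sharing a common clock, which is why the temporal fidelity of event markers matters for the analysis.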

Additional files

Transparent reporting form

- https://doi.org/10.7554/eLife.40231.016