Neural dynamics of visual ambiguity resolution by perceptual prior

Figures

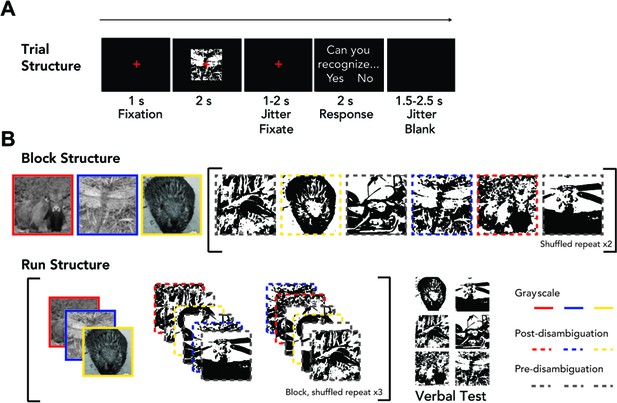

Task paradigm.

(A) Trial structure. The left/right position (and corresponding button) of the ‘Yes’/‘No’ answers was randomized from trial to trial. (B) Block and run structure. Each block includes 15 trials: three grayscale images, six Mooney images in a randomized order, then a repeat of these six Mooney images in a randomized order. Three of the six Mooney images correspond to the grayscale images presented in the same run and are presented post-disambiguation. The other three Mooney images are presented pre-disambiguation, and their matching grayscale images are shown in the following run. An experimental run consists of a block presented three times with randomized image order, followed by a verbal test (for details, see Materials and methods). The colored frames around the Mooney images were not shown to subjects.
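The block and run structure in (B) can be sketched as follows. This is a minimal illustration rather than the experiment code: the function names and image identifiers are hypothetical, and reshuffling the grayscale images on each repeat is an assumption based on the phrase "randomized image order".

```python
import random

def build_block(grayscale, mooney, rng):
    """Return one 15-trial block: 3 grayscale images, then the 6 Mooney
    images in random order, then the same 6 Mooney images reshuffled."""
    if len(grayscale) != 3 or len(mooney) != 6:
        raise ValueError("expected 3 grayscale and 6 Mooney images")
    first_pass = rng.sample(mooney, k=len(mooney))
    second_pass = rng.sample(mooney, k=len(mooney))
    return list(grayscale) + first_pass + second_pass

def build_run(grayscale, mooney, rng, n_repeats=3):
    """Return a run: the block presented n_repeats times.
    Assumption: grayscale order is also reshuffled on every repeat."""
    return [
        build_block(rng.sample(grayscale, k=len(grayscale)), mooney, rng)
        for _ in range(n_repeats)
    ]

rng = random.Random(0)
run = build_run(["g1", "g2", "g3"], ["m1", "m2", "m3", "m4", "m5", "m6"], rng)
```

Each block in `run` then holds 15 trials, with every Mooney image appearing twice.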

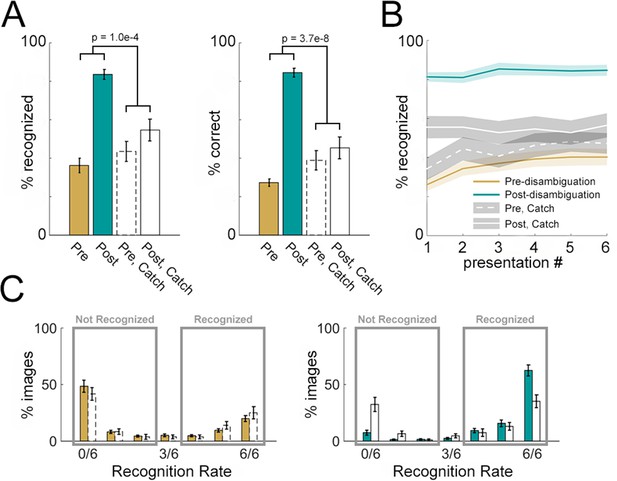

Disambiguation-related behavioral effects.

(A) Subjective recognition rates (left, averaged across six presentations) and correct verbal identification rates (right) for Mooney images presented in the pre- and post-disambiguation period (gold and teal bars). Corresponding results for catch images are shown in open bars. p-Values corresponding to the interaction effect (pre vs. post × catch vs. non-catch) of two-way ANOVAs are shown in the graph. (B) Subjective recognition rates grouped by presentation number in the pre- and post-disambiguation stage (gold and teal), as well as the corresponding rates for catch images (white lines). (C) Distribution of subjective recognition rates across Mooney images in the pre- (gold bars, left) and post- (teal bars, right) disambiguation stage. Corresponding distributions for catch images are shown as open bars. All results show mean and s.e.m. across subjects.

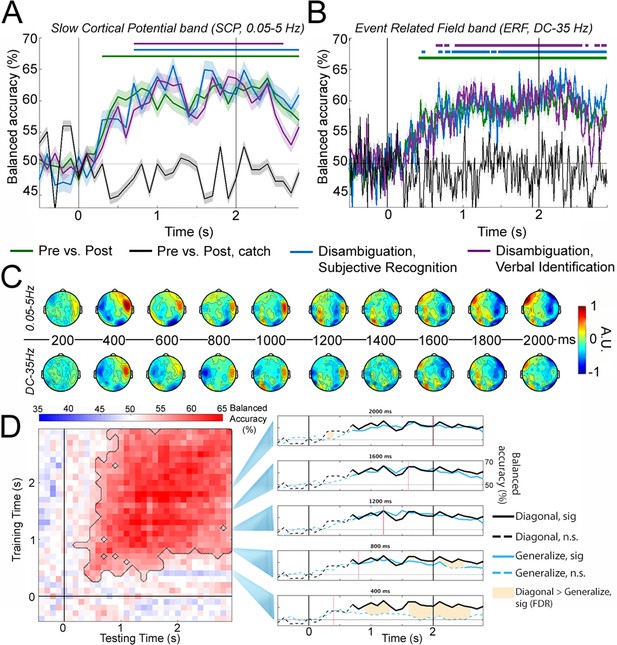

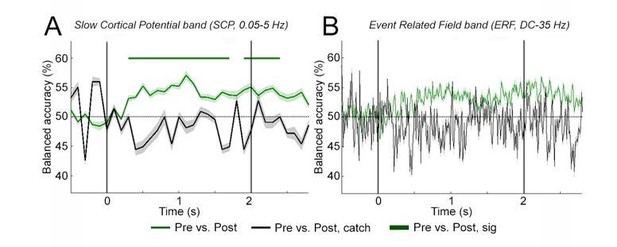

Decoding perceptual state information.

(A) Decoding accuracy using SCP (0.05–5 Hz) activity across all sensors. Classifiers were constructed to decode i) presentation stage for all 33 Mooney images (Pre vs. Post; green); ii) presentation stage using only Mooney images that are not recognized pre-disambiguation and recognized post-disambiguation (Disambiguation, subjective recognition; blue); iii) presentation stage using only Mooney images that are not identified pre-disambiguation and identified post-disambiguation (Disambiguation, verbal identification; magenta); iv) ‘presentation stage’ for catch images, where the grayscale images did not match the Mooney images (black). Shaded areas represent s.e.m. across subjects. Horizontal bars indicate significant time points (p<0.05, cluster-based permutation tests). (B) Same as A, but for ERF (DC – 35 Hz) activity. (C) Activation patterns transformed from decoder weight vectors of the ‘Disambiguation, subjective recognition’ decoder constructed using SCP (top row) and ERF (bottom row) activity, respectively. (D) Left: Temporal generalization matrix (TGM) for the ‘Disambiguation, subjective recognition’ decoder constructed using SCP. Significance is outlined by the dotted black trace. Right: Cross-time generalization accuracy for classifiers trained at five different time points (marked as red vertical lines; blue traces, corresponding to rows in the TGM). The within-time decoding accuracy (corresponding to the diagonal of the TGM and blue trace in A) is plotted in black for comparison. Solid traces show significant decoding (p<0.05, cluster-based permutation test); shaded areas denote significant differences between within- and across-time decoding (p<0.05, FDR corrected). The black vertical bars in A, B, and D denote onset (0 s) and offset (2 s) of image presentation.
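The cross-time generalization in (D) can be illustrated with a small sketch: a classifier is fit at one time point and evaluated at every other time point, so that each row of the resulting matrix corresponds to one training time and the diagonal gives within-time decoding. The nearest-centroid classifier used here is a stand-in chosen for brevity, not the classifier used in the study, and all names and the toy data are hypothetical.

```python
def centroid(rows):
    """Mean sensor pattern across trials (each row is one trial)."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def sq_dist(a, b):
    """Squared Euclidean distance between two sensor patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def temporal_generalization(train, train_labels, test, test_labels):
    """Return tgm[t_train][t_test]: accuracy of a nearest-centroid
    classifier fit at t_train and evaluated at t_test.
    Data are indexed as data[trial][time][sensor]."""
    n_times = len(train[0])
    classes = sorted(set(train_labels))
    tgm = []
    for t_train in range(n_times):
        # Fit: one centroid per class from the training trials at t_train.
        cents = {
            c: centroid([tr[t_train] for tr, lab in zip(train, train_labels) if lab == c])
            for c in classes
        }
        row = []
        for t_test in range(n_times):
            correct = 0
            for tr, lab in zip(test, test_labels):
                pred = min(classes, key=lambda c: sq_dist(tr[t_test], cents[c]))
                correct += pred == lab
            row.append(correct / len(test))
        tgm.append(row)
    return tgm

# Toy data: the class pattern flips sign between time 0 and time 1,
# so decoding works within time but fails across time.
toy = [
    [[1, 0], [-1, 0]],
    [[1, 0], [-1, 0]],
    [[-1, 0], [1, 0]],
    [[-1, 0], [1, 0]],
]
toy_labels = ["pre", "pre", "post", "post"]
toy_tgm = temporal_generalization(toy, toy_labels, toy, toy_labels)
```

In a real analysis the training and test trials would come from separate cross-validation folds; the toy example reuses the same trials only to keep the sketch short.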

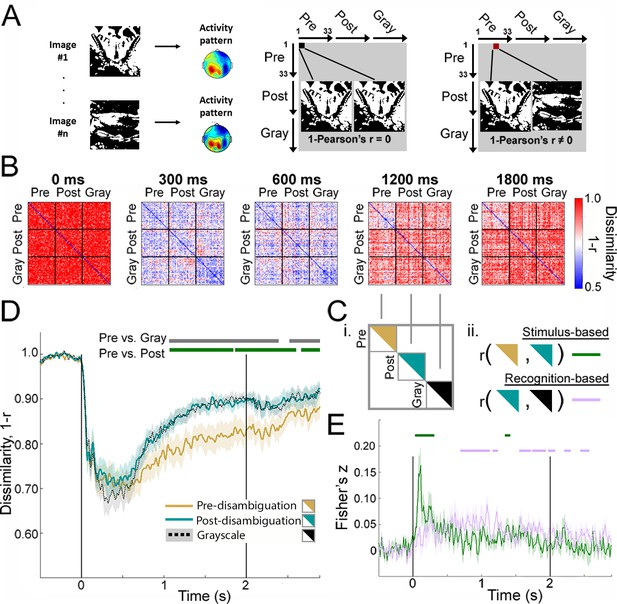

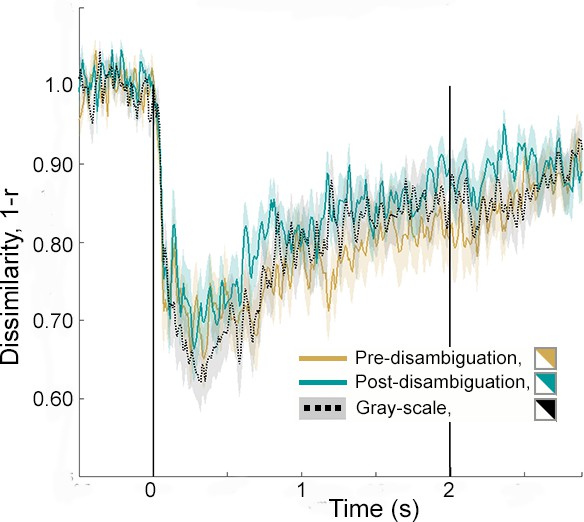

Image-level representations are influenced by prior information.

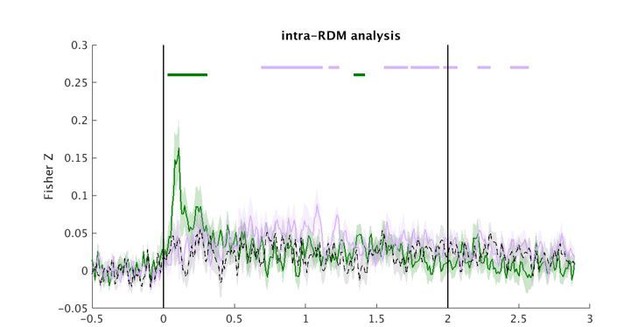

(A) Schematic for RSA. See Materials and methods, RSA, for details. (B) Group-average MEG representation dissimilarity matrices (RDMs) at selected time points. (C) Schematics for analyses shown in D and E. (D) Mean across-image representational dissimilarity in each perceptual condition, calculated by averaging the elements in the upper triangles for each condition in the RDM (see C-i). Horizontal bars denote significant differences between conditions (p<0.05, cluster-based permutation tests). (E) Results from the intra-RDM analysis showing time courses of neural activity related to ‘stimulus-based’ and ‘recognition-based’ representation, obtained by performing element-wise correlations between the Pre and Post triangles in the RDM, and between the Post and Gray triangles, respectively (see C-ii). Correlation values were Fisher-z-transformed. Horizontal bars denote significance for each time course (p<0.05, cluster-based permutation tests). Shaded areas in D and E show s.e.m. across subjects.
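The quantities in (D) and (E) can be sketched as below, assuming the 1 – r dissimilarity named in the caption. Function names and the index lists that pick out each condition's images are hypothetical; the actual procedure is described in Materials and methods, RSA.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def rdm(patterns):
    """1 - r dissimilarity between every pair of sensor patterns."""
    n = len(patterns)
    return [[1.0 - pearson(patterns[i], patterns[j]) for j in range(n)] for i in range(n)]

def triangle(matrix, idx):
    """Upper-triangle entries of the within-condition block selected by idx."""
    return [matrix[i][j] for a, i in enumerate(idx) for j in idx[a + 1:]]

def mean_dissimilarity(matrix, idx):
    """Panel D: mean across-image dissimilarity within one condition."""
    tri = triangle(matrix, idx)
    return sum(tri) / len(tri)

def triangle_similarity(matrix, idx_a, idx_b):
    """Panel E: Fisher-z-transformed element-wise correlation between the
    triangles of two conditions (e.g. Pre vs. Post, or Post vs. Gray)."""
    return math.atanh(pearson(triangle(matrix, idx_a), triangle(matrix, idx_b)))
```

With images ordered identically in every condition, `idx_a` and `idx_b` pick out matching image pairs, so the correlation compares the same pairwise relationships across conditions.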

Mean across-image representational dissimilarity in each perceptual condition for catch image sets.

Format is similar to Figure 4D, except that representational dissimilarity was computed using catch image sets. For catch image sets, the grayscale images presented did not correspond to their respective Mooney images; that is, no prior information was provided. There was no significant difference between perceptual conditions.

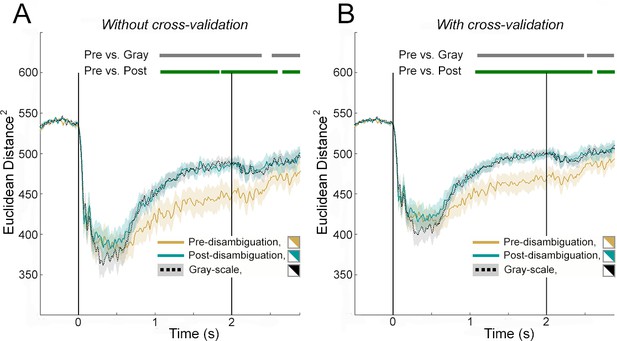

Mean across-image representational dissimilarity in each perceptual condition for real image sets, calculated using Euclidean distance.

Similar to Figure 4D, except that representational dissimilarity was computed as squared Euclidean distance. Horizontal bars denote significant differences between conditions (p<0.05, cluster-based permutation tests). Non-cross-validated (A) and cross-validated (B) results yield similar time courses differing slightly in overall amplitude. Significant differences between Pre and Post conditions begin at 1070 ms for both methods. Significant differences between Pre and Gray conditions for non-cross-validated and cross-validated results begin at 1060 ms and 1100 ms, respectively. Cross-validation was carried out using a three-fold scheme. Note that due to the preprocessing step of normalizing MEG data across sensors at each time step (see Materials and methods, RSA), both Euclidean distance and the original 1 – r measure capture the angle between two population activity vectors (for discussions on the angle measure, see Baria et al., 2017). In addition, because of baseline correction (see Materials and methods, MEG data preprocessing), the angle difference is necessarily largest in the pre-stimulus period.
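The link between the two measures can be made explicit under one assumption not stated in the caption: that normalization across sensors means z-scoring, so each pattern x over n sensors has zero mean and unit (population) variance. In that case squared Euclidean distance is a linear function of 1 – r, and r equals the cosine of the angle θ between the two vectors:

```latex
\|x - y\|^2
  = \sum_{i=1}^{n} x_i^2 + \sum_{i=1}^{n} y_i^2 - 2\sum_{i=1}^{n} x_i y_i
  = n + n - 2nr
  = 2n\,(1 - r),
\qquad
r = \frac{1}{n}\sum_{i=1}^{n} x_i y_i = \cos\theta
```

Both measures are therefore monotonic functions of θ, which is consistent with the similar time courses reported for the two distance measures.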

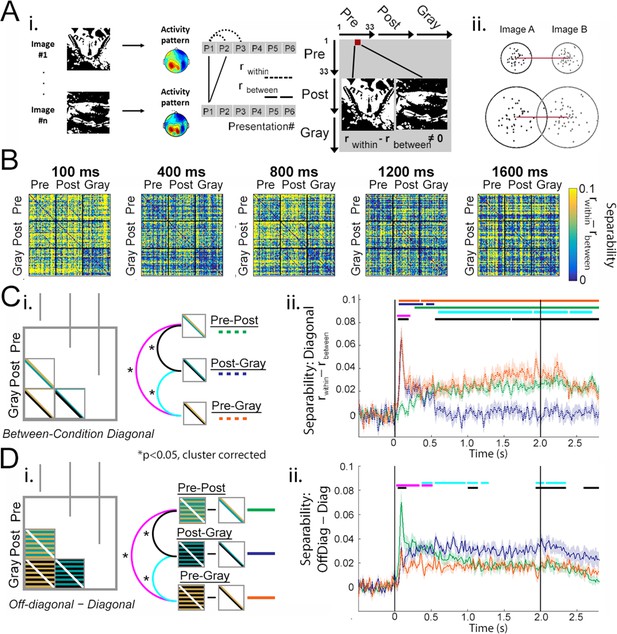

Single-trial separability analysis.

(A) i: Schematic for separability calculation. For details, see Materials and methods, Single-trial separability. ii: Two hypothetical examples of single-trial neural activity patterns (projected to a 2-D plane) for Image A (black dots) and Image B (gray dots). The neural dissimilarity calculated based on trial-averaged activity patterns (1 – r measure used earlier) is identical between the two examples, while single-trial separability (r_within − r_between) is higher in the top example. (B) Group-average MEG RDMs computed with the separability (r_within − r_between) measure at selected time points. (C) Quantifying separability between neural activity patterns elicited by the same/matching image presented in different conditions. (i) Analysis schematic: diagonal elements in the between-condition squares of the RDM are averaged together, yielding three time-dependent outputs corresponding to the three condition-pairs. (ii) Separability time courses averaged across 33 real image sets for each between-condition comparison, following the color legend shown in C-i. The top three horizontal bars represent significant (p<0.05, cluster-based permutation test) time points of each time course compared to chance level (0); and the bottom three bars represent significance of pairwise comparisons between the time courses. (D) Quantifying the difference between off-diagonal and diagonal elements in the between-condition squares of the separability RDMs. Intuitively, this analysis captures how similar an image is to itself or its matching version presented in a different condition over and above its similarity to other images presented in that same condition. Statistical significance (p<0.05, cluster-based permutation test) for pairwise comparisons is shown as horizontal bars. When compared to chance (0), the three traces are significant from 40 ms (Pre-Post), 50 ms (Pre-Gray), and 60 ms (Post-Gray) onward until after image offset, respectively. Traces in C-ii and D-ii show mean and s.e.m. across subjects.
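A minimal sketch of the separability measure follows. It assumes that r_within is the mean correlation between pairs of distinct trials of the same image and r_between is the mean correlation between trials of different images; the exact definition is given in Materials and methods, Single-trial separability, and may differ. The function names are hypothetical.

```python
import math
from itertools import combinations, product

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def separability(trials_a, trials_b):
    """r_within - r_between for two images.
    trials_a, trials_b: lists of single-trial sensor patterns."""
    # Within-image: all pairs of distinct trials of the same image.
    within = [
        pearson(x, y)
        for trials in (trials_a, trials_b)
        for x, y in combinations(trials, 2)
    ]
    # Between-image: every trial of image A against every trial of image B.
    between = [pearson(x, y) for x, y in product(trials_a, trials_b)]
    return sum(within) / len(within) - sum(between) / len(between)
```

Because the measure depends on trial-to-trial consistency, two image pairs with the same trial-averaged 1 – r can differ in separability, which is the point of the two examples in A-ii.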

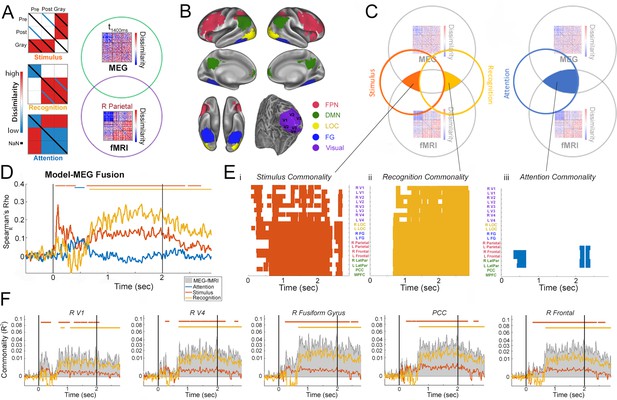

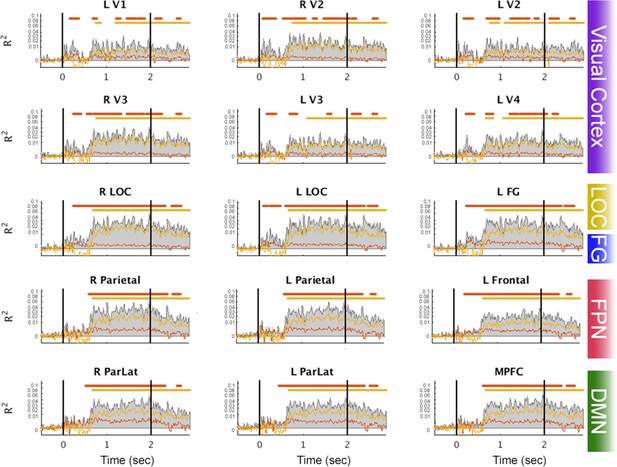

Model-based MEG-fMRI fusion analysis.

(A) Model RDMs and analysis cartoon. Left: RDMs corresponding to ‘Stimulus’, ‘Recognition’, and ‘Attention’ models. For details, see Results. Right: MEG RDM from each time point and fMRI RDM from each ROI are compared, and shared variance between them that is accounted for by each model is computed. (B) ROIs used in the fMRI analysis. For details, see Materials and methods. These were defined based on a previous study (González-García et al., 2018). (C) Schematics for the commonality analyses employed in the model-based MEG-fMRI data fusion (results shown in E-F). Because neural activities related to the Stimulus and Recognition models overlap in time (see D), to dissociate them, variance uniquely attributed to each model was calculated (left). Shared variance between MEG and fMRI RDMs accounted for by the Attention model is also assessed (right). (D) Correlation between model RDMs and MEG RDMs at each time point. Horizontal bars denote significant correlation (p<0.05, cluster-based permutation tests). (E) Commonality analysis results for Stimulus (i), Recognition (ii), and Attention (iii) models. Colors denote significant (p<0.05, cluster-based permutation tests) presence of neural activity corresponding to the model in a given ROI and at a given time point (with 10 ms steps). (F) Commonality time courses for the Stimulus (red) and Recognition (yellow) models (analysis schematic shown in panel C, left) for five selected ROIs, showing shared MEG-fMRI variance explained by each model. Total shared variance between MEG and fMRI RDMs for each ROI is plotted as gray shaded area. PCC: posterior cingulate cortex; R Frontal: right frontal cortex in the FPN. Horizontal bars denote significant model-related commonality (p<0.05, cluster-based permutation tests).
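For orientation, the standard commonality coefficients that correspond to the two schematics in (C) can be written as below. This is the textbook formulation, given here as an assumption about the general approach; the exact variant used in the study is specified in the Results and Materials and methods. R² denotes the variance in the MEG RDM explained by the listed predictors, with F the fMRI RDM, A the Attention model, and S and R the Stimulus and Recognition models.

```latex
% Right schematic: variance shared by MEG and fMRI that the Attention model accounts for
C_{F,A} = R^2_{\mathrm{MEG}\cdot F} + R^2_{\mathrm{MEG}\cdot A} - R^2_{\mathrm{MEG}\cdot FA}

% Left schematic: variance shared by MEG, fMRI and the Stimulus model, excluding Recognition
C_{F,S \mid R} = R^2_{\mathrm{MEG}\cdot FR} + R^2_{\mathrm{MEG}\cdot SR}
               - R^2_{\mathrm{MEG}\cdot R} - R^2_{\mathrm{MEG}\cdot FSR}
```

The coefficient for the Recognition model follows by swapping the roles of S and R in the second expression.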

Stimulus and Recognition commonality results for all remaining ROIs.

Format is the same as Figure 6F.

Same as Figure 4E, now showing element-wise correlations between the Pre-Pre and Gray-Gray triangles in the RDM as the black dashed line.

No significant cluster was found for this comparison at a level of p < 0.05 (cluster-based permutation test).

Same as Figure 3A-B (black and green traces), except that 6 randomly selected real image sets were used to match the statistical power of catch image sets.

Results from 10 such randomly selected subsets were averaged together, and mean and s.e.m. of decoding accuracy across subjects are plotted for both real (green) and catch (black) image sets. Horizontal bars indicate significant difference from chance level (p < 0.05, FDR corrected). Catch results are identical to those in Figure 3A-B.
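The subsampling control can be sketched as follows. The `decode` callable is a hypothetical placeholder for the decoding pipeline, which is not specified in this caption; only the draw-and-average logic is illustrated.

```python
import random

def subsampled_accuracy(real_sets, decode, n_keep=6, n_draws=10, seed=0):
    """Average decoding accuracy over random subsets of real image sets.

    real_sets: identifiers of all real image sets (33 in the study).
    decode:    hypothetical callable mapping a list of image sets to an accuracy.
    n_keep:    subset size, matched to the number of catch image sets.
    n_draws:   number of random subsets to average over.
    """
    rng = random.Random(seed)
    accuracies = [decode(rng.sample(real_sets, k=n_keep)) for _ in range(n_draws)]
    return sum(accuracies) / len(accuracies)
```

Drawing without replacement within each subset keeps the number of distinct image sets equal to that of the catch condition.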

Additional files

- Source code 1: https://doi.org/10.7554/eLife.41861.011
- Transparent reporting form: https://doi.org/10.7554/eLife.41861.012