Mechanisms underlying sharpening of visual response dynamics with familiarity

Figures

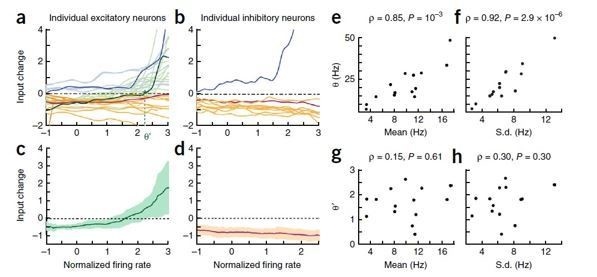

Changes in response dynamics of putative excitatory neurons with learning in a passive viewing task (Lim et al., 2015; Woloszyn and Sheinberg, 2012).

(A, B) Average (A) and maximal (B) response to familiar (blue) and novel (red) stimuli. For each excitatory neuron, we normalized firing rates by the mean and standard deviation of time-averaged activities over novel stimuli during stimulus presentation (80–200 ms after stimulus onset), then took the average over stimuli (A) or the response with the highest time-averaged activity (B). Solid curves are activities averaged over neurons, and shaded regions represent mean ± s.e.m. across neurons. The gray horizontal bar represents the visual stimulation period starting at 0 ms. (C) Distribution of time-averaged activities during stimulus presentation. For each neuron, stimuli were rank-ordered by time-averaged activity, separately for familiar and novel stimuli. At each stimulus rank, we averaged the normalized response over neurons to obtain the distribution of activities across ranks. To avoid negative values on the logarithmic x-axis, we added 2 to the normalized rates. (D) Rebound strength of the damped oscillation. At each stimulus rank, the rebound strength was quantified as the slope of the change in activity between 230 ms and 320 ms after stimulus onset.
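The normalization, ranking, and rebound-strength steps described in this caption can be sketched in Python. This is a minimal illustration, not the authors' analysis code: the function names, the array layout (stimuli by time points), and the use of a least-squares line to estimate the slope are all assumptions.

```python
import numpy as np

def normalize_rates(rates, novel_rates, t, window=(80.0, 200.0)):
    """Z-score one neuron's responses using its novel-stimulus statistics.

    rates, novel_rates: arrays of shape (n_stimuli, n_timepoints).
    t: time axis in ms relative to stimulus onset.
    """
    in_window = (t >= window[0]) & (t <= window[1])
    # One time-averaged activity per novel stimulus
    novel_time_avg = novel_rates[:, in_window].mean(axis=1)
    mu = novel_time_avg.mean()
    sigma = novel_time_avg.std()
    return (rates - mu) / sigma

def rank_stimuli(norm_rates, t, window=(80.0, 200.0)):
    """Reorder stimuli from highest to lowest time-averaged response."""
    in_window = (t >= window[0]) & (t <= window[1])
    time_avg = norm_rates[:, in_window].mean(axis=1)
    order = np.argsort(time_avg)[::-1]
    return norm_rates[order]

def rebound_strength(trace, t, window=(230.0, 320.0)):
    """Slope (per ms) of a line fitted to the trace inside the window.

    Assumption: the slope is estimated by a least-squares linear fit.
    """
    in_window = (t >= window[0]) & (t <= window[1])
    slope, _intercept = np.polyfit(t[in_window], trace[in_window], 1)
    return slope
```

Applying `rank_stimuli` per neuron and then averaging across neurons at each rank would give rank-resolved traces on which `rebound_strength` can be evaluated.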


Figure 1—source code 1

Data for Figure 1D.

- https://doi.org/10.7554/eLife.44098.019

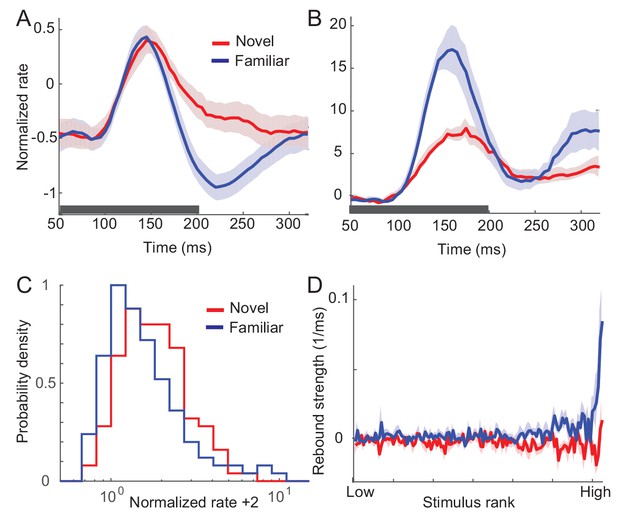

Changes in response dynamics of putative inhibitory neurons with learning in a passive viewing task (Woloszyn and Sheinberg, 2012).

(A, B) Average (A) and maximal (B) response to familiar (blue) and novel (red) stimuli. (C) Distributions of time-averaged activities. (D) Rebound strengths in inhibitory neurons before and after learning. Activities and rebound strengths were quantified in the same manner as in the excitatory neurons in Figure 1.

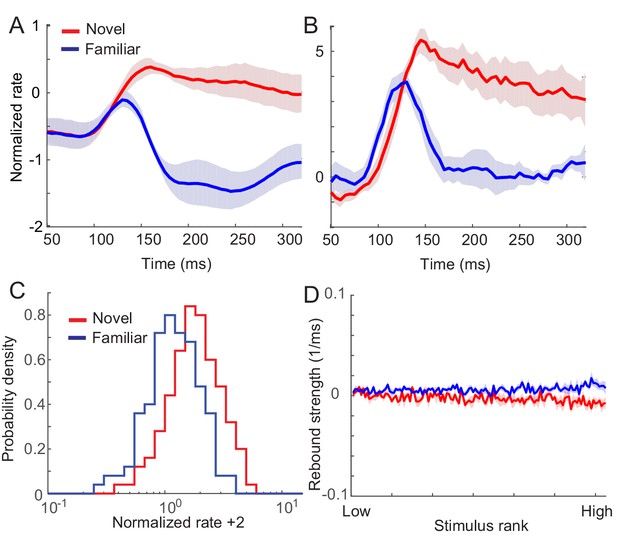

Networks with synaptic plasticity in recurrent connections without slow negative feedback.

(A) Example post-synaptic dependence of recurrent synaptic plasticity inferred from changes of time-averaged responses. Dependence of synaptic plasticity on the post-synaptic rate, f in Equation (2), shows depression for low rates and potentiation at high rates. (B, C) Average (B) and maximal (C) response before (red) and after (blue) learning for the network with synaptic plasticity only in recurrent connections.

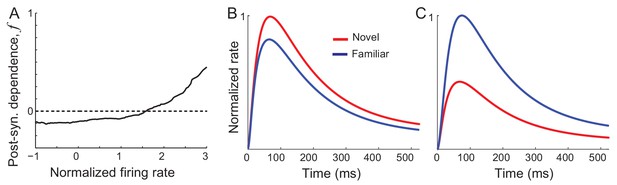

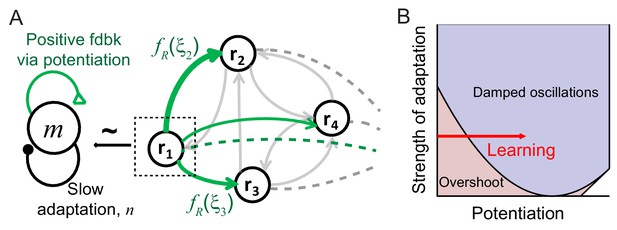

Mechanism of generating damped oscillations after learning.

(A) Schematics of the dynamics after learning. The overlap variable m is similar to the activities of high-rate neurons, represented as r1, and n represents adaptation in m. These high-rate neurons drive a damped oscillation in the remaining population whose strength is proportional to the post-synaptic dependence of recurrent synaptic plasticity. (B) Interactions between potentiation of recurrent inputs and a slow adaptation mechanism. The strength of potentiation is proportional to (Equation 4) which is 0 before learning. The separatrix dividing overshoot and damped oscillations is shown as a parabola defined by , the strength of adaptation k and time constants τR and τA.
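The idea of a parabolic separatrix between overshoot and damped oscillation can be illustrated with a minimal linear sketch. The two-variable system below is an assumption made for illustration and is not taken from Equation 4: m receives recurrent feedback of effective strength `w` and is opposed by an adaptation variable n of strength `k`, with time constants `tau_r` and `tau_a`. All function names are hypothetical.

```python
import numpy as np

def jacobian(w, k, tau_r=5.0, tau_a=200.0):
    """Jacobian of the assumed linear system
        tau_r * dm/dt = -m + w*m - k*n + input
        tau_a * dn/dt = -n + m
    """
    return np.array([[-(1.0 - w) / tau_r, -k / tau_r],
                     [1.0 / tau_a, -1.0 / tau_a]])

def critical_adaptation(w, tau_r=5.0, tau_a=200.0):
    """Smallest k that gives complex eigenvalues; a parabola in w.

    Follows from requiring trace^2 - 4*det < 0 for the Jacobian above.
    """
    return (tau_a * (1.0 - w) - tau_r) ** 2 / (4.0 * tau_r * tau_a)

def oscillation_period(w, k, tau_r=5.0, tau_a=200.0):
    """Period in ms of the damped oscillation, or None if eigenvalues are real."""
    eig = np.linalg.eigvals(jacobian(w, k, tau_r, tau_a))
    omega = np.abs(eig.imag).max()
    return 2.0 * np.pi / omega if omega > 0 else None
```

In this toy system with τR = 5 ms and τA = 200 ms, the illustrative pair w = 0, k = 1.8 lies below the parabola and shows no oscillation, whereas w = 0.9, k = 1.8 lies above it and oscillates with a period of roughly 150 ms.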

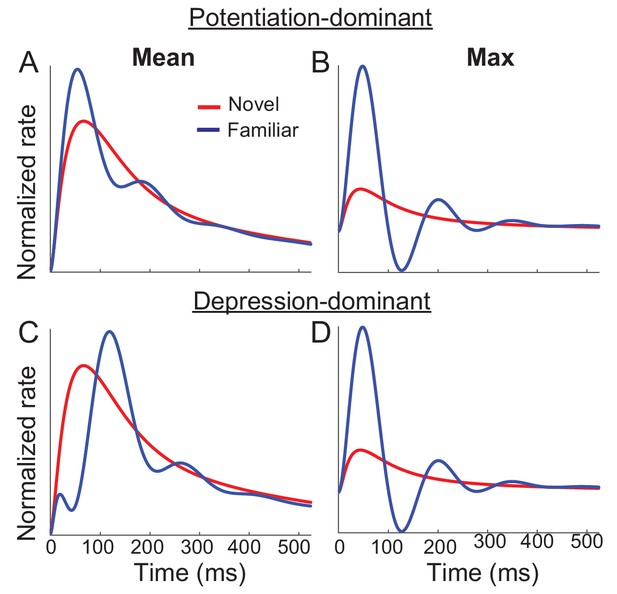

Networks with synaptic plasticity only in recurrent connections with slow negative feedback.

Average (A,C) and maximal (B,D) response before (red) and after (blue) learning with potentiation-dominant (A,B) or depression-dominant (C,D) synaptic plasticity rules. The simulation of the mean-field dynamics is the same as in Figure 5 with the same parameters except , (A,B), and (C,D).

Networks with synaptic plasticity only in recurrent connections without the constraint of the sum normalization of synaptic weights achieved by .

(A) Schematics of the mean-field dynamics after learning. The qualitative difference between the dynamics with or without the constraint is the feedback from to m (dotted curve). (B–E) Average response before (red) and after (blue) learning with different and . As in the case with the constraint, the oscillation can be generated by the strong positive feedback and slow negative feedback in the m dynamics, and the synchronous oscillations in and m are determined by the signs of and . When and have the same signs, a positive feedback loop through further boosts the oscillation in the m dynamics (B,E), while opposite signs of and suppress the oscillation (C,D). Synchronous oscillation between and m requires >0 (B,D), and >0 further increases the overall oscillation as well as average rates (B). On the other hand, <0 diminishes the oscillation while decreasing average rates (D). None of these cases can reproduce the experimental observations, namely synchronous oscillations in and m with a decrease in the average rates. Thus, additional changes such as feedforward synaptic plasticity are still required without the constraint on the pre-synaptic dependence. The simulation of the mean-field dynamics is the same as in Figure 3—figure supplement 1 except wR = −0.1, a = −6.5, b = 5.5, t0 = 400 ms, t1 = 40 ms, =±0.1 and =±1.

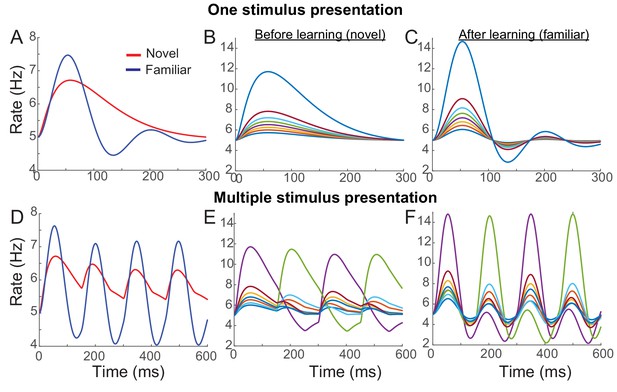

Example network reproducing the effects of visual learning in one stimulus presentation (A–C) and successive presentation of two stimuli (D–F).

The network implements potentiation in the recurrent connections through Hebbian synaptic plasticity, depression in the feedforward connections through uniform scaling down of the external inputs, and spike-adaptation mechanisms. Mean responses reproduce the effects of visual learning predicted in the mean-field dynamics, showing average reduction and stronger oscillations (A, D). Representative individual activities before (B,E) and after (C,F) learning show that activities in neurons with high firing rates increase with strong oscillation after learning (E,F), but the rank of stimuli is shuffled with the arrival of a new stimulus.


Figure 4—source code 1

MATLAB code for Figure 4.

- https://doi.org/10.7554/eLife.44098.020

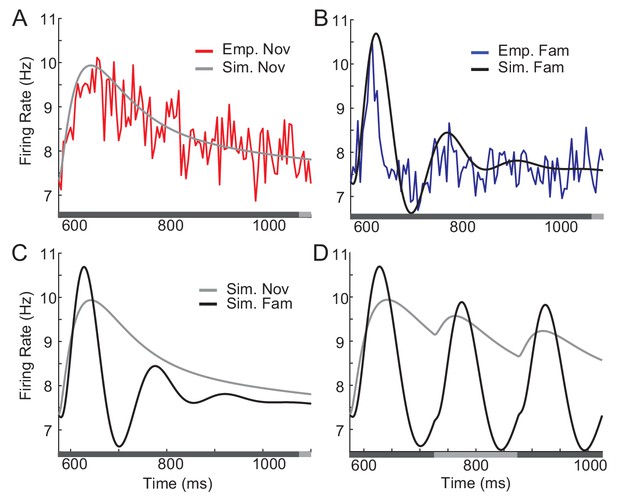

Comparison between network simulation and data obtained in a dimming-detection task.

(A, B) Fitting response dynamics (red in A and blue in B) using mean-field equations (gray in A and black in B) for novel (A) and familiar (B) stimuli. (C,D) Simulation for one stimulus presentation (C) and successive presentation of stimuli (D). The gray horizontal bar represents the visual stimulation period starting at 500 ms, and the x-axis is truncated to show activities from their onsets. In A–C, the stimulus was presented for a duration that was the sum of a fixed duration (650 ms, shown in dark gray) and a random duration (shown in light gray). In D, different gray bars represent different stimuli shown alternately for a duration of 150 ms.


Figure 5—source code 1

Data for Figure 5.

- https://doi.org/10.7554/eLife.44098.021


Figure 5—source code 2

MATLAB code for Figure 5.

- https://doi.org/10.7554/eLife.44098.022

Constraints on wR and k to reproduce responses to novel stimuli (shaded area).

In the dynamics of and in Equation (4), no damped oscillation for novel stimuli provides a constraint (solid black line). To analytically obtain the constraint for the lower peak in the successive presentation of novel stimuli, we assumed that during the rising phase of activity, the adaptation variable and external inputs are constant. We also assumed that neural activity changes linearly during the rising and decaying phases. Under these assumptions, the lower peak for the second novel stimulus provides the constraint (the dotted line). Here, c is a constant determined by the time constants τ, by t0 and t1, which are the durations of the rising and decaying phases, and by r0 and r1, which are the activities at the end of the rising and decaying phases such that . The parameters used in this figure are τR = 5 ms, τA = 200 ms, t0 = 30 ms, t1 = 120 ms, r1/r0 = 0.7.

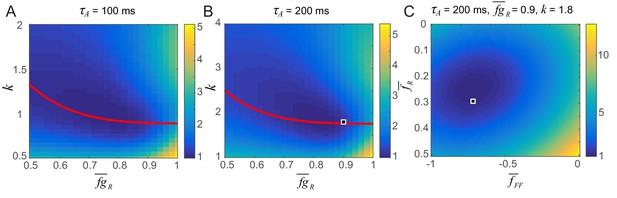

Parameter search for the strengths of potentiation and adaptation (A,B) and average post-synaptic dependence of recurrent and feedforward connections, , and (C).

The color map shows the distance between the data and simulation of the response for familiar stimuli normalized by the lowest distance. The red curve in A and B shows the pairs of and k that produce a damped oscillation with a period of around 150 ms and provide a good fit to the data. In Figure 5, we chose (,k) = (0.9,1.8) (the square in B) and (,) = (0.3,–0.7) (the square in C) for τA = 200 ms, which give a good fit to the data in the dimming-detection task (Figure 5B) and generate strong resonance behavior in the successive stimulus presentation (Figure 5D). The distance between the data and simulation is obtained by comparing them between 0 and 300 ms after the activity onset, excluding the interval between 50 ms and 100 ms, where the rapid decrease of activity cannot be captured by the simulation.
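The fitting distance with an excluded interval, and its normalization by the lowest distance, can be sketched as follows. This is an illustrative sketch under stated assumptions: the caption does not specify the distance metric, so a root-mean-square difference is assumed here, and `simulate` is a hypothetical callable standing in for the mean-field simulation.

```python
import numpy as np

def fit_distance(data, sim, t, fit_window=(0.0, 300.0), excluded=(50.0, 100.0)):
    """Root-mean-square difference over the fitted time points only.

    t is in ms after activity onset. Points strictly inside the excluded
    interval, and points outside the fit window, are ignored.
    Assumption: RMS is used as the distance metric.
    """
    keep = (t >= fit_window[0]) & (t <= fit_window[1])
    keep &= ~((t > excluded[0]) & (t < excluded[1]))
    return np.sqrt(np.mean((data[keep] - sim[keep]) ** 2))

def normalized_distance_map(data, simulate, t, strengths, ks):
    """Distance for every parameter pair, divided by the smallest distance.

    simulate(strength, k, t) is a hypothetical function returning a
    simulated response on the time axis t.
    """
    dist = np.array([[fit_distance(data, simulate(s, k, t), t) for k in ks]
                     for s in strengths])
    return dist / dist.min()
```

With this normalization the best-fitting parameter pair has the value 1, and every other entry states how many times worse its fit is.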

Sensitivity of fitting to changes in the recurrent connectivity strength before learning, wR.

(A) The distance between the data and simulation of the response for familiar stimuli, normalized by the lowest distance as in Figure 5—figure supplement 2. (B–D) Comparison between the data and network simulations for different wR. For a wide range of wR, the simulation fits the data well. The parameters are the same as those in Figure 5 except a, b, t0, and t1, which were adjusted for each wR to reproduce the response to novel stimuli.

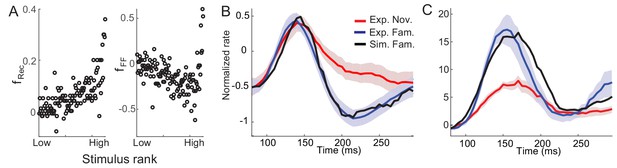

Post-synaptic dependence of synaptic plasticity in recurrent (left) and feedforward (right) connections derived from a passive viewing task (A) and comparison between the data and network simulations for average (B) and maximal (C) responses.

The external inputs were chosen so that the response to novel stimuli is the same in the experiment and simulation (red in B,C). With the derived post-synaptic dependence in the recurrent and feedforward connections (A), the response to familiar stimuli was simulated (black in B,C).


Figure 6—source code 1

MATLAB code for Figure 6.

- https://doi.org/10.7554/eLife.44098.023

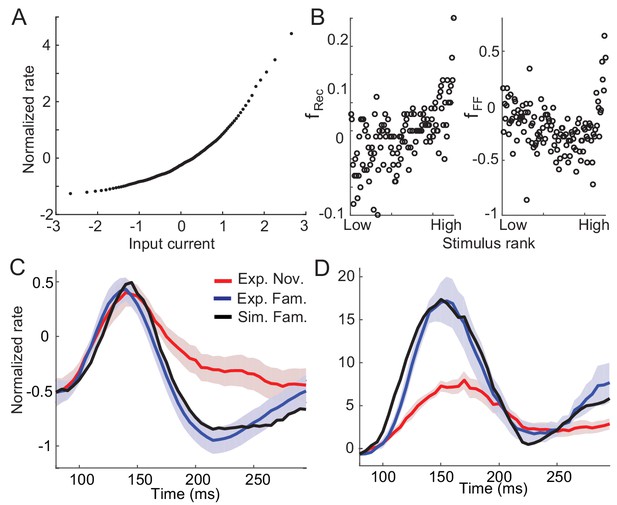

Example nonlinearity in dynamics and synaptic plasticity inferred under nonlinearity.

(A) Nonlinear input current-output firing rate transfer function that leads to nonlinear dynamics in the circuits. Under the assumptions of a normal distribution of input currents before learning and monotonically increasing transfer function, the transfer function was obtained from the distribution of time-averaged responses to novel stimuli (Lim et al., 2015). (B) Post-synaptic dependence of synaptic plasticity in the recurrent (left) and feedforward (right) connections that best fit the changes in response dynamics with the nonlinear transfer function in (A). (C,D) Comparison between data and network simulations for average (C) and maximal responses (D) (blue for data and black for network simulation).

Short-term depression cannot reproduce a damped oscillation after learning.

(A) Parameter search of the strength of depression (γ) and strength of potentiation (c = ). The color map shows the distance between the data and simulation of the response for familiar stimuli normalized by the lowest distance. (B) Time course of the response to the best familiar stimuli with the best-fitted parameters γ = 0.125, and = 2.56. Other parameters are = 5 ms, = 200 ms, and is obtained from the response to the best novel stimuli.

Schematics of synaptic plasticity rules in different connections.

The learning rule inferred from time-averaged activities (A) can be considered to be a combination of recurrent (B) and feedforward (C) synaptic plasticity. Such a learning rule shows a similar dependence on the normalized rates (equivalently the rank of stimuli) across different neurons (A; Lim et al., 2015). Inspired by this, we assumed the same synaptic plasticity rules of recurrent and feedforward connections across different neurons for normalized firing rates (B,C).

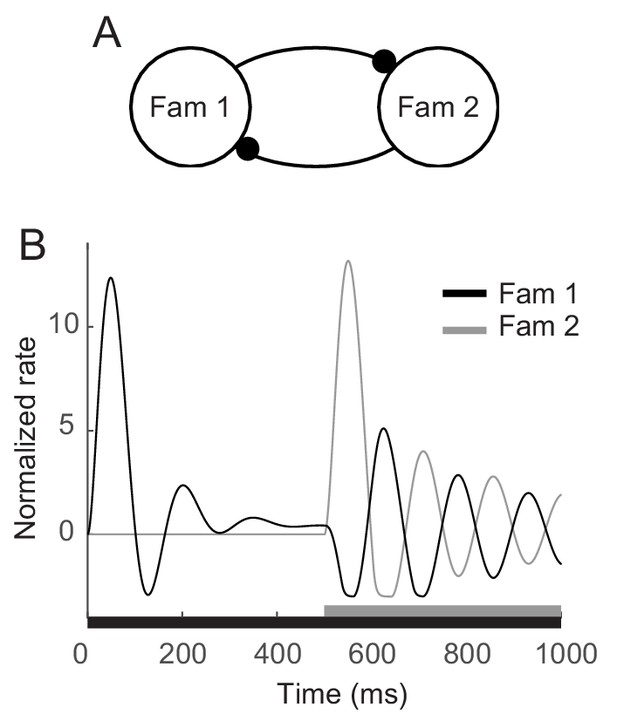

Enhanced oscillation through competitive interactions between different stimuli.

(A) Two mutually inhibitory populations selectively responding to stimuli 1 and 2, respectively. The dynamics of each population follow the dynamics of m in the mean-field description for a single familiar stimulus in Figures 3A and 5. (B) Time course of visual responses of the two populations with different stimulus onsets (black and gray bars below). Stimulus 2 was presented 500 ms after the onset of stimulus 1, and population 2 was assumed to be silent before the arrival of stimulus 2. After the onset of stimulus 2, the visual response selective to stimulus 1 was transiently suppressed and showed stronger oscillation than under the single stimulus presentation.

Additional files


Source code 1

- https://doi.org/10.7554/eLife.44098.018


Transparent reporting form

- https://doi.org/10.7554/eLife.44098.024