Monkey plays Pac-Man with compositional strategies and hierarchical decision-making

Figures

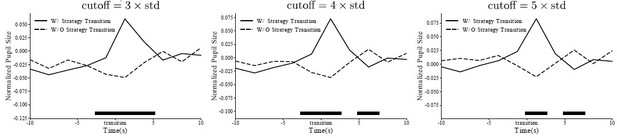

The Pac-Man game and the performance of the monkeys.

(A) The monkeys used a joystick to navigate Pac-Man in the maze and collect pellets for juice rewards. The maze also contained two ghosts, Blinky and Clyde. The maze was fixed, but the pellets and the energizers were placed randomly at the start of each game. Eating an energizer turned the ghosts into the scared mode for 14 s, during which they were edible. Fruits were also placed randomly in the maze. The juice rewards corresponding to each game element are shown on the right. (B) The monkeys were more likely to move toward the direction with more local rewards. The abscissa is the reward difference between the most and the second most rewarding direction. Different grayscale shades indicate path types with different numbers of moving directions. Means and standard errors are plotted with lines and shades. See Figure 1—figure supplement 1 for the analysis for individual monkeys. (C) The monkeys escaped from normal ghosts and chased scared ghosts. The abscissa is the Dijkstra distance between Pac-Man and the ghosts. Dijkstra distance measures the length of the shortest path between two positions on the map. Means and standard errors are denoted with lines and shades. See Figure 1—figure supplement 1 for the analysis for individual monkeys.
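The Dijkstra distance used in panel (C) can be illustrated with a minimal sketch. It assumes a hypothetical maze encoding (a list of strings in which `#` marks a wall) and unit cost per tile; under that assumption, breadth-first search returns the same shortest-path lengths as Dijkstra's algorithm. The function name and the toy maze are illustrative and are not taken from the study.

```python
from collections import deque

def maze_distance(maze, start, goal):
    """Shortest-path length, in tiles, between two walkable tiles.

    `maze` is a list of equal-length strings where '#' marks a wall
    (a hypothetical encoding). Every step costs one tile, so
    breadth-first search yields the Dijkstra distance.
    Returns None if the goal cannot be reached.
    """
    rows, cols = len(maze), len(maze[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return dist[(r, c)]
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and maze[nr][nc] != "#" and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return None

# Toy maze: a ring corridor around a single wall tile.
TOY_MAZE = [
    "#####",
    "#...#",
    "#.#.#",
    "#...#",
    "#####",
]

# The two tiles are 2 apart in a straight line, but the wall between
# them forces a detour, so the path distance is longer.
print(maze_distance(TOY_MAZE, (2, 1), (2, 3)))
```

The example shows why a path-based distance is used rather than a straight-line one: walls can make two nearby tiles far apart in terms of the moves actually required.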

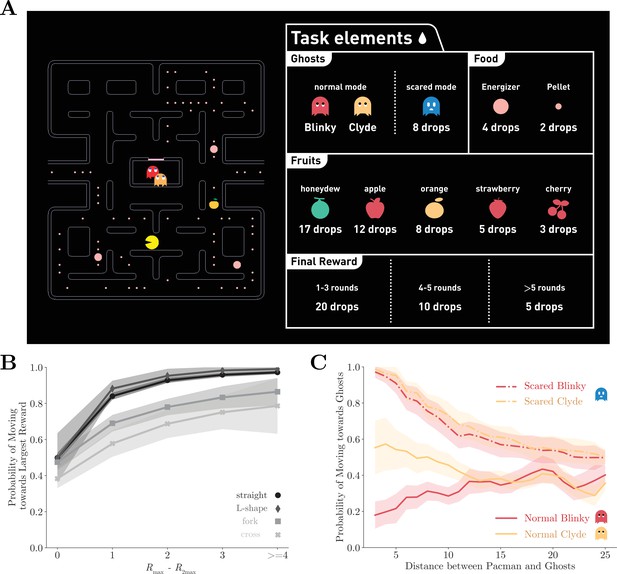

The performance of Monkey O (left) and Monkey P (right).

(A, C) Probability of monkeys moving toward the largest reward. The monkeys were more likely to move toward the direction with more local rewards. The abscissa is the reward difference between the most and the second most rewarding direction. The colors indicate the path types in which different numbers of moving directions are possible. Means and standard errors are plotted with lines and shades. (B, D) Probability of monkeys moving toward ghosts. The monkeys escaped from the normal ghosts and chased the scared ghosts. The abscissa is the distance between Pac-Man and the ghosts. The colors and the line types indicate the ghosts and their modes. Means and standard errors are plotted with lines and shades.

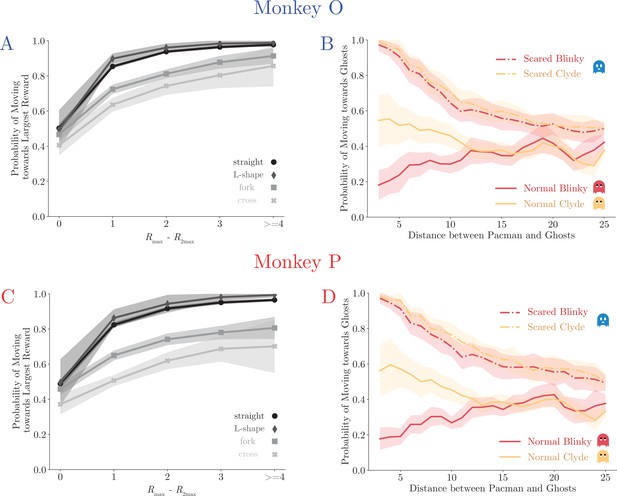

Fitting behavior with basis strategies.

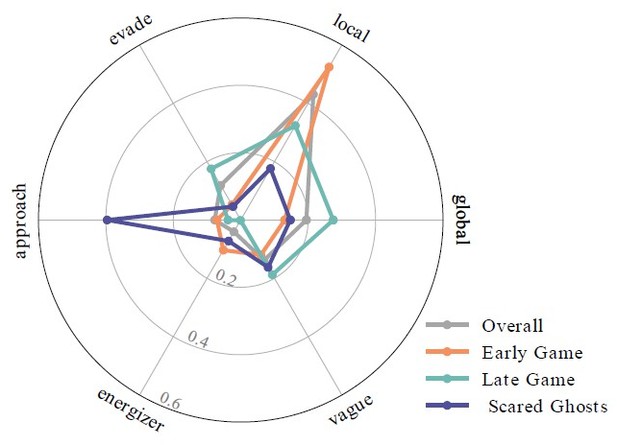

(A) The normalized strategy weights in an example game segment. The horizontal axis is the time step. Each time step is 417 ms, which is the time that it takes Pac-Man to move across a tile. The color bar indicates the dominant strategies across the segment. The monkey’s actual choice and the corresponding model prediction at each time step are shown below, with red indicating a mismatch. The prediction accuracy for this segment is 0.943. Also, see Figure 2—video 1. (B) Comparison of prediction accuracy across four models in four game contexts. Four game contexts were defined according to the criteria listed in Appendix 1—table 3. Vertical bars denote standard deviations. Horizontal dashed lines denote the chance-level prediction accuracies. See Appendix 1—tables 4–6 for detailed prediction accuracy comparisons. (C) The distribution of the three dominating strategies’ weights. The most dominating strategy’s weights (0.907 ± 0.117) were significantly larger than those of the secondary strategy (0.273 ± 0.233) and the tertiary strategy (0.087 ± 0.137). Horizontal white bars denote means, and the vertical black bars denote standard errors. (D) The distribution of the weight difference between the most and the second dominating strategies. The distribution is heavily skewed toward 1. More than 90% of the time, the weight difference was larger than 0.1, and more than 33% of the time it was over 0.9. (E) The ratios of labeled dominating strategies across four game contexts. In the early game, the local strategy was the dominating strategy. In comparison, in the late game, both the local and the global strategies had large weights. The weight of the approach strategy was largest when the ghosts were in the scared mode. See Figure 2—figure supplement 1 for the analysis for individual monkeys.
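The quantities summarized in panels (A), (C), and (D) can be sketched in a few lines. This is an illustration only: the function names, the example weights, and the example action sequences are hypothetical, and the sketch does not reproduce the fitting procedure that produced the weights.

```python
def rank_weights(weights):
    """Return (strategy, weight) pairs sorted from largest to smallest weight."""
    return sorted(weights.items(), key=lambda item: item[1], reverse=True)

def dominance_gap(weights):
    """Weight difference between the most and second most dominating strategies."""
    ranked = rank_weights(weights)
    return ranked[0][1] - ranked[1][1]

def prediction_accuracy(actual, predicted):
    """Fraction of time steps at which the predicted action matches the actual one."""
    matches = sum(a == p for a, p in zip(actual, predicted))
    return matches / len(actual)

# Hypothetical fitted weights for one segment (not data from the study).
example_weights = {"local": 0.9, "global": 0.25, "approach": 0.05}
print(rank_weights(example_weights)[0][0])   # name of the dominating strategy
print(dominance_gap(example_weights))        # gap between the top two weights

# Hypothetical joystick choices and model predictions over five time steps.
actual = ["left", "left", "up", "up", "right"]
predicted = ["left", "left", "up", "right", "right"]
print(prediction_accuracy(actual, predicted))
```

A large gap means a single strategy accounts for most of the behavior in that segment, which is the pattern the skewed distribution in panel (D) describes.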

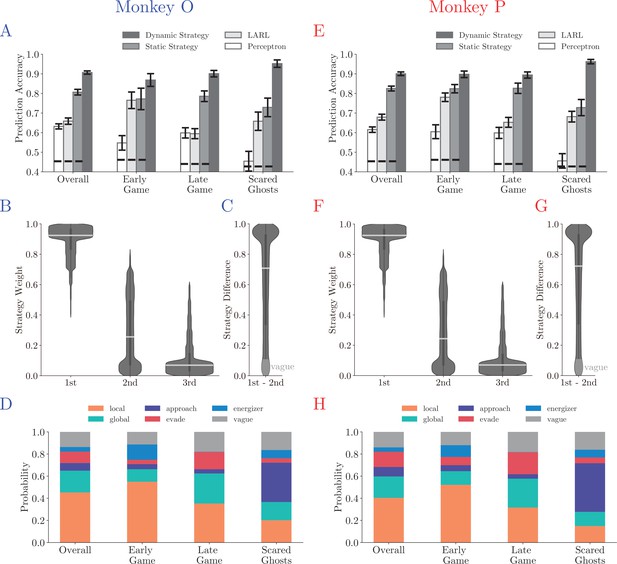

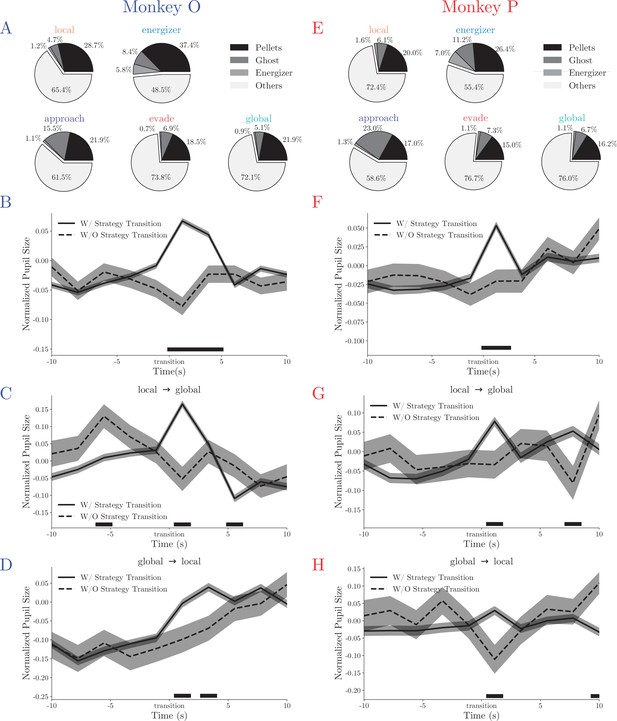

Fitting behavior with strategy labels for Monkey O (left) and Monkey P (right).

(A, E) Comparison of prediction accuracy across three models in four game contexts. See Appendix 1—tables 5 and 6 for details. Vertical bars denote standard deviations. Horizontal dashed lines denote the chance-level prediction accuracies. (B, F) The histograms of the three dominating strategies’ weights. (B) The most dominating strategy’s weights (0.907 ± 0.115) were much larger than those of the secondary strategy (0.276 ± 0.233) and the tertiary strategy (0.088 ± 0.136). (F) The most dominating strategy’s weights (0.907 ± 0.119) were much larger than those of the secondary strategy (0.270 ± 0.233) and the tertiary strategy (0.07 ± 0.138). Horizontal white bars denote means, and the vertical black bars denote standard errors. (C, G) The histogram of the weight difference between the most and the second dominating strategies. The distribution is heavily skewed toward 1. About 90% of the time, the weight difference was larger than 0.1, and more than 30% of the time it was over 0.9. Horizontal white bars denote means, and the vertical black bars denote standard errors. (D, H) The ratios of labeled dominating strategies across four game contexts. In the early game, the local strategy was the dominating strategy. In comparison, in the late game, both the local and the global strategies had large weights. The weight of the approach strategy was largest when the ghosts were in the scared mode.

Example game segment.

Monkey’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this example game segment. Monkey’s real-time saccade position is plotted as a moving white dot. In this example, the monkey started with the local strategy and grazed pellets. With the ghosts getting close, it initiated the energizer strategy and went for a nearby energizer. Once eating the energizer, the monkey switched to the approach strategy to hunt the scared ghosts. Afterward, the monkey resumed the local strategy and then used the global strategy to navigate toward another patch when the local rewards were depleted.

Monkeys’ behavior under different strategies.

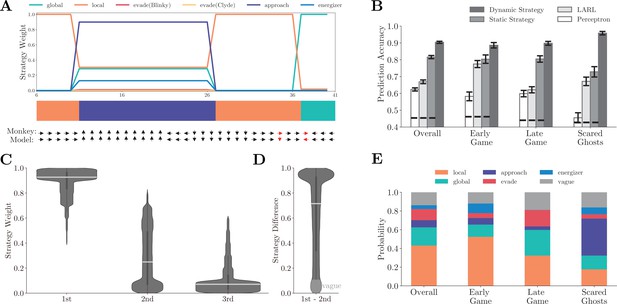

(A) The probabilities of the monkeys adopting the local or global strategy correlate with the number of local pellets. Solid lines denote means, and shades denote standard errors. (B) The probabilities of the monkeys adopting the local or approach strategy correlate with the distance between Pac-Man and the ghosts. Solid lines denote means, and shades denote standard errors. (C) When adopting the global strategy to reach a far-away patch of pellets, the monkeys’ actual trajectory length was close to the shortest. The column denotes the actual length, and the row denotes the optimal number. The percentages of the cases with the corresponding actual lengths are presented in each cell. High percentages in the diagonal cells indicate close to optimal behavior. (D) When adopting the global strategy to reach a far-away patch of pellets, the monkeys’ number of turns was close to the fewest possible turns. The column denotes the actual turns, and the row denotes the optimal number. The percentages of the cases with the corresponding optimal numbers are presented in each cell. High percentages in the diagonal cells indicate close to optimal behavior. (E) Average fixation ratios of ghosts, energizers, and pellets when the monkeys used different strategies. (F) The monkeys’ pupil diameter increases around the strategy transition (solid line). Such increase was absent if the strategy transition went through the vague strategy (dashed line). Shades denote standard errors. Black bar at the bottom denotes p<0.01, two-sample t-test. See Figure 3—figure supplement 1 for the analysis for individual monkeys.
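The comparisons in panels (C) and (D) rest on two simple trajectory measures, path length and number of turns, tabulated against their optimal values. The sketch below is hypothetical: it assumes a trajectory is a list of `(row, col)` tiles and that each table cell is a percentage within its row (the optimal value), which the caption does not state explicitly.

```python
from collections import Counter

def path_length(path):
    """Number of tile-to-tile moves along a path of (row, col) tiles."""
    return len(path) - 1

def count_turns(path):
    """Number of direction changes along a path of (row, col) tiles."""
    steps = [(r2 - r1, c2 - c1) for (r1, c1), (r2, c2) in zip(path, path[1:])]
    return sum(a != b for a, b in zip(steps, steps[1:]))

def row_percentages(pairs):
    """Tabulate (optimal, actual) pairs as percentages within each optimal value.

    Normalizing per row is an assumption made for this sketch.
    """
    pairs = list(pairs)
    cell_counts = Counter(pairs)
    row_totals = Counter(optimal for optimal, _ in pairs)
    return {cell: 100.0 * n / row_totals[cell[0]] for cell, n in cell_counts.items()}

# Hypothetical trajectory: two moves right, then two moves down.
trajectory = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]
print(path_length(trajectory), count_turns(trajectory))

# Hypothetical (optimal turns, actual turns) pairs from four trajectories.
table = row_percentages([(1, 1), (1, 1), (1, 2), (2, 2)])
print(table)
```

Cells on the diagonal, where the actual value equals the optimal one, correspond to the near-optimal behavior the panels highlight.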

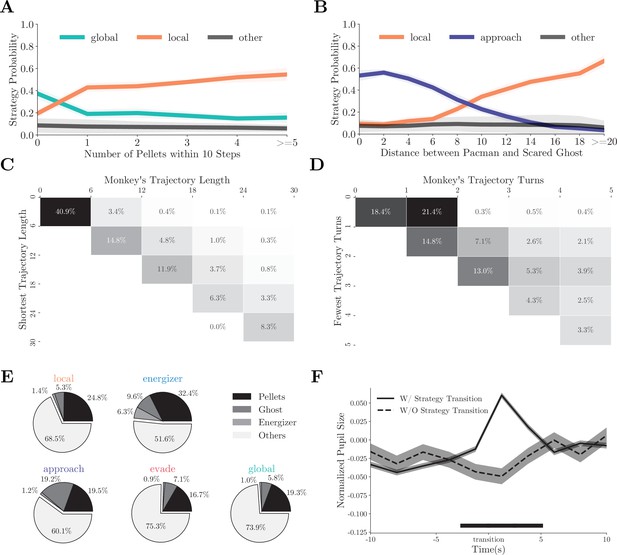

Monkey’s behavior under different strategies for Monkey O (left) and Monkey P (right).

(A, E) The probabilities of the monkeys adopting the local or global strategy correlate with the number of local pellets. Solid lines denote means, and shades denote standard errors. (B, F) The probabilities of the monkeys adopting the local or approach strategy correlate with the distance between Pac-Man and the ghosts. Solid lines denote means, and shades denote standard errors. (C, G) When adopting the global strategy to reach a far-away patch of pellets, the monkeys’ actual trajectory length was close to the shortest. The column denotes the actual length, and the row denotes the optimal number. The percentages of the cases with the corresponding actual lengths are presented in each cell. High percentages in the diagonal cells indicate close to optimal behavior. (D, H) When adopting the global strategy to reach a far-away patch of pellets, the monkeys’ number of turns was close to the fewest possible turns. The column denotes the actual turns, and the row denotes the optimal number. The percentages of the cases with the corresponding optimal numbers are presented in each cell. High percentages in the diagonal cells indicate close to optimal behavior.

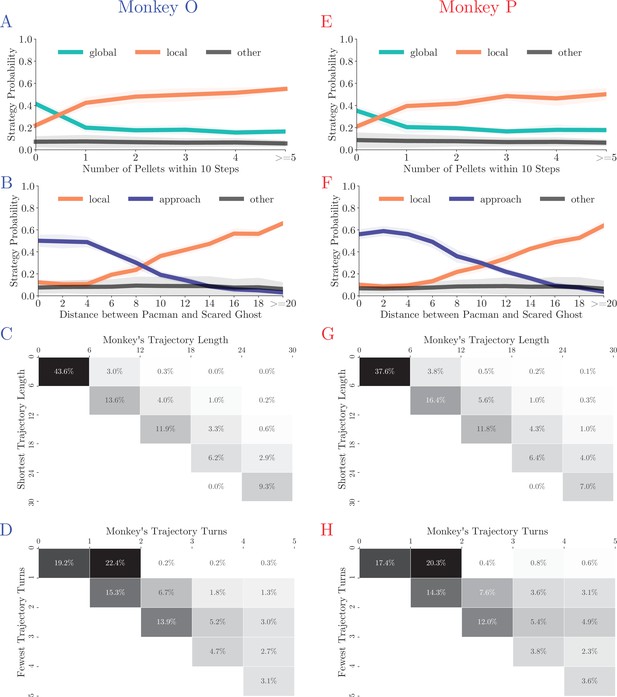

Monkey’s eye movement patterns under different strategies for Monkey O (left) and Monkey P (right).

(A, E) Average fixation ratios of ghosts, energizers, and pellets when the monkeys used different strategies. (B, F) The monkeys’ pupil diameter increases around the strategy transition (solid line). Such increase was absent if the strategy transition went through the vague strategy (dashed line). Shades denote standard errors across trials. Black bars at the bottom denote p<0.01, two-sample t-test. (C, G) The monkeys’ pupil diameter increase was evident in transitions from local to global. Shades denote standard errors across trials. Black bars at the bottom denote p<0.01, two-sample t-test. (D, H) The monkeys’ pupil diameter increase was evident in transitions from global to local in Monkey P. It was not obvious in Monkey O as the pupil size increased in general. Shades denote standard errors across trials. Black bars at the bottom denote p<0.01, two-sample t-test.

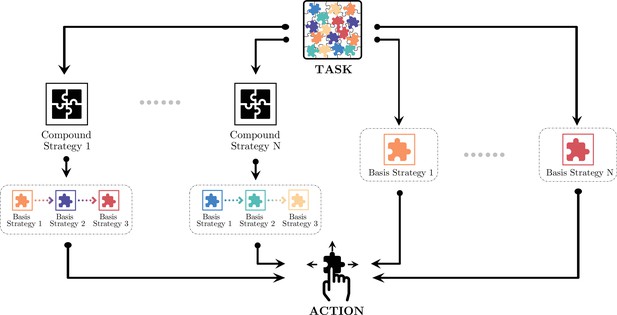

Monkeys’ decision-making in different hierarchies.

At the lowest level, decisions are made for the joystick movements: up, down, left, or right. At the middle level, choices are made between the basis strategies. At a higher level, simple strategies may be pieced together for more sophisticated compound strategies. Monkeys may adopt one of the compound strategies or just a basis strategy depending on the game situation.

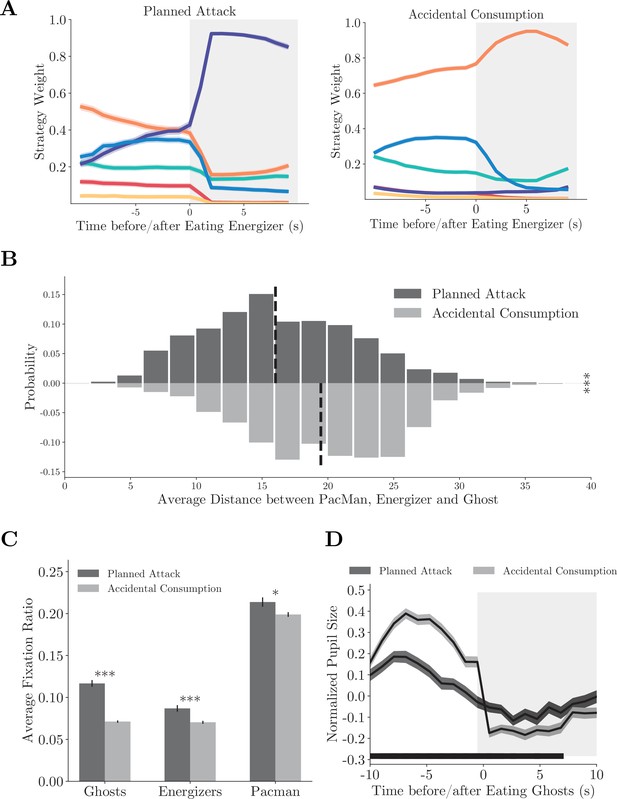

Compound strategies: planned attack.

(A) Average strategy weight dynamics in planned attacks (left) and accidental consumptions (right). Solid lines denote means, and shades denote standard errors. (B) The average distance between Pac-Man, the energizer, and the ghosts in planned attacks and accidental consumptions. Vertical dashed lines denote means. ***p<0.001, two-sample t-test. (C) Ratios of fixations on the ghosts, the energizer, and Pac-Man. Vertical bars denote standard errors. ***p<0.001, **p<0.01, two-sample t-test. (D) The pupil size aligned to the ghost consumption. The black bar near the abscissa denotes data points where the two traces are significantly different (p<0.01, two-sample t-test). Shades denote standard errors at every time point. See Figure 5—figure supplement 1 for the analysis for individual monkeys.

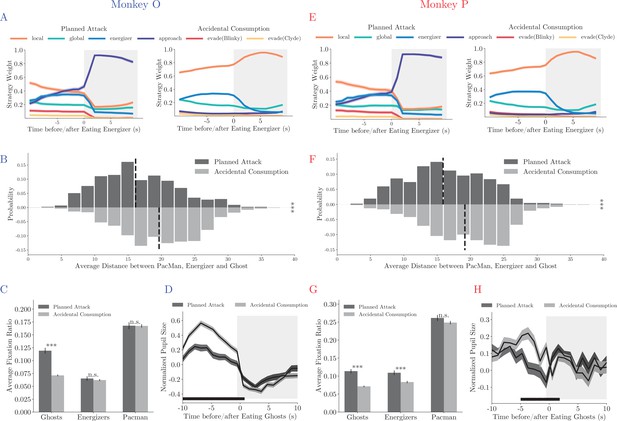

Planned attacks in Monkey O (upper) and Monkey P (lower).

(A, E) Average strategy weight dynamics in planned attacks (left) and accidental consumptions (right). Solid lines denote means, and shades denote standard errors. (B, F) The average distance between Pac-Man, the energizer, and the ghosts in planned attacks and accidental consumptions. Vertical dashed lines denote means. ***p<0.001, two-sample t-test. (C, G) Ratios of fixations on the ghosts, the energizer, and Pac-Man. Vertical bars denote standard errors. ***p<0.001, ** p<0.01, two-sample t-test. (D, H) The pupil size aligned to the ghost consumption. The black bar near the abscissa denotes data points where the two traces are significantly different (p<0.01, two-sample t-test). Shades denote standard errors at every time point.

Planned attack game segment.

Monkey’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this planned attack game segment. Monkey’s real-time saccade position is plotted as a moving white dot. In this trial, the monkey immediately switched to the approach strategy after eating an energizer.

Accidental consumption game segment.

Monkey’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this accidental consumption game segment. Monkey’s real-time saccade position is plotted as a moving white dot. In this trial, the monkey treated an energizer just as a more rewarding pellet and continued collecting pellets with the local strategy after eating the energizer.

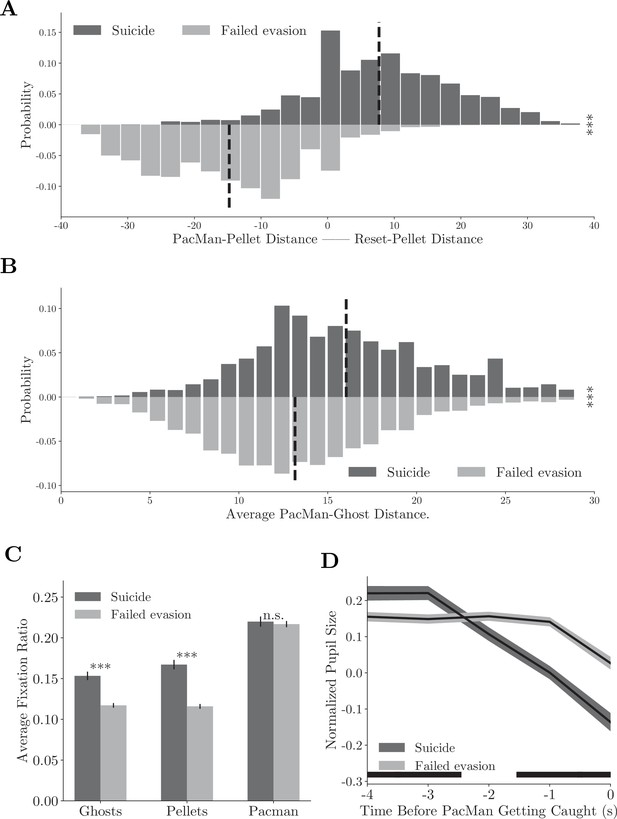

Compound strategies: suicide.

(A) Distance difference between Pac-Man and closest pellet before and after the death is smaller in suicides than in failed evasions. Vertical dashed lines denote means. ***p<0.001, two-sample t-test. (B) Average distance between Pac-Man and the ghosts was greater in suicides than in failed evasions. Vertical dashed lines denote means. ***p<0.001, two-sample t-test. (C) The monkeys fixated more frequently on the ghosts and the pellets in suicides than in failed evasions. Vertical bars denote standard errors. ***p<0.001, two-sample t-test. (D) The monkeys’ pupil size decreased before Pac-Man’s death in suicides. The black bar near the abscissa denotes data points where the two traces are significantly different (p<0.01, two-sample t-test). Shades denote standard errors at every time point. See Figure 6—figure supplement 1 for the analysis for individual monkeys.

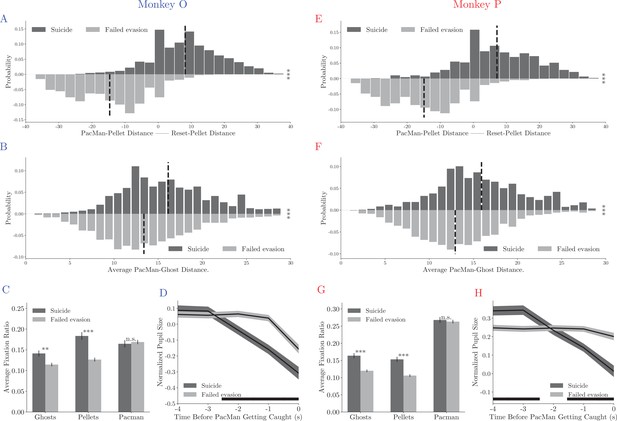

Suicides in Monkey O (left) and Monkey P (right).

(A, E) The distance difference between Pac-Man and closest pellet before and after the death is smaller in suicides than in failed evasions. Vertical dashed lines denote means. ***p<0.001, two-sample t-test. (B, F) The average distance between Pac-Man and the ghosts was greater in suicides than in failed evasions. Vertical dashed lines denote means. ***p<0.001, two-sample t-test. (C, G) The monkeys fixated more frequently on the ghosts and the pellets in suicides than in failed evasions. Vertical bars denote standard errors. ***p<0.001, two-sample t-test. (D, H) The monkeys’ pupil size decreased before Pac-Man’s death in suicides. The black bars near the abscissa denote data points where the two traces are significantly different (p<0.01, two-sample t-test). Shades denote standard errors at every time point.

Suicide game segment.

Monkey’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this suicide game segment. Monkey’s real-time saccade position is plotted as a moving white dot. In this trial, the monkey moved Pac-Man toward a normal ghost to be eaten on purpose. The local pellets were scarce, and the remaining pellets were far away. Because the death of Pac-Man resets the game and returns Pac-Man and the ghosts to their starting positions, suicide was the more advantageous compound strategy in this situation.

Failed evasion game segment.

Monkey’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this failed evasion game segment. Monkey’s real-time saccade position is plotted as a moving white dot. In this trial, the monkey was adopting the evade strategy but failed to escape from the ghosts.

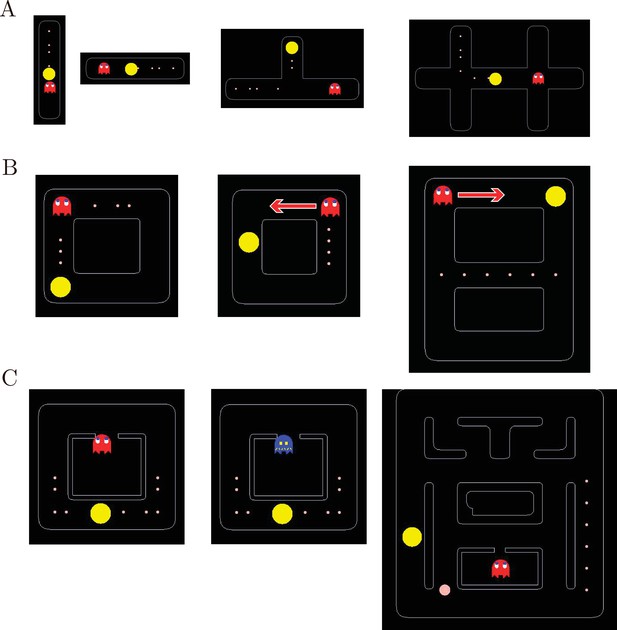

Training procedure.

(A) Stage 1 training mazes. From left to right: (1) Vertical maze. Pac-Man started from the middle position, with several pellets in one direction and a static ghost in the other. The monkeys learned to move the joystick upward and downward. (2) Horizontal maze. The monkeys learned to move the joystick left and right. (3) T-maze. Pac-Man started from the vertical arm, and the monkeys learned to move out of it by turning left or right. Pellets were placed in one arm and a static ghost in the other. (4) H-maze. Pac-Man started from the middle of the maze. There were pellets placed on the way leading to one of the three arms, and a static ghost was placed at the crossroad on the opposite side. (B) Stage 2 training mazes. From left to right: (1) Square maze with a static ghost. Pac-Man started from one of the four corners, and pellets were placed in two adjacent sides with a static ghost placed at the corner connecting the two. (2) Square maze with a moving ghost. Pac-Man started from the middle of one of the four sides, and pellets were placed on the opposite side. A ghost moved from one end of the pellet side and stopped at the other end. (3) Eight-shaped maze with a moving ghost. Pac-Man started from one of the four corners. The pellets were placed in the middle tunnel. A ghost started from a corner and moved toward the pellets. (C) Stage 3 training mazes. From left to right: (1) Square maze with Blinky. Pac-Man started from the middle of the bottom side with pellets placed on both sides. Blinky in normal mode started from its home. (2) Square maze with a ghost in a permanent scared mode. The scared ghost started from its home. Once caught by Pac-Man, the ghost went back to its home. (3) Maze with an energizer and Blinky. An energizer was randomly placed in the maze. Once the energizer was eaten, the ghost would be turned into the scared mode. The scared mode lasted until the ghost was eaten by Pac-Man. Once the ghost was eaten, it returned to its home immediately and came out again in the normal mode. After the monkeys were able to perform the task, we limited the scared mode to 14 s.

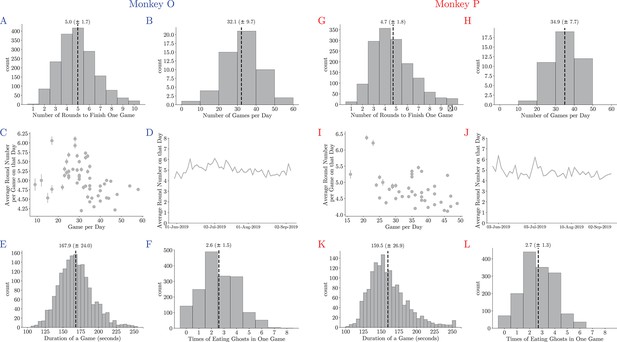

Basic game statistics of Monkey O (left) and Monkey P (right).

(A, G) The number of rounds to clear all pellets in each game. Vertical dashed lines denote means. (B, H) The number of games accomplished on each day. Vertical dashed lines denote means. (C, I) The average number of rounds to clear a maze plotted against the number of games in a session. Vertical lines denote standard deviations. Playing more games in each session can slightly improve the monkey’s game performance. (D, J) The average number of rounds during the training. (E, K) The time needed to clear a maze. Vertical dashed lines denote means.

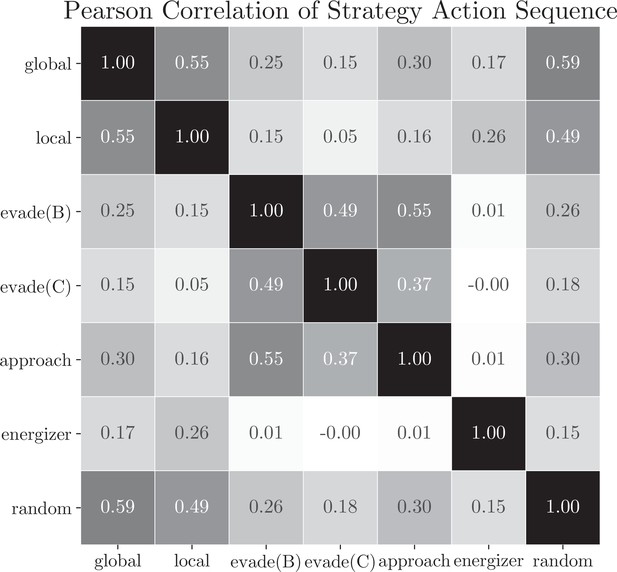

Strategy basis correlation matrix.

We computed the Pearson correlations between the action sequences chosen with each basis strategy within each coarse-grained segment determined by the two-pass fitting procedure. As a baseline control, we computed the correlation between each basis strategy and a random strategy, which generates actions at random. For most strategy pairs, the correlation was lower than the random baseline.
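A Pearson correlation between two action sequences requires a numeric encoding of the actions, which the caption does not specify. The sketch below assumes a one-hot encoding over the four joystick directions, flattened across time steps; this encoding, the function names, and the example sequences are assumptions made for illustration and may differ from the analysis behind the figure.

```python
import math
import random

DIRECTIONS = ("up", "down", "left", "right")

def one_hot(actions):
    """Flatten an action sequence into a 0/1 vector (assumed encoding)."""
    return [1.0 if action == d else 0.0 for action in actions for d in DIRECTIONS]

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def action_correlation(seq_a, seq_b):
    """Correlation between two action sequences under the one-hot encoding."""
    return pearson(one_hot(seq_a), one_hot(seq_b))

def random_actions(n, rng):
    """Random baseline strategy: n actions drawn uniformly from DIRECTIONS."""
    return [rng.choice(DIRECTIONS) for _ in range(n)]

# Hypothetical action sequences from two strategies over four time steps.
seq_a = ["up", "up", "left", "left"]
seq_b = ["up", "down", "left", "right"]
print(action_correlation(seq_a, seq_b))

# Correlation with a seeded random baseline of the same length.
baseline = random_actions(len(seq_a), random.Random(0))
print(action_correlation(seq_a, baseline))
```

Under this encoding, two strategies that always pick the same action correlate at 1, and strategies that never agree correlate negatively.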

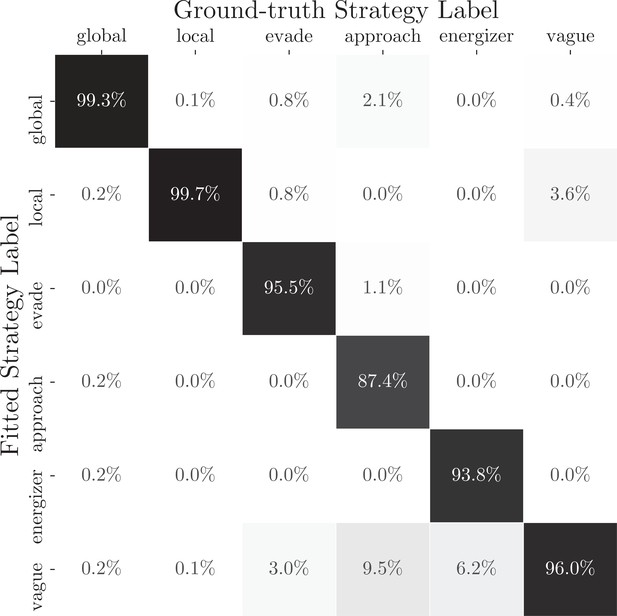

Recovering the strategy labels of an artificial agent with the dynamic compositional strategy model based on simulated gameplay data.

The confusion matrix between the fitted strategy labels and the ground-truth strategy labels from an artificial agent is shown. The artificial agent used time-varying strategy weights to combine six strategies illustrated in the method. Strategy weights were selected based on two monkeys’ choices at the same game context determined by the location and state of Pac-Man, the pellets, the energizers, and the ghosts. We used the dynamic compositional strategy model to estimate the strategy labels from 2050 rounds of simulated data and produced the confusion matrix. In most cases, the model was able to recover the correct strategy (diagonal boxes).
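A confusion matrix of this kind can be computed from paired label sequences as in the following sketch. The row normalization (each ground-truth row sums to 1), the function name, and the example labels are assumptions for illustration; the caption does not say how the matrix was normalized.

```python
from collections import Counter

def confusion_matrix(true_labels, fitted_labels, classes):
    """Row-normalized confusion matrix.

    Entry [i][j] is the fraction of time steps whose ground-truth label is
    classes[i] and whose fitted label is classes[j]. Rows for classes that
    never occur in `true_labels` are all zeros.
    """
    counts = Counter(zip(true_labels, fitted_labels))
    matrix = []
    for true_class in classes:
        row_total = sum(counts[(true_class, fitted)] for fitted in classes)
        matrix.append([
            counts[(true_class, fitted)] / row_total if row_total else 0.0
            for fitted in classes
        ])
    return matrix

# Hypothetical labels for four time steps (not data from the study).
classes = ["local", "global"]
true_labels = ["local", "local", "global", "global"]
fitted_labels = ["local", "global", "global", "global"]
matrix = confusion_matrix(true_labels, fitted_labels, classes)
print(matrix)
```

Large values on the diagonal indicate that the fitted label usually matches the label the agent actually used.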

Radar chart of the labeled dominating strategy ratios across four game contexts.

Videos

Example game trials.

Monkey O’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in these example game trials.

Example game trials.

Monkey O’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in these example game trials.

Example game trials.

Monkey O’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in these example game trials.

Example game trials.

Monkey P’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this example game segment.

Example game trials.

Monkey P’s moving trajectory, actual and predicted actions, and labeled strategies are plotted in this example game segment.

Tables

Four path types in the maze.

| Path type | Selection criteria |
|---|---|
| Straight | Contains two opposite moving directions |
| L-shape | Contains two orthogonal moving directions |
| Fork | Contains three moving directions |
| Cross | Contains four moving directions |
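The selection criteria in this table translate directly into a small classifier. The sketch below assumes the available moving directions at a tile are given as direction names; the function name and the handling of tiles with fewer than two directions (returned as `None`, since the table does not cover them) are illustrative choices.

```python
OPPOSITE = {"up": "down", "down": "up", "left": "right", "right": "left"}

def path_type(directions):
    """Classify a tile by its available moving directions.

    Returns one of the four path types in the table, or None for cases the
    table does not cover (fewer than two directions).
    """
    available = set(directions)
    if len(available) == 4:
        return "Cross"
    if len(available) == 3:
        return "Fork"
    if len(available) == 2:
        first, second = available
        # Two opposite directions form a corridor; otherwise it is a corner.
        return "Straight" if OPPOSITE[first] == second else "L-shape"
    return None

print(path_type(["left", "right"]))
print(path_type(["up", "right"]))
print(path_type(["up", "left", "right"]))
print(path_type(["up", "down", "left", "right"]))
```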

Awarded and penalized utilities for each game element in the model.

| | | (1–5) | | |
|---|---|---|---|---|
| 2 | 4 | 3, 5, 8, 12, 17 | 8 | -8 |

Special game contexts and corresponding selection criteria.

| Context | Selection criteria |
|---|---|
| All stage | n.a. |
| Early game | Remaining number of pellets of total (80 for O and 65 for P) |
| Late game | Remaining number of pellets of total (10 for O and 7 for P) |
| Scared ghost | Any scared ghosts within 10 steps away from Pac-Man |

Comparison of prediction accuracy (± SE) across four models in four game contexts for the two monkeys.

| Context | Dynamic | Static | LARL | Perceptron |
|---|---|---|---|---|
| Overall | 0.904 ± 0.006 | 0.816 ± 0.010 | 0.669 ± 0.011 | 0.624 ± 0.010 |
| Early game | 0.886 ± 0.0016 | 0.804 ± 0.025 | 0.775 ± 0.021 | 0.582 ± 0.026 |
| Late game | 0.898 ± 0.011 | 0.805 ± 0.019 | 0.621 ± 0.018 | 0.599 ± 0.019 |
| Scared ghosts | 0.958 ± 0.010 | 0.728 ± 0.031 | 0.672 ± 0.025 | 0.455 ± 0.030 |

LARL: linear approximate reinforcement learning.

Comparison of prediction accuracy (± SE) across four models in four game contexts for Monkey O.

| Context | Dynamic | Static | LARL | Perceptron |
|---|---|---|---|---|
| Overall | 0.907 ± 0.008 | 0.806 ± 0.014 | 0.659 ± 0.016 | 0.632 ± 0.013 |
| Early game | 0.868 ± 0.0132 | 0.772 ± 0.054 | 0.765 ± 0.042 | 0.548 ± 0.037 |
| Late game | 0.901 ± 0.016 | 0.786 ± 0.027 | 0.595 ± 0.026 | 0.598 ± 0.026 |
| Scared ghosts | 0.952 ± 0.019 | 0.729 ± 0.047 | 0.658 ± 0.046 | 0.545 ± 0.050 |

LARL: linear approximate reinforcement learning.

Comparison of prediction accuracy (± SE) across four models in four game contexts for Monkey P.

| Context | Dynamic | Static | LARL | Perceptron |
|---|---|---|---|---|
| Overall | 0.900 ± 0.009 | 0.825 ± 0.012 | 0.679 ± 0.015 | 0.615 ± 0.014 |
| Early game | 0.898 ± 0.017 | 0.824 ± 0.021 | 0.781 ± 0.022 | 0.605 ± 0.035 |
| Late game | 0.894 ± 0.016 | 0.826 ± 0.026 | 0.653 ± 0.025 | 0.599 ± 0.028 |
| Scared ghosts | 0.962 ± 0.011 | 0.723 ± 0.041 | 0.682 ± 0.027 | 0.456 ± 0.037 |

LARL: linear approximate reinforcement learning.

Trained feature weights in the LARL model for Monkey O and Monkey P.

| Feature | | | | | | | |
|---|---|---|---|---|---|---|---|
| Monkey | | | | | | | |
| O | 0.3192 | 0.0049 | –0.0758 | 2.4743 | –0.7799 | 1.0287 | –0.9717 |
| P | 0.5584 | 0.0063 | –0.0563 | 2.5802 | –0.6324 | 1.6068 | –1.1994 |

LARL: linear approximate reinforcement learning.