Non-shared coding of observed and executed actions prevails in macaque ventral premotor mirror neurons

Figures

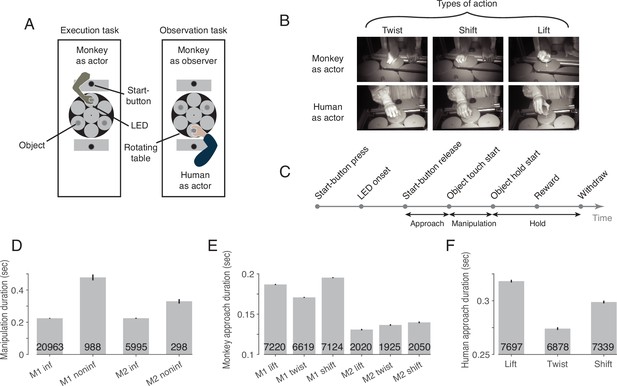

The behavioral paradigm.

(A) Experimental setup with three identical objects positioned on a rotating table in front of the actor. Each object could be acted on in only one way. The type of action required was cued by the color of an LED next to the object. After LED onset, the actor was allowed to release the start-button. (B) Photographs of the three actions in execution and observation tasks at the time when the object was held in its target position. (C) The sequence of events in a trial. (D) Duration of the manipulation epoch compared between trials with informative and non-informative cues (inf and noninf for monkeys M1 and M2; error bars show 95% confidence intervals of the mean of all trials of sessions in which mirror neurons were recorded, pooled within a monkey; unpaired t-test, p<0.001 in each monkey; trial count per condition is indicated on the bars). (E) Duration of the approach epoch compared between the three actions in trials with an informative cue (error bars as in D; one-way ANOVA, p<0.001 in each monkey). (F) As E, but for the human actor (p<0.001).

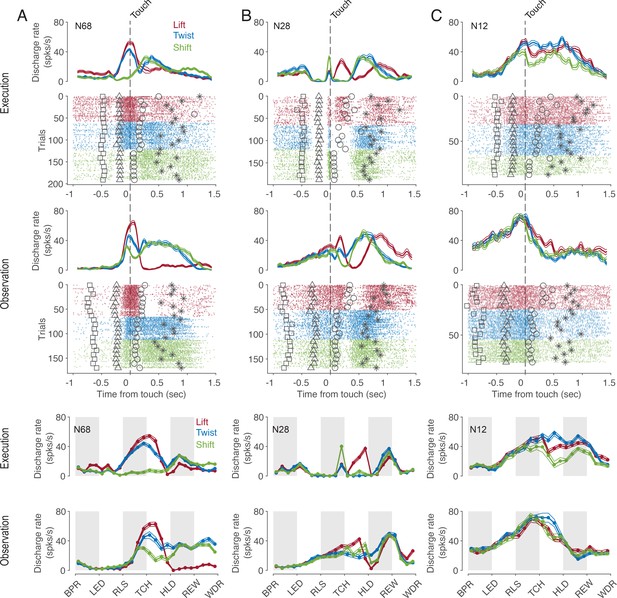

Exemplary F5 MNs.

(A) A neuron that prefers a lift around the time of touch and then later a twist and a shift in both tasks. (B) A neuron that prefers a shift during execution and a lift during observation before the touch, a lift during manipulation in both tasks, and a twist after hold during observation. (C) A neuron with an action code during observation that differs from that during execution. In the upper panels, spike density functions (mean ± s.e.m.) and raster plots are aligned to the time of touch start (vertical dashed line), and markers indicate the time of four events around the time of touch: LED onset (square), start-button release (triangle), object hold start (circle), and reward (star). For better visualization, the events are shown for only about 10% of trials. In the lower two panels, discharge rate is plotted per relative time bin (vertical stripes, gray and white, indicate the six epochs). BPR: start-button press, LED: LED onset, RLS: start-button release, TCH: object touch start, HLD: object hold start, REW: reward, WDR: withdrawal, object release. N68, N28 and N12 are the IDs of MNs.

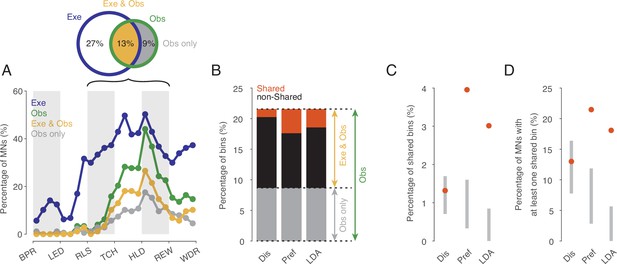

Shared action code in single neurons.

(A) Percentages of MNs (n=177) discriminating between actions on execution (Exe), on observation (Obs), on both (Exe and Obs) and on observation only (Obs only) per time bin (Kruskal-Wallis tests, alpha <0.05 for Exe and for Obs, Benjamini-Hochberg-corrected for 177*24 tests). For the abbreviations of the events, see Figure 2. The black bracket indicates the action period, consisting of three epochs, on which further analyses focus. The Venn diagram provides percentages of bins discriminating actions on Exe or Obs with respect to all bins examined in the action period (n=177*12). (B) Stacked bar chart showing the percentages of bins with respect to all bins examined in the action period with shared action code, non-shared action code and action code on observation only depending on the method used. Dis: same discharge method, Pref: same preference method, LDA: cross-task classification method using LDA. (C) Percentages of bins with shared action code with respect to all bins examined in the action period depending on the method (as in B). Gray bars indicate 95% confidence intervals derived from permutation tests under the null hypothesis that the coding of actions in the population of MNs at observation is independent of the coding at execution. (D) Percentages of MNs with at least one bin with shared action code related to all MNs (n=177). Methods and gray bars as in C.
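
The Benjamini-Hochberg correction named in (A) can be illustrated with a minimal sketch of the standard step-up procedure. This is a generic implementation, not the authors' analysis code; the function name `benjamini_hochberg` and the example p-values are illustrative assumptions. In the setting of (A), the list would hold one p-value per neuron and time bin (177*24 tests).

```python
def benjamini_hochberg(p_values, alpha=0.05):
    """Return one boolean per test: True where the null hypothesis is
    rejected while controlling the false discovery rate at `alpha`."""
    m = len(p_values)
    # Indices of the tests, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k (1-based) with p_(k) <= k/m * alpha.
    max_k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_k = rank
    # Reject every hypothesis ranked at or below max_k.
    rejected = [False] * m
    for idx in order[:max_k]:
        rejected[idx] = True
    return rejected


# Hypothetical p-values, for illustration only.
example = [0.01, 0.04, 0.03, 0.045]
print(benjamini_hochberg(example))
```

Because the rule is step-up, a test can be rejected even if its own p-value exceeds its rank threshold, as long as a larger p-value further down the ordering meets its threshold.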

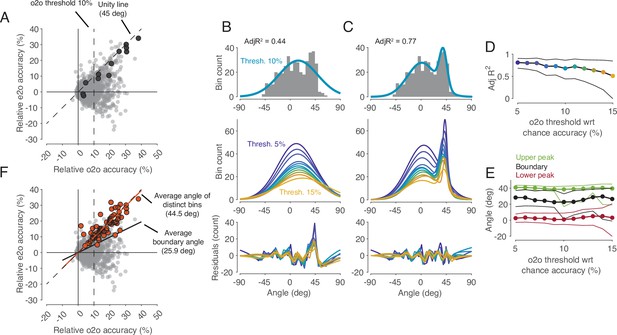

Comparison of classification accuracy for observed actions of classifiers trained either on execution (e2o) or on observation (o2o).

(A) Each dot in the scatter plot represents the e2o accuracy and the o2o accuracy in a time bin of a neuron, relative to chance level. Each neuron contributed to this plot with 12 time bins between start-button release and reward. The black dots indicate the data points of an example neuron (N68). The 10% threshold is one of the 11 filtering thresholds. (B) Top: The gray histogram shows the distribution of the angles of each dot above threshold 10% in A. The envelope depicts the single-Gaussian fit. Middle: single-Gaussian fits for 11 different thresholds. Bottom: The residuals of the single-Gaussian fits for the 11 thresholds. (C) Top: The two-Gaussian fit for threshold 10%. Middle and bottom: two-Gaussian fits and residuals as in B. (D) Adjusted R-squared (AdjR2) of two-Gaussian fits for thresholds 5 to 15%. Colored dots indicate the means and the black lines the 95% confidence intervals across 1000 bootstrapped resamples. (E) Upper peak, lower peak, and boundary (trough between the two peaks) of two-Gaussian fits for thresholds 5 to 15%. Green, black, and red dots indicate the means and the lines the 95% confidence intervals across 1000 bootstrapped resamples. (F) Same scatter plot as in A. The orange dots indicate the bins with significant e2o accuracies and the orange line shows their average angle. The average boundary angle (black line) corresponds to the mean boundary angle across the 11 thresholds in E.
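
The boundary in (E), defined as the trough between the two peaks of a two-Gaussian fit, can be located numerically once the fit parameters are known. The sketch below is an assumption-laden illustration: the parametrization (amplitude, center, and width per component), the grid search, and all parameter values are hypothetical and not taken from the paper.

```python
import math


def two_gaussian(x, a1, mu1, s1, a2, mu2, s2):
    """Sum of two Gaussian components (assumed parametrization)."""
    g1 = a1 * math.exp(-0.5 * ((x - mu1) / s1) ** 2)
    g2 = a2 * math.exp(-0.5 * ((x - mu2) / s2) ** 2)
    return g1 + g2


def trough_between_peaks(params, steps=10000):
    """Grid-search the minimum of the fitted curve between the two centers.

    Only meaningful when the fit is bimodal; for strongly overlapping
    components the minimum falls on one end of the search interval.
    """
    a1, mu1, s1, a2, mu2, s2 = params
    lo, hi = sorted((mu1, mu2))
    grid = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    return min(grid, key=lambda x: two_gaussian(x, *params))


# Hypothetical fit: two equal components centered at 20 and 70 (arbitrary units).
params = (1.0, 20.0, 10.0, 1.0, 70.0, 10.0)
print(trough_between_peaks(params))
```

With two identical components the trough lies midway between the centers; unequal amplitudes or widths shift it toward the weaker or narrower component.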

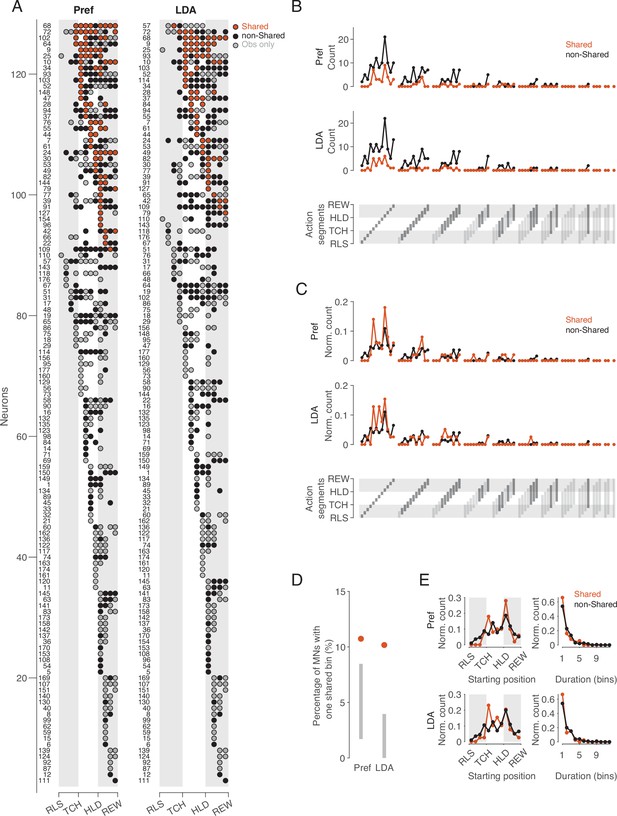

Distribution of shared and non-shared codes across time bins and neurons.

(A) The bins in which observed actions were encoded are shown for all neurons with at least one such bin (rows) across relative time (columns). Bins are classified into bins with shared code, bins with non-shared code, and bins that do not distinguish actions at all when executed (obs only). Neurons are ordered by (1) occurrence of at least one shared vs. no shared bin, (2) first occurrence of shared bin, (3) number of shared bins, (4) first occurrence of non-shared or obs only bin, (5) number of non-shared or obs only bins. Left: action preference method, Right: cross-task classification method using LDA. Numbers to the left indicate the ID of each neuron. For the abbreviations of the events, see Figure 2. (B) Count of action segments of consecutive bins with shared or non-shared (including obs only) codes separately for the two methods (top and middle). Segments indicated by light gray did not occur (bottom). (C) As B, but count of action segments normalized to the total number of bins of one code type. (D) Percentages of MNs with segments consisting of one bin with a shared code. Methods and gray bars as in Figure 3D. (E) Normalized count of segments depending on starting position (left) and duration (right), separately for the two methods.

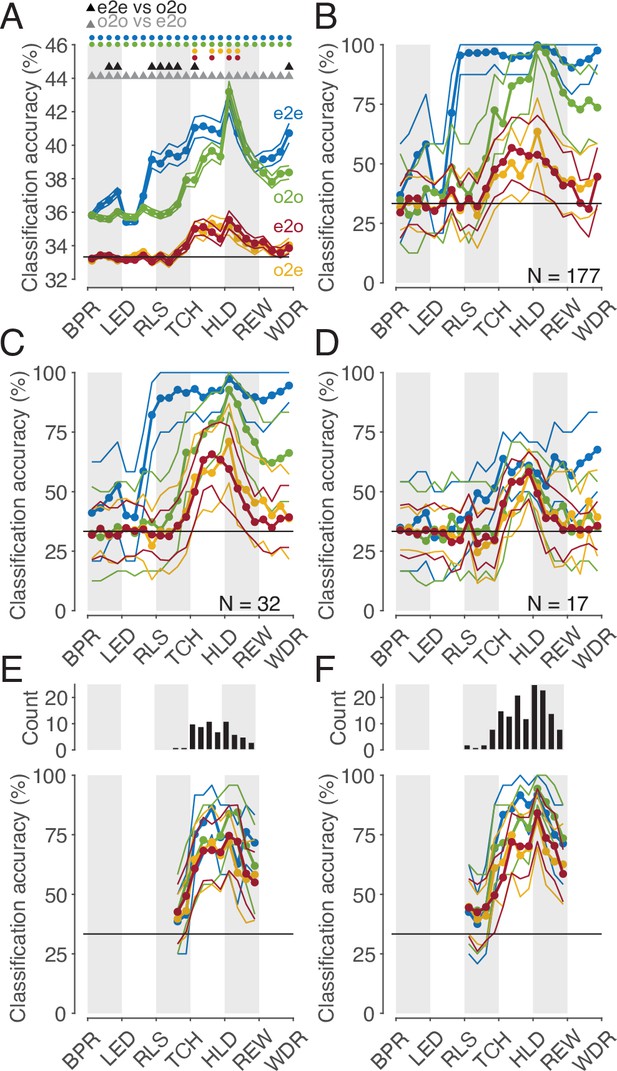

Classification of the three actions.

(A) Single neuron classification accuracy (mean ± s.e.m., n=177 neurons) per time bin for execution-trained classifiers tested with execution trials (e2e, blue) or with observation trials (e2o, red), and for observation-trained classifiers tested with observation trials (o2o, green) or with execution trials (o2e, orange). Colored dots indicate time bins of the same color with significant accuracy above chance (Benjamini-Hochberg-corrected one-sided signed-rank tests, alpha <0.05). Triangles indicate time bins with significant differences in the accuracy between the indicated classifications (Benjamini-Hochberg-corrected two-sided signed-rank tests, alpha <0.05). For the abbreviations of the events, see Figure 2. The very low but above-chance accuracy of the e2e and o2o classifiers before LED onset indicates that the three conditions were already distinguishable to some extent before LED onset by cues we could not identify. (B) Population classification accuracy per time bin (mean and 90% CI derived from bootstrapping). Same as A, but here all neurons (n=177) together provided the 177 features of a classifier. (C) Same as B, but the population consists of only the 32 neurons with at least one bin with a shared code according to Figure 5A, right. (D) Same as B, but the population consists of only the 17 neurons with only bins with a shared code according to a 10% threshold criterion and the boundary angle shown in Figure 4F. (E and F) Same as C and D, respectively, but for each time bin only neurons with a shared code in this bin were included, which leads to a variable population size (top). In F, the population is not restricted to neurons with only bins with a shared code (as in D); instead, for each time bin, all neurons with a shared code in this bin were included.
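
The logic of cross-task classification (train on execution, test on observation; e2o) can be sketched for a single neuron and time bin. The sketch swaps in a nearest-class-mean rule for LDA: with one feature, a shared within-class variance, and equal class priors, LDA assigns a trial to the class with the nearest mean, so the two coincide under those assumptions. All function names and discharge rates are hypothetical, and the authors' actual features and cross-validation scheme are not reproduced here.

```python
from statistics import mean


def fit_class_means(rates, labels):
    """Mean discharge rate per action, estimated from the training trials."""
    return {
        action: mean(r for r, lab in zip(rates, labels) if lab == action)
        for action in sorted(set(labels))
    }


def predict(class_means, rate):
    """Assign a trial to the action whose training mean is closest."""
    return min(class_means, key=lambda action: abs(rate - class_means[action]))


def accuracy(class_means, rates, labels):
    """Fraction of test trials classified correctly (chance is 1/3 here)."""
    hits = sum(predict(class_means, r) == lab for r, lab in zip(rates, labels))
    return hits / len(labels)


# Hypothetical execution trials (spikes/s) used for training.
exe_rates = [10, 12, 20, 22, 30, 32]
exe_labels = ["twist", "twist", "shift", "shift", "lift", "lift"]
model = fit_class_means(exe_rates, exe_labels)

# Hypothetical observation trials with the same action preference (shared code).
shared = accuracy(model, [11, 21, 29], ["twist", "shift", "lift"])
# Hypothetical observation trials with a permuted preference (non-shared code).
non_shared = accuracy(model, [30, 10, 20], ["twist", "shift", "lift"])
print(shared, non_shared)
```

In the second case the neuron still separates the three observed actions, so an observation-trained classifier could succeed, but the execution-trained classifier fails because the mapping from rate to action differs between tasks.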

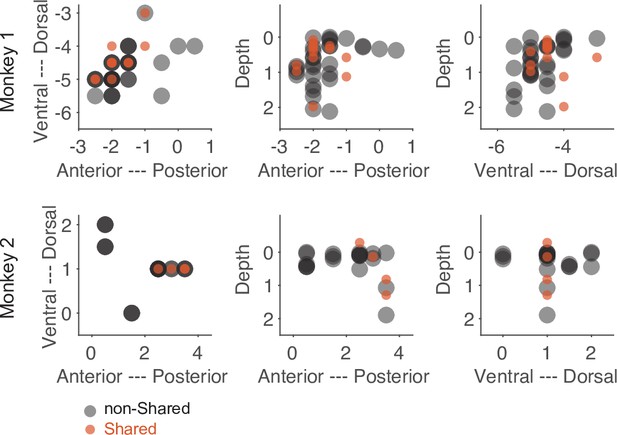

Anatomical location of neurons with only shared bins and neurons with only non-shared bins selected based on the threshold method (see main text).

Each dot represents the recording site of a neuron. Dark colors indicate overlap of neuron locations. The numbers on the axes refer to the electrode position (in mm) relative to the center of the recording chamber and to the cortical surface (for depth).

Tables

The average duration (in ms) across trials of each epoch per action for monkeys and humans.

The numbers in brackets indicate the lower and upper bounds of the middle 95% of the data.

| Epoch | Monkey, twist | Monkey, shift | Monkey, lift | Human, twist | Human, shift | Human, lift |
|---|---|---|---|---|---|---|
| Start-button press to LED onset | 3288 [1197, 4728] | 3608 [1242, 5211] | 3497 [1306, 4959] | 1272 [1029, 1507] | 1283 [1030, 1513] | 1277 [1029, 1510] |
| LED onset to Start-button release | 296 [224, 389] | 302 [231, 404] | 295 [221, 389] | 460 [335, 662] | 450 [324, 663] | 473 [337, 679] |
| Start-button release to touch | 163 [113, 219] | 183 [112, 248] | 175 [108, 243] | 274 [203, 409] | 299 [213, 422] | 318 [231, 461] |
| Touch to hold | 235 [140, 626] | 117 [59, 672] | 322 [199, 637] | 131 [73, 287] | 121 [60, 297] | 298 [183, 550] |
| Hold to reward | 566 [329, 804] | 570 [328, 805] | 568 [329, 803] | 570 [329, 806] | 569 [328, 804] | 570 [330, 804] |
| Reward to withdraw | 675 [296, 1325] | 744 [316, 1380] | 464 [288, 700] | 382 [229, 786] | 433 [276, 787] | 431 [257, 856] |
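
The bracketed bounds in the table describe the middle 95% of the trial durations. One common way to obtain such bounds is to take the 2.5th and 97.5th percentiles of the data; the sketch below does so with linear interpolation. Both the percentile convention and the function names are assumptions, since the source does not state how the bounds were computed, and the example durations are made up.

```python
def percentile(sorted_values, q):
    """Linearly interpolated q-th percentile (0 to 100) of pre-sorted data."""
    if not sorted_values:
        raise ValueError("need at least one value")
    pos = (len(sorted_values) - 1) * q / 100
    lower = int(pos)
    upper = min(lower + 1, len(sorted_values) - 1)
    frac = pos - lower
    return sorted_values[lower] + frac * (sorted_values[upper] - sorted_values[lower])


def middle_95(durations_ms):
    """Lower and upper bounds enclosing the central 95% of the durations."""
    ordered = sorted(durations_ms)
    return percentile(ordered, 2.5), percentile(ordered, 97.5)


# Hypothetical epoch durations in ms: 0, 1, ..., 200.
print(middle_95(range(201)))
```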