Transformer-based deep learning for predicting protein properties in the life sciences

Figures

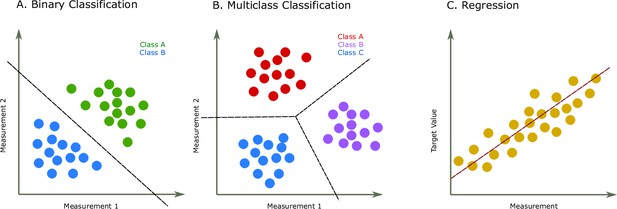

Two common prediction tasks in machine learning (ML) are classification and regression.

For illustration purposes, two-dimensional plots are used, but in reality the number of dimensions is much higher. (A) Binary classification tasks are for samples that can be separated into two groups, called classes. For instance, the samples can be several features of some proteins, where each protein is associated with one of two classes. A protein variant could be either stable or unstable (Fang, 2020), or a lysine residue could be phosphoglycerylated or non-phosphoglycerylated (Chandra et al., 2020). The ML task would be to build a model that can determine the class of a new sample. (B) The multiclass classification task is performed when the proteins belong to one of multiple classes, for instance, when predicting which structural class a protein belongs to (Chou and Zhang, 1995). (C) The regression task is for applications where we want to predict real-valued outputs, for example, the brightness of a fluorescent protein (Lu et al., 2021).

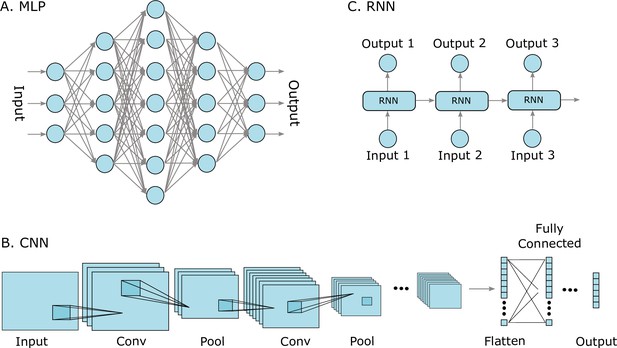

Three well-known deep learning models.

(A) Multilayer perceptrons (MLPs) are characterized by an input layer, several hidden layers, and an output layer. (B) Convolutional neural networks (CNNs) use convolution operations in their layers and learn filters that automatically extract features from the input (e.g. from images, audio signals, time series, or protein sequences). At some point, the learned features are strung out into a vector, a step called flattening, and are often passed on to fully connected layers at the end. (C) A recurrent neural network (RNN) is a model that processes an input sequence step by step, one element at a time.

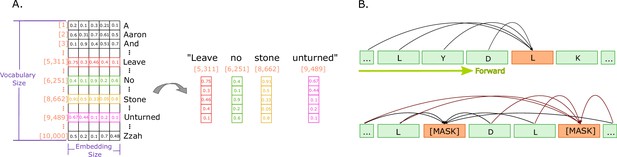

Illustrations of embeddings and of next and masked token predictions.

(A) An illustration of real-valued vector representations (input embeddings) of the tokens for a sample sentence. Each square represents a numerical value in the vector representation. The vector for each word in the sentence is obtained by looking up the word's unique ID in a vocabulary and retrieving the vector stored for that ID. All word embeddings have the same size, called the embedding size, and every word must be present in the vocabulary (in the illustration, the vocabulary size is 10,000 words). (B) The two main training approaches for protein language models, and specifically for Transformers. The top part illustrates autoregressive language modelling (predicting the next token), and the bottom part illustrates masked language modelling (predicting a few missing, or masked, tokens).
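The lookup in panel A and the two training objectives in panel B can be sketched in a few lines of Python. This is only an illustrative sketch: the five-entry vocabulary, the `[MASK]` token name, the embedding size of 4, and all function names are assumptions made for the example, and the embedding values are random rather than learned.

```python
import random

# Hypothetical toy vocabulary: each token maps to a unique integer ID.
vocab = {"[MASK]": 0, "the": 1, "protein": 2, "is": 3, "stable": 4}
embedding_size = 4

# Embedding table with one real-valued vector per vocabulary entry.
# Values are random here; in a trained model they are learned.
rng = random.Random(0)
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(embedding_size)] for _ in vocab
]


def embed(tokens):
    """Look up each token's ID, then fetch the vector stored for that ID."""
    return [embedding_table[vocab[token]] for token in tokens]


def next_token_examples(tokens):
    """Autoregressive objective: predict token i from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_example(tokens, positions):
    """Masked objective: hide the chosen positions and keep them as targets."""
    masked = ["[MASK]" if i in positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in positions}
    return masked, targets


sentence = ["the", "protein", "is", "stable"]
vectors = embed(sentence)                        # one vector per token
pairs = next_token_examples(sentence)            # (context, next token) pairs
masked, targets = masked_example(sentence, {1, 3})
```

A token that is missing from `vocab` raises a `KeyError` in this sketch, which mirrors the requirement that every token must be found in the vocabulary.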

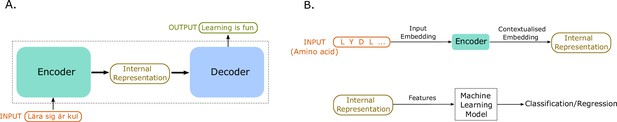

An illustration of sequence-to-sequence models and of how to use the internal representations for downstream machine learning tasks.

(A) The conceptual idea behind sequence-to-sequence models. The Transformer model by Vaswani et al., 2017 has a similar form: it maps the input sequence to an output sequence using an encoder and a decoder. (B) An example application of the Transformer language model for protein property prediction. The input embedding is contextualized by the encoder block, which gives an internal representation, the model's embedding of the input sequence. The internal representation is then used as features of the amino acids and can be passed, in a second step, to a machine learning model. The decoder block is not normally used after training since it does not serve much purpose in protein property prediction, but it is a critical component for training in natural language processing (NLP) applications such as language translation.
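One common way to turn the per-residue internal representation of panel B into a fixed-length feature vector for a second-step model is to average over the residues. The sketch below illustrates that idea only; `fake_encoder` is a hypothetical stand-in that returns random numbers where a pre-trained encoder would return contextualized embeddings, and the dimension of 8 and the example sequences are arbitrary assumptions.

```python
import random


def fake_encoder(sequence, dim=8, seed=0):
    """Hypothetical stand-in for a pre-trained encoder.

    Returns one dim-sized vector per amino acid. A real model would
    return contextualized embeddings instead of random numbers.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in sequence]


def mean_pool(per_residue):
    """Average over residues to get one fixed-length vector per protein."""
    n = len(per_residue)
    return [sum(column) / n for column in zip(*per_residue)]


# Two made-up sequences of different lengths.
seq_a = "MKTAYIAKQR"
seq_b = "MKT"

per_residue_a = fake_encoder(seq_a)               # 10 vectors of size 8
features_a = mean_pool(per_residue_a)             # 1 vector of size 8
features_b = mean_pool(fake_encoder(seq_b, seed=1))
```

Because both proteins end up with a vector of the same length regardless of sequence length, the pooled features can be stacked into a matrix and handed to any standard classifier or regressor.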

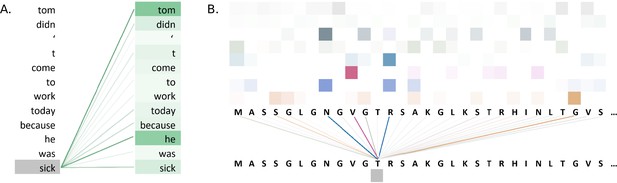

Visualisations of the attention weights in transformer models.

(A) A visualization of the attention weights in a BERT model. The weights are from the first attention head of the eighth layer of the model. The model has a total of 12 layers and 12 attention heads. In this example, the model connects the words 'tom' and 'he' to the word 'sick' (darker lines indicate larger weights). Visualization inspired by BertViz (Vig, 2019a; https://github.com/jessevig/bertviz; Vig, 2022). (B) Attention weights visualization showing that a protein language model learned to put more weight from one residue onto four other residues in one layer. The shades of a particular colour (horizontal order) correspond to an attention head in the layer of the Transformer. Dark shades indicate stronger attention and are hence shown with darker lines connecting the tokens.
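The weights drawn as lines in such visualizations come from the attention computation of each head. As a minimal sketch, assuming the scaled dot-product attention of Vaswani et al., 2017 and using made-up two-dimensional query and key vectors, the weights for one head can be computed as follows (the function names are illustrative, not from any library):

```python
import math


def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    highest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries, keys):
    """Scaled dot-product attention weights for a single head.

    Row i holds the weights that token i places on every token.
    """
    scale = math.sqrt(len(keys[0]))
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights.append(softmax(scores))
    return weights


# Made-up vectors for three tokens (assumption, for illustration only).
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
keys = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
weights = attention_weights(queries, keys)
```

Each row of `weights` corresponds to one token on the left of the visualization, and larger entries would be drawn as darker lines.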

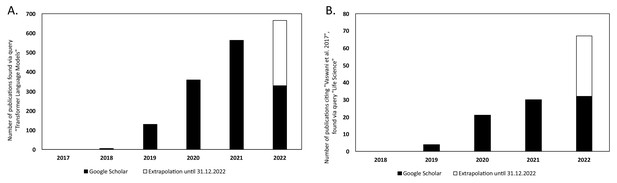

Yearly number of publications on Google Scholar for the years 2017–2021 and extrapolated count for the year 2022.

For: (A) the search query 'Transformer Language Model' and (B) the search query 'Life Science' that have cited the original Transformer paper by Vaswani et al., 2017.

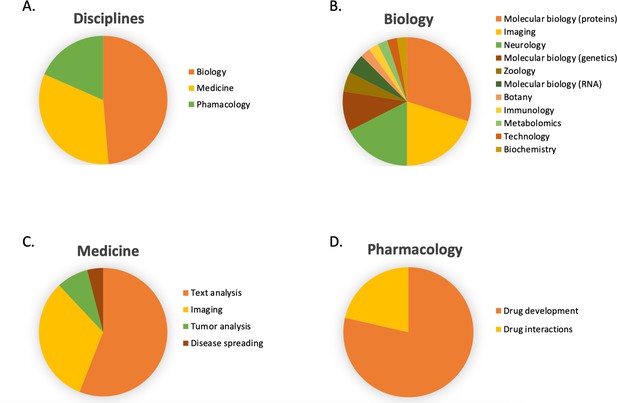

The article counts for the three main disciplines (medicine, pharmacology, and biology) and percentage breakdown of their sub-categories in Google Scholar citing the 'Attention is all you need' paper by Vaswani et al., 2017.

The search was based on the query 'Life Science' and included all scientific research papers from 2017 to 2022 (cut-off on 2022-06-23).

Tables

Performance of five classification models (LR, SVM (poly), SVM (RBF), RF, and LightGBM) on five datasets, i.e. the five feature sets (Phy + Bio, BigramPGK, T5, ESM-1b-avg, and ESM-1b-concate), evaluated using accuracy (ACC) and the area under the receiver operating characteristic curve (AUC).

The reported cross-validation results are the mean over the five CV rounds. Standard errors for both CV and test are given in parentheses. The highest scores are highlighted in bold. CV: five-fold cross-validation; Test: held-out test set.

| Feature set | Evaluation | LR ACC | LR AUC | SVM (poly) ACC | SVM (poly) AUC | SVM (RBF) ACC | SVM (RBF) AUC | RF ACC | RF AUC | LightGBM ACC | LightGBM AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Phy + Bio | CV | 0.550 (0.032) | 0.546 (0.012) | 0.614 (0.017) | 0.550 (0.027) | 0.545 (0.035) | 0.552 (0.013) | 0.609 (0.010) | 0.564 (0.034) | 0.525 (0.027) | 0.498 (0.029) |
| Phy + Bio | Test | 0.471 (0.071) | 0.395 (0.083) | 0.588 (0.070) | 0.552 (0.083) | 0.471 (0.071) | 0.371 (0.082) | 0.628 (0.068) | 0.489 (0.084) | 0.529 (0.071) | 0.503 (0.084) |
| BigramPGK | CV | 0.678 (0.026) | 0.686 (0.019) | 0.590 (0.025) | 0.723 (0.025) | 0.599 (0.004) | 0.711 (0.030) | 0.698 (0.008) | 0.707 (0.025) | 0.629 (0.024) | 0.627 (0.028) |
| BigramPGK | Test | 0.628 (0.068) | 0.686 (0.074) | 0.647 (0.068) | 0.666 (0.076) | 0.608 (0.069) | 0.668 (0.076) | 0.686 (0.066) | 0.742 (0.069) | 0.706 (0.064) | 0.742 (0.069) |
| T5 | CV | 0.704 (0.038) | 0.742 (0.039) | 0.713 (0.035) | 0.744 (0.038) | 0.713 (0.034) | 0.737 (0.041) | 0.634 (0.021) | 0.747 (0.041) | 0.668 (0.022) | 0.756 (0.018) |
| T5 | Test | 0.647 (0.068) | 0.726 (0.070) | 0.628 (0.068) | 0.726 (0.070) | 0.628 (0.068) | 0.737 (0.069) | 0.647 (0.068) | 0.736 (0.070) | 0.471 (0.071) | 0.592 (0.081) |
| ESM-1b-avg | CV | 0.768 (0.025) | 0.830 (0.025) | 0.748 (0.022) | 0.826 (0.028) | 0.599 (0.004) | 0.785 (0.055) | 0.639 (0.015) | 0.745 (0.058) | 0.708 (0.020) | 0.741 (0.044) |
| ESM-1b-avg | Test | 0.726 (0.063) | 0.803 (0.061) | 0.667 (0.067) | 0.813 (0.059) | 0.608 (0.069) | 0.811 (0.060) | 0.628 (0.068) | 0.719 (0.071) | 0.647 (0.068) | 0.748 (0.068) |
| ESM-1b-concate | CV | 0.782 (0.012) | 0.852 (0.015) | 0.792 (0.014) | 0.853 (0.015) | 0.773 (0.015) | 0.844 (0.023) | 0.609 (0.017) | 0.742 (0.048) | 0.718 (0.017) | 0.755 (0.039) |
| ESM-1b-concate | Test | 0.745 (0.062) | 0.797 (0.062) | 0.745 (0.062) | 0.824 (0.057) | 0.726 (0.063) | 0.798 (0.061) | 0.628 (0.068) | 0.850 (0.053) | 0.667 (0.067) | 0.726 (0.070) |
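For readers who want to reproduce summaries of this kind, the following sketch shows how ACC, AUC, and a cross-validation mean with its standard error can be computed. It is an illustration under stated assumptions, not the procedure used for the table: the standard error is taken here as the sample standard deviation of the fold scores divided by the square root of the number of folds, the AUC is computed from pairwise comparisons of positive and negative samples, and the fold scores are made-up numbers.

```python
import math
import statistics


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def auc(y_true, scores):
    """AUC as the probability that a random positive sample is scored
    higher than a random negative sample (ties count as one half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))


def mean_and_standard_error(fold_scores):
    """Mean over folds and standard error of that mean (assumed definition)."""
    mean = statistics.mean(fold_scores)
    se = statistics.stdev(fold_scores) / math.sqrt(len(fold_scores))
    return mean, se


# Made-up accuracies from five hypothetical CV folds.
fold_acc = [0.70, 0.75, 0.80, 0.75, 0.75]
cv_mean, cv_se = mean_and_standard_error(fold_acc)
summary = f"{cv_mean:.3f} ({cv_se:.3f})"  # same "mean (SE)" layout as the table
```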

Additional files

- Supplementary file 1

  Some of the commonly used pre-trained Transformer models in the literature. The higher the number of Transformer parameters, the larger the model.

  https://cdn.elifesciences.org/articles/82819/elife-82819-supp1-v1.docx