Attention modulates human visual responses to objects by tuning sharpening

Figures

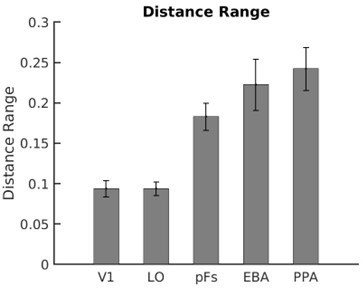

Stimuli, paradigm, and regions of interest.

(A) Top images represent the four categories used in the main experiment: body, car, house, and cat. The stimulus set consisted of 10 exemplars from each category (here: cats), with exemplars differing in pose and 3D-orientation. (B) The experimental design comprised 16 task conditions (12 paired, four isolated). The 4×4 matrix on the left illustrates the 12 paired conditions, with the to-be-attended category (outlined in orange for illustration purposes, not present in the experiment) on the y-axis and the to-be-ignored category on the x-axis. The right column illustrates the four isolated conditions. (C) Experimental paradigm. A paired block is depicted with superimposed body and house stimuli. In this example block, house stimuli were cued as targets, and the participant responded on the repetition of the exact same house in two consecutive trials, as marked here by the arrow. (D) Regions of interest for an example participant; the primary visual cortex (V1), the object-selective regions lateral occipital cortex (LO) and posterior fusiform gyrus (pFs), the body-selective region extrastriate body area (EBA), and the scene-selective region parahippocampal place area (PPA).

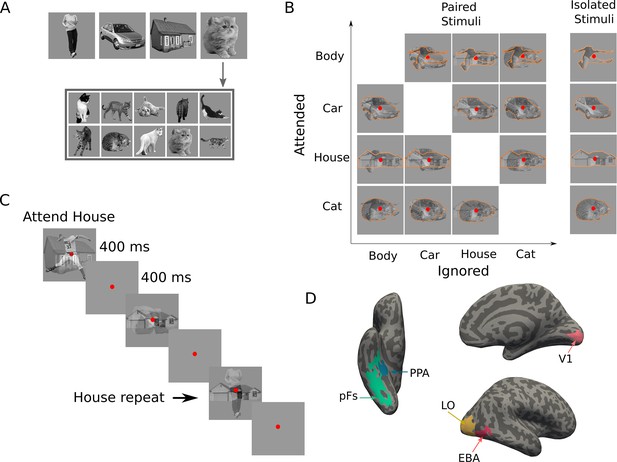

Behavioral performance of the participants during the recording.

B, Cr, H, and Ct represent the Body, Car, House, and Cat categories, respectively. Each x-axis label represents the two conditions with stimuli from the same two categories but with different attentional targets: the dark gray bar shows the results for the condition in which the first category was attended, and the light gray bar shows the results for the condition in which the second category was attended. For instance, the B-Cr bars represent the results for the Body-Car pair with the body attended (dark gray) and with the car attended (light gray). Error bars represent standard errors of the mean. N=15 human participants. (A) Average detection rate for each category pair. (B) Average reaction time for each category pair.

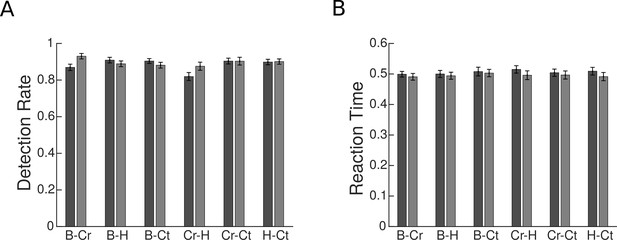

Response vectors related to the two stimuli in isolated and paired conditions.

(A) Example illustration of the isolated and paired responses in three-dimensional space. Two vectors denote the responses in the two isolated conditions, and a third vector illustrates the response in the paired condition with attention directed to one of the two stimuli. The paired response is projected on the plane defined by the two isolated responses, and this projection is also shown. (B) Two-dimensional illustration of the plane defined by the two isolated response vectors, along with the paired response vectors and the projection of the paired response on the plane. We calculated the weight of the two isolated responses in the paired response using multiple regression, which yields one weight for each isolated response.
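The projection and weights described in this caption can be sketched with an ordinary least-squares fit. This is a minimal illustration, not the authors' code: the names `v_x`, `v_y`, `v_pair`, and `isolated_weights` are hypothetical, the toy data are random, and the fit assumes no intercept term, which the caption does not specify.

```python
import numpy as np

def isolated_weights(v_x, v_y, v_pair):
    """Least-squares weights of two isolated response vectors in a paired response.

    Assumption: regression without an intercept; each vector holds one value per voxel.
    """
    design = np.column_stack([v_x, v_y])              # voxels x 2 design matrix
    weights, *_ = np.linalg.lstsq(design, v_pair, rcond=None)
    projection = design @ weights                     # paired response projected on the plane
    return weights, projection

# Toy data: a noise-free mixture of two random isolated response patterns.
rng = np.random.default_rng(0)
v_x = rng.normal(size=50)
v_y = rng.normal(size=50)
v_pair = 0.7 * v_x + 0.3 * v_y

weights, projection = isolated_weights(v_x, v_y, v_pair)
```

With real data the paired response would not lie exactly in the plane, so the projection would differ from the measured paired response by a residual orthogonal to both isolated vectors.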

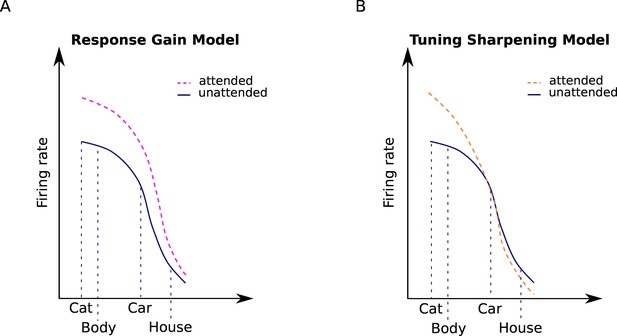

Attentional modulation by the response gain and tuning sharpening models.

We illustrate the models here for the example of a neuron with high selectivity for cat stimuli. Solid curves denote the response to unattended stimuli and dashed curves denote the response to attended stimuli. (A) According to the response gain model, the response of the neuron to attended stimuli is scaled by a constant attention factor. Therefore, the response of the cat-selective neuron to an attended stimulus is enhanced to the same degree for all stimuli. (B) According to the tuning sharpening model, the response modulation by attention depends on the neuron’s tuning for the attended stimulus. Therefore, for optimal and near-optimal stimuli such as cat and body stimuli, the response is strongly increased, while for non-optimal stimuli such as houses, the response is suppressed.
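The contrast between the two models can be made concrete with a toy cat-selective neuron. The sketch below is illustrative only: the response values, the gain `beta`, and the linear form of the sharpening factor are all assumptions chosen to reproduce the qualitative pattern in the caption, not the models' actual parameterization.

```python
import numpy as np

categories = ["cat", "body", "car", "house"]
# Hypothetical unattended responses of a cat-selective neuron (arbitrary units).
unattended = np.array([1.0, 0.8, 0.4, 0.2])

def response_gain(responses, beta=1.5):
    """Response gain: every attended response is scaled by the same constant factor."""
    return beta * responses

def tuning_sharpening(responses, strength=1.0):
    """Tuning sharpening: the attention factor grows with the neuron's tuning
    for the attended stimulus (assumed linear form, hypothetical)."""
    relative_tuning = responses / responses.max()
    factor = 1.0 + strength * (relative_tuning - 0.5)   # above 1 for preferred, below 1 for non-preferred
    return factor * responses

gain_attended = response_gain(unattended)
sharp_attended = tuning_sharpening(unattended)
```

Under these assumed values, the response gain output is a uniformly scaled copy of the tuning curve, whereas the sharpening output rises for cat and body stimuli and falls for house stimuli.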

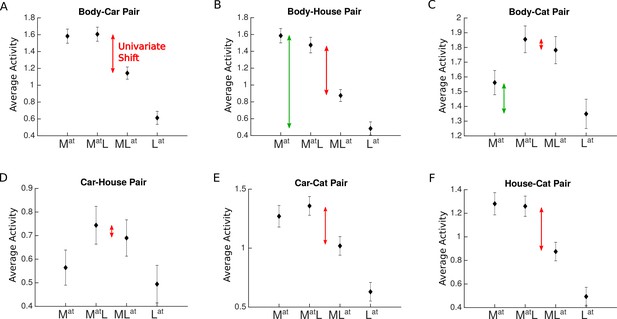

Average voxel response in the extrastriate body area (EBA) for each pair of stimulus categories.

The x-axis labels represent the four conditions related to each category pair (two isolated and two paired), with M and L denoting the presence of the more preferred and the less preferred category and the superscript denoting the attended category. For instance, the label with M alone refers to the condition in which the more preferred stimulus was presented in isolation (and automatically attended), and the label with both letters and the superscript on L refers to the paired condition in which the less preferred stimulus was attended to. Red arrows in each panel illustrate the observed change in response (univariate shift) caused by the shift of attention from the more preferred to the less preferred stimulus. Green arrows in panels B and C illustrate the difference in the response to isolated stimuli. Error bars represent standard errors of the mean. N=17 human participants.

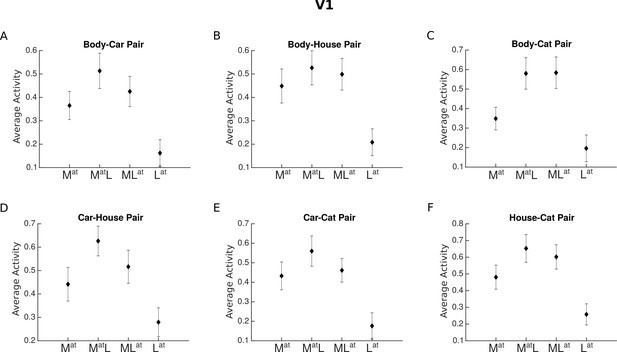

Average voxel response in the primary visual cortex (V1) for each pair of stimulus categories.

The x-axis labels represent the four conditions related to each category pair (two isolated and two paired), with M and L denoting the presence of the more preferred and the less preferred category and the superscript denoting the attended category. For instance, the label with M alone refers to the condition in which the more preferred stimulus was presented in isolation (and automatically attended), and the label with both letters and the superscript on L refers to the paired condition in which the less preferred stimulus was attended to.

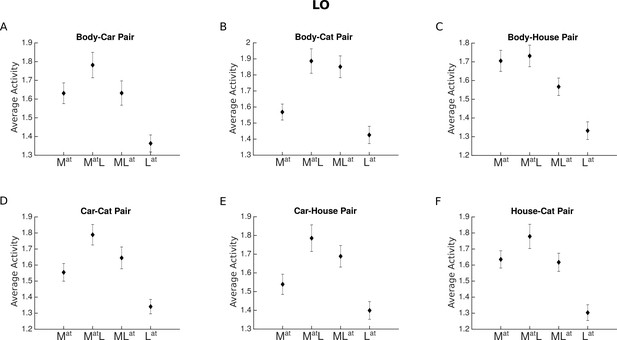

Average voxel response in the lateral occipital cortex (LO) for each pair of stimulus categories.

The x-axis labels represent the four conditions related to each category pair (two isolated and two paired), with M and L denoting the presence of the more preferred and the less preferred category and the superscript denoting the attended category.

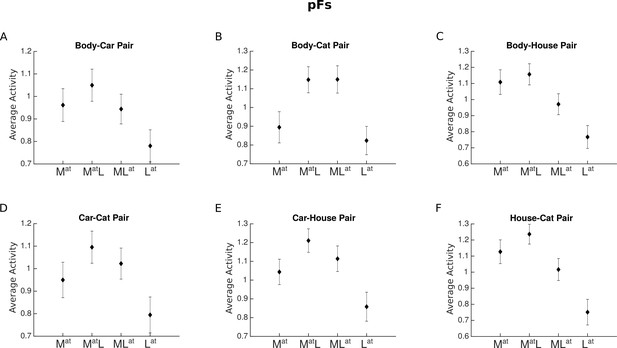

Average voxel response in the posterior fusiform gyrus (pFs) for each pair of stimulus categories.

The x-axis labels represent the four conditions related to each category pair (two isolated and two paired), with M and L denoting the presence of the more preferred and the less preferred category and the superscript denoting the attended category.

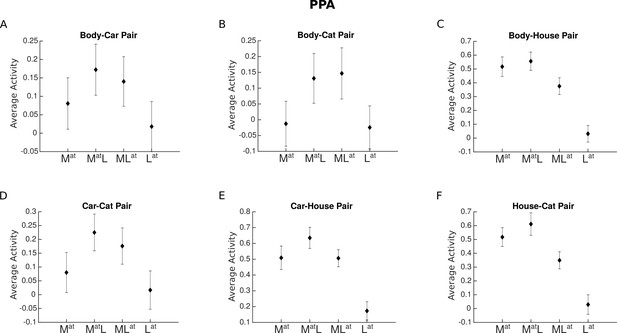

Average voxel response in the parahippocampal place area (PPA) for each pair of stimulus categories.

The x-axis labels represent the four conditions related to each category pair (two isolated and two paired), with M and L denoting the presence of the more preferred and the less preferred category and the superscript denoting the attended category.

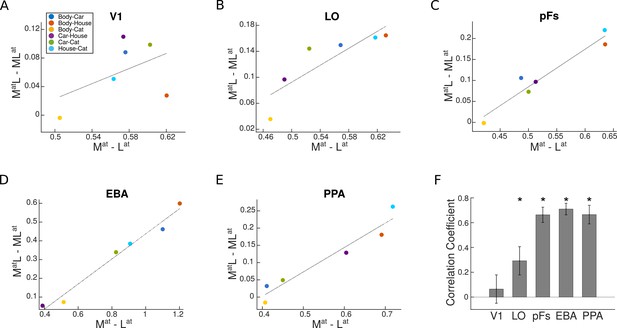

Univariate shift versus category distance in each region of interest (ROI).

(A–E) The value of univariate shift versus category distance. The condition labels refer to the two paired conditions, with attention directed to the more preferred (M) or the less preferred (L) stimulus, and to the two isolated conditions, with the more preferred or the less preferred stimulus presented in isolation. The blue, red, yellow, purple, green, and sky blue circles in each panel represent the values related to the Body-Car, Body-House, Body-Cat, Car-House, Car-Cat, and House-Cat pairs, respectively. The correlation coefficients in each ROI were calculated for single participants, and the lines in the average plots are shown for illustration purposes only. (F) Correlation coefficient for the correlation between the univariate shift and category distance in each ROI. Asterisks indicate that the correlation coefficients are significantly positive. Error bars represent standard errors of the mean. N=17 human participants.

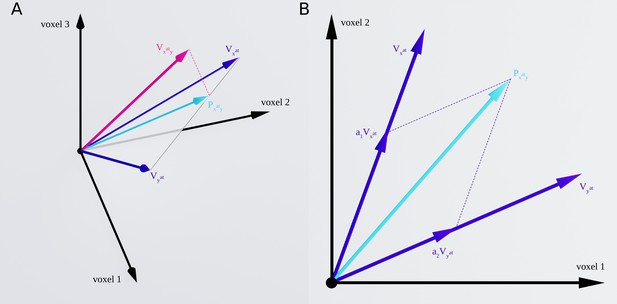

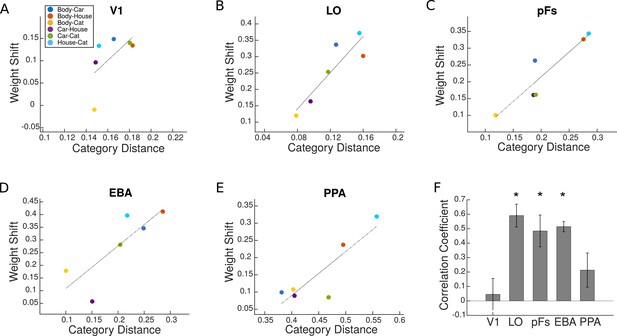

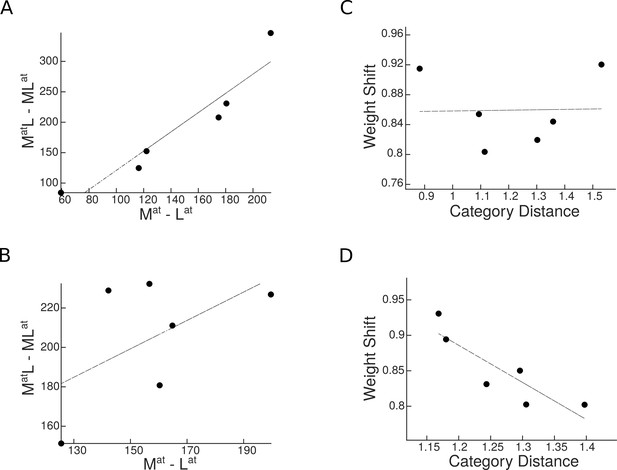

Weight shift versus category distance in each region of interest (ROI).

(A–E) Attentional weight shift versus category distance. The blue, red, yellow, purple, green, and sky blue circles in each panel represent the values related to the Body-Car, Body-House, Body-Cat, Car-House, Car-Cat, and House-Cat pairs, respectively. We calculated the correlation coefficients for single participants, and the lines in these average plots are shown for illustration purposes only. (F) Correlation coefficient for the correlation between attentional weight shift and category distance in each ROI. Asterisks indicate that the correlations are significantly positive. Error bars represent standard errors of the mean. N=17 human participants.

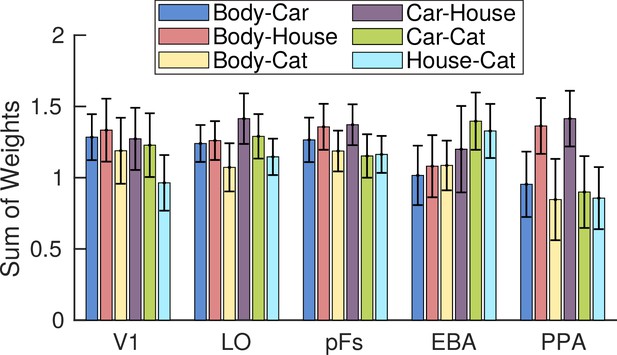

Sum of weights in the multivariate analysis for each category pair in each region of interest (ROI), averaged across conditions with attention directed to each of the two categories of a pair and across participants.

For instance, the blue Body-Car bars represent the average results for the two Body-Car paired conditions (body attended and car attended), averaged across participants. Error bars represent standard errors of the mean. N=17 human participants.

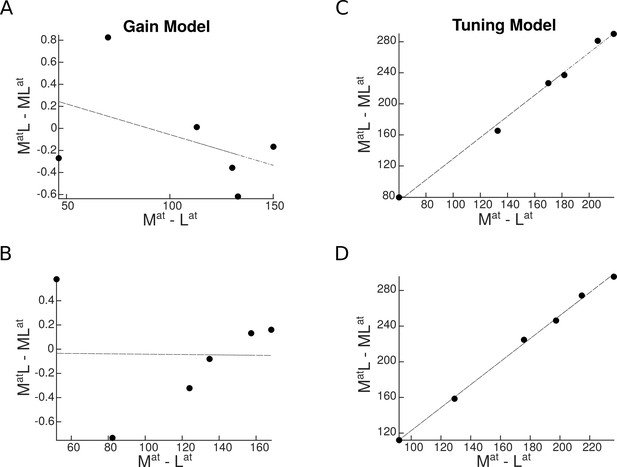

Univariate shift as a function of category distance, as predicted by the two attentional mechanisms.

The condition labels refer to the two paired conditions, with attention directed to the more preferred (M) or the less preferred (L) stimulus, and to the two isolated conditions, with the M or the L stimulus presented in isolation. Top panels represent predictions in a region with a strong preference for a specific category, and bottom panels illustrate predictions in an object-selective region. Each circle represents a pair of categories. (A–B) Predicted univariate shift based on the response gain model in a region with a strong preference for a specific category (A) and in an object-selective region (B). (C–D) Predicted univariate shift based on the tuning model in a region with a strong preference for a specific category (C) and in an object-selective region (D).

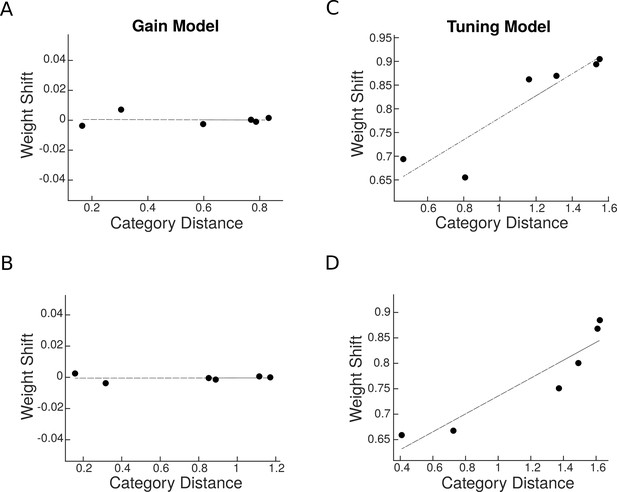

Predicted weight shift as a function of category distance.

Weight shift for each pair is calculated using Equation 5. Category distance represents the difference in multi-voxel representation between responses to the two isolated stimuli, calculated by Equation 3. Top panels are related to predictions in a region with a strong preference for a specific category and the bottom panels illustrate predictions in an object-selective region. (A–B) Weight shift predicted by the response gain model in a region with a strong preference for a specific category (A) and in an object-selective region (B). (C–D) Weight shift predicted by the tuning model in a region with strong preference for a specific category (C) and in an object-selective region (D).

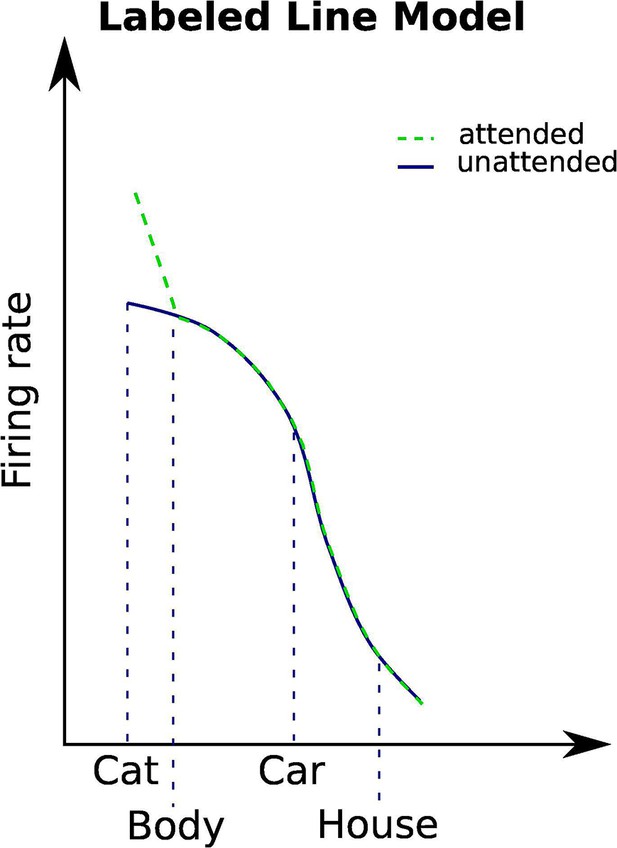

Based on the labeled line model, attention enhances the response of a neuron only when the attended stimulus is the neuron’s preferred stimulus.

We illustrate the models here for the example of a neuron with high selectivity for cat stimuli. Solid curves denote the response to unattended stimuli and dashed curves denote the response to attended stimuli.

Simulation results for the labeled line model.

(A–B) Predicted univariate shift based on the labeled line model in a region with a strong preference for a specific category (A) and in an object-selective region (B). (C–D) Weight shift predicted by the labeled line model in a region with a strong preference for a specific category (C) and in an object-selective region (D).

Univariate shift versus category distance including single-subject data points in all ROIs.

Tables

Percentage of voxels in each region of interest (ROI) most responsive to each of the four stimulus categories, averaged across participants.

| Preferred Category | V1 | LO | pFs | EBA | PPA |
|---|---|---|---|---|---|
| Body | 21% | 31% | 21% | 70% | 10% |
| Car | 19% | 18% | 17% | 4% | 12% |
| House | 33% | 32% | 40% | 3% | 68% |
| Cat | 27% | 19% | 22% | 23% | 10% |
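One way such percentages could be obtained for a single ROI and participant is to label each voxel by the category that evokes its largest response and count the labels. This is a sketch under that assumption: the function name `preferred_category_percentages` and the toy coefficient matrix are hypothetical, and the source does not state how "most responsive" was computed.

```python
import numpy as np

categories = ["Body", "Car", "House", "Cat"]

def preferred_category_percentages(betas):
    """Percentage of voxels whose largest response is to each category.

    betas: voxels x categories array of response estimates for one ROI.
    """
    preferred = np.argmax(betas, axis=1)                        # index of preferred category per voxel
    counts = np.bincount(preferred, minlength=betas.shape[1])   # voxels per category
    return 100.0 * counts / betas.shape[0]

# Toy ROI with four voxels (rows) and four categories (columns); values are made up.
betas = np.array([
    [1.6, 0.6, 0.5, 1.3],
    [1.2, 0.4, 0.3, 1.5],
    [0.2, 0.1, 0.9, 0.0],
    [1.1, 0.9, 0.2, 0.8],
])
percentages = preferred_category_percentages(betas)
```

Averaging the per-participant percentages would then give one row-normalized column per ROI, as in the table.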

Average voxel response (general linear model, GLM coefficients) to each category in each region of interest (ROI), averaged across participants.

| Stimulus Category | V1 | LO | pFs | EBA | PPA |
|---|---|---|---|---|---|
| Body | 0.28 | 1.59 | 0.88 | 1.62 | 0.001 |
| Car | 0.28 | 1.43 | 0.88 | 0.60 | 0.12 |
| House | 0.47 | 1.51 | 1.03 | 0.48 | 0.55 |
| Cat | 0.35 | 1.49 | 0.89 | 1.32 | –0.02 |

Single-subject correlation coefficients for the correlation between the univariate shift and category distance in all regions of interest (ROIs).

| V1 | LO | pFs | EBA | PPA |
|---|---|---|---|---|
| –0.60 | 0.67 | –0.073 | 0.72 | 0.18 |
| 0.16 | 0.38 | 0.015 | 0.64 | 0.46 |
| –0.53 | 0.52 | 0.89 | 0.60 | 0.42 |
| –0.062 | 0.78 | 0.93 | 0.87 | 0.69 |
| –0.098 | 0.072 | 1.0 | 0.72 | 0.96 |
| 0.63 | 0.93 | 0.88 | 0.94 | 0.58 |
| –0.31 | 0.52 | –0.11 | 0.88 | 0.89 |
| 0.29 | 0.18 | 0.62 | 0.67 | 0.63 |
| –0.49 | 0.094 | 0.63 | 0.81 | 0.80 |
| 0.21 | 0.57 | 0.31 | 0.81 | 0.87 |
| –0.015 | –0.57 | 0.79 | 0.35 | 0.69 |
| 0.35 | 0.79 | 0.90 | 0.90 | 0.88 |
| –0.47 | 0.058 | –0.050 | 0.93 | –0.24 |
| –0.047 | –0.21 | 0.91 | 0.28 | 0.86 |
| 0.66 | 0.70 | 0.91 | 0.64 | 0.45 |
| –0.74 | 0.54 | 0.24 | 0.60 | –0.31 |
| –0.095 | 0.73 | 0.89 | 0.64 | 0.95 |
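The group-level bars with error bars described for the univariate shift can be approximated from these single-subject values. The sketch below copies the 17 rows of the table and computes the mean and standard error per ROI; it assumes the raw coefficients were averaged directly (no Fisher transform), which the source does not state, and the variable names are illustrative.

```python
import numpy as np

rois = ["V1", "LO", "pFs", "EBA", "PPA"]
# Rows: participants; columns: ROIs, copied from the table above.
r = np.array([
    [-0.60, 0.67, -0.073, 0.72, 0.18],
    [0.16, 0.38, 0.015, 0.64, 0.46],
    [-0.53, 0.52, 0.89, 0.60, 0.42],
    [-0.062, 0.78, 0.93, 0.87, 0.69],
    [-0.098, 0.072, 1.0, 0.72, 0.96],
    [0.63, 0.93, 0.88, 0.94, 0.58],
    [-0.31, 0.52, -0.11, 0.88, 0.89],
    [0.29, 0.18, 0.62, 0.67, 0.63],
    [-0.49, 0.094, 0.63, 0.81, 0.80],
    [0.21, 0.57, 0.31, 0.81, 0.87],
    [-0.015, -0.57, 0.79, 0.35, 0.69],
    [0.35, 0.79, 0.90, 0.90, 0.88],
    [-0.47, 0.058, -0.050, 0.93, -0.24],
    [-0.047, -0.21, 0.91, 0.28, 0.86],
    [0.66, 0.70, 0.91, 0.64, 0.45],
    [-0.74, 0.54, 0.24, 0.60, -0.31],
    [-0.095, 0.73, 0.89, 0.64, 0.95],
])

n_participants = r.shape[0]
mean_r = r.mean(axis=0)                                   # average correlation per ROI
sem_r = r.std(axis=0, ddof=1) / np.sqrt(n_participants)   # standard error of the mean per ROI

for name, m, s in zip(rois, mean_r, sem_r):
    print(f"{name}: mean r = {m:.2f}, SEM = {s:.2f}")
```

A one-sample test of each column against zero would be the natural next step for the significance markers, but the specific test used is not given in this section.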

Weights of each stimulus for each category pair in each region of interest (ROI), averaged across participants.

| Pair | Attended Stimulus | V1 | LO | pFs | EBA | PPA |
|---|---|---|---|---|---|---|
| Body-Car | Body | | | | | |
| Body-Car | Car | | | | | |
| Body-House | Body | | | | | |
| Body-House | House | | | | | |
| Body-Cat | Body | | | | | |
| Body-Cat | Cat | | | | | |
| Car-House | Car | | | | | |
| Car-House | House | | | | | |
| Car-Cat | Car | | | | | |
| Car-Cat | Cat | | | | | |
| House-Cat | House | | | | | |
| House-Cat | Cat | | | | | |

Single-subject correlation coefficients for the correlation between weight shift and category distance in all regions of interest (ROIs).

| V1 | LO | pFs | EBA | PPA |
|---|---|---|---|---|
| –0.36 | 0.93 | –0.25 | 0.46 | 0.06 |
| 0.31 | –0.06 | 0.16 | 0.82 | –0.25 |
| –0.29 | 0.35 | 0.80 | 0.48 | –0.42 |
| –0.038 | 0.70 | 0.75 | 0.57 | 0.38 |
| 0.72 | 0.60 | 0.90 | 0.58 | –0.77 |
| 0.53 | 0.69 | 0.76 | 0.51 | 0.59 |
| –0.32 | 0.90 | –0.25 | 0.29 | 0.065 |
| 0.33 | 0.22 | –0.36 | 0.24 | –0.48 |
| 0.52 | 0.31 | 0.63 | 0.54 | 0.91 |
| 0.019 | 0.96 | 0.80 | 0.61 | 0.71 |
| –0.11 | 0.87 | 0.89 | 0.69 | 0.51 |
| –0.27 | 0.94 | 0.81 | 0.63 | 0.50 |
| –0.28 | 0.28 | –0.11 | 0.49 | 0.59 |
| 0.42 | 0.11 | 0.61 | 0.42 | 0.59 |
| 0.67 | 0.77 | 0.78 | 0.36 | 0.36 |
| –0.96 | 0.78 | 0.48 | 0.43 | –0.24 |
| –0.12 | 0.69 | 0.82 | 0.63 | 0.56 |