A deep learning framework for automated and generalized synaptic event analysis

Figures

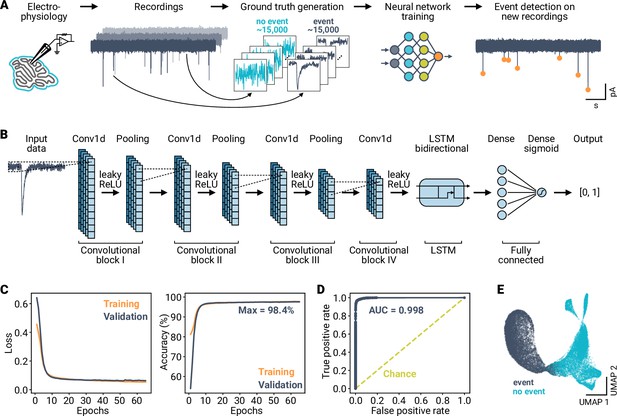

High performance classification of synaptic events using a deep neural network.

(A) Overview of the analysis workflow. Data segments from electrophysiological recordings are extracted and labeled to train an artificial neural network. The deep learning-based model is then applied to detect events in novel time-series data. (B) Schematic of the model design. Data is input to a convolutional network consisting of blocks of 1D convolutional, ReLU, and average pooling layers. The output of the convolutional layers is processed by a bidirectional LSTM block, followed by two fully connected layers. The final output is a label in the interval [0, 1]. (C) Loss (binary crossentropy) and accuracy of the model over training epochs for training and validation data. (D) Receiver operating characteristic of the best performing model. Area under the curve (AUC) is indicated; dashed line indicates performance of a random classifier. (E) UMAP representation of the training data as input to the final layer of the model, indicating linear separability of the two event classes after model training.
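The training metrics named in (C) and (D) can be illustrated with a minimal sketch in plain Python. The function names and the 0.5 decision threshold for accuracy are assumptions for illustration and are not taken from the miniML code; the AUC is computed here as the fraction of positive/negative pairs ranked correctly, which is equivalent to the area under the ROC curve.

```python
import math

def binary_cross_entropy(labels, probs, eps=1e-12):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)

def accuracy(labels, probs, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum((p >= threshold) == bool(y) for y, p in zip(labels, probs))
    return hits / len(labels)

def roc_auc(labels, probs):
    """Probability that a random positive scores above a random negative (ties count 0.5)."""
    pos = [p for y, p in zip(labels, probs) if y == 1]
    neg = [p for y, p in zip(labels, probs) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))
```

A random classifier yields an AUC near 0.5 under this definition, which corresponds to the dashed line in (D).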

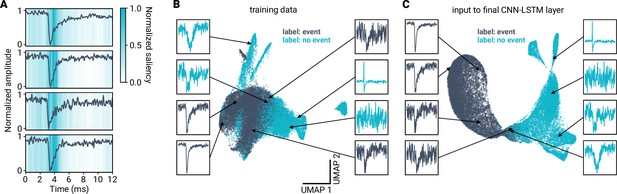

Visualization of model training.

(A) Saliency maps (Simonyan et al., 2013) for four example events of the training data. Darker regions indicate discriminative data segments. Data and saliency values are min-max scaled. (B) The miniML model transforms input to enhance separability. Shown is a Uniform Manifold Approximation and Projection (UMAP) dimensionality reduction of the original training dataset. (C) UMAP of the input to the final ML model layer. Examples of labeled training samples are illustrated. Model training greatly improves linear separability of the two labeled event classes.

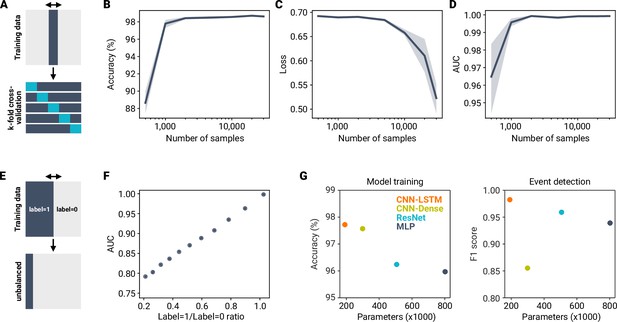

Impact of dataset size, class balance, and model architecture on training performance.

(A) To test how size of the training dataset impacts model training, we took random subsamples from the MF–GC dataset and trained miniML models using fivefold cross validation. (B–D) Comparison of loss (B), accuracy (C) and area under the ROC curve (AUC; D) across increasing dataset sizes. Data are means of model training sessions with k-fold cross-validation. Shaded areas represent SD. Note the log-scale of the abscissa. (E) Comparison of model training with unbalanced training data. (F) Area under the ROC curve for models trained with different levels of unbalanced training data. Unbalanced datasets impair classification performance. (G) Accuracy and F1 score for different model architectures plotted against number of free parameters. The CNN-LSTM architecture provided the best model performance with the lowest number of free parameters. EarlyStopping was used for all models to prevent overfitting (difference between training and validation accuracy <0.3%). ResNet, Residual Neural Network; MLP, multi-layer perceptron.
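The subsampling and fivefold cross-validation described in (A) could be sketched as follows. This is an illustration only: the helper names, the seed, and the dataset sizes in the demo are hypothetical and do not come from the miniML code.

```python
import random

def subsample_indices(n_total, n_subset, seed=0):
    """Draw a random subsample (without replacement) of dataset indices."""
    rng = random.Random(seed)
    return rng.sample(range(n_total), n_subset)

def k_fold_splits(indices, k=5):
    """Yield (train, validation) index lists; each sample is validated exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val

if __name__ == "__main__":
    # Hypothetical sizes: 1,000 samples drawn from a dataset of 30,000.
    subset = subsample_indices(n_total=30000, n_subset=1000)
    for fold, (train, val) in enumerate(k_fold_splits(subset, k=5)):
        print(fold, len(train), len(val))
```

Averaging a metric over the five validation folds, and taking its SD, gives the kind of summary plotted in (B–D).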

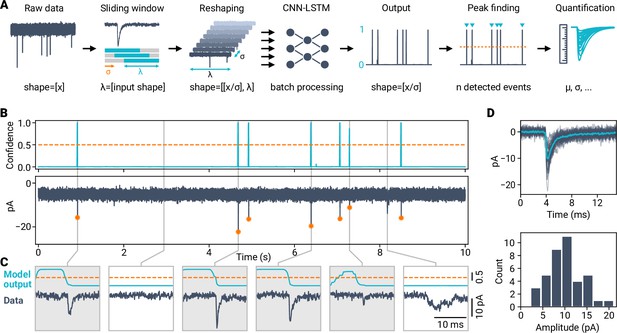

Applying AI-based classification to robustly detect synaptic events in electrophysiological time-series data.

(A) Event detection workflow using a deep learning classifier. Time-series data are reshaped with a window size corresponding to the length of the training data and a given stride. Peak detection of the model output allows event localization (orange dashed line indicates peak threshold) and subsequent quantification. (B) Example of event detection in a voltage-clamp recording from a cerebellar granule cell. Prediction trace (top) and corresponding raw data with synaptic events (bottom). Dashed line indicates minimum peak height of 0.5, orange circles indicate event localizations. (C) Zoom in for different data segments. Detected events are highlighted by gray boxes. (D) Event analysis for the cell shown in (B) (total recording time, 120 s). Top: Detected events with average (light blue). Bottom: Event amplitude histogram.
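The workflow in (A) can be sketched in plain Python: slide a window over the trace, score each window, then localize events as peaks of the score trace above a minimum height. The classifier below is a hypothetical toy heuristic standing in for the trained network, and the peak finder is a simplification that takes the maximum of each contiguous run of scores at or above the threshold. All names are assumptions for illustration.

```python
def sliding_windows(trace, window, stride):
    """Return (start_index, segment) pairs covering the trace with the given stride."""
    return [(s, trace[s:s + window])
            for s in range(0, len(trace) - window + 1, stride)]

def toy_classifier(segment):
    """Hypothetical stand-in for the trained model: returns a score in [0, 1].

    Scores the largest downward deflection relative to the first sample of
    the window. A real model would be a trained network, not this heuristic.
    """
    deflection = segment[0] - min(segment)
    return min(1.0, max(0.0, deflection / 10.0))

def find_peaks_above(scores, min_height=0.5):
    """Index of the maximum within each contiguous run of scores >= min_height."""
    peaks, run = [], []
    for i, s in enumerate(scores):
        if s >= min_height:
            run.append(i)
        elif run:
            peaks.append(max(run, key=lambda j: scores[j]))
            run = []
    if run:
        peaks.append(max(run, key=lambda j: scores[j]))
    return peaks

def detect_events(trace, window, stride, classifier, min_height=0.5):
    """Return window start indices at which the score trace peaks above min_height."""
    starts, scores = [], []
    for start, segment in sliding_windows(trace, window, stride):
        starts.append(start)
        scores.append(classifier(segment))
    return [starts[p] for p in find_peaks_above(scores, min_height)]
```

Because the positions are reported as window start indices, a smaller stride gives finer temporal resolution of the localization at the cost of more windows to score.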

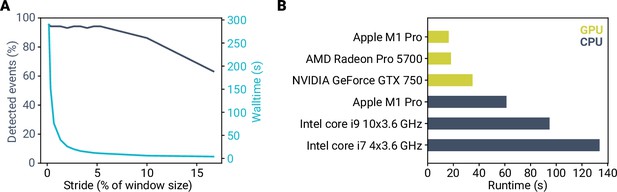

Fast computation time for event detection using miniML.

(A) Detected events and analysis runtime plotted versus stride. Note that runtime can be minimized by using stride sizes up to 5% of the event window size without impacting detection performance. (B) Analysis runtime with different computer hardware for a 120-s long recording at 50 kHz sampling (total of 6,000,000 samples). Runtime is given as wall time including event analysis. GPU computing enables analysis runtimes shorter than 20 s.
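The effect of stride on the workload can be estimated with simple arithmetic, sketched below. The window length of 600 samples is an assumption taken from the input length listed in the model architecture table; the helper name is hypothetical.

```python
def n_windows(n_samples, window, stride):
    """Number of full windows obtained by sliding over n_samples with the given stride."""
    if n_samples < window:
        return 0
    return (n_samples - window) // stride + 1

n_samples = 120 * 50_000        # 120 s at 50 kHz = 6,000,000 samples
window = 600                    # assumed window length (see lead-in)
stride = window * 5 // 100      # 5% of the window = 30 samples

windows_stride_1 = n_windows(n_samples, window, 1)
windows_stride_5pct = n_windows(n_samples, window, stride)
```

Under these assumptions, a stride of 5% of the window reduces the number of windows to classify by a factor of about 30 relative to a stride of one sample.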

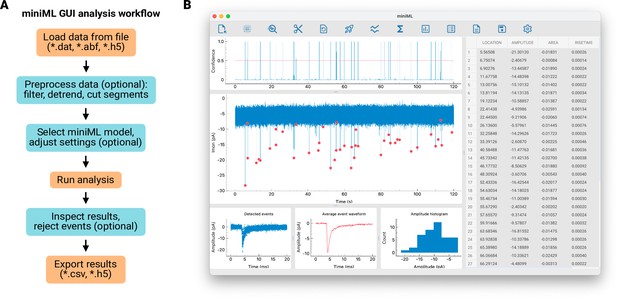

A graphical user interface for miniML.

(A) Workflow for synaptic event analysis using miniML. Optional steps include data pre-processing, model selection, and event rejection. (B) Screenshot of the graphical user interface (GUI). Users can use the GUI to load, inspect, and analyze data. After running the event detection, all detected events are marked by red dots. Individual event parameters are displayed in tabular form, where events can be enlarged and rejected. The final results can be saved in different formats via the GUI.

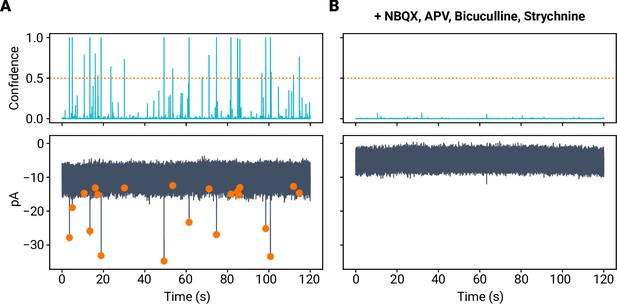

miniML performance on event-free data.

(A) Confidence (top) and raw data with detected events from a MF–GC recording. Dashed line indicates miniML minimum peak height. (B) Recording from the same cell after addition of blockers of synaptic transmission (NBQX, APV, Bicuculline, Strychnine). miniML does not detect any events under these conditions. Note that addition of Bicuculline blocks tonic inhibition in cerebellar GCs, causing a reduction in holding current and reduced noise (Kita et al., 2021).

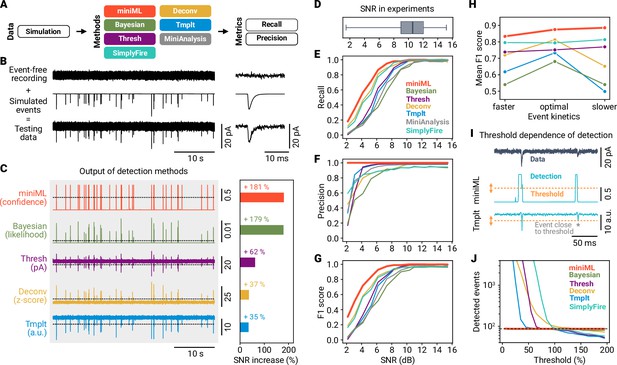

Systematic benchmarking demonstrates that AI-based event detection is superior to previous methods.

(A) Scheme of event detection benchmarking. Six methods are compared using precision and recall metrics. (B) Event-free recordings were superimposed with generated events to create ground-truth data. Depicted example data have a signal-to-noise ratio (SNR) of 9 dB. (C) Left: Output of the detection methods for data in (B). Right: Improvement in SNR relative to the data. Note that MiniAnalysis is omitted from this analysis because the software does not provide output trace data. (D) SNR from mEPSC recordings at MF–GC synapses (n = 170, whiskers cover full range of data). (E–G) Recall, precision, and F1 score versus SNR for the six different methods. Data are averages of three independent runs for each SNR. (H) Average F1 score versus event kinetics. Detection methods relying on an event template (template-matching, deconvolution and Bayesian) are not very robust to changes in event kinetics. (I) Evaluating the threshold dependence of detection methods. Asterisk marks event close to detection threshold. (J) Number of detected events vs. threshold (in % of default threshold value, range 5–195) for different methods. Dashed line indicates true event number.
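Precision, recall, and F1 score against ground-truth event positions can be computed as in the following sketch. The greedy nearest-match rule and the tolerance parameter are assumptions for illustration; the matching criterion used in the benchmark itself is not specified in this legend.

```python
def match_events(detected, truth, tolerance):
    """Greedily pair each true event with the nearest unused detection within tolerance.

    Returns (true positives, false positives, false negatives).
    """
    detected = sorted(detected)
    used = set()
    tp = 0
    for t in sorted(truth):
        best = None
        for i, d in enumerate(detected):
            if i in used or abs(d - t) > tolerance:
                continue
            if best is None or abs(d - t) < abs(detected[best] - t):
                best = i
        if best is not None:
            used.add(best)
            tp += 1
    return tp, len(detected) - tp, len(truth) - tp

def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F1); 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, with true events at samples 100, 500, and 900, detections at 102, 495, 700, and 1300, and a tolerance of 10 samples, two detections are matched, giving a precision of 0.5 and a recall of 2/3.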

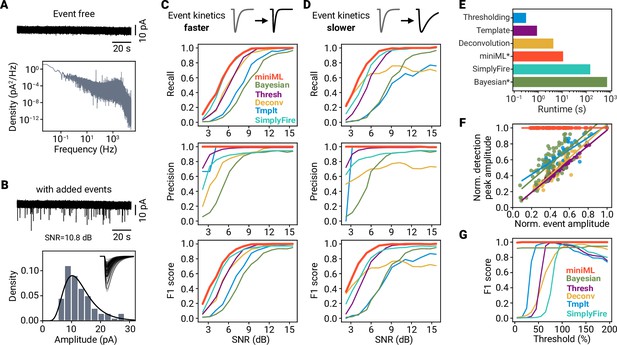

Extended benchmarking and threshold dependence of event detection.

(A) Example event-free recording (Top) and power density spectrum of the data (Bottom). (B) Top: Data trace from (A) superimposed with simulated synaptic events. Bottom: Amplitude histogram of simulated events for a signal-to-noise ratio (SNR) of 10.8 dB. Solid line represents the log-normal distribution from which event amplitudes were drawn; Inset shows individual simulated events. (C) Recall, precision and F1 score for five detection methods with simulated events that have a 2× faster decay than in Figure 3. (D) Same as in (C), but for events with a 4.5× slower decay time constant. Note that we did not adjust detection hyperparameters for the comparisons in (C) and (D). (E) Runtime of five different synaptic event detection methods for a 120 s section of data recorded with 50 kHz sampling rate. Average wall-time of five runs. * miniML was run using a GPU, and data were downsampled to 20 kHz for the Bayesian analysis. (F) Normalized amplitude of the peaks in the different methods’ detection traces (‘detection peak amplitude’) versus normalized event amplitude. A linear dependence indicates that detection traces contain implicit amplitude information, which will cause a strong threshold dependence of the detection result. Note that miniML’s output does not contain amplitude information. (G) F1 score versus threshold (in % of default threshold value, range 5–195) for different methods.

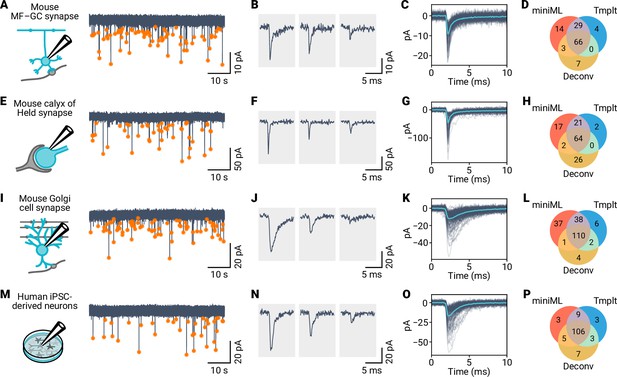

Application of miniML to electrophysiological recordings from diverse synaptic preparations.

(A) Schematic and example voltage-clamp recordings from mouse cerebellar GCs. Orange circles mark detected events. (B) Representative individual mEPSC events detected by miniML. (C) All detected events from (A), aligned and overlaid with average (light blue). (D) Detected event numbers for miniML, template matching, and deconvolution. (E–H) Same as in (A–D), but for recordings from mouse calyx of Held synapses. (I–L) Same as in (A–D), but for recordings from mouse cerebellar Golgi cells. (M–P) Same as in (A–D), but for recordings from cultured human induced pluripotent stem cell (iPSC)-derived neurons. miniML consistently detects spontaneous events in all four preparations and retrieves more events than matched-filtering approaches.

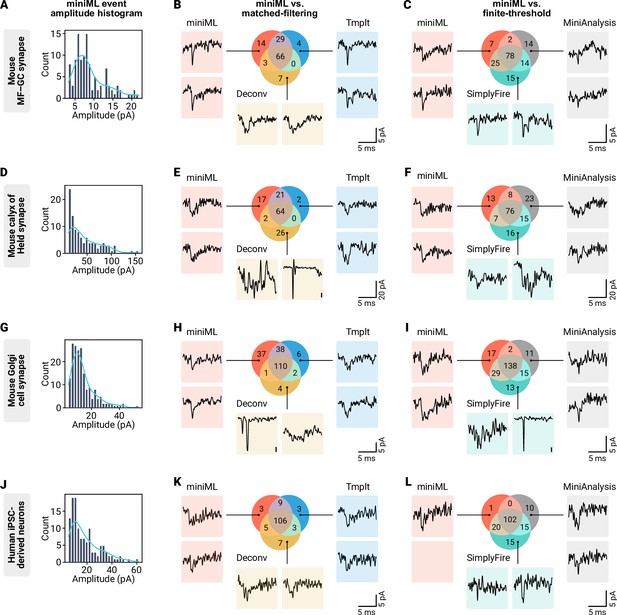

Event detection in different synaptic preparations.

(A) Amplitude histogram with kernel-density estimate (light blue line) of miniML-detected events for the mouse granule cell recording shown in Figure 4. (B) Detected events for miniML and matched-filtering approaches. Color-coded representative examples of events unique to any of the three detection methods are shown. (C) Detected events for miniML and two finite-threshold approaches with representative unique detected events. (D–F) Same as in (A–C), but for the calyx of Held example. (G–I) Same as in (A–C), but for the Golgi cell example. Due to the slower event kinetics, miniML was run with a 1.5× larger window size. (J–L) Same as in (A–C), but for the hiPSC-derived neuron example. Example event traces were filtered for display purposes with an 18-sample Hann window.

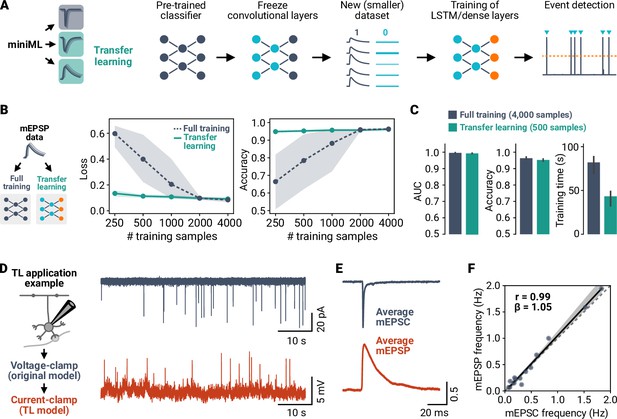

Transfer learning allows analyzing different types of events with small amounts of training data.

(A) Illustration of the transfer learning (TL) approach. The convolutional layers of a trained miniML model are frozen before retraining with new, smaller datasets. (B) Comparison of TL and full training for MF–GC mEPSP data. Shown are loss and accuracy versus sample size for full training and TL. Indicated subsets of the dataset were split into training and validation sets; lines are averages of fivefold cross-validation, shaded areas represent 95% CI. Note the log-scale of the abscissa. (C) Average AUC, accuracy, and training time of models trained with the full dataset or with TL on a reduced dataset. TL models yield comparable classification performance with reduced training time using only 12.5% of the samples. (D) Example recordings of synaptic events in voltage-clamp and current-clamp mode consecutively from the same neuron. (E) Average peak normalized mEPSC and mEPSP waveform from the example in (D). Note the different event kinetics depending on recording mode. (F) Event frequency for mEPSPs plotted against mEPSCs. Dashed line represents identity, solid line with shaded area represents linear regression.

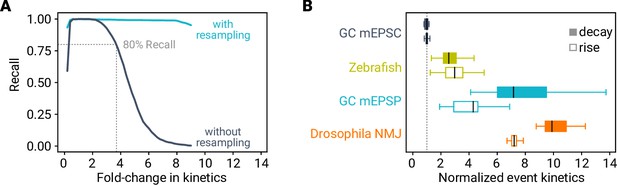

Recall depends on event kinetics.

(A) Recall versus event kinetics for the MF–GC model. Kinetics (i.e. rise and decay time constants) of simulated events were changed as indicated. miniML robustly detects events with up to ∼fourfold slower kinetics (dark blue, dashed line indicates 80% recall). Resampling of the data improves the recall in synthetic data with altered event kinetics (light blue). (B) Event kinetics for different preparations and/or recording modes. Data are normalized to MF–GC mEPSCs (dashed line).

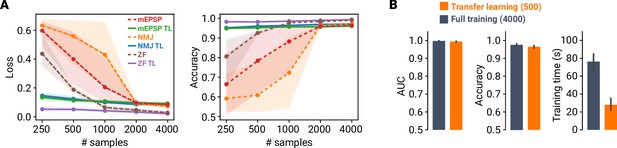

Transfer learning facilitates model training across different datasets.

(A) Loss and accuracy versus number of training dataset samples for three different datasets (mouse MF–GC mEPSPs, Drosophila NMJ mEPSCs, zebrafish (ZF) spontaneous EPSCs). Dashed lines indicate transfer learning (TL), whereas solid lines depict results from full training. Points are averages of fivefold cross-validation and shaded areas represent 95% CI. (B) Average AUC, accuracy, and training time for TL using 500 samples, and full training using 4000 samples. Error bars denote 95% CI.

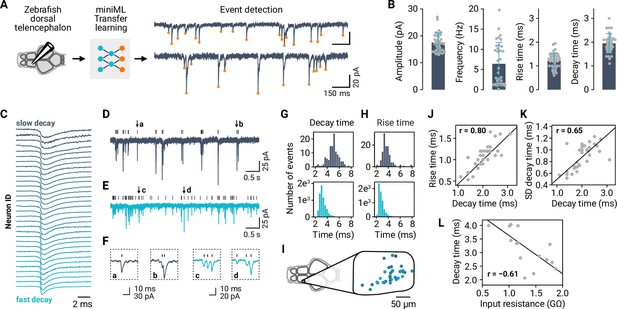

Synaptic event detection for neurons in a full-brain explant preparation of adult zebrafish.

(A) Application of TL to facilitate event detection for EPSC recordings. (B) Extraction of amplitudes, event frequencies, decay times, and rise times for all neurons in the dataset (n = 34). Bars are means and error bars denote SD. (C) Typical (mean) event kinetics for the analyzed neurons, ordered by decay times. Event traces are peak-normalized. (D–E) Example recordings with slow (dark blue) and fast (light blue) event kinetics. (F) Examples of events taken from (D–E), illustrating the diversity of kinetics within and across neurons. (G) Distribution of event decay kinetics across single events for two example neurons (traces shown in (D–E)). (H) Distribution of event rise kinetics across single events for same two example neurons. (I) Mapping of decay times (color-coded as in (C)) onto the anatomical map of the recording subregion of the telencephalon. (J) Mean decay and rise times are correlated across neurons. (K) Decay time distributions are broader (SD of decay time distributions) when mean decay times are larger. (L) Input resistance as a proxy for cell size is negatively correlated with the decay time.

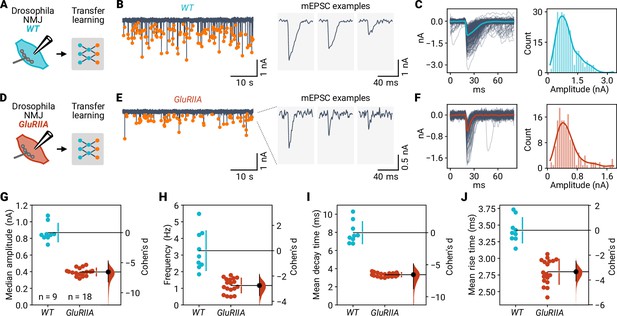

Event detection at Drosophila neuromuscular synapses upon altered glutamate receptor composition.

(A) Two-electrode voltage-clamp recordings from wild-type (WT) Drosophila NMJs were analyzed using miniML with transfer learning. (B) Left: Example voltage-clamp recording with detected events highlighted. Right: Three individual mEPSCs on expanded time scale. (C) Left: All detected events from the example in (B) overlaid with the average (blue line). Right: Event amplitude histogram. (D–F) Same as in (A–C), but for GluRIIA mutant flies. (G) Comparison of median event amplitude for WT and GluRIIA NMJs. Both groups are plotted on the left axes; Cohen’s d is plotted on a floating axis on the right as a bootstrap sampling distribution (red). The mean difference is depicted as a dot (black); the 95% confidence interval is indicated by the ends of the vertical error bar. (H) Event frequency is lower in GluRIIA mutant NMJs than in WT. (I) Knockout of GluRIIA speeds event decay time. (J) Faster event rise times in GluRIIA mutant NMJs.
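The effect-size display in (G) rests on Cohen's d and a bootstrap confidence interval. The sketch below uses the pooled-SD form of Cohen's d and a simple percentile bootstrap; this is a simplification for illustration, and the exact estimator and bootstrap variant used for the figure are not stated in this legend. Function names and defaults are assumptions.

```python
import random
import statistics

def cohens_d(a, b):
    """Cohen's d of group b relative to group a, using the pooled sample SD."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)
    pooled_sd = (((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)) ** 0.5
    return (statistics.mean(b) - statistics.mean(a)) / pooled_sd

def bootstrap_ci(a, b, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for Cohen's d."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_boot):
        ra = rng.choices(a, k=len(a))  # resample each group with replacement
        rb = rng.choices(b, k=len(b))
        try:
            samples.append(cohens_d(ra, rb))
        except ZeroDivisionError:
            continue  # skip degenerate resamples with zero pooled variance
    samples.sort()
    n = len(samples)
    lower = samples[int((alpha / 2) * n)]
    upper = samples[max(int((1 - alpha / 2) * n) - 1, 0)]
    return lower, upper
```

Calling `bootstrap_ci(wt, mutant)` with per-NMJ median amplitudes (hypothetical variable names) returns the lower and upper bounds that would correspond to the ends of the vertical error bar.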

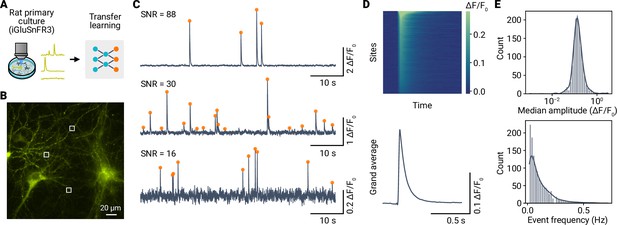

Optical detection of spontaneous glutamate release events in cultured neurons using iGluSnFR3 and miniML.

(A) miniML was applied to recordings from rat primary culture neurons expressing iGluSnFR3. Data from Aggarwal et al., 2023. (B) Example epifluorescence image of iGluSnFR3-expressing cultured neurons. Image shows a single frame with three example regions of interest (ROIs) indicated. (C) Fluorescence traces for the regions shown in (B). Orange circles indicate detected optical minis. Note the different signal-to-noise ratios of the examples. (D) Top: Heatmap showing average optical minis for all sites with detected events, sorted by amplitude. Bottom: Grand average optical mini. (E) Top: Histogram with kernel density estimate (solid line) of event amplitudes for n = 1524 ROIs of the example in (B). Note the log-scale of the abscissa. Bottom: Event frequency histogram.

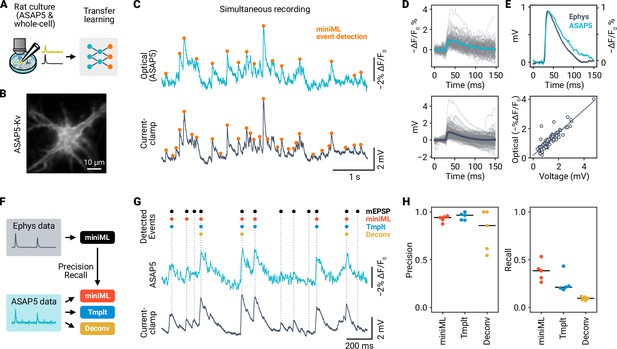

Optical detection of mEPSPs in cultured rat hippocampal neurons using ASAP5 and miniML.

(A) Event detection in simultaneous recordings of membrane voltage using electrophysiology and ASAP5 voltage imaging. (B) Example epifluorescence image of an ASAP5-Kv-expressing cultured rat hippocampal neuron. (C) ASAP5 trace (Top) and simultaneous current-clamp recording (Bottom) from the neuron shown in (B). Events detected by miniML are indicated. (D) Detected events from the example recording in (B–C) overlaid with average (colored line). Top: ASAP5-Kv recording. Bottom: Current-clamp recording. (E) Top: Peak-scaled average mEPSP (dark blue) and optical events (light blue). Bottom: Amplitudes measured in optical and electrophysiological data are highly correlated (Pearson correlation coefficient r = 0.9). (F) Comparative analysis of detection performance in ASAP5 data. (G) Example ASAP5 recording with detected event positions indicated for different analysis methods (miniML, template matching, deconvolution). (H) Precision and recall of all three detection methods for n = 5 neurons. miniML showed highest recall (miniML, 0.38 ± 0.05; template matching, 0.25 ± 0.05 (Cohen’s d, 1.25); deconvolution, 0.09 ± 0.01 (Cohen’s d, 3.87); mean ± SEM) with similar precision (0.93 ± 0.01; 0.95 ± 0.02; 0.8 ± 0.1). Bars are median values.

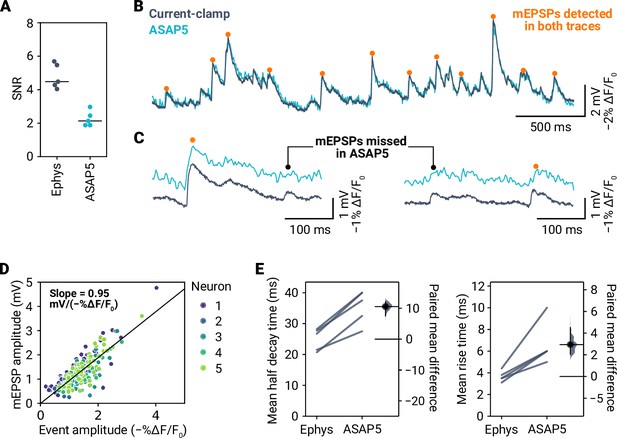

mEPSP detection in ASAP5 recordings.

(A) Signal-to-noise ratio (SNR) of mEPSPs in electrophysiology and ASAP5 for five neurons. (B) Overlay of simultaneous current-clamp and ASAP5 recording. Events detected by miniML in both types of recordings are indicated. (C) Examples of mEPSPs detected in the electrophysiology data that were either detected by miniML in ASAP5 data (orange dots) or missed (black dots). (D) mEPSP amplitude versus optical amplitude for detected events in five neurons (color-coded). Line is a linear fit to the data (slope = 0.95 mV/−%, Pearson correlation coefficient = 0.83). (E) Event half decay time (Left) and rise time (Right) for five neurons from electrophysiology (‘Ephys’) and ASAP5 data. Data were calculated from the event averages of each cell. The lower sampling rate of imaging acquisition (400 Hz) vs. electrophysiology (10,000 Hz) likely contributes to the slower event kinetics observed in ASAP5 data.
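The linear fit and correlation coefficient reported in (D) correspond to an ordinary least-squares regression of mEPSP amplitude on optical amplitude and a Pearson correlation. A minimal sketch in plain Python follows; the function names are assumptions, and any input values are up to the user since the underlying data are not reproduced here.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def linear_fit(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx
```

With optical amplitudes as `x` and mEPSP amplitudes as `y`, the returned slope has the units of the one quoted in the legend (mV per unit of optical amplitude).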

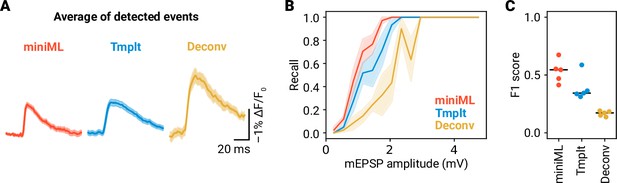

Methods comparison for event detection in ASAP5 recordings.

(A) Average waveforms of detected events in ASAP5 data for miniML (n = 101 events), template-matching (n = 82 events), and deconvolution (n = 15 events). Data are from the example shown in Figure 9C, shaded areas represent SEM. (B) Recall of events in ASAP5 data versus mEPSP amplitude for miniML, template-matching, and deconvolution. Lines are averages of five recordings with SEM as shaded area. (C) F1 score for event detection in ASAP5 data was higher using miniML (0.53 ± 0.04, mean ± SEM) than for template-matching (0.39 ± 0.05; Cohen’s d, 1.35) and deconvolution (0.17 ± 0.01; Cohen’s d, 5.1). Bars are median values, n = 5 neurons.

Tables

| Reagent type (species) or resource | Designation | Source or reference | Identifiers | Additional information |
|---|---|---|---|---|
| Strain, strain background (Mus musculus, ♀ and ♂) | C57BL/6JRj | Janvier Labs | RRID:MGI:2670020 | |
| Chemical compound, drug | D-APV | Tocris | Cat. # 0106 | 50 µM |
| Chemical compound, drug | NBQX | HelloBio | Cat. # HB0443 | 10 µM |
| Chemical compound, drug | Bicuculline | Sigma | Cat. # 14343 | 10 µM |
| Chemical compound, drug | Strychnine | Sigma | Cat. # S8753 | 1 µM |
| Software, algorithm | Patchmaster | HEKA Elektronik | RRID:SCR_000034 | Version 2x90.5 |
| Software, algorithm | Mini Analysis Program | Synaptosoft | RRID:SCR_002184 | Version 6.0 |
| Software, algorithm | Python | Python Software Foundation | RRID:SCR_008394 | Version 3.9 or 3.10 |
| Software, algorithm | TensorFlow | | RRID:SCR_016345 | Version 2.12 or 2.15 |
| Other | Borosilicate glass | Science Products | Cat. # GB150F-10P | Outer/inner diameter: 1.5/0.86 mm |

Overview of model architecture.

| Block | Layer | Output shape | Parameters | Settings | Values |
|---|---|---|---|---|---|
| Convolutional Block I | 1D Convolutional Layer | (None, 600, 32) | 320 | Filters | 32 |
| | | | | Kernel Size | 9 |
| | | | | Padding | 'same' |
| | Batch Normalization | (None, 600, 32) | 128 | All | default |
| | Leaky ReLU | (None, 600, 32) | 0 | | 0.3 |
| | Avg. Pooling | (None, 200, 32) | 0 | Pool Size | 3 |
| | | | | Strides | 3 |
| Convolutional Block II | 1D Convolutional Layer | (None, 200, 48) | 10,800 | Filters | 48 |
| | | | | Kernel Size | 7 |
| | | | | Padding | 'same' |
| | Batch Normalization | (None, 200, 48) | 192 | All | default |
| | Leaky ReLU | (None, 200, 48) | 0 | | 0.3 |
| | Avg. Pooling | (None, 100, 48) | 0 | Pool Size | 2 |
| | | | | Strides | 2 |
| Convolutional Block III | 1D Convolutional Layer | (None, 100, 64) | 15,424 | Filters | 64 |
| | | | | Kernel Size | 5 |
| | | | | Padding | 'same' |
| | Batch Normalization | (None, 100, 64) | 256 | All | default |
| | Leaky ReLU | (None, 100, 64) | 0 | | 0.3 |
| | Avg. Pooling | (None, 50, 64) | 0 | Pool Size | 2 |
| | | | | Strides | 2 |
| Convolutional Block IV | 1D Convolutional Layer | (None, 50, 80) | 15,440 | Filters | 80 |
| | | | | Kernel Size | 5 |
| | | | | Padding | 'same' |
| | Batch Normalization | (None, 50, 80) | 320 | All | default |
| | Leaky ReLU | (None, 50, 80) | 0 | | 0.3 |
| LSTM Layer | Bidirectional LSTM | (None, 96) | 135,936 | Units | 96 |
| | | | | Dropout Rate | 0.2 |
| | | | | Merge Mode | 'sum' |
| | | | | Activation | tanh |
| Fully Connected Layers | Dense | (None, 128) | 12,416 | Units | 128 |
| | | | | Activation | Leaky ReLU |
| | Dropout | (None, 128) | 0 | Dropout Rate | 0.2 |
| | Dense | (None, 1) | 129 | Units | 1 |
| | | | | Activation | sigmoid |
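The parameter counts in the table can be cross-checked with the standard formulas for each layer type, sketched below in plain Python. The helper names are assumptions, and the formulas are the usual ones for 1D convolution with bias, batch normalization (four values per channel), an LSTM with four gates, and a dense layer with bias; they are not taken from the miniML code. A single input channel is assumed for the first convolution.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters

def batchnorm_params(channels):
    """Scale, offset, moving mean, and moving variance per channel."""
    return 4 * channels

def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    """Weights plus one bias per unit."""
    return input_dim * units + units

counts = {
    "conv_I": conv1d_params(9, 1, 32),            # 320
    "conv_II": conv1d_params(7, 32, 48),          # 10,800
    "conv_III": conv1d_params(5, 48, 64),         # 15,424
    "bilstm": 2 * lstm_params(80, 96),            # two directions: 135,936
    "dense_128": dense_params(96, 128),           # 12,416
    "dense_1": dense_params(128, 1),              # 129
    "conv_IV_kernel_5": conv1d_params(5, 64, 80), # 25,680
    "conv_IV_kernel_3": conv1d_params(3, 64, 80), # 15,440
}
```

Under these formulas, the counts for Blocks I to III, the bidirectional LSTM, and both dense layers reproduce the table. For Block IV, the listed 15,440 parameters correspond to a kernel size of 3, whereas the listed kernel size of 5 would give 25,680; the sketch only reports this arithmetic and does not determine which entry is intended.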