Peer review process

Not revised: This Reviewed Preprint includes the authors’ original preprint (without revision), an eLife assessment, public reviews, and a provisional response from the authors.

Editors

- Reviewing Editor: Mimi Liljeholm, University of California, Irvine, Irvine, United States of America

- Senior Editor: Michael Frank, Brown University, Providence, United States of America

Reviewer #1 (Public review):

In this study, the authors aim to elucidate both how Pavlovian biases affect instrumental learning from childhood to adulthood and how reward outcomes during learning influence incidental memory. While prior work has investigated both of these questions, findings have been mixed. The authors aim to contribute additional evidence to clarify the nature of developmental changes in these processes. Using a well-validated affective learning task and a large age-continuous sample of participants, the authors reveal that adolescents outperform children and adults when Pavlovian biases and instrumental learning are aligned, but that learning performance does not vary by age when they are misaligned. They also show that younger participants show greater memory sensitivity for images presented alongside rewards.

The manuscript has notable strengths. The task was carefully designed and modified with a clever, developmentally appropriate cover story, and the large sample size (N = 174) means their study was better powered than many comparable developmental learning studies. The addition of the memory measure adds a novel component to the design. The authors transparently report their somewhat confusing findings.

The manuscript also has weaknesses, which I describe in detail below.

It was not entirely clear to me what central question the researchers aimed to address. They note that prior studies using a very similar learning task design have reported inconsistent findings, but they do not propose a reason for why these inconsistent findings may emerge nor do they test a plausible cause of them (in contrast, for example, Raab et al. 2024 explicitly tested the idea that developmental changes in inferences about controllability may explain age-related change in Pavlovian influences on learning). While the authors test a sample of participants that is very large compared to many developmental studies of reinforcement learning, this sample is much smaller than two prior developmental studies that have used the same learning task (and which the authors cite - Betts et al., 2020; Moutoussis et al., 2018). Thus, the overall goal seems to be to add an additional ~170 subjects of data to the existing literature, which isn't problematic per se, but doesn't do much to advance our theoretical understanding of learning across development. They happen to find a pattern of results that differs from all three prior studies, and it is not clear how to interpret this.

Along those lines, the authors extend prior work by adding a memory manipulation to the task, in which trial-unique images were presented alongside reward outcomes. It was not clear to me whether the authors see the learning and memory questions as fundamentally connected or as two separate research questions that this paradigm allows them to address. The manuscript would potentially be more impactful if the authors integrated their discussion of these two ideas more. Did they have any a priori hypotheses about how Pavlovian biases may affect the encoding of incidentally presented images? Could heightened reward sensitivity explain both changes in learning and changes in memory? It was also not clear to me why the authors hypothesized that younger participants would demonstrate the greatest effects of reward on memory, when most of the introduction seems to suggest they might hypothesize an adolescent peak in both learning and memory.

As stated above, while the task methods seemed sound, some of the analytic decisions are potentially problematic and/or require greater justification for the results of the study to be interpretable.

Firstly, it is problematic not to include random participant slopes in the regression models. Not accounting for individual variation in the effects of interest may inflate Type I errors. I would suggest that the authors start with the maximal model, or follow the same model selection procedure they did to select the fixed effects to include for the random effects as well.
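To make the request concrete, a by-participant random slope could be written as below. This is only a sketch under stated assumptions: trial-level accuracy $y_{ij}$ (trial $i$, participant $j$) is modelled with logistic regression, and $x_{ij}$ stands for a within-participant predictor such as task condition. The symbols are illustrative and are not taken from the manuscript.

```latex
\operatorname{logit} P(y_{ij} = 1)
  = (\beta_0 + u_{0j}) + (\beta_1 + u_{1j})\, x_{ij},
\qquad
\begin{pmatrix} u_{0j} \\ u_{1j} \end{pmatrix}
  \sim \mathcal{N}\!\left(\mathbf{0}, \Sigma\right)
```

Setting $u_{1j} = 0$ for all participants recovers a random-intercept-only model. Assuming each participant was tested at a single age, random slopes by participant can be specified only for within-participant predictors, not for age itself.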

Secondly, the central learning finding - that adolescents demonstrate enhanced learning in Pavlovian-congruent conditions only - is interesting, but it is unclear why this is the case or how much should be made of this finding. The authors show that adolescents outperform others in the Pavlovian-congruent conditions but not the Pavlovian-incongruent conditions. However, this conclusion is made by analyzing the two conditions separately; they do not directly compare the strength of the adolescent peak across these conditions, which would be needed to draw this strong conclusion. Given that no prior study using the same learning design has found this, the authors should ensure that their evidence for it is strong before drawing firm conclusions.
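One way to express the direct comparison is a single model containing an age-squared by congruency interaction. The formulation below is a hedged sketch, not the authors' model: $a_j$ denotes the (centered) age of participant $j$, $c_{ij}$ is an indicator that equals 1 on Pavlovian-congruent trials and 0 otherwise, and $u_{0j}$ is a by-participant random intercept. All symbols are assumptions introduced for illustration.

```latex
\operatorname{logit} P(y_{ij} = 1)
  = \beta_0 + \beta_1 a_j + \beta_2 a_j^2
  + \beta_3 c_{ij} + \beta_4 a_j c_{ij} + \beta_5 a_j^2 c_{ij} + u_{0j}
```

Under this coding, the quadratic age coefficient is $\beta_2$ in incongruent conditions and $\beta_2 + \beta_5$ in congruent conditions, so the claim that the adolescent peak is specific to congruent conditions corresponds to a test of $\beta_5 \neq 0$.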

It was also not clear to me whether any of the RL models that the authors fit could potentially explain this pattern. Presumably, they need an algorithmic mechanism in which the Pavlovian bias is enhanced when it is rewarded. This seems potentially feasible to implement and could help explain the condition-specific performance boosts.

I also have major concerns about the computational model-fitting results. While the authors seemingly follow a sound approach, the majority of the fitted lapse rates (Figure S10) are near 1. This suggests that for most participants, the best-fitting model is one in which choices are random. This may be why the authors do not observe age-related change in model parameters: for these subjects, the other parameter values are essentially meaningless since they contribute to the learned value estimate, which gets multiplied by a near-0 weight in the choice function. It is important that the authors clarify what is going on here. Is it the case that most of these subjects truly choose at random? It does seem from Figure 2A that there is extensive variability in performance. It might be helpful if the authors re-analyze their data, excluding participants who show no evidence of learning or of reward-seeking behavior. Alternatively, are there other biases that are not being accounted for (e.g., choice perseveration) that may contribute to the high lapse rates?
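The concern can be illustrated with a small numerical sketch. It assumes a choice rule of the form commonly used with this task, in which a softmax over action weights is mixed with uniform random responding through a lapse parameter; the exact parameterization in the manuscript may differ, and the function name `p_go` and the example action weights are hypothetical.

```python
import math


def p_go(w_go: float, w_nogo: float, lapse: float) -> float:
    """Probability of a Go response under a softmax mixed with a lapse term.

    Assumed form: P(Go) = (1 - lapse) * softmax(w_go, w_nogo) + lapse / 2.
    """
    softmax_go = 1.0 / (1.0 + math.exp(-(w_go - w_nogo)))
    return (1.0 - lapse) * softmax_go + lapse / 2.0


# Compare a weak and a strong learned preference for Go at several lapse rates.
for lapse in (0.05, 0.5, 0.95):
    weak = p_go(0.5, 0.0, lapse)
    strong = p_go(3.0, 0.0, lapse)
    print(f"lapse={lapse:.2f}  P(Go|weak)={weak:.3f}  P(Go|strong)={strong:.3f}")
```

As the lapse rate approaches 1, the weak and strong preferences yield nearly identical choice probabilities close to 0.5, so the parameters that shape the action weights have almost no effect on the likelihood and are poorly constrained by the data.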

Parameter recovery also looks poor, particularly for gain and loss sensitivity, the lapse rate, and the Pavlovian bias, which are several of the parameters of interest. As noted above, this may be because many of the simulations were conducted with lapse rates sampled from the empirical distribution. It would be helpful for the authors to (a) plot parameter recoverability separately for high and low lapse rates and (b) report the recoverability correlation for each parameter separately.
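The two requested summaries could be computed along the following lines. This is a minimal sketch: the function names, the 0.5 split threshold, the parameter name `pavlovian_bias`, and all numeric values are hypothetical and made up purely for illustration, not taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def recovery_by_lapse(simulated, recovered, lapse, threshold=0.5):
    """Recovery correlation per parameter, split by generating lapse rate.

    simulated / recovered: dict mapping parameter name -> list of values.
    lapse: generating lapse rate for each simulated agent.
    """
    groups = {
        "low": [i for i, l in enumerate(lapse) if l < threshold],
        "high": [i for i, l in enumerate(lapse) if l >= threshold],
    }
    out = {}
    for name in simulated:
        for label, idx in groups.items():
            xs = [simulated[name][i] for i in idx]
            ys = [recovered[name][i] for i in idx]
            out[(name, label)] = pearson_r(xs, ys)
    return out


# Made-up toy values, for illustration only.
lapse = [0.1, 0.2, 0.3, 0.8, 0.9, 0.95]
simulated = {"pavlovian_bias": [0.1, 0.5, 0.9, 0.2, 0.6, 1.0]}
recovered = {"pavlovian_bias": [0.15, 0.45, 0.95, 0.6, 0.5, 0.55]}

result = recovery_by_lapse(simulated, recovered, lapse)
for key, r in result.items():
    print(key, round(r, 2))
```

Reporting one correlation per parameter and per lapse-rate group would show whether poor recovery is confined to simulated agents with high lapse rates. Note that `pearson_r` is undefined when a group contains constant values.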

Finally, many of the analytic decisions made regarding the memory analyses were confusing and merit further justification.

(1) First, it seems as though the authors only analyze memory data from trials where participants "could gain a reward". Does this mean only half of the memory trials were included in the analyses? What about memory as a function of whether participants made a "correct" response? Or a correct x reward interaction effect?

(2) The RPE analysis overcomes this issue by including all trials, but the trial-wise RPEs are potentially not informative given the lapse rate issue described above.

(3) The authors exclude correct guesses but include incorrect guesses. Is this common practice in the memory literature? It seems like this could introduce some bias into the results, especially if there are age-related changes in meta-memory.

(4) Participants provided a continuum of confidence ratings, but the authors computed d' by discretizing memory into 'correct' or 'incorrect'. A more sensitive approach could compute memory ROC curves taking into account the full confidence data (e.g., Brady et al., 2020).

(5) The learning and memory tradeoff idea is interesting, but it was not clear to me what variables went into that regression model.
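To illustrate the contrast raised in point (4), the sketch below computes d' from binarized old/new responses and, separately, an area under the ROC curve that uses every confidence level. It is one simple way of using graded confidence, not necessarily the approach of the cited work. The function names, the log-linear correction, and the assumption that higher ratings mean greater confidence that an item is old are all choices made here for illustration.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false-alarm rate) from binarized responses.

    Adds 0.5 to each cell (log-linear correction, an assumption here)
    so that rates of exactly 0 or 1 do not give infinite z-scores.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


def roc_auc(old_ratings, new_ratings):
    """Area under the ROC traced by sweeping a criterion over confidence levels.

    Ratings are assumed to be ordered so that higher = more confident "old".
    """
    levels = sorted(set(old_ratings) | set(new_ratings), reverse=True)
    points = [(0.0, 0.0)]
    for c in levels:
        hit_rate = sum(r >= c for r in old_ratings) / len(old_ratings)
        fa_rate = sum(r >= c for r in new_ratings) / len(new_ratings)
        points.append((fa_rate, hit_rate))
    # Trapezoidal rule over consecutive (false-alarm rate, hit rate) points.
    return sum(
        (x1 - x0) * (y0 + y1) / 2.0
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )
```

An area of 0.5 indicates no discrimination between old and new items, and an area of 1.0 indicates perfect discrimination. Unlike binarized d', this measure changes when participants shift responses between confidence levels on the same side of the old/new boundary.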

Reviewer #2 (Public review):

The authors of this study set out to investigate whether adolescents demonstrate enhanced instrumental learning compared to children and adults, particularly when their natural instincts align with the actions required in a learning task, using the Affective Go/No-Go Task. Their aim was to explore how motivational drives, such as sensitivity to rewards versus avoiding losses, and the congruence between automatic responses to cues and deliberate actions (termed Pavlovian-congruency) influence learning across development, while also examining incidental memory enhancements tied to positive outcomes. Additionally, they sought to uncover the cognitive mechanisms underlying these age-related differences through behavioral analyses and reinforcement learning models.

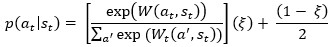

The study's major strengths lie in its rigorous methodological approach and comprehensive analysis. The use of mixed-effects logistic regression and beta-binomial regression models, with careful comparison of nested models to identify the best fit (e.g., a significant ΔBIC of 19), provides a robust framework for assessing age-related effects on learning accuracy. The task design, which separates action (pressing a key or holding back) from outcome type (earning money or avoiding a loss) across four door cues, effectively isolates these factors, allowing the authors to highlight adolescent-specific advantages in Pavlovian-congruent conditions (e.g., Go to Win and No-Go to Avoid Loss), supported by significant quadratic age interactions (p < .001). The inclusion of reaction time data and a behavioral metric of Pavlovian bias further strengthens the evidence, showing adolescents' faster responses and greater reliance on instinctual cues in congruent scenarios. The exploration of incidental memory, with a clear reward memory bias in younger participants (p < .001), adds a valuable dimension, suggesting a learning-memory trade-off that enriches the study's scope. However, weaknesses include minor inconsistencies, such as the reinforcement learning model's Pavlovian bias parameter not reflecting an adolescent enhancement despite behavioral evidence, and a weak correlation between learning and memory accuracy (r = -.17), which may indicate incomplete integration of these processes.

The authors largely achieved their aims, with the results providing convincing support for their conclusion that Pavlovian-congruency boosts instrumental learning in adolescence. The significant quadratic age effects on overall learning accuracy (p = .001) and in congruent conditions (e.g., p = .01 for Go to Win), alongside faster reaction times in these scenarios, convincingly demonstrate an adolescent peak in performance. While the reinforcement learning model's lack of an adolescent-specific Pavlovian bias parameter introduces a slight caveat, the behavioral and statistical evidence collectively align with the hypothesis, suggesting that adolescents leverage their natural instincts more effectively when these align with task demands. The incidental memory findings, showing younger participants' enhanced recall for reward-paired images, partially support the secondary aim, though the trade-off with learning accuracy warrants further exploration.

This work is likely to have an important impact on the field, offering valuable insights into developmental differences in learning and memory that could influence educational practices and psychological interventions tailored to adolescents. The methods, particularly the task's orthogonal design and probabilistic feedback, are useful to the community for studying motivation and cognition across ages, while the detailed regression analyses and reinforcement learning approach provide a solid foundation for future replication and extension. The data, including trial-by-trial accuracy and memory performance, are openly shareable, enhancing their utility for researchers exploring similar questions, though refining the model-parameter alignment could strengthen its broader applicability.