Peer review process

Revised: This Reviewed Preprint has been revised by the authors in response to the previous round of peer review; the eLife assessment and the public reviews have been updated where necessary by the editors and peer reviewers.

Editors

- Reviewing Editor: Michael Frank, Brown University, Providence, United States of America

- Senior Editor: Michael Frank, Brown University, Providence, United States of America

Reviewer #1 (Public Review):

People can perform a wide variety of different tasks, and a long-standing question in cognitive neuroscience is how the properties of different tasks are represented in the brain. The authors develop an interesting task that mixes two different sources of difficulty, and find that the brain appears to represent this mixture on a continuum, in the prefrontal areas involved in resolving task difficulty. While these results are interesting and in several ways compelling, they overlap with previous findings and rely on novel statistical analyses that may require further validation.

Strengths

1. The authors present an interesting and novel task for combining the contributions of stimulus-stimulus and stimulus-response conflict. While this mixture has been measured in the multi-source interference task (MSIT), this task provides a more graded mixture between these two sources of difficulty.

2. The authors do a good job triangulating regions that encode conflict similarity, looking for the conjunction across several different measures of conflict encoding. These conflict measures use several best-practice approaches towards estimating representational similarity.

3. The authors quantify several salient alternative hypotheses, and systematically distinguish their core results from these alternatives.

4. The question that the authors tackle is important to cognitive control, and they make a solid contribution.

Concerns

1. The framing of 'infinite possible types of conflict' feels like a strawman. While this might be true across stimuli (which may motivate a feature-based account of control), the authors explore the interpolation between two stimuli. Instead, this work provides confirmatory evidence that task difficulty is represented parametrically (e.g., consistent with literatures like n-back, multiple object tracking, and random dot motion). This parametric encoding is standard in feature-based attention, and it's not clear what the cognitive map framing is contributing.

2. The representations within DLPFC appear to treat 100% Stroop and (to a lesser extent) 100% Simon differently than mixed trials. Within mixed trials, the RDMs within this region don't strongly match the predictions of the conflict similarity model. It appears that there may be a more complex relationship encoded in this region.

3. To orthogonalize their variables, the authors need to employ a complex linear mixed effects analysis, with a potential influence of implementation details (e.g., high-level interactions and inflated degrees of freedom).

Reviewer #2 (Public Review):

Summary

This study examines the construct of "cognitive spaces" as they relate to neural coding schemes present in response conflict tasks. The authors use a novel experimental design in which different types of response conflict (spatial Stroop, Simon) are parametrically manipulated. These conflict types are hypothesized to be encoded jointly, within an abstract "cognitive space", in which distances between task conditions depend only on the similarity of conflict types (i.e., where conditions with similar relative proportions of spatial-Stroop versus Simon conflicts are represented with similar activity patterns). Authors contrast such a representational scheme for conflict with several other conceptually distinct schemes, including a domain-general, domain-specific, and two task-specific schemes. The authors conduct a behavioral and fMRI study to test whether prefrontal cortex activity is correlated to one of these coding schemes. Replicating the authors' prior work, this study demonstrates that sequential behavioral adjustments (the congruency sequence effect) are modulated as a function of the similarity between conflict types. In fMRI data, univariate analyses identified activation in left prefrontal and dorsomedial frontal cortex that was modulated by the amount of Stroop or Simon conflict present, and representational similarity analyses identified coding of conflict similarity, as predicted under the cognitive space model, in right lateral prefrontal cortex.

Strengths

This study addresses an important question regarding how conflict or difficulty might be encoded in the brain within a computationally efficient representational format. Relative to the other models reported in the paper, the evidence in support of the cognitive space model is solid. The ideas postulated by the authors are interesting and valuable ones, worthy of follow-up work that provides additional necessary scrutiny of the cognitive-space account.

Weaknesses

Future, within-subject experiments will be necessary to disentangle the cognitive space model from confounded task variables. A between-subjects manipulation of stimulus orientation/location renders the results difficult to interpret, as the source and spatial scale of the conflict encoding on cortex may differ from more rigorous (and more typical) within-subject manipulations.

Results are also difficult to interpret because Stroop and Simon conflict are confounded with each other. For interpretability, these two sources of conflict need to be manipulated orthogonally, so that each source of conflict (as well as their interaction) could be separately estimated and compared in terms of neural encoding. For example, it is therefore not clear whether the RSA results are due to encoding of only one type of conflict (Stroop or Simon), to a combination of both, and/or to interactive effects.

Finally, the motivation for the use of the term "cognitive space" to describe results is unclear. Evidence for the mere presence of a graded/parametric neural encoding (i.e., the reported conflict RSA effects) would not seem to be sufficient. Indeed, it is discussed in the manuscript that cognitive spaces/maps allow for flexibility through inference and generalization. Future work should therefore focus on linking neural conflict encoding to inference and generalization more directly.

Author Response

The following is the authors’ response to the previous reviews.

Thank you and the reviewers for further providing constructive comments and suggestions on our manuscript. On behalf of all the co-authors, I have enclosed a revised version of the above referenced paper. Below, I have merged similar public reviews and recommendations (if applicable) from each reviewer and provided point-by-point responses.

Reviewer #1:

People can perform a wide variety of different tasks, and a long-standing question in cognitive neuroscience is how the properties of different tasks are represented in the brain. The authors develop an interesting task that mixes two different sources of difficulty, and find that the brain appears to represent this mixture on a continuum, in the prefrontal areas involved in resolving task difficulty. While these results are interesting and in several ways compelling, they overlap with previous findings and rely on novel statistical analyses that may require further validation.

Strengths

- The authors present an interesting and novel task for combining the contributions of stimulus-stimulus and stimulus-response conflict. While this mixture has been measured in the multi-source interference task (MSIT), this task provides a more graded mixture between these two sources of difficulty.

- The authors do a good job triangulating regions that encode conflict similarity, looking for the conjunction across several different measures of conflict encoding. These conflict measures use several best-practice approaches towards estimating representational similarity.

- The authors quantify several salient alternative hypotheses and systematically distinguish their core results from these alternatives.

- The question that the authors tackle is important to cognitive control, and they make a solid contribution.

The authors have addressed several of my concerns. I appreciate the authors implementing best practices in their neuroimaging stats.

I think that the concerns that remain in my public review reflect the inherent limitations of the current work. The authors have done a good job working with the dataset they've collected.

Response: We would like to thank the reviewer for the positive evaluation of our manuscript and the constructive comments and suggestions. In response to your suggestions and concerns, we have removed the Stroop/Simon-only and the Stroop+Simon models, revised our conclusion and modified the misleading phrases.

We have provided detailed responses to your comments below.

- The evidence from this previous work for mixtures between different conflict sources makes the framing of 'infinite possible types of conflict' feel like a strawman. The authors cite classic work (e.g., Kornblum et al., 1990) that develops a typology for conflict which is far from infinite. I think few people would argue that every possible source and level of difficulty will have to be learned separately. This work provides confirmatory evidence that task difficulty is represented parametrically (e.g., consistent with the n-back, MOT, and random dot motion literature).

notes for my public concerns.

In their response, the authors say:

'If each combination of the Stroop-Simon combination is regarded as a conflict condition, there would be infinite combinations, and it is our major goal to investigate how these infinite conflict conditions are represented effectively in a space with finite dimensions.'

I do think that this is a strawman. The paper doesn't make a strong case that this position ('infinite combinations') is widely held in the field. There is previous work (e.g., n-back, multiple object tracking, MSIT, dot motion) that has already shown parametric encoding of task difficulty. This paper provides confirmatory evidence, using an interesting new task, that demands are parametric, but does not provide a major theoretical advance.

Response: We agree that the previous expression may have seemed somewhat exaggerated. While it is not “infinite”, recent research indeed suggests that cognitive control shows domain-specificity across various “domains”, including conflict types (Egner, 2008), sensory modalities (Yang et al., 2017), task-irrelevant stimuli (Spapé & Hommel, 2008), and task sets (Hazeltine et al., 2011), to name a few.

These findings collectively support the notion that cognitive control is context-specific (Braem et al., 2014). That is, cognitive control can be tuned and associated with different (and potentially large numbers of) contexts. Recently, Kikumoto and Mayr (2020) demonstrated that combinations of stimulus, rule and response in the same task formed separable, conjunctive representations. They further showed that these conjunctive representations facilitate performance. This is in line with the idea that each stimulus-location combination in the present task may be represented separately in a domain-specific manner. Moreover, domain-general task representation can also become domain-specific with learning, which further increases the number of domain-specific conjunctive representations (Mill & Cole, 2023). In line with the domain-specific account of cognitive control, we referred to the “infinite combinations” in our previous response to emphasize the extreme case of domain-specificity. However, recognizing that the term “infinite” may lead to ambiguity, we have replaced it with phrases such as “a large number of” and “hugely varied” in our revised manuscript.

We appreciate the reviewer for highlighting the potential connection of our work to existing literature that showed the parametric encoding of task difficulty (e.g., Dagher et al., 1999; Ritz & Shenhav, 2023). For instance, Ritz and Shenhav (2023) parametrically manipulated target difficulty based on consistent ratios of dot color, and found that the difficulty was encoded in the caudal part of dorsal anterior cingulate cortex. Analogously, in our study, the “difficulty” pertains to the behavioral congruency effect that we modulated within the spatial Stroop and Simon dimensions. Notably, we did identify univariate effects in the right dmPFC and IPS associated with the difficulty in the Simon dimension. This parametric effect may lend support to our cognitive space hypothesis, although we exercised caution in interpreting their significance due to the absence of a clear brain-behavioral relevance in these regions. We have added the connection of our work to prior literature in the discussion: “The parametric encoding of conflict also mirrors prior research showing the parametric encoding of task demands (Dagher et al., 1999; Ritz & Shenhav, 2023).”

However, our analyses extend beyond solely testing the parametric encoding of difficulty. Instead, we focused on the multivariate representation of different conflict types, which we believe is independent from the univariate parametric encoding. Unlike the univariate encoding that relies on the strength within one dimension, the multivariate representation of conflict types incorporates both the spatial Stroop and Simon dimensions. Furthermore, we found that similar difficulty levels did not yield similar conflict representation, as indicated by the low similarity between the spatial Stroop and Simon conditions, despite both showing a similar level of congruency effect (Fig. S1). Additionally, we also observed an interaction between conflict similarity and difficulty (i.e., congruency, Fig. 4B/D), such that the conflict similarity effect was more pronounced when conflict was present. Therefore, we believe that our findings make a contribution to the literature beyond the difficulty effect.

Reference:

Egner, T. (2008). Multiple conflict-driven control mechanisms in the human brain. Trends in Cognitive Sciences, 12(10), 374-380. https://doi.org/10.1016/j.tics.2008.07.001

Yang, G., Nan, W., Zheng, Y., Wu, H., Li, Q., & Liu, X. (2017). Distinct cognitive control mechanisms as revealed by modality-specific conflict adaptation effects. Journal of Experimental Psychology: Human Perception and Performance, 43(4), 807-818. https://doi.org/10.1037/xhp0000351

Spapé, M. M., & Hommel, B. (2008). He said, she said: Episodic retrieval induces conflict adaptation in an auditory Stroop task. Psychonomic Bulletin & Review, 15(6), 1117-1121. https://doi.org/10.3758/PBR.15.6.1117

Hazeltine, E., Lightman, E., Schwarb, H., & Schumacher, E. H. (2011). The boundaries of sequential modulations: Evidence for set-level control. Journal of Experimental Psychology: Human Perception and Performance, 37(6), 1898-1914. https://doi.org/10.1037/a0024662

Braem, S., Abrahamse, E. L., Duthoo, W., & Notebaert, W. (2014). What determines the specificity of conflict adaptation? A review, critical analysis, and proposed synthesis. Frontiers in Psychology, 5, 1134. https://doi.org/10.3389/fpsyg.2014.01134

Kikumoto A, Mayr U. (2020). Conjunctive representations that integrate stimuli, responses, and rules are critical for action selection. Proceedings of the National Academy of Sciences, 117(19):10603-10608. https://doi.org/10.1073/pnas.1922166117.

Mill, R. D., & Cole, M. W. (2023). Neural representation dynamics reveal computational principles of cognitive task learning. bioRxiv. https://doi.org/10.1101/2023.06.27.546751

Dagher, A., Owen, A. M., Boecker, H., & Brooks, D. J. (1999). Mapping the network for planning: a correlational PET activation study with the Tower of London task. Brain, 122 ( Pt 10), 1973-1987. https://doi.org/10.1093/brain/122.10.1973

Ritz, H., & Shenhav, A. (2023). Orthogonal neural encoding of targets and distractors supports multivariate cognitive control. https://doi.org/10.1101/2022.12.01.518771

- (Public Reviews) The degree of Stroop vs Simon conflict is perfectly negatively correlated across conditions. This limits their interpretation of an integrated cognitive space, as they cannot separately measure Stroop and Simon effects. The author's control analyses have limited ability to overcome this task limitation. While these results are consistent with parametric encoding, they cannot adjudicate between combined vs separated representations.

(Recommendations) I think that it is still an issue that the task's two features (stroop and simon conflict) are perfectly correlated. This fundamentally limits their ability to measure the similarity in these features. The authors provide several control analyses, but I think these are limited.

Response: We need to acknowledge that the spatial Stroop and Simon components in the five conflict conditions were not “perfectly” correlated, with r = –0.89. This leaves some room for the preliminary model comparison to adjudicate between these models. However, it’s essential to note that conclusions based on these results must be tempered. In line with the reviewer’s observation, we agree that the high correlation between the two conflict sources posed a potential limitation on our ability to independently investigate the contribution of spatial Stroop and Simon conflicts. Therefore, in addition to the limitation we have previously acknowledged, we have now further revised our conclusion and adjusted our expressions accordingly.
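As a rough illustration of where a correlation of this size could come from, the following sketch assumes that the five conflict conditions sit at equally spaced angles on a quarter circle, from pure spatial Stroop to pure Simon. That geometry is an assumption made here for illustration (the response does not state the angles), and the names `angles_deg`, `stroop`, `simon` and `pearson_r` are hypothetical. Under that assumption the two components are strongly but not perfectly anticorrelated.

```python
import math

# Assumption: five conditions at equally spaced angles on a quarter circle,
# from pure spatial Stroop (0 degrees) to pure Simon (90 degrees).
angles_deg = [0.0, 22.5, 45.0, 67.5, 90.0]

# Component strengths as projections onto the two axes. Which axis is
# called "Stroop" does not matter for the correlation.
stroop = [math.cos(math.radians(a)) for a in angles_deg]
simon = [math.sin(math.radians(a)) for a in angles_deg]


def pearson_r(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


r = pearson_r(stroop, simon)
print(f"r = {r:.2f}")  # r = -0.89 under the assumed angles
```

The correlation falls short of -1 because sine and cosine are not linear functions of each other over this range, even though the conditions vary along a single angle.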

Specifically, we now regard the parametric encoding of cognitive control not as direct evidence of the cognitive space view but as preliminary evidence that led us to propose this hypothesis, which requires further testing. Notably, we have also modified the title from “Conflicts are represented in a cognitive space to reconcile domain-general and domain-specific cognitive control” to “Conflicts are parametrically encoded: initial evidence for a cognitive space view to reconcile the debate of domain-general and domain-specific cognitive control”. Also, we revised the conclusion as: In sum, we showed that the cognitive control can be parametrically encoded in the right dlPFC and guides cognitive control to adjust goal-directed behavior. This finding suggests that different cognitive control states may be encoded in an abstract cognitive space, which reconciles the long-standing debate between the domain-general and domain-specific views of cognitive control and provides a parsimonious and more broadly applicable framework for understanding how our brains efficiently and flexibly represents multiple task settings.

(Recommendations) The authors perform control analyses that test Stroop-only and Simon-only models. However, these analyses use a totally different similarity metric that is based on set intersection rather than geometry. This metric had limited justification or explanation, and it's not clear whether these models fit worse because of the similarity metric. Even here, the Simon-only model fit better than the Stroop+Simon model. The dimensionality analyses may reflect the 1d manipulation by the authors (i.e., perfectly correlated Stroop and Simon effects).

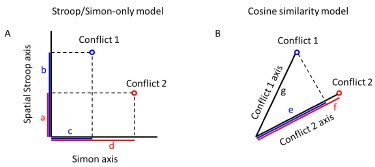

Response: The Jaccard measure is the most suitable method we can conceive of for assessing the similarity between two conflicts when establishing the Stroop-only and Simon-only models, achieved by projecting them onto the vertical or horizontal axes, respectively (Author response image 1A). This approach offers two advantages. First, the Jaccard similarity combines both similarity (as reflected by the numerator) and distance (reflected by the difference between denominator and numerator) without bias towards either. Second, the Jaccard similarity in our design is equivalent to the cosine similarity because the denominator in the cosine similarity is identical to the denominator in the Jaccard similarity (both are the radius of the circle, Author response image 1B).

Author response image 1.

Definition of Jaccard similarity. A) Two conflicts (1 and 2) are projected onto the spatial Stroop/Simon axis in the Stroop/Simon-only model, respectively. The Jaccard similarity for the Stroop-only and Simon-only models is the intersection divided by the union of the projected vectors on the respective axis, expressed in terms of the projected vectors a-d. Letters a-d are the projected vectors from the two conflicts to the two axes. Blue and red colors indicate the conflict conditions. Shorter vectors are the intersection and longer vectors are the union. B) According to the cosine similarity model, the similarity is defined as $e/g$, where e is the projected vector from conflict 1 to conflict 2, and g is the vector of conflict 1. The Jaccard similarity for this case is defined by $e/f$, where f is the projected vector from conflict 2 to itself. Because f = g in our design, the Jaccard similarity is equivalent to the cosine similarity.

Therefore, we believe that the model comparisons between cosine similarity model and the Stroop/Simon-Only models were equitable. However, we acknowledge the reviewer’s and other reviewers’ concerns about the correlation between spatial Stroop and Simon conflicts, which reduces the space to one dimension (1d) and limits our ability to distinguish between the Stroop-only and Simon-only models, as well as between Stroop+Simon and cosine similarity models. While these distinctions are undoubtedly important for understanding the geometry of the cognitive space, we recognize that they go beyond the major objective of this study, that is, to differentiate the cosine similarity model from domain-general/specific models. Therefore, we have chosen to exclude the Stroop-only, Simon-only and Stroop+Simon models in our revised manuscript.
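The equivalence argued above can be checked numerically. The sketch below follows the verbal definitions in the response (projection length as the intersection, vector length as the union); the function names and the example angles are hypothetical and chosen only for illustration. It is not the authors' analysis code.

```python
import math


def on_circle(angle_deg, radius=1.0):
    """2D point at the given angle on a circle of the given radius."""
    a = math.radians(angle_deg)
    return (radius * math.cos(a), radius * math.sin(a))


def projection_length(v, onto):
    """Signed length of the projection of v onto the direction of `onto`."""
    dot = v[0] * onto[0] + v[1] * onto[1]
    return dot / math.hypot(*onto)


def cosine_similarity(v1, v2):
    # e / g: projection of conflict 1 onto conflict 2, over the length of conflict 1.
    return projection_length(v1, v2) / math.hypot(*v1)


def jaccard_similarity(v1, v2):
    # e / f: the same projection (intersection) over the length of conflict 2 (union).
    return projection_length(v1, v2) / math.hypot(*v2)


# Two conflicts on the same circle (equal lengths, f = g): the measures agree.
c1, c2 = on_circle(22.5), on_circle(67.5)
same_radius = (cosine_similarity(c1, c2), jaccard_similarity(c1, c2))

# Illustrative counter-case: unequal lengths (f != g), where they diverge.
c3 = on_circle(67.5, radius=2.0)
diff_radius = (cosine_similarity(c1, c3), jaccard_similarity(c1, c3))

print(same_radius, diff_radius)
```

The second case is included only to show that the equivalence depends on all conflict vectors having the same length, which is the property of the design the response relies on.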

Something that raised additional concerns are the RSMs in the key region of interest (Fig S5). The pure stroop task appears to be represented very differently from all of the conditions that include simon conflict.

Together, I think these limitations reflect the structure of the task and research goals, not the statistical approach (which has been meaningfully improved).

Response: We appreciate the reviewer for pointing this out. It is essential to clarify that our conclusions were based on the significant similarity modulation effect identified in our statistical analysis using the cosine similarity model, where we did not distinguish between the within-Stroop condition and the other four within-conflict conditions (Fig. 7A, now Fig. 8A). This means that the representation of conflict type was not biased by the seeming disparities in the values shown here. Moreover, to specifically test the differences between the within-Stroop condition and the other within-conflict conditions, we conducted a mixed-effect model analysis only including trial pairs from the same conflict type. In this analysis, the primary predictor was the cross-condition difference (0 for within-Stroop condition and 1 for other within-conflict conditions). The results showed no significant cross-condition difference in either the incongruent (t = 1.22, p = .23) or the congruent (t = 1.06, p = .29) trials. Thus, we believe the evidence for different similarities is inconclusive in our data and decided not to interpret this numerical difference. We have added this note in the revised figure caption for Figure S5.

Author response image 2.

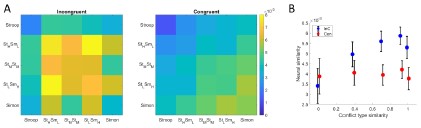

Fig. S5. The stronger conflict type similarity effect in incongruent versus congruent conditions. (A) Summary representational similarity matrices for the right 8C region in incongruent (left) and congruent (right) conditions, respectively. Each cell represents the averaged Pearson correlation of cells with the same conflict type and congruency in the 1400×1400 matrix. Note that the seeming disparities in the values of Stroop and other within-conflict cells (i.e., the diagonal) did not reach significance for either incongruent (t = 1.22, p = .23) or congruent (t = 1.06, p = .29) trials. (B) Scatter plot showing the averaged neural similarity (Pearson correlation) as a function of conflict type similarity in both conditions. The values in both A and B are calculated from raw Pearson correlation values, in contrast to the z-scored values in Fig. 4D.

Minor:

- In the analysis of similarity_orientation, the df is very large (~14000). Here, and throughout, the df should be reflective of the population of subjects (ie be less than the sample size).

Response: The large degrees of freedom (df) in our analysis stem from the fact that we utilized a mixed-effect linear model, incorporating all data points (a total of 400×35=14000). In mixed-effect models, the df is determined by subtracting the number of fixed effects (in our case, 7) from the total number of observations. This is in line with prior studies that have reported the df in this manner (e.g., Iravani et al., 2021; Schmidt & Weissman, 2015; Natraj et al., 2022).
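The arithmetic behind the reported df can be written out as a short sketch. The variable names are hypothetical; the three numbers (400, 35 and 7) are the ones given in the response, and the rule applied is the one the authors describe, not the only way df can be defined for mixed-effect models.

```python
# Numbers taken from the response; the names are illustrative only.
points_per_unit = 400   # first factor in "400 x 35"
n_units = 35            # second factor in "400 x 35"
n_fixed_effects = 7     # fixed effects in the model

n_observations = points_per_unit * n_units
residual_df = n_observations - n_fixed_effects

print(n_observations, residual_df)  # 14000 13993
```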

Reference:

Iravani B, Schaefer M, Wilson DA, Arshamian A, Lundström JN. The human olfactory bulb processes odor valence representation and cues motor avoidance behavior. Proc Natl Acad Sci U S A. 2021 Oct 19;118(42):e2101209118. https://doi.org/10.1073/pnas.2101209118.

Schmidt, J.R., Weissman, D.H. Congruency sequence effects and previous response times: conflict adaptation or temporal learning?. Psychological Research 80, 590–607 (2016). https://doi.org/10.1007/s00426-015-0681-x.

Natraj, N., Silversmith, D. B., Chang, E. F., & Ganguly, K. (2022). Compartmentalized dynamics within a common multi-area mesoscale manifold represent a repertoire of human hand movements. Neuron, 110(1), 154-174. https://doi.org/10.1016/j.neuron.2021.10.002.

- it would improve the readability if there was more didactic justification for why analyses are done a certain way (eg justifying the jaccard metric). This will help less technically-savvy readers.

Response: We appreciate the reviewer’s suggestion. However, considering the Stroop/Simon-only models in our design may not be a valid approach for distinguishing the contributions of the Stroop/Simon components, we have decided not to include the Jaccard metrics in our revised manuscript.

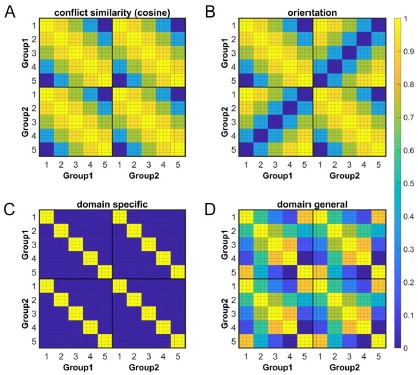

Besides, to improve the readability, we have moved Figure S4 to the main text (labeled as Figure 7), and added the domain-general/domain-specific schematics in Figure 8.

Author response image 3.

Figure 8. Schematic of key RSMs. (A) and (B) show the orthogonality between conflict similarity and orientation RSMs. The within-subject RSMs (e.g., Group1-Group1) for conflict similarity and orientation are all the same, but the cross-group correlations (e.g., Group2-Group1) are different. Therefore, we can separate the contribution of these two effects when including them as different regressors in the same linear regression model. (C) and (D) show the two alternative models. Like the cosine model (A), within-group trial pairs resemble between-group trial pairs in these two models. The domain-specific model is an identity matrix. The domain-general model is estimated from the absolute difference of behavioral congruency effect, but scaled to 0(lowest similarity)-1(highest similarity) to aid comparison. The plotted matrices here include only one subject each from Group 1 and Group 2. Numbers 1-5 indicate the conflict type conditions, for spatial Stroop, StHSmL, StMSmM, StLSmH, and Simon, respectively. The thin lines separate four different sub-conditions, i.e., target arrow (up, down) × congruency (incongruent, congruent), within each conflict type.
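The three model matrices named in this caption can be sketched at the level of the five conflict conditions. This is a minimal illustration, not the authors' trial-level RSMs: the equally spaced angles are an assumption, the congruency effects in `congruency_ms` are made-up placeholder values, and all variable names are hypothetical.

```python
import math

conditions = ["Stroop", "StHSmL", "StMSmM", "StLSmH", "Simon"]

# Assumption: equally spaced conflict angles on a quarter circle.
angles_deg = [0.0, 22.5, 45.0, 67.5, 90.0]

# Placeholder congruency effects in ms (hypothetical, NOT the reported data).
congruency_ms = [60.0, 55.0, 50.0, 52.0, 58.0]

n = len(conditions)

# Cosine (cognitive space) model: similarity is the cosine of the angle difference.
cosine_model = [
    [math.cos(math.radians(abs(a - b))) for b in angles_deg] for a in angles_deg
]

# Domain-specific model: identity matrix (similar only to the same conflict type).
domain_specific = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

# Domain-general model: absolute difference in congruency effect,
# rescaled so that 0 is the lowest and 1 the highest similarity.
abs_diff = [[abs(ci - cj) for cj in congruency_ms] for ci in congruency_ms]
max_diff = max(max(row) for row in abs_diff)
domain_general = [[1.0 - d / max_diff for d in row] for row in abs_diff]

for name, row in zip(conditions, cosine_model):
    print(name, [round(v, 2) for v in row])
```

In this toy version the domain-general matrix depends only on how close two conditions are in congruency effect, so two conditions at opposite ends of the conflict continuum can still be rated as similar, which is what separates it from the cosine model.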

Reviewer #2:

This study examines the construct of "cognitive spaces" as they relate to neural coding schemes present in response conflict tasks. The authors use a novel experimental design in which different types of response conflict (spatial Stroop, Simon) are parametrically manipulated. These conflict types are hypothesized to be encoded jointly, within an abstract "cognitive space", in which distances between task conditions depend only on the similarity of conflict types (i.e., where conditions with similar relative proportions of spatial-Stroop versus Simon conflicts are represented with similar activity patterns). Authors contrast such a representational scheme for conflict with several other conceptually distinct schemes, including a domain-general, domain-specific, and two task-specific schemes. The authors conduct a behavioral and fMRI study to test which of these coding schemes is used by prefrontal cortex. Replicating the authors' prior work, this study demonstrates that sequential behavioral adjustments (the congruency sequence effect) are modulated as a function of the similarity between conflict types. In fMRI data, univariate analyses identified activation in left prefrontal and dorsomedial frontal cortex that was modulated by the amount of Stroop or Simon conflict present, and representational similarity analyses (RSA) identified coding of conflict similarity, as predicted under the cognitive space model, in right lateral prefrontal cortex.

This study tackles an important question regarding how distinct types of conflict might be encoded in the brain within a computationally efficient representational format. The ideas postulated by the authors are interesting ones and the statistical methods are generally rigorous.

Response: We would like to express our sincere appreciation for the reviewer’s positive evaluation of our manuscript and the constructive comments and suggestions. In response to your suggestions and concerns, we excluded the StroopOnly, SimonOnly and Stroop+Simon models, and added the schematic of domain-general/specific model RSMs. We have provided detailed responses to your comments below.

The evidence supporting the authors claims, however, is limited by confounds in the experimental design and by lack of clarity in reporting the testing of alternative hypotheses within the method and results.

- Model comparison

The authors commendably performed a model comparison within their study, in which they formalized alternative hypotheses to their cognitive space hypothesis. We greatly appreciate the motivation for this idea and think that it strengthened the manuscript. Nevertheless, some details of this model comparison were difficult for us to understand, which in turn has limited our understanding of the strength of the findings.

The text indicates the domain-general model was computed by taking the difference in congruency effects per conflict condition. Does this refer to the "absolute difference" between congruency effects? In the rest of this review, we assume that the absolute difference was indeed used, as using a signed difference would not make sense in this setting. Nevertheless, it may help readers to add this information to the text.

Response: We apologize for any confusion. The “difference” here indeed refers to the “absolute difference” between congruency effects. We have now clarified this by adding the word “absolute” accordingly.

"Therefore, we defined the domain-general matrix as the absolute difference in their congruency effects indexed by the group-averaged RT in Experiment 2."

Regarding the Stroop-Only and Simon-Only models, the motivation for using the Jaccard metric was unclear. From our reading, it seems that all of the other models --- the cognitive space model, the domain-general model, and the domain-specific model --- effectively use a Euclidean distance metric. (Although the cognitive space model is parameterized with cosine similarities, these similarity values are proportional to Euclidean distances because the points all lie on a circle. And, although the domain-general model is parameterized with absolute differences, the absolute difference is equivalent to Euclidean distance in 1D.) Given these considerations, the use of Jaccard seems to differ from the other models, in terms of parameterization, and thus potentially also in terms of underlying assumptions. Could authors help us understand why this distance metric was used instead of Euclidean distance? Additionally, if Jaccard must be used, then because this metric seems to be non-standard in RSA, it would likely be helpful for many readers to give a little more explanation about how it was calculated.
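The relationship between cosine similarity and Euclidean distance that this comment appeals to can be written out as a short worked equation. The symbols $u$, $v$, $r$ and $\theta$ are introduced here for illustration: $u$ and $v$ are two condition vectors on a circle of radius $r$, separated by angle $\theta$.

```latex
\begin{aligned}
\lVert u - v \rVert^2
  &= \lVert u \rVert^2 + \lVert v \rVert^2 - 2\, u \cdot v \\
  &= 2r^2 - 2r^2 \cos\theta \\
  &= 2r^2 \,(1 - \cos\theta),
\qquad\text{so}\qquad
\lVert u - v \rVert = 2r \sin\!\left(\tfrac{\theta}{2}\right).
\end{aligned}
```

Strictly, then, cosine similarity is a linear function of the squared Euclidean distance, and a monotonically decreasing function of the distance itself for $\theta$ between 0 and 180 degrees, rather than being proportional to it.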

Response: We believe that the Jaccard similarity measure is consistent with the Cosine similarity measure. The Jaccard similarity is calculated as the intersection divided by the union. To define the similarity of two conflicts in the Stroop-only and Simon-only models, we first project them onto the vertical or horizontal axes, respectively (as shown in Author response image 1A). The Jaccard similarity in our design is equivalent to the cosine similarity because the denominator in the Jaccard similarity is identical to the denominator in the cosine similarity (both are the radius of the circle, Author response image 1B).

However, it is important to note that a cosine similarity cannot be defined when conflicts are projected onto the spatial Stroop and Simon axes simultaneously. Therefore, we used the Jaccard similarity in the previous version of our manuscript.

Author response image 4.

Definition of Jaccard similarity. A) Two conflicts (1 and 2) are projected onto the spatial Stroop/Simon axis in the Stroop/Simon-only model, respectively. The Jaccard similarities for the Stroop-only and Simon-only models are [formula omitted] and [formula omitted], respectively. Letters a-d are the projected vectors from the two conflicts to the two axes. Blue and red colors indicate the conflict conditions. Shorter vectors are the intersection and longer vectors are the union. B) According to the cosine similarity model, the similarity is defined as [formula omitted], where e is the projected vector from conflict 1 to conflict 2, and g is the vector of conflict 1. The Jaccard similarity for this case is defined by [formula omitted], where f is the projected vector from conflict 2 to itself. Because f = g in our design, the Jaccard similarity is equivalent to the cosine similarity.
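The equivalence described in panel B, and the axis-wise Jaccard definition in panel A, can be illustrated with a short sketch. This is an assumption-laden illustration rather than the authors' implementation: conflicts are modeled as vectors of equal length on a quarter circle, the angle convention (0° for pure spatial Stroop, 90° for pure Simon) and the function names are hypothetical, and the example angles are placeholders.

```python
import numpy as np

SIMON_AXIS, STROOP_AXIS = 0, 1  # hypothetical index convention for the two axes

def conflict_vector(angle_deg, radius=1.0):
    """Conflict as a 2D vector; 0 deg = pure Stroop, 90 deg = pure Simon (assumed)."""
    a = np.deg2rad(angle_deg)
    return radius * np.array([np.sin(a), np.cos(a)])  # (Simon, Stroop) components

def cosine_similarity(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def jaccard_projection(u, v):
    """Panel B: intersection / union when conflict u is projected onto conflict v."""
    e = u @ v / np.linalg.norm(v)  # length of the projection of u onto v (intersection)
    f = np.linalg.norm(v)          # length of v projected onto itself (union)
    return float(e / f)

def jaccard_axis(u, v, axis):
    """Panel A: shorter projected length divided by the longer one on a single axis."""
    a, b = abs(u[axis]), abs(v[axis])
    longer = max(a, b)
    # Undefined when both conflicts have no component on this axis.
    return float(min(a, b) / longer) if longer > 1e-12 else float("nan")

c1, c2 = conflict_vector(22.5), conflict_vector(67.5)  # placeholder angles
print("cosine similarity:   ", round(cosine_similarity(c1, c2), 4))
print("Jaccard (projection):", round(jaccard_projection(c1, c2), 4))
print("Jaccard (Stroop axis):", round(jaccard_axis(c1, c2, STROOP_AXIS), 4))
```

Because both vectors have the same length (f = g), the projection-based Jaccard value coincides with the cosine similarity, whereas the single-axis Jaccard value generally does not.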

However, we agree with the concern raised by this and other reviewers that the correlation between spatial Stroop and Simon conflicts makes it difficult to distinguish the Stroop+Simon model from the cosine similarity model. While distinguishing them is essential for understanding the detailed geometry of the cognitive space, it is beyond our main purpose, which is to distinguish the cosine similarity model from the domain-general/specific models. Therefore, we have chosen to exclude the Stroop-only, Simon-only and Stroop+Simon models from our revised manuscript.

When considering parameterizing the Stroop-Only and Simon-Only models with Euclidean distances, one concern we had is that the joint inclusion of these models might render the cognitive space model unidentifiable due to collinearity (i.e., the sum of the Stroop-Only and Simon-Only models could be collinear with the cognitive space model). Could the authors determine whether this is the case? This issue seems to be important, as the presence of such collinearity would suggest to us that the design is incapable of discriminating those hypotheses as parameterized.

Response: We acknowledge that our design does not allow for a complete differentiation between the parallel encoding (StroopOnly+SimonOnly) model and the cognitive space model, given their high correlation (r = 0.85). However, it is important to note that the StroopOnly+SimonOnly model introduces more free parameters, which makes its model fit poorer than that of the cognitive space model.

Additionally, the cognitive space model also shows high correlations with the StroopOnly and SimonOnly models (both rs = 0.66). It is crucial to emphasize that our study’s primary goal does not involve testing the parallel encoding hypothesis (through the StroopOnly+SimonOnly model). As a result, we have chosen to remove the model comparison results with the StroopOnly, SimonOnly and StroopOnly+SimonOnly models. By contrast, the cognitive space model shows lower correlations with the purely domain-general (r = −0.16) and domain-specific (r = 0.46) models.
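One simple way to gauge how separable two model matrices are is to correlate their vectorized entries. The sketch below does this for a toy cosine model and a domain-specific (identity) model at the level of five conflict types. The evenly spaced angles are a hypothetical stand-in for the design, and including the diagonal cells is an assumption, so the printed value is not expected to reproduce the correlations reported above, which presumably come from the full trial-level matrices.

```python
import numpy as np

# Hypothetical polar angles for the five conflict types (assumption, in degrees).
angles = np.deg2rad([0.0, 22.5, 45.0, 67.5, 90.0])

# Cosine model: similarity is the cosine of the angular difference between conflicts.
cosine_model = np.cos(angles[:, None] - angles[None, :])
# Domain-specific model: only identical conflict types are similar.
domain_specific = np.eye(len(angles))

def vectorize(rsm):
    """Lower triangle including the diagonal (within-type cells are kept)."""
    rows, cols = np.tril_indices(rsm.shape[0])
    return rsm[rows, cols]

def model_correlation(rsm_a, rsm_b):
    """Pearson correlation between two vectorized model matrices."""
    return float(np.corrcoef(vectorize(rsm_a), vectorize(rsm_b))[0, 1])

r = model_correlation(cosine_model, domain_specific)
print(f"cosine vs. domain-specific (toy example): r = {r:.2f}")
```

A correlation close to 1 between two predictors would signal that the design cannot tell them apart, which is the collinearity concern raised by the reviewer.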

- Issue of uniquely identifying conflict coding

We certainly appreciate the efforts that authors have taken to address potential confounders for encoding of conflict in their original submission. We broach this question not because we wish authors to conduct additional control analyses, but because this issue seems to be central to the thesis of the manuscript and we would value reading the authors' thoughts on this issue in the discussion.

To summarize our concerns, conflict seems to be a difficult variable to isolate within aggregate neural activity, at least relative to other variables typically studied in cognitive control, such as task-set or rule coding. This is because it seems reasonable to expect that many more nuisance factors covary with conflict -- such as univariate activation, level of cortical recruitment, performance measures, arousal --- than in comparison with, for example, a well-designed rule manipulation. Controlling for some of these factors post-hoc through regression is commendable (as authors have done here), but such a method will likely be incomplete and can provide no guarantees on the false positive rate.

Relatedly, the neural correlates of conflict coding in fMRI and other aggregate measures of neural activity are likely of heterogeneous provenance, potentially including rate coding (Fu et al., 2022), temporal coding (Smith et al., 2019), modulation of coding of other more concrete variables (Ebitz et al., 2020, 10.1101/2020.03.14.991745; see also discussion and reviews of Tang et al., 2016, 10.7554/eLife.12352), or neuromodulatory effects (e.g., Aston-Jones & Cohen, 2005). Some of these origins would seem to be consistent with "explicit" coding of conflict (conflict as a representation), but others would seem to be more consistent with epiphenomenal coding of conflict (i.e., conflict as an emergent process). Again, these concerns could apply to many variables as measured via fMRI, but at the same time, they seem to be more pernicious in the case of conflict. So, if authors consider these issues to be germane, perhaps they could explicitly state in the discussion whether adopting their cognitive space perspective implies a particular stance on these issues, how they interpret their results with respect to these issues, and if relevant, qualify their conclusions with uncertainty on these issues.

Response: We appreciate the reviewer’s insightful comments regarding the representation and process of conflict.

First, we agree that conflict is not simply a pure feature like a stimulus but often arises from the interaction (e.g., dimension overlap) between two or more aspects. For example, in the manual Stroop, conflict emerges from the inconsistent semantic information between color naming and word reading. Similarly, other higher-order cognitive processes such as task-set also underlie the relationship between concrete aspects. For instance, in a face/house categorization task, the task-set is the association between face/house and the responses. When studying these higher-order processes, it is often impossible to completely isolate them from bottom-up features. Therefore, methods like the representational similarity analysis and regression models are among the limited tools available to attempt to dissociate these concrete factors from conflict representation. While not perfect, this approach has been suggested and utilized in practice (Freund et al., 2021).

Second, we agree that conflict can be both a representation and an emerging process. These two perspectives are not necessarily contradictory. According to David Marr’s influential three-level theory (Marr, 1982), representation is the algorithm of the process to achieve a goal based on the input. Therefore, a representation can refer to not only a static stimulus (e.g., the visual representation of an image), but also a dynamic process. Building on this perspective, we posit that the representation of cognitive control consists of an array of dynamic representations embedded within the overall process. A similar idea has been proposed that the abstract task profiles can be progressively constructed as a representation in our brain (Kikumoto & Mayr, 2020).

We have incorporated this discussion into the manuscript:

"Recently an interesting debate has arisen concerning whether cognitive control should be considered as a process or a representation (Freund, Etzel, et al., 2021). Traditionally, cognitive control has been predominantly viewed as a process. However, the study of its representation has gained more and more attention. While it may not be as straightforward as the visual representation (e.g., creating a mental image from a real image in the visual area), cognitive control can have its own form of representation. An influential theory, Marr’s (1982) three-level model proposed that representation serves as the algorithm of the process to achieve a goal based on the input. In other words, representation can encompass a dynamic process rather than being limited to static stimuli. Building on this perspective, we posit that the representation of cognitive control consists of an array of dynamic representations embedded within the overall process. A similar idea has been proposed that the representation of task profiles can be progressively constructed with time in the brain (Kikumoto & Mayr, 2020)."

Reference:

Freund, M. C., Etzel, J. A., & Braver, T. S. (2021). Neural Coding of Cognitive Control: The Representational Similarity Analysis Approach. Trends in Cognitive Sciences, 25(7), 622-638. https://doi.org/10.1016/j.tics.2021.03.011

Marr, D. C. (1982). Vision: A computational investigation into human representation and information processing. New York: W.H. Freeman.

Kikumoto, A., & Mayr, U. (2020). Conjunctive representations that integrate stimuli, responses, and rules are critical for action selection. Proceedings of the National Academy of Sciences, 117(19), 10603-10608. https://doi.org/10.1073/pnas.1922166117

- Interpretation of measured geometry in 8C

We appreciate the inclusion of the measured similarity matrices of area 8C, the key area the results focus on, to the supplemental, as this allows for a relatively model-agnostic look at a portion of the data. Interestingly, the measured similarity matrix seems to mismatch the cognitive space model in a potentially substantive way. Although the model predicts that the "pure" Stroop and Simon conditions will have maximal self-similarity (i.e., the Stroop-Stroop and Simon-Simon cells on the diagonal), these correlations actually seem to be the lowest, by what appears to be a substantial margin (particularly the Stroop-Stroop similarities). What should readers make of this apparent mismatch? Perhaps authors could offer their interpretation on how this mismatch could fit with their conclusions.

Response: We thank the reviewer for bringing this to our attention. It is essential to clarify that our conclusions were based on the significant similarity modulation effect observed in our statistical analysis using the cosine similarity model, where we did not distinguish between the within-Stroop condition and the other four within-conflict conditions (Fig. 7A). This means that the representation of conflict type was not biased by the seeming disparities in the values shown here. Moreover, to specifically address the potential differences between the within-Stroop condition and the other within-conflict conditions, we fitted a mixed-effect model. In this analysis, the primary predictor was the cross-condition difference (0 for the within-Stroop condition and 1 for the other within-conflict conditions). The results showed no significant cross-condition difference in either the incongruent (t = 1.22, p = .23) or the congruent (t = 1.06, p = .29) trials. Thus, we believe the evidence for different similarities is inconclusive in our data and decided not to interpret this numerical difference.

We have added this note in the revised figure caption for Figure S5.

Author response image 5.

Fig. S5. The stronger conflict type similarity effect in incongruent versus congruent conditions. (A) Summary representational similarity matrices for the right 8C region in incongruent (left) and congruent (right) conditions, respectively. Each cell represents the averaged Pearson correlation of cells with the same conflict type and congruency in the 1400×1400 matrix. Note that the seeming disparities in the values of Stroop and other within-conflict cells (i.e., the diagonal) did not reach significance for either incongruent (t = 1.22, p = .23) or congruent (t = 1.06, p = .29) trials. (B) Scatter plot showing the averaged neural similarity (Pearson correlation) as a function of conflict type similarity in both conditions. The values in both A and B are calculated from raw Pearson correlation values, in contrast to the z-scored values in Fig. 4D.

- It would likely improve clarity if all of the competing models were displayed as summarized RSA matrices in a single figure, similar to (or perhaps combined with) Figure 7.

Response: We appreciate the reviewer’s suggestion. We have now incorporated the domain-general and domain-specific models into Figure 7 (now Figure 8).

Author response image 6.

Figure 8. Schematic of key RSMs. (A) and (B) show the orthogonality between conflict similarity and orientation RSMs. The within-subject RSMs (e.g., Group1-Group1) for conflict similarity and orientation are all the same, but the cross-group correlations (e.g., Group2-Group1) are different. Therefore, we can separate the contribution of these two effects when including them as different regressors in the same linear regression model. (C) and (D) show the two alternative models. Like the cosine model (A), within-group trial pairs resemble between-group trial pairs in these two models. The domain-specific model is an identity matrix. The domain-general model is estimated from the absolute difference of behavioral congruency effect, but scaled from 0 (lowest similarity) to 1 (highest similarity) to aid comparison. The plotted matrices here include only one subject each from Group 1 and Group 2. Numbers 1-5 indicate the conflict type conditions, for spatial Stroop, StHSmL, StMSmM, StLSmH, and Simon, respectively. The thin lines separate four different sub-conditions, i.e., target arrow (up, down) × congruency (incongruent, congruent), within each conflict type.
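The two alternative models described in this caption can be sketched in a few lines at the level of the five conflict types. This is a minimal illustration, not the authors' code: the congruency-effect values are hypothetical placeholders rather than the group-averaged RTs from Experiment 2, and rescaling as one minus the normalized absolute difference is one assumed way to map differences onto a 0-1 similarity scale.

```python
import numpy as np

conflict_types = ["Stroop", "StHSmL", "StMSmM", "StLSmH", "Simon"]

# Hypothetical congruency effects in ms (placeholders, not data from the study).
congruency_effect = np.array([60.0, 75.0, 90.0, 80.0, 65.0])

# Domain-specific model: a conflict type is similar only to itself (identity matrix).
domain_specific = np.eye(len(conflict_types))

def domain_general_rsm(effects):
    """Similarity from the absolute difference in congruency effects, scaled to 0-1."""
    diff = np.abs(effects[:, None] - effects[None, :])  # absolute pairwise difference
    return 1.0 - diff / diff.max()  # 1 = highest similarity, 0 = lowest (assumed scaling)

domain_general = domain_general_rsm(congruency_effect)
print(np.round(domain_general, 2))
```

Under this construction, conflict types with similar congruency effects are predicted to have similar neural patterns regardless of how much spatial Stroop or Simon conflict they contain.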

- Because this model comparison is key to the main inferences in the study, it might also be helpful for most readers to move all of these RSA model matrices to the main text, instead of in the supplemental.

Response: We thank the reviewer for this suggestion. We have moved the Fig. S4 to the main text, labeled as the new Figure 7.

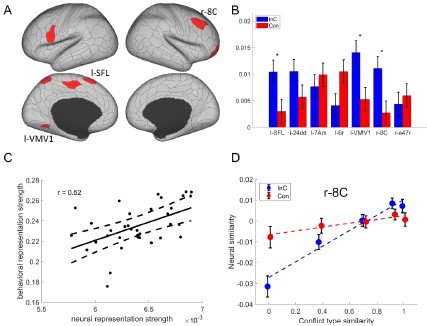

- It may be worthwhile to check how robust the observed brain-behavior association (Fig 4C) is to the exclusion of the two datapoints with the lowest neural representation strength measure, as these points look like they have high leverage.

Response: We recalculated the Pearson correlation after excluding the two points and found that the result changed little (r = 0.50, p = .003, compared with the original r = 0.52, p = .001).

Additionally, we found that the two axes were mistakenly swapped in Fig. 4C. We have corrected this error in the revised manuscript. The correction does not affect the correlation results.
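A robustness check of this kind can be sketched as follows. The data here are randomly generated placeholders (the sample size, effect size, and variable names are all hypothetical), so the printed values are unrelated to the correlations reported above; the sketch only shows the procedure of dropping the points with the lowest neural measure and recomputing Pearson's r.

```python
import numpy as np

rng = np.random.default_rng(0)
n_subjects = 35  # hypothetical sample size

# Synthetic stand-ins for the neural and behavioral beta coefficients.
neural_beta = rng.normal(size=n_subjects)
behavior_beta = 0.5 * neural_beta + rng.normal(scale=0.8, size=n_subjects)

def pearson_r(x, y):
    return float(np.corrcoef(x, y)[0, 1])

def r_without_lowest(x, y, k=2):
    """Pearson r after dropping the k observations with the smallest x values."""
    keep = np.argsort(x)[k:]  # indices of all but the k lowest x values
    return pearson_r(x[keep], y[keep])

print(f"all points:         r = {pearson_r(neural_beta, behavior_beta):.2f}")
print(f"two lowest removed: r = {r_without_lowest(neural_beta, behavior_beta):.2f}")
```

A p-value for each correlation could be obtained with a standard statistics package; it is left out here to keep the sketch dependent on NumPy only.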

Author response image 7.

Fig. 4. The conflict type effect. (A) Brain regions surviving the Bonferroni correction (p < 0.0001) across the regions (criterion 1). Labeled regions are those meeting the criterion 2. (B) Different encoding of conflict type in the incongruent versus congruent conditions. * Bonferroni corrected p < .05. (C) The brain-behavior correlation of the right 8C (criterion 3). The x-axis shows the beta coefficient of the conflict type effect from the RSA, and the y-axis shows the beta coefficient obtained from the behavioral linear model using the conflict similarity to predict the CSE in Experiment 2. (D) Illustration of the different encoding strength of conflict type similarity in incongruent versus congruent conditions of right 8C. The y-axis is derived from the z-scored Pearson correlation coefficient, consistent with the RSA methodology. See Fig. S4B for a plot with the raw Pearson correlation measurement. l = left; r = right.

Reviewer #3:

Yang and colleagues investigated whether information on two task-irrelevant features that induce response conflict is represented in a common cognitive space. To test this, the authors used a task that combines the spatial Stroop conflict and the Simon effect. This task reliably produces a beautiful graded congruency sequence effect (CSE), where the cost of congruency is reduced after incongruent trials. The authors measured fMRI and applied univariate, multivariate, and connectivity analyses to identify brain regions that represent the graded similarity of conflict types, the congruency of responses, and the visual features that induce conflicts. They further directly assessed the dimensionality of the represented conflict space.

The authors identified the right dlPFC (right 8C), which shows 1) stronger encoding of graded similarity of conflicts in incongruent trials and 2) a positive correlation between the strength of conflict similarity type and the CSE on behavior. The dlPFC has been shown to be important for cognitive control tasks. As the dlPFC did not show a univariate parametric modulation based on the higher or lower component of one type of conflict (e.g., having more spatial Stroop conflict or less Simon conflict), it implies that dissimilarity of conflicts is represented by a linear increase or decrease of neural responses. Therefore, the similarity of conflict is represented in multivariate neural responses that combine two sources of conflict.

The strength of the current approach lies in the clear effect of parametric modulation of conflict similarity across different conflict types. The authors employed a clever cross-subject RSA that counterbalanced and isolated the targeted effect of conflict similarity, decorrelating orientation similarity of stimulus positions that would otherwise be correlated with conflict similarity. A pattern of neural response seems to exist that maps different types of conflict, where each type is defined by the parametric gradation of the yoked spatial Stroop conflict and the Simon conflict on a similarity scale. The similarity of patterns increases in incongruent trials and is correlated with CSE modulation of behavior.

The main significance of the paper lies in the evidence supporting the use of an organized "cognitive space" to represent conflict information as a general control strategy. The authors thoroughly test this idea using multiple approaches and provide convincing support for their findings. However, the universality of this cognitive strategy remains an open question.

(Public Reviews) Taken together, this study presents an exciting possibility that information requiring high levels of cognitive control could be flexibly mapped into cognitive map-like representations that both benefit and bias our behavior. Further characterization of the representational geometry and generalization of the current results look like promising ways to understand representations for cognitive control.

Response: We would like to thank the reviewer for the positive evaluation of our manuscript and for providing constructive comments. In response to your suggestions, we have acknowledged the potential limitations of the design and the cross-subject RSA approach, and incorporated the open questions into the discussion. Please find our detailed responses below.

The task presented in the study involved two sources of conflict information through a single salient visual input, which might have encouraged the utilization of a common space.

Response: We agree that the unified visual input in our design may have facilitated the utilization of a common space. However, we believe that unified stimuli are not a prerequisite for constructing the common space. To further test the potential interaction between the concrete stimulus setting and the cognitive space representation, it is necessary to use varied stimuli in future research. We have left this as an open question in the discussion:

Can we effectively map any sources of conflict with completely different stimuli into a single space?

The similarity space was analyzed at the level of between-individuals (i.e., cross-subject RSA) to mitigate potential confounds in the design, such as congruency and the orientation of stimulus positions. This approach makes it challenging to establish a direct link between the quality of conflict space representation and the patterns of behavioral adaptations within individuals.

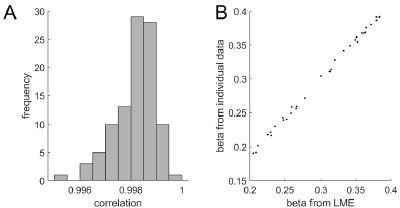

Response: By setting the variables as random effects at the subject level, we have extracted the individual effects that incorporate both the group-level fixed effects and individual-level random effects. We believe this approach yields results that are as reliable as, if not more reliable than, effects calculated from individual data only. First, the linear mixed-effect (LME) model has included all the individual data, forming the basis for establishing random effects. Therefore, the individual effects derived from this approach inherently reflect the individual-specific effects. To support this notion, we have included a simulation script (accessible in the online file “simulation_LME.mlx” at https://osf.io/rcq8w) to demonstrate the strong consistency between the two approaches (see Author response image 8). In this simulation, we generated random data (Y) for 35 subjects, each containing 20 repeated measurements across 5 conditions. To streamline the simulation, we only included one predictor (X), which was treated as both a fixed and a random effect at the subject level. We applied two methods to calculate the individual beta coefficient. The first involved extracting individual beta coefficients from the LME model by summing the fixed effect with the subject-specific random effect. The second method entailed conducting a regression analysis using data from each subject to obtain the slope. We tested their consistency by calculating the Pearson correlation between the derived beta coefficients. This simulation was repeated 100 times.

Author response image 8.

The consistent individual beta coefficients between the mixed effect model and the individual regression analysis. A) The distribution of the Pearson correlation between the two methods across the 100 simulations. B) An example from the simulation showing the highly correlated results from the two methods. Each data point indicates a subject (n=35).
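The logic of this comparison can be approximated without fitting a full mixed model. The sketch below is not the authors' "simulation_LME.mlx" script: it replaces the LME estimates with a simplified empirical-Bayes (partial pooling) shrinkage of the per-subject slopes, which is only a stand-in for subject-specific effects from a random-slope model. The true slope distribution, noise level, and the reading of the design as 20 repeats of each of 5 conditions are all assumptions.

```python
import numpy as np

def simulate_once(rng, n_subj=35, n_cond=5, n_rep=20, slope_sd=0.5, noise_sd=1.0):
    """Correlation between per-subject OLS slopes and partially pooled slopes."""
    x = np.tile(np.arange(n_cond, dtype=float), n_rep)  # 5 conditions x 20 repeats
    x = x - x.mean()                                    # center the predictor
    sxx = np.sum(x ** 2)
    true_slopes = 1.0 + rng.normal(scale=slope_sd, size=n_subj)  # assumed values

    ols = np.empty(n_subj)  # method 2: separate regression per subject
    se2 = np.empty(n_subj)  # sampling variance of each per-subject slope
    for i, beta in enumerate(true_slopes):
        y = beta * x + rng.normal(scale=noise_sd, size=x.size)
        yc = y - y.mean()
        ols[i] = np.sum(x * yc) / sxx
        resid = yc - ols[i] * x
        se2[i] = np.sum(resid ** 2) / (x.size - 2) / sxx

    # Stand-in for method 1: shrink each slope toward the group mean, using a
    # method-of-moments estimate of the between-subject slope variance.
    tau2 = max(ols.var(ddof=1) - se2.mean(), 0.0)
    weight = tau2 / (tau2 + se2)
    pooled = ols.mean() + weight * (ols - ols.mean())
    return float(np.corrcoef(ols, pooled)[0, 1])

rng = np.random.default_rng(1)
correlations = np.array([simulate_once(rng) for _ in range(100)])
print(f"median r across 100 simulations: {np.median(correlations):.3f}")
```

With balanced data the shrinkage weights are nearly identical across subjects, so the two sets of slopes are almost perfectly correlated. Unbalanced designs or additional correlated regressors, as in the actual RSA models, could weaken that agreement.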

Second, the two methods potentially differ in that the LME model also takes the group-level variance into account, such as the dissociable variances of the conflict similarity and orientation across subject groups. This enabled us to extract relatively cleaner conflict similarity effects for each subject, which we believe can be better linked to the individual behavioral adaptations. Moreover, we have extracted the behavioral adaptation scores (i.e., the similarity modulation effect on CSE) using a similar LME approach. Conducting behavioral analysis solely using individual data would have been less reliable, given the limited sample size of individual data (~32 points per subject). This also motivated us to maintain consistency by extracting individual neural effects using LME models.

Furthermore, it remains unclear at which cognitive stages during response selection such a unified space is recruited. Can we effectively map any sources of conflict into a single scale? Is this unified space adaptively adjusted within the same brain region? Additionally, does the amount of conflict solely define the dimensions of this unified space across many conflict-inducing tasks? These questions remain open for future studies to address.

Response: We appreciate the reviewer’s constructive open questions. We respond to each of them based on our current understanding.

- It remains unclear at which cognitive stages during response selection such a unified space is recruited.

We anticipate that the cognitive space is recruited to guide the transference of behavioral CSE at two critical stages. The first stage involves the evaluation of control demands, where the representational distance/similarity between previous and current trials influences the adjustment of cognitive control. The second stage pertains to control execution, where the switch from one control state to another follows a path within the cognitive space. It is worth noting that future studies aiming to address this question may benefit from methodologies with higher temporal resolutions, such as EEG and MEG, to provide more precise insights into the temporal dynamics of the process of cognitive space recruitment.

- Can we effectively map any sources of conflict into a single scale?

It is possible that various sources of conflict can be mapped onto the same space based on their similarity, even if finding such an operationally defined similarity may be challenging. However, our results may offer an approach to infer the similarity between two conflicts. One way is to examine their congruency sequence effect (CSE), with a stronger CSE suggesting greater similarity. The other way is to test their representational similarity within the dorsolateral prefrontal cortex.

- Is this unified space adaptively adjusted within the same brain region?

We do not have an answer to this question. We showed that the cognitive space does not change with time (Note S3). What is adjusted is the control demand needed to resolve the conflict conditions that change quickly from trial to trial. It is nevertheless an interesting question whether the cognitive space may be altered, for example, when the mental state changes significantly. If so, we can further test whether the change of cognitive space also occurs within the right dlPFC.

- Additionally, does the amount of conflict solely define the dimensions of this unified space across many conflict-inducing tasks?

Our understanding of this comment is that the amount of conflict refers to the number of conflict sources. Based on our current finding, the dimensions of the space are indeed defined by how many different conflict sources are included. However, this would require the different conflict sources to be orthogonal. If some sources share some aspects, the cognitive space may collapse to a lower dimension. We have incorporated the first question into the discussion:

Moreover, we anticipate that the representation of cognitive space is most prominently involved at two critical stages to guide the transference of behavioral CSE. The first stage involves the evaluation of control demands, where the representational distance/similarity between previous and current trials influences the adjustment of cognitive control. The second stage pertains to control execution, where the switch from one control state to another follows a path within the cognitive space. However, we were unable to fully distinguish between these two stages due to the low temporal resolution of fMRI signals in our study. Future research seeking to delve deeper into this question may benefit from methodologies with higher temporal resolutions, such as EEG and MEG.

We have included the other questions into the manuscript as open questions, calling for future research.

Several interesting questions remain to be answered. For example, is the dimension of the unified space across conflict-inducing tasks solely determined by the number of conflict sources? Can we effectively map any sources of conflict with completely different stimuli into a single space? Is the cognitive space geometry modulated by the mental state? If yes, what brain regions mediate the change of cognitive space?

Minor comments:

- The original comment about out-of-sample predictions to examine the continuity of the space was a suggestion for testing neural representations, not behavior (I apologize for the lack of clarity). Given the low dimensionality of the conflict space shown by the participation ratio, we expect that linear separability exists only among specific combinations of conditions. For example, the pair of conflicts 1 and 5 together is not linearly separable from conflicts 2 and 3. But combined with other results, this is already implied.

Response: We apologize for the misunderstanding. In fact, performing a prediction analysis using the extensive RSM in our study does present certain challenges, primarily due to its substantial size (1400×1400) and the intricate nature of the mixed-effect linear model. In our efforts to simplify the prediction process by excluding random effects, we did observe a correlation between the predicted and original values, albeit with a relatively small Pearson correlation coefficient (r = 0.024, p < .001). This small correlation can be attributed to two key factors. First, the exclusion of data points impacts not only the conflict similarity regressor but also other regressors within the model, thereby diminishing the predictive power. Second, the large number of data points in the model heightens the risk of overfitting, subsequently reducing the model’s capacity for generalization and increasing the likelihood of unreliable predictions. Given these potential problems, we have opted not to include this prediction in the revised manuscript.