Peer review process

Revised: This Reviewed Preprint has been revised by the authors in response to the previous round of peer review; the eLife assessment and the public reviews have been updated where necessary by the editors and peer reviewers.

Editors

- Reviewing Editor: Claire Gillan, Trinity College Dublin, Dublin, Ireland

- Senior Editor: Michael Frank, Brown University, Providence, United States of America

Reviewer #1 (Public Review):

This paper describes the development and initial validation of an approach-avoidance task and its relationship to anxiety. The task is a two-armed bandit where one choice is 'safer' - has no probability of punishment, delivered as an aversive sound, but also lower probability of reward - and the other choice involves a reward-punishment conflict. The authors fit a computational model of reinforcement learning to this task and found that self-reported state anxiety during the task was related to a greater likelihood of choosing the safe stimulus when the other (conflict) stimulus had a higher likelihood of punishment. Computationally, this was represented by a smaller value for the ratio of reward to punishment sensitivity in people with higher task-induced anxiety. They replicated this finding, but not another finding that this behavior was related to a measure of psychopathology (experiential avoidance), in a second sample. They also tested test-retest reliability in a sub-sample tested twice, one week apart and found that some aspects of task behavior had acceptable levels of reliability. The introduction makes a strong appeal to back-translation and computational validity. The task design is clever and most methods are solid - it is encouraging to see attempts to validate tasks as they are developed. The lack of replicated effects with psychopathology may mean that this task is better suited to assess state anxiety, or to serve as a foundation for additional task development.

Reviewer #2 (Public Review):

Summary:

The authors develop a computational approach-avoidance-conflict (AAC) task, designed to overcome the limitations of existing offer based AAC tasks. The task incorporated likelihoods of receiving rewards/ punishments that would be learned by the participants to ensure computational validity and estimated model parameters related to reward/punishment and task induced anxiety. Two independent samples of online participants were tested. In both samples participants who experienced greater task induced anxiety avoided choices associated with greater probability of punishment. Computational modelling revealed that this effect was explained by greater individual sensitivities to punishment relative to rewards.

Strengths:

Large internet-based samples, with discovery sample (n = 369), pre-registered replication sample (n = 629) and test-retest sub group (n = 57). Extensive compliance measures (e.g. audio checks) seek to improve adherence.

There is a great need for RL tasks that model threatening outcomes rather than simply loss of reward. The main model parameters show strong effects and the additional indices with task based anxiety are a useful extension. Associations were broadly replicated across samples. Fair to excellent reliability of model parameters is encouraging and badly needed for behavioral tasks of threat sensitivity.

The task seems to have lower approach bias than some other AAC tasks in the literature.

Weaknesses:

The negative reliability of punishment learning rate is concerning as this is an important outcome.

The Kendall's tau values underlying task induced anxiety and safety reference/ various indices are very weak (all < 0.1), as are the mediation effects (all beta < 0.01). The interaction with P(punishment|conflict) does explain some of this.

The inclusion of only one level of reward (and punishment) limits the ecological validity of the sensitivity indices.

Appraisal and impact:

Overall this is a very strong paper, describing a novel task that could help move the field of RL forward to take account of threat processing more fully. The large sample size with discovery, replication and test-retest gives confidence in the findings. The task has good ecological validity and associations with task-based anxiety and clinical self-report demonstrate clinical relevance. Test-retest of the punishment learning parameter is the only real concern. Overall this task provides an exciting new probe of reward/threat that could be used in mechanistic disease models.

Additional context:

The sex differences between the samples are interesting as effects of sex are commonly found in AAC tasks. It would be interesting to look at the main model comparison with sex included as a covariate.

Reviewer #3 (Public Review):

This study investigated cognitive mechanisms underlying approach-avoidance behavior using a novel reinforcement learning task and computational modelling. Participants could select a risky "conflict" option (latent, fluctuating probabilities of monetary reward and/or unpleasant sound [punishment]) or a safe option (separate, generally lower probability of reward). Overall, participant choices were skewed towards more rewarded options, but were also repelled by increasing probability of punishment. Individual patterns of behavior were well-captured by a reinforcement learning model that included parameters for reward and punishment sensitivity, and learning rates for reward and punishment. This is a nice replication of existing findings suggesting reward and punishment have opposing effects on behavior through dissociated sensitivity to reward versus punishment.

Interestingly, avoidance of the conflict option was predicted by self-reported task-induced anxiety. Importantly, when a subset of participants were retested over 1 week later, most behavioral tendencies and model parameters were recapitulated, suggesting the task may capture stable traits relevant to approach-avoidance decision-making.

The revised paper commendably adds important additional information and analyses to support these claims. The initial concern that not accounting for participant control over punisher intensity confounded interpretation of effects has been largely addressed in follow-up analyses and discussion.

This study complements and sits within a broad translational literature investigating interactions between reward/punishers and psychological processes in approach-avoidance decisions.

Author Response

The following is the authors’ response to the original reviews.

Reviewer #1 (Public Review):

This paper describes the development and initial validation of an approach-avoidance task and its relationship to anxiety. The task is a two-armed bandit where one choice is 'safer' - has no probability of punishment, delivered as an aversive sound, but also lower probability of reward - and the other choice involves a reward-punishment conflict. The authors fit a computational model of reinforcement learning to this task and found that self-reported state anxiety during the task was related to a greater likelihood of choosing the safe stimulus when the other (conflict) stimulus had a higher likelihood of punishment. Computationally, this was represented by a smaller value for the ratio of reward to punishment sensitivity in people with higher task-induced anxiety. They replicated this finding, but not another finding that this behavior was related to a measure of psychopathology (experiential avoidance), in a second sample. They also tested test-retest reliability in a sub-sample tested twice, one week apart and found that some aspects of task behavior had acceptable levels of reliability. The introduction makes a strong appeal to back-translation and computational validity, but many aspects of the rationale for this task need to be strengthened or better explained. The task design is clever and most methods are solid - it is encouraging to see attempts to validate tasks as they are developed. There are a few methodological questions and interpretation issues, but they do not affect the overall findings. The lack of replicated effects with psychopathology may mean that this task is better suited to assess state anxiety, or to serve as a foundation for additional task development.

We thank the reviewer for their kind comments and constructive feedback. We agree that the approach taken in this paper appears better suited to state anxiety, and further work is needed to assess/improve its clinical relevance.

Reviewer #1 (Recommendations For The Authors):

- For the introduction, the authors communicate well the appeal of tasks with translational potential, and setting up this translation through computational validity is a strong approach. However, I had some concerns about how the task was motivated in the introduction:

a) The authors state that current approach-avoidance tasks used in humans do not resemble those used in the non-human literature, but do not provide details on what exactly is missing from these tasks that makes translation difficult.

Our intention for the section that the reviewer refers to was to briefly convey that historically, approach-avoidance conflict would have been measured either using questionnaires or joystick-based tasks which have no direct non-human counterpart. However, we note that the phrasing was perhaps unfair to recent tasks that were explicitly designed to be translatable across species. Therefore, we have amended the text to the following:

In humans, on the other hand, approach-avoidance conflict has historically been measured using questionnaires such as the Behavioural Inhibition/Activation Scale (Carver & White, 1994), or cognitive tasks that rely on motor biases, for example by using joysticks to approach/move towards positive stimuli and avoid/move away from negative stimuli, which have no direct non-human counterparts (Guitart-Masip et al., 2012; Kirlic et al., 2017; Mkrtchian et al., 2017; Phaf et al., 2014).

b) Although back-translation to 'match' human paradigms to non-human animal paradigms is useful for research, this isn't the end goal of task development. What really matters is how well these tasks, whether in humans or not, capture psychopathology-relevant behavior. Many animal paradigms were developed and brought into extensive use because they showed sensitivity to pharmacological compounds (e.g., benzodiazepines). The introduction accepts the validity of these paradigms at face value, and doesn't address whether developing human tests of psychopathology based on sensitivity to existing medication classes is the best way to generate new insights about psychopathology.

We agree that whilst paradigms with translational and computational validity have merits of their own for neuroscientific theory, clinical validity (i.e. how well the paradigm reflects a phenomenon relevant to psychopathology) is key in the context of clinical applications. While our findings of associations between task performance and self-reported (state) anxiety suggest that our approach is a step in the right direction, the lack of associations with clinical measures was disappointing. Although future work is needed to more directly test the sensitivity of the current approach to psychopathology, this may mean that it, and its non-human counterparts, do not measure behaviours relevant to pathological anxiety. Since our primary focus in this paper was on translational and computational validity, we have opted to discuss the reviewer’s suggestion in the ‘Discussion’ section, as follows:

Further, it is worth noting that many animal paradigms were developed and widely adopted due to their sensitivity to anxiolytic medication (Cryan & Holmes, 2005). Given the lack of associations with clinical measures in our results, it is possible that current translational models of anxiety may not fully capture behaviours that are directly relevant to pathological anxiety. To develop translational paradigms of clinical utility, future research should place a stronger emphasis on assessing their clinical validity in humans.

c) The authors may want to bring in the literature on the description-experience gap (e.g., PMID: 19836292) when discussing existing decision tasks and their computational dissimilarity to non-human operant conditioning tasks.

We thank the reviewer for this useful addition to the introduction. We have now added the following to the ‘Introduction’ section:

Moreover, evidence from economic decision-making suggests that explicit offers of probabilistic outcomes can impact decision-making differently compared to when probabilistic contingencies need to be learned from experience (referred to as the ‘description-experience gap’; Hertwig & Erev, 2009); this finding raises potential concerns regarding the use of offer-based tasks in humans as approximations of non-human tasks that do not involve explicit offers.

d) How does one evaluate how computationally similar human vs. non-human tasks are? What are the criteria for making this judgement? Specific to the current tasks, many animal learning tasks are not learning tasks in the same sense that human learning tasks are, in terms of the number of trials used and if the animals are choosing from a learned set of contingencies versus learning the contingencies during the testing.

The computational similarity of human and non-human strategies in a given translational task can be tested empirically. This can be done by fitting models to the data and assessing whether similar models explain choices, even if parameter distributions might vary across species due to, for example, physiological differences. Indeed, non-human animals require much more training to perform even uni-dimensional reinforcement learning, but once they are trained, it should be possible to model their responses. In fact, it should even be possible to take training data into account in some cases. For example, the training phase of the Vogel/Geller-Seifter preclinical tests requires an animal to learn to emit a certain action (e.g. lever press) simply to obtain some reward. In the next phase, an aversive outcome is introduced as an additional outcome, but one could model both the training and test phases together – the winning model in our studies would be a suitable candidate to model behaviour here. As we also discuss predictive validity in the ‘Discussion’ section, we opted to add the following text there too:

… computational validity would also need to be assessed directly in non-human animals by fitting models to their behavioural data. This should be possible even in the face of different procedures across species such as number of trials or outcomes used (shock or aversive sound). We are encouraged by our finding that the winning computational model in our study relies on a relatively simple classical reinforcement learning strategy. There exist many studies showing that non-human animals rely on similar strategies during reward and punishment learning (Mobbs et al., 2020; Schultz, 2013); albeit to our knowledge this has never been modelled in non-human animals where rewards and punishment can occur simultaneously.
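
To make the kind of model referred to here more concrete, the following is a minimal sketch of a reinforcement learning agent with separate learning rates and sensitivities for reward and punishment. The exact equations of the winning model are not given in this response, so the delta-rule update, the logistic choice rule, the fixed (non-fluctuating) outcome probabilities and all names and parameter values below are assumptions for illustration only.

```python
import math
import random


def choose_conflict_prob(q_reward, q_punish, beta_r, beta_p):
    """Logistic choice rule on the difference in net values (conflict minus safe)."""
    net = {
        opt: beta_r * q_reward[opt] - beta_p * q_punish[opt]
        for opt in ("conflict", "safe")
    }
    return 1.0 / (1.0 + math.exp(-(net["conflict"] - net["safe"])))


def update(q_reward, q_punish, option, reward, punish, alpha_r, alpha_p):
    """Delta-rule updates with outcome-specific learning rates (assumed form)."""
    q_reward[option] += alpha_r * (reward - q_reward[option])
    q_punish[option] += alpha_p * (punish - q_punish[option])


def simulate(n_trials=200, alpha_r=0.3, alpha_p=0.3, beta_r=3.0, beta_p=3.0,
             p_reward=None, p_punish=0.4, seed=0):
    """Return the proportion of conflict choices in one simulated session.

    Outcome probabilities are held fixed here for simplicity; all values
    are hypothetical.
    """
    p_reward = p_reward or {"conflict": 0.7, "safe": 0.4}
    rng = random.Random(seed)
    q_reward = {"conflict": 0.0, "safe": 0.0}
    q_punish = {"conflict": 0.0, "safe": 0.0}
    n_conflict = 0
    for _ in range(n_trials):
        p = choose_conflict_prob(q_reward, q_punish, beta_r, beta_p)
        option = "conflict" if rng.random() < p else "safe"
        n_conflict += option == "conflict"
        reward = float(rng.random() < p_reward[option])
        # Only the conflict option can deliver the aversive sound.
        punish = float(option == "conflict" and rng.random() < p_punish)
        update(q_reward, q_punish, option, reward, punish, alpha_r, alpha_p)
    return n_conflict / n_trials


# Compare a weakly and a strongly punishment-sensitive simulated agent.
print(simulate(beta_p=1.0), simulate(beta_p=6.0))
```

Under these assumptions, raising the punishment sensitivity relative to the reward sensitivity shifts simulated choices away from the conflict option. In principle the same structure could be fitted to choice data from any species.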

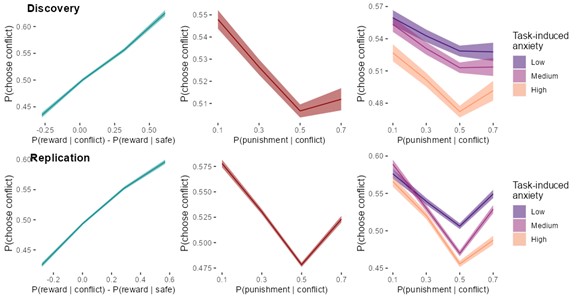

- What do the authors make of the non-linear relationship between probability of punishment and probability of choosing the conflict stimulus (Fig 2d), especially in the high task-induced anxiety participants? Did this effect show up in the replication sample as well?

Figures 2c-e were created by binning the continuous predictors of outcome probabilities into discrete bins of equal interval. Since punishment probability varied according to Gaussian random walks, it was also distributed with more of its mass in the central region (~ 0.4), and so values at the extreme bins were estimated on fewer data and with greater variance. The non-linear relationships are likely thus an artefact of our task design and plotting procedure. The pattern was also evident in the replication sample, see Author response image 1:

Author response image 1.

However, since these effects were estimated as linear effects in the logistic regression models, and to avoid overfitting/interpretations of noise arising from our task design, we now plot logistic curves fitted to the raw data instead.
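
The binning argument above can be illustrated with a small simulation. This is only a sketch: the starting value, step size, bounds, number of bins and number of trials are hypothetical and not taken from the task, and the function names are invented for this example.

```python
import random


def simulate_walk(n_trials, start=0.4, step_sd=0.02, lo=0.0, hi=1.0, rng=random):
    """Gaussian random walk clipped to [lo, hi] (all settings are illustrative)."""
    p, walk = start, []
    for _ in range(n_trials):
        p = min(hi, max(lo, p + rng.gauss(0.0, step_sd)))
        walk.append(p)
    return walk


def bin_counts(values, n_bins=5, lo=0.0, hi=1.0):
    """Count observations falling in equal-interval bins over [lo, hi]."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        idx = min(int((v - lo) / width), n_bins - 1)  # put v == hi in the last bin
        counts[idx] += 1
    return counts


rng = random.Random(0)
# Pool 200 simulated sessions of 200 trials each.
probs = [p for _ in range(200) for p in simulate_walk(200, rng=rng)]
counts = bin_counts(probs)
for i, c in enumerate(counts):
    print(f"bin {i}: {c} trials")
```

In simulations of this kind the bins far from the starting value tend to hold many fewer trials than the bins near it, so choice proportions estimated in those bins are noisier, which is consistent with the explanation given for the apparent non-linearity.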

- How correlated were learning rate and sensitivity parameters? The EM algorithm used here can sometimes result in high correlations among these sets of parameters.

As the reviewer suspects, the parameters were strongly correlated, especially the punishment-specific parameters. The Pearson’s r estimates for the untransformed parameter values were as follows:

Reward parameters: discovery sample r = -0.39; replication sample r = -0.78

Punishment parameters: discovery sample r = -0.91; replication sample r = -0.85

We have included the correlation matrices of the estimated parameters as Supplementary Figure 2 in the ‘Computational modelling’ section of the Supplement.

We have now also re-fitted the winning model using variational Bayesian inference (VBI) via Stan, and found that the cross-parameter correlations were much lower than when the data were fitted using EM. We also ran a sensitivity analysis assessing whether using VBI changed the main findings of our studies. This showed that the correlation between task-induced anxiety and the reward-punishment sensitivity index was robust to fitting method, as was the mediating effect of reward-punishment sensitivity index on anxiety’s effect on choice. This indicates that overall our key findings are robust to different methods of parameter-fitting.

We now direct readers to these analyses from the new ‘Sensitivity analyses’ section in the manuscript, as follows:

As our procedure for estimating model parameters (the expectation-maximisation algorithm, see ‘Methods’) produced high inter-parameter correlations in our data (Supplementary Figure 2), we also re-estimated the parameters using Stan’s variational Bayesian inference algorithm (Stan Development Team, 2023) – this resulted in lower inter-parameter correlations, but our primary computational finding, that the effect of anxiety on choice is mediated by relative sensitivity to reward/punishment was consistent across algorithms (see Supplement section 9.8 for details).

We have included the relevant analyses comparing EM and VBI in the Supplement, as follows:

[9.8 Sensitivity analysis: estimating parameters via expectation maximisation and variational Bayesian inference algorithms]

Given that the expectation maximisation (EM) algorithm produced high inter-parameter correlations, we ran a sensitivity analysis by assessing the robustness of our computational findings to an alternative method of parameter estimation – (mean-field) variational Bayesian inference (VBI) via Stan (Stan Development Team, 2023). Since, unlike EM, the results of VBI are very sensitive to initial values, we fitted the data 10 times with different initial values.

Inter-parameter correlations

The VBI produced lower inter-parameter correlations than the EM algorithm (Supplementary Figure 8).

Sensitivity analysis

Since multicollinearity in the VBI-estimated parameters was lower than for EM, indicating less trade-off in the estimation, we re-tested our computational findings from the manuscript as part of a sensitivity analysis. We first assessed whether we observed the same correlations between task-induced anxiety and punishment learning, and reward-punishment sensitivity index (Supplementary Figure 9a). Punishment learning rate was not significantly associated with task-induced anxiety in any of the 10 VBI iterations in the discovery sample, although it was in 9/10 in the replication sample. On the other hand, the reward-punishment sensitivity index was significantly associated with task-induced anxiety in 9/10 VBI iterations in the discovery sample and all iterations in the replication sample. This suggests that the correlation of anxiety and sensitivity index is robust to these two fitting approaches.

We also re-estimated the mediation models, where in the EM-estimated parameters, we found that the reward-punishment sensitivity index mediated the relationship between task-induced anxiety and task choice proportions (Supplementary Figure 9b). Again, we found that the reward-punishment sensitivity index was a significant mediator in 9/10 VBI iterations in the discovery sample and all iterations in the replication sample. Punishment learning rate was also a significant mediator in 9/10 iterations in the replication sample, although it was not in the discovery sample for all iterations, and this was not observed for the EM-estimated parameters.

Overall, we found that our key results, that anxiety is associated with greater sensitivity to punishment over reward, and this mediates the relationship between anxiety and approach-avoidance behaviour, were robust across both fitting methods.

As an aside, we were unable to run the model fitting using Markov chain Monte Carlo sampling approaches due to the computational power and time required for a sample of this size (Pike & Robinson, 2022, JAMA Psychiatry).

- What is the split-half reliability of the task parameters?

We thank the reviewer for this query. We have now included a brief section on the (good-to-excellent) split-half reliability of the task in the manuscript:

We assessed the split-half reliability of the task by correlating the overall proportion of conflict option choices and model parameters from the winning model across the first and second half of trials. For overall choice proportion, reliability was simply calculated via Pearson’s correlations. For the model parameters, we calculated model-derived estimates of Pearson’s r values from the parameter covariance matrix when first- and second-half parameters were estimated within a single model, following a previous approach recently shown to accurately estimate parameter reliability (Waltmann et al., 2022). We interpreted indices of reliability based on conventional values of < 0.40 as poor, 0.4 - 0.6 as fair, 0.6 - 0.75 as good, and > 0.75 as excellent reliability (Fleiss, 1986). Overall choice proportion showed good reliability (discovery sample r = 0.63; replication sample r = 0.63; Supplementary Figure 5). The model parameters showed good-to-excellent reliability (model-derived r values ranging from 0.61 to 0.85 [0.76 to 0.92 after Spearman-Brown correction]; Supplementary Figure 5).
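
The Spearman-Brown step and the reliability bands quoted above can be illustrated with a short sketch. The function names are hypothetical, and the correction shown is the standard two-half formula r_SB = 2r / (1 + r); the text does not state which variant was used, so this is an assumption, as is the handling of values that fall exactly on a band boundary.

```python
def spearman_brown(r: float) -> float:
    """Standard Spearman-Brown correction for a test split into two halves."""
    return 2 * r / (1 + r)


def fleiss_label(r: float) -> str:
    """Reliability bands as quoted in the text (Fleiss, 1986).

    Boundary values (exactly 0.60 or 0.75) are assigned by assumption.
    """
    if r < 0.40:
        return "poor"
    if r < 0.60:
        return "fair"
    if r <= 0.75:
        return "good"
    return "excellent"


for r in (0.61, 0.85):
    corrected = spearman_brown(r)
    print(f"r = {r:.2f} ({fleiss_label(r)}) -> "
          f"corrected {corrected:.2f} ({fleiss_label(corrected)})")
```

Under this assumption, the uncorrected range of 0.61 to 0.85 maps to roughly 0.76 to 0.92, in line with the bracketed values reported above.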

- The authors do a good job of avoiding causal language when setting up the cross-sectional mediation analysis, but depart from this in the discussion (line 335). Without longitudinal data, they cannot claim that "mediation analyses revealed a mechanism of how anxiety induces avoidance".

Thank you for spotting this. We have now amended the text to:

… mediation analyses suggested a potential mechanism of how anxiety may induce avoidance.

Reviewer #2 (Public Review):

Summary:

The authors develop a computational approach-avoidance-conflict (AAC) task, designed to overcome limitations of existing offer based AAC tasks. The task incorporated likelihoods of receiving rewards/ punishments that would be learned by the participants to ensure computational validity and estimated model parameters related to reward/punishment and task induced anxiety. Two independent samples of online participants were tested. In both samples participants who experienced greater task induced anxiety avoided choices associated with greater probability of punishment. Computational modelling revealed that this effect was explained by greater individual sensitivities to punishment relative to rewards.

Strengths:

Large internet-based samples, with discovery sample (n = 369), pre-registered replication sample (n = 629) and test-retest sub group (n = 57). Extensive compliance measures (e.g. audio checks) seek to improve adherence.

There is a great need for RL tasks that model threatening outcomes rather than simply loss of reward. The main model parameters show strong effects and the additional indices with task based anxiety are a useful extension. Associations were broadly replicated across samples. Fair to excellent reliability of model parameters is encouraging and badly needed for behavioral tasks of threat sensitivity.

We thank the reviewer for their comments and constructive feedback.

The task seems to have lower approach bias than some other AAC tasks in the literature. Although this was inferred by looking at Fig 2 (it doesn't seem to drop below 46%) and Fig 3d seems to show quite a strong approach bias when using a reward/punishment sensitivity index. It would be good to confirm some overall stats on % of trials approached/avoided overall.

The range of choice proportions is indeed an interesting statistic that we have now included in the manuscript:

Across individuals, there was considerable variability in overall choice proportions (discovery sample: mean = 0.52, SD = 0.14, min/max = [0.03, 0.96]; replication sample: mean = 0.52, SD = 0.14, min/max = [0.01, 0.99]).

Weaknesses:

The negative reliability of punishment learning rate is concerning as this is an important outcome.

We agree that this is a concerning finding. As reviewer 3 notes, this may have been due to participants having control over the volume used to play the aversive sounds in the task (see below for our response to this point). Future work with better controlled experimental settings will be needed to determine the reliability of this parameter more accurately.

This may also have been due to the asymmetric nature of the task, as only one option could produce the punishment. This means that there were fewer trials on which to estimate learning about the occurrence of a punishment. Future work using continuous outcomes, as the reviewer suggests below, whilst keeping the asymmetric relationship between the options, could help in this regard.

We have included the following comment on this issue in the manuscript:

Alternatively, as participants self-determined the loudness of the punishments, differences in volume settings across sessions may have impacted the reliability of this parameter (and indeed punishment sensitivity). Further, the asymmetric nature of the task may have impacted our ability to estimate the punishment learning rate, as there were fewer occurrences of the punishment compared to the reward.

The Kendall's tau values underlying task induced anxiety and safety reference/ various indices are very weak (all < 0.1), as are the mediation effects (all beta < 0.01). This should be highlighted as a limitation, although the interaction with P(punishment|conflict) does explain some of this.

We now include references to the effect sizes to emphasise this limitation. We also note, as the reviewer suggests, that this may be due to the crudeness of overall choice proportion as a measure of approach/avoidance, as it is contaminated with variables such as P(punishment|conflict).

One potentially important limitation of our findings is the small effect size observed in the correlation between task-induced anxiety and avoidance (Kendall's tau values < 0.1, mediation betas < 0.01). This may be attributed to the simplicity of using overall choice proportion as a measure of approach/avoidance, as the effect of anxiety on choice was also influenced by punishment probability.

The inclusion of only one level of reward (and punishment) limits the ecological validity of the sensitivity indices.

We agree that using multi-level outcomes will be an important question for future work and now explicitly note this in the manuscript, as below:

Using multi-level or continuous outcomes would also improve the ecological validity of the present approach and interpretation of the sensitivity parameters.

Appraisal and impact:

Overall this is a very strong paper, describing a novel task that could help move the field of RL forward to take account of threat processing more fully. The large sample size with discovery, replication and test-retest gives confidence in the findings. The task has good ecological validity and associations with task-based anxiety and clinical self-report demonstrate clinical relevance. The authors could give further context but test-retest of the punishment learning parameter is the only real concern. Overall this task provides an exciting new probe of reward/threat that could be used in mechanistic disease models.

We thank the reviewer again for helping us to improve our analyses and manuscript.

Reviewer #2 (Recommendations For The Authors):

Additional context:

In the introduction "cognitive tasks that bear little semblance to those used in the non-human literature" seems a little unfair. One study that is already cited (Ironside et al, 2020) used a task that was adapted from non-human primates for use in humans. It has almost identical visual stimuli (different levels of simultaneous reward and aversive outcome/punishment) and response selection processes (joystick) between species and some overlapping brain regions were activated across species for conflict and aversiveness. The later point that non-human animals must be trained on the association between action and outcome is well taken from the point of view of computational validity but perhaps not sufficient to justify the previous statement.

Our intention for this section was to briefly convey that historically, approach-avoidance conflict would have been measured either using questionnaires or joystick-based tasks which have no direct non-human counterpart. However, we agree that this phrasing is unfair to recent studies such as those by Ironside and colleagues. Therefore, we have amended the text to the following:

In humans, on the other hand, approach-avoidance conflict has historically been measured using questionnaires such as the Behavioural Inhibition/Activation Scale (Carver & White, 1994), or cognitive tasks that rely on motor biases to approach/move towards positive stimuli and avoid/move away from negative stimuli which have no direct non-human counterparts (Guitart-Masip et al., 2012; Kirlic et al., 2017; Mkrtchian et al., 2017; Phaf et al., 2014).

It would be good to speculate on why task induced anxiety made participants slower to update their estimates of punishment probability.

Although a meta-analysis of reinforcement learning studies using reward and punishment outcomes suggests a positive association between punishment learning rate and anxiety symptoms (and depressed mood), we paradoxically found the opposite effect. However, previous work has suggested that distinct forms of anxiety associate differently with punishment learning rate (Wise & Dolan, 2020, Nat. Commun.): somatic anxiety was negatively correlated with punishment learning rate, whereas cognitive anxiety showed the opposite effect. We have now added the following to the manuscript, and noted that future work is needed to understand the potentially complex relationship between anxiety and learning from punishments:

Notably, although a recent computational meta-analysis of reinforcement learning studies showed that symptoms of anxiety and depression are associated with elevated punishment learning rates (Pike & Robinson, 2022), we did not observe this pattern in our data. Indeed, we even found the contrary effect in relation to task-induced anxiety, specifically that anxiety was associated with lower rates of learning from punishment. However, other work has suggested that the direction of this effect can depend on the form of anxiety, where cognitive anxiety may be associated with elevated learning rates, but somatic anxiety may show the opposite pattern (Wise & Dolan, 2020) and this may explain the discrepancy in findings. Additionally, parameter values are highly dependent on task design (Eckstein et al., 2022), and study designs to date may be more optimised in detecting differences in learning rate (Pike & Robinson, 2022) – future work is needed to better understand the potentially complex association between anxiety and punishment learning rate. Lastly, as punishment learning rate was severely unreliable in the test-retest analyses, and the associations between punishment learning rate and state anxiety were not robust to an alternative method of parameter estimation (variational Bayesian inference), the negative correlation observed in our study should be treated with caution.

Were those with more task-based anxiety more inflexible in general?

The lack of an association between reward learning rate and task-induced anxiety suggests that this was not a general inflexibility effect. To test the reviewer’s hypothesis more directly, we conducted a sensitivity analysis by examining the model with a general learning rate – this did not support a general inflexibility effect. Please see the new section in the Supplement below:

[9.10 Sensitivity analysis: anxiety and inflexibility]

As anxious participants were slower to update their estimates of punishment probability, we determined whether this was due to greater general inflexibility by examining the model including two sensitivity parameters, but one general learning rate (i.e. not split by outcome). The correlation between this general learning rate and task-induced anxiety was not significant in either sample (discovery: tau = -0.02, p = 0.504; replication: tau = -0.01, p = 0.625), suggesting that the effect is specific to punishment.
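To make the structure of this sensitivity model concrete, the following is a minimal sketch of a learner with separate reward and punishment sensitivities but a single learning rate shared across outcomes. The functional form (delta-rule updates, a logistic choice rule, and the dictionary keys) is an assumption chosen for illustration and is not necessarily the exact specification fitted in the manuscript.

```python
import math

def choice_prob_conflict(est, reward_sens, punish_sens):
    """Probability of choosing the conflict option under a logistic choice rule.

    est holds the learner's current probability estimates (hypothetical keys).
    """
    v_conflict = (reward_sens * est["conflict_reward"]
                  - punish_sens * est["conflict_punish"])
    v_safe = reward_sens * est["safe_reward"]  # the safe option is never punished
    return 1.0 / (1.0 + math.exp(-(v_conflict - v_safe)))

def update(est, choice, reward, punish, learning_rate):
    """Delta-rule update using ONE learning rate for both outcome types."""
    new = dict(est)
    if choice == "conflict":
        new["conflict_reward"] += learning_rate * (reward - est["conflict_reward"])
        new["conflict_punish"] += learning_rate * (punish - est["conflict_punish"])
    else:
        new["safe_reward"] += learning_rate * (reward - est["safe_reward"])
    return new

# Illustrative starting estimates (assumed, not taken from the study)
start = {"conflict_reward": 0.5, "conflict_punish": 0.5, "safe_reward": 0.5}
after = update(start, "conflict", reward=1, punish=1, learning_rate=0.1)
```

Because the same learning rate governs both the reward and punishment updates, a correlation between that parameter and anxiety would indicate slower updating in general rather than slower updating from punishment specifically.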

Was the 16% versus 20% of the two samples with clinically relevant anxiety symptoms significantly different? What about other demographics in the two samples?

The proportions were not significantly different (χ²= 2.33, p = 0.127). The discovery sample included more females and was older on average compared to the replication sample – information which we now report in the manuscript:

The discovery sample consisted of a significantly greater proportion of female participants than the replication sample (59% vs 52%, χ² = 4.64, p = 0.031). The average age was significantly different across samples (discovery sample mean = 37.7, SD = 10.3, replication sample mean = 34.3, SD = 10.4; t(785.5) = 5.06, p < 0.001). The differences in self-reported psychiatric symptoms across samples did not reach significance (p > 0.086).
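For readers who wish to check sample comparisons of this kind, the sketch below computes a Pearson chi-square test for two proportions and a Welch t-test from summary statistics. The function names are illustrative, and the sample sizes passed to the Welch test (372 and 627) are an assumption obtained by subtracting the reported exclusions from the recruited totals. Because the inputs are rounded summary values, the output should land close to, but not exactly on, the reported statistics.

```python
import math

def chi_square_two_proportions(k1, n1, k2, n2):
    """Pearson chi-square (1 df, no continuity correction) for two proportions.

    k = number of cases with the attribute, n = group size.
    Assumes the pooled proportion is strictly between 0 and 1.
    """
    pooled = (k1 + k2) / (n1 + n2)
    chi2 = 0.0
    for k, n in ((k1, n1), (k2, n2)):
        for observed, expected in ((k, n * pooled), (n - k, n * (1 - pooled))):
            chi2 += (observed - expected) ** 2 / expected
    # Upper-tail probability of a chi-square variable with 1 df
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value

def welch_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# Age comparison from the reported means and SDs; group sizes are assumed
welch_t, welch_df = welch_t_from_summary(37.7, 10.3, 372, 34.3, 10.4, 627)
```

The chi-square function needs raw counts, which are not given here, so it is left without a worked call on the study data.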

It would be interesting to know how many participants failed the audio attention checks.

We have now included information about what proportion of participants fail each of the task exclusion criteria in the manuscript:

Firstly, we excluded participants who missed a response to more than one auditory attention check (see above; 8% in both discovery and replication samples) – as these occurred infrequently and the stimuli used for the checks were played at relatively low volume, we allowed for incorrect responses so long as a response was made. Secondly, we excluded those who responded with the same response key on 20 or more consecutive trials (> 10% of all trials; 4%/6% in discovery and replication samples, respectively). Lastly, we excluded those who did not respond on 20 or more trials (1%/2% in discovery and replication samples, respectively). Overall, we excluded 51 out of 423 (12%) in the discovery sample, and 98 out of 725 (14%) in the replication sample.
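The three exclusion rules above can be expressed as a simple per-participant check. The following is a hedged sketch rather than the authors' pipeline: the data layout (a list of key presses per trial, with `None` marking a missed trial) and the function names are hypothetical, and it assumes that a missed trial interrupts a run of identical key presses.

```python
def longest_same_key_run(keys):
    """Longest run of identical consecutive key presses (None = no response)."""
    longest = current = 0
    previous = None
    for key in keys:
        if key is not None and key == previous:
            current += 1
        else:
            # Start a new run; a missed trial resets the count (assumption)
            current = 1 if key is not None else 0
        previous = key
        longest = max(longest, current)
    return longest

def should_exclude(keys, missed_attention_checks):
    """Apply the three task-based exclusion criteria to one participant."""
    missed_trials = sum(1 for key in keys if key is None)
    return (
        missed_attention_checks > 1         # no response to more than one check
        or longest_same_key_run(keys) >= 20  # same key on 20+ consecutive trials
        or missed_trials >= 20               # no response on 20+ trials
    )
```

Note that the run check operates on response keys, not on chosen options, so a participant who consistently prefers one option is not flagged as long as the option's screen position varies across trials.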

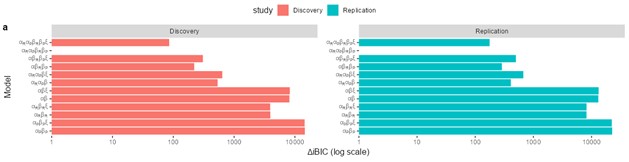

There doesn't appear to be a model with only learning from punishment (i.e. no reward learning) included in the model comparison. It would be interesting to see how it compared.

We have fitted the suggested model and found that it is the least parsimonious of the models. Since participants were monetarily incentivised based on the rewards only, this was to be expected. We have now added this ‘punishment learning only’ model and its variant including a lapse term into the model comparison. The two lowest bars on the y-axis in Author response image 2 represent these models.

Author response image 2.

Were sex effects examined, as these have been commonly found in AAC tasks? How about other covariates such as age?

We have now tested the effects of sex and age on behaviour and on parameter values. There were indeed some significant effects, albeit with some inconsistencies across the two samples, which for completeness we have included in the manuscript, as follows:

While sex was significantly associated with choice in the discovery sample (β = 0.16 ± 0.07, p = 0.028) with males being more likely to choose the conflict option, this pattern was not evident in the replication sample (β = 0.08 ± 0.06, p = 0.173), and age was not associated with choice in either sample (p > 0.2).

Comparing parameters across sexes via Welch’s t-tests revealed significant differences in reward sensitivity (t(289) = -2.87, p = 0.004, d = 0.34; lower in females) and consequently reward-punishment sensitivity index (t(336) = -2.03, p = 0.043, d = 0.22; lower in females i.e. more avoidance-driven). In the replication sample, we observed the same effect on reward-punishment sensitivity index (t(626) = -2.79, p = 0.005, d = 0.22; lower in females). However, the sex difference in reward sensitivity did not replicate (p = 0.441), although we did observe a significant sex difference in punishment sensitivity in the replication sample (t(626) = 2.26, p = 0.024, d = 0.18).

Minor: Still a few placeholders (Supplementary Table X/ Table X) in the methods

We thank the reviewer for spotting these errors. We have now corrected these references.

Reviewer #3 (Public Review):

This study investigated cognitive mechanisms underlying approach-avoidance behavior using a novel reinforcement learning task and computational modelling. Participants could select a risky "conflict" option (latent, fluctuating probabilities of monetary reward and/or unpleasant sound [punishment]) or a safe option (separate, generally lower probability of reward). Overall, participant choices were skewed towards more rewarded options, but were also repelled by increasing probability of punishment. Individual patterns of behavior were well-captured by a reinforcement learning model that included parameters for reward and punishment sensitivity, and learning rates for reward and punishment. This is a nice replication of existing findings suggesting reward and punishment have opposing effects on behavior through dissociated sensitivity to reward versus punishment.

Interestingly, avoidance of the conflict option was predicted by self-reported task-induced anxiety. This effect of anxiety was mediated by the difference in modelled sensitivity to reward versus punishment (relative sensitivity). Importantly, when a subset of participants were retested over 1 week later, most behavioral tendencies and model parameters were recapitulated, suggesting the task may capture stable traits relevant to approach-avoidance decision-making.

We thank the reviewer for their useful analysis of our study. Indeed, it was reassuring to see that performance indices were reliable across time.

However, the interpretation of these findings is severely undermined by the fact that the aversiveness of the auditory punisher was largely determined by participants, with the far-reaching impacts of this not being accounted for in any of the analyses. The manipulation check to confirm participants did not mute their sound is highly commendable, but the thresholding of punisher volume to "loud but comfortable" at the outset of the task leaves substantial scope for variability in the punisher delivered to participants. Indeed, participants' ratings of the unpleasantness of the punishment were moderate and highly variable (M = 31.7 out of 50, SD = 12.8 [distribution unreported]). Although this rating was collected, it is not incorporated into the analyses. It is possible that the key finding of relationships between task-induced anxiety, reward-punishment sensitivity and avoidance are driven by differences in the punisher experienced; a louder punisher is more unpleasant, driving greater task-induced anxiety, model-derived punishment sensitivity, and avoidance (and vice versa). This issue can also explain the counterintuitive findings from re-tested participants; lower/negatively correlated task-induced anxiety and punishment-related cognitive parameters may have been due to participants adjusting their sound settings to make the task less aversive (retest punisher rating not reported). It can therefore be argued that the task may not actually capture meaningful cognitive/motivational traits and their effects on decision-making, but instead spurious differences in punisher intensity.

We thank the reviewer for raising this important potential limitation of our study. We agree that how participants self-adjusted their sound volume may have important consequences for our interpretations of the data. Unfortunately, despite the scalability of online data collection, this highlights one of its major weaknesses: the lack of control over experimental parameters. The previous paper from which we obtained our aversive sounds (Seow & Hauser, 2021, Behav Res, doi.org/10.3758/s13428-021-01643-0) contains useful analyses with regards to this discussion. When comparing the unpleasantness of the sounds played at 50% vs 100% volume, the authors indeed found that the lower volumes led to lower unpleasantness ratings. However, the magnitude of this effect did not appear to be substantial (Fig. 4 from the paper), and even at 50% volume, the scream sounds we used were rated in the top quartile for unpleasantness, on average. This implies that the sounds have sufficient inherent unpleasantness, even when played at half intensity. We find this reassuring, in the sense that any self-imposed volume effects may not be large. Of note, our instructions to participants to adjust the volume to a ‘loud but comfortable’ level were based on the same phrasing used in this study.

To the reviewer’s point on how this might affect the reliability of the task, we have included the following in the ‘Discussion’ section:

Alternatively, as participants self-determined the loudness of the punishments, differences in volume settings across sessions may have impacted the reliability of this parameter (and indeed other measures).

Please see below for analyses accounting for punishment unpleasantness ratings.

This undercuts the proposed significance of this task as a translational tool for understanding anxiety and avoidance. More information about ratings of punisher unpleasantness and its relationship to task behavior, anxiety and cognitive parameters would be valuable for interpreting findings. It would also be of interest whether the same results were observed if the aversiveness of the punisher was titrated prior to the task.

As suggested, we have now included sensitivity analyses using the unpleasantness ratings that show their effect is minimal on our primary inference. We report relevant results below in the ‘Recommendations For The Authors’ section. At the same time, we think it is important to acknowledge that unpleasantness is a combination of both the inherent unpleasantness of the sound and the volume it is presented at, where only the latter is controlled by the participant. Therefore, these analyses are not a perfect indicator of the effect of participant control. For convenience, we reproduce the key findings from this sensitivity analysis here:

Approach-avoidance hierarchical logistic regression model

We assessed whether approach and avoidance responses, and their relationships with state anxiety, were impacted by punishment unpleasantness, by including unpleasantness ratings as a covariate into the hierarchical logistic regression model. Whilst unpleasantness was a significant predictor of choice (positively predicting safe option choices), all significant predictors and interaction effects from the model without unpleasantness survived (Supplementary Figure 11). Critically, this suggests that punishment unpleasantness does not account for all of the variance in the relationship between anxiety and avoidance.

Mediation model

When unpleasantness ratings were included in the mediation models, the mediating effect of the reward-punishment sensitivity index did not survive (discovery sample: standardised β = 0.003 ± 0.003, p = 0.416; replication sample: standardised β = 0.004 ± 0.003, p = 0.100; Supplementary Figure 12). Pooling the samples resulted in an effect that narrowly missed the significance threshold (standardised β = 0.004 ± 0.002, p = 0.068).

More generally, whether or not to titrate the punishments (and indeed the rewards) is an interesting experimental decision, which we think should be guided by the research question. In our case, we were interested in individual differences in reward/punishment learning and sensitivity and their relation to anxiety, so variation in how aversive participants found the sounds, and in how this affected approach-avoidance decisions, was an important aspect of our design. In studies where the aim is to understand more general processes of how humans act under approach-avoidance conflict, it may be better to tightly control the salience of reinforcers.

Ultimately, the best test of the causal role of anxiety on avoidance, and against the hypothesis that our results were driven by spurious volume control effects, would be to run within-subjects anxiety interventions, where these volume effects are naturally accounted for. This will be an important direction for future studies using similar measures. We have added a paragraph in the ‘Discussion’ section on this point:

Relatedly, participants had some control over the intensity at which the punishments were presented, which may have driven our findings relating to anxiety and putative mechanisms of anxiety-related avoidance. Sensitivity analyses showed that our finding that anxiety is positively associated with avoidance in the task was robust to individual differences in self-reported punishment unpleasantness, whilst the mediation effects were not. Future work imposing better control over the stimuli presented, and/or using within-subjects designs will be needed to validate the role of reward/punishment sensitivities in anxiety-related avoidance.

Although the procedure and findings reported here remain valuable to the field, claims of novelty including its translational potential are perhaps overstated. This study complements and sits within a much broader literature that investigates roles for aversion and cognitive traits in approach-avoidance decisions. This includes numerous studies that apply reinforcement learning models to behavior in two-choice tasks with latent probabilities of reward and punishment (e.g., see doi: 10.1001/jamapsychiatry.2022.0051), as well as other translationally-relevant paradigms (e.g., doi: 10.3389/fpsyg.2014.00203, 10.7554/eLife.69594, etc).

We agree with the reviewer that our approach builds on previous work in reinforcement learning, approach-avoidance conflict and translational measures of anxiety. Whilst there are by now many studies using two-choice learning tasks with latent reward and punishment probabilities, our main aim, and the one we refer to as ‘novel’, was to bring these fields together so as to model anxiety-related behaviour.

We note that we do not make strong statements about whether these effects speak to traits per se, and as Reviewer 1 notes, the evidence from our study suggests that the present measure may be better suited to assessing state anxiety. While computational model parameters can be, and certainly often are, interpreted as constituting stable individual traits, a simpler interpretation of our findings may be that state anxiety is associated with a momentary preference for punishment avoidance over reward pursuit. This can still be informative for the study of anxiety, especially given the notion of a continuous relationship between adaptive/state anxiety and maladaptive/persistent anxiety.

Having said that, we agree with the underlying premise of the reviewer’s point that how the measure relates to trait-level avoidance/inhibition measures will be an interesting question for future work. We appreciate the importance of using tasks such as ours and those highlighted by the reviewer as trait-level measures, especially in computational psychiatry. We have now included a discussion on the potential roles of cognitive/motivational traits, in line with the reviewer’s recommendation – briefly, we have included the suggested references by the reviewer, discussed the measure’s potential relevance to cognitive/motivational traits, and direct interested readers to the broader literature. Please see below for details.

Reviewer #3 (Recommendations For The Authors):

As stated in the public review, punisher unpleasantness and its relationship to key findings (including for retest) should be reported and discussed.

We signpost readers to our new analyses, incorporating unpleasantness ratings into the statistical models, from the main manuscript as follows:

Since participants self-determined the volume of the punishments in the task, and therefore (at least in part) their aversiveness, we conducted sensitivity analyses by accounting for self-reported unpleasantness ratings of the punishment (see the Supplement). Our finding that anxiety impacts approach-avoidance behaviour was robust to this sensitivity analysis (p < 0.001); however, the mediating effect of the reward-punishment sensitivity index was not (p > 0.1; see Supplement section 9.9 for details).

We reproduce the relevant section from the Supplement below. Overall, we found that the effect of anxiety on choices (via its interaction with punishment probability) remained significant after accounting for unpleasantness; however, the mediating effect of reward-punishment sensitivity was no longer significant when unpleasantness ratings were included in the model. As noted above, unpleasantness ratings are not a perfect measure of self-imposed sound volume, and indeed punishment sensitivity is essentially a computationally-derived measure of unpleasantness, which makes it difficult to interpret the mediation model which contains both of these measures. However, since we found that anxiety affected choice over and above any effects of self-imposed sound volume (using unpleasantness ratings as a proxy measure), we argue that the task still holds value as a model of anxiety-related avoidance.

[Supplement Section 9.9: Sensitivity analyses of punishment unpleasantness]

Distribution of unpleasantness

The punishments were rated as unpleasant by the participants, on average (discovery sample: mean rating = 31.1 [scored between 0 and 50], SD = 13.1; replication sample: mean rating = 32.1, SD = 12.7; Supplementary Figure 10).

Approach-avoidance hierarchical logistic regression model

We assessed whether approach and avoidance responses, and their relationships with state anxiety, were impacted by punishment unpleasantness, by including unpleasantness ratings as a covariate into the hierarchical logistic regression model. Whilst unpleasantness was a significant predictor of choice (positively predicting safe option choices), all significant predictors and interaction effects from the model without unpleasantness ratings survived (Supplementary Figure 11). Critically, this suggests that punishment unpleasantness does not account for all of the variance in the relationship between anxiety and avoidance.

Mediation model

When unpleasantness ratings were included in the mediation models, the mediating effect of the reward-punishment sensitivity index did not survive (discovery sample: standardised β = 0.003 ± 0.003, p = 0.416; replication sample: standardised β = 0.004 ± 0.003, p = 0.100; Supplementary Figure 12). Pooling the samples resulted in an effect that narrowly missed the significance threshold (standardised β = 0.004 ± 0.002, p = 0.068).

Test-retest reliability of unpleasantness

The test-retest reliability of unpleasantness ratings was excellent (ICC(3,1) = 0.75), although participants gave significantly lower ratings in the second session (t(56) = 2.7, p = 0.008, d = 0.37; mean difference of 3.12, SD = 8.63).
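A high ICC(3,1) alongside a significant drop in mean ratings is not contradictory, because this form of the ICC measures consistency and ignores systematic shifts between sessions. The sketch below computes ICC(3,1) from two-way ANOVA mean squares using the standard formula (MS_rows - MS_error) / (MS_rows + (k - 1) MS_error). The function name and the toy data are illustrative and are not the study data.

```python
def icc_3_1(scores):
    """ICC(3,1): two-way mixed effects, consistency, single measurement.

    scores: list of rows, one row per participant, one column per session.
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between sessions

    ms_rows = ss_rows / (n - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)

# Toy example: every participant rates the sound 3 points lower at retest
shifted = [[10, 7], [20, 17], [30, 27], [40, 37]]
consistency = icc_3_1(shifted)
```

In the toy example the rank ordering and spacing of participants are perfectly preserved, so the between-session shift is absorbed by the session term and does not lower the coefficient.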

Reliability of other measures with/out unpleasantness

To assess the effect of accounting for unpleasantness ratings on reliability estimates of task performance, we extracted variance components from linear mixed models, following a standard approach (Nakagawa et al., 2017) – note that this was not the method used to estimate reliability values in the main analyses, but we used this specific approach to compare the reliability values with and without the covariate of unpleasantness ratings. The results indicated that unpleasantness ratings did not have a material effect on reliability (Supplementary Figure 14).

We discuss the findings of these sensitivity analyses in the ‘Discussion’ section, as follows:

Relatedly, participants had some control over the intensity at which the punishments were presented, which may have driven our findings relating to anxiety and putative mechanisms of anxiety-related avoidance. Sensitivity analyses showed that our finding that anxiety is positively associated with avoidance in the task was robust to individual differences in self-reported punishment unpleasantness, whilst the mediation effects were not. Future work imposing better control over the stimuli presented, and/or using within-subjects designs will be needed to validate the role of reward/punishment sensitivities in anxiety-related avoidance.

Introduction and discussion should spend more time relating the task and current findings to existing procedures and findings examining individual differences in avoidance and cognitive/motivational correlates.

We thank the reviewer for the opportunity to expand on the literature. Whilst there are numerous behavioural paradigms in both the human and non-human literature that involve learning about rewards and punishments, our starting point for the introduction was the state-of-the-art in translational approach-avoidance conflict models of anxiety. Therefore, for the sake of brevity and logical flow of our introduction, we have opted to bring in the discussion on other procedures primarily in the ‘Discussion’ section of the manuscript.

We have now included the reviewer’s suggested citations from their ‘Public Review’ as follows:

Since we developed our task with the primary focus on translational validity, its design diverges from other reinforcement learning tasks that involve reward and punishment outcomes (Pike & Robinson, 2022). One important difference is that we used distinct reinforcers as our reward and punishment outcomes, compared to many studies which use monetary outcomes for both (e.g. earning and losing £1 constitute the reward and punishment, respectively; Aylward et al., 2019; Jean-Richard-Dit-Bressel et al., 2021; Pizzagalli et al., 2005; Sharp et al., 2022). Other tasks have been used that induce a conflict between value and motor biases, relying on prepotent biases to approach/move towards rewards and withdraw from punishments, which makes it difficult to approach punishments and withdraw from rewards (Guitart-Masip et al., 2012; Mkrtchian et al., 2017). However, since translational operant conflict tasks typically induce a conflict between different types of outcome (e.g. food and shocks/sugar and quinine pellets; Oberrauch et al., 2019; van den Bos et al., 2014), we felt it was important to implement this feature. One study used monetary rewards and shock-based punishments, but also included four options for participants to choose from on each trial, with rewards and punishments associated with all four options (Seymour et al., 2012). This effectively requires participants to maintain eight probability estimates (i.e. reward and punishment at each of the four options) to solve the task, which may be too difficult for non-human animals to learn efficiently.

We have also included a discussion on the measure’s potential relevance to cognitive/motivational traits as follows:

Finally, whilst there is a broad literature on the roles of behavioural inhibition and avoidance tendency traits on decision-making and behaviour (Carver & White, 1994; Corr, 2004; Gray, 1982), we did not replicate the correlation of experiential avoidance and avoidance responses or the reward-punishment sensitivity index. Since there were also no significant correlations across task performance indices and clinical symptom measures, our findings suggest that the measure may be more sensitive to behaviours relating to state anxiety, rather than more stable traits. Nevertheless, how performance in the present task relates to other traits such as behavioural approach/inhibition tendencies (Carver & White, 1994), as has been found in previous studies on reward/punishment learning (Sharp et al., 2022; Wise & Dolan, 2020) and approach-avoidance conflict (Aupperle et al., 2011), will be an important question for future work.

We also now direct readers to a recent, comprehensive review on applying computational methods to approach-avoidance behaviours in the ‘Introduction’ section:

A fundamental premise of this approach is that the brain acts as an information-processing organ that performs computations responsible for observable behaviours, including approach and avoidance (for a recent review on the application of computational methods to approach-avoidance conflict, see Letkiewicz et al., 2023).

I am curious why participants were excluded if they made the same response on 20+ consecutive trials. How does this represent a cut-off between valid versus invalid behavioral profiles?

We apologise for the lack of clarity on this point in our original submission – this exclusion criterion applied specifically when participants used the same response key (e.g. the left arrow button) on 20 or more consecutive trials, indicating inattention. Since the left-right positions of the stimuli were randomised across trials, this did not exclude participants who frequently chose the same option on consecutive trials. However, as we show in the Supplement, this, along with the other exclusion criteria, did not affect our main findings.

We have now clarified this as follows:

… we excluded those who responded with the same response key on 20 or more consecutive trials (> 10% of all trials; 4%/6% in discovery and replication samples, respectively) – note that as the options randomly switched sides on the screen across trials, this did not exclude participants who frequently and consecutively chose a certain option.