Peer review process

Revised: This Reviewed Preprint has been revised by the authors in response to the previous round of peer review; the eLife assessment and the public reviews have been updated where necessary by the editors and peer reviewers.

Read more about eLife’s peer review process.

Editors

- Reviewing Editor: Redmond O'Connell, Trinity College Dublin, Dublin, Ireland

- Senior Editor: Joshua Gold, University of Pennsylvania, Philadelphia, United States of America

Reviewer #1 (Public review):

Summary:

In this study, participants completed two tasks involving the same stimuli: a perceptual choice task, in which they compared the sizes of pairs of items, and a value-difference task, in which they identified the higher-value option in each pair. Based on previous fMRI research, the authors sought to determine whether the superior frontal sulcus (SFS) is involved in both perceptual and value-based decisions or in only one of them. Initial fMRI analyses were devised to isolate brain regions that were activated for both types of choice as well as regions unique to each. Transcranial magnetic stimulation (TMS) was applied to the SFS between fMRI sessions and was found to produce a significant decrease in accuracy and RT on the perceptual choice task but only a decrease in RT on the value-difference task. Hierarchical drift-diffusion modelling of the data indicated that TMS lowered decision boundaries in the perceptual task and shortened non-decision times in the value-based task. Additional analyses show that SFS activity covaries with model-derived estimates of cumulative evidence and that this relationship is weakened by TMS.

Strengths:

The paper has many strengths, including the rigorous multi-pronged approach of causal manipulation, fMRI and computational modelling, which offers a fresh perspective on the neural drivers of decision making. Additional strengths include the careful paradigm design, which ensured that the two types of tasks were matched for their perceptual content while orthogonalizing trial-to-trial variations in choice difficulty. The paper also lays out a number of specific, well-justified hypotheses at the outset regarding behavioural outcomes tied to decision-model parameters.

Weaknesses:

In my previous comments (1.3.1 and 1.3.2), I noted that key results could potentially be explained by cTBS leading to faster perceptual decision-making in both the perceptual and value-based tasks. The authors responded that, if this were the case, we would expect either a reduction in NDT in both tasks or a reduction in decision boundaries in both tasks (whereas they observed a lowering of boundaries in the perceptual task and a shortening of NDT in the value task). I disagree with this statement. First, the perceptual decision that must be completed before the value-based choice process can even begin (i.e., the identification of the two stimuli) is no less demanding than that involved in the perceptual choice task (comparison of stimulus size). Given that this perceptual step must be completed before the value comparison can begin, one would expect the model to capture any RT variation due to it in the NDT parameter, and not, as the authors suggest, in the bound or drift-rate parameters, since those are designed to account for the strength and final quantity of value evidence specifically. If cTBS does in fact cause a general lowering of decision boundaries for perceptual decisions (and hence faster RTs), then this should manifest as a shorter NDT in the value-task model, which is what the authors observe.

Reviewer #2 (Public review):

Summary:

The authors set out to test whether a TMS-induced reduction in excitability of the left superior frontal sulcus influenced evidence integration in perceptual and value-based decisions. They directly compared behaviour (including fits to a computational decision-process model) and fMRI before and after TMS in one task of each decision type. Their goal was to test domain-specific theories of the prefrontal cortex by examining whether the proposed role of the SFS in evidence integration was selective for perceptual but not value-based evidence.

Strengths:

The paper presents multiple credible sources of evidence for the role of the left SFS in perceptual decision making, finding similar mechanisms to prior literature and a nuanced discussion of where they diverge from prior findings. The value-based and perceptual decision-making tasks were carefully matched in terms of stimulus display and motor response, making their comparison credible.

Weaknesses:

- I was confused about the model specification regarding the relationship between evidence level and drift rate. The Methods (e.g., Supplementary Figure 3) specify a linear relationship between evidence level and drift rate, which suggests, unless I misunderstood, that only a single drift-rate parameter (kappa) is fit. However, the drift-rate estimates in the supplementary tables (and in the response to reviewers) do not scale linearly with evidence level.

- The fit quality for the value-based decision task is not as good as that for the perceptual decision task (PDM), and this would be worth commenting on in the paper.
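To make the reviewer's first point concrete: under a purely linear mapping with one slope parameter, drift at evidence level e would be δ = κ·e, so per-level drift estimates should lie on a single line through the origin. The sketch below, a minimal illustration rather than the authors' model, fits that one-parameter line by least squares and reports residuals. It assumes evidence levels coded 1 to 4 and no intercept (both assumptions), and the drift values are invented, not taken from the supplementary tables.

```python
def fit_kappa(evidence, drift):
    """Least-squares slope for drift = kappa * evidence (line through the origin)."""
    num = sum(e * d for e, d in zip(evidence, drift))
    den = sum(e * e for e in evidence)
    return num / den


def linearity_residuals(evidence, drift):
    """Residuals of per-level drift estimates from the single-kappa linear model."""
    kappa = fit_kappa(evidence, drift)
    return kappa, [d - kappa * e for e, d in zip(evidence, drift)]


# Hypothetical values for illustration only (evidence levels assumed to be coded 1..4).
levels = [1, 2, 3, 4]
linear_drift = [0.5, 1.0, 1.5, 2.0]       # exactly proportional to evidence
saturating_drift = [0.5, 0.9, 1.0, 1.05]  # rises monotonically but flattens

for name, drift in [("linear", linear_drift), ("saturating", saturating_drift)]:
    kappa, residuals = linearity_residuals(levels, drift)
    print(name, round(kappa, 3), [round(r, 3) for r in residuals])
```

Systematic residuals (positive at low evidence, negative at high evidence) are what one would expect if drift was estimated freely per level and then compared with a linear specification; which of the two specifications the manuscript actually uses has to be read from its Methods.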

Author response:

The following is the authors’ response to the original reviews

Public Reviews:

Reviewer #1 (Public Review):

Summary:

In this study, participants completed two tasks involving the same stimuli: a perceptual choice task, in which they compared the sizes of pairs of items, and a value-difference task, in which they identified the higher-value option in each pair. Based on previous fMRI research, the authors sought to determine whether the superior frontal sulcus (SFS) is involved in both perceptual and value-based decisions or in only one of them. Initial fMRI analyses were devised to isolate brain regions that were activated for both types of choice as well as regions unique to each. Transcranial magnetic stimulation (TMS) was applied to the SFS between fMRI sessions and was found to produce a significant decrease in accuracy and RT on the perceptual choice task but only a decrease in RT on the value-difference task. Hierarchical drift-diffusion modelling of the data indicated that TMS lowered decision boundaries in the perceptual task and shortened non-decision times in the value-based task. Additional analyses show that SFS activity covaries with model-derived estimates of cumulative evidence and that this relationship is weakened by TMS.

Strengths:

The paper has many strengths, including the rigorous multi-pronged approach of causal manipulation, fMRI and computational modelling, which offers a fresh perspective on the neural drivers of decision making. Additional strengths include the careful paradigm design, which ensured that the two types of tasks were matched for their perceptual content while orthogonalizing trial-to-trial variations in choice difficulty. The paper also lays out a number of specific, well-justified hypotheses at the outset regarding behavioural outcomes tied to decision-model parameters.

Weaknesses:

(1.1) Unless I have missed it, the SFS does not actually appear in the list of brain areas significantly activated by the perceptual and value tasks in Supplementary Tables 1 and 2. Its presence or absence from the list of significant activations is not mentioned by the authors when outlining these results in the main text. What are we to make of the fact that it is not showing significant activation in these initial analyses?

You are right that the left SFS does not appear in our initial task-level contrasts. Those first analyses were deliberately agnostic to evidence accumulation (i.e., average BOLD by task, irrespective of trial-by-trial evidence). Consistent with prior work, SFS emerges only when we model the parametric variation in accumulated perceptual evidence.

Accordingly, we ran a second-level GLM that included trial-wise accumulated evidence (aE) as a parametric modulator. In that analysis, the left SFS shows significant aE-related activity specifically during perceptual decisions, but not during value-based decisions (small-volume correction, SVC, within a 10-mm sphere centred on x = −24, y = 24, z = 36).
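For readers unfamiliar with small-volume correction, the sketch below shows one common way to define such a spherical ROI: convert the reported MNI coordinate to voxel indices and keep every voxel whose centre lies within 10 mm. It assumes the FSL MNI152 2 mm template grid (91 × 109 × 91 voxels, affine x = −2i + 90, y = 2j − 126, z = 2k − 72); whether the authors' SVC used this grid is not stated, so treat it as an illustration only.

```python
# Assumed voxel-to-MNI mapping of the FSL MNI152 2 mm template (91 x 109 x 91 voxels):
#   x = -2*i + 90,  y = 2*j - 126,  z = 2*k - 72   (millimetres)
SHAPE = (91, 109, 91)
VOXEL_MM = 2.0


def voxel_to_mni(i, j, k):
    return (-VOXEL_MM * i + 90.0, VOXEL_MM * j - 126.0, VOXEL_MM * k - 72.0)


def mni_to_voxel(x, y, z):
    """Inverse of the affine above, rounded to the nearest voxel centre."""
    return (
        round((90.0 - x) / VOXEL_MM),
        round((y + 126.0) / VOXEL_MM),
        round((z + 72.0) / VOXEL_MM),
    )


def sphere_voxels(center_mm, radius_mm):
    """Voxel indices whose centres lie within radius_mm of center_mm (an SVC-style ROI)."""
    ci, cj, ck = mni_to_voxel(*center_mm)
    reach = int(radius_mm // VOXEL_MM) + 1  # search a small bounding box around the centre
    roi = []
    for i in range(max(ci - reach, 0), min(ci + reach, SHAPE[0] - 1) + 1):
        for j in range(max(cj - reach, 0), min(cj + reach, SHAPE[1] - 1) + 1):
            for k in range(max(ck - reach, 0), min(ck + reach, SHAPE[2] - 1) + 1):
                x, y, z = voxel_to_mni(i, j, k)
                # Compare squared distances to avoid a square root.
                d2 = (x - center_mm[0]) ** 2 + (y - center_mm[1]) ** 2 + (z - center_mm[2]) ** 2
                if d2 <= radius_mm ** 2:
                    roi.append((i, j, k))
    return roi


roi = sphere_voxels((-24, 24, 36), 10)
print(mni_to_voxel(-24, 24, 36), len(roi), len(roi) * VOXEL_MM ** 3, "mm^3")
```

On a 2 mm grid the discretised sphere holds slightly less volume than a continuous 10-mm sphere (about 4.19 cm³), which is one reason ROI voxel counts differ between templates.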

To avoid confusion, we now:

(i) explicitly separate and label the two analysis levels in the Results; (ii) state up front that SFS is not expected to appear in the task-average contrast; and (iii) add a short pointer noting that SFS appears once aE is included as a parametric modulator. We also edited the Methods to spell out precisely how aE is constructed and entered into GLM2. This should make the logic of the two-stage analysis clearer and align the manuscript with the literature, where SFS typically emerges only in parametric evidence models.
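As a generic illustration of how a trial-wise quantity such as aE enters an fMRI GLM as a parametric modulator: the modulator is mean-centred across trials, placed as scaled stick functions at trial onsets, and convolved with a haemodynamic response function. This is a sketch of the usual construction, not the exact GLM2 specification; the double-gamma shape parameters (6 and 16, undershoot ratio 1/6) follow SPM-like defaults, the peak normalisation and TR are assumptions, and how aE itself is computed is left to the Methods.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(tr, duration=32.0):
    """Double-gamma HRF sampled every tr seconds, scaled to a peak of 1 (assumed parameters)."""
    n = int(duration / tr)
    hrf = [gamma_pdf(i * tr, 6.0) - gamma_pdf(i * tr, 16.0) / 6.0 for i in range(n)]
    peak = max(hrf)
    return [h / peak for h in hrf]


def parametric_regressor(onsets, modulator, n_scans, tr):
    """Mean-centre the trial-wise modulator, place scaled sticks at onsets, convolve with the HRF."""
    mean = sum(modulator) / len(modulator)
    sticks = [0.0] * n_scans
    for onset, value in zip(onsets, modulator):
        idx = int(round(onset / tr))
        if 0 <= idx < n_scans:
            sticks[idx] += value - mean
    hrf = double_gamma_hrf(tr)
    return [
        sum(sticks[t - lag] * hrf[lag] for lag in range(len(hrf)) if t - lag >= 0)
        for t in range(n_scans)
    ]


# Two hypothetical trials: lower-than-average aE at 0 s, higher-than-average aE at 20 s.
regressor = parametric_regressor(onsets=[0.0, 20.0], modulator=[1.0, 3.0], n_scans=30, tr=2.0)
print([round(v, 2) for v in regressor[:16]])
```

Mean-centring matters for the logic described above: because the modulator has zero mean, the parametric regressor captures trial-to-trial variation in aE rather than the average task response, which is carried by the unmodulated onset regressor.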

(1.2) The value difference task also requires identification of the stimuli, and therefore perceptual decision-making. In light of this, the initial fMRI analyses do not seem terribly informative for the present purposes as areas that are activated for both types of tasks could conceivably be specifically supporting perceptual decision-making only. I would have thought brain areas that are playing a particular role in evidence accumulation would be best identified based on whether their BOLD response scaled with evidence strength in each condition which would make it more likely that areas particular to each type of choice can be identified. The rationale for the authors' approach could be better justified.

We agree that both tasks require early sensory identification of the items, but the decision-relevant evidence differs by design (size difference vs. value difference), and our modelling is targeted at the evidence integration stage rather than initial identification.

To address your concern empirically, we: (i) added session-wise plots of mean RTs showing a general speed-up across the experiment (now in the Supplement); (ii) fit a hierarchical DDM to jointly explain accuracy and RT. The DDM dissociates decision time (evidence integration) from non-decision time (encoding/response execution).

After cTBS, perceptual decisions show a selective reduction of the decision boundary (lower accuracy, faster RTs; no drift-rate change), whereas value-based decisions show no change to boundary/drift but a decrease in non-decision time, consistent with faster sensorimotor processing or task familiarity. Thus, the TMS effect in SFS is specific to the criterion for perceptual evidence accumulation, while the RT speed-up in the value task reflects decision-irrelevant processes. We now state this explicitly in the Results and add the RT-by-run figure for transparency.

(1.2.1) The value difference task also requires identification of the stimuli, and therefore perceptual decision-making. In light of this, the initial fMRI analyses do not seem terribly informative for the present purposes as areas that are activated for both types of tasks could conceivably be specifically supporting perceptual decision-making only.

Thank you for prompting this clarification.

The key point is what changes with cTBS. If SFS supported generic identification, we would expect parallel cTBS effects on drift rate (or boundary) in both tasks. Instead, we find: (a) boundary decreases selectively in perceptual decisions (consistent with SFS setting the amount of perceptual evidence required), and (b) non-decision time decreases selectively in the value task (consistent with speed-ups in encoding/response stages). Moreover, trial-by-trial SFS BOLD predicts perceptual accuracy (controlling for evidence), and neural-DDM model comparison shows SFS activity modulates boundary, not drift, during perceptual choices.

Together, these converging behavioral, computational, and neural results argue that SFS specifically supports the criterion for perceptual evidence accumulation rather than generic visual identification.

(1.2.2) I would have thought brain areas that are playing a particular role in evidence accumulation would be best identified based on whether their BOLD response scaled with evidence strength in each condition which would make it more likely that areas particular to each type of choice can be identified. The rationale for the authors' approach could be better justified.

We now more explicitly justify the two-level fMRI approach. The task-average contrast addresses which networks are generally more engaged by each domain (e.g., posterior parietal for PDM; vmPFC/PCC for VDM), given identical stimuli and motor outputs. This complements, but does not substitute for, the parametric evidence analysis, which is where one expects accumulation-related regions such as SFS to emerge. We added text clarifying that the first analysis establishes domain-specific recruitment at the task level, whereas the second isolates evidence-dependent signals (aE) and reveals that left SFS tracks accumulated evidence only for perceptual choices. We also added explicit references to the literature using similar two-step logic and noted that SFS typically appears only in parametric evidence models.

(1.3) TMS led to reductions in RT in the value-difference as well as the perceptual choice task. DDM modelling indicated that in the case of the value task, the effect was attributable to reduced non-decision time which the authors attribute to task learning. The reasoning here is a little unclear.

(1.3.1) If task learning is the cause, then why are similar non-decision-time effects not observed in the perceptual choice task?

Great point. The DDM addresses exactly this: RT comprises decision time (DT) plus non-decision time (nDT). With cTBS, PDM shows reduced DT (via a lower boundary) but stable nDT; VDM shows reduced nDT with no change to boundary/drift. Hence, the superficially similar RT speed-ups in both tasks are explained by different latent processes: decision-relevant in PDM (lower criterion → faster decisions, lower accuracy) and decision-irrelevant in VDM (faster encoding/response). We added explicit language and a supplemental figure showing RT across runs, and we clarified in the text that only the PDM speed-up reflects a change to evidence integration.
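The decomposition RT = DT + nDT can be made concrete with the standard closed-form expressions for an unbiased drift-diffusion process (starting point midway between the bounds, no across-trial variability). With boundary separation α, drift δ, diffusion noise s and non-decision time τ: P(correct) = 1 / (1 + exp(−αδ/s²)) and mean RT = (α / 2δ)·tanh(αδ / 2s²) + τ. The sketch below, using made-up parameter values and s fixed at 1 (an assumption), shows why a lower α speeds responses and lowers accuracy, whereas a lower τ speeds responses without touching accuracy. It is an illustration of the logic, not a re-analysis of the fitted HDDM.

```python
import math


def ddm_accuracy(drift, boundary, noise=1.0):
    """Probability of reaching the correct bound for an unbiased start (z = boundary / 2)."""
    return 1.0 / (1.0 + math.exp(-boundary * drift / noise ** 2))


def ddm_mean_rt(drift, boundary, ndt, noise=1.0):
    """Mean RT = mean decision time + non-decision time (drift must be non-zero)."""
    decision_time = (boundary / (2.0 * drift)) * math.tanh(boundary * drift / (2.0 * noise ** 2))
    return decision_time + ndt


# Illustrative parameter values only (not the fitted estimates).
baseline = dict(drift=1.0, boundary=2.0, ndt=0.40)
lower_bound = dict(baseline, boundary=1.6)  # PDM-like change: lower alpha
shorter_ndt = dict(baseline, ndt=0.35)      # VDM-like change: lower tau

for label, p in [("baseline", baseline), ("lower boundary", lower_bound), ("shorter nDT", shorter_ndt)]:
    acc = ddm_accuracy(p["drift"], p["boundary"])
    rt = ddm_mean_rt(p["drift"], p["boundary"], p["ndt"])
    print(f"{label:15s} accuracy={acc:.3f} mean RT={rt:.3f} s")
```

Because τ enters mean RT additively and does not appear in the accuracy expression, a pure nDT change predicts faster responses with unchanged accuracy, which is the signature the response attributes to VDM.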

(1.3.2) Given that the value-task actually requires perceptual decision-making, is it not possible that SFS disruption impacted the speed with which the items could be identified, hence delaying the onset of the value-comparison choice?

We agree there is a brief perceptual encoding phase at the start of both tasks. If cTBS impaired visual identification per se, we would expect longer nDT in both tasks or a decrease in drift rate. Instead, nDT decreases in the value task and is unchanged in the perceptual task; drift is unchanged in both. Thus, cTBS over SFS does not slow identification; rather, it lowers the criterion for perceptual accumulation (PDM) and, separately, we observe faster non-decision components in VDM (likely familiarity or motor preparation). We added a clarifying sentence noting that item identification was easy and highly overlearned (static, large food pictures), and we cite that nDT is the appropriate locus for identification effects in the DDM framework; our data do not show the pattern expected of impaired identification.

(1.4) The sample size is relatively small. The authors state that 20 subjects is 'in the acceptable range' but it is not clear what is meant by this.

We have clarified what we mean and provided citations. The sample (n = 20) matches or exceeds many prior causal TMS/fMRI studies targeting perceptual decision circuitry (e.g., Philiastides et al., 2011; Rahnev et al., 2016; Jackson et al., 2021; van der Plas et al., 2021; Murd et al., 2021). Importantly, we (i) use within-subject, pre/post cTBS differences-in-differences with matched tasks; (ii) estimate hierarchical models that borrow strength across participants; and (iii) converge across behavior, latent parameters, regional BOLD, and connectivity. We now replace the vague phrase with a concrete statement and references, and we report precision (HDIs/SEs) for all main effects.
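One way to express what n = 20 buys, offered here as an illustrative sensitivity calculation rather than one the authors report, is the smallest within-subject effect size d_z detectable at a given power. Any within-subject contrast that reduces to one difference score per participant (including a Task × TMS difference-in-differences) can be treated this way. The sketch uses the normal approximation for a two-sided paired test, d_z ≈ (z₁₋α/₂ + z_power)/√n, which slightly understates the exact noncentral-t value at small n.

```python
from statistics import NormalDist


def min_detectable_dz(n, alpha=0.05, power=0.80):
    """Normal-approximation minimum detectable d_z for a two-sided paired test."""
    z = NormalDist()
    return (z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / n ** 0.5


def approx_power(dz, n, alpha=0.05):
    """Approximate power of a two-sided paired test (ignores the negligible opposite tail)."""
    z = NormalDist()
    return z.cdf(dz * n ** 0.5 - z.inv_cdf(1 - alpha / 2))


print(round(min_detectable_dz(20), 2))
```

Under these assumptions, 20 participants give roughly 80% power for within-subject effects of about d_z ≈ 0.63 or larger; smaller effects would be detected less reliably.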

Reviewer #2 (Public Review):

Summary:

The authors set out to test whether a TMS-induced reduction in excitability of the left superior frontal sulcus influenced evidence integration in perceptual and value-based decisions. They directly compared behaviour (including fits to a computational decision-process model) and fMRI before and after TMS in one task of each decision type. Their goal was to test domain-specific theories of the prefrontal cortex by examining whether the proposed role of the SFS in evidence integration was selective for perceptual but not value-based evidence.

Strengths:

The paper presents multiple credible sources of evidence for the role of the left SFS in perceptual decision-making, finding similar mechanisms to prior literature and a nuanced discussion of where they diverge from prior findings. The value-based and perceptual decision-making tasks were carefully matched in terms of stimulus display and motor response, making their comparison credible.

Weaknesses:

(2.1) More information on the task and details of the behavioural modelling would be helpful for interpreting the results.

Thank you for this request for clarity. In the revision we explicitly state, up front, how the two tasks differ and how the modelling maps onto those differences.

(1) Task separability and “evidence.” We now define task-relevant evidence as size difference (SD) for perceptual decisions (PDM) and value difference (VD) for value-based decisions (VDM). Stimuli and motor mappings are identical across tasks; only the evidence to be integrated changes.

(2) Behavioural separability that mirrors task design. As reported, mixed-effects regressions show PDM accuracy increases with SD (β=0.560, p<0.001) but not VD (β=0.023, p=0.178), and PDM RTs shorten with SD (β=−0.057, p<0.001) but not VD (β=0.002, p=0.281). Conversely, VDM accuracy increases with VD (β=0.249, p<0.001) but not SD (β=0.005, p=0.826), and VDM RTs shorten with VD (β=−0.016, p=0.011) but not SD (β=−0.003, p=0.419).

(3) How the HDDM reflects this. The hierarchical DDM fits the joint accuracy–RT distributions with task-specific evidence (SD or VD) as the predictor of drift. The model separates decision time from non-decision time (nDT), which is essential for interpreting the different RT patterns across tasks without assuming differences in the accumulation process when accuracy is unchanged.

These clarifications are integrated in the Methods (Experimental paradigm; HDDM) and in Results (“Behaviour: validity of task-relevant pre-requisites” and “Modelling: faster RTs during value-based decisions is related to non-decision-related sensorimotor processes”).

(2.2) The evidence for a choice and 'accuracy' of that choice in both tasks was determined by a rating task that was done in advance of the main testing blocks (twice for each stimulus). For the perceptual decisions, this involved asking participants to quantify a size metric for the stimuli, but the veracity of these ratings was not reported, nor was the consistency of the value-based ones. It is my understanding that the size ratings were used to define the amount of perceptual evidence in a trial, rather than the true size differences, and without seeing more data the reliability of this approach is unclear. More concerning was the effect of 'evidence level' on behaviour in the value-based task (Figure 3a). While the 'proportion correct' increases monotonically with the evidence level for the perceptual decisions, for the value-based task it increases from the lowest evidence level and then appears to plateau at just above 80%. This difference in behaviour between the two tasks brings into question the validity of the DDM which is used to fit the data, which assumes that the drift rate increases linearly in proportion to the level of evidence.

We thank the reviewer for raising these concerns, and we address each of them point by point:

(2.2.1) It is my understanding that the size ratings were used to define the amount of perceptual evidence in a trial, rather than the true size differences, and without seeing more data the reliability of this approach is unclear.

That is correct—we used participants’ area/size ratings to construct perceptual evidence (SD).

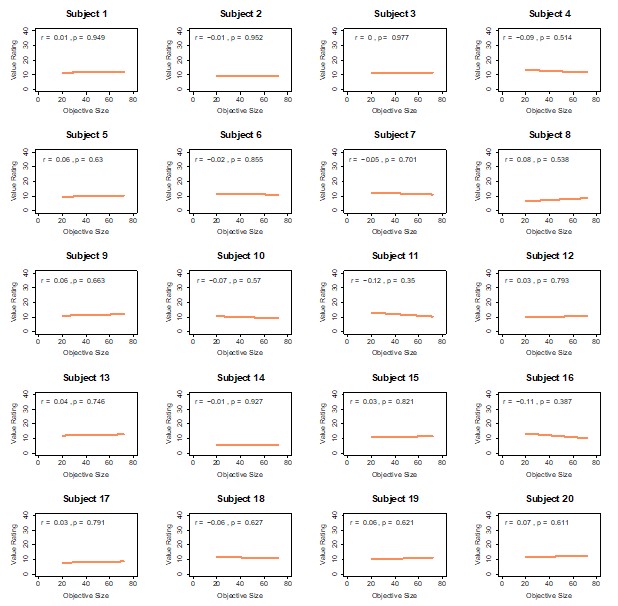

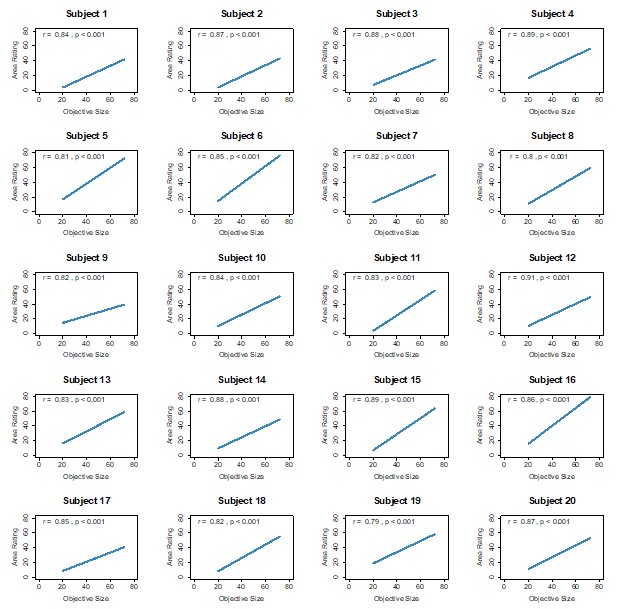

To validate this choice, we compared those ratings against an objective image-based size measure (proportion of non-black pixels within the bounding box). As shown in Author response image 2, perceptual size ratings are highly correlated with objective size across participants (Pearson r values predominantly ≈0.8 or higher; all p<0.001). Importantly, value ratings do not correlate with objective size (Author response image 1), confirming that the two rating scales capture distinct constructs. These checks support using participants’ size ratings as the participant-specific ground truth for defining SD in the PDM trials.

Author response image 1.

Objective size and value ratings are unrelated. Scatterplots show, for each participant, the correlation between objective image size (x-axis; proportion of non-black pixels within the item box) and value-based ratings (y-axis; 0–100 scale). Each dot is one food item (ratings averaged over the two value-rating repetitions). Across participants, value ratings do not track objective size, confirming that value and size are distinct constructs.

Author response image 2.

Perceptual size ratings closely track objective size. Scatterplots show, for each participant, the correlation between objective image size (x-axis) and perceptual area/size ratings (y-axis; 0–100 scale). Each dot is one food item (ratings averaged over the two perceptual ratings). Perceptual ratings are strongly correlated with objective size for nearly all participants (see main text), validating the use of these ratings to construct size-difference evidence (SD).

(2.2.2) More concerning was the effect of 'evidence level' on behaviour in the value-based task (Figure 3a). While the 'proportion correct' increases monotonically with the evidence level for the perceptual decisions, for the value-based task it increases from the lowest evidence level and then appears to plateau at just above 80%. This difference in behaviour between the two tasks brings into question the validity of the DDM which is used to fit the data, which assumes that the drift rate increases linearly in proportion to the level of evidence.

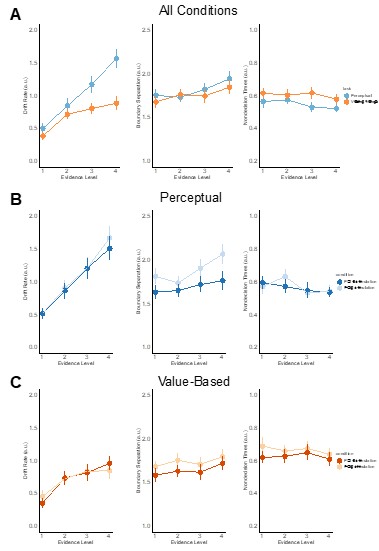

We agree that accuracy appears to asymptote in VDM, but the DDM fits indicate that the drift rate still increases monotonically with evidence in both tasks. In Supplementary figure 11, drift (δ) rises across the four evidence levels for PDM and for VDM (panels showing all data and pre/post-TMS). The apparent plateau in proportion correct during VDM reflects higher choice variability at stronger preference differences, not a failure of the drift–evidence mapping. Crucially, the model captures both the accuracy patterns and the RT distributions (see posterior predictive checks in Supplementary figures 11-16), indicating that a monotonic evidence–drift relation is sufficient to account for the data in each task.

Author response image 3.

HDDM parameters by evidence level. Group-level posterior means (± posterior SD) for drift (δ), boundary (α), and non-decision time (τ) across the four evidence levels, shown (a) collapsed across TMS sessions, (b) for PDM (blue) pre- vs post-TMS (light vs dark), and (c) for VDM (orange) pre- vs post-TMS. Crucially, drift increases monotonically with evidence in both tasks, while TMS selectively lowers α in PDM and reduces τ in VDM (see Supplementary Tables for numerical estimates).

(2.3) The paper provides very little information on the model fits (no parameter estimates, goodness of fit values or simulated behavioural predictions). The paper finds that TMS reduced the decision bound for perceptual decisions but only affected non-decision time for value-based decisions. It would aid the interpretation of this finding if the relative reliability of the fits for the two tasks was presented.

We appreciate the suggestion and have made the quantitative fit information explicit:

(1) Parameter estimates. Group-level means/SDs for drift (δ), boundary (α), and nDT (τ) are reported for PDM and VDM overall, by evidence level, pre- vs post-TMS, and per subject (see Supplementary Tables 8-11).

(2) Goodness of fit and predictive adequacy. DIC values accompany each fit in the tables. Posterior predictive checks demonstrate close correspondence between simulated and observed accuracy and RT distributions overall, by evidence level, and across subjects (Supplementary Figures 11-16).

Together, these materials document that the HDDM provides reliable fits in both tasks and accurately recovers the qualitative and quantitative patterns that underlie our inferences (reduced α for PDM only; selective τ reduction in VDM).
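One common way to summarise posterior predictive checks is to compare quantiles of observed and model-simulated RT distributions together with proportion correct. The sketch below shows only that comparison step for correct-trial RTs; it assumes the simulated trials have already been drawn from the fitted model, and the quantile set (.1, .3, .5, .7, .9) is a conventional choice rather than one taken from the manuscript.

```python
from statistics import quantiles


def rt_quantiles(rts):
    """10th, 30th, 50th, 70th and 90th percentiles of a list of RTs."""
    cuts = quantiles(rts, n=10, method="inclusive")  # 9 cut points: 10%, 20%, ..., 90%
    return [cuts[i] for i in (0, 2, 4, 6, 8)]


def ppc_summary(observed, simulated):
    """Compare observed and simulated data given as lists of (rt_seconds, correct) pairs.

    Returns per-quantile differences (simulated minus observed) for correct-trial RTs
    and the difference in proportion correct.
    """
    obs_q = rt_quantiles([rt for rt, correct in observed if correct])
    sim_q = rt_quantiles([rt for rt, correct in simulated if correct])
    acc_obs = sum(correct for _, correct in observed) / len(observed)
    acc_sim = sum(correct for _, correct in simulated) / len(simulated)
    return [s - o for o, s in zip(obs_q, sim_q)], acc_sim - acc_obs


# Toy data (hypothetical): the "simulated" set is the observed set shifted 50 ms slower.
observed = [(0.45 + 0.03 * i, i % 5 != 0) for i in range(40)]
simulated = [(rt + 0.05, correct) for rt, correct in observed]
diffs, acc_diff = ppc_summary(observed, simulated)
print([round(d, 3) for d in diffs], acc_diff)
```

Applied per task and per evidence level, differences near zero across quantiles indicate that the model reproduces both the location and the shape of the RT distribution, not just its mean.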

(2.4) Behaviourally, the perceptual task produced decreased response times and accuracy post-TMS, consistent with a reduced bound and consistent with some prior literature. Based on the results of the computational modelling, the authors conclude that RT differences in the value-based task are due to task-related learning, while those in the perceptual task are 'decision relevant'. It is not fully clear why there would be such significantly greater task-related learning in the value-based task relative to the perceptual one. And if such learning is occurring, could it potentially also tend to increase the consistency of choices, thereby counteracting any possible TMS-induced reduction of consistency?

Thank you for pointing out the need for a clearer framing. We have removed the speculative label “task-related learning” and now describe the pattern strictly in terms of the HDDM decomposition and neural results already reported:

(1) VDM: Post-TMS RTs are faster while accuracy is unchanged. The HDDM attributes this to a selective reduction in non-decision time (τ), with no change in decision-relevant parameters (α, δ) for VDM (see Supplementary Figure 11 and Supplementary Tables). Consistent with this, left SFS BOLD is not reduced for VDM, and trialwise SFS activity does not predict VDM accuracy—both observations argue against a change in VDM decision formation within left SFS.

(2) PDM: Post-TMS accuracy decreases and RTs shorten, which the HDDM captures as a lower decision boundary (α) with no change in drift (δ). Here, left SFS BOLD scales with accumulated evidence and decreases post-TMS, and trialwise SFS activity predicts PDM accuracy, all consistent with a decision-relevant effect in PDM.

Regarding the possibility that faster VDM RTs should increase choice consistency: empirically, consistency did not change in VDM, and the HDDM finds no decision-parameter shifts there. Thus, there is no hidden counteracting increase in VDM accuracy that could mask a TMS effect—the absence of a VDM accuracy change is itself informative and aligns with the modelling and fMRI.

Reviewer #3 (Public Review):

Summary:

Garcia et al. investigated whether the human left superior frontal sulcus (SFS) is involved in integrating evidence for perceptual and/or value-based decisions. Specifically, they had 20 participants perform two decision-making tasks (with matched stimuli and motor responses) in an fMRI scanner both before and after they received continuous theta burst transcranial magnetic stimulation (TMS) of the left SFS. The stimulation, thought to decrease neural activity in the targeted region, led to reduced accuracy on the perceptual decision task only. The pattern of results across both model-free and model-based (drift-diffusion model) behavioural and fMRI analyses suggests that the left SFS plays a critical role in perceptual decisions only, with no equivalent effects found for value-based decisions. The DDM-based analyses revealed that the role of the left SFS in perceptual evidence accumulation is likely to be one of decision-boundary setting. Hence, the authors conclude that the left SFS plays a domain-specific causal role in the accumulation of evidence for perceptual decisions. These results are likely to be an important addition to the literature on the neural correlates of decision-making.

Strengths:

The use of TMS strengthens the evidence for the left SFS playing a causal role in the evidence accumulation process. By combining TMS with fMRI and advanced computational modelling of behaviour, the authors go beyond previous correlational studies in the field and provide converging behavioural, computational, and neural evidence of the specific role that the left SFS may play.

Sophisticated and rigorous analysis approaches are used throughout.

Weaknesses:

(3.1) Though the stimuli and motor responses were equalised between the perception and value-based decision tasks, reaction times (according to Figure 1) and potential difficulty (Figure 2) were not matched. Hence, differences in task difficulty might represent an alternative explanation for the effects being specific to the perception task rather than domain-specificity per se.

We agree that RTs cannot be matched a priori, and we did not intend them to be. Instead, we equated the inputs to the decision process and verified that each task relied exclusively on its task-relevant evidence. As reported in Results—Behaviour: validity of task-relevant pre-requisites (Fig. 1b–c), accuracy and RTs vary monotonically with the appropriate evidence regressor (SD for PDM; VD for VDM), with no effect of the task-irrelevant regressor. This separability check addresses differences in baseline RTs by showing that, for both tasks, behaviour tracks evidence as designed.

To rule out a generic difficulty account of the TMS effect, we relied on the within-subject differences-in-differences (DID) framework described in Methods (Differences-in-differences). The key Task × TMS interaction compares the pre→post change in PDM with the pre→post change in VDM while controlling for trialwise evidence and RT covariates. Any time-on-task or unspecific difficulty drift shared by both tasks is subtracted out by this contrast. Using this specification, TMS selectively reduced accuracy for PDM but not VDM (Fig. 3a; Supplementary Fig. 2a,c; Supplementary Tables 5–7).
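The cancellation logic of the Task × TMS contrast can be seen with cell means alone: any change common to both tasks (time on task, practice, general arousal) drops out of the difference of differences. The accuracy values below are invented for illustration; the manuscript's estimate comes from a trial-wise mixed-effects model with evidence and RT covariates, which this sketch does not reproduce.

```python
def difference_in_differences(cells):
    """Task x TMS interaction from cell means keyed by (task, session)."""
    pdm_change = cells[("PDM", "post")] - cells[("PDM", "pre")]
    vdm_change = cells[("VDM", "post")] - cells[("VDM", "pre")]
    return pdm_change - vdm_change


# Hypothetical accuracies: both tasks share a +0.02 practice effect; PDM also loses 0.05 after cTBS.
shared_change = 0.02
cells = {
    ("PDM", "pre"): 0.80,
    ("PDM", "post"): 0.80 + shared_change - 0.05,
    ("VDM", "pre"): 0.78,
    ("VDM", "post"): 0.78 + shared_change,
}
print(round(difference_in_differences(cells), 3))
```

Whatever value `shared_change` takes, the contrast returns only the task-specific component (−0.05 here), which is why unspecific drifts shared by both tasks cannot by themselves produce the interaction.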

Finally, the hierarchical DDM (already in the paper) dissociates latent mechanisms. The post-TMS boundary reduction appears only in PDM, whereas VDM shows a change in non-decision time without a decision-relevant parameter change (Fig. 3c; Supplementary Figs. 4–5). If unmatched difficulty were the sole driver, we would expect parallel effects across tasks, which we do not observe.

(3.2) No within- or between-participants sham/control TMS condition was employed. This would have strengthened the inference that the apparent TMS effects on behavioural and neural measures can truly be attributed to the left SFS stimulation and not to non-specific peripheral stimulation and/or time-on-task effects.

We agree that a sham/control condition would further strengthen causal attribution and note this as a limitation. In mitigation, our design incorporates several safeguards already reported in the manuscript:

· Within-subject pre/post with alternating task blocks and DID modelling (Methods) to difference out non-specific time-on-task effects.

· Task specificity across levels of analysis: behaviour (PDM accuracy reduction only), computational (boundary reduction only in PDM; no drift change), BOLD (reduced left-SFS accumulated-evidence signal for PDM but not VDM; Fig. 4a–c), and functional coupling (SFS–occipital PPI increase during PDM only; Fig. 5).

· Matched stimuli and motor outputs across tasks, so any peripheral sensations or general arousal effects should have influenced both tasks similarly; they did not.

Together, these converging task-selective effects reduce the likelihood that the results reflect non-specific stimulation or time-on-task. We will add an explicit statement in the Limitations noting the absence of sham/control and outlining it as a priority for future work.

(3.3) No a priori power analysis is presented.

We appreciate this point. Our sample size (n = 20) matched prior causal TMS and combined TMS–fMRI studies using similar paradigms and analyses (e.g., Philiastides et al., 2011; Rahnev et al., 2016; Jackson et al., 2021; van der Plas et al., 2021; Murd et al., 2021), and was chosen a priori on that basis and in light of the practical constraints of cTBS + fMRI. The within-subject DID approach and hierarchical modelling further improve efficiency by leveraging all trials.

To address the reviewer’s request for transparency, we will (i) state this rationale in Methods → Participants, and (ii) ensure that all primary effects are reported with 95% CIs or posterior probabilities (already provided for the HDDM as $p_{\mathrm{mcmc}}$). We also note that the design was sensitive enough to detect RT changes in both tasks and a selective accuracy change in PDM, arguing against a blanket lack of power as an explanation for null VDM accuracy effects. We will nevertheless flag the absence of a formal prospective power analysis in the Limitations.
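To make the sensitivity question concrete, a post hoc sensitivity calculation can be sketched under simplifying assumptions: each within-subject contrast is treated as a two-sided paired t-test across n = 20 participants, and the standardized effect sizes (dz) are hypothetical. This is not an analysis reported in the manuscript and does not substitute for a prospective power analysis.

```python
import numpy as np
from scipy import stats
from scipy.optimize import brentq

def paired_t_power(dz, n, alpha=0.05):
    """Two-sided power of a paired (one-sample) t-test for standardized effect dz."""
    df = n - 1
    nc = dz * np.sqrt(n)                     # noncentrality parameter
    t_crit = stats.t.ppf(1 - alpha / 2, df)  # two-sided critical value
    # Probability that the noncentral t statistic falls beyond either critical value
    return stats.nct.sf(t_crit, df, nc) + stats.nct.cdf(-t_crit, df, nc)

n = 20
for dz in (0.3, 0.5, 0.8):  # hypothetical effect sizes
    print(f"dz = {dz}: power = {paired_t_power(dz, n):.2f}")

# Smallest effect detectable with 80% power at n = 20 (sensitivity analysis)
mde = brentq(lambda d: paired_t_power(d, n) - 0.80, 0.01, 3.0)
print(f"minimum detectable dz at 80% power: {mde:.2f}")
```

Under these assumptions the minimum detectable effect offers a reference point for how small a VDM accuracy effect could have gone undetected; trialwise hierarchical models may be more sensitive than this subject-level approximation.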

Recommendations for the Authors:

Reviewer #1 (Recommendations For The Authors):

Some important elements of the methods are missing. How was the site for targeting the SFS with TMS identified? The methods described how M1 was located but not SFS.

Thank you for catching this omission. In the revised Methods we explicitly describe how the left SFS target was localized. Briefly, we used each participant’s T1-weighted anatomical scan and frameless neuronavigation to place a 10-mm-radius sphere at the a priori MNI coordinates (x = −24, y = 24, z = 36) derived from prior work (Heekeren et al., 2004; Philiastides et al., 2011). This sphere was transformed to native space for each participant. The coil was positioned tangentially with the handle pointing posterolaterally, and coil placement was continuously monitored with neuronavigation throughout stimulation. (All of these procedures mirror what we already report for M1 and are now stated for SFS as well.)

Where to revise the manuscript:

Methods → Stimulation protocol. After the first sentence naming cTBS, insert:

“The left SFS target was localized on each participant’s T1-weighted anatomical image using frameless neuronavigation. A 10-mm-radius sphere was centered at the a priori MNI coordinates x = −24, y = 24, z = 36 (Heekeren et al., 2004; Philiastides et al., 2011), then transformed to native space. The MR-compatible figure-of-eight coil was positioned tangentially over the target with the handle oriented posterolaterally, and its position was tracked and maintained with neuronavigation during stimulation.”
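The sphere construction can be sketched as follows, assuming a 2-mm MNI152 grid (91 × 109 × 91 voxels) and its usual affine. The grid, affine, and function name are illustrative assumptions; the warp to each participant's native space and the neuronavigation step are not shown.

```python
import numpy as np

# Assumed grid: the common 2-mm MNI152 template (91 x 109 x 91 voxels) and its affine.
MNI_2MM_AFFINE = np.array([
    [-2.0, 0.0, 0.0, 90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
])
MNI_2MM_SHAPE = (91, 109, 91)

def sphere_mask(center_mm, radius_mm, affine, shape):
    """Boolean mask of voxels whose centres lie within radius_mm of center_mm (world mm)."""
    ijk = np.indices(shape).reshape(3, -1)                # voxel indices, 3 x N
    ijk1 = np.vstack([ijk, np.ones((1, ijk.shape[1]))])   # homogeneous coordinates
    xyz = (affine @ ijk1)[:3]                             # voxel centres in mm
    dist = np.linalg.norm(xyz - np.asarray(center_mm, dtype=float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)

# Left SFS target from the text: MNI (-24, 24, 36), 10-mm radius
mask = sphere_mask((-24, 24, 36), 10.0, MNI_2MM_AFFINE, MNI_2MM_SHAPE)
print(mask.sum())  # number of 2-mm voxels inside the sphere
```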

It is not clear how participants were instructed that they should perform the value-difference task. Were they told that they should choose based on their original item value ratings or was it left up to them?

We agree the instruction should be explicit. Participants were told: “In value-based blocks, choose the item you would prefer to eat at the end of the experiment.” They were informed that one VDM trial would be randomly selected for actual consumption, ensuring incentive-compatibility. We did not ask them to recall or follow their earlier ratings; those ratings were used only to construct evidence (value difference) and to define choice consistency offline.

Where to revise the manuscript:

Methods → Experimental paradigm.

Add a sentence to the VDM instruction paragraph:

“In value-based (LIKE) blocks, participants were instructed to choose the item they would prefer to consume at the end of the experiment; one VDM trial was randomly selected and implemented, making choices incentive-compatible. Prior ratings were used solely to construct value-difference evidence and to score choice consistency; participants were not asked to recall or match their earlier ratings.”

Line 86 Introduction, some previous studies were conducted on animals. Why it is problematic that the studies were conducted in animals is not stated. I assume the authors mean that we do not know if their findings will translate to the human brain? I think in fairness to those working with animals it might be worth an extra sentence to briefly expand on this point.

We appreciate this and will clarify that animal work is invaluable for circuit-level causality, but species differences and putative non-homologous areas (e.g., human SFS vs. rodent FOF) limit direct translation. Our point is not that animal studies are problematic, but that establishing causal roles in humans remains necessary.

Revision:

Introduction (paragraph discussing prior animal work). Replace the current sentence beginning “However, prior studies were largely correlational” with:

“Animal studies provide critical causal insights, yet direct translation to humans can be limited by species-specific anatomy and potential non-homologies (e.g., human SFS vs. frontal orienting fields in rodents). Therefore, establishing causal contributions in the human brain remains essential.”

Line 100-101: "or whether its involvement is peripheral and merely functionally supporting a larger system" - it is not clear what you mean by 'supporting a larger system'

We meant that observed SFS activity might reflect upstream/downstream support processes (e.g., attentional control or working-memory maintenance) rather than the computation of evidence accumulation itself. We have rephrased to avoid ambiguity.

Revision:

Introduction. Replace the phrase with:

“or whether its observed activity reflects upstream or downstream support processes (e.g., attention or working-memory maintenance) rather than the accumulation computation per se.”

The authors do have to make certain assumptions about the BOLD patterns that would be expected of an evidence accumulation region. These assumptions are reasonable and have been adopted in several previous neuroimaging studies. Nevertheless, it should be acknowledged that alternative possibilities exist and this is an inevitable limitation of using fMRI to study decision making. For example, if it turns out that participants collapse their boundaries as time elapses, then the assumption that trials with weaker evidence should have larger BOLD responses may not hold - the effect of more prolonged activity could be cancelled out by the lower boundaries. Again, I think this is just a limitation that could be acknowledged in the Discussion, my opinion is that this is the best effort yet to identify choice-relevant regions with fMRI and the authors deserve much credit for their rigorous approach.

Agreed. We already ground our BOLD regressors in the DDM literature, but acknowledge that alternative mechanisms (e.g., time-dependent boundaries) can alter expected BOLD–evidence relations. We now add a short limitation paragraph stating this explicitly.

Revision:

Discussion (limitations paragraph). Add:

“Our fMRI inferences rest on model-based assumptions linking accumulated evidence to BOLD amplitude. Alternative mechanisms—such as time-dependent (collapsing) boundaries—could attenuate the prediction that weaker-evidence trials yield longer accumulation and larger BOLD signals. While our behavioural and neural results converge under the DDM framework, we acknowledge this as a general limitation of model-based fMRI.”
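A toy simulation, with hypothetical parameters and exponentially collapsing bounds chosen only for illustration, shows the concern concretely: collapsing bounds shorten slow, weak-evidence trials more than fast ones, compressing the difference in accumulation time on which the BOLD prediction partly relies. This is a sketch, not a model fitted to our data.

```python
import numpy as np

def mean_decision_time(v, b0, tau=None, n_trials=4000, dt=1e-3, max_steps=10_000, seed=1):
    """Mean first-passage time (s) of a DDM with bounds at +/- b(t) and unit noise.

    b(t) = b0 for fixed bounds (tau=None) or b0 * exp(-t / tau) for collapsing bounds.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)
    t_hit = np.full(n_trials, np.nan)
    active = np.ones(n_trials, dtype=bool)
    for step in range(1, max_steps + 1):
        n_active = int(active.sum())
        if n_active == 0:
            break
        t = step * dt
        x[active] += v * dt + np.sqrt(dt) * rng.standard_normal(n_active)
        bound = b0 if tau is None else b0 * np.exp(-t / tau)
        hit = active & (np.abs(x) >= bound)
        t_hit[hit] = t
        active &= ~hit
    return np.nanmean(t_hit)

for label, tau in (("fixed", None), ("collapsing", 0.5)):
    weak = mean_decision_time(v=0.5, b0=1.0, tau=tau)    # weak evidence
    strong = mean_decision_time(v=2.0, b0=1.0, tau=tau)  # strong evidence
    print(f"{label:>10}: weak={weak:.2f}s  strong={strong:.2f}s  gap={weak - strong:.2f}s")
```

If real decisions used such bounds, the weak-versus-strong contrast in accumulation time would shrink, which is why we treat the fixed-bound DDM assumption as a limitation rather than a certainty.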

Reviewer #2 (Recommendations For The Authors):

Minor points

I suggest the proportion of missed trials should be reported.

Thank you for the suggestion. In our preprocessing we excluded trials with no response within the task’s response window and any trials failing a priori validity checks. Because non-response trials contain neither a choice nor an RT, they are not entered into the DDM fits or the fMRI GLMs and, by design, carry no weight in the reported results. To keep the focus on the data that informed all analyses, we now (i) state the trial-inclusion criteria explicitly and (ii) report the number of analysed (valid) trials per task and run. This conveys the effective sample size contributing to each condition without altering the analysis set.

Revision:

Methods → (at the end of “Experimental paradigm”): “Analyses were conducted on valid trials only, defined as trials with a registered response within the task’s response window and passing pre-specified validity checks; trials without a response were excluded and not analysed.”
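The inclusion rule and the per-task, per-run counts of analysed trials could be computed along these lines. The column names (`task`, `run`, `rt`, `passes_checks`), the response-window value, and the function name are hypothetical placeholders, not the authors' pipeline.

```python
import pandas as pd

def count_valid_trials(trials: pd.DataFrame, response_window: float) -> pd.DataFrame:
    """Flag valid trials (response within the window and passing checks); count per task and run."""
    valid = (
        trials["rt"].notna()                      # a response was registered
        & (trials["rt"] <= response_window)       # within the task's response window
        & trials["passes_checks"]                 # pre-specified validity checks
    )
    return (
        trials.assign(valid=valid)
        .groupby(["task", "run"])["valid"]
        .agg(n_valid="sum", n_total="size")
        .reset_index()
    )

# Hypothetical example data (NaN RT = no response)
trials = pd.DataFrame({
    "task": ["PDM", "PDM", "PDM", "VDM", "VDM", "VDM"],
    "run": [1, 1, 1, 1, 1, 1],
    "rt": [0.8, None, 1.2, 1.5, 2.9, 1.1],
    "passes_checks": [True, True, True, True, True, False],
})
print(count_valid_trials(trials, response_window=2.5))
```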

Results → “Behaviour: validity of task-relevant pre-requisites” (add one sentence at the end of the first paragraph): “All behavioural and fMRI analyses were performed on valid trials only (see Methods for inclusion criteria).”

Figure 4 c is very confusing. Is the legend or caption backwards?

Thanks for flagging. We corrected the Figure 4c caption to match the colouring and contrasts used in the panel (perceptual = blue/green overlays; value-based = orange/red; ‘post–pre’ contrasts explicitly labeled). No data or analyses were changed; only the wording was revised to remove ambiguity.

Revision:

Figure 4 caption (panel c sentence). Replace with:

“(c) Post–pre contrasts for the trialwise accumulated-evidence regressor show reduced left-SFS BOLD during perceptual decisions (green overlay), with a significantly stronger reduction for perceptual vs value-based decisions (blue overlay). No reduction is observed for value-based decisions.”

Even if not statistically significant it may be of interest to add the results for Value-based decision making on SFS in Supplementary Table 3.

Done. We now include the SFS small-volume results for VDM (trialwise accumulated-evidence regressor) alongside the PDM values in the same table, with exact peak, cluster size, and statistics.

Revision:

Supplementary Table 3 (title):

“Regions encoding trialwise accumulated evidence (parametric modulation) during perceptual and value-based decisions, including SFS SVC results for both tasks.”

Model comparisons: please explain how model complexity is accounted for.

We clarify that models were compared using the Deviance Information Criterion (DIC), which penalizes model fit by an effective number of parameters (pD). Lower DIC indicates better expected out-of-sample predictive performance after accounting for model complexity.

Revision:

Methods → Hierarchical Bayesian neural-DDM (last paragraph). Add:

“Model comparison used the Deviance Information Criterion (DIC = D̄ + pD), where pD is the effective number of parameters; thus DIC penalizes model complexity. Lower DIC denotes better predictive accuracy after accounting for complexity.”
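Under the standard definition (Spiegelhalter et al., 2002), pD = D̄ − D(θ̄), where D̄ is the posterior mean deviance and D(θ̄) is the deviance evaluated at the posterior mean of the parameters. The sketch below computes DIC from posterior samples for a toy one-parameter Gaussian model (data and sampler are hypothetical); with one free parameter, pD should come out close to 1. Some software uses alternative pD estimators, so this is illustrative rather than a description of the HDDM internals.

```python
import numpy as np

def dic(deviance_samples, deviance_at_posterior_mean):
    """Return (DIC, pD) from posterior deviance samples and D evaluated at the posterior mean."""
    d_bar = float(np.mean(deviance_samples))    # posterior mean deviance
    p_d = d_bar - deviance_at_posterior_mean     # effective number of parameters
    return d_bar + p_d, p_d

# Toy model: y ~ N(mu, 1) with known variance and a flat prior on mu (hypothetical data).
rng = np.random.default_rng(42)
y = rng.normal(loc=0.3, scale=1.0, size=50)
n = y.size
# Exact posterior under a flat prior: mu | y ~ N(mean(y), 1/n)
mu_samples = rng.normal(y.mean(), 1 / np.sqrt(n), size=50_000)

def deviance(mu):
    """-2 log-likelihood of y under N(mu, 1), vectorised over an array of mu values."""
    mu = np.atleast_1d(np.asarray(mu, dtype=float))
    ss = np.sum((y[None, :] - mu[:, None]) ** 2, axis=1)
    return ss + n * np.log(2 * np.pi)

d_samples = deviance(mu_samples)
d_at_mean = deviance(mu_samples.mean())[0]
dic_value, p_d = dic(d_samples, d_at_mean)
print(f"pD = {p_d:.2f}, DIC = {dic_value:.1f}")  # pD should be close to 1 (one free parameter)
```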

Reviewer #3 (Recommendations For The Authors):

The following issues would benefit from clarification in the manuscript:

- It is stated that "Our sample size is well within acceptable range, similar to that of previous TMS studies." The sample size being similar to previous studies does not mean it is within an acceptable range. Whether the sample size is acceptable or not depends on the expected effect size. It is perfectly possible that the previous studies cited were all underpowered. What implications might the lack of an a priori power analysis have for the interpretation of the results?

We agree and have revised our wording. We did not conduct an a priori power analysis. Instead, we relied on a within-participant design that typically yields higher sensitivity in TMS–fMRI settings and on convergence across behavioural, computational, and neural measures. We now acknowledge that the absence of formal power calculations limits claims about small effects (particularly for null findings in VDM), and we frame those null results cautiously.

Revision:

Discussion (limitations). Add:

“The within-participant design enhances statistical sensitivity, yet the absence of an a priori power analysis constrains our ability to rule out small effects, particularly for null results in VDM.”

- I was confused when trying to match the results described in the 'Behaviour: validity of task-relevant pre-requisites' section on page 6 to what is presented in Figure 1. Specifically, Figure 1C is cited 4 times but I believe two of these should be citing Figure 1B?

Thank you—this was a citation mix-up. The two places that referenced “Fig. 1C” but described accuracy should in fact point to Fig. 1B. We corrected both citations.

Revision:

Results → Behaviour: validity… Change the two incorrect “Fig. 1C” references (when describing accuracy) to “Fig. 1B”.

- Also, where is the 'SD' coefficient of -0.254 (p-value = 0.123) coming from in line 211? I can't match this to the figure.

This was a typographical error in an earlier draft. The correct coefficients are those shown in the figure and reported elsewhere in the text (evidence-specific effects: for PDM RTs, SD β = −0.057, p < 0.001; for VDM RTs, VD β = −0.016, p = 0.011; non-relevant evidence terms are n.s.). We removed the erroneous value.

Revision:

Results → Behaviour: validity… (sentence with −0.254). Delete the incorrect value and retain the evidence-specific coefficients consistent with Fig. 1B–C.

- It is reported that reaction times were significantly faster for the perceptual relative to the value-based decision task. Was overall accuracy also significantly different between the two tasks? It appears from Figure 3 that it might be, But I couldn't find this reported in the text.

To avoid conflating task with evidence composition, we did not emphasize between-task accuracy averages. Our primary tests examine evidence-specific effects and TMS-induced changes within task. For completeness, we now report descriptive mean accuracies by task and point readers to the figure panels that display accuracy as a function of evidence (which is the meaningful comparison in our matched-evidence design). We refrain from additional hypothesis testing here to keep the analyses aligned with our preregistered focus.

Revision:

Results → Behaviour: validity… Add:

“For completeness, group-mean accuracies by task are provided descriptively in Fig. 3a; inferential tests in the manuscript focus on evidence-specific effects and TMS-induced changes within task.”