Internal models for interpreting neural population activity during sensorimotor control

Abstract

To successfully guide limb movements, the brain takes in sensory information about the limb, internally tracks the state of the limb, and produces appropriate motor commands. It is widely believed that this process uses an internal model, which describes our prior beliefs about how the limb responds to motor commands. Here, we leveraged a brain-machine interface (BMI) paradigm in rhesus monkeys and novel statistical analyses of neural population activity to gain insight into moment-by-moment internal model computations. We discovered that a mismatch between subjects’ internal models and the actual BMI explains roughly 65% of movement errors, as well as long-standing deficiencies in BMI speed control. We then used the internal models to characterize how the neural population activity changes during BMI learning. More broadly, this work provides an approach for interpreting neural population activity in the context of how prior beliefs guide the transformation of sensory input to motor output.

https://doi.org/10.7554/eLife.10015.001eLife digest

The human brain is widely hypothesized to construct “inner beliefs” about how the world works. It is thought that we need this conception to coordinate our movements and anticipate rapid events that go on around us. A driver, for example, needs to predict how the car should behave in response to every turn of the steering wheel and every tap on the brake. But on icy roads, these predictions will often not reflect how the car would behave. Applying the brakes sharply in these conditions could send the car skidding uncontrollably rather than stopping. In general, a mismatch between one’s inner beliefs and reality is thought to cause errors and accidents. Yet this compelling hypothesis has not yet been fully investigated.

Golub et al. investigated this hypothesis by conducting a “brain-machine interface” experiment. In this experiment, neural signals from the brains of two rhesus macaques were recorded using arrays of electrodes and translated into movements of a cursor on a computer screen. The monkeys were then trained to mentally move the cursor to hit targets on the screen.

The monkeys’ cursor movements were remarkably precise. In fact, the experiment showed that the monkeys could internally predict their cursor movements just as a driver predicts how a car will move when turning the steering wheel. These findings indicate that the monkeys have likely developed inner beliefs to predict how their neural signals drive the cursor, and that these beliefs helped coordinate their performance.

In addition, when the monkeys did make mistakes, their neural signals were not entirely wrong—in fact they were typically consistent with the monkeys’ inner beliefs about how the cursor moves. A mismatch between these inner beliefs and reality explained most of the monkeys’ mistakes.

The brain constructs such inner beliefs over time through experience and learning. To study this learning process, Golub et al. next conducted an experiment in which the cursor moved in a way that was substantially different from the monkey’s inner beliefs. This experiment uncovered that, during the course of learning, the monkey’s inner beliefs realigned to better match the movements of the new cursor. Taken together, this work provides a framework for understanding how the brain transforms sensory information into instructions for movement. The findings could also help improve the performance of brain-machine interfaces and suggest how we can learn new skills more rapidly and proficiently in everyday life.

https://doi.org/10.7554/eLife.10015.002Introduction

Even simple movements, like reaching to grasp a glass of water, require dozens of muscles to be activated with precise coordination. This precision is especially impressive in light of sensory feedback delays inherent to neural transmission and processing: when we make a swift arm movement, the brain only knows where the arm was a split second ago, not where it currently is. To generate the desired movement, it is widely believed that we form internal models that enable selection of appropriate motor commands and prediction of the outcomes of motor commands before sensory feedback becomes available (Crapse and Sommer, 2008; Shadmehr et al., 2010).

Mechanistic studies have made important progress toward identifying the neural circuits that implement internal models in sensory (Komatsu, 2006; Kennedy et al., 2014; Schneider et al., 2014), vestibular (Laurens et al., 2013), and motor (Sommer, 2002; Ghasia et al., 2008; Keller and Hahnloser, 2009; Azim et al., 2014) systems. In parallel, psychophysical studies have demonstrated the behavioral correlates of these internal models (Shadmehr and Mussa-Ivaldi, 1994; Wolpert et al., 1995; Thoroughman and Shadmehr, 2000; Kluzik et al., 2008; Mischiati et al., 2015) and the behavioral deficits that result from lesions to corresponding brain areas (Shadmehr and Krakauer, 2008; Bhanpuri et al., 2013). Together with studies showing neural correlates of internal models (Sommer, 2002; Gribble and Scott, 2002; Ghasia et al., 2008; Mulliken et al., 2008; Keller and Hahnloser, 2009; Green and Angelaki, 2010; Berkes et al., 2011; Laurens et al., 2013), these previous studies have provided strong evidence for the brain’s use of internal models.

These internal models are presumably rich entities that reflect the multi-dimensional neural processes observed in many brain areas (Cunningham and Yu, 2014) and can drive moment-by-moment decisions and motor output. However, to date, most studies have viewed internal models through the lens of individual neurons or low-dimensional behavioral measurements, which provides a limited view of these multi-dimensional neural processes (although see Berkes et al., 2011). Here, we address these limitations by extracting a rich internal model from the activity of tens of neurons recorded simultaneously. The key question that we ask is whether such an internal model can explain behavioral errors that cannot be explained by analyzing low-dimensional behavioral measurements in isolation.

We define an internal model to be one’s inner conception of a motor effector, which includes one’s prior beliefs about the physics of the effector as well as how neural commands drive movements of the effector. When we extract a subject’s internal model, we seek a statistical model of the effector dynamics that is most consistent with the subject’s neural commands. Interpreting high-dimensional neural activity through the lens of such an internal model offers insight into how one’s prior beliefs about the effector affect the transformation of sensory inputs into population-level motor commands on a timescale of tens of milliseconds.

To date, it has been difficult to identify such an internal model due the complexities of non-linear effector dynamics and multiple sensory feedback modalities, the need to monitor many neurons simultaneously, and the lack of an appropriate statistical algorithm. To overcome these difficulties, we leveraged a closed-loop brain-machine interface (BMI) paradigm (Figure 1A) in rhesus monkeys, which translates neural activity from the primary motor cortex (M1) into movements of a computer cursor (Green and Kalaska, 2011). A BMI represents a simplified and well-defined feedback control system, which facilitates the study of internal models (Golub et al., 2016). In particular, the BMI mapping from neural activity to movements is completely specified by the experimenter and can be chosen to define linear cursor dynamics, the relevant sensory feedback can be limited to one modality (in this case, vision), and all neural activity that directly drives the cursor is recorded.

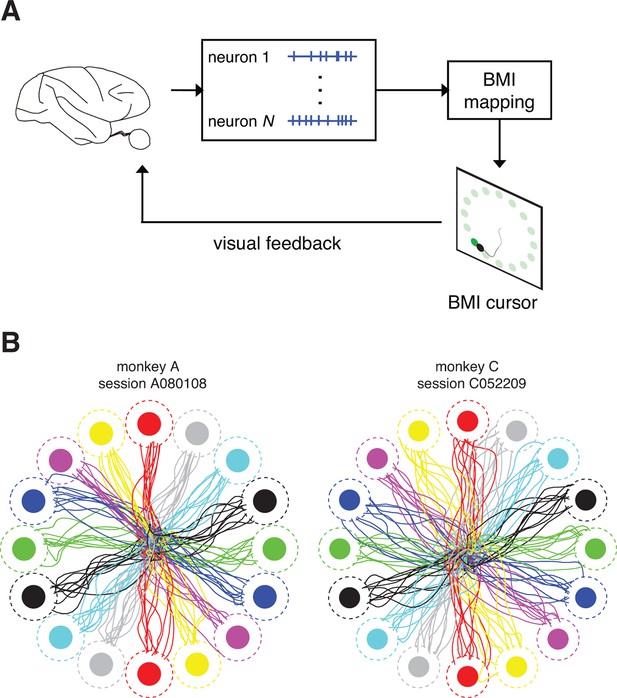

Closed-loop control of a brain-machine interface (BMI) cursor.

(A) Schematic view of the brain-machine interface. Subjects produce neural commands to drive a cursor to hit visual targets under visual feedback. (B) Cursor trajectories from the first 10 successful trials to each of 16 instructed targets (filled circles) in representative data sets. Target acquisition was initiated when the cursor visibly overlapped the target, or equivalently when the cursor center entered the cursor-target acceptance zone (dashed circles). Trajectories shown begin at the workspace center and proceed until target acquisition. Data are not shown during target holds.

During proficient BMI control, as with other behavioral tasks, subjects make movement errors from time to time. One possible explanation for these errors is that they arise due to sensory or motor “noise” that varies randomly from one trial to the next (Harris and Wolpert, 1998; Osborne et al., 2005; Faisal et al., 2008). Another possibility, which is the central hypothesis in this study, is that a substantial component of movement errors is structured and can be explained by a mismatch between the subject’s internal model of the BMI and the actual BMI mapping.

Testing this hypothesis required the development of a novel statistical method for estimating the subject’s internal model from the recorded M1 activity, BMI cursor movements, and behavioral task goals. The internal model represents the subject’s prior beliefs about the physics of the BMI cursor, as well as how the subject’s neural activity drives the cursor. To justify the study of internal models in a BMI context, we first asked whether subjects show evidence of internal prediction during BMI control. Next, we asked whether interpreting M1 activity through extracted internal models could explain movement errors that are present throughout proficient BMI control and long-standing deficiencies in control of BMI movement speed. Finally, because a key feature of internal models is their ability to adapt (Shadmehr et al., 2010), we altered the BMI mapping and asked whether the internal model adapted in a manner consistent with the new BMI mapping.

An important distinction that we make relative to previous work is that we are not asking circuit-level questions about how and where in the brain these internal models operate. Rather, we seek a statistical representation of the subject’s prior beliefs about the BMI mapping (i.e., an internal model) that can be used to explain behavioral errors. Although internal models might not reside in M1 (Shadmehr, 1997; Pasalar et al., 2006; Miall et al., 2007; Mulliken et al., 2008; Lisberger, 2009), their computations influence activity in M1. Thus, by examining the moment-by-moment relationship between M1 population activity and task objectives, it may be possible to extract a detailed representation of the subject’s internal model.

Results

We trained two rhesus monkeys to modulate neural activity to drive movements of a computer cursor to hit targets in a two-dimensional workspace (Figure 1B). The family of BMI mappings that we used is represented by:

where is the cursor state (position and velocity), comprises the recorded M1 activity, and , , and are the parameters of the BMI mapping. All experiments began with a closed-loop calibration of an intuitive BMI mapping, which was designed to provide proficient control on par with the majority of studies in the field (Serruya et al., 2002; Velliste et al., 2008; Ganguly and Carmena, 2009; Suminski et al., 2010; Hauschild et al., 2012; Ifft et al., 2013; Sadtler et al., 2014). Subjects indeed demonstrated proficient and stable control of the BMI, with success rates of nearly 100%, and movement times on average faster than one second (Figure 1—figure supplement 1).

The BMI provides an ideal paradigm for studying internal models because it simplifies several key complexities of native limb control. First, native limb control involves effectors with non-linear dynamics, and the causal relationship between the recorded neural activity and limb movements is not completely understood. In contrast, the causal relationship between recorded neural activity and BMI cursor movements is completely specified by the experimenter (through , and in Equation 1), and can be chosen to be linear (as in Equation 1). Second, native limb control involves multiple modalities of sensory feedback (e.g., proprioception and vision), which makes it difficult for the experimenter to know how the subject combines sources of sensory information. In the BMI, task-relevant sensory feedback is limited to a single modality (vision), which is completely specified by the experimenter ( in Equation 1). Finally, the neural activity that directly drives the BMI is completely specified by the recorded population activity ( in Equation 1), whereas typically only a subset of neurons driving limb movements is recorded. We can thereby reinterpret the full set of BMI control signals using an internal model in a more concrete manner than is currently possible with limb movements.

Subjects compensate for sensory feedback delays while controlling a BMI

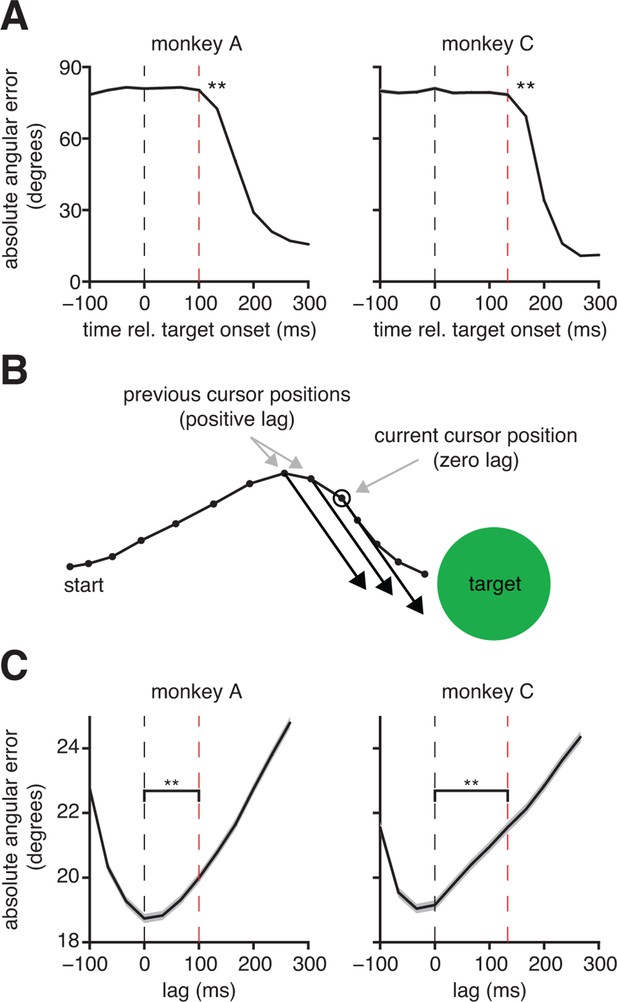

Because internal models have not previously been studied in a BMI context, we sought evidence of internal prediction. A hallmark of internal prediction is compensation for sensory feedback delays (Miall et al., 2007; Shadmehr et al., 2010; Farshchiansadegh et al., 2015). To assess the visuomotor latency experienced by a subject in our BMI system, we measured the elapsed time between target onset and the appearance of target-related activity in the recorded neural population (Figure 2A). The delays we measured ( ms, monkey A; ms, monkey C) are consistent with visuomotor latencies reported in arm reaching studies of single-neurons in primary motor cortex (Schwartz et al., 1988). Next, we asked whether subjects produced motor commands consistent with the current cursor position, which was not known to the subject due to visual feedback delay, or whether motor commands were more consistent with a previous, perceived position (Figure 2B,C and Figure 2—figure supplement 1). If subjects did not compensate for visual feedback delays and aimed from the most recently available visual feedback of cursor position, we would expect errors to be smallest at lags of 100 ms and 133 ms relative to the current cursor position for monkeys A and C, respectively (dashed red lines in Figure 2C). Rather, we found that these error curves had minima at lags close to 0 ms (dashed black lines in Figure 2C), indicating that motor commands through the BMI mapping pointed closer to the targets when originating from the current cursor position than from any previous position. This finding suggests that subjects use an internal model to internally predict the current cursor position.

Subjects compensate for sensory feedback delays while controlling a BMI.

(A) The visuomotor latency experienced by a subject in our BMI system was assessed by measuring the elapsed time between target onset and the first significant () decrease in angular error. If that first decrease was detected timesteps following target onset, we concluded that the visuomotor latency was at least timesteps (red dashed lines). For both subjects, the first significant difference was highly significant (**, two-sided Wilcoxon test with Holm-Bonferroni correction for multiple comparisons; = 5908 trials; monkey C: = 4578 trials). (B) Conceptual illustration of a single motor command (black arrows) shifted to originate from positions lagged relative to the current cursor position (open circle). In this example, the command points farther from the target as it is shifted to originate from earlier cursor positions. (C) Motor commands pointed closer to the target when originating from the current cursor position (zero lag) than from outdated (positive lag) cursor positions that could be known from visual feedback alone (**, two-sided Wilcoxon test; monkey A: = 33,660 timesteps across 4489 trials; monkey C: = 31,214 timesteps across 3639 trials). Red lines indicate subjects’ inherent visual feedback delays from panel A. Shaded regions in panels A and C (barely visible) indicate SEM.

Because we have not yet explicitly identified the subject’s internal model, motor commands were defined in this analysis using the BMI mapping, which is external to the subject. If the internal model bears similarities to the BMI mapping, it is reasonable to use the BMI mapping as a proxy for the internal model to assess feedback delay compensation. With evidence that subjects engage an internal model during BMI control, we next asked whether we could explicitly identify an internal model from the recorded neural activity.

Internal model mismatch explains the majority of subjects’ control errors

The BMI mapping, which determines the cursor movements displayed to the subject, provides one relevant, low-dimensional projection of the high-dimensional neural activity. With evidence that subjects use an internal model during closed-loop BMI control, we asked whether mismatch between an internal model and the actual BMI mapping could explain the subject’s moment-by-moment aiming errors. This requires identifying the subject’s internal model, which could reveal a different projection of the high-dimensional neural activity, representing the subject’s internal beliefs about the cursor state. Because of the closed-loop nature of the BMI paradigm, the subject continually updates motor control decisions as new visual feedback of the cursor becomes available. To resolve these effects, the internal model needs to operate on a timescale of tens of milliseconds (in this case, a single timestep of the BMI system) on individual experimental trials. The extraction of such a rich internal model has been difficult prior to this study due to the lack of an appropriate statistical framework.

To overcome this limitation, we developed an internal model estimation (IME) framework, which extracts, from recorded population activity, a fully parameterized internal model along with a moment-by-moment account of the internal prediction process (Figure 3A). In the IME framework, the subject internally predicts the cursor state according to:

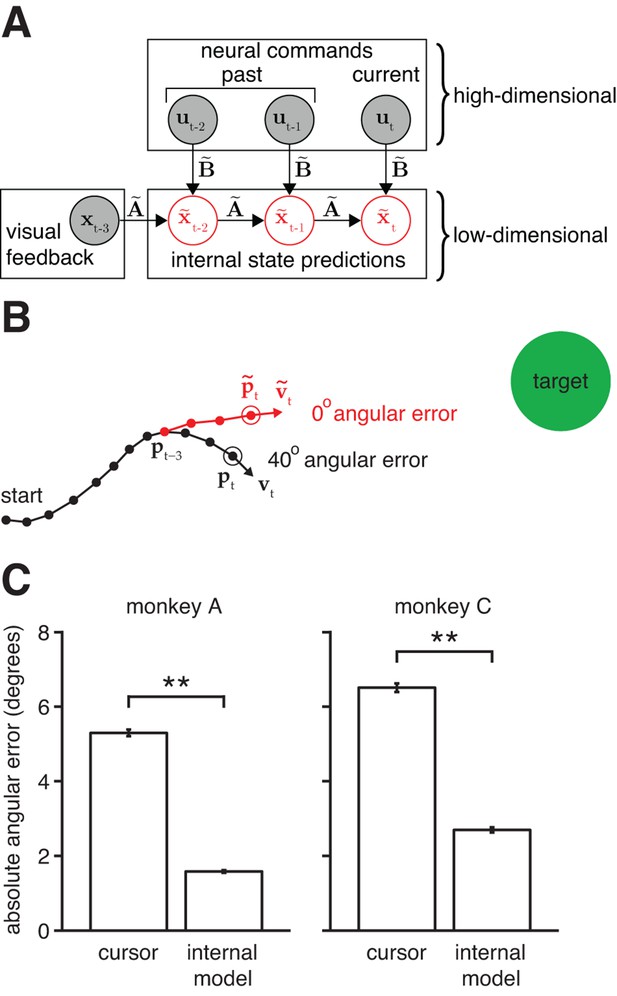

Mismatch between the internal model and the BMI mapping explains the majority of the subjects’ cursor movement errors.

(A) At each timestep, the subject’s internal state predictions () are formed by integrating the visual feedback () with the recently issued neural commands () using the internal model (). We defined cursor states and internal state predictions to include components for position and velocity (i.e., ). (B) Cursor trajectory (black line) from a BMI trial that was not used in model fitting. Red whisker shows the subject’s internal predictions of cursor state as extracted by IME. The critical comparison is between the actual cursor velocity (; black arrow) and the subject’s internal prediction of cursor velocity (; red arrow). (C) Cross-validated angular aiming errors based on IME-extracted internal models are significantly smaller than cursor errors from the BMI mapping (**, two-sided Wilcoxon test; monkey A: = 5908 trials; monkey C: = 4577 trials). Errors in panel B are from a single timestep within a single trial. Errors in panel C are averaged across timesteps and trials. Errors in panels B and C incorporate temporal smoothing through the definition of the BMI mapping and the internal model, and are thus not directly comparable to the errors shown in Figure 2C, which are based on single-timestep velocity commands needed for additional temporal resolution. Error bars (barely visible) indicate SEM.

where is the subject’s internal prediction about the cursor state (position and velocity), is a vector of recorded neural activity, and , , and are the parameters of the subject’s internal model. This form of the internal model was chosen to be analogous to the BMI mapping from Equation 1 so that the actual BMI mapping lies within the family of internal models that we consider. Additionally, this formulation aligns with recent studies of skeletomotor (Shadmehr and Krakauer, 2008) and oculomotor (Frens, 2009) control, and a vast literature of control theory (Anderson and Moore, 1990).

The primary concept of the IME framework is that, at each timestep, the subject internally predicts the current cursor state by recursively applying Equation 2 (starting from the most recently available sensory feedback) and generates neural activity consistent with aiming straight to the target relative to this internal prediction (see the 'Framework for internal model estimation (IME)' subsection in 'Materials and methods' and Figure 3—figure supplement 1). At each timestep, IME extracts the entire time-evolution of the subject’s internal state prediction using Equation 2 as an internal forward model. This evolution can be visualized in the form of a whisker (Figure 3B) that begins at the cursor position of the most recently available feedback and unfolds according to the extracted internal model. At each new timestep, the subject forms a new internal prediction that incorporates newly received visual feedback. If the internal model exactly matches the BMI mapping, the subject’s internal predictions would exactly match the cursor trajectory. A visualization of an example internal model and BMI mapping is given in Figure 3—figure supplement 2.

The central hypothesis in this study is that movement errors arise from a mismatch between the subject’s internal model of the BMI and the actual BMI mapping. The alternative to this hypothesis is that the subject’s internal model is well-matched to the BMI mapping, and movement errors result from other factors, such as “noise” in the sensorimotor system, subjects’ inability to produce certain patterns of neural activity, or subjects disengaging from the task. Our key finding is that recorded neural commands were markedly more consistent with the task goals when interpreted through subjects’ internal models than when viewed through the BMI mapping (Figure 3C). Subjects’ internal models deviated from the actual BMI mappings such that control errors evaluated through extracted internal models were substantially smaller than actual cursor errors: extracted internal models explained roughly 65% of cursor movement errors (70%, monkey A; 59%, monkey C). Although this finding does not preclude other factors (e.g., spiking noise or subject disengagement) from contributing toward movement errors, it does suggest their contribution is substantially smaller than previously thought, due to the large effect of internal model mismatch.

We found that the majority of the explanatory power of extracted internal models was in their ability to identify structure in the high-dimensional neural activity (Figure 3—figure supplement 3). This structure was captured in the internal model by the mapping from high-dimensional neural activity to low-dimensional kinematics ( in Equation 2), which need not match the BMI mapping ( in Equation 1). Consistent with this finding, internal models fit to low-dimensional behavior rather than high-dimensional neural activity were not able to explain cursor errors (Figure 3—figure supplement 4).

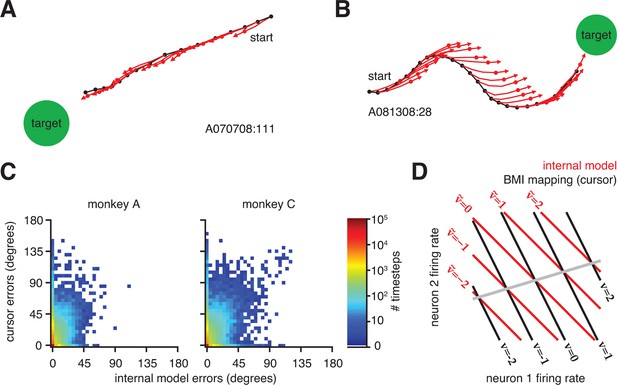

That a majority of cursor errors can be explained by mismatch of the internal model is not to say that control through the BMI mapping was poor–in fact control was proficient and stable (Figure 1B and Figure 1—figure supplement 1). Rather, extracted internal models predicted movements that consistently pointed straight to the target, regardless of whether the actual cursor movements did (Figure 4A) or did not (Figure 4B and Figure 4—figure supplement 1) point straight to the target. On most trials, BMI cursor trajectories proceeded roughly straight to the target (Figure 4A). On these trials, internal model predictions aligned with actual cursor movements, resulting in small errors through both the BMI mapping and the extracted internal model. In a smaller subset of trials, actual cursor movements were more circuitous and thus had relatively large errors. Previously, the reason behind these seemingly incorrect movements was unknown, and one possibility was that the subject simply disengaged from the task. When interpreted through the extracted internal model, however, neural activity during these circuitous trials appears correct, suggesting that the subject was engaged but was acting under an internal model that was mismatched to the BMI mapping (Figure 4B and Figure 4—figure supplement 1). In other words, when armed with knowledge of the subject’s internal model, outwardly irrational behavior (i.e., circuitous cursor movements) appears remarkably rational. Across all trials, the majority of neural activity patterns had low or zero error as evaluated through extracted internal models, regardless of whether errors of the actual cursor movements (i.e., through the BMI mapping) were large or small (Figure 4C and Figure 4—figure supplement 2).

Neural activity appears correct through the internal model, regardless of how the actual cursor moved.

(A) Typical trial in which the cursor followed a direct path (black) to the target. Internal model predictions (red whiskers) also point straight to the target. (B) Trial with a circuitous cursor trajectory. Internal model predictions point straight to the target throughout the trial, regardless of the cursor movement direction (same color conventions as in panel A). (C) Timestep-by-timestep distribution of BMI cursor and internal model errors. Neural activity at most timesteps produced near-zero error through the internal model, despite having a range of errors through the BMI mapping. (D) Hypothetical internal model (red) and BMI mapping (black) relating 2D neural activity to a 1D velocity output. This is a simplified visualization of Equations 1 and 2, involving only the and parameters, respectively. Each contour represents activity patterns producing the same velocity through the internal model (, red) or BMI mapping (, black). Because of internal model mismatch, many patterns result in different outputs through the internal model and the BMI. However, some patterns result in the same output through both the internal model and the BMI (gray line). Here we illustrate using a 2D neural space and 1D velocity space. In experiments with -dimensional neural activity and 2D velocity, activity patterns producing identical velocities through both the internal model and the cursor span a -dimensional space.

When cursor trajectories were circuitous, it was not uncommon for some internal model predictions (whiskers) to match the actual cursor movement while others did not, even within the same trial (Figure 4B). Given a single internal model, how can some patterns of neural activity result in whiskers aligned to the cursor trajectory, while others patterns produce whiskers that deviate from the cursor trajectory? This is possible due to mathematical operation of mapping from high-dimensional neural activity patterns to low-dimensional cursor states. Figure 4D provides a conceptual illustration of a simplified BMI mapping:

and a simplified internal model:

each of which relies only on a mapping ( or ) from neural activity () to cursor velocity ( or ). We focus on and here (without considering and from Equations 1 and 2) because of the aforementioned finding that the majority of the internal model mismatch effect is captured by differences between and (Figure 3—figure supplement 3). Given a mismatched BMI mapping (black lines) and internal model (red lines), many neural activity patterns will produce different velocities through the BMI mapping versus the internal model. However, a subset of activity patterns (gray line) will produce identical velocities through both the BMI mapping and the internal model. These patterns lie in the nullspace of (i.e., solutions to the equation ). In the example trials shown in Figure 4A,B and Figure 4—figure supplement 1, internal model predictions (red) that match the actual cursor movement (black) correspond to neural activity patterns along the gray line in Figure 4D. Predictions not matching the cursor movement correspond to neural activity patterns anywhere off the gray line in Figure 4D.

Two alternative hypotheses do not explain the effect of internal model mismatch

The data presented thus far support our central hypothesis that internal model mismatch is a primary source of movement errors. Next we asked whether it might be possible to have arrived at this result under the alternate hypothesis that the internal model is well-matched to the BMI mapping. We address two specific cases of this alternative hypothesis and show that they do not explain the observed effect of internal model mismatch.

First, we explored the possibility that the subject might have a well-matched internal model, but has systematic difficulties producing the neural activity patterns required to drive the cursor in all directions in the 2D workspace using the BMI mapping. This could result in an estimated internal model that appears to be mismatched to the BMI mapping. Although M1 cannot readily produce all possible patterns of high-dimensional neural activity (Sadtler et al., 2014), we observed that subjects could readily produce the full range of movement directions through the BMI mapping (Figure 3—figure supplement 5). Gaps between producible movement directions were typically less than 1/4 of a degree, which is substantially smaller than the cursor errors shown in Figure 3C. This suggests that our main finding of internal model mismatch cannot be explained by subjects’ inability to produce particular neural activity patterns.

Second, we explored the possibility that the subject intended to produce neural commands that were correct according to the BMI mapping, but that those intended commands were corrupted by “noise” that is oriented such that errors appear smaller through the extracted internal model than through the BMI mapping. Here we define noise as spiking variability not explained by the desired movement direction under the BMI mapping. If spiking variability is correlated across neurons, it is possible to identify a mapping that best attenuates that variability. To determine whether correlated spiking variability could explain the effect of internal model mismatch, we simulated neural activity according to this alternative hypothesis in a manner that preserved the statistics of the real data (Figure 3—figure supplement 6). If this simulation produced results that match our findings from the real data, it would indicate that our main finding can be explained by the alternate hypothesis. However, this was not the case. Simulated neural activity was more consistent with the BMI mapping than the extracted internal model, which contrasts with our finding from the recorded neural activity.

Statistical controls for validating observed effects

To further validate the main results presented above, we implemented four statistical controls. First, we ensured that our findings were not simply artifacts of overfitting the data. Second, we removed the high-dimensional structure from the neural activity while preserving the cursor movements, and show that resulting extracted internal models no longer provided explanatory power. Third, we ensured that internal model predictions do not trivially point toward the targets. Finally, we explored a variety of forms for the internal model and found that a simplified form does not account for the data. Here we describe each of these four statistical controls in additional detail.

One possible concern when interpreting the findings presented above is that internal models might be simply overfitting the data. To rule out this possibility, all findings presented throughout this paper are cross-validated (see the 'Computing cross-validated internal model predictions' subsection in 'Materials and methods'). Internal models were fit using a subset of trials as training data. Then, trials that were held out during fitting were used to evaluate each extracted internal model. If the extracted internal models had overfit the training data, we would expect those internal models to generalize poorly to the held-out data. However, this was not the case. Internal models explained the majority of cursor errors in the held-out data (Figure 3C), demonstrating that extracted internal models captured real, task-relevant structure in the recorded neural activity.

In addition to properly cross-validating our results, we performed a control analysis to show that extracted internal models identified reliable, task-appropriate structure in the high-dimensional neural activity. Here we extracted internal models using neural activity that had been shuffled across timesteps in a manner that preserved the cursor movements through the BMI mapping (Figure 3—figure supplement 7). If our results could be explained by internal models that simply overfit noise in the data, we would expect internal models fit to these shuffled data data sets to again explain a majority of cursor errors. However, internal models extracted from these shuffled data sets could no longer explain cursor errors, indicating that IME does not identify effects when they do not exist in the data. This result is consistent with our findings that the majority of the explanatory power of extracted internal models relies on structure in the high-dimensional neural activity (Figure 3—figure supplement 3), and that cursor errors cannot be explained by internal models when high-dimensional neural activity is replaced by low-dimensional behavioral measurements during model fitting (Figure 3—figure supplement 4).

If an internal model prediction points toward the target, it is not trivially due to our inclusion of straight-to-target aiming during model fitting (see the 'Computing cross-validated internal model predictions' subsection in 'Materials and methods'). Although target positions were used during model fitting, they were never used when computing internal model predictions from the data (e.g., when constructing the whiskers in Figure 3B, Figure 4A,B, and Figure 4—figure supplement 1). Each whisker was constructed in a held-out trial using only visual feedback (consisting of a single timestep of cursor position and velocity), the recorded neural activity up through the current timestep, and the internal model extracted from the training data. Because of our aforementioned cross-validation procedures, when the neural command is used to compute the movement error at timestep , that neural command had not been seen previously (i.e., it was not used when fitting the internal model, when estimating the subject’s internal cursor state prediction, when calibrating the BMI mapping, nor when determining the current position of the actual BMI cursor). A whisker that points straight to the target in the held-out data thus reveals that, when interpreted through the subject’s internal model, the recorded neural activity would have driven the cursor straight to the target.

Finally, we explored a variety of approaches to modeling the subject’s internal tracking process and found that models demonstrated similarly high degrees of explanatory power as long as they could capture high-dimensional structure in the neural activity. However, a simplified internal model that does not account for any form of internal forward prediction was not consistent with our data (Figure 3—figure supplement 8).

Internal model mismatch explains limitations in speed dynamic range

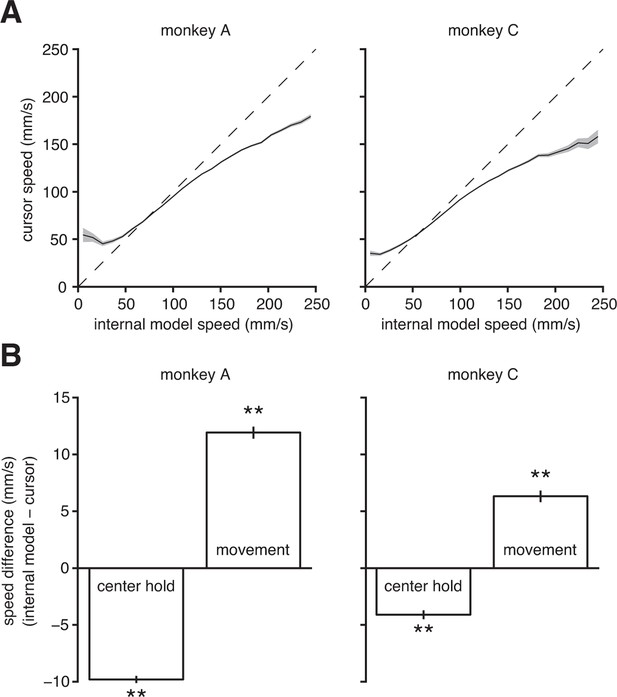

A major limitation in BMI performance is the ability to control cursor speed (Gilja et al., 2012; Golub et al., 2014). Gilja et al. (2012) and Golub et al. (2014) have proposed solutions to improve control of BMI speed (in particular, with respect to stopping the BMI cursor at targets). However, it is still an open question as to why BMI speed control is deficient in the first place. In addition to explaining the subjects’ aiming errors, we asked whether mismatch between the internal model and BMI mapping could also explain subjects’ difficulty in controlling cursor speed. Using the extracted internal model, we could compare the subject’s intended speed (from the internal model) to the speed of the actual BMI cursor at each timestep. We found that low intended speeds were systematically overestimated, and high intended speeds were systematically underestimated by the BMI mapping (Figure 5A). Furthermore, we discovered that the subjects intended to hold the cursor steadier during the initial hold period and move the cursor faster during the movement than what occurred during experiments (Figure 5B). Note that we make no assumptions about movement speed when extracting the internal model (see the 'Framework for internal model estimation (IME)' subsection in 'Materials and methods').

Internal model mismatch limits the dynamic range of BMI cursor speeds.

(A) BMI cursor speeds across the range of intended (i.e., internal model) speeds. At low intended speeds, BMI speeds were higher than intended, whereas for mid-to-high intended speeds, BMI speeds were lower than intended. Shaded regions indicate SEM. (B) During the hold period prior to target onset, intended speeds were significantly lower than those produced through the BMI mapping. During movement, intended speeds were significantly higher than those produced through the BMI. Error bars indicate SEM (**, two-sided Wilcoxon test; monkey A: trials; monkey C: trials). In panels A and B internal models were used to predict intended speed on trials not used during model fitting.

To gain insight into this speed mismatch, we can use extracted internal models to examine the discrepancies between intended and actual speeds at the level of individual units and on the timescale of a single 33-ms timestep (Figure 5—figure supplement 1). These systematic differences between intended and actual cursor speeds indicate that internal model mismatch limits realizable dynamic range of BMI movement speeds. These findings suggest that the longstanding deficiencies in BMI speed control may be a consequence of internal model mismatch.

Perturbations drive internal model adaptation

A key feature of an internal model is its ability to adapt. Arm reaching studies have demonstrated behavioral evidence of internal model adaptation (Shadmehr and Mussa-Ivaldi, 1994; Thoroughman and Shadmehr, 2000; Joiner and Smith, 2008; Taylor et al., 2014). Behavioral learning has also been demonstrated in the context of BMIs (Taylor, 2002; Carmena et al., 2003; Jarosiewicz et al., 2008; Ganguly and Carmena, 2009; Chase et al., 2012; Sadtler et al., 2014). While these BMI studies suggest that subjects adapt their internal models to better match the BMI mapping, a direct assessment has been difficult without access to those internal models. With the ability to extract a subject’s internal model, here we asked whether extracted internal models adapt in accordance with perturbations to the BMI mapping (Figure 6). In one monkey, an initial block of trials under an intuitive BMI mapping was followed by a block of trials under a perturbed BMI mapping. All data analyzed prior to this section were recorded during intuitive trials. The intuitive and perturbed mappings were of the form of Equation 1, but each used different values in the matrix . The perturbed BMI mapping effectively rotated the pushing directions of a subset of the recorded units, such that the global effect resembled a visuomotor rotation (see the 'Behavioral task' subsection in 'Materials and methods'). Previous studies have shown that perturbations of this type can be learned by monkeys (Wise et al., 1998; Paz et al., 2005; Chase et al., 2012).

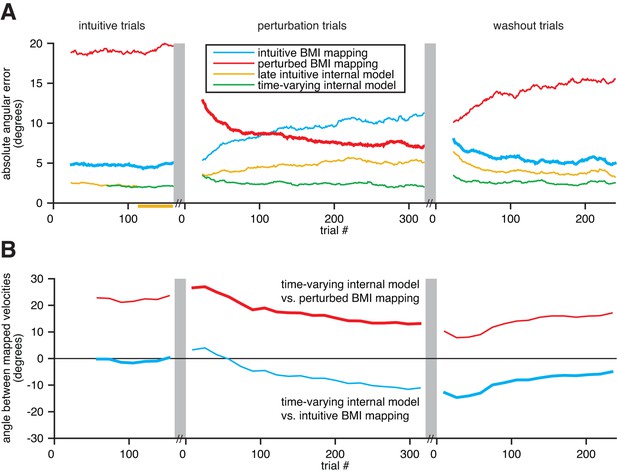

Extracted internal models capture adaptation to perturbations.

(A) Cross-validated angular errors computed by interpreting monkey A neural activity through BMI mappings and internal models. The intuitive BMI mapping (blue) defined cursor behavior during the intuitive and washout trials. The perturbed BMI mapping (red) defined cursor behavior during the perturbation trials. The late intuitive internal model (yellow) was extracted from the last 48 intuitive trials (yellow bar). A time-varying internal model (green) was extracted from a moving window of the 48 preceding trials. Values were smoothed using a causal 24-trial boxcar filter and averaged across 36 experiments. (B) Differences between monkey A’s time-varying internal model and the BMI mappings, assessed through the high-dimensional neural activity. For each round of 16 trials, neural activity from those trials was mapped to velocity through the time-varying internal model, the intuitive BMI mapping, and the perturbed BMI mapping. Signed angles were taken between velocities computed through the time-varying internal model and the intuitive BMI mapping (blue) and between velocities computed through the time varying internal model and the perturbed BMI mapping (red). Values were averaged across 36 experiments.

For each experiment, we interpreted recorded population activity through the intuitive and perturbed BMI mappings, as well as through two views of the subject’s internal model: a time-varying internal model extracted from a moving window of 48 trials, and a late intuitive internal model extracted from the last 48 intuitive trials. We could then quantify changes in the subject’s internal model and assess which BMI mapping or internal model was most consistent with the neural activity, relative to task goals (Figure 6A). To avoid circularity, trials used to evaluate the BMI mappings and internal models were not used when fitting the internal models nor when calibrating the BMI mappings.

Errors through the intuitive BMI mapping describe the actual cursor performance during the intuitive and washout trials (thick blue traces; analogous to cursor errors in Figure 3C), and how that mapping would have performed had it been in effect during the perturbation trials (thin blue trace). Similarly, errors through the perturbed BMI mapping describe the actual cursor performance during the perturbation trials (thick red trace), and how that mapping would have performed had it been in effect during the intuitive and washout trials (thin red traces). Behavioral learning was evident in that errors through the perturbed BMI mapping were large in early perturbation trials and decreased continuously throughout the perturbation trials. A detailed characterization of this behavioral learning can be found in (Chase et al., 2012).

Our key finding in this analysis is that extracted internal models adapted in a manner consistent with the BMI perturbations (Figure 6B). During the perturbation trials, the time-varying internal model adapted to better match the perturbed BMI mapping (red trace trends toward zero). Similarly, during the washout trials, the time-varying internal model adapted to better match the intuitive BMI mapping (blue trace trends toward zero). Had the subject’s internal model not adapted, or if the adaptation was not reflected in the extracted internal model, we would expect the traces in Figure 6B to be flat. Rather than being static entities, the extracted internal models were dynamic with timescales independent of experimenter-induced changes to the BMI mapping.

Consistent with our central hypothesis, internal model mismatch was present throughout the intuitive, perturbation, and washout trials. During intuitive trials, errors through the time-varying internal model were substantially lower than errors through the intuitive BMI mapping (green trace lower than blue trace in Figure 6A), which is consistent with our main findings in Figure 3C. Because the subject’s internal model adapts, errors through the time-varying internal model remained substantially smaller than errors through the BMI mappings across the perturbation and washout trials as well (green trace remains low across Figure 6A). Although behavioral errors decreased over the course of the perturbation and washout trials, internal model mismatch was still present following adaptation (red and blue traces are nonzero during late perturbation and washout trials, respectively, in Figure 6B).

It could have been that this internal model mismatch was only substantial during early intuitive trials before the subject had accrued enough experience to form a stable internal model. This was not the case. The subject’s internal model was stable throughout the intuitive session, as evidenced by the nearly constant angular differences between velocities mapped through the time-varying internal model and the BMI mapping (red and blue traces are roughly flat in Figure 6B) and the nearly identical errors through the time-varying internal model and the (static) late intuitive internal model (green and yellow traces overlap in Figure 6A wherever cross-validated errors can be computed). Consistent with a stable internal model, behavioral performance was stable throughout the intuitive trials (blue trace is flat in Figure 6A), and internal model mismatch explained a steady fraction of behavioral errors (green trace is also flat, and substantially lower than blue trace). During the perturbation session, the subject’s internal model diverges from this stabilized state (yellow trace diverges from green trace in Figure 6A and both traces are non-constant in Figure 6B).

Discussion

In this work, we asked what gives rise to behavioral errors during feedback motor control. In a BMI paradigm, we hypothesized that a mismatch between the subject’s internal model and the actual BMI mapping could explain errors in BMI cursor movement. To assess this, we first found evidence that subjects compensate for sensory feedback delays. Then, we reinterpreted the neural population activity recorded during closed-loop BMI control in terms of a rich internal model that operates on a timescale of tens of milliseconds. We found (i) that subjects’ neural activity was often correct according to the internal model even when cursor movements were circuitous (thereby explaining 65% of cursor errors), and (ii) that subjects intended to hold the cursor steadier during initial hold periods and to drive the cursor faster during movements, relative to observed behavior. Furthermore, when the BMI mapping was perturbed, the subject’s internal model changed in a manner consistent with the new BMI mapping.

Internal models influence activity in M1

In this study, we considered neural population activity recorded in M1. It is reasonable to ask how it is possible to deduce anything about internal models from M1 activity if we are not recording i) signals from the neural circuits that implement internal models (e.g., cerebellum), nor ii) the internal copy signals that enable internal model computations. The rationale is the following. First, the neural activity recorded in M1 is likely to be downstream of the internal model computations, whether they be in cerebellum (Shadmehr, 1997; Pasalar et al., 2006; Miall et al., 2007; Lisberger, 2009; Huang et al., 2013), posterior parietal cortex (Shadmehr, 1997; Mulliken et al., 2008), dorsal premotor cortex (Shadmehr, 1997), or elsewhere. Thus, the internal model is likely to influence the neural activity produced in M1. By relating the neural activity recorded in M1 to the behavioral task on a moment-by-moment basis, we should be able to infer properties of the upstream internal model. Second, previous studies indicate that internal copy signals (e.g., efference copy, corollary discharge) carry information about movement intent, in particular a copy of the movement intent that M1 sends to the motor effector (Crapse and Sommer, 2008; Huang et al., 2013; Schneider et al., 2014; Azim et al., 2014). Although we are not directly recording the internal copy signal, the information in the internal copy relevant to movement intent is likely also present in the recorded M1 activity, and this is what we leveraged. In short, we make no claims about the neural circuitry implementing internal models, but rather we infer statistical properties of the internal models from their downstream consequences in M1. Using this rationale, we extracted internal models from M1 population activity.

We chose to capture the subject’s internal model using a forward model framework (Equation 2 and Figure 3A) because it is both highly interpretable and consistent with a large body of behavioral and computational studies (Shadmehr and Krakauer, 2008; Frens, 2009). Our results do not preclude the use of other types of internal models, such as an inverse model (Shadmehr and Mussa-Ivaldi, 1994; Kawato, 1999), whose acquisition and function is believed to be tightly coupled to that of the forward model (Wolpert and Kawato, 1998).

Avoiding circularity when extracting an internal model

We presented four important lines of evidence that indicate that the extracted internal models are meaningful, and not a result of logical circularity during model fitting or overfitting to noise in the data. First, extracted internal models explain a majority of behavioral errors on trials not seen during model fitting (Figure 3C). Here, extracted internal models identified structure in the high-dimensional neural activity that indicated straight-to-target movement intent, even when the cursor behavior was circuitous. Internal model predictions on held-out trials could not trivially point toward the targets because that held-out neural activity had not been used during model fitting, and because target positions were never used when constructing internal model predictions from held-out trials. Second, the finding that intended speed is better predicted by internal models than the BMI mapping (Figure 5B) lends an additional independent validation of those internal models, since no assumptions were made about intended movement speed when fitting internal models. Third, when we perturbed the BMI mapping, extracted internal models revealed adaptation consistent with the particular perturbations (Figure 6).

Finally, we performed a series of scientific and statistical control analyses. We showed that our data are not consistent with two versions of the alternative hypothesis, in which the subject’s internal model is well-matched to the BMI mapping (Figure 3—figure supplement 5 and Figure 3—figure supplement 6). Further, we asked whether extracted internal models could explain the observed behavioral errors without access to structure in the high-dimensional neural activity beyond that which defined cursor movements. We considered two different alterations to the data from which internal models were extracted: one in which we replaced the high-dimensional neural activity with low-dimensional cursor velocities (Figure 3—figure supplement 4) and another in which we shuffled the neural activity in a manner that preserved cursor velocities through the BMI mapping (Figure 3—figure supplement 7). In both cases, we found that the extracted internal models no longer offered a consistent explanation for the observed behavioral errors, thereby demonstrating that the explanatory power of the extracted internal models does not arise from logical circularity or overfitting.

Relationship between internal models and BMI mappings

An extracted internal model and a BMI mapping are closely related. They take a similar mathematical form (Equations 1 and 2) and both project high-dimensional population activity to a low-dimensional kinematic space. A key difference between internal models and BMI mappings is that internal models are dynamic entities whose properties can change during motor adaptation. In contrast, the BMI mappings are chosen by the experimenter or by a computer algorithm. Critically, in experiments in which we abruptly applied a perturbed BMI mapping, we found that extracted internal models dynamically adjusted in a manner appropriate for the task and at a timescale independent of changes to the BMI mapping (Figure 6). The ability to interpret neural activity through the subject’s internal model, while the subject controls the cursor through some BMI mapping (e.g., Figure 4A,B, Figure 6A and Figure 4—figure supplement 1), offers a unique glimpse into the subject’s movement intentions, sensory prediction errors, and motor adaptation.

Given the substantial fraction of behavioral errors that are explained by internal model mismatch during control under the intuitive BMI mapping, it is perhaps surprising that we did not find evidence of behavioral or internal model adaptation during those trials (Figure 6). A way to reconcile these findings is that, in contrast to the frequent movement errors experienced after the BMI mapping was perturbed, there was a relative paucity of errors during the intuitive trials. As a result, there may not have been sufficient pressure to improve upon a “good enough” internal model (Loeb, 2012). Had the subject been given more experience with the same BMI mapping (Ganguly and Carmena, 2009), the internal model may have converged to the BMI mapping. Nevertheless, our findings indicate that the subject’s learning process may be a key limitation in BMI performance (Sadtler et al., 2014). It may be possible to overcome these limitations in the subject’s neural adaptation process through complementary innovations in designing the BMI mapping (Shenoy and Carmena, 2014). For example, applying an extracted internal model as the BMI mapping might improve performance during closed-loop BMI control. Indeed, a recent study incorporating the concept of internal tracking has demonstrated substantial gains in closed-loop BMI performance (Gilja et al., 2012). Future studies will be required to determine whether further improvements in performance might be possible by using the IME framework toward designing the BMI mapping.

Leveraging multi-dimensional structure in population activity

The insights gained in this study were made possible because we monitored the subject’s high-dimensional neural activity. Because the BMI mapping and the subject’s internal model are high-to-low dimensional mappings, neural activity that was consistently correct under the internal model sometimes resulted in aberrant behavior through the BMI mapping. We would not have been able to observe or explain this phenomenon by analyzing the BMI cursor movements in isolation (Figure 3—figure supplement 4). In particular, by replacing all instances of neural activity (i.e., the in Equation 2) with actual cursor velocities (or analogously, with actual hand velocities from an arm reaching task), IME becomes limited to predicting the subject’s velocity intent to be a scaled and rotated (in two-dimensions) version of the actual velocity. In contrast, access to the high-dimensional neural activity enabled the identification of the subject’s intended movements without constraining them to have a consistent relationship with actual movements.

Prior beliefs, and their role in sensation and behavior, have been the focus of many studies, including those on visual perception (Kersten et al., 2004; Komatsu, 2006; Berkes et al., 2011), perceptual decision-making (Ma and Jazayeri, 2014), and sensorimotor learning (Körding and Wolpert, 2004; Turnham et al., 2011). Our work provides a means for extracting a rich representation of prior beliefs (i.e., the internal model) that can combine past sensory input with multi-dimensional neural processes to drive moment-by-moment motor control decisions. We found that outwardly aberrant behavior and behavioral limitations could be explained by taking into account the subject’s prior beliefs. By recording simultaneously from multiple neurons and developing the appropriate statistical algorithms, it may be possible to extract similarly rich prior beliefs in other systems.

Materials and methods

Neural recordings

Request a detailed protocolTwo male rhesus macaques (Maccaca mulatta) were each implanted with a 96-channel microelectrode array (Blackrock Microsystems, Salt Lake City, UT) targeting proximal arm area of primary motor cortex. Signals were amplified, bandpass filtered (250 Hz - 8 kHz) and manually sorted (Plexon Sort Client, box sort) with a 96-channel Plexon MAP system (Plexon, Dallas, TX). Recorded neuronal units were either well-isolated single cells or multiple cells that could not be well separated but as a group were tuned to intended movement direction. In each session, we recorded 26.0 3.4 (monkey A) and 39.2 3.9 (monkey C) neuronal units (mean one standard deviation). Spike counts were taken in nonoverlapping 33-ms bins throughout the behavioral task (see 'Behavioral task'). All animal procedures were approved by the Institutional Animal Care and Use Committee of the University of Pittsburgh.

Behavioral task

Request a detailed protocolSubjects modulated neural activity to drive movements of a virtual cursor in a 2D brain-machine interface (BMI) task. The cursor (radius: 7–8 mm, monkey A; 6 mm monkey C) and targets (same radii as cursor) were displayed to the subject on a frontoparallel stereoscopic display (Dimension Technologies, Rochester, NY) with a refresh rate of 60 Hz. Display updates were subject to a latency of up to 2 refresh cycles (0–33.3 ms). Target positions were chosen pseudorandomly from a set of 16 evenly-spaced radial targets (center-to-target distance: 85 mm, monkey A; 72–73 mm, monkey C). Each trial began with the cursor at the workspace center, where the subject was required to hold the cursor to visibly overlap a central target (center hold requirement randomly selected for each trial: 50–350 ms, monkey A; 50–150 ms, monkey C). Following completion of the initial hold, a peripheral target appeared, instructing the subject to initiate a cursor movement. Target acquisition was recorded as the first timestep during which the cursor visibly overlapped the peripheral target. Following target acquisition, the subject was required to hold the cursor steady without losing visible overlap between the cursor and target (target hold requirement randomly selected for each trial: 50–100 ms, monkey A; 50 ms, monkey C). A limit was placed on the time between target onset and target acquisition (1.5–2 s, monkey A; 1.2–2 s, monkey C). A trial was deemed failed and terminated if visible overlap between cursor and target was lost before satisfying either hold requirement. If all requirements were met, a trial was deemed successful, and the subject was provided with a water reward (120 , monkey A; 120–130 , monkey C). Arms were restrained, and little to no hand movements were observed (although hand positions were not recorded).

The analyzed data were subsets of data from larger experiments. The experimental details for monkey A have been described previously (all no invisible zone conditions from Chase et al., 2012). Briefly, each experiment began with roughly 40 trials that were used to calibrate the intuitive BMI mapping (see 'Calibration of the BMI mapping'). Following calibration was a block of 169 8.1 successful trials under this intuitive BMI mapping. Next, the BMI mapping was systematically perturbed and held constant for 365 126 successful trials. Each perturbation effectively rotated a random subset of recorded units’ decoded pushing directions (DPDs), as in Figure 3—figure supplement 2B, by a particular angle (5 experiments with 25% of units’ DPDs rotated ; 20 experiments with 50% of units’ DPDs rotated 60°; 11 experiments with 100% of units’ DPDs rotated 30°). In 33 of 36 experiments, perturbation trials were followed by 360 ± 237 successful washout trials, during which the perturbation was removed, and the BMI mapping was restored to the intuitive mapping. Unless noted otherwise, analyses of monkey A data refer to intuitive trials. Data from the perturbation and washout trials appear only in Figure 6. Each of the 36 experiments comprising these data took place on a unique day.

For monkey C, BMI cursor control alternated between the 2D task (described above) and a 3D task (described below). All monkey C trials analyzed in this work came from the 2D task. Each day began with roughly 40–50 trials to calibrate an intuitive BMI mapping. Following calibration, subsequent blocks alternated between the 2D task and the 3D task, with the first of these tasks chosen randomly each day. The 3D task was similar to the 2D task, except that the cursor was allowed to move in 3D, and targets were distributed about the surface of a workspace-centered sphere. Blocks with the 2D task consisted of 277 ± 70.4 trials, and blocks with the 3D task consisted of 527 ± 252 trials. Each day consisted of either 3 or 4 blocks. Monkey C experiments did not include trials under a perturbed BMI mapping. The 18 2D blocks analyzed in this work took place on 12 unique days.

The BMI mapping

Request a detailed protocolBMI cursor position and velocity were determined from recorded spike counts according to a BMI mapping:

where at timestep , is the cursor position, is the cursor velocity, ms is the timestep duration, is the mean spike count vector recorded simultaneously across neuronal units over the past 5 timesteps (167 ms), and and are the parameters that map neural activity to cursor velocity. Note that the BMI mapping (Equations 5 and 6) can be written equivalently in the form of Equation 1:

where the cursor state, , concatenates cursor position and velocity.

In some of the following analyses, we required more precise time resolution than could be achieved by analyzing the 5-timestep smoothed velocity commands that drove the BMI cursor (Equation 6). For fine-timescale analyses, we defined single-timestep (i.e., unsmoothed) velocity commands as:

where is the vector of recorded spike counts during the single timestep , and and are the decoding parameters that were applied online, as in Equation 6. Note that in Equation 6 is the average of single-timestep velocity commands, .

Calibration of the BMI mapping

Request a detailed protocolCalibration of parameters and of the intuitive BMI mapping was done in closed-loop and followed the population vector algorithm (Georgopoulos et al., 1983). Details on this closed-loop calibration have been published previously in Chase et al. (2012). For monkey A, an initial sequence of 8 evenly-spaced radial targets was presented to the subject while the cursor remained stationary at the workspace center. Then, an initial set of BMI parameters was determined by regressing the average spike rates for each trial in this sequence against the corresponding target directions. A second sequence of 8 trials followed, with cursor movements determined by the initial parameter set, but with assistance provided by attenuating velocities perpendicular to target directions. Following this second sequence of trials, new decoding parameters were determined by regressing spike rates from all previous trials against the corresponding target directions. This process was repeated for typically 5 sequences (40 trials), with less assistance in each subsequent sequence until no assistance was provided. The schedule of assistance was determined on an ad-hoc basis. The intuitive BMI mapping calibrated from these trials was then used for the subsequent block of analyzed trials (see 'Behavioral task').

For monkey C, the first task of each day was randomly selected between the 2D and 3D tasks. If the first task was 2D, calibration followed the same procedure as with monkey A. If the first task was 3D, each calibration sequence consisted of 10 targets equidistant from the workspace center. Eight of these targets were on the corners of a workspace-centered cube. The remaining 2 targets were nearly straight out and straight in along the z-direction, but slightly offset so that the cursor was not visually obscured at the central start position. Because these target directions were specified in 3D, calibration regressions resulted in parameters and that could map neural activity to 3D velocity. When the task switched to 2D, the parameters and were set to the first two rows of and , respectively, corresponding to mapped velocities in the frontoparallel plane only. These 3D calibrations typically spanned five 10-trial sequences (50 trials).

Characterizing inherent visuomotor latencies

Request a detailed protocolBMI subjects experience an inherent visual feedback delay. To assess the visuomotor latency experienced by a subject in our BMI system, we measured the elapsed time between target onset and the appearance of target-related activity in the recorded neural population (Figure 2A). To determine the first timestep at which neural activity contained target information, we found the first significant decrease in angular error relative to baseline error. For each trial, baseline error was defined to be the average of absolute angular errors prior to target onset. Here, the angular error at timestep was defined to be the angle by which the cursor would have missed the target had it continued from its current position, , in the direction of the single-timestep velocity command, , from Equation 8. Single-timestep commands () were analyzed here (as opposed to smoothed cursor velocities, ) for improved temporal resolution. Because absolute angular errors range from , one might reasonably expect baseline error to be roughly . Baseline errors shown are less than because angular errors were computed relative to the cursor-target overlap zone (i.e., taking into account cursor and target radii; see Figure 2—figure supplement 1). When errors were instead computed relative to the target center, baseline errors were roughly , and identified latencies were unaffected (data not shown). Had we introduced an arbitrary additional delay to the display updates (Willett et al., 2013), we would expect a commensurate increase in the identified feedback delay.

Assessing feedback delay compensation

Request a detailed protocolBecause of the visual feedback delay (Figure 2A), at timestep the subject cannot yet directly access the timestep cursor position. To determine whether subjects compensated for the visual feedback delay, we asked whether neural activity recorded at timestep was more appropriate for the timestep cursor position or for a previous cursor position. Across a range of lags, , we computed the angular errors of single-timestep velocity commands, (as in Equation 8), as if they had originated at lagged positions (Figure 2B).

Here, angular errors were defined to be the absolute angle by which the cursor would have missed the target had it originated at position and continued in the direction of the single-timestep velocity command , taking into account the radii of the cursor and the target (i.e., in Figure 2—figure supplement 1). This error metric was chosen because it reflects the task goal, that to succeed in a trial, the subject had to to acquire visible overlap between the cursor and the target (Figure 2—figure supplement 1).

By taking into account cursor and target radii, this error metric is influenced by cursor-to-target distance. Specifically, velocity commands originating from positions close to the target will have smaller errors under this definition than the same velocity commands originating far from the target (Figure 2—figure supplement 1). Without accounting for this distance-to-target bias, absolute angular errors might appear smaller for lags that are less positive because these lagged cursor positions will tend to be closer to the targets than cursor positions with more positive lags (e.g., tends to be closer to the target when ms than when ms). To ensure that this distance-to-target bias did not influence our conclusions about feedback delay compensation, errors were computed for the same exact subset of cursor positions across lags. This selection process preserves cursor-to-target distances across lags and thus ensures that the same exact error bias is applied at each lag. To this end, we included in this analysis only cursor positions for which all required lags of neural activity were recorded within the corresponding trial. Further, we only considered cursor positions that were presented at least 100 ms following target onset to ensure that recorded neural activity could plausibly reflect target position given a feedback delay of 100 ms. To determine the error value for a particular lag along the curves in Figure 2C, we first averaged all absolute angular errors for that lag within each trial, and then averaged across trials. A preliminary version of this analysis using different experiments has appeared in conference form (Golub et al., 2012).

Framework for internal model estimation (IME)

Request a detailed protocolThe IME framework is a statistical tool we developed to extract from neural population activity i) a subject’s internal model of the BMI mapping, and ii) the subject’s timestep-by-timestep internal predictions about the cursor state. The central concept underlying the IME framework is that at each timestep, the subject internally predicts the current cursor position using outdated visual feedback and a recollection of previously-issued neural commands (representative of efference copy or corollary discharge [Crapse and Sommer, 2008]), and issues the next neural command with the intention of driving the cursor straight toward the target from the up-to-date prediction of the current cursor position (Figure 3B).

Formally, the IME framework is a probabilistic model defined by Equations 9–14 . The subject’s internal model, as introduced in Figure 3A, is is represented as follows:

where and are the subject’s internal predictions of the timestep cursor position and velocity when the subject is sitting at timestep , is the timestep of the BMI system (33 ms), is a vector of the spike counts recorded simultaneously across the neuronal units at timestep , , and are parameters capturing the subject’s internal model, and is a Gaussian random variable (with isotropic noise variance, ) representing internal predictions not captured by the internal model. More specifically, represents the subject’s internal conception of the physical properties of the cursor, and represents the subject’s internal conception of how neural activity drives movement of the cursor. Note that the subject’s internal model in Equations 9 and 10 can be written in the form of Equation 2:

where the subject’s internal state prediction, , includes the internal prediction of cursor position, , and velocity, . For simplicity in Equation 2, we omitted the noise term, the superscript notation, and the distinction between spike count vectors recorded at a single timestep, , and average spike count vectors across 5 timesteps, (more details on smoothing are given below).

Visual feedback grounds the subject’s internal predictions with reality. At timestep , the subject’s internal prediction of the cursor position and velocity at the feedback delay (, as discussed in Parameter fitting for the IME framework) match the most recently available cursor position and velocity from visual feedback:

The internal model in Equations 9 and 10 is then applied recursively (i.e., across ) to arrive at up-to-date predictions, and , about the current cursor state. The resulting set of internal predictions corresponds to the whiskers shown in Figure 3, Figure 4, and Figure 4—figure supplement 1.

Finally, we incorporate the notion of straight-to-target aiming intention with:

where is the target position, is a non-negative distance scale parameter, and is a Gaussian random variable (with isotropic noise variance, ) representing internal velocity predictions that do not point straight to the target. Since the target was held constant within each BMI trial, took on the same value for all timesteps corresponding to a particular trial. Intuitively, Equation 14 says that when the subject internally believed the cursor to be at position , the intended velocity command, , ought to point in the direction of the target, . The distance scale parameters, , allow the data to determine the intended speed (i.e., velocity magnitude) at each timestep. This parameterization allows us to avoid imposing a-priori assumptions about the subject’s intended speed. During model fitting, larger values of tend to be learned for timesteps when the distance to target is large (from ), and smaller values tend to be learned when this distance is small. In this manner, there are no assumptions imposed upon intended speed during model fitting. Rather, the learned internal model determines intended speed from the data. Additionally, the linear form of Equation 14 was chosen so all latent variables, , and observed variables, , are jointly Gaussian.

Throughout control, new visual feedback continues to arrive, and new neural commands are issued at each timestep. IME captures this progression by including a new set of internal predictions (i.e., a new whisker) at each timestep. For example, at timestep , the subject receives new feedback about the cursor state, and , and accordingly forms a new set of internal predictions for . The full IME probabilistic graphical model is drawn in Figure 3—figure supplement 1 to visually depict this instantiation of Equations 9–14 at each timestep during control.

Through Equation 14 we assume that the subject attempts to move the cursor straight to the target from an internal estimate of the current position. We believe that straight-to-target aiming is a reasonable first-order assumption because the BMI cursor, on average, moves straight to the target during proficient control (see Figure 1B). It may be possible to incorporate other movement objectives, such as minimizing endpoint error (Harris and Wolpert, 1998) or movement jerk (Flash and Hogan, 1985), in the IME framework, which may yield even greater explanatory power. However, at present, there is not clear evidence that these other movement objectives underlie BMI cursor control, so we apply only the basic straight-to-target movement objective in this work.

Both the BMI mapping (Equations 5–7) and the internal model representation (Equations 9–11) implement smoothness across BMI cursor velocities and internal velocity predictions, respectively. The details of this smoothing are subtly different between the BMI and the IME framework. To mitigate the effects of neural spiking noise, the BMI mapping smooths cursor velocities by incorporating neural activity at each timestep through the 5-timestep boxcar filter, as described following Equation 6. Temporal smoothing in internal velocity predictions is achieved through the subject’s internal prior belief about how the internal velocity prediction at one timestep influences the prediction at the next timestep, as encoded by .

In a preliminary IME formulation we presented recently in conference form (Golub et al., 2013), the subject’s internal state prediction was modeled using position only, rather than using both position and velocity, as we have here. The inclusion of velocity has several important advantages. First, it allows the model to capture the subject using feedback about cursor velocity to internally predict cursor position and velocities. Second, including velocity in the state enables IME to automatically determine the degree of smoothness in internal velocity predictions, based on the data, by fitting an appropriate .

Parameter fitting for the IME framework

Request a detailed protocolWe fit IME models using expectation maximization (EM) (Dempster et al., 1977), a maximum likelihood estimation technique for latent variable models. Training data for each trial consisted of recorded spike counts and actual cursor positions for timesteps beginning at movement onset and ending at target acquisition, as well as the target position for that trial. Movement onset for a given trial was defined as the first timestep at which the cursor speed, projected in the center-to-target direction, exceeded 15% of its maximum from that trial. During the E-step, posterior distributions, P(), are computed over the internal states given a set of model parameters. Intuitively, these posteriors are distributions over whiskers that compromise between satisfying the internal model (Equations 9 and 10) and straight-to-target aiming (Equation 14). During the M-step, these posterior distributions are used to update the model parameters, , and . We typically ran EM for 5000 iterations, but allowed fewer iterations if model parameters converged sooner. Although the feedback delay parameter, , can be determined using standard model selection techniques (Golub et al., 2013), we fixed this parameter ( ms, monkey A; ms, monkey C) for simplicity and to remain consistent with our experimental characterization of the visuomotor latency from Figure 2A.

Computing cross-validated internal model predictions

Request a detailed protocolThroughout our results, if an internal state prediction (whisker) points toward the target, it is not trivially due to our inclusion of straight-to-target aiming into IME (Equation 14). Rather, whiskers that point toward targets are evidence of real structure in the data. We ensure that whiskers do not trivially point toward targets by using cross-validation techniques whenever evaluating or visualizing extracted internal models and their corresponding internal state predictions (whiskers). For a given experimental session, trials were randomly assigned to folds such that each fold consisted of one trial to each unique target. We employed -fold cross-validation, where was the number of folds in a given experimental session. Internal models were fit to the data in folds (training data), and the data from the held-out fold (test data) were used when evaluating the extracted internal model.

Although target positions were used to incorporate the notion of straight-to-target aiming during model fitting (through Equation 14), neither targets nor Equation 14 were used when evaluating extracted internal models on held-out data (relevant for Figures 3–6, Figure 3—figure supplement 3, Figure 3—figure supplement 4, Figure 3—figure supplement 7, Figure 4—figure supplement 1, and Figure 4—figure supplement 2). Rather, whiskers were defined as the expected value of the internal state predictions given only available visual feedback and previously issued neural activity according to the probabilistic model, using only Equations 9–13 and not Equation 14):

We found that cross-validated whiskers consistently pointed straight to targets. This result did not trivially need to be the case, as those targets were not used to construct the whiskers. Rather, given internal models extracted from the training data, the statistical structure underlying the recorded neural activity in the test data was consistent with aiming straight to targets from internal predictions of cursor position.

Visualizing an extracted internal model