CEM500K, a large-scale heterogeneous unlabeled cellular electron microscopy image dataset for deep learning

Figures

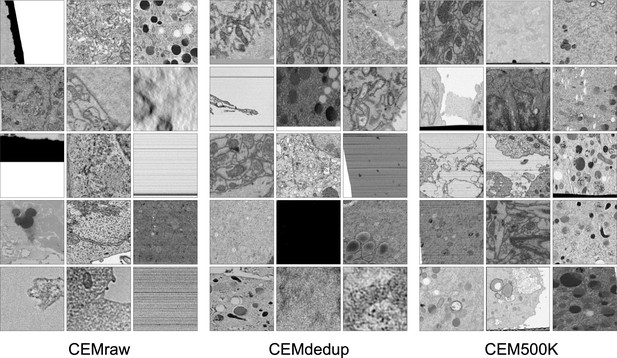

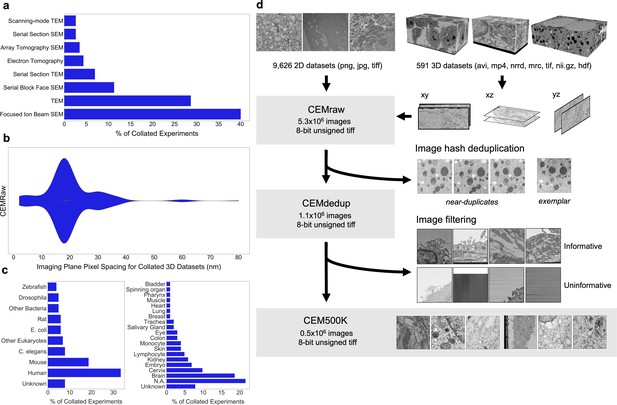

Preparation of a deep learning-appropriate 2D EM image dataset rich with relevant and unique features.

(a) Percent distribution of collated experiments grouped by imaging technique: TEM, transmission electron microscopy; SEM, scanning electron microscopy. (b) Distribution of imaging-plane pixel spacings (nm) for volumes in the 3D corpus. (c) Percent distribution of collated experiments by organism and tissue of origin. (d) Schematic of our workflow: 2D electron microscopy (EM) image stacks (top left) or 3D EM image volumes sliced into 2D cross-sections (top right) were cropped into 224 × 224 pixel patches, which together form CEMraw. Within each group of nearly identical patches, all but a single exemplar were eliminated to generate CEMdedup. Uninformative patches were then culled to form CEM500K.
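The slicing, cropping, and deduplication steps in panel (d) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: it tiles each 2D slice into non-overlapping 224 × 224 patches (discarding partial tiles at the edges), slices 3D volumes along z only, and substitutes a simple average hash as a stand-in near-duplicate criterion. The helper names `slice_volume`, `crop_patches`, `average_hash`, and `deduplicate` are hypothetical.

```python
import numpy as np

PATCH = 224  # patch edge length in pixels, as stated in the caption


def slice_volume(volume: np.ndarray) -> list:
    """Split a 3D volume ordered (z, y, x) into 2D cross-sections along z.

    Assumption: only the z axis is sliced in this sketch.
    """
    return [volume[z] for z in range(volume.shape[0])]


def crop_patches(image: np.ndarray, size: int = PATCH) -> list:
    """Tile a 2D image into non-overlapping size x size patches.

    Assumption: partial tiles at the right and bottom edges are discarded.
    """
    h, w = image.shape
    return [
        image[y:y + size, x:x + size]
        for y in range(0, h - size + 1, size)
        for x in range(0, w - size + 1, size)
    ]


def average_hash(patch: np.ndarray, hash_size: int = 8) -> bytes:
    """Hypothetical near-duplicate fingerprint (an average hash): block-average
    the patch down to hash_size x hash_size and threshold at the mean."""
    h, w = patch.shape
    bh, bw = h // hash_size, w // hash_size
    small = (patch[:bh * hash_size, :bw * hash_size]
             .reshape(hash_size, bh, hash_size, bw)
             .mean(axis=(1, 3)))
    return np.packbits(small > small.mean()).tobytes()


def deduplicate(patches: list) -> list:
    """Keep one exemplar (the first seen) per group of patches sharing a hash."""
    seen = set()
    kept = []
    for patch in patches:
        key = average_hash(patch)
        if key not in seen:
            seen.add(key)
            kept.append(patch)
    return kept


# Toy usage: a random stand-in for a small 3D EM volume.
rng = np.random.default_rng(0)
volume = rng.random((2, 500, 700))
patches = [p for s in slice_volume(volume) for p in crop_patches(s)]
print(len(patches))  # 12: 2 slices x 2 rows x 3 columns of full tiles
```

A perceptual hash is only one way to define "nearly identical"; any fingerprint that maps near-duplicates to the same key could be dropped into `deduplicate` unchanged.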


Figure 1—source data 1

Details of imaging technique, organism, tissue type and imaging plane pixel spacing in collated imaging experiments.

- https://cdn.elifesciences.org/articles/65894/elife-65894-fig1-data1-v1.xlsx

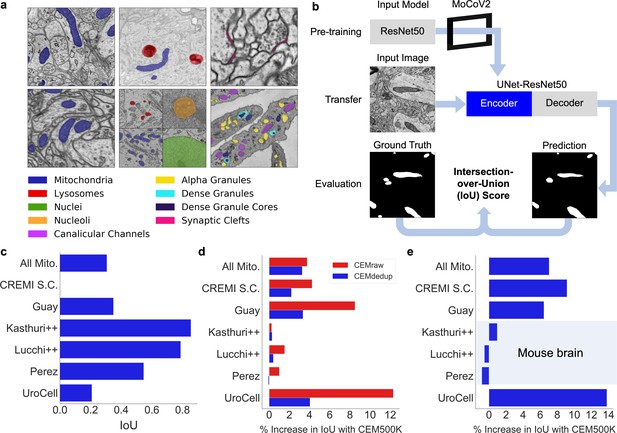

CEM500K pre-training improves the transferability of learned features.

(a) Example images and colored label maps from each of the six publicly available benchmark datasets, clockwise from top left: Kasthuri++, UroCell, CREMI Synaptic Clefts, Guay, Perez, and Lucchi++. The All Mitochondria benchmark is a superset of these benchmarks and is not depicted. (b) Schematic of our pre-training, transfer, and evaluation workflow. Gray blocks denote trainable models with randomly initialized parameters; the blue block denotes a model with frozen pre-trained parameters. (c) Baseline Intersection-over-Union (IoU) scores for each benchmark obtained without MoCoV2 pre-training: randomly initialized parameters in the ResNet50 layers were transferred directly to UNet-ResNet50 and frozen during training. (d) Measured percent difference in IoU scores between models pre-trained on CEMraw vs. CEM500K (red) and on CEMdedup vs. CEM500K (blue). (e) Measured percent difference in IoU scores between a model pre-trained on CEM500K and a model pre-trained on the mouse brain (Bloss) pre-training dataset. Benchmark datasets composed exclusively of electron microscopy (EM) images of mouse brain tissue are highlighted.
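The IoU metric used throughout, and a percent difference between two IoU scores, can be sketched as below. The IoU definition (overlap divided by union of two binary masks) is standard; treating two empty masks as a perfect match and computing percent difference relative to the second model's score are assumptions, since the caption does not state which model serves as the reference. The function names are hypothetical.

```python
import numpy as np


def iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection-over-Union between two binary masks of the same shape."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # assumption: two empty masks are treated as a perfect match
    return float(np.logical_and(pred, target).sum() / union)


def percent_difference(score: float, reference: float) -> float:
    """Percent change of `score` relative to `reference` (assumed definition)."""
    return 100.0 * (score - reference) / reference


pred = np.array([[1, 1, 0], [0, 1, 0]])
target = np.array([[1, 0, 0], [0, 1, 1]])
print(iou(pred, target))  # 0.5: 2 overlapping pixels out of 4 in the union

# UroCell IoUs from Table 1: CEM500K relative to CEMraw.
print(round(percent_difference(0.729, 0.652), 1))  # 11.8
```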


Figure 2—source data 1

IoU scores achieved with different datasets used for pre-training.

- https://cdn.elifesciences.org/articles/65894/elife-65894-fig2-data1-v1.xlsx

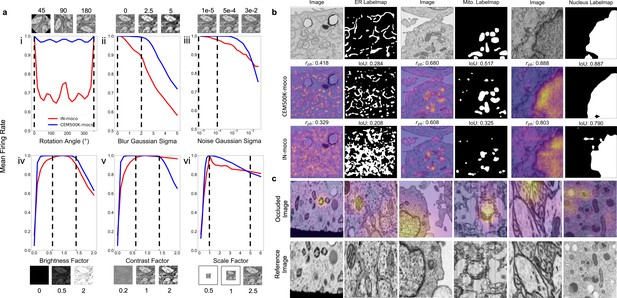

Features learned from CEM500K pre-training are more robust to image transformations and encode for semantically meaningful objects with greater selectivity.

(a) Mean firing rates calculated between feature vectors of images distorted by (i) rotation, (ii) Gaussian blur, (iii) Gaussian noise, (iv) brightness, (v) contrast, and (vi) scale. Dashed black lines show the range of augmentations used for CEM500K + MoCoV2 during pre-training. For transforms in the top row, the undistorted images occur at x = 0; for the bottom row, at x = 1. (b) Evaluation of features corresponding to ER (left), mitochondria (middle), and nucleus (right). For each organelle, the panels show: the input image and ground truth label map (top row); a heatmap of the CEM500K-moco activations of the 32 filters most correlated with the organelle, and the CEM500K-moco binary mask created by thresholding their mean response at 0.3 (middle row); and the corresponding IN-moco activations and IN-moco binary mask (bottom row). Also included are point-biserial correlation coefficients (r_pb) and Intersection-over-Union scores (IoUs) for each response and segmentation. All feature responses are rescaled to the range [0, 1]. (c) Heatmap of occlusion analysis showing the region in each occluded image that is most important for forming a match with the corresponding reference image. All magnitudes are rescaled to the range [0, 1].
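The filter-selection and masking procedure in panel (b) can be sketched as follows. The point-biserial coefficient is the Pearson correlation between a continuous variable (a filter's response) and a binary one (the ground-truth label map), so `np.corrcoef` computes it directly. The remaining details are assumptions for illustration: activations are taken to be already upsampled to the label-map resolution, the mean response is min-max rescaled before thresholding at 0.3, and the function names are hypothetical.

```python
import numpy as np


def rescale01(x: np.ndarray) -> np.ndarray:
    """Min-max rescale an array to [0, 1] (a constant array maps to 0)."""
    lo, hi = x.min(), x.max()
    if hi == lo:
        return np.zeros_like(x, dtype=float)
    return (x - lo) / (hi - lo)


def point_biserial(response: np.ndarray, mask: np.ndarray) -> float:
    """Point-biserial correlation: Pearson r between a continuous response
    and a binary label map of the same shape."""
    return float(np.corrcoef(response.ravel(), mask.ravel().astype(float))[0, 1])


def organelle_mask(features: np.ndarray, mask: np.ndarray,
                   k: int = 32, threshold: float = 0.3):
    """features: (channels, H, W) activations at label-map resolution (assumed).

    Returns the rescaled mean response of the k filters most correlated
    with the organelle, and the binary mask obtained by thresholding it.
    """
    r = np.array([point_biserial(f, mask) for f in features])
    r = np.nan_to_num(r, nan=-1.0)  # constant filters have undefined r
    top = np.argsort(r)[::-1][:k]   # indices of the k highest correlations
    response = rescale01(features[top].mean(axis=0))
    return response, response > threshold


rng = np.random.default_rng(0)
mask = np.zeros((32, 32))
mask[8:24, 8:24] = 1                  # toy "organelle" label map
features = rng.random((40, 32, 32))  # 40 toy filters of pure noise...
features[:5] += 2 * mask             # ...except five that respond to the organelle
response, binary = organelle_mask(features, mask, k=5)
```

Comparing `binary` with `mask` using IoU then gives the per-organelle segmentation score reported alongside r_pb.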

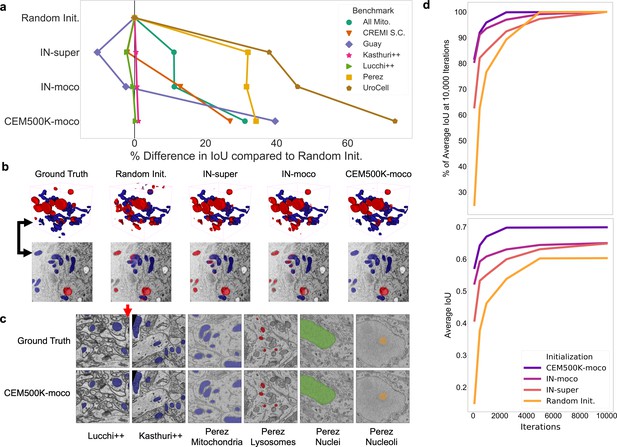

Models pre-trained on CEM500K yield superior segmentation quality and training speed on all segmentation benchmarks.

(a) Percent difference in segmentation performance between pre-trained models and a randomly initialized model. (b) Example segmentations on the UroCell benchmark in 3D (top) and 2D (bottom). The black arrows show the location of the same mitochondrion in 2D and in 3D. (c) Example segmentations from all 2D-only benchmark datasets. The red arrow marks a false negative in the ground truth segmentation that was detected by the CEM500K-moco pre-trained model. (d) Top: average IoU scores as a percentage of the average IoU after 10,000 training iterations; bottom: absolute average IoU scores over a range of training iteration lengths.


Figure 4—source data 1

IoU scores for different pre-training protocols.

- https://cdn.elifesciences.org/articles/65894/elife-65894-fig4-data1-v1.xlsx


Figure 4—source data 2

IoU scores for different numbers of training iterations, by pre-training protocol.

- https://cdn.elifesciences.org/articles/65894/elife-65894-fig4-data2-v1.xlsx

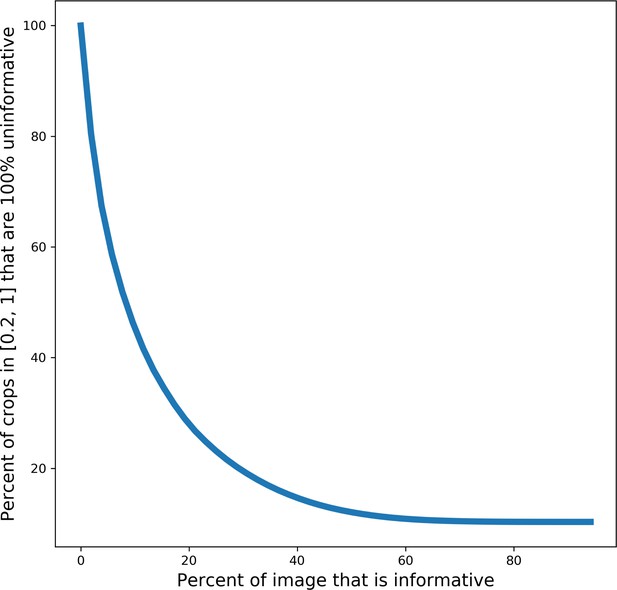

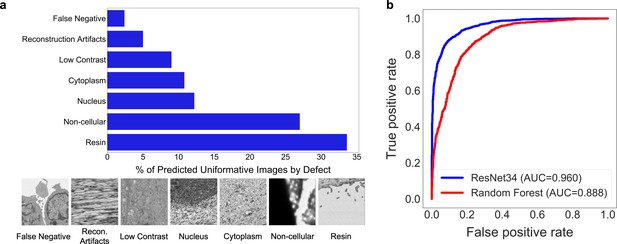

Deduplication and image filtering.

(a) Breakdown of fractions (top) and representative examples (bottom) of patches labeled ‘uninformative’ by a trained deep learning (DL) model, grouped by defect type (as determined by a human annotator). (b) Receiver operating characteristic (ROC) curves for the DL classifier and a Random Forest classifier, evaluated on a holdout test set of 2000 manually labeled patches (1000 informative and 1000 uninformative).
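An ROC curve like the one in panel (b) traces the true-positive rate against the false-positive rate as the decision threshold on a classifier's scores is lowered. The sketch below computes it with NumPy only; it treats "uninformative" as the positive class (an assumption), does not merge tied scores, and uses hypothetical function names.

```python
import numpy as np


def roc_curve(scores: np.ndarray, labels: np.ndarray):
    """Return (fpr, tpr) arrays, one point per score threshold.

    labels: 1 = positive class (here assumed to be 'uninformative'), 0 = negative.
    Ties in scores are not merged in this sketch.
    """
    order = np.argsort(-scores, kind="stable")  # highest score first
    sorted_labels = labels[order].astype(float)
    tp = np.cumsum(sorted_labels)               # positives above each threshold
    fp = np.cumsum(1.0 - sorted_labels)         # negatives above each threshold
    tpr = np.concatenate([[0.0], tp / tp[-1]])
    fpr = np.concatenate([[0.0], fp / fp[-1]])
    return fpr, tpr


def auc(fpr: np.ndarray, tpr: np.ndarray) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


scores = np.array([0.9, 0.8, 0.3, 0.1])
perfect = np.array([1, 1, 0, 0])  # every positive outranks every negative
mixed = np.array([1, 0, 1, 0])    # 3 of 4 positive/negative pairs ranked correctly
print(auc(*roc_curve(scores, perfect)), auc(*roc_curve(scores, mixed)))  # 1.0 0.75
```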

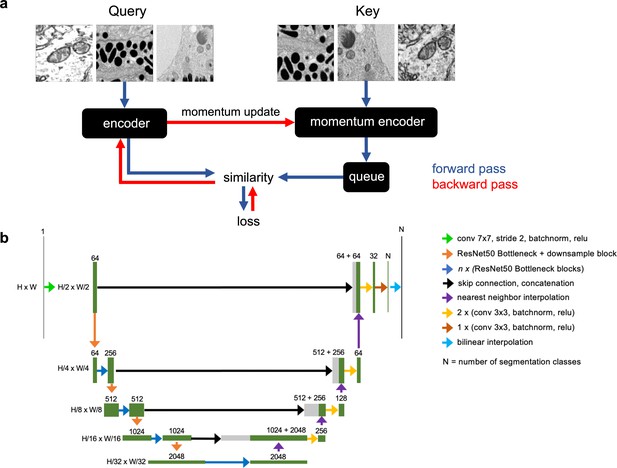

Schematics of the MoCoV2 algorithm and UNet-ResNet50 model architecture.

(a) A single step of the MoCoV2 algorithm. A batch of images is copied; the images in each copy are independently and randomly transformed and then shuffled into a random order (the first copy is called the query and the second the key). The query and key are encoded by two different models, the encoder and the momentum encoder, respectively. The encoded key is appended to the queue. Dot products of every image in the query with every image in the queue measure similarity. The similarity between each image in the query and its match in the key is the signal that drives parameter updates. See He et al., 2019 for details. (b) Detailed schematic of the UNet-ResNet50 architecture.
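The core quantities of one step can be sketched in NumPy as below, following the formulation in He et al., 2019: each query is scored against its own key (the positive) and against keys stored in the queue from earlier batches (the negatives), a cross-entropy loss pushes the positive to the top, the momentum encoder tracks the encoder through an exponential moving average, and the newest keys replace the oldest ones in the queue. The temperature of 0.2 and momentum of 0.999 are values from the MoCo/MoCoV2 reference setup, assumed here rather than taken from this paper; encoders, augmentations, and batch shuffling are not modeled, and the function names are hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def moco_logits(q, k, queue, temperature=0.2):
    """q: (N, D) queries; k: (N, D) matching keys; queue: (K, D) stored keys.

    Column 0 of the (N, 1 + K) result holds each query's positive pair.
    """
    q, k = l2_normalize(q), l2_normalize(k)
    pos = np.sum(q * k, axis=1, keepdims=True)  # (N, 1) query-key similarity
    neg = q @ queue.T                            # (N, K) query-queue similarity
    return np.concatenate([pos, neg], axis=1) / temperature


def info_nce_loss(logits: np.ndarray) -> float:
    """Cross-entropy with the positive (column 0) as the target class."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_prob[:, 0].mean())


def momentum_update(key_params, query_params, m=0.999):
    """theta_k <- m * theta_k + (1 - m) * theta_q for each parameter array."""
    return [m * pk + (1.0 - m) * pq for pk, pq in zip(key_params, query_params)]


def enqueue(queue, k):
    """Append the newest normalized keys and drop the oldest to keep K fixed."""
    return np.concatenate([queue, l2_normalize(k)], axis=0)[len(k):]


rng = np.random.default_rng(0)
q = rng.normal(size=(4, 8))
k = q.copy()  # toy case: each key exactly matches its query
queue = l2_normalize(rng.normal(size=(16, 8)))
logits = moco_logits(q, k, queue)
loss = info_nce_loss(logits)
queue = enqueue(queue, k)
```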

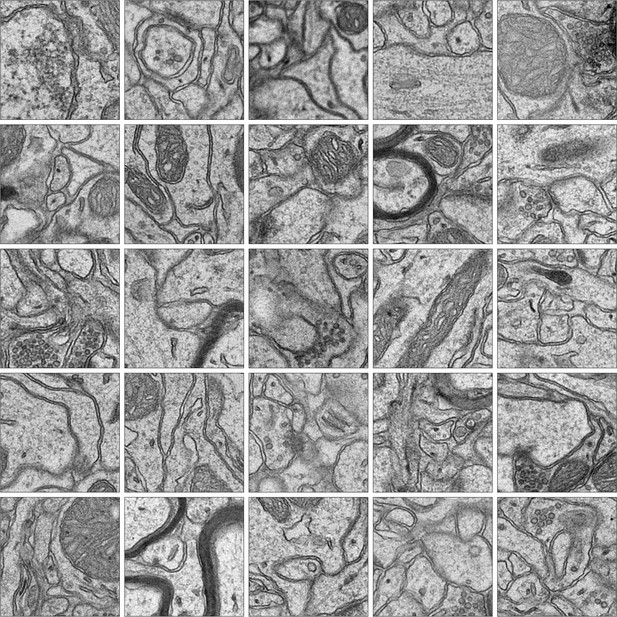

Randomly selected images from the Bloss et al., 2018 pre-training dataset.

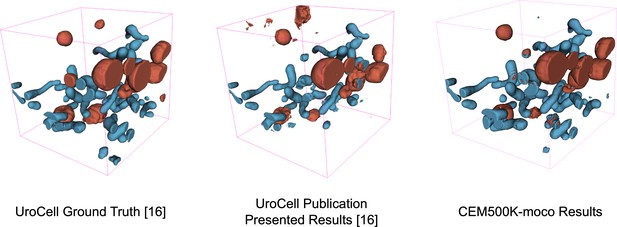

Visual comparison of results on the UroCell benchmark.

The ground truth and Authors’ Best Results are taken from the original UroCell publication (Žerovnik Mekuč et al., 2020). The results from the CEM500K-moco pre-trained model have been colorized to approximately match the originals; 2D label maps were not included in the UroCell paper.

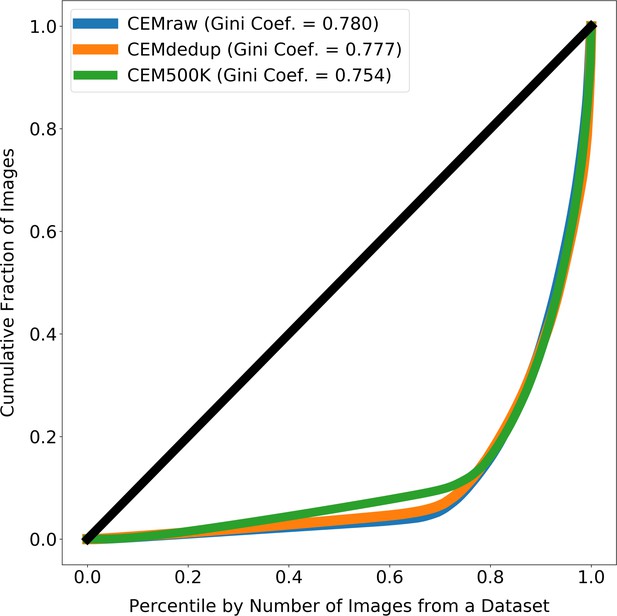

Images from source electron microscopy (EM) volumes are unequally represented in the subsets of CEM.

The 45° line shows the expected curve for perfect equality between all source volumes (i.e., each volume would contribute the same number of images to CEMraw, CEMdedup, or CEM500K). The Gini coefficient is the area between a Lorenz curve and the line of perfect equality, normalized by the total area under that line, with 0 meaning perfect equality and 1 meaning perfect inequality. For each subset of cellular electron microscopy (CEM), approximately 20% of the source 3D volumes account for 80% of all the 2D patches.
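The Lorenz curve, Gini coefficient, and "top 20%" share can be computed from the number of patches each source volume contributes. The sketch below sorts volumes from smallest to largest contribution, integrates the Lorenz curve with the trapezoidal rule, and divides the gap to the equality line by 0.5 (the area under that line). With n volumes, the maximum attainable value is 1 − 1/n rather than exactly 1. The function names and toy counts are hypothetical.

```python
import numpy as np


def lorenz_curve(counts):
    """Cumulative share of volumes (x) vs. cumulative share of patches (y),
    with volumes sorted from smallest to largest contribution."""
    x = np.sort(np.asarray(counts, dtype=float))
    cum_share = np.concatenate([[0.0], np.cumsum(x)]) / x.sum()
    volume_share = np.linspace(0.0, 1.0, len(x) + 1)
    return volume_share, cum_share


def gini(counts) -> float:
    """(area under equality line - area under Lorenz curve) / 0.5."""
    xs, ys = lorenz_curve(counts)
    area_under_lorenz = np.sum(np.diff(xs) * (ys[1:] + ys[:-1]) / 2.0)
    return float((0.5 - area_under_lorenz) / 0.5)


def top_share(counts, fraction=0.2) -> float:
    """Share of all patches contributed by the top `fraction` of volumes."""
    x = np.sort(np.asarray(counts, dtype=float))[::-1]
    n_top = max(1, int(round(fraction * len(x))))
    return float(x[:n_top].sum() / x.sum())


print(gini([5, 5, 5, 5]))          # 0.0: every volume contributes equally
print(gini([0, 0, 0, 10]))         # 0.75 = 1 - 1/4: one volume contributes all
print(top_share([1] * 10))         # 0.2: top 20% of volumes give 20% of patches
```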

Tables

Comparison of segmentation Intersection-over-Union (IoU) results on the benchmark datasets for randomly initialized models and for models pre-trained with MoCoV2 on the Bloss dataset, CEMraw, CEMdedup, or CEM500K.

An asterisk (*) marks benchmarks that exclusively contain electron microscopy (EM) images from mouse brain tissue. The best result for each benchmark is highlighted in bold and underlined.

| Benchmark | Random Init. (No Pre-training) | Bloss et al., 2018 | CEMraw | CEMdedup | CEM500K |
|---|---|---|---|---|---|
| All Mitochondria | 0.306 | 0.694 | 0.719 | 0.722 | **0.745** |
| CREMI Synaptic Clefts | 0.000 | 0.242 | 0.254 | 0.259 | **0.265** |
| Guay | 0.349 | 0.380 | 0.372 | 0.391 | **0.404** |
| *Kasthuri++ | 0.855 | 0.907 | 0.913 | 0.913 | **0.915** |
| *Lucchi++ | 0.788 | **0.899** | 0.880 | 0.890 | 0.894 |
| *Perez | 0.547 | **0.874** | 0.854 | 0.866 | 0.869 |
| UroCell | 0.208 | 0.638 | 0.652 | 0.699 | **0.729** |
| *Average Mouse Brain | 0.730 | **0.893** | 0.883 | 0.890 | **0.893** |
| Average Other | 0.216 | 0.489 | 0.499 | 0.518 | **0.536** |
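The two average rows appear to be unweighted means over the starred (mouse brain) and unstarred benchmarks, respectively; this is inferred from the numbers rather than stated in the caption. The snippet below recomputes them from the rounded table entries. Because the inputs are already rounded to three decimals, a recomputed mean can differ from the printed average by about 0.001 (for example, the CEMraw mouse brain average recomputes to roughly 0.882 versus 0.883 in the table).

```python
import numpy as np

# Column order: Random Init., Bloss et al., 2018, CEMraw, CEMdedup, CEM500K.
columns = ["Random Init.", "Bloss et al., 2018", "CEMraw", "CEMdedup", "CEM500K"]
mouse_brain = {  # benchmarks marked with an asterisk
    "Kasthuri++": [0.855, 0.907, 0.913, 0.913, 0.915],
    "Lucchi++":   [0.788, 0.899, 0.880, 0.890, 0.894],
    "Perez":      [0.547, 0.874, 0.854, 0.866, 0.869],
}
other = {
    "All Mitochondria":      [0.306, 0.694, 0.719, 0.722, 0.745],
    "CREMI Synaptic Clefts": [0.000, 0.242, 0.254, 0.259, 0.265],
    "Guay":                  [0.349, 0.380, 0.372, 0.391, 0.404],
    "UroCell":               [0.208, 0.638, 0.652, 0.699, 0.729],
}

# Unweighted mean over benchmarks, computed separately for each column.
avg_mouse = np.mean(list(mouse_brain.values()), axis=0)
avg_other = np.mean(list(other.values()), axis=0)
for name, m, o in zip(columns, avg_mouse, avg_other):
    print(f"{name:>20}: mouse brain {m:.4f}, other {o:.4f}")
```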

Comparison of segmentation IoU scores for different weight initialization methods versus the best results reported for each benchmark in the publication that introduced the segmentation task.

All IoU scores are the average of five independent runs. References listed after the benchmark names indicate the sources for Reported IoU scores.

| Benchmark | Training Iterations | Random Init. | IN-super | IN-moco | CEM500K-moco | Reported |
|---|---|---|---|---|---|---|
| All Mitochondria | 10000 | 0.587 | 0.653 | 0.653 | 0.770 | – |
| CREMI Synaptic Clefts | 5000 | 0.000 | 0.196 | 0.226 | 0.254 | – |
| Guay (Guay et al., 2020) | 1000 | 0.308 | 0.275 | 0.300 | 0.429 | 0.417 |
| Kasthuri++ (Casser et al., 2018) | 10000 | 0.905 | 0.908 | 0.911 | 0.915 | 0.845 |
| Lucchi++ (Casser et al., 2018) | 10000 | 0.894 | 0.865 | 0.892 | 0.895 | 0.888 |
| Perez (Perez et al., 2014) | 2500 | 0.672 | 0.886 | 0.883 | 0.901 | 0.821 |
| Perez: Lysosomes | – | 0.842 | 0.838 | 0.816 | 0.849 | 0.726 |
| Perez: Mitochondria | – | 0.130 | 0.860 | 0.866 | 0.884 | 0.780 |
| Perez: Nuclei | – | 0.984 | 0.987 | 0.986 | 0.988 | 0.942 |
| Perez: Nucleoli | – | 0.731 | 0.859 | 0.865 | 0.885 | 0.835 |
| UroCell | 2500 | 0.424 | 0.584 | 0.618 | 0.734 | – |
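The Perez row appears to summarize the four organelle rows listed after it (Lysosomes, Mitochondria, Nuclei, Nucleoli) as their unweighted mean; this is an inference from the arithmetic, not something the caption states. The snippet below checks that reading for every column, allowing for rounding of the published values.

```python
import numpy as np

methods = ["Random Init.", "IN-super", "IN-moco", "CEM500K-moco", "Reported"]
organelle_rows = np.array([
    [0.842, 0.838, 0.816, 0.849, 0.726],  # Lysosomes
    [0.130, 0.860, 0.866, 0.884, 0.780],  # Mitochondria
    [0.984, 0.987, 0.986, 0.988, 0.942],  # Nuclei
    [0.731, 0.859, 0.865, 0.885, 0.835],  # Nucleoli
])
perez_row = np.array([0.672, 0.886, 0.883, 0.901, 0.821])

# Column-wise mean over the four organelle sub-benchmarks.
recomputed = organelle_rows.mean(axis=0)
for name, r, t in zip(methods, recomputed, perez_row):
    print(f"{name:>13}: mean of organelles {r:.4f} vs. Perez row {t:.3f}")
```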

Additional files


Source data 1

Details of image datasets acquired from external sources.

- https://cdn.elifesciences.org/articles/65894/elife-65894-data1-v1.xlsx


Source data 2

Zipped folder containing .xlsx files for Figures 1, 2, and 4 source data.

- https://cdn.elifesciences.org/articles/65894/elife-65894-data2-v1.zip


Supplementary file 1

Details of benchmarks used in this paper.

- https://cdn.elifesciences.org/articles/65894/elife-65894-supp1-v1.docx


Supplementary file 2

IoU scores for pre-training with CEM500K after removing benchmark data from the pre-training dataset.

- https://cdn.elifesciences.org/articles/65894/elife-65894-supp2-v1.docx


Supplementary file 3

IoU scores on Guay benchmark using different hyperparameter choices.

- https://cdn.elifesciences.org/articles/65894/elife-65894-supp3-v1.docx


Transparent reporting form

- https://cdn.elifesciences.org/articles/65894/elife-65894-transrepform-v1.docx