Cutting Edge: Lessons from Fraxinus, a crowd-sourced citizen science game in genomics

Abstract

In 2013, in response to an epidemic of ash dieback disease in England the previous year, we launched a Facebook-based game called Fraxinus to enable non-scientists to contribute to genomics studies of the pathogen that causes the disease and the ash trees that are devastated by it. Over a period of 51 weeks players were able to match computational alignments of genetic sequences in 78% of cases, and to improve them in 15% of cases. We also found that most players were only transiently interested in the game, and that the majority of the work done was performed by a small group of dedicated players. Based on our experiences we have built a linear model for the length of time that contributors are likely to donate to a crowd-sourced citizen science project. This model could serve as a guide for the design and implementation of future crowd-sourced citizen science initiatives.

https://doi.org/10.7554/eLife.07460.001

Introduction

Ash dieback is a disease caused by the fungal pathogen Hymenoscyphus fraxineus, and it has devastated populations of ash trees (Fraxinus excelsior) across Europe in recent years. When ash dieback was discovered in the wild in the east of England for the first time, in 2012, the present authors set up the OpenAshDieBack (OADB) project as a crowdsourcing platform to allow scientists across the world to contribute to the genomic analysis of the pathogen and the host (MacLean et al., 2013). Subsequently, we developed and released Fraxinus, a Facebook-based game, to allow non-specialists to improve genetic variant predictions from DNA sequence data arising from the OADB project (MacLean, 2013).

DNA sequence alignment is a hard problem that seeks to arrange two or more genome sequences in order to identify regions of similarity. When short fragments are being aligned with a longer sequence, the longer sequence is often considered to be a reference sequence that should not be altered. The process of alignment requires the best overall match between the two sequences to be found first: this ‘global alignment’ is then followed by a finer-grained ‘local alignment’ that involves modifying the short sequences by, for example, inserting small gaps or deleting short stretches of the sequence.

Alignment is a computationally intensive process, and many computer programs (e.g., BWA aligner [Li and Durbin, 2009]) have been devised that implement and optimize alignments according to various measures of similarity. A straightforward measure is percent identity (in which the number of identical nucleotides in the alignment is calculated as a proportion of whole alignment length). Once the alignment process is complete, any differences between the two sequences can be considered a genetic variation. These differences can be single nucleotide polymorphisms (SNPs), in which a single nucleotide differs, or insertion–deletion polymorphisms (INDELs), in which longer stretches are different. Software like SAMTools (Li et al., 2009) can identify the variants from alignments.

Citizen-science projects are an excellent opportunity to engage the public in science and to harness the human intelligence of large numbers of non-specialists to make progress on inherently difficult research tasks. The citizen-science approach has been used in astronomy (Lintott et al., 2008), protein folding (Cooper et al., 2010), and genetics (Kawrykow et al., 2012; Curtis, 2014; Ranard et al., 2014). However, computational approaches to the identification of genetic variants can be error-prone, and it has been shown that the pattern-recognition skills of humans can improve DNA sequence alignments (Kawrykow et al., 2012). Therefore, we created Fraxinus to improve the automated alignments produced by computational approaches.

Over the first year, Fraxinus was loaded with variant calls based on cDNA-sequence reads generated from four different samples collected at locations in Norfolk in the UK: Kenninghall Wood (KW1), Ashwellthorpe Wood (AT1 and AT2), and Upton Broad and Marshes (UB1) (Saunders et al., 2014). The game presented the player with a pre-selected small section of genome sequence from the KW1 reference strain and rows of sequence from one of the other test variants (MacLean, 2013), with each DNA sequence represented as a string of colored leaves. The game provided tools that allow the player to shift the sequence relative to the reference and to edit the sequence in such a way as to introduce deletions or gaps. The aim of the game for the player was to produce the best alignment, allowing her/him to claim the puzzle as their own and score points. The game was played in Facebook and took advantage of the player's social network to invite new players. The wider social network was used to encourage the replay of puzzles. We stored player names, scores, and the resulting alignments for later analysis. Here, we describe the Fraxinus game, the results of alignment comparisons, the response the game received, and details of parameters fitted to replicate player dynamics.

Results and discussion

The BWA aligner (Li and Durbin, 2009) and SAMTools (Li et al., 2009) were used to identify SNPs and INDELs in AT1, AT2 and UB1 against the common reference KW1 (see ‘Materials and methods’). Initially a data set of 1000 SNPs and 160 INDELs was loaded into Fraxinus (Table 1). For ease of playing, we limited the maximum number of sequences per puzzle to 20 (Figure 1—figure supplement 1A): in total 10,087 puzzles were created from the 1160 variants. Fraxinus was released on 12 August 2013 and by 4 August 2014 (51 weeks) had received 63,132 visits from 25,614 unique addresses in 135 countries (Table 2, Figure 1—figure supplement 2). Most of these were from the UK (57%), followed by the US, Canada, France, and Germany.

Number of variants and derived puzzles used in Fraxinus version 1

| Fungal sample | SNP variants | SNP puzzles | INDEL variants | INDEL puzzles |
|---|---|---|---|---|
| Ashwellthorpe 1 (AT1) | 250 | 2937 | 53 | 521 |
| Ashwellthorpe 2 (AT2) | 353 | 1121 | 51 | 170 |
| Upton Broad and Marshes 1 (UB1) | 397 | 4964 | 56 | 374 |
| Total | 1000 | 9022 | 160 | 1065 |

SNP, single nucleotide polymorphism; INDEL, insertion–deletion polymorphism.

Details about player visits and contributions made to Fraxinus

| Description | Details |
|---|---|
| Start date | 2013-08-12 |
| End date | 2014-08-04 |
| Game duration in days | 358 |
| Total number of visits | 63,132 |
| Total number of players | 25,614 |
| Mean new visits % per day | 26.7 |
| Mean visit duration in minutes | 25.3 |
| Total time contributed in days | 924 |

In the first 6 months, after which the first batch of puzzles was retired, we received 154,038 alignment answers, with an approximate log-normal distribution of answers for 10,087 puzzles (Figure 1—figure supplement 1B). Each puzzle was played on average by 11.48 (standard deviation 7.08) players, and not all alignment answers were informative (we received 3.6 empty alignments per puzzle; Supplementary file 1, Table S1). We compared 7620 selected puzzles for differences in player and software alignments: in 4701 (61.7%) puzzles the alignments from all the high-scoring players differed from the computational alignment (Figure 1A). In a further 2765 (36.3%) puzzles, the alignments from all the high-scoring players were identical to those from the software. With the exception of 154 puzzles (2%), the high-scoring players converged on the same answer (Figure 1A). Most puzzles had replicate analyses; 6834 (89.7%) had two or more high-scoring players (Figure 1—figure supplement 1C). Together, these results indicate a high accuracy of alignment across puzzles and endorse the notion that replay leads to useful replication.

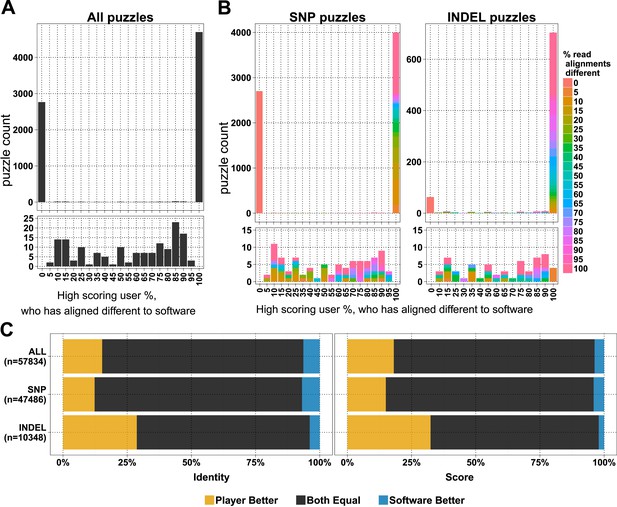

Comparison of player and software alignments for 7620 selected puzzles.

(A) Number of puzzles (y-axis) vs percentage of high-scoring players who produced alignments different to the alignment produced by the BWA mem software (x-axis): in 4701 of these puzzles, the alignments produced by all of the high-scoring players were different to the alignment produced by the software (rightmost column; difference = 100%); in 2765 of the puzzles, the alignments produced by all of the high-scoring players were the same as the alignment produced by the software (leftmost column; difference = 0%). Only a small number of puzzles (154) were between these two extremes (see lower panel, which expands the y-axis for differences between 5% and 95%). (B) Single nucleotide polymorphism (SNP) and insertion–deletion polymorphism (INDEL) puzzles presented separately and color coded with a heat map depicting the percent of read alignments contributing to the difference between player and software. (C) Comparison of alignments from the 4701 puzzles in which all high-scoring players aligned the reads differently to the software: the left panel is based on percent identity between sequences; the right panel is based on the Fraxinus game score (see Fraxinus game setup in ‘Materials and methods’).

In total, in 57,834 cases, the player alignment differed from the alignment produced by the computational method. In 15.26% of these cases, the players achieved a higher percent identity; in 6.37% of cases, the computational method achieved a higher percent identity; and the percent identities were equal for the remaining 78.37% of alignments (Figure 1C). Players aligned a higher proportion of INDELs (85.4% of 822) differently to software than SNPs (58.8% of 6798; Figure 1B). Players scored higher in 29% of INDEL alignments, with software scoring better in only 4% of alignments. For SNPs players scored better in 12% of puzzles with software scoring better in 7% (Supplementary file 1, Table S2).

The series of steps involved in these solutions is not yet recorded by Fraxinus, but previous work on the FoldIt game has shown that recording and sharing protein-folding recipes allow rapid development of novel algorithms (Khatib et al., 2011); we are hopeful that similar improvements will be possible with Fraxinus.

Most of the visitors to the Fraxinus game were interested only casually and did not play beyond either the introduction or tutorial (Table 3). Only 7357 (28.72%) of players answered puzzles and, more surprisingly, 49 players (0.7%) contributed to half of the answers (74,356 answers) that we received (Figure 2A). New players and returning players devoted an average of 12.5 min and 29.7 min per visit, respectively (Figure 2—figure supplement 1A). On average, each returning player made 2.3 visits per day, thereby contributing 70 min of game play per day; this indicates that the players were spending sufficient time to go through the process of realignment and are probably now experts in the alignment process.

Details about categories of players visiting Fraxinus

| Description | No. of players | Percent |
|---|---|---|
| Viewed introduction | 6115 | 23.87 |
| Completed tutorial and scanned puzzles | 7958 | 31.07 |
| Attempted puzzles | 4184 | 16.33 |
| Scored puzzles | 7357 | 28.72 |
| Total players | 25,614 | 100 |

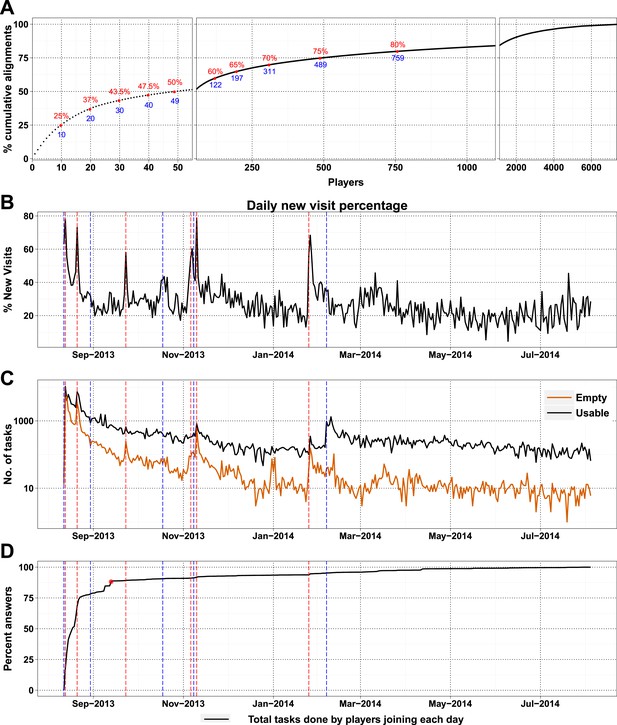

High-scoring players and press releases.

(A) Cumulative contribution by players is plotted against player rank (based on the number of useful answers the player contributed): the ten best players contributed 25% of useful answers. (B) Percent of new visits received daily to Fraxinus vs date, with dashed red lines representing press releases and dashed blue lines representing mention on social media. (C) Number of usable (black line) and empty (orange line) tasks provided by players vs date. Press releases led to prominent peaks in the number of empty tasks and less prominent peaks in the number of usable tasks. (D) Cumulative contribution (by all players) vs date: 88% of the answers were provided within the first month (red dot).

Because Fraxinus was a high-profile project, a number of publicity events across the media were organized to increase awareness of the game. There were clear peaks of new visitors (mean 151.4) on dates with publicity (Figure 2B), with events in traditional media having a much stronger impact than social media alone (Supplementary file 1, Table S3). The number of returning players showed only a small increase (mean 2.6 returning per publicity event; Figure 2—figure supplement 1B). Most (62%) players joining on a press release date played only on that day, and 97.5% of all players played for 10 days or less (Figure 2—figure supplement 2A). However, new players who joined on a press release date were more motivated and submitted on average 6.8 times more answers than those joining on other dates (Figure 2—figure supplement 2B). A marked increase in empty answers was also observed on press release dates (Figure 2C), indicating that new players joining on these days contributed more empty answers than useful answers. This suggests that care needs to be taken in assuming that all time contributed by players is equally productive. In total, 88% of all answers were provided by players who joined within the first 30 days (Figure 2D). Therefore, most of the analysis in Fraxinus was carried out within the first few months by players enthused by the initial publicity, with some benefit resulting from subsequent publicity.

The number of daily visits followed a power law distribution in the period presented (Figure 3—figure supplement 1). The same distribution is followed by new players and returning players (Figure 2—figure supplement 1C). Very few players visited regularly (Figure 3—figure supplement 2): 33 players were active on 60 or more days, 8 of these were active for over 200 days (Supplementary file 1, Table S4), and one player was active on 332 of the 350 days since joining. The distribution indicates that we can expect a surprisingly long life for Fraxinus, on the order of years (Figure 3A). Although this may appear to contradict the observation that most answers were submitted in the first few months, a small number of players are still visiting frequently, although this is likely to decline in the future.

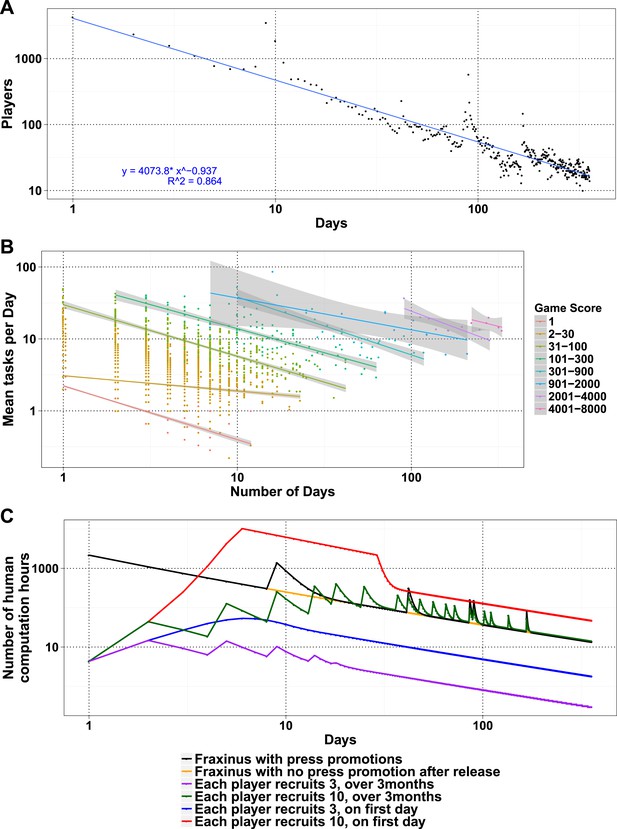

Modeling human computation for citizen science projects.

(A) Actual data showing number of players on each day (y-axis) vs time in days since game release (x-axis) for Fraxinus. The observed distribution of players visiting the game page daily is fitted to a linear model on log scales. (B) The enthusiasm of players decreases irrespective of their success at the game. The mean number of tasks completed per day (y-axis) is plotted against the number of days the players were active (x-axis) for groups of players with similar scores (color coded based on their scores); contribution decreases over time for all groups. (C) Model predictions of work done (measured in computation hours; y-axis) as a function of days since game release (x-axis) for six different scenarios: Fraxinus with press releases at and after launch (black); with a press release at launch, but no subsequent press releases (orange); each player recruits three new players (NPs) over a period of 3 months (purple); each player recruits 10 NPs over a period of 3 months (green); each player recruits three NPs on the first day (blue); and each player recruits 10 NPs on the first day (red).

We examined whether success at the game affected longevity of contribution. The mean puzzles contributed per day for groups with different total game scores decreased in a linear fashion for all scoring bins, indicating that players' enthusiasm for the puzzles or cause decreased over time, irrespective of their success at the game (Figure 3B). Using these demographic parameters as a base, we developed a simple model to predict the productivity of any proposed crowdsourcing project. The model returns the number of players contributing to a project per day, based on an initial expected cohort of players, a returning player rate, and a new player rate. We can then calculate the work ultimately done for a task that takes a given amount of time to learn and execute. Our model is formulated as a decreasing power law relationship (‘Materials and methods’) and allows for arbitrary increase in players as per those observed on press release days. The model accordingly recapitulates the observed result from Fraxinus (Figure 3C). Predicted work time in the model was 935.53 days, while the actual total visit time was 924 days. Similarly, by modeling the impact of press releases, we predicted 150.11 days of additional play; the actual figure was 142.14 days.

To apply the model and ultimately estimate the work from potential studies, it is important to estimate the size of the initial player cohort and the returning/new player rate. One approach would be to use the actual number of players and returning/new player rate, as measured during the early stages of the project, as estimates. Our experience with Fraxinus has been one of constantly decreasing player numbers, in spite of repeated press releases (Figure 3C), and the parameters of our player demographic distribution and the interest in our game have not been such that we received increases in player numbers after the initial release. Fraxinus was a very front-loaded game that did not hold the interest or grow beyond the initial crowd we reached via the media.

Similar decaying trends were also observed with other citizen science projects hosted on Zooniverse (Ponciano et al., 2014; Sauermann and Franzoni, 2015) and for web searches for trending terms (such as ‘Ebola’; Figure 3—figure supplement 3), which suggests that the decay in interest observed in crowdsourcing games is similar to that in other topics like the news. This situation is not inevitable; it is possible for the player cohort to grow over time, if the slope of the power law expression in positive player numbers can increase. In practice, this is equivalent to the number of active or new players exceeding those leaving. This could be achieved by an enthusiastic ‘viral’ growth spread, and the results from the model in this case are similar to those from SIR (susceptible, infected, and recovered) or rumor-spreading models (Zhao et al., 2013). However, a viral strategy does not result in unlimited growth of player numbers: once the potential audience is saturated, the amount of work performed begins to decline (Figure 3C). The work obtained by a viral strategy does not exceed that by a front-loaded strategy unless a somewhat unrealistic growth rate is assumed (e.g., each player invites ten more players on the first day, and there is no restriction on the total available players; Figure 3C).

A smaller amount of work than that seen in the front-loaded strategy is done if the players are allowed to defer inviting friends over a time period. We allowed a random time delay of up to 3 months between joining and inviting new players and saw that the overall work was less than if invitation took place on the first day. It is clear that by having a large initial cohort and not adopting a viral strategy, we maximized the work expected from Fraxinus. By applying our model to a proposed crowdsourcing project, it is possible to balance resources (such as public outreach, time taken to analyze data, and initial cohorts of contributors) in order to make the most of potential contributors and to determine whether the approach is likely to be feasible and worthwhile.

Conclusions

Fraxinus posed a problem that non-specialists were able to positively contribute to. From the patterns of access and return, we observed that the amount of work done is limited to a surprisingly small fraction of contributors, particularly in view of the number of people who have volunteered effort. In the case of Fraxinus, we have been able to build up a small community of skilled users who are willing to collaborate and contribute to our goals. However, it is clear that scientists wishing to take advantage of crowdsourcing for citizen-science projects must be extremely focused to get value out. Our model of the human computing power that is available for a citizen-science project provides a guide for the design and implementation of future projects.

Materials and methods

Fungal materials and sequences

Kenninghall Wood1 (KW1) is an isolate of H. fraxineus that was collected and isolated from Kenninghall Wood, Norfolk, UK. KW1 DNA was isolated, and a 251 bp paired-end genomic library was sequenced using Illumina MiSeq. Draft version 1 (v1) of the KW1 genome was assembled using ABySS 1.3.4. Further details of KW1 library preparation and genome assembly can be found in Saunders et al. (2014). Three samples of infected ash branches collected from Ashwellthorpe Wood (AT) and Upton Broad and Marshes (UB) are referred to as AT1, AT2, and UB1, respectively. RNA was isolated from pith material of infected branches using an RNeasy Plant Mini kit (Qiagen, Manchester, UK). RNA-seq libraries were prepared using the Illumina TruSeq kit with a 200 bp insert size. Paired-end RNA-seq was carried out on an Illumina GAII with a read length of 76 bp.

Variant detection

Paired-end RNA-seq libraries from the three samples AT1, AT2, and UB1 were used for variant detection against the KW1 version 1 genome assembly. Paired-end RNA-seq reads were aligned to KW1 v1 contigs using BWA (Li and Durbin, 2009) mem (v0.7.4) with default settings. SAMTools (Li et al., 2009) (v0.1.17) was used to generate sorted BAM files; the mpileup and bcftools (view -vcN) commands were used to generate variants. Sequence reads with mapping quality scores less than 20 were ignored in variant selection. Variants called at positions where the reference base was unknown were excluded. Positions selected for loading into Fraxinus version 1 were required to have a minimum coverage of 10. All RNA-seq sequences, BAM, and variant VCF files generated in this study were submitted to the European Nucleotide Archive (ENA) (http://www.ebi.ac.uk/ena) under accession number PRJEB7998.

Fraxinus game setup

The Fraxinus game interface presents a 21 base reference at the top, and nucleotides are displayed as leaf shapes in the following colors: green (A), red (T), yellow (G), orange (C), and gray (N). Puzzles were populated with 2–20 reads to be aligned by players. The following scoring scheme was employed with integer values: match score = 5, mismatch score = −3, gap opening score = −5, and gap extension score = −2. Upon joining, each new player was taken to an introduction on ash dieback and its impact and subsequently to a tutorial that explains how game play can progress. Players completing the tutorial were awarded one point. Players were then presented with a game index page listing various options, such as choosing a puzzle to solve, the leader board of top players, the leader board of friends, and any notifications. For each puzzle, players needed to realign the reads against a reference sequence, score as high as they could, and then submit their answer. Players received a point for each answer they submitted and an additional point for submitting the answer with the highest score. If another player submitted an answer with a new highest score, the additional point was transferred to that player from the previous holder, along with the point for submitting an answer; this event is referred to as ‘stealing’. However, if a player only matched a previous high score, they did not receive the bonus point. We therefore credited only the first player who provided the highest scoring alignment. Players did not get an extra point for improving their own score on a puzzle until someone else had ‘stolen’ the puzzle (by getting a higher score). Each answer submitted by players was stored in a database for subsequent analysis. Player activities, such as accessing the introduction, completion of the tutorial, puzzles accessed, and answers submitted, were also stored in a database.
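The scoring scheme above can be illustrated with a short Python sketch. This is not the game's own code: the function name `alignment_score` and the representation of an alignment as two equal-length gapped strings are hypothetical, and the gap convention (a gap of length k costs the opening penalty plus k extension penalties, as in BWA) is an assumption about how the game combined the two gap scores.

```python
# Score values are those stated in the text.
MATCH, MISMATCH, GAP_OPEN, GAP_EXTEND = 5, -3, -5, -2


def alignment_score(ref: str, read: str) -> int:
    """Score two gapped strings of equal length; '-' marks a gap column."""
    if len(ref) != len(read):
        raise ValueError("aligned strings must have equal length")
    score = 0
    open_gap = None  # which sequence currently carries an open gap, if any
    for r, q in zip(ref, read):
        if r == "-" and q == "-":
            raise ValueError("a column cannot be a gap in both sequences")
        if r == "-" or q == "-":
            side = "ref" if r == "-" else "read"
            # Assumption: opening is charged once per gap, extension per column.
            if side != open_gap:
                score += GAP_OPEN
            score += GAP_EXTEND
            open_gap = side
        else:
            score += MATCH if r == q else MISMATCH
            open_gap = None
    return score
```

Under this convention a single mismatch (−3) is cheaper than a one-base gap (−7), so a player gains points by opening a gap only when it restores at least two matching columns.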

Variants included in Fraxinus game

We selected 1000 SNP variants and 160 INDEL variants from the RNA-seq analysis of three infected samples (Table 1). The variant position and 10 bases on either side were used to extract sequence read information from the BWA alignment BAM file. Extracted reads were used to generate one or more puzzles with a maximum of 20 reads per puzzle, resulting in 9022 puzzles from 1000 SNP variants and 1065 puzzles from 160 INDEL variants. The generated puzzles were uploaded to the game database and included the reference sequence name, the 21 base reference sequence hosting the variant, the variant position, and details of the reads to be realigned. Alignment positions of the included reads were randomly scrambled so that players could realign them independently of the information from software alignments.
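The windowing and chunking steps described above might look like the following Python sketch. The function names and the representation of reads as a plain list are hypothetical, and the simple left-to-right chunking is an assumption; the text does not say how reads were distributed between puzzles.

```python
from typing import List, Tuple

FLANK = 10      # bases on either side of the variant (from the text)
MAX_READS = 20  # maximum number of reads per puzzle (from the text)


def reference_window(variant_pos: int) -> Tuple[int, int]:
    """Inclusive start and end of the 21 base window around a variant."""
    return variant_pos - FLANK, variant_pos + FLANK


def split_into_puzzles(reads: List[str], max_reads: int = MAX_READS) -> List[List[str]]:
    """Chunk the reads covering one variant into puzzles of at most max_reads."""
    return [reads[i:i + max_reads] for i in range(0, len(reads), max_reads)]
```

For example, a variant covered by 45 reads would yield three puzzles of 20, 20, and 5 reads. Naive chunking can leave a final puzzle with a single read, whereas the game used 2–20 reads per puzzle, so a real implementation would need to rebalance the last chunk.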

Puzzle alignment comparison

For 10,087 puzzles, we received 154,038 answers, of which 35,921 were empty. Further details on the number of answers are provided in Supplementary file 1, Table S1. As reads were selected within the 21 base window (10 bases either side of the variant position), some reads did not cover the variant allele, and these led to misalignments by the player within the window (Figure 1—figure supplement 3). Therefore, we based our comparison only on reads covering the variant allele according to the software alignments and restricted our analysis to the 7620 puzzles carrying these reads. The absolute match/mismatch score ratio used in our game is 1.67 (5/3), which corresponds to an expected conservation of 50% between read and reference and is higher than the ratios of 0.33, 0.5, and 1 that are used for 99%, 95%, and 75% conservation between reference and read sequences (States et al., 1991). Therefore, to make the player and software alignments comparable, we realigned the reads with BWA using the scoring system employed in the Fraxinus game (BWA options -A 5 -B 3 -O 5 -E 2). Player alignments were stored in CIGAR format (Li et al., 2009) with the associated alignment start position. However, the player alignment start position was set to the start of the read, regardless of whether the read was soft clipped or not, and this was corrected in the analysis. Each puzzle reference nucleotide position was taken randomly from software read alignment information, so reference sequence position information was corrected using the selected input variant data. For the initial comparison of puzzles, position-corrected player alignment CIGARs were compared with the CIGAR strings from the score-adjusted BWA realignments. Alignments were then categorized as similar or different to the software based on all high-scoring player outcomes for each puzzle. For each puzzle, the percent of high-scoring players who aligned differently to the software was calculated, in addition to the percent of reads contributing to the difference. The database dump, data analysis scripts, and source are made available at the authors' GitHub (repository Fraxinus version1 data analysis).
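The start-position correction and the per-puzzle comparison can be sketched in Python as follows. This is an illustration rather than the analysis scripts in the repository: the function names are hypothetical, and the correction assumes that the stored start refers to the first base of the read, so that the first aligned base lies one leading soft clip further along.

```python
import re
from typing import List, Tuple

CIGAR_OP = re.compile(r"(\d+)([MIDNSHP=X])")
Alignment = Tuple[int, str]  # (start position, CIGAR string)


def parse_cigar(cigar: str) -> List[Tuple[int, str]]:
    """Split a CIGAR string such as '3S18M' into (length, operation) pairs."""
    ops = [(int(n), op) for n, op in CIGAR_OP.findall(cigar)]
    if "".join(f"{n}{op}" for n, op in ops) != cigar:
        raise ValueError(f"malformed CIGAR: {cigar!r}")
    return ops


def corrected_start(read_start: int, cigar: str) -> int:
    """Move a start that points at the first read base to the first aligned base."""
    for length, op in parse_cigar(cigar):
        if op == "H":      # hard clips are not part of the stored read
            continue
        if op == "S":      # assumption: shift by the leading soft clip
            return read_start + length
        break
    return read_start


def percent_differing(players: List[Alignment], software: Alignment) -> float:
    """Percent of high-scoring player alignments that differ from the software."""
    differing = sum(1 for aln in players if aln != software)
    return 100.0 * differing / len(players)
```

A puzzle would then fall into the 'all different' or 'all identical' categories when `percent_differing` returns 100 or 0, respectively.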

Read alignment comparison

Puzzles in which all the high-scoring player alignments differed from the software alignment were selected, and individual read alignments from these puzzles were extracted and compared to the software read alignments. Individual read alignments were compared in two ways: (1) by calculating the percent identity between read and reference; (2) by calculating the alignment score employed in the Fraxinus game. Both percent identity and score were computed for the bases within the 21 base game window and for whole read alignments. Any gaps opened and extended were counted as mismatches when calculating percent identity between read and reference. The alignment score within the game window was normalized per base to be comparable between software and player alignments.
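As a rough illustration of the first measure, the following Python sketch computes percent identity with gap columns counted as mismatches. The function names and the gapped-string representation are hypothetical, and dividing by the number of alignment columns (both for identity and for the per-base score) is an assumption about the normalization used.

```python
def percent_identity(ref: str, read: str) -> float:
    """Percent of alignment columns in which read and reference agree.

    Gap columns ('-') are counted as mismatches, as described in the text.
    """
    if len(ref) != len(read) or not ref:
        raise ValueError("aligned strings must be non-empty and of equal length")
    matches = sum(1 for r, q in zip(ref, read) if r == q and r != "-")
    return 100.0 * matches / len(ref)


def score_per_base(score: int, n_columns: int) -> float:
    """Normalize an alignment score by the number of columns compared."""
    if n_columns <= 0:
        raise ValueError("n_columns must be positive")
    return score / n_columns
```

Applying both functions to the player alignment and to the software alignment of the same read gives the pairs of values that are compared in Figure 1C.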

Game page visit analysis

We used Google Analytics (GA) to record visits and study daily activity on the game page. The open source Ruby API for GA (Google api ruby client samples) was used to extract analytics information regularly. Extracted data included the daily number of player visits, the number and percentage of new and returning players, the mean duration of daily visits, and the mean duration of visits by each player type. We also extracted information about the geographical distribution of the visits and the number of players from each country. Player activity information from 12 August 2013 to 4 August 2014 (358 days) was used to generate reports about the trend of player visits, the duration of visits by player type, and the geographical distribution of players. GA uses persistent cookies to identify each player, which may result in an overestimation of the number of unique players, especially if the cookies are deleted from the machine. By comparing the number of players registered in the Fraxinus game database with the number of players counted by GA, we found that GA recorded on average 7.25% more players. Based on the number of new players and returning players visiting from the Fraxinus database, we estimated that each returning player visits 2.28 times per day. Based on the mean duration of new and returning player visits, we estimated that new players spent 12.5 min per visit and returning players spent 29.65 min per visit.

Impact of press releases

To assess the impact of press releases, players joining on a press release date or up to 3 days after it were selected, and the total number of useful answers provided by them until 4 August 2014 was pooled. A similar analysis was done for players joining on the remaining dates to compute control day player contributions. To calculate the number of new and returning players resulting from press releases, the mean of the respective player numbers from the 10 previous days was subtracted from the numbers of new and returning players on the selected dates.
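The baseline subtraction described above can be sketched in a few lines of Python. The function name `excess_over_baseline` and the list-of-daily-counts input are hypothetical; only the 10 day window comes from the text.

```python
from statistics import mean
from typing import Sequence


def excess_over_baseline(daily_counts: Sequence[float], day_index: int,
                         window: int = 10) -> float:
    """Players on a given day minus the mean of the preceding `window` days."""
    if day_index < window:
        raise ValueError("not enough preceding days for the baseline")
    baseline = mean(daily_counts[day_index - window:day_index])
    return daily_counts[day_index] - baseline
```

For instance, if 10 new players arrived on each of the 10 days before a press release and 160 arrived on the release date, the release would be credited with 150 additional new players.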

Human contribution estimation

We used the data from Fraxinus to combine the play parameters into an equation that can calculate the productivity of a crowdsourcing project. The numbers of new players and returning players visiting Fraxinus daily were used to fit a power law relationship of $y = a x^{b}$, where y = player number, a = cohort of players on day 1, x = time since release, and b = rate of decay of players. We fitted separate equations for the number of new players, $NP = a x^{-1.303}$, and the number of returning players, $RP = (a/5)\,x^{-0.764}$. A parameter z was included to increase the value of NP at arbitrary points to simulate the effect of media or outreach attention. The actual work done by a crowdsourcing project depends on the time taken to complete each task and can be calculated,

where W = Total human computation contribution, H = human computation time, E = education cost (total number of players * tutorial length), T = task length. And H is calculated using following equation,

Ci = initial cohort of players (5000), Cr = fraction of the cohort of players returning (1/5), Cn = new players joining due to press release as a function of time, d = day of game, f = end day (358), Tn = mean processing time contributed by a new player in minutes (12.5 min), Tr = mean processing time contributed by a returning player in minutes (70 min), α = rate of decay of new players (−1.303), β = rate of decay of returning players (−0.764), N = effectiveness of time contributed by new players (1), E = effectiveness of time contributed by returning players (1).
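Using only the parameter values listed above, the following Python sketch shows one way the human computation time H could be accumulated day by day. The summation is an assumed form (daily new and returning player counts from the two power laws, each multiplied by the corresponding mean time and effectiveness) and is not a transcription of the authors' equation; the function name and the `boosts` argument, which stands in for press-release arrivals, are hypothetical.

```python
from typing import Dict, Optional


def human_computation_days(end_day: int = 358,
                           cohort: float = 5000.0,
                           returning_fraction: float = 0.2,
                           t_new: float = 12.5,
                           t_ret: float = 70.0,
                           alpha: float = -1.303,
                           beta: float = -0.764,
                           eff_new: float = 1.0,
                           eff_ret: float = 1.0,
                           boosts: Optional[Dict[int, float]] = None) -> float:
    """Total time contributed by players, in days, under the assumed model."""
    boosts = boosts or {}
    total_minutes = 0.0
    for day in range(1, end_day + 1):
        # New players decay as a power law, plus any press-release arrivals.
        n_new = cohort * day ** alpha + boosts.get(day, 0.0)
        # Returning players are a fraction of the cohort with a slower decay.
        n_ret = cohort * returning_fraction * day ** beta
        total_minutes += n_new * t_new * eff_new + n_ret * t_ret * eff_ret
    return total_minutes / (60 * 24)
```

Calling `human_computation_days()` gives the front-loaded scenario without later publicity, and passing, say, `boosts={30: 1000}` adds 1000 new players on day 30. The sketch does not attempt the final conversion from H to the work W, because the equation relating W to H, the education cost, and the task length is not reproduced in the text.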

We provide code implementing the model as used in these analyses at http://nbviewer.ipython.org/github/shyamrallapalli/fraxinus_version1_data_analysis/blob/master/fraxinus_visits_model/Players-nonplayers.ipynb.
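Independently of the notebook linked above, the power law fit itself can be illustrated with ordinary least squares on log-transformed data, which is what fitting 'a linear model on log scales' amounts to. The function `fit_power_law` below is a hypothetical, standard-library-only Python sketch; it assumes strictly positive day numbers and player counts.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Fit y = a * x**b by least squares on log(x) and log(y); return (a, b)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    mean_x = sum(lx) / len(lx)
    mean_y = sum(ly) / len(ly)
    sxx = sum((u - mean_x) ** 2 for u in lx)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    b = sxy / sxx                        # slope on the log-log plot
    a = math.exp(mean_y - b * mean_x)    # intercept, back-transformed
    return a, b
```

Days on which no players visited would have to be dropped or pooled before fitting, since the logarithm of zero is undefined.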

Data availability

- RNA sequencing and variant discovery in the ash dieback pathogen (Hymenoscyphus fraxineus), using several infected samples collected from woodlands of Norfolk, UK. Publicly available at the EBI European Nucleotide Archive (Accession no: PRJEB7998).

References

- Online citizen science games: opportunities for the biological sciences. Applied & Translational Genomics 3:90–94. https://doi.org/10.1016/j.atg.2014.07.001

- Fraxinus version1 data analysis. URL: https://github.com/shyamrallapalli/fraxinus_version1_data_analysis

- Google api ruby client samples. URL: https://github.com/google/google-api-ruby-client-samples/tree/master/service_account

- Algorithm discovery by protein folding game players. Proceedings of the National Academy of Sciences of USA 108:18949–18953. https://doi.org/10.1073/pnas.1115898108

- The sequence alignment/map format and samtools. Bioinformatics 25:2078–2079. https://doi.org/10.1093/bioinformatics/btp352

- Galaxy Zoo: morphologies derived from visual inspection of galaxies from the Sloan Digital Sky Survey. Monthly Notices of the Royal Astronomical Society 389:1179–1189. https://doi.org/10.1111/j.1365-2966.2008.13689.x

- Volunteers' engagement in human computation astronomy projects. Computing in Science & Engineering 16:52–59. https://doi.org/10.1109/MCSE.2014.4

- Crowdsourcing—harnessing the masses to advance health and medicine, a systematic review. Journal of General Internal Medicine 29:187–203. https://doi.org/10.1007/s11606-013-2536-8

- Crowd science user contribution patterns and their implications. Proceedings of the National Academy of Sciences of USA 112:679–684. https://doi.org/10.1073/pnas.1408907112

- SIR rumor spreading model in the new media age. Physica A 392:995–1003. https://doi.org/10.1016/j.physa.2012.09.030

Decision letter

- Peter Rodgers, Reviewing Editor; eLife, United Kingdom

eLife posts the editorial decision letter and author response on a selection of the published articles (subject to the approval of the authors). An edited version of the letter sent to the authors after peer review is shown, indicating the substantive concerns or comments; minor concerns are not usually shown. Reviewers have the opportunity to discuss the decision before the letter is sent (see review process). Similarly, the author response typically shows only responses to the major concerns raised by the reviewers.

Thank you for sending your work entitled “Fraxinus: a citizen science DNA alignment game where players beat software & reveal a winning strategy for crowdsourcing” for consideration at eLife. Your article has been favourably evaluated by three reviewers, but needs to be revised in response to the following comments from the referees before we can reach a final decision on publication.

Summary:

The paper, through the analysis of data arising from the platform game Fraxinus, proposes a linear model that predicts the likely outcomes of crowdsourcing projects. The authors, by means of a descriptive analysis, show a case in which the crowd outperforms the machine. Predicting the outcomes of a crowdsourcing project is certainly an interesting topic whose implications are relevant not only for scientists themselves, but also for R&D managers, administrators of granting agencies, and policy makers. The article describes a novel crowdsourcing project and provides relevant insights for crowdsourcing in bioscience. Nonetheless, there is considerable scope for the manuscript to be improved.

Essential revisions:

1) The title of this paper describes Fraxinus as “a citizen science DNA alignment game where players beat machines” and the Abstract states that Fraxinus players “matched or improved computational alignments in 94% of cases”. However, it turns out that only 15% of the time players gained a higher percent identity (and in 78% of alignments the percent identity was equal). Therefore, the claims in the title and Abstract need to be toned down.

The authors should also slightly shorten the paragraph containing the comparison of performances between the crowd and the machine. This topic, despite its relevance, has already been addressed in the literature (e.g. Cooper et al. 2010).

2) In the Abstract the authors claim that their model will provide a framework for the design and implementation of future citizen-science initiatives. However, the paper provides only limited hints of such a framework. The authors should consider extending this section. The authors should also consider adding a paragraph about the generalizability of the model, possibly containing evidence of its goodness (e.g. through the prediction of the outcomes of other crowdsourcing projects).

3) Many key concepts necessary to describe multiple sequence alignments and genetic variant predictions are explained only briefly. Please supply more detailed descriptions for the benefit of readers who are not familiar with these concepts.

4) The authors should explain their model more fully. In particular, they should better explain the model assumptions (e.g. why do press releases only influence the behavior of new players?) and the implications of these assumptions. Crowston and Fagnot (2008), Panciera, Halfaker and Terveen (2009), and Jackson and colleagues (2015WP) develop dynamic models of virtual collaboration that may be useful in thinking about this issue.

5) The authors should consider a refinement of their model to address the following points:

Results and Discussion, first paragraph: The authors claim that 49 players (0.7%) contributed to half of the total answers. However, the authors do not consider this information when they developed their model.

Similarly, they write (Results and Discussion, fifth paragraph) that not all time contributed by players is equally productive. However, they make this assumption in their model. The authors should explain the reasons for these choices.

A number of recent studies (e.g. Sauermann and Franzoni 2015) describe users' contribution patterns and highlight that the idea of a homogeneous group of participants is far from reality. The authors should consider these contributions to refine their model.

Using Google Analytics persistent cookies may generate a bias in assessing both the number of new players (overestimation) and the number of returning players (underestimation). The authors should describe how they took into account this bias in their computation.

6) Concerning the results, it would be good to have a justification for the parameters used. Why did you choose a 21 nt game window? Did you try the game with a longer window? Could you expect better results if a longer window were used? In the same sense, the alignments are compared in two ways: by calculating the percent identity and by computing an alignment score. With respect to the measure of identity, why did the authors not use ‘standard’ measures such as the Sum of Pairs and Total Column metrics? What about comparing the score of the alignments using the score functions reported by state-of-the-art MSA algorithms?

7) It is not clear to the reader what software/computational method the players are being compared to, especially in the figures and tables. Please explicitly state the software/computational method in the captions of the relevant figures and tables.

8) The section ‘Human contribution estimation’ needs some revision. If the formulae given in this section are standard formulae taken from the literature, please supply references. And if these formulae are not standard formulae, please supply more information about how they were derived.

9) Please consider adding the following references and discussing them in the text at the appropriate place:

Open-Phylo: a customizable crowd-computing platform for multiple sequence alignment Genome Biology 14, R116 (2013). This paper seems to have a lot of common features with the study presented here, and it can be used for comparison purposes.

Algorithm discovery by protein folding game players PNAS 108, 18949-18953 (2011). This paper could be used to develop the idea that the alignment strategies used by players could be implemented in algorithms for potential improvements.

It would be useful to be explicit about the relationship between the present work and previous publications (MacLean et al., GigaScience, 2013; MacLean et al., eLife, 2013).

https://doi.org/10.7554/eLife.07460.017

Author response

1) The title of this paper describes Fraxinus as “a citizen science DNA alignment game where players beat machines” and the Abstract states that Fraxinus players “matched or improved computational alignments in 94% of cases”. However, it turns out that only 15% of the time players gained a higher percent identity (and in 78% of alignments the percent identity was equal). Therefore, the claims in the title and Abstract need to be toned down.

We think the claim is justified in the Abstract. As the reviewers point out, in 78% of cases the algorithms and players are equally good, and in a further 15% the players are better (hence “matched or improved”), and we think this large proportion of equaling and bettering is substantial. We would be happy to rephrase this in the title: “Fraxinus: a citizen science game where players can improve software DNA alignments & a model for strategising in crowdsourcing”.

The authors should also slightly shorten the paragraph containing the comparison of performances between the crowd and the machine. This topic, despite its relevance, has already been addressed in the literature (e.g. Cooper et al. 2010).

We take this paragraph to be the second in Results and Discussion, which describes the specific findings on performance between player and machine in Fraxinus. We have edited it to reduce the word count and removed the mention of gene functions. Cooper et al. describe the success FoldIt has had with players solving protein structures (not sequence alignments) and do not describe specific results for DNA sequence alignment as in Fraxinus. It is not a given that the crowd would beat the computer in this different problem domain, and it is important that the extent to which crowd and machine differ in Fraxinus specifically is reported. With this paragraph we do simply that and show that the game is a useful tool that fulfilled its primary purpose. There isn't much more in this paragraph than some terse statements about player-machine differences, and we can't edit it much further and still retain the important, specific information.

2) In the Abstract the authors claim that their model will provide a framework for the design and implementation of future citizen-science initiatives. However, the paper provides only limited hints of such a framework. The authors should consider extending this section. The authors should also consider adding a paragraph about the generalizability of the model, possibly containing evidence of its goodness (e.g. through the prediction of the outcomes of other crowdsourcing projects).

In the Abstract we have replaced the word ‘framework’ with ‘guide’. We have added text describing how the model may be generalized to the discussion in Conclusions and a brief mention in the Abstract.

We agree that showing the goodness of the model through predictions would be useful, however despite making numerous data requests from other published projects we were not able to get user/player visit data from any other crowdsourcing project. We would need these data to verify and calibrate our model and predictions. Hence we can only make predictions based on assumed parameters but not falsify them, which isn't very useful and doesn't help in the aim of showing goodness.

In supplemental material we have added data that do show the model accurately represents the interest and attention on topics as revealed by Google Trends data. We show the decay of interest in the topic of Ebola over recent time and the model's prediction of the same. Games and topics are analogous in the initial interest component of the crowdsourcing so can be taken as a somewhat close proxy of interest in a crowdsourcing game. We have added a new supplementary figure (Figure 3–figure supplement 3) of these data.

3) Many key concepts necessary to describe multiple sequence alignments and genetic variant predictions are explained only briefly. Please supply more detailed descriptions for the benefit of readers who are not familiar with these concepts.

We have added a paragraph on these concepts to the Introduction.

4) The authors should explain their model more fully. In particular, they should better explain the model assumptions (e.g. why do press releases only influence the behavior of new players?) and the implications of these assumptions.

Press releases do not only influence the behaviour of new players. We observed that press releases bring new players, but the data show that the number of returning players increased only minimally. This difference is also covered by the model and is described in Figure 2–figure supplement 2 on the behaviour of new versus returning players. It isn't really an assumption, since we observed the difference and model it directly.

Crowston and Fagnot (2008), Panciera, Halfaker and Terveen (2009), and Jackson and colleagues (2015WP) develop dynamic models of virtual collaboration that may be useful in thinking about this issue.

The studies suggested by the reviewers address virtual collaborations observed during crowdsourcing projects, especially Wikipedia. These require expertise in the field, and each article is edited by multiple individuals who collaborate virtually to complete the task. Collaboration between players is not possible in Fraxinus; rather, players compete to gain higher scores, and they are not required to have knowledge of DNA alignments. The fundamental observations in these references are similar to ours, i.e. that a core set of individuals/players contribute most and that these individuals provide regular time contributions. These assumptions are already built into our linear model.

5) The authors should consider a refinement of their model to address the following points:

Results and Discussion, first paragraph: The authors claim that 49 players (0.7%) contributed to half of the total answers. However, the authors do not consider this information when they developed their model.

These are already incorporated: we model different decay rates for returning and new players, and the model doesn't explicitly create player agents that return work but rather models the change in the number of players. Hence the model is a model of time donated and therefore, by proxy, of the work done. It is therefore not necessary to be so explicit, and our model can remain generic. The amount of work done is assumed to be a function of the time donated.

Similarly, they write (Results and Discussion, fifth paragraph) that not all time contributed by players is equally productive. However, they make this assumption in their model. The authors should explain the reasons for these choices.

Again, we do consider this, but it isn't a feature of Fraxinus: we didn't find that new players were much worse at the task than returning players. As described, the model is one of time donated rather than of player agents doing work, and the function describing work done should be adjusted accordingly by the final user. We do not assume constant productivity in our work-done calculations, only time spent. The difference in the model is merely a simple effectiveness factor for the contributions of each of the new and returning players. In Fraxinus these factors are equal, so they could be ignored and were therefore omitted from our description of the Fraxinus-parameterised model. We have made this more explicit in the revised description of the model and mention how these can be applied.

A number of recent studies (e.g. Sauermann and Franzoni 2015) describe users' contribution patterns and highlight that the idea of a homogeneous group of participants is far from reality. The authors should consider these contributions to refine their model.

We have considered the effects of non-homogeneous groups: we have dedicated returning players who donate time and leave at one rate, and casual non-returning players who leave at another rate, and we have incorporated the different effectiveness of new and experienced players in the revised description of the model.

Using Google Analytics persistent cookies may generate a bias in assessing both the number of new players (overestimation) and the number of returning players (underestimation). The authors should describe how they took into account this bias in their computation.

Since we store each player’s Facebook ID in the database, we do not need to rely solely on Google Analytics to identify players. We did compare the two: Google Analytics overestimates by 7%, and this is explained in the Methods section. As described, the majority of the calculations for estimating new and returning players were taken from player activity in our database, not directly from Google Analytics.

6) Concerning the results, it would be good to have a justification for the parameters used. Why did you choose a 21 nt game window?

Although many scientists have big screens to do their work with, the general public is limited by screen size. Most people at the time of game design were using computers with a screen around 1440 pixels wide. Indeed, Google Analytics data on player screen resolutions indicate that ∼72% of game plays were accessed on a screen resolution of 1440 pixels or smaller and ∼99% on a screen of 1920 pixels or smaller. To design a game we need to take into account the amount of screen taken up by the Facebook window and its features and adverts, which left a still smaller section to work with. At the design phase, 21 nt was the size that fitted well while remaining big enough for a usable and viewable game. The nucleotides on screen had to be big enough to be manipulated as well as seen, so graphics size quickly became a problem.

Did you try the game with a longer window?

Not in production, only during development where longer windows proved to be too small to use well.

Could you expect better results if a longer window were used?

Alignments would take longer to do so people would play fewer alignments, so less replication would be done. Some alignments may improve but consensus accuracy from replication may decrease. I suppose it depends on what are considered better results in this context. Is longer better, or is better?

In the same sense, the alignments are compared in two ways: by calculating the percent identity and by computing an alignment score. With respect to the measure of identity, why did the authors not use ‘standard’ measures such as the Sum of Pairs and Total Column metrics?

It is not correct to assess these alignments as Multiple Sequence Alignments (of which Sum of Pairs and Total Column are metrics). These are serial and independent pairwise sequence alignments (which has a bearing on the comments below too). Each sequence aligned is a read from a genome sequencer, an independent measurement of the sequence; the reads are not, and should not be, taken en masse and assessed as an MSA.

The percent identity that we used is an intuitive and straightforward measure that captures the variants well in pairwise sequence alignments, and the score we used is the aligner's own score, so it sets a level playing field for the comparison of player and aligner performance.

What about comparing the score of the alignments using the score functions reported by state-of-the-art MSA algorithms?

This is exactly what we did: we used BWA mem, the most widely used and a very modern algorithm that is about as state-of-the-art as it gets for sequence read alignment. This is mentioned on the first line of the Methods section.

7) It is not clear to the reader what software/computational method the players are being compared to, especially in the figures and tables. Please explicitly state the software/computational method in the captions of the relevant figures and tables.

We mention this on the first line of the Results and Discussion section, and we have reiterated it later in the main text at an appropriate place, in the captions and in the Methods section.

8) The section ‘Human contribution estimation’ needs some revision. If the formulae given in this section are standard formulae taken from the literature, please supply references. And if these formulae are not standard formulae, please supply more information about how they were derived.

This is a standard definite integral, but it contained a typo that made it hard to read (strictly, it made a nonsense of the formula; apologies). Nonetheless, supplying information about the derivation isn't really helpful: it is a common sort of formula, and we do supply descriptions and values for each variable. That said, after discussions we feel that a different notation would be more readable and intuitive, and would avoid the need for deriving the formula. Instead we propose to represent the model as a discrete sum over the days of a crowdsourcing project. This is functionally equivalent to the continuous integral, since the model works on whole days anyway, and is more in line with the simulations we carried out and the provided code. The variables have all been renamed in the new notation (hopefully these will be easier to remember) but represent the same things, plus the explicitly stated effectiveness factor (ignored previously in Fraxinus because it was 1). We have amended the text to include this new notation. We also provide the code for the model as used in this analysis (fraxinus_visits_model: http://nbviewer.ipython.org/github/shyamrallapalli/fraxinus_version1_data_analysis/blob/master/fraxinus_visits_model/Players-nonplayers.ipynb).

9) Please consider adding the following references and discussing them in the text at the appropriate place:

Open-Phylo: a customizable crowd-computing platform for multiple sequence alignment Genome Biology 14, R116 (2013). This paper seems to have a lot of common features with the study presented here, and it can be used for comparison purposes.

The Open-Phylo project is an elaboration of the original Phylo project (which we cite). Open-Phylo extends the Phylo project, and this paper describes an exciting new crowd computing platform which scientists can use to upload their own puzzles for solution by the crowd. It does not describe the results from the Phylo multiple sequence alignment citizen science project (which are in the original, cited paper). We believe that the paper cited is the best one for comparison.

Algorithm discovery by protein folding game players PNAS 108, 18949-18953 (2011). This paper could be used to develop the idea that the alignment strategies used by players could be implemented in algorithms for potential improvements.

We have developed the idea slightly in Results and cited the method described in this paper.

It would be useful to be explicit about the relationship between the present work and previous publications (MacLean et al., GigaScience, 2013; MacLean et al., eLife, 2013).

We have clarified briefly the content of each of these when cited.

https://doi.org/10.7554/eLife.07460.018

Article and author information

Funding

Biotechnology and Biological Sciences Research Council (BBSRC) (Nornex)

- Dan MacLean

Department for Environment, Food and Rural Affairs (Defra) (Nornex)

- Diane GO Saunders

Gatsby Charitable Foundation (None)

- Dan MacLean

John Innes Foundation (None)

- Dan MacLean

The Genome Analysis Centre (National Capability Grant)

- Dan MacLean

John Innes Foundation (Emeritus Fellowship)

- J Allan Downie

The funders had no role in study design, data collection and interpretation, or the decision to submit the work for publication.

Acknowledgements

We thank Edward Chalstrey, Martin Page, Joe Win, Chris Bridson, and Chris Wilson for technical assistance, and Jodie Pike for Illumina sequencing assistance. We also thank Zoe Dunford and John Innes Centre External Relations team for media outreach and for promoting the game. We also wish to thank all the Fraxinus players who were too modest to be included as co-authors: all contributions are valuable and all are equally appreciated.

Copyright

© 2015, Rallapalli et al.

This article is distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use and redistribution provided that the original author and source are credited.
