Learning cortical representations through perturbed and adversarial dreaming

Figures

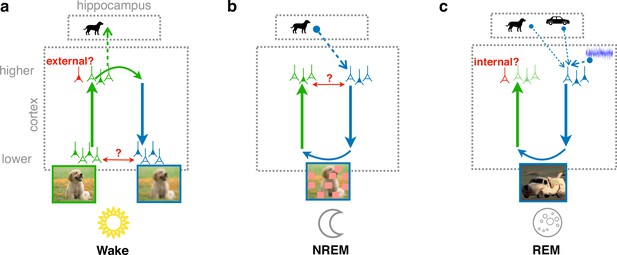

Cortical representation learning through perturbed and adversarial dreaming (PAD).

(a) During wakefulness (Wake), cortical feedforward pathways learn to recognize that low-level activity is externally driven and feedback pathways learn to reconstruct it from high-level neuronal representations. These high-level representations are stored in the hippocampus. (b) During non-rapid eye movement sleep (NREM), feedforward pathways learn to reconstruct high-level activity patterns replayed from the hippocampus affected by low-level perturbations, referred to as perturbed dreaming. (c) During rapid eye movement sleep (REM), feedforward and feedback pathways operate in an adversarial fashion, referred to as adversarial dreaming. Feedback pathways generate virtual low-level activity from combinations of multiple hippocampal memories and spontaneous cortical activity. While feedforward pathways learn to recognize low-level activity patterns as internally generated, feedback pathways learn to fool feedforward pathways.

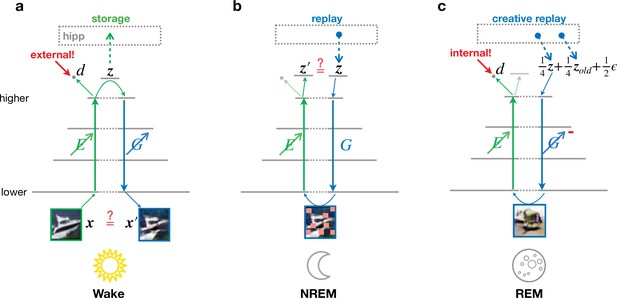

Different objectives during wakefulness, non-rapid eye movement (NREM), and rapid eye movement (REM) sleep govern the organization of feedforward and feedback pathways in perturbed and adversarial dreaming (PAD).

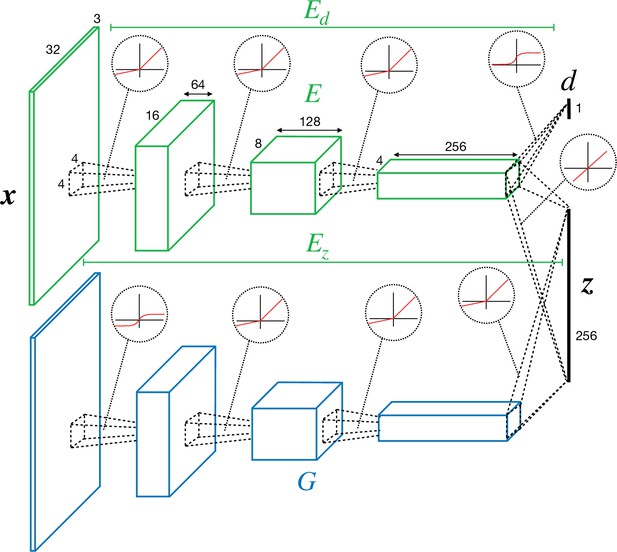

The input variable corresponds to a 32 × 32 image, and the latent variable is a 256-dimensional vector representing the latent layer (higher sensory cortex). Encoder (green) and generator (blue) networks project bottom-up and top-down signals between lower and higher sensory areas. An oblique arrow indicates that learning occurs in a given pathway. (a) During Wake, low-level activities are reconstructed. At the same time, the encoder learns to classify low-level activity as external (red target ‘external!’) via its discriminator output. The obtained latent representations are stored in the hippocampus. (b) During NREM, the activity stored during wakefulness is replayed from the hippocampal memory and regenerates visual input from the previous day perturbed by occlusions, modeled by squares of various sizes applied along the generated low-level activity with a certain probability (see Materials and methods). In this phase, the encoder adapts to reproduce the replayed latent activity. (c) During REM, convex combinations of multiple random hippocampal memories and spontaneous cortical activity, here with specific prefactors, generate a virtual activity in lower areas. While the encoder learns to classify this activity as internal (red target ‘internal!’), the generator adversarially learns to generate visual inputs that would be classified as external. The red minus on the generator indicates the inverted plasticity implementing this adversarial training.
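
The REM mixing step in (c) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `mix_latents`, the parameters `lam` and `alpha`, and their default values are assumptions, since the caption only states that specific prefactors are used. The random vectors stand in for stored hippocampal memories and spontaneous cortical activity.

```python
import random


def mix_latents(z_a, z_b, noise, lam=0.5, alpha=0.5):
    """Convex combination of two stored latents and a noise vector.

    The weights lam*alpha, lam*(1-alpha), and (1-lam) are non-negative
    and sum to 1. This parameterization is an assumption for illustration.
    """
    if not (0.0 <= lam <= 1.0 and 0.0 <= alpha <= 1.0):
        raise ValueError("lam and alpha must lie in [0, 1]")
    w_a, w_b, w_n = lam * alpha, lam * (1.0 - alpha), 1.0 - lam
    return [w_a * a + w_b * b + w_n * n for a, b, n in zip(z_a, z_b, noise)]


rng = random.Random(0)
dim = 256  # latent dimensionality stated in the caption

# Stand-ins for two replayed memories and spontaneous activity (hypothetical data).
z_a = [rng.gauss(0.0, 1.0) for _ in range(dim)]
z_b = [rng.gauss(0.0, 1.0) for _ in range(dim)]
noise = [rng.gauss(0.0, 1.0) for _ in range(dim)]

z_rem = mix_latents(z_a, z_b, noise)  # latent vector that would drive the generator
```

Setting `lam=1.0` removes the noise term, and `alpha=1.0` uses a single memory, which loosely corresponds to the ablations of the mixing strategy shown in later figures.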

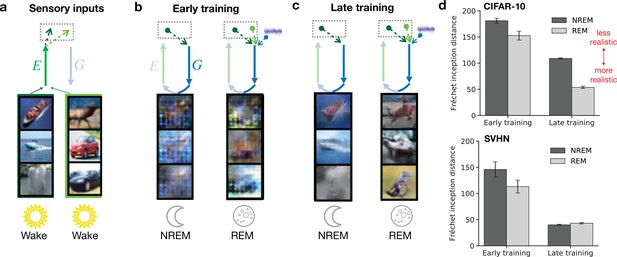

Both non-rapid eye movement (NREM) and rapid eye movement (REM) dreams become more realistic over the course of learning.

(a) Examples of sensory inputs observed during wakefulness. Their corresponding latent representations are stored in the hippocampus. (b, c) Single episodic memories (latent representations of stimuli) during NREM from the previous day and combinations of episodic memories from the two previous days during REM are recalled from hippocampus and generate early sensory activity via feedback pathways. This activity is shown for early (epoch 1) and late (epoch 50) training stages of the model. (d) Discrepancy between externally driven and internally generated early sensory activity as measured by the Fréchet inception distance (FID) (Heusel et al., 2018) during NREM and REM for networks trained on CIFAR-10 (top) and SVHN (bottom). Lower distance reflects higher similarity between sensory-evoked and generated activity. Error bars indicate ±1 SEM over four different initial conditions.
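
For reference, the FID in its standard form compares Gaussian statistics of Inception-network features computed for two sets of images. The subscripts below are chosen here for illustration: $w$ for sensory-evoked (Wake) activity and $g$ for generated activity, with feature means $\mu$ and covariances $\Sigma$.

$$
\mathrm{FID} = \lVert \mu_w - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_w + \Sigma_g - 2\,(\Sigma_w \Sigma_g)^{1/2} \right)
$$

The distance is zero when both feature distributions share the same mean and covariance, which is why lower values indicate more realistic generated activity.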

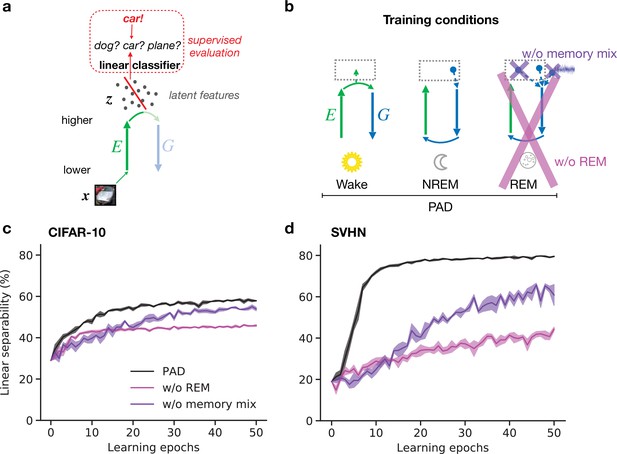

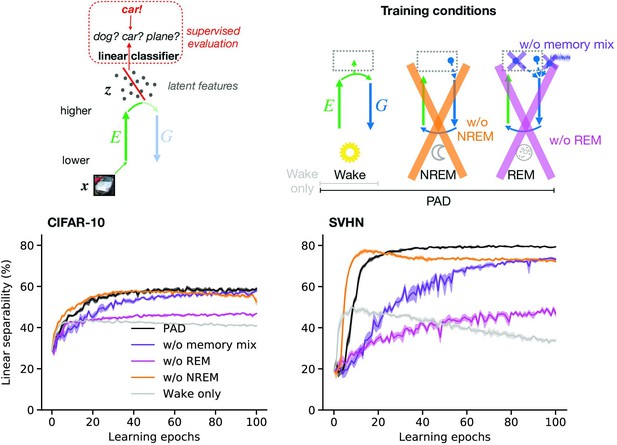

Adversarial dreaming during rapid eye movement (REM) improves the linear separability of the latent representation.

(a) A linear classifier is trained on the latent representations inferred from an external input to predict its associated label (here, the category ‘car’). (b) Training phases and pathological conditions: full model (perturbed and adversarial dreaming [PAD], black), no REM phase (pink) and PAD with a REM phase using a single episodic memory only (‘w/o memory mix’, purple). (c, d) Classification accuracy obtained on test datasets (c: CIFAR-10; d: SVHN) after training the linear classifier to convergence on the latent space for each epoch of the encoder-generator network learning. Full model (PAD): black line; without REM: pink line; with REM, but without memory mix: purple line. Solid lines represent mean, and shaded areas indicate ±1 SEM over four different initial conditions.
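
The linear readout in (a) can be illustrated with a small probe trained on frozen latent vectors. The sketch below makes several simplifying assumptions: it is binary rather than multi-class, uses plain logistic regression with gradient descent, and runs on hand-made 2-D "latents" instead of encoder outputs. All names (`train_linear_probe`, `predict`, `accuracy`) are hypothetical.

```python
import math


def train_linear_probe(latents, labels, lr=0.5, steps=200):
    """Fit weights w and bias b of a logistic-regression probe by gradient descent."""
    dim, n = len(latents[0]), len(latents)
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * dim, 0.0
        for z, y in zip(latents, labels):
            logit = sum(wi * zi for wi, zi in zip(w, z)) + b
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - y  # gradient of the cross-entropy loss w.r.t. the logit
            for k in range(dim):
                grad_w[k] += err * z[k] / n
            grad_b += err / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, z):
    """Return class 1 if the linear score is positive, else class 0."""
    return 1 if sum(wi * zi for wi, zi in zip(w, z)) + b > 0 else 0


def accuracy(w, b, latents, labels):
    hits = sum(predict(w, b, z) == y for z, y in zip(latents, labels))
    return hits / len(labels)


# Toy frozen latents: the encoder is not updated while the probe is trained.
train_z = [(-1.0, -1.0), (-2.0, -1.0), (-1.0, -2.0), (1.0, 1.0), (2.0, 1.0), (1.0, 2.0)]
train_y = [0, 0, 0, 1, 1, 1]
test_z = [(-1.5, -1.5), (1.5, 1.5)]
test_y = [0, 1]

w, b = train_linear_probe(train_z, train_y)
test_acc = accuracy(w, b, test_z, test_y)
```

Because only the probe's weights are trained, its test accuracy reflects how linearly separable the latent space already is, not how much the probe itself can learn.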

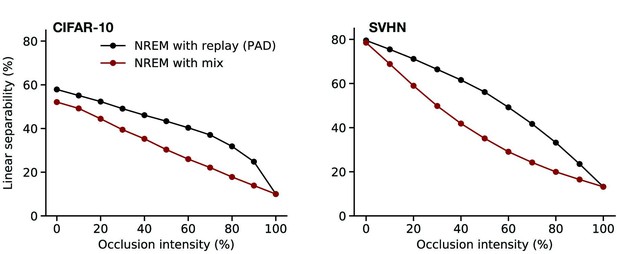

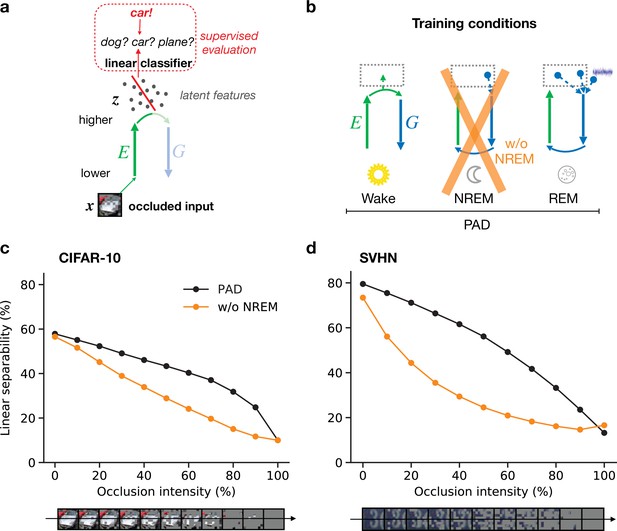

Perturbed dreaming during non-rapid eye movement (NREM) improves robustness of latent representations.

(a) A trained linear classifier (Figure 4) infers class labels from latent representations. The classifier was trained on latent representations of original images, but evaluated on representations of images with varying levels of occlusion. (b) Training phases and pathological conditions: full model (perturbed and adversarial dreaming [PAD], black), without NREM phase (w/o NREM, orange). (c, d) Classification accuracy obtained on the test dataset (c: CIFAR-10; d: SVHN) after 50 epochs for different levels of occlusion (0% to 100%). Full model (PAD): black line; w/o NREM: orange line. SEM over four different initial conditions overlap with data points. Note that due to an unbalanced distribution of samples the highest performance of a naive classifier is 18.9% for the SVHN dataset.
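
The naive-classifier baseline mentioned above is presumably the accuracy obtained by always predicting the most frequent class. A minimal sketch, using toy labels that are not the SVHN label counts:

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of a classifier that always predicts the most frequent label."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)


# Toy, deliberately unbalanced label set (hypothetical).
toy_labels = ["a"] * 5 + ["b"] * 3 + ["c"] * 2
baseline = majority_baseline_accuracy(toy_labels)
```

For a balanced 10-class dataset this baseline would be 10%; an unbalanced label distribution raises it, which is why the SVHN curve does not fall to 10% at full occlusion.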

Effects of non-rapid eye movement (NREM) and rapid eye movement (REM) sleep on latent representations.

(a) Inputs are mapped to their corresponding latent representations via the encoder. Principal component analysis (PCA; Jolliffe and Cadima, 2016) is performed on the latent space to visualize its structure (b–d). Clustering distances (e, f) are computed directly on latent features. (b–d) PCA visualization of latent representations projected on the first two principal components. Full circles represent clean images, open circles represent images with 30% occlusion. Each color represents an object category from the SVHN dataset (purple: ‘0’; cyan: ‘1’; yellow: ‘2’; red: ‘3’). (e) Ratio between average intra-class and average inter-class distances in latent space for randomly initialized networks (no training, gray), full model (black), model trained without REM sleep (w/o REM, pink), and model trained without NREM sleep (w/o NREM, orange) for unoccluded inputs. (f) Ratio between average clean-occluded (30% occlusion) and average inter-class distances in latent space for the full model (black), w/o REM (pink), and w/o NREM (orange). Error bars represent SEM over four different initial conditions.
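
The ratio in panel (e) can be sketched as below. The caption does not specify the distance metric or the averaging scheme, so this example assumes Euclidean distances averaged over all pairs of points; the function name `intra_inter_ratio` and the toy 2-D points are hypothetical.

```python
import math
from itertools import combinations


def intra_inter_ratio(points, labels):
    """Mean same-class pairwise distance divided by mean different-class distance.

    Assumes Euclidean distance over all point pairs (an illustrative choice).
    """
    intra, inter = [], []
    for (p, label_p), (q, label_q) in combinations(zip(points, labels), 2):
        distance = math.dist(p, q)
        if label_p == label_q:
            intra.append(distance)
        else:
            inter.append(distance)
    return (sum(intra) / len(intra)) / (sum(inter) / len(inter))


# Two tight, well-separated toy clusters standing in for latent features.
points = [(0.0, 0.0), (0.0, 1.0), (4.0, 0.0), (4.0, 1.0)]
labels = [0, 0, 1, 1]
ratio = intra_inter_ratio(points, labels)
```

A ratio well below 1 means that points of the same class lie closer together than points of different classes. The clean-occluded ratio in panel (f) follows the same pattern with the numerator replaced by distances between each clean input and its occluded version.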

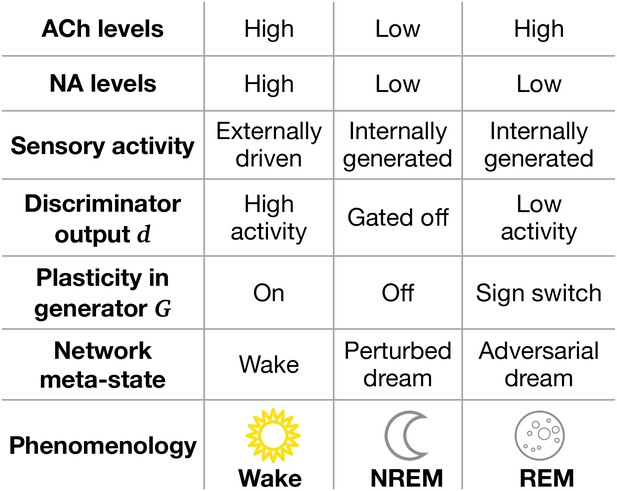

Model features and physiological counterparts during Wake, non-rapid eye movement (NREM), and rapid eye movement (REM) phases.

ACh: acetylcholine; NA: noradrenaline. ‘Sign switch’ indicates that identical local errors lead to opposing weight changes between Wake and REM sleep.

Convolutional neural network (CNN) architecture of encoder/discriminator and generator used in perturbed and adversarial dreaming (PAD).

Varying size and intensity of occlusions on example images from CIFAR-10.

Image occlusions vary along two parameters: occlusion intensity, defined as the probability of applying a gray square at a given position, and square size (s).
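
A minimal sketch of such an occlusion procedure is given below. It assumes a single-channel image stored as a list of rows, candidate positions on a non-overlapping grid, and a gray value of 0.5; none of these details are stated in the caption, and the function name `occlude` is hypothetical.

```python
import random


def occlude(image, prob, size, gray=0.5, rng=None):
    """Return a copy of `image` with gray squares of side `size`.

    Each grid cell is occluded independently with probability `prob`.
    The grid layout and gray value are assumptions for illustration.
    """
    rng = rng or random.Random()
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]  # leave the input untouched
    for top in range(0, height, size):
        for left in range(0, width, size):
            if rng.random() < prob:
                for i in range(top, min(top + size, height)):
                    for j in range(left, min(left + size, width)):
                        out[i][j] = gray
    return out


# A blank 32 x 32 image, occluded with intensity 0.3 and square size 4.
image = [[0.0] * 32 for _ in range(32)]
occluded = occlude(image, prob=0.3, size=4, rng=random.Random(0))
```

With `prob=0.0` the image is returned unchanged, and with `prob=1.0` it is fully covered, matching the 0% to 100% occlusion range used for evaluation.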

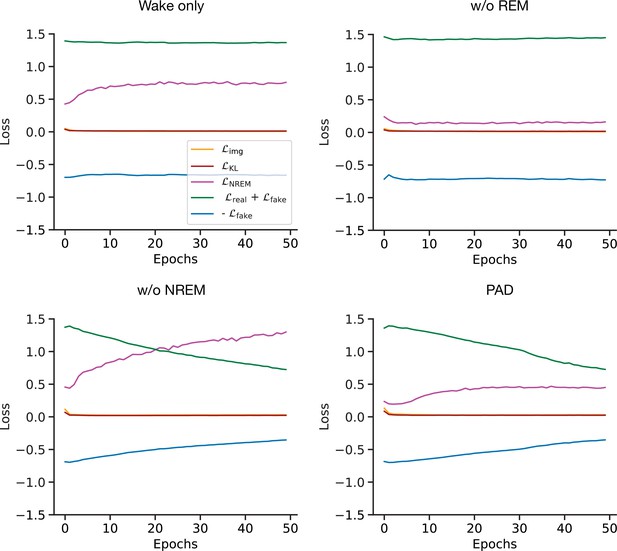

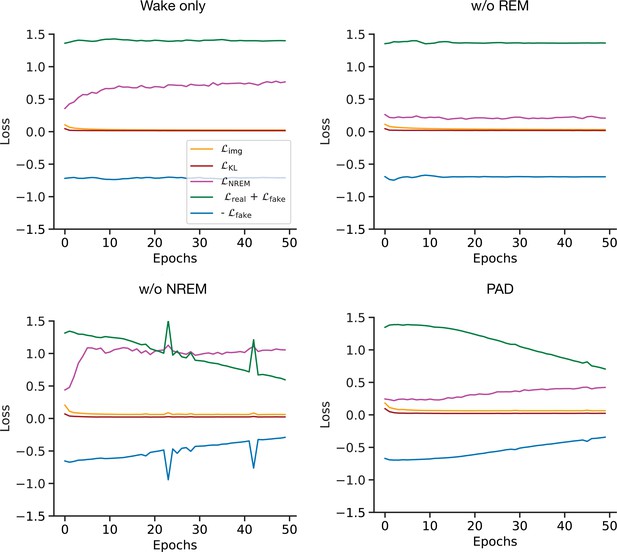

Training losses for the full and pathological models with the CIFAR-10 dataset.

Evolution of training losses used to optimize the encoder and generator networks (see Materials and methods) over training epochs for the full and pathological models.

Linear classification performance for the full model and all pathological conditions.

For details, see Figure 4.

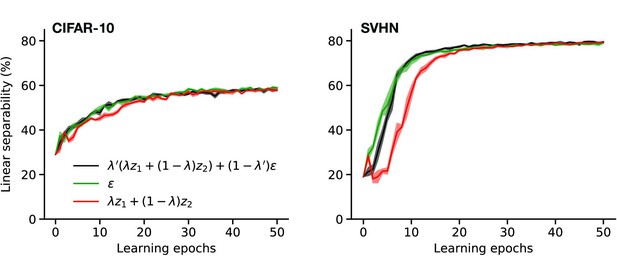

Linear classification performance for different mixing strategies during rapid eye movement (REM).

Linear separability of latent representations with training epochs for perturbed and adversarial dreaming (PAD) trained with different REM phases: one driven by a convex combination of mixed memories and noise (black), one by pure noise (green), and one by mixed memories only (red). For details, see Figure 4.

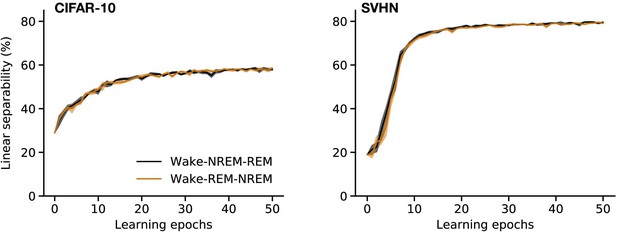

Linear classification performance for different orders of sleep phases.

Linear separability of latent representations with training epochs for perturbed and adversarial dreaming (PAD) trained when non-rapid eye movement (NREM) precedes rapid eye movement (REM) phase (Wake–NREM–REM, black) or when REM precedes NREM (Wake–REM–NREM, brown).

Tables

Final classification performance for the full model and all pathological conditions for unoccluded images.

Mean and standard error of the mean (SEM) over four different initial conditions of the linear separability of latent representations (linear classification accuracy, in %) at the end of training (epoch 50) for perturbed and adversarial dreaming (PAD) and its pathological variants.

| Dataset | PAD | W/o memory mix | W/o REM | W/o NREM | Wake only |
|---|---|---|---|---|---|
| CIFAR-10 | 58.25 ± 0.70 | 53.87 ± 0.85 | 46.00 ± 0.43 | 58.00 ± 0.34 | 42.25 ± 0.54 |
| SVHN | 78.92 ± 0.40 | 60.87 ± 5.07 | 42.30 ± 1.51 | 73.25 ± 0.22 | 41.93 ± 0.65 |

REM: rapid eye movement; NREM: non-rapid eye movement.
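
Each table entry has the form mean ± SEM over four runs. The sketch below shows one common way to compute such an entry. It assumes the sample standard deviation (n − 1 in the denominator), which the source does not state, and the per-run accuracies are hypothetical values, not results from the paper.

```python
import math
import statistics


def mean_sem(values):
    """Return the mean and the standard error of the mean of `values`.

    Uses the sample standard deviation (n - 1 denominator), an assumption.
    """
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean, sem


# Hypothetical accuracies (%) from four different initial conditions.
run_accuracies = [57.0, 58.0, 59.0, 60.0]
mean, sem = mean_sem(run_accuracies)
entry = f"{mean:.2f} ± {sem:.2f}"
```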