Neural mechanisms underlying the temporal organization of naturalistic animal behavior

Abstract

Naturalistic animal behavior exhibits a strikingly complex organization in the temporal domain, with variability arising from at least three sources: hierarchical, contextual, and stochastic. What neural mechanisms and computational principles underlie such intricate temporal features? In this review, we provide a critical assessment of the existing behavioral and neurophysiological evidence for these sources of temporal variability in naturalistic behavior. Recent research converges on an emergent mechanistic theory of temporal variability based on attractor neural networks and metastable dynamics, arising via coordinated interactions between mesoscopic neural circuits. We highlight the crucial role played by structural heterogeneities as well as noise from mesoscopic feedback loops in regulating flexible behavior. We assess the shortcomings and missing links in the current theoretical and experimental literature and propose new directions of investigation to fill these gaps.

Introduction

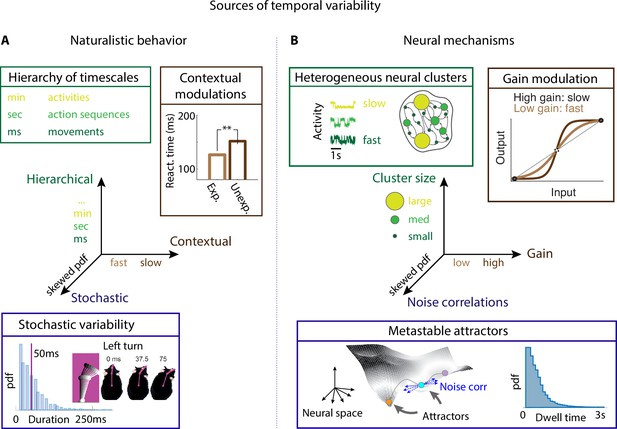

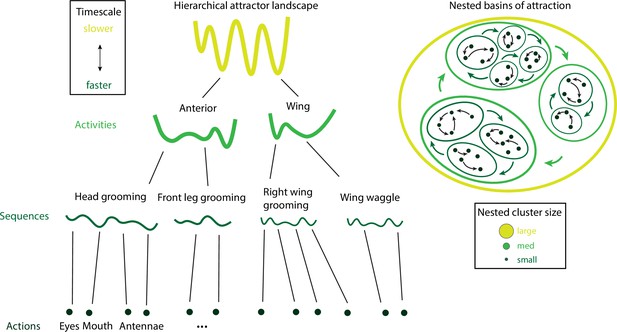

Naturalistic animal behavior exhibits a striking amount of variability in the temporal domain (Figure 1A). An individual animal’s behavioral variability can be decomposed along at least three axes: hierarchical, contextual, and stochastic. The first source of variability originates from the vast hierarchy of timescales underlying self-initiated, spontaneous behavior, ranging from milliseconds to minutes in animals (and to years for humans). On the sub-second timescale, animals perform fast movements lasting tens to hundreds of milliseconds. In rodents, these movements include whisking, sniffing, and moving their limbs. On the slower timescale of seconds, animals concatenate these fast movements into behavioral sequences of self-initiated actions, such as exploratory sequences (moving around an object while sniffing, whisking, and wagging their noses) or locomotion sequences (coordinating limb and head movements to reach a landmark). These sequences follow specific syntax rules (Berridge et al., 1987) and can last several seconds. On timescales of minutes or longer, mice engaged in a specific activity, such as foraging, may repeat the ‘walk and explore’ behavioral sequence multiple times, persisting toward their goal for long periods. In these simple examples, a freely moving mouse exhibits behavior whose temporal organization spans several orders of magnitude simultaneously, from the sub-second scale (actions), to several seconds (behavioral sequences), to minutes (goals to attain). A leading theory to explain the temporal organization of naturalistic behavior is that behavioral action sequences are arranged in a hierarchical structure (Tinbergen, 1951; Dawkins, 1976; Simon, 1962), where actions are nested into behavioral sequences that are then grouped into activities. This hierarchy of timescales is ubiquitously observed across species during naturalistic behavior.

Neural mechanisms underlying the temporal organization of naturalistic animal behavior.

(A) Three sources of temporal variability in naturalistic behavior: hierarchical (from fast movements, to behavioral action sequences, to slow activities and long-term goals), contextual (reaction times are faster when stimuli are expected), and stochastic (the distribution of ‘turn right’ action durations in freely moving rats is right-skewed). (B) Neural mechanisms underlying each source of temporal variability: hierarchical variability may arise from recurrent networks with a heterogeneous distribution of neural cluster sizes; contextual modulations from neuronal gain modulation; stochastic variability from metastable attractor dynamics where transitions between attractors are driven by low-dimensional noise, leading to right-skewed distributions of attractor dwell times. Panel (A) adapted from Figure 2 of Jaramillo and Zador, 2011. Panel (A, bottom) reproduced from Figure 6 of Findley et al., 2021.

The second source of temporal variability stems from the different contexts in which a specific behavior can be performed. For example, reaction times to a delivered stimulus can be consistently faster when an animal expects it than when the stimulus is unexpected (Jaramillo and Zador, 2011). Contextual sources can be either internally generated (modulations of brain state, arousal, expectation) or externally driven (pharmacological, optogenetic, or genetic manipulations; or changes in task difficulty or environment).

After controlling for all known contextual effects, an individual’s behavior still exhibits a large amount of residual temporal variability across repetitions of the same behavioral unit. This residual variability can be quantified as a property of the distribution of movement durations, which is typically strongly right-skewed with an exponential tail (Wiltschko et al., 2015; Findley et al., 2021; Recanatesi et al., 2022), suggesting a stochastic origin. We define stochastic variability as the fraction of variability in the expression of a particular behavioral unit that cannot be explained by any readily measurable variable.
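As a minimal sketch of how such residual variability might be summarized, the snippet below computes the coefficient of variation and sample skewness of a set of durations, and estimates the rate of an exponential tail using the memoryless property: if the tail is exponential, excesses over a threshold are themselves exponential with the same rate. The durations are synthetic draws from a shifted exponential standing in for real movement durations; the function name, threshold, and parameters are illustrative assumptions.

```python
import numpy as np

def duration_stats(durations, tail_threshold):
    """Summarize a sample of behavioral-unit durations (in seconds)."""
    d = np.asarray(durations, dtype=float)
    mean, std = d.mean(), d.std()
    cv = std / mean                                  # coefficient of variation
    skewness = np.mean(((d - mean) / std) ** 3)      # sample skewness (biased estimator)
    # For an exponential tail, excesses over the threshold are exponential with
    # the same rate, so the maximum-likelihood rate is 1 / (mean excess).
    excess = d[d > tail_threshold] - tail_threshold
    tail_rate = 1.0 / excess.mean()
    return {"mean": mean, "median": np.median(d), "cv": cv,
            "skewness": skewness, "tail_rate": tail_rate}

# Synthetic example: a 0.1 s minimum duration plus an exponential with mean 0.4 s.
rng = np.random.default_rng(0)
durations = 0.1 + rng.exponential(scale=0.4, size=20_000)
stats = duration_stats(durations, tail_threshold=0.5)
print(stats)
```

For these synthetic data the skewness should come out near 2 (the value for an exponential), the median should sit well below the mean, and the tail rate should be close to 1/0.4 = 2.5 per second.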

These three aspects of temporal variability (hierarchical, contextual, stochastic) can all be observed in the naturalistic behavior of a single individual during its lifetime. Other sources of behavioral variability have also been investigated. Variability in movement execution and body posture (Dhawale et al., 2017) may originate from the motor periphery, such as noise in force production within muscles (van Beers et al., 2004), from deterministic chaotic dynamics arising from neural activity (Costa et al., 2021), or from the biomechanical structure of an animal’s body (Loveless et al., 2019). One additional source is phenotypic variability across individual animals, an important aspect of behavior from an ethological and evolutionary perspective (Honegger and de Bivort, 2018). Although we will briefly discuss some features of variability in movement execution and phenotypic individuality, this review focuses on the three axes of variability described above, which pertain to the behavior of a single animal.

From this overview, we conclude that the temporal structure of naturalistic, self-initiated behavior can be decomposed along at least three axes of temporal variability (Figure 1A): a large hierarchy of simultaneous timescales at which behavior unfolds, ranging from milliseconds to minutes; contextual modulations affecting the expression of behavior at each level of this hierarchy; and stochastic variability of the same behavioral unit across repetitions in the same context. These three axes may or may not interact, depending on the scenario and the species. Here, we will review recent theoretical and computational results that lay the foundations of a mechanistic theory explaining how these three sources of temporal variability can arise from biologically plausible computational motifs. More specifically, we will address the following questions:

Neural representation: How are self-initiated actions represented in the brain and how are they concatenated into behavioral sequences?

Stochasticity: What neural mechanisms underlie the temporal variability observed in behavioral units across multiple repetitions within the same context?

Context: How do contextual modulations affect the temporal variability in behavior, enabling flexibility in action timing and behavioral sequence structure?

Hierarchy: How do neural circuits generate the vast hierarchy of timescales, from milliseconds to minutes, that is a hallmark of naturalistic behavior?

The mechanistic approach we will review is based on the theory of metastable attractors (Figure 1B), which is emerging as a unifying principle explaining many different aspects of the dynamics and function of neural circuits (La Camera et al., 2019). We will first establish a precise correspondence between behavioral units and neural attractors at the level of self-initiated actions (the lowest level of the temporal hierarchy). Then we will show how behavioral sequences emerge from sequences of metastable attractors. Our starting point is the observation that transitions between metastable attractors can be driven by neural variability internally generated within a local recurrent circuit. This mechanism can naturally explain action timing variability of stochastic origin. We will examine biologically plausible mesoscopic circuits that can learn to flexibly execute complex behavioral sequences. We will then review the neural mechanisms underlying contextual modulations of behavioral variability. We will show that the average transition time between metastable attractors can be regulated by changes in single-cell gain. Gain modulation is a principled neural mechanism mediating the effects of context; it can be induced internally, via different neuromodulatory and cortico-cortical pathways, or externally, via pharmacological or experimental interventions. Finally, we will review how a large hierarchy of timescales can naturally and robustly emerge from heterogeneities in a circuit’s structural connectivity motifs, such as neural clusters of heterogeneous sizes. Although most of the review focuses on the behavior of individual animals, we discuss how recent results on multianimal interactions and social behavior may challenge existing theories of naturalistic behavior and brain function.
Appendices 1–3 provide guides to computational methods for behavioral video analyses and modeling; theoretical and experimental aspects of attractor dynamics; and biologically plausible models of metastable attractors.

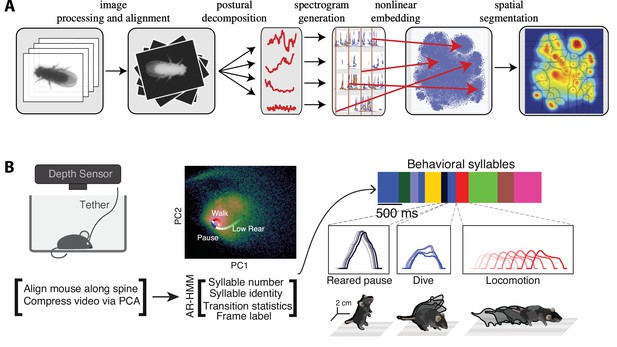

The stochastic nature of naturalistic behavior

The study of naturalistic behavior based on animal videos has recently undergone a revolution thanks to the spectacular accuracy and efficiency of computational methods for animal pose tracking (Stephens et al., 2008; Berman et al., 2014; Hong et al., 2015; Mathis et al., 2018; Pereira et al., 2019; Nourizonoz et al., 2020; Segalin et al., 2020; see Appendix 1 and Table 1 for details). These new methods work across species and conditions, ushering in a new era for computational neuroethology (Anderson and Perona, 2014; Brown and de Bivort, 2018; Datta et al., 2019). They have yielded a quantitative classification of self-initiated behavior, revealing a stunning amount of variability both in its lexical features (which actions to choose, in which order) and in its temporal dimension (when to act) (Berman et al., 2016; Wiltschko et al., 2015; Markowitz et al., 2018; Marshall et al., 2021; Recanatesi et al., 2022). Different ways to characterize the behavioral repertoire on short timescales have been developed, depending on whether the basic units of behavior are assumed to be discrete or continuous (Appendix 1). One can define stereotypical behaviors or postures by clustering probability density maps of spectrotemporal features extracted from behavioral videos, as in the example of fruit flies (Berman et al., 2014; Berman et al., 2016; Figure 2A) or rats (Marshall et al., 2021; Figure 7B). Alternatively, one can capture actions or postures as discrete states of a state–space model with Markovian dynamics, each state described by its own autoregressive dynamics, which account for stochastic movement variability (Figure 2B). When the behavioral repertoire is discretized, the definition of the smallest building block of behavior depends on the level of granularity that best suits the scientific question being investigated.
Within this discrete framework, state–space models have been applied successfully to Caenorhabditis elegans (Linderman et al., 2019), Drosophila (Tao et al., 2019), zebrafish (Johnson et al., 2020), and rodents (Wiltschko et al., 2015; Markowitz et al., 2018). At the timescale of actions and postures, transition times between consecutive actions are well described by a Poisson process (Killeen and Fetterman, 1988), characterized by a right-skewed distribution of inter-action intervals (Figure 3). In this and the next section, we will focus on short timescales (up to a few seconds), where state–space models can provide parsimonious accounts of behavior. However, these models fail to account for longer-timescale and non-Markovian structure in behavior; in later sections, we will move to a more data-driven approach to investigate these aspects of behavior (see Appendix 1).
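The link between Markovian state–space models and right-skewed intervals can be illustrated with a toy sketch (an assumption-laden illustration, not a fitted behavioral model). In a discrete-time Markov chain where each state persists with self-transition probability p, dwell times are geometrically distributed with mean 1/(1 − p) time steps, the discrete-time analog of the exponential intervals of a Poisson process. The 3-state transition matrix below is hypothetical.

```python
import numpy as np

def simulate_chain(P, n_steps, rng, start=0):
    """Sample a state sequence from a discrete-time Markov chain (rows of P sum to 1)."""
    cum = np.cumsum(P, axis=1)
    u = rng.random(n_steps)
    states = np.empty(n_steps, dtype=int)
    states[0] = start
    last = len(P) - 1
    for t in range(1, n_steps):
        # Inverse-CDF sampling of the next state from row states[t - 1];
        # min() guards against cumulative sums that round to slightly below 1.
        states[t] = min(np.searchsorted(cum[states[t - 1]], u[t], side="right"), last)
    return states

def dwell_times(states):
    """Lengths (in time steps) of runs of identical consecutive states, dropping
    the final run, which is truncated by the end of the recording."""
    change = np.flatnonzero(np.diff(states)) + 1
    bounds = np.concatenate(([0], change))
    return np.diff(bounds)

# Hypothetical transition matrix: each state persists with probability 0.9.
P = np.array([[0.90, 0.08, 0.02],
              [0.02, 0.90, 0.08],
              [0.08, 0.02, 0.90]])
rng = np.random.default_rng(4)
states = simulate_chain(P, 50_000, rng)
d = dwell_times(states)
print(d.mean(), np.median(d))   # theory: mean 1 / (1 - 0.9) = 10 steps
```

The median dwell time (7 steps for this geometric distribution) falls below the mean, the signature of a right-skewed distribution.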

Definitions of behavioral units (see Appendix 1 for how to extract these features from videos).

| Behavioral unit | Definition |
| --- | --- |
| Action | The simplest building block of behavior, at the lowest level of the hierarchy. This definition depends on the level of granularity (spatiotemporal resolution) required and on the scientific questions being investigated (see Appendix 1). A widely used definition of an action is a short stereotypical trajectory in posture space (synonyms include movemes and syllables [Anderson and Perona, 2014; Brown and de Bivort, 2018]), operationally defined as a discrete latent trajectory of an autoregressive state–space model fit to pose-tracking time-series data (Figure 2; Wiltschko et al., 2015; Findley et al., 2021). An alternative definition is in terms of short spectrotemporal representations from a time-frequency analysis of videos (Berman et al., 2016; Marshall et al., 2021). Examples include poking into or out of a nose port, waiting at a port, and pressing a lever. |
| Behavioral action sequence | A combination of actions concatenated in a meaningful yet stereotyped way, lasting up to a few seconds. A sequence can occur during trial-based experimental protocols (e.g., a short sequence of actions aimed at obtaining a reward in an operant conditioning task [Geddes et al., 2018; Murakami et al., 2014]; running between opposite ends of a linear track [Maboudi et al., 2018]) or during spontaneous periods (e.g., repeatedly scratching its own head; picking up and manipulating an object). |
| Activity | A concatenation of multiple behavioral sequences, often repeated and of variable duration, typically aimed at obtaining a goal and lasting up to minutes or even hours. Examples include grooming, foraging, mating, feeding, and exploration. Activities typically unfold in naturalistic freely moving settings devoid of experimenter-controlled trial structure. |

The complex spatiotemporal structure in naturalistic behavior.

(A) Identification of behavioral syllables in the fruit fly. From left to right: raw images of Drosophila melanogaster (1) are segmented from the background, rescaled, and aligned (2), then decomposed via principal component analysis (PCA) into a low-dimensional time series (3). A Morlet wavelet transform yields a spectrogram for each postural mode (4); these spectrograms are mapped onto a two-dimensional plane via t-distributed stochastic neighbor embedding (t-SNE) (5). A watershed transform separates individual peaks from one another (6). (B) Identification of behavioral action sequences from 3D videos with MoSeq. An autoregressive hidden Markov model (AR-HMM, right) fit to a PCA-based compression of the videos (center) identifies hidden states representing actions (color bars, right, top: color-coded intervals where each HMM state is detected). Panel (A) reproduced from Figures 2 and 5 of Berman et al., 2014. Panel (B) adapted from Figure 1 of Wiltschko et al., 2015.
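Step (4) of the pipeline in panel (A) can be sketched as follows: convolving one postural-mode time series with a complex Morlet wavelet yields its amplitude at each frequency and time point. This is a minimal NumPy illustration under assumed parameters (the wavelet parameter ω0 = 5, the sampling rate, the frequency grid, and the L1 normalization are our choices and may differ from published implementations); the t-SNE embedding and watershed steps are omitted.

```python
import numpy as np

def morlet_amplitudes(x, freqs, fs, omega0=5.0):
    """Amplitude of x at each frequency (rows) and time sample (columns).

    For each requested frequency f (Hz), the Gaussian envelope width is set so
    that the complex Morlet wavelet is centered on f.
    """
    x = np.asarray(x, dtype=float)
    out = np.empty((len(freqs), len(x)))
    for i, f in enumerate(freqs):
        sigma_t = omega0 / (2 * np.pi * f)            # envelope width in seconds
        t = np.arange(-4 * sigma_t, 4 * sigma_t + 1 / fs, 1 / fs)
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t**2 / (2 * sigma_t**2))
        wavelet /= np.sum(np.abs(wavelet))            # L1 normalization
        out[i] = np.abs(np.convolve(x, wavelet, mode="same"))
    return out

fs = 100.0                                    # assumed sampling rate (Hz)
t = np.arange(1000) / fs                      # 10 s of data
signal = np.sin(2 * np.pi * 8 * t)            # synthetic 8 Hz "postural mode"
freqs = np.arange(1, 21)                      # 1-20 Hz grid
amps = morlet_amplitudes(signal, freqs, fs)
mid = amps[:, len(t) // 2]                    # spectrum at the center of the recording
print(freqs[np.argmax(mid)])
```

With this normalization a unit-amplitude sinusoid at a matched frequency gives an amplitude of about 0.5, so the spectrum at the center of the recording peaks at 8 Hz.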

Metastable attractors in secondary motor cortex can account for the stochasticity in action timing.

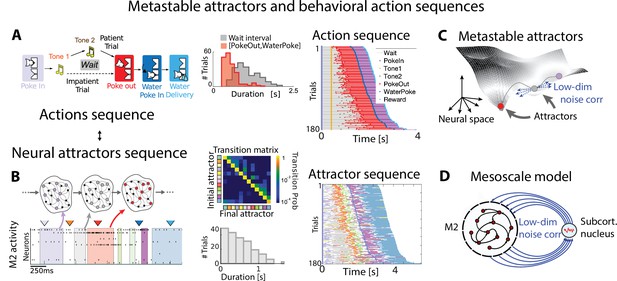

(A) In the Marshmallow experiment, a freely moving rat poked into a wait port, where tone 1 signaled the trial start. The rat could poke out at any time (after tone 2, played at random times, in patient trials; or before tone 2, in impatient trials) and move to the reward port to receive a water reward (large and small for patient and impatient trials, respectively). Center: distributions of wait times (gray) and of intervals between Poke Out and Water Poke (red) reveal a large temporal variability across trials. Right: behavioral sequences (sorted from shortest to longest) in a representative session. (B) Left: representative neural ensemble activity from M2 during an impatient trial (tick marks indicate spikes; colored arrows indicate the rat’s actions, same colors as in A) with overlaid hidden Markov model (HMM) states, interpreted as neural attractors, each represented as a set of coactivated neurons within a network (colored intervals indicate HMM states detected with probability above 80%). Top center: transition probability matrix between HMM states. Bottom center: the distribution of state durations (representative gray pattern from left plot) is right-skewed, suggesting a stochastic origin of state transitions. Right: sequence of color-coded HMM states from all trials in the representative session of panel (A). (C) Schematic of an attractor landscape: attractors representing the HMM states in panel (B) are shown as potential wells. Transitions between consecutive attractors are driven by low-dimensional correlated noise. (D) Schematic of a mesoscopic neural circuit, comprising a feedback loop between M2 and a subcortical nucleus, that generates stable attractor sequences with variable transition times. Panels (A) and (B) adapted from Figures 1 and 5 of Recanatesi et al., 2022.

Self-initiated actions and ensemble activity patterns in premotor areas

We begin our investigation of naturalistic behavior at the lowest level of the hierarchy, examining the neural mechanisms underlying self-initiated actions. How does the brain encode the intention to perform an action? How are consecutive actions concatenated into a behavioral sequence? The recent characterization of unrestrained naturalistic behavior in the foundational studies mentioned above revealed a vast repertoire of dozens to hundreds of actions (Berman et al., 2014; Wiltschko et al., 2015; Markowitz et al., 2018; Marques et al., 2018; Costa et al., 2019; Schwarz et al., 2015) (although the size of the repertoire depends on the coarse-graining scale of the behavioral analysis), leading to a combinatorial explosion in the number of possible action sequences. To tame this curse of dimensionality, typical of unconstrained naturalistic behavior, a promising approach is to control for the lexical variability in behavioral sequences by designing a naturalistic task in which an animal performs a single behavioral sequence of self-initiated actions, while each action retains its unrestrained range of temporal variability. Murakami et al., 2014, Murakami et al., 2017, and Recanatesi et al., 2022 adopted this strategy, training freely moving rats to perform a self-initiated task (the rodent version of the ‘Marshmallow task’; Mischel and Ebbesen, 1970; Figure 3A) in which a specific set of actions had to be performed in a fixed order to obtain a reward. The many repetitions of the same behavioral sequence yielded a large sample size to elucidate the source of temporal variability across trials. Action timing retained the temporal variability that is a hallmark of naturalistic behavior, characterized by skewed distributions of action durations (Figure 3A).

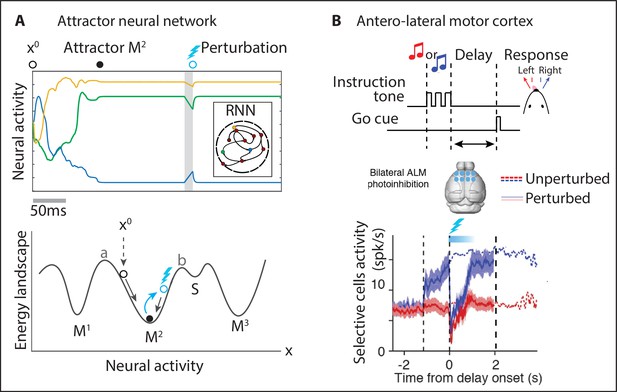

What neural mechanism generates the large variability in action timing? A large number of studies have implicated the secondary motor cortex (M2) in rodents as part of a distributed network involved in motor planning in head-fixed mice (Li et al., 2016; Barthas and Kwan, 2017) and in controlling the timing of self-initiated actions in freely moving rats (Murakami et al., 2014; Murakami et al., 2017). Ensemble activity recorded in M2 during the Marshmallow task unfolded via sequences of multineuron firing patterns, each lasting hundreds of milliseconds to a few seconds; within each pattern, neurons fired at an approximately constant rate (Figure 3B). Such long dwell times, much longer than typical single-neuron time constants, suggest that the observed metastable patterns may be an emergent property of the collective circuit dynamics within M2 and reciprocally connected brain regions. Crucially, both neural and behavioral sequences were highly reliable yet temporally variable, and the distributions of action durations and of neural pattern durations were both right-skewed. This temporal heterogeneity suggests that a stochastic mechanism, such as noise-driven transitions between metastable states, could contribute to driving transitions between consecutive patterns within a sequence (see below). A dictionary between actions and neural patterns could be established, revealing that the onset of specific patterns reliably preceded upcoming self-initiated actions (Figure 3A and B; e.g., the onset of the red pattern reliably precedes the poke out movement). The dictionary trained on the rewarded sequence generalized to epochs in which the animal performed erratic, nonrewarded behavior, where pattern onsets predicted upcoming actions as well.
The use of state–space models with underlying Markovian dynamics as generative models, capturing both naturalistic behavior (Wiltschko et al., 2015; Batty et al., 2019; Johnson et al., 2020; Parker et al., 2021; Findley et al., 2021) and the underlying neural pattern sequences (Maboudi et al., 2018; Linderman et al., 2019; Recanatesi et al., 2022), is a powerful tool to bridge the first two levels of the temporal hierarchy in naturalistic behavior: a link from actions to behavioral sequences. This generative framework further revealed fundamental aspects of neural coding in M2 ensembles, such as their distributed representations, dense coding, and single-cell multistability (Recanatesi et al., 2022; Mazzucato et al., 2015).
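The Figure 3 caption notes that HMM states were considered detected when their posterior probability exceeded 80%. A minimal sketch of that bookkeeping step, assuming a (time bins × states) array of posterior probabilities has already been obtained from a fitted HMM, extracts the detected intervals and their durations, from which dwell-time distributions can be built. The function name, bin size, and toy posteriors are hypothetical.

```python
import numpy as np

def detected_intervals(posterior, dt, threshold=0.8):
    """Return (state, onset_s, duration_s) for each run of time bins in which a
    single state's posterior exceeds `threshold`; other bins stay unlabeled."""
    posterior = np.asarray(posterior)
    best = posterior.argmax(axis=1)
    label = np.where(posterior.max(axis=1) > threshold, best, -1)   # -1 = undetected
    intervals = []
    start = 0
    for t in range(1, len(label) + 1):
        # Close the current run at the end of the data or when the label changes.
        if t == len(label) or label[t] != label[start]:
            if label[start] >= 0:
                intervals.append((int(label[start]), start * dt, (t - start) * dt))
            start = t
    return intervals

# Toy posteriors (50 ms bins): state 0, one ambiguous bin, then state 1.
posterior = np.array([[0.95, 0.05]] * 4 + [[0.5, 0.5]] + [[0.1, 0.9]] * 5)
intervals = detected_intervals(posterior, dt=0.05)
print(intervals)
```

Bins where no state is confidently detected (here the ambiguous fifth bin) are excluded, so the resulting durations only count epochs of confident state assignment.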

Action timing variability from metastable attractors

Neural patterns in M2 may represent attractors of the underlying recurrent circuit dynamics. In the theory of dynamical systems, an attractor is a set of states toward which a system evolves from a large set of initial conditions. The central feature of an attractor is that, if the system is perturbed slightly away from it, the system tends to return to it. Within the context of neural activity, an attractor is a persistent pattern of population activity in which neurons fire at an approximately constant rate for an extended period of time (see Appendix 2 for models of attractor dynamics). Foundational work in head-fixed mice showed that licking preparatory activity in M2 during a delayed response task is encoded by choice-specific discrete attractors (Inagaki et al., 2019). Attractors can be stable (Amit and Brunel, 1997), as observed in monkey IT cortex in working memory tasks (Fuster and Jervey, 1981; Miyashita and Chang, 1988). Attractors can also be metastable (see Appendix 3), in which case they typically last for hundreds of milliseconds before noise fluctuations spontaneously trigger a transition to a different attractor (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012).
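The defining property of an attractor, that activity perturbed slightly away from it returns to it, can be illustrated with a classic Hopfield network, used here as a textbook example rather than as the model of the cited studies. Binary patterns are stored in symmetric Hebbian couplings; starting from a corrupted version of one pattern, asynchronous updates relax the state back onto it. Network size, number of patterns, and corruption level are arbitrary choices.

```python
import numpy as np

def hebbian_weights(patterns):
    """Symmetric Hebbian couplings storing +/-1 patterns (rows), no self-coupling."""
    n = patterns.shape[1]
    W = patterns.T @ patterns / n
    np.fill_diagonal(W, 0.0)
    return W

def relax(W, state, rng, n_sweeps=5):
    """Asynchronous updates: each neuron aligns with the sign of its input field."""
    s = state.copy()
    for _ in range(n_sweeps):
        for i in rng.permutation(len(s)):
            s[i] = 1 if W[i] @ s >= 0 else -1
    return s

rng = np.random.default_rng(1)
n_neurons, n_patterns = 200, 3
patterns = rng.choice([-1, 1], size=(n_patterns, n_neurons))
W = hebbian_weights(patterns)

# Perturb pattern 0 by flipping 15% of its neurons, then let the network relax.
probe = patterns[0].copy()
flip = rng.choice(n_neurons, size=30, replace=False)
probe[flip] *= -1
final = relax(W, probe, rng)

overlap_before = probe @ patterns[0] / n_neurons   # 0.7 by construction
overlap_after = final @ patterns[0] / n_neurons
print(overlap_before, overlap_after)
```

At this low memory load (3 patterns in 200 neurons), the overlap with the stored pattern should return to 1, which is the "return after a small perturbation" property described above.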

Metastable attractors can be concatenated into sequences, which can either be random, as observed during ongoing periods in sensory cortex (Mazzucato et al., 2015), or highly reliable, encoding the evoked response to specific sensory stimuli (Jones et al., 2007; Miller and Katz, 2010; Mazzucato et al., 2015), or underlying freely moving behavior (Maboudi et al., 2018; Recanatesi et al., 2022). In particular, M2 ensemble activity in the Marshmallow experiment was consistent with the activity generated by a specific sequence of metastable attractors (Figure 3). The main hypothesis underlying this model is that the onset of an attractor drives the initiation of a specific action as determined by the action/pattern dictionary (Figure 3A and B) and the dwell time in a given attractor sets the inter-action-interval. The dynamics of the relevant motor output and the details of variability in movement execution are generated downstream of this attractor circuit (see Figure 5 and ‘Discussion’ section). The main features observed in the M2 ensemble dynamics during the Marshmallow task (i.e., long-lived patterns with a right-skewed dwell-time distribution, concatenated into highly reliable pattern sequences) can be explained by a two-area mesoscopic network where a large recurrent circuit (representing M2) is reciprocally connected to a small circuit lacking recurrent couplings (a subcortical area likely representing the thalamus [Guo et al., 2018; Guo et al., 2017; Inagaki et al., 2022], see Figure 5C). In this biologically plausible model, metastable attractors are encoded in the M2 recurrent couplings, and transitions between consecutive attractors are driven by low-dimensional noise fluctuations arising in the feedback projections from the subcortical nucleus back to M2. As a consequence of the stochastic origin of the transitions, the distribution of dwell times for each attractor is right-skewed, closely matching the empirical data. 
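Why noise-driven transitions produce right-skewed dwell times can be seen in a one-dimensional caricature, which is our illustrative assumption and not the two-area network of Recanatesi et al., 2022: a particle in a double-well potential V(x) = x⁴/4 − x²/2, driven by white noise. Each well plays the role of an attractor; escapes are rare, nearly memoryless events, so dwell times are approximately exponential, with a mean above the median. The noise amplitude, step size, and hysteresis threshold are arbitrary.

```python
import numpy as np

def simulate_double_well(n_steps, dt, sigma, rng, x0=1.0):
    """Euler-Maruyama integration of dx = -V'(x) dt + sigma dW, V(x) = x**4/4 - x**2/2."""
    noise = sigma * np.sqrt(dt) * rng.standard_normal(n_steps)
    x = np.empty(n_steps)
    x[0] = x0
    for t in range(1, n_steps):
        drift = x[t - 1] - x[t - 1] ** 3            # -V'(x)
        x[t] = x[t - 1] + drift * dt + noise[t]
    return x

def attractor_dwell_times(x, dt, threshold=0.5):
    """Times between switches from one well to the other. Hysteresis at
    +/-threshold prevents brief recrossings near the barrier from counting."""
    state = 1 if x[0] > 0 else -1
    last_switch, dwells = 0, []
    for t in range(1, len(x)):
        crossed = (state == 1 and x[t] < -threshold) or (state == -1 and x[t] > threshold)
        if crossed:
            dwells.append((t - last_switch) * dt)
            state, last_switch = -state, t
    return np.array(dwells)

rng = np.random.default_rng(2)
x = simulate_double_well(n_steps=200_000, dt=0.05, sigma=0.5, rng=rng)
dwells = attractor_dwell_times(x, dt=0.05)
print(len(dwells), dwells.mean(), np.median(dwells))
```

In this caricature, lowering `sigma` or deepening the wells lengthens dwell times sharply, while the shape of the distribution stays right-skewed.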
This prediction of the model was confirmed in the data: the ensemble fluctuations around each pattern were low-dimensional and oriented in the direction of the next pattern in the sequence (Figure 3). This model offers a new interpretation of low-dimensional (differential) correlations: although their presence in sensory cortex may be detrimental to sensory encoding (Moreno-Bote et al., 2014), their presence in motor circuits seems to be essential for motor generation during naturalistic behavior (Recanatesi et al., 2022).
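The property tested here, that fluctuations around a pattern are low-dimensional and aligned with the next pattern, suggests a simple analysis: compute the leading principal component of population activity around pattern A and its cosine similarity with the direction from A to the next pattern B. The sketch below applies this to synthetic data constructed to have that property; the pattern vectors, noise levels, and function name are hypothetical, and with real recordings one would use activity samples assigned to a given HMM state.

```python
import numpy as np

def alignment_with_next(activity, pattern_a, pattern_b):
    """Cosine similarity between the leading principal component of `activity`
    (samples x neurons) and the direction from pattern_a to pattern_b, plus the
    fraction of variance explained by that component."""
    centered = activity - activity.mean(axis=0)
    _, svals, vt = np.linalg.svd(centered, full_matrices=False)
    pc1 = vt[0]
    direction = (pattern_b - pattern_a) / np.linalg.norm(pattern_b - pattern_a)
    cosine = abs(pc1 @ direction)                   # the sign of a PC is arbitrary
    explained = svals[0] ** 2 / np.sum(svals ** 2)
    return cosine, explained

rng = np.random.default_rng(3)
n_neurons, n_samples = 50, 2000
pattern_a = rng.uniform(2, 10, n_neurons)           # hypothetical firing rates (Hz)
pattern_b = rng.uniform(2, 10, n_neurons)
direction = (pattern_b - pattern_a) / np.linalg.norm(pattern_b - pattern_a)
# Synthetic fluctuations: a strong shared component along the A->B axis plus weak private noise.
shared = rng.normal(0, 2.0, size=(n_samples, 1)) * direction
private = rng.normal(0, 0.3, size=(n_samples, n_neurons))
activity = pattern_a + shared + private
cosine, explained = alignment_with_next(activity, pattern_a, pattern_b)
print(round(cosine, 3), round(explained, 3))
```

A cosine near 1 indicates that the dominant fluctuation axis points toward the next pattern; with unstructured (isotropic) noise the leading component would instead point in an arbitrary direction.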

Open issues

The hypothesis that preparatory activity for upcoming actions is encoded in discrete attractors in M2 has been convincingly demonstrated using causal manipulations in head-fixed mice (see Appendix 1), although a causal test of this hypothesis in freely moving animals is currently lacking. A shortcoming of the metastable attractor model of action timing in Recanatesi et al., 2022 is that the subcortical structure from which the low-dimensional variability originates remains unidentified. Thalamus and basal ganglia are both likely candidates, as parts of a large reciprocally connected mesoscopic circuit underlying action selection and execution (see Figure 5B), and more work is needed to precisely identify the origin of the low-dimensional variability.

The metastable attractor model assumes the existence of discrete units of behavior at the level of actions, although large variability in movement execution originating downstream of cortical areas may blur the distinction between the discrete behavioral units. The extent to which behavior can be interpreted as a sequence of discrete behavioral units or, rather, a superposition of continuously varying poses (see Appendix 1 for an in-depth discussion of this issue) is currently open for debate.

At higher levels of the behavioral hierarchy, repetitions of the same behavioral sequence (such as a jump attempt, an olfactory search trial, or a waiting trial, Figures 2 and 3) exhibit large temporal variability as well, characterized by right-skewed distributions (Lottem et al., 2018). It remains to be examined whether temporal variability in sequence duration may originate from a hierarchical model where sequences themselves are encoded in slow-switching metastable attractors in a higher cortical area or a distributed mesoscopic circuit (see Figure 5C and Figure 9).

A theory of metastable dynamics in biologically plausible models

A variety of neural circuit models have been proposed to generate metastable dynamics (see Appendix 3). However, a full quantitative understanding of the metastable regime is currently lacking. Such a theory is within reach in the case of recurrent networks of continuous rate units. In circuits where metastable dynamics arise from low-dimensional correlated variability (Recanatesi et al., 2022), dynamic mean field methods could be deployed to predict the statistics of switch times from underlying biological parameters. In biologically plausible models based on spiking circuits, it is not known how to quantitatively predict switch times from underlying network parameters. Phenomenological birth–death processes fit to spiking network simulations can give a qualitative understanding of the on–off cluster dynamics (Huang and Doiron, 2017; Shi et al., 2022), and it would be interesting to derive these models from first principles. Mean field methods for leaky integrate-and-fire networks can give a qualitative prediction of the effects of external perturbations on metastable dynamics, explaining how changes in an animal’s internal state can affect circuit dynamics (Mazzucato et al., 2019; Wyrick and Mazzucato, 2021). These qualitative approaches should be extended to fully quantitative ones. A promising method, deployed in random neural networks, is based on the universal colored-noise approximation to the Fokker–Planck equation, in which switch times between metastable states can be predicted from microscopic network parameters such as neural cluster size (Stern et al., 2021). Finally, a crucial direction for future investigation is to improve the biological plausibility of metastable attractor models by incorporating different inhibitory cell types. Progress along this line will open the way to quantitative experimental tests of the metastable attractor hypothesis using powerful optogenetic tools.
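For intuition about what a quantitative prediction of switch times looks like, consider the textbook Kramers approximation for a one-dimensional overdamped system. This is a deliberately simplified stand-in, not the colored-noise theory of Stern et al., 2021, nor a result for spiking networks. For $dx = -V'(x)\,dt + \sqrt{2D}\,dW_t$, with a potential minimum at $x_a$, a barrier top at $x_b$, barrier height $\Delta V = V(x_b) - V(x_a)$, and weak noise ($D \ll \Delta V$), the mean time to escape the well is approximately

$$
\langle \tau \rangle \approx \frac{2\pi}{\sqrt{V''(x_a)\,\lvert V''(x_b)\rvert}}\,\exp\!\left(\frac{\Delta V}{D}\right).
$$

In this approximation, switch times depend exponentially on the ratio of barrier height to noise intensity, so modest changes in parameters that reshape the landscape, or in the noise amplitude, can shift mean dwell times by large factors.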

Stochastic individuality

One important source of behavioral variability is phenotypic variability across individuals with identical genetic profiles, which matters from an ethological and evolutionary perspective (Honegger and de Bivort, 2018). Stochastic individuality is defined as the part of phenotypic variability due to nonheritable effects that cannot be predicted from measurable variables such as learning or other developmental conditions; an example is behavioral differences between identical twins reared in the same environment. Signatures of stochastic individuality have been found in rodents (Kawai et al., 2015; Marshall et al., 2021) and flies (Tao et al., 2019). Existing theoretical models have not examined which neural mechanisms may underlie this individuality, which may arise from differences in the developmental wiring of brain circuits related to axonal growth. The metastable attractor model in Recanatesi et al., 2022 may naturally accommodate some stochastic individuality: the location of each attractor in firing rate space is drawn from a random distribution, so across-animal variability may stem from different random realizations of the attractor landscape with the same underlying hyperparameters (i.e., mean and covariance of the neural patterns).
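The idea that individuality could reflect different random draws of the same attractor landscape can be made concrete in a few lines: each simulated "animal" receives its own attractor patterns, sampled from one shared Gaussian distribution with a fixed mean and covariance. The dimensions, hyperparameters, seeds, and function name are hypothetical; the point is only that the landscapes differ in detail while sharing their statistics.

```python
import numpy as np

def sample_landscape(mean, cov, n_attractors, seed):
    """Draw one animal's attractor patterns (rows) in firing-rate space.

    A Gaussian is used for simplicity, so individual entries can be negative;
    a more realistic sketch would use a nonnegative distribution of rates.
    """
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n_attractors)

n_neurons, n_attractors = 40, 8
mean = np.full(n_neurons, 5.0)           # shared hyperparameters (hypothetical)
cov = 4.0 * np.eye(n_neurons)
animal_1 = sample_landscape(mean, cov, n_attractors, seed=10)
animal_2 = sample_landscape(mean, cov, n_attractors, seed=11)

print(np.abs(animal_1 - animal_2).mean())   # realizations differ across animals
print(animal_1.mean(), animal_2.mean())     # yet share the same statistics
```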

Variability in movements and body posture

A large source of variability in behavior originates from variability in movement execution (Dhawale et al., 2017). In a delayed response task in overtrained primates, preparatory neural activity in premotor areas could only account for half of the trial-to-trial variability in movement execution (Churchland et al., 2006). This fraction of motor variability originating from preparatory neural activity can be potentially explained by the mesoscopic attractor model in Figure 3D. The remaining fraction may originate from the motor periphery, such as noise in force production within muscles (van Beers et al., 2004), which is not accounted for by our attractor models. In humans, trial-to-trial motor variability can be interpreted as a means to update control policies and motor output within a reinforcement learning paradigm (Wu et al., 2014). It is challenging to dissect this finer movement variability from the state–space model approach to behavioral classification as it aims at capturing discrete stereotypical movement features. Variability in postural behavior can also stem from purely deterministic dynamics, which have been modeled using state–space reconstruction based on delay embedding of multivariate time series (Costa et al., 2019; Costa et al., 2021; Costa et al., 2021). These methods are fundamentally different from state–space models: while the latter assume an underlying probabilistic origin of variability, the former assume that variability arises from deterministic chaos. In order to capture the finer scale of movements and body posture in C. elegans (Schwarz et al., 2015; Szigeti et al., 2015), a data-driven state–space reconstruction revealed that locomotion behavior exhibits signature of chaos and can be explained in terms of unstable periodic orbits (Costa et al., 2021). A completely different approach posits that variability in movements emerges from an animal’s body mechanics in the absence of any neural control. 
Strikingly, in a mechanical model of the Drosophila larval body, the animal’s complex exploratory behaviors were shown to emerge solely from the biomechanics of the body, in the absence of any neural dynamics (Loveless et al., 2019). In particular, behavioral variability stems from an anomalous diffusion process arising from the deterministic chaotic dynamics of the body.
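Delay embedding, the first step of the state–space reconstructions mentioned above, stacks time-lagged copies of a measured signal so that the structure of the underlying deterministic dynamics can be recovered from a lower-dimensional observation. The minimal NumPy sketch below shows this step for a single time series. The function name `delay_embed`, the lag `tau`, and the embedding dimension `dim` are our illustrative choices. The full pipelines of Costa et al., which work on multivariate posture time series and select the embedding from data, are not reproduced here.

```python
import numpy as np

def delay_embed(x, dim, tau):
    """Return the delay-embedding matrix of a 1D time series.

    Row t is [x[t], x[t + tau], ..., x[t + (dim - 1) * tau]], so the output
    has shape (len(x) - (dim - 1) * tau, dim).
    """
    x = np.asarray(x, dtype=float)
    n_rows = len(x) - (dim - 1) * tau
    if n_rows <= 0:
        raise ValueError("time series too short for this dim and tau")
    # Column i holds the series shifted by i * tau samples.
    return np.column_stack([x[i * tau : i * tau + n_rows] for i in range(dim)])

# Toy usage: a sampled sine wave, which a 2D embedding unfolds into a closed loop.
t = np.linspace(0, 4 * np.pi, 400)
emb = delay_embed(np.sin(t), dim=2, tau=25)
print(emb.shape)  # (375, 2)
```

In practice, `dim` and `tau` must be chosen from the data, and a multivariate signal would be embedded by stacking the lagged copies of every channel.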

Contextual modulation of temporal variability

The second source of temporal variability in naturalistic behavior arises from contextual modulations, which can be internally or externally driven. When internally driven, they may arise from changes in brain or behavioral state such as arousal, expectation, or task engagement. When externally driven, they may arise from changes in environmental variables, from the experimenter’s imposed task conditions, or from manipulations such as pharmacological, optogenetic, or genetic ones. Contextual modulations can affect several qualitatively different aspects of behavioral units at each level of the hierarchy (actions, sequences, activities): average duration; usage frequency; and transition probabilities between units. Moreover, context may also change the motor execution of a behavioral unit, for example, by improving the vigor of a certain movement upon learning or motivation. For each type of modulation, we will give several examples and review computational mechanisms that may explain them.

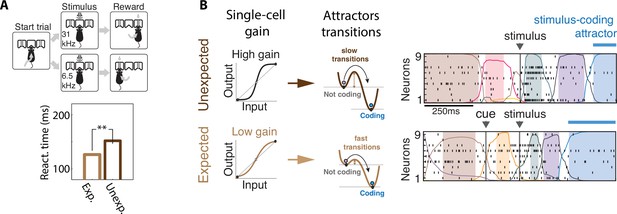

Action timing

The distribution of self-initiated action durations typically exhibits large variability, and its characteristic timescale can be summarized by the average duration (Figure 3). The average timing of an action is strongly modulated by contextual factors, both internally and externally driven. Examples of internally generated contextual factors include expectation and history effects. When events occur at predictable instants, anticipation improves performance, for example by shortening reaction times. This classic effect of expectation was documented in an auditory two-alternative choice task (Jaramillo and Zador, 2011), in which freely moving rats were rewarded for correctly discriminating the carrier frequency of a frequency-modulated target sound embedded among pure-tone distractors (Figure 4A). The target could occur early or late within each sound presentation, and temporal expectations about target timing were manipulated by changing the proportion of trials with early or late targets within each block. Valid expectations about target timing accelerated reaction times and improved detection accuracy, indicating enhanced perception. The auditory cortex is necessary to perform this task, and firing rates in auditory cortex populations are modulated by temporal expectations.

Contextual modulations mediated by changes in internal states.

(A) Expectation modulates reaction times. Top: freely moving rats were trained to initiate a trial and then choose either side port, depending on the frequency features of a presented stimulus (a target frequency embedded in a train of distractors), to collect reward. Bottom: reaction times for expected targets (light brown) were faster than for unexpected targets (dark brown). (B) Expectation induces faster stimulus coding. Contextual modulations that accelerate stimulus coding and reaction times may operate via a decrease in single-cell intrinsic gain (left), lowering the energy barrier separating noncoding attractors from the stimulus-coding attractor (center). Lower barriers allow for faster transitions into the stimulus-coding attractor, mediating faster encoding of sensory stimuli in the expected condition compared to the unexpected condition. Right: representative ensemble activity from rat gustatory cortex in two trials where the taste delivery was expected (bottom) or unexpected (top). The onset of the taste-coding attractor (blue) occurs earlier when the taste delivery is expected. Panel (A) adapted from Figures 1 and 2 of Jaramillo and Zador, 2011. Panel (B) adapted from Figure 5 of Mazzucato et al., 2019.

Contextual changes in brain state may also be induced by varying levels of neuromodulators. In the self-initiated waiting task of Figure 3A, optogenetic activation of serotonergic neurons in the dorsal raphe nucleus selectively prolonged the waiting period, leading to more patient and less impulsive behavior, but did not affect the timing of other self-initiated actions (Fonseca et al., 2015). Internally generated contextual modulations include history effects, which can affect the timing of self-initiated actions. In an operant conditioning task, where freely moving mice learned to press a lever for a minimum duration to earn a reward (Fan et al., 2012), the distribution of action timing depended on the outcome of the preceding trial. After a rewarded trial, mice exhibited longer latencies to initiate the next trial but shorter press durations; after a failure, the opposite occurred, with shorter latencies to engage and longer press durations. Trial history effects are complex and action-specific, depend on several other factors including prior movements, and wane with increasing inter-trial intervals. Inactivation experiments showed that these effects rely on frontal areas such as the medial prefrontal and secondary motor cortices (Murakami et al., 2014; Murakami et al., 2017; Schreiner et al., 2021).

The contextual modulations considered so far act on the fast timescale of a few trials, but contextual modulations may also result from associative learning. In a lever press task, mice learned to adjust the average duration of their lever presses across three conditions: presses were either always rewarded regardless of duration, or rewarded only if longer than 800 ms or 1600 ms. Within each condition, the distribution of action timing exhibited large temporal variability, yet once the mice had learned the criteria, the average duration differed starkly between them (Fan et al., 2012; Schreiner et al., 2021). Reaction times to sensory stimuli and self-initiated waiting behavior, in the form of long lever presses or nose-pokes, have emerged as a fruitful approach to test hypotheses on contextual modulations and decision-making in naturalistic scenarios.

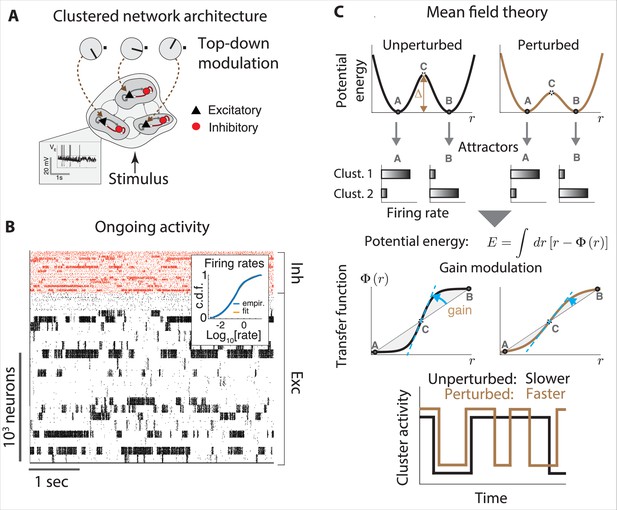

Controlling action timing via neuronal gain modulation

The paramount role of contextual modulations in regulating action timing during naturalistic scenarios has been well documented. However, the neural mechanisms underlying these effects remain elusive. Results from head-fixed preparations revealed some possible explanations, which have the potential to generalize to the freely moving case. Within the paradigm of metastable attractors (see Appendix 3), the speed at which cortical activity encodes incoming stimuli can be flexibly controlled in a state-dependent manner by transiently changing the baseline level of afferent input currents to a local cortical circuit. These baseline changes may be driven by top-down projections from higher cortical areas or by neuromodulators. In a recurrent circuit exhibiting attractor dynamics, changes in baseline levels modulate the average transition times between metastable attractors (Mazzucato et al., 2019; Wyrick and Mazzucato, 2021). In these models, attractors are represented by potential wells in the network’s energy landscape, and the height of the barrier separating two nearby wells determines the probability of transition between the two corresponding attractors (lower barriers are easier to cross and lead to faster transitions, Figure 4B). Changes in input baseline that decrease (increase) the barrier height lead to faster (slower) transitions to the coding attractor, in turn modulating reaction times.

Although it is not possible to measure potential wells directly in the brain, mean field theory shows that the height of these potential wells is directly proportional to the neuronal gain as measured by single-cell transfer functions (for an explanation, see Appendix 3). In particular, a decrease (increase) in pyramidal cell gain can lead to faster (slower) average action timing. This biologically plausible computational mechanism was proposed to explain the acceleration of sensory coding observed when gustatory stimuli are expected compared to when they are delivered as a surprise (Samuelsen et al., 2012; Mazzucato et al., 2019), and the faster encoding of visual stimuli observed in V1 populations during locomotion compared to when the mouse sits still (Wyrick and Mazzucato, 2021). Transition rates between attractors may also be modulated by varying the amplitude and color of the fluctuations in the synaptic inputs (see Appendix 3) while keeping the barrier heights fixed. Changes in excitatory and inhibitory (E/I) background synaptic inputs were also shown to induce gain modulation and changes in integration time (Chance et al., 2002).
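A one-dimensional caricature can illustrate the link between barrier height, noise, and transition speed. In this picture, an overdamped particle moves in a double-well potential and each well stands for an attractor. This is a toy sketch under our own assumptions, not the spiking network model of Mazzucato et al., 2019. The potential U(x) = h (x^2 - 1)^2, the parameter values, and the function name are hypothetical. Following the mean field argument above, the barrier height h stands in for neuronal gain and the noise intensity stands in for the amplitude of input fluctuations.

```python
import numpy as np

def mean_escape_time(barrier, noise=0.25, n_trials=200, dt=0.02, t_max=500.0, seed=0):
    """Mean first-passage time from the left well (x = -1) to the right well (x = +1).

    Overdamped Langevin dynamics dx = -U'(x) dt + sqrt(2 * noise) dW in the
    hypothetical double-well potential U(x) = barrier * (x**2 - 1)**2, whose
    barrier at x = 0 has height `barrier`. Trials that never reach the right
    well are counted as t_max (censored), which slightly underestimates the mean.
    """
    rng = np.random.default_rng(seed)
    x = -np.ones(n_trials)                   # every trial starts in the left attractor
    t_escape = np.full(n_trials, t_max)
    active = np.ones(n_trials, dtype=bool)  # trials that have not escaped yet
    for step in range(1, int(t_max / dt) + 1):
        idx = np.flatnonzero(active)
        if idx.size == 0:
            break
        xa = x[idx]
        drift = -4.0 * barrier * xa * (xa**2 - 1.0)  # -dU/dx
        xa = xa + drift * dt + np.sqrt(2.0 * noise * dt) * rng.standard_normal(idx.size)
        x[idx] = xa
        escaped = idx[xa >= 1.0]                     # reached the right attractor
        t_escape[escaped] = step * dt
        active[escaped] = False
    return t_escape.mean()

fast = mean_escape_time(barrier=0.5)               # lower barrier (lower gain)
slow = mean_escape_time(barrier=1.0)               # higher barrier (higher gain)
noisy = mean_escape_time(barrier=1.0, noise=0.5)   # same barrier, larger fluctuations
```

Lowering the barrier and raising the noise both shorten the mean transition time. This matches the two routes to faster action timing described above. For weak noise, Kramers' theory predicts that the mean escape time grows roughly exponentially with the ratio of barrier height to noise intensity.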

How can a neural circuit learn to flexibly adjust its responses to stimuli or the timing of self-initiated actions? Theoretical work has established gain modulation as a general mechanism to flexibly control network activity in recurrent network models of motor cortex (Stroud et al., 2018). Modulating each neuron’s gain individually can allow a recurrent network to learn a variety of target outputs through reward-based training, and to combine learned modulatory gain patterns to generate new movements. After learning, cortical circuits can use gain modulation to change either the shape or the speed of an intended movement. Although the model in Stroud et al., 2018 could not account for the across-repetition temporal variability in action timing, it is tempting to speculate that generalizing this learning framework to incorporate the metastable attractor models of Mazzucato et al., 2019 could allow a recurrent circuit to learn flexible gain modulation via biologically plausible synaptic plasticity mechanisms (Litwin-Kumar and Doiron, 2014). This hypothetical model could potentially explain the contextual effects of learning on action timing observed in Fan et al., 2012; Schreiner et al., 2021, and the acceleration of reaction times in the presence of auditory expectations (Figure 4A; Jaramillo and Zador, 2011). Although this class of models has not been directly tested in freely moving assays, we believe that pursuing this promising direction could lead to important insights.

Behavioral sequences

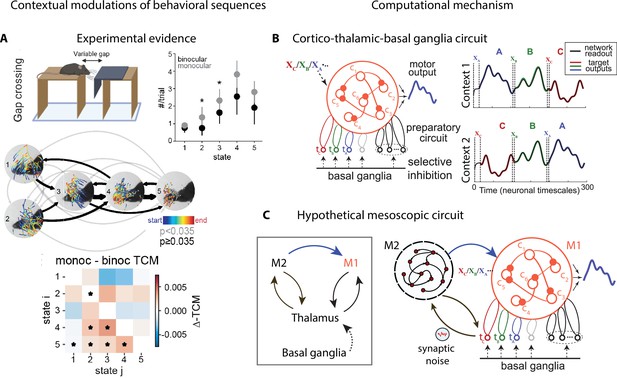

Contextual modulations of temporal variability may affect other aspects of natural behavior beyond average action timing, such as the frequency of occurrence of an action (‘state usage’) or the transition probabilities between actions within a behavioral sequence (Figure 5A). These effects can be uncovered by analyzing videos of freely moving behavior with state–space models, such as the autoregressive hidden Markov model (AR-HMM) (Wiltschko et al., 2015; Figures 2B and 5A and Appendix 1). Differences in state usage and transition probabilities between conditions may shed light on the computational strategies animals deploy to solve complex ethological tasks, such as those involving sensorimotor integration. For example, in a distance estimation task where mice were trained to jump across a variable gap, a comparison of monocular and binocular mice revealed the different visually guided strategies mice may use to perform a successful jump. Mice performed more vertical head movements under monocular conditions than under binocular control conditions (Figure 5A: states 2 and 3 occur more frequently in the monocular condition), revealing a reliance on motion parallax cues (Parker et al., 2021).
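Once each video frame has been assigned a discrete state label (for example, by a fitted AR-HMM), the comparison in Figure 5A reduces to simple bookkeeping. The sketch below computes state usage and transition counts from such a label sequence and takes their difference between two conditions. Several choices here are our own assumptions: consecutive repeated labels are collapsed into single syllable instances, count matrices are normalized by their totals before subtracting, and the function name is hypothetical. The short label sequences are made-up toy inputs, not data from Parker et al., 2021.

```python
import numpy as np

def usage_and_transitions(states, n_states):
    """State usage and transition counts from a sequence of integer state labels.

    Consecutive repeats are collapsed first (an assumption), so usage counts
    syllable instances and transitions count switches between different states.
    """
    states = np.asarray(states, dtype=int)
    keep = np.concatenate(([True], states[1:] != states[:-1]))
    seq = states[keep]
    usage = np.bincount(seq, minlength=n_states) / seq.size
    counts = np.zeros((n_states, n_states), dtype=int)
    np.add.at(counts, (seq[:-1], seq[1:]), 1)  # counts[i, j] += 1 for each i -> j
    return usage, counts

# Toy label sequences for two hypothetical conditions (states 0..4).
binocular = [0, 0, 1, 1, 4, 0, 1, 4, 4, 0]
monocular = [0, 2, 2, 3, 0, 2, 3, 3, 1, 4]
u_bi, c_bi = usage_and_transitions(binocular, 5)
u_mono, c_mono = usage_and_transitions(monocular, 5)
# Positive entries: transitions relatively more frequent in the monocular condition.
diff = c_mono / c_mono.sum() - c_bi / c_bi.sum()
```

With real data, any significance statement, such as the p<0.01 markers in Figure 5A, would additionally require a statistical test across animals or sessions. That step is not shown here.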

Contextual modulations of behavioral sequences.

(A) Freely moving mice were rewarded for successfully jumping across a variable gap. Center: example traces of eye position from five movement states labeled with autoregressive hidden Markov model (AR-HMM) of DeepLabCut-tracked points during the decision period (progressing blue to red in time) in average temporal order (arrow line widths are proportional to transition probabilities between states; gray black; states 2–3 represent vertical head movements). Top right: frequency of occurrence of each state for binocular (black) and monocular (gray) conditions. Bottom: difference between monocular and binocular transition count matrices; red transitions are more frequent in monocular, blue in binocular (*p<0.01). (B) Left: cortex–thalamus–basal ganglia circuit for behavioral sequence generation. The basal ganglia projections select the thalamic units needed for either motif execution or preparation. During preparation, the cortical population c_i also receives an input x_m specific to the upcoming motif m. Right: generation of sequences of arbitrary orders, using preparatory periods (between vertical dashed lines) before executing each motif. (C) Hypothetical cortex–thalamus–basal ganglia circuit for behavioral sequence generation combining the metastable attractor model (Figure 2C and D) with the model of panel (B). Secondary motor cortex (M2) provides the input to primary motor cortex (M1), setting the initial conditions for each motif. A thalamus–M2 feedback loop sustains the metastable attractors in M2, and synaptic noise in these thalamus-to-M2 projections generates temporal variability in action timing.

© 2021, Parker et al. Panel A reproduced from Figure 3 of Parker et al., 2021 published under the CC BY-NC-ND 4.0 license. Further reproduction of the panel must follow the terms of this license.

© 2021, Logiaco et al. Panels B and C are adapted from Figure 4 of Logiaco et al., 2021. They are not covered by the CC-BY 4.0 license and further reproduction of these panels would need permission from the copyright holder.

During ongoing periods, in the absence of a task, animal behavior features a large variety of actions and behavioral sequences (Figure 2). Experimentally controlled manipulations can lead to strong changes in ongoing behavior, reflected in changes of both state usage and transition probabilities between actions, resulting in different repertoires of behavioral sequences. Examples of manipulations include exposing mice to innately aversive odors and other changes in their surrounding environment; optogenetic activation of corticostriatal pathways (Wiltschko et al., 2015); and pharmacological treatment (Wiltschko et al., 2020). In the latter study, a classification analysis predicted with high accuracy which drug, and at which dose, had been administered to the mice, out of a large panel of compounds tested at multiple doses. Comparing state usage and transition rates can also reveal subtle phenotypic changes in the structure of ongoing behavior in genetically modified mice compared to wildtype ones. This phenotypic fingerprinting has led to insights into the behavior of mouse models of autism spectrum disorder (Wiltschko et al., 2015; Wiltschko et al., 2020; Klibaite et al., 2021).

Computational mechanisms underlying flexible behavioral sequences

Recent studies have begun to shed light on the rules that may control how animals learn and execute behavioral sequences. These studies revealed various types of contextual modulations, such as changes in the occurrence of single actions or in the transition probabilities between pairs of actions, and proposed potential mechanisms underlying these effects. Biologically plausible models of mesoscopic neural circuits can generate complex sequential activity (Logiaco et al., 2021; Murray and Escola, 2017). In a recent model of sequence generation (Logiaco and Escola, 2020; Logiaco et al., 2021), an extensive library of behavioral motifs, and their flexible rearrangement into arbitrary sequences, relied on the interaction between motor cortex, basal ganglia, and thalamus. In this model (Figure 5B), the basal ganglia sequentially disinhibit motif-specific thalamic units, which in turn trigger motif preparation and execution via a thalamocortical loop with the primary motor cortex (M1). Afferent inputs to M1 set the initial conditions for motif execution. This model represents a biologically plausible neural implementation of a switching linear dynamical system (SLDS) (Linderman et al., 2017; Nassar et al., 2018), a class of generative models whose statistical structure can capture the spatiotemporal variability in naturalistic behavior (see Appendix 1).
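A short sampler shows the generative structure of a switching linear dynamical system in its generic textbook form. A discrete state follows a Markov chain, and each discrete state selects its own linear dynamics for a continuous latent variable. In the circuit analogy above, the discrete state loosely plays the role of the selected motif and the continuous latent plays the role of the M1 population trajectory. This is a sketch, not the model of Logiaco et al., 2021. The matrices, probabilities, and function name are arbitrary illustrative assumptions.

```python
import numpy as np

def sample_slds(P, A, b, T, x0, noise_std=0.01, seed=0):
    """Sample a switching linear dynamical system.

    z_t follows a Markov chain with transition matrix P (z_0 = 0), and the
    continuous latent evolves as x_t = A[z_t] @ x_{t-1} + b[z_t] + Gaussian noise.
    """
    rng = np.random.default_rng(seed)
    K, D = len(A), len(x0)
    z = np.zeros(T, dtype=int)
    x = np.zeros((T, D))
    x[0] = x0
    for t in range(1, T):
        z[t] = rng.choice(K, p=P[z[t - 1]])  # discrete switch (motif selection)
        x[t] = A[z[t]] @ x[t - 1] + b[z[t]] + noise_std * rng.standard_normal(D)
    return z, x

# Two hypothetical discrete states: a slowly decaying rotation and relaxation to (1, 0).
theta = 0.2
rotation = 0.99 * np.array([[np.cos(theta), -np.sin(theta)],
                            [np.sin(theta), np.cos(theta)]])
A = np.stack([rotation, 0.9 * np.eye(2)])
b = np.array([[0.0, 0.0], [0.1, 0.0]])  # fixed point of state 1: x = b / (1 - 0.9)
P = np.array([[0.98, 0.02], [0.05, 0.95]])
z, x = sample_slds(P, A, b, T=500, x0=np.array([1.0, 0.0]))
```

Fitting such a model to behavioral data, as in the cited statistical work, means inferring `z`, `x`, `A`, `b`, and `P` from observations. That inference step is beyond this sketch.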

How do animals learn context-dependent behavioral sequences? Within the framework of corticostriatal circuits, sequential activity patterns can be learned in an all-inhibitory circuit representing the striatum (Murray and Escola, 2017). Learning in this model is based on biologically plausible synaptic plasticity rules, consistent with the decoupling of learning and execution suggested by lesion studies showing that cortical circuits are necessary for learning, but that subcortical circuits are sufficient for expression of learned behaviors (Kawai et al., 2015). This model can achieve contextual control over temporal rescaling of the sequence speed and facilitate flexible expression of distinct sequences via selective activation and concatenation of specific subsequences. Subsequent work uncovered a new computational mechanism underlying how motor cortex, thalamus, and the striatum coordinate their activity and plasticity to learn complex behavior on multiple timescales (Murray and Escola, 2020). The combination of fast cortical learning and slow subcortical learning may give rise to a covert learning process through which control of behavior is gradually transferred from cortical to subcortical circuits, while protecting learned behaviors that are practiced repeatedly against overwriting by future learning.

Open issues

The exact extent to which stochastic and contextual variability are independent sources remains to be clarified. In principle, one could hypothesize that detailed knowledge and control of all experimental variables, behavioral states, and their history might explain part of the trial-to-trial variability as contextual variability conditioned on these variables. For example, in the Marshmallow task about 10% of the temporal variability in waiting times could be attributed to a trial history effect, which relied on an intact medial prefrontal cortex and was abolished by its inactivation (Murakami et al., 2017). Moreover, in a subset of sessions this fraction of variability could be predicted by the activity of transient neurons before trial onset (Murakami et al., 2014; Murakami et al., 2017). The remaining 90% of the across-repetition variability was unexplained and was attributed to a stochastic mechanism, likely originating from metastable dynamics (Recanatesi et al., 2022). More generally, it remains an open question whether what we think of as noise driving trial-to-trial variability could simply be another name for a contextual variable that we have not yet quantified. Alternatively, stochastic variability could be genuinely different from contextual variability and originate from noise inherent in neural spiking or other activity-dependent mechanisms.

A mesoscopic circuit for flexible action sequences

The computational mechanisms discussed so far can separately account for some specific features of contextual modulations, but none of the existing models accounts for all of them. Metastable attractor models, where a dictionary mapping attractors to actions can be established, explain how the large variability in action timing may arise from correlated neural noise driving transitions between attractors (Figure 3C). These models can explain how contextual modulations of action timing may arise from neuronal gain modulation (Figure 4B). However, it is not known whether such models can explain the flexible rearrangement of actions within a behavioral sequence (Figure 5A). Conversely, models of flexible sequence execution and learning (Logiaco et al., 2021; Murray and Escola, 2017; Stroud et al., 2018) can explain the latter effect, but do not incorporate temporal variability in action timing across repetitions. We propose a hypothetical circuit model that combines the cortex–basal ganglia–thalamus model of Figure 5B with the metastable attractor model of preparatory activity of Figure 3C and D, as a tentative unified model of temporally variable yet flexible behavioral sequences. In the original model of Figure 5B, movement motif A in M1 is executed following a specific initial condition, representing an external input to M1. In the larger mesoscopic circuit of Figure 5C, we can interpret this initial condition as the preparatory activity for motif A, dynamically set by an M2 attractor that encodes the upcoming motif and conveyed by an M2-to-M1 projection. In the model of Figure 5B, the motif in M1 is selected by the activation of a thalamic nucleus, which is gated by basal ganglia-to-thalamus projections. In the larger mesoscopic circuit of Figure 5C, the thalamic nuclei are also part of a feedback loop with M2 (already present in the model of Figure 3D) that sustains the metastable attractors encoding the preparatory activity for each motif.
Presynaptic noise in the thalamus-to-M2 projections implements the variability in action timing via noise-driven transitions between M2 attractors. Another prominent source of behavioral variability, namely variability in movement execution, can potentially be incorporated into this model. Specifically, fluctuations in neural preparatory activity (which account for up to half of the total variability in movement execution; Churchland et al., 2006) can be naturally incorporated as trial-to-trial variability in the initial conditions for motifs in M1, originating from firing rate variability in upstream M2 attractors and in the M2-to-M1 projections. This model represents a direct circuit implementation of the state–space models of behavior based on Markovian dynamics: discrete states representing actions/motifs correspond to discrete M2 attractors, whereas the continuous latent trajectories underlying movement execution correspond to low-dimensional trajectories of M1 populations driving muscle movements. Moreover, this model can provide a natural and parsimonious neural implementation for the contextual modulations of behavioral sequences (Figure 5A). Two different neural mechanisms can drive this behavioral flexibility: either a variation in the depth of the M2 attractor potential wells mediated by gain modulation (Figure 4B), or a change in the noise amplitude originating at thalamus-to-M2 projections. These contextual modulations induce changes in transition times between consecutive actions/attractors. As a consequence, the temporal dynamics are Markovian only when conditioned on each context, and the dynamics pooled across contexts exhibit non-Markovian features arising from context-specific transition rates. This class of models can serve as a useful testing ground for generating mechanistic hypotheses and guiding future experimental design.
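A short calculation makes concrete why context-specific transition rates yield non-Markovian pooled dynamics. For illustration, we assume that each context c sets a different self-transition probability p_c for a given attractor, for example via gain or noise modulation. The values below are arbitrary. Within one context, a discrete-time Markov chain has a constant hazard of leaving the state. Pooling contexts produces a hazard that decreases with the time already spent in the state, which no single first-order Markov chain over the same states can reproduce.

```python
import numpy as np

def dwell_hazard(stay_probs, weights, max_dwell=10):
    """Hazard of leaving a state after k time bins, pooled across contexts.

    Within context c the dwell time is geometric, P(k) = (1 - p_c) * p_c**(k - 1),
    with constant hazard 1 - p_c. Pooling contexts with weights w_c gives a
    mixture of geometric distributions.
    """
    p = np.asarray(stay_probs, dtype=float)[:, None]
    w = np.asarray(weights, dtype=float)[:, None]
    k = np.arange(1, max_dwell + 1)[None, :]
    pmf = np.sum(w * (1 - p) * p ** (k - 1), axis=0)  # P(dwell = k)
    survival = np.sum(w * p ** (k - 1), axis=0)       # P(dwell >= k)
    return pmf / survival

single = dwell_hazard([0.9], [1.0])             # one context: constant hazard 0.1
pooled = dwell_hazard([0.9, 0.5], [0.5, 0.5])  # two equally likely contexts
```

The pooled hazard starts at 0.3 and decays toward 0.1, the rate of the slower context. An observer who ignores context would therefore see dwell-time dependence, a signature of non-Markovian dynamics.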

Flexible sequences in birdsongs

Songbirds are a promising model system for investigating the neural mechanisms of flexible motor sequences. Canary songs consist of repeated syllables called phrases, and the ordering of these phrases follows long-range rules in which the choice of what to sing depends on the song structure many seconds earlier (Markowitz et al., 2013). These long-range contextual modulations are correlated with the activity of neurons in the high vocal center (HVC, a premotor area) (Cohen et al., 2020). Seminal work has established that motor variability can be actively generated and controlled by the brain for the purpose of learning (Olveczky et al., 2005; Kao et al., 2005). Variability in song production is not simply due to intrinsic noise in motor pathways, but is introduced into the robust nucleus of the arcopallium (RA, a motor cortex analog) by a dedicated upstream area, the lateral magnocellular nucleus of the anterior nidopallium (LMAN, analogous to a premotor area), which is required for song learning. Theoretical modeling further revealed that this motor variability could rely on topographically organized projections from LMAN to RA that amplify correlated neural fluctuations (Darshan et al., 2017). Strikingly, this mechanism provides universal predictions for the statistics of babbling shared by songbirds and human infants. Timing variability may allow animals to explore the temporal aspects of a given behavioral sequence independently of the choice of actions. Animals could learn the proper timing of an action sequence by searching in timing space independently of action selection, and vice versa. Future work should explore the advantages of temporal variability in driving the learning of precise timing.

Contextual modulations of behavior may occur on longer timescales as well, including those induced by circadian rhythms, hormones, and other changes in internal states; and they may span the whole lifetime of an individual, such as homeostasis and development. Social behavior provides another source of complex contextual modulations. We briefly address these different aspects of contextual modulations below.

Variability on longer timescales

Internal states, such as hunger (Corrales-Carvajal et al., 2016), and circadian rhythms (Patke et al., 2020) induce daily modulations of several aspects of behavior. In the fruit fly, different clusters of clock neurons are implicated in regulating rhythmic behaviors, including wake–sleep cycles, locomotion, feeding, mating, courtship, and metabolism. Activation of circadian clock neurons in different phases of the cycle drives expression of specific behavioral sequences, and targeted manipulations of particular clusters of clock neurons are sufficient to recapitulate those sequences artificially (Dissel et al., 2014; Yao and Shafer, 2014). In mammals, the circadian pacemaker is located in the hypothalamus and interacts with a complex downstream network of peripheral neuronal signals (Hastings et al., 2018; Schibler et al., 2015). Other sources of contextual variability include variations in the levels of hormones and neuromodulators, which modulate behavioral sequences on longer timescales (Nelson, 2005). Although hormones and neuromodulators may not directly drive expression of specific behaviors, they typically prime an animal to produce hormone-specific responses to particular stimuli in the appropriate behavioral context, as observed prominently during social behaviors such as aggression and mating (Schibler et al., 2015). Statistical models based on Markovian dynamics, such as state–space models whose building blocks are sub-second movement syllables, are challenged by contextual modulations occurring on very long timescales. Introducing a hierarchical structure into these models by hand might mitigate this issue (Tao et al., 2019). However, approaches based on discrete states, such as HMMs, are not well suited to capture long-term modulations of a continuous nature, as in the case of circadian rhythms.
Fitting a discrete model to slow continuous dynamics would lead to the proliferation of a large number of discrete states tiling the continuous trajectory (Alba et al., 2020; Recanatesi et al., 2022). Data-driven models may provide a more promising way to reveal slow behavioral modulations, although they have not yet been tested in this realm (Marshall et al., 2021). Methods based on continuous latent variables, such as Gaussian processes (Yu et al., 2008), might offer another alternative. In trial-based experiments, tensor component analysis was also shown to capture slow drifts in latent variables (Williams et al., 2018). Although the behavioral effects of these long-term sources of contextual modulation have not been included in current models of neural circuits, the theoretical framework based on attractor networks in Figure 5C could be augmented to account for them. An afferent higher-order cortical area, recurrently connected to M2, may toggle between different behavioral sequences and control long-term variations in their expression, for example, via gain modulation. A natural candidate for this controller area is the medial prefrontal cortex, which is necessary to express long-term biases in the waiting task (Figure 3; Murakami et al., 2017). In this augmented model, a top-down modulatory input to the higher-order controller, representing afferent inputs from circadian clock neurons or neuromodulatory systems, may modulate the expression of different behavioral sequences, implementing these long-term modulations.

Aging

Other sources of contextual modulation of behavior may act on even longer timescales spanning the whole lifetime of an individual (Churgin et al., 2017), such as homeostasis and development. The interplay between these two mechanisms may explain how the motor output of some neural circuits maintains remarkable stability in the face of the large variability in neural activity observed across a population or in the same individual during development (Prinz et al., 2004; Bucher et al., 2005). Across the entire life span, different brain areas develop, mature, and decline at different moments and to different degrees (Sowell et al., 2004; Yeatman et al., 2014). Connectivity between areas develops at variable rates as well (Yeatman et al., 2014). It would be interesting to investigate whether these different mechanisms, unfolding over the life span of an individual, can be accounted for within the framework of multiarea attractor networks presented above.

Social behavior and contextual modulations

Although most of the experiments discussed in this review involve the behavior of individual animals, contextual modulations of behavior are prominently observed in naturalistic assays involving interactions between pairs of animals, such as hunting and social behaviors. In the prey-capture paradigm, a mouse pursues, captures, and consumes live insect prey (Hoy et al., 2016; Michaiel et al., 2020). Prey-capture behavior was found to depend strongly on context. Experimental control of the surrounding environment revealed that mice rely on vision for efficient prey capture. In the dark, hunting behavior is severely impaired: only at close range to the insect is the mouse able to navigate via auditory cues. Another remarkable example of context-dependent social behavior was demonstrated during male–female fruit fly mating behavior (Calhoun et al., 2019). During courtship bouts, male flies modulate their songs using specific feedback cues from their female partner, such as their relative position and orientation. A simple way to model the relationship between sensory cues and the choice of a specific song is in terms of linear ‘filters’ (Coen et al., 2014), under the common assumption that the sensorimotor map is fixed. By relaxing this assumption and allowing moment-to-moment transitions between more than one sensorimotor map via a hidden Markov model with generalized linear model emissions (GLM-HMM), Calhoun et al., 2019 uncovered latent states underlying the mating behavior that correspond to different sensorimotor strategies, each strategy featuring a specific mapping from feedback cues to song modes. Combining this insight with targeted optogenetic manipulation revealed that neurons previously thought to be command neurons for song production are instead responsible for controlling the switch between different internal states, thus regulating the courtship strategies.
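A minimal generative sketch makes the GLM-HMM concrete. A hidden state, standing for a sensorimotor strategy, follows a Markov chain. Given the state, the song mode emitted at each time bin is drawn from a multinomial logistic (softmax) GLM of the current feedback cues, with weights specific to that state. We keep the transition matrix fixed for simplicity. This simplification, the cue features, the three output modes, the weight values, and the function names are all our assumptions, not the fitted model of Calhoun et al., 2019.

```python
import numpy as np

def softmax(v):
    e = np.exp(v - v.max())  # subtract the max for numerical stability
    return e / e.sum()

def sample_glm_hmm(cues, P, W, seed=0):
    """Sample latent states and outputs from a GLM-HMM with fixed transitions.

    cues: (T, n_features) array of sensory feedback cues
    P:    (K, K) transition matrix between latent states (strategies), z_0 = 0
    W:    (K, n_outputs, n_features) state-specific multinomial-logistic weights
    """
    rng = np.random.default_rng(seed)
    T = cues.shape[0]
    K, n_out, _ = W.shape
    z = np.zeros(T, dtype=int)
    y = np.zeros(T, dtype=int)
    for t in range(T):
        if t > 0:
            z[t] = rng.choice(K, p=P[z[t - 1]])
        probs = softmax(W[z[t]] @ cues[t])  # state-specific map from cues to song modes
        y[t] = rng.choice(n_out, p=probs)
    return z, y

# Hypothetical inputs: one feedback cue (e.g., standardized distance) plus a bias term.
rng = np.random.default_rng(1)
cues = np.column_stack([rng.normal(size=300), np.ones(300)])
W = np.array([
    [[0.0, 0.0], [2.0, 0.0], [-2.0, 0.0]],  # state 0: the cue strongly drives song choice
    [[0.0, 1.0], [0.0, 0.0], [0.0, 0.0]],   # state 1: cue ignored, mode 0 favored
])
P = np.array([[0.95, 0.05], [0.05, 0.95]])
z, y = sample_glm_hmm(cues, P, W)
```

Fitting the model reverses this process: weights and transitions are estimated from observed cues and song modes, typically with expectation-maximization.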
Finally, a hallmark of naturalistic behavior is vocal communication, which combines aspects of sensory processing and motor generation in the realm of complex social interactions. A particularly exciting model system is the marmoset, where new techniques to record neural activity in freely moving animals during social behavior and vocalization (Nummela et al., 2017), together with newly developed optogenetic tools (MacDougall et al., 2016) and multi-animal pose tracking algorithms (Pereira et al., 2020b; Lauer et al., 2021), hold the promise of pushing the field into entirely new domains (Eliades and Miller, 2017).

Hierarchical structure

The temporal organization of naturalistic behavior exhibits a clear hierarchical structure (Tinbergen, 1951; Dawkins, 1976; Simon, 1962), where actions are nested into behavioral sequences that are then grouped into activities. Higher levels in the hierarchy emerge at longer timescales: actions/movements occur on a sub-second scale, behavioral sequences span at most a few seconds and activities last for longer periods of several seconds to minutes. The crucial aspect of this behavioral hierarchy is its complexity: an animal’s behavior unfolds along all timescales simultaneously. What is the organization of this vast spatiotemporal hierarchy? What are the neural mechanisms supporting and generating this nested temporal structure?

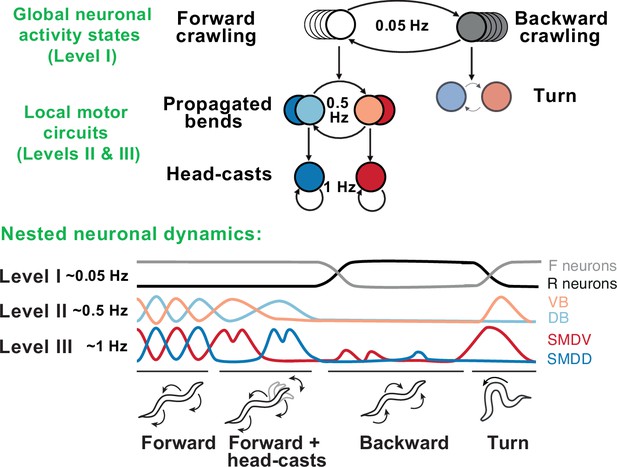

A case study: C. elegans locomotor behavior

Remarkable progress in both behavioral and neurophysiological aspects of the hierarchy has been made in the worm C. elegans (Figure 6). The worm locomotor behavior exhibits a clear hierarchical organization with three distinct timescales: sub-second body-bends and head-casts, second-long crawling undulations, and slow reverse-forward cycles (Gomez-Marin et al., 2016). At the neuronal level, this hierarchy is generated by nested neuronal dynamics where upper-level motor programs are supported by slow activity spread across many neurons, while lower-level behaviors are represented by fast local dynamics in small multifunctional populations (Kaplan et al., 2020). Persistent activity driving higher-level behaviors gates faster activity driving lower-level behaviors, such that, at lower levels, neurons show dynamics spanning multiple timescales simultaneously (Hallinen et al., 2021). Specific lower-level behaviors may only be accessed via switches at upper levels, generating a nonoverlapping, tree-like hierarchy, in which no lower-level state is connected to multiple upper-level states.

Behavioral and neural hierarchies in C. elegans.

Top: behavioral hierarchy in C. elegans. A 0.05 Hz cycle drives switches between forward and reverse crawling states, with intermediate-level 0.5 Hz crawling undulations and lower-level 1 Hz head-casts. Bottom: slow dynamics across whole-brain circuits reflect upper-hierarchy motor activity; fast dynamics in motor circuits drive lower-hierarchy movements. Slower dynamics tightly constrain the state and function of faster ones. Adapted from Figure 8 of Kaplan et al., 2020.
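The nesting of timescales in this figure can be illustrated with a toy signal model in Python/NumPy, using the frequencies quoted in the caption (0.05, 0.5, and 1 Hz). It is a caricature rather than a model of C. elegans neurons: the slow cycle sets a binary forward/reverse state, which flips the sign of the 0.5 Hz undulation and, as a purely illustrative assumption, gates the 1 Hz head-casts on only during forward crawling. The function name, duration, and sampling rate are hypothetical.

```python
import numpy as np


def nested_hierarchy(duration_s=60.0, fs=50.0):
    """Toy three-level hierarchy in which slower levels constrain faster ones."""
    t = np.arange(0.0, duration_s, 1.0 / fs)
    # Upper level: 0.05 Hz cycle mapped to a binary forward (+1) / reverse (-1) state
    direction = np.sign(np.sin(2 * np.pi * 0.05 * t))
    direction[direction == 0] = 1.0  # resolve the exact zero crossing at t = 0
    # Intermediate level: 0.5 Hz undulation whose sign is set by the upper level
    undulation = direction * np.sin(2 * np.pi * 0.5 * t)
    # Lower level: 1 Hz head-casts, gated on only in the forward state (assumption)
    head_cast = (direction > 0) * np.sin(2 * np.pi * 1.0 * t)
    return t, direction, undulation, head_cast


t, direction, undulation, head_cast = nested_hierarchy()
```

In this sketch, the fast head-cast signal cannot be expressed unless the slow variable is in the appropriate state, which is the sense in which slower dynamics constrain faster ones.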

At the top of this motor hierarchy, we find a much longer-lasting organization of states in terms of exploration, exploitation, and quiescence. In contrast to the strict, tree-like structure observed in the motor hierarchy, lower-level motor features are shared across these states, albeit with different frequencies of occurrence. Whereas the motor hierarchy is directly generated by neuronal activity, this state-level hierarchy may rely on neuromodulation (Ben Arous et al., 2009; Flavell et al., 2013).
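The distinction between a tree-like hierarchy (each lower-level state occurs under only one upper-level state) and an overlapping one (lower-level features shared across upper-level states) can be phrased as a simple check on observed co-occurrences. The Python sketch below is illustrative only; the function names and state labels are hypothetical examples, not annotations from the cited datasets.

```python
from collections import defaultdict


def shared_lower_states(cooccurrences):
    """Return lower-level states observed under more than one upper-level state.

    cooccurrences: iterable of (upper_state, lower_state) pairs.
    An empty result means the hierarchy is tree-like (nonoverlapping).
    """
    parents = defaultdict(set)
    for upper, lower in cooccurrences:
        parents[lower].add(upper)
    return {lower: ups for lower, ups in parents.items() if len(ups) > 1}


def is_tree_like(cooccurrences):
    return not shared_lower_states(cooccurrences)


# Hypothetical motor-level hierarchy: each movement belongs to one crawling state.
motor = [("forward", "head_cast"), ("forward", "forward_wave"),
         ("reverse", "reverse_wave")]
# Hypothetical state-level hierarchy: crawling states shared across behavioral states.
states = [("exploration", "forward"), ("exploration", "reverse"),
          ("exploitation", "forward"), ("exploitation", "reverse")]
```

With real data, ‘occurs under’ would need a frequency threshold, since shared lower-level features can differ in how often they appear in each upper-level state.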

Behavioral hierarchies in flies and rodents

How much of this tight correspondence between behavioral and neural hierarchies generalizes from worms to insects and mammals? Are action sequences organized as a chain? Alternatively, is there a hierarchical structure where individual actions, intermediate subsequences, and overall sequences can be flexibly combined? A chain-like organization would require a single controller concatenating actions, but could be prone to error or disruption. A hierarchical structure could be error-tolerant and flexible at the cost of requiring controllers at different levels of the hierarchy (Geddes et al., 2018). We will first examine compelling evidence from the fruit fly and then discuss emerging results in rodent experiments.

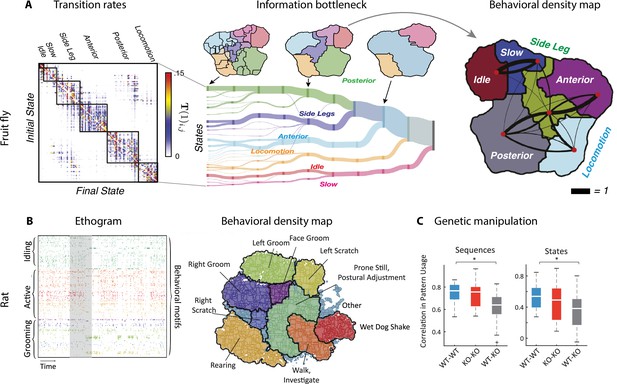

By analyzing videos of ground-based fruit flies during long sessions of spontaneous behavior (Figure 2B), Berman et al., 2014 and Berman et al., 2016 mapped the entire behavioral space of freely moving flies, identifying about a hundred stereotyped, frequently recurring actions interspersed with bouts of nonstereotyped behaviors. Recurring behavioral categories emerged as peaks in the probability landscape of the behavioral space and were labeled as walking, running, head grooming, and wing grooming (Figure 7A). To uncover the organization of behavior at different timescales, the authors applied the information bottleneck method (Tishby et al., 2000) to estimate which behavioral representations (movements, sequences, or activities) optimally predict the fly’s future behavior over different temporal horizons (from 50 ms to minutes). This predictive approach revealed multiple timescales in fly behavior, organized into a hierarchical structure reflecting the underlying behavioral programs and internal states. The near future was best predicted by segmenting behavior into actions, the fastest level of the hierarchy, whereas the representation that best predicted the distant future, up to minutes away, was based on slower, coarser groupings of actions into activities. These longer timescales manifest as nested blocks in the transition probability matrix, implying that a strongly non-Markovian structure emerges on longer timescales (Alba et al., 2020; Figure 7A).
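The predictive information bottleneck can be sketched as follows: compress the current state x into a cluster z while keeping as much information as possible about the state y observed τ steps later. The Python/NumPy code below implements the generic self-consistent iterative updates of Tishby et al., 2000, with p(y | x) taken from the τ-th power of a one-step transition matrix. It is a minimal sketch under those assumptions (ergodic chain, fixed β, no annealing schedule), not the pipeline of Berman et al., 2016; the function name and example matrix are hypothetical.

```python
import numpy as np


def predictive_ib(T, tau, n_clusters, beta, n_iter=200, seed=0):
    """Iterative information bottleneck compressing the current state x into z
    while preserving information about y = state tau steps ahead.
    T is a row-stochastic (n, n) one-step transition matrix."""
    rng = np.random.default_rng(seed)
    n = T.shape[0]
    eps = 1e-12
    # p(x): stationary distribution = leading left eigenvector of T (ergodicity assumed)
    evals, evecs = np.linalg.eig(T.T)
    px = np.real(evecs[:, np.argmax(np.real(evals))])
    px = px / px.sum()
    # p(y | x): tau-step transition probabilities of the Markov chain
    py_x = np.linalg.matrix_power(T, tau)
    # random soft initial assignment q(z | x)
    qz_x = rng.random((n, n_clusters))
    qz_x /= qz_x.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        qz = px @ qz_x  # p(z)
        # p(y | z) = sum_x p(x) q(z | x) p(y | x) / p(z)
        py_z = (qz_x * px[:, None]).T @ py_x / (qz[:, None] + eps)
        # KL[p(y | x) || p(y | z)] for every pair (x, z)
        log_ratio = np.log(py_x[:, None, :] + eps) - np.log(py_z[None, :, :] + eps)
        kl = np.sum(py_x[:, None, :] * log_ratio, axis=2)
        # self-consistent update: q(z | x) proportional to p(z) exp(-beta * KL)
        logits = np.log(qz + eps)[None, :] - beta * kl
        logits -= logits.max(axis=1, keepdims=True)
        qz_x = np.exp(logits)
        qz_x /= qz_x.sum(axis=1, keepdims=True)
    return qz_x


# Example: six states forming two blocks with rare transitions between blocks.
blocks = np.kron(np.eye(2), np.full((3, 3), 1 / 3))
T = 0.95 * blocks + 0.05 / 6
q = predictive_ib(T, tau=5, n_clusters=2, beta=20.0)
labels = q.argmax(axis=1)
```

In this toy example the two blocks of the transition matrix are recovered as two clusters, mirroring how block structure in the transition matrix becomes visible when predicting further into the future. Sweeping τ and the number of clusters would give the multiscale picture described in the text.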

Hierarchical structure in freely moving fly and mouse behavior.

(A) Hierarchical variability in fruit fly behavior. The Markov transition probability matrix (left) between postures reveals a clustered structure upon applying the predictive information bottleneck with six clusters (black outline on the left plot). Labels (e.g., ‘anterior,’ ‘side leg’) refer to movements involving specific body parts. Center: hierarchical organization of the optimal information bottleneck solutions for predicting behavior on increasingly slower timescales (number of clusters varying from 25 to 1, left to right; colored vertical bars are proportional to the percentage of time a fly spends in each cluster). Right: behavioral clusters are contiguous in behavioral space (same clusters as in the transition matrix in the left panel; black lines represent transition probabilities between states, with thicker lines indicating more likely transitions). (B) Left: a temporal pattern matching algorithm detected repeated behavioral patterns (rows, sorted into grooming, active, and idling classes; color-coded by the clusters shown on the right of panel B) in behavioral recordings of freely moving rats. Right: data from 16 rats co-embedded in a two-dimensional t-distributed stochastic neighbor embedding (t-SNE) behavioral map were clustered with a watershed transform, revealing behavioral clusters segregated to different regions of the map. The color-coded ethogram on the left is annotated from these behavioral clusters. (C) Sequence and state usage probabilities for wild-type and Fmr1-KO rats show a significantly decreased correlation between genotypes. Panel (A) adapted from Figures 1 and 5 of Berman et al., 2016. Panels (B) and (C) adapted from Figures 3, 4, and 6 of Marshall et al., 2021.

A hallmark of fly behavior emerging from this analysis is that the different branches of this hierarchy are segregated into a tree-like, nonoverlapping structure: for example, actions occurring during grooming do not occur during locomotion. This multiscale representation was leveraged to dissect the descending motor pathways in the fly. Optogenetic activation of single neurons during spontaneous behavior revealed that most descending neurons drove stereotyped behaviors that were shared by multiple neurons and often depended on the behavioral state prior to activation (Cande et al., 2018). An alternative statistical approach based on a hierarchical HMM revealed that, although all flies use the same set of low-level locomotor features, individual flies vary considerably in the high-level temporal structure of locomotion and in how this usage is modulated by different odors (Tao et al., 2019). This behavioral idiosyncrasy, that is, individual-to-individual phenotypic variability, has been traced back to specific genes (Ayroles et al., 2014) regulating neural activity (Buchanan et al., 2015) in the central complex of the fly brain. This series of studies suggests that fly behavior is organized hierarchically in a tree-like structure from actions to sequences to activities. This hierarchy seems to lack flexibility, such that behavioral units at lower levels are segregated into different hierarchical branches and cannot be rearranged at higher levels, although a more flexible structure seems to emerge during social interactions (see above and Calhoun et al., 2019).

What is the structure of the behavioral hierarchy in mammals? We will start by considering the first two levels of the hierarchy, namely, how actions concatenate into sequences. A recent experimental tour de force demonstrated the existence of a hierarchical structure in the learning and execution of heterogeneous sequences in a freely moving operant task (Geddes et al., 2018). Mice learned to perform a ‘penguin dance’ consisting of two or three consecutive left lever presses (LL or LLL) followed by two or three right lever presses (RR or RRR) to obtain a reward. Mice acquired the sequence hierarchically rather than sequentially, a learning scheme that is inconsistent with the classic reinforcement learning paradigm, which predicts that sequence learning proceeds in the reverse order of execution (Sutton and Barto, 2018). Using closed-loop optogenetic stimulation, the authors revealed the differential roles played by the striatal direct and indirect pathways in controlling, respectively, the expression of a single action (either L or R) or a fast switch from one subsequence to the next (from the LL block to RR, and from RR to the reward approach).
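The levels described above (single actions, same-lever subsequences, and the whole rewarded sequence) can be made explicit with a small parser. This Python sketch is only a data-structure illustration: the function name and the rule `L{2,3}R{2,3}` are assumptions based on the LL/LLL and RR/RRR examples in the text, and the switch indices simply mark the boundaries between subsequences, the kind of transition the text attributes to indirect-pathway control. It does not model striatal circuits or learning.

```python
import re
from itertools import groupby

# Hypothetical encoding of the rewarded pattern: LL or LLL followed by RR or RRR.
REWARDED = re.compile(r"L{2,3}R{2,3}")


def parse_hierarchy(presses):
    """Decompose a string of lever presses ('L'/'R') into three levels:
    single actions, same-lever subsequences, and the whole sequence."""
    actions = list(presses)
    # runs of consecutive presses on the same lever form subsequences (e.g. 'LL', 'RR')
    subsequences = ["".join(run) for _, run in groupby(presses)]
    # indices where one subsequence ends and the next begins
    switches = [i for i in range(1, len(presses)) if presses[i] != presses[i - 1]]
    return {
        "actions": actions,
        "subsequences": subsequences,
        "switch_indices": switches,
        "rewarded": REWARDED.fullmatch(presses) is not None,
    }


result = parse_hierarchy("LLLRR")
```

A chain-like representation would store only the flat list of actions; the hierarchical one additionally exposes the subsequence boundaries, which is where a separate switching controller would act.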

What is the structure of the rodent behavioral hierarchy at slower timescales? A multiscale analysis of ongoing behavior was performed in rats using motion-capture markers attached via body piercings, which allowed the three-dimensional pose to be tracked with high accuracy (Figure 7B; Marshall et al., 2021). A watershed algorithm applied to behavioral probability density maps revealed the emergence of hierarchical behavioral categories at different temporal scales. When the behavioral transition matrix was examined at different timescales, signatures of non-Markovian dynamics peaked at 10–100 s timescales. On 15 s timescales, pattern sequences emerged featuring sequentially ordered actions, such as grooming of the face followed by grooming of the body. On minute-long timescales, states of varying arousal or task engagement emerged, lacking stereotyped sequential ordering. Although the results of Marshall et al., 2021 seem to suggest the presence of a flexible, overlapping structure in the rat hierarchy, consistent with Geddes et al., 2018 and different from the tree-like hierarchy in flies (Berman et al., 2016), the analysis of Marshall et al., 2021 lacked the information bottleneck step, which was crucial to capture fly behavior on long timescales. Future work applying the information bottleneck may shed light on the nature of behavioral hierarchies in mammals.
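One simple way to quantify a signature of non-Markovian dynamics at a given timescale is to compare the empirical transition matrix at lag τ with the τ-th power of the one-step transition matrix, which is what a first-order Markov chain would predict. The Python/NumPy sketch below implements this comparison; it is a generic diagnostic under that assumption, not the specific measure used by Marshall et al., 2021, and the function names are hypothetical.

```python
import numpy as np


def lagged_transition_matrix(labels, n_states, lag):
    """Empirical P(s_{t+lag} = j | s_t = i) from a sequence of integer state labels."""
    labels = np.asarray(labels)
    counts = np.zeros((n_states, n_states))
    np.add.at(counts, (labels[:-lag], labels[lag:]), 1)
    totals = counts.sum(axis=1, keepdims=True)
    return counts / np.where(totals == 0, 1, totals)


def markov_deviation(labels, n_states, lags):
    """For each lag, compare the empirical lag-step transition matrix with the
    first-order Markov prediction T1 ** lag (matrix power). Returns the mean over
    rows of the L1 distance; values well above sampling noise indicate memory
    beyond one step, i.e. non-Markovian structure."""
    T1 = lagged_transition_matrix(labels, n_states, lag=1)
    deviations = []
    for lag in lags:
        empirical = lagged_transition_matrix(labels, n_states, lag)
        predicted = np.linalg.matrix_power(T1, lag)
        deviations.append(np.abs(empirical - predicted).sum(axis=1).mean())
    return np.array(deviations)
```

A slowly switching hidden state (for example, an arousal level that changes which transitions are likely) makes the observed sequence non-Markovian, so the deviation grows at lags comparable to the hidden state's dwell time. In practice the deviation should be compared against sequences simulated from the fitted one-step matrix, to separate genuine memory from sampling noise.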

Manipulation experiments could help clarify the structure of the rodent behavioral hierarchy and whether it allows for flexible behavioral sequences. Earlier studies of contextual modulations of rodent freely moving behavior by pharmacological or genetic manipulations were mostly confined to comparisons of usage statistics of single actions (Wiltschko et al., 2015). However, more recent studies (Marshall et al., 2021; Klibaite et al., 2021), which mapped the rodent behavioral hierarchy, allowed a multiscale assessment of contextual effects. In a rat model of fragile X syndrome, mutant and wild-type rats had similar locomotor behavior, but mutants showed abnormally long grooming epochs, characterized by different behavioral sequences and states compared with wild-type rats (Figure 7C). This study highlights the advantages of a multiscale comparative taxonomy of naturalistic behavior for investigating behavioral manifestations of complex conditions such as autism spectrum disorder and for classifying the behavioral effects of different drugs. Another benefit of this multiscale approach is that it allows the investigation of the individuality of rodent behavior, revealing that, although all animals drew from a common set of temporal patterns, differences between individual rats were more pronounced at longer timescales than at shorter ones (Marshall et al., 2021). It would be interesting to further explore the features of individuality across species as a way of testing ecological ideas such as ‘bet-hedging’ (Honegger and de Bivort, 2018).

Multiple timescales of neural activity

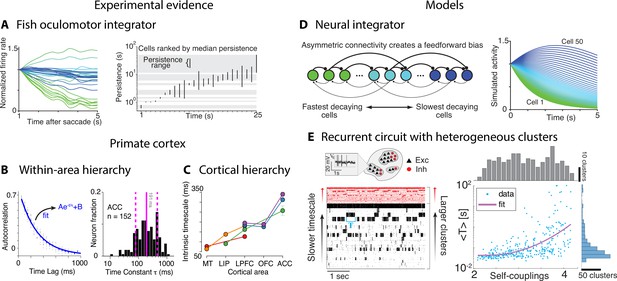

What are the neural mechanisms generating the hierarchy of timescales observed during naturalistic behavior? Is there evidence that neural activity varies simultaneously over multiple timescales? Although no study has directly addressed these questions yet, a number of experimental and theoretical approaches have provided evidence for multiple timescales of neural activity. Some evidence for temporal heterogeneity in neural activity has been reported in restrained animals during stereotyped behavioral assays. A heterogeneous distribution of timescales of neural activity was found in the brainstem of restrained larval zebrafish by measuring the decay time constant of persistent firing across a population of neurons comprising the oculomotor velocity-to-position neural integrator (Figure 8A; Miri et al., 2011). Decay times varied over a vast range, from 0.5 to 50 s, across cells in individual larvae. This heterogeneous distribution of timescales was later confirmed in the primate oculomotor brainstem (Joshua et al., 2013), suggesting that timescale heterogeneity is a common feature of oculomotor integrators conserved across phylogeny. Single-neuron activity may also encode a long memory of task-related variables. In head-fixed monkeys performing a competitive game task, traces of the rewards delivered in previous trials were encoded in single-neuron spiking activity in frontal areas over a wide range of timescales, following a power-law distribution extending up to 10 consecutive trials (Bernacchia et al., 2011).
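Decay time constants of this kind can be estimated, under the simplifying assumption that each neuron’s persistent firing relaxes as a single exponential toward a known baseline, by a straight-line fit to the logarithm of the baseline-subtracted rate. This Python/NumPy sketch is a generic illustration, not the fitting procedure of Miri et al., 2011; the function name, the synthetic rates, and the baseline value are hypothetical.

```python
import numpy as np


def fit_decay_time_constant(t, rate, baseline=0.0):
    """Estimate tau in rate(t) = baseline + A * exp(-t / tau) via a straight-line
    fit to log(rate - baseline). Assumes a single exponential and a known baseline."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(rate, dtype=float) - baseline
    keep = y > 0  # the logarithm is only defined for positive values
    slope, _intercept = np.polyfit(t[keep], np.log(y[keep]), 1)
    return -1.0 / slope


# Synthetic noiseless decays spanning the 0.5-50 s range quoted above.
t = np.linspace(0.0, 10.0, 200)
true_taus = np.array([0.5, 5.0, 50.0])
estimates = [fit_decay_time_constant(t, 20.0 * np.exp(-t / tau) + 2.0, baseline=2.0)
             for tau in true_taus]
```

With noisy recordings, a nonlinear least-squares fit of the exponential itself is usually preferable, because the log transform distorts the noise as the rate approaches baseline.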

Computational principles underlying the heterogeneity of timescales.