Modeling resource allocation strategies for insecticide-treated bed nets to achieve malaria eradication

eLife assessment

This study presents a valuable finding on the optimal prioritization in different malaria transmission settings for the distribution of insecticide-treated nets to reduce the malaria burden. The evidence supporting the claims of the authors is solid. The work will be of interest from a global funder perspective, though somewhat less relevant for individual countries.

https://doi.org/10.7554/eLife.88283.3.sa0

Valuable: Findings that have theoretical or practical implications for a subfield

Solid: Methods, data and analyses broadly support the claims with only minor weaknesses

During the peer-review process the editor and reviewers write an eLife Assessment that summarises the significance of the findings reported in the article (on a scale ranging from landmark to useful) and the strength of the evidence (on a scale ranging from exceptional to inadequate).

Abstract

Large reductions in the global malaria burden have been achieved, but plateauing funding poses a challenge for progressing towards the ultimate goal of malaria eradication. Using previously published mathematical models of Plasmodium falciparum and Plasmodium vivax transmission incorporating insecticide-treated nets (ITNs) as an illustrative intervention, we sought to identify the global funding allocation that maximized impact under defined objectives and across a range of global funding budgets. The optimal strategy for case reduction mirrored an allocation framework that prioritizes funding for high-transmission settings; at intermediate budget levels, these two strategies reduced total cases by 76% and 66%, respectively. Allocation strategies that had the greatest impact on case reductions had smaller near-term impacts on the global population at risk. The optimal funding distribution prioritized high ITN coverage in high-transmission settings endemic for P. falciparum only, while maintaining lower coverage in low-transmission settings. However, at high budgets, 62% of funding was targeted to low-transmission settings co-endemic for P. falciparum and P. vivax. These results support current global strategies to prioritize funding to high-burden P. falciparum-endemic settings in sub-Saharan Africa to minimize clinical malaria burden and progress towards elimination, but highlight a trade-off with ‘shrinking the map’ through a focus on near-elimination settings and with addressing the burden of P. vivax.

Introduction

Global support for malaria eradication has fluctuated in response to changing health policies over the past 75 years. From near-global endemicity in the 1900s, over 100 countries have eliminated malaria, with 10 of these certified malaria-free by the World Health Organization (WHO) in the last two decades (Feachem et al., 2010; Shretta et al., 2017; Weiss et al., 2019). Despite this success, 41% and 57% of the global population in 2017 were estimated to live in areas at risk of infection with Plasmodium falciparum and Plasmodium vivax, respectively (Weiss et al., 2019; Battle et al., 2019). In 2021 there were an estimated 247 million new malaria cases and over 600,000 deaths, primarily in children under 5 years of age (World Health Organization, 2007; World Health Organization, 2022b). Mosquito resistance to the insecticides used in vector control, parasite resistance to first-line therapeutics and evasion of diagnostic tests, and local active conflicts continue to threaten elimination efforts (World Health Organization, 2007; World Health Organization, 2022a). Nevertheless, the global community continues to strive towards the ultimate aim of eradication, which could save millions of lives and thus offer high returns on investment (Chen et al., 2018; Strategic Advisory Group on Malaria Eradication, 2020).

The global goals outlined in the Global Technical Strategy for Malaria (GTS) 2016–2030 include reducing malaria incidence and mortality rates by 90%, achieving elimination in 35 countries, and preventing re-establishment of transmission in all countries currently classified as malaria-free by 2030 (World Health Organization, 2007; World Health Organization, 2015). Various stakeholders have also set timelines for the wider goal of global eradication, ranging from 2030 to 2050 (World Health Organization, 2007; World Health Organization, 2020; Chen et al., 2018; Strategic Advisory Group on Malaria Eradication, 2020). However, there remains a lack of consensus on how best to achieve this longer-term aspiration. Historically, substantial progress was made in eliminating malaria, mainly in lower-transmission countries in temperate regions, during the Global Malaria Eradication Program in the 1950s, with the global population at risk of malaria declining from around 70% of the world population in 1950 to 50% in 2000 (Hay et al., 2004). Renewed commitment to malaria control in the early 2000s with the Roll Back Malaria initiative subsequently extended the focus to the highly endemic areas in sub-Saharan Africa (Feachem et al., 2010). Whilst it is now widely acknowledged that the current tool set is insufficient in itself to eradicate the parasite, there continues to be debate about how resources should be allocated (Snow, 2015). Some advocate for a focus on high-burden settings to lower the overall global burden (World Health Organization, 2007; World Health Organization, 2019), while others call for increased funding to middle-income, low-burden countries through a ‘shrink the map’ strategy, in which elimination is considered a driver of global progress (Newby et al., 2016).
A third set of policy options is driven by equity considerations, such as allocating funds to achieve equal funding per person at risk, equal access to bed nets and treatment, the maximum number of lives saved, or equitable overall health status (World Health Organization, 2007; World Health Organization, 2013; Raine et al., 2016).

Global strategies are influenced by international donors, who account for 68% of the global investment in malaria control and elimination activities (World Health Organization, 2007; World Health Organization, 2022b). The Global Fund and the U.S. President’s Malaria Initiative are two of the largest contributors to this investment. Their strategies pursue a combined approach, prioritizing malaria reduction in high-burden countries while achieving sub-regional elimination in select settings (The Global Fund, 2021; United States Agency for International Development & Centers for Disease Control and Prevention, 2021). Given that global investment in malaria control and elimination still falls short of the 6.8 billion USD currently estimated to be needed to meet the GTS 2016–2030 goals (World Health Organization, 2007; World Health Organization, 2022b), an optimized strategy for allocating limited resources is critical to maximizing the chance of achieving the GTS goals and longer-term eradication aspirations.

In this study, we use mathematical modeling to explore the optimal allocation of limited global resources to maximize the long-term reduction in P. falciparum and P. vivax malaria. Our aim is to determine whether financial resources should initially focus on high-transmission countries, low-transmission countries, or a balance between the two across a range of global budgets. In doing so, we consider potential trade-offs between short-term gains and long-term impact. We use deterministic compartmental versions of two previously developed and tested individual-based models of P. falciparum and P. vivax transmission, respectively (Griffin et al., 2010; White et al., 2018). Using the compartmental model structures allows us to fully explore the space of possible resource allocation decisions using optimization, which would be prohibitively costly with more complex individual-based models. Furthermore, to evaluate the impact of resource allocation options, we focus on a single intervention: insecticide-treated nets (ITNs). Although national malaria elimination programs in reality encompass a broad range of preventative and therapeutic tools alongside surveillance strategies that change as transmission decreases, this simplification is made for computational feasibility. ITNs were chosen because they (a) provide both individual protection and population-level transmission reductions (i.e. indirect effects); (b) are the most widely used single malaria intervention other than first-line treatment; and (c) have extensive distribution and costing data available, allowing us to incorporate their decreasing technical efficiency at high coverage.

Results

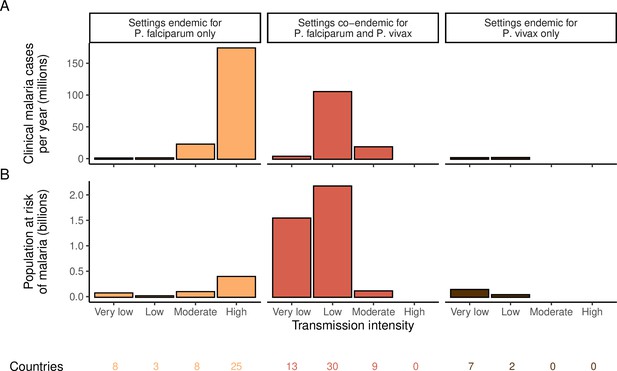

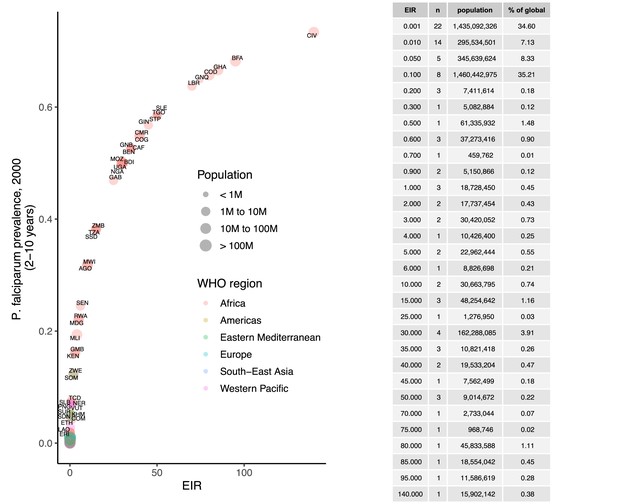

We identified 105 malaria-endemic countries based on P. falciparum and P. vivax prevalence estimates for 2000 (before the scale-up of interventions), of which 44, 9, and 52 were endemic for P. falciparum only, P. vivax only, and both species (co-endemic), respectively. Globally, the clinical burden of malaria was concentrated in high-transmission settings endemic for P. falciparum only, followed by low-transmission settings co-endemic for P. falciparum and P. vivax (Figure 1A). Conversely, 89% of the global population at risk of malaria was located in co-endemic settings with very low and low transmission intensities (Figure 1B). All 25 countries with high transmission intensity and 11 of the 17 countries with moderate transmission intensity were in Africa, while almost half of the global cases and population at risk in low-transmission co-endemic settings were in India.

Global distribution of P. falciparum and P. vivax malaria burden in 2000 (in the absence of insecticide-treated nets) obtained from the Malaria Atlas Project (Weiss et al., 2019; Battle et al., 2019).

(A) The annual number of clinical cases and (B) the population at risk of malaria across settings with different transmission intensities and endemic for P. falciparum, P. vivax, or co-endemic for both species. The number of countries in each setting is indicated below the figure.

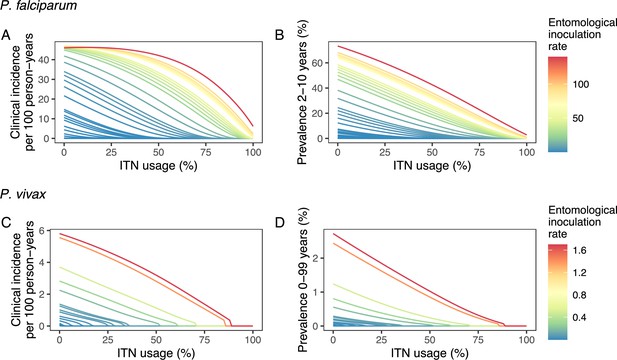

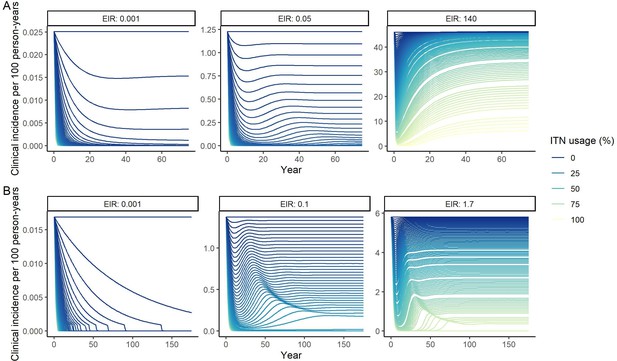

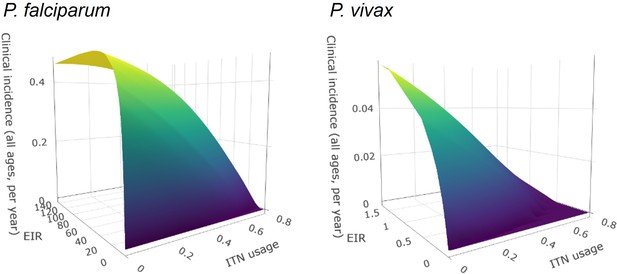

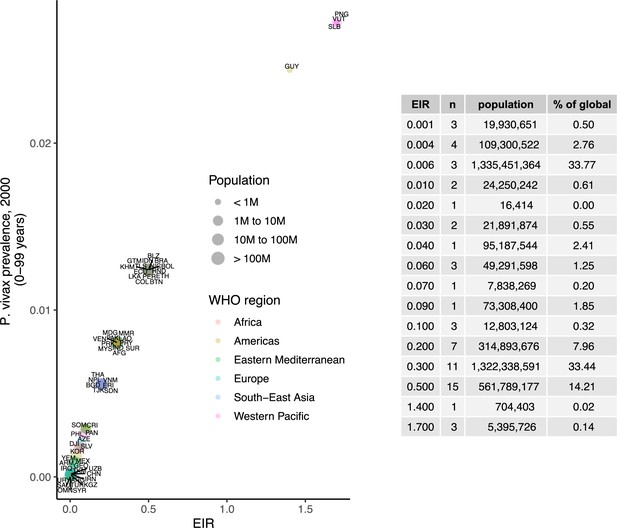

Deterministic compartmental versions of two previously published and validated mathematical models of P. falciparum and P. vivax malaria transmission dynamics (Griffin et al., 2010; Griffin et al., 2014; Griffin et al., 2016; White et al., 2018) were used to explore associations between ITN use and clinical malaria incidence. In model simulations, the relationship between ITN usage and malaria infection outcomes varied by parasite species and by the baseline entomological inoculation rate (EIR), which represents local transmission intensity (Figure 2). The same increase in ITN usage achieved a larger relative reduction in clinical incidence in low-EIR than in high-EIR settings. Low levels of ITN usage were sufficient to eliminate malaria in low-transmission settings, whereas high ITN usage was necessary to substantially decrease clinical incidence in high-EIR settings. At the same EIR value, ITNs also led to a larger relative reduction in P. falciparum than in P. vivax clinical incidence. However, ITN usage of 80% was not sufficient to eliminate either P. falciparum or P. vivax in the highest-transmission settings. Combined, and assuming a maximum ITN usage of 80%, the models projected that ITNs could reduce global P. falciparum cases by 83.6% (from 252.0 million in 2000) and global P. vivax cases by 99.9% (from 69.3 million in 2000).
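As a quick arithmetic check, the species-specific projections above can be combined into a single global figure. The inputs are only the numbers quoted in the preceding paragraph; the sketch simply sums the remaining cases across species.

```python
# Species-specific figures quoted in the text (clinical cases in 2000, millions)
baseline = {"P. falciparum": 252.0, "P. vivax": 69.3}
# Projected relative reductions at the maximum ITN usage of 80%
reduction = {"P. falciparum": 0.836, "P. vivax": 0.999}

# Cases left in each species after the projected reduction
remaining = {sp: baseline[sp] * (1.0 - reduction[sp]) for sp in baseline}
total_baseline = sum(baseline.values())
total_remaining = sum(remaining.values())
overall_reduction = 1.0 - total_remaining / total_baseline

print(f"Remaining cases: {total_remaining:.1f} of {total_baseline:.1f} million")
print(f"Overall reduction: {overall_reduction:.1%}")
```

The combined reduction of about 87.1% (roughly 41.4 million remaining cases out of 321.3 million) is consistent, up to rounding of the reported percentages, with the 87% reduction and 41.5 million cases reported for the maximum budget in Table 1.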

Modeled impact of insecticide-treated net (ITN) usage on malaria epidemiology by the setting-specific transmission intensity, represented by the baseline entomological inoculation rate.

The impact on the clinical incidence and prevalence of P. falciparum malaria (panels A and B) and on the clinical incidence and prevalence of P. vivax malaria (panels C and D) is shown. Panels A and C represent the clinical incidence for all ages.

We next used a non-linear generalized simulated annealing function to determine the optimal global resource allocation for ITNs across a range of budgets. We defined optimality as the funding allocation across countries that minimizes a given objective. We considered two objectives: first, reducing the global number of clinical malaria cases, and second, reducing both the global number of clinical cases and the number of settings that have not yet reached a pre-elimination phase. The latter can be interpreted as accounting for an additional positive contribution of progress towards elimination on top of a reduced case burden (e.g. general health system strengthening through a reduced focus on malaria). To relate funding to impact on malaria, we incorporated a non-linear relationship between costs and ITN usage, under which the marginal cost of ITN distribution increases at high coverage levels (Bertozzi-Villa et al., 2021). We considered a range of fixed budgets, with the maximum budget defined as the budget required to achieve the lowest possible number of cases in the model. Low, intermediate, and high budget levels refer to 25%, 50%, and 75% of this maximum, respectively.
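The optimization set-up described above can be sketched in a few lines. This is a minimal, hypothetical illustration and not the study's implementation: the four settings, the exponential case-response curves, the saturating cost curve, and the pre-elimination threshold are all invented, and a plain simulated annealing loop with a linear cooling schedule stands in for the generalized simulated annealing routine used in the study. The decision variables are the budget shares assigned to each setting; the objective is total cases, optionally plus a penalty `weight` for every setting not yet in pre-elimination.

```python
import math
import random

# Hypothetical settings (illustrative numbers only, not from the study):
# cases0 = baseline annual clinical cases (millions) without ITNs,
# k      = responsiveness of cases to ITN usage (larger in lower-EIR settings),
# pop    = population at risk (billions).
SETTINGS = [
    {"name": "high EIR", "cases0": 150.0, "k": 1.5, "pop": 0.3},
    {"name": "moderate EIR", "cases0": 60.0, "k": 3.0, "pop": 0.4},
    {"name": "low EIR", "cases0": 80.0, "k": 6.0, "pop": 1.5},
    {"name": "very low EIR", "cases0": 30.0, "k": 12.0, "pop": 1.9},
]
MAX_USAGE = 0.8  # maximum ITN usage assumed in the study


def usage_from_spend(spend, pop):
    """Hypothetical saturating cost curve: each extra unit of usage costs more."""
    return MAX_USAGE * (1.0 - math.exp(-spend / pop))


def cases_at_usage(setting, usage):
    """Hypothetical equilibrium response of clinical cases to ITN usage."""
    return setting["cases0"] * math.exp(-setting["k"] * usage)


def objective(shares, budget, weight=0.0, pre_elim_frac=0.01):
    """Total cases plus `weight` per setting not yet in pre-elimination.

    Pre-elimination is approximated (an assumption) as cases falling below
    `pre_elim_frac` of the setting's baseline.
    """
    total, not_pre_elim = 0.0, 0
    for setting, share in zip(SETTINGS, shares):
        usage = usage_from_spend(share * budget, setting["pop"])
        cases = cases_at_usage(setting, usage)
        total += cases
        if cases > pre_elim_frac * setting["cases0"]:
            not_pre_elim += 1
    return total + weight * not_pre_elim


def anneal(budget, weight=0.0, steps=20000, t0=10.0, seed=1):
    """Plain simulated annealing over budget shares that sum to 1."""
    rng = random.Random(seed)
    n = len(SETTINGS)
    current = [1.0 / n] * n                    # start from an equal split
    f_cur = objective(current, budget, weight)
    best, f_best = list(current), f_cur
    for i in range(steps):
        temp = t0 * (1.0 - i / steps) + 1e-9   # linear cooling schedule
        # Proposal: move up to 20% of one setting's share to another, which
        # keeps every share non-negative and the total equal to 1.
        src, dst = rng.sample(range(n), 2)
        delta = 0.2 * rng.random() * current[src]
        cand = list(current)
        cand[src] -= delta
        cand[dst] += delta
        f_cand = objective(cand, budget, weight)
        # Metropolis rule: always accept improvements, sometimes accept worse moves
        if f_cand < f_cur or rng.random() < math.exp((f_cur - f_cand) / temp):
            current, f_cur = cand, f_cand
            if f_cur < f_best:
                best, f_best = list(current), f_cur
    return best, f_best


# Budget levels as fractions of a hypothetical maximum of 4 budget units
for level in (0.25, 0.5, 0.75):
    shares, value = anneal(budget=level * 4.0)
    print(level, [round(s, 2) for s in shares], round(value, 1))
```

With `weight=0.0` the objective corresponds to pure case reduction, and a positive `weight` mimics the second objective. With these invented numbers the marginal benefit of the first unit of spending is largest in the small, high-EIR setting, but any resulting allocation reflects the toy curves and should not be read as a reproduction of Figure 4.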

In our main analysis, we ignored the time dimension over which funds are distributed, instead focusing on the endemic equilibrium reached for each level of allocation (sensitivity to this assumption is explored in a second analysis with dynamic re-allocation every 3 years). The optimal strategies were compared with three existing approaches to resource allocation: (1) prioritization of high-transmission settings, (2) prioritization of low-transmission (near-elimination) settings, and (3) proportional allocation by disease burden. Strategies prioritizing high- or low-transmission settings involved the sequential allocation of funding to groups of countries based on their transmission intensity (from highest to lowest EIR or vice versa). The proportional allocation strategy mimics the current allocation algorithm employed by the Global Fund: budget shares are distributed according to the malaria disease burden in the 2000–2004 period (The Global Fund, 2019). To allow comparison with this existing funding model, we also started allocation decisions from the year 2000.
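The two sequential benchmarks and the proportional benchmark can be sketched as simple allocation rules. This is a hypothetical illustration: the setting groups and their costs are invented, and it assumes (our reading, not stated explicitly above) that in a sequential strategy each group is funded up to the cost of reaching maximum ITN usage before the next group receives anything.

```python
# Hypothetical groups of settings: baseline EIR, baseline clinical cases
# (millions; used for proportional shares) and the cost of reaching the
# maximum ITN usage (arbitrary budget units). All values are illustrative.
SETTINGS = [
    {"name": "high", "eir": 100.0, "cases": 150.0, "cost_to_cap": 1.0},
    {"name": "moderate", "eir": 20.0, "cases": 60.0, "cost_to_cap": 1.5},
    {"name": "low", "eir": 2.0, "cases": 80.0, "cost_to_cap": 4.0},
    {"name": "very low", "eir": 0.2, "cases": 30.0, "cost_to_cap": 5.0},
]


def prioritize_by_eir(settings, budget, highest_first=True):
    """Fund groups one at a time, ordered by EIR, each up to its cost cap."""
    order = sorted(settings, key=lambda s: s["eir"], reverse=highest_first)
    alloc = {s["name"]: 0.0 for s in settings}
    remaining = budget
    for s in order:
        spend = min(remaining, s["cost_to_cap"])
        alloc[s["name"]] = spend
        remaining -= spend
        if remaining <= 0.0:
            break
    return alloc


def proportional_by_burden(settings, budget):
    """Fixed budget shares proportional to baseline burden.

    Funds beyond a group's cost cap are left unspent rather than reassigned,
    mirroring the modeling assumption noted in the Discussion.
    """
    total = sum(s["cases"] for s in settings)
    return {
        s["name"]: min(budget * s["cases"] / total, s["cost_to_cap"])
        for s in settings
    }


budget = 2.0
print(prioritize_by_eir(SETTINGS, budget))                       # high-transmission first
print(prioritize_by_eir(SETTINGS, budget, highest_first=False))  # low-transmission first
print(proportional_by_burden(SETTINGS, budget))                  # burden-based shares
```

At a budget of 2 units, the high-first rule funds the high- and moderate-EIR groups, the low-first rule spends everything on the very-low-EIR group, and the proportional rule spreads funds by baseline burden. At 5 units the proportional rule leaves part of the budget unspent because the high-burden group hits its cap, which is one way fixed shares can lose impact as budgets grow.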

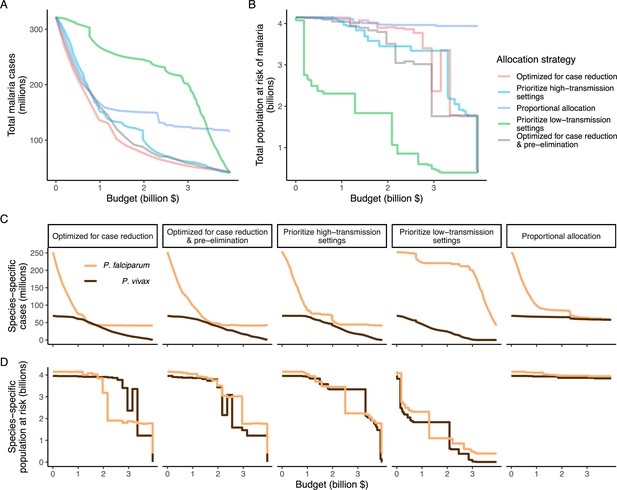

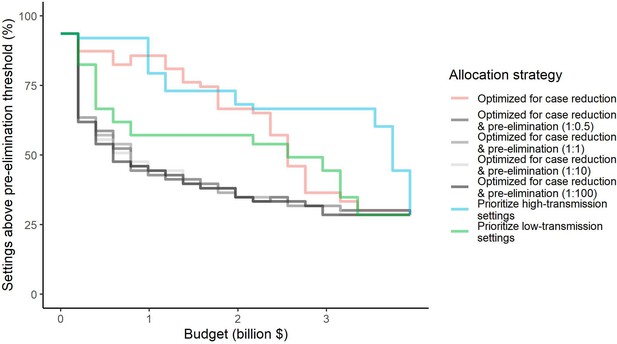

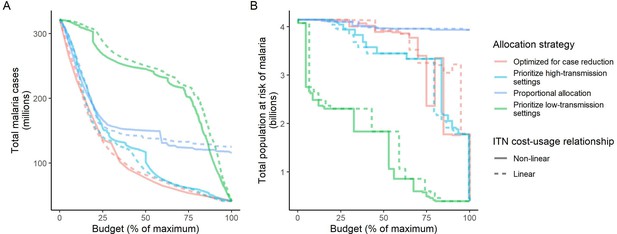

We found the optimal strategies for reducing total malaria cases (i.e. global burden) and for case reduction and pre-elimination to be similar to the strategy that prioritized funding for high-transmission settings. These three strategies achieved the largest reductions in global malaria cases at all budgets, including reductions of 76%, 73%, and 66% at the intermediate budget level, respectively (Figure 3A, Table 1). At low to intermediate budgets, the proportional allocation strategy also reduced malaria cases substantially, by up to 53%. While these four scenarios had very similar effects on malaria cases at low budgets, they diverged as funding increased, with the proportional allocation strategy achieving little further reduction. Depending on the available budget, the optimal strategy for case reduction resulted in up to 31% fewer cases than prioritization of high-transmission settings and up to 64% fewer cases than proportional allocation, corresponding to differences of up to 37.9 and 74.5 million cases globally, respectively.

Global clinical cases and population at risk of malaria under different allocation strategies at varying budgets.

The impact on total malaria cases (panel A), total population at risk (panel B), individual P. falciparum and P. vivax cases (panel C), and population at risk of either species (panel D) are shown. Budget levels range from 0, representing no usage of insecticide-treated nets, to the budget required to achieve the maximum possible impact. Optimizing for case reduction generally leads to declining populations at risk as the budget increases, but this is not guaranteed due to the possibility of redistribution of funding between settings to minimize cases. The strategy optimizing case reduction and pre-elimination shown here places the same weighting (1:1) on reaching pre-elimination in a setting as on averting total cases, but conclusions were the same for weights of 0.5–100 on pre-elimination.

Relative reduction in malaria cases and population at risk under different allocation strategies.

Reductions are shown relative to the baseline of 321 million clinical cases and 4.1 billion persons at risk in the absence of interventions. Low, intermediate, and high budget levels represent 25%, 50%, and 75% of the maximum budget, respectively. The strategy optimizing case reduction and pre-elimination shown here places the same weighting (1:1) on reaching pre-elimination in a setting as on averting total cases.

| Scenario | Budget level | Clinical cases (millions) | Reduction in clinical cases (%) | Population at risk (billions) | Reduction in population at risk (%) |
|---|---|---|---|---|---|
| Optimized for case reduction | Low | 136.1 | 58 | 4.1 | 0 |
| | Intermediate | 77.0 | 76 | 3.9 | 6 |
| | High | 53.9 | 83 | 2.4 | 42 |
| | Maximum | 41.5 | 87 | 0.4 | 91 |
| Optimized for case reduction & pre-elimination | Low | 161.3 | 50 | 4.0 | 3 |
| | Intermediate | 87.8 | 73 | 3.5 | 16 |
| | High | 58.8 | 82 | 1.8 | 58 |
| | Maximum | 41.5 | 87 | 0.4 | 91 |
| Prioritize high-transmission settings | Low | 153.9 | 52 | 4.0 | 2 |
| | Intermediate | 109.5 | 66 | 3.4 | 17 |
| | High | 61.8 | 81 | 3.3 | 19 |
| | Maximum | 41.5 | 87 | 0.4 | 91 |
| Proportional allocation | Low | 166.9 | 48 | 4.1 | 1 |
| | Intermediate | 150.4 | 53 | 4.0 | 4 |
| | High | 123.8 | 61 | 4.0 | 4 |
| | Maximum | 116.0 | 64 | 3.9 | 5 |
| Prioritize low-transmission settings | Low | 268.2 | 17 | 2.3 | 44 |
| | Intermediate | 245.2 | 24 | 1.8 | 56 |
| | High | 202.1 | 37 | 0.5 | 88 |
| | Maximum | 41.5 | 87 | 0.4 | 91 |
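The relative reductions in the clinical-case columns of the table can be recomputed from the case counts. The sketch below uses only numbers reported in this section and assumes the unrounded baseline of 252.0 + 69.3 = 321.3 million cases (the species-specific totals given earlier in the Results) rather than the rounded 321 million in the table caption; with 321 million, the low-budget value for prioritizing low-transmission settings would round to 16% instead of the reported 17%. Population-at-risk reductions are not checked because the table reports those counts rounded to 0.1 billion.

```python
# Clinical cases (millions) from the table, paired with the reported reductions (%)
BASELINE = 252.0 + 69.3  # P. falciparum + P. vivax cases in 2000 (millions)

TABLE1 = {
    "Optimized for case reduction": [(136.1, 58), (77.0, 76), (53.9, 83), (41.5, 87)],
    "Optimized for case reduction & pre-elimination": [(161.3, 50), (87.8, 73), (58.8, 82), (41.5, 87)],
    "Prioritize high-transmission settings": [(153.9, 52), (109.5, 66), (61.8, 81), (41.5, 87)],
    "Proportional allocation": [(166.9, 48), (150.4, 53), (123.8, 61), (116.0, 64)],
    "Prioritize low-transmission settings": [(268.2, 17), (245.2, 24), (202.1, 37), (41.5, 87)],
}
LEVELS = ("Low", "Intermediate", "High", "Maximum")


def relative_reduction(cases, baseline=BASELINE):
    """Percentage reduction relative to the no-ITN baseline, rounded to an integer."""
    return round(100.0 * (1.0 - cases / baseline))


# Every recomputed value should match the reported one
for scenario, rows in TABLE1.items():
    for level, (cases, reported) in zip(LEVELS, rows):
        assert relative_reduction(cases) == reported, (scenario, level)

# Comparisons quoted in the text
gap = 116.0 - 41.5  # proportional allocation vs optimal, maximum budget
print(f"{gap:.1f} million cases, {gap / 116.0:.0%} of the proportional-allocation total")
print(f"{245.2 / 77.0:.1f}x the cases when prioritizing low-transmission settings (intermediate budget)")
```

The final two lines reproduce comparisons made in the text: the maximum-budget gap between proportional allocation and the optimal strategy for case reduction (74.5 million cases, 64% of the proportional-allocation total) and the roughly 3.2-fold excess of cases when prioritizing low-transmission settings at the intermediate budget.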

We additionally found a trade-off between reducing global cases and reducing the global population at risk of malaria. Neither the optimal strategies nor the strategy prioritizing high-transmission settings achieved substantial reductions in the global population at risk until large investments were reached (Figure 3B, Table 1). Even at a high budget, the global population at risk was reduced by only 19% under the scenario prioritizing high-transmission settings, compared with reductions of 42–58% for the optimal strategies, while proportional allocation had almost no effect on this outcome. Conversely, diverting funding to prioritize low-transmission settings was highly effective at increasing the number of settings eliminating malaria, achieving a 56% reduction in the global population at risk already at intermediate budgets. However, this investment reduced the total malaria case load by only 24% (Figure 3, Table 1). At high budget levels, prioritizing low-transmission settings resulted in up to 3.8 times as many cases as the optimal allocation for case reduction, a total of 159.4 million more cases. Although the population at risk remained relatively large under the optimal strategy for case reduction and pre-elimination, this strategy nevertheless led to pre-elimination in more malaria-endemic settings than any other strategy (Appendix 1—figure 7), while also keeping cases close to the minimum across all budgets (Figure 3).

The allocation strategies also had differential impacts on P. falciparum and P. vivax cases, with case reductions generally occurring first for P. falciparum except when prioritizing low-transmission settings. Under all other allocation strategies, P. vivax cases were not substantially affected at low global budgets, and proportional allocation had almost no effect on P. vivax clinical burden at any budget (Figure 3C), leading to a temporary increase in the proportion of total cases attributable to P. vivax. The global population at risk remained high under the optimal strategy for case reduction even at high budgets, partly because of a large remaining population at risk of P. vivax infection (Figure 3D), which was not targeted when aiming to minimize total cases (Figure 1).

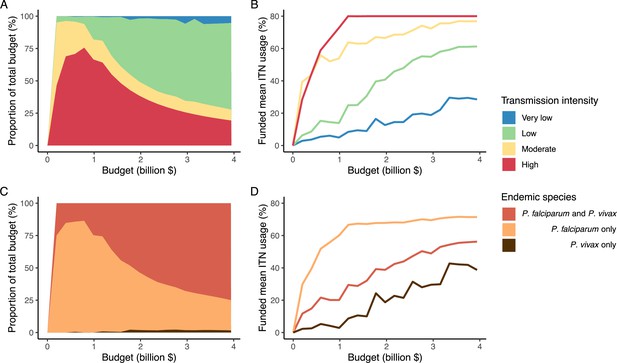

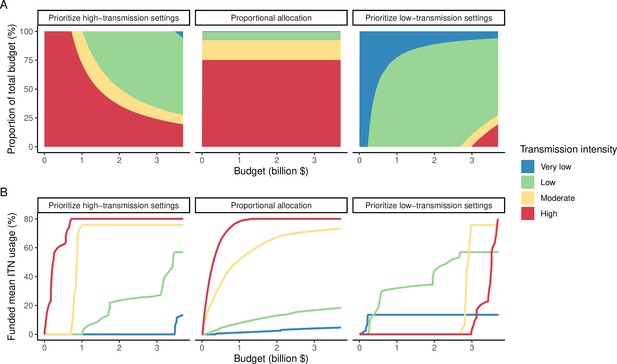

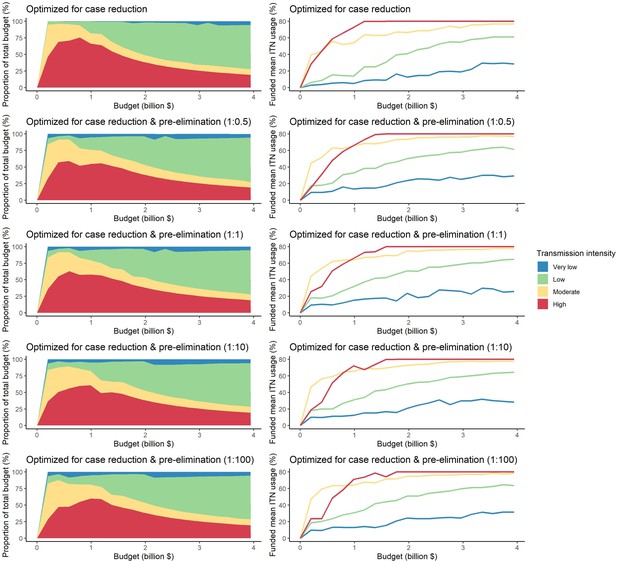

The optimized distribution of funding to minimize clinical burden depended on the available global budget and was driven by setting-specific transmission intensity and population at risk (Figure 4, Figure 1). At very low to low budget levels, as much as 85% of funding was allocated to moderate- to high-transmission settings (Figure 4A, Appendix 1—figure 8A). As a result, ITN usage reached the maximum of 80% in high-transmission settings with smaller populations even at low budgets, while remaining lower in low-transmission settings with larger populations (Figure 4B, Appendix 1—figure 8B). The proportion of the budget allocated to low- and very low-transmission settings increased with increasing budgets, and low-transmission settings received the majority of funding at intermediate to maximum budgets. This allocation pattern remained very similar when optimizing for both case reduction and pre-elimination (Appendix 1—figure 9). Similar patterns were observed for the optimized distribution of funding between settings endemic for P. falciparum only and settings co-endemic for P. falciparum and P. vivax (Figure 4C–D), with the former prioritized at low to intermediate budgets. At the maximum budget, 70% of global funding was targeted at low- and very low-transmission settings co-endemic for both parasite species.

Optimal strategy for funding allocation across settings to minimize malaria case burden at varying budgets.

Panels show optimized allocation patterns across settings of different transmission intensities (panels A and B) and different endemic parasite species (panels C and D). The proportion of the total budget allocated to each setting (panels A and C) and the resulting mean population usage of insecticide-treated nets (ITNs) (panels B and D) are shown.

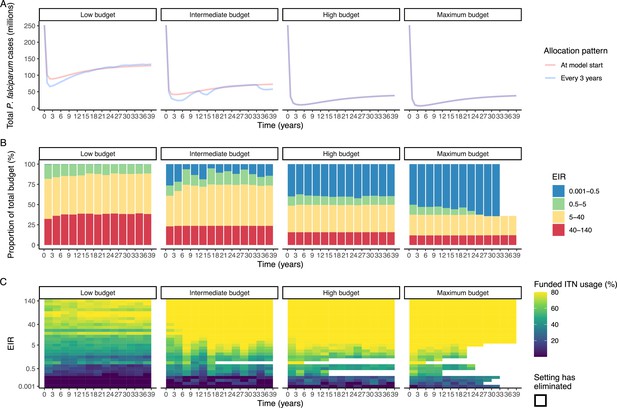

To evaluate the robustness of the results, we conducted a sensitivity analysis on our assumption of ITN distribution efficiency. Results remained similar when assuming a linear relationship between ITN usage and distribution costs (Appendix 1—figure 10). While the main analysis involves a single allocation decision to minimize long-term case burden (leading to constant ITN usage over time in each setting irrespective of subsequent changes in burden), we additionally explored an optimal strategy with dynamic re-allocation of funding every 3 years to minimize cases in the short term. At high budgets, re-allocating funding every 3 years to minimize P. falciparum cases, which captures dynamic changes over time, led to the same case reductions over time as a one-time optimization with constant ITN usage (Appendix 1—figure 11). At lower budgets, re-allocation every 3 years achieved a higher impact at several timepoints, but total cases remained similar between the two approaches. Although re-allocating resources from settings that achieved elimination to higher-transmission settings did not lead to substantially fewer cases, it reduced total spending over the 39-year period in some scenarios (Appendix 1—figure 11).
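The difference between a one-time allocation and re-allocation every 3 years can be illustrated with a toy loop. Everything here is hypothetical: the greedy `allocate` rule (fund settings with remaining cases, largest first, each up to a per-setting cap) stands in for the optimizer, `step` is an invented one-year decay of cases with spending, and the elimination threshold is arbitrary. The point is structural: with a one-time decision, spending continues in settings after they eliminate, whereas re-allocation can shift funds away from them and, once no setting needs funding, leave budget unspent.

```python
import math

ELIM_THRESHOLD = 0.01  # hypothetical: cases (millions) below which a setting counts as eliminated


def allocate(cases, budget, cap):
    """Hypothetical greedy rule standing in for the optimizer: fund settings
    that still have cases, largest first, each up to `cap` budget units."""
    alloc = {name: 0.0 for name in cases}
    remaining = budget
    for name in sorted(cases, key=cases.get, reverse=True):
        if cases[name] > 0.0 and remaining > 0.0:
            alloc[name] = min(cap, remaining)
            remaining -= alloc[name]
    return alloc


def step(cases, alloc, efficacy=0.5):
    """Toy one-year update: cases decay with spending; tiny counts drop to zero."""
    updated = {}
    for name, c in cases.items():
        c_next = c * math.exp(-efficacy * alloc[name])
        updated[name] = c_next if c_next >= ELIM_THRESHOLD else 0.0
    return updated


def simulate(cases0, budget, cap, horizon=39, period=None):
    """period=None keeps the year-0 allocation fixed (one-time decision);
    otherwise funding is re-allocated every `period` years."""
    cases = dict(cases0)
    alloc = allocate(cases, budget, cap)
    spent = 0.0
    for year in range(horizon):
        if period is not None and year > 0 and year % period == 0:
            alloc = allocate(cases, budget, cap)
        spent += sum(alloc.values())
        cases = step(cases, alloc)
    return cases, spent


cases0 = {"high": 50.0, "moderate": 10.0, "low": 2.0}
once_cases, once_spent = simulate(cases0, budget=2.0, cap=1.0)
re_cases, re_spent = simulate(cases0, budget=2.0, cap=1.0, period=3)
print(f"one-time: {sum(once_cases.values()):.2f}M cases left, {once_spent:.1f} units spent")
print(f"every 3 years: {sum(re_cases.values()):.2f}M cases left, {re_spent:.1f} units spent")
```

In this toy, re-allocation can never spend more than the one-time strategy, because every allocation is bounded by the same annual budget while the one-time allocation is spent in full every year; whether re-allocation also averts more cases depends entirely on the invented dynamics.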

Discussion

Our study highlights the potential impact that funding allocation decisions could have on the global burden of malaria. We estimated that optimizing ITN allocation to minimize global clinical incidence could, at a high budget, avert 83% of clinical cases compared with no intervention. In comparison, the optimal strategy to minimize clinical incidence and maximize the number of settings reaching pre-elimination averted 82% of clinical cases, prioritizing high-transmission settings 81%, proportional allocation 61%, and prioritizing low-transmission settings 37%. Our results support initially prioritizing funding towards reaching high ITN usage in high-burden P. falciparum-endemic settings to minimize global clinical cases and advance elimination in more malaria-endemic settings, but highlight the trade-off between this strategy and both reducing the global population at risk of malaria and addressing the burden of P. vivax.

Prioritizing low-transmission settings demonstrated how focusing on ‘shrinking the malaria map’ by quickly reaching elimination in low-transmission countries diverts funding away from the high-burden countries with the largest caseloads. Prioritizing low-transmission settings achieved elimination in 42% of settings and reduced the global population at risk by 56% when 50% of the maximum budget had been spent, but also resulted in 3.2 times as many clinical cases as the optimal allocation scenario. Investing a larger share of global funding in high-transmission settings aligns more closely with the current WHO ‘high burden to high impact’ approach, which emphasizes reducing the malaria burden in the 11 countries that account for 70% of global cases (World Health Organization, 2007; World Health Organization, 2019). Previous research supports this approach, finding that the 20 highest-burden countries would need to obtain 88% of global investments to reach case and mortality risk estimates in alignment with GTS goals (Patouillard et al., 2017). This is similar to the optimized funding strategy modeled here, which allocated up to 76% of very low budgets to high-transmission settings located in sub-Saharan Africa. An initial focus on high- and moderate-transmission settings is further supported by our finding that a balance can be struck between achieving close to optimal case reductions and progressing towards elimination in the maximum number of settings. Even within a single country, targeting interventions to local hotspots has been shown to lead to greater cost savings than universal application (Barrenho et al., 2017), and could lead to elimination in settings where untargeted interventions would have little impact (Bousema et al., 2012).

Assessing optimal funding patterns is a global priority due to the gap between the supply of and demand for resources for malaria control and elimination (World Health Organization, 2007; World Health Organization, 2022b). However, allocation decisions will remain important even if more funding becomes available, as some of the largest differences in total cases between the modeled strategies occurred at intermediate to high budgets. Our results suggest that the majority of global funding should be directed to low-transmission settings co-endemic for P. falciparum and P. vivax only at high budgets, once ITN use has already been maximized in high-transmission settings. Global allocation decisions are likely to affect P. falciparum and P. vivax burden differently, which could have implications for the future global epidemiology of malaria. For example, with a focus on disease burden reduction, a temporary increase in the proportion of malaria cases attributable to P. vivax was projected, in line with recent observations in near-elimination areas (Battle et al., 2019; Price et al., 2020). Nevertheless, even when international funding for malaria increased between 2007 and 2009, African countries remained the major recipients of financial support, while P. vivax-dominant countries were not as well funded (Snow et al., 2010). This serves as a reminder that achieving the elimination of malaria from all endemic countries will ultimately require targeting investments to also address the burden of P. vivax malaria.

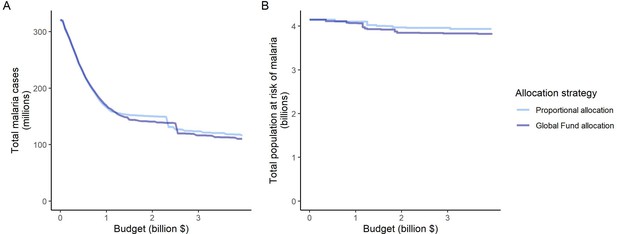

Different priorities in resource allocation decisions greatly affect which countries receive funding and what health benefits are achieved. The modeled strategies follow key ethical principles in the allocation of scarce healthcare resources, such as targeting those of greatest need (prioritizing high-transmission settings, proportional allocation) or those with the largest expected health gain (optimized for case reduction, prioritizing high-transmission settings) (World Health Organization, 2007; World Health Organization, 2013). Allocation proportional to disease burden did not achieve as great an impact as other strategies because the funding share assigned to settings was constant irrespective of the invested budget and its impact. In modeling this strategy, we did not reassign excess funding in high-transmission settings to other malaria interventions, as would likely occur in practice. This illustrates that such an allocation approach can target certain countries disproportionately and result in further inequities in health outcomes (Barrenho et al., 2017). From an international funder perspective, achieving vertical equity might, therefore, also encompass higher disbursements to countries with lower affordability of malaria interventions (Barrenho et al., 2017), as reflected in the Global Fund’s proportional allocation formula, which accounts for the economic capacity of countries and specific strategic priorities (The Global Fund, 2019). While these factors were not included in the proportional allocation used here, the estimated impact of these two strategies was nevertheless very similar (Appendix 1—figure 12).

While our models are based on country patterns of transmission settings and corresponding populations in 2000, several factors leading to heterogeneity in transmission dynamics at the national and sub-national levels were not modeled and limit our conclusions. Seasonality, changing population size, and geographic variation in P. vivax relapse patterns or in mosquito vectors could affect the projected impact of ITNs and the optimized distribution of resources across settings. The two representative Anopheles species used in the simulations are also both highly anthropophagic, which may have led to an overestimation of the effect of ITNs in some settings. By using ITNs as the sole means to reduce mosquito-to-human transmission, we did not capture the complexities of other key interventions that play a role in burden reduction and elimination, the geospatial heterogeneity in cost-effectiveness and optimized distribution of intervention packages at the sub-national level, or related pricing dynamics (Conteh et al., 2021; Drake et al., 2017). For P. vivax in particular, reducing the global economic burden and achieving elimination will depend on incorporating hypnozoitocidal treatment and G6PD screening into case management (Devine et al., 2021). Furthermore, for both parasites, intervention strategies generally become more focal as transmission decreases, with targeted surveillance and response strategies prioritized over widespread vector control. Therefore, policy decisions should additionally be based on analysis of country-specific contexts, and our findings are not informative for individual country allocation decisions.
Results do, however, account for non-linearities in the relationship between ITN distribution and usage to represent changes in cost as a country moves from control to elimination: interventions that are effective in malaria control settings, such as widespread vector control, may be phased out or limited in favor of more expensive active surveillance and a focus on confirmed diagnoses and at-risk populations (Shretta et al., 2017). We also assumed that transmission settings are independent of each other, and did not allow for the possibility of re-introduction of disease, as has occurred throughout the Eastern Mediterranean from imported cases (World Health Organization, 2007). While our analysis presents allocation strategies to progress toward eradication, the results do not provide insight into the allocation of funding to maintain elimination. In practice, the threat of malaria resurgence has important implications for when to scale back interventions.

Our analysis demonstrates the most impactful allocation of a global funding portfolio for ITNs to reduce global malaria cases. Unifying all funding sources in a global strategic allocation framework as presented here requires international donor allocation decisions to account for available domestic resources. National governments of endemic countries contribute 31% of all malaria-directed funding globally (World Health Organization, 2020), and government financing is a major source of malaria spending in near-elimination countries in particular (Haakenstad et al., 2019). Within the wider political economy which shapes the funding landscape and priority setting, there remains substantial scope for optimizing allocation decisions, including improving the efficiency of within-country allocation of malaria interventions. Subnational malaria elimination in localized settings within a country can also provide motivation for continued elimination in other areas and friendly competition between regions to boost global elimination efforts (Lindblade and Kachur, 2020). Although more efficient allocation cannot fully compensate for projected shortfalls in malaria funding, mathematical modeling can aid efforts in determining optimal approaches to achieve the largest possible impact with available resources.

Materials and methods

Transmission models

We used deterministic compartmental versions of two previously published individual-based transmission models of P. falciparum and P. vivax malaria to estimate the impact of varying ITN usage on clinical incidence in different transmission settings. The P. falciparum model has previously been fitted to age-stratified data from a variety of sub-Saharan African settings to recreate observed patterns in parasite prevalence (PfPR2-10), the incidence of clinical disease, immunity profiles, and vector components relating to rainfall, mosquito density, and the EIR (Griffin et al., 2016). We developed a deterministic version of an existing individual-based model of P. vivax transmission, originally calibrated to data from Papua New Guinea but also shown to reproduce global patterns of P. vivax prevalence and clinical incidence (White et al., 2018). Models for both parasite species are structured by age and heterogeneity in exposure to mosquito bites, and account for human immunity patterns. They model mosquito transmission and population dynamics, and the impact of scale-up of ITNs in identical ways. Full assumptions, mathematical details, and parameter values can be found in Appendix 1 and in previous publications (Griffin et al., 2010; Griffin et al., 2014; Griffin et al., 2016; White et al., 2018).

Data sources

We calibrated the models to baseline transmission intensity in all malaria-endemic countries before the scale-up of interventions, using the year 2000 as an indicator of these levels in line with the current allocation approach taken by the Global Fund (The Global Fund, 2019). Annual EIR was used as a measure of parasite transmission intensity, representing the rate at which people are bitten by infectious mosquitoes. We simulated the models across a wide range of EIRs for P. falciparum and P. vivax. These transmission settings were matched to country-level prevalence data from 2000, resulting in EIRs of 0.001–80 infectious bites per person per year for P. falciparum and 0.001–1.3 for P. vivax. P. falciparum estimates came from parasite prevalence in children aged 2–10 years, and P. vivax estimates came from light microscopy prevalence across all ages, based on standard reporting for each species (Weiss et al., 2019; Battle et al., 2019). The relationship between parasite prevalence and EIR for specific countries is shown in Appendix 1—figures 5 and 6. In each country, the population at risk for P. falciparum and P. vivax malaria was obtained by summing WorldPop gridded 2000 global population estimates (Tatem, 2017) within Malaria Atlas Project transmission spatial limits using geoBoundaries (Runfola et al., 2020) (Appendix 1: Country-level data and modeling assumptions on the global malaria distribution). The analysis was conducted at the national level, since this is also the scale at which international donors make funding decisions (The Global Fund, 2019). As this exercise represents a simplification of reality, population sizes were held constant, and projected population growth is not reflected in the number of cases and the population at risk in different settings. Seasonality was also not incorporated in the model, as EIRs are matched to annual prevalence estimates and the effects of seasonal changes are averaged across the time frame captured.
For all analyses, countries were grouped according to their EIR, resulting in a range of transmission settings compatible with the global distribution of malaria. Results were further summarized by grouping EIRs into broader transmission intensity settings according to WHO prevalence cut-offs of 0–1%, 1–10%, 10–35%, and ≥35% (World Health Organization, 2007; World Health Organization, 2022a). This corresponded approximately to classifying EIRs of less than 0.1, 0.1–1, 1–7, and 7 or higher as very low, low, moderate and high transmission intensity, respectively.
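The mapping from annual EIR to the four broad categories can be written as a simple threshold function. This sketch assumes the approximate EIR cut-offs stated above (0.1, 1 and 7 infectious bites per person per year); the function name is illustrative and not taken from the authors' code.

```python
def transmission_intensity(eir: float) -> str:
    """Broad transmission-intensity category for an annual EIR.

    Assumed cut-offs approximate the WHO prevalence bands
    0-1%, 1-10%, 10-35% and >=35%.
    """
    if eir < 0:
        raise ValueError("EIR must be non-negative")
    if eir < 0.1:
        return "very low"
    if eir < 1:
        return "low"
    if eir < 7:
        return "moderate"
    return "high"
```

Each boundary value belongs to the higher band, matching the ranges "0.1–1", "1–7" and "7 or higher".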

Interventions

In all transmission settings, we simulated the impact of varying ITN coverage on clinical incidence. While most countries implement a package of combined interventions, to reduce the computational complexity of the optimization we considered the impact of ITN usage alone, with 40% of clinical cases treated. ITNs are a core intervention recommended by WHO for large-scale deployment in areas with ongoing malaria transmission (Winskill et al., 2019; World Health Organization, 2007; World Health Organization, 2022a), and vector control accounts for much of the global investment required for malaria control and elimination (Patouillard et al., 2017). Modeled coverages represent population ITN usage between 0 and 80%, with the upper limit reflecting common targets for universal access (Koenker et al., 2018). In each setting, the models were run until clinical incidence stabilized at a new equilibrium with the given ITN usage.

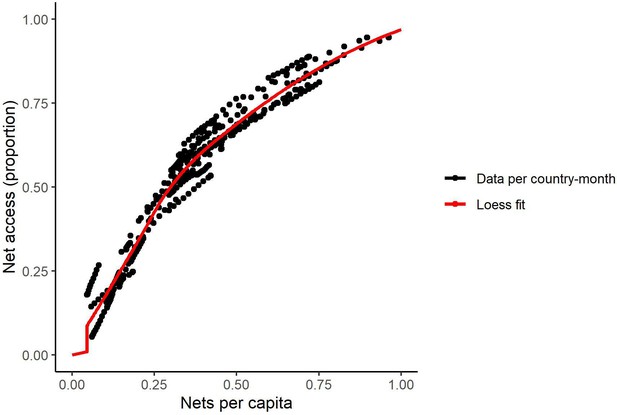

Previous studies have shown that, as population coverage of ITNs increases, the marginal cost of distribution increases as well (Bertozzi-Villa et al., 2021). We incorporated this non-linearity in costs by estimating the annual ITN distribution required to achieve the simulated population usage based on published data from across Africa, assuming that nets would be distributed on a 3-yearly cycle and accounting for ITN retention over time (Appendix 1). The cost associated with a given simulated ITN usage was calculated by multiplying the number of nets distributed per capita per year by the population size and by the unit cost of distributing an ITN, assumed to be $3.50 (Sherrard-Smith et al., 2022).
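The cost calculation reduces to a product of three terms. This minimal sketch assumes that the per-capita annual net requirement for a target usage is already known; the non-linear usage-to-distribution relationship from Appendix 1 is not reproduced, and the function name is hypothetical.

```python
UNIT_COST_USD = 3.50  # cost of distributing one ITN (Sherrard-Smith et al., 2022)


def annual_itn_cost(nets_per_capita_per_year: float,
                    population: float,
                    unit_cost: float = UNIT_COST_USD) -> float:
    """Annual cost = nets per capita per year x population x unit cost.

    nets_per_capita_per_year is an input here; in the paper it is derived
    from the simulated usage via published distribution and retention data.
    """
    if nets_per_capita_per_year < 0 or population < 0 or unit_cost < 0:
        raise ValueError("inputs must be non-negative")
    return nets_per_capita_per_year * population * unit_cost


if __name__ == "__main__":
    # Hypothetical setting: 1 million people needing 0.25 nets per person per year.
    print(annual_itn_cost(0.25, 1_000_000))  # 875000.0
```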

Optimization

The optimal funding allocation for case reduction was determined by finding the allocation of ITNs $\mathbf{b} = (b_1, \ldots, b_n)$ across transmission settings that minimizes the total number of malaria cases at equilibrium. Case totals were calculated as the sum of the product of clinical incidence $\mathrm{cinc}_i$ and the population $p_i$ in each transmission setting $i$. Simultaneous optimization for case reduction and pre-elimination was implemented with an extra weighting term in the objective function, corresponding to a reduction in total remaining cases by a proportion $w$ of the total cases averted by the ITN allocation, $C$. This, therefore, represents a positive contribution for each setting reaching the pre-elimination phase. The weight on pre-elimination relative to case reduction was 0 in the scenario optimized for case reduction alone and ranged from 0.5 to 100 in the other optimization scenarios. Resource allocation must respect a budget constraint, which requires that the total cost of the ITNs distributed does not exceed the initial budget $B$, with $b_i$ the initial number of ITNs distributed in setting $i$ and $c$ the cost of a single pyrethroid-treated net. The second constraint requires that ITN usage, which is a function of the number of ITNs distributed, lies between 0 and 80% (Koenker et al., 2018). For the case-reduction objective, the problem is:

$$\min_{\mathbf{b}} \sum_{i=1}^{n} \mathrm{cinc}_i(b_i)\, p_i \quad \text{subject to} \quad \sum_{i=1}^{n} c\, b_i \le B, \qquad 0 \le u_i(b_i) \le 0.8,$$

where $u_i(b_i)$ denotes ITN usage in setting $i$.

The optimization was undertaken using generalized simulated annealing (Xiang et al., 2013). We included a penalty term in the objective function to incorporate linear constraints. Further details can be found in Appendix 1.
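To illustrate how a penalty term can fold the budget constraint into the objective, the sketch below pairs a penalized case total with a classical simulated-annealing search (Metropolis acceptance, geometric cooling). It is not generalized simulated annealing as implemented in GenSA, and it searches over ITN usage directly rather than over the number of nets. The three settings, the incidence and net-requirement curves, the penalty form and all tuning constants are hypothetical.

```python
import math
import random

# Hypothetical toy settings (not the paper's data): population, baseline
# clinical incidence (cases per person per year) and an ITN effect slope.
SETTINGS = [
    {"pop": 5e6, "cinc0": 0.60, "k": 3.0},
    {"pop": 2e7, "cinc0": 0.10, "k": 4.0},
    {"pop": 5e7, "cinc0": 0.01, "k": 6.0},
]
MAX_USAGE = 0.8   # upper bound on ITN usage
UNIT_COST = 3.50  # USD per net distributed


def incidence(setting, usage):
    """Toy incidence curve: declines exponentially with ITN usage."""
    return setting["cinc0"] * math.exp(-setting["k"] * usage)


def nets_per_capita(usage):
    """Toy convex distribution curve, so marginal cost rises with usage."""
    return 0.25 * usage + 0.5 * usage ** 2


def total_cases(usage):
    return sum(incidence(s, u) * s["pop"] for s, u in zip(SETTINGS, usage))


def total_cost(usage):
    return sum(nets_per_capita(u) * s["pop"] * UNIT_COST
               for s, u in zip(SETTINGS, usage))


def penalized_objective(usage, budget):
    """Total cases; any over-budget point is ranked below every feasible one."""
    violation = total_cost(usage) - budget
    if violation <= 0:
        return total_cases(usage)
    worst_feasible = total_cases([0.0] * len(usage))  # no-ITN cases bound all feasible values
    return worst_feasible + 1.0 + violation / UNIT_COST


def anneal(budget, n_iter=20_000, t0=1e5, cooling=0.9995, step=0.05, seed=1):
    """Classical simulated annealing over per-setting ITN usage."""
    rng = random.Random(seed)
    current = [0.0] * len(SETTINGS)  # start from the feasible no-ITN allocation
    f_current = penalized_objective(current, budget)
    best, f_best = list(current), f_current
    temperature = t0
    for _ in range(n_iter):
        candidate = list(current)
        i = rng.randrange(len(candidate))  # perturb one setting's usage
        candidate[i] = min(MAX_USAGE, max(0.0, candidate[i] + rng.gauss(0.0, step)))
        f_candidate = penalized_objective(candidate, budget)
        delta = f_candidate - f_current
        # Metropolis rule: accept improvements, sometimes accept worse moves
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, f_current = candidate, f_candidate
            if f_current < f_best:
                best, f_best = list(current), f_current
        temperature *= cooling
    return best


if __name__ == "__main__":
    usage = anneal(budget=20e6)
    print([round(u, 2) for u in usage], round(total_cost(usage)), round(total_cases(usage)))
```

Because every over-budget point scores worse than the no-ITN starting point, the best solution returned always respects the budget, while the violation term still points the search back towards feasibility.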

The optimal allocation strategy for minimizing cases was also examined over a period of 39 years using the P. falciparum model, comparing a single allocation with constant ITN usage, chosen to minimize clinical incidence at 39 years, to reallocation every 3 years (similar to Global Fund allocation periods; The Global Fund, 2016), which leads to ITN usage varying over time. At the beginning of each 3-year period, we determined the optimized allocation of resources, held fixed until the next round of funding, with the objective of minimizing global clinical incidence at the end of that 3-year period. Once P. falciparum elimination is reached in a given setting, ITN distribution is discontinued, and in the next period the same total budget B is distributed among the remaining settings. We calculated the total budget required to minimize case numbers at 39 years and, at 25%, 50%, 75%, and 100% of this budget, compared the impact of reallocation every 3 years with that of a one-time allocation. To ensure computational feasibility, a 39-year horizon was used, as it was the shortest time frame over which the effect of redistributing funding from countries that had achieved elimination could be observed.
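The 3-yearly reallocation scheme can be outlined as a loop in which eliminated settings drop out and the same total budget is shared among the rest. In this sketch the per-period optimizer is replaced by a hypothetical proportional-to-cases rule and the dynamics are a toy exponential decline; only the loop structure reflects the procedure described above.

```python
import math

PERIOD_YEARS = 3
HORIZON_YEARS = 39


def allocate(cases, budget):
    """Hypothetical stand-in for the per-period optimizer:
    split the budget in proportion to current cases."""
    total = sum(cases.values())
    return {name: budget * c / total for name, c in cases.items()}


def run_period(cases, spend, efficiency=1e-5):
    """Toy 3-year dynamics: remaining cases fall exponentially with spending."""
    return cases * math.exp(-efficiency * spend)


def reallocate_every_period(initial_cases, budget):
    """Reallocate the same total budget each period among non-eliminated settings."""
    active = dict(initial_cases)
    history = []
    for _ in range(HORIZON_YEARS // PERIOD_YEARS):
        if not active:
            break
        allocation = allocate(active, budget)
        history.append(allocation)
        active = {name: run_period(c, allocation[name]) for name, c in active.items()}
        # Fewer than one remaining case counts as elimination: distribution stops.
        active = {name: c for name, c in active.items() if c >= 1}
    return history, active
```

With initial cases `{"A": 1000.0, "B": 50.0}` and a budget of 1e6, setting A is eliminated after the first period, so the whole budget goes to B in the second period.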

Analysis

We compared the impact of the two optimal allocation strategies (scenarios 1A and 1B) and three additional allocation scenarios on global malaria cases and the global population at risk. Modeled scenarios are shown in Table 2. Scenarios 1C-1E represent existing policy strategies that involve prioritizing high-transmission settings, prioritizing low-transmission (near-elimination) settings, or resource allocation proportional to disease burden in the year 2000. Global malaria case burden and the population at risk were compared between baseline levels in 2000 and after reaching an endemic equilibrium under each scenario for a given budget.

Overview of modeled scenarios for allocation of funding to different transmission settings.

Strategies 1A-1E compare resource allocation scenarios using clinical incidence values from each transmission setting at equilibrium after insecticide-treated net (ITN) coverage has been introduced. Strategies 2A-2B are compared as part of the allocation over time sub-analysis. EIR: entomological inoculation rate.

| Strategy | Scenario | Modeling approach/assumptions |
|---|---|---|
| 1A | Optimized for total malaria case reduction | Generalized simulated annealing is used to determine the optimal allocation of a given budget to minimize the total number of global malaria cases. |
| 1B | Optimized for total malaria case reduction and pre-elimination | Generalized simulated annealing is used to determine the optimal allocation of a given budget to minimize the total number of global malaria cases while placing a premium on the pre-elimination phase being reached in a setting. |
| 1C | Prioritize high-transmission settings | Funding is allocated to groups of countries according to transmission intensity (P. falciparum + P. vivax entomological inoculation rate, EIR). For a given budget, the transmission settings with the highest EIR are prioritized, increasing ITN coverage in increments of 1% in each setting until malaria is eliminated or until an increase in coverage leads to no further decrease in cases, before allocating to the next-highest EIR setting. |
| 1D | Prioritize low-transmission (near-elimination) settings | Funding is allocated to groups of countries according to transmission intensity (P. falciparum + P. vivax EIR). For a given budget, the transmission settings with the lowest EIR are prioritized, increasing ITN coverage in increments of 1% in each setting until malaria is eliminated or until an increase in coverage leads to no further decrease in cases, before allocating to the next-lowest EIR setting. |
| 1E | Proportional allocation | Funding is allocated to groups of countries in proportion to their disease burden. Budget shares are calculated using country data from the World Malaria Report (World Health Organization, 2007; World Health Organization, 2020) and account for the country-specific total malaria cases (P. falciparum and P. vivax), deaths, incidence and mortality rate in 2000–2004, scaled by the subsequent increase in the population at risk (The Global Fund, 2019). |
| 2A | One-time optimized allocation for P. falciparum case reduction | Generalized simulated annealing is used to determine the optimized allocation at a given budget, minimizing the total number of global P. falciparum cases after 39 years, resulting in constant ITN usage in each setting over this time period. |
| 2B | Optimized allocation every three years for P. falciparum case reduction | Generalized simulated annealing is used to determine the optimized allocation at a given budget, minimizing the total number of global P. falciparum cases after every 3-year period for 39 years, allowing ITN usage to vary in each setting every 3 years. |
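Strategies 1C and 1D amount to a greedy, setting-by-setting allocation. The sketch below follows the stopping rules in the table (elimination, or no further decrease in cases) and adds two assumptions of ours: usage is capped at 80%, and the procedure stops as soon as the next 1% step is unaffordable. The case and cost curves and the setting values are hypothetical.

```python
import math


def prioritized_allocation(settings, budget, cases, cost,
                           highest_eir_first=True, step=0.01, max_usage=0.8):
    """Greedy prioritization in the spirit of strategies 1C (highest EIR first)
    and 1D (lowest EIR first). Returns ITN usage per setting name."""
    order = sorted(settings, key=lambda s: s["eir"], reverse=highest_eir_first)
    usage = {s["name"]: 0.0 for s in settings}
    remaining = budget
    for s in order:
        u = 0.0
        while u + step <= max_usage + 1e-9:
            if cases(s, u) < 1:                # setting has reached elimination
                break
            nxt = round(u + step, 10)          # avoid floating-point drift in 1% steps
            if cases(s, nxt) >= cases(s, u):   # no further decrease in cases
                break
            extra = cost(s, nxt) - cost(s, u)
            if extra > remaining:              # budget exhausted
                return usage
            remaining -= extra
            u = nxt
            usage[s["name"]] = u
    return usage


# Hypothetical toy curves and settings (not the paper's model outputs).
def toy_cases(s, u):
    return s["pop"] * s["cinc0"] * math.exp(-5.0 * u)


def toy_cost(s, u):
    return s["pop"] * 3.50 * (0.25 * u + 0.5 * u ** 2)


SETTINGS = [
    {"name": "high", "eir": 50.0, "pop": 1e6, "cinc0": 0.5},
    {"name": "low", "eir": 0.05, "pop": 1e6, "cinc0": 0.005},
]
```

With a small budget, the high-EIR-first ordering spends everything on the high-transmission setting, and the reverse ordering spends everything on the low-transmission setting.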

Certification of malaria elimination requires proof that the chain of indigenous malaria transmission has been interrupted for at least 3 years and a demonstrated capacity to prevent re-establishment of transmission (World Health Organization, 2007; World Health Organization, 2018). In our analysis, a transmission setting was defined as having reached malaria elimination once fewer than one case remained in the setting's total population. Once a setting reaches elimination, its entire population is removed from the global population at risk, representing a 'shrink the map' strategy. The pre-elimination phase was defined as fewer than 1 case per 1,000 persons at risk in a setting (Mendis et al., 2009).
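The elimination and pre-elimination thresholds, and the 'shrink the map' population-at-risk calculation, can be expressed directly. This sketch assumes case counts are annual clinical cases at equilibrium; the function names are illustrative.

```python
PRE_ELIMINATION_THRESHOLD = 1 / 1000  # cases per person at risk


def setting_status(cases, population):
    """Classify a setting using the elimination and pre-elimination thresholds."""
    if population <= 0:
        raise ValueError("population must be positive")
    if cases < 1:
        return "eliminated"
    if cases / population < PRE_ELIMINATION_THRESHOLD:
        return "pre-elimination"
    return "endemic"


def population_at_risk(settings):
    """'Shrink the map': the whole population of an eliminated setting is removed."""
    return sum(s["pop"] for s in settings
               if setting_status(s["cases"], s["pop"]) != "eliminated")
```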

All strategies were evaluated at different budgets ranging from 0 to the minimum investment required to achieve the lowest possible number of cases in the model (noting that ITNs alone are not predicted to eradicate malaria in our model). No distinctions were made between national government spending and international donor funding, as the purpose of the analysis was to look at resource allocation and not to recommend specific internal and external funding choices.

All analyses were conducted in R v. 4.0.5 (R Foundation for Statistical Computing, Vienna, Austria). The sf (v. 0.9-8; Pebesma, 2018), raster (v. 3.4-10; Hijmans and Van Etten, 2012), and terra (v. 1.3-4; Hijmans et al., 2022) packages were used for spatial data manipulation. The akima package (v. 0.6-2.2; Akima et al., 2022) was used for surface development, and the GenSA package (v. 1.1.7; Gubian et al., 2023) for model optimization.

Appendix 1

Mathematical models

Overview

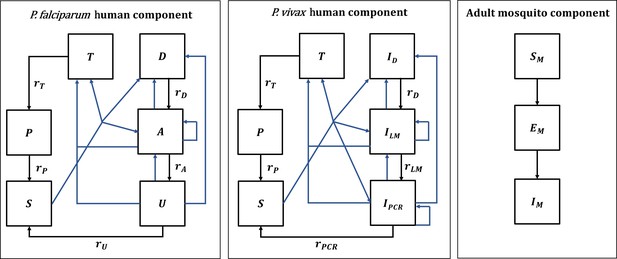

In this paper, we use an existing deterministic, compartmental, mathematical model of P. falciparum malaria transmission between humans and mosquitoes, which was originally calibrated to age-stratified data from settings across sub-Saharan Africa (Griffin et al., 2016). We also developed a deterministic version of an existing individual-based model of P. vivax transmission, originally calibrated to data from Papua New Guinea but also shown to reproduce global epidemiological patterns (White et al., 2018). Both models are structured by age and heterogeneity in exposure to mosquito bites, and allow for the presence of maternal immunity at birth and naturally acquired immunity across the life course. The mosquito and vector control components are modeled identically in both models, except for the force of infection acting on mosquitoes. A diagram of the model structures with human and adult mosquito components is shown in Appendix 1-figure 1.

Population and transmission dynamics were modeled separately for both species, assuming they are independent of each other, because the epidemiological significance of biological interactions between the parasites within hosts remains unclear (Mueller et al., 2013).

Note that while the term ‘individuals’ may be used in descriptions, the models are compartmental and do not track individuals; compartments represent the average number of people in a given state.

Malaria transmission model; diagram adapted from Griffin et al., 2016 and White et al., 2018.

Humans move through six states in the models for both species: S (susceptible), D (untreated symptomatic infection), T (successfully treated symptomatic infection), A (asymptomatic infection), U (asymptomatic sub-patent infection), and P (prophylaxis) in the P. falciparum model, and S (susceptible), ID (untreated symptomatic infection), T (successfully treated symptomatic infection), ILM (asymptomatic light microscopy detectable blood-stage infection), IPCR (asymptomatic sub-microscopic PCR detectable blood-stage infection) and P (prophylaxis) in the P. vivax model. New infections (including superinfections) are highlighted in blue but parameters are not shown. Rates rD, rA, rU, rT, rP, rLM, and rPCR determine the mean duration of each state. Hypnozoite states in the P. vivax model are not shown on the diagram. Adult female mosquitoes move through three model compartments: Sm (susceptible), Em (exposed), and Im (infected).

Human demography

In both the P. falciparum and P. vivax models, ages in the human population follow an exponential distribution. Humans can reach a maximum age of 100 years and experience a constant death rate of 1/21 per year, based on the assumed median age of the population. The birth rate was assumed to equal the death rate so that the population remains stable over time. Demographic changes over time are, therefore, not accounted for.
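With a constant death rate μ and births balancing deaths, the stationary age density is proportional to e^(−μa). The sketch below assumes this density is truncated and renormalized at the maximum age of 100 years, which is our reading of how the cap is applied, and returns the share of the population in an age band.

```python
import math

DEATH_RATE = 1 / 21  # per year
MAX_AGE = 100.0      # years


def age_fraction(a_low, a_high, mu=DEATH_RATE, max_age=MAX_AGE):
    """Share of the population aged [a_low, a_high) under a constant death
    rate mu, with ages truncated (and renormalized) at max_age."""
    a_low, a_high = max(0.0, a_low), min(max_age, a_high)
    if a_high <= a_low:
        return 0.0
    norm = 1 - math.exp(-mu * max_age)
    return (math.exp(-mu * a_low) - math.exp(-mu * a_high)) / norm
```

For example, `age_fraction(0, 5)` is about 0.214, so roughly a fifth of the modeled population is under 5 years old.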

In all following sections, human demographic processes are omitted from the equations of the transmission models and the immunity models for simplicity. All compartments experience the same constant mortality rate, while all births occur in the susceptible compartment.

Heterogeneity in mosquito biting rates

In both models, exposure to mosquito bites is assumed to depend on age, due to varying body surface area and behavioral patterns.

The relative biting rate at age $a$ is calculated as:

$$\psi(a) = 1 - \rho\, e^{-a/a_0}$$

Where $\rho$ and $a_0$ are estimated parameters determining the relationship between age and biting rate.
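A minimal sketch of the age-dependent relative biting rate, assuming the functional form ψ(a) = 1 − ρe^(−a/a0) of Griffin et al. (2010), with ρ = 0.85 and a0 = 8 years from Appendix 1, table 1. Infants are bitten less than adults, and ψ approaches 1 with age.

```python
import math

RHO = 0.85  # age-dependent biting parameter
A0 = 8.0    # age-dependent biting parameter, years


def relative_biting_rate(age_years: float, rho: float = RHO, a0: float = A0) -> float:
    """psi(a) = 1 - rho * exp(-a / a0), relative to an adult."""
    return 1.0 - rho * math.exp(-age_years / a0)
```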

Additionally, the human population in the model is stratified according to a lifetime relative biting rate, $\zeta$, which represents the heterogeneity in exposure to mosquito bites that occurs at various spatial scales (for example, due to attractiveness of humans to mosquitoes, housing standards and proximity to mosquito breeding sites) and is described by a log-normal distribution with a mean of 1, as follows:

$$\log \zeta \sim \mathcal{N}\left(-\frac{\sigma^2}{2},\ \sigma^2\right)$$

Where $\sigma^2$ is the variance of the log heterogeneity in biting rates.
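In a deterministic model the log-normal heterogeneity has to be discretized into a finite number of biting groups. The sketch below uses equal-probability quantile bands and assigns each band the exact conditional mean of ζ, which keeps the population mean at exactly 1. This is one possible scheme and an assumption here, not necessarily the one used in the models (Gaussian quadrature is a common alternative); σ² = 1.67 is the value in Appendix 1, table 1.

```python
import math
from statistics import NormalDist

SIGMA2 = 1.67  # variance of the log heterogeneity in biting rates


def heterogeneity_groups(sigma2=SIGMA2, n_groups=5):
    """Equal-probability groups of a log-normal zeta with mean 1.

    Returns (weight, mean_zeta) pairs, where mean_zeta is the exact
    conditional mean of zeta within each quantile band.
    """
    sigma = math.sqrt(sigma2)
    mu = -sigma2 / 2                     # gives E[zeta] = exp(mu + sigma2 / 2) = 1
    z = NormalDist()                     # standard normal
    cuts = ([-math.inf]
            + [z.inv_cdf(j / n_groups) for j in range(1, n_groups)]
            + [math.inf])
    weight = 1 / n_groups
    groups = []
    for lo, hi in zip(cuts[:-1], cuts[1:]):
        # E[exp(mu + sigma Z); lo < Z < hi] = exp(mu + sigma2/2) * (Phi(hi - sigma) - Phi(lo - sigma))
        partial_mean = math.exp(mu + sigma2 / 2) * (z.cdf(hi - sigma) - z.cdf(lo - sigma))
        groups.append((weight, partial_mean / weight))
    return groups
```

Using conditional means rather than band midpoints means the weighted group means telescope to the overall mean, so average exposure is preserved whatever the number of groups.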

P. falciparum human model component

In the P. falciparum model, humans occupy one of six states: susceptible (S), untreated symptomatic infection (D), successfully treated symptomatic infection (T), asymptomatic infection (A), asymptomatic sub-patent infection (U), and prophylaxis (P). Individuals in the model are born susceptible to infection but are temporarily protected by maternal immunity during the first six months of life. Humans are exposed to infectious bites from mosquitoes and are infected at a rate Λ, representing the force of infection from mosquitoes to humans. The force of infection depends on an individual's pre-erythrocytic immunity, the age-dependent biting rate, and the mosquito population size and level of infectivity. Following a latent period, dE, and depending on clinical immunity levels, a proportion ϕ of infected individuals develop clinical disease, while the remainder move into the asymptomatic infection state. A proportion fT of those with clinical disease are successfully treated. Treated individuals recover from infection at the rate rT and move to the prophylaxis state, which represents a period of drug-dependent partial protection from reinfection. Recovery from untreated symptomatic infection to the asymptomatic infection state occurs at a rate rD, while those with asymptomatic infection develop a sub-patent infection at a rate rA. The sub-patent infection and prophylaxis states then clear infection and return to the susceptible state at rates rU and rP, respectively. In the susceptible compartment, re-infection can occur, while asymptomatic and sub-patent infections are also susceptible to superinfection, potentially giving rise to further clinical cases. P. falciparum parameters are listed in Appendix 1—table 1.

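The human component of the model is described by the following set of partial differential equations with regard to time $t$ and age $a$:

$$
\begin{aligned}
\frac{\partial S}{\partial t} + \frac{\partial S}{\partial a} &= -\Lambda S + r_P P + r_U U \\
\frac{\partial T}{\partial t} + \frac{\partial T}{\partial a} &= \phi f_T \Lambda (S + A + U) - r_T T \\
\frac{\partial D}{\partial t} + \frac{\partial D}{\partial a} &= \phi (1 - f_T) \Lambda (S + A + U) - r_D D \\
\frac{\partial A}{\partial t} + \frac{\partial A}{\partial a} &= (1 - \phi) \Lambda (S + A + U) + r_D D - \Lambda A - r_A A \\
\frac{\partial U}{\partial t} + \frac{\partial U}{\partial a} &= r_A A - \Lambda U - r_U U \\
\frac{\partial P}{\partial t} + \frac{\partial P}{\partial a} &= r_T T - r_P P
\end{aligned}
$$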

Note that age- and time-dependence in state variables and parameters, as well as mortality and birth rates, are omitted in equations for clarity.
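The flows between the six P. falciparum compartments can be checked numerically in a stripped-down form. This sketch ignores age, immunity, demography and the latent period, and holds the force of infection Λ and the clinical probability ϕ fixed at hypothetical values (0.01 per day and 0.3). The treated fraction fT = 0.4 mirrors the 40% treatment assumed in the main analysis, and the durations are those in Appendix 1, table 1.

```python
# Durations (days) from Appendix 1, table 1; rates are their reciprocals.
R_D, R_A, R_U, R_T, R_P = 1 / 5, 1 / 195, 1 / 110.299, 1 / 5, 1 / 15


def derivatives(y, lam, phi, f_t):
    """Right-hand side of the six-compartment system (age and immunity ignored)."""
    S, T, D, A, U, P = y
    new_inf = lam * (S + A + U)  # new infections plus superinfections
    return (
        -lam * S + R_P * P + R_U * U,                       # S
        phi * f_t * new_inf - R_T * T,                      # T
        phi * (1 - f_t) * new_inf - R_D * D,                # D
        (1 - phi) * new_inf + R_D * D - lam * A - R_A * A,  # A
        R_A * A - lam * U - R_U * U,                        # U
        R_T * T - R_P * P,                                  # P
    )


def run_to_equilibrium(lam=0.01, phi=0.3, f_t=0.4, dt=0.5, days=7300):
    """Forward-Euler integration from an all-susceptible population."""
    y = (1.0, 0.0, 0.0, 0.0, 0.0, 0.0)
    for _ in range(int(days / dt)):
        dy = derivatives(y, lam, phi, f_t)
        y = tuple(v + dt * d for v, d in zip(y, dy))
    return dict(zip("STDAUP", y))
```

Because every outflow from one compartment is an inflow to another, the proportions keep summing to 1, which is a useful sanity check when implementing the full age-structured system.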

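Accounting for the heterogeneity and age-dependence in mosquito biting rates described above, the EIR and the force of infection for age $a$ at time $t$ are given by:

$$\varepsilon(a,t) = \zeta\, \psi(a)\, \varepsilon_0(t), \qquad \Lambda(a,t) = \varepsilon(a,t)\, b(a,t)$$

Where $\varepsilon_0(t)$ is the mean entomological inoculation rate (EIR) experienced by adults at time $t$, $b(a,t)$ is the probability that a human will be infected when bitten by an infectious mosquito, $\zeta$ is the individual's lifetime relative biting rate, and $\psi(a)$ is the relative biting rate at age $a$.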

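The mean EIR experienced by adults is represented by:

$$\varepsilon_0(t) = \frac{\alpha\, I_m(t)}{\omega}, \qquad \omega = \int_0^{\infty} g(a)\, \psi(a)\, da$$

Where $\alpha$ is the mosquito biting rate on humans, $I_m$ is the compartment for adult infectious mosquitoes (see vector model component), $\psi(a)$ is the relative biting rate at age $a$, and $\omega$ is a normalization constant for the biting rate over various age groups with a population age distribution $g(a)$.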
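The normalization constant ω can be evaluated numerically. This sketch assumes ω = ∫ g(a)ψ(a) da, with an exponential age distribution truncated at 100 years (death rate 1/21 per year) and ψ(a) = 1 − ρe^(−a/a0) with the values in Appendix 1, table 1; the helper names are hypothetical. The midpoint-rule result is compared against the closed-form integral for this particular g and ψ.

```python
import math

RHO, A0 = 0.85, 8.0          # age-dependent biting parameters
MU, MAX_AGE = 1 / 21, 100.0  # death rate (per year) and maximum age (years)


def psi(a):
    return 1 - RHO * math.exp(-a / A0)


def age_density(a):
    """Truncated exponential age distribution g(a) on [0, MAX_AGE]."""
    return MU * math.exp(-MU * a) / (1 - math.exp(-MU * MAX_AGE))


def omega(n=10_000):
    """Midpoint-rule approximation of the integral of g(a) * psi(a)."""
    h = MAX_AGE / n
    return sum(age_density((j + 0.5) * h) * psi((j + 0.5) * h) for j in range(n)) * h


def omega_closed_form():
    """Exact integral for this g and psi, used to check the numerical value."""
    k = MU + 1 / A0
    return 1 - RHO * MU / k * (1 - math.exp(-k * MAX_AGE)) / (1 - math.exp(-MU * MAX_AGE))


def eir_at_age(a, zeta, eir_adult):
    """EIR for age a and lifetime relative biting rate zeta."""
    return zeta * psi(a) * eir_adult
```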

The probability of infection b, the probability of clinical disease ϕ, and the recovery rate from asymptomatic infection rA all depend on immunity levels. The acquisition and decay of naturally-acquired immunity are tracked dynamically in the model and are driven by both age and exposure. Naturally-acquired immunity affects three different outcomes in the model, leading to: (1) a reduced probability of developing a blood-stage infection following an infectious bite, due to pre-erythrocytic immunity, $I_B$; (2) a reduced probability of progression to clinical disease following infection, dependent on exposure-driven and maternally acquired clinical immunity, $I_{CA}$ and $I_{CM}$; and (3) a reduced probability of a blood-stage infection being detected by microscopy, dependent on acquired immunity to the detectability of infection, $I_D$.

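The following partial differential equations represent exposure-driven immunity levels at time $t$ and age $a$.

Pre-erythrocytic immunity:

$$\frac{\partial I_B}{\partial t} + \frac{\partial I_B}{\partial a} = \frac{\varepsilon}{\varepsilon u_B + 1} - \frac{I_B}{d_B}$$

Clinical immunity:

$$\frac{\partial I_{CA}}{\partial t} + \frac{\partial I_{CA}}{\partial a} = \frac{\Lambda}{\Lambda u_C + 1} - \frac{I_{CA}}{d_C}$$

Detection immunity:

$$\frac{\partial I_D}{\partial t} + \frac{\partial I_D}{\partial a} = \frac{\Lambda}{\Lambda u_D + 1} - \frac{I_D}{d_{ID}}$$

Where $\varepsilon$ and $\Lambda$ are the age- and time-specific EIR and force of infection, the parameters $u_B$, $u_C$ and $u_D$ represent refractory periods during which the respective types of immunity cannot be further boosted after receiving a boost, and $d_B$, $d_C$ and $d_{ID}$ stand for the mean durations of the different types of immunity.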
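The boosting term x/(xu + 1), with x the exposure rate, caps boosting at one per refractory period u. Ignoring ageing, constant exposure drives immunity towards the level d·x/(xu + 1), where boosting balances decay. The sketch below, with rates per day and an illustrative exposure of 0.01 per day together with uC ≈ 6.06 days and dC = 30 years from Appendix 1, table 1, checks that a forward-Euler integration reaches that level; the function names are hypothetical.

```python
def boost_rate(exposure, refractory):
    """Boosting rate x / (x*u + 1): at most one boost per refractory period u."""
    return exposure / (exposure * refractory + 1)


def equilibrium_immunity(exposure, refractory, duration):
    """Level at which boosting balances decay at rate I / d (ageing ignored)."""
    return duration * boost_rate(exposure, refractory)


def simulate_immunity(exposure, refractory, duration, days, dt=1.0):
    """Forward-Euler integration of dI/dt = boost - I/d, starting from I = 0."""
    level = 0.0
    for _ in range(int(days / dt)):
        level += dt * (boost_rate(exposure, refractory) - level / duration)
    return level
```

After ten mean durations the simulated level is within a fraction of a percent of the equilibrium, and for very high exposure the boosting rate approaches 1/u.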

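Maternal immunity is acquired and lost as follows:

$$\frac{\partial I_{CM}}{\partial t} + \frac{\partial I_{CM}}{\partial a} = -\frac{I_{CM}}{d_M}, \qquad I_{CM}(0, t) = P_M\, I_{CA}(20, t)$$

Where $d_M$ is the average duration of maternal immunity, $P_M$ is the proportion of the mother's clinical immunity acquired by the newborn, and $I_{CA}(20, t)$ denotes the clinical immunity level of a 20-year-old woman.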

Immunity levels are converted into time- and age-dependent probabilities using Hill functions.

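The probability that a human will be infected when bitten by an infectious mosquito, $b$, can be represented as:

$$b = b_0 \left( b_1 + \frac{1 - b_1}{1 + (I_B / I_{B0})^{\kappa_B}} \right)$$

Where $I_B$ is the pre-erythrocytic immunity level, $b_0$ is the maximum probability of infection (with no immunity), $b_1$ determines the maximum relative reduction in the probability of infection due to immunity (at full immunity, $b = b_0 b_1$), and $I_{B0}$ and $\kappa_B$ are scale and shape parameters estimated during model fitting.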

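The probability of a new blood-stage infection becoming symptomatic, $\phi$, is represented by:

$$\phi = \phi_0 \left( \phi_1 + \frac{1 - \phi_1}{1 + \left( (I_{CA} + I_{CM}) / I_{C0} \right)^{\kappa_C}} \right)$$

Where $I_{CA}$ and $I_{CM}$ are the exposure-driven and maternal clinical immunity levels, $\phi_0$ is the maximum probability of becoming symptomatic (with no immunity), $\phi_1$ determines the maximum relative reduction in the probability of becoming symptomatic due to immunity (at full immunity, $\phi = \phi_0 \phi_1$), and $I_{C0}$ and $\kappa_C$ are scale and shape parameters, respectively.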

Immunity can also lead to blood-stage infections becoming sub-patent with low parasitemias. The probability that an asymptomatic infection is detectable by microscopy, $q$, is represented by:

$$q = d_1 + \frac{1 - d_1}{1 + f_D(a)\, (I_D / I_{D0})^{\kappa_D}}$$

Where $I_D$ is the level of immunity to detectability, $d_1$ is the minimum probability of detectability (with full immunity), and $I_{D0}$ and $\kappa_D$ are scale and shape parameters, respectively. $f_D(a)$ is an age-dependent function modifying the detectability of infection:

$$f_D(a) = 1 - \frac{1 - f_{D0}}{1 + (a / a_D)^{\gamma_D}}$$

With $\gamma_D$ and $a_D$ representing shape and scale parameters, and $f_{D0}$ representing the time-scale at which immunity changes with age.
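The three Hill functions can be written out with the median parameter estimates from Appendix 1, table 1. This sketch assumes the Hill-function forms of Griffin et al. (2014, 2016), with immunity in the models' arbitrary units and age in years; the function names are illustrative.

```python
# Median posterior estimates from Appendix 1, table 1.
B0, B1, IB0, KAPPA_B = 0.590076, 0.5, 43.8787, 2.15506
PHI0, PHI1, IC0, KAPPA_C = 0.791666, 0.000737, 18.02366, 2.36949
D1, ID0, KAPPA_D = 0.160527, 1.577533, 0.476614
A_D, FD0, GAMMA_D = 21.9, 0.007055, 4.8183


def prob_infection(i_b):
    """b: probability an infectious bite causes infection, given pre-erythrocytic immunity."""
    return B0 * (B1 + (1 - B1) / (1 + (i_b / IB0) ** KAPPA_B))


def prob_clinical(i_ca, i_cm):
    """phi: probability a new infection becomes clinical, given acquired + maternal immunity."""
    return PHI0 * (PHI1 + (1 - PHI1) / (1 + ((i_ca + i_cm) / IC0) ** KAPPA_C))


def age_modifier(age):
    """f_D(a): scales the effect of detection immunity with age (years)."""
    return 1 - (1 - FD0) / (1 + (age / A_D) ** GAMMA_D)


def prob_detectable(i_d, age):
    """q: probability an asymptomatic infection is detectable by microscopy."""
    return D1 + (1 - D1) / (1 + age_modifier(age) * (i_d / ID0) ** KAPPA_D)
```

With zero immunity each probability sits at its no-immunity value; as immunity grows, b falls towards b0·b1, ϕ towards ϕ0·ϕ1 and q towards d1.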

P. falciparum human model parameter values.

Full details can be found in the original publication (Griffin et al., 2016), including references for parameters and intervals for the prior and posterior distributions (median values of the posterior distribution are used in model simulations).

| Parameter | Symbol | Estimate |
|---|---|---|
| Human infection duration (days) | | |
| Latent period | $d_E$ | 12 |
| Patent infection | $d_A$ | 195 |
| Clinical disease (untreated) | $d_D$ | 5 |
| Treatment of clinical disease | $d_T$ | 5 |
| Sub-patent infection | $d_U$ | 110.299 |
| Prophylaxis | $d_P$ | 15 |
| Age and heterogeneity | | |
| Age-dependent biting parameter | $\rho$ | 0.85 |
| Age-dependent biting parameter | $a_0$ | 8 years |
| Variance of the log heterogeneity in biting rates | $\sigma^2$ | 1.67 |
| Pre-erythrocytic immunity reducing probability of infection | | |
| Duration of refractory period in which immunity is not boosted | $u_B$ | 7.19919 days |
| Duration of pre-erythrocytic immunity | $d_B$ | 10 years |
| Maximum probability of infection due to no immunity | $b_0$ | 0.590076 |
| Maximum relative reduction in probability of infection due to immunity | $b_1$ | 0.5 |
| Scale parameter | $I_{B0}$ | 43.8787 |
| Shape parameter | $\kappa_B$ | 2.15506 |
| Immunity reducing probability of clinical disease | | |
| Duration of refractory period in which immunity is not boosted | $u_C$ | 6.06349 days |
| Duration of clinical immunity | $d_C$ | 30 years |
| New-born immunity relative to mother's clinical immunity | $P_M$ | 0.774368 |
| Duration of maternal immunity | $d_M$ | 67.6952 days |
| Maximum probability of clinical disease due to no immunity | $\phi_0$ | 0.791666 |
| Maximum relative reduction in probability of clinical disease due to immunity | $\phi_1$ | 0.000737 |
| Scale parameter | $I_{C0}$ | 18.02366 |
| Shape parameter | $\kappa_C$ | 2.36949 |
| Immunity reducing probability of detection | | |
| Duration of refractory period in which immunity is not boosted | $u_D$ | 9.44512 days |
| Duration of detection immunity | $d_{ID}$ | 10 years |
| Minimum probability of detection due to maximum immunity | $d_1$ | 0.160527 |
| Scale parameter | $I_{D0}$ | 1.577533 |
| Shape parameter | $\kappa_D$ | 0.476614 |
| Scale parameter relating age to immunity | $a_D$ | 21.9 years |
| Time-scale at which immunity changes with age | $f_{D0}$ | 0.007055 |
| Shape parameter relating age to immunity | $\gamma_D$ | 4.8183 |

P. vivax human model component

In the P. vivax model, acquisition of, and recovery from, blood-stage infection in the absence of treatment is represented by four compartments: susceptible (S), untreated symptomatic infection (ID), asymptomatic patent blood-stage infection detectable by light microscopy (ILM), and asymptomatic sub-microscopic infection not detectable by light microscopy but detectable by PCR (IPCR). Two further compartments represent successfully treated symptomatic infection (T) and prophylaxis (P). Additionally, the model represents the liver stage of P. vivax infection by tracking average hypnozoite batches in the population. Hypnozoites can form after an infectious bite and remain dormant in the liver for up to several years, and can give rise to relapse blood-stage infections. P. vivax parameters are listed in Appendix 1—table 2.

New blood-stage infections can, therefore, originate from either mosquito bites or relapses and are represented by the force of infection $\Lambda$. The force of infection depends on the age-dependent biting rate, the mosquito population size and its level of infectivity, the probability of infection resulting from an infectious bite, the latent period between sporozoite inoculation and development of blood-stage merozoites, dE, as well as relapse infections from the liver stage. Upon infection, a proportion $\phi_{LM}$ of humans develop infection detectable by light microscopy (LM), while the remainder have low-density parasitemia and move into the IPCR compartment. A proportion $\phi_D$ of those with LM-detectable infection develop a clinical episode, of which a proportion $f_T$ are successfully treated with a blood-stage antimalarial. Treated individuals recover from infection at rate rT and move to the prophylaxis state, which provides temporary protection from reinfection before becoming susceptible again at a rate rP. Recovery from clinical disease to asymptomatic LM-detectable infection, from asymptomatic LM-detectable infection to asymptomatic PCR-detectable infection, and from asymptomatic PCR-detectable infection to susceptibility occur at rates rD, rLM, and rPCR, respectively. Newborns are susceptible to infection, have no hypnozoites, and are temporarily protected by maternal immunity. Reinfection is possible after recovery, and those with asymptomatic blood-stage infections (ILM and IPCR) are susceptible to superinfection, potentially giving rise to further clinical cases.

The dynamics of hypnozoite infection in the model describe the accumulation and clearance of batches of hypnozoites in the liver, whereby each new (super-)infection from an infectious mosquito bite creates a new batch. This process occurs for each model compartment and is described in detail in the original publication (White et al., 2018). Hypnozoites from any batch can re-activate and cause a relapse at a rate $f$, and batches are cleared at a constant rate $\gamma_L$, which reduces the number of batches from $k$ to $k-1$. For computational efficiency, the possible number of batches in the population must be limited to a maximum value $K$, so that superinfections among those with $k = K$ do not lead to an increase in hypnozoite batch numbers. We assumed a maximum batch number of 2, which increased computational efficiency and aligned with modeled distributions of hypnozoite batch numbers in the population at the simulated low transmission intensities.
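Hypnozoite batch dynamics can be illustrated with a small birth-death chain on the number of batches k = 0, …, K. The sketch assumes that new infectious bites add a batch at rate λ (with no increase at k = K = 2), that a person with k batches loses one at rate kγL and relapses at rate kf, and it ignores age, blood-stage infection and immunity. The function names and the rate values in the example are hypothetical.

```python
def batch_distribution(lam, gamma_l, k_max=2):
    """Stationary distribution of the number of hypnozoite batches (0..k_max).

    Assumes bites add a batch at rate lam (capped at k_max) and a person
    with k batches loses one at rate k * gamma_l.
    """
    weights = [1.0]
    for k in range(k_max):
        # Detailed balance between k and k+1: pi[k] * lam = pi[k+1] * (k+1) * gamma_l
        weights.append(weights[-1] * lam / ((k + 1) * gamma_l))
    total = sum(weights)
    return [w / total for w in weights]


def mean_relapse_rate(distribution, f):
    """Population-average relapse rate, assuming each batch relapses at rate f."""
    return sum(k * f * p for k, p in enumerate(distribution))
```

For example, when λ equals γL the distribution over 0, 1 and 2 batches is 0.4, 0.4 and 0.2.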

The human component of the model is then described by the following set of partial differential equations with regard to time $t$ and age $a$:

Where $f_k$ and $\gamma_k$ are the relapse and clearance rates of hypnozoite batch $k$, respectively. Age- and time-dependence in state variables and parameters, as well as mortality and birth rates, are omitted in the equations for clarity.

The equations reflect the accumulation of hypnozoite batches from $k$ to $k+1$ due to infections arising from new infectious bites ($\lambda$), but not due to relapse infections ($f_k$). The total force of blood-stage infection in individuals with $k$ hypnozoite batches is, therefore:

$$\Lambda_k(a,t) = \lambda(a,t) + f_k$$

Similar to the P. falciparum model, the force of infection from mosquito bites accounts for heterogeneity and age-dependence in mosquito biting rates as follows:

$$\lambda(a,t) = \zeta\, \psi(a)\, \varepsilon_0(t)\, b, \qquad \varepsilon_0(t) = \frac{\alpha\, I_m(t)}{\omega}$$

Where $\varepsilon_0(t)$ is the mean entomological inoculation rate (EIR) experienced by adults at time $t$, $b$ is the probability that a human will be infected when bitten by an infectious mosquito, $\zeta$ is the lifetime relative biting rate, and $\psi(a)$ is the relative biting rate at age $a$. In the P. vivax model, $b$ is a constant and does not depend on immunity levels. In the calculation of the mean EIR experienced by adults, $\alpha$ is the mosquito biting rate on humans, $I_m$ is the compartment for adult infectious mosquitoes (see vector model component), and $\omega$ is a normalization constant for the biting rate over various age groups with a population age distribution $g(a)$.

Transmission dynamics in the model are influenced by anti-parasite (AP) and clinical immunity (AC) against P. vivax. Anti-parasite immunity is assumed to reduce the probability of blood-stage infections achieving high enough density to be detectable by light microscopy ($\phi_{LM}$) and to increase the rate at which sub-microscopic infections are cleared ($r_{PCR}$). Clinical immunity reduces the probability that LM-detectable infections progress to clinical disease ($\phi_D$). As for P. falciparum, the dynamics of the acquisition and decay of naturally-acquired immunity in the model depend on age and exposure. For P. vivax, immunity levels are boosted by both primary infections and relapses and are described by the following set of partial differential equations with regard to time $t$ and age $a$:

Anti-parasite immunity:

Clinical immunity:

In these equations, one set of parameters represents the refractory period during which each type of immunity cannot be boosted again after receiving a boost, and another set gives the rate at which each type of immunity decays. The equations are indexed by hypnozoite batch, up to the maximum number of hypnozoite batches.
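The effect of a refractory period can be illustrated with a simplified, age-free version of the immunity dynamics. We assume, following the form used for P. falciparum by Griffin et al., that immunity is boosted at rate h / (h * u + 1), where h is the combined rate of primary infections and relapses and u is the refractory period, and that it decays at rate 1 / d for a mean duration d. Under this assumption the boosting rate can never exceed 1 / u, however high exposure becomes. The values of u and d are the anti-parasite immunity estimates from the parameter table below; the exposure rate and function names are hypothetical.

```python
def boost_rate(exposure_rate, refractory_days):
    """Immunity boosts per day; capped at 1/refractory_days by the refractory period."""
    return exposure_rate / (exposure_rate * refractory_days + 1.0)

def simulate_immunity(exposure_rate, refractory_days, duration_days, days, dt=1.0):
    """Forward-Euler integration of dA/dt = boost - A/duration, starting from A = 0."""
    level = 0.0
    for _ in range(int(days / dt)):
        level += dt * (boost_rate(exposure_rate, refractory_days) - level / duration_days)
    return level

def equilibrium_immunity(exposure_rate, refractory_days, duration_days):
    """Steady state of the same equation: boost rate times mean duration of immunity."""
    return boost_rate(exposure_rate, refractory_days) * duration_days

# Anti-parasite immunity: refractory period 19.77 days, duration 10 years (table).
# An exposure of 0.05 infections or relapses per day is a hypothetical input.
u, d = 19.77, 10 * 365.0
print(simulate_immunity(0.05, u, d, days=100 * 365), equilibrium_immunity(0.05, u, d))
```

Because the decay time scale (years) is much longer than the refractory period (weeks), immunity builds slowly and saturates at a level set mainly by how often boosts can occur.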

The levels of maternally acquired anti-parasite and clinical immunity are calculated from the average duration of maternal immunity, the proportion of the mother's immunity acquired by the newborn, and the anti-parasite and clinical immunity levels of a 20-year-old woman, each averaged over her hypnozoite batches.
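A minimal sketch of maternal immunity, assuming that the newborn receives a fixed proportion of the mother's immunity, which then decays exponentially with age at a rate set by the average duration of maternal immunity. The proportion (0.421) and duration (35.148 days) come from the parameter table below; the exponential form is our assumption, and the mother's immunity level and the function name are hypothetical.

```python
import math

def maternal_immunity(age_days, mother_level, proportion=0.421, duration_days=35.148):
    """Immunity inherited from the mother, assumed to decay exponentially after birth.

    proportion: share of the mother's immunity passed to the newborn (table value).
    duration_days: average duration of maternal immunity (table value).
    mother_level: immunity of a 20-year-old woman averaged over hypnozoite batches
    (a hypothetical input here).
    """
    return proportion * mother_level * math.exp(-age_days / duration_days)

for age in (0, 30, 90, 180):
    print(age, round(maternal_immunity(age, mother_level=50.0), 3))
```

With an average duration of about five weeks, almost all maternal protection is gone by six months of age.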

Immunity levels are then converted into time-dependent probabilities using Hill functions.

The probability that a blood-stage infection becomes detectable by LM is a Hill function of anti-parasite immunity. It is defined by the minimum probability of LM-detectable infection (with full immunity), the maximum probability of LM-detectable infection (with no immunity), and scale and shape parameters estimated during model fitting.

The probability that an LM-detectable blood-stage infection becomes symptomatic is a Hill function of clinical immunity. It is defined by the minimum probability of developing a clinical episode (with full immunity), the maximum probability of a clinical episode (with no immunity), and scale and shape parameters.

The recovery rate from PCR-detectable infection is the inverse of the average duration of a low-density blood-stage infection. This duration is a Hill function of anti-parasite immunity, defined by the minimum duration (with full immunity), the maximum duration (with no immunity), and scale and shape parameters.
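Assuming the decreasing Hill form p(A) = p_min + (p_max - p_min) / (1 + (A / A50)^K), where A is the relevant immunity level, A50 the scale parameter and K the shape parameter, the three immunity-dependent quantities above can be evaluated with the fitted values from the parameter table below. The recovery rate from PCR-detectable infection is taken as the inverse of the mean duration. This is a sketch of the functional form as we read it; the helper and constant names are ours, not those of the original code.

```python
def hill(immunity, p_min, p_max, scale, shape):
    """Decreasing Hill function: p_max with no immunity, tending to p_min with full immunity."""
    return p_min + (p_max - p_min) / (1.0 + (immunity / scale) ** shape)

# Fitted (min, max, scale, shape) values from the P. vivax parameter table.
LM_DETECTION = (0.0043, 0.8918, 27.52, 2.403)  # driven by anti-parasite immunity
CLINICAL = (0.018, 0.8605, 11.538, 2.250)      # driven by clinical immunity
PCR_DURATION = (10.0, 70.0, 9.9, 4.602)        # days, driven by anti-parasite immunity

def prob_lm_detectable(anti_parasite):
    """Probability that a blood-stage infection becomes LM-detectable."""
    return hill(anti_parasite, *LM_DETECTION)

def prob_clinical(clinical):
    """Probability that an LM-detectable infection becomes symptomatic."""
    return hill(clinical, *CLINICAL)

def pcr_recovery_rate(anti_parasite):
    """Recovery rate (per day) from PCR-detectable infection = 1 / mean duration."""
    return 1.0 / hill(anti_parasite, *PCR_DURATION)

for level in (0.0, 10.0, 30.0, 100.0):
    print(level, round(prob_lm_detectable(level), 3), round(prob_clinical(level), 3),
          round(pcr_recovery_rate(level), 4))
```

At an immunity level equal to the scale parameter, each probability or duration lies exactly halfway between its minimum and maximum; the shape parameter controls how sharply it switches around that point.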

P. vivax human model parameter values.

Full details can be found in the original publication (White et al., 2018) including references for parameters and intervals for the prior and posterior distributions.

| Parameter | Estimate |
|---|---|
| Human infection duration (days) | |
| Latent period | 10 |
| Light microscopy-detectable asymptomatic infection | 10 |
| Clinical disease (untreated) | 5 |
| Treatment of clinical disease | 1 |
| Prophylaxis | 28 |
| Age, heterogeneity, and probability of infection | |
| Age-dependent biting parameter | 0.85 |
| Age-dependent biting parameter | 8 years |
| Variance of the log heterogeneity in biting rates | 1.29 |
| Probability of blood-stage infection upon infectious mosquito bite | 0.5 |
| Hypnozoite parameters | |
| Relapse rate | 0.024 per day |
| Clearance rate | 0.0026 per day |
| Maternal immunity | |
| Newborn immunity relative to mother's clinical immunity | 0.421 |
| Duration of maternal immunity | 35.148 days |
| Anti-parasite immunity reducing probability of light microscopy-detectable infection and duration of PCR-detectable infection | |
| Duration of refractory period in which immunity is not boosted | 19.77 days |
| Duration of anti-parasite immunity | 10 years |
| Maximum probability of detectability by light microscopy due to no immunity | 0.8918 |
| Minimum probability of detectability by light microscopy due to full immunity | 0.0043 |
| Scale parameter for detectability by light microscopy | 27.52 |
| Shape parameter for detectability by light microscopy | 2.403 |
| Maximum duration of PCR-detectable infection due to no immunity | 70 days |
| Minimum duration of PCR-detectable infection due to full immunity | 10 days |
| Scale parameter for duration of PCR-detectable infection | 9.9 |
| Shape parameter for duration of PCR-detectable infection | 4.602 |
| Clinical immunity reducing probability of clinical disease | |
| Duration of refractory period in which immunity is not boosted | 7.85 days |
| Duration of clinical immunity | 30 years |
| Maximum probability of clinical disease due to no immunity | 0.8605 |
| Minimum probability of clinical disease due to full immunity | 0.018 |
| Scale parameter for clinical disease | 11.538 |
| Shape parameter for clinical disease | 2.250 |

Mosquito component of the P. falciparum and P. vivax models

The mosquito components of the P. falciparum and P. vivax models capture adult mosquito transmission dynamics, as well as larval population dynamics, and are nearly identical. Modeled vector bionomics correspond to Anopheles gambiae s.s. and Anopheles punctulatus for P. falciparum and P. vivax transmission, respectively.

Mosquito transmission model

Adult mosquitoes move between three states: susceptible, exposed, and infectious.

These transitions depend on the force of infection from humans to mosquitoes, the time-varying adult mosquito emergence rate, the adult mosquito death rate μ, and the extrinsic incubation period. An exposed mosquito becomes infectious only if it survives from infection until sporozoites appear in its salivary glands; this survival probability is calculated from μ and the extrinsic incubation period.
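Under constant conditions the adult SEI model has a simple equilibrium, sketched below under our own assumptions: constant emergence rate, constant death rate μ in all states, constant force of infection, and survival through the extrinsic incubation period τ taken as exp(-μ τ). The resulting infectious fraction, λ / (λ + μ) * exp(-μ τ), is the classic sporozoite-rate expression. All parameter values and the function name are hypothetical.

```python
import math

def sei_equilibrium(emergence, mu, lam, eip_days):
    """Equilibrium (S, E, I) densities of adult mosquitoes under constant rates.

    Assumes dS/dt = emergence - lam*S - mu*S, that mosquitoes infected eip_days
    ago become infectious with survival probability exp(-mu*eip_days), and that
    every state dies at rate mu.
    """
    surv_eip = math.exp(-mu * eip_days)
    s = emergence / (lam + mu)
    i = lam * s * surv_eip / mu           # inflow of survivors balances deaths
    e = lam * s * (1.0 - surv_eip) / mu   # infected mosquitoes dying during the EIP
    return s, e, i

s, e, i = sei_equilibrium(emergence=1.32, mu=0.132, lam=0.02, eip_days=10.0)
print(f"total {s + e + i:.3f}, infectious fraction {i / (s + e + i):.4f}")
```

The total density equals the emergence rate divided by μ, so interventions that raise μ reduce both mosquito numbers and, through exp(-μ τ), the share of mosquitoes that live long enough to become infectious.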

The force of infection experienced by the vector is the sum of the contributions to mosquito infections from all human infectious states. As described for the human model components for both species, it also depends on the mosquito biting rate on humans (which depends on net usage) and on a normalization constant for the biting rate over age groups.

Force of infection experienced by mosquitoes in the P. falciparum model

In the P. falciparum model, the force of infection acting on mosquitoes sums the contributions of four human infectious states (untreated symptomatic infection, treated symptomatic infection, asymptomatic infection, and asymptomatic sub-patent infection), each weighted by its human-to-mosquito infectiousness. These contributions are evaluated after a time lag between parasitemia with asexual parasite stages and gametocytemia, to account for the time needed for P. falciparum gametocytes to develop.

The infectiousness of humans with asymptomatic infection is lower when the infection is less likely to be detectable by microscopy, reflecting the assumption that lower parasite densities are less detectable. While the other infectiousness parameters are constant, infectiousness for asymptomatic infection is calculated from the immunity-dependent probability that an asymptomatic infection is detectable by microscopy (Equation 14) and a parameter γ1 that was estimated during the original model fitting in previous publications (Griffin et al., 2010; Griffin et al., 2014; Griffin et al., 2016).
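A sketch of the asymptomatic-infectiousness calculation, assuming the form c_A = c_U + (c_D - c_U) * q^γ1 from Griffin et al. (2010), where q is the probability of microscopic detection, c_D the infectiousness of untreated clinical disease and c_U that of sub-patent infection. The value c_D = 0.068 is the P. falciparum value in the parameter table below; the c_U and γ1 defaults are illustrative placeholders, not values stated in this section.

```python
def asymptomatic_infectiousness(q_detect, c_d=0.068, c_u=0.0062, gamma1=1.82):
    """Interpolates between sub-patent (c_u) and clinical (c_d) infectiousness.

    q_detect: probability that the asymptomatic infection is microscopy-detectable.
    c_u and gamma1 are placeholders chosen for illustration.
    """
    return c_u + (c_d - c_u) * q_detect ** gamma1

for q in (0.0, 0.25, 0.5, 1.0):
    print(q, round(asymptomatic_infectiousness(q), 4))
```

With γ1 greater than 1, infectiousness falls faster than detectability, so highly immune carriers with rarely detectable infections contribute little to onward transmission.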

Force of infection experienced by mosquitoes in the P. vivax model

In the P. vivax model, the force of infection acting on mosquitoes likewise sums the contributions of four human infectious states (untreated symptomatic infection, treated symptomatic infection, asymptomatic LM-detectable infection, and asymptomatic PCR-detectable infection), each weighted by its human-to-mosquito infectiousness. Because P. vivax gametocytes develop more quickly than P. falciparum gametocytes, there is assumed to be no delay between infection and infectiousness in humans.
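Ignoring age structure and biting heterogeneity (so the normalization constant is 1) and, for P. falciparum, the gametocyte lag, the force of infection on mosquitoes reduces to the human biting rate times an infectiousness-weighted sum of the proportions of humans in each infectious state. The sketch uses the P. vivax infectiousness values for untreated and treated clinical disease from the parameter table below; the two asymptomatic values, the biting rate, the state proportions, and all names are hypothetical.

```python
def mosquito_force_of_infection(biting_rate, proportions, infectiousness):
    """Per-mosquito infection rate: bites on humans per day x chance a bite infects."""
    if proportions.keys() != infectiousness.keys():
        raise ValueError("proportions and infectiousness must cover the same states")
    return biting_rate * sum(proportions[s] * infectiousness[s] for s in proportions)

INFECTIOUSNESS = {
    "untreated_clinical": 0.8,   # P. vivax value from the parameter table
    "treated_clinical": 0.4,     # P. vivax value from the parameter table
    "lm_asymptomatic": 0.1,      # hypothetical placeholder
    "pcr_asymptomatic": 0.035,   # hypothetical placeholder
}
# Hypothetical proportions of the human population in each infectious state.
proportions = {"untreated_clinical": 0.002, "treated_clinical": 0.003,
               "lm_asymptomatic": 0.05, "pcr_asymptomatic": 0.15}
print(mosquito_force_of_infection(0.3, proportions, INFECTIOUSNESS))
```

Even with low per-person infectiousness, the large asymptomatic reservoir can contribute most of the force of infection on mosquitoes, as it does with these illustrative numbers.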

Larval development

For both P. falciparum and P. vivax, the larval stage model is based on the model previously described in White et al., 2011. Female adult mosquitoes lay eggs at a daily rate defined below. Upon hatching, larvae progress through early and late larval stages before developing into pupae. Adult female mosquitoes emerge from the pupal stage at the emergence rate used in Equation (29), which is calculated from the pupal compartment.

The early larval, late larval, and pupal stages each have their own duration. The larval stages are regulated by density-dependent mortality rates, with a time-varying carrying capacity that represents the ability of the environment to sustain breeding sites through different periods of the year, and with the effect of larval density relative to the carrying capacity modulated by an additional parameter. Since seasonality in transmission dynamics was not modeled at the country level in this analysis, the carrying capacity was assumed to be constant throughout the year. The carrying capacity determines the mosquito density, and hence the baseline transmission intensity, in the absence of interventions.
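The larval dynamics can be sketched as three ordinary differential equations with density-dependent mortality. The structure below follows our reading of White et al. (2011): early-larval mortality is scaled by 1 + (E + L) / K and late-larval mortality by 1 + γ (E + L) / K, where K is the carrying capacity, and half of the emerging adults are assumed to be female. The adult population is held fixed (no feedback), and every numeric value, the dataclass, and the function names are illustrative placeholders, not the values used in this analysis.

```python
from dataclasses import dataclass

@dataclass
class LarvalParams:
    d_e: float = 6.64     # duration of early larval stage (days), placeholder
    d_l: float = 3.72     # duration of late larval stage (days), placeholder
    d_p: float = 0.643    # duration of pupal stage (days), placeholder
    mu_e: float = 0.0338  # baseline early-larval mortality (per day), placeholder
    mu_l: float = 0.0348  # baseline late-larval mortality (per day), placeholder
    mu_p: float = 0.249   # pupal mortality (per day), placeholder
    gamma: float = 13.25  # extra density dependence for late larvae, placeholder

def larval_derivatives(e, l, p, eggs_per_day, adults, capacity, par):
    """Returns (dE/dt, dL/dt, dP/dt, adult female emergence rate)."""
    crowding = (e + l) / capacity
    de = eggs_per_day * adults - e / par.d_e - par.mu_e * (1 + crowding) * e
    dl = e / par.d_e - l / par.d_l - par.mu_l * (1 + par.gamma * crowding) * l
    dp = l / par.d_l - p / par.d_p - par.mu_p * p
    emergence = 0.5 * p / par.d_p  # half of emerging adults assumed female
    return de, dl, dp, emergence

def run(days, dt=0.01, adults=10.0, eggs_per_day=5.0, capacity=100.0):
    """Forward-Euler run with a fixed adult population, starting from empty stages."""
    par = LarvalParams()
    e = l = p = 0.0
    for _ in range(int(days / dt)):
        de, dl, dp, _ = larval_derivatives(e, l, p, eggs_per_day, adults, capacity, par)
        e, l, p = e + dt * de, l + dt * dl, p + dt * dp
    return e, l, p

print(run(365))
```

Doubling the carrying capacity in this sketch relieves crowding and increases larval numbers, which is how the carrying capacity sets baseline mosquito density.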

The carrying capacity is calculated from the initial female mosquito density, the baseline mosquito death rate, and an auxiliary term that depends on the number of eggs laid per day. The number of eggs laid per day in turn depends on the maximum number of eggs per oviposition per mosquito. The adult mosquito death rate μ and the mosquito feeding rate are affected by the use of ITNs, as described in the following section on modeling vector control. Full details on the derivation of the egg-laying rate and the carrying capacity have been previously published (White et al., 2011).
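For the number of eggs laid per day, a form consistent with this description, and our assumption based on White et al. (2011), is β = ε_max * μ / (exp(μ / f) - 1). The reasoning: a mosquito survives each gonotrophic cycle of length 1 / f with probability exp(-μ / f), so its expected number of ovipositions over a lifetime is the geometric sum 1 / (exp(μ / f) - 1); dividing by the mean lifetime 1 / μ gives a daily rate. The sketch shows how β falls when ITNs raise the death rate or lower the feeding rate; ε_max = 21.19 and the rates are illustrative placeholders.

```python
import math

def eggs_per_day(eps_max, mu, f):
    """Mean eggs laid per female per day (assumed form).

    Expected ovipositions over a lifetime: sum_{n>=1} exp(-n*mu/f) = 1/(exp(mu/f) - 1);
    dividing by the mean lifetime 1/mu gives a per-day rate. expm1 keeps precision
    when mu/f is small.
    """
    return eps_max * mu / math.expm1(mu / f)

print(eggs_per_day(21.19, mu=0.132, f=1 / 3))  # placeholder baseline rates
print(eggs_per_day(21.19, mu=0.4, f=0.28))     # placeholder rates with ITNs
```

As μ approaches 0 the expression tends to ε_max * f, one full batch of eggs per gonotrophic cycle, which is a useful sanity check on the form.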

Modeling the impact of ITNs

ITNs are modeled as described previously (Griffin et al., 2010; Griffin et al., 2016). The use of ITNs affects mosquito population and transmission dynamics in four ways: it increases the mosquito death rate, lengthens the feeding (gonotrophic) cycle, changes the proportion of bites taken on protected and unprotected people, and changes the proportion of bites taken on humans relative to animals. The probability that a blood-seeking mosquito successfully feeds on a human (as opposed to being repelled or killed) depends on the species-dependent bionomics and behavior of the mosquito, as well as on the anti-vectoral interventions present in the human population. Parameter values can be found in Appendix 1—table 3.

Mosquito feeding behavior

In the model there are four possible outcomes of a mosquito feeding attempt:

- The mosquito bites a non-human host.
- The mosquito attempts to bite a human host but is killed by the ITN before biting.
- The mosquito successfully feeds on a human host and survives the feeding attempt.
- The mosquito attempts to bite a human host but is repelled by the ITN without feeding, and repeats the attempt to find a blood meal source.

For a single feeding attempt on a human host, we define three probabilities: that the mosquito bites, that it bites and survives the feeding attempt, and that it is repelled without feeding. These probabilities exclude natural vector mortality, so that in a population without protection from ITNs (e.g. before their introduction), the probabilities of biting and of biting and surviving are both 1 and the probability of being repelled is 0.

The presence of ITNs modifies these probabilities of surviving a feeding attempt or being repelled without feeding. Upon entering a house with ITNs, a mosquito can experience three different outcomes: being repelled by the ITN without feeding, being killed by the ITN before biting, or feeding successfully. All biting attempts inside a house are assumed to be on humans. The repellency of ITNs, through both the insecticide and the barrier effect, decays over time, so these three probabilities depend on the time since net distribution.

The probability of a mosquito being repelled decays from a maximum value for a new bednet towards a minimum value for a bednet that no longer has insecticidal activity and may have holes that reduce the barrier effect. This decay occurs at a constant rate over time since distribution. The killing effect of ITNs decreases at the same constant rate from its maximum probability. In model simulations, ITNs are distributed every three years.
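A sketch of ITN efficacy decay, assuming exponential decay at rate ln 2 / (half-life), repellence r(t) = r_min + (r_max - r_min) * exp(-decay * t), killing d(t) = d_max * exp(-decay * t), successful feeding s(t) = 1 - r(t) - d(t), and time measured since the most recent distribution in a three-year cycle. The half-life-based decay rate is our assumption, and the maximum and minimum probabilities and half-life are illustrative placeholders; fitted values are in the appendix table of mosquito model and ITN parameters. The function name is hypothetical.

```python
import math

def itn_probabilities(days_since_first_campaign, r_max=0.56, r_min=0.24, d_max=0.41,
                      half_life_years=2.64, cycle_years=3.0):
    """Repel, kill and feed probabilities for a mosquito entering a house with an ITN."""
    t = days_since_first_campaign % (cycle_years * 365.0)  # nets replaced every cycle
    decay = math.log(2) / (half_life_years * 365.0)        # per day (assumed form)
    factor = math.exp(-decay * t)
    repel = r_min + (r_max - r_min) * factor
    kill = d_max * factor
    feed = 1.0 - repel - kill  # remaining probability: the mosquito feeds
    return repel, kill, feed

for day in (0, 365, 730, 1094, 1095):
    print(day, [round(x, 3) for x in itn_probabilities(day)])
```

The three probabilities always sum to 1; as the nets age, probability shifts from killing and repelling towards successful feeding, until the next distribution resets it.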

Dividing the population into a group not covered by an ITN and a group covered by an ITN gives the probabilities of successfully feeding and of being repelled without feeding during a single feeding attempt on a human. These probabilities are averages over the two groups, weighted by the proportion of the population in each group, and they depend on the proportion of bites taken on humans in bed, which was derived from previous publications (Griffin et al., 2010).

For a single feeding attempt on any host (animal or human), the average probabilities of feeding and of being repelled without feeding then also depend on the proportion of bites taken on humans in the absence of any vector control intervention.
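The two averaging steps can be sketched as follows, assuming the formulation of Griffin et al. (2010) as we understand it. For covered people, the per-attempt probability of feeding and surviving is w = 1 - φ_B + φ_B * s_ITN and of being repelled is z = φ_B * r_ITN, where φ_B is the proportion of bites taken in bed; for uncovered people, w = 1 and z = 0. Averaging over hosts with the baseline proportion of bites on humans Q0 then gives w̄ = 1 - Q0 + Q0 * Σ c_i w_i and z̄ = Q0 * Σ c_i z_i. The values φ_B = 0.89 and Q0 = 0.92, the ITN probabilities, and the function name are placeholders.

```python
def per_attempt_probabilities(coverage, s_itn, r_itn, phi_bed=0.89, q0=0.92):
    """Average (feed-and-survive, repelled) probabilities for one attempt on any host."""
    # Covered people: bites outside bed are unaffected; bites in bed meet the net.
    w_covered = 1.0 - phi_bed + phi_bed * s_itn
    z_covered = phi_bed * r_itn
    # Coverage-weighted average over uncovered (w = 1, z = 0) and covered people.
    w_human = (1.0 - coverage) * 1.0 + coverage * w_covered
    z_human = coverage * z_covered
    # Animal bites (share 1 - q0) always succeed and are never repelled.
    w_bar = 1.0 - q0 + q0 * w_human
    z_bar = q0 * z_human
    return w_bar, z_bar

for cov in (0.0, 0.5, 1.0):
    print(cov, per_attempt_probabilities(cov, s_itn=0.03, r_itn=0.56))
```

Whatever is left over, 1 - w̄ - z̄, is the per-attempt probability that a mosquito is killed by an ITN.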

Effect of ITNs on mosquito mortality

The average probability of being repelled without feeding affects the mosquito feeding rate, which also depends on the time spent looking for a blood meal in the absence of vector control and on the time spent resting between blood meals; the resting time is assumed to be unaffected by ITN usage.

The average probabilities of feeding and of being repelled also affect the probability of surviving the feeding period, which additionally depends on the baseline mosquito death rate in the absence of interventions.

The probability of surviving the resting period is not affected by ITNs.

These quantities are then used to calculate the mosquito mortality rate that drives mosquito population dynamics in the set of Equations (29).
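Assuming the Griffin et al. (2010) formulation as we understand it, the feeding rate, survival probabilities, and resulting death rate can be computed from the average per-attempt probabilities of feeding and surviving (w̄) and of being repelled (z̄): f = 1 / (δ1 / (1 - z̄) + δ2), p1 = w̄ * p1' / (1 - z̄ * p1') with p1' = exp(-μ0 * δ1), p2 = exp(-μ0 * δ2), and μ = -f * ln(p1 * p2). Here repelled mosquitoes keep searching, which lengthens the search time and adds a geometric series of extra attempts to the survival calculation. Without ITNs (w̄ = 1, z̄ = 0) this recovers μ = μ0, a useful check. The values δ1 = 0.69 and δ2 = 2.31 days, μ0 = 0.132 per day, the example inputs, and the function name are placeholders.

```python
import math

def mosquito_mortality(w_bar, z_bar, delta1=0.69, delta2=2.31, mu0=0.132):
    """Returns (feeding rate f, feeding-period survival p1, resting survival p2, death rate mu)."""
    f = 1.0 / (delta1 / (1.0 - z_bar) + delta2)      # repelled mosquitoes keep searching
    p1_base = math.exp(-mu0 * delta1)                 # survive one search, no interventions
    p1 = w_bar * p1_base / (1.0 - z_bar * p1_base)   # sum over repeated repelled attempts
    p2 = math.exp(-mu0 * delta2)                      # resting unaffected by ITNs
    mu = -f * math.log(p1 * p2)                       # per-day hazard over one cycle
    return f, p1, p2, mu

print(mosquito_mortality(1.0, 0.0))             # no ITNs
print(mosquito_mortality(0.205764, 0.458528))  # example inputs with full ITN coverage
```

The death rate rises with ITN use both because fewer mosquitoes survive each feeding attempt and because repeated repelled attempts expose them to baseline mortality for longer.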

Effect of ITNs on the force of infection acting on humans and mosquitoes

Mosquito anthropophagy (the proportion of successful bites that are on humans) is also affected by ITN usage.

Further details on the assumptions in this calculation can be found in an earlier publication (Griffin et al., 2010).

This then determines the biting rate on humans used in the equations for the force of infection experienced by humans (Equations 4–6) and by mosquitoes.
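Assuming the Griffin et al. (2010) formulation as we understand it, anthropophagy becomes Q = 1 - (1 - Q0) / w̄, where w̄ is the average per-attempt probability of feeding and surviving across all hosts and Q0 the baseline proportion of bites on humans, and the biting rate on humans is the feeding rate times Q. The reasoning: animal bites (share 1 - Q0) always succeed, so they make up a larger share of successful bites when ITNs block human bites. With no ITNs (w̄ = 1) this reduces to Q = Q0. Q0 = 0.92, the inputs, and the function name are placeholders.

```python
def human_biting_rate(w_bar, feeding_rate, q0=0.92):
    """Returns (anthropophagy Q, biting rate on humans = feeding_rate * Q)."""
    # Successful bites total w_bar per attempt, of which 1 - q0 are on animals.
    q = 1.0 - (1.0 - q0) / w_bar
    return q, feeding_rate * q

print(human_biting_rate(1.0, 1 / 3))          # no ITNs: Q equals Q0
print(human_biting_rate(0.205764, 0.279))     # example inputs with full ITN coverage
```

ITNs therefore reduce the human biting rate in two ways: a slower feeding cycle and a shift of successful bites towards animals.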

Effect of ITNs on larval development

The mosquito death rate μ and the feeding rate also influence the calculation of the carrying capacity and the egg-laying rate in Equation 34 and Equation 36, thereby affecting larval development.

Mosquito model and insecticide-treated net (ITN) parameters.

Full details on parameter values can be found in the original publications (Griffin et al., 2010; Griffin et al., 2016; White et al., 2011; White et al., 2018), including references and intervals for the prior and posterior distributions for fitted parameters (median values of the posterior distribution are used in model simulations).

| Parameter | P. falciparum (Anopheles gambiae s.s.) | P. vivax (Anopheles punctulatus) |
|---|---|---|
| Infectiousness of humans to mosquitoes | | |
| Lag from parasites to infectious gametocytes | 12.5 days | - |
| Untreated clinical disease | 0.068 | 0.8 |
| Treated clinical disease | 0.022 | 0.4 |