Peer review process

Not revised: This Reviewed Preprint includes the authors’ original preprint (without revision), an eLife assessment, public reviews, and a provisional response from the authors.

Read more about eLife’s peer review process.

Editors

- Reviewing Editor: Noah Cowan, Johns Hopkins University, Baltimore, United States of America

- Senior Editor: Tamar Makin, University of Cambridge, Cambridge, United Kingdom

Reviewer #1 (Public Review):

The present study examines whether one can identify kinematic signatures of different motor strategies in both humans and non-human primates (NHPs). The Critical Stability Task (CST) requires a participant to control a cursor with complex dynamics based on hand motion. The manuscript includes datasets on the performance of NHPs collected in a previous study, as well as new data from humans performing the same task. Further human experiments and optimal control models highlight how different strategies lead to different patterns of hand motion. Finally, classifiers were developed to predict which strategy individuals were using on a given trial. There are several strengths to this manuscript. I think the CST provides a useful behavioural task to explore the neural basis of voluntary control. While reaching is an important basic motor skill, there is much to learn by looking at other motor actions to address many fundamental issues concerning the neural basis of voluntary control. I also think the comparison between human and NHP performance is important, as there is a common concern that NHPs can be overtrained on motor tasks, leading to differences in their performance compared with humans. The present study highlights that there are clear similarities in the motor strategies of humans and NHPs. While the results are promising, I would suggest that the actual use of these paradigms and techniques likely needs some improvement or refinement. Notably, the threshold or technique used to identify which strategy an individual is using on a given trial needs to be more stringent, given the substantial overlap in hand kinematics between different strategies.

The most important goal of this study is to set up future studies examining how changes in motor strategy impact neural processing. I have a few concerns that I think need to be considered. First, a classifier was developed to identify whether a trial reflected Position Control, with a trial labelled as such when the classifier's probability exceeded 70%. Conversely, a probability below 30% was taken to indicate Velocity Control, and the middle 40% band was treated as uncertain. While this may be acceptable for quantifying behaviour, I'm not sure it is strict enough for interpreting neural data. Figure 7A displays the OFC model results for the two strategies and demonstrates substantial overlap in RMS cursor position and velocity at the lowest range of values. In this region, individual human and NHP trials are commonly classified as Position Control, although the region clearly also falls within the range expected for Velocity Control, just with a lower density of trials. Neural data are messy enough already; incorrectly labelled trials will make them even messier when trying to quantify differences in neural processing between strategies. A further challenge is that trials cannot be averaged, as the patterns of kinematics differ so much from trial to trial. One option is simply to raise the threshold from >70%/<30% to a level where you have higher confidence that performance reflects only one of the two strategies (perhaps 95%/5%). Another approach would be to identify the 95% confidence boundary for each strategy and classify a trial as reflecting a given strategy only when it lies inside that strategy's 95% boundary but outside the other strategy's 95% boundary (or some other level of separation). A higher threshold would hopefully also address the challenge of individuals switching strategies within a trial.
Admittedly, this more stringent separation will likely reduce the number of trials, perhaps prohibitively, but there is a clear trade-off between the number of trials and clean data. For the future, one tweak to the task could be to lengthen the trials, as this would certainly increase the separation between the two conditions.
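The two labeling rules suggested above can be sketched as follows. This is a minimal illustration, not the authors' classifier: it assumes each trial is summarized by a classifier probability of Position Control, or by a 2-D feature vector (for example RMS cursor position and RMS cursor velocity), and that each strategy's 95% region can be approximated by a Gaussian fitted to simulated trials from that strategy. All function names are hypothetical.

```python
import math

import numpy as np

# 95% quantile of a chi-square distribution with 2 degrees of freedom: -2 ln(0.05), about 5.991.
# Only valid for 2-D feature vectors such as (RMS position, RMS velocity).
CHI2_95_2DOF = -2.0 * math.log(0.05)


def label_by_probability(p_position, upper=0.7, lower=0.3):
    """Label trials from a classifier's P(position control).

    Above `upper` -> 'position', below `lower` -> 'velocity', otherwise 'uncertain'.
    """
    p = np.asarray(p_position, dtype=float)
    labels = np.full(p.shape, "uncertain", dtype=object)
    labels[p > upper] = "position"
    labels[p < lower] = "velocity"
    return labels


def fit_gaussian(features):
    """Mean and covariance of an (n_trials, n_features) array (Gaussian assumption)."""
    f = np.asarray(features, dtype=float)
    return f.mean(axis=0), np.cov(f, rowvar=False)


def inside_region(x, mean, cov, threshold=CHI2_95_2DOF):
    """True where the squared Mahalanobis distance from `mean` is within `threshold`."""
    d = np.atleast_2d(np.asarray(x, dtype=float)) - mean
    m2 = np.einsum("ij,jk,ik->i", d, np.linalg.inv(cov), d)
    return m2 <= threshold


def label_by_exclusive_regions(x, pos_features, vel_features):
    """Label a trial only if it lies inside one strategy's 95% region and
    outside the other's; overlapping or outlying trials stay 'uncertain'."""
    in_pos = inside_region(x, *fit_gaussian(pos_features))
    in_vel = inside_region(x, *fit_gaussian(vel_features))
    labels = np.full(in_pos.shape, "uncertain", dtype=object)
    labels[in_pos & ~in_vel] = "position"
    labels[in_vel & ~in_pos] = "velocity"
    return labels
```

Calling `label_by_probability(p, upper=0.95, lower=0.05)` gives the stricter 95%/5% rule. The exclusive-region rule deliberately leaves trials in the overlap unlabeled, which is exactly the trade-off between trial count and label purity described above. Because RMS values are positive and often skewed, fitting the Gaussians to log-transformed features may be a better choice in practice.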

While the paradigm creates interesting behavioural differences, it is not clear to me what one would expect to observe neurally in different brain regions beyond paralleling kinematic differences in performance. Perhaps this could be discussed. One extension of the present task would be to add some trials where visual disturbances are applied near the end of the trial. The prediction is that there would be differences in the kinematics of these motor corrections for different motor strategies. One could then explore differences in neural processing across brain regions to identify regions that simply reflect sensory feedback (no differences in the neural response after the disturbance), versus those involved in different motor strategies (differences in neural responses after the disturbance).

It seems that a mix of lambda values is presented in Figure 5 and beyond. Some analysis is needed to verify that all strategies were used equally across lambda levels; otherwise, apparent differences between control strategies may simply reflect changes in task difficulty. It would also be useful to know whether there were any trends over time, for example whether strategies were used in blocks of trials, or whether one strategy was used early in learning and another later.
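One simple form the requested check could take, sketched under the assumption that each trial has a lambda value and a strategy label (for example from the classifier), is a contingency table of counts with a Pearson chi-square test of independence. The function names are hypothetical; `scipy.stats.chi2_contingency` computes the same statistic together with a p-value. Replacing lambda with a block or session index gives the corresponding check for trends over time.

```python
import numpy as np


def strategy_by_lambda_table(lambdas, labels):
    """Contingency table of trial counts: rows are lambda levels, columns are strategy labels."""
    lambdas = np.asarray(lambdas)
    labels = np.asarray(labels)
    lam_levels = np.unique(lambdas)
    label_levels = np.unique(labels)
    table = np.array([[np.sum((lambdas == lam) & (labels == lab)) for lab in label_levels]
                      for lam in lam_levels])
    return lam_levels, label_levels, table


def chi2_independence(table):
    """Pearson chi-square statistic and degrees of freedom for independence
    between lambda level (rows) and strategy (columns)."""
    table = np.asarray(table, dtype=float)
    # Expected counts if strategy use did not depend on lambda
    expected = table.sum(axis=1, keepdims=True) * table.sum(axis=0, keepdims=True) / table.sum()
    stat = float(np.sum((table - expected) ** 2 / expected))
    dof = (table.shape[0] - 1) * (table.shape[1] - 1)
    return stat, dof
```

A large statistic relative to its degrees of freedom would suggest that strategy use varies with lambda, in which case comparisons between strategies should be made within lambda levels.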

Figure 2 highlights key features of performance as a function of task difficulty. Lines 187 to 191 highlight similarities in motor performance between humans and NHPs. However, there is a curious difference in hand/cursor Gain for Monkey J. Any insight as to the basis for this difference?

Reviewer #2 (Public Review):

The goal of the present study is to better understand the 'control objectives' that subjects adopt in a video-game-like virtual-balancing task. In this task, the hand must move in the opposite direction from a cursor. For example, if the cursor is 2 cm to the right, the subject must move their hand 2 cm to the left to 'balance' the cursor. Any imperfection in that opposition causes the cursor to move. E.g., if the subject were to move only 1.8 cm, that would be insufficient, and the cursor would continue to move to the right. If they were to move 2.2 cm, the cursor would move back toward the center of the screen. This return to center might actually be 'good' from the subject's perspective, depending on whether their objective is to keep the cursor still or keep it near the screen's center. Both are reasonable 'objectives' because the trial fails if the cursor moves too far from the screen's center during each six-second trial.
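As a concrete illustration of the balancing rule just described, here is a minimal sketch assuming first-order unstable dynamics in which cursor velocity is proportional to the sum of cursor and hand positions, dx/dt = lam * (x + h). This linear form and the value of `lam` are assumptions chosen to match the verbal description; the manuscript's exact equations are not reproduced in this review.

```python
def cursor_velocity(cursor_x, hand_x, lam):
    """Assumed CST dynamics: dx/dt = lam * (cursor_x + hand_x).

    The cursor is stationary only when the hand exactly opposes it
    (hand_x == -cursor_x); any mismatch is amplified by lam.
    """
    return lam * (cursor_x + hand_x)


# Cursor 2 cm right of center; hand at the three positions from the example above.
for hand in (-2.0, -1.8, -2.2):
    v = cursor_velocity(2.0, hand, lam=3.0)  # lam = 3.0 is an arbitrary illustrative value
    if v == 0:
        outcome = "stationary"
    elif v > 0:
        outcome = "keeps moving right"
    else:
        outcome = "moves back toward center"
    print(f"hand {hand:+.1f} cm -> cursor velocity {v:+.2f} cm/s ({outcome})")
```

Under this assumption, a hand at -1.8 cm leaves a rightward velocity that grows as the cursor drifts further out, while a hand at -2.2 cm pulls the cursor back toward center.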

This task was recently developed for use in monkeys (Quick et al., 2018), with the intention of using it to study the cortical control of movement and also to evaluate BMI control algorithms. The purpose of the present study is to better characterize how this task is performed. What sort of control policies are used? Perhaps more deeply, what kinds of errors are those policies trying to minimize? To address these questions, the authors simulate control-theory-style models and compare them with behavior. They do so in both monkeys and humans.

These goals make sense as a precursor to future recording or BMI experiments. The primate motor-control field has long been dominated by variants of reaching tasks, so introducing this new task will likely be beneficial. This is not the first non-reaching task, but it is an interesting one and it makes sense to expand the presently limited repertoire of tasks. The present task is very different from any prior task I know of. Thus, it makes sense to quantify behavior as thoroughly as possible in advance of recordings. Understanding how behavior is controlled is, as the authors note, likely to be critical to interpreting neural data.

From this perspective - providing a basis for interpreting future neural results - the present study is fairly successful. Monkeys seem to understand the task properly, and to use control policies that are not dissimilar from those of humans. Also reassuring is the fact that behavior remains sensible even when task difficulty becomes high. By 'sensible' I simply mean that behavior can be understood as seeking to minimize error: position, velocity, or (possibly) both, and that this remains true across a broad range of task difficulties. The authors document why minimizing position and minimizing velocity are both reasonable objectives. Minimizing velocity is reasonable, because a near-stationary cursor can't move far in six seconds. Minimizing position error is reasonable, because the trial won't fail if the cursor doesn't stray far from the center. This is formally demonstrated by simulating control policies: both objectives lead to control policies that can perform the task and produce realistic single-trial behavior. The authors also demonstrate that, via verbal instruction, they can induce human subjects to favor one objective over the other. These all seem like things that are on the 'need to know' list, and it is commendable that this amount of care is being taken before recordings begin, as it will surely aid interpretation.

Yet as a stand-alone study, the contribution to our understanding of motor control is more limited. The task allows two different objectives (minimize velocity, minimize position) to be equally compatible with the overall goal (don't fail the trial). Or more precisely, there exists a range of objectives with those two at the extremes. So it makes sense that different subjects might choose to favor different objectives, and also that they can do so when instructed. But has this taught us something about motor control, or simply that there is a natural ambiguity built into the task? If I ask you to play a game, but don't fully specify the rules, should I be surprised that different people think the rules are slightly different?

The most interesting scientific claim of this study is not the subject-to-subject variability; the task design makes that quite likely and natural. Rather, the central scientific result is the claim that individual subjects are constantly switching objectives (and thus control policies), such that the policy guiding behavior differs dramatically even on a single-trial basis. This scientific claim is supported by a technical claim: that the authors' methods can distinguish which objective is in use, even on single trials. I am uncertain of both claims.

Consider Figure 8B, which reprises a point made in Figures 1 and 3 and gives the best evidence for trial-to-trial variability in objective/policy. For every subject, there are two example trials. The top row of trials shows oscillations around the center, which could be consistent with position-error minimization. The bottom row shows tolerance of position errors so long as drift is slow, which could be consistent with velocity-error minimization. But is this really evidence that subjects were switching objectives (and thus control policies) from trial to trial? A simpler alternative would be a single control policy that does not switch, but still generates this range of behaviors. The authors don't really consider this possibility, and I'm not sure why. One can think of a variety of ways in which a unified policy could produce this variation, given noise and the natural instability of the system.

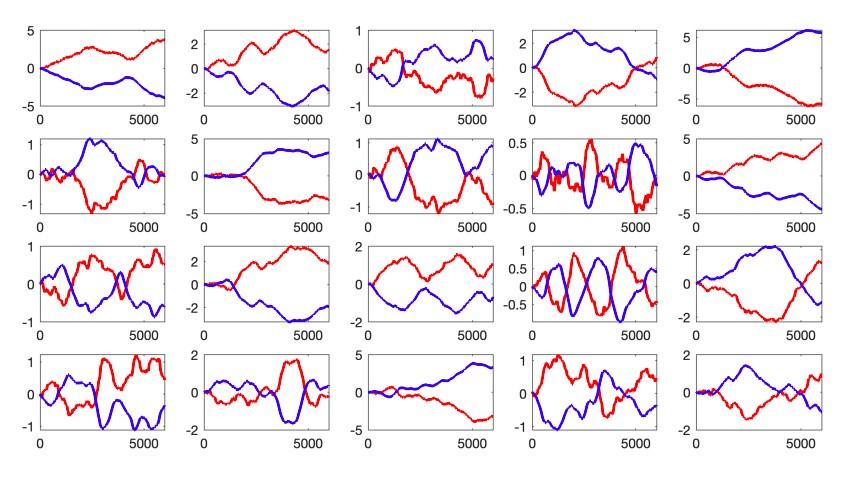

Indeed, I found that it was remarkably easy to produce a range of reasonably realistic behaviors, including the patterns that the authors interpret as evidence for switching objectives, with a simple fixed controller. To run the simulations, I assumed that subjects simply attempt to match their hand position to oppose the cursor position. Because subjects cannot see their hand, I assumed modest variability in the gain, ranging from -1 to -1.05. I also assumed a small amount of motor noise in the outgoing motor command. The resulting (very simple) controller naturally displayed the basic range of behaviors observed across trials (see Image 1).

Peer review image 1.

Some trials had oscillations around the screen center (zero), which is the pattern the authors suggest reflects position control. In other trials the cursor was allowed to drift slowly away from the center, which is the pattern the authors suggest reflects velocity control. This is true even though the controller was the same on every trial. Trial-to-trial differences were driven both by motor noise and by the modest variability in gain. In an unstable system, small differences can lead to (seemingly) qualitatively different behavior on different trials.
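The fixed controller described above is given only in words, so the following is a minimal sketch under stated assumptions rather than the code behind Image 1. It assumes cursor dynamics dx/dt = lam * (x + hand), a hand command equal to gain times the seen cursor position plus Gaussian motor noise, and a gain drawn uniformly between -1.05 and -1 on each trial. The parameter values (`lam`, `noise_sd`, `dt`) and the optional `delay_steps` feedback delay are hypothetical additions that the description does not specify.

```python
import numpy as np


def simulate_trial(gain, lam=3.0, noise_sd=0.05, x0=0.0, dt=0.01,
                   duration=6.0, delay_steps=0, rng=None):
    """Euler-integrate one trial of the assumed dynamics dx/dt = lam * (x + hand)
    under a fixed controller: hand = gain * (seen cursor position) + motor noise."""
    rng = np.random.default_rng() if rng is None else rng
    n_steps = int(round(duration / dt))
    x = np.empty(n_steps + 1)
    x[0] = x0
    for t in range(n_steps):
        seen = x[max(t - delay_steps, 0)]  # optional feedback delay (hypothetical)
        hand = gain * seen + noise_sd * rng.standard_normal()
        x[t + 1] = x[t] + dt * lam * (x[t] + hand)
    return x


def simulate_session(n_trials=20, gain_range=(-1.05, -1.0), seed=0, **trial_kwargs):
    """Draw one gain per trial from `gain_range`, simulate, and return per-trial
    gains with RMS cursor position and RMS cursor velocity."""
    rng = np.random.default_rng(seed)
    dt = trial_kwargs.get("dt", 0.01)
    gains = rng.uniform(gain_range[0], gain_range[1], size=n_trials)
    rms_pos = np.empty(n_trials)
    rms_vel = np.empty(n_trials)
    for i, g in enumerate(gains):
        x = simulate_trial(g, rng=rng, **trial_kwargs)
        v = np.diff(x) / dt  # finite-difference cursor velocity
        rms_pos[i] = np.sqrt(np.mean(x ** 2))
        rms_vel[i] = np.sqrt(np.mean(v ** 2))
    return gains, rms_pos, rms_vel
```

Passing the per-trial RMS position and velocity from such a session to the same classifier used on real data would be one direct way to test whether a single fixed controller yields trials labeled as both strategies.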

This simple controller is also compatible with the ability of subjects to adapt their strategy when instructed. Anyone experienced with this task likely understands (or has learned) that moving the hand slightly more than 'one should' will tend to shepherd the cursor back to center, at the cost of briefly high velocity. Using this strategy more sparingly will tend to minimize velocity even if position errors persist. Thus, any subject using this control policy would be able to adapt their strategy via a modest change in gain (the gain linking visible cursor position to intended hand position).
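The gain argument can be made explicit with a short derivation. It assumes cursor dynamics $\dot{x} = \lambda (x + h)$, with hand position $h$ and instability parameter $\lambda > 0$ (a linear form consistent with the task description, not taken from the manuscript), and a noise-free version of the fixed controller $h = g\,x$:

```latex
\dot{x} = \lambda\,(x + h) = \lambda\,(x + g\,x) = \lambda\,(1 + g)\,x
\qquad\Longrightarrow\qquad
x(t) = x(0)\,e^{\lambda (1 + g)\, t}
```

With $g = -1$ the cursor stays wherever it is ($\dot{x} = 0$), which resembles velocity control. With $g < -1$ the cursor decays toward the center with time constant $1/(\lambda\,\lvert 1 + g \rvert)$, which resembles position control; a more negative $g$ returns it faster at the cost of a higher cursor speed $\lambda\,\lvert 1 + g \rvert\,\lvert x \rvert$. With $g > -1$ the cursor diverges. In this sketch, a small change in a single gain is enough to move behavior between the two regimes.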

This model is simple, and there may be reasons to dislike it. But it is presumably a reasonable model. The nature of the task is that you should move your hand opposite where the cursor is. Because you can't see your hand, you will make small mistakes. Due to the instability of the system, those small mistakes have large and variable effects. This feature is likely common to other controllers as well; many may explicitly or implicitly blend position and velocity control, with different trials appearing more dominated by one versus the other. Given this, I think the study presents only weak evidence that individual subjects are switching their objective on individual trials. Indeed, the more parsimonious explanation may be that they aren't. While the study certainly does demonstrate that the control policy can be influenced by verbal instructions, this might be a small adjustment as noted above.

I thus don't feel convinced that the authors can conclusively tell us the true control policy being used by human and monkey subjects, nor whether that policy is mostly fixed or constantly switching. The data are potentially compatible with any of these interpretations, depending on which control-style model one prefers.

I see a few paths that the authors might take if they chose.

--First, my reasoning above might be faulty, or there might be additional analyses that could rule out the possibility of a unified policy underlying variable behavior. If so, the authors may be able to reject the above concerns and retain the present conclusions. The main scientifically novel conclusion of the present study is that subjects are using a highly variable control policy, switching it on individual trials. If this is indeed the case, there may be additional analyses that could reveal that.

--Second, additional trial types (e.g., with various perturbations) might be used as a probe of the control policy. As noted below, there is a long history of doing this in the pursuit system. That additional data might better disambiguate control policies both in general, and across trials.

--Third, the authors might find that a unified controller is actually a good (and more parsimonious) explanation. That might even be a good thing from the standpoint of future experiments: interpretation of neural data is likely to be much easier if the control policy being instantiated isn't in constant flux.

In any case, I would recommend altering the strength of some conclusions, particularly the conclusion that the presented methods can reliably discriminate amongst objectives/policies on individual trials. This is mentioned as a major motivation on multiple occasions, but in most of these instances, the subsequent analysis infers the objective only across trials (e.g., one must observe a scatterplot of many trials). By Figure 7, they do introduce a method for inferring the control policy on individual trials, and while this seems to work considerably better than chance, it hardly appears reliable.

In this same vein I would suggest toning down aspects of the Introduction and Discussion. The Introduction in particular is overly long, and tries to position the present study as unique in ways that seem strained. Other studies have built links between human behavior, monkey behavior, and monkey neural data (for just one example, consider the corpus of work from the Scott lab that includes Pruszynski et al. 2008 and 2011). Other studies have used highly quantitative methods to infer the objective function used by subjects (e.g. Kording and Wolpert 2004). The very issue that is of interest in the present study - velocity-error-minimization versus position-error-minimization - has been extensively addressed in the smooth pursuit system. That field has long combined quantitative analyses of behavior in humans and monkeys, along with neural recordings. Many pursuit experiments used strategies that could be fruitfully employed to address the central questions of the present study. For example, error stabilization was important for dissecting the control policy used by the pursuit system. By artificially stabilizing the error (position or velocity) at zero, or at some other value, one can determine the system's response. The classic Rashbass step (1961) put position and velocity errors in opposition, to see which dominates the response. Step and sinusoidal perturbations were useful in distinguishing between models, as was the imposition of artificially imposed delays. The authors note the 'richness' of the behavior in the present task, and while one could say the same of pursuit, it was still the case that specific and well-thought through experimental manipulations were pretty critical. It would be better if the Introduction considered at least some of the above-mentioned work (or other work in a similar vein). 
While most would agree with the motivations outlined by the authors - they are logical and make sense - the present Introduction runs the risk of overselling the present conclusions while underselling prior work.

Reviewer #3 (Public Review):

This paper considers a challenging motor control task - the critical stability task (CST) - that can be performed equally well by humans and macaque monkeys. This task is of considerable interest since it is rich enough to potentially yield important novel insights into the neural basis of behavior in more complex tasks than point-to-point reaching. Yet it is also simple enough to allow parallel investigation in humans and monkeys, and is easily amenable to computational modeling. The paper makes a compelling argument for the importance of this type of parallel investigation and the suitability of the CST for doing so.

Behavior in both monkeys and human subjects seems to cluster into different regimes, in which the cursor either oscillates about the center of the screen or drifts more slowly in one direction. The authors show that these two behavioral regimes can be reliably reproduced by instructing human participants either to keep the cursor in the center of the screen (position control objective) or to keep the cursor still anywhere on the screen (velocity control objective), as opposed to the usual 'instruction' simply not to let the cursor leave the screen. A computational model based on optimal feedback control can similarly reproduce the two control regimes when the costs are varied.

Overall, this is a creative study that successfully leverages experiments in humans and computational modeling to gain insight into the nature of individual differences in behavior across monkeys (and people). The approach does work and successfully solves the core problem the authors set out to address. I do think that more comprehensive approaches might be possible, e.g., using a richer set of behavioral features to classify behavior, fitting a parametric class of control objectives rather than assuming a binary classification, and exploring the reliability of the inference process in more detail.

In addition, the authors do not fully establish that varying control objectives is the only way to obtain the different behavioral phenotypes observed. It may, for instance, be possible that other underlying differences (e.g., in the sensitivity to effort costs or the extent of signal-dependent noise) also lead to a range of behaviors similar to that produced by varying the position versus velocity costs.

Specific Comments:

The simulations convincingly show that varying the control objective via the cost function can reproduce the different observed behavioral regimes. In principle, however, the differences in behavior among the monkeys, and among the humans in Experiment 1, might not be due to differences in the cost function but to differences in other aspects of the model. For instance, for a fixed cost function, differences in motor execution noise might lead the model to favor a position-like or a velocity-like strategy. Or differences in the relative effort cost might alter the behavioral phenotype. Given that the narrative is about inferring control objectives, it seems important to rule out more systematically the possibility that some other factor dictates each individual's style of performing the task. One approach might be to formally fit the parameters of the model (or at least a subset of them) under a fixed cost function (e.g., velocity-based), and check whether the model still recovers the different regimes of behavior when parameters *other than the cost function* are varied.

The approach to the classification problem is somewhat ad hoc and based on fairly simplistic, hand-picked features (RMS position and RMS velocity). I do wonder whether a more comprehensive set of behavioral features might enable a clearer separation between strategies, or might even reveal that the uninstructed subjects were doing something qualitatively different from either instructed group. Different control objectives ought to predict meaningfully different control policies, that is, different ways of updating hand position based on the current state of the cursor and hand (e.g., the hand/cursor gain, which clearly differs across instructed strategies). Would it be possible to distinguish control strategies more accurately at this level of analysis, rather than on the basis of gross task metrics? Might this point to possible experimental interventions (e.g., target jumps) that could validate the inferred objective?
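One policy-level feature that could be computed per trial is a hand/cursor gain. The manuscript's exact definition is not given in this review, so this sketch assumes it is the least-squares slope of hand position regressed on cursor position. The hypothetical `lag_steps` parameter pairs each hand sample with an earlier cursor sample to allow for a feedback delay.

```python
import numpy as np


def hand_cursor_gain(cursor, hand, lag_steps=0):
    """Least-squares slope (and intercept) of hand position regressed on cursor
    position, optionally pairing hand[t] with cursor[t - lag_steps]."""
    cursor = np.asarray(cursor, dtype=float)
    hand = np.asarray(hand, dtype=float)
    if lag_steps > 0:
        cursor, hand = cursor[:-lag_steps], hand[lag_steps:]
    design = np.column_stack([cursor, np.ones_like(cursor)])  # slope and intercept columns
    (slope, intercept), *_ = np.linalg.lstsq(design, hand, rcond=None)
    return float(slope), float(intercept)
```

Per-trial slopes, possibly at several lags, could be added to the RMS features as classifier inputs. Under the simple linear picture, a slope near -1 would suggest holding the cursor in place, and a slope more negative than -1 would suggest actively returning it to center.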

It seems that the classification problem cannot be solved perfectly, at least at the single-trial level. Although the classification does recover which participants were given which instructions, it's not clear how robust it is. It should be straightforward to estimate the reliability of the strategy classification by simulating participants and deriving a "confusion matrix", i.e., calculating how often, e.g., data generated under a velocity-control objective are misclassified as following a position-control objective. It's also not clear how this kind of metric relates to the decision confidence output by the classifier.
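The confusion-matrix check can be sketched as follows, assuming trials have been simulated under each known objective (for example with the OFC model) and passed through the classifier, with "uncertain" kept as its own outcome column. The function name and label strings are hypothetical.

```python
import numpy as np


def confusion_matrix(true_labels, predicted_labels,
                     classes=("position", "velocity"),
                     outcomes=("position", "velocity", "uncertain")):
    """Counts and row-normalized rates of each predicted outcome given the true objective."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    counts = np.array([[np.sum((true_labels == c) & (predicted_labels == o)) for o in outcomes]
                       for c in classes])
    row_totals = counts.sum(axis=1, keepdims=True)
    # Rows with no trials are left as zeros rather than dividing by zero
    rates = np.divide(counts, row_totals, out=np.zeros(counts.shape), where=row_totals > 0)
    return counts, rates
```

To relate this to the classifier's reported confidence, one could bin simulated trials by predicted probability and compare the fraction correctly labeled in each bin with the bin's nominal probability.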

The problem of inferring the control objective is framed as a dichotomy between position control and velocity control. In reality, however, there may be a continuum of possible objectives, based on the relative costs of position and velocity. How would the problem differ if inferring the cost function were framed as estimating a parameter rather than as a classification problem?
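A parametric version of the cost can be sketched with a single blending weight. The manuscript's OFC model is not specified in this review and may include delays, noise, and state estimation; this is a simpler, fully observed, noise-free discrete-time LQR under the assumed dynamics dx/dt = lam * (x + h), with hand velocity as the control. The weight `w` blends cursor-position cost (w = 1) with cursor-velocity cost (w = 0). All names and parameter values are hypothetical.

```python
import numpy as np


def cst_lqr_gain(w, lam=3.0, dt=0.01, r=1e-3, max_iter=50_000, tol=1e-10):
    """Steady-state LQR gain for the assumed CST model with a blended cost.

    State s = [cursor x, hand h]; control u = hand velocity.
    Per-step cost: w * x**2 + (1 - w) * v**2 + r * u**2, with v = lam * (x + h).
    Returns K (control u = -K @ s) and the discrete-time A, B matrices.
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    A = np.array([[1.0 + dt * lam, dt * lam],   # Euler step of dx/dt = lam * (x + h)
                  [0.0, 1.0]])
    B = np.array([[0.0], [dt]])                 # hand integrates its velocity command
    C_vel = np.array([[lam, lam]])              # cursor velocity = C_vel @ s
    Q = w * np.array([[1.0, 0.0], [0.0, 0.0]]) + (1.0 - w) * C_vel.T @ C_vel
    R = np.array([[r]])
    P = Q.copy()
    for _ in range(max_iter):
        # Riccati recursion: P <- Q + A'PA - A'PB (R + B'PB)^{-1} B'PA
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P_next = Q + A.T @ P @ A - A.T @ P @ B @ K
        converged = np.max(np.abs(P_next - P)) < tol * max(1.0, np.max(np.abs(P)))
        P = P_next
        if converged:
            break
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return K, A, B
```

Sweeping `w` over [0, 1], simulating noisy closed-loop trials with each resulting gain, and choosing the `w` whose simulated features best match a subject's data would turn the binary classification into a one-parameter fit. In this sketch, at w = 0 the direction x + h = 0 carries no cost, so the controller leaves the cursor wherever it comes to rest. `scipy.linalg.solve_discrete_are` could likely replace the fixed-point iteration for w > 0.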