Peer review process

Not revised: This Reviewed Preprint includes the authors’ original preprint (without revision), an eLife assessment, public reviews, and a provisional response from the authors.

Read more about eLife’s peer review process.

Editors

- Reviewing Editor: Markus Ploner, Department of Neurology and TUM-Neuroimaging Center, TUM School of Medicine and Health, Technical University of Munich (TUM), Munich, Germany

- Senior Editor: Christian Büchel, University Medical Center Hamburg-Eppendorf, Hamburg, Germany

Reviewer #1 (Public Review):

Summary:

This study examined the role of statistical learning in pain perception, suggesting that individuals' expectations about a sequence of events influence their perception of pain intensity. The authors incorporated volatility and stochasticity into their experimental design and asked participants (n = 27) to rate the pain intensity, their prediction, and their confidence level. They compared two inference strategies, optimal Bayesian inference (Kalman filters) and a heuristic (model-free reinforcement learning), and showed that the expectation-weighted Kalman filter best explained the temporal pattern of participants' ratings. These results provide evidence for a Bayesian inference perspective on pain, supported by a computational model that elucidates the underlying process.
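To make the contrast between the two model families concrete, the following is a minimal sketch of a generic delta-rule (model-free RL) update next to a generic one-dimensional Kalman filter update. It is not the authors' eRL or eKF implementation: the function names, the starting values, and the parameters `alpha`, `process_var`, and `obs_var` are illustrative assumptions, and the expectation-weighting of perception is omitted.

```python
import numpy as np

def rl_update(value, outcome, alpha):
    """Delta rule: move the estimate toward the outcome with a fixed learning rate."""
    return value + alpha * (outcome - value)

def kf_update(mean, var, outcome, process_var, obs_var):
    """One Kalman filter step for a latent intensity following a random walk."""
    prior_var = var + process_var               # uncertainty grows between trials
    gain = prior_var / (prior_var + obs_var)    # learning rate set by relative uncertainty
    new_mean = mean + gain * (outcome - mean)
    new_var = (1.0 - gain) * prior_var
    return new_mean, new_var, gain

def run_models(outcomes, alpha=0.3, process_var=1.0, obs_var=4.0):
    """Return trial-wise predictions of both models and the Kalman gains."""
    v, m, s = 50.0, 50.0, 100.0                 # hypothetical starting estimates
    rl_preds, kf_preds, gains = [], [], []
    for y in outcomes:
        rl_preds.append(v)                      # predictions are made before the outcome
        kf_preds.append(m)
        v = rl_update(v, y, alpha)
        m, s, g = kf_update(m, s, y, process_var, obs_var)
        gains.append(g)
    return np.array(rl_preds), np.array(kf_preds), np.array(gains)
```

The only structural difference in this sketch is the learning rate: the RL model keeps `alpha` fixed, whereas the Kalman gain starts high under large initial uncertainty and settles to a value determined by `process_var` and `obs_var`.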

Strengths:

Their experimental design included a wide range of input intensities and the levels of volatility and stochasticity.

Weaknesses:

- Selection of candidate computational models: While the paper juxtaposes the simple model-free RL model against a Kalman Filter model in the context of pain perception, the rationale behind this choice remains ambiguous. It prompts the question: could other RL-based models, such as model-based RL or hierarchical RL, offer additional insights? A more detailed explanation of their computational model selection would provide greater clarity and depth to the study.

- Effects of varying levels of volatility and stochasticity: The study commendably integrates varying levels of volatility and stochasticity into its experimental design. However, the depth of analysis concerning the effects of these variables on model fit appears shallow. A looming concern is whether the superior performance of the expectation-weighted Kalman Filter model might be a natural outcome of the experimental design. While the non-significant difference between eKF and eRL for the high stochasticity condition somewhat alleviates this concern, it raises another query: Would a more granular analysis of volatility and stochasticity effects reveal fine-grained model fit patterns?

- Rating instruction: According to Fig. 1A, participants were prompted to rate their responses to the question, "How much pain DID you just feel?" and to specify their confidence level regarding their pain. It is difficult for me to understand the meaning of confidence in this context, given that they were asked to report their *subjective* feelings. It might have been better to query participants about perceived stimulus intensity levels. This perspective is seemingly echoed in lines 100-101, "the primary aim of the experiment was to determine whether the expectations participants hold about the sequence inform their perceptual beliefs about the intensity of the stimuli."

- Relevance to clinical pain: While the authors underscore the relevance of their findings to chronic pain, they did not include data pertaining to clinical pain. Notably, their initial preprint seemed to encompass data from a clinical sample (https://www.medrxiv.org/content/10.1101/2023.03.23.23287656v1), which, for reasons unexplained, has been omitted in the current version. Clarification on this discrepancy would be instrumental in discerning the true relevance of the study's findings to clinical pain scenarios.

- Paper organization: The paper's organization appears somewhat unusual, possibly due to the removal of significant content from the initial preprint. Sections 2.1-2.2 and 2.4 seem more suitable for the Methods section, while 2.3 and 2.4.1 are the only parts that present results. In addition, enhancing clarity through graphical diagrams, especially for the experimental design and computational models, would be quite beneficial. A reference point could be Fig. 1 and Fig. 5 from Jepma et al. (2018), which similarly explored RL and KF models.

Reviewer #2 (Public Review):

Summary:

The present study aims to investigate whether learning about temporal regularities of painful events, i.e., statistical learning, can influence pain perception. To this end, sequences of heat pain stimuli with fluctuating intensity are applied to 27 healthy human participants. The participants are asked to provide ratings of perceived as well as predicted pain intensity. Using an advanced modelling strategy, the results reveal that statistical expectations and confidence scale the judgment of pain in sequences of noxious stimuli as predicted by hierarchical Bayesian inference theory.

Strengths:

This is a highly interesting and novel finding with potential implications for the understanding and treatment of chronic pain where pain regulation is deficient. The paradigm is clear, the analysis is state-of-the-art, the results are convincing, and the interpretation is adequate.

Reviewer #3 (Public Review):

Summary:

I am pleased to have had the opportunity to review this manuscript, which investigated the role of statistical learning in the modulation of pain perception. In short, the study showed that statistical aspects of temperature sequences, with respect to specific manipulations of stochasticity (i.e., randomness of a sequence) and volatility (i.e., speed at which a sequence unfolded) influenced pain perception. Computational modelling of perceptual variables (i.e., multi-dimensional ratings of perceived or predicted stimuli) indicated that models of perception weighted by expectations were the best explanation for the data. My comments below are not intended to undermine or question the quality of this research. Rather, they are offered with the intention of enhancing what is already a significant contribution to the pain neuroscience field. Below, I highlight the strengths and weaknesses of the manuscript and offer suggestions for incorporating additional methodological details.

Strengths:

- The manuscript is articulate, coherent, and skilfully written, making it accessible and engaging.

- The innovative stimulation paradigm enables the exploration of expectancy effects on perception without depending on external cues, lending a unique angle to the research.

- By including participants' ratings of both perceptual aspects and their confidence in what they perceived or predicted, the study provides an additional layer of information to the understanding of perceptual decision-making. This information was thoughtfully incorporated into the modelling, enabling the investigation of how confidence influences learning.

- The computational modelling techniques utilised here are methodologically robust. I commend the authors for their attention to model and parameter recovery, a facet often neglected in previous computational neuroscience studies.

- The well-chosen citations not only reflect a clear grasp of the current research landscape but also contribute thoughtfully to ongoing discussions within the field of pain neuroscience.

Weaknesses:

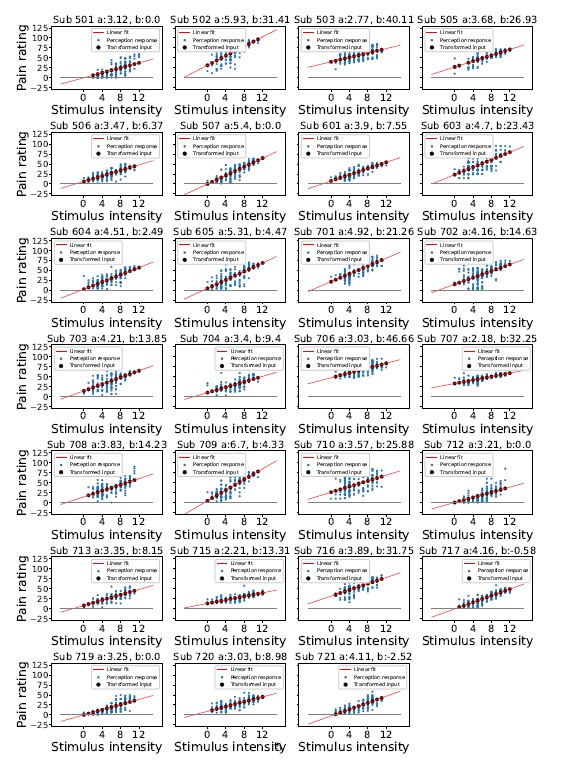

- In Figure 1, panel C, the authors illustrate the stimulation intensity, perceived intensity, and prediction intensity on the same scale, facilitating a more direct comparison. It appears that the stimulation intensity has been mathematically transformed to fit a scale from 0 to 100, aligning it with the intensity ratings corresponding to either past or future stimuli. Given that the pain threshold is specifically marked at 50 on this scale, one could logically infer that all ratings falling below this value should be deemed non-painful. However, I find myself uncertain about this interpretation, especially in relation to the term "arbitrary units" used in the figure. I would greatly appreciate clarification on how to accurately interpret these units, as well as an explanation of the relationship between these values and the definition of pain threshold in this experiment.

- The method of generating fluctuations in stimulation temperatures, along with the handling of perceptual uncertainty in modelling, requires further elucidation. The current models appear to presume that participants perceive each stimulus accurately, introducing noise only at the response stage. This assumption may fail to capture the inherent uncertainty in the perception of each stimulus intensity, especially when differences in consecutive temperatures are as minimal as 1°C.

- A key conclusion drawn is that eKF is a better model than eRL. However, a closer examination of the results reveals that the two models behave very similarly, and it is not clear that they can be readily distinguished based on model recovery and model comparison results.

Regarding model recovery, the distinction between the eKF and eRL models seems blurred. When the simulation is based on the eKF, there is no ability to distinguish whether either eKF or eRL is better. When the simulation is based on the eRL, the eRL appears to be the best model, but the difference with eKF is small. This raises a few more questions. What is the range of the parameters used for the simulations? Is it possible that either eRL or eKF are best when different parameters are simulated? Additionally, increasing the number of simulations to at least 100 could provide more convincing model recovery results.

Regarding model comparison, the authors reported that "the expectation-weighted KF model offered a better fit than the eRL, although in conditions of high stochasticity, this difference was short of significance against the eRL model." This interpretation is based on a significance test that hinges on the ratio between the ELPD and the surrounding standard error (SE). Unfortunately, there's no agreed-upon threshold of SEs that determines significance, but a general guideline is to consider "several SEs," with a higher number typically viewed as more robust. However, the text lacks clarity regarding the specific number of SEs applied in this test. At a cursory glance, it appears that the authors may have employed 2 SEs in their interpretation, while only depicting 1 SE in Figure 4.

- With respect to parameter recovery, a few additional details could be included for completeness. Specifically, while the range of the learning rate is understandably confined between 0 and 1, the range of other simulated parameters, particularly those without clear boundaries, remains ambiguous. Including scatter plots with the simulated parameters on the x-axis and the recovered parameters on the y-axis would effectively convey this missing information. Furthermore, it would be beneficial for the authors to clarify whether the same priors were used for both the modelling results presented in the main paper and the parameter recovery presented in the supplementary material.

- While the reliance on R-hat values for convergence in model fitting is standard, a more comprehensive assessment could include estimates of the effective sample size (bulk_ESS and/or tail_ESS) and the Estimated Bayesian Fraction of Missing Information (EBFMI), to show efficient sampling across the distribution. Consideration of divergences, if any, would further enhance the reliability of the results.

- The authors write: "Going beyond conditioning paradigms based in cuing of pain outcomes, our findings offer a more accurate description of endogenous pain regulation." Unfortunately, this statement isn't substantiated by the results. The authors did not engage in a direct comparison between conditioning and sequence-based paradigms. Moreover, even if such a comparison had been made, it remains unclear what would constitute the gold standard for quantifying "endogenous pain regulation."
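The model-comparison point raised above, that significance depends on how many standard errors the ELPD difference must exceed, can be illustrated with a minimal sketch. It assumes pointwise ELPD contributions are available for both models on the same observations; the function names and the toy numbers are hypothetical and are not taken from the manuscript. The standard error uses the sample standard deviation of the pointwise differences scaled by the square root of the number of observations, which is one common convention.

```python
import numpy as np

def elpd_difference(pointwise_a, pointwise_b):
    """Return the summed ELPD difference (A minus B) and its standard error."""
    a = np.asarray(pointwise_a, dtype=float)
    b = np.asarray(pointwise_b, dtype=float)
    if a.ndim != 1 or a.shape != b.shape:
        raise ValueError("expected two 1-D arrays over the same observations")
    diff = a - b                                   # pointwise differences
    elpd_diff = diff.sum()
    se_diff = np.sqrt(diff.size * diff.var(ddof=1))
    return elpd_diff, se_diff

def exceeds_threshold(elpd_diff, se_diff, n_se=2.0):
    """True if the absolute difference is larger than n_se standard errors."""
    return bool(abs(elpd_diff) > n_se * se_diff)

# Toy pointwise values (hypothetical): the verdict flips with the chosen threshold.
model_a = [-1.0, -2.0, -3.0, -4.0]
model_b = [-2.0, -2.0, -5.0, -4.0]
d, se = elpd_difference(model_a, model_b)
print(d, se, exceeds_threshold(d, se, 1.0), exceeds_threshold(d, se, 2.0))
```

In this toy case the difference clears a 1 SE criterion but not a 2 SE criterion, which is why reporting the number of SEs used, and plotting the same interval, matters for interpreting the comparison.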