Cochlear Synaptopathy: Unravelling hidden hearing loss

Age-related neural changes start to occur in the brain during middle age. For example, hearing loss can begin at around 40 years of age. However, many middle-aged adults who struggle to understand speech in noisy environments have clinically normal hearing tests. This 'hidden hearing loss' could be due to faults in the transmission of sound signals to the brain that are not detectable with conventional tests (Liberman, 2024).

Loud noises, typical aging processes or ototoxic drugs can damage the synapses between the inner hair cells of the cochlea – the sensory receptors in the inner ear – and the auditory nerve fibers that transmit sound information from the cochlea to the higher auditory processing stages in the brain (Kujawa and Liberman, 2009). This damage, known as cochlear synaptopathy, is usually permanent and can lead to delayed degeneration of the cochlear nerve. However, research in mice has shown that sound detection thresholds can return to normal a few days after injury, so the damage can escape threshold-based hearing tests. The precise impact of cochlear synaptopathy on human hearing, and how it might affect our ability to understand sounds such as speech in noisy environments, is still under debate (for a recent review, see Liu et al., 2024).

One reason for this debate is that cochlear synaptopathy can only be studied indirectly in humans, using non-invasive techniques (Bramhall et al., 2019). Since these techniques can only reveal correlations, efforts have been made to verify findings using cross-species studies and computational models (Bharadwaj et al., 2022; Encina-Llamas et al., 2019).

It is also challenging to evaluate the impact of cochlear synaptopathy on real-world speech perception. Some studies using simplified tasks, such as distinguishing digits or vowels, suggest a link between the loss or dysfunction of auditory nerve fibers and hearing difficulties. However, such tasks may oversimplify the complex nature of natural speech perception, potentially underestimating the role of the brain. Meanwhile, in more complex speech-in-noise tasks, commonly used indicators of cochlear synaptopathy often predict speech intelligibility scores less effectively than cognitive or attentional measures do (Kamerer et al., 2019; DiNino et al., 2022; Gómez-Álvarez et al., 2023). Now, in eLife, Aravindakshan Parthasarathy and colleagues – including Maggie Zink and Leslie Zhen as joint first authors – report new ways to overcome these obstacles (Zink et al., 2024).

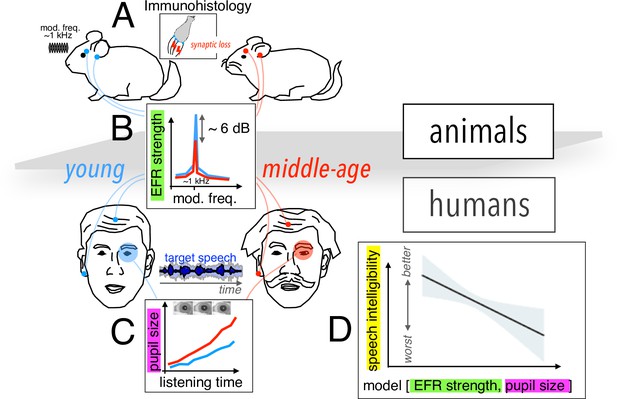

To tackle the first challenge, the team – which is based at the University of Pittsburgh and Virginia Polytechnic Institute and State University – employed a cross-species approach, studying young and middle-aged humans as well as gerbils, which have the same hearing frequency range as humans (Figure 1A). Using electrodes placed on the earlobe and on the top of the forehead, Zink et al. measured the envelope following response (EFR), which reflects the ability of neurons to track rapid changes in the stimulus envelope. This measurement, which can act as a proxy for cochlear synaptopathy, revealed a comparable decline in neuronal activity from the younger to the middle-aged groups in both species (Figure 1B).

Figure 1. Assessing cochlear synaptopathy in humans and rodents.

(A) Zink et al. used a cross-species approach to assess hidden hearing loss in humans and gerbils. Immunohistology in gerbils confirmed these animals suffered from cochlear synaptopathy. (B) In both humans and gerbils, the strength of the envelope following response (EFR) was lower in middle-aged (red) than in younger (blue) individuals. (C) Larger pupil size was found in middle-aged humans decoding speech in noise. (D) The magnitude of the electroencephalography (EEG)-based assessment of sound envelope encoding and pupil size were both significant predictors of speech intelligibility differences beyond hearing thresholds, and as such constitute the hidden dimensions of hearing deficits.

Further histological analyses in gerbils, which counted the average number of remaining auditory nerve fibers per inner hair cell, confirmed that these animals did indeed suffer from cochlear synaptopathy, highlighting a potential association between lower EFR magnitude and cochlear synaptopathy in humans. A computational auditory model showed that the size of the age-related EFR decrease observed by Zink et al. is consistent with the proportional loss of synapses for these groups measured in previous histological and analytical studies (Wu et al., 2019; Zilany et al., 2014).
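As a rough illustration of what "EFR magnitude" means, the sketch below estimates the spectral amplitude of a recording at the modulation frequency of the stimulus. It is not the analysis pipeline of Zink et al.: the function names, the sampling rate, the 110 Hz modulation frequency, the noise level and the two response amplitudes are all hypothetical values chosen for the example, and the "recordings" are synthetic sinusoids in noise.

```python
import numpy as np


def efr_magnitude(signal, fs, mod_freq):
    """Amplitude of `signal` at `mod_freq` (Hz), read from the nearest FFT bin."""
    n = len(signal)
    spectrum = np.fft.rfft(signal) / n            # one-sided spectrum, scaled by length
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)        # frequency of each bin in Hz
    idx = np.argmin(np.abs(freqs - mod_freq))     # bin closest to the modulation rate
    return 2.0 * np.abs(spectrum[idx])            # factor 2 recovers sinusoid amplitude


def simulate_response(amplitude, fs=8000, duration=1.0, mod_freq=110.0,
                      noise_sd=0.5, seed=0):
    """Synthetic 'recording': a sinusoid at mod_freq buried in Gaussian noise.

    All parameter values are assumptions for illustration only.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs * duration)) / fs
    tone = amplitude * np.sin(2 * np.pi * mod_freq * t)
    return tone + rng.normal(0.0, noise_sd, t.size)


fs = 8000
younger = simulate_response(amplitude=0.5, fs=fs, seed=1)   # hypothetical stronger response
older = simulate_response(amplitude=0.3, fs=fs, seed=2)     # hypothetical weaker response

efr_younger = efr_magnitude(younger, fs, mod_freq=110.0)
efr_older = efr_magnitude(older, fs, mod_freq=110.0)
print(f"EFR magnitude, younger: {efr_younger:.3f}")
print(f"EFR magnitude, older:   {efr_older:.3f}")
```

In this toy setting a weaker phase-locked component produces a smaller value at the modulation frequency, which is the sense in which a reduced EFR can serve as an indirect indicator of poorer envelope coding.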

To address the second challenge, Zink et al. assessed speech-in-noise perception using a custom-designed task of intermediate complexity, while simultaneously using pupillometry to monitor pupil size, which is thought to be a proxy for listening effort (i.e., the level of cognitive resources required by the brain to process the incoming neural signal; Figure 1C and D). Their results revealed an increased listening effort in middle-aged test subjects, even though intelligibility scores remained the same throughout the test. Crucially, a statistical model including predictors for both cochlear synaptopathy and listening effort showed that each factor contributed significantly to the speech-in-noise difficulties in the human cohort (Figure 1D).
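The idea of testing whether two predictors each explain part of an outcome can be sketched with an ordinary least-squares regression. This is only a generic illustration on synthetic data, not the statistical model used by Zink et al.: the variable names, the effect sizes (0.6 and -0.4), the sample size and the noise level are all assumptions made for the example.

```python
import numpy as np


def fit_linear_model(efr, pupil, score):
    """Ordinary least squares: score ~ intercept + efr + pupil.

    Returns the coefficients and their t-statistics.
    """
    X = np.column_stack([np.ones_like(efr), efr, pupil])   # design matrix
    coef, *_ = np.linalg.lstsq(X, score, rcond=None)       # least-squares fit
    residuals = score - X @ coef
    dof = len(score) - X.shape[1]                          # residual degrees of freedom
    sigma2 = residuals @ residuals / dof                   # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)                  # coefficient covariance
    t_stats = coef / np.sqrt(np.diag(cov))
    return coef, t_stats


# Synthetic, standardised data (hypothetical; not from the study)
rng = np.random.default_rng(1)
n = 200
efr = rng.normal(0.0, 1.0, n)       # stand-in for a neural proxy of synaptopathy
pupil = rng.normal(0.0, 1.0, n)     # stand-in for a listening-effort proxy
score = 0.6 * efr - 0.4 * pupil + rng.normal(0.0, 0.5, n)

coef, t_stats = fit_linear_model(efr, pupil, score)
for name, b, t in zip(["intercept", "efr", "pupil"], coef, t_stats):
    print(f"{name:>9}: coefficient = {b:+.3f}, t = {t:+.2f}")
```

When both slopes have large t-statistics, each predictor accounts for variance in the outcome that the other does not, which is the kind of evidence described above. As with any regression on observational data, such a result shows association rather than causation.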

In conclusion, while Zink et al. do not establish causation, their work represents a valuable step in understanding how early cochlear synaptopathy in middle-aged adults may contribute to speech-in-noise deficits. It also suggests that cognitive and attentional factors may play an even larger role than previously expected.

Yet, our understanding of how cochlear synaptopathy distorts neural coding and perception of sound in humans is still overly simplistic, and many questions remain unanswered. For example, little is known about how central processing stages of the brain are affected and how they might compensate for impaired peripheral sound transduction (Resnik and Polley, 2021). Moreover, it remains unclear which specific sound features are encoded less faithfully because of cochlear synaptopathy and ultimately lead to degraded perceptual capacities, as many behavioral studies in animals with drug-induced cochlear synaptopathy show no evidence of impairment (Henry et al., 2024).

Middle age is a significant period during which age-related changes start to occur in the brain, and studying these changes could shed light on conditions that emerge later in life, hearing loss being one of them. Further research is needed before a behavioral marker of cochlear synaptopathy becomes available in the clinic: a first step will be to refine current test proxies and to develop sensitive neural markers that could help identify the early onset of conditions such as dementia.

References

- Encina-Llamas et al. (2019) Investigating the effect of cochlear synaptopathy on envelope following responses using a model of the auditory nerve. Journal of the Association for Research in Otolaryngology 20:363–382. https://doi.org/10.1007/s10162-019-00721-7

- Kamerer et al. (2019) The role of cognition in common measures of peripheral synaptopathy and hidden hearing loss. American Journal of Audiology 28:843–856. https://doi.org/10.1044/2019_AJA-19-0063

- Kujawa and Liberman (2009) Adding insult to injury: cochlear nerve degeneration after “temporary” noise-induced hearing loss. The Journal of Neuroscience 29:14077–14085. https://doi.org/10.1523/JNEUROSCI.2845-09.2009

- Liberman (2024) Noise damage and hidden hearing loss: cochlear synaptopathy in animals and humans. In: Liberman MC, editors. Occupational Hearing Loss, Fourth Edition. CRC Press. pp. 248–270. https://doi.org/10.1201/b23379-11

- Liu et al. (2024) Hidden hearing loss: Fifteen years at a glance. Hearing Research 443:108967. https://doi.org/10.1016/j.heares.2024.108967

- Zilany et al. (2014) Updated parameters and expanded simulation options for a model of the auditory periphery. The Journal of the Acoustical Society of America 135:283–286. https://doi.org/10.1121/1.4837815

Copyright

© 2024, Ponsot

This article is distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use and redistribution provided that the original author and source are credited.

DOI: https://doi.org/10.7554/eLife.104936