Efficient sampling and noisy decisions

Abstract

Human decisions are based on finite information, which makes them inherently imprecise. But what determines the degree of such imprecision? Here, we develop an efficient coding framework for higher-level cognitive processes in which information is represented by a finite number of discrete samples. We characterize the sampling process that maximizes perceptual accuracy or fitness under the often-adopted assumption that full adaptation to an environmental distribution is possible, and show how the optimal process differs when detailed information about the current contextual distribution is costly. We tested this theory on a numerosity discrimination task, and found that humans efficiently adapt to contextual distributions, but in the way predicted by the model in which people must economize on environmental information. Thus, understanding decision behavior requires that we account for biological restrictions on information coding, challenging the often-adopted assumption of precise prior knowledge in higher-level decision systems.

Main text

'We rarely know the statistics of the messages completely, and our knowledge may change … what is redundant today was not necessarily redundant yesterday.' Barlow, 2001.

Introduction

It has been suggested that the rules guiding behavior are not arbitrary, but follow fundamental principles of acquiring information from environmental regularities in order to make the best decisions. Moreover, these principles should incorporate strategies of information coding in ways that minimize the costs of inaccurate decisions given biological constraints on information acquisition, an idea known as efficient coding (Attneave, 1954; Barlow, 1961; Niven and Laughlin, 2008; Sharpee et al., 2014). While early applications of efficient coding theory have primarily been to early stages of sensory processing (Laughlin, 1981; Ganguli and Simoncelli, 2014; Wei and Stocker, 2015), it is worth considering whether similar principles may also shape the structure of internal representations of higher-level concepts, such as the perceptions of value that underlie economic decision making (Louie and Glimcher, 2012; Polanía et al., 2019; Rustichini et al., 2017). In this work, we contribute to the efficient coding framework applied to cognition and behavior in several respects.

A first aspect concerns the range of possible internal representation schemes that should be considered feasible, which determines the way in which greater precision of discrimination in one part of the stimulus space requires less precision of discrimination elsewhere. Implementational architectures proposed in previous work assume a population coding scheme in which different neurons have distinct 'preferred' stimuli (Ganguli and Simoncelli, 2014; Wei and Stocker, 2015). While this is clearly relevant for some kinds of low-level sensory features such as orientation, it is not obvious that this kind of internal representation is used in representing higher-level concepts such as economic values. We instead develop an efficient coding theory for a case in which an extensive magnitude (something that can be described by a larger or smaller number) is represented by a set of processing units that 'vote' in favor of the magnitude being larger rather than smaller. The internal representation therefore necessarily consists of a finite collection of binary signals.

Our restriction to representations made up of binary signals is in conformity with the observation that neural systems at many levels appear to transmit information via discrete stochastic events (Schreiber et al., 2002; Sharpee, 2017). Moreover, cognitive models with this general structure have been argued to be relevant for higher-order decision problems such as value-based choice. For example, it has been suggested that the perceived values of choice options are constructed by acquiring samples of evidence from memory regarding the emotions evoked by the presented items (Shadlen and Shohamy, 2016). Related accounts suggest that when a choice must be made between alternative options, information is acquired via discrete samples of information that can be represented as binary responses (e.g., 'yes/no' responses to queries) (Norman, 1968; Weber and Johnson, 2009). The seminal decision by sampling (DbS) theory (Stewart et al., 2006) similarly posits an internal representation of magnitudes relevant to a decision problem by tallies of the outcomes of a set of binary comparisons between the current magnitude and alternative values sampled from memory. The architecture that we assume for imprecise internal representations has the general structure of proposals of these kinds; but we go beyond the above-mentioned investigations, in analyzing what an efficient coding scheme consistent with our general architecture would be like.

A second aspect concerns the objective for which the encoding system is assumed to be optimized. Information maximization theories (Laughlin, 1981; Ganguli and Simoncelli, 2014; Wei and Stocker, 2015) assume that the objective should be maximal mutual information between the true stimulus magnitude and the internal representation. While this may be a reasonable assumption in the case of early sensory processing, it is less obvious in the case of circuits involved more directly in decision making, and in the latter case an obvious alternative is to ask what kind of encoding scheme will best serve to allow accurate decisions to be made. In the theory that we develop here, our primary concern is with encoding schemes that maximize a subject's probability of giving a correct response to a binary decision. However, we compare the coding rule that would be optimal from this standpoint to one that would maximize mutual information, or to one that would maximize the expected value of the chosen item.

Third, we extend our theory of efficient coding to consider not merely the nature of an efficient coding system for a single environmental frequency distribution assumed to be permanently relevant — so that there has been ample time for the encoding rule to be optimally adapted to that distribution of stimulus magnitudes — but also an efficient approach to adjusting the encoding as the environmental frequency distribution changes. Prior discussions of efficient coding have often considered the optimal choice of an encoding rule for a single environmental frequency distribution that is assumed to represent a permanent feature of the natural environment (Laughlin, 1981; Ganguli and Simoncelli, 2014). Such an approach may make sense for a theory of neural coding in cortical regions involved in early-stage processing of sensory stimuli, but is less obviously appropriate for a theory of the processing of higher-level concepts such as economic value, where the idea that there is a single permanently relevant frequency distribution of magnitudes that may be encountered is doubtful.

A key goal of our work is to test the relevance of these different possible models of efficient coding in the case of numerosity discrimination. Judgments of the comparative numerosity of two visual displays provide a test case of particular interest given our objectives. On the one hand, a long literature has argued that imprecision in numerosity judgments has a similar structure to psychophysical phenomena in many low-level sensory domains (Nieder and Dehaene, 2009; Nieder and Miller, 2003). This makes it reasonable to ask whether efficient coding principles may also be relevant in this domain. At the same time, numerosity is plainly a more abstract feature of visual arrays than low-level properties such as local luminosity, contrast, or orientation, and therefore can be computed only at a later stage of processing. Moreover, processing of numerical magnitudes is a crucial element of many higher-level cognitive processes, such as economic decision making; and it is arguable that many rapid or intuitive judgments about numerical quantities, even when numbers are presented symbolically, are based on an 'approximate number system' of the same kind as is used in judgments of the numerosity of visual displays (Piazza et al., 2007; Nieder and Dehaene, 2009). It has further been argued that imprecision in the internal representation of numerical magnitudes may underlie imprecision and biases in economic decisions (Khaw et al., 2020; Woodford, 2020).

It is well known that the precision of discrimination between nearby numbers of items decreases in the case of larger numerosities, in approximately the way predicted by Weber's Law, and this is often argued to support a model of imprecise coding based on a logarithmic transformation of the true number (Nieder and Dehaene, 2009; Nieder and Miller, 2003). However, while the precision of internal representations of numerical magnitudes is arguably of great evolutionary relevance (Butterworth et al., 2018; Nieder, 2020), it is unclear why a specifically logarithmic transformation of number information should be of adaptive value, and also whether the same transformation is used independent of context (Pardo-Vazquez et al., 2019; Brus et al., 2019). Here, we report new experimental data on numerosity discrimination by human participants, where we find that our data are most consistent with an efficient coding theory for which the performance measure is the frequency of correct comparative judgments, and where people economize on the costs of learning about the statistics of the environment.

Results

A general efficient sampling framework

We consider a situation in which the objective magnitude of a stimulus with respect to some feature can be represented by a quantity $v$. When the stimulus is presented to an observer, it gives rise to an imprecise representation $r$ in the nervous system, on the basis of which the observer produces any required response. The internal representation $r$ can be stochastic, with given values being produced with conditional probabilities $p(r \mid v)$ that depend on the true magnitude. Here, we are more specifically concerned with discrimination experiments, in which two stimulus magnitudes $v_1$ and $v_2$ are presented, and the subject must choose which of the two is greater. We suppose that each magnitude $v_i$ has an internal representation $r_i$, drawn independently from a distribution $p(r_i \mid v_i)$ that depends only on the true magnitude of that individual stimulus. The observer's choice must be based on a comparison of $r_1$ with $r_2$.

One way in which the cognitive resources recruited to make accurate discriminations may be limited is in the variety of distinct internal representations that are possible. When the complexity of feasible internal representations is limited, there will necessarily be errors in the identification of the greater stimulus magnitude in some cases, even assuming an optimal decoding rule for choosing the larger stimulus on the basis of $r_1$ and $r_2$. One can then consider alternative encoding rules for mapping objective stimulus magnitudes to feasible internal representations, and ask which of them is most efficient. The answer to this efficient coding problem generally depends on the prior distribution $f(v)$ from which the different stimulus magnitudes $v_i$ are drawn. The resources required for more precise internal representations of individual stimuli may be economized with respect to either or both of two distinct cognitive costs. The first goal of this work is to distinguish between these two types of efficiency concerns.

One question that we can ask is whether the observed behavioral responses are consistent with the hypothesis that the conditional probabilities $p(r \mid v)$ are well adapted to the particular frequency distribution of stimuli used in the experiment, suggesting an efficient allocation of the limited neural resources available for encoding. The assumption of full adaptation is typically adopted in efficient coding formulations of early sensory systems (Laughlin, 1981; Wei and Stocker, 2017), and also more recently in applications of efficient coding theories in value-based decisions (Louie and Glimcher, 2012; Polanía et al., 2019; Rustichini et al., 2017).

There is also a second cost in which it may be important to economize on cognitive resources. An efficient coding scheme in the sense described above economizes on the resources used to represent each individual new stimulus that is encountered; however, the encoding and decoding rules are assumed to be precisely optimized for the specific distribution of stimuli that characterizes the experimental situation. In practice, it will be necessary for a decision maker to learn about this distribution in order to encode and decode individual stimuli in an efficient way, on the basis of experience with a given context. In this case, the relevant design problem should not be conceived as choosing conditional probabilities $p(r \mid v)$ once and for all, with knowledge of the prior distribution $f(v)$ from which $v$ will be drawn. Instead, it should be to choose a rule that specifies how the probabilities $p(r \mid v)$ should adapt to the distribution of stimuli that have been encountered in a given context. It then becomes possible to consider how well a given learning rule economizes on the degree of information about the distribution of magnitudes associated with one's current context that is required for a given level of average performance across contexts. This issue is important not only to reduce the cognitive resources required to implement the rule in a given context (by not having to store or access so detailed a description of the prior distribution), but also to allow faster adaptation to a new context when the statistics of the environment can change unpredictably (Młynarski and Hermundstad, 2019).

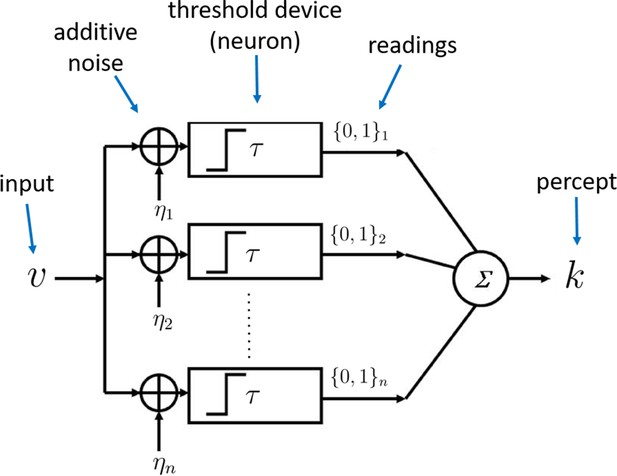

Coding architecture

We now make the contrast between these two types of efficiency more concrete by considering a specific architecture for internal representations of sensory magnitudes. We suppose that the representation of a given stimulus will consist of the output of a finite collection of $n$ processing units, each of which has only two possible output states ('high' or 'low' readings), as in the case of a simple perceptron. The probability that each of the units will be in one output state or the other can depend on the stimulus that is presented. We further restrict the complexity of feasible encoding rules by supposing that the probability of a given unit being in the 'high' state must be given by some function $\theta(v)$ that is the same for each of the individual units, rather than allowing the different units to coordinate in jointly representing the situation in some more complex way. We argue that the existence of multiple units operating in parallel effectively allows multiple repetitions of the same 'experiment', but does not increase the complexity of the kind of test that can be performed. Note that we do not assume any unavoidable degree of stochasticity in the functioning of the individual units; it turns out that in our theory, it will be efficient for the units to be stochastic, but we do not assume that precise, deterministic functioning would be infeasible. Our resource limits are instead on the number of available units, the degree of differentiation of their output states, and the degree to which it is possible to differentiate the roles of distinct units.

Given such a mechanism, the internal representation of the magnitude of an individual stimulus $v$ will be given by the collection of output states of the $n$ processing units. A specification of the function $\theta(v)$ then implies conditional probabilities for each of the $2^n$ possible representations. Given our assumption of a symmetrical and parallel process, the number $k$ of units in the 'high' state will be a sufficient statistic, containing all of the information about the true magnitude that can be extracted from the internal representation. An optimal decoding rule will therefore be a function only of $k$, and we can equivalently treat $k$ (an integer between 0 and $n$) as the internal representation of the quantity $v$. The conditional probabilities of different internal representations are then binomial:

$$p(k \mid v) = \binom{n}{k}\, \theta(v)^{k}\, \bigl(1 - \theta(v)\bigr)^{n-k}. \tag{1}$$

The efficient coding problem for a given environment, specified by a particular prior distribution $f(v)$, will be to choose the encoding rule $\theta(v)$ so as to allow an overall distribution of responses across trials that will be as accurate as possible (according to criteria that we will elaborate further below). We can further suppose that each of the individual processing units is a threshold unit that produces a 'high' reading if and only if the value $v - \eta_i$ exceeds some threshold $\tau$, where $\eta_i$ is a random term drawn independently on each trial from some distribution $f_\eta$ (Figure 1). The encoding function $\theta(v)$ can then be implemented by choosing an appropriate distribution $f_\eta$. This implementation requires that $\theta(v)$ be a non-decreasing function, as we shall assume.

Figure 1. Architecture of the sampling mechanism.

Each processing unit receives noisy versions of the input $v$, where the noisy signals $\eta_i$ are i.i.d. additive random signals independent of $v$. The output of the neuron for each sample is a 'high' (one) reading if $v - \eta_i > \tau$ and zero otherwise. The noisy percept of the input is simply the sum of the outputs of each sample, given by $k$.
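The sampling mechanism described in this caption can be illustrated with a short simulation. This is only a sketch under stated assumptions: the logistic noise distribution, the zero threshold, and all function names are hypothetical choices made for illustration and are not taken from the paper. With logistic noise, the probability of a 'high' reading is the logistic function of the input, so the mean count of 'high' units should be close to $n$ times that probability.

```python
import math
import random


def logistic_noise(rng):
    """Draw one noise term from a standard logistic distribution (illustrative choice)."""
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep the log finite
    return math.log(u / (1.0 - u))


def theta_logistic(v, tau=0.0):
    """Probability that a unit reads 'high' under logistic noise: P(v - eta > tau)."""
    return 1.0 / (1.0 + math.exp(-(v - tau)))


def sample_percept(v, n, noise_sampler, rng, tau=0.0):
    """Return k, the number of the n threshold units that read 'high' for input v."""
    return sum(1 for _ in range(n) if v - noise_sampler(rng) > tau)


rng = random.Random(0)
n, v, trials = 20, 0.5, 20000
mean_k = sum(sample_percept(v, n, logistic_noise, rng) for _ in range(trials)) / trials
expected_k = n * theta_logistic(v)  # mean of a binomial with n units
print(f"simulated mean k = {mean_k:.3f}, binomial mean = {expected_k:.3f}")
```

Because every unit uses the same noise distribution and draws independently, the count of 'high' units is binomially distributed, which is what the comparison above checks.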

Limited cognitive resources

One measure of the cognitive resources required by such a system is the number $n$ of processing units that must produce an output each time an individual stimulus is evaluated. We can consider the optimal choice of $f_\eta$ in order to maximize, for instance, average accuracy of responses in a given environment $f(v)$, in the case of any bound $n$ on the number of units that can be used to represent each stimulus. But we can also consider the amount of information about the distribution $f(v)$ that must be used in order to decide how to encode a given stimulus $v$. If the system is to be able to adapt to changing environments, it must determine the value of $\theta$ (the probability of a 'high' reading) as a function of both the current $v$ and information about the distribution $f$, in a way that must now be understood to apply across different potential contexts. This raises the issue of how precisely the distribution $f$ associated with the current context is represented for purposes of such a calculation. A more precise representation of the prior (allowing greater sensitivity to fine differences in priors) will presumably entail a greater resource cost or very long adaptation periods.

We can quantify the precision with which the prior $f$ is represented by supposing that it is represented by a finite sample of $m$ independent draws $\tilde{v}_1, \ldots, \tilde{v}_m$ from the prior (or more precisely, from the set of previously experienced values, an empirical distribution that should after sufficient experience provide a good approximation to the true distribution). We further assume that an independent sample of $m$ previously experienced values is used by each of the $n$ processing units (Figure 1). Each of the individual processing units is then in the 'high' state with probability $\theta(v; \tilde{v}_1, \ldots, \tilde{v}_m)$. The complete internal representation of the stimulus $v$ is then the collection of $n$ independent realizations of this binary-valued random variable. We may suppose that the resource cost of an internal representation of this kind is an increasing function of both $n$ and $m$.

This allows us to consider an efficient coding meta-problem in which, for any given values $(n, m)$, the encoding function $\theta$ is chosen so as to maximize some measure of average perceptual accuracy, where the average is now taken not only over the entire distribution of possible $v$ occurring under a given prior $f(v)$ but over some range of different possible priors for which the adaptive coding scheme is to be optimized. We wish to consider how each of the two types of resource constraint (a finite bound on $n$ as opposed to a finite bound on $m$) affects the nature of the predicted imprecision in internal representations, under the assumption of a coding scheme that is efficient in this generalized sense, and then ask whether we can tell in practice how tight each of the resource constraints appears to be.

Efficient sampling for a known prior distribution

We first consider efficient coding in the case that there is no relevant constraint on the size of $m$, while $n$ instead is bounded. In this case, we can assume that each time an individual stimulus $v$ must be encoded, a large enough sample of prior values is used to allow accurate recognition of the distribution $f(v)$, and the problem reduces to a choice of a function $\theta(v)$ that is optimal for each possible prior $f(v)$.

Maximizing mutual information

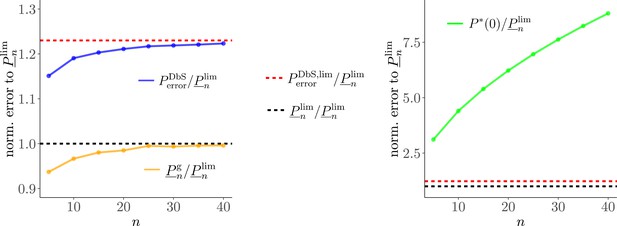

The nature of the resource-constrained problem to be optimized depends on the performance measure that we use to determine the usefulness of a given encoding scheme. A common assumption in the literature on efficient coding has been that the encoding scheme maximizes the mutual information between the true stimulus magnitude and its internal representation (Ganguli and Simoncelli, 2014; Polanía et al., 2019; Wei and Stocker, 2015). We start by characterizing the optimal $\theta(v)$ for a given prior distribution $f(v)$, according to this criterion. It can be shown that for large $n$, the mutual information between $\theta$ and $k$ (hence the mutual information between $v$ and $k$) is maximized if the prior distribution over $\theta$ is Jeffreys' prior (Clarke and Barron, 1994)

$$p(\theta) = \frac{1}{\pi \sqrt{\theta (1 - \theta)}}, \tag{2}$$

also known as the arcsine distribution. Hence, the mapping $\theta(v)$ induces a prior distribution over $\theta$ given by the arcsine distribution (Figure 2a, right panel). Based on this result, it can be shown that the optimal encoding rule $\theta(v)$ that guarantees maximization of mutual information between the random variable $v$ and the noisy encoded percept is given by (see Appendix 1)

$$\theta(v) = \sin^{2}\!\left(\frac{\pi}{2} F(v)\right), \tag{3}$$

where $F(v)$ is the CDF of the prior distribution $f(v)$.
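As a numerical illustration, and assuming the encoding rule takes the form $\theta(v) = \sin^2\bigl(\tfrac{\pi}{2}F(v)\bigr)$ with $F$ the prior CDF, the sketch below checks that stimuli drawn from the prior are mapped to values of $\theta$ that follow the arcsine distribution, whose CDF is $\tfrac{2}{\pi}\arcsin\sqrt{\theta}$. The exponential prior and all function names are hypothetical choices made only for this example.

```python
import math
import random


def prior_cdf(v, rate=1.0):
    """CDF of an exponential prior (an illustrative choice, not the paper's prior)."""
    return 1.0 - math.exp(-rate * v) if v > 0 else 0.0


def theta_infomax(v, cdf=prior_cdf):
    """Encoding rule theta(v) = sin^2(pi/2 * F(v)), assumed form of the optimal rule."""
    return math.sin(0.5 * math.pi * cdf(v)) ** 2


def arcsine_cdf(x):
    """CDF of the arcsine distribution on [0, 1]."""
    return (2.0 / math.pi) * math.asin(math.sqrt(x))


rng = random.Random(1)
thetas = [theta_infomax(rng.expovariate(1.0)) for _ in range(50000)]

# Compare the empirical CDF of theta with the arcsine CDF at a few points.
checkpoints = (0.1, 0.5, 0.9)
empirical = {x: sum(t <= x for t in thetas) / len(thetas) for x in checkpoints}
for x in checkpoints:
    print(f"theta <= {x}: empirical {empirical[x]:.3f}, arcsine {arcsine_cdf(x):.3f}")
```

The same check should work for any continuous prior, because $F(v)$ is uniformly distributed when $v$ is drawn from the prior.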

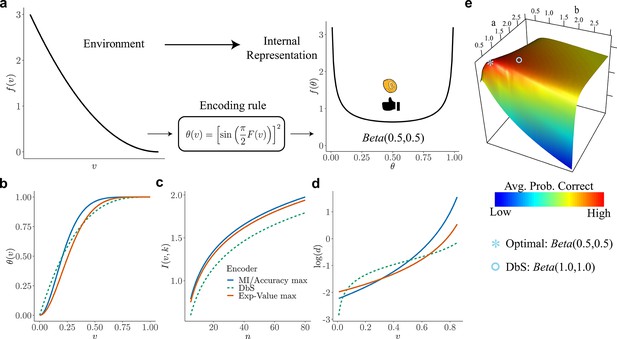

Figure 2. Overview of our theory and differences in encoding rules.

(a) Schematic representation of our theory. Left: example prior distribution $f(v)$ of values $v$ encountered in the environment. Right: Prior distribution in the encoder space (Equation 2) due to optimal encoding (Equation 3). This optimal mapping determines the probability $\theta$ of generating a 'high' or 'low' reading. The ex-ante distribution over $\theta$ that guarantees maximization of mutual information is given by the arcsine distribution (Equation 2). (b) Encoding rules $\theta(v)$ for different decision strategies under binary sampling coding: accuracy maximization (blue), reward maximization (red), DbS (green dashed). (c) Mutual information for the different encoding rules as a function of the number of samples $n$. As expected, mutual information increases with $n$; however, the rule that results in the highest loss of information is DbS. (d) Discriminability thresholds (log-scaled for better visualization) for the different encoding rules as a function of the input values $v$ for the prior given in panel a. (e) Graphical representation of the perceptual accuracy optimization landscape. We plot the average probability of correct responses for the large-$n$ limit using as benchmark a Beta distribution with two shape parameters. The blue star shows the average error probability assuming that $p(\theta)$ is the arcsine distribution (Equation 2), which is the optimal solution when the prior distribution is known. The blue open circle shows the average error probability based on the encoding rule assumed in DbS, which is located near the optimal solution. Please note that when formally solving this optimization problem, we did not assume a priori that the solution is related to the beta distribution. We use the beta distribution in this figure just as a benchmark for visualization. Detailed comparison of performance for finite samples is presented in Appendix 7.

Accuracy maximization for a known prior distribution

So far, we have derived the optimal encoding rule to maximize mutual information. However, one may ask what the implications of such a theory are for discrimination performance. This is important to investigate given that achieving channel capacity does not necessarily imply that the goals of the organism are also optimized (Park and Pillow, 2017). Independent of information maximization assumptions, here we start from scratch and investigate the necessary conditions for minimizing discrimination errors given the resource-constrained problem considered here. We solve this problem for the case of two-alternative forced-choice tasks, where the average probability of error is given by (see Appendix 2)

$$\mathbb{E}[\text{error}] = \iint P_{\text{error}}(\theta_1, \theta_2)\, p(\theta_1)\, p(\theta_2)\, d\theta_1\, d\theta_2, \tag{4}$$

where $\theta_i = \theta(v_i)$ and $P_{\text{error}}(\theta_1, \theta_2)$ represents the probability of erroneously choosing the alternative with the lowest value $v$ given a noisy percept $k$ (assuming that the goal of the organism in any given trial is to choose the alternative with the highest value). Here, we want to find the density function $p(\theta)$ that guarantees the smallest average error (Equation 4). The solution to this problem is (Appendix 2)

$$p(\theta) = \frac{1}{\pi \sqrt{\theta (1 - \theta)}}, \tag{5}$$

which is exactly the same prior density function over $\theta$ that maximizes mutual information (Equation 2). Crucially, please note that we have obtained this expression based on minimizing the frequency of erroneous choices and not the maximization of mutual information as a goal in itself. This provides a further (and normative) justification for why maximizing mutual information under this coding scheme is beneficial when the goal of the agent is to minimize discrimination errors (i.e., maximize accuracy).

Optimal noise for a known prior distribution

Based on the coding architecture presented in Figure 1, the optimal encoding function $\theta(v)$ can then be implemented by choice of an appropriate distribution $f_\eta$. It can be shown that discrimination performance can be optimized by finding the optimal noise distribution $f_\eta$ (Appendix 3) (McDonnell et al., 2007), which, absorbing the threshold into the location of the noise distribution, is

$$f_\eta(v) = \frac{\pi}{2} \sin\bigl(\pi F(v)\bigr)\, f(v). \tag{6}$$

Remarkably, this result is independent of the number of samples $n$ available to encode the input variable, and generalizes to any prior distribution $f$ (recall that $F$ is defined as its cumulative distribution function).
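The link between the noise distribution and the encoding rule can be checked numerically. The sketch below is an illustration under explicit assumptions: the threshold is set to zero, the encoding rule is taken to be $\theta(v) = \sin^2\bigl(\tfrac{\pi}{2}F(v)\bigr)$, the noise density is taken to be its derivative $\tfrac{\pi}{2}\sin(\pi F(v))f(v)$, and the exponential prior and function names are hypothetical. Noise terms are drawn by inverting the noise CDF, and the fraction of units reading 'high' is compared with $\theta(v)$.

```python
import math
import random

RATE = 1.0  # hypothetical exponential prior f(v) = RATE * exp(-RATE * v)


def prior_pdf(v):
    return RATE * math.exp(-RATE * v) if v >= 0 else 0.0


def prior_cdf(v):
    return 1.0 - math.exp(-RATE * v) if v >= 0 else 0.0


def prior_inverse_cdf(p):
    return -math.log(1.0 - p) / RATE


def theta(v):
    """Assumed encoding rule; it equals the noise CDF when the threshold is zero."""
    return math.sin(0.5 * math.pi * prior_cdf(v)) ** 2


def noise_pdf(x):
    """Assumed noise density: the derivative of theta with respect to its argument."""
    return 0.5 * math.pi * math.sin(math.pi * prior_cdf(x)) * prior_pdf(x)


def sample_noise(rng):
    """Inverse-CDF sampling: solve sin^2(pi/2 * F(eta)) = u for eta."""
    u = rng.random()
    p = (2.0 / math.pi) * math.asin(math.sqrt(u))
    return prior_inverse_cdf(min(p, 1.0 - 1e-15))  # guard against log(0)


rng = random.Random(3)
v, draws = 0.7, 50000
fraction_high = sum(v - sample_noise(rng) > 0.0 for _ in range(draws)) / draws

# Finite-difference check that noise_pdf is the slope of theta.
h = 1e-5
slope = (theta(v + h) - theta(v - h)) / (2.0 * h)
print(f"fraction high = {fraction_high:.3f}, theta(v) = {theta(v):.3f}")
print(f"d theta / dv = {slope:.5f}, noise pdf = {noise_pdf(v):.5f}")
```

Note that the number of units never enters the sampler, which is consistent with the statement that the noise distribution does not depend on the number of samples.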

This result reveals three important aspects of neural function and decision behavior. First, it makes explicit why a system that evolved to code information using a coding scheme of the kind assumed in our framework must necessarily be noisy. That is, we do not attribute the randomness of people's responses to a particular set of stimuli or decision problem to unavoidable randomness of the hardware used to process the information. Instead, the relevant constraints are assumed to be the limited set of output states for each neuron, the limited number of neurons, and the requirement that the neurons operate in parallel (so that each one's output state must be statistically independent of the others, conditional on the input stimulus). Given these constraints, we show that it is efficient for the operation of the neurons to be random. Second, it shows how the nervous system may take advantage of these noisy properties by reshaping its noise structure to optimize decision behavior. Third, it shows that the noise structure can remain unchanged irrespective of the amount of resources available to guide behavior (i.e., the noise distribution does not depend on $n$, Equation 6). Please note, however, that this minimalistic implementation does not directly imply that the samples in our algorithmic formulation are necessarily drawn in this way. We believe that this implementation provides a simple demonstration of the consequences of limited resources in systems that encode information based on discrete stochastic events (Sharpee, 2017). Interestingly, it has been shown that this minimalistic formulation can be extended to more realistic population coding specifications (Nikitin et al., 2009).

Efficient coding and the relation between environmental priors and discrimination

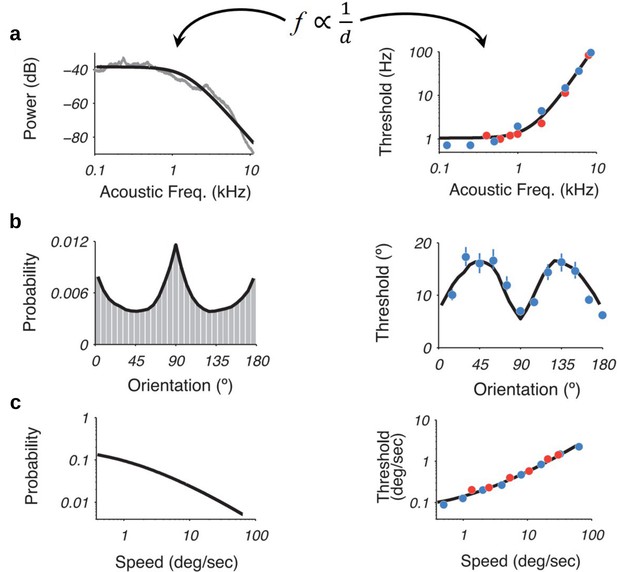

The results presented above imply that this encoding framework imposes limitations on the ability of capacity-limited systems to discriminate between different values of the encoded variables. Moreover, we have shown that error minimization in discrimination tasks implies a particular shape of the prior distribution of the encoder (Equation 5) that is exactly the prior density that maximizes mutual information between the input and the encoded noisy readings (Equation 2, Figure 2a right panel). Does this imply a relation between prior and discriminability over the space of the encoded variable? Intuitively, following the efficient coding hypothesis, the relation should be that lower discrimination thresholds should occur for ranges of stimuli that occur more frequently in the environment or context.

Recently, it was shown that using an efficiency principle for encoding sensory variables (e.g., with a heterogeneous population of noisy neurons) it is possible to obtain an explicit relationship between the statistical properties of the environment and perceptual discriminability (Ganguli and Simoncelli, 2016). The theoretical relation states that discriminability thresholds should be inversely proportional to the density of the prior distribution $f(v)$. Here, we investigated whether this particular relation also emerges in the efficient coding scheme that we propose in this study.

Remarkably, we obtain the following relation between the discriminability threshold $d$, the prior distribution of input variables $f(v)$, and the limited number of samples $n$ (Appendix 4):

$$d \propto \frac{1}{\sqrt{n}\, f(v)}. \tag{7}$$

Interestingly, this relationship between prior distribution and discriminability thresholds holds empirically across several sensory modalities (Appendix 4), thus once again demonstrating that the efficient coding framework that we propose here seems to incorporate the right kind of constraints to explain observed perceptual phenomena as consequences of optimal allocation of finite capacity for internal representations.
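One way to see why thresholds should scale inversely with the prior density is a Fisher information argument. The following is our own hedged sketch rather than the derivation of Appendix 4, and it rests on three assumptions: the percept $k$ is binomial with $n$ units and success probability $\theta(v)$, the encoding rule is $\theta(v) = \sin^2\bigl(\tfrac{\pi}{2}F(v)\bigr)$, and thresholds $d$ scale as the inverse square root of the Fisher information $J(v)$ (a standard large-$n$ approximation).

$$
\begin{aligned}
J(v) &= \frac{n\,\theta'(v)^{2}}{\theta(v)\,\bigl(1-\theta(v)\bigr)}, \\
\theta'(v) &= \frac{\pi}{2}\sin\bigl(\pi F(v)\bigr)\, f(v), \qquad
\theta(v)\,\bigl(1-\theta(v)\bigr) = \frac{1}{4}\sin^{2}\bigl(\pi F(v)\bigr), \\
J(v) &= n\,\pi^{2} f(v)^{2}
\quad\Longrightarrow\quad
d \propto \frac{1}{\sqrt{J(v)}} = \frac{1}{\pi\sqrt{n}\, f(v)}.
\end{aligned}
$$

Under these assumptions the $\sin^2(\pi F(v))$ factors cancel exactly, so the threshold depends on the stimulus only through the local prior density and shrinks with the square root of the number of units.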

Maximizing the expected size of the selected option (fitness maximization)

Until now, we have studied the case when the goal of the organism is to minimize the number of mistakes in discrimination tasks. However, it is important to consider the case when the goal of the organism is to maximize fitness or expected reward (Pirrone et al., 2014). For example, when spending the day foraging fruit, one must make successive decisions about which tree has more fruits. Fitness depends on the number of fruit collected which is not a linear function of the number of accurate decisions, as each choice yields a different amount of fruit.

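Therefore, in the case of reward maximization, we are interested in minimizing reward loss, which is given by the following expression

$$\mathbb{E}[\text{loss}] = \iint \sum_{i \in \{1, 2\}} P(i \mid v_1, v_2)\, \bigl(\max(v_1, v_2) - v_i\bigr)\, f(v_1)\, f(v_2)\, dv_1\, dv_2, \tag{8}$$

where $P(i \mid v_1, v_2)$ is the probability of choosing option $i$ when the input values are $v_1$ and $v_2$. Thus, the goal is to find the encoding rule $\theta(v)$ which guarantees that the amount of reward loss is as small as possible given our proposed coding framework.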

Here we show that the optimal encoding rule $\theta(v)$ that guarantees maximization of expected value is given by

$$\theta(v) = \sin^{2}\!\left(\frac{\pi}{2}\, c \int_{-\infty}^{v} f(\tilde{v})^{2/3}\, d\tilde{v}\right), \tag{9}$$

where $c$ is a normalizing constant which guarantees that the expression within the integral is a probability density function (Appendix 5). The first observation based on this result is that the encoding rule for maximizing fitness is different from the encoding rule that maximizes accuracy (compare Equations 3 and 9), which leads to a slight loss of information transmission (Figure 2c). Additionally, one can also obtain discriminability threshold predictions for this new encoding rule. Assuming a right-skewed prior distribution, which is often the case for various natural priors in the environment (e.g., like the one shown in Figure 2a), we find that discriminability for small input values is lower for reward maximization than for accuracy maximization; however, this pattern inverts for higher values (Figure 2d). In other words, when we intend to maximize reward (given the shape of our assumed prior, Figure 2a), the agent should allocate more resources to higher values (compared to the perceptual case), without however completely giving up sensitivity for lower values, as these values are still encountered more often.
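To make the contrast between the two rules concrete, the sketch below evaluates both on a grid. It assumes, as our reading of the result above, that the accuracy-maximizing rule integrates the prior density itself while the reward-maximizing rule integrates the density raised to the power 2/3, in both cases normalized and passed through $\sin^2\bigl(\tfrac{\pi}{2}\,\cdot\bigr)$. The exponential prior, the grid, and the helper names are hypothetical.

```python
import math


def cumulative_trapezoid(ys, xs):
    """Running trapezoidal integral of ys over xs, starting at zero."""
    out = [0.0]
    for i in range(1, len(xs)):
        out.append(out[-1] + 0.5 * (ys[i] + ys[i - 1]) * (xs[i] - xs[i - 1]))
    return out


def encoding_rule(grid, density, power):
    """theta(v) = sin^2(pi/2 * c * integral of density**power up to v), on a grid."""
    weights = [d ** power for d in density]
    cumulative = cumulative_trapezoid(weights, grid)
    total = cumulative[-1]  # plays the role of 1 / c
    return [math.sin(0.5 * math.pi * c / total) ** 2 for c in cumulative]


# Hypothetical right-skewed prior: exponential with rate 1 on [0, 40].
grid = [0.01 * i for i in range(4001)]
density = [math.exp(-v) for v in grid]

theta_accuracy = encoding_rule(grid, density, 1.0)
theta_reward = encoding_rule(grid, density, 2.0 / 3.0)

i = 100  # grid point v = 1.0
print(f"v = {grid[i]:.2f}: accuracy rule {theta_accuracy[i]:.4f}, "
      f"reward rule {theta_reward[i]:.4f}")
```

For this prior the reward rule rises more slowly at small values, which leaves more of the range of $\theta$ available for discriminating among larger values.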

Efficient sampling with costs on acquiring prior knowledge

In the previous section, we obtained analytical solutions that approximately characterize the optimal $\theta(v)$ in the limit as $n$ is made sufficiently large. Note however that we are always assuming that $n$ is finite, and that this constrains the accuracy of the decision maker's judgments, while $m$ is instead unbounded and hence no constraint.

The nature of the optimal function is different, however, when is small. We argue that this scenario is particularly relevant when full knowledge of the prior is not warranted given the costs vs benefits of learning, for instance, when the system expects contextual changes to occur often. In this case, as we will formally elaborate below, it ceases to be efficient for θ to vary only gradually as a function of , rather than moving abruptly from values near zero to values near one (Appendix 6). In the large- limiting case, the distributions of sample values used by the different processing units will be nearly the same for each unit (approximating the current true distribution ). Then if θ were to take only the values zero and one for different values of its arguments, the units would simply produce copies of the same output (either zero or one) for any given stimulus and distribution . Hence only a very coarse degree of differentiation among different stimulus magnitudes would be possible. Having θ vary more gradually over the range of values of in the support of instead makes the representation more informative. But when is small (e.g., because of costs vs benefits of accurately representing the prior ), this kind of arbitrary randomization in the output of individual processing units is no longer essential. There will already be considerable variation in the outputs of the different units, even when the output of each unit is a deterministic function of , owing to the variability in the sample of prior observations that is used to assess the nature of the current environment. As we will show below, this variability will already serve to allow the collective output of the several units to differentiate between many gradations in the magnitude of , rather than only being able to classify it as 'small' or 'large' (because either all units are in the 'low' or 'high' states).

Robust optimality of decision by sampling

Because of the way in which sampling variability in the values used to adapt each unit’s encoding rule to the current context can substitute for the arbitrary randomization represented by the noise term (see Figure 1), a sharp reduction in the value of need not involve a great loss in performance relative to what would be possible (for the same limit on ) if were allowed to be unboundedly large (Appendix 7). As an example, consider the case in which , so that each unit ’s output state must depend only on the value of the current stimulus and one randomly selected draw from the prior distribution . A possible decision rule that is radically economical in this way is one that specifies that the unit will be in the 'high' state if and only if its contextual draw is smaller than the current stimulus. In this case, the internal representation of a stimulus will be given by the number out of independent draws from the contextual distribution with the property that the contextual draw is smaller than the stimulus, as in the model of decision by sampling (DbS) (Stewart et al., 2006). However, it remains to be determined to what degree it might be beneficial for a system to adopt such a coding strategy.
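
The counting rule described above can be sketched in a few lines. This is a minimal illustration rather than the authors' code: the contextual distribution (density 3(1 − v)² on [0, 1]), the number of units and all function names are assumptions.

```python
import random

def sample_context(rng):
    """Assumed contextual distribution on [0, 1] with density 3 * (1 - v)**2."""
    return 1.0 - (1.0 - rng.random()) ** (1.0 / 3.0)

def context_cdf(v):
    """Cumulative distribution of the assumed contextual distribution."""
    return 1.0 - (1.0 - v) ** 3

def dbs_code(v, n, rng):
    """Count how many of n independent contextual draws fall below stimulus v.

    Each unit compares the stimulus with a single draw and is 'high'
    when the draw is smaller, so the code is the number of 'high' units.
    """
    return sum(sample_context(rng) < v for _ in range(n))

def mean_dbs_fraction(v, n, repeats, seed=0):
    """Average fraction of 'high' units over repeated encodings of v."""
    rng = random.Random(seed)
    return sum(dbs_code(v, n, rng) for _ in range(repeats)) / (n * repeats)

if __name__ == "__main__":
    for v in (0.1, 0.2, 0.5):
        print(v, round(mean_dbs_fraction(v, n=25, repeats=4000), 3),
              round(context_cdf(v), 3))
```

Under these assumptions the count is binomially distributed with success probability equal to the cumulative contextual distribution evaluated at the stimulus, so the expected fraction of 'high' units tracks that cumulative distribution.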

In any given environment (characterized by a particular contextual distribution ), DbS will be equivalent to an encoding process with an architecture of the kind shown in Figure 1, but in which the distribution (compare to the optimal noise distribution for the full prior adaptation case in Equation 6). This makes vary endogenously depending on the contextual distribution . And indeed, the way that varies with the contextual distribution under DbS is fairly similar to the way in which it would be optimal for it to vary in the absence of any cost of precisely learning and representing the contextual distribution. This result implies that will be a monotonic transformation of a function that increases more steeply over those regions of the stimulus space where is higher, regardless of the nature of the contextual distribution. We consider its performance in a given environment, from the standpoint of each of the possible performance criteria considered for the case of full prior adaptation (i.e., maximize accuracy or fitness), and show that it differs from the optimal encoding rules under any of those criteria (Figure 2b–d). In particular, here, we show that using the encoding rule employed in DbS results in considerable loss of information compared to the full-prior adaptation solutions (Figure 2c). An additional interesting observation is that for the strategy employed in DbS, the agent appears to be more sensitive for extreme input values, at least for a wide set of skewed distributions (e.g., for the prior distribution in Figure 2a, the discriminability thresholds are lower at the extremes of the support of ). In other words, agents appear to be more sensitive to salience in the DbS rule. Despite these differences, here it is important to emphasize that in general for all optimization objectives, the encoding rules will be steeper for regions of the prior with higher density. 
However, mild changes in the steepness of the curves will be represented in significant discriminability differences between the different encoding rules across the support of the prior distribution (Figure 2d).

While the predictions of DbS are not exactly the same as those of efficient coding in the case of unbounded , under any of the different objectives that we consider, our numerical results show that it can achieve performance nearly as high as that of the theoretically optimal encoding rule; hence radically reducing the value of does not have a large cost in terms of the accuracy of the decisions that can be made using such an internal representation (Appendix 7 and Figure 2e). Under the assumption that reducing either or would serve to economize on scarce cognitive resources, we formally prove that it might well be most efficient to use an algorithm with a very low value of (even as assumed by DbS), while allowing to be much larger (Appendix 6, Appendix 7).

Crucially, here, it is essential to emphasize that the above-mentioned results are derived for the case of a particular finite number of processing units (and a corresponding finite total number of samples from the contextual distribution used to encode a given stimulus), and do not require that must be large (Appendix 6, Appendix 7).

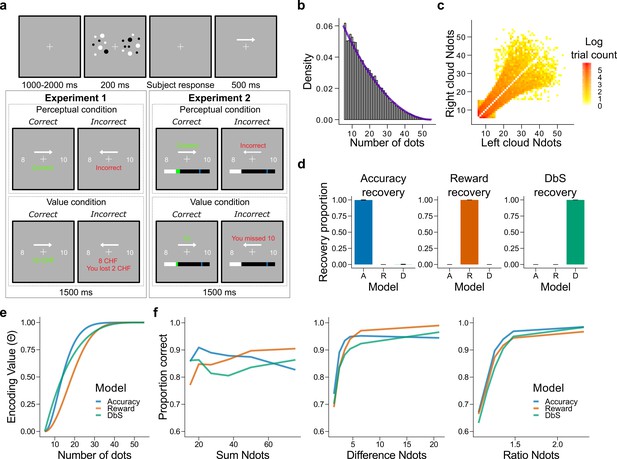

Testing theories of numerosity discrimination

Our goal now is to compare back-to-back the resource-limited coding frameworks elaborated above in a fundamental cognitive function for human behavior: numerosity perception. We designed a set of experiments that allowed us to test whether human participants would adapt their numerosity encoding system to maximize fitness or accuracy rates via full prior adaptation as usually assumed in optimal models, or whether humans employ a 'less optimal' but more efficient strategy such as DbS, or the more established logarithmic encoding model.

In Experiment 1, healthy volunteers (n = 7) took part in a two-alternative forced choice numerosity task in which each participant completed ∼2400 trials across four consecutive days (Materials and methods). On each trial, they were simultaneously presented with two clouds of dots and asked which one contained more dots, and were given feedback on their reward and opportunity losses on each trial (Figure 3a). Participants were either rewarded for their accuracy (perceptual condition, where maximizing the amount of correct responses is the optimal strategy) or the number of dots they selected (value condition, where maximizing reward is the optimal strategy). Each condition was tested for two consecutive days with the starting condition randomized across participants. Crucially, we imposed a prior distribution with a right-skewed quadratic shape (Figure 3b), whose parametrization allowed tractable analytical solutions of the encoding rules , and , that correspond to the encoding rules for Accuracy maximization, Reward maximization, and DbS, respectively (Figure 3e and Materials and methods). Qualitative predictions of behavioral performance indicate that the accuracy-maximization model is the most accurate for trials with lower numerosities (the most frequent ones), whereas the reward-maximization model outperforms the others for trials with larger numerosities (trials where the difference in the number of dots in the clouds, and thus the potential reward, is the largest, Figure 2d and Figure 3f). In contrast, the DbS strategy presents markedly different performance predictions, in line with the discriminability predictions of our formal analyses (Figure 2c,d).

Experimental design, model simulations and recovery.

(a) Schematic task design of Experiments 1 and 2. After a fixation period (1–2 s) participants were presented two clouds of dots (200 ms) and had to indicate which cloud contained the most dots. Participants were rewarded for being accurate (perceptual condition) or for the number of dots they selected (value condition) and were given feedback. In Experiment 2 participants collected on correctly answered trials a number of points equal to a fixed amount (perceptual condition) or a number equal to the dots in the cloud they selected (value condition) and had to reach a threshold of points on each run. (b) Empirical (grey bars) and theoretical (purple line) distribution of the number of dots in the clouds of dots presented across Experiments 1 and 2. (c) Distribution of the numerosity pairs selected per trial. (d) Synthetic data preserving the trial set statistics and number of trials per participant used in Experiment 1 was generated for each encoding rule (Accuracy (left), Reward (middle), and DbS (right)) and then the latent-mixture model was fitted to each generated dataset. The figures show that it is theoretically possible to recover each generated encoding rule. (e) Encoding function for the different sampling strategies as a function of the input values (i.e., the number of dots). (f) Qualitative predictions of the three models (blue: Accuracy, red: Reward, green: Decision by Sampling) on trials from Experiment 1 with . Performance of each model as a function of the sum of the number of dots in both clouds (left), the absolute difference between the number of dots in both clouds (middle) and the ratio of the number of dots in the most numerous cloud over the less numerous cloud (right).

In our modelling specification, the choice structure is identical for the three different sampling models, differing only in the encoding rule (Materials and methods). Therefore, answering the question of which encoding rule is the most favored for each participant can be parsimoniously addressed using a latent-mixture model, where each participant uses , or to guide their decisions (Materials and methods). Before fitting this model to the empirical data, we confirmed the validity of our model selection approach through a validation procedure using synthetic choice data (Figure 3d, Figure 3—figure supplement 1, and Materials and methods).

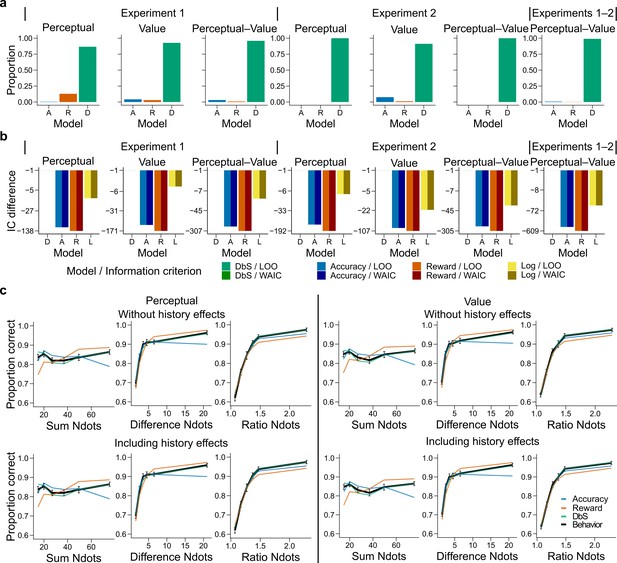

After we confirmed that we can reliably differentiate between our competing encoding rules, the latent-mixture model was initially fitted to each condition (perceptual or value) using a hierarchical Bayesian approach (Materials and methods). Surprisingly, we found that participants did not follow the accuracy or reward optimization strategy in the respective experimental condition, but favored the DbS strategy (proportion that DbS was deemed best in the perceptual and value conditions, Figure 4). Importantly, this population-level result also holds at the individual level: DbS was strongly favored in 6 out of 7 participants in the perceptual condition, and 7 out of 7 in the value condition (Figure 4—figure supplement 1). These results are not likely to be affected by changes in performance over time, as performance was stable across the four consecutive days (Figure 4—figure supplement 2). Additionally, we investigated whether biases induced by choice history effects may have influenced our results (Abrahamyan et al., 2016; Keung et al., 2019; Talluri et al., 2018). To this end, we incorporated both choice- and correctness-dependent history biases in our models and fitted the models once again (Materials and methods). We found similar results to the history-free models ( in perceptual and in value conditions, Figure 4c). At the individual level, DbS was again strongly favored in 6 out of 7 participants in the perceptual condition, and 7 out of 7 in the value condition (Figure 4—figure supplement 1).

Behavioral results.

(a) Bars represent the proportion of times an encoding rule (Accuracy [A, blue], Reward [R, red], DbS [D, green]) was selected by the Bayesian latent-mixture model based on the posterior estimates across participants. Each panel shows the data grouped for each and across experiments and experimental conditions (see titles on top of each panel). The results show that DbS was clearly the favored encoding rule. The latent vector posterior estimates are presented in Figure 4—figure supplement 4. (b) Difference in LOO and WAIC between the best model (DbS (D) in all cases) and the competing models: Accuracy (A), Reward (R) and Logarithmic (L) models. Each panel shows the data grouped for each and across experimental conditions and experiments (see titles on top of each panel). (c) Behavioral data (black, error bars represent SEM across participants) and model predictions based on fits to the empirical data. Data and model predictions are presented for both the perceptual (left panels) and value (right panels) conditions, and excluding (top panels) or including (bottom panels) choice history effects. Performance of data and model predictions is presented as a function of the sum of the number of dots in both clouds (left), the absolute difference between the number of dots in both clouds (middle) and the ratio of the number of dots in the most numerous cloud over the less numerous cloud (right). Results reveal a remarkable overlap of the behavioral data and predictions by DbS, thus confirming the quantitative results presented in panels a and b.

To further investigate the robustness of this effect, we introduced a slight variation in the behavioral paradigm. In this new experiment (Experiment 2), participants were given points on each trial and had to reach a certain threshold in each run for it to be eligible for reward (Figure 3a and Materials and methods). This class of behavioral task is thought to be in some cases more ecologically valid than trial-independent choice paradigms (Kolling et al., 2014). In this new experiment, participants received either a fixed number of points for a correct trial (perceptual condition) or a number of points equal to the number of dots in the chosen cloud if the response was correct (value condition). We recruited a new set of participants (n = 6), who were tested on these two conditions, each for two consecutive days with the starting condition randomized across participants (each participant completed trials). The quantitative results revealed once again that participants did not change their encoding strategy depending on the goals of the task, with DbS being strongly favored for both perceptual and value conditions ( and , respectively; Figure 4a), and these results were confirmed at the individual level, where DbS was strongly favored in 6 out of 6 participants in both the perceptual and value conditions (Figure 4—figure supplement 1). Once again, we found that inclusion of choice history biases in this experiment did not significantly affect our results at either the population or the individual level: at the population level, DbS was again deemed best in the perceptual () and value () conditions (Figure 4—figure supplement 1), and at the individual level DbS was strongly favored in 6 out of 6 participants in the perceptual condition and 5 out of 6 in the value condition (Figure 4—figure supplement 1). Thus, Experiments 1 and 2 strongly suggest that our results are not driven by specific instructions or characteristics of the behavioral task.

As a further robustness check, for each participant we grouped the data in different ways across experiments (Experiments 1 and 2) and experimental conditions (perceptual or value) and investigated which sampling model was favored. We found that irrespective of how the data was grouped, DbS was the model that was clearly deemed best at the population (Figure 4) and individual level (Figure 4—figure supplement 3). Additionally, we investigated whether these quantitative results specifically depended on our choice of using a latent-mixture model. Therefore, we also fitted each model independently and compared the quality of the model fits based on out-of-sample cross-validation metrics (Materials and methods). Once again, we found that the DbS model was favored independently of experiment and conditions (Figure 4).
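
For readers unfamiliar with the out-of-sample metrics mentioned above, the following sketch computes WAIC from a matrix of pointwise log-likelihoods using the standard textbook definition on the deviance scale. The text does not state how LOO and WAIC were computed here, so this is an illustration of the metric and not of the authors' pipeline; the function name and input layout are assumptions.

```python
import math

def waic(loglik):
    """WAIC on the deviance scale (lower is better).

    loglik[s][i] is the log-likelihood of observation i under
    posterior draw s (assumed layout: draws in rows, trials in columns).
    """
    n_draws = len(loglik)
    n_obs = len(loglik[0])
    lppd = 0.0    # log pointwise predictive density
    p_waic = 0.0  # effective number of parameters
    for i in range(n_obs):
        col = [loglik[s][i] for s in range(n_draws)]
        m = max(col)
        # log of the posterior-mean likelihood, computed stably
        lppd += m + math.log(sum(math.exp(x - m) for x in col) / n_draws)
        mean = sum(col) / n_draws
        # sample variance of the log-likelihood across posterior draws
        p_waic += sum((x - mean) ** 2 for x in col) / (n_draws - 1)
    return -2.0 * (lppd - p_waic)
```

Two models fitted independently to the same trials can then be compared through the difference of their WAIC values, which is the kind of quantity plotted in a model-comparison panel.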

One possible reason why the two experimental conditions did not lead to differences could be that, after doing one condition for two days, the participants did not adapt as easily to the new incentive rule. However, note that as the participants did not know of the second condition before carrying it out, they could not adopt a compromise between the two behavioral objectives. Nevertheless, we fitted the latent-mixture model only to the first condition that was carried out by each participant. We found once again that DbS was the best model explaining the data, irrespective of condition and experimental paradigm (Figure 4—figure supplement 7). Therefore, the fact that DbS is favored in the results is not an artifact of carrying out two different conditions in the same participants.

We also investigated whether the DbS model makes more accurate predictions than the widely used logarithmic model of numerosity discrimination tasks (Dehaene, 2003). We found that DbS still made better out-of-sample predictions than the log-model (Figure 4b, Figure 5f,g). Moreover, these results continued to hold after taking into account possible choice history biases (Figure 4—figure supplement 4). In addition to these quantitative results, qualitatively we also found that behavior matched the predictions of the DbS model remarkably well (Figure 4c), based on virtually only one free parameter, namely, the number of samples (resources) n. Together, these results provide compelling evidence that DbS is the most likely resource-constrained sampling strategy used by participants in numerosity discrimination tasks.

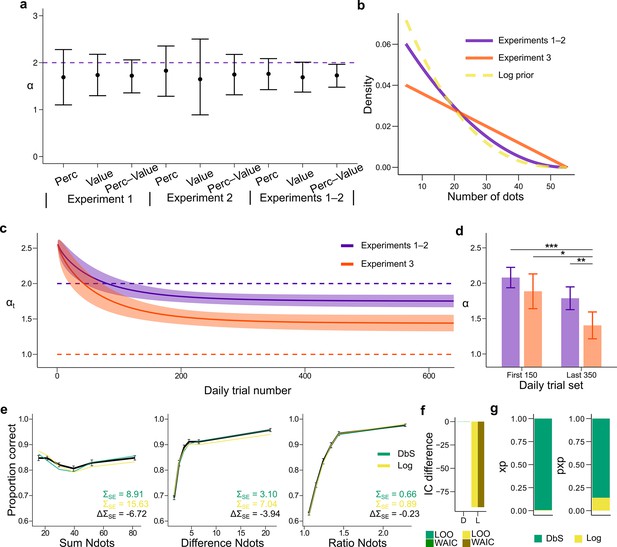

Prior adaptation analyses.

(a) Estimation of the shape parameter for the DbS model by grouping the data for each and across experimental conditions and experiments. Error bars represent the 95% highest density interval of the posterior estimate of at the population level. The dashed line shows the theoretical value of . (b) Theoretical prior distribution in Experiments 1 and 2 (, purple) and 3 (, orange). The dashed line represents the value of of our prior parametrization that approximates the DbS and log discriminability models. (c) Posterior estimation of (Equation 18) as a function of the number of trials in each daily session for Experiments 1 and 2 (purple) and Experiment 3 (orange). The results reveal that, as expected, reaches a lower asymptotic value . Error bars represent ± SD of 3000 simulated values drawn from the posterior estimates of the HBM (see Materials and methods). (d) Model fit to the first 150 and last 350 trials of each daily session. The parameter was allowed to vary between the first and last sets of daily trials and between Experiments 1–2 and Experiment 3. In Experiment 3, is lower in the last set of trials compared to the first set of trials (). In addition, for the last trials is lower for Experiment 3 than for Experiments 1–2 (). This confirms that the results presented in panel c are not artifacts of the adaptation parametrization assumed for . Error bars represent ± SD of the posterior chains of the corresponding parameter. (*P<0.05, **P<0.01, and ***P<0.001). (e) Behavioral data (black) and model fit predictions of the DbS (green) and Log (yellow) models. Performance of each model as a function of the sum of the number of dots in both clouds (left), the absolute difference between the number of dots in both clouds (middle) and the ratio of the number of dots in the most numerous cloud over the less numerous cloud (right). Error bars represent SEM (f) Difference in LOO and WAIC between the best fitting DbS (D) and logarithmic encoding (Log) model. 
(g) Population exceedance probabilities (xp, left) and protected exceedance probabilities (pxp, right) for DbS (green) vs Log (yellow) of a Bayesian model selection analysis (Stephan et al., 2009): , . These results provide a clear indication that the adaptive DbS explains the data better than the Log model.

Recent studies have also compared perceptual and preferential decisions in paradigms with identical visual stimuli (Dutilh and Rieskamp, 2016; Polanía et al., 2014; Grueschow et al., 2015). In these tasks, investigators have reported differences in behavior, in particular in reaction times, possibly reflecting differences in behavioral strategies between perceptual and value-based decisions. Therefore, we investigated whether this was also the case in our data. We found that reaction times did not differ between experimental conditions for any of the different performance assessments considered here (Figure 4—figure supplement 5). This further supports the idea that participants were in fact using the same sampling mechanism irrespective of behavioral goals.

Here it is important to emphasize that all sampling models and the logarithmic model of numerosity have the same number of degrees of freedom (performance is determined by n in the sampling models and Weber’s fraction in the log model, Materials and methods). Therefore, qualitative and quantitative differences favoring the DbS model cannot be explained by differences in model complexity. It could also be argued that the normal approximation of the binomial distributions in the sampling decision models only holds for large enough n. However, we find evidence that the large-n optimal solutions are also nearly optimal for low n values (Appendix 7). Estimates of n in our data are in general (Table 1) and we find that the large-n rule is nearly optimal already for (Appendix 7). Therefore, the asymptotic approximations should not greatly affect the conclusions of our work.
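
The concern about the normal approximation can be made concrete with a small numerical check. The sketch below measures the largest gap between a binomial cumulative distribution and its continuity-corrected normal approximation. It is an illustration only: the function names, the use of a continuity correction and the example values of n and p are assumptions, and the analysis the paper relies on is the one in Appendix 7.

```python
import math

def binom_cdf(k, n, p):
    """Exact P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k + 1))

def normal_cdf(x, mu, sigma):
    """Cumulative distribution of a normal with mean mu and SD sigma."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def max_abs_error(n, p):
    """Largest gap between the binomial CDF and its normal approximation
    (with continuity correction) over all counts 0..n."""
    mu = n * p
    sigma = math.sqrt(n * p * (1 - p))
    return max(
        abs(binom_cdf(k, n, p) - normal_cdf(k + 0.5, mu, sigma))
        for k in range(n + 1)
    )

if __name__ == "__main__":
    # n = 25 lies within the range of estimates reported in Table 1
    for p in (0.5, 0.3, 0.1):
        print(f"n = 25, p = {p}: max CDF error = {max_abs_error(25, p):.4f}")
```

The approximation is tightest when the probability of a 'high' readout is near one half and degrades as that probability approaches zero or one, where the binomial distribution becomes skewed.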

Resource parameter fits.

Fits of the resource parameter n for the Accuracy, Reward and Decision by Sampling (DbS) models including data across experiments and conditions (perceptual (P) or value (V)) either including or ignoring choice history effects. The values represent the mean ± SD of the posterior distributions at the population level for parameter n. Note that the Reward and in particular the DbS encoding models require a higher number of resources than the Accuracy model, which is consistent with the fact that the Accuracy model allocates its resources to maximize efficiency, therefore reducing the number of resources needed to reach a given accuracy. DbS has the highest values of n because it is the least efficient model.

| Experiment | Condition | History effects | n (Accuracy model) | n (Reward model) | n (DbS model) |
|---|---|---|---|---|---|
| 1 | V | not included | 15.24 ± 3.09 | 17.54 ± 3.98 | 24.40 ± 5.16 |
| 2 | V | not included | 22.48 ± 2.43 | 27.58 ± 3.81 | 35.40 ± 3.44 |
| 1 | P | not included | 15.19 ± 3.99 | 17.84 ± 4.85 | 24.64 ± 6.59 |
| 2 | P | not included | 20.99 ± 1.59 | 24.22 ± 1.93 | 33.54 ± 2.45 |
| 1 | P/V | not included | 15.33 ± 3.41 | 17.25 ± 4.45 | 24.15 ± 5.75 |
| 2 | P/V | not included | 21.30 ± 0.96 | 25.27 ± 1.99 | 33.90 ± 1.51 |
| 1/2 | V | not included | 18.56 ± 2.04 | 22.05 ± 2.73 | 29.52 ± 3.25 |
| 1/2 | P | not included | 17.91 ± 2.09 | 20.66 ± 2.59 | 28.62 ± 3.51 |
| 1/2 | P/V | not included | 17.93 ± 1.87 | 21.03 ± 2.46 | 28.58 ± 3.04 |
| 1 | V | included | 15.50 ± 3.13 | 17.50 ± 3.91 | 24.68 ± 5.08 |
| 2 | V | included | 22.92 ± 2.37 | 28.07 ± 3.73 | 36.18 ± 2.91 |
| 1 | P | included | 15.41 ± 3.81 | 17.96 ± 4.88 | 24.70 ± 6.62 |
| 2 | P | included | 21.57 ± 1.71 | 24.88 ± 2.17 | 34.37 ± 2.93 |
| 1 | P/V | included | 15.16 ± 3.55 | 17.43 ± 4.39 | 24.30 ± 5.94 |
| 2 | P/V | included | 21.80 ± 0.92 | 25.81 ± 1.86 | 34.60 ± 1.40 |
| 1/2 | V | included | 18.86 ± 2.07 | 22.48 ± 2.75 | 29.85 ± 3.17 |
| 1/2 | P | included | 18.15 ± 2.17 | 21.11 ± 2.72 | 29.01 ± 3.47 |
| 1/2 | P/V | included | 18.22 ± 1.93 | 21.34 ± 2.50 | 29.12 ± 3.12 |
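
The ordering of resource requirements noted in the caption can be checked directly from the tabulated means. The short sketch below uses the three rows that pool Experiments 1 and 2 without history effects; the dictionary layout and the helper name are illustrative choices, and only posterior means are used, ignoring the reported SDs.

```python
# Population-level posterior means of n copied from Table 1
# (Experiments 1/2 pooled, history effects not included).
# Tuple order: (Accuracy, Reward, DbS).
rows = {
    "V": (18.56, 22.05, 29.52),
    "P": (17.91, 20.66, 28.62),
    "P/V": (17.93, 21.03, 28.58),
}

def resource_ratios(n_accuracy, n_reward, n_dbs):
    """Resources needed by Reward and DbS relative to the Accuracy model."""
    return n_reward / n_accuracy, n_dbs / n_accuracy

if __name__ == "__main__":
    for condition, means in rows.items():
        reward_ratio, dbs_ratio = resource_ratios(*means)
        print(f"{condition}: Reward/Accuracy = {reward_ratio:.2f}, "
              f"DbS/Accuracy = {dbs_ratio:.2f}")
```

In these three rows the fitted DbS model uses roughly 1.6 times as many samples as the fitted Accuracy model, with the Reward model in between.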

Dynamics of adaptation

Up to now, fits and comparison across models have been done under the assumption that the participants learned the prior distribution imposed in our task. If participants are employing DbS, it is important to understand the dynamical nature of adaptation in our task. Note that the shape of the prior distribution is determined by the parameter (Figure 5b, Equation 10 in Materials and methods). First, we made sure based on model recovery analyses that the DbS model could jointly and accurately recover both the shape parameter and the resource parameter based on synthetic data (Figure 3—figure supplement 2). Then we fitted this model to the empirical data and found that the recovered value of the shape parameter closely followed the value of the empirical prior with a slight underestimation (Figure 5a). Next, we investigated the dynamics of prior adaptation. To this end, we ran a new experiment (Experiment 3, n = 7 new participants) in which we set the shape parameter of the prior to a lower value compared to Experiments 1–2 (Figure 5b, Materials and methods). We investigated the change of over time by allowing this parameter to change with trial experience (Equation 18, Materials and methods) and compared the evolution of for Experiments 1 and 2 (empirical ) with Experiment 3 (empirical , Figure 5b). If participants show prior adaptation in our numerosity discrimination task, we hypothesized that the asymptotic value of should be higher for Experiments 1–2 than for Experiment 3. First, we found that for Experiments 1–2, the value of quickly reached an asymptotic value close to the target value (Figure 5c). On the other hand, for Experiment 3 the value of continued to decrease during the experimental session, but slowly approaching its target value. This seemingly slower adaptation to the shape of the prior in Experiment 3 might be explained by the following observation. 
The prior parametrized with in Experiment 3 is further away from an agent hypothesized to have a natural numerosity discrimination based on a log scale (, Materials and methods), which is closer in value to the shape of the prior in Experiments 1 and 2 (). Irrespective of these considerations, the key result to confirm our adaptation hypothesis is that the asymptotic value of is lower for Experiment 3 compared to Experiments 1 and 2 ().

In order to make sure that this result was not an artifact of the parametric form of adaptation assumed here (Equation 18, Materials and methods), we fitted the DbS model to trials at the beginning and end of each experimental session allowing to be a free but fixed parameter in each set of trials. The results of these new analyses are virtually identical to the results obtained with the parametric form, in which is smaller at the end of Experiment 3 sessions relative to the beginning of Experiments 1 and 2 (), the beginning of Experiment 3 () and the end of Experiments 1 and 2 (, Figure 5d). In this model, we did not allow to freely change for each condition, and therefore a concern might be that the results are an artifact of changes in , which could for example change with the engagement of the participants across the session. Given that we already demonstrated that both parameters and are identifiable, we fitted the same model as in Figure 5d; however, this time we allowed to be a free parameter alongside . We found that the results obtained in Figure 5d remained virtually unchanged (Figure 5—figure supplement 3), in addition to the result that the resource parameter remained virtually identical across the session (Figure 5—figure supplement 3).

We further investigated evidence for adaptation using an alternative quantitative approach. First, we performed out-of-sample model comparisons based on the following models: (i) the adaptive- model, (ii) free- model with free but non-adapting over time, and (iii) fixed- model with . The results of the out-of-sample predictions revealed that the best model was the free- model, followed closely by the adaptive- model () and then by fixed- model (). However, we did not interpret the apparent small difference between the adaptive- and the free- models as evidence for lack of adaptation, given that the more complex adaptive- model will be strongly penalized after adaptation is stable. That is, if adaptation is occurring, then the adaptive- only provides a better fit for the trials corresponding to the adaptation period. After adaptation, the adaptive- should provide a similar fit to the free- model, but with greater complexity that will be penalized by model comparison metrics. Therefore, to investigate the presence of adaptation, we took a closer quantitative look at the evolution of the fits across trial experience. We computed the average trial-wise predicted Log-Likelihood (by sampling from the hierarchical Bayesian model) and compared the differences of this metric between the competing models and the adaptive model. We hypothesized that if adaptation is taking place, the adaptive- model would have an advantage relative to the free- model at the beginning of the session, with these differences vanishing toward the end. On the other hand, the fixed- should roughly match the adaptive- model at the beginning and then become worse over time, but these differences should stabilize after the end of the adaptation period. 
The results of these analyses support our hypotheses (Figure 5—figure supplement 2), thus providing further evidence of adaptation, highlighting the fact that the DbS model can parsimoniously capture adaptation to contextual changes in a continuous and dynamical manner. Furthermore, we found that the DbS model again provides more accurate qualitative and quantitative out-of-sample predictions than the log model (Figure 5e,f).

Discussion

The brain is a metabolically expensive inference machine (Hawkes et al., 1998; Navarrete et al., 2011; Stone, 2018). Therefore, it has been suggested that evolutionary pressure has driven it to make productive use of its limited resources by exploiting statistical regularities (Attneave, 1954; Barlow, 1961; Laughlin, 1981). Here, we incorporate this important but often ignored aspect in models of behavior by introducing a general framework of decision-making under the constraints that the system: (i) encodes information based on binary codes, (ii) has a limited number of samples available to encode information, and (iii) considers the costs of contextual adaptation.

Under the assumption that the organism has fully adapted to the statistics in a given context, we show that the encoding rule that maximizes mutual information is the same rule that maximizes decision accuracy in two-alternative decision tasks. However, note that there is nothing privileged about maximizing mutual information, as it does not mean that the goals of the organism are necessarily achieved (Park and Pillow, 2017; Salinas, 2006). In fact, we show that if the goal of the organism is instead to maximize the expected value of the chosen options, the system should not rely on maximizing information transmission and must give up a small fraction of precision in information coding. Here, we derived analytical solutions for each of these optimization criteria, emphasizing that these analytical solutions were derived for the large-n limiting case. However, we have provided evidence that these solutions continue to be more efficient relative to DbS for small values of n, and more importantly, they remain nearly optimal even at relatively low values of n, in the range of values that might be relevant to explain human experimental data (Appendix 7).

Another key implication of our results is that we provide an alternative explanation to the usual conception of noise as the main cause of behavioral performance degradation, where noise is usually artificially added to models of decision behavior to generate the desired variability (Ratcliff and Rouder, 1998; Wang, 2002). On the contrary, our work makes it formally explicit why a system that evolved to encode information based on binary codes must necessarily be noisy, also revealing how the system could take advantage of its unavoidably noisy properties (Faisal et al., 2008) to optimize decision behavior (Tsetsos et al., 2016). Here, it is important to highlight that this conclusion is drawn from a purely homogeneous neural circuit, in other words, a circuit in which all neurons have the same properties (in our case, the same activation thresholds). This is not what is typically observed, as neural circuits are usually very heterogeneous. In the neural circuit that we consider here, such heterogeneity could mean that the firing thresholds vary across neurons (Orbán et al., 2016), which could be used by the system to optimize the required variability of binary neural codes. Interestingly, it has been shown in recent work that stochastic discrete events also serve to optimize information transmission in neural population coding (Ashida and Kubo, 2010; Nikitin et al., 2009; Schmerl and McDonnell, 2013). Crucially, our work directly links the necessity of noise to systems that aim at optimizing decision behavior under our encoding and limited-capacity assumptions, which can be seen as algorithmic specifications of the more realistic population coding specifications mentioned above (Nikitin et al., 2009).
We argue that our results may provide a formal intuition for the apparent necessity of noise for improving training and learning performance in artificial neural networks (Dapello et al., 2020; Findling and Wyart, 2020), and we speculate that an implementation of 'the right' noise distribution for a given environmental statistic could be seen as a potential mechanism to improve performance in capacity-limited agents generally speaking (Garrett et al., 2011). We acknowledge that, based on the results of our work, we cannot confirm whether this is the case for higher-order neural circuits; however, we leave it as an interesting theoretical formulation that could be addressed in future work.

Interestingly, our results could provide an alternative explanation of the recent controversial finding that dynamics of a large proportion of LIP neurons likely reflect binary (discrete) coding states to guide decision behavior (Latimer et al., 2015; Zoltowski et al., 2019). Based on this potential link between their work and ours, our theoretical framework generates testable predictions that could be investigated in future neurophysiological work. For instance, noise distribution in neural circuits should dynamically adapt according to the prior distribution of inputs and goals of the organism. Consequently, the rate of 'step-like' coding in single neurons should also be dynamically adjusted (perhaps optimally) to statistical regularities and behavioral goals.

Our results are closely related to Decision by Sampling (DbS), which is an influential account of decision behavior derived from principles of retrieval and memory comparison by taking into account the regularities of the environment, and also encodes information based on binary codes (Stewart et al., 2006). We show that DbS represents a special case of our more general efficient sampling framework, that uses a rule that is similar to (though not exactly like) the optimal encoding rule that assumes full (or costless) adaptation to the prior statistics of the environment. In particular, we show that DbS might well be the most efficient sampling algorithm, given that a reduction in the full representation of the prior distribution might not come at a great loss in performance. Interestingly, our experimental results (discussed in more detail below) also provide support for the hypothesis that numerosity perception is efficient in this particular way. Crucially, DbS automatically adjusts the encoding in response to changes in the frequency distribution from which exemplars are drawn in approximately the right way, while providing a simple answer to the question of how such adaptation of the encoding rule to a changing frequency distribution occurs, at a relatively low cost.
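As a minimal illustration of the binary sampling idea described above, the following Python sketch encodes a stimulus by comparing it against samples drawn from the contextual distribution and counting the resulting ones. The function names (dbs_encode, dbs_choose), the use of a "greater than" comparison, and the random tie-breaking rule are assumptions made here for illustration; they are not taken from the model specification in this paper.

```python
import random


def dbs_encode(v, prior_sampler, n, rng):
    """Encode stimulus v as a count of binary outcomes.

    Each of the n units draws one sample from the contextual (prior)
    distribution and outputs 1 if v exceeds that sample, else 0.
    The count divided by n approximates the prior CDF evaluated at v.
    """
    return sum(1 for _ in range(n) if v > prior_sampler(rng))


def dbs_choose(v1, v2, prior_sampler, n, rng):
    """Pick the option with the larger count of ones.

    Ties are broken at random (an assumption made for this sketch).
    """
    k1 = dbs_encode(v1, prior_sampler, n, rng)
    k2 = dbs_encode(v2, prior_sampler, n, rng)
    if k1 == k2:
        return rng.choice([1, 2])
    return 1 if k1 > k2 else 2


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical context: stimuli uniformly distributed on [0, 1].
    uniform_prior = lambda r: r.random()
    k = dbs_encode(0.7, uniform_prior, 25, rng)
    print("ones out of 25 comparisons:", k)
    print("chosen option:", dbs_choose(0.7, 0.4, uniform_prior, 25, rng))
```

Because every unit only reports 0 or 1, the percept is noisy whenever n is finite, and changing the sampler changes the encoding without any further recalibration, which is the sense in which adaptation comes at a relatively low cost.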

In a related line of work, Bhui and Gershman, 2018 develop a similar, but distinct, specification of DbS, in which they also consider only a finite number of samples that can be drawn from the prior distribution to generate a percept, and ask what kind of algorithm would be required to improve coding efficiency. However, their implementation differs from ours in various important ways (see Appendix 8 for a detailed discussion). One of the main distinctions is that they consider the case in which only a finite number of samples can be drawn from the prior and show that a variant of DbS with kernel-smoothing is superior to its standard version. However, a key difference from our implementation is that they allow the kernel-smoothed quantity (computed by comparing the input with a sample from the prior distribution) to vary continuously between 0 and 1, rather than having to be either 0 or 1 as in our implementation (Figure 1). Thus, they show that coding efficiency can be improved by allowing a more flexible implementation of the coding scheme for the case when the agent is allowed to draw few samples from the prior distribution (Appendix 8). On the other hand, we restrict our framework to a coding scheme that is only allowed to encode information based on zeros or ones, where we show that coding efficiency can be improved relative to DbS only under a more complete knowledge of the prior distribution, where the optimal solutions can be formally derived in the limit of a large number of samples. Nevertheless, we have shown that even when only a few processing units are available to generate decision-relevant percepts, the optimal rules will still be superior to the standard DbS (if the agent has fully adapted to the statistics of the environment in a given context).

We tested these resource-limited coding frameworks in non-symbolic numerosity discrimination, a fundamental cognitive function for behavior in humans and other animals, which may have emerged during evolution to support fitness maximization (Nieder, 2020). Here, we find that the way in which the precision of numerosity discrimination varies with the size of the numbers being compared is consistent with the hypothesis that the internal representations on the basis of which comparisons are made are sample-based. In particular, we find that the encoding rule varies depending on the frequency distribution of values encountered in a given environment, and that this adaptation occurs fairly quickly once the frequency distribution changes.

This adaptive character of the encoding rule differs, for example, from the common hypothesis of a logarithmic encoding rule (independent of context), which we show fits our data less well. Nonetheless, we can reject the hypothesis of full optimality of the encoding rule for each distribution of values used in our experiments, even after participants have had extensive experience with a given distribution. Thus, a possible explanation of why DbS is the favored model in our numerosity task is that accuracy and reward maximization require optimal adaptation of the noise distribution based on our imposed prior, which in turn requires complex neuroplastic changes that are metabolically costly (Buchanan et al., 2013). Relying on samples from memory might be less metabolically costly, as these systems are plastic on short time scales, and might therefore be a relatively simpler heuristic to implement, allowing more efficient adaptation. Here, it is important to emphasize, as has been discussed in the past (Tajima et al., 2016; Polanía et al., 2015), that for decision-making systems beyond the perceptual domain, the identity of the samples is unclear. We hypothesize that information samples derive from the interaction of memory with current sensory evidence, depending on the retrieval of relevant samples to make predictions about the outcome of each option for a given behavioral goal (therefore also depending on the encoding rule that optimizes a given behavioral goal).

Interestingly, it was recently shown that in a reward learning task, a model that estimates values based on memory samples from recent past experiences can explain the data better than canonical incremental learning models (Bornstein et al., 2017). Based on their and our findings, we conclude that sampling from memory is an efficient mechanism for guiding choice behavior, as it allows quick learning and generalization of environmental contexts based on recent experience without significantly sacrificing behavioral performance. However, it should be noted that relying on such mechanisms alone might be suboptimal from a performance- and goal-based point of view, where neural calibration of optimal strategies may require extensive experience, possibly via direct interactions between sensory, memory and reward systems (Gluth et al., 2015; Saleem et al., 2018).

Taken together, our findings emphasize the need to study optimal models, which serve as anchors to understand the brain's computational goals without ignoring the fact that biological systems are limited in their capacity to process information. We addressed this by proposing a computational problem, elaborating an algorithmic solution, and proposing a minimalistic implementational architecture that solves the resource-constrained problem. This is essential, as it helps to establish frameworks that allow comparing behavior not only across different tasks and goals, but also across different levels of description, for instance, from single cell operation to observed behavior (Marr, 1982). We argue that this approach is fundamental for providing benchmarks for human performance that can lead to the discovery of alternative heuristics (Qamar et al., 2013; Gardner, 2019) that could appear to be in principle suboptimal, but that might in turn be the optimal strategy to implement if one considers cognitive limitations and costs of optimal adaptation. We conclude that the understanding of brain function and behavior under a principled research agenda, which takes into account decision mechanisms that are biologically feasible, will be essential to accelerate the elucidation of the mechanisms underlying human cognition.

Materials and methods

Participants

The study tested young healthy volunteers with normal or corrected-to-normal vision (total n = 20, age 19–36 years, nine females: n = 7 in Experiment 1, two females; n = 6 new participants in Experiment 2, three females; n = 7 new participants in Experiment 3, four females). Participants were randomly assigned to each experiment and no participant was excluded from the analyses. Participants were instructed about all aspects of the experiment and gave written informed consent. None of the participants suffered from any neurological or psychological disorder or took medication that interfered with participation in our study. Participants received monetary compensation for their participation in the experiment partially related to behavioral performance (see below). The experiments conformed to the Declaration of Helsinki and the experimental protocol was approved by the Ethics Committee of the Canton of Zurich (BASEC: 2018–00659).

Experiment 1

Participants (n = 7) carried out a numerosity discrimination task for four consecutive days for approximately one hour per day. Each daily session consisted of a training run followed by 8 runs of 75 trials each. Thus, each participant completed ∼2400 trials across the four days of experiment.

After a fixation period (1–1.5 s jittered), two clouds of dots (left and right) were presented on the screen for 200 ms. Participants were asked to indicate the side of the screen where they perceived more dots. Their response was kept on the screen for 1 s followed by feedback consisting of the symbolic number of dots in each cloud as well as the monetary gains and opportunity losses of the trial depending on the experimental condition. In the value condition, participants were explicitly informed that each dot in a cloud of dots corresponded to 1 Swiss Franc (CHF). Participants were informed that they would receive the amount in CHF corresponding to the total number of dots on the chosen side. At the end of the experiment a random trial was selected and they received the corresponding amount. In the accuracy condition, participants were explicitly informed that they could receive a fixed reward (15 Swiss Francs (CHF)) for each correct trial. This fixed amount was selected such that it approximately matched the expected reward received in the value condition (as tested in pilot experiments). At the end of the experiment, a random trial was selected and they would receive this fixed amount if they chose the cloud with more dots (i.e., the correct side). Each condition lasted for two consecutive days with the starting condition randomized across participants. Only after completing all four experiment days, participants were compensated for their time with 20 CHF per hour, in addition to the money obtained based on their decisions on each experimental day.
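The two incentive rules described above can be summarized in a short Python sketch. The function name trial_payoff, the condition labels, and the handling of trials with equal numbers of dots (treated here as not correct) are illustrative assumptions; the text does not specify how such trials, if any occurred, were handled.

```python
# Fixed reward for a correct choice in the accuracy condition (from the text).
ACCURACY_REWARD_CHF = 15.0


def trial_payoff(condition, n_left, n_right, choice):
    """Return the payoff in CHF for one randomly selected trial.

    condition: "value" or "accuracy"
    n_left, n_right: number of dots in the left and right clouds
    choice: "left" or "right"
    """
    if choice not in ("left", "right"):
        raise ValueError("choice must be 'left' or 'right'")
    chosen, other = (n_left, n_right) if choice == "left" else (n_right, n_left)

    if condition == "value":
        # Each dot on the chosen side is worth 1 CHF.
        return float(chosen)
    if condition == "accuracy":
        # Fixed reward only if the chosen cloud has more dots.
        # Assumption: equal counts are treated as not correct.
        return ACCURACY_REWARD_CHF if chosen > other else 0.0
    raise ValueError("condition must be 'value' or 'accuracy'")
```

Under the value rule an error between two similar large numerosities costs little, whereas under the accuracy rule every error costs the same fixed amount, which is what distinguishes the two behavioral goals.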

Experiment 2