Independent and interacting value systems for reward and information in the human brain

Peer review process

This article was accepted for publication as part of eLife's original publishing model.

Decision letter

- David Badre, Reviewing Editor; Brown University, United States

- Michael J Frank, Senior Editor; Brown University, United States

In the interests of transparency, eLife publishes the most substantive revision requests and the accompanying author responses.

Decision letter after peer review:

[Editors’ note: the authors submitted for reconsideration following the decision after peer review. What follows is the decision letter after the first round of review.]

Thank you for submitting your work entitled "Independent and interacting value systems for reward and information in the human prefrontal cortex" for consideration by eLife. Your article has been reviewed by 3 peer reviewers, and the evaluation has been overseen by a Reviewing Editor and a Senior Editor. The reviewers have opted to remain anonymous.

Our decision has been reached after consultation between the reviewers. Based on these discussions and the individual reviews below, we regret to inform you that your work will not be considered further for publication in eLife.

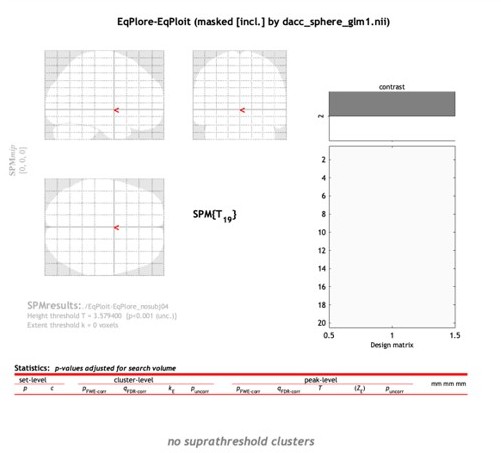

The study reports evidence from fMRI for independent brain systems that value reward versus information. An elegant free choice task is used during fMRI to show that people value both reward and information. Then, using model-based fMRI and a method of deconfounding information and value, a dissociation is reported between the dACC and vmPFC tracking information and reward, respectively.

The reviewers found this to be an important topic and were largely in agreement that this dissociation would be an advance of interest to the field. However, the reviewers also identified a substantial number of serious concerns that raised doubt regarding these observations and their interpretation. Some of these are addressable with relatively straightforward additional analyses. Others might also be addressable, but the path forward is less clear. At the very least, they would require considerable additional work and possibly collection of new data. Thus, after considerable discussion, it was decided that the work required to address these serious concerns was extensive enough that it would be beyond that expected of a revision at eLife. As a result, the consensus was to reject the paper. However, given the promise of the paper, the reviewers and editors also agreed that were the paper to be extensively reworked in order to address all of the points raised, then we would be willing to consider the paper again at eLife as a new submission.

I have attached the original reviews to this letter, as the reviewers provide detailed points and suggestions there. However, I wish to highlight a few points that the reviewers emphasized in discussion and that led to our decision.

Dissociating instrumental versus non-instrumental utility. To support the main conclusion that dACC does code for information value, not reward (i.e. dACC and vmPFC constitute an independent value system), one would need to show that dACC does not code for long-term reward. However, the key control analysis for ACC between information utility and inherent value is not convincing because of the assumptions it makes about people's beliefs (see comments by R1). Addressing this might require post-hoc assessments of participants' beliefs or collection of new data. One reviewer suggested an analysis of behavior along these lines:

- describe the task more clearly so that we can understand the generative process;

- find a way to calculate the objective instrumental value of information in the task (or the value that participants should have believed it had, given their instructions);

- fit a model to behavior to estimate subjective information value;

- check how much of the subjective value of information can be accounted for by its objective instrumental value.

Regardless of the approach taken, it was agreed that addressing this point is essential for the conclusions to be convincing.

Behavioral Fits. R3 raises some detailed concerns regarding the model fits that are serious and might impact interpretation of the model-based ROI analysis. As such, these are quite important to address. During discussion, one reviewer suggested that the problem might be that the omega and γ parameters are trading off and behavior can be fit equally well by both. This is resulting in extreme and variable fits across participants (you can see this in an apparent negative correlation between your omega and γ parameters; Spearman's rho = -0.57, p = 0.008). Other parameters are showing similar issues (α and β, for example). Among these are omega and β (correlation of about -.6), which will make separating reward and information difficult. This reviewer suggests that you consider analyzing the data in terms of the model's total estimated subjective value (i.e. -omega*I), which incorporates the effects of both the omega and the γ parameters. They also suggest that you consider reformulating the choice function to separate reward terms from information terms, for example, applying terms like β*Q – omega*I. Regardless of whether you take these suggestions, the model fitting and recoverability of the parameters appear to be a significant issue to address. At a minimum, some recoverability analysis and analysis of the correspondence between the model's and subjects' behavior will be required.
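As an illustration of the reformulated choice function mentioned above, the following is a minimal sketch and not the authors' gkRL implementation. Only the form β*Q – omega*I is taken from the letter; the softmax rule, the function name `choice_probabilities`, and all numbers are assumptions made for the example.

```python
import math

def choice_probabilities(q, info, beta, omega):
    """Softmax over beta*Q - omega*I for each deck (hypothetical sketch)."""
    # The reward term and the information term each get their own weight,
    # so beta and omega are not tied together through a shared temperature.
    values = [beta * qi - omega * ii for qi, ii in zip(q, info)]
    # Subtract the maximum before exponentiating for numerical stability.
    vmax = max(values)
    exps = [math.exp(v - vmax) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative numbers only: expected reward and information term for 3 decks.
q = [0.6, 0.4, 0.5]
info = [2.0, 0.0, 4.0]  # assumed to grow with how often a deck was sampled
probs = choice_probabilities(q, info, beta=3.0, omega=0.5)
reward_only = choice_probabilities(q, info, beta=3.0, omega=0.0)
```

With omega set to 0 the rule reduces to a purely reward-driven softmax, which makes the separate contribution of the information term easy to inspect in simulation or recovery analyses.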

- Support for two independent systems. Several comments from reviewers highlighted issues that diminish support for the core separable system finding. These are well summarized in R2's comments, but they center around the definition of reward and information terms, correlations between information and reward, no test for a double dissociation, and the lack of control for other correlated variables.

The reviews contain many suggestions of ways these issues might be addressed. However, in discussion, the reviewers suggested the alternate regressors for reward be included as:

1) second highest option's value (V2) – avg(value chosen, V3)

2) entropy over the three options' values (V1,V2,V3)

In addition to the following covariates:

1) minimum value of the three decks

2) maximum value of the three decks

3) average value of the three decks

4) RT
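The suggested regressors and covariates can be sketched for a single trial as follows. This is a hedged illustration under stated assumptions: V1 ≥ V2 ≥ V3 are taken to be the ranked expected values of the three decks, entropy is computed over a softmax of those values (the letter does not say how values are turned into probabilities), and the function name and `temperature` parameter are hypothetical. RT is omitted because it is measured rather than computed.

```python
import math

def alternative_regressors(deck_values, chosen_index, temperature=1.0):
    """Per-trial regressors and covariates for three decks (hypothetical sketch)."""
    ranked = sorted(deck_values, reverse=True)  # assumed ranking: V1 >= V2 >= V3
    v2, v3 = ranked[1], ranked[2]
    v_chosen = deck_values[chosen_index]

    # 1) second-highest value minus the average of chosen value and V3
    relative = v2 - (v_chosen + v3) / 2.0

    # 2) entropy over the three values, via a softmax (assumption)
    vmax = max(deck_values)
    exps = [math.exp((v - vmax) / temperature) for v in deck_values]
    total = sum(exps)
    probs = [e / total for e in exps]
    entropy = -sum(p * math.log(p) for p in probs if p > 0)

    return {
        "v2_minus_avg_chosen_v3": relative,
        "entropy": entropy,
        "min_value": min(deck_values),
        "max_value": max(deck_values),
        "mean_value": sum(deck_values) / len(deck_values),
    }

# Illustrative values only.
regs = alternative_regressors([50.0, 30.0, 70.0], chosen_index=2)
flat = alternative_regressors([1.0, 1.0, 1.0], chosen_index=0)
```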

They also emphasized that at least two models of brain activity should be considered: one that includes raw value (regressors for a chosen option's expected reward and information) and one that includes relative value (relative expected reward and information). These last approaches are detailed in the comments from reviewers.

- Novelty. R3's comments raised a concern regarding the novelty of the contribution on first reading. However, after discussion, this reviewer came around on this point and was persuaded of its novelty. Nonetheless, placing the present findings in the context of prior work locating dissociations between dACC and other brain regions is important. This reviewer has provided important guidance along these lines.

Reviewer #1:

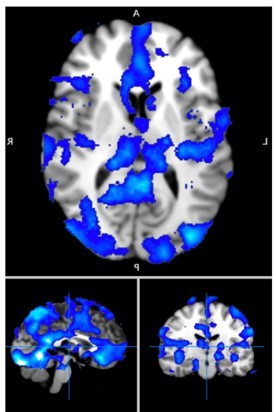

Cogliati Dezza and colleagues report fMRI findings showing independent systems for valuing reward vs information. They use an elegant task in which participants freely choose between three decks of cards, after having gone through a sequence of forced choice trials that control their sampling of the decks and prior information about their reward values. They operationally define information gain in terms of the number of times an option has been previously sampled (under the assumption that fewer samples = more information gain from the next sample) and show that people value both immediate reward and its informational gain. They interestingly show that simple univariate fMRI analysis of either information or reward would be confounded, but report that a multivariate analysis of activity related to the deck being chosen shows that vmPFC activations primarily track reward, dACC tracks information, and striatum tracks the choice probability reflecting its total value.

The key report that information and reward may be valued by separate neural systems in frontal cortex is clearly important and valuable for the field. This would be in contrast to recent studies which primarily reported areas that have similar responses for both information and reward value (Charpentier et al., PNAS 2018; Kobayashi and Hsu, PNAS 2019). This idea is plausible, appealing, and has strong implications for brain function. However, I have some concerns about whether the manuscript is fully convincing.

1. Figure 1 that sets up the paper would benefit from a clear organization where it shows in parallel the two hypotheses, the findings of univariate analysis (i.e. similar under both hypotheses), and crucially, also shows the simulated findings of the improved analysis+experiment advocated in this paper, in the same format (i.e. clearly different under the two hypotheses, presumably by showing only a non-zero red bar for the information system and only a non-zero blue bar for the reward system, and that the estimates of the two effects aren't negatively correlated).

This last simulation seems crucial since their current simulations show the flaws of bad approaches but do not show the validity of their current approach, which needs to be proven to know that we can trust the key findings in Figure 4.

Similarly, I would suggest retitling Figure 3 something like "APPARENT symmetrical opposition…" to make it clear that they are saying that the findings shown in Figure 3A,B are invalid. Otherwise nothing in this figure tells the reader that they are not supposed to trust them.

2. I greatly appreciate their control analysis on a very important point: how much of the information value in behavior and brain activity in this task is due to the information's instrumental utility in obtaining points from future choices (the classic exploration-exploitation tradeoff) vs. its non-instrumental utility (e.g. satisfying a sense of curiosity). It is important to understand which (or both) the task is tapping into, since these could be mediated by different neural information systems. If this dissociation is not possible, it would be important to discuss this limitation carefully in the introduction and interpretation.

I am not sure I understand the current control analysis, and am not fully confident this can be dissociated with this task. The logic of the control analysis is that if dACC is involved in long-term reward maximization its activity should be lower before choices of an unsampled deck in contexts when the two sampled decks gave relatively high rewards, presumably because the unsampled deck is less worth exploring. I think I see where they are coming from, but this is only obviously true if people believe each deck's true mean reward is drawn independently from the true mean rewards of the other two decks. However, this may not be the true setup of the task or how people understand it.

The paper's methods do not describe the generative distributions in enough detail for me to understand them (the methods describe how the final payoff was generated, but not how the numbers from the 6 forced choices were generated, or how the numbers from the forced choices relate to the final payoff). But in their previous interesting behavior paper they used different High Reward and Low Reward contexts where all decks had true means that were High or Low. If they did the same in this paper then I am not sure their control analysis would make sense, since the deck values would be positively correlated.

Broadly speaking, their logic seems to be that if people value information for its instrumental utility there should be a Reward x Information interaction so that they value information more for decks with high expected reward (potential future targets for exploitation) than low expected reward (not worth exploring). I am not sure if this would be strong evidence about whether information value is instrumental because it has been reported that people may also value non-instrumental information in relation to expected reward (e.g. Kobayashi et al., Nat Hum Behav 2019). But if they do want to use this logic, wouldn't it be simpler to just add an interaction term to their behavioral and neural models and test whether it is significant?

Also, their current findings seem to imply a puzzling situation where participants presumably do make use of the information at least in part for its instrumental value in helping them make future choices (as shown in a similar task by Wilson et al.), but their brain activity in areas sensitive to information only reflects non-instrumental utility. I am not sure how to interpret this.

However they address these, I have two suggestions.

First, whatever test they do to dissociate instrumental vs non-instrumental utility, it would be ideal if they can do it on both behavior and neural activity. That way they can resolve the puzzle because they can find which type of utility is reflected in behavior and then test how the same utility is reflected in brain activity.

Second, they apparently manipulated the time horizon (giving people from 1 to 6 free choices). If so, this nice manipulation could potentially test this, right? Time horizon has been shown by Wilson et al. to regulate exploration vs exploitation. The instrumental value of information gained by exploration should be higher for long time horizons, whereas the non-instrumental value of information (e.g. from satisfying curiosity) has no obvious reason to scale with time horizon.

3. They analyze the first free-choice trial because "information and reward are orthogonalized by the experiment design", but is this true? They say Wilson et al. showed this in their two-option task, but it is unclear whether it applies to this three-option task, particularly because they do not analyze activity associated with all decks, but rather in terms of the chosen deck, and participants chose decks based on both info and reward. Depending on a person's choice strategy the info and reward of the chosen deck may not be orthogonal, even though the info and reward considered over all possible decks they could choose are orthogonal.

Basically, it would be valuable to prove their point by including supplemental scatterplots with tests for correlation between whichever information and reward regressors they use in the behavioral model and in the neural model. If they do have some correlation, they should show their analysis handles them properly.
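The correlation check requested here could look like the following minimal sketch. It uses a plain Pearson correlation written out by hand (a rank-based Spearman correlation would be a reasonable alternative); the function name and the example regressor values are hypothetical and stand in for the per-trial reward and information regressors of the chosen deck.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length regressors."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Made-up regressors for four trials, for illustration only.
reward_regressor = [1.0, 2.0, 3.0, 4.0]
confounded_info = [4.0, 3.0, 2.0, 1.0]    # moves opposite to reward
orthogonal_info = [1.0, -1.0, -1.0, 1.0]  # uncorrelated with reward

r_confounded = pearson_r(reward_regressor, confounded_info)
r_orthogonal = pearson_r(reward_regressor, orthogonal_info)
```

Running this per participant on the regressors actually entered in the behavioral and neural models would show directly whether the chosen-deck terms remain orthogonal.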

4. I am a little confused by how they compare information value and reward value. In the model from their previous paper and used in this paper they say, roughly speaking, that the value of a deck = (expected reward value from the deck) + w*(expected information value from the deck). So, I thought that they would use the reward and information value from the chosen deck as their regressors. But they subtract the reward values of other decks from the reward term. I am not sure why. They cite studies saying that "VmPFC appears not only to code reward-related signals but to specifically encode the relative reward value of the chosen option", but if so, then if they want to test if vmPFC codes information-related signals shouldn't they also test if it encodes the relative information value? (Or does this not matter because I_{t,j}(c==1) is linearly related to I_{t,j}(c==1) – mean(I_{t,j}(c==2), I_{t,j}(c==3)) in this task so the findings would be the same in either case?). And conversely, if dACC encodes information value of a deck shouldn't they test if it encodes the reward value of that deck, instead of relative reward value? It's also confusing that in this paper and their previous paper they argue that

Basically I want to make sure the difference in vmPFC vs. dACC coding is truly due to reward vs information coding rather than relative vs non-relative coding.

Reviewer #2:

This is an interesting and well-motivated study, using a clever experimental design and model-based fMRI. The authors argue their results support two independent value systems in the prefrontal cortex, one for reward and one for information. Based on separate GLMs, the authors argue for a double dissociation, with relative reward (but not information gain) coded in vmPFC and information gain (but not relative reward) encoded in dACC. While I am sympathetic to this characterization, I have concerns about several of the key analyses conducted and their interpretation that, I believe, call into question the main conclusions of the study.

1. Correlations between relative reward and information gain terms. The methods state: "Because information and reward are expected to be partially correlated, the intent of GLMs was to allow us to investigate the effects of the second parametric modulator after accounting for variance that can be explained by the first parametric modulator." I had thought the purpose of the task design was to orthogonalize reward and information. What is the correlation between regressors and what is driving the correlation? Are these terms correlated because people often switch to choose options that have high expected reward but have also not been sampled as frequently, in the unequal information trials? If these terms were in fact experimentally dissociated, it should be possible to allow them to compete for variance within the same GLM without any orthogonalization. This is an important issue to address in order to support the central claims in the paper.
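The difference between letting two correlated regressors compete within one GLM and serially orthogonalizing the second against the first can be sketched as below. This is a toy two-regressor least-squares example with made-up data, not the authors' fMRI pipeline; the function names are hypothetical. The signal `y` is constructed to depend only on the second regressor.

```python
def center(x):
    m = sum(x) / len(x)
    return [a - m for a in x]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def simultaneous_betas(x1, x2, y):
    """Both regressors compete for variance (ordinary least squares)."""
    x1, x2, y = center(x1), center(x2), center(y)
    s11, s22, s12 = dot(x1, x1), dot(x2, x2), dot(x1, x2)
    s1y, s2y = dot(x1, y), dot(x2, y)
    det = s11 * s22 - s12 ** 2
    return ((s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det)

def serial_betas(x1, x2, y):
    """x2 is orthogonalized with respect to x1 before fitting."""
    x1, x2, y = center(x1), center(x2), center(y)
    k = dot(x1, x2) / dot(x1, x1)
    x2_orth = [b - k * a for a, b in zip(x1, x2)]
    # x1 and x2_orth are orthogonal, so each beta is a simple projection.
    return (dot(x1, y) / dot(x1, x1), dot(x2_orth, y) / dot(x2_orth, x2_orth))

# Illustrative data: the two regressors are correlated, y depends only on x2.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0]
y = list(x2)

b_simultaneous = simultaneous_betas(x1, x2, y)
b_serial = serial_betas(x1, x2, y)
```

In this toy case the simultaneous fit assigns the effect to the second regressor only, whereas serial orthogonalization also credits the first regressor with the variance the two share, which is why the order of modulators matters for interpretation.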

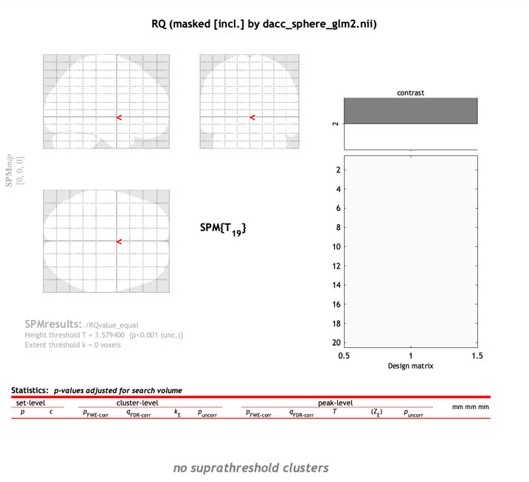

2. If dACC is specifically encoding the "information value" then there should be no effect of the relative value on equal information condition trials. By design, these trials should appropriately control for any information sampling difference between options. What is the effect of the negative relative value in the dACC on these equal information trials (or similar terms as defined in my next point)?

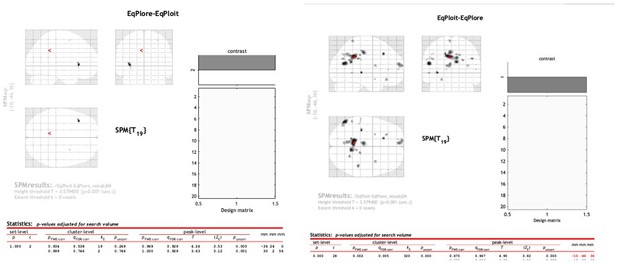

3. Relative value definition. In the analyses presented, the negative effect of relative reward in dACC is going in the predicted direction (negatively) and looks marginal. This may result from how the relative reward term was defined. What does the effect in dACC look like if relative reward is instead defined as V2 – V1, V2 – avg(V2,V3), or even entropy(V1,V2,V3)? A similar question applies to the vmPFC analyses. Did the relative value defined as Vc – avg(V2, V3) explain vmPFC activity better than Vc – V2 or even -entropy(V1,V2,V3)?

4. Double dissociation. The authors are effectively arguing that their data support a double dissociation between vmPFC encoding relative reward but not information gain and dACC encoding information gain but not relative reward, but this is not directly tested. This claim can be supported by a statistical interaction between region and variable type. To test this hypothesis appropriately, the authors should therefore perform an F-test on the whole brain or a rmANOVA on the two ROIs, using the β coefficients resulting from a single GLM.
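For two ROIs and two regressors, the region-by-variable interaction can be sketched as a one-sample t-test on a per-subject difference of differences; in a fully within-subject 2×2 design the interaction F(1, n–1) equals the square of this t. The following is a minimal sketch under that assumption, with made-up β coefficients and a hypothetical function name.

```python
import math

def interaction_t(vmpfc_reward, vmpfc_info, dacc_reward, dacc_info):
    """t statistic and df for the region x variable interaction (2x2, within-subject)."""
    # Per-subject contrast: (reward - info in vmPFC) - (reward - info in dACC).
    contrast = [
        (vr - vi) - (dr - di)
        for vr, vi, dr, di in zip(vmpfc_reward, vmpfc_info, dacc_reward, dacc_info)
    ]
    n = len(contrast)
    mean = sum(contrast) / n
    variance = sum((c - mean) ** 2 for c in contrast) / (n - 1)
    t = mean / math.sqrt(variance / n)
    return t, n - 1

# Made-up beta coefficients for four subjects, for illustration only.
vmpfc_reward = [1.0, 1.2, 0.8, 1.1]
vmpfc_info = [0.1, 0.0, 0.2, -0.1]
dacc_reward = [0.0, 0.1, -0.1, 0.2]
dacc_info = [0.9, 1.1, 1.0, 0.8]

t_value, df = interaction_t(vmpfc_reward, vmpfc_info, dacc_reward, dacc_info)
f_value = t_value ** 2  # equivalent interaction F with (1, df) degrees of freedom
```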

5. Information gain or directed exploration (or both)? Based on the equal and unequal information conditions that were all modeled in the GLMs, it seems likely that the information gain would be high (0 or 2 samples in the unequal information trials) only when subjects choose the more uncertain option, which is equivalent to directed exploration (provided the relative reward is controlled for). Conversely, it would be low when subjects choose the better-known option and are therefore exploiting known information, and intermediate in the equal information condition. Thus, would it be fair to say that the activity in dACC could equally be driven by directed exploration decisions (also equivalent to switching away from a better known "default" option), rather than necessarily "coding for information gain" per se? One way to address this is to show that the information gain is still reflected when subjects choose the more sampled options, or to include an additional explore/exploit regressor in the GLM.

6. Background literature in the introduction. Some of the assertions concerning vmPFC and dACC function in the introduction could be further queried.

For example, the introduction states: "In general, vmPFC activity appears to reflect the relative reward value of immediate, easily-obtained, or certain outcomes, while dACC activity signals delay, difficulty, or uncertainty in realizing prospective outcomes." While I do agree that some evidence supports a distinction between current outcomes in vmPFC and prospective outcomes in dACC, there is considerable evidence that vmPFC only reflects the relative chosen value for trials in which a comparison is more difficult and integration amongst attributes is required. For example, in both fMRI and MEG studies, the vmPFC effect of value difference goes away for "no-brainer" decisions in which both the reward probability and the magnitude are greater (dominant) for one of the options, rendering integration unnecessary and the decision easier – see Hunt et al., Nature Neurosci. 2012, PLoS CB, 2013; Jocham et al., Nature Neurosci., 2014. In addition, vmPFC is typically found to reflect relative subjective value in inter-temporal choice studies (e.g. Kable and Glimcher, Nature Neurosci., 2007; Nicolle et al., Neuron., 2011, etc.). Interestingly, vmPFC does not show relative value effects for physical effort-based decisions, whereas the dACC does (see Prevost et al., J. Neurosci. 2010; Lim et al., Klein-Flügge et al. J. Neurosci., 2016; Harris and Lim, J. Neurosci., 2016). Thus, vmPFC relative value effects are observed for difficult decisions requiring integration and comparison, including for uncertain and delayed outcomes, but potentially not when integrating effort-based action costs. This is also consistent with lesion work in humans and monkeys (Rudebeck et al., J. Neurosci., 2008; Camille et al., J. Neurosci., 2011).

In addition, there is considerable evidence from single unit recording to support the interpretation that ACC neurons do not only encode information gain or information value but integrate multiple attributes into a value (or value-like) effect, even in the absence of any possibility for exploration and, thus, information gain. For example, Kennerley et al., JoCN 2009, Nature Neurosci. 2011 have shown that ACC neurons, in particular, multiplex across multiple variables including reward amount, probability, and effort such that neurons encode each attribute aligned to the same valence (e.g. positive value neurons encode reward amount and probability positively and effort negatively, and negative value neurons do the opposite), consistent with an integrated value effect, even in the absence of any information gain. This literature could be incorporated into the Introduction, or the assumptions toned down some.

7. Experimental details. Some key details appear to be omitted in the manuscript or are only defined in the Methods but are important to understanding the Results. For instance:

– The information gained is defined as follows: "negative value relates to the information to be gained about each deck by participants". However, it is unclear which choice (deck) this refers to. I assume it is for the chosen deck on the first free choice trial? Or is it the sum/average over the decks? This should be made clearer.

– It would be helpful if the relative reward and information gain regressors were defined in the main text.

– What details were subjects told about the true generative distributions for reward payouts?

– What do subjects know about the time horizon, which is important for modulating directed exploration? This could assist in interpreting the dACC effect in particular. Indeed, manipulating the time horizon was a key part of the Wilson et al. original task design and would assist in decoupling the reward and information, if it is manipulated here.

– How are the ROIs defined? If it is based on Figure 2F, the activation called dACC appears to be localized to pre-SMA. This should therefore be re-labelled or a new ROI should be defined.

8. Brain-behavior relationships. If dACC is specifically important for information gain computations, then it would follow that those subjects who show more of a tendency for directed exploration behaviorally would also show a greater neural effect of information gain in ACC and vice versa for vmPFC and relying on relative reward values. Is there any behavioral evidence that can further support the claims about their specific functions?

9. Reporting of all GLM results. It would be helpful to produce a Table showing all results from all GLMs. For example, where else in the brain showed an effect of choice probability, etc.?

Reviewer #3:

The manuscript describes an fMRI study that tests whether vmPFC and dACC independently code for relative reward and information gain, respectively, suggesting an independent value system. The paper argues that the two variables have been confounded in previous studies, leading researchers to advocate for a single value system in which vmPFC and dACC serve functionally opposed roles, with vmPFC coding for positive outcome values (e.g. reward) and dACC coding for negative outcome values (e.g. difficulty, costs or uncertainty). The study deploys a forced-choice and a free-choice version of a multi-armed bandit task in which participants select between three decks to maximize reward. It also includes an RL model (gkRL) that is fit to participants' behavior. Variables of the fitted model are used as GLM regressors to identify regions with activity related to relative reward, as well as information gain. The study finds symmetrical activity in vmPFC and dACC (e.g. vmPFC correlates positively, dACC negatively with reward) if correlations between relative reward and information gain are not taken into account. In contrast, the study finds that vmPFC and dACC independently code for relative reward and information gain, respectively, if confounds between reward and information gain are accounted for in the analysis. The manuscript concludes that these findings support an independent value system as opposed to a dual value system of PFC.

I found this paper to be well-written and consider it to be an interesting addition to a rich literature on neural correlates of information. However, I got the impression that the manuscript oversells the novelty of its results and fails to situate itself in the existing literature (Comments 1-2). Moreover, some of the key references appear to be misrepresented in favor of the study's conclusions (Comment 3). While most of the methodology appears sound (though see Comment 6 in the statistical comments section), the RL model yields questionable model fits (Comment 4). The latter is troubling given that reported analyses rely on regressors retrieved from the RL model. Finally, the study may be missing a proper comparison to alternative models (Comment 5).

Below, I elaborate on concerns that will hopefully be useful in reworking this paper for a future submission.

1. Novelty of the findings: A main contribution of the paper is the finding that vmPFC and dACC code independently for reward and information gain, respectively, when accounting for a confound between reward and information. However, it should be noted that several other decision-making studies found a similar distinction between vmPFC and dACC by orthogonalizing reward value and uncertainty (e.g. Blair et al. 2006, Daw et al., 2006, Marsh et al. 2007, FitzGerald et al., 2009; Kim et al., 2014). In light of this work, the novelty distinction drawn in this manuscript appears exaggerated. Rather than advocating for functionally opposing roles between dACC and vmPFC, these articles support interpretations similar to the Dual Systems View. While the present manuscript includes a citation of such prior work ("other studies have reported dissociations between dACC and vmPFC during value-based decision-making [citations]", lines 52-53), the true distinction remains nebulous.

2. Relevance of the studied confound: I commend this study for addressing a confound between reward and information in multi-armed bandit tasks of this type. However, the manuscript overstates the generality of this confound, as a motivation for this study. A reader of the manuscript might get the impression that most of the relevant literature is affected by this confound, when in fact the confound only applies to a subset of value-based decision-making studies in which information can be gained through choice. It is unclear how the confound applies to decision-making studies in which values for stimuli cannot be learned through choice (e.g. preference-based decisions). A large proportion of decision-making research fits this description. It is not clear how value would be confounded with information in this domain. Finally, the study focuses on the *relative* value of options, whereas many studies do not (see the references above).

3. Mischaracterization of the literature: In Table 1, and throughout the text, the manuscript lists studies in which vmPFC and dACC are shown to be positively or negatively correlated with decision variables. It is then implied that these studies advocate a "Single Value System", i.e. that regions such as vmPFC and dACC constitute a "single distributed system that performs a cost-benefit analysis" (line 36). Yet, most of the cited work does not advocate for this interpretation. For instance, cited work on foraging theory (e.g. Rushworth et al., 2012) makes the opposite claim, suggesting that vmPFC and dACC are involved in different forms of decision-making. Furthermore, the cited study by Shenhav et al. (2016) neither explicitly promotes the interpretation that vmPFC and dACC show "symmetrically opposed activity", nor does it suggest that they are in "functional opposition" in value-based choice (lines 53-58). Instead, the authors of Shenhav et al. (2016) suggest that their findings support the view that vmPFC and dACC are associated with distinct roles in decision-making, such as vmPFC being associated with reward, and dACC being associated with uncertainty or conflict. Another cited study from Shenhav et al. (2019) also does not advocate for "emerging views regarding the symmetrically opposing roles of dACC and vmPFC in value-based choice" (line 336). Instead, the cited article draws a strong distinction between (attentionally-insensitive) reward encoding in vmPFC vs. (attention-dependent) conflict coding in dACC.

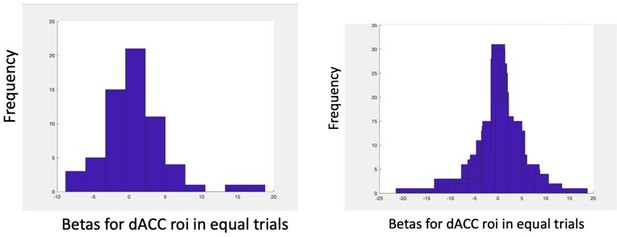

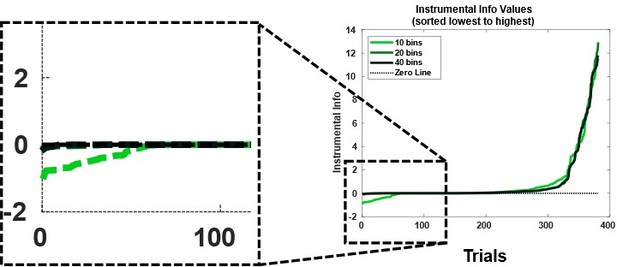

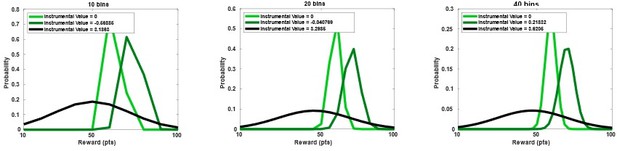

4. Model fits: The study's main fMRI results (e.g. that dACC independently codes for information gain) are based on regressors extracted from variables of the fitted RL model (e.g. information gain). Yet, more than a third of the participants yields model fits that could lead one to question the validity of the GLM regressors, in particular the regressor for information gain. One of the fitted model parameters, labeled "γ", exponentiates the history of previous observations to compute the amount of information associated with a deck. In line 420 it is written that "[γ] is constrained to be > 0". However, γ appears to be 0 for three out of twenty participants (6, 11, 14, see Table S2). If I understand the model correctly, then information associated with any deck (line 416) would evaluate to 1 for those participants, irrespective of which decks they observed. That is, the model fit suggests that these participants show no sensitivity to information across decks. A second parameter, omega, appears to scale how much information gain weighs into the final value for a deck (line 425). Again, if I understand correctly, the lower omega the less the RL agent weighs information gain when computing the final value associated with a deck. Thus, if omega evaluates close to 0, the agent would barely consider information gain when choosing a deck based on its final value. Given this, I am surprised that omega varies vastly in magnitude across participants, ranging from 0.26 to 48.66. Four out of twenty participants (1, 3, 19, 20, see Table S2) have fitted values for omega smaller than 1. This makes one question whether these participants actually use information to guide their decision making, relative to other participants. Relatedly, I was a bit surprised that the GLM implements – I_{t,j}(c) as regressor for information gain, instead of – omega * I_{t,j}(c). 
If omega is small, then the former regressor may not be a good proxy for information gain that is actually used to guide decision-making. Altogether, these model fits (seven out of twenty participants) may undermine the validity of "information associated with a deck" when used as a regressor for information gain in the fMRI analysis (at least in GLMs 2, 3 and 4).
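
The concern about γ and omega can be illustrated with a minimal sketch. It assumes, as I understand the model, that the information associated with a deck is the number of prior observations of that deck raised to the power γ. The function names and the value γ = 0.5 are hypothetical and are not taken from the authors' code; omega = 0.26 is the smallest fitted value reported above.

```python
def deck_information(n_observations, gamma):
    """Information associated with a deck, ASSUMED here to be n_observations ** gamma."""
    return n_observations ** gamma


def information_regressors(n_observations, gamma, omega):
    """Return the (unscaled, omega-scaled) candidate regressors for information gain."""
    info = deck_information(n_observations, gamma)
    return -info, -omega * info


# With gamma = 0, every deck carries the same information, whatever was observed.
flat = [deck_information(n, 0.0) for n in (0, 2, 4, 6)]

# With a small omega, the unscaled regressor overstates the term that enters choice.
unscaled, scaled = information_regressors(4, gamma=0.5, omega=0.26)
print(flat, unscaled, scaled)
```

Under this assumed form, a participant with γ = 0 contributes a constant information regressor, and a participant with a small omega contributes a regressor whose magnitude is much larger than the term that would enter the final value.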

5. Model comparison: In light of the previous comment, I wonder whether the gkRL model is best suited to explain behavior in this task, and, thus, whether it serves as a proper foundation for the intended fMRI analysis. As the manuscript notes in lines 408-409, the "model was already validated for this task and it was better able to explain participants' behavior compared to other RL models". However, this evaluation was conducted in a different study with (presumably) a different sample of participants. The manuscript should nevertheless provide a convincing argument for deploying this model to this study, as well as for using corresponding regressors in the fMRI analysis. This could be achieved by demonstrating that the model does a better job in explaining behavior compared to a standard RL model (without the information term) ‘for each of these participants’.

[Editors’ note: further revisions were suggested prior to acceptance, as described below.]

Thank you for resubmitting your work entitled "Independent and Interacting Value Systems for Reward and Information in the Human Brain" for further consideration by eLife. Your revised article has been evaluated by Michael Frank (Senior Editor) and a Reviewing Editor and the three reviewers who previously reviewed your paper.

The reviewers felt that the revised manuscript has been improved. Indeed, in some cases, the reviewers felt that revisions were extensive and commendable; for example, in addressing whether the information value is instrumental or non-instrumental.

However, there were several other fundamental points that were missed in the revision. The reviewers and reviewing editor discussed these points at length, and it was decided that most could be addressed in some form but simply were not. These include issues related to the placement of these results in the broader literature and characterization of previous studies, the definition of relative reward, the incorporation of the omega parameter in the information term, matching of covariates in the GLM, and a number of gaps in the added analyses, such as the switching versus default behavior. It was noted that some other issues like the orthogonalization approach or the confounding of the information term with other variables were also not addressed, and there was some discussion of whether these are addressable. Nonetheless, these outstanding issues were all viewed as important.

Thus, the consensus was to send this back for another revision. However, we must emphasize that if these points are not addressed in revision, the manuscript may not be considered acceptable for publication at eLife. I have included the full and detailed reviews from each reviewer in this letter so you can address them directly in your revision.

Reviewer #1:

The revised manuscript addresses some concerns raised by the reviews, by adding separate analyses that examine the effect of absolute versus relative reward and information gain, an analysis of parameter recovery and a formal comparison to a standard RL model. However, the revised manuscript still fails to address a number of critical issues:

1. Novelty: The revised manuscript attempts to distinguish its claims from prior work. However, the reader may still be confused about how the authors situate their findings in contrast to prior work. The manuscript seeks to promote an "independent value system for information in human PFC", in contrast to the single value system view that "vmPFC positively and dACC are negatively contributing to the net-value computation". The authors state that "[e]ven for studies in which dACC and vmPFC activity is dissociated activity in vmPFC is generally linked to reward value, while activity in dACC is often interpreted as indexing negative or non-rewarding attributes of a choice (including ambiguity, difficulty, negative reward value, cost and effort)". This statement requires further elaboration. Why can a non-rewarding attribute not be considered independent of reward, thus promoting a dual-systems view? That is, the reader might wonder: are the non-rewarding attributes of choice in the cited studies necessarily in opposition to reward, contributing to the same "net-value computation" and suggesting a symmetrical opposition? For some of these attributes, this is not necessarily the case (see Comment 2 for a specific example).

2. Mischaracterization of literature: The revised manuscript continues to mischaracterize the existing literature, suggesting that the studies of Shenhav et al. interpret ACC and vmPFC as participating in a single reward system, although the revision appears to partly avoid this characterization by dropping the Shenhav et al. (2018) reference. Regardless, there are several reasons why one should not interpret these studies as advocating for and/or interpreting their findings as supportive of a single-value system:

– As the authors of this manuscript state in their response, the Expected Value of Control (EVC; 2013) framework suggests that certain forms of conflict or uncertainty may be considered when computing EVC. However, the 2016 and 2018 studies do consider forms of conflict and uncertainty that are not explicitly taken into account when computing the expected value of a control signal. For instance, in the 2018 study, EVC is expressed as a function of reward, not uncertainty or conflict (Equation 3):

EV_t = [Pr(Mcorrect) * Reward(MCorrect)] + [Pr(Ccorrect) * Reward(CCorrect)]

– Irrespective of the way in which the EVC is computed, EVC theory neither suggests that dACC activity is reflective of a single value, nor does it explicitly propose that dACC activity correlates with EVC. Shenhav et al. (2013, 2016) communicate this in their work.

– It is worth noting that Shenhav et al. (2014, 2018b, 2019; see references below) make the case that dACC vs. vmPFC are engaged in different kinds of valuation (with dACC being more tied to uncertainty and vmPFC being more tied to reward). The authors may want to consider this work when situating their study in the existing literature (see Comment 1):

Shenhav, A. and Buckner, R.L. (2014). Neural correlates of dueling affective reactions to win-win choices. Proceedings of the National Academy of Sciences 111(30): 10978-10983

Shenhav, A., Dean Wolf, C.K., and Karmarkar, U.R. (2018). The evil of banality: When choosing between the mundane feels like choosing between the worst. Journal of Experimental Psychology: General 147(12): 1892-1904.

Shenhav, A. and Karmarkar, U.R. (2019). Dissociable components of the reward circuit are involved in appraisal versus choice. Scientific Reports 9(1958): 1-12.

– Finally, the statements made in Shenhav et al. simply do not express a single-value system view, as erroneously suggested by the authors of this manuscript. For the convenience of the authors, reviewers and editors, I attached excerpts from the Discussion sections of the relevant 2016 and 2018 articles below.

That is, the manuscript continues to mischaracterize these studies when stating that they interpret ACC and vmPFC as a single value reward system. I respectfully ask the authors to consider these points in their current and future work.

3. Model fits: I commend the authors for including an analysis of parameter recoverability as well as a model comparison with a standard RL model. However, the model comparison should indicate which trials were included in the analysis (all first free choices, as used for parameter estimation?). As indicated in my previous review, the analysis should also report the outcome of this model comparison, separately for each participant (i.e. a table with BIC values for gkRL and standardRL, for each participant), so that the reader can evaluate whether gkRL is generally better (not just on average).
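
A per-participant table of this kind could be produced along the following lines. This is only a sketch: the log-likelihoods, trial counts and parameter counts below are placeholders chosen for illustration, not values from the manuscript, and the function names are hypothetical.

```python
import math


def bic(log_likelihood, n_params, n_trials):
    """Bayesian Information Criterion; lower values indicate a better trade-off."""
    return n_params * math.log(n_trials) - 2.0 * log_likelihood


def compare_models(fits, n_params_gkrl, n_params_rl):
    """Build one row per participant: (id, BIC gkRL, BIC standard RL, preferred model)."""
    rows = []
    for pid, (ll_gkrl, ll_rl, n_trials) in sorted(fits.items()):
        b_gkrl = bic(ll_gkrl, n_params_gkrl, n_trials)
        b_rl = bic(ll_rl, n_params_rl, n_trials)
        rows.append((pid, b_gkrl, b_rl, "gkRL" if b_gkrl < b_rl else "standardRL"))
    return rows


# Placeholder values only: participant -> (log-lik gkRL, log-lik standard RL, n trials).
example_fits = {1: (-60.0, -80.0, 128), 2: (-75.0, -76.0, 128)}
# The parameter counts (4 and 2) are assumptions made for this illustration.
table = compare_models(example_fits, n_params_gkrl=4, n_params_rl=2)
for pid, b_gkrl, b_rl, preferred in table:
    print(f"{pid:>3}  {b_gkrl:8.2f}  {b_rl:8.2f}  {preferred}")
```

Reporting the preferred model per row, rather than only the group mean, lets the reader see for how many participants the additional parameters of gkRL are justified.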

4. Subjective information gain: I apologize if I missed this, but the authors' response doesn't seem to address the following issue raised earlier: The analysis refrains from taking into account the *subjective* information gain that is actually factored into the model's choice. As re-iterated by the editor, I was a bit surprised that the GLM implements – I_{t,j}(c) as regressor for information gain, instead of – omega * I_{t,j}(c). If omega is small, then the former regressor may not be a good proxy for information gain that is actually used to guide decision-making. This issue was implicitly raised in comment 8 of Reviewer 2, to which the authors replied that "all the neural activity relates to subjects' behavior". The claimed brain-behavior relationship would be more internally valid if the participant-specific parameter omega is considered in the analysis.

5. Consideration of Covariates: The authors state that "Standard Deviation and Chosen-Second were highly correlated with RelReward" and "[f]or this reason, these covariates were not considered any further". Does this mean that these covariates are confounded with relative reward, and that the authors cannot dissociate whether vmPFC encodes Standard Deviation (as the authors' proxy for decision entropy) or Chosen-Second? Also, why aren't all covariates included when examining the effect of information gain (both relative and absolute) on both vmPFC and dACC activity, as well as the effect of expected reward? It would seem appropriate to include at least non-correlating covariates in all analyses to allow for a proper comparison across GLMs. Furthermore, it would seem reasonable to include RT as a covariate despite it not being correlated with information gain. First, information gain is not the only factor being considered. Second, a lack of statistical correlation with information gain doesn't imply that RT cannot explain additional variance when included in the GLM.

Excerpts of Discussion from

Shenhav, A., Straccia, M. A., Botvinick, M. M., and Cohen, J. D. (2016). Dorsal anterior cingulate and ventromedial prefrontal cortex have inverse roles in both foraging and economic choice. Cognitive, Affective, and Behavioral Neuroscience, 16(6), 1127-1139:

"Although these findings collectively paint a picture inconsistent with a foraging account of dACC, it is important to note that the difficulty-related activations we observed lend themselves to a number of possible interpretations. These include accounts of dACC as monitoring for cognitive demands such as conflict/uncertainty (Botvinick, Braver, Barch, Carter, and Cohen, 2001; Cavanagh and Frank, 2014), error likelihood (Brown and Braver, 2005), and deviations from predicted response-outcome associations (Alexander and Brown, 2011); indicating the aversiveness of exerting the associated cognitive effort (Botvinick, 2007); explicitly comparing between candidate actions (e.g., Hare, Schultz, Camerer, O'Doherty, and Rangel, 2011); and regulating online control processes (Dosenbach et al., 2006; Posner, Petersen, Fox, and Raichle, 1988; Power and Petersen, 2013). In line with a number of these accounts, we recently proposed that dACC integrates control-relevant values (including factors such as reward, conflict, and error likelihood) in order to make adjustments to candidate control signals (Shenhav, Botvinick, and Cohen, 2013). In this setting, one of multiple potentially relevant control signals is the decision threshold for the current and future trials, adjustments of which have been found to be triggered by current trial conflict (i.e., difficulty) and mediated by dACC and surrounding regions (Cavanagh and Frank, 2014; Cavanagh et al., 2011; Danielmeier, Eichele, Forstmann, Tittgemeyer, and Ullsperger, 2011; Frank et al., 2015; Kerns et al., 2004). Our findings also do not rule out the possibility that dACC activity will in other instances track the likelihood of switching rather than sticking with one's current strategy – as has been observed in numerous studies of default override (see Shenhav et al., 2013) – over and above signals related to choice difficulty. 
However, our results do suggest that a more parsimonious interpretation of such findings would first focus on the demands or aversiveness of exerting control to override a bias, rather than on the reward value of the state being switched to.

Another recent study has questioned the necessity of dACC for foraging valuation by showing that this region does not track an analogous value signal in a delay of gratification paradigm involving recurring stay/switch decisions (McGuire and Kable, 2015). Instead, this study found that vmPFC played the most prominent evaluative role for these decisions (tracking the value of persisting toward the delayed reward). The authors concluded that vmPFC may therefore mediate evaluations in both foraging and traditional economic choice. Our study tested this assumption directly within the same foraging task that was previously used to suggest otherwise. We confirmed that vmPFC tracked relative chosen value similarly in both task stages. As is the case for our (inverse) findings in dACC, there are a number of possible explanations for this correlate of vmPFC activity, over and above the salient possibility that these activations reflect the output of a choice comparison process (Boorman et al., 2009; Boorman et al., 2013; Hunt et al., 2012). First, it may be that vmPFC activity is in fact tracking ease of choice or some utility associated therewith (cf. Boorman et al., 2009), such as the reward value associated with increased cognitive fluency (Winkielman, Schwarz, Fazendeiro, and Reber, 2003). Similarly, it may be the case that this region is tracking confidence in one's decision (possibly in conjunction with value-based comparison), as has been reported previously (De Martino, Fleming, Garrett, and Dolan, 2013; Lebreton, Abitbol, Daunizeau, and Pessiglione, 2015). A final possibility is that this region is not tracking ease or confidence per se, but a subtle byproduct thereof: decreased time spent on task. 
Specifically, it is possible that a greater proportion of the imposed delay period in this study and in KBMR was filled with task-unrelated thought when participants engaged with an easier choice, leading to greater representation of regions of the so-called "task-negative" or default mode network (Buckner, Andrews-Hanna, and Schacter, 2008), including vmPFC. While this is difficult to rule out in the current study, our finding of similar patterns of vmPFC activity in our previous experiment (which omitted an imposed delay) counts against this hypothesis. These possibilities notwithstanding, our results at least affirm McGuire and Kable's conclusion that the vmPFC's role during foraging choices does not differ fundamentally from its role during traditional economic choices."

Excerpts of Discussion from

Shenhav, A., Straccia, M. A., Musslick, S., Cohen, J. D., and Botvinick, M. M. (2018). Dissociable neural mechanisms track evidence accumulation for selection of attention versus action. Nature communications, 9:

"Our findings within dACC are consistent with previous proposals that this region signals demands for cognitive control (e.g., conflict, error likelihood [34,56,57]) and that these demands may be differentially encoded across different populations within dACC [44,45]. Most notably, our findings are broadly consistent with the recent proposal that dACC signals such demands in a hierarchical manner [29,32,40] (cf. Refs. [14,58]). Specifically, it has been suggested that dACC contains a topographic representation of potential control demands, with more caudal regions reflecting demands at the level of individual motor responses and more rostral regions reflecting demands at increasing levels of abstraction (e.g., at the level of effector-agnostic response options). According to this framework, it is reasonable to assume that this rostrocaudal axis might encode uncertainty regarding which attribute to attend more rostrally than uncertainty regarding which response to select. Under the added assumption that our participants were heavily biased towards attending the high-reward attribute and became increasingly likely to attend the low-reward attribute as its coherence increased (cf. Figure 2) – potentially narrowing their relative likelihood of attending either stimulus and thereby increasing uncertainty over which attribute to attend – our findings could be interpreted as further evidence for such an axis of uncertainty. However, such an interpretation remains speculative in the absence of additional measures of attentional allocation (e.g., eyetracking within a task that uses spatially segregated attributes). Our findings may also be consistent with a more recent proposal that a similar axis within dACC tracks the likelihood of responses and outcomes (e.g., error likelihood) at similarly increasing levels of abstraction [33]. 
Collectively these accounts of the current findings are consistent with our theory that regions of dACC integrate information regarding the costs and benefits of control allocation (including traditional signals of control demand) in order to adaptively adjust control allocation [28,34].

The dACC signals we observed are also consistent with evaluation processes unrelated to control per se, indicating for instance the costs of maintaining the current course of action in caudal dACC and the value of pursuing an alternate course of action (cf. foraging) in rostral dACC [18,19]. The connection between rostral dACC activity and choices to follow evidence for the low-reward attribute can be seen as further support for such an account (though this could similarly reflect adjustments of attentional allocation). Our current study is limited in adjudicating between these two accounts because increasing evidence in support of an alternative attentional target in our task (i.e., increased coherence of the low-reward attribute) necessarily leads to greater uncertainty regarding whether to continue to focus on the high-reward attribute. However, given that evidence for foraging-specific value signals in dACC remains inconsistent [34,37,59], an interpretation of our findings that appeals to cognitive costs or demands may be more parsimonious. That said, future studies are required to substantiate the current interpretation by demonstrating that the dACC's response to the would-be tempting alternative (the high-coherence low-reward attribute) decreases when the relative coherence and reward of the alternate attribute are such that the decision to switch one's target of attention is easy (cf. Ref. [31]).

In contrast to dACC, where activity tracked how little evidence was available to support the chosen response (i.e., to discriminate between the correct and incorrect response), vmPFC instead tracked the evidence in favor of the chosen response, in a manner proportional to the reward expected for information about each attribute. This finding is broadly consistent with previous findings in the value-based decision making literature, where vmPFC is often associated with the value of the chosen option and/or its relationship to the value of the unchosen option [60,61]. The fact that vmPFC's weights on these attributes were not proportional to the weight each attribute was given in the final decision suggests that vmPFC may have played less of a role in determining how this information was used to guide a response, than in providing an overall estimate of expected reward. In addition to any incidental influence it may have on the perceptual decision on a given trial, this reward estimate could provide a learning signal about the task context more generally (e.g., overall reward rate [24,48,49] or confidence in one's performance [50,51]), consistent with our observation that this region encodes elements of reward expected from a previous trial. While our findings are suggestive, the degree to which vmPFC guides and/or is guided by decisions regarding what to attend deserves further examination within studies that measure attention allocation while systematically varying reward as well as the degree of control one has over one's outcomes (versus, for instance, being instructed what to attend). It will also be worth directly contrasting vmPFC correlates of attribute evidence when attention is guided by reward (as in the current study) versus instruction (e.g., Ref. [3])."

Reviewer #2:

The authors propose that there are "dedicated" and "independent" reward value and information value systems driving choice in the medial frontal cortex, respectively situated in vmPFC and dACC. This is a major claim that would be highly significant in understanding the neural basis of decision making, if true, and therefore commendable. In their revision, the authors clarified several important points. However, I am afraid that their response to my and the other reviewers' previous concerns has not convinced me that their data clearly support this claim. While some of my previous comments were addressed, other concerns were mostly unaddressed.

The authors have designed a task that builds on a similar task from Wilson et al. (2014) that set out to experimentally dissociate reward difference and information difference between choice options. However, rather than use those terms for the fMRI analysis and/or focus separately on conditions in which these terms are experimentally dissociable (such as considering equal and unequal information trials separately), the way in which the relative reward and information terms are defined for the fMRI analysis introduces a significant confound between the relative reward and information gain terms (as the authors themselves point out in the manuscript and Figure 1). Apparently, this is done because the design may not be sufficiently well powered to focus on each condition separately. The approach adopted to try to deal with this confound is instead to estimate separate GLMs (3 and 4) and orthogonalise one term with respect to the other in reverse order. This approach does not appear to "control for the confound", but instead assigns all shared variance to the un-orthogonalised term. That is, the technique does not affect the results of the orthogonalized regressor but instead assigns any shared variance to the unorthogonalised regressor (see e.g. Andrade et al., 1999). Thus, in their analysis of relative reward, the relative reward regressor is given explanatory power that might derive from information value, and in the analysis for information value, the reverse is true. Notwithstanding the interesting results from their simulations, this approach makes the interpretation of the β coefficients and the claim of independence challenging, particularly if one of the scenarios included in the simulations (independent reward value and information systems, or single value system) does not accurately capture the true underlying processes in the brain. 
More generally, because the two terms are highly correlated, the model-based approach across all trials requires that their computational model can precisely identify each term of interest accurately and independently. This is the same problem noted in other previous studies of exploration/exploitation that experimentally dissociating relative reward and relative information was supposed to address (at least as I understood it).
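
The point about orthogonalization can be checked with a small simulation. The sketch below uses simulated data and hypothetical variable names, not the authors' design matrices: a signal is generated from the information regressor only, the information regressor is orthogonalised with respect to a correlated reward regressor, and the resulting coefficients are compared with those of the unorthogonalised model.

```python
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def demean(v):
    m = sum(v) / len(v)
    return [x - m for x in v]


def ols2(x1, x2, y):
    """Coefficients (b1, b2) of y ~ b1*x1 + b2*x2, via the 2x2 normal equations."""
    a, b, d = dot(x1, x1), dot(x1, x2), dot(x2, x2)
    p, q = dot(x1, y), dot(x2, y)
    det = a * d - b * b
    return (d * p - b * q) / det, (a * q - b * p) / det


def orthogonalize(target, wrt):
    """Remove from `target` the part that is linearly predictable from `wrt`."""
    scale = dot(wrt, target) / dot(wrt, wrt)
    return [t - scale * w for t, w in zip(target, wrt)]


rng = random.Random(0)
n = 500
reward = [rng.gauss(0, 1) for _ in range(n)]
info = [-0.7 * r + 0.5 * rng.gauss(0, 1) for r in reward]  # correlated with reward
signal = [i + rng.gauss(0, 1) for i in info]               # driven by info only
reward, info, signal = demean(reward), demean(info), demean(signal)

b_reward_full, b_info_full = ols2(reward, info, signal)
b_reward_orth, b_info_orth = ols2(reward, orthogonalize(info, reward), signal)
b_reward_alone = dot(reward, signal) / dot(reward, reward)
print(b_reward_full, b_reward_orth, b_reward_alone, b_info_full, b_info_orth)
```

In this simulation the coefficient of the orthogonalised information regressor is identical to its coefficient in the unorthogonalised model, whereas the reward coefficient takes the value it would have if reward were entered alone. That is, reward is credited with variance that, by construction, originates from information.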

Relative Reward Definition

As I stated in my previous review, the precise definition of relative reward is important for determining what computation is driving the signals measured in vmPFC and dACC. Consideration of alternative terms was appropriately applied to analysis of vmPFC (following reviewer 1 and my suggestions), but not to analysis of dACC activity. Specifically, my suggestions to compare different relative value terms, specifically V2-E(V2,V3) and entropy(V1,V2,V3) (definitions that are based on dACC effects in past studies) for dACC activity were not performed (or reported). I suspect one of these would show an effect in dACC, but it's not possible to tell because they did not address the question or show the results. Importantly, as I pointed out previously, there is evidence in their Figure 4E for a marginal? negative effect of the relative reward term they did test in the dACC ROI. This suggests that getting the term's formulation correct could easily result in a significant negative effect. If there were an effect of either of these alternative terms in dACC, it would go against one of the central claims of the paper. In the authors' reply, rather than test these alternatives, they seemed to simply say they did not know what my comment had meant.

Information Value Confounds

In their task design the information term (I(c)) is directly proportional to the number of times an item is chosen during forced choice trials. Therefore, this term is highly correlated with the novelty/familiarity of that option. The authors clarified that they did not cue the subject about the horizon (as done in a previous task by Wilson et al. 2014). The authors state that cueing the horizon did not matter in prior behavioral studies of their task (which is somewhat surprising if subjects are engaging in directed exploration), but in any case this means that the number of samples is the only variable determining the "information value". Had the horizon been cued, then one could at least test whether dACC activity increased with the planning horizon, since there would be an opportunity to benefit from exploration when this was greater than 1, and this manipulation would be useful because it would mean I(c) was not solely determined by the number of times an option had been chosen in forced choice trials and therefore highly correlated with familiarity/novelty of a deck.

Importantly, the I(c) term also appears to be confounded by the choice of the subject. As I noted in my previous review comment, because the I(c) term is only modeled at the first free choice, its inverse (which is what is claimed to be reflected in dACC activity) will be highest whenever the subject explores. Therefore, the activity in dACC could instead be related to greater average activity on exploratory compared to exploitative choices, as has been suggested previously, without any encoding of "information value" per se. For this reason I suggested including the choice (exploratory vs exploitative or switching away from the most selected "default" option) as a covariate of no interest to account for this possibility. This comment was not directly addressed. Instead, the authors make a somewhat convoluted argument that switching away from a default in their task corresponds to not exploring, since explore choices were the more frequent free choices in their task (though this would not be the case for the current game, where the forced choices would likely determine the current "default" option). If the dACC is in fact encoding the "information value" then this effect should remain despite inclusion of the explore/exploit choice, meaning it should be present on other trials when they do not choose to explore. This concern was not addressed, although it is unclear if their experimental design provides sufficient independent variance to test this on exploit trials.

As I pointed out in my original review, if the dACC shows no effect of relative value (or the alternative terms described above) as claimed, then there should be no effect of the relative value on the "equal information" trials. The authors do report no significant effects at a p<0.001 whole-brain threshold on these trials, which they acknowledge may be underpowered. It would be more convincing to show this analysis in the dACC ROI that is used for other analyses. A significant effect on these trials that experimentally dissociate relative reward from information would also run counter to one of the main claims in the paper.

For the above reasons, I do not believe that my previous concerns were addressed, or that the data support some of the central claims of the manuscript.

References:

Andrade A, Paradis AL, Rouquette S, Poline JB (1999) Ambiguous results in functional neuroimaging data analysis due to covariate correlation. Neuroimage 10:483-486.

Reviewer #3:

This is a greatly improved manuscript. The authors have done substantial work thoroughly revising their manuscript including carefully addressing almost all of my original comments. These revisions have made the paper considerably more methodologically solid and clarified their presentation, and have even allowed them to uncover additional results that strengthen the paper, such as providing evidence that ACC activity related to information is especially related to the non-instrumental value of information.

I do have a few questions and concerns for the authors to address regarding the methodological details of some of their newly added analyses (comments 1-4). I also have some suggestions to improve their presentation of the results to help readers better understand them (comments 5-6 and most minor comments).

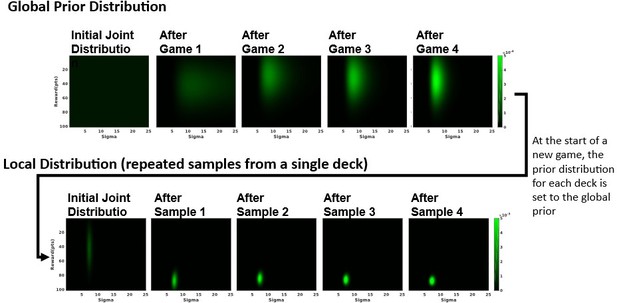

1. The authors have included a new analysis that nicely addresses my comments about the need to distinguish between neural signals encoding Instrumental vs Non-Instrumental Information. I greatly appreciate them developing the quite painstaking and elaborate computations necessary to estimate the instrumental value of information in this task! Furthermore, this led them to report a very interesting result that supports their big picture message, that ACC activity is most related to their estimate of Information Gain from their gkRL model of behavior, while vmPFC is most related to their estimate of Instrumental Information. This is very striking, and if true, is an important contribution to our understanding of how neural systems value information.

I have a few comments about this:

- Overall, the whole approach is explained very clearly. It appears methodologically solid, strongly motivated, and well-founded in Bayesian decision theory.

There is one missing step that needs to be explained, though: the final step for how they use the Bayes-optimal long term values for each option (which I will call "Bayes Instrumental Value") to compute the Instrumental Information which they used as a regressor. I don't quite understand how they did this. Reading the methods, it sounds almost as if they set Instrumental Information = Bayes Instrumental Value. If so, I don't think that would be correct, since then a large component of "Instrumental Information" would actually just be the ordinary expected gain of reward from choosing an option (e.g. based on prior beliefs about option reward distributions and on the mean experienced rewards from that option during the current game), not specifically the value of the information obtained from that option (i.e. the improvement in future rewards due to gaining knowledge to make better decisions on future trials). I worry that the authors might have done this, since their analysis suggests that Instrumental Information may be positively correlated with experienced rewards (e.g. it was correlated with similar activations in vmPFC as RelReward). It is also odd that "The percentage of trials in which most informative choices had positive Instrumental Information was ~ 22%" since surely the instrumental value of information in this task should almost always be at least somewhat positive and should only be zero if participants know they are at the end of the time horizon, which should never occur on first free choice trials.

In general, a simple way to compute the instrumental value of information (VOI) in decision problems is to take the difference between the total reward value of choosing an option including the benefit of its information (i.e. the Bayes Instrumental Value that the authors have already computed) minus the reward value the option would have had if it provided the decision maker with the same reward but did not provide any information (which the authors may be able to calculate by re-running their Bayesian procedure with a small modification, such as constraining the model to not update its within-game belief distributions based on the outcome of the first free choice trial, thus effectively ignoring the information from the first free-choice trial while still receiving its reward). Thus, we would have a simple decomposition of value like this:

Bayes Instrumental Value = Reward Value Without Information + Instrumental Value of Information
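To make this decomposition concrete, here is a minimal sketch in a toy problem that is not the authors' task: two trials, one option with a known mean reward, and one Bernoulli option with a Beta(a, b) prior. The function names and the toy setup are assumptions made purely for illustration. The "without information" value delivers the same expected first-trial reward but does not let the learner update its beliefs before the second choice.

```python
from fractions import Fraction


def value_with_information(a, b, known_mean):
    """Expected two-trial reward when the uncertain option is sampled first
    and its outcome updates the Beta(a, b) belief before the second choice."""
    p_reward = Fraction(a, a + b)                   # prior mean of the uncertain option
    mean_after_reward = Fraction(a + 1, a + b + 1)  # posterior mean after a reward
    mean_after_no_reward = Fraction(a, a + b + 1)   # posterior mean after no reward
    # Second choice: pick whichever option has the higher (posterior) mean.
    future = (p_reward * max(known_mean, mean_after_reward)
              + (1 - p_reward) * max(known_mean, mean_after_no_reward))
    return p_reward + future


def value_without_information(a, b, known_mean):
    """Same first-trial reward, but beliefs are NOT updated (toy assumption)."""
    p_reward = Fraction(a, a + b)
    return p_reward + max(known_mean, p_reward)


def instrumental_voi(a, b, known_mean):
    """Instrumental Value of Information = value with info - value without."""
    return (value_with_information(a, b, known_mean)
            - value_without_information(a, b, known_mean))


voi = instrumental_voi(1, 1, Fraction(1, 2))
print(voi)  # 1/12
```

In this toy case the total value of 13/12 splits into a Reward Value Without Information of 1 and an Instrumental Value of Information of 1/12.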

- Relatedly, I was surprised by their fit to behavior that predicts choices based on Instrumental Information and Information Gain. Given the strong effects of expected reward on choice (Figure 2D), I naively would have expected them to include an additional term for reward value, in order to decompose behavior into three parts, "Reward Value Without Information" representing simple reward seeking independent of information, "Instrumental Value of Information" representing the component of information seeking driven by the instrumental demands of the task, and "Information Gain" representing the variable the authors believe corresponds to the non-instrumental value of information (or total value of information) reflecting the subjective preferences of individuals. The way I suggested above to compute Instrumental Information would allow this kind of decomposition.

- I suggest adding a caveat in the methods or discussion to mention that the Bayesian learner may not reflect each individual's subjective estimate of the instrumental value of information, since the task was designed to have some ambiguity allowing the participants to have different beliefs about the task, by not fully explaining the task's generative distribution.

2. The difference between the confounded analysis (Figure 3C) and non-confounded analysis (Figure 4E) is very striking and clear for vmPFC but is not quite as clear for ACC. It looks like the non-confounded analysis of ACC produces slightly less positive β for information and slightly less negative β for reward (from about -0.5 to about -0.35, though I cannot compare this data precisely because these plots do not have ticks on the y-axis). While it is true that the effect went from significant to non-significant, I am not sure how much this change supports the black-and-white interpretation in this paper that ACC BOLD signal reflects information completely independently of reward. It might support a less black-and-white (but still scientifically important and valuable) distinction that ACC primarily reflects information but may still weakly reflect reward. I understand it may not be possible to 'prove a negative' here, but it would be nice if the authors could show stronger evidence about this in ACC, e.g. by breaking down the 3-way ANOVA to ask whether removing the confound had significant effects in each area individually (vmPFC and ACC). Otherwise, it might be better to state the conclusions about ACC and information vs. reward in a less strictly dichotomous/independent way.

3. The analysis of switching strategy is a valuable control, and I agree with the authors on their interesting point that in this task the default strategy would appear to be information seeking rather than exploitation. Can the authors report the results for ACC? The paper seems to describe this section as providing evidence about what ACC encodes, so I was expecting them to report no significant effect of Switch-Default in ACC, but I only see results reported for frontopolar cortex.

4. The analysis of choice difficulty is also a very welcome control. My one suggestion for the authors to consider is also correlating chosen Information Gain with a direct index of choice difficulty according to the gkRL model of behavior that they fit to each participant (e.g. setting choice difficulty to be the difference between V of the best deck and V of the next-best deck). This would be more convincing to choice difficulty enthusiasts, because reaction times can be influenced by many factors in addition to choice difficulty, and previous papers arguing for a dACC role in choice difficulty (e.g. Shenhav et al., Cogn Affect Behav Neurosci 2016) define choice difficulty in terms of model-derived value differences (or log odds of choosing an option). The gkRL model is put forward as the best model of behavior in this task in the paper, so it would presumably give a more direct estimate of choice difficulty than reaction time.
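As a rough illustration of the index suggested in this point, the sketch below computes the gap between the best and next-best deck value on each trial. It assumes the fitted model's deck values are available as a trials-by-decks array; the function name `value_gap` and the example numbers are hypothetical and not taken from the paper.

```python
import numpy as np


def value_gap(values):
    """values: (n_trials, n_decks) array of model-derived deck values V.
    Returns V(best) - V(next best) per trial; smaller gaps mean harder choices."""
    ordered = np.sort(np.asarray(values, dtype=float), axis=1)
    return ordered[:, -1] - ordered[:, -2]


# Hypothetical deck values for two trials with three decks each.
V = np.array([[1.0, 0.2, 0.5],
              [0.3, 0.3, 0.1]])
gaps = value_gap(V)    # gap of 0.5 on the first trial, 0.0 on the second
difficulty = -gaps     # sign flipped so that higher values mean harder choices
```

The resulting per-trial index could then be correlated with chosen Information Gain, for instance with `np.corrcoef(difficulty, chosen_info_gain)[0, 1]`, where `chosen_info_gain` is a hypothetical per-trial array.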

5. One of the main messages of the paper is that correlations between reward and information may cause ACC and vmPFC to appear to have opposing activity under univariate analysis, even if ACC and vmPFC actually have independent activity under multivariate analysis.

On this point, the authors nicely addressed my first comment by greatly improving and clarifying Figure 1 showing their simulation results about this confound. The authors also addressed my third comment, which was also on this topic.

However, part of the way they explain this in the text could do with some re-phrasing, since in its current form it could be confusing for readers. Specifically, they make some statements as if information and reward were uncorrelated ("Both Wilson et al. and our task orthogonalize reward and information by adding different task conditions." and "the use of the forced-choice task allows to orthogonalize available information and reward delivered to participants in the first free choice trial") but make other statements as if they were correlated ("However, in order to better estimate the neural activity over the overall performance we adopted a trial-by-trial model-based fMRI analyses. Since reward value and information value are correlated due to subjects' choices during the experiment, this introduces an information-reward confound in our analysis.").

After puzzling over this and carefully re-reading the Wilson et al. paper I understand what they are saying. Here is what I think they want to say. On the first free-choice trial, if you consider all available options, an option's information and that option's reward are uncorrelated. However, if you only consider the chosen option (as most of their analyses do) that option's information and reward are negatively correlated.

If this is the case, the authors need to clarify their explanations, especially specifying when "information" and "reward" refer to all options or only to the chosen option. For example, instead of "orthogonalize available information and reward delivered to participants in the first free choice trial" I think it would be more clear and accurate to say "orthogonalize experienced reward value and experienced information for all available options on the first free-choice trial".

Relatedly, in my opinion this whole issue would be clearer if the authors took my earlier suggestion of simply showing a scatterplot of the two variables they claim are causing the problem due to their correlation (presumably "chosen RelReward" vs "chosen Information Gain"), for instance in Figure 1 or in a supplementary figure. This would be even more powerful if they put it next to a plot of the analogous variables from considering all options showing a lack of correlation (e.g. "all options RelReward" vs "all options Information Gain") thus demonstrating that the problematic correlation is induced by focusing the analysis on the chosen option. Then readers can clearly see what two variables they are talking about, how they are correlated, and why the correlation emerges. It seems critical to show this correlation prominently to the readers, since the authors are holding up this correlation, and the need to control for it, as a central message of the paper.
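The selection effect described in this point can be sketched with a small simulation. This is a toy model under loudly stated assumptions, not the authors' task or their gkRL model: reward and information are drawn independently for every option, and the simulated chooser picks by a softmax over their unweighted sum. The sample sizes, distributions and equal weighting are all arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials, n_options = 20000, 3

# Assumption: reward and information are independent across all options.
reward = rng.normal(size=(n_trials, n_options))
info = rng.normal(size=(n_trials, n_options))

# Assumption: softmax choice over reward + info, sampled by adding Gumbel
# noise to the utilities and taking the argmax (the Gumbel-max trick).
utility = reward + info + rng.gumbel(size=(n_trials, n_options))
chosen = np.argmax(utility, axis=1)
rows = np.arange(n_trials)

# Correlation over all available options versus over the chosen option only.
corr_all = np.corrcoef(reward.ravel(), info.ravel())[0, 1]
corr_chosen = np.corrcoef(reward[rows, chosen], info[rows, chosen])[0, 1]
print(f"all options: {corr_all:.3f}, chosen option: {corr_chosen:.3f}")
```

Under these assumptions the correlation across all options stays near zero, while restricting to the chosen option induces a negative correlation. The two arrays could be shown side by side as the pair of scatterplots suggested above.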

6. The paper has a very important discussion about how broadly applicable their approach to non-confounded analysis of decision variables is to the neuroscience literature. There is one part that seems especially important to me but may be hard for readers to understand from the current explanation:

"Furthermore, we acknowledge that this confound may not explicitly emerge in every decision-making studies such as preference-based choice. However, the confound in those decision types is reflected in subjects' previous experiences (e.g., the expression of a preference for one type of food over another are consistent, and therefore the subject reliably selects that food type over others on a regular basis). The control of this confound is even more tricky as it is "baked in" by prior experiences rather than learned over the course of an experimental session. Additionally, our results suggest that other decision dimensions involved in most decision-making tasks (e.g., effort and motivation, cost, affective valence, or social interaction) may also be confounded in the same manner."

Here is what I think the authors are saying: In their task, people made decisions based on both reward and other variables (i.e. information), which induced a correlation between the chosen option's reward value and its other variables. If the authors had analyzed neural activity related to the chosen option only in terms of reward value, they would have thought that ACC and vmPFC had negatively related activity to each other, and had opposite relationships to reward value. In effect, any brain area that purely encoded any one of the chosen option's variables (e.g. information) would appear to have activity somewhat related to all of the other decision-relevant variables. Thankfully, the authors were able to avoid this problem. However, crucially, they were only able to avoid this problem because their task was designed to manipulate the information variable. This let them model its effects on behavior, estimate the information gain on each trial, and do multivariate analysis to disentangle it from reward. Therefore, the same type of confound could be lurking in other experiments that analyze neural activity related to chosen options. This potential confound may be especially hazardous in tasks that are complex or that attempt to be ecologically-valid, since they may have less knowledge or control of the precise variables that participants are using to make their decisions, and hence less ability to uncover those hidden decision-relevant variables and disentangle them from reward value.

If this is what they are saying, then I agree that this is a very important point. I suggest giving a more in-depth explanation to make this clear. Also, I think it would be good to state that this confound only applies to analyses of options that have correlated decision-relevant variables (e.g. due to the task design, or due to the analysis including options based on the participant's choice, such as analysis of the chosen option or the unchosen option).

[Editors’ note: further revisions were suggested prior to acceptance, as described below.]

Thank you for resubmitting your work entitled "Independent and Interacting Value Systems for Reward and Information in the Human Brain" for further consideration by eLife. Your revised article has been evaluated by Michael Frank (Senior Editor) and a Reviewing Editor and three reviewers.

The revised manuscript has been greatly improved and after some discussion the reviewers agreed that the concerns had been addressed in a responsive revision. However, two points arose in the revision that, after consultation, the reviewers still felt should be addressed, at the very least with clarification and clear discussion of their strengths and weaknesses in the text. These were:

a) Reviewers were confused about why instrumental information was negative on the majority of trials, given that a Bayesian optimal learner was used. The concern is elaborated by a reviewer, as follows:

"I am still puzzled why they say the most informative deck only has positive Instrumental Information on 20% of trials and often has negative Instrumental Information.

The rebuttal is completely correct to point out that instrumental information can be negative. However, this happens when the decision maker is suboptimal at using information so it performs better without some information (e.g. the paper they cite uses examples like "not knowing whether a client is guilty could improve a solicitor's performance"). In this paper the formula is supposed to be using a Bayes optimal learner, that knows the structure of their task in more detail than the actual participants. A Bayes optimal learner should make optimal use of information, and should never be hurt by information, so instrumental information should always be positive or at least non-negative, right?

The rebuttal says that instrumental information can be negative in a specific condition in this task with unequal reward, unequal information, and the most informative option has lower reward value than the other options. However, I do not see why this should be the case. Providing information should never hurt a Bayesian with an accurate model of the task. Also, the specific condition should only be a fraction of trials, so it is surprising if something occurring on those trials could account for their report that 80% of the trials have zero or negative instrumental information. Finally, it is strange that instrumental information would be negative on those trials because they are ones where one deck has not been sampled and has low expected reward, so it seems to me that there is high instrumental value in learning from information that it has a low value. This information is needed for the participant to learn that the deck has a low value and should be avoided going forward; otherwise, if the participant did not learn anything from this information, they would be likely to choose it on their second choice. All of this makes me suspect a bug in the code."
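The reviewer's claim that information cannot hurt a Bayesian with an accurate model can be checked numerically in a generic one-shot decision problem. The sketch below is not the authors' Bayesian procedure. It assumes a discrete set of states, observations and actions, and asks only whether observing before acting can lower expected utility, with the reward structure held fixed. All names and array shapes are assumptions for illustration.

```python
import numpy as np


def value_of_information(prior, likelihood, utility):
    """prior: (S,) P(s); likelihood: (S, O) P(o | s); utility: (A, S) U(a, s)."""
    value_without = np.max(utility @ prior)   # best action under prior beliefs
    joint = prior[:, None] * likelihood       # (S, O) array of P(s, o)
    # For each observation, take the best action under the posterior, then
    # average over observations (the P(o) weights are already inside `joint`).
    value_with = np.sum(np.max(utility @ joint, axis=0))
    return value_with - value_without


rng = np.random.default_rng(1)
vois = []
for _ in range(1000):
    prior = rng.dirichlet(np.ones(4))                # 4 hidden states
    likelihood = rng.dirichlet(np.ones(3), size=4)   # 3 observations, rows sum to 1
    utility = rng.normal(size=(5, 4))                # 5 actions
    vois.append(value_of_information(prior, likelihood, utility))
print(min(vois) >= -1e-12)
```

The non-negativity follows because the average over observations of a maximum is never smaller than the maximum of the average. A negative value from a supposedly Bayes-optimal computation would therefore point to a different definition of the quantity or to an implementation issue.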

This issue is important to resolve. You might consider working through the computation with a simple example in the rebuttal letter.

b) The omega*I versus I distinction was still confusing to reviewers. This concern is detailed by the reviewer, as follows: