A simple regulatory architecture allows learning the statistical structure of a changing environment

Figures

Learning environment statistics can benefit living systems, but is a difficult problem.

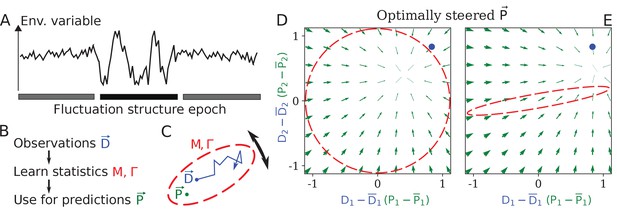

(A) An environment is characterized not only by its current state, but also by its fluctuation structure, such as the variances and correlations of fluctuating environmental parameters. In this work, we consider an environment undergoing epochs that differ in their fluctuation structure. Epochs are long compared to the physiological timescale, but switch faster than the evolutionary timescale. (B) The fluctuation structure can inform the fitness-maximizing strategy, but cannot be sensed directly. Instead, it must be learned from past observations and used to inform future behavior. (C) To formalize the problem, we consider a situation where some internal physiological quantities must track fluctuating external factors undergoing a random walk. Since it is impossible to react instantaneously, the internal quantities always lag behind the external factors. The dashed ellipse illustrates the fluctuation structure of the external factors (encoded in parameters that include Γ; see text), which changes on a slower timescale than the fluctuations of the external factors themselves. (D, E) The optimal behavior in the two-dimensional version of our problem, under a constraint on the maximal rate of change of the internal quantities. For a given current state of the external factors (blue dot), the optimal control strategy would steer any current internal state (green arrows) toward the best guess of the future external state, which depends on the fluctuation structure (red ellipse: (D) fluctuations are uncorrelated and isotropic; (E) fluctuations have a preferred direction). The optimal strategy is derived using control theory (Appendix 1, section 'Control theory calculation').
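The lag described in panel C can be illustrated with a minimal simulation. This is a toy sketch under stated assumptions, not the paper's model: the names `x_ext` (external factors) and `x_int` (internal quantities), the isotropic Gaussian random walk, and the rule of moving straight toward the current external state at a capped speed `v_max` are all illustrative choices. It implements the naive strategy, not the optimal one of panels D and E, which would aim at the best guess of the future external state instead.

```python
import math
import random


def simulate_tracking(n_steps=5000, dt=0.01, gamma=1.0, v_max=2.0, seed=0):
    """Rate-limited tracking of a 2D random walk (illustrative sketch).

    x_ext: hypothetical external factors, an isotropic Gaussian random walk
           with per-axis step standard deviation gamma * sqrt(dt).
    x_int: hypothetical internal quantities, moved straight toward x_ext by at
           most v_max * dt per step (a stand-in for a capped rate of change).
    Returns the time-averaged distance between the two, i.e. the mean lag.
    """
    rng = random.Random(seed)
    x_ext = [0.0, 0.0]
    x_int = [0.0, 0.0]
    total_dist = 0.0
    for _ in range(n_steps):
        # External factors take one random-walk step.
        for i in range(2):
            x_ext[i] += gamma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        # Internal state moves toward the current external state at bounded speed.
        dx = [x_ext[i] - x_int[i] for i in range(2)]
        dist = math.hypot(dx[0], dx[1])
        step = min(dist, v_max * dt)
        if dist > 0:
            for i in range(2):
                x_int[i] += step * dx[i] / dist
        total_dist += math.hypot(x_ext[0] - x_int[0], x_ext[1] - x_int[1])
    return total_dist / n_steps


print(f"mean lag: {simulate_tracking():.3f}")
```

Raising `gamma` (larger fluctuations) or lowering `v_max` (slower physiology) increases the mean lag in this toy.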

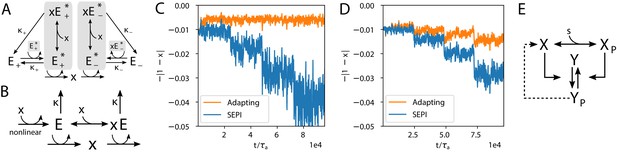

The regulatory architecture we consider is a simple generalization of end-product inhibition.

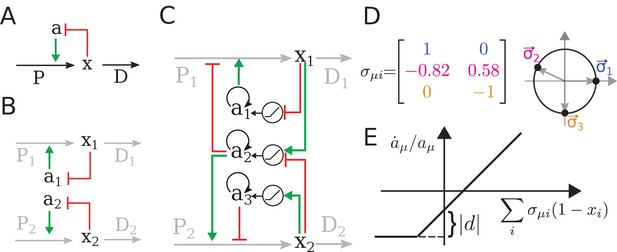

(A) Simple end-product inhibition (SEPI) for one metabolite. Green arrows show activation, red arrows inhibition. (B) Natural extension of SEPI to several metabolites. (C) We consider regulatory architectures with more regulators than metabolites, with added self-activation (circular arrows) and a nonlinear activation/repression of regulators by the metabolite concentrations xi (pictograms in circles). (D) Visualizing a regulation matrix for two metabolites. In this example, the first regulator activates the production of x1; the second inhibits x1 and activates x2. For simplicity, we choose regulation vectors of unit length, each of which can be represented by a dot on the unit circle. This provides a convenient way to visualize a given regulatory architecture. (E) The nonlinear dependence of regulator activity dynamics on metabolite concentrations xi in our model (see Equation 4).
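The unit-circle representation of panel D can be made concrete with a short sketch. Assumptions: each regulator is summarized by a unit vector whose i-th component is positive if it activates production of metabolite xi and negative if it inhibits it, and, purely for illustration, production rates are a linear readout of regulator activities weighted by these vectors. The helper names are hypothetical, and the second regulator is placed at 135°, one of many angles that inhibit x1 and activate x2.

```python
import math


def regulator_vectors(angles_deg):
    """Unit-length regulation vectors (points on the unit circle), one per regulator."""
    return [(math.cos(math.radians(a)), math.sin(math.radians(a))) for a in angles_deg]


def production_rates(vectors, activities):
    """Hypothetical linear readout: P_i = sum over regulators a of v_a[i] * sigma_a."""
    p1 = sum(v[0] * s for v, s in zip(vectors, activities))
    p2 = sum(v[1] * s for v, s in zip(vectors, activities))
    return p1, p2


# Two-regulator example: regulator 1 at 0 deg activates x1 only;
# regulator 2 at 135 deg inhibits x1 and activates x2.
vecs = regulator_vectors([0.0, 135.0])
print(production_rates(vecs, [1.0, 0.0]))  # only regulator 1 active
print(production_rates(vecs, [0.0, 1.0]))  # only regulator 2 active
```

Because every vector has unit length, an architecture is fully described by a list of angles, which is what makes the circle plot a compact summary.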

The regulatory architecture we consider successfully learns environment statistics, and outperforms simple end-product inhibition.

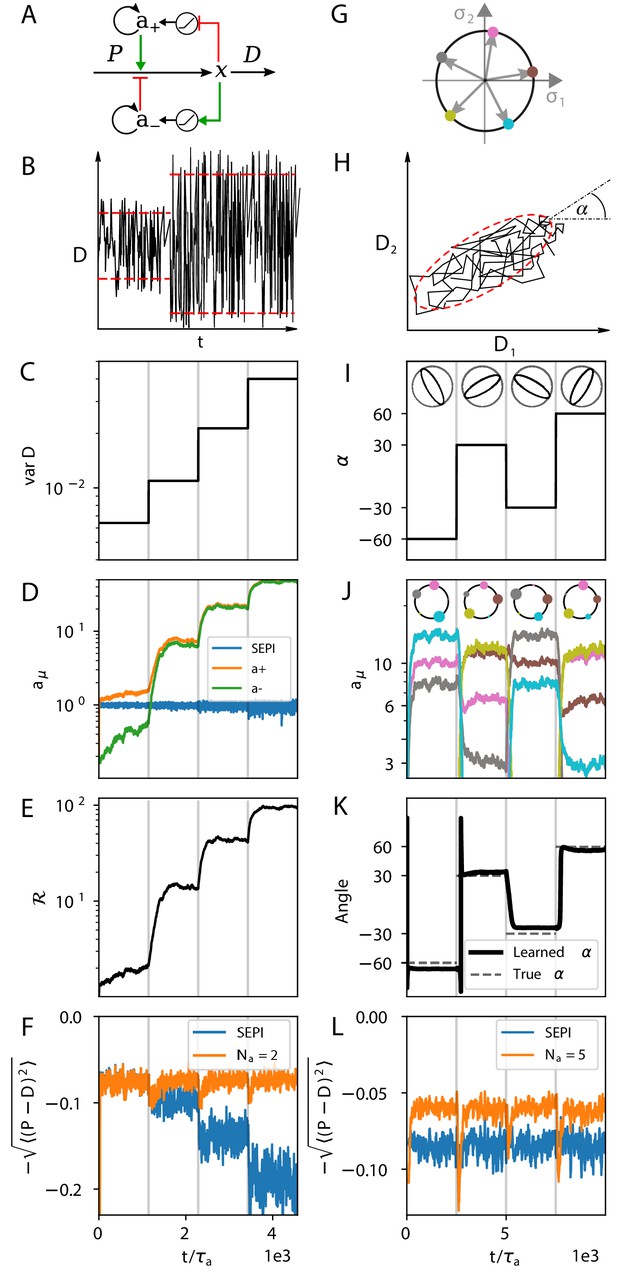

Left column: one dimension; right column: two dimensions. (A) Regulation of a single metabolite with one activator and one repressor. (B, C) The variance of the input is increased step-wise (by increasing Γ). (D) Regulator activities respond to the changing statistics of the input. For SEPI, the activity of its single regulator is unchanged. (E) Faced with larger fluctuations, our system becomes more responsive. (F) As fluctuations increase, SEPI performance drops, while the circuit of panel A retains its performance. (G) In the 2d case, we consider a system with several regulators; visualization as in Figure 2D. (H) Cartoon of correlated demands with a dominant fluctuation direction (angle α). (I) We use α to change the fluctuation structure of the input. (J) Regulator activities respond to the changing statistics of the input. Colors as in panel G. (K) The direction of largest responsiveness ('learned angle'; see text) tracks the input angle α. (L) The system that learns the dominant direction of fluctuations outperforms the SEPI architecture, even if the timescale of SEPI is adjusted to match the faster responsiveness of our system (see Appendix 1, section 'Parameters used in figures'). Panels B and H are cartoons.
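The 'learned angle' of panel K is defined in the text. As a hedged illustration only, one way to summarize a direction of largest responsiveness is to weight each regulator's unit vector v_a by its activity σ_a, form R = Σ_a σ_a v_a v_aᵀ, and read off the orientation of R's leading eigenvector. The matrix R and the function name are assumptions for this sketch, not the paper's definition.

```python
import math


def learned_angle(angles_deg, activities):
    """Orientation (degrees, modulo 180) of the leading eigenvector of
    R = sum_a sigma_a * v_a v_a^T, where v_a is the unit vector at angles_deg[a].
    R is a hypothetical summary of responsiveness, used here for illustration."""
    r11 = r12 = r22 = 0.0
    for a, s in zip(angles_deg, activities):
        c, d = math.cos(math.radians(a)), math.sin(math.radians(a))
        r11 += s * c * c
        r12 += s * c * d
        r22 += s * d * d
    # Principal axis of the symmetric 2x2 matrix [[r11, r12], [r12, r22]]:
    # tan(2*theta) = 2*r12 / (r11 - r22); atan2 picks the larger eigenvalue.
    theta = 0.5 * math.atan2(2.0 * r12, r11 - r22)
    return math.degrees(theta) % 180.0


# Six regulators spread evenly; the pair at 60 and 240 deg is most active.
print(learned_angle([0, 60, 120, 180, 240, 300], [0.1, 1.0, 0.1, 0.1, 1.0, 0.1]))
```

Regulators at opposite angles contribute identical outer products, so in this summary the orientation is only defined modulo 180°, matching the notion of a fluctuation axis rather than a direction.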

The ability to learn statistics is most useful when fluctuations are large and/or strongly correlated.

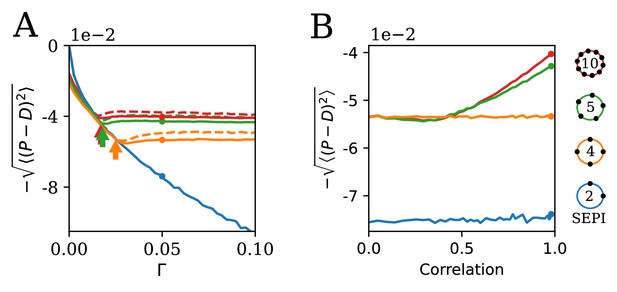

(A) The performance of different circuits as a function of Γ, which scales the fluctuation magnitude (the input is two-dimensional and correlated, with fixed angle α and anisotropy). Once the fluctuations become large enough to activate the learning mechanism, performance stabilizes; in contrast, SEPI performance continues to decline. Arrows indicate the theoretical prediction for the threshold value of Γ; see Appendix 1, section 'The minimal Γ needed to initiate adaptation'. Dashed lines indicate the theoretical performance ceiling (calculated at equivalent Control Input Power, see text). (B) Comparison of circuit performance for inputs of the same variance but different correlation strengths. A circuit with regulators arranged as shown can learn the variance but not the correlation; the SEPI architecture is unable to adapt to either. Parameter Γ is held constant at 0.05; the marked points are identical to those highlighted in panel A (and correspond to the fluctuation anisotropy used there).
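Panel B compares inputs of equal variance but different correlation. A minimal sketch of one way to parameterize such inputs, assuming (our choice, not necessarily the paper's) that the anisotropy is the ratio of the variances along and across the dominant direction α, and that the total variance (the trace of the covariance) is held fixed:

```python
import math


def input_covariance(alpha_deg, anisotropy, total_variance=1.0):
    """2x2 covariance with principal axis at angle alpha and variance ratio
    `anisotropy` (major/minor), normalized so the trace equals total_variance.
    This parameterization is an illustrative assumption."""
    lam_minor = total_variance / (1.0 + anisotropy)
    lam_major = anisotropy * lam_minor
    c, s = math.cos(math.radians(alpha_deg)), math.sin(math.radians(alpha_deg))
    # Sigma = R(alpha) diag(lam_major, lam_minor) R(alpha)^T
    s11 = lam_major * c * c + lam_minor * s * s
    s22 = lam_major * s * s + lam_minor * c * c
    s12 = (lam_major - lam_minor) * c * s
    return s11, s12, s22


def correlation(cov):
    """Pearson correlation between the two input components."""
    s11, s12, s22 = cov
    return s12 / math.sqrt(s11 * s22)


for k in (1.0, 3.0, 9.0):
    cov = input_covariance(45.0, k, total_variance=2.0)
    print(k, round(cov[0] + cov[2], 6), round(correlation(cov), 3))
```

At α = 45° and fixed trace, the correlation in this parameterization is (anisotropy − 1)/(anisotropy + 1): 0 for an isotropic input, 0.5 at anisotropy 3, and 0.8 at anisotropy 9, while the total variance stays the same.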

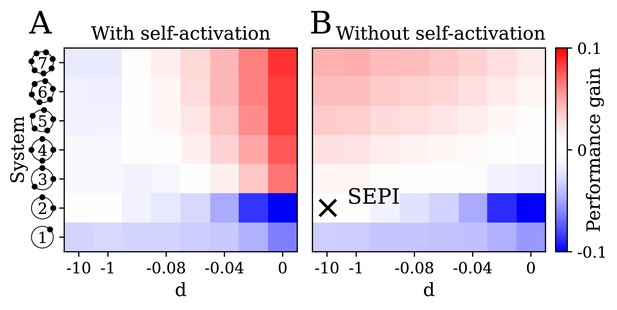

The key ingredients enabling learning are an excess of regulators, nonlinearity, and self-activation.

(A) System performance in the two-dimensional case, shown as a function of the number of regulators (vertical axis) and the strength of nonlinearity (horizontal axis; at the weakest nonlinearity shown, the system is indistinguishable from a linear one). Color indicates performance gain relative to the SEPI architecture; performance is averaged over angle α (see Figure 3H). (B) Same as panel A, for a model without self-activation (see text). The SEPI-like architecture (a linear system with one regulator per metabolite) is highlighted.

Realistic implementations.

(A) An alternative circuit that implements the logic described above, but with different forms of the key ingredients, including a Hill-function nonlinearity. Here, the circuit is based on a pair of self-activating enzymes, each of which can be in an active or an inactive state. For details see Equation (5). (B) Another circuit capable of learning fluctuation variance to better maintain homeostasis of a metabolite. Synthesis and degradation of the metabolite are catalyzed by the same bifunctional enzyme, whose production is regulated nonlinearly by the metabolite itself. For more details see Equations (6) and (7). (C) The circuit in panel A performs well at the homeostasis task of maintaining the metabolite concentration at 1, despite the changing variance of the input. For comparison, we include a SEPI analogue of the circuit, described in Appendix 1, section 'Realistic biochemical implementations'. (D) Same as panel C, but with the circuit from panel B. Note that the 'SEPI' line is different here: it is a SEPI analogue of the circuit in panel B. (E) Solid arrows: a common two-component architecture of bacterial sensory systems with a bifunctional histidine kinase (X) and its cognate response regulator (Y). Adding an extra regulatory link (nonlinear auto-amplification, dashed arrow) can endow this system with self-tuned reactivity that learns the statistics of the input; see text.
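Panel A relies on a Hill-function nonlinearity. A short sketch of the standard activating Hill function h(x) = xⁿ/(Kⁿ + xⁿ) shows why such a nonlinearity can sense fluctuation magnitude: for n > 1 it is convex below its inflection point, so symmetric fluctuations around an operating point in that range raise the average output above h at the mean (Jensen's inequality). The parameter values and the two-point fluctuation are arbitrary illustrations, not those of Equation (5).

```python
def hill(x, K=1.0, n=4.0):
    """Activating Hill function x^n / (K^n + x^n)."""
    return x**n / (K**n + x**n)


def mean_response(x0, delta, K=1.0, n=4.0):
    """Average Hill output when the input alternates between x0 - delta and x0 + delta."""
    return 0.5 * (hill(x0 - delta, K, n) + hill(x0 + delta, K, n))


# Operating point below the inflection point K*((n-1)/(n+1))**(1/n), ~0.88 here.
x0 = 0.5
for delta in (0.0, 0.1, 0.2, 0.3):
    print(delta, round(mean_response(x0, delta), 4))
```

The average output grows with the fluctuation amplitude even though the mean input is unchanged, which is the property a learning circuit can exploit.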

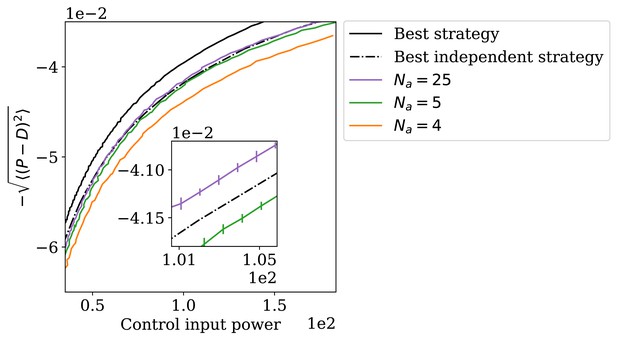

The adapting system can perform better than the best independence-assuming strategy.

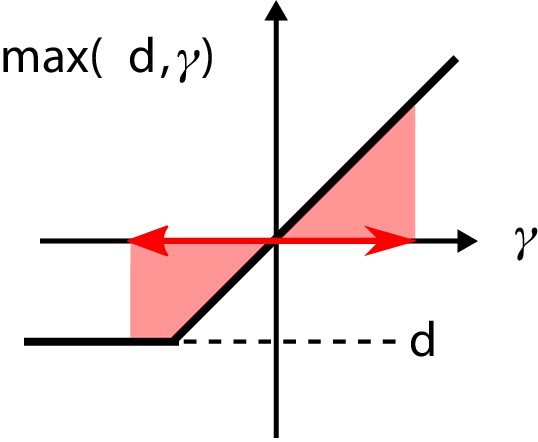

The nonlinearity in the regulatory architecture.

If the fluctuations of the input are large enough, the average over the nonlinearity is positive, causing additional growth of the regulator concentration.
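The mechanism above can be sketched with a toy self-activating regulator obeying dσ/dt = σ(⟨f(u)⟩ − κ), where u is a zero-mean fluctuating input, f a nonlinearity, and κ a constant loss rate. The quadratic choice f(u) = u + μu² and the symbols μ and κ are hypothetical; this is not the function of Equation 4. Because ⟨u⟩ = 0, the Gaussian average is ⟨f(u)⟩ = μs², with s² the input variance, so the regulator grows only when s² > κ/μ, and the self-activating factor σ makes that growth multiplicative.

```python
import math
import random


def f(u, mu=0.5):
    """Hypothetical nonlinearity: linear part plus a convex quadratic term."""
    return u + mu * u * u


def average_nonlinearity(s, mu=0.5, n_samples=200_000, seed=1):
    """Monte Carlo estimate of <f(u)> for Gaussian u with mean 0 and std s."""
    rng = random.Random(seed)
    return sum(f(rng.gauss(0.0, s), mu) for _ in range(n_samples)) / n_samples


def regulator_after(t_end, s, mu=0.5, kappa=0.2, sigma0=1.0, dt=0.01):
    """Euler-integrate d(sigma)/dt = sigma * (<f> - kappa), using the exact
    Gaussian average <f> = mu * s**2; the factor sigma is the self-activation."""
    growth = mu * s * s - kappa
    sigma = sigma0
    for _ in range(int(round(t_end / dt))):
        sigma += dt * sigma * growth
    return sigma


threshold_s = math.sqrt(0.2 / 0.5)    # input std above which sigma grows (~0.63)
print(average_nonlinearity(1.0))      # close to the exact value mu * s**2 = 0.5
print(regulator_after(20.0, s=0.4))   # small fluctuations: sigma decays
print(regulator_after(20.0, s=1.0))   # large fluctuations: sigma grows
```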

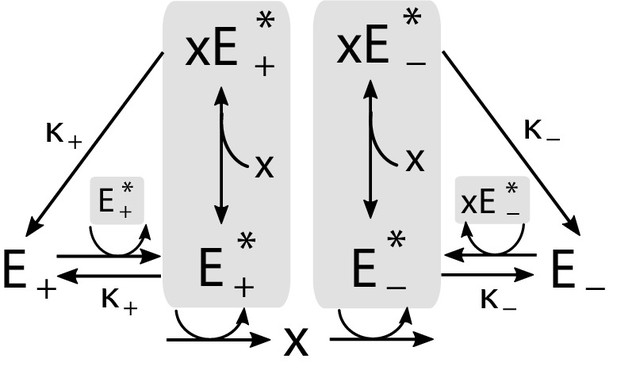

Implementation of the regulatory mechanism based on a pair of self-activating enzymes, each of which can be in an active or an inactive state.

Gray shading indicates catalysts of reactions.

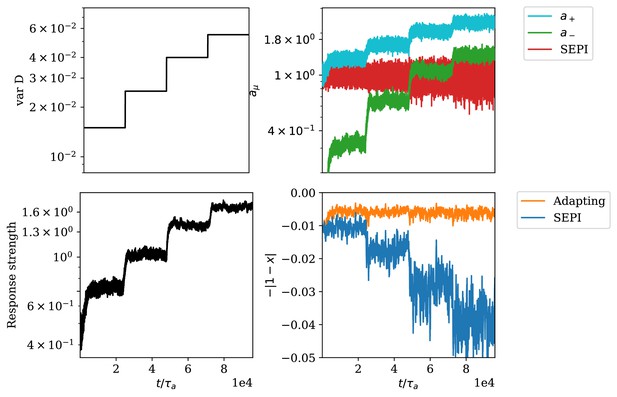

Adaptation of responsiveness to an increasing variance of environmental fluctuations.

(A) Step-wise increase of the variance of the input. (B) Time series of regulator concentrations, corresponding to the total concentrations of the two enzymes. (C) The responsiveness of the system as defined in Equation (S31). (D) The deviation of the metabolite concentration from its target value.

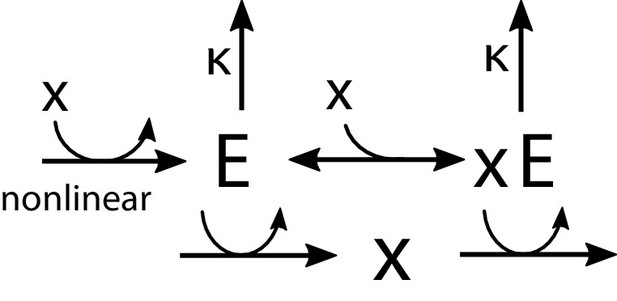

Regulation by allosteric forms of one enzyme.

The unbound form of the enzyme activates the production of the metabolite, while the bound form promotes its degradation. The synthesis of the enzyme is regulated nonlinearly by the metabolite concentration.
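A toy mass-action reading of this scheme illustrates why a bifunctional enzyme suits the task. Assume, for illustration only, that a fixed fraction `f_bound` of the total enzyme E is in the bound form, that the unbound form drives synthesis at rate `k_syn` per enzyme, and that the bound form degrades the metabolite at rate `k_deg` per enzyme and per unit metabolite. These rate constants and the fixed-fraction assumption are hypothetical, not Equations (6) and (7). Then dx/dt = k_syn(1 − f_bound)E − k_deg·f_bound·E·x: the steady state x_ss = k_syn(1 − f_bound)/(k_deg·f_bound) does not depend on E, while the relaxation rate k_deg·f_bound·E grows with it, so changing the enzyme level tunes responsiveness without moving the set point.

```python
def steady_state(k_syn=2.0, k_deg=1.0, f_bound=0.5):
    """Metabolite level where synthesis (unbound enzyme) balances degradation (bound enzyme).
    Note that the total enzyme level E cancels out."""
    return k_syn * (1.0 - f_bound) / (k_deg * f_bound)


def relaxation_rate(E, k_deg=1.0, f_bound=0.5):
    """Rate at which deviations of x from steady state decay: k_deg * f_bound * E."""
    return k_deg * f_bound * E


def simulate(E, x0=3.0, t_end=2.0, dt=1e-3, k_syn=2.0, k_deg=1.0, f_bound=0.5):
    """Euler integration of dx/dt = k_syn*(1-f_bound)*E - k_deg*f_bound*E*x."""
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += dt * (k_syn * (1.0 - f_bound) * E - k_deg * f_bound * E * x)
    return x


for E in (1.0, 4.0):
    print(E, steady_state(), relaxation_rate(E), round(simulate(E), 4))
```

With four times more enzyme, the same initial deviation decays four times faster, yet both runs settle at the same set point.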

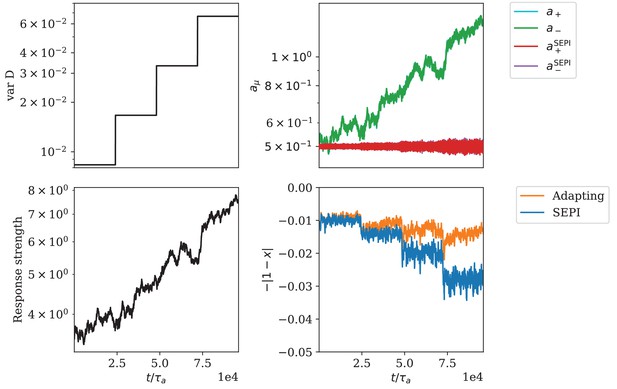

Adaptation of responsiveness for the circuit architecture based on a bifunctional enzyme.

(A) The variance of the input is increased step-wise. (B) Change of regulator activities. The two regulator activities overlap strongly and cannot be distinguished in this panel. (C) The response strength of the system. (D) The mismatch of the metabolite concentration from its target value.

Additional files

- Source code 1: Python 3.7.4 simulation code and scripts to reproduce all figures. https://cdn.elifesciences.org/articles/67455/elife-67455-code1-v1.zip
- Transparent reporting form: https://cdn.elifesciences.org/articles/67455/elife-67455-transrepform-v1.pdf