Machine Learning: Finding the right type of cell

Since the advent of microscopy in the 17th century, it has become well established that organisms are divided into tissues made up of different types of cells, with cells of the same type typically performing the same role. This simplifies the task of understanding a biological system immensely, as there are many fewer cell types than individual cells (Masland, 2001).

Categorizing tissues and cell types has always been done manually, usually by grouping cells that look alike based on their shape, internal structures and various other features. This is also true for images collected using modern-day techniques, such as electron microscopy, which can provide three-dimensional reconstructions of tissue samples, or even of entire small organisms less than a cubic millimeter in size.

While electron microscopy images can be automatically subdivided or ‘segmented’ into individual cells, assigning each cell to a type by hand is both difficult and time-consuming: in a recent project, for example, it took several experts many months to categorize the cells in one half of the fruit fly brain (Scheffer et al., 2020). The task becomes even more challenging if the organism being studied is not a well-known model organism. Now, in eLife, Valentyna Zinchenko, Johannes Hugger, Virginie Uhlmann, Detlev Arendt and Anna Kreshuk of the European Molecular Biology Laboratory report a new method that could simplify this process (Zinchenko et al., 2023).

Zinchenko et al. based their program on a machine learning method called unsupervised contrastive learning (van den Oord et al., 2018). The program groups cells by type without having received prior examples of ‘human-classified’ cell types or features (that is, it is unsupervised), and it does so by finding features that maximize the difference (or contrast) between examples that should be grouped together and those that should not. The method therefore requires many examples of both kinds. For the positive examples (those that should be grouped together), Zinchenko et al. created synthetic copies of each existing cell with minor modifications, such as different rotations, reflections, and texture or shape variations; the original cell and its modified copy should always be grouped together. For the negative examples (those that should be kept apart), they picked pairs of cells at random from their sample. Such random pairs will occasionally contain two cells of the same type, but the approximation is accurate enough to train the model while keeping the process unsupervised.
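The positive/negative scheme described above can be illustrated with a minimal NumPy sketch of an InfoNCE-style contrastive loss, the objective introduced by van den Oord et al. (2018). This is not Zinchenko et al.'s implementation: the toy 8×8×8 "cell" volumes, the augmentations (random 90° rotation, mirror reflection, mild intensity noise) and the encoder, here a hypothetical fixed random linear projection standing in for a trained neural network, are all assumptions made for illustration. Each cell's augmented copy serves as its positive, and the other cells in the batch serve as randomly chosen negatives.

```python
import numpy as np

rng = np.random.default_rng(0)


def augment(volume, rng):
    """Create a 'positive' copy of a cell: random rotation, reflection and texture jitter."""
    out = np.rot90(volume, k=int(rng.integers(4)), axes=(1, 2))  # rotate in the y-x plane
    if rng.random() < 0.5:
        out = np.flip(out, axis=2)  # mirror reflection
    # Mild texture variation: multiplicative intensity noise
    return out * (1.0 + 0.05 * rng.standard_normal(out.shape))


def embed(volume, W):
    """Hypothetical encoder: a fixed linear projection followed by L2 normalization."""
    z = W @ volume.ravel()
    return z / np.linalg.norm(z)


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE loss: row i of `anchors` should match row i of `positives`;
    every other row j != i in the batch acts as a randomly chosen negative."""
    # Rows are unit vectors, so these are cosine similarities scaled by the temperature
    logits = anchors @ positives.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


# Toy batch: 16 random 8x8x8 "cell" volumes and a random (untrained) encoder
cells = rng.random((16, 8, 8, 8))
W = rng.standard_normal((32, cells[0].size))
anchors = np.stack([embed(c, W) for c in cells])
positives = np.stack([embed(augment(c, rng), W) for c in cells])
loss = info_nce(anchors, positives)
print(f"contrastive loss on a random batch: {loss:.3f}")
```

Training would adjust the encoder weights (`W` in this sketch) to lower this loss, pulling each cell towards its modified copy and away from the other cells in the batch; the sketch only evaluates the loss once.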

Machine learning was then used to find features that are shared within the positive pairs but not within the negative pairs. For each original cell, the system combined the learned features into a single vector that summarizes the cell’s shape and texture. The team found that cells belonging to the same type lay close together in this vector space, which can be visualized and interpreted using existing dimensionality reduction techniques such as UMAP (McInnes et al., 2018).
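Once every cell has a vector, the observation that cells of the same type lie close together can be checked with a simple nearest-neighbor test, and the vectors can be projected to two dimensions for plotting. The sketch below uses synthetic embeddings (three hypothetical cell types drawn as Gaussian clusters) rather than real data, and it swaps in principal component analysis (PCA) for UMAP to stay dependency-free; with the umap-learn package installed, `umap.UMAP(n_components=2).fit_transform(embeddings)` would play the same role. The type labels are used only to evaluate the embedding, never to build it.

```python
import numpy as np


def nearest_neighbor_agreement(embeddings, labels):
    """Fraction of cells whose nearest neighbor (Euclidean distance) shares their label."""
    diffs = embeddings[:, None, :] - embeddings[None, :, :]
    dist = np.linalg.norm(diffs, axis=-1)
    np.fill_diagonal(dist, np.inf)  # a cell is not its own neighbor
    nearest = dist.argmin(axis=1)
    return float(np.mean(labels[nearest] == labels))


def pca_2d(embeddings):
    """Project embeddings onto their first two principal components for plotting."""
    centered = embeddings - embeddings.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


# Synthetic stand-in for learned embeddings: 3 hypothetical cell types, 50 cells each
rng = np.random.default_rng(1)
n_types, per_type, dim = 3, 50, 32
centers = rng.normal(scale=5.0, size=(n_types, dim))
labels = np.repeat(np.arange(n_types), per_type)
embeddings = centers[labels] + rng.normal(size=(labels.size, dim))

agreement = nearest_neighbor_agreement(embeddings, labels)
chance = nearest_neighbor_agreement(embeddings, rng.permutation(labels))
coords = pca_2d(embeddings)  # (150, 2) array, ready for a scatter plot
print(f"same-type nearest neighbors: {agreement:.2f} (shuffled labels: {chance:.2f})")
```

Comparing against shuffled labels gives a chance baseline, so a high agreement reflects genuine grouping in the embedding rather than an artifact of the test.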

Zinchenko et al. then tested their model on a three-dimensional electron microscopy reconstruction of the annelid worm Platynereis dumerilii. The model was able to match the known cell types and could also identify subgroups of cells that could not be distinguished using human-specified features. Moreover, when compared with a gene expression map of the whole animal, cells that the model had classified as similar also shared similar genetic signatures, more so than cells that had previously been clustered using ‘human-designed’ features.

Next, they extended their classification method to consider both the shape and texture of each cell and the combined features of all the cells physically adjacent to it. Grouping cells by these enhanced features revealed different tissues and organs within the animal. The model's classification agreed closely with human annotations, but it also found subtle tissue distinctions and rare features that had been overlooked by humans examining the same data set. For example, the analysis revealed a specific type of neuron in the midgut region of the worm, which had not previously been confirmed to occur in this part of the body.
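The neighborhood extension can be sketched in the same spirit. The snippet below assumes each cell already has a feature vector and that physical contacts are available as a list of index pairs; averaging the neighbors' features and appending them to the cell's own is one simple choice of "combination", assumed here for illustration rather than taken from Zinchenko et al. Clustering these enlarged vectors instead of the per-cell ones lets cells with similar morphology but different surroundings fall into different groups.

```python
import numpy as np


def neighborhood_features(features, contacts):
    """Append the mean feature vector of each cell's touching neighbors to its own features.

    features : (n_cells, d) array of per-cell shape/texture vectors
    contacts : iterable of (i, j) index pairs for cells that physically touch
    """
    n, d = features.shape
    sums = np.zeros((n, d))
    counts = np.zeros(n)
    for i, j in contacts:  # contact is symmetric, so update both cells
        sums[i] += features[j]
        sums[j] += features[i]
        counts[i] += 1
        counts[j] += 1
    # Cells without recorded neighbors get an all-zero neighborhood vector
    neighbor_mean = sums / np.maximum(counts, 1)[:, None]
    return np.hstack([features, neighbor_mean])


# Five cells with 1-D features; cells 0-1-2-3 form a chain, cell 4 is isolated
features = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
contacts = [(0, 1), (1, 2), (2, 3)]
print(neighborhood_features(features, contacts))
# [[1. 2.]
#  [2. 2.]
#  [3. 3.]
#  [4. 3.]
#  [5. 0.]]
```

Treating an isolated cell's neighborhood as zeros is a further assumption; a real pipeline might instead flag such cells or use a learned placeholder.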

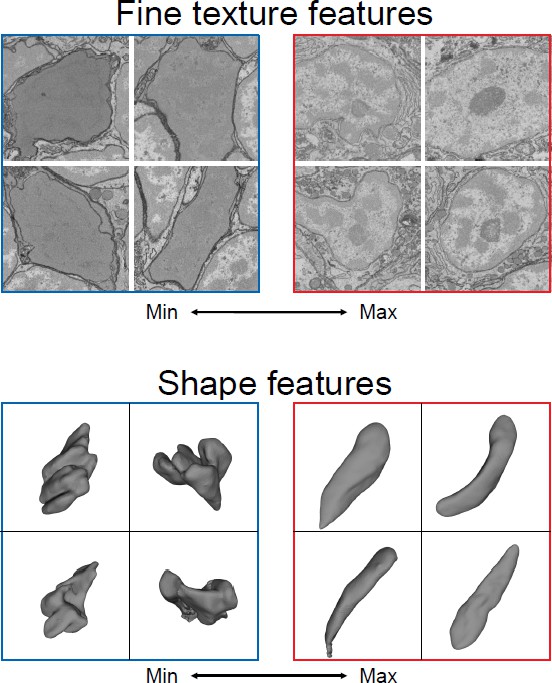

The ‘unsupervised’ aspect of the method created by Zinchenko et al. is critical because it means the program does not require a full library of the relevant cell types (or a full list of the features that can distinguish between them). Instead, the program learns these characteristics from the data itself (Figure 1). This is particularly useful for systems in which the cell types are not known. Moreover, the program is not restricted to cell features that humans deem important, such as the roundness of a cell or the presence of dark vesicles. As a result, the model can often outperform humans and is less subject to human bias: because it is not told what to expect, it is less likely to overlook rare or unexpected cell types.

Figure 1. Making cell and tissue classification an automatic process.

Three-dimensional electron microscopy reconstructions of organisms contain a wealth of scientific data about cell composition and structure. Zinchenko et al. created a machine learning system that can identify features that distinguish one cell type from another. Because these features are learned, they do not necessarily correspond to the terms humans commonly use to describe the shape or texture of cells (such as rounded or speckled). The images above show the cells that best and worst match two learned features; comparing them helps to determine which aspect of the cell each feature captures, such as texture (top) or shape (bottom).

Image credit: Adapted from Figure 3, Zinchenko et al., 2023 (CC BY 4.0).

Electron microscopy and related techniques provide an incredible level of detail, including the shape, location and structure of every cell. But analyzing this flood of data by hand is nearly impossible, and automated techniques are urgently needed to unlock its potential (Eberle and Zeidler, 2018). Significant progress has already been made in automating some tasks, such as cell segmentation and synapse identification (Januszewski et al., 2018; Huang et al., 2018), leaving cell and tissue identification among the most time-consuming manual steps. By helping to automate this step, Zinchenko et al. have made critical progress towards understanding these invaluable but intimidating data sets.

References

- Huang et al. (2018) Fully-automatic synapse prediction and validation on a large data set. Frontiers in Neural Circuits 12:87. https://doi.org/10.3389/fncir.2018.00087
- Masland (2001) The fundamental plan of the retina. Nature Neuroscience 4:877–886. https://doi.org/10.1038/nn0901-877

Article and author information

Publication history

- Version of Record published: February 16, 2023 (version 1)

Copyright

© 2023, Scheffer

This article is distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use and redistribution provided that the original author and source are credited.
