Author response:

The following is the authors’ response to the previous reviews

Reviewer #1:

Comment:

The authors quantified information in gesture and speech, and investigated the neural processing of speech and gestures in pMTG and LIFG, depending on their informational content, in 8 different time-windows, and using three different methods (EEG, HD-tDCS and TMS). They found that there is a time-sensitive and staged progression of neural engagement that is correlated with the informational content of the signal (speech/gesture).

Strengths:

A strength of the paper is that the authors attempted to combine three different methods to investigate speech-gesture processing.

We sincerely appreciate the reviewer’s recognition of our efforts in employing a multi-method approach, which integrates three complementary experimental paradigms, each leveraging distinct neurophysiological techniques to provide converging evidence.

In Experiment 1, we found that the degree of inhibition in the pMTG and LIFG was strongly associated with the overlap in gesture-speech representations, as quantified by mutual information. Experiment 2 revealed the time-sensitive dynamics of the pMTG-LIFG circuit in processing both unisensory (gesture or speech) and multisensory information. Experiment 3, utilizing high-temporal-resolution EEG, independently replicated the temporal dynamics of gesture-speech integration observed in Experiment 2, further validating our findings.

The striking convergence across these methodologically independent approaches significantly bolsters the robustness and generalizability of our conclusions regarding the neural mechanisms underlying multisensory integration.

Comment 1: I thank the authors for their careful responses to my comments. However, I remain not convinced by their argumentation regarding the specificity of their spatial targeting and the time-windows that they used.

The authors write that since they included a sham TMS condition, that the TMS selectively disrupted the IFG-pMTG interaction during specific time windows of the task related to gesture-speech semantic congruency. This to me does not show anything about the specificity of the time-windows itself, nor the selectivity of targeting in the TMS condition.

(1) Selection of brain regions (IFG/pMTG)

We thank the reviewer for their thoughtful consideration. The choice of the left IFG and pMTG as regions of interest (ROIs) was informed by a meta-analysis of fMRI studies on gesture-speech integration, which consistently identified these regions as critical hubs (see Author response table 1 for detailed studies and coordinates).

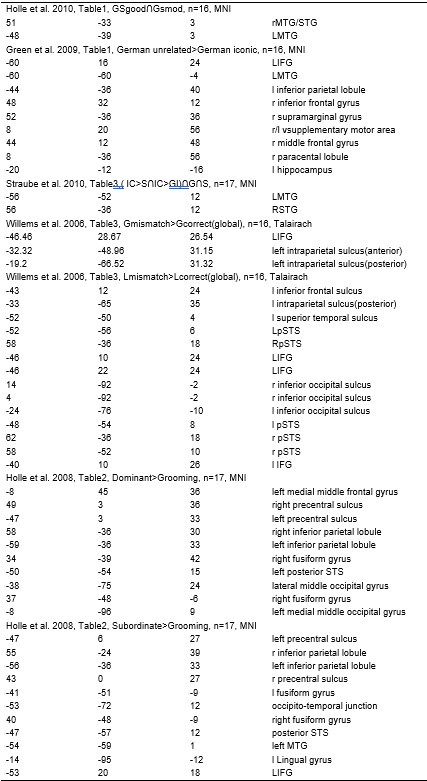

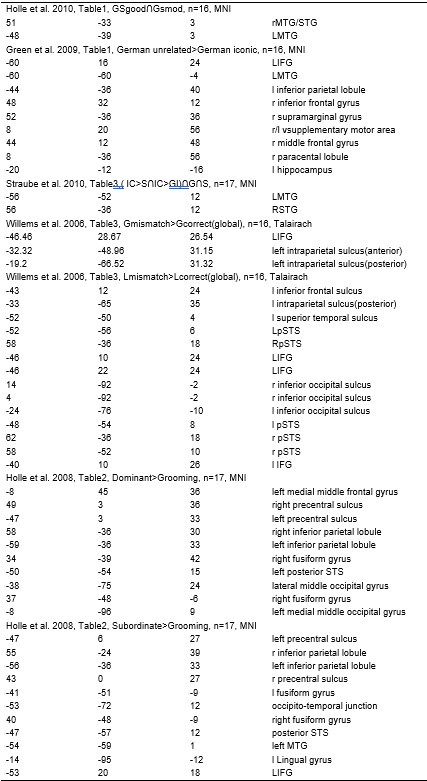

Author response table 1.

Meta-analysis of previous studies on gesture-speech integration.

Based on the meta-analysis of previous studies, we selected the IFG and pMTG as ROIs for gesture-speech integration. The rationale for selecting these brain regions is outlined in the Introduction in Lines 63-66: “Empirical studies have investigated the semantic integration between gesture and speech by manipulating their semantic relationship[15-18] and revealed a mutual interaction between them[19-21] as reflected by the N400 latency and amplitude[14] as well as common neural underpinnings in the left inferior frontal gyrus (IFG) and posterior middle temporal gyrus (pMTG)[15,22,23].”

This is further described in Lines 77-78: “Experiment 1 employed high-definition transcranial direct current stimulation (HD-tDCS) to administer Anodal, Cathodal and Sham stimulation to either the IFG or the pMTG”, and in Lines 85-88: “Given the differential involvement of the IFG and pMTG in gesture-speech integration, shaped by top-down gesture predictions and bottom-up speech processing [23], Experiment 2 was designed to assess whether the activity of these regions was associated with relevant informational matrices”.

In the Methods section, we clarified the selection of coordinates in Lines 194-200: “Building on a meta-analysis of prior fMRI studies examining gesture-speech integration[22], we targeted Montreal Neurological Institute (MNI) coordinates for the left IFG at (-62, 16, 22) and the pMTG at (-50, -56, 10). In the stimulation protocol for HD-tDCS, the IFG was targeted using electrode F7 as the optimal cortical projection site[36], with four return electrodes placed at AF7, FC5, F9, and FT9. For the pMTG, TP7 was selected as the cortical projection site[36], with return electrodes positioned at C5, P5, T9, and P9.”

The selection of the IFG and pMTG as integration hubs for gesture and speech has also been validated in our previous studies. Specifically, Zhao et al. (2018, J Neurosci) applied TMS to both areas. The results demonstrated that disrupting neural activity in the IFG or pMTG via TMS selectively impaired the semantic congruency effect (reaction time costs due to semantic incongruence), while leaving the gender congruency effect unaffected.

These findings identified the IFG and pMTG as crucial hubs for gesture-speech integration, guiding the selection of brain regions for our subsequent studies.

(2) Selection of time windows

The five key time windows (TWs) analyzed in this study were derived from our previous TMS work (Zhao et al., 2021, J Neurosci), in which we segmented the gesture-speech integration period (0–320 ms post-speech onset) into eight 40-ms windows. This interval aligns with the established literature on gesture-speech integration and includes the 200–300 ms window noted by the reviewer. As detailed in Lines 776-779: “Procedure of Experiment 2. Eight time windows (TWs, duration = 40 ms) were segmented relative to the speech IP. Among the eight TWs, five (TW1, TW2, TW3, TW6, and TW7) were chosen based on the significant results in our prior study[23]. Double-pulse TMS was delivered over each TW of either the pMTG or the IFG”.

In our prior work (Zhao et al., 2021, J Neurosci), we employed a carefully controlled experimental design incorporating two key factors: (1) gesture-speech semantic congruency (serving as our primary measure of integration) and (2) gesture-speech gender congruency (implemented as a matched control factor). Using a time-locked, double-pulse TMS protocol, we systematically targeted each of the eight predefined TWs within the left IFG, the left pMTG, or the vertex (serving as a sham control condition). Our results demonstrated a TW-selective disruption of gesture-speech integration, indexed by the semantic congruency effect (i.e., a reaction-time cost due to semantic conflict), when stimulating the left pMTG in TW1, TW2, and TW7 and when stimulating the left IFG in TW3 and TW6. Crucially, no significant effects were observed during sham stimulation or for the control factor of gender congruency (Figure 3 from Zhao et al., 2021, J Neurosci).
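To make the window arithmetic explicit, the sketch below maps each 1-based TW index to its latency range. It assumes that the eight 40-ms windows tile 0–320 ms contiguously starting at 0 ms, as the description of the integration period suggests; the constants and the `tw_bounds` helper are illustrative and are not taken from the original analysis code.

```python
# Hypothetical helper: eight contiguous 40-ms time windows (TWs) over 0-320 ms.
# Assumption: TW k spans [(k-1)*40, k*40) ms relative to the alignment point.
TW_DURATION_MS = 40
N_WINDOWS = 8

def tw_bounds(k: int) -> tuple[int, int]:
    """Return (start, end) in ms for the 1-based time-window index k."""
    if not 1 <= k <= N_WINDOWS:
        raise ValueError(f"TW index must be in 1..{N_WINDOWS}, got {k}")
    start = (k - 1) * TW_DURATION_MS
    return start, start + TW_DURATION_MS

# Windows reported as significant in Zhao et al. (2021), by stimulated region.
SIGNIFICANT_TWS = {"pMTG": [1, 2, 7], "IFG": [3, 6]}

if __name__ == "__main__":
    for region, tws in SIGNIFICANT_TWS.items():
        for k in tws:
            start, end = tw_bounds(k)
            print(f"{region}: TW{k} = {start}-{end} ms")
```

Under this assumption, the pMTG effects fall at 0–40, 40–80, and 240–280 ms, and the IFG effects at 80–120 and 200–240 ms.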

This triple dissociation, with effects only for semantic integration, only under active stimulation, and only at specific time points, provides compelling causal evidence that IFG-pMTG connectivity plays a temporally precise role in gesture-speech integration.

Note that this work underwent rigorous peer review by two independent experts, both of whom endorsed our methodological approach. Their original evaluations are provided below:

Reviewer 1: “significance: Using chronometric TMS-stimulation the data of this experiment suggests a feedforward information flow from left pMTG to left IFG followed by an information flow from left IFG back to the left pMTG. The study is the first to provide causal evidence for the temporal dynamics of the left pMTG and left IFG found during gesture-speech integration.”

Reviewer 2: “Beyond the new results the manuscript provides regarding the chronometrical interaction of the left inferior frontal gyrus and middle temporal gyrus in gesture-speech interaction, the study more basically shows the possibility of unfolding temporal stages of cognitive processing within domain-specific cortical networks using short-time interval double-pulse TMS. Although this method also has its limitations, a careful study planning as shown here and an appropriate discussion of the results can provide unique insights into cognitive processing.”

References:

Willems, R.M., Ozyurek, A., and Hagoort, P. (2009). Differential roles for left inferior frontal and superior temporal cortex in multimodal integration of action and language. Neuroimage 47, 1992-2004. 10.1016/j.neuroimage.2009.05.066.

Drijvers, L., Jensen, O., and Spaak, E. (2021). Rapid invisible frequency tagging reveals nonlinear integration of auditory and visual information. Human Brain Mapping 42, 1138-1152. 10.1002/hbm.25282.

Drijvers, L., and Ozyurek, A. (2018). Native language status of the listener modulates the neural integration of speech and iconic gestures in clear and adverse listening conditions. Brain and Language 177, 7-17. 10.1016/j.bandl.2018.01.003.

Drijvers, L., van der Plas, M., Ozyurek, A., and Jensen, O. (2019). Native and non-native listeners show similar yet distinct oscillatory dynamics when using gestures to access speech in noise. Neuroimage 194, 55-67. 10.1016/j.neuroimage.2019.03.032.

Holle, H., and Gunter, T.C. (2007). The role of iconic gestures in speech disambiguation: ERP evidence. J Cognitive Neurosci 19, 1175-1192. 10.1162/jocn.2007.19.7.1175.

Kita, S., and Ozyurek, A. (2003). What does cross-linguistic variation in semantic coordination of speech and gesture reveal?: Evidence for an interface representation of spatial thinking and speaking. J Mem Lang 48, 16-32. 10.1016/S0749-596x(02)00505-3.

Bernardis, P., and Gentilucci, M. (2006). Speech and gesture share the same communication system. Neuropsychologia 44, 178-190. 10.1016/j.neuropsychologia.2005.05.007.

Zhao, W.Y., Riggs, K., Schindler, I., and Holle, H. (2018). Transcranial magnetic stimulation over left inferior frontal and posterior temporal cortex disrupts gesture-speech integration. Journal of Neuroscience 38, 1891-1900. 10.1523/Jneurosci.1748-17.2017.

Zhao, W., Li, Y., and Du, Y. (2021). TMS reveals dynamic interaction between inferior frontal gyrus and posterior middle temporal gyrus in gesture-speech semantic integration. Journal of Neuroscience 41, 10356-10364. 10.1523/JNEUROSCI.1355-21.2021.

Hartwigsen, G., Bzdok, D., Klein, M., Wawrzyniak, M., Stockert, A., Wrede, K., Classen, J., and Saur, D. (2017). Rapid short-term reorganization in the language network. Elife 6. 10.7554/eLife.25964.

Jackson, R.L., Hoffman, P., Pobric, G., and Ralph, M.A.L. (2016). The semantic network at work and rest: Differential connectivity of anterior temporal lobe subregions. Journal of Neuroscience 36, 1490-1501. 10.1523/JNEUROSCI.2999-15.2016.

Humphreys, G.F., Lambon Ralph, M.A., and Simons, J.S. (2021). A unifying account of angular gyrus contributions to episodic and semantic cognition. Trends Neurosci 44, 452-463. 10.1016/j.tins.2021.01.006.

Bonner, M.F., and Price, A.R. (2013). Where is the anterior temporal lobe and what does it do? Journal of Neuroscience 33, 4213-4215. 10.1523/JNEUROSCI.0041-13.2013.

Comment 2: It could still equally well be the case that other regions or networks relevant for gesture-speech integration are targeted, and it can still be the case that these time windows are not specific, and effects bleed into other time periods. There seems to be no experimental evidence here that this is not the case.

The selection of the IFG and pMTG as regions of interest was rigorously justified through multiple lines of evidence. First, a comprehensive meta-analysis of fMRI studies on gesture-speech integration consistently identified these regions as central nodes (see our response to Comment 1). Second, our own previous work (Zhao et al., 2018, J Neurosci; 2021, J Neurosci) provided direct empirical validation of their involvement. Third, by employing the same experimental paradigm, we minimized the likelihood of engaging alternative networks. Fourth, even if TMS also affected other regions connected to the IFG or pMTG, the effects emerged in distinct time windows for each site (pMTG: TW1, TW2, and TW7; IFG: TW3 and TW6), which makes a consistent influence from a common off-target region unlikely.

Regarding temporal specificity, our 2021 study (Zhao et al., 2021, J Neurosci; see details in our response to Comment 1) systematically examined the entire 0-320 ms integration window and found that only specific time windows showed significant effects on gesture-speech semantic congruency, whereas gender congruency processing remained unaffected. This double dissociation (significant effects for semantic integration but not for gender processing, and only in specific windows) rules out broad temporal spillover.

Comment 3: To be more specific, the authors write that double-pulse TMS has been widely used in previous studies (as found in their table). However, the studies cited in the table do not necessarily demonstrate the level of spatial and temporal specificity required to disentangle the contributions of tightly-coupled brain regions like the IFG and pMTG during the speech-gesture integration process. pMTG and IFG are located in very close proximity, and are known to be functionally and structurally interconnected, something that is not necessarily the case for the relatively large and/or anatomically distinct areas that the authors mention in their table.

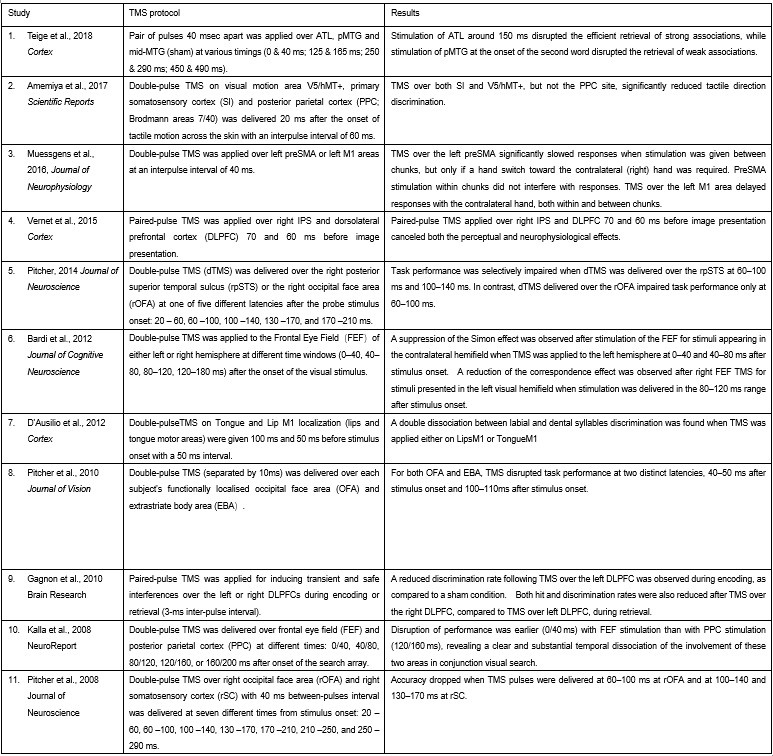

Our methodological approach is strongly supported by an established body of research employing double-pulse TMS (dpTMS) to investigate neural dynamics in both primary motor and higher-order cognitive regions. As documented in Author response table 2, multiple studies have successfully applied this technique to (1) primary motor areas (tongue and lip representations in M1) and (2) semantic processing regions (including pMTG, PFC, and ATL). Particularly relevant precedents include:

(1) Teige et al. (2018, Cortex) demonstrated spatial and temporal specificity by applying 40-ms-interval dpTMS to the ATL, pMTG, and mid-MTG across multiple time windows (0-40 ms, 125-165 ms, 250-290 ms, and 450-490 ms), revealing distinct functional contributions of the ATL versus the pMTG.

(2) Vernet et al. (2015, Cortex) dissociated the functional contributions of the right IPS and DLPFC using 40-ms-interval dpTMS, despite their anatomical proximity and functional connectivity.

These studies show that double-pulse TMS can discriminate between interconnected nodes at short timescales. Our 2021 study (Zhao et al., 2021) further validated this for the IFG and pMTG.

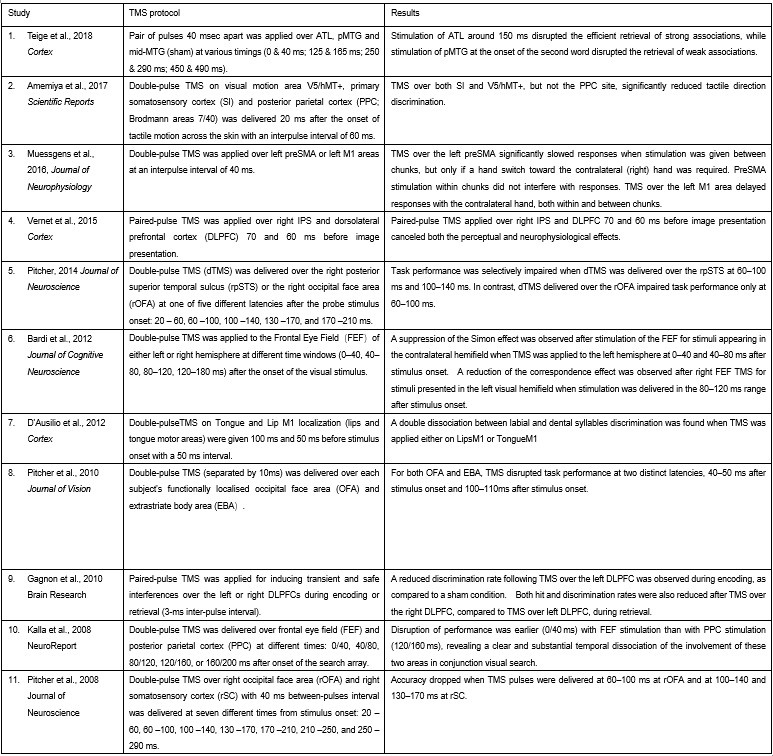

Author response table 2.

Double-pulse TMS studies using inter-pulse intervals of 3-60 ms.

References:

Teige, C., Mollo, G., Millman, R., Savill, N., Smallwood, J., Cornelissen, P. L., & Jefferies, E. (2018). Dynamic semantic cognition: Characterising coherent and controlled conceptual retrieval through time using magnetoencephalography and chronometric transcranial magnetic stimulation. Cortex, 103, 329-349.

Vernet, M., Brem, A. K., Farzan, F., & Pascual-Leone, A. (2015). Synchronous and opposite roles of the parietal and prefrontal cortices in bistable perception: a double-coil TMS–EEG study. Cortex, 64, 78-88.

Comment 4: But also more in general: The mere fact that these methods have been used in other contexts does not necessarily mean they are appropriate or sufficient for investigating the current research question. Likewise, the cognitive processes involved in these studies are quite different from the complex, multimodal integration of gesture and speech. The authors have not provided a strong theoretical justification for why the temporal dynamics observed in these previous studies should generalize to the specific mechanisms of gesture-speech integration.

The neurophysiological mechanisms underlying double-pulse TMS (dpTMS) are well characterized. Whereas single-pulse TMS is known to produce brief artifacts (typically within 0–10 ms) due to transient cortical depolarization (Romero et al., 2019, Nat Commun), the dynamics of dpTMS involve more intricate inhibitory interactions. Specifically, the first pulse increases membrane conductance via GABAergic shunting inhibition, effectively lowering membrane resistance and attenuating the excitatory impact of the second pulse. This results in a measurable reduction in cortical excitability at the inter-pulse interval, as evidenced by suppressed motor evoked potentials (MEPs) (Paulus & Rothwell, 2016, J Physiol). Importantly, this mechanism does not depend on the cognitive domain and has been robustly demonstrated across multiple functional paradigms.

In our study, we did not rely on previously reported timing parameters but instead employed a dpTMS protocol using a 40-ms inter-pulse interval. Based on the inhibitory dynamics of this protocol, we designed a sliding temporal window sufficiently broad to encompass the integration period of interest. This approach enabled us to capture and localize the critical temporal window associated with ongoing integrative processing in the targeted brain region.

We acknowledge that the previous phrasing may have been ambiguous; a clearer and more detailed description of the dpTMS protocol is now provided in Lines 88-92: “To this end, we employed chronometric double-pulse transcranial magnetic stimulation, which is known to transiently reduce cortical excitability at the inter-pulse interval[27]. Within a temporal period broad enough to capture the full duration of gesture–speech integration[28], we targeted specific timepoints previously implicated in integrative processing within IFG and pMTG[23].”

References:

Romero, M. C., Davare, M., Armendariz, M., et al. (2019). Neural effects of transcranial magnetic stimulation at the single-cell level. Nature Communications, 10, 2642. https://doi.org/10.1038/s41467-019-10638-7

Paulus, W., & Rothwell, J. C. (2016). Membrane resistance and shunting inhibition: Where biophysics meets state-dependent human neurophysiology. The Journal of Physiology, 594(10), 2719-2728. https://doi.org/10.1113/JP271452

Obermeier, C., & Gunter, T. C. (2015). Multisensory Integration: The Case of a Time Window of Gesture-Speech Integration. Journal of Cognitive Neuroscience, 27(2), 292-307. https://doi.org/10.1162/jocn_a_00688

Comment 5: Moreover, the studies cited in the table provided by the authors have used a wide range of interpulse intervals, from 20 ms to 100 ms, suggesting that the temporal precision required to capture the dynamics of gesture-speech integration (which is believed to occur within 200-300 ms; Obermeier & Gunter, 2015) may not even be achievable with their 40 ms time windows.

Double-pulse TMS has been empirically validated across neurocognitive studies as an effective method for establishing causal temporal relationships in cortical networks, with demonstrated sensitivity at timescales spanning 3-60 ms. Our selection of a 40-ms inter-pulse interval represents an optimal compromise between temporal precision and physiological feasibility, as evidenced by its successful application in dissociating the functional contributions of interconnected regions, including ATL/pMTG (Teige et al., 2018) and IPS/DLPFC (Vernet et al., 2015). This methodological approach combines established experimental rigor with demonstrated empirical validity for investigating the precisely timed IFG-pMTG dynamics underlying gesture-speech integration, as shown in our current findings and prior work (Zhao et al., 2021).

Our experimental design comprehensively sampled the 0-320 ms post-stimulus period, fully encompassing the critical 200-300 ms window associated with gesture-speech integration raised by the reviewer. Notably, our results revealed temporally distinct causal dynamics within this period: a significant reduction of the semantic congruency effect emerged when the IFG was stimulated at 200-240 ms (TW6), followed by feedback projections from the IFG to the pMTG at 240-280 ms (TW7). This precisely timed interaction provides direct neurophysiological evidence for the proposed architecture of gesture-speech integration, demonstrating how these interconnected regions sequentially contribute to multisensory semantic integration.
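The coverage claim can be checked with simple interval arithmetic. The sketch below assumes, as above, that TW k spans [(k-1)·40, k·40) ms; `overlapping_tws` is a hypothetical helper that lists which of the eight windows overlap a given latency range.

```python
# Sketch: which 40-ms TWs overlap the 200-300 ms integration window
# (Obermeier & Gunter, 2015)? Assumes TW k spans [(k-1)*40, k*40) ms.
TW_MS = 40
N_TW = 8

def overlapping_tws(win_start: int, win_end: int) -> list[int]:
    """1-based TW indices whose span overlaps the half-open range [win_start, win_end)."""
    hits = []
    for k in range(1, N_TW + 1):
        start, end = (k - 1) * TW_MS, k * TW_MS
        if start < win_end and win_start < end:  # half-open interval overlap
            hits.append(k)
    return hits

sampled_ms = (0, N_TW * TW_MS)                    # (0, 320): full sampled period
covered = sampled_ms[0] <= 200 and 300 <= sampled_ms[1]
print(covered, overlapping_tws(200, 300))         # True [6, 7, 8]
```

Under this assumption, the reviewer's 200-300 ms window falls within TW6 to TW8, which include the IFG (TW6) and pMTG (TW7) windows discussed above.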

Comment 6: I do appreciate the extra analyses that the authors mention. However, my 5th comment is still unanswered: why not use entropy scores as a continuous measure?

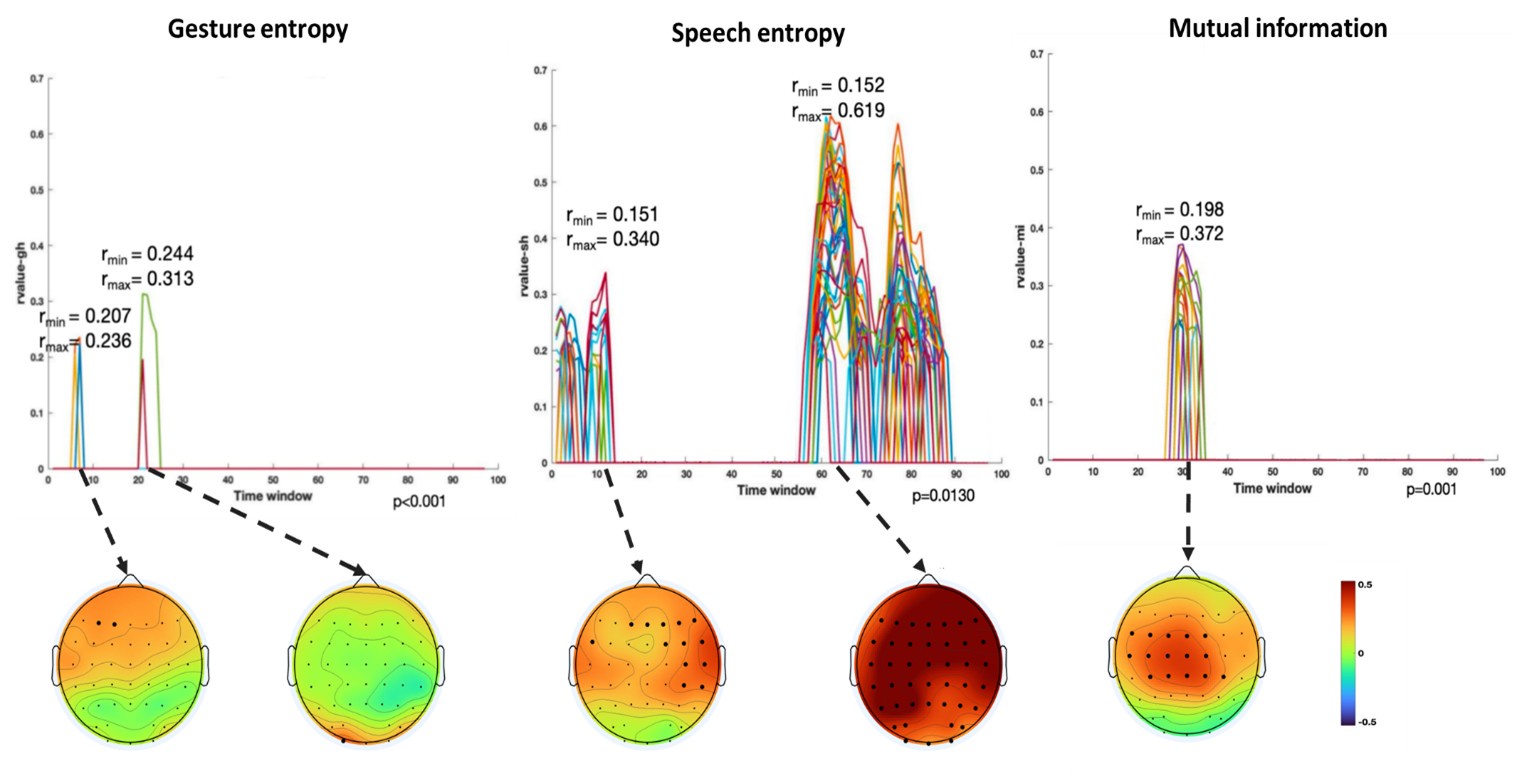

Analyses treating MI and entropy as continuous variables were conducted using Representational Similarity Analysis (RSA; Popal et al., 2019). This analysis aimed to build a model that predicts neural responses from these feature metrics.

To capture dynamic temporal features indicative of different stages of multisensory integration, we segmented the EEG data into overlapping time windows (40 ms in duration, with a 10-ms step size). The 40-ms window was chosen to match the TMS protocol of Experiment 2, which also employed 40-ms time windows. The 10-ms step size (equivalent to 5 time points) was used to detect subtle shifts in neural responses that a coarser step might miss, allowing a more granular analysis of the temporal dynamics of neural activity.

Following segmentation, the EEG data were reshaped into a four-dimensional matrix (42 channels × 20 time points × 97 time windows × 20 features). To construct a neural similarity matrix, we averaged the EEG data across time points within each channel and each time window. The resulting matrix was then processed with the pdist function to compute the distances between all pairs of the 20 features. We then calculated correlations between this neural matrix and three feature similarity matrices constructed in the same manner, corresponding to (1) gesture entropy, (2) speech entropy, and (3) mutual information (MI). This approach enabled us to quantify how well the neural responses corresponded to the semantic dimensions of the gesture and speech stimuli in each time window.

To determine the significance of the correlations between neural activity and the feature matrices, we conducted a permutation test with 1000 permutations. In this procedure, we randomized the data or feature matrices and recalculated the correlations repeatedly, generating a null distribution against which the observed correlation values were compared. An observed correlation was considered significant if it exceeded the threshold of the null distribution (p < 0.05). This permutation approach helps guard against spurious correlations and supports the robustness of the relationships between the neural data and the feature matrices.
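As background for the feature metrics, the sketch below computes Shannon entropy and mutual information in bits from a joint count table, using the standard identity MI(X;Y) = H(X) + H(Y) - H(X,Y). The count table is hypothetical, and this is a generic textbook computation; how the manuscript derives its gesture and speech probability distributions is not restated in this response.

```python
# Illustrative only: Shannon entropy and mutual information (bits) from a
# hypothetical joint count table. Generic computation, not necessarily the
# manuscript's exact procedure.
import numpy as np

def entropy_bits(p: np.ndarray) -> float:
    """Shannon entropy H = -sum(p * log2 p), skipping zero-probability cells."""
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def mutual_information_bits(joint_counts: np.ndarray) -> float:
    """MI(X;Y) = H(X) + H(Y) - H(X,Y) from a 2-D table of joint counts."""
    joint = joint_counts / joint_counts.sum()
    px = joint.sum(axis=1)   # row marginal (e.g., gesture-based labels)
    py = joint.sum(axis=0)   # column marginal (e.g., speech-based labels)
    return entropy_bits(px) + entropy_bits(py) - entropy_bits(joint.ravel())

# Hypothetical counts: rows = gesture-based labels, columns = speech-based labels.
counts = np.array([[8, 2],
                   [2, 8]])
print(round(entropy_bits(counts.sum(axis=1) / counts.sum()), 3))  # 1.0
print(round(mutual_information_bits(counts), 3))                  # 0.278
```

Higher MI indicates greater overlap between the two label distributions; with independent rows and columns it would be 0.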
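The window bookkeeping can be checked with a short sketch. It assumes a 500 Hz sampling rate (implied by a 10-ms step equaling 5 time points) and a 1000-ms epoch, which we infer because it reproduces the 97 windows reported for the reshaped matrix; neither value is stated explicitly, and the array names are illustrative.

```python
# Sketch of the sliding-window segmentation. Assumptions (inferred, not stated):
# 500 Hz sampling (10-ms step = 5 points) and a 1000-ms epoch (gives 97 windows).
import numpy as np

FS_HZ = 500               # assumed sampling rate
WIN_MS, STEP_MS = 40, 10

def window_starts(n_samples: int, fs: int = FS_HZ) -> np.ndarray:
    """Start indices (in samples) of overlapping 40-ms windows with a 10-ms step."""
    win = WIN_MS * fs // 1000      # 20 samples per window at 500 Hz
    step = STEP_MS * fs // 1000    # 5 samples per step at 500 Hz
    return np.arange(0, n_samples - win + 1, step)

def segment(eeg: np.ndarray, fs: int = FS_HZ) -> np.ndarray:
    """(channels, samples, items) -> (channels, win_samples, n_windows, items)."""
    win = WIN_MS * fs // 1000
    starts = window_starts(eeg.shape[1], fs)
    return np.stack([eeg[:, s:s + win, :] for s in starts], axis=2)

eeg = np.zeros((42, 500, 20))      # 42 channels, 1000 ms, 20 items (placeholder)
print(segment(eeg).shape)          # (42, 20, 97, 20)
```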
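A minimal sketch of the RSA step for one channel and one time window follows. It assumes the (channels × time points × windows × items) layout above, treats the 20 "features" as stimulus items, uses Euclidean distance on the window-averaged amplitude, and uses Spearman correlation between dissimilarity matrices; the response does not specify the distance metric or the correlation type, so these are assumptions. `scipy.spatial.distance.pdist` returns the condensed upper triangle, here 20 × 19 / 2 = 190 pairwise distances.

```python
# Minimal RSA sketch for one channel and one time window. Distance metric and
# correlation type are assumptions; data below are random placeholders.
import numpy as np
from scipy.spatial.distance import pdist
from scipy.stats import spearmanr

def neural_rdm(segmented: np.ndarray, ch: int, tw: int) -> np.ndarray:
    """Average over time points in one window, then all pairwise item distances."""
    item_means = segmented[ch, :, tw, :].mean(axis=0)       # shape (items,)
    return pdist(item_means[:, None], metric="euclidean")   # condensed RDM

def feature_rdm(values: np.ndarray) -> np.ndarray:
    """Pairwise absolute differences of a per-item feature (e.g., MI)."""
    return pdist(values[:, None], metric="euclidean")

rng = np.random.default_rng(0)
segmented = rng.normal(size=(42, 20, 97, 20))   # placeholder EEG data
mi = rng.uniform(size=20)                        # hypothetical per-item MI values
rho, _ = spearmanr(neural_rdm(segmented, ch=0, tw=0), feature_rdm(mi))
print(round(float(rho), 3))
```

Repeating this for every channel and window yields the channel-by-time map of correlations that the permutation test then evaluates.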
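The permutation logic can be sketched as follows. This assumes the null distribution is built by shuffling the item labels of the feature RDM (rows and columns together), a common choice in RSA; the response does not specify which matrix was shuffled. The `+1` correction keeps the p-value from being exactly zero, and the example data are placeholders.

```python
# Sketch of a 1000-permutation test for one RSA correlation. Assumption: the
# null shuffles item labels of the feature RDM; data below are placeholders.
import numpy as np
from scipy.spatial.distance import pdist, squareform
from scipy.stats import spearmanr

def rsa_permutation_p(neural: np.ndarray, feature: np.ndarray,
                      n_perm: int = 1000, seed: int = 0) -> tuple[float, float]:
    """Return (observed rho, one-sided permutation p) for two condensed RDMs."""
    rng = np.random.default_rng(seed)
    observed = spearmanr(neural, feature)[0]
    feat_sq = squareform(feature)                 # condensed -> square matrix
    n_items = feat_sq.shape[0]
    null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(n_items)
        shuffled = feat_sq[np.ix_(perm, perm)]    # relabel items consistently
        null[i] = spearmanr(neural, squareform(shuffled, checks=False))[0]
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return float(observed), float(p)

rng = np.random.default_rng(1)
x = rng.uniform(size=20)
neural = pdist(x[:, None])                                    # placeholder neural RDM
feature = pdist((x + 0.1 * rng.normal(size=20))[:, None])     # correlated feature RDM
rho, p = rsa_permutation_p(neural, feature)
print(round(rho, 2), p < 0.05)
```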

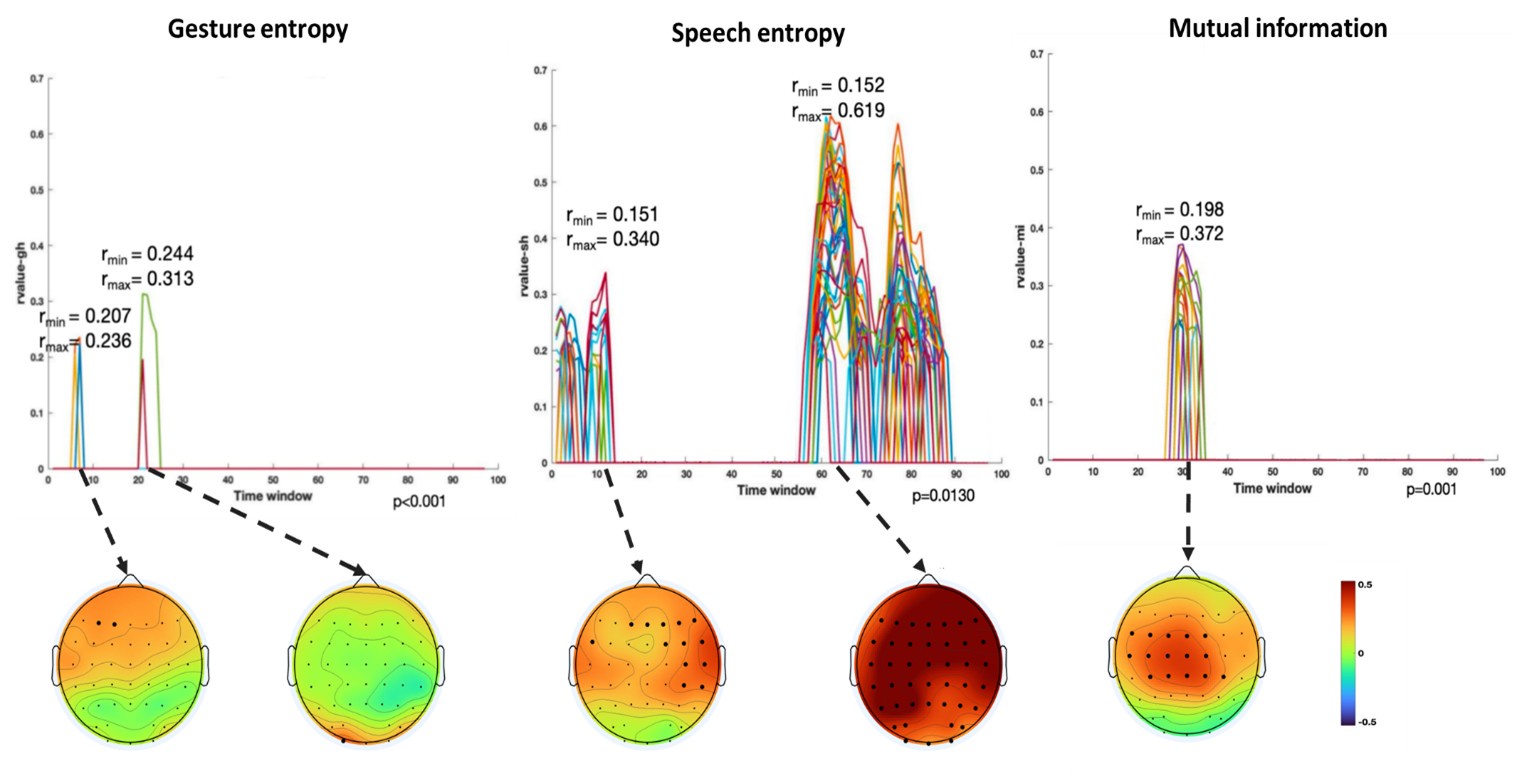

Finally, significant correlations were subjected to clustering analysis, which grouped similar neural response patterns across time windows and channels. This clustering allowed us to identify temporal and spatial patterns in the neural data that consistently aligned with the semantic features of gesture and speech stimuli, thus revealing the dynamic integration of these multisensory modalities across time. Results are as follows:

(1) Two significant clusters were identified for gesture entropy (Author response image 1, left). The first cluster was observed between 60-110 ms (channels F1 and F3), with correlation coefficients (r) ranging from 0.207 to 0.236 (p < 0.001). The second cluster was found between 210-280 ms (channel O1), with r-values ranging from 0.244 to 0.313 (p < 0.001).

(2) For speech entropy (Author response image 1, middle), significant clusters were detected in both early and late time windows. In the early time windows, the largest significant cluster was found between 10-170 ms (channels F2, F4, F6, FC2, FC4, FC6, C4, C6, CP4, and CP6), with r-values ranging from 0.151 to 0.340 (p = 0.013), corresponding to the P1 component (0-100 ms). In the late time windows, the largest significant cluster was observed between 560-920 ms (all channels), with r-values ranging from 0.152 to 0.619 (p = 0.013).

(3) For mutual information (MI) (Author response image 1, right), a significant cluster was found between 270-380 ms (channels FC1, FC2, FC3, FC5, C1, C2, C3, C5, CP1, CP2, CP3, CP5, FCz, Cz, and CPz), with r-values ranging from 0.198 to 0.372 (p = 0.001).

Author response image 1.

Results of RSA analysis.
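The cluster-forming step described before the results can be approximated, for a single channel, by grouping runs of consecutive significant time windows. The sketch below is a simplified stand-in: the actual clustering also grouped across channels, and the adjacency rule used here (consecutive window indices) is an assumption.

```python
# Simplified sketch: temporal clusters for one channel as runs of consecutive
# significant windows. The cross-channel grouping of the real analysis is omitted.
import numpy as np

def temporal_clusters(sig: np.ndarray) -> list[tuple[int, int]]:
    """Return (first, last) window indices for each run of True values."""
    clusters, start = [], None
    for i, flag in enumerate(sig):
        if flag and start is None:
            start = i                          # a new run begins
        elif not flag and start is not None:
            clusters.append((start, i - 1))    # the current run ends
            start = None
    if start is not None:                      # run reaching the last window
        clusters.append((start, len(sig) - 1))
    return clusters

sig = np.array([False, True, True, False, True])   # placeholder significance mask
print(temporal_clusters(sig))                       # [(1, 2), (4, 4)]
```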

These additional findings suggest that, even with a different modeling approach, neural responses track the entropy and mutual information metrics at latencies that align with the distinct ERP components and clusters reported in the current manuscript. This alignment further consolidates the results and reinforces our conclusions. Given the length of the manuscript, we have not included these results in it.

Reference:

Popal, H., Wang, Y., & Olson, I. R. (2019). A guide to representational similarity analysis for social neuroscience. Social cognitive and affective neuroscience, 14(11), 1243-1253.

Comment 7: In light of these concerns, I do not believe the authors have adequately demonstrated the spatial and temporal specificity required to disentangle the contributions of the IFG and pMTG during the gesture-speech integration process. While the authors have made a sincere effort to address the concerns raised by the reviewers, and have done so with a lot of new analyses, I remain doubtful that the current methodological approach is sufficient to draw conclusions about the causal roles of the IFG and pMTG in gesture-speech integration.

To sum up:

(1) Empirical validation from our prior work (Zhao et al., 2018, 2021, J Neurosci): The selection of the IFG and pMTG as target regions was informed by (i) a comprehensive meta-analysis of fMRI studies on gesture-speech integration and (ii) our own prior causal evidence (Zhao et al., 2018, J Neurosci), with detailed stereotactic coordinates provided in the attached Response to Editors and Reviewers letter. The temporal parameters were similarly grounded in empirical data from Zhao et al. (2021, J Neurosci), in which we systematically examined eight consecutive 40-ms windows spanning the full integration period (0-320 ms). That study revealed a triple dissociation, with effects occurring exclusively (a) for semantic integration (but not for the control factor), (b) under active stimulation (but not sham), and (c) in specific time windows (but not all time windows). This provides robust causal evidence for the spatiotemporal specificity of IFG-pMTG interactions in gesture-speech processing. Notably, both reviewers recognized the methodological strength of this dpTMS approach in their evaluations (see the attached J Neurosci assessments for details).

(2) Convergent evidence from Experiment 3: Our study employed a multi-method approach incorporating three complementary experimental paradigms, each using a distinct neurophysiological technique to provide converging evidence. Specifically, Experiment 3 used high-temporal-resolution EEG, which independently replicated the time-sensitive dynamics of gesture-speech integration observed in our double-pulse TMS experiments. The convergence between these methodologically independent approaches, demonstrating consistent temporal staging of IFG-pMTG interactions across both causal (TMS) and correlational (EEG) measures, significantly strengthens the validity and generalizability of our conclusions regarding the neural mechanisms underlying multisensory integration.

(3) Established precedents in the double-pulse TMS literature: The double-pulse TMS methodology employed in our study is firmly grounded in established neuroscience research. As documented in our detailed Response to Editors and Reviewers letter (citing 11 representative studies), dpTMS has been extensively validated for investigating causal temporal dynamics in cortical networks, with demonstrated sensitivity at timescales ranging from 3 to 60 ms. Particularly relevant precedents include (i) Teige et al. (2018, Cortex), who dissociated the functional contributions of anatomically proximal regions (ATL vs. pMTG vs. mid-MTG) using 40-ms-interval double-pulse TMS, and (ii) Vernet et al. (2015, Cortex), who distinguished neural processing in interconnected frontoparietal regions (right IPS vs. DLPFC) using 40-ms double-pulse TMS. Both studies used the same 40-ms inter-pulse interval as our current study.

(4) Neurophysiological plausibility: The neurophysiological basis for the transient double-pulse TMS effects is well established through mechanistic studies of TMS-induced cortical inhibition (Romero et al., 2019; Paulus & Rothwell, 2016).

Taken together, we respectfully submit that our methodology provides robust support for our conclusions.