A differentiable Gillespie algorithm for simulating chemical kinetics, parameter estimation, and designing synthetic biological circuits

eLife Assessment

This important study introduces a fully differentiable variant of the Gillespie algorithm as an approximate stochastic simulation scheme for complex chemical reaction networks, allowing kinetic parameters to be inferred from empirical measurements of network outputs using gradient descent. The concept and algorithm design are convincing and innovative. While the proofs of concept are promising, some questions are left open about implications for more complex systems that cannot be addressed by existing methods. This work has the potential to be of significant interest to a broad audience of quantitative and synthetic biologists.

https://doi.org/10.7554/eLife.103877.3.sa0Important: Findings that have theoretical or practical implications beyond a single subfield

- Landmark

- Fundamental

- Important

- Valuable

- Useful

Convincing: Appropriate and validated methodology in line with current state-of-the-art

- Exceptional

- Compelling

- Convincing

- Solid

- Incomplete

- Inadequate

During the peer-review process the editor and reviewers write an eLife Assessment that summarises the significance of the findings reported in the article (on a scale ranging from landmark to useful) and the strength of the evidence (on a scale ranging from exceptional to inadequate). Learn more about eLife Assessments

Abstract

The Gillespie algorithm is commonly used to simulate and analyze complex chemical reaction networks. Here, we leverage recent breakthroughs in deep learning to develop a fully differentiable variant of the Gillespie algorithm. The differentiable Gillespie algorithm (DGA) approximates discontinuous operations in the exact Gillespie algorithm using smooth functions, allowing for the calculation of gradients using backpropagation. The DGA can be used to quickly and accurately learn kinetic parameters using gradient descent and design biochemical networks with desired properties. As an illustration, we apply the DGA to study stochastic models of gene promoters. We show that the DGA can be used to: (1) successfully learn kinetic parameters from experimental measurements of mRNA expression levels from two distinct Escherichia coli promoters and (2) design nonequilibrium promoter architectures with desired input–output relationships. These examples illustrate the utility of the DGA for analyzing stochastic chemical kinetics, including a wide variety of problems of interest to synthetic and systems biology.

Introduction

Randomness is a defining feature of our world. Stock market fluctuations, the movement of particles in fluids, and even the change of allele frequencies in organismal populations can all be described using the language of stochastic processes. For this reason, disciplines as diverse as physics, biology, ecology, evolution, finance, and engineering have all developed tools to mathematically model stochastic processes (Van Kampen, 1992; Gardiner, 2009; Rolski et al., 2009; Wong and Hajek, 2012). In the context of biology, an especially fruitful area of research has been the study of stochastic gene expression in single cells (McAdams and Arkin, 1997; Elowitz et al., 2002; Raj and van Oudenaarden, 2008; Sanchez and Golding, 2013). The small number of molecules involved in gene expression make stochasticity an inherent feature of protein production and numerous mathematical and computational techniques have been developed to model gene expression and relate mathematical models to experimental observations (Paulsson, 2005; Wilkinson, 2018).

One prominent computational algorithm for understanding stochasticity in gene expression is the Gillespie algorithm, with its Direct Stochastic Simulation Algorithm variant being the most commonly used method (Doob, 1945; Gillespie, 1977). The Gillespie algorithm is an extremely efficient computational technique used to simulate the time evolution of a system in which events occur randomly and discretely (Gillespie, 1977). Beyond gene expression, the Gillespie algorithm is widely employed across numerous disciplines to model stochastic systems characterized by discrete, randomly occurring events including epidemiology (Pineda-Krch, 2008), ecology (Parker and Kamenev, 2009; Dobramysl et al., 2018), neuroscience (Benayoun et al., 2010; Rijal et al., 2024), and chemical kinetics (Gillespie, 1976; Gillespie, 2007).

Here, we revisit the Gillespie algorithm in light of the recent progress in deep learning and differentiable programming by presenting a ‘fully differentiable’ variant of the Gillespie algorithm we dub the differentiable Gillespie algorithm (DGA). The DGA modifies the traditional Gillespie algorithm to take advantage of powerful automatic differentiation libraries for example, PyTorch (Paszke et al., 2019), Jax (Bradbury et al., 2018), and Julia (Bezanson et al., 2017) and gradient-based optimization. The DGA allows us to quickly fit kinetic parameters to data and design discrete stochastic systems with a desired behavior. Our work is similar in spirit to other recent work that seeks to harness the power of differentiable programming to accelerate scientific simulations (Liao et al., 2019; Schoenholz and Cubuk, 2020; Wei et al., 2019; Degrave et al., 2019; Arya et al., 2022; Arya et al., 2023; Bezgin et al., 2023). The DGA’s use of differential programming tools also complements more specialized numerical methods designed for performing parameter sensitivity analysis on Gillespie simulations such as finite-difference methods (Anderson, 2012; Srivastava et al., 2013; Thanh et al., 2018), the likelihood ratio method (Glynn, 1990; McGill et al., 2012; Núñez and Vlachos, 2015), and pathwise derivative methods (Sheppard et al., 2012).

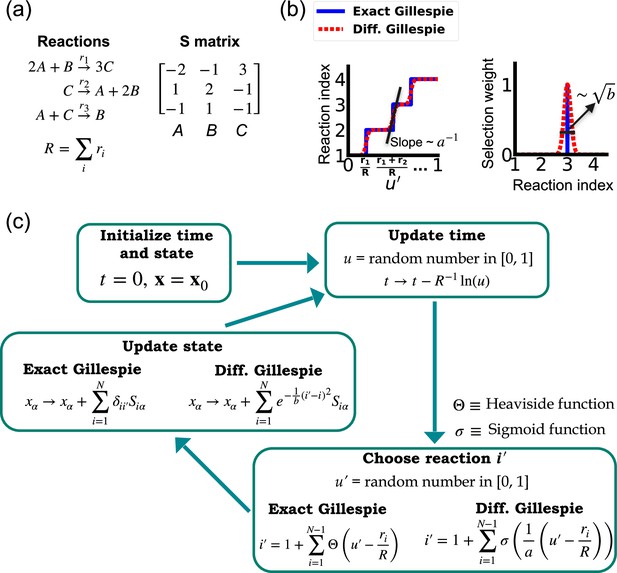

One of the difficulties in formulating a differentiable version of the Gillespie algorithm is that the stochastic systems it treats are inherently discrete. For this reason, there is no obvious way to take derivatives with respect to kinetic parameters without making approximations. As shown in Figure 1, in the traditional Gillespie algorithm both the selection of the index for the next reaction and the updates of chemical species are both discontinuous functions of the kinetic parameters. To circumnavigate these difficulties, the DGA modifies the traditional Gillespie algorithm by approximating discrete operations with continuous, differentiable functions, smoothing out abrupt transitions to facilitate gradient computation via automatic differentiation (Figure 1). This significant modification preserves the core characteristics of the original algorithm while enabling integration with modern deep learning techniques.

Comparison between the exact Gillespie algorithm and the differentiable Gillespie algorithm (DGA) for simulating chemical kinetics.

(a) Example of kinetics with reactions with rates . (b) Illustration of the DGA’s approximations: replacing the non-differentiable Heaviside and Kronecker delta functions with smooth sigmoid and Gaussian functions, respectively. (c) Flow chart comparing exact and differentiable Gillespie simulations.

One natural setting for exploring the efficacy of the DGA is recent experimental and theoretical works exploring stochastic gene expression. Here, we focus on a set of beautiful experiments that explore the effect of promoter architecture on steady-state gene expression (Jones et al., 2014). An especially appealing aspect of Jones et al., 2014 is that the authors independently measured the kinetic parameters for these promoter architectures using orthogonal experiments. This allows us to directly compare the predictions of DGA to ground truth measurements of kinetic parameters. We then extend our considerations to more complex promoter architectures (Lammers et al., 2023) and illustrate how the DGA can be used to design circuits with a desired input–output relation.

Results

A differentiable approximation to the Gillespie algorithm

Before proceeding to discussing the DGA, we start by briefly reviewing how the traditional Gillespie algorithm simulates discrete stochastic processes. For concreteness, in our exposition, we focus on the chemical system shown in Figure 1 consisting of three species, A, B, and C, whose abundances are described by a state vector . These chemical species can undergo chemical reactions, characterized by rate constants, where , and a stoichiometric matrix whose ith row encodes how the abundance of species changes due to reaction . Note that in what follows, we will often supress the dependence of the rates on and simply write .

In order to simulate such a system, it is helpful to discretize time into small intervals of size . The probability that a reaction with rate occurs during such an interval is simply . By construction, we choose to be small enough that and that the probability that a reaction occurs in any interval is extremely small and well described by a Poisson process. This means that naively simulating such a process is extremely inefficient because, in most intervals, no reactions will occur.

Gillespie algorithm

The Gillespie algorithm circumnavigates this problem by: (1) exploiting the fact that the reactions are independent so that the rate at which any reaction occurs is also described by an independent Poisson process with rate and (2) the waiting time distribution of a Poisson process with rate is the exponential distribution . The basic steps of the Gillespie algorithm are illustrated in Figure 1.

The simulation begins with the initialization of time and state variables:

where is the simulation time. One then samples the waiting time distribution for a reaction to occur to determine when the next the reaction occurs. This is done by drawing a random number from a uniform distribution over and updating

Note that this time update is a fully differentiable function of the rates .

In order to determine which of the reactions occurs after a time , we note that probability that reaction occurs is simply given by . Thus, we can simply draw another random number and choose such that equals the smallest integer satisfying

The reaction abundances are then updated using the stoichiometric matrix

Unlike the time update, both the choice of the reaction and the abundance updates are not differentiable since the choice of the reaction is a discontinuous function of the parameters .

Approximating updates in the Gillespie with differentiable functions

In order to make use of modern deep learning techniques and modern automatic differentiation packages, it is necessary to modify the Gillespie algorithm in such as way as to make the choice of reaction index (Equation 2) and abundance updates (Equation 3) differentiable functions of the kinetic parameters. To do so, we rewrite Equation 2 using a sum of Heaviside step function (recall if and if :

This formulation of index selection makes clear the source of non-differentiability. The derivative of the with respect to does not exist at the transition points where the Heaviside function jumps (see Figure 1b).

This suggests a natural modification of the Gillespie algorithm to make it differentiable – replacing the Heaviside function by a sigmoid function of the form

where we have introduced a ‘hyper-parameter’ that controls the steepness of the sigmoid and plays an analogous role to temperature in a Fermi function in statistical mechanics. A larger value of results in a steeper slope for the sigmoid functions, thereby more closely approximating the true Heaviside functions which is recovered in the limit (see Figure 1b). With this replacement, the index selection equation becomes

Note that in making this approximation, our index is no longer an integer, but instead can take on all real values between 0 and . However, by making sufficiently small, Equation 6 still serves as a good approximation to the discrete jumps in Equation 4. In general, is a hyperparameter that is chosen to be as small as possible while still ensuring that the gradient of with respect to the kinetic parameters can be calculated numerically with high accuracy. For a detailed discussion, please see Appendix 1—figure 1 and Appendix 1.

Since the index is no longer an integer but a real number, we must also modify the abundance update in Equation 3 to make it fully differentiable. To do this, we start by rewriting Equation 3 using the Kronecker delta (where if and if ) as

Since is no longer an integer, we can approximate the Kronecker delta by a Gaussian function, to arrive at the approximate update equation

The hyperparameter is generally chosen to be as small as possible while still ensuring numerical stability of gradients (Appendix 1—figure 1). Note by using an abundance update of the form Equation 8, the species abundances are now real numbers. This is in stark contrast with the exact Gillespie algorithm where the abundance update (Equation 7) ensures that the are all integers.

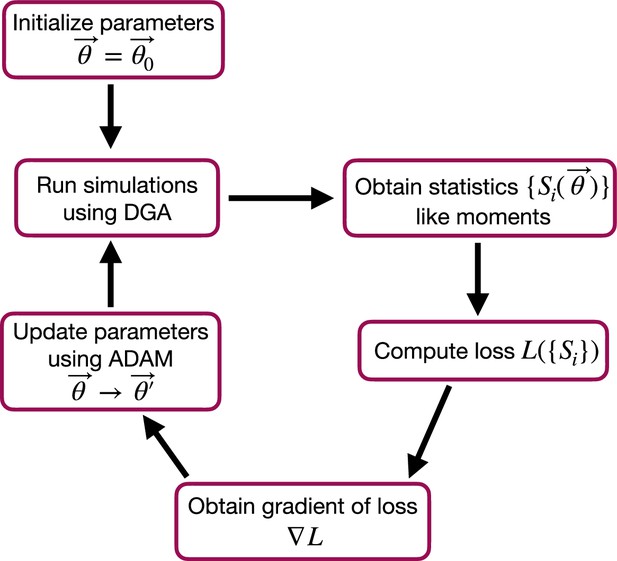

Combining the DGA with gradient-based optimization

The goal of making Gillespie simulations differentiable is to enable the computation of the gradient of a loss function, , with respect to the kinetics parameters . A loss function quantifies the difference between simulated and desired values for quantities of interest. For example, when employing the DGA in the context of fitting noisy gene expression models, a natural choice for is the difference between the simulated and experimentally measured moments of mRNA/protein expression (or alternatively, the Kullback–Leibler divergence between the experimental and simulated mRNA/protein expression distributions if full distributions can be measured). When using the DGA to design gene circuits, the loss function can be any function that characterizes the difference between the simulated and desired values of the input–output relation.

The goal of the optimization using the DGA is to find parameters that minimize the loss. The basic workflow of a DGA-based optimization is shown in Figure 2. One starts with an initial guess for the parameters . One then uses DGA algorithm to simulate the systems and calculate the gradient of the loss function . One then updates the parameters, moving in the direction of the gradient using gradient descent or more advanced methods such as ADAM (Kingma and Ba, 2014; Mehta et al., 2019), which uses adaptive estimates of the first and second moments of the gradients to speed up convergence to a local minimum of the loss function.

Flow chart of the parameter optimization process using the differentiable Gillespie algorithm (DGA).

The process begins by initializing the parameters . Simulations are then run using the DGA to obtain statistics like moments. These statistics are used to compute the loss , and the gradient of the loss is obtained. Finally, parameters are updated using the ADAM optimizer, and the process iterates to minimize the loss.

The price of differentiability

A summary of the DGA is shown in Figure 1. Unsurprisingly, differentiability comes at a price. The foremost of these is that unlike the Gillespie algorithm, the DGA is no longer exact. The DGA replaces the exact discrete stochastic system by an approximate differentiable stochastic system. This is done by allowing both the reaction index and the species abundances to be continuous numbers. Though in theory, the errors introduced by these approximations can be made arbitrarily small by choosing the hyper-parameters and small enough (see Figure 1), in practice, gradients become numerically unstable when and are sufficiently small (see Appendix 1 and Appendix 1—figure 1).

In what follows, we focus almost exclusively on steady-state properties that probe the ‘bulk’, steady-state properties of the stochastic system of interest. We find the DGA works well in this setting. However, we note that the effect of the approximations introduced by the DGA may be pronounced in more complex settings such as the calculation of rare events, modeling of tail-driven processes, or dealing with non-stationary time series.

In order to better understand the DGA in the context of stochastic gene expression, we benchmarked the DGA on a simple two-state promoter model inspired by experiments in Escherichia coli (Jones et al., 2014). This simple model had several advantages that make it well suited for exploring the performance of DGA. These include the ability to analytically calculate mRNA expression distributions and independent experimental measurements of kinetic parameters.

Two-state promoter model

Gene regulation is tightly regulated at the transcriptional level to ensure that genes are expressed at the right time, place, and in the right amount (Phillips et al., 2012). Transcriptional regulation involves various mechanisms, including the binding of transcription factors to specific DNA sequences, the modification of chromatin structure, and the influence of non-coding RNAs, which collectively control the initiation and rate of transcription (Phillips et al., 2012; Sanchez et al., 2013; Phillips et al., 2019). By orchestrating these regulatory mechanisms, cells can respond to internal signals and external environmental changes, maintaining homeostasis and enabling proper development and function.

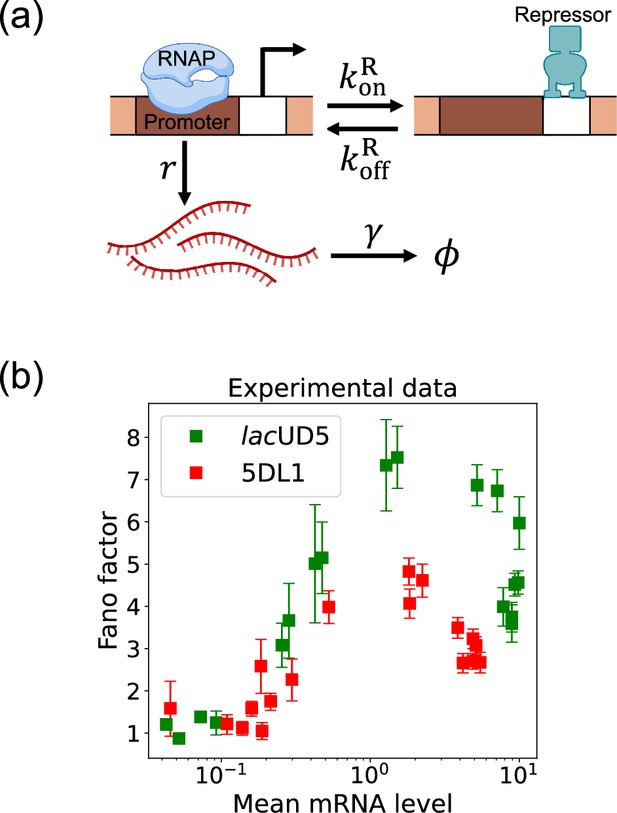

Here, we focus on a classic two-state promoter gene regulation (Jones et al., 2014). Two-state promoter systems are commonly studied because they provide a simplified yet powerful model for understanding gene regulation dynamics. These systems, characterized by promoters toggling between active and inactive states, offer insights into how genes are turned on or off in response to various stimuli (see Figure 3a). The two-state gene regulation circuit involves the promoter region, where RNA polymerase (RNAP) binds to initiate transcription and synthesize mRNA molecules at a rate . A repressor protein can also bind to the operator site at a rate and unbind at a rate . When the repressor is bound to the operator, it prevents RNAP from accessing the promoter, effectively turning off transcription. mRNA is also degraded at a rate . An appealing feature of this model is that both mean mRNA expression and the Fano factor can be calculated analytically and there exist beautiful quantitative measurements of both these quantities (Figure 3b). For this reason, we use this two-state promoters to benchmark the efficacy of DGA below.

Two-state gene regulation architecture.

(a) Schematic of gene regulatory circuit for transcriptional repression. RNA polymerase (RNAP) binds to the promoter region to initiate transcription at a rate , leading to the synthesis of mRNA molecules (red curvy lines). mRNA is degraded at a rate . A repressor protein can bind to the operator site, with association and dissociation rates and , respectively. (b) Experimental data from Phillips et al., 2019, showing the relationship between the mean mRNA level and the Fano factor for two different promoter constructs: lacUD5 (green squares) and 5DL1 (red squares).

Characterizing errors due to approximations in the DGA

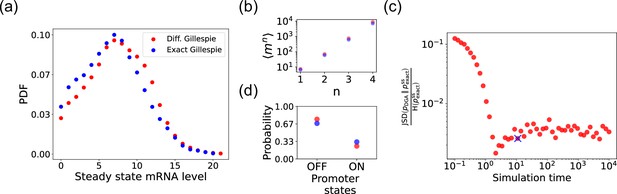

We begin by testing the DGA to do forward simulations on the two-state promoter system described above and comparing the results to simulations performed with the exact Gillespie algorithm (see Appendix 2 for simulation details). Figure 4a compares the probability distribution function (PDF) for the steady-state mRNA levels obtained from the DGA (in red) and the exact Gillespie simulation (in blue). The close overlap of these distributions demonstrates that the DGA can accurately replicate the results of the exact Gillespie simulation. This is also shown by the very close match of the first four moments of the mRNA count between the exact Gillespie and the DGA in Figure 4b, though the DGA systematically overestimates these moments. As observed in Figure 4a, the DGA also fails to accurately capture the tails of the underlying PDF. This discrepancy arises because rare events result from very frequent low-probability reaction events where the sigmoid approximation used in the DGA significantly impacts the reaction selection process and, consequently, the final simulation results.

Accuracy of the differentiable Gillespie algorithm (DGA) in simulating the two-state promoter architecture in Figure 3a.

Comparison between the DGA and exact simulations for (a) steady-state mRNA distribution, (b) moments of the steady-state mRNA distribution, and (d) the probability for the promoter to be in the ‘ON’ or ‘OFF’ state. (c) Ratio of the Jensen–Shannon divergence between the differentiable Gillespie probability distribution function (PDF) and the exact steady-state PDF , and the Shannon entropy of the exact steady-state PDF. In all of the plots, 2000 trajectories are used. The simulation time used in panels (a), (b), and (d) is marked by blue ‘x’. Parameter values: , , , , , and .

Next, we compare the accuracy of the DGA in simulating mRNA abundance distributions across a range of simulation times (see Figure 4c). The accuracy is quantified by the ratio of the Jensen–Shannon divergence between the differentiable Gillespie PDF and the exact steady-state PDF , and the entropy of the exact steady-state PDF. For probability distributions and over the same discrete space , the JSD and H are defined as:

where and denotes the Kullback–Leibler divergence

The ratio normalizes divergence by entropy, enabling meaningful comparison across systems. As expected, the ratio decreases with increasing simulation time, indicating convergence toward the steady-state distribution of the exact Gillespie simulation. By ‘steady-state distribution’, we mean the long-term probability distribution of states that the exact Gillespie algorithm approaches after a simulation time of 104. The saturation of the ratio at approximately 0.003 for long simulation times is due to the finite values of and . In percentage terms, this ratio represents a 0.3% divergence, meaning that the DGA’s approximation introduces only a 0.3% deviation from the exact distribution, relative to the total uncertainty (entropy) in the exact system.

Finally, the bar plot in Figure 4d shows simulation results for the probability of the promoter being in the ‘OFF’ and ‘ON’ states as predicted by the DGA (in red) and the exact Gillespie simulation (in blue). The differentiable Gillespie overestimates the probability of being in the ‘OFF’ state and underestimates the probability of being in the ‘ON’ state. Nonetheless, given the discrete nature of this system, the DGA does a reasonable job of matching the results of the exact simulations.

As we will see below, despite these errors the DGA is able to accurately capture gradient information and hence works remarkably well at gradient-based optimization of loss functions.

Parameter estimation using the DGA

In many applications, one often wants to estimate kinetic parameters from experimental measurements of a stochastic system (Tian et al., 2007; Munsky et al., 2009; Komorowski et al., 2009; Villaverde et al., 2019). For example, in the context of gene expression, biologists are often interested in understanding biophysical parameters such as the rate at which promoters switch between states or a transcription factor unbinds from DNA. However, estimating kinetic parameters in stochastic systems poses numerous challenges because the vast majority of methods for parameter estimation are designed with deterministic systems in mind. Moreover, it is often difficult to analytically calculate likelihood functions making it difficult to perform statistical inference. One attractive method for addressing these difficulties is to combine differentiable Gillespie simulations with gradient-based optimization methods. By choosing kinetic parameters that minimize the difference between simulations and experiments as measured by a loss function, one can quickly and efficiently estimate kinetic parameters and error bars.

Loss function for parameter estimation

To use the DGA for parameter estimation, we start by defining a loss function that measures the discrepancy between simulations and experiments. In the context of the two-state promoter model (Figure 3), a natural choice of loss function is the square error between the simulated and experimentally measured mean and standard deviations of the steady-state mRNA distributions:

where and denote the mean and standard deviation obtained from DGA simulations, and and are the experimentally measured values of the same quantities. Having specified the loss function and parameters, we then use the gradient-based optimization to minimize the loss and find the optimal parameters (see Figure 2). Note that in general the solution to the optimization problem need not be unique (see below).

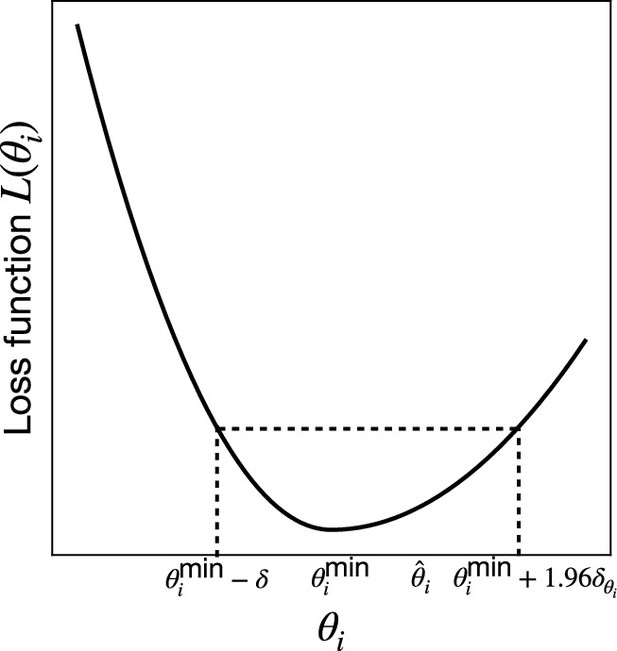

Confidence intervals and visualizing loss landscapes

Given a set of learned parameters that minimize , one would also ideally like to assign a confidence interval (CI) to this estimate that reflect how constrained these parameters are. One natural way to achieve this is by examining the curvature of the loss function as the parameter varies around its minimum value, . Motivated by this, we define the 95% CIs for parameter by:

where

and . A detailed explanation of how to numerically estimate the CIs is given in Appendix 3.

One shortcoming of Equation 13 is that it treats each parameter in isolation and ignores correlations between parameters. On a technical level, this is reflected in the observation that the CIs only know about the diagonal elements of the full Hessian . This shortcoming is especially glaring when there are many sets of parameters that all optimize the loss function (Einav et al., 2018; Razo-Mejia et al., 2018). As discuss below, this is often the case in many stochastic systems including the two-state promoter architecture in Figure 3. For this reason, it is often useful to make two dimensional plots of the loss function . To do so, for each pair of parameters, we simply sample the parameters around their optimal value and forward simulate to calculate the loss function . We then use this simulations to create two-dimensional heat maps of the loss function. This allows us to identify ‘soft directions’ in parameter space, where the loss function changes slowly, indicating weak sensitivity to specific parameter combinations.

Parameter estimation on synthetic data

Before proceeding to experiments, we start by benchmarking the DGA’s ability to perform parameter estimation on synthetic data generated using the two-state promoter model shown in Figure 3. This model nominally has four independent kinetic parameters: the rate at which repressors bind the promoter, ; the rate at which the repressor unbinds from the promoter, ; the rate at which mRNA is produced, ; and the rate at which mRNA degrades, . Since we are only concerned with steady-state properties of the mRNA distribution, we choose to measure time in units of the off rate and set in everything that follows. In Appendix 4, we make use of exact analytical results for and to show that the solution to the optimization problem specified by loss function in Equation 11 is degenerate – there are many combinations of the three parameters that all optimize . On the other hand, if one fixes the mRNA degradation rate , this degeneracy is lifted and there is a unique solution to the optimization problem for the two parameters . We discuss both these cases below.

Generating synthetic data

To generate synthetic data, we randomly sample the three parameters: , , and within the range , while keeping fixed at 1. In total, we generate 20 different sets of random parameters. We then perform exact Gillespie simulations for each set of parameters. Using these simulations, we obtain the mean and standard deviation of the mRNA levels, which are then used as input to the loss function in Equation 11. We then use the DGA to estimate the parameters using the procedure outlined above and compare the resulting predictions with ground truth values for simulations.

Estimating parameters in the non-degenerate case

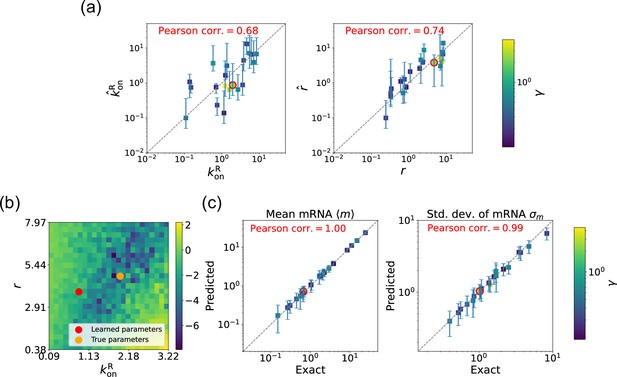

We begin by considering the case where the mRNA degradation rate is known and the goal is to estimate the two other parameters: the repressor binding rate and the mRNA production rate . As discussed above, in this case, the loss function in Equation 11 has a unique minima, considerably simplifying the inference task. Figure 5a shows a scatter plot of the learned and the true parameter values for wide variety of choices of . As can be seen, there is very good agreement between the true parameters and learned parameters. Figure 5c shows that even when the true and learned parameters differ, the DGA can predict the mean and standard deviation of the steady-state mRNA distribution almost perfectly (see Appendix 5 for discussion of how error bars were estimated). To better understand this, we selected a set of learned parameters: , , and . We then plotted the loss function in the neighborhood of these parameters (Figure 5b). As can be seen, the loss function around the true parameters is quite flat and the learned parameters live at the edge of this flat region. The flatness of the loss function reflects the fact that the mean and standard deviation of the mRNA distribution depend weakly on the kinetic parameters.

Gradient-based learning via differentiable Gillespie algorithm (DGA) is applied to the synthetic data for the gene expression model in Figure 3a.

Parameters are fixed at 1, with and for a simulation time of 10. (a) Scatter plot of true versus inferred parameters ( and ) with constant. Error bars are 95% confidence intervals (CIs). Panel (b) plots the logarithm of the loss function near a learned parameter set (shown in red circles in (a)), showing insensitivity regions. Panel (c) compares true and predicted mRNA mean and standard deviation with 95% CIs.

Estimating parameters for the degenerate case

We now estimate parameters for the two-state promoter model when all three parameters , , and are unknown. As discussed above, in this case, there are many sets of parameters that all minimize the loss function in Equation 11. Figure 6a shows a comparison between the learned and true parameters along with a heat map of the loss function for one set of synthetic parameters (Figure 6b). As can be seen in the plots, though the true parameters and learned parameter values differ significantly, they do so along ‘sloppy’ directions where loss function is flat. Consistent with this intuition, we performed simulations comparing the mean and standard deviation of the steady-state mRNA levels using the true and learned parameters and found near-perfect agreement across all of the synthetic data (Figure 6c).

Gradient-based learning via differentiable Gillespie algorithm (DGA) is applied to the synthetic data for the gene expression model in Figure 3a.

Parameters are fixed at 1, with and for a simulation time of 10. (a) Scatter plot of true versus inferred parameters (, , and ). Error bars are 95% confidence intervals (CIs). Panel (b) plots the logarithm of the loss function near a learned parameter set (shown in red circles in (a)), showing insensitivity regions. Panel (c) compares true and predicted mRNA mean and standard deviation with 95% CIs.

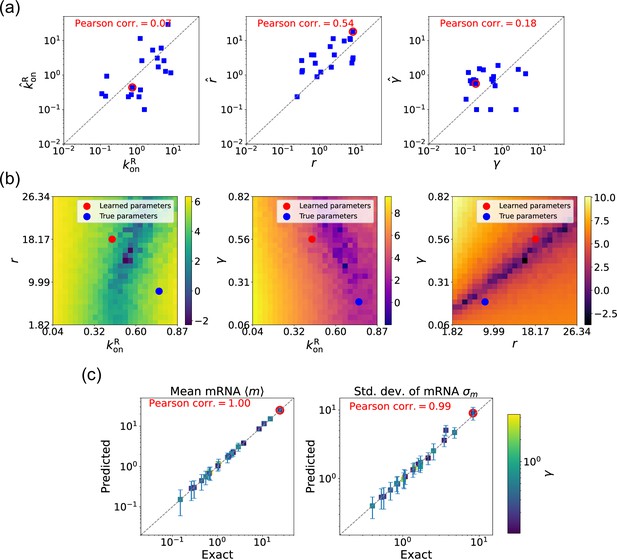

Parameter estimation on experimental data

In the previous section, we demonstrated that our DGA can effectively obtain parameters for synthetic data. However, real experimental data often contains noise and variability, which can complicate the parameter estimation process. To test the DGA in this more difficult setting, we reanalyze experiments by Jones et al., 2014 which measured how mRNA expression changes in a system well described by the two-state gene expression model in Figure 3. In these experiments, two constitutive promoters lacUD5 and 5DL1 (with different transcription rates ) were placed under the control of a LacI repressor through the insertion of a LacI binding site. By systematically varying LacI concentrations, the authors were able to adjust the repressor binding rate . mRNA fluorescence in situ hybridization was employed to measure mRNA expression, providing data on both mean expression levels and the variability as quantified by the Fano factor for both promoters (see Figure 3b).

Given a set of measurements of the mean and Fano factor for a promoter (lacUD5 and 5DL1), we construct a loss function of the form:

where runs over data points (each with a different lac repressor concentration) and and are the mean and standard deviation obtained from a sample of DGA simulations. This loss function is chosen because, at its minimum, and for all . As above, we set , and focus on estimating the other three parameters . When performing our gradient-based optimization, we assume that the transcription rate and the mRNA degradation rate are the same for all data points , while allowing to vary across data points . This reflects the fact that is a function of the lac repressor concentration which, by design, is varied across data points (see Appendix 6 for details on how this optimization is implemented and calculation of error bars).

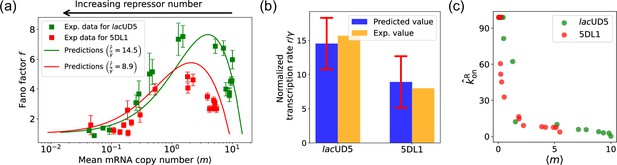

The results of this procedure are summarized in Figure 7. We find that for the lacUD5 promoter , and that varies from a minimum value of 0.18 to a maximum value of 99.0. For the 5DL1 promoters and and varies between 3.64 and 99.0. Recall that we have normalized all rates to the repressor unbinding rate . These values indicate that mRNA transcription occurs much faster compared to the unbinding of the repressor, suggesting that once the promoter is in an active state, it produces mRNA rapidly. The relatively high mRNA degradation rates indicate a mechanism for fine-tuning gene expression levels, ensuring that mRNA does not persist too long in the cell, which could otherwise lead to prolonged expression even after promoter deactivation.

Fitting of experimental data from Jones et al., 2014 using the differentiable Gillespie algorithm (DGA).

(a) Comparison between theoretical predictions from the DGA (solid curves) and experimental values of mean and the Fano factor for the steady-state mRNA levels are represented by square markers, along with the error bars, for two different promoters, lacUD5 and 5DL1. Solid curves are generated by using DGA to estimate , , and and using this as input to exact analytical formulas. (b) Comparison between the inferred values of using DGA with experimentally measured values of this parameter from Jones et al., 2014. (c) Inferred values as a function of the mean mRNA level.

As expected, the repressor binding rates decrease with the mean mRNA level (see Figure 7c). The broad range of repressor binding rates shows that the system can adjust its sensitivity to repressor concentration, allowing for both tight repression and rapid activation depending on the cellular context.

Figure 7a shows a comparison between the predictions of the DGA (solid curves) and the experimental data (squares) for mean mRNA levels and the Fano factor . The theoretical curves are obtained by using analytical expression for and from Gillespie, 2007 with parameters estimated from the DGA. We find that for the lacUD5 and the 5DL1 promoters, the mean percentage errors for predictions of the Fano factor are 25% and 28%, respectively (see Appendix 6).

An appealing feature of Jones et al., 2014 is that the authors performed independent experiments to directly measure the normalized transcription rate (namely the ratio of the transcription rate and the mRNA degradation rate). This allows us to compare the DGA predictions for these parameters to ground truth measurements of kinetic parameters. In Figure 7b, the predictions of the DGA agree remarkably well for both the lacUD5 and 5DL1 promoters.

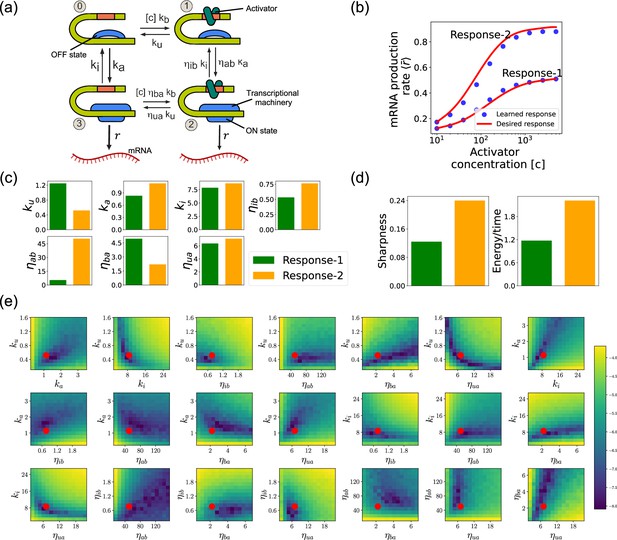

Designing gene regulatory circuits with desired behaviors

Another interesting application of the DGA is to design stochastic chemical or biological networks that exhibit a particular behavior. In many cases, this design problem can be reformulated as identifying choices of parameter that give rise to a desired behavior. Here, we show that the DGA is ideally suited for such a task. We focus on designing the input–output relation of a four state promoter model of gene regulation (Lammers et al., 2023). We have chosen this more complex promoter architecture because, unlike the two-state promoter model analyzed above, it allows for nonequilibrium currents. In making this choice, we are inspired by numerous recent works have investigated how cells can tune kinetic parameters to operate out of equilibrium in order to achieve increased sharpness/sensitivity (Nicholson and Gingrich, 2023Lammers et al., 2023; Zoller et al., 2022; Wong and Gunawardena, 2020; Dixit et al., 2024).

Model of nonequilibrium promoter

We focus on designing the steady-state input–output relationship of the four-state promoter model of gene regulation model shown in Figure 8a; Lammers et al., 2023. The locus can be in either an ‘ON’ state where mRNA is transcribed at a rate or an ‘OFF’ state where the locus is closed and there is no transcription. In addition, a transcription factor (assumed to be an activator) with concentration can bind to the locus with a concentration dependent rate in the ‘OFF’ state and a rate in the ‘ON’ rate. The activator can also unbind at a rate in the ‘OFF’ state and a rate in the ‘ON’ state. The average mRNA production rate (averaged over many samples) in this model is given by

where () is the steady-state probability of finding the system in each of the ‘ON’ states (see Figure 8a).

Design of the four-state promoter architecture using the differentiable Gillespie algorithm (DGA).

(a) Schematic of four-state promoter model. (b) Target input–output relationships (solid curves) and learned input–output relationships (blue dots) between activator concentration and average mRNA production rate. (c) Parameters learned by DGA for the two responses in (b). (d) The sharpness of the response , and the energy dissipated per unit time for two responses in (b). (e) Logarithm of the loss function for the learned parameter set for Response-2, revealing directions (or curves) of insensitivity in the model’s parameter space. The red circles are the learned parameter values.

Such promoter architectures are often studied in the context of protein gradient-based development (Lammers et al., 2023; Estrada et al., 2016; Owen and Horowitz, 2023). One well-known example of such a gradient is the dorsal protein gradient in Drosophila, which plays a crucial role in determining the spatial boundaries of gene expression domains during early embryonic development. In this context, the sharpness of the response as a function of activator concentration is a critical aspect. High sharpness ensures that the transition between different gene expression domains occurs over a very narrow region, leading to well-defined and precise boundaries. Inspired by this, our objective is to determine the parameters such that the variation in as a function of the activator concentration follows a desired response. We consider the two target responses (shown in Figure 8b) of differing sharpness, which following Lammers et al., 2023 we quantify as . For simplicity, we use sixth-degree polynomials to model the input–output functions, with the x-axis plotted on a logarithmic scale. We note that our results do not depend on this choice and any other functional form works equally well.

Loss function

In order to use the DGA to learn a desired input–output relation, we must specify a loss function that quantifies the discrepancy between the desired and actual responses of the promoter network. To construct such a loss function, we begin by discretizing the activator concentration into logarithmically spaced points, , where . For each , we denote the corresponding average mRNA production rate (see Equation 15). After discretization, the loss function is simply the square error between the desired response, , and the current response, , of the circuit

where denotes the predicted average mRNA production rates obtained from the DGA simulations given the current parameters . To compute for a concentration , we perform DGA simulations (indexed by capital letters ) using the DGA and use these simulations to calculate the fraction of time spent in transcriptionally active states (states and in Figure 8a). If we denote the fraction of time spent in state in simulation by , then we can calculate the probability of being in state by

and use Equation 15 to calculate

As before, we optimize this loss using gradient descent (see Figure 2). We assume that the transcription rate is known (this just corresponds to an overall scaling of mRNA numbers). Since we are concerned only with steady-state properties, we fix the activator binding rate to a constant value, . This is equivalent to measuring time in units of . We then use gradient descent to optimize the remaining seven parameters governing transitions between promoter states.

Assessing circuits found by the DGA

Figure 8b shows a comparison between the desired and learned input–output relations. This is good agreement between the learned and desired responses, showing that the DGA is able to design dose–response curves with different sensitivities and maximal values. Figure 8c shows the learned parameters for both response curves. Notably, the degree of activation resulting from transcription factor binding, denoted by , is substantially higher for the sharper response (Response-2). In contrast, the influence on transcription factor binding due to activation, represented by , is reduced for the sharper response curve. Additionally, the unbinding rate is observed to be lower for the sharper response. However, it is essential to approach these findings with caution, as the parameters are highly interdependent. These interdependencies can be visualized by plotting the loss function around the optimized parameter values. Figure 8e shows two dimensional heat maps of the loss function for Response-2. There are seven free parameters, resulting in a total of 21 possible 2D slices of the loss function within the seven-dimensional loss landscape.

The most striking feature of these plots is the central role played by the parameters and which must both be high, suggesting that the sharpness in Response-2 may result from creating a high-flux nonequilibrium cycle through the four promoter states (see Figure 8a). This observation is consistent with recent works suggesting that creating such nonequilibrium kinetics represents a general design principle for engineering sharp responses (Lammers et al., 2023; Zoller et al., 2022; Wong and Gunawardena, 2020; Dixit et al., 2024). To better understand if this is indeed what is happening, we quantified the energy dissipation per unit time (power consumption), , in the nonequilibrium circuit. The energetic cost of operating biochemical networks can be quantified using ideas from nonequilibrium thermodynamics using a generalized Ohm’s law of the form (Lammers et al., 2023; Qian, 2007; Mehta and Schwab, 2012; Lan et al., 2012; Lang et al., 2014; Mehta et al., 2016)

where we have defined a nonequilibrium drive

and the nonequilibrium flux

where and are the probabilities of finding the system in state 0 and 1, respectively. Figure 8d shows a comparison between energy consumption and sharpness of the two learned circuits. Consistent with the results of Lammers et al., 2023, we find that the sharper response curve is achieved by consuming more energy.

Discussion

In this paper, we introduced a fully differentiable variant of the Gillespie algorithm, the DGA. By integrating differentiable components into the traditional Gillespie algorithm, the DGA facilitates the use of gradient-based optimization techniques, such as gradient descent, for parameter estimation and network design. The ability to smoothly approximate the discrete operations of the traditional Gillespie algorithm with continuous functions facilitates the computation of gradients via both forward- and reverse-mode automatic differentiation, foundational techniques in machine learning, and has the potential to significantly expand the utility of stochastic simulations. Our work demonstrates the efficacy of the DGA through various applications, including parameter learning and the design of simple gene regulatory networks.

We benchmarked the DGA’s ability to accurately replicate the results of the exact Gillespie algorithm through simulations on a two-state promoter architecture. We found the DGA could accurately approximate the moments of the steady-state distribution and other major qualitative features. Unsurprisingly, it was less accurate at capturing information about the tails of distributions. We then demonstrated that the DGA could be accurately used for parameter estimation on both simulated and real experimental data. This capability to infer kinetic parameters from noisy experimental data underscores the robustness of the DGA, making it a potentially powerful computation tool for real-world applications in quantitative biology. Furthermore, we showcased the DGA’s application in designing biological networks. Specifically, for a complex four-state promoter architecture, we learned parameters that enable the gene regulation network to produce desired input–output relationships. This demonstrates how the DGA can be used to rapidly design complex biological systems with specific behaviors. We expect computational design of synthetic circuits with differentiable simulations to become an increasingly important tool in synthetic biology.

There remains much work still to be done. In this paper, we focused almost entirely on properties of the steady states. However, a powerful aspect of the traditional Gillespie algorithm is that it can be used to simulate dynamical trajectories. How to adopt the DGA to utilize dynamical data remains an extremely important open question. In addition, it will be interesting to see if the DGA can be adapted to understand the kinetic of rare events. It will also be interesting to compare the DGA with other recently developed approximation methods such as those based on tensor networks (Strand et al., 2022; Nicholson and Gingrich, 2023). Beyond the gene regulatory networks, extending the DGA to handle larger and more diverse datasets will be crucial for applications in epidemiology, evolution, ecology, and neuroscience. On a technical level, this may be facilitated by developing more sophisticated smoothing functions and adaptive algorithms to improve numerical stability and convergence.

The DGA could also be extended to stochastic spatial systems by incorporating reaction–diffusion master equations or lattice-based models. Its differentiability may enable efficient optimization of spatially heterogeneous reaction parameters. However, such extensions may need to address computational scalability and stability in high-dimensional spaces, especially in processes such as diffusion-driven pattern formation or spatial gene regulation.

Materials and methods

A detailed explanation of how the DGA is implemented using PyTorch is given in the Appendix. In addition, all code for the DGA is available on Github at our Github repository https://github.com/Emergent-Behaviors-in-Biology/Differentiable-Gillespie-Algorithm (copy archived at Rijal, 2025).

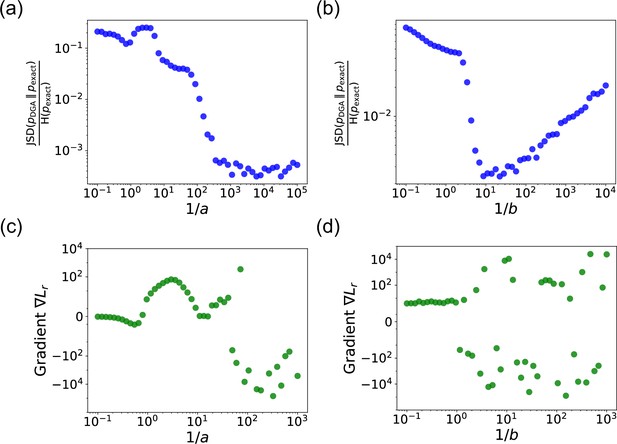

Appendix 1

Balancing accuracy and stability: hyperparameter tradeoffs

In this section, we explore the tradeoffs involved in tuning the hyperparameters and in the DGA. These hyperparameters are crucial for balancing the accuracy and numerical stability of the DGA in approximating the exact Gillespie algorithm.

The hyperparameter controls the steepness of the sigmoid function used to approximate the Heaviside step function in reaction selection. Similarly, determines the sharpness of the Gaussian function used to approximate the Kronecker delta function in abundance updates. A larger value of or results in a steeper sigmoid or Gaussian function, thus more closely approximating the discrete functions in the exact Gillespie algorithm.

Accuracy of the forward DGA simulations

To assess the impact of these hyperparameters on the accuracy of the DGA, we measure the ratio of the Jensen–Shannon divergence between the DGA-generated PDF and the exact PDF , normalized by the entropy of the exact steady-state PDF (see Equation 9). This ratio, , provides a measure of how closely the DGA approximates the exact Gillespie algorithm.

Appendix 1—figure 1 shows the ratio as a function of the sharpness parameters and . In panel (a), is fixed at 20, and is varied. In panel (b), is fixed at 200, and is varied. Some key insights can be drawn from these plots.

First, as or increases, the ratio decreases, indicating that the DGA’s approximation becomes more accurate. This is because steeper sigmoid and Gaussian functions better mimics the discrete steps of the exact Gillespie algorithm. Interestingly, while the ratio decreases for both parameters, plateaus at high values, whereas rises at high values. This plateau occurs because the sigmoid function used for reaction selection becomes so steep that it effectively becomes a step function, beyond which further steepening has negligible impact. As becomes very large, the width of the Gaussian function used for abundance updates becomes extremely narrow. In such a scenario, it becomes increasingly improbable for the chosen reaction index to fall within this narrow width, especially because the reaction indices are not exact integers but are instead near-integer continuous values. Therefore, as becomes very large, the discrepancy between the DGA-generated probabilities and the exact probabilities widens, causing the ratio to increase.

In panels (a) and (b), we plot the ratio of the Jensen–Shannon divergence between the differentiable Gillespie PDF and the exact steady-state PDF , and the Shannon entropy of the exact steady-state PDF, as a function of the two sharpness parameters and .

In panel (a), ; in panel (b), . The simulation time is set to 10. In panels (c) and (d), for these same values, we show the gradient of the loss function with respect to the parameter near the true parameter values. In all the plots, the values of the rates are: , , , and . 5000 trajectories are used to obtain the PDFs, while 200 trajectories are used to obtain the gradients.

Stability of the backpropagation of DGA simulations

Numerical stability for gradient computation is crucial. Therefore, it is important to examine how the gradient behaves as a function of the hyperparameters. Panels (c) and (d) of Appendix 1—figure 1 provide insights into this behavior. The plots show the gradient of the loss function with respect to the parameter near the true parameter values. The gradients are computed for the loss function in Equation 11 with and equal to 8 and 2.5, respectively, for the parameter values , , , and . With these parameter values, the true mean and standard deviation are equal to 6.67 and 3.94, respectively.

As or increases, the gradients become more accurate, but their numerical stability can be compromised. This is evidenced by the increased variability and erratic behavior in the gradients at very high sharpness values (see Appendix 1—figure 1). Hence, very large values of or lead to oscillations and convergence issues, highlighting the need for a balance between accuracy and stability.

The tradeoff between accuracy and numerical stability is evident. This necessitates careful tuning of and to ensure stable and efficient optimization. In practice, this involves selecting values that provide sufficient approximation quality without compromising the stability of the gradients.

Appendix 2

Implementation of the DGA in PyTorch

This section explains the implementation of the DGA used to simulate the two-state promoter model. The implementation can be adapted to any model where the stoichiometric matrix and propensities are known. The model involves promoter state switching and mRNA production/degradation (Figure 3a), and the algorithm is designed to handle these sub-processes effectively.

Stoichiometric matrix:

The stoichiometric matrix is a key component in modeling state changes due to reactions. Each row represents a reaction, and each column corresponds to a state variable (promoter state and mRNA level). The matrix for the two-state promoter model includes:

Reaction 1: Promoter state transitions from the OFF state (–1) to the ON state (+1).

Reaction 2: Production of mRNA.

Reaction 3: Promoter state transitions from the ON state (+1) to the OFF state (–1).

Reaction 4: Degradation of mRNA.

The stoichiometric matrix for this model is:

In this matrix, the rows correspond to the reactions listed above, and the columns represent the promoter state and mRNA level, respectively.

Propensity calculations:

The levels vector is a two-dimensional vector where the first element represents the promoter state (–1 or +1) and the second element represents the mRNA number . Propensities are the rates at which reactions occur, given the current state of the system. In our PyTorch implementation, the propensities are calculated using the following expressions:

propensities = torch.stack([

kon * torch.sigmoid(-c * levels[0]),

# Promoter state switching from –1 to +1

r * torch.sigmoid(-c * levels[0]),

# mRNA production

torch.sigmoid(c * levels[0]),

# Promoter state switching from +1 to –1

g * levels [1]

# mRNA degradation

])Each propensity corresponds to a different reaction:

Promoter state switching from –1 to +1: The value of kon * torch.sigmoid(-c * levels[0]) is around when the levels[0] (promoter state) is around –1 and close to zero when levels[0] is around +1. The sigmoid function ensures a smooth transition, allowing differentiability and preventing abrupt changes in rates. The constant controls the sharpness of the sigmoid function.

mRNA production: The rate is proportional to and modulated by the same sigmoid function, torch.sigmoid(-c * levels[0]), such that the rate is equal to only when the promoter is in –1 state.

Promoter state switching from +1 to –1: The rate is set to 1 and modulated by the sigmoid function, torch.sigmoid(c * levels[0]), such that the rate is equal to 1 only when the promoter is in +1 state.

mRNA degradation: The rate is proportional to the current mRNA level, g * levels [1], reflecting the natural decay of mRNA with rate .

Using the sigmoid function in the propensity calculations is crucial for ensuring smooth and differentiable transitions between states. This smoothness is essential for gradient-based optimization methods, which rely on continuous and differentiable functions to compute gradients effectively. Without the sigmoid function, the propensity rates could change abruptly, leading to numerical instability and difficulties in optimizing the model parameters.

Reaction selection function: We define a function reaction_selection that selects the next reaction to occur based on the transition points and a random number between [0,1]. The function basically implements using Equation 6. The transition points are first calculated from the cumulative sum of reaction rates normalized to the total rate.

Gillespie simulation function: The main gillespie_simulation function uses the previously described functions, each with specific roles, to perform the actual simulation step-by-step, as shown in Figure 1. This function iterates through the number of simulations, updating the system’s state and the propensities of each reaction at each step.

State jump function: We define another function state_jump that calculates the state change vector when a reaction occurs. It uses a Gaussian function to smoothly transition between states based on the selected reaction index and the stoichiometry matrix (see Equation 8).

Appendix 3

Estimating confidence intervals for parameters

In this section, we describe the methodology used to estimate the confidence intervals for the parameters using polynomial fitting and numerical techniques. This approach uses the results of multiple simulations to determine the range within which the parameter is likely to lie, based on the curvature of the loss function around its minimum value. Specifically, we perform the following steps:

Parameter initialization: Set up and initialize the necessary parameters. This includes defining the number of evaluation points, the number of simulations, the simulation time, and the hyperparameters , , and .

Range generation: For each set of learned parameters , generate a range of values for the parameter of interest while keeping the other parameters fixed at their learned values. Let the learned value of the parameter be . Then the range of evaluation is approximately , depending on the flatness of the loss landscape around its minimum.

Simulation and loss calculation: For each value in this range, perform forward DGA simulations to calculate the mean and standard deviation of the results, using which the loss function is computed and stored.

Polynomial fitting: Fit a polynomial of degree 6 to the computed loss values across the range of . Compute the first and second derivatives of the fitted polynomial to identify the minimum and evaluate the curvature.

Identifying minimum: Identify the valid minimum of the loss function by solving for the roots of the first derivative and filtering out the points where the second derivative is positive.

Curvature and standard deviation calculation: Calculate the curvature of the loss landscape at its minimum using its second derivative at the minimum. An estimation of the standard deviation of the parameter is given by:

7. Confidence interval calculation: The loss function is typically asymmetric around its minimum, with the left side usually steeper than the right (see Appendix 3—figure 1). To determine the right error bar, we use . For the left error bar, we find the point where (see Appendix 3—figure 1). Therefore, the balanced 95% CI for the parameter is given by:

This methodology allows for a robust estimation of the confidence intervals, providing insights into the reliability and precision of the parameter estimates.

Appendix 4

Demonstrating parameter degeneracy in the two-state promoter model

We will now demonstrate the existence of degeneracy in the two-state promoter architecture. Setting in the analytical expressions for the mean and the Fano factor from Jones et al., 2014, we have:

We want to solve Equation D1 for and . By isolating in the expression for , we obtain:

Next, we substitute the expression for from Equation D2 into the expression for the in Equation D1:

Expanding and isolating terms involving :

Rearranging to solve for :

Thus, we obtain:

We substitute back Equation 28 in Equation 23 to obtain :

Equations D3 and D4 indicate that for any given , there exists a corresponding pair of values for and that satisfy the equations. Therefore, the solutions are not unique, demonstrating a high degree of degeneracy in the parameter space. This degeneracy arises because multiple combinations of can produce the same observable and . The lack of unique solutions is a common issue in parameter estimation for complex systems, where different parameter sets can lead to similar system behaviors.

Resolving degeneracy with known

To resolve the degeneracy, we can fix the value of . Now, if the rate is known, we can solve Equation D1 for the other parameters. Starting by rearranging the mean expression from Equation D1:

Substituting Equation D5 in the expression of in Equation D1, we have

Substituting Equation D6 into Equation D5, we obtain:

Equations D6 and D7 provide unique solutions for and given a known value of . By fixing , the degeneracy is resolved, and the remaining parameters can be accurately determined.

Appendix 5

Estimating confidence intervals for and

To assess the reliability of the statistics predicted through DGA-based optimization, we calculate error bars using the following procedure. For the non-degenerate situation, we use the learned parameter values and , and perform forward DGA simulations with many different random seeds. This generates multiple samples of the mean mRNA level and the standard deviation . These samples provide us with the variability due to the stochastic nature of the simulations. The 95% CIs are then determined using their standard deviations, denoted as and . For the mean mRNA level, the CI is calculated as:

For the standard deviation of mRNA levels, the CI is calculated as:

Appendix 6

DGA-based optimization for experimental data and estimation of errors

Optimization procedure

Given a set of measurements of the mean mRNA expression levels () and the Fano factor () for promoters (lacUD5 and 5DL1), we construct a loss function as follows:

where runs over data points (each with a different lac repressor concentration), and and are the mean and standard deviation obtained from a sample of DGA simulations. This loss function is chosen because, at its minimum, and for all .

For the optimization, we set and focus on estimating the parameters . During the gradient-based optimization, the transcription rate and the mRNA degradation rate are assumed to be the same for all data points , while allowing to vary across data points . This reflects the fact that is a function of the lac repressor concentration, which is varied across data points.

Instead of , we actually learn a transformed parameter , which is the probability for the promoter to be in the OFF state. This approach is based on our observation that the gradient of the loss function with respect to is more numerically stable compared to the gradient with respect to .

The parameters are initialized randomly as follows:

is initialized to a random number in [0, 100].

is initialized to a random number between [0, 10].

The values, which depend on the index of the data points, are initially set as linearly spaced points within the range [0.03, 0.97].

The hyperparameters used for the simulations are as follows:

Number of simulations: 200

Simulation time:

Steepness of the sigmoid function:

Sharpness of the Gaussian function:

Steepness of the sigmoid in propensities:

The parameters are iteratively updated to minimize the loss function. During each iteration of the optimization, the following steps are performed:

Forward simulation: The DGA is used to simulate the system, generating predictions for the mean mRNA levels and their standard deviations.

Loss calculation: The loss function is computed based on the differences between the simulated and experimentally measured values of the mean mRNA levels and standard deviations (see Equation F1).

Gradient calculation: The gradients of the loss function with respect to the parameters , , and are calculated using backpropagation.

Parameter update: The ADAM optimizer updates the parameters in the direction that reduces the loss function. ADAM adjusts the learning rates based on the history of gradients and their moments. The learning rate used is 0.1.

The values of the parameters and the loss value are saved after each iteration. The parameter values corresponding to the minimum loss after convergence are picked at the end.

Goodness of fit

To quantitatively assess the goodness of the fit, we define the mean percentage error (MPE) as follows:

where is the predicted Fano factor for the ith data point. This metric provides a measure of the average discrepancy between the predicted and experimental Fano factors, expressed as a percentage of the experimental values.

Error bars

The error bars in the ratio are obtained by applying error propagation to the standard deviations and of the individual values and . The propagated error is given by:

where and are the standard deviations of and , respectively. These standard deviations are obtained using the curvature of the loss function, as discussed earlier.

Data availability

All code for the Differentiable Gillespie Algorithm is freely available on GitHub at https://github.com/Emergent-Behaviors-in-Biology/Differentiable-Gillespie-Algorithm (copy archived at Rijal, 2025). The study uses previously published experimental datasets from Jones et al., 2014. This dataset is publicly available through Science at https://doi.org/10.1126/science.1255301. No additional proprietary or restricted datasets were used in this study.

References

-

An efficient finite difference method for parameter sensitivities of continuous time markov chainsSIAM Journal on Numerical Analysis 50:2237–2258.https://doi.org/10.1137/110849079

-

Avalanches in a stochastic model of spiking neuronsPLOS Computational Biology 6:e1000846.https://doi.org/10.1371/journal.pcbi.1000846

-

Julia: a fresh approach to numerical computingSIAM Review 59:65–98.https://doi.org/10.1137/141000671

-

JAX-Fluids: A fully-differentiable high-order computational fluid dynamics solver for compressible two-phase flowsComputer Physics Communications 282:108527.https://doi.org/10.1016/j.cpc.2022.108527

-

A differentiable physics engine for deep learning in roboticsFrontiers in Neurorobotics 13:6.https://doi.org/10.3389/fnbot.2019.00006

-

Governing principles of transcriptional logic out of equilibriumBiophysical Journal 123:1015–1029.https://doi.org/10.1016/j.bpj.2024.03.020

-

Stochastic population dynamics in spatially extended predator–prey systemsJournal of Physics A 51:063001.https://doi.org/10.1088/1751-8121/aa95c7

-

BookTransactions of the American Mathematical SocietyAmerican Mathematical Society.https://doi.org/10.1090/S0002-9947-1945-0013857-4

-

Stochastic gene expression in a single cellScience 297:1183–1186.https://doi.org/10.1126/science.1070919

-

A general method for numerically simulating the stochastic time evolution of coupled chemical reactionsJournal of Computational Physics 22:403–434.https://doi.org/10.1016/0021-9991(76)90041-3

-

Exact stochastic simulation of coupled chemical reactionsThe Journal of Physical Chemistry 81:2340–2361.https://doi.org/10.1021/j100540a008

-

Stochastic simulation of chemical kineticsAnnual Review of Physical Chemistry 58:35–55.https://doi.org/10.1146/annurev.physchem.58.032806.104637

-

Likelihood ratio gradient estimation for stochastic systemsCommunications of the ACM 33:75–84.https://doi.org/10.1145/84537.84552

-

The energy-speed-accuracy tradeoff in sensory adaptationNature Physics 8:422–428.https://doi.org/10.1038/nphys2276

-

Thermodynamics of statistical inference by cellsPhysical Review Letters 113:148103.https://doi.org/10.1103/PhysRevLett.113.148103

-

Differentiable programming tensor networksPhysical Review X 9:031041.https://doi.org/10.1103/PhysRevX.9.031041

-

Efficient gradient estimation using finite differencing and likelihood ratios for kinetic Monte Carlo simulationsJournal of Computational Physics 231:7170–7186.https://doi.org/10.1016/j.jcp.2012.06.037

-

Energetic costs of cellular computationPNAS 109:17978–17982.https://doi.org/10.1073/pnas.1207814109

-

Landauer in the age of synthetic biology: energy consumption and information processing in biochemical networksJournal of Statistical Physics 162:1153–1166.https://doi.org/10.1007/s10955-015-1431-6

-

Listening to the noise: random fluctuations reveal gene network parametersMolecular Systems Biology 5:318.https://doi.org/10.1038/msb.2009.75

-

Steady state likelihood ratio sensitivity analysis for stiff kinetic Monte Carlo simulationsThe Journal of Chemical Physics 142:044108.https://doi.org/10.1063/1.4905957

-

Size limits the sensitivity of kinetic schemesNature Communications 14:1280.https://doi.org/10.1038/s41467-023-36705-8

-

Extinction in the Lotka-Volterra modelPhysical Review E 80:021129.https://doi.org/10.1103/PhysRevE.80.021129

-

Models of stochastic gene expressionPhysics of Life Reviews 2:157–175.https://doi.org/10.1016/j.plrev.2005.03.003

-

Figure 1 theory meets figure 2 experiments in the study of gene expressionAnnual Review of Biophysics 48:121–163.https://doi.org/10.1146/annurev-biophys-052118-115525

-

GillespieSSA: implementing the gillespie stochastic simulation algorithm in rJournal of Statistical Software 25:1–18.https://doi.org/10.18637/jss.v025.i12

-

Phosphorylation energy hypothesis: open chemical systems and their biological functionsAnnual Review of Physical Chemistry 58:113–142.https://doi.org/10.1146/annurev.physchem.58.032806.104550

-

Exact distribution of the quantal content in synaptic transmissionPhysical Review Letters 132:228401.https://doi.org/10.1103/PhysRevLett.132.228401

-

SoftwareDifferentiable-gillespie-algorithm, version swh:1:rev:c9740a695c52c1d76f260a7a46bbbcab18354380Software Heritage.

-

BookStochastic Processes for Insurance and FinanceJohn Wiley & Sons.https://doi.org/10.1002/9780470317044

-

Regulation of noise in gene expressionAnnual Review of Biophysics 42:469–491.https://doi.org/10.1146/annurev-biophys-083012-130401

-

JAX MD: A framework for differentiable physicsAdvances in Neural Information Processing Systems 33:11428–11441.

-

A pathwise derivative approach to the computation of parameter sensitivities in discrete stochastic chemical systemsThe Journal of Chemical Physics 136:034115.https://doi.org/10.1063/1.3677230

-

Comparison of finite difference based methods to obtain sensitivities of stochastic chemical kinetic modelsThe Journal of Chemical Physics 138:074110.https://doi.org/10.1063/1.4790650

-

Computing time-periodic steady-state currents via the time evolution of tensor network statesThe Journal of Chemical Physics 157:054104.https://doi.org/10.1063/5.0099741

-

Efficient finite-difference method for computing sensitivities of biochemical reactionsProceedings of the Royal Society A 474:20180303.https://doi.org/10.1098/rspa.2018.0303

-

BookStochastic Modelling for Systems BiologyChapman and Hall/CRC.https://doi.org/10.1201/9781351000918

-

BookStochastic Processes in Engineering SystemsSpringer Science & Business Media.https://doi.org/10.1007/978-1-4612-5060-9

-

Gene regulation in and out of equilibriumAnnual Review of Biophysics 49:199–226.https://doi.org/10.1146/annurev-biophys-121219-081542

-

Eukaryotic gene regulation at equilibrium, or non?Current Opinion in Systems Biology 31:100435.https://doi.org/10.1016/j.coisb.2022.100435

Article and author information

Author details

Funding

National Institute of General Medical Sciences (R35GM119461)

- Pankaj Mehta

Chan Zuckerberg Initiative (Investigator grant)

- Pankaj Mehta

The funders had no role in study design, data collection, and interpretation, or the decision to submit the work for publication.

Acknowledgements

This work was supported by NIH NIGMS R35GM119461 to PM and Chan-Zuckerburg Institute Investigator grant to PM. The authors also acknowledge support from the Shared Computing Cluster (SCC) administered by Boston University Research Computing Services. We would also like to thank the Mehta and Kondev groups for useful discussions.

Version history

- Preprint posted:

- Sent for peer review:

- Reviewed Preprint version 1:

- Reviewed Preprint version 2:

- Version of Record published:

Cite all versions

You can cite all versions using the DOI https://doi.org/10.7554/eLife.103877. This DOI represents all versions, and will always resolve to the latest one.

Copyright

© 2025, Rijal and Mehta

This article is distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use and redistribution provided that the original author and source are credited.

Metrics

-

- 1,795

- views

-

- 182

- downloads

-

- 5

- citations

Views, downloads and citations are aggregated across all versions of this paper published by eLife.

Citations by DOI

-

- 3

- citations for umbrella DOI https://doi.org/10.7554/eLife.103877

-

- 2

- citations for Version of Record https://doi.org/10.7554/eLife.103877.3