Leave-One-Trial-Out, LOTO, a general approach to link single-trial parameters of cognitive models to neural data

Abstract

A key goal of model-based cognitive neuroscience is to estimate the trial-by-trial fluctuations of cognitive model parameters in order to link these fluctuations to brain signals. However, previously developed methods are limited by being difficult to implement, time-consuming, or model-specific. Here, we propose an easy, efficient and general approach to estimating trial-wise changes in parameters: Leave-One-Trial-Out (LOTO). The rationale behind LOTO is that the difference between parameter estimates for the complete dataset and for the dataset with one omitted trial reflects the parameter value in the omitted trial. We show that LOTO is superior to estimating parameter values from single trials and compare it to previously proposed approaches. Furthermore, the method makes it possible to distinguish true variability in a parameter from noise and from other sources of variability. In our view, the practicability and generality of LOTO will advance research on tracking fluctuations in latent cognitive variables and linking them to neural data.

https://doi.org/10.7554/eLife.42607.001Introduction

Model-based cognitive neuroscience attempts to link mathematical models of cognitive processes to neural data in order to advance our knowledge of the mind and brain (Forstmann et al., 2011). A particularly promising but challenging approach in this regard is to derive trial-specific values for parameters of cognitive models and to relate these values to trial-specific brain data, which offers insights into cognitive and neural principles at a highly detailed level of analysis (Gluth and Rieskamp, 2017; van Maanen et al., 2011; Turner et al., 2017; Wiecki et al., 2013). In the present work, we introduce a novel technique for capturing trial-specific values of model parameters that is efficient, widely applicable and easy to apply. We briefly summarize related work and methods before turning to our current proposal.

Primarily, the difficulty in linking momentary fluctuations in cognitive states to neural measures lies in specifying the variability in the cognitive model. Often, cognitive models specify the distribution from which this variability is assumed to originate (e.g., a normal distribution), but remain silent about its direction and extent in single trials. Previous attempts to capture parameter variability have often been specific to a single model. For example, van Maanen et al. (2011) derived maximum likelihood estimates for two single-trial parameters of the Linear Ballistic Accumulator (LBA) model (Brown and Heathcote, 2008). Because the LBA model is of special interest for the present paper, we describe it in some detail here. The LBA belongs to the class of sequential sampling models of decision making, which assume that decisions arise from an evidence-accumulation process that continues until a threshold has been reached, indicating that the required amount of evidence for a decision has been gathered. Three critical parameters of the LBA are the rate of evidence accumulation (drift rate), the amount of evidence required to reach a decision (decision threshold), and the point from which the accumulation begins (start point). Importantly, sequential sampling models such as LBA predict accuracy rates and the shape of response times (RT) distributions conjointly. Wiecki et al. (2013) introduced a hierarchical Bayesian modeling tool for another sequential sampling model, the Diffusion Decision Model (DDM) (Ratcliff, 1978). Their tool allows the regresssion of single-trial parameters of the DDM onto trial-by-trial neural measures such as electroencephalography (EEG) or event-related functional magnetic resonance imaging (fMRI) recordings (similar regression-based approaches were proposed by Hawkins et al. (2017) and by Nunez et al. (2017)). Turner and colleagues proposed a related method, whose rationale is to model behavioral and neuroimaging data jointly under a common (hierarchical Bayesian) umbrella (Palestro et al., 2018; Turner et al., 2013; Turner et al., 2015). Although the approach was again tested within the DDM framework, it may be generalized to any other cognitive model. As we have argued before, however, the complexity of this approach might discourage many cognitive neuroscientists from applying it (Gluth and Rieskamp, 2017). Another, but less general, approach of modeling behavioral and brain data jointly was proposed by van Ravenzwaaij et al. (2017).

We recently proposed a comparatively simple and very general approach to capture trial-by-trial variability in cognitive model parameters (Gluth and Rieskamp, 2017). Again, the approach is based on Bayesian principles: it specifies the posterior probability of a parameter value in a specific trial, using the ‘average’ parameter value across all trials and the behavior in the specific trial as the prior and likelihood, respectively. Thus, the method basically answers the question of how the difference between a person’s behavior in specific trials vs. that in all trials can be mapped (in a Bayesian optimal way) onto changes in a specific parameter of interest. For example, a surprisingly fast decision made by an otherwise very cautious decision maker might be attributed to a reduced decision threshold (in the context of sequential sampling models such as LBA or DDM), which then might be linked to altered neural activation in a specific region of the brain such as the pre-supplementary motor area (Gluth et al., 2012; van Maanen et al., 2011). While this approach is both general and comparatively simple, it can require high amounts of computation time, in particular when one seeks to estimate variability in more than one parameter simultaneously.

In the current work, we propose a novel approach to capturing trial-by-trial variability in cognitive model parameters that tries to overcome the shortcomings of specificity, complexity, and inefficiency: Leave-One-Trial-Out (LOTO). Briefly, the idea of LOTO is that the difference in the parameter estimates that are based on all trials vs. those that are based on all trials except a single trial provides a reflection of the ‘true’ parameter value in the left-out trial. The rest of the article is structured as follows: first, we introduce the rationale behind LOTO and exemplify this rationale with a simple toy model. Second, we show the circumstances under which LOTO can be expected to capture trial-by-trial variability appropriately, and explain how LOTO’s performance can be improved by adapting its application, the underlying cognitive model or the experimental design. Third, we illustrate how one can test the assumption of the presence of systematic parameter variability. Fourth, we compare LOTO to previously proposed methods. Fifth, we present a simulation-based power analysis that aims to test whether LOTO can be expected to identify the neural correlates of trial-by-trial fluctuations of model parameters with sufficient power and specificity. Finally, we provide an example analysis, in which we apply LOTO to link variability in a cognitive process (encoding of episodic memory) to brain signals (fMRI activation in the hippocampus). Although we believe that it is essential for the potential user to read the entire article (in order to understand when and why LOTO can be expected to provide acceptable results), we note that some parts of the article are rather technical and may be difficult to understand. Therefore, our suggestion for readers with limited statistical and mathematical knowledge is to read 'Results: II. The LOTO principle', and 'Results: XI.: An example of using LOTO for model-based fMRI', which exemplifies the application of LOTO, and then to proceed directly to the discussion, in which we provide a ‘LOTO recipe’ that provides a step-by-step summary of how LOTO should be applied. The recipe then refers the reader back to relevant specific sections of the article.

Results

I. The LOTO principle

The rationale behind LOTO is simple and intuitive and can be summarized as follows:

When fitting a (cognitive) model to data, all n trials contribute to the model’s goodness-of-fit statistic (e.g., log-likelihood) and thus to the estimation of parameter values.

A single trial, t, shifts the parameter estimation to the direction that accords with the behavior in trial t.

Therefore, if trial t is taken out and the model is fitted again to the n–1 dataset, the new parameter estimate will shift (slightly) to the opposite direction.

Therefore, the (true and unknown) parameter value in t is reflected in the difference between the parameter estimate of all trials and the parameter estimate of all trials except t.

Accordingly, the application of LOTO works as follows:

Estimate all parameters of your model by fitting the model to the entire dataset (e.g., all n trials of a subject).

Re-estimate the parameter(s) of interest by fitting the model to the dataset without trial t; keep all other parameters fixed to their estimates derived in step 1.

Take the difference between the parameter estimate(s) of step 1 and step 2, which will provide a reflection of the parameter value in t (i.e., the difference should be correlated positively with the true parameter value).

Repeat the Steps 2 and 3 for all n trials.

You may then link the obtained vector of trial-by-trial parameter values to any external measure that was recorded at the same time and that has an appropriate temporal resolution (e.g., event-related fMRI data, EEG data, single-unit recordings, eye-tracking data, skin conductance responses; see also Gluth and Rieskamp, 2017).

Note that instead of taking the difference between the estimates for n and n–1 trials in step 3, one could simply multiply the n-1 trials estimates by −1 to obtain LOTO estimates that are positively correlated with the true parameter values. However, we prefer taking the difference, as this nicely separates above-average from below-average parameter values, which will be positive and negative, respectively. In general, it should be obvious that LOTO does not provide single-trial parameter estimates in an absolute sense. Rather, the method provides information about the direction and (relative) amount of deviation of single-trial parameter values from the average. Note that, in most cases, cognitive neuroscientists will be interested in the correlation between trial-by-trial parameter values and neural data, for which absolute values do not matter (Gluth and Rieskamp, 2017). In the rest of the article, we sometimes refer to ‘LOTO estimates of a parameter’, but it should be clear that this refers to estimates in a relative sense only.

II. A ‘toy’ model example

In the following, we illustrate the LOTO principle using the binomial distribution as a ‘toy’ model example. In general, the binomial distributions specifies the probability of observing k successes out of n trials given probability θ, which specifies the probability of a success in each trial. Let us assume that we seek to find the estimate of θ that provides the best account of the observation of k successes in n trials. The Maximum Likelihood Estimate (MLE) of this is simply (e.g., Farrell and Lewandowsky, 2018):

Let us now assume that we believe that θ is not stable but may vary from trial to trial, and that our goal is to estimate this trial-by-trial variability using LOTO. (Note that for this and the following sections, we simply assume that such trial-by-trial variability exists; we address the issue of testing for the presence of trial-by-trial variability in 'Results: Section V'. Also note that for this ‘toy’ model example, LOTO cannot provide more information about parameter θ than what is already given by the observations k; the goal of this section is to exemplify the workflow of LOTO rather than its capabilities.) As explained above, LOTO’s estimate for trial t is the difference between the n and the n–1 estimates of θ. For the binomial model, we thus obtain:

where kt indicates whether a success was observed in trial t (kt = 1) or not (kt = 0). The critical question is whether we can expect the LOTO approach to capture changes in observations meaningfully. To answer this question, we take the derivative of Equation 2 with respect to kt to see how LOTO’s estimate changes as a function of kt:

Equation 3 implies that changes in single-trial observations map linearly and positively onto LOTO’s estimates, which is what we desired. To give an example, let us assume k = 5, n = 10, kt=1 = 1, and kt=2 = 0. Then LOTO’s estimates for t = 1 and t = 2 are 1/18 and −1/18, respectively, with the difference being 1/(n–1) = 1/9. Equation 3 also reveals a general property of LOTO, namely that the change in LOTO is inversely related to the number of trials: naturally, the influence of a single trial on the estimation of a parameter decreases as the number of trials increases. We will further elaborate on this issue in 'Results: Section VII'. Also, we see that LOTOt is not an estimate of θ for trial t in an absolute sense (e.g., a θ of −1/18 is impossible), but that positive and negative LOTOt values indicate above- and below-average single-trial values, respectively.

So far, we have only established a sensible relationship between LOTO’s estimate and the observation kt, but ultimately we are interested in whether LOTO is also related to the underlying trial-wise (data-generating) parameter θt. This depends on how a change in θt is mapped onto a change in kt, which is given by the Fisher information, the variance of the first derivative of the log-likelihood function with respect to θt. For the binomial distribution with n = 1 (i.e., the Bernoulli distribution), the Fisher information is (e.g., Ly et al., 2017):

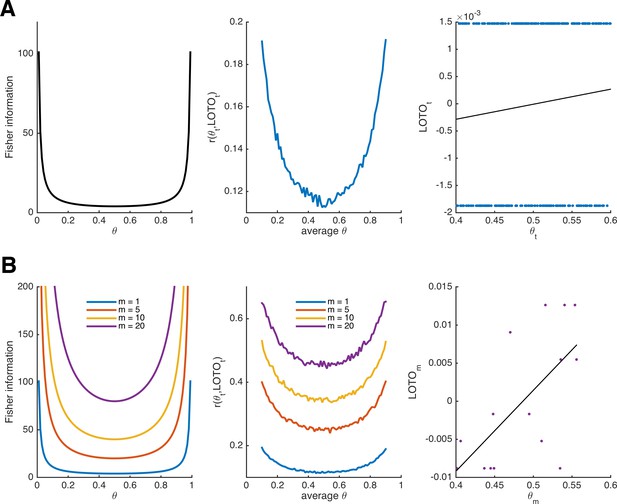

As can be seen in the left panel of Figure 1A, the Fisher information of the Bernoulli distribution indicates that observations are more informative about the underlying parameter at the extremes. Accordingly, LOTO’s performance (i.e., the correlation between the true θt and LOTO’s estimates of it) will also depend on the range of θt. To illustrate this, we ran simulations by drawing 300 values of θt from a uniform distribution with a range of .2 (e.g., between .4 and .6) and repeated this for every range from [0, .2] to [.8, 1] in steps of .01 (detailed specifications of all simulations are provided in 'Materials and methods'). LOTO was then used to recover θt. The middle panel of Figure 1A depicts the average correlation between θt and its LOTO estimate as a function of the average θ. As predicted by the Fisher information, the correlation is higher when θ is close to 0 or close to 1. Even though we do not further discuss the Fisher information in the context of the more complex models in the following sections, the principles of Fisher information also apply there (including the fact that more extreme parameter values are most informative).

Fisher information and performance of LOTO for the binomial distribution.

(A) Fisher information (left panel), average correlation between and LOTO’s estimate (middle panel), and example correlation for values of θt in the range [.4,.6] (right panel) for the Bernoulli distribution. (B) The same as in panel A but generalized to the binomial distribution, that is, assuming that θ is stable over m trials, for different levels of m (the example correlation in the right panel uses m = 20).

Notably, the (average) correlations between θt and LOTO’s estimate are quite low (i.e., < .2). The reason for this can be seen in the right panel of Figure 1A, which shows the correlation for an example of 300 trials in the range [.4, .6]: the estimate of LOTO can only take two values, depending in whether a success was observed in t or not. Fortunately, the performance can be improved by assuming that θ does not change from trial to trial but remains constant over a certain number of trials, which we denote m. The larger m, the more different values LOTO can take (and at the same time, our confidence in the estimate of θ increases). LOTO’s estimate, its derivative with respect to m, and the Fisher information for the Bernoulli distribution generalized to m ≥ 1 (i.e., the binomial distribution) are:

Figure 1B illustrates the increases in Fisher information and LOTO’s performance as m increases. This is true, even though we kept the number of draws to 300 (implying that when m = 20, there are only n/m = 300/20 = 15 values estimated by LOTO; see Figure 1B, right panel). Thus, it might be more promising to track fluctuations over multiple trials (e.g., short blocks or sessions) than to try to capture parameter values in each and every trial. For example, Gluth et al. (2015) investigated memory-based decisions and inferred whether an item had been remembered on the basis of three trials that included the item as a choice option (see also 'Results: Section XI', in which we use LOTO to reproduce this analysis).

We see that LOTO can be applied to the Bernoulli and binomial distributions, but it should be (again) noted that LOTO is not particularly helpful in these cases. This is because LOTO will not provide us with any novel information over and above what is already known from the observations. In other words, LOTO’s estimates are perfectly correlated with kt or km, which is mirrored in the derivatives (Equations 3 and 5b) which depend only on constants n or m. In principle, there are two ways to make LOTO (and any other method for tracking trial-by-trial variability in parameters) more useful. First, one could introduce variations in the task, with these variations being directly linked to the predictions of the cognitive model. Second, one could apply cognitive models that predict a ‘richer’ set of data (e.g., sequential sampling models that predict accuracy rates and RT distributions conjointly; see above). In the next section, we first turn to introducing variations in the task.

III. Task variations and comparison with ‘single-trial fitting’

We demonstrate the importance of using variations in the experimental design that are systematically linked to the prediction of the cognitive model within the context of studying intertemporal choices. Intertemporal choices are decisions between a smaller reward available now or in near future vs. a larger reward available only in the more distant future (an example trial of a typical intertemporal choice task could be: ‘Do you want to receive $20 now or $50 in 20 days?'). Traditionally, these decisions have been modeled using temporal discounting models that describe the decrease in utility ui of an option i as a function of the delay di of its pay-out (Frederick et al., 2002). We apply the hyperbolic discounting (HD) model (Mazur, 1987), according to which:

where xi refers to the amount of reward and κ is the discount rate (i.e., a free parameter modeling the degree to which utilities are lowered by delays; 0 ≤ κ ≤ 1). To derive choice probabilities (that can then be subjected to MLE for the estimation of κ), the HD model is usually combined with the logistic (or soft-max) choice function (e.g., Gluth et al., 2017; Peters et al., 2012), according to which the probability pi of choosing option i from a set of options including i and j is:

where β is a second free parameter modeling the sensitivity of the decision maker to utility differences (β ≥ 0).

Our goal here is to show that by applying LOTO, we can extract information about parameter κ (which we assume to vary from trial to trial) that goes beyond the information already provided by the observations. We do this by looking at the first derivatives of the log-likelihood of the HD model with respect to κ. Let us assume that option i is an ‘immediate’ option, offering amount xi at delay di = 0, so that ui = xi, and that option j is a ‘delayed’ option, offering amount xj (xj > xi) at delay dj (dj > 0). It turns out that the first derivative of the log-likelihood for choosing the immediate option is:

Detailed derivations are provided in the 'Materials and methods'. Similarly, the first derivative of the log-likelihood for choosing the delayed option is:

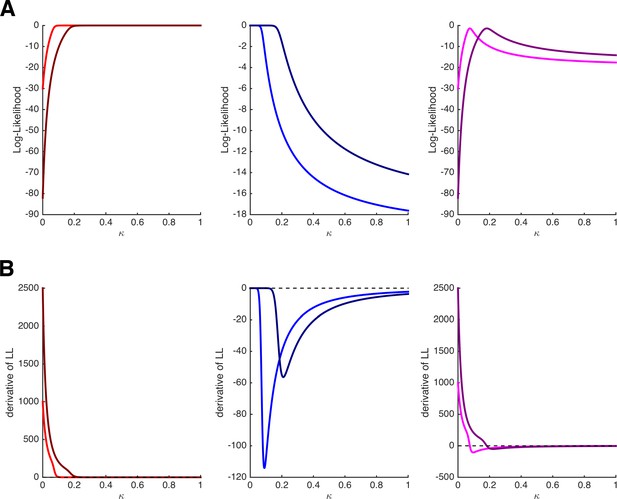

Note that the MLE can be obtained by setting these derivatives to 0. Importantly, we see that in contrast to the derivatives of the binomial distribution, the derivatives of the HD model depend on features of the task, namely on the amount and delay of the delayed option (i.e., xj and dj) and on the amount of the immediate option (i.e., xi), which is hidden in pi. At first glance, this already looks as if the estimation of single-trial values of κt will vary with the task’s features, which is what we desired. Note, however, that both of the terms on the right-hand side of the derivatives are ≥ 0. Thus, by setting the derivatives in Equation 8a and Equation 8b to 0 to obtain the MLEs, parameter κt will take the highest possible value (i.e., κt = 1) whenever the immediate option is chosen and the lowest possible value (i.e., κt = 0) whenever the delayed option is chosen. (Intuitively speaking: the best account for choosing the immediate option is to assume maximal impatience, the best account for choosing the delayed option is to assume is maximal patience.) This is illustrated in Figure 2A and B, which depict the log-likelihoods of choosing i (left panels) or j (middle panels) and their derivatives as a function of κ for two different trials with xi,1 = 20, xj,1 = 50, and dj,1 = 20 in trial 1 (bright colors) and xi,2 = 18, xj,2 = 100, and dj,2 = 25 in trial 2 (dark colors). Independent of the features of the task (i.e., the trial-specific amount and delays), the log-likelihoods of choosing i are monotonically increasing, and their derivatives are always positive, whereas the log-likelihoods of choosing j are monotonically decreasing, and their derivatives are always negative.

Log-likelihoods and derivatives for the hyperbolic discounting model.

(A) Log-likelihoods for choosing the immediate option (left panel), choosing the delayed option (middle panel), and choosing the immediate option once and the delayed option once (right panel) as a function of parameter κ. The bright and dark colors refer to two different trials with different amounts and delays. (B) First derivatives of the log-likelihoods shown in panel A. Note that the estimation of κ will depend on the features of the task only when both options are chosen at least once.

Critically, however, this caveat disappears as soon as each option (i and j) is chosen at least once. For instance, when keeping xi, xj, and dj constant over two trials in which both i and j are chosen once, the derivative of the log-likelihood becomes:

which is positive if pi < .5 but negative if pi > .5. The right panels of Figure 2A and B show the log-likelihoods and their derivatives when assuming that trial 1 or trial 2 were presented twice, and i was chosen in one occasion and j was chosen in the other occasion. Here, the derivatives cross the 0-line, and even more importantly, they do so at different values of κ for the different trials (the MLE of κ for trial 2 is higher because the immediate option is comparatively less attractive than in trial 1).

Why does this allow LOTO to extract more information about κt than that given by the observations? Recall that the estimate of LOTO is the difference between the MLE of κ for all trials and the MLE for all trials except t. As explicated above, these two MLEs will depend on the task features in the n/n–1 trials as long as both choice options (e.g., immediate, delayed) have been chosen at least once in the n/n–1 trials (so that we get non-extreme estimates of κ from the n/n–1 trials). In principle, LOTO can therefore be applied as soon as both options have been chosen at least twice over the course of an experiment (if one option has been chosen in only one trial, then the estimate of κ when excluding this particular trial will be extreme as in the single-trial case). We illustrate this using the two example trials from Figure 2 at the end of the 'Materials and methods'.

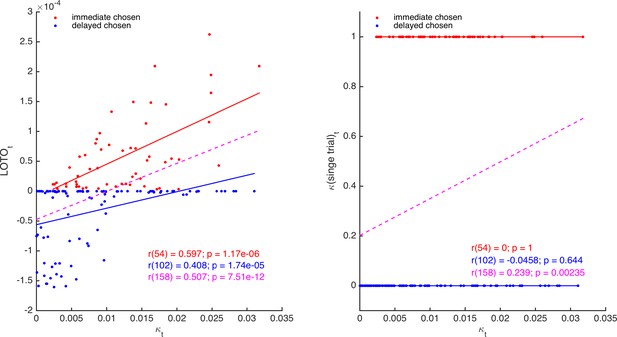

It follows that LOTO should outperform the arguably most elementary method of capturing trial-by-trial parameter variability, which we denote ‘single-trial fitting’. With single-trial fitting, we mean that after estimating all parameters of a model once for all trials, a specific parameter of interest (e.g., κ of the HD model) is fitted again to each trial individually (while all other parameters are fixed to their ‘all-trial’ estimates). Here, we show that LOTO but not single-trial fitting of parameter κ of the HD model is able to extract additional information about the true κt, over and above what is already provided by the observations. Thereto, we generated 160 trials with a constant immediate choice option and variable delayed choice options, and simulated decisions of a participant with κt varying from trial to trial. Then, we applied LOTO and the single-trial fitting method to recover κt. As can be seen in Figure 3, the estimates of LOTO (left panel) but not of single-trial fitting (right panel) are correlated with the true κt after accounting for the observations (i.e., whether the immediate or the delayed option was chosen). In other words, the positive correlation between κt and the single-trial estimates (dashed magenta line) can be attributed entirely to the fact that higher values of κt lead to more choices of the immediate option, but the positive correlation between κt and the LOTO estimates is further driven by how expectable or surprising a decision is (given the trial features xi,t, xj,t, and dj,t).

Comparison of LOTO and single-trial fitting.

The left panel shows the correlation of the trial-specific parameter κt of the HD model with LOTO’s estimates, separately for choosing the immediate (red) and for choosing the delayed (blue) option. The dashed line (magenta) shows the regression slope when collapsing over all trials. The right panel shows the same for the single-trial-fitting approach.

To summarize, one approach to extract information about trial-by-trial changes in a parameter (over and above what is already provided by the observations) is to introduce variations in the experiment that are linked in a systematic manner to the cognitive model. Because its estimates are not solely dependent on the behavior in a single trial but also take all remaining trials into account, LOTO allows to capture this additional information. Note, however, that for specific models, such as sequential sampling models of decision making, LOTO can be useful even without introducing task variations. In the next section, we will look at further improvements in LOTO’s ability to recover trial-specific parameter values by extending the HD model to allow the prediction of choice and RT conjointly.

IV. LOTO benefits from modeling an enriched set of data

Using LOTO to recover trial-specific parameter values of models that predict only binary outcomes, such as choices or accuracy rates, is possible but somewhat limited. This can be seen when looking at the left panel of Figure 3, which suggests that LOTO’s estimates are always higher when the immediate option was chosen. The reason for this is that taking one trial out in which the immediate/delayed option was chosen will always de-/increase the estimation of the discount factor κ, because (as stated above) the single-trial log-likelihood is monotonically in-/decreasing given the choice. (The magnitude of this change varies as a function of the trial-specific choice options, but the sign of this change is determined by the choice.) However, this strict choice-dependent separation might not be plausible; for example, it could very well be that the delayed option is chosen in a trial in which this option offered a very high reward xj with a very low delay dj, even though the actual discount factor in that trial (i.e., κt) was higher than on average. To overcome this limitation, we suggest that the model is extended so that it can take RT data into account. Going back to the example, the intuition is that even if the highly attractive delayed option is chosen, a high discount factor κt will make this decision more difficult, which will result in a comparatively long RT. Applying LOTO to the extended model then allows to capture this RT-specific information.

We extended the HD model by adding the LBA model to it (using an approach that is similar but not equivalent to that used by Rodriguez et al., 2014 and Rodriguez et al., 2015). Specifically, we assume that the difference in utility between the immediate and delayed options is mapped onto their LBA’s expected drift rates as follows:

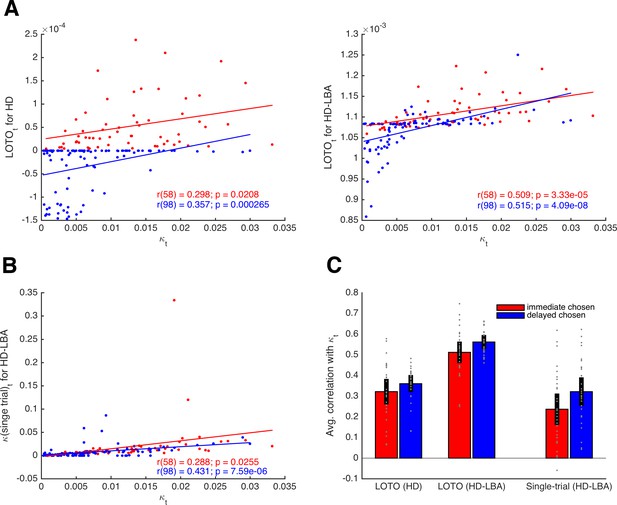

where λ is a free parameter that scales the relationship between utility and expected drift rate. Note that these definitions ensure that the two expected drift rates sum up to 1, following the original specification of the LBA (Brown and Heathcote, 2008). More specifically, νi, and νj represent the means of two independent normal distributions (with standard deviation s), from which the actual drift rates in a trial are assumed to be drawn (see 'Materials and methods'). Choices and RT were simulated for 160 trials of 30 participants and LOTO was applied to the HD and the HD-LBA models. Single-trial fitting was also applied to the HD-LBA model. Figure 4A depicts correlations of an example participant between the true κt and LOTO’s estimate for HD (left panel) and for HD-LBA (right panel). For HD-LBA, the correlation is much improved, and LOTO’s estimates do not strictly separate choices of the immediate and delayed options anymore. The improved performance of LOTO is confirmed when comparing the correlations of all 30 simulated participants (Figure 4C; immediate chosen: t(29) = 10.79, p < .001, Cohen’s d = 1.97, Bayes Factor in favor of the alternative hypothesis BF10 > 1,000; delayed chosen: t(29) = 8.79, p < .001, d = 1.61, BF10 > 1,000).

Comparison of LOTO for the HD and the HD-LBA models.

(A) Correlation of the trial-specific parameter κt with LOTO’s estimates for an example participant. The left panel shows the results when using the HD model, the right panel when using the HD-LBA model. (B) Same as panel (A) but for the single-trial fitting method when using the HD-LBA model. (C) Average correlation for all 30 simulated participants. Individual values (gray dots) are shown together with the 95% confidence intervals of the mean (black error bars).

Notably, the single-trial fitting method applied to the HD-LBA model is also able to capture some variability over and above the information that is already contained in the decisions (Figure 4). This is because RT (and the interplay between RT and decisions) add further information about κt that can be picked up by single-trial fitting. Nonetheless, applying LOTO to HD-LBA yields significantly better recovery of the trial-wise parameter values (Figure 4C; immediate chosen: t(29) = 7.24, p < .001, d = 1.32, BF10 > 1,000; delayed chosen: t(29) = 7.71, p < .001, d = 1.41, BF10 > 1,000). Taken together, recovering trial-by-trial changes in a parameter of a cognitive model with LOTO (but also with other techniques) is much more efficient when the model is able to predict a rich set of single-trial observations, such as RT (and possibly even continuous ratings of choice confidence as in Pleskac and Busemeyer, 2010) in addition to choices or accuracy rates.

V. A non-parametric test for the presence of systematic trial-by-trial variability

So far, our derivations and simulations have rested on the assumption that systematic variability in the parameter of interest exists. As we have argued before, however, this assumption does not have to be correct — not even when the overt behavior appears to be stochastic (Gluth and Rieskamp, 2017). It could be that stochasticity is induced by random fluctuations that are not accounted for by the cognitive model (e.g., randomness in motor execution). When assuming variability in a specific parameter, the risk is that such unsystematic variability is taken up by LOTO even in the absence of any systematic variability in the parameter. Hence, the question is whether one can separate situations in which LOTO captures systematic vs. unsystematic trial-by-trial variability.

To test for the presence of systematic trial-by-trial variability, we propose a non-parametric and simulation-based approach that exploits the improvement in model fit by LOTO: if a model is fitted to n–1 trials, the fit statistic (e.g., the deviance) will improve for two reasons: i) the statistic for the left-out trial is excluded; and ii) the parameter estimate is adjusted so that it optimally fits the n–1 dataset. The rationale of our proposed approach is that the improvement in fit that is attributable to the second reason (i.e., the adjustment) will be lower when there is no systematic trial-by-trial variability in the parameter of interest compared to when there is systematic variability. Practically, we suggest conducting this test in five steps:

For each participant, estimate parameters of the cognitive model for all trials and compute the goodness-of-fit per trial.

For each participant, generate many (e.g., 1,000) simulations of the model for all trials and all participants using the participants’ estimated parameters and assuming no variability in the parameter of interest (i.e., the null hypothesis).

Estimate parameters for these simulated data again, apply LOTO, and compute the LOTO-based improvement in goodness-of-fit for the n–1 trials.

Do the same as in step 3, but for the empirical data.

Test whether the LOTO-based improvement in fit for the empirical data is greater than 95% of the improvements in the simulations that were generated under the null hypothesis.

Note that one advantage of this non-parametric approach is that it does not rely on any assumptions about how the improvement of model fit is distributed under the null hypothesis.

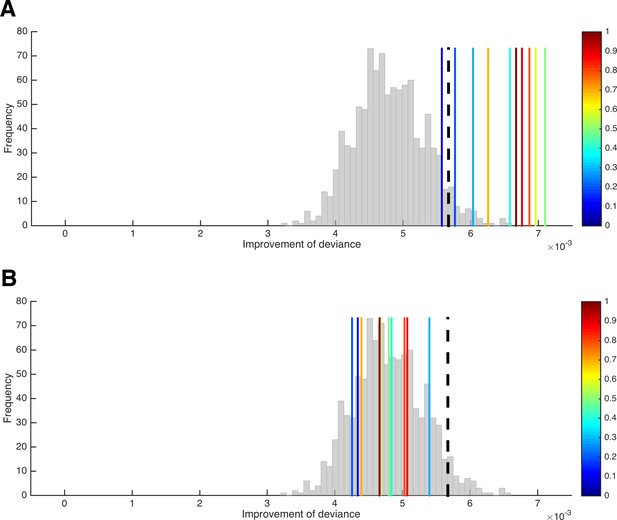

Figure 5A shows the results of this test for the HD model introduced in 'Results: Section III'.

A test for the presence of parameter variability.

(A) Improvement of the HD model’s deviance (in the n–1 trials included per LOTO step) from the ‘all-trials’ fit to the LOTO fit when variability in κ is absent (histogram) and when variability in κ of increasing amount is present (blue to red vertical lines). The black dashed line indicates the 5% of simulations under the null hypothesis with the largest improvement and serves as the statistical threshold. (B) Same as in panel A but with variability in β instead of κ.

We generated 1,000 simulations per participant under the null hypothesis and 10 additional simulations with increasing levels of variability in parameter κ (for details, see 'Materials and methods'). Importantly, the figure shows the average difference in deviance between the ‘all-trial’ fit and the LOTO fits only for the n–1 trials that were included in each LOTO step. This means that the trivial improvement in fit resulting from the fact that LOTO has to explain one trial less is taken out. Thus, the histogram in Figure 5A, which shows the results for the 1,000 simulations under the null hypothesis, confirms our suspicion that LOTO will improve the fit even in the absence of true trial-by-trial variability in parameter κ. However, the continuous vertical lines, which show the results for the simulations with true variability of increasing amount (from blue to red), indicate significantly higher improvements in fit when variability is actually present — except for the lowest level of variability (the dashed black line indicates the 95% threshold).

What happens if there is no systematic variability in the parameter of interest (here: κ), but there is systematic variability in another parameter (here: β)? We can repeat the previous analysis but now simulate variability (of increasing amount) in β instead of κ. (Note that variability in β would mean that the utility difference between choice options is mapped onto the decision in a more/less deterministic way, depending on whether βt is high or low in a trial; for instance, choosing the immediate option in a trial in which the delayed option is very attractive could be attributed to a low βt value instead of a high κt value.) As can be seen in Figure 5B, the improvement in goodness-of-fit when trying to explain variability in β by modeling variability in κ using LOTO does not exceed the improvement under the null hypothesis, and there is no systematic relationship with the amount of variability in β. Thus, we can conclude that finding a significantly higher improvement in fit in our data compared to simulated data under the null hypothesis cannot be due to a misattribution of variability in β to variability in κ. (Of course, this conclusion does not have to hold for other models, parameter sets, and tasks; the test has to be conducted anew for every different situation.)

Variability in behavior (and neural activity) may derive not only from variability in model parameters but also from the processes used to infer the input and output signals of a computation (Drugowitsch et al., 2016; Findling et al., 2018; Loomes et al., 2002; Polanía et al., 2019). For example, choosing the immediate or delayed option in the intertemporal choice task might depend not only on the current impulsiveness (i.e., κ) but also on whether the utility of the delayed option (i.e., uj) is computed correctly or whether the amount xj and the delay dj are perceived accurately. Thus, it might be desirable to examine whether LOTO can dissociate potential variability in these features from variability in the parameter of interest.

Accordingly, we simulated data of the HD model without trial-by-trial variability in κ but with variability in either the amounts xj, the delays dj or the computation of utilities uj of the delayed option. (Note that we ensured that the degree of variability in these variables was similar to that of κ in the previous simulations; see 'Materials and methods'.) Then, we applied LOTO to these simulated data to test whether LOTO misattributes the variability to κ. As can be seen in Figure 5—figure supplement 1, LOTO does not misattribute variability in amounts, but for 3 out of 10 simulations of delay-based variability and for 4 of 10 simulations of utility-based variability, a misattribution could not be ruled out. This appears reasonable given the tight connection of the parameter to both delays and utilities (see Equation 6).

How can the separability of the discount factor κ from the input and output variables x, d, and u be improved? We reasoned that changing the intertemporal choice task by increasing the number of options should help: whereas the discount factor can be assumed to be stable across options (and to vary only from trial to trial), the variability in the input and output variables can be assumed to differ between options (i.e., they are drawn from independent distributions for each option). Accordingly, we extended the simulated task to three options, which can still be considered a reasonable experimental design (e.g., Gluth et al., 2017). We then performed the test for systematic variability using simulated data for this extended task that exhibited true variability in either κ, x, d, or u. As predicted, a significant improvement in model fit over and above the improvement under the null hypothesis of no variability was only found for κ but not for any of the input or output variables (Figure 5—figure supplement 2). In conclusion, LOTO might be an efficient tool to dissociate parameter variability from variability in the input and output signals of computational processes, assuming an appropriate experimental design and careful simulations.

VI. Inferring trial-by-trial variability in more than one parameter

An advantageous feature of LOTO is that the duration of applying the method is almost independent of the number of parameters for which trial-by-trial variability is inferred. The full model is still estimated once for all n trials, and LOTO is still applied n times (but the estimation might take a bit longer for multiple parameters). In this section, we illustrate the application of LOTO to inferring trial-by-trial variability in two parameters of the HD-LBA model: the discount factor κ and the decision threshold b (for details, see 'Materials and methods').

First, we used the non-parametric test from the previous section to check for the presence of systematic variability. In doing this, we simulated 1,000 sets of 30 participants under the null hypothesis and tested whether the simulation with true variability in κ and b provides a significantly improved deviance than those simulations. This was confirmed as only 19 of the 1,000 simulations under the null hypothesis provided a better fit. It should be noted that the explanatory power of this test is somewhat limited, because we can only conclude that there is systematic variability in at least one of the two parameters but not necessarily in both of them.

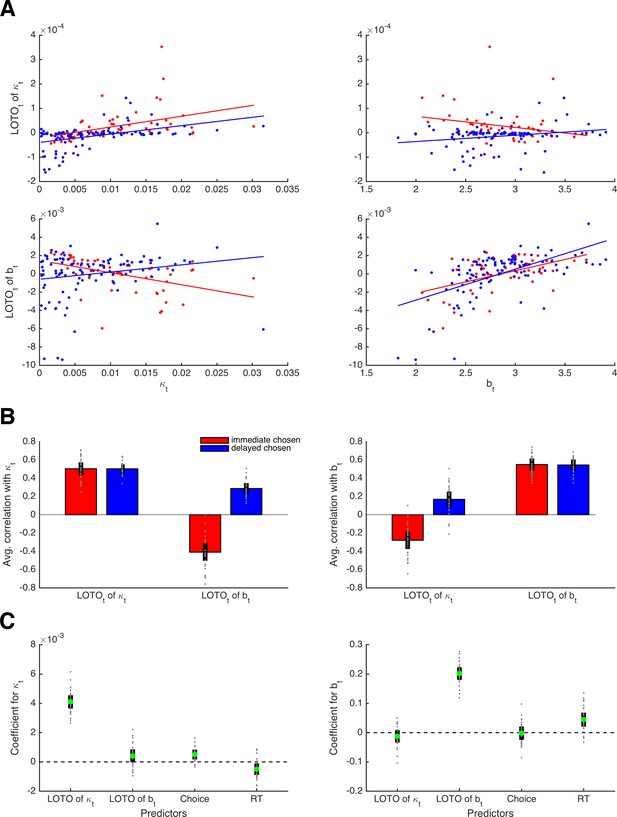

Next, we looked at the correlations between the true κt and bt parameter values and their LOTO estimates for an example participant. As shown in the upper left panel of Figure 6A, LOTO is still capable of recovering the variability in parameter κ from trial to trial for both types of decisions (i.e., immediate and delayed). Similarly, LOTO captures the variability in parameter b (lower right panel). The lower left and upper right panels in Figure 6A show the correlations between the true κt and bt with the ‘wrong’ LOTO estimates of btand κt, respectively. Ideally, one would desire correlations close to zero here, that is, LOTO should not attribute variability in one parameter to variability in another parameter. However, we see that there are modest correlations whose signs depend on the choice. When the immediate option was chosen, the correlations are negative; when the delayed option was chosen, the correlations are positive. These results are confirmed when looking at the average correlations across all 30 simulated participants (Figure 6B): LOTO attributes variability in the ‘correct’ parameters independent of choice and variability in the ‘wrong’ parameters depending on which option was chosen. (Intuitively, a high discount factor κt competes with the decision threshold bt as an alternative explanation for fast choices of the immediate option or slow choices of the delayed option. Similarly, a high decision threshold bt competes with the discount factor κt as an alternative explanation for choices of the option with highest utility.)

Capturing trial-by-trial variability in two parameters.

(A) Correlations of true parameter values κt and bt with their LOTO estimates for an example participant; the on-diagonal elements show the ‘correct’ inferences, the off-diagonal elements show the ‘wrong’ inferences. (B) Average correlations for all simulated participants (left panel: correlation with κt; right panel: correlation with bt). (C) Average standardized regression coefficients of LOTO estimates, choices, and RT when explaining variance in κt (left panel) and bt (right panel). Individual values (gray dots) are shown together with 95% confidence intervals of the mean (black error bars).

Importantly, these results indicate that the observations (i.e., choices and RT) could explain away the undesired correlations while not affecting the desired correlations. To test this, we conducted random-effects linear regression analyses with the true parameter (κt, bt) as the dependent variable and with the LOTO estimates of these parameters together with choices and RT as independent variables (Figure 6C). The standardized regression coefficient (averaged across simulated participants) for LOTO’s estimate of κt when explaining variance in true κt was indeed significantly positive with a very high effect size (t(29) = 28.48, p < .001, d = 5.20, BF10 > 1,000). The coefficient for the ‘wrong’ estimate of bt when explaining variance in κt also reached significance, but the effect was much weaker (t(29) = 3.01, p = .005, d = 0.55, BF10 = 7.74). With respect to explaining variance in true bt, only LOTO’s estimate of the ‘correct’ estimate of bt was significant and strong (t(29) = 30.40, p < .001, d = 5.55, BF10 > 1,000), whereas the effect for the ‘wrong’ estimate of κt did not reach significance (t(29) = −1.93, p = .063, d = −0.45, BF10 = 0.99).

Taken together, LOTO makes it possible to infer trial-by-trial variability in more than one parameter within the same model, revealing information over and above that provided by the observations. At the same time, LOTO’s misattributions of variability to other parameters are largely explained away by the observations. (As before, these conclusions are restricted to the current setting of task, model, and parameters, and have to be tested anew for different settings.) Notably, the random-effects linear regression analysis conducted above is very similar to the conventional approach of analyzing fMRI data on the basis of the General Linear Model (Friston et al., 1994). The ultimate question is not whether LOTO induces some significant misattribution or not, but whether this misattribution can in turn be expected to lead to wrong inferences about the relationship between brain activity and variability in cognitive parameters. We further elaborate on this issue in 'Results: Section X'.

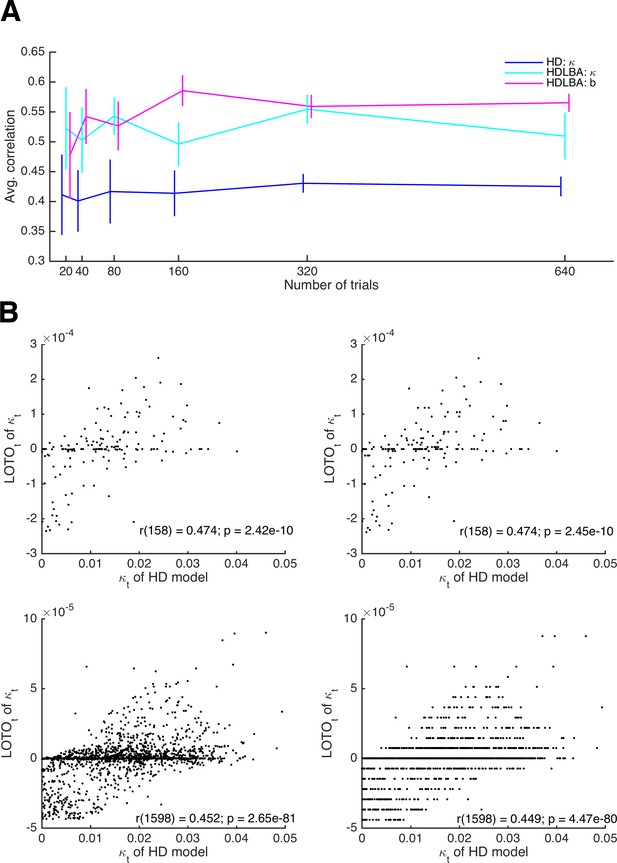

VII. The performance of LOTO as a function of the number of trials

Trial numbers in neuroimaging studies are often low. fMRI studies are particularly limited because scanning hours are expensive, and because the low temporal resolution of fMRI requires long inter-trial intervals in the case of event-related fMRI (which is most suitable for trial-by-trial analyses). Therefore, it is important to understand the extent to which LOTO’s accuracy depends on the number of trials. To test this, we repeated the simulations of the HD and the HD-LBA models for 20, 40, 80, 160, 320, and 640 trials. As shown in Figure 7A, the average extent of the correlations between the true parameter values and the respective estimates of LOTO are largely unaffected by the number of trials. The recovery performance is slightly reduced for the threshold parameter b of the HD-LBA model in the case of 20 trials. Note, however, that the variance of the correlations decreases as the number of trials increases. Therefore, LOTO still benefits from increasing the number of trials in terms of achieving higher statistical power, as we show in 'Results: Section X'.

Relationship between the performance of LOTO and the number of trials.

(A) Average correlations between true parameter values and LOTO estimates for parameters of the HD and the HD-LBA models as a function of the number of trials per simulated participant. (B) Example correlations between true values and LOTO estimates for 160 trials (upper panels) and for 1,600 trials (lower panels), separately for a strict tolerance criterion for convergence of the minimization algorithm (left panels) and for a more lenient, default tolerance criterion (right panels). Error bars indicate 95% confidence intervals of the mean.

In 'Results: Section II', we mentioned that the impact of a single trial on the overall model fit, as well as on the LOTO estimate, decreases as the number of trials increases. If the number of trials is very high, then the improvement in model fit that results from a change in the parameter value might become smaller than the default tolerance criterion set by the minimization algorithm. In other words, the algorithm assumes convergence because the change in model fit caused by taking one trial out is insignificantly small. Figure 7B illustrates this phenomenon. The upper panels show the results for parameter κ of the HD model for 160 trials, the lower panels for 1,600 trials. In the left panels, a strict tolerance criterion was used; in the right panels, the default tolerance criterion in MATLAB was used (for details, see 'Materials and methods'). In the case of 1,600 trials, the tolerance criterion matters: if the default criterion is used, the LOTO estimates exhibit discrete jumps, that is, the same parameter value is estimated for trials with relatively similar observations, because a more fine-graded estimation does not improve the fit beyond the default tolerance criterion. This is not the case for the strict tolerance criterion.

According to the example in Figure 7B, the default tolerance criterion appears to lower the correlation between the true values and the LOTO estimates in the case of 1,600 trials, but only to a minor extent. This observation is confirmed when comparing the correlations across all 30 simulated participants. In the case of 160 trials, the correlations obtained using the strict and the default tolerance criterion are not significantly different (t(29) = 1.27, p = .213, d = 0.23, BF10 = 0.40). In the case of 1,600 trials, there is a significant difference (t(29) = 4.59, p < .001, d = 0.84, BF10 = 323.03), but the difference is small in absolute terms (i.e., on average the correlation is reduced by only .003). In general, we recommend checking the potential impact of the tolerance criterion on LOTO’s performance by running simulations, in particular when LOTO is applied to a task with many trials (i.e., ≥ 1,000).

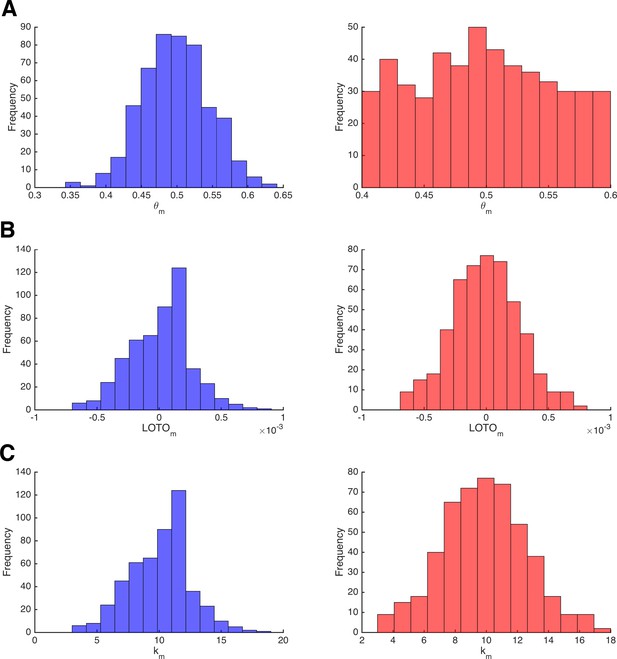

VIII. Invariance of LOTO with respect to the underlying parameter distribution

In contrast to some other methods for inferring trial-by-trial variability in model parameters (van Maanen et al., 2011; Turner et al., 2015), LOTO does not require the assumption that parameters are drawn from specific distributions. Even though it is not the purpose of LOTO, one might wonder whether the distributions of parameters and of LOTO’s estimates can be expected to be similar. To answer this question, we reverted to the initial ‘toy’ model example of the binomial distribution ('Results: Section II'). For this purpose, we sampled 10,000 Bernoulli events, which we split into 500 ‘blocks’ of 20 trials each (see 'Materials and methods'). For each block, parameter θm was drawn from either a normal or a uniform distribution and observations km were then drawn from binomial distributions given θm. Finally, LOTO was applied to infer variability in θ.

The left and right panels of Figure 8A depict the normally and uniformly distributed parameter values of θm, respectively. Similarly, the left and right panels of Figure 8B show the distributions of the corresponding LOTO estimates. The distributions of the true parameter values are markedly different, whereas the distributions of LOTO estimates are very similar. The reason for this can be seen in Figure 8C, which depicts the distribution of observations km, which perfectly match the LOTO distributions (because the observations and LOTO estimates are perfectly correlated for this ‘toy’ model; see 'Results: Section II'). By definition, the observations are distributed binomially and so are the LOTO estimates. Generally speaking, the distribution of LOTO estimates is mostly invariant to the distribution of the underlying parameters but depends much more on how the parameter values map onto the observations via the model and its likelihood function.

Invariance of LOTO with respect to the underlying parameter distribution.

(A) Distribution of the data-generating parameter values θm for two simulations that sample from a normal distribution (left, blue) or from a uniform distribution (right, red). (B) The corresponding distributions of LOTO estimates. (C) The corresponding distributions of observations km.

To generalize these conclusions to the more interesting case of a cognitive model, we sampled single-trial parameter values of κ (i.e., the discount factor) and b (i.e., the decision threshold) of the HD-LBA model either from a normal distribution or from a uniform distribution (details are provided in 'Materials and methods'). The results for parameter κ and for parameter b are presented in Figure 8—figure supplement 1 and Figure 8—figure supplement 2, respectively. Again, the distributions of LOTO estimates appear to be invariant to the shape of the underlying parameter distributions. In this case of the HD-LBA model, the parameter values and LOTO’s estimates are linked via the LBA likelihood function. Most of all, this function depends on the drift rates that are drawn from normal distributions. Expectedly, the LOTO estimates appear to be roughly normally distributed. Importantly, our simulations of the HD-LBA model also suggest that the performance of LOTO (in terms of the correlation between true parameter values and LOTO estimates) does not seem to depend much on the distribution of the underlying parameter values. Hence, we conclude that LOTO cannot be used to infer the shape of the underlying parameter distribution. Despite this limitation, LOTO is highly efficient in detecting significant relationships between cognitive model parameters and neural data, regardless of the underlying parameter distribution.

IX. Comparison of LOTO with related approaches

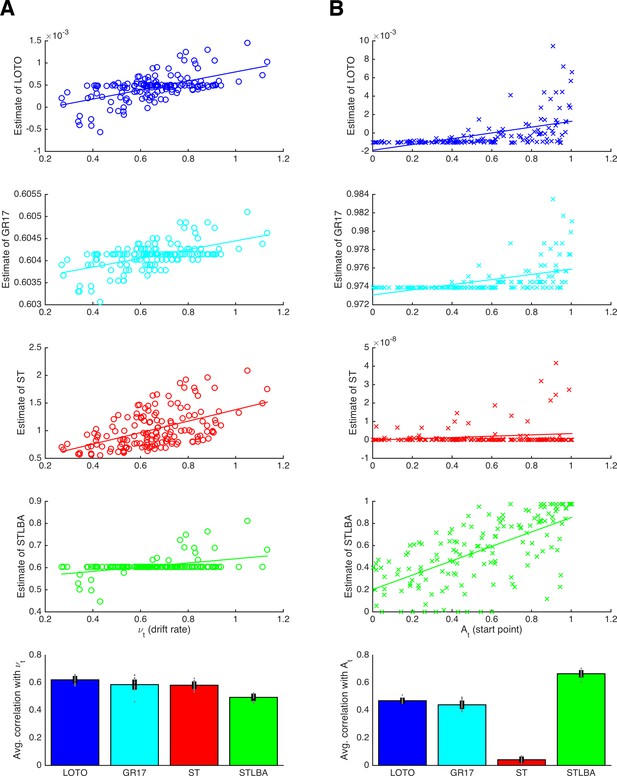

In this section, we compare LOTO’s ability to recover the underlying trial-by-trial variability of cognitive model parameters with alternative techniques. More specifically, we generated synthetic data (i.e., choices and RT) from the LBA model (Brown and Heathcote, 2008), which assumes that the trial-wise drift rates νt and start points At are drawn from normal and uniform distributions, respectively (details are provided in the 'Materials and methods'). We compare LOTO with three other methods: i) with our previously proposed technique (Gluth and Rieskamp, 2017; henceforth ‘GR17’), ii) with ‘single-trial fitting’, and iii) with the ‘Single-Trial LBA’ approach (van Maanen et al., 2011; henceforth ‘STLBA’), which was specifically developed for retrieving maximum-likelihood estimates of trial-wise drift rates and start points of the LBA.

Figure 9 shows correlations between the true drift rates and start points and the values recovered by each method for an example simulation (upper panels), together with average correlations for multiple simulated experiments (lowest panels). From the example correlations, we see that LOTO and GR17 yield very similar results. This is to be expected because the two approaches employ similar principles. In both cases, parameters are estimated for all trials, and those parameters for which trial-by-trial variability is not assumed are fixed to their all-trial estimates; then, both approaches use the discrepancy between the observation in each trial and the observations over all trials to infer plausible trial-specific values. However, the comparison across multiple simulations reveals that LOTO performs significantly better than GR17 for both parameters (drift rate: t(19) = 3.76, p = .001, d = 0.84, BF10 = 28.75; start point: t(19) = 4.86, p < .001, d = 1.09, BF10 = 259.17). The reason for this is that the performance of GR17 depends on the step size with which the range of possible parameter values is scanned, and we were forced to choose an immediate step size to avoid excessive computation times. As a consequence, the GR17 estimates exhibit discrete jumps (similar to those mentioned in the previous section when applying LOTO with a too lenient tolerance criterion), which lower the correlation with the true parameters. In other words, the advantage of LOTO over GR17 is its higher processing speed, which is particularly relevant when modeling variability in multiple parameters.

Comparison of methods for capturing trial-wise parameter values.

(A) Results for the LBA drift rate parameter. The first four panels show correlations for an example participant for the methods LOTO (blue), GR17 (cyan), single-trial fitting (ST; red), and STLBA (green). The lowest panel shows correlations averaged over all simulations. (B) Same as panel A but for the LBA start point parameter. Individual values (gray dots) are shown together with 95% confidence intervals of the mean (black error bars).

The ‘single-trial fitting’ approach yields acceptable results for the drift rate, but is still outperformed by LOTO (t(19) = 19.72, p < .001, d = 4.41, BF10 > 1,000). More dramatically, the method fails completely with respect to recovering the start point values. In our view, this is because adjusting the drift rate is sufficient to account for the observations in a single trial (i.e., whether the choice was correct or not and how fast the choice was), so that there is no information left to be captured by the start point. By contrast, LOTO (and GR17) estimates depend on how the likelihood space of the LBA model changes when one trial is taken out, and this likelihood space depends on both parameters.

Finally, the STLBA method performs worse than LOTO with respect to the drift rate (t(19) = 52.60, p < .001, d = 11.76, BF10 > 1,000) but better with respect to the start point (t(19) = −30.08, p < .001, d = −6.73, BF10 > 1,000). Looking at the example simulations in Figure 9, we see that this is because many single-trial drift rates (but not start points) of the STLBA are equal to the ‘all-trial estimate’. This is a consequence of LBA’s assumption that drift rates are drawn from a normal distribution whereas start points are drawn from a uniform distribution, which induces a ‘ridge’ in the joint density distribution on which one drift rate value (namely the mean of the normal distribution) together with many start points are the maximum-likelihood estimates (L. van Maanen; personal communication, February 2018). Overall, LOTO appears to provide comparatively good, and in fact mostly superior, results when compared to alternative approaches for capturing trial-by-trial variability in cognitive model parameters.

The previous comparison does not include arguably the most powerful approach for linking cognitive models with neural data, which is the joint modeling approach (Turner et al., 2015). This method employs hierarchical Bayesian modeling (Farrell and Lewandowsky, 2018; Lee and Wagenmakers, 2013) to draw both behavioral and neural parameters from a joint, higher-order probability distribution and to fit models to both behavioral and neural data. To compare LOTO to the joint modeling approach, we first implemented Bayesian modeling to obtain single-trial estimates of parameter κ of the HD model (details are provided in the 'Materials and methods'). Then, we extended this to the joint modeling approach by simulating neural data F(κ)t, which we assumed to correlate with κt, and included these data when estimating the model. Thus, the following comparison comprises three methods: LOTO, Bayesian modeling (without joint modeling), and the joint modeling approach.

We compared the three methods with respect to the correlation between the true values of κt and the methods’ estimates (Figure 9—figure supplement 1A) and with respect to the correlation between the neural data F(κ)t and the methods’ estimates (Figure 9—figure supplement 1B). First of all, all three methods provided significant relationships with the true model parameters and with the neural data (all p < .001, all BF10 > 1,000). Expectedly, the correlations illustrate that the Bayesian modeling and the joint modeling approach yielded similar estimates. Averaged over the simulated participants, however, the joint modeling approach outperformed (non-joint) Bayesian modeling with respect to both κt (t(29) = 3.61, p = .001, d = 0.66, BF10 = 21.96) and F(κ)t (t(29) = 4.32, p < .001, d = 0.79, BF10 = 131.84). Remarkably, LOTO outperformed Bayesian modeling (t(29) = 2.82, p = .009, d = 0.51, BF10 = 6.07) as well as the joint modeling approach (t(29) = 2.51, p = .018, d = 0.46, BF10 = 3.33) with respect to κt. With respect to the neural signal F(κ)t, however, LOTO yielded similar correlations compared to Bayesian modeling (t(29) = −0.62, p = .540, d = −0.11, BF10 = 0.26) and was outperformed by the joint modeling approach (t(29) = −3.03, p = .005, d = −0.55, BF10 = 13.78). Overall, the joint modeling approach did indeed provide the best resultsin terms of linking the single-trial neural data to the cognitive model, but the differences between the methods appear to be comparatively small. Taking flexibility and difficulty of implementation into account, LOTO appears to offer an expedient alternative to joint modeling (see also 'Discussion').

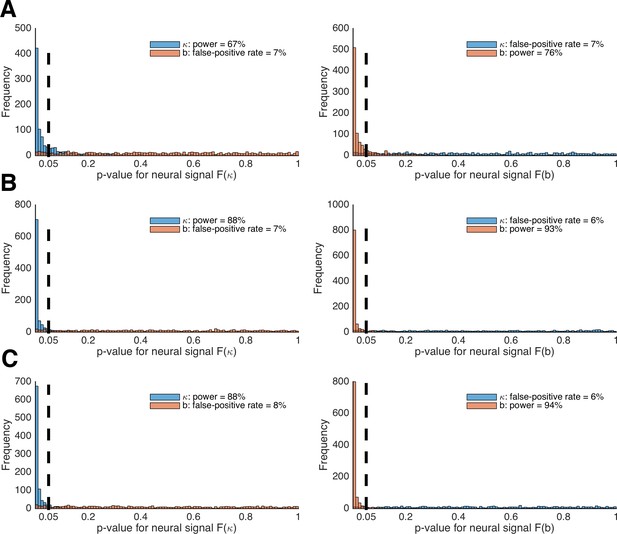

X. A power analysis for linking LOTO estimates to neural data

The ultimate goal of LOTO (and related approaches) is to establish a link between the inferred trial-by-trial variability in parameters of cognitive models and the brain activity measured with tools such as fMRI or EEG. Therefore, the question of whether LOTO is an appropriate tool for a given experiment with a specific design (including task, number of trials, number of participants etc.), cognitive model, and parameter(s) of interest should be answered by testing whether LOTO can be expected to recover the (truly existing) correlation between a neural signal and a parameter. In the case of modeling variability in more than one parameter, it is also important to test whether the potential misattribution of variability by LOTO may lead to incorrect inferences regarding the brain–parameter relationship (see 'Results: Sections V and VI'). We emphasize here that these requirements are not unique to LOTO. Any approach for investigating the neural correlates of trial-by-trial parameter variability should be accompanied by such analyses of statistical power (i.e., sensitivity) and false-positive rates (i.e., specificity).

To link LOTO estimates to neural data, we reverted to the HD-LBA model for which we have inferred variability in two parameters (i.e., discount factor κ and decision threshold b) in 'Results: Section VI'. When simulating trial-specific parameter values κt and bt, as well as choices and RT, we also generated two hypothetical neural signals F(κ)t and F(b)t that were positively correlated with κt and bt, respectively (for details, see 'Materials and methods'). Then, we generated 1,000 datasets of 30 participants with 160 trials each and tested how often the LOTO estimate for a parameter (e.g., κ) correctly explains a significant amount of variability in the associated neural signal (e.g., F[κ]), and how often it incorrectly explains a significant amount of variability in the neural signal associated with the other parameter (e.g., F[b]) over and above that explained by the observations. This was realized by a random-effects linear regression analysis – similar to the General Linear Model approach in fMRI analysis (Friston et al., 1994). Stated differently, we tested i) the sensitivity of LOTO by means of a power analysis, asking how likely it is that LOTO identifies the correct neural signal, and ii) the specificity of LOTO by means of a false-positive analysis, asking how likely it is that LOTO misattributes a neural signal to a different parameter.

Figure 10A depicts the histograms of p-values for tests of whether LOTO’s estimates for κ and b are significantly related to the brain signals F(κ)t (left panel) and F(b)t (right panel), using the setting with 30 participants. According to these results, LOTO achieves a statistical power (or sensitivity) of 67% for parameter κ and of 76% for parameter b, values that are slightly below the threshold of 80% that is usually deemed acceptable. On the other hand, the false-positive rate (or specificity) of LOTO is good, with values lying just slightly above the 5% error rate. This means that the small misattribution of parameter variability seen in 'Results: Section VI' does not carry over much to a misattribution of the neural signal. In Figure 10B, we repeated the analysis for a sample size that is increased by two-thirds (i.e., 50 instead of 30 simulated participants). Expectedly, the statistical power of LOTO improves and lies within acceptable ranges. An alternative solution is to increase the number of trials per participant. Figure 10C depicts the results when the number of participants is kept to 30 but the number of trials is increased by two-thirds (i.e., 267 instead of 160 trials). The improvement in statistical power is comparable to that resulting from the increased sample size. Accordingly, one would conclude from these analyses that investigating the neural basis of trial-by-trial variability in parameters κ and b of the HD-LBA model might require testing more than 30 participants or testing more than 160 trials per participant, whatever is more suitable in a given context (e.g., adding trials might be difficult for event-related fMRI designs that require long inter-trial intervals but less problematic for EEG designs).

Power analysis of LOTO for detecting brain–parameter correlations.

(A) Histogram of the p-values from tests of the significant relationships between κ and b and brain signals F(κ) (left panel) and F(b) (right panel). (B) The same as in panel A but for 50 instead of 30 participants per simulated experiment. (C) The same as in panel A but for 267 instead of 160 trials per participant.

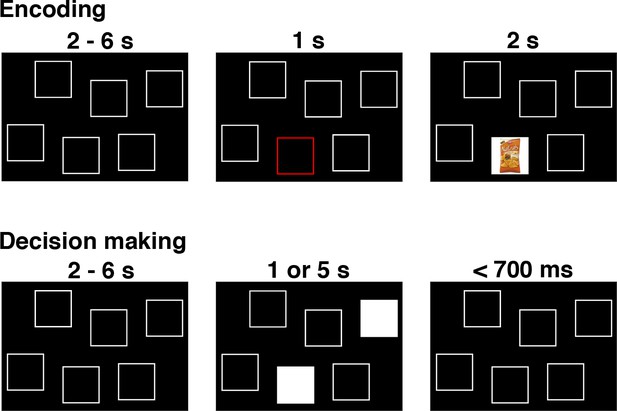

XI. An example of using LOTO for model-based fMRI

In this section, we apply LOTO to a real neuroimaging dataset to further illustrate the method’s use and usability in model-based cognitive neuroscience. The dataset is taken from a previously published fMRI study (Gluth et al., 2015), in which participants were asked to make preferential choices from memory. More specifically, participants first encoded associations between food snacks and screen positions and then decided between pairs of food snacks while seeing only the snacks’ screen positions (Figure 11A). Participants had either one or five seconds in which to retrieve the item–location associations and to deliberate upon the preferred snack option.

The remember-and-decide task (Gluth et al., 2015).

This figure shows one example trial from the encoding and decision-making phases of the task. During an encoding trial, participants learned the association between one out of six different snacks with a specific screen position. During a decision-making trial, two screen positions were highlighted, and participants had to retrieve the two associated snacks from memory in order to choose their preferred option. Here, we apply LOTO to a decision-making model in order to infer memory-related activity during encoding. For a more detailed illustration of the study design, see Figure 1 in Gluth et al. (2015).

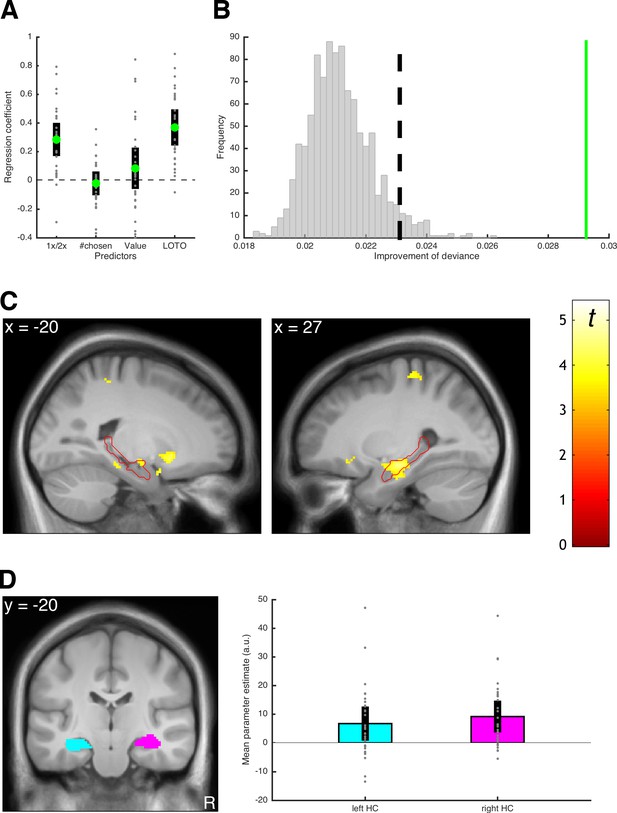

Choice behavior was modeled using an unbounded sequential sampling model that predicted more accurate decisions (i.e., decisions that are more consistent with evaluations of the food snacks in a separate task) with more deliberation time (details on the model and the fMRI analyses are provided in 'Materials and methods'; see also Figure 3 in Gluth et al. (2015)). Importantly, the model estimates the average memory performance of a participant, that is, the probability of accurately remembering a snack when making decisions. In the original study (Gluth et al., 2015), we employed our previously proposed GR2017 method for estimating trial-by-trial variability in order to infer the likelihood of successful memory retrieval for each snack given the choice behavior (intuitively, choosing unattractive snacks is attributed to poor memory performance). Here, we repeat this analysis but use LOTO to make the inference. Notably, LOTO takes advantage of the fact that each snack is a choice option in multiple (i.e., three) choice trials (see 'Results: Section II'). Ultimately, LOTO’s item-specific estimate of memory performance is applied to the fMRI analysis of the encoding phase. In other words, a model-based analysis of the well-established difference in subsequent memory effect (Paller and Wagner, 2002) is conducted with the prediction that variations in memory performance should be positively correlated with activation of the hippocampus (as has been found in our original publication).

Before using LOTO to inform the fMRI analysis, we checked whether the variability estimates could be linked to other behavioral indicators of memory performance and whether the assumption of systematic variability in memory could be justified. To address the first question, we conducted a logistic regression testing whether the LOTO-based estimate predicted correct responses in the cued recall phase (i.e., a phase following the decision-making phase in which participants were asked to recall each snack; see 'Materials and methods' and Gluth et al., 2015). As additional independent variables, this regression analysis also included whether the snack was encoded once or twice, how often a snack was chosen, and the subjective value of the snack. As can be seen in Figure 12A, the LOTO-based estimate of memory explained variance in cued recall performance over and above the other predictor variables (t(29) = 7.44, p < .001, d = 1.36, BF10 > 1,000). To address the question of systematic variability, we followed the procedures described in 'Results: Section V' and generated 1,000 datasets under the null hypothesis of no variability in memory performance. We then compared the LOTO-based improvement in model fit in these datasets with the improvement in the real data. Indeed, the improvement in the real data was much larger than in any of the datasets generated under the null hypothesis (Figure 12B). Furthermore, we checked whether potential variability in parameter σ, which represents the standard deviation of the drift rate (i.e., noise in the decision process), could have been misattributed to the memory-related parameters. (It seems less plausible to assume that variability in the decision process, in contrast to variability in memory, is item-specific. Nevertheless, we included this control analysis for instructional purposes.) Thereto, we repeated the test for the presence of systematic variability with simulated data that included different levels of true variability in σ (for details, see 'Materials and methods'). As can be seen in Figure 12—figure supplement 1, LOTO does not appear to misattribute variability in σ to the memory-related parameters.

Results of the example LOTO application.

(A) Average standardized regression coefficients for predicting memory performance in cued recall (1x/2x refers to how often an item was encoded). (B) Testing for systematic variability in memory; the green line shows the average trial-wise improvement of fit for the real dataset. The vertical dashed line represents the significance threshold based on the simulated data under the null hypothesis of no variability (gray histogram). (C) fMRI signals linked to the LOTO-based estimate of item-specific memory; the red outline depicts the anatomical mask of the hippocampus. (D) ROI analysis with anatomical masks of left and right hippocampus (HC) and average fMRI signals in these ROIs (the analyses in panels C and D are analogous to those in Figure 5 of Gluth et al., 2015). Individual values (gray dots) are shown together with 95% confidence intervals of the mean (black error bars).

Having established that LOTO has the potential to identify meaningful neural correlates of item-specific memory performance, we turned to the fMRI analysis. Here, we employed a frequent approach to model-based fMRI (O'Doherty et al., 2007), which is to add the predictions of a cognitive model as a so-called parametric modulator (PM) of the hemodynamic response during a time window of interest. In our case, this time window is the period during which snack food items are presented during encoding, with the LOTO-based variability estimates serving as the PM (in order to test for a model-based subsequent memory effect). As can be seen in Figure 12C, this analysis revealed fMRI signals in bilateral hippocampus (MNI coordinates of peak voxel in left hippocampus: x = −18, y = −10, z = −12; right hippocampus: 38,–12, −26) that surpassed the statistical threshold corrected for multiple comparisons (left: Z = 3.73; p = .033; right: Z = 4.46, p = .002). These effects are in line with those reported in the original publication, which were based on the GR2017 method and also showed a significant relationship between item-specific memory strength and hippocampal activation. (Note that, as in the original publication, we ruled out alternative explanations for these results by including cued recall performance and subject values of snacks as additional PMs in the analysis; see 'Materials and methods'.)

To illustrate a second analysis approach, we also conducted a region-of-interest (ROI) analysis by extracting the average fMRI signal from anatomically defined masks of the left and right hippocampi (Figure 12D). The average signals in these ROIs were significantly related to the LOTO-based PM (left: t(29) = 2.93, p = .007, d = 0.53, BF10 = 6.42; right: t(29) = 4.36, p < .001, d = 0.80, BF10 = 182.52). Taken together, these findings demonstrate that we were able to reproduce the analysis of a model-based subsequent memory effect reported by Gluth et al. (2015) using LOTO.

Discussion

In this work, we introduce LOTO, a simple yet efficient technique to capture trial-by-trial measures of parameters of cognitive models so that these measures can be related to neural or physiological data. The core principle is to infer single-trial values from the difference in the parameter estimates when fitting a model with all trials vs. a model with one trial being left out. The application of LOTO is simple: first, the full set of parameters of a model is estimated for all n trials; then, the parameters of interest are estimated again n times with each trial being left out once, while the remaining parameters are fixed to their all-trial estimates. In addition to its simplicity, LOTO is also comparatively fast: if fitting a model once to all trials takes u time units, then LOTO’s runtime will be u × n at most (in fact, it can be expected to be even faster because not all parameters are estimated again). And in contrast to the GR2017 approach, the LOTO runtime will not increase (much) if variability in more than one parameter is estimated. Compared to related methods, LOTO appears to provide mostly comparable or even superior results. Finally, it is a very general approach for capturing variability in parameters. In principle, it can be applied to any cognitive model, although we stress the importance of testing the feasibility of LOTO for each model and study design anew.

The LOTO recipe

In this section, we provide a ‘LOTO recipe’ that summarizes the critical steps, tests and checks that one should follow when seeking to apply the method. We link each point to the relevant section(s) in the 'Results'. Thus, despite our recommendation that readers with limited statistical and mathematical knowledge should first consult this recipe, it is indispensable for interested cognitive neuroscientists to read the relevant sections before trying to apply LOTO to their own data. Notably, Steps 1 and 2 of this recipe are to be performed before conducting the experiment. Therefore, LOTO is not suitable for post-hoc analyses of data that have already been acquired with different study goals in mind. Also note that all of the computer codes associated with this article and with LOTO are freely available on the Open Science Framework (https://osf.io/du85f/).

Specify the cognitive model and the parameters for which trial-by-trial variability should be estimated; similarly, specify the design of the study (number of trials and participants, experimental manipulations, neuroscientific method).

What type of observations does the model explain? If it explains only choices, try to extend the model to allow the prediction of RT ('Results: Section IV').

How are the experimental manipulations or conditions related to the model (i.e., which manipulation is predicted to selectively influence a given parameter)? If the model has to be fit to each condition separately, try to adjust the model so that its predictions change directly as a function of the condition ('Results: Section III').

Should variability be captured in each trial or across multiple trials (e.g., small blocks of trials)? If simulations of LOTO (see Step 2 below) do not yield acceptable results, consider pooling multiple trials into small blocks and capturing parameter variability from block to block ('Results: Section II').

Does the neuroscientific method allow the measurement of signals that can be related to trial-by-trial variability? Block-designs for fMRI and ERPs for EEG are less suitable than event-related designs for fMRI and time-frequency analyses for EEG.

Test the feasibility of the LOTO approach for the chosen model and study design.

Test whether LOTO can (in principle) provide sensible estimates of the true trial-specific values of the targeted model parameter(s). Specification of the first derivative of the log-likelihood and the Fisher information (as described in 'Results: Sections II and III') is desirable but often cumbersome or even impossible. Instead, we suggest running simulations ('Results: Sections II–IV').

Check whether LOTO might misattribute random noise by conducting the non-parametric test for the presence of systematic variability ('Results: Section V'). Similarly, check whether LOTO might misattribute variability in other parameters to the parameters of interest by conducting the regression analyses outlined in 'Results: Section VI'.

Estimate the statistical power and if applicable the specificity of LOTO given the model and study design ('Results: Section X'). This might require running a pilot study to obtain a rough estimate of the amount of trial-by-trial variability in model parameters that can be expected (alternatively, existing datasets could be inspected for this purpose).

For experiments with many trials per participant (i.e.,≥1,000), check whether the default tolerance criterion of the parameter estimation method is sufficient or whether there are discrete ‘jumps’ in the LOTO estimates. If necessary, choose a stricter criterion ('Results: Section VII').

Always use the actually planned number of trials, number of participants and manipulations when doing simulations. Always include the observations that are used to estimate parameters (e.g., choices and RT) when testing the potential of LOTO to recover parameter variability. Applying LOTO is meaningless when the method does not yield any additional information over and above that provided by the observations themselves ('Results: Section II').

If the simulations (see Steps 2a,b) do not yield acceptable results, consider increasing the number of participants or the number of trials per participant ('Results: Section X'), or consider adapting the model or experimental paradigm ('Results: Sections II–IV').