Competition between parallel sensorimotor learning systems

Peer review process

This article was accepted for publication as part of eLife's original publishing model.

History

- Version of Record published

- Accepted Manuscript published

- Accepted

- Preprint posted

- Received

Decision letter

- Kunlin Wei, Reviewing Editor; Peking University, China

- Michael J Frank, Senior Editor; Brown University, United States

- Kunlin Wei, Reviewer; Peking University, China

- Timothy J Carroll, Reviewer; The University of Queensland, Australia

In the interests of transparency, eLife publishes the most substantive revision requests and the accompanying author responses.

[Editors’ note: after the initial submission, the editors requested that the authors prepare an action plan. This action plan was to detail how the reviewer concerns could be addressed in a revised submission. What follows is the initial editorial request for an action plan.]

Thank you for sending your article entitled "Competition between parallel sensorimotor learning systems" for peer review at eLife. Your article is being evaluated by 3 peer reviewers, and the evaluation is being overseen by a Reviewing Editor and Michael Frank as the Senior Editor. The reviewers have opted to remain anonymous.

Given the list of essential revisions, including new experiments, the editors and reviewers invite you to respond as soon as you can with an action plan for the completion of the additional work. We expect a revision plan that under normal circumstances can be accomplished within two months, although we understand that in reality revisions will take longer at the moment. We plan to share your responses with the reviewers and then advise further with a formal decision. After full discussion, all reviewers agree that this work if accepted would have a significant impact on the field. However, the reviewers raised a list of major concerns over the proposed model and its data handling. In particular, a lack of strong alternative models, a possible lack of explanatory power for outstanding findings in the field, and a strong reliance on modeling assumptions make it hard to accept the paper/model as it currently stands. We hope the authors can adequately address the following major concerns. [Editors’ note, the major concerns referenced here are detailed later in this decision narrative].

[Editors’ note: the authors provided an initial action plan in response to reviewer comments. Following the initial action plan, the authors were provided additional reviewer comments. These concerns were addressed in a second action plan. Additional reviewer comments were provided after the second action plan. During the composition of a third action plan, a decision was made to reject the manuscript.]

[Editors’ note: the authors submitted for reconsideration following the decision after peer review. What follows is the decision letter after the first round of review.]

Thank you for resubmitting your work entitled "Competition between parallel sensorimotor learning systems" for consideration by eLife. Your article has been reviewed by 3 peer reviewers, one of whom is a member of our Board of Reviewing Editors, and the evaluation has been overseen by Michael J Frank as the Senior Editor. The reviewers have opted to remain anonymous.

All the reviewers concluded that the study is not ready for publication in eLife. The primary concern is that the direct evidence supporting the competition model is weak or simply lacking. In the previous manuscript, the only solid, quantitative evidence is the negative correlation between explicit and implicit learning (Figure 3H). But as we mentioned in the last decision letter, this negative correlation can also be obtained by the alternative (and mainstream) model based on both target error and sensory prediction error. You provided a piece of new but essential evidence to differentiate these two models in the revision plan. Still, this new result (i.e., the negative correlation between total learning and implicit learning) is at odds with multiple published datasets. We find this will continue to be a problem even if we provide you more time to revise the paper or to collect more data as listed in the revision plan. The submission has been under discussion for a prolonged period as the reviewers hoped to see solid evidence for the conceptual step-up that you presented in the manuscript. However, we regret that the study is not ready for publication as it currently stands.

[Editors’ note: the authors appealed the editorial decision. The appeal was granted and the authors were invited to submit a revised manuscript and response to reviewer comments. What follows are the reviewer comments provided after the initial submission of the manuscript.]

Concerns:

1. There is no clear testing of alternative models. After Figures 1 and 2, the paper proceeds with the competition model only. The independent model will never be able to explain differences in implicit learning for a given visuomotor rotation (VMR) size, so it is not a credible alternative to the competition model in its current form. It seems possible that extensions of that model based on the generalization of implicit learning (Day et al. 2016, McDougle et al. 2017) or task-dependent differences in error sensitivity could explain most of the prominent findings reported here. We hope the authors can show more model comparison results to support their model.

2. The measurement of implicit learning is compromised by not asking participants to stop aiming upon entering the washout period. This happened at three places in the study, once in Experiment 2, once in the re-used data by Fernandez-Ruiz et al., 2011, and once in the re-used data by Mazzoni and Krakauer 2006 (no-instruction group). We would like to know how the conclusion is impacted by the ill-estimated implicit learning.

3. All the new experiments limit preparation time (PT) to eliminate explicit learning. In fact, the results are based on the assumption that shortened PT leads to implicit-only learning (e.g., Figure 4). Is the manipulation effective, as claimed? This paradigm needs to be validated using independent measures, e.g., by direct measurement of implicit and/or explicit strategy.

4. Related to point 3, it is noted that the aiming report produced a different implicit learning estimate than a direct probe in Exp1 (Figure S5E). However, Figure 3 only reported Exp1 data with the probe estimate. What would the data look like with report-estimated implicit learning? Will it show the same negative correlation results with good model matches (Figure 3H)?

5. Figure 3H is one of the rare pieces of direct evidence to support the model, but we find the estimated slope parameter is based on a B value of 0.35, which is unusually high. How is it obtained? Why is it larger than most previous studies have suggested? In fact, this value is even larger than the learning rate estimated in Exp 3 (Figure 6C). Is it related to the small sample size (see below)?

6. The direct evidence supporting a competition of implicit and explicit learning is still weak. Most data presented here are about the steady-state learning amplitude; the authors suggest that steady-state implicit learning is proportional to the size of the rotation (Equation 5). But this is directly contradictory to the experimental finding that implicit learning saturates quickly with rotation size (Morehead et al., 2017; Kim et al., 2018). This prominent finding has been dismissed by the proposed model. On the other hand, one classical finding for conventional VMR is that explicit learning would gradually decrease after its initial spike, while implicit learning would gradually increase. The competition model appears unable to account for this stereotypical interaction. How can we account for this simple pattern with the new model or its possible extensions?

7. General comments about the data:

Experiments 3 and 4 (Figures 6 and 7) do not contribute to the theorization of competition between implicit and explicit learning. The authors appear to use them to show that implicit learning properties (error sensitivity) can be modified, but then this conclusion critically depends on the assumption that the limit-PT paradigm produces implicit-only learning, which is yet to be validated.

Figure 4 is not a piece of supporting evidence for the competition model since those obtained parameters are based on assumptions that the competition model holds and that the limit-PT condition "only" has implicit learning.

8. Concerns about data handling:

All the experiments have a limited number of participants. This is problematic for Exp 1 and 2, which have correlation analysis. Note Fig 3H contains a critical, quantitative prediction of the model, but it is based on a correlation analysis of n=9. This is an unacceptably small sample. Experiment 3 only had 10 participants, but its savings result (argued as purely implicit learning-driven) is novel and the first of its kind.

Experiment 2: This experiment tried to decouple implicit and explicit learning, but it is still problematic. If the authors believe that the amount of implicit adaptation follows a state-space model, then the measure of early implicit is correlated to the amount of late implicit because Implicit_late = (ai)^N * Implicit_early where N is the number of trials between early and late. Therefore the two measures are not properly decoupled. To decouple them, the authors should use two separate ways of measuring implicit and explicit. To measure explicit, they could use aim report (Taylor, Krakauer and Ivry 2011), and to measure implicit independently, they could use the after-effect by asking the participants to stop aiming as done here. They actually have done so in experiment 1 but did not use these values.

Figure 6: To evaluate the savings effect across conditions and experiments, the interaction effect of an ANOVA should be tested.

Figure 8: The hypothesis that they did use an explicit strategy could explain why the difference between the two groups rapidly vanishes. Also, it is unclear whether the data from Taylor and Ivry (2011) are in favor of one of the models as the separate and shared error models are not compared.
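The Experiment 2 concern above can be illustrated with a minimal sketch. It assumes a retention-only state-space update between the early and late probes and a single retention factor shared by all participants; `a_i`, `n_trials`, and the simulated "early" values are hypothetical and are not taken from the manuscript.

```python
import numpy as np


def implicit_decay(x_early, a_i, n_trials):
    """Apply the retention-only update x <- a_i * x for n_trials trials."""
    x = np.asarray(x_early, dtype=float)
    for _ in range(n_trials):
        x = a_i * x
    return x


# Hypothetical values chosen only for illustration.
a_i = 0.98        # assumed implicit retention factor
n_trials = 20     # assumed number of trials between early and late probes

rng = np.random.default_rng(0)
early = rng.normal(20.0, 5.0, size=17)   # simulated early implicit measures (deg)

late = implicit_decay(early, a_i, n_trials)
closed_form = a_i ** n_trials * early    # Implicit_late = (a_i)^N * Implicit_early

# With a shared retention factor, late is an exact rescaling of early,
# so the two measures are perfectly correlated by construction.
r = np.corrcoef(early, late)[0, 1]
print(np.allclose(late, closed_form), round(r, 6))
```

Under these assumptions the late measure carries no information beyond the early one. Participant-specific retention factors or measurement noise would weaken the correlation but would not remove the coupling.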

Reviewer #1:

The present study by Albert and colleagues investigates how implicit learning and explicit learning interact during motor adaptation. This topic has been under heated debate in the sensorimotor adaptation area in recent years, spurring diverse behavioral findings that warrant a unified computational model. The study aims to fulfill this goal. It proposes that both implicit and explicit adaptation processes are based on a common error source (i.e., target error). This competition leads to different behavioral patterns in diverse task paradigms.

I find this study a timely and essential work for the field. It makes two novel contributions. First, when dissecting the contribution of explicit and implicit learning, the current study highlights the importance of distinguishing apparent learning size and covert changes in learning parameters. For example, the overt size of implicit learning could decrease while the related learning parameters (e.g., implicit error sensitivity) remain the same or even increase. Many researchers have long overlooked this dissociation. Second, the current study also emphasizes the role of target error in typical perturbation paradigms. This is an excellent wake-up call since the predominant view now is that sensory prediction error is for implicit learning and target error for explicit learning.

Given that the paper aims to use a unified model to explain different phenomena, it mixes results from previous work and four new experiments. The paper's presentation can be improved by reducing the use of jargon and making straightforward claims about what the new experiments produce and what the previous studies produce. I will give a list of things to work on later (minor concerns).

Furthermore, my major concern is whether the properties of the implicit learning process (e.g., error sensitivity) can be shown to be subject to change without making model assumptions.

My major concern is whether we can provide concrete evidence that the implicit learning properties (error sensitivity and retention) can be modified. Even though the authors claim that the error sensitivity of implicit learning can be changed, and the change subsequently leads to savings and interference (e.g., Figures 6 and 7), I find that the evidence is still indirect, contingent on model assumptions. Here is a list of results that concern error sensitivity changes.

1. Figures 1 and 2: Instruction is assumed to leave implicit learning parameters unchanged.

2. Figure 4: It appears that the implicit error sensitivity increases during relearning. However, Fig4D cannot be taken as supporting evidence. How the model is constructed (both implicit and explicit learning are based on target error) and what assumptions are made (Low RT = implicit learning, High RT = explicit + implicit) determine that implicit learning's error sensitivity must increase. In other words, the change in error sensitivity resulted from model assumptions; whether implicit error sensitivity by itself changes cannot be independently verified by the data.

3. Figure 6: With limited PT, savings still exists with an increased implicit error sensitivity. Again, this result also relies on the assumption that limited PT leads to implicit-only learning. Only with this assumption, the error sensitivity can be calculated as such.

4. Figure 7: with limited PT anterograde interference persists, appearing to show a reduced implicit error sensitivity. Again, this is based on the assumption that the limited PT condition leads to implicit-only learning.

Across all the manipulations, I still cannot find an independent piece of evidence that task manipulations can modify learning parameters of implicit learning without resorting to model assumptions. I would like to know the authors' take on this critical issue. Is it related to how error sensitivity is defined?

Reviewer #2:

The paper provides a thorough computational test of the idea that implicit adaptation to rotation of visual feedback of movement is driven by the same errors that lead to explicit adaptation (or strategic re-aiming movement direction), and that these implicit and explicit adaptive processes are therefore in competition with one another. The results are incompatible with previous suggestions that explicit adaptation is driven by task errors (i.e. discrepancy between cursor direction and target direction), and implicit adaptation is driven by sensory prediction errors (i.e. discrepancy between cursor direction and intended movement direction). The paper begins by describing these alternative ideas via state-space models of trial by trial adaptation, and then tests the models by fitting them both to published data and to new data collected to test model predictions.

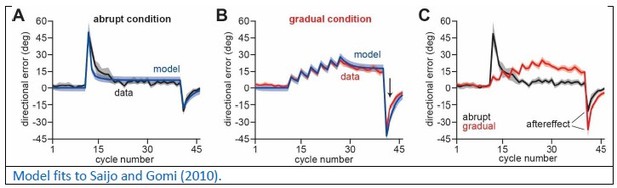

The competitive model accounts for the balance of implicit-explicit adaptations observed previously when participants were instructed how to counter the visual rotation to augment explicit learning across multiple visual rotation sizes (20, 40 and 60 degrees; Neville and Cressman, 2018). It also fits previous data in which the rotation was introduced gradually to augment implicit learning (Saijo and Gomi, 2010). Conversely, a model based on independent adaptation to target errors and sensory prediction errors could not reproduce the previous results. The competitive model also accounts for individual participant differences in implicit-explicit adaptation. Previous work showed that people who increased their movement preparation time when faced with rotated feedback had smaller implicit reach aftereffects, suggesting that greater explicit adaptation led to smaller implicit learning (Fernandez-Ruiz et al., 2011). Here the authors replicated this general effect, but also measured both implicit and explicit learning under experimental manipulation of preparation time. Their model predicted the observed inverse relationship between implicit and explicit adaptation across participants.

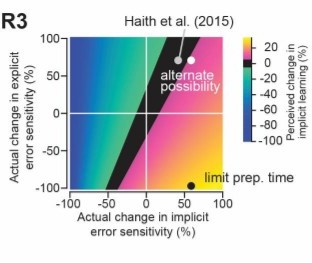

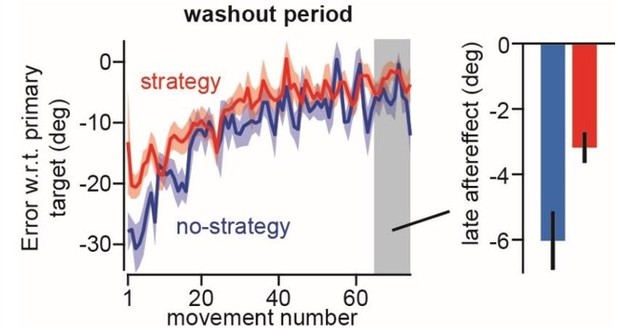

The authors then turned their attention to the issue of persistent sensorimotor memory – both in terms of savings (or benefit from previous exposure to a given visual rotation) and interference (or impaired performance due to exposure to an opposite visual rotation). This is a topic that has a long history of controversy, and there are conflicting reports about whether or not savings and interference rely predominantly on implicit or explicit learning. The competition model was able to account for some of these unresolved issues, by revealing the potential for a paradoxical situation in which the error sensitivity of an implicit learning process could increase without observable increase in implicit learning rate (or even a reduction in implicit learning rate). The authors showed that this paradox was likely at play in a previous paper that concluded that saving is entirely explicit (Haith et al., 2015), and then ran a new experiment with reduced preparation time to confirm some recent reports that implicit learning can result in savings. They used a similar approach to show that long-lasting interference can be induced for implicit learning.

Finally, the authors considered data that has long been cited to provide evidence that implicit adaptation is obligatory and driven by sensory prediction error (Mazzoni and Krakauer, 2006). In this previous paper, participants were instructed to aim to a secondary target when the visual feedback was rotated so that the cursor error towards the primary target would immediately be cancelled. Surprisingly, the participants' reach directions drifted even further away from the primary target over time – presumably in an attempt to correct the discrepancy between their intended movement direction and the observed movement direction. However, a competition model involving simultaneous adaptation to target errors from the primary target and opposing target errors from the secondary target offers an alternative explanation. The current authors show that such a model can capture the implicit "drift" in reach direction, suggesting that two competing target errors rather than a sensory prediction error can account for the data. This conclusion is consistent with more recent work by Taylor and Ivry (2011), which showed that reach directions did not drift in the absence of a second target, when participants were coached to immediately cancel rotation errors in advance. The reason for small implicit aftereffect reported by Taylor and Ivry (2011) is open for interpretation. The authors of the current paper suggest that adaptation to sensory prediction errors could underlie the effect, but an alternative possibility is that there is an adaptive mechanism based on reinforcement of successful action.
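The ambiguity in the two-target design can be made concrete with a minimal sketch of the error definitions used in this summary. The sign conventions, the function names, and the 45 degree values are assumptions chosen for illustration and are not taken from the manuscript.

```python
def cursor_direction(hand_deg, rotation_deg):
    """Cursor direction equals hand direction plus the imposed rotation."""
    return hand_deg + rotation_deg


def target_error(target_deg, cursor_deg):
    """Discrepancy between a target direction and the cursor direction."""
    return target_deg - cursor_deg


def sensory_prediction_error(aim_deg, cursor_deg):
    """Discrepancy between the intended (aimed) direction and the cursor direction."""
    return aim_deg - cursor_deg


# Hypothetical first instructed trial: the participant aims at a secondary
# target that exactly offsets the rotation.
primary_target = 0.0
aim_target = 45.0     # assumed location of the secondary (aiming) target
rotation = -45.0      # assumed rotation of the cursor feedback

hand = aim_target     # no implicit adaptation yet
cursor = cursor_direction(hand, rotation)

print(target_error(primary_target, cursor))          # error to the primary target
print(target_error(aim_target, cursor))              # error to the secondary target
print(sensory_prediction_error(aim_target, cursor))  # sensory prediction error
```

In this sketch the error relative to the secondary target and the sensory prediction error take the same value on the first instructed trial, so a drift in reach direction on its own does not identify which of the two signals drives it.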

Overall, this paper provides important new insights into sensorimotor learning. Although there are some conceptual issues that require further tightening – including around the issue of error sensitivity versus observed adaptation, and the issue of whether or not there is evidence that sensory prediction errors drive visuomotor rotation – I think that the paper will be highly influential in the field of motor control.

Line 19: I think it is possible that more than 2 adaptive processes contribute to sensorimotor adaptation – perhaps add "at least".

Lines 24-27: This section does not refer to specific context or evidence and is consequently obtuse to me.

Line 52: Again – perhaps add "at least".

Line 68: I am not really sure what you are getting at by "implicit learning properties" here. Can you clarify?

Line 203: I think the model assumption that there was zero explicit aiming during washout is highly questionable here. The Morehead study showed early washout errors of less than 10 degrees, whereas errors were ~ 20 degrees in the abrupt and ~35 degrees in the gradual group for Saijo and Gomi. I think it highly likely that participants re-aimed in the opposite direction during washout in this study – what effect would this have on the model conclusions?

Line 382: It would be helpful to explicitly define what you mean by learning, adaptation, error sensitivity, true increases and true decreases in this discussion – the terminology and usage are currently imprecise. For example, it seems to me that according to the zones defined "truth" refers to error sensitivity, and "perception" refers to observed behaviour (or modelled adaptation state), but this is not explicitly explained in the text. This raises an interesting philosophical question – is it the error sensitivity or the final adaptation state associated with any given process that "truly" reflects the learning, savings or interference experienced by that process? Some consideration of this issue would enhance the paper in my opinion.

Figure 5 label: Descriptions for C and D appear inadvertently reversed – C shows effect of enhancing explicit learning and D shows effect of suppressing explicit learning.

Line 438: The method of comparing learning rate needs justification (i.e. comparing from 0 error in both cases so that there is no confound due to the retention factor).

Line 539: I am not sure if I agree that the implicit aftereffect in the no target group need reflect error-based adaptation to sensory prediction error. It could result from a form of associative learning – where a particular reach direction is associated with successful target acquisition for each target. The presence of an adaptive response to SPE does not fit with any of the other simulations in the paper, so it seems odd to insist that it remains here. Can you fit a dual error model to the Taylor and Ivry (single-target) data? I suspect it would not work, as the SPE should cause non-zero drift (i.e. shift the reach direction away from the primary target).

Line 556: Be precise here – do you really mean SPE? It seems as though you only provided quantitative evidence of a competition between errors when there were 2 physical targets.

Line 606: Is it necessarily the explicit response that is cached? I think of this more as the development of an association between action and reward – irrespective of what adaptive process resulted in task success. It would be nice to know what happened to aftereffects after blocks 1 and 2 in study 3. An associative effect might be switched on and off more easily – so if a mechanism of that kind were at play, I would predict a reduced aftereffect as in Huberdeau et al.

Line 720: Ref 12 IS the Mazzoni and Krakauer study, ref 11 involves multiple physical targets, and ref 21 is the Taylor and Ivry paper considered above and in the next point. None of these papers therefore require an explanation based on SPE. However, ref #17 appears to provide a more compelling contradiction to the notion that target errors drive all forms of adaptation – as people adapt to correct a rotation even when there is never a target error (because the target jumps to match the cursor direction). A possible explanation that does not involve SPE might be that people retain a memory of the initial target location and detect a "target error" with respect to that location.

Line 737: I don't agree with this – the observation of an after-effect with only 1 physical target but instructed re-aiming so that there was 0 target error, certainly implies some other process besides a visual target error as a driver of implicit learning. However, as argued above, such a process need not be driven by SPE. Model-free processes could be at play…

Line 791: Some more detail on how timing accuracy was assured in the remote experiments is needed. The mouse sample rate can be approximately 250 Hz, but my experience with it is that there can be occasional long delays between samples on some systems. The delay between commands to print to the screen and the physical appearance on the screen can also be long and variable – depending on the software and hardware involved.

Line 861: How did you specify or vary the retention parameters to create these error sensitivity maps?

Line 865: Should not the subscripts here be "e" and "I" rather than "f" and "s"?

Line 1009: Why were data sometimes extracted using a drawing package (presumably by eye? – please provide further details), and sometimes using GRABIT in Matlab?

Line 1073: More detail about the timing accuracy and kinematics of movement are required.

Line 1148: Again – should not the subscripts here be e and I rather than f and s?

Reviewer #3:

In this paper, Albert and colleagues explore the role of target error and sensory prediction error on motor adaptation. They suggest that both implicit and explicit adaptation would be driven by target error. In addition, implicit adaptation is also influenced by sensory prediction error.

While I appreciate the effort that the authors have made to come up with a theory that could account for many studies, there is not a single figure/result that does not suffer from major limitations, as I highlight in the major comments below. Overall, the main limitations are:

I believe that the authors neglect some very relevant papers that contradict their theory and model.

– They did not take into account some results on the topic such as Day et al. 2016 and McDougle et al. 2017. It is true that they acknowledge it and discuss it but I am not convinced by their arguments (more on this topic in a major comment about the discussion below).

– They did not take into account the fact that there is no proof that limiting RT is a good way to suppress the explicit component of adaptation. When the number of targets is limited, these explicit responses can then be cached without any RT cost. McDougle and Taylor, Nat Com (2019) demonstrated this for 2 targets (here the authors used only 4 targets). No other papers prove that limiting RT suppresses the explicit strategy as claimed by the authors. To do so, one needs to limit PT and to measure the explicit or the implicit component. The authors should prove that this assumption holds because it is instrumental to the whole paper. The authors acknowledge this limitation in their discussion but dismiss it quite rapidly. This manipulation is so instrumental to the paper that it needs to be proven, not argued (more on this topic in a major comment about the discussion below).

– They did not take into account the fact that the after-effect is a measure of implicit adaptation if and only if the participants are told to abandon any explicit strategy before entering the washout period (as done in Taylor, Krakauer and Ivry, 2011). This has important consequences for their interpretation of older studies, because participants in those studies were never asked to stop aiming before entering the washout period, as the authors did in experiment 2.

There are also major problems with statistics/design:

– I disagree with the authors that 10-20 people per group (in their justification of the sample size in the second document) is standard for motor adaptation experiments. It is standard for their own laboratories but not for the field anymore. They did not even reach N=10 for some of the reported experiments (one group in experiment 1). Furthermore, this sample size is far too low to obtain reliable estimates of correlations. Small N correlations cannot be trusted: https://garstats.wordpress.com/2018/06/01/smallncorr/

– Justification of sample size is missing. What were the criteria to stop data collection? Why is the number of participants per group so variable (N=9 and N=13 for experiment 1, N=17 for experiment 2, N=10 for experiment 3, N=10 ? per group for experiment 4)? Optional stopping is problematic when linked to data peeking (Armitage, McPherson and Rowe, Journal of the Royal Statistical Society. Series A (General), 1969). It is unclear how optional stopping influences the outcome of the statistical tests of this paper.

– The authors should follow the best practices and add the individual data to all their bar graphs (Rousselet et al. EJN 2016).

– Missing interactions (Nieuwenhuis et al., Nature Neuro 2011), misinterpretation of non-significant p-values (Altman 1995 https://www.bmj.com/content/311/7003/485)
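To illustrate the small-sample concern raised in the list above, here is a minimal sketch of an approximate 95% confidence interval for a Pearson correlation based on the standard Fisher z-transform. The correlation value of -0.7 is hypothetical and is not taken from the manuscript; the function name is ours.

```python
import math


def pearson_r_ci(r, n, z_crit=1.959963984540054):
    """Approximate confidence interval for a Pearson correlation (Fisher z)."""
    if n <= 3:
        raise ValueError("Fisher z interval requires n > 3")
    z = math.atanh(r)                 # Fisher z-transform of r
    se = 1.0 / math.sqrt(n - 3)       # approximate standard error of z
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


# Same hypothetical correlation, two sample sizes.
lo9, hi9 = pearson_r_ci(-0.7, 9)
lo50, hi50 = pearson_r_ci(-0.7, 50)

print(f"n=9:  [{lo9:.2f}, {hi9:.2f}]")
print(f"n=50: [{lo50:.2f}, {hi50:.2f}]")
```

With n=9 the interval spans most of the negative range, so neither the existence of a strong relationship nor a quantitative slope prediction is well constrained by a sample of that size.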

Given these main limitations, I don't think that the authors have a convincing case in favor of their model and I don't think that any of these results actually support the error competition model.

Detailed major comments per equation/figure/section:

Equation 2: the authors note that the sensory prediction error is "anchored to the aim location" (lines 104-105), which is exactly what Day et al. 2016 and McDougle et al. 2017 demonstrated. Yet, they did not fully take into account the implication of this statement. If it is so, it means that the optimal location to determine the extent of implicit motor adaptation is the aim location and not the target. Indeed, if the SPE is measured with respect to the aim location and is linked to the implicit system, it means that implicit adaptation will be maximum at the aim location and, because of local generalization, the amount of implicit adaptation will decay gradually when one wants to measure this system away from that optimal location (Figure 7 of Day et al). This means that, the further the aiming direction is from the target, the smaller the amount of implicit adaptation measured at the target location will be. This will result in an artificial negative correlation between the explicit and implicit system without having to invoke a common error source.
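The generalization argument above can be sketched as follows. The sketch assumes a Gaussian generalization function centered on the aim location, with a fixed peak and width; the shape, `peak_deg`, `sigma_deg`, and the range of explicit strategies are hypothetical and are not taken from Day et al. or from the manuscript.

```python
import numpy as np


def implicit_at_target(aim_deg, peak_deg=20.0, sigma_deg=30.0):
    """Implicit adaptation measured at the target when its peak sits at the aim.

    Assumes a Gaussian fall-off with angular distance from the aim location.
    """
    aim = np.asarray(aim_deg, dtype=float)
    return peak_deg * np.exp(-aim**2 / (2.0 * sigma_deg**2))


rng = np.random.default_rng(1)
explicit = rng.uniform(0.0, 40.0, size=200)   # simulated aiming angles (deg)

# Peak implicit adaptation is identical for everyone; only the probe location
# relative to the peak differs across simulated participants.
implicit = implicit_at_target(explicit)

r = np.corrcoef(explicit, implicit)[0, 1]
print(round(r, 3))
```

In this sketch every simulated participant has the same peak implicit adaptation and there is no shared error signal, yet the implicit measure taken at the target still correlates negatively with the explicit strategy.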

Equation 3: the authors do not take into account results from their lab (Marko et al., Herzfeld) and from others (Wei and Kording) that show that the sensitivity to error depends on the size of the rotation.

Equation 5: In this equation, the authors suggest that the steady-state amount of implicit adaptation is directly proportional to the size of the rotation. Thanks to a paradigm similar to Mazzoni and Krakauer 2006, the team of Rich Ivry has demonstrated that the implicit response saturates very quickly with perturbation size (Kim et al., communications Biology, 2018, see their Figure 1).
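The proportionality at issue can be illustrated with a generic single-error state-space model. This is a sketch under stated assumptions and not a reproduction of the manuscript's Equation 5, which is not shown in this letter: implicit learning is driven only by the residual target error, the explicit strategy is held fixed, and `a_i` and `b_i` are hypothetical retention and error-sensitivity values.

```python
def simulate_implicit(rotation, explicit, a_i=0.98, b_i=0.2, n_trials=500):
    """Iterate x_i <- a_i * x_i + b_i * (rotation - explicit - x_i)."""
    x_i = 0.0
    for _ in range(n_trials):
        error = rotation - explicit - x_i   # residual target error
        x_i = a_i * x_i + b_i * error
    return x_i


def steady_state_implicit(rotation, explicit, a_i=0.98, b_i=0.2):
    """Fixed point of the update above: b_i / (1 - a_i + b_i) * (rotation - explicit)."""
    return b_i / (1.0 - a_i + b_i) * (rotation - explicit)


for rotation in (15.0, 30.0, 60.0):
    sim = simulate_implicit(rotation, explicit=0.0)
    closed = steady_state_implicit(rotation, explicit=0.0)
    print(rotation, round(sim, 3), round(closed, 3))
```

In this sketch the steady state scales linearly with the rotation when the explicit strategy is fixed, and the slope of implicit against explicit learning is -b_i / (1 - a_i + b_i). A model of this form has no built-in ceiling, so reproducing a saturating implicit response would require either an explicit strategy that grows with rotation size or an error sensitivity that falls with error size.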

Data from Cressman, Figure 1: Following Day et al., one should expect that, when the explicit strategy is larger (instruction group in Cressman et al. compared to the no-instruction group), the authors are measuring the amount of implicit adaptation further from aiming direction where it is maximum. As a result, the amount of implicit adaptation appears smaller in the instruction group simply because they were aiming more than the no-instruction group (Figure 1G).

– Figure 1H: the absence of increase in implicit adaptation is not due to competition as claimed by the authors but is due to the saturation of the implicit response with increasing rotation size (Kim et al. 2018). It saturates at around 20° for all rotations larger than 6°.

Data from Saijo and Gomi: Here the authors interpret the after-effect as a measure of implicit adaptation but it is not as the participants were not told that the perturbation would be switched off.

– Even if these after-effects did represent implicit adaptation, these results could be explained by Kim et al. 2018 and Day et al. 2016. For a 60° rotation, the implicit component of adaptation will saturate around 15-20° (like for any large perturbation). The explicit component has to compensate for that but it does a better job in the abrupt condition than in the gradual condition where some target error remains. Given that the aiming direction is larger in the abrupt case than in the gradual case, the amount of implicit adaptation is again measured further away from its optimal location in the abrupt case than in the gradual case (Day et al. 2016 and McDougle et al. 2017).

– There is no proof that introducing a perturbation gradually suppresses explicit learning (line 191). The authors did not provide a citation for that and I don't think there is one. Awareness and explicit learning are often confounded (this also applies to line 212). Rather, given that the implicit system saturates at around 20° for large rotations, I would expect that re-aiming accounts for 20° of the ~40° of adaptation in the gradual condition.

Lines 205-215: The chosen parameters appear very subjective. The authors should perform a sensitivity analysis to demonstrate that their conclusions do not depend on these specific parameters.

Figure 3: The after-effect in the study of Fernandez-Ruiz et al. does not solely represent the implicit adaptation component as these researchers did not tell their participants that they should stop aiming during the washout.

– Correlations based on N=9 should not be considered as meaningful. Such correlation is subject to the statistical significance fallacy: it can only be true if it is significant with N=9 while this represents a fallacy (Button et al. 2013).

Experiment 1 of the authors suffers from several limitations:

– small sample size (N=9 for one group). Why is the sample size different between the two groups?

– Limiting RT does not abolish the explicit component of adaptation (Line 1027: where is the evidence that limiting PT is effective in abolishing explicit re-aiming?). Haith and colleagues limited reaction time on a small subset of trials. If the authors want to use this manipulation to limit re-aiming, they should first demonstrate that this manipulation is effective in doing so (other authors have used this manipulation but have failed to validate it first). I wonder why the authors did not measure the implicit component for their limit PT group like they did in the No PT limit group. That is required to validate their manipulation.

– The negative correlation from Figure 3H can be explained by the fact that the SPE is anchored at the aiming direction and that, the larger the aiming direction is, the further the authors are measuring implicit adaptation away from its optimal location.

– The difference between the PT limit and NO PT limit is not very convincing. First, the difference is barely significant (line 268). Why did the authors use the last 10 epochs for experiment 1 and the last 15 for experiment 2? This looks like a post-hoc decision to me and the authors should motivate their choice and should demonstrate that their results hold for different choices of epochs (last 5, 10, 15 and 20) to demonstrate the robustness of their results. Second, the degrees of freedom of the t-test (line 268) do not match the number of participants (9 vs. 13 but t(30)?)

– Why did the authors measure the explicit strategy via report (Figure S5E) while they don't use those values for the correlations? This looks like a post-hoc decision to me.

Experiment 2:

– This experiment is based on a small sample size to test for correlations (N=17). What was the objective criterion used to stop data collection at N=17 and not at another sample size? This should be reported.

– This experiment does not decouple implicit and explicit in contrast to what the authors claim. If the authors believe that the amount of implicit adaptation follows a state-space model, then the measure of early implicit is correlated to the amount of late implicit because Implicit_late = (a_i)^N * Implicit_early where N is the number of trials between early and late. Therefore the two measures are not properly decoupled. To decouple them, the authors should use two separate ways of measuring implicit and explicit. To measure explicit, they could use aim report (Taylor, Krakauer and Ivry 2011) and to measure implicit independently, they could use the after-effect by asking the participants to stop aiming as done here. They actually have done so in experiment 1 but did not use these values.
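The coupling described in the last point can be sketched numerically. This is only an illustration of the relation Implicit_late = (a_i)^N * Implicit_early under pure retention (no error-driven update between the two measurements); the function name, the retention factor of 0.98, and the 20-trial gap are hypothetical values chosen for the example, not values from the manuscript.

```python
def implicit_after_retention(implicit_early, retention, n_trials):
    """Implicit state after n_trials of pure retention (no error-driven update)."""
    return (retention ** n_trials) * implicit_early


# Hypothetical early implicit measurements (degrees) for three participants.
early = [5.0, 10.0, 15.0]

# Hypothetical retention factor and number of intervening trials.
late = [implicit_after_retention(x, retention=0.98, n_trials=20) for x in early]

# Every participant's late/early ratio is the same constant, so the two
# measures are perfectly correlated by construction.
ratios = [l / e for l, e in zip(late, early)]
```

Under these assumptions the late measure is a fixed multiple of the early one, which is why the two cannot be treated as independent estimates.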

Figure 4:

– Data from the last panel of Figure 4B (line 321-333) should be analyzed with a 2x2 ANOVA and not by a t-test if the authors want to make the point that the learning differences were higher in high PT than low PT trials (Nieuwenhuis et al., Nature Neuroscience, 2011).

– Lines 329-330: the authors should demonstrate that the explicit strategy is actually suppressed by measuring it via report. It is possible that limiting PT reduces the explicit strategy but it might not suppress it. Therefore, any remaining amount of explicit strategy could be subject to savings.

– Coltman and Gribble (2019) demonstrated that fitting state-space models to individual subjects' data was highly unreliable and that a bootstrap procedure should be preferred. Lines 344-353: the authors should replace their t-tests with permutation tests in order to avoid fitting the model to individual subjects' data.

– It is unclear how the error sensitivity parameters analysed in Figure 4D were obtained. I can follow in the Results section but there is basically nothing on this in the methods. This needs to be expanded.

– The authors make the assumption that the amount of implicit adaptation is the same for the high PT target and for the low PT target. What is the evidence that this assumption is reasonable? Those two targets are far apart while implicit adaptation only generalizes locally. Furthermore, low PT target is visited 4 times less frequently than the high PT target. The authors should redo the experiment and should measure the implicit component for the high PT trials to make sure that it is related to the implicit component for the low PT trials.

– I don't understand why the authors illustrated the results from lines 344-349 in Figure 4D and not the results of the following lines, which are also plausible. By doing so, the authors biased the results in favor of their preferred hypothesis. This figure is by no means a proof that the competition hypothesis is true. It shows that "if" the competition hypothesis is true, then there are surprising results ahead. The authors should do a better job of explaining that the two models provide different interpretations of the data.
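The permutation test suggested above for lines 344-353 can be sketched as a generic label-shuffling test on a difference of means. This is a simplified illustration: it operates on one scalar per participant, whereas the suggestion above would recompute a group-level model fit for each shuffle. The function name, the number of permutations, and the seed are assumptions made for the example.

```python
import random


def permutation_test(group_a, group_b, n_perm=10000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)

    def mean(values):
        return sum(values) / len(values)

    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    count = 0
    for _ in range(n_perm):
        # Shuffle group labels by shuffling the pooled values in place.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        # Small tolerance guards against floating-point summation order.
        if diff >= observed - 1e-12:
            count += 1

    # Add-one correction keeps the estimated p-value strictly positive.
    return (count + 1) / (n_perm + 1)
```

The test makes no distributional assumption, which is its main appeal with small groups, but it still requires that observations be exchangeable under the null hypothesis.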

Figure 5: This figure also represents a biased view of the results (like Figure 4D). The competition hypothesis is presented in detail while the alternative hypothesis is missing. How would the competition map look with separate errors, especially when taking into account that the SPE (and implicit adaptation) is anchored at the aiming direction (generalization)?

Figure 6:

This figure is compatible with the fact that limiting PT on all trials is not efficient to suppress explicit adaptation, at least less so than doing it on 20% of the trials (see McDougle et al. 2019 for why this is the case) and that the remaining explicit adaptation leads to savings.

– I don't understand why the authors did not measure implicit (and therefore explicit adaptation) directly in this experiment like they did in experiment 2. This would have given the authors a direct readout of the implicit component of adaptation and would have validated the fact that limiting PT might be a good way to suppress explicit adaptation. Again, this proof is missing in the paper.

– Experiment 3 is based on a very limited number of participants (N=10!). No individual data are presented.

– Data from Figure 6C should be analyzed with an ANOVA and an interaction between the factor experiment (Haith vs. experiment 3) and block (1 vs. 2) should be demonstrated to support such conclusion (Nieuwenhuis et al. 2011).

Figure 7:

The data contained in this figure suffer from the same limitations as in the previous graphs: limiting PT does not exclude explicit strategy, sample size is small (N=10 per group), no direct measure of implicit or explicit is provided.

– In addition, no statistical tests are provided beyond the two stars on the graph. The data should be analyzed with an ANOVA (Nieuwenhuis et al. 2011).

– The number of participants per group is never provided (N=20 for both groups together).

– It is unclear to me how this result contributes to the dissociation between the separate and shared error models.

Figure 8:

The whole explanation here seems post-hoc because neither of the two models actually accounts for these data. The authors had to adapt the model to account for these data. Note that despite that, the model would fail to explain the data from Kim et al. 2018, which represent a very similar task manipulation.

– Line 463-467: the authors claim equivalence based on a non-significant p-value (Altman 1995). Given the small effect size, they don’t have any power to detect effects of small or medium size. They cannot conclude that there is no difference. They can only conclude that they don’t have enough power to detect a difference. As a result, it does NOT suggest that implicit adaptation was unaltered by the changes in explicit strategy.

– In Mazzoni and Krakauer, the aiming direction was neither controlled nor measured. As a result, given the appearance of a target error with training, it is possible that the participants aimed in the direction opposite to the target error in order to reduce it. This would have reduced the apparent increase in implicit adaptation. The authors argue against this possibility based on the 47.8° change in hand angle due to the instruction to stop aiming. I remain unconvinced by this argument as I would like to get more info about this change (Mean, SD, individual data). Furthermore, it is unclear what the actual instructions were. Asking to stop aiming or asking to bring one’s invisible hand on the primary target will have different effects on the change in hand angle.

– The data during the washout period suffer from the fact that the participants from the no-strategy group were not told to stop aiming. The hypothesis that they did use an explicit strategy could explain why the difference between the two groups rapidly vanishes. In other words, if the authors want to use this experiment to support any of their hypotheses, they should redo it properly by telling the participants to stop using any explicit strategy at the start of the washout period to make sure that the after-effect is devoid of any explicit strategy.

– It is unclear whether the data from Taylor and Ivry (2011) are in favor of one of the models as the separate and shared error models are not compared.

Discussion: it is important and positive that the authors discuss the limitation of their approach but I feel that they dismiss potential limitations rather quickly even though these are critical for their conclusions. They need to provide new data to prove those points rather than arguments.

On limiting PT (lines 605-615):

The authors used three different arguments to support the fact that limiting PT suppresses explicit strategy.

– Their first argument is that Haith did not observe savings in low PT trials. This is true but Haith only used low PT trials (with a change in target) on 20% of the trials. Restricting RT together with a switch in target location is probably instrumental in making the caching of the response harder. This is very different in the experiments done by the authors. In addition, one could argue that Haith et al. did not find evidence for savings but that these authors had limited power to detect a small or medium effect size (their N=12 per group). I agree that savings in low PT trials is smaller than in high PT trials but is savings completely absent in low PT trials? Figure 6H shows that learning is slightly better on block 2 compared to block 1. N=12 is clearly insufficient to detect such a small difference.

– Their second argument is that they used four different targets. McDougle et al. demonstrated caching of explicit strategy without RT costs for two targets and impossibility to do so for 12 targets. The authors could use the experimental design of McDougle if they wanted to prove that caching explicit strategies is impossible for four targets. I don't see why you could cache strategies without RT cost for 2 targets but not for 4 targets. This argument is not convincing.

– The last argument of the authors is that they imposed even shorter latencies (200ms) than Haith (300ms). Yet, if one can cache explicit strategies without reaction time cost, it does not matter whether a limit of 200 or 300ms is imposed as there is no RT cost.

On Generalization (line 678-702).

How much does the amount of implicit adaptation decay with increasing aiming direction? Above, I argued that the data from Day et al. would predict a negative correlation between the explicit and the implicit components of adaptation and a decrease in implicit adaptation with increasing rotation size. The authors clearly disagree on the basis of four arguments. None of them convinced me.

– First, they estimate this decrease to be only 5° based on these two papers (FigS5A and B but 5C shows ~10°). This seems to be a very conservative estimate as Day et al. reported a 10° reduction in after-effects for 40° of aiming direction (see their Figure 7). An explicit component of 40° was measured by Neville and Cressman for a 60° rotation. The 10° reduction based on a 40° explicit strategy fits perfectly with data from Figure 1G (black bars) and Figure 2F. Of course, the experimental conditions will influence this generalization effect but this should push the authors to investigate this possibility rather than to dismiss it because the values do not precisely match. How close should it match to be accepted?

– Second, it is unclear how this generalization changes with the number of targets (argument on lines 687-689). This has never been studied and cannot be used as an argument based on further assumptions. Furthermore, I am not sure that the generalization would be so different for 2 or 4 targets.

– Third, the authors measured the explicit strategy in experiment 1 via report in a very different way than what is usually used by the authors as the participants do not have to make use of them. It seems to be suboptimal as the authors did not use them for their correlation on Figure 3H and the difference reported in Figure S5E is tiny (no stats are provided) but is based on a very limited number of participants with no individual data to be seen. If it is suboptimal for Figure 3H why is it sufficient as an argument?

– Fourth, when interpreting the data of Neville and Cressman (line 690-692), the authors mention that there were no differences between the three targets even though two of them corresponded to aim directions for other targets. As far as I can tell, the absence of difference in implicit adaptation across the three targets is not mentioned in the paper by Neville and Cressman as they collapsed the data across the three targets for their statistical analyses throughout the paper. In addition, I don't understand why we should expect a difference between the three targets. If the SPE and the implicit process are anchored to the aiming direction and given that the aiming direction is different for the three targets, I would not expect that the aiming direction of a visible target would be influenced by the fact that, for some participants, this aiming direction corresponds to the location of an invisible target.

– Finally, the authors argue here about the size of the influence of the generalization effect on the amount of implicit adaptation. They never challenge the fact that the anchoring of implicit adaptation on the aiming direction and the presence of a generalization effect (independently of its size) lead to a negative correlation between the implicit and explicit components of adaptation (their Figure 3) without any need for the competition model.
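The generalization argument made in the points above can be illustrated with a minimal sketch. It assumes that implicit adaptation peaks at the aiming direction and falls off as a Gaussian function of the angular distance between aim and target. The Gaussian form, the width of 30°, the peak of 20°, and the function name are all assumptions made for illustration, not values fitted to any data set discussed here.

```python
import math


def implicit_at_target(implicit_at_aim, aim_angle_deg, sigma_deg=30.0):
    """Implicit adaptation measured at the target when learning is centered
    on the aiming direction and generalizes with a Gaussian falloff."""
    falloff = math.exp(-(aim_angle_deg ** 2) / (2.0 * sigma_deg ** 2))
    return implicit_at_aim * falloff


# Hypothetical explicit strategies (aiming angles, in degrees).
aim_angles = [0.0, 10.0, 20.0, 40.0]

# Same underlying implicit adaptation at the aim location for every case.
measured = [implicit_at_target(20.0, aim) for aim in aim_angles]
```

Even though the implicit state at the aim location is identical in every case, the value measured at the target shrinks as the strategy grows, which on its own produces a negative relationship between explicit strategy and measured implicit adaptation.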

Final recommendations

– The authors should perform an unbiased analysis of a model that includes separate error sources, the generalization effect and a saturation of implicit adaptation with increasing rotation size. In my opinion, such a model would account for almost all of the presented results.

– They should redo all the experiments based on limited preparation time and should include direct measures of implicit and/or explicit strategies (for validation purposes). This would require larger group sizes.

– They should replicate the experiments where they need a measure of after-effect devoid of any explicit strategies as this has only become standard recently (experiment for Figure 2 and Figure 8). Note that for Figure 8, they might want to measure the explicit aim during the adaptation period as well.

[Editors’ note: further revisions were suggested prior to acceptance, as described below.]

Thank you for resubmitting your work entitled "Competition between parallel sensorimotor learning systems" for further consideration by eLife. Your revised article has been evaluated by Michael Frank (Senior Editor) and a Reviewing Editor.

The manuscript has been improved but there are some remaining issues that need to be addressed, as outlined below:

One review raised the concern about Experiment 1, which presented perturbations with increasing rotation size and elicited larger implicit learning than the abrupt perturbation condition. However, this design confounded the condition/trial order and the perturbation size. The other concern is the newly added non-monotonicity data set from Tsay's study. On the one hand, the current paper states that the proposed model might not apply to error clamp learning; on the other hand, this part of the results was "predicted" by the model squarely. Thus, can it count as evidence that the proposed model is parsimonious for all visuomotor rotation paradigms? This message must be clearly stated with a special reference to error-clamp learning.

Please find the two reviewers' comments and recommendations below, and I hope this will be helpful for revising the paper.

Reviewer #1:

The present study investigates how implicit and explicit learning interacts during sensorimotor adaptation, especially the role of performance or target error during this interaction. It is timely for the area that needs a more mechanistic model to explain diverse findings accumulated in recent years. The revision has addressed previous major concerns and provided extra data that supports the idea that implicit and explicit processes compete for a common target error for a large proportion of studies in the area. The paper is thoughtfully organized and convincingly presented (though a bit too long), including a variety of data sets.

As an appeal submission, the current work has successfully addressed previous major concerns:

1) Direct evidence for supporting the competition model is lacking.

The revision added new evidence that total learning is negatively correlated with implicit learning but positively correlated with explicit learning (Figure 4G and H). This part of the data argues against the alternative SPE model and provides direct support to the competition model. The added appendix 3 also includes other studies' data sets to strengthen the support.

Furthermore, Experiment 1 is new, with an incremental perturbation to decrease rotation awareness, and thus provides new support for the model. This also helps to address the previous concern that the data from Saijo and Gomi is not clean enough due to a lack of stop-aiming instruction during the measurement of implicit learning. The other not-so-clean data set from Fernandez-Ruiz is removed from the main text in the revision. Taken together, these new data sets and analysis results constitute a rich set of direct support that addresses the biggest concern in the previous submission.

2) Lack of testing alternative models.

The revision tested an alternative idea that explicit learning compensates for the variation in implicit learning instead of the other way around as in their competition model (Figure 4). It also tested an alternative model based on the generalization of implicit learning anchored on the aiming direction (Figure 5). The evidence is clear: both alternative models failed to capture the data.

3) The limited preparation time appears not to exclude explicit learning.

This concern was raised by all previous reviewers. The reviewers also pointed out a possible indicator of explicit learning, i.e., a small spurious drop in reaching angle at the beginning of the no-aiming washout phase. The revision provided a new experiment to show that the small drop is most likely due to a time-dependent decay of implicit learning during the 30s instruction period. This new data set (Exp 3) is indeed convincing and important. I am impressed with the authors' thoughtful effort to explain this subtle and tricky issue.

In sum, I believe the revision did an excellent job of addressing all previous major concerns with more data, more thorough analysis, and more model comparisons. Here I suggest a few minor changes for this revision:

Line 59: the cited papers (17-21) did not all suggest savings resulted from changes of the implicit learning rate, as implied by the context here.

Some references are not in the right format: ref1 states "Journal of Neuroscience," while refs2 and 4 state "The Journal of neuroscience : the official journal of the Society for Neuroscience." Possibly caused by downloads from Google Scholar. Please keep it consistent.

Line 66: replace the semicolon with a comma. Also, is citation 9 about showing a dominant role of SPE?

Figure 1H: it appears the no-aiming trials were at the end of each stepwise period, but in the Methods, it is stated that these trials appeared twice (at the middle and the end). Which version is true?

L1331: why use bootstrapping here? Why not simply fit the model to individual subjects (given n = 37 here)? Interestingly, when comparing 60-degree stepwise vs. 60-degree abrupt, a direct fit to individuals was used… In the Results, it is stated that a single parameter can parsimoniously "predict" the data. But in the Methods, it is clear that all data were used to "fit" the parameter p_i. Can we call this a prediction, strictly? Is it possible to fit a partial data set to show the same results? Not through bootstrapping but through using, say, two stepwise phases? This type of prediction has been done for Exp2, but not here.

Line 195: the authors ruled out the potential bias caused by the interdependence between implicit and explicit learning as one was obtained from subtracting the other from the total learning. By rearranging the model (Equation4) to have the implicit learning as a function of total learning (as opposed to explicit learning), and by showing the model prediction would not change after this re-arrangement, the authors tried to argue that the modeling results are not free from the inter-dependence of two types of learning. I think that this argument aims for the wrong target. The real concern is that implicit and explicit learning are not independently measured, which is true no matter how the model equation is arranged. The prediction of the rearranged model, of course, would provide the same model prediction since this does not change the model at all, given the inter-dependence of variables. All the data in Figure 1 have this problem. It is a data problem, not a model problem. And, the data refute the alternative, independent model, that is. There is no need to re-arrange the model without solving the problem in data.

The authors added the data set from Tsay et al. to Part 1, which shows a non-monotonic change of implicit learning with increasing rotation. However, this is only one version of their data set obtained by the online platform; the other version of the same experiment conducted in person shows a different picture with constant implicit learning over different rotations. Any particular reason to report one version instead of the other? The readers are owed an explanation here.

Line 203: dual model -> competition model

Line 463: "that the x-axis in Figures 5A-C slightly overestimates explicit strategy", the axis overestimates…

Line 496: this paragraph starts with a why question (why the competition model works better), but it does not address the question but shows it works better.

Figure 6C: not clear what the right panel is without axis labeling and a detailed description in the caption.

Line 563: the family dinner story is interesting and naughty, but our readers might not need it, especially when the paper is already long. I suggest removing it and starting this section with the burning question of why the implicit error sensitivity changes without the changes of implicit learning size.

Line 589: what are Timepoints 1 and 2? They are never mentioned before or shown in the figure. The capital T is also confusing.

Figure 7C: it would make much better sense to plot total learning in C along with the implicit and explicit learning.

L595: the data presented before in Figure 2 are explained in this map illustration. Enhancing or suppressing explicit learning has been conceptualized as moving along the y-axis without changing the implicit error sensitivity. Retrospectively, this is also the case when the model is fitted to these data by assuming a constant implicit learning rate in previous figures. Is there any evidence to support this assumption for model fitting, or can we safely claim varying implicit learning rates could not account for the data better than otherwise?

L685: we observe implicit learning is suppressed as a result of anterograde interference. Without preparation time constraints, the impairment in implicit learning is less. Is there any way to compare their respective implicit error sensitivities? This would give us some information about how explicit learning compensates.

Figure 10G: the conceptual framework is speculative. It is fine to discuss the possible neurophysiological underpinnings in the Discussion as it currently stands. But we are better off removing it from the Results.

Reviewer #4:

In this paper, Albert et al. test a novel model where explicit and implicit motor adaptation processes share an error signal. Under this error sharing scheme, the two systems compete – that is, the more that explicit learning contributes to behavior, the less that implicit adaptation does. The authors attempt to demonstrate this effect over a variety of new experiments and a comprehensive re-analysis of older experiments. They contend that the popular model of SPEs exclusively driving implicit adaptation (and implicit/explicit independence) does not account for these results. Once target error sensitivity is included into the model, the resulting competition process allows the model to fit a variety of seemingly disparate results. Overall, the competition model is argued to be the correct model of explicit/implicit interactions during visuomotor learning.
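The contrast between the two error schemes summarized above can be made concrete with a small simulation. This is a sketch under stated assumptions, not the manuscript's implementation: it uses a single-state implicit learner with a retention factor `a_i` and an error sensitivity `b_i`, holds the explicit strategy constant, and picks hypothetical parameter values. With a shared target error, the steady state is b_i / (1 - a_i + b_i) times (rotation minus explicit strategy); with a separate error that ignores strategy, the steady state does not depend on strategy at all.

```python
def simulate_implicit(rotation, explicit, a_i=0.98, b_i=0.2,
                      shared_error=True, n_trials=500):
    """Iterate a single-state implicit learner until it has converged.

    shared_error=True:  error = rotation - implicit - explicit (target error).
    shared_error=False: error = rotation - implicit (strategy is ignored).
    """
    x_i = 0.0
    for _ in range(n_trials):
        if shared_error:
            error = rotation - x_i - explicit
        else:
            error = rotation - x_i
        x_i = a_i * x_i + b_i * error
    return x_i


def steady_state_gain(a_i=0.98, b_i=0.2):
    """Closed-form gain of the fixed point of the update rule above."""
    return b_i / (1.0 - a_i + b_i)


rotation = 30.0  # hypothetical rotation size in degrees

# Shared error: a larger strategy leaves less error for the implicit system.
small_strategy = simulate_implicit(rotation, explicit=10.0)
large_strategy = simulate_implicit(rotation, explicit=20.0)

# Separate error: implicit learning is unaffected by the strategy.
independent_small = simulate_implicit(rotation, 10.0, shared_error=False)
independent_large = simulate_implicit(rotation, 20.0, shared_error=False)
```

Holding the strategy fixed is a simplification; it isolates the steady-state prediction that distinguishes the two schemes without modeling how explicit learning itself evolves.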

I'm of two minds on this paper. On the positive side, this paper has compelling ideas, a laudable breadth and amount of data/analyses, and several strong results (mainly the reduced-PT results). It is important for the motor learning field to start developing a synthesis of the 'Library of Babel' of the adaptation literature, as is attempted here and elsewhere (e.g., D. Wolpert lab's 'COIN' model). On the negative side, the empirical support feels a bit like a patch-work – some experiments have clear flaws (e.g., Exp 1, see below), others are considered in a vacuum that dismisses previous work (e.g., nonmonotonicity effect), and many leftover mysteries are treated in the Discussion section rather than dealt with in targeted experiments. While some of the responses to the previous reviewers are effective (e.g., showing that reduced PT can block strategies), others are not (e.g., all of Exp 1, squaring certain key findings with other published conflicting results, the treatment of generalization). The overall effect is somewhat muddy – a genuinely interesting idea worth pursuing, but unclear if the burden of proof is met.

(1) The stepwise condition in Exp 1 is critically flawed. Rotation size is confounded with time. It is unclear – and unlikely – that implicit learning has reached an asymptote so quickly. Thus, the scaling effect is at best significantly confounded, and at worst nearly completely confounded. I think that this flaw also injects uncertainty into further analyses that use this experiment (e.g., Figure 5).

(2) It could be argued that the direct between-condition comparison of the 60° blocks in Exp 1 rescues the flaw mentioned above, in that the number of completed trials is matched. However, plan- or movement-based generalization (Gonzalez Castro et al., 2011) artifacts, which would boost adaptation at the target for the stepwise condition relative to the abrupt one, are one way (perhaps among others) to close some of that gap. With reasonable assumptions about the range of implicit learning rates that the authors themselves make, and an implicit generalization function similar to previous papers that isolate implicit adaptation (e.g., σ around ~30°), a similar gap could probably be produced by a movement- or plan-based generalization model. [I note here that Day et al., 2016 is not, in my view, a usable data set for extracting a generalization function, see point 4 below.]

(3) The nonmonotonicity result requires disregarding the results of Morehead et al., 2017, and essentially, according to the rebuttal, an entire method (invariant clamp). The authors do mention and discuss that paper and that method, which is welcome. However, the authors claim that "We are not sure that the implicit properties observed in an invariant error context apply to the conditions we consider in our manuscript." This is curious and raises several crucial parsimony issues, for instance: the results from the Tsay study are fully consistent with Morehead 2017. First, attenuated implicit adaptation to small rotations (not clamps; Morehead Figure 5) could be attributed to error cancellation (assuming aiming has become negligible to nonexistent, or never happened in the first place). This (and/or something like the independence model) thus may explain the attenuated 15° result in Figure 1N. Second, and more importantly, the drop-off of several degrees of adaptation from 30°/60° to 90° in Figure 1N is eerily similar to that seen in Morehead '17. Here's what we're left with: An odd coincidence whereby another method predicts essentially these exact results but is simultaneously (vaguely) not applicable. If the authors agree that invariant clamps do limit explicit learning contributions (see Tsay et al. 2020), it would seem that similar nonmonotonicity being present in both rotations and invariant error-clamps works directly against the competition model. Moreover, it could be argued that the weakened 90° adaptation in clamps (Morehead) explains why people aim more during 90° rotations. A clear reason, preferably with empirical support, for why various inconvenient results from the invariant clamp literature (see point 6 for another example) are either erroneous, or different enough in kind to essentially be dismissed, is needed.

(4) The treatment given to non-target based generalization (i.e., plan/aim) is useful and a nice revision w/r/t previous reviewer comments. However, there are remaining issues that muddy the waters. First, it should be noted that Day et al. did not counterbalance the rotation sign. This might seem like a nitpick, but it is well known that intrinsic biases will significantly contaminate VMR data, especially in e.g. single target studies. I would thus not rely on the Day generalization function as a reasonable point of comparison, especially using linear regression on what is a Gaussian/cosine-like function. It appears that the generalization explanation is somewhat less handicapped if more reasonable generalization parameterizations are considered. Admittedly, they would still likely produce, quantitatively, too slow of a drop-off relative to the competition model for explaining Exps 2 and 3. This is a quantitative difference, not a qualitative one. W/r/t point 1 above, the use of Exp 1 is an additional problematic aspect of the generalization comparison (Figure 5, lower panels). All in all, I think the authors do make a solid case that the generalization explanation is not a clear winner; but, if it is acknowledged that it can contribute to the negative correlation, and is parameterized without using Day et al. and linear assumptions, I'd expect the amount of effect left over to be smaller than depicted in the current paper. If the answer comes down to a few degrees of difference when it is known that different methods of measuring implicit learning produce differences well beyond that range (Maresch), this key result becomes less convincing. Indeed, the authors acknowledge in the Discussion a range of 22°-45° of implicit learning seen across studies.

(5) I may be missing something here, but is the error sensitivity finding reported in Figure 6 not circular? No savings is seen in the raw data itself, but if the proposed model is used, a sensitivity effect is recovered. This wasn't clear to me as presented.

(6) The attenuation (anti-savings) findings of Avraham et al. 2021 are not sufficiently explained by this model.

(7) Have the authors considered context and inference effects (Heald et al. 2020) as possible drivers of several of the presented results?

https://doi.org/10.7554/eLife.65361.sa1

Author response

[Editors’ note: The authors appealed the original decision. What follows is the authors’ response to the first round of review.]

Concerns:

1. There is no clear testing of alternative models. After Figures 1 and 2, the paper goes on with the competition model only. The independent model will never be able to explain differences in implicit learning for a given visuomotor rotation (VMR) size, so it is not a credible alternative to the competition model in its current form. It seems possible that extensions of that model based on the generalization of implicit learning (Day et al. 2016, McDougle et al. 2017) or task-dependent differences in error sensitivity could explain most of the prominent findings reported here. We hope the authors can show more model comparison results to support their model.

Two studies have measured generalization in rotation tasks with instructions to aim directly at the target (Day et al., 2016; McDougle et al., 2017) and have demonstrated that learning generalizes around one’s aiming direction, as opposed to the target direction. As the reviewers note, this generalization rule can contribute to the negative correlation we observed between explicit learning and exclusion-based implicit learning. To address this, in our revised manuscript we directly compare the competition model to an alternative SPE generalization model. Our new analysis is documented in Figure 4 in the revised manuscript. First, we empirically compare the relationship between implicit and explicit learning in our data to generalization curves measured in past studies. In Figures 4A-B we overlay data that we collected in Experiments 2 and 3 with generalization curves measured by Krakauer et al. (2000) and Day et al. (2016). In this way, we test whether generalization is consistent with the correlations between implicit and explicit learning that we measured in our experiments.

In Figures 4B,C, we show dimensioned (i.e., in degrees) relationships between implicit and explicit learning. In Figure 4A, we show a dimensionless implicit measure in which implicit learning is normalized to its “zero strategy” level. To estimate this value, we used the y-intercepts in the linear regressions labeled “data” in Figures 3G and Q. Most notably, the magenta line (Day 1T) shows the aim-based generalization curve measured by Day et al. (2016), where assays were used to tease apart implicit and explicit learning.

These new results demonstrate that implicit learning in Experiments 2 and 3 (black and brown points) declined about 300% more rapidly with increases in explicit strategy than predicted by generalization measured by Day et al. (2016). Two empirical considerations would also suggest that the discrepancy between our data and the Day et al. (2016) generalization curve is even larger than that observed in Figures 4A-B.

1. Day et al. (2016) used 1 adaptation target, whereas our tasks used 4 targets. Krakauer et al. (2000) demonstrated that the generalization curve widens with increases in target number (see Figure 4AC). Thus, the Day et al. (2016) study data likely overestimate the extent of generalization-based decay in implicit learning expected in our experimental conditions.

2. Our “explicit angle” in Figures 4A-B is calculated by subtracting exclusion-based implicit learning from total adaptation. Thus, under the plan-based generalization hypothesis, this measure would overestimate explicit strategy. A generalization-based correction would “contract” our data along the x-axis in Figures 4C-E, increasing the disconnect between our results and the generalization curves. To demonstrate this, we conducted a control analysis where we corrected our explicit measures according to the Day et al. generalization curve (see Appendix 4 in revised manuscript). Our results are shown in Figure . As predicted, correcting explicit measures yielded a poorer match between the data and the generalization hypothesis (see discrepancy below each inset).

These analyses show that the alternative hypothesis of plan-based generalization is inconsistent with our measured data, though it could make minor contributions to the relationships we report between implicit and explicit learning (analysis shown in Section 2.2, Lines 442-468 in the revised manuscript).

These analyses have relied on an empirical comparison between our data and past generalization studies. But we can also demonstrate mathematically that the implicit and explicit learning patterns we measured are inconsistent with the generalization hypothesis. First, recall that the competition equation states that steady-state implicit learning varies inversely with explicit strategy according to (Equation 4):

We can condense this equation by defining an implicit proportionality constant, p:
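A sketch of how this steady-state form can be obtained, assuming the implicit system follows a single-state update with retention factor $a_i$ and error sensitivity $b_i$, driven by the target error $r - x_i - x_e$. The update rule in the first line is an assumption made here for illustration; the condensed result matches the expression $x_i^{ss} = p_i(r - x_e^{ss})$ used later in this response, where the constant is written $p_i$.

```latex
\begin{align}
x_i(n+1) &= a_i\, x_i(n) + b_i\,\bigl(r - x_i(n) - x_e(n)\bigr) \\
x_i^{ss} &= a_i\, x_i^{ss} + b_i\,\bigl(r - x_i^{ss} - x_e^{ss}\bigr) \\
x_i^{ss} &= \frac{b_i}{1 - a_i + b_i}\,\bigl(r - x_e^{ss}\bigr) \\
p_i &\equiv \frac{b_i}{1 - a_i + b_i}
\quad\Longrightarrow\quad
x_i^{ss} = p_i\,\bigl(r - x_e^{ss}\bigr)
\end{align}
```

Written as $x_i^{ss} = p_i r - p_i x_e^{ss}$, the relationship between implicit learning and explicit strategy is linear with slope $-p_i$, which does not depend on the rotation size $r$.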

These new data provide a way to directly compare the competition model to an SPE generalization model. The key prediction, again, is to test how the relationship between implicit and explicit learning varies with the rotation’s magnitude. To do this, we calculated the implicit proportionality constant (p above) that best matched the measured implicit-explicit relationship, separately for the competition model and the SPE generalization model. We calculated this value in the 60° learning block alone, holding out all other rotation sizes. We then used this gain to predict the implicit-explicit relationship across the held-out rotation sizes. The competition model is shown in the solid black line in Figure 5D. The Day et al. (2016) generalization model is shown in the gray line. The total prediction error across the held-out 15°, 30°, and 45° rotation periods was two times larger in the SPE generalization model (Figure 4E, repeated-measures ANOVA, F(2,35)=38.7, p<0.001, $\eta_p^2$=0.689; post-hoc comparisons all p<0.001). Issues with the SPE generalization model were not caused by misestimating the generalization gain, m. We fit the SPE generalization model again, this time allowing both $p_i$ and m to vary to best capture behavior in the 60° period (Figure 4D, SPE gen. best B4). This optimized model generalized very poorly to the held-out data, yielding a prediction error three times larger than the competition model (Figure 4D, SPE gen. best B4).
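The hold-out procedure can be sketched as follows for the competition model alone. This is a minimal illustration under assumptions: the function names (`fit_gain`, `predict_implicit`, `mean_abs_error`) are hypothetical, the gain is estimated by least squares through the origin (the response does not state the fitting method), and every numeric value is synthetic rather than data from the experiments.

```python
def fit_gain(rotation, explicit, implicit):
    """Least-squares estimate of p in: implicit = p * (rotation - explicit)."""
    errors = [rotation - xe for xe in explicit]
    numerator = sum(e * xi for e, xi in zip(errors, implicit))
    denominator = sum(e * e for e in errors)
    return numerator / denominator


def predict_implicit(p, rotation, explicit):
    """Competition-model prediction of implicit learning at a given rotation."""
    return [p * (rotation - xe) for xe in explicit]


def mean_abs_error(predicted, observed):
    """Average absolute prediction error, in the same units as the inputs."""
    return sum(abs(a - b) for a, b in zip(predicted, observed)) / len(observed)


# Synthetic, noise-free "training" block at 60 degrees (hypothetical values,
# generated with a gain of 0.6 purely so the fit has a known answer).
train_explicit = [20.0, 30.0, 40.0]
train_implicit = [0.6 * (60.0 - xe) for xe in train_explicit]

# Fit the gain on the 60 degree block only.
p_hat = fit_gain(60.0, train_explicit, train_implicit)

# Apply the same gain, unchanged, to a held-out 30 degree block.
held_out_explicit = [5.0, 10.0, 15.0]
held_out_prediction = predict_implicit(p_hat, 30.0, held_out_explicit)
```

With real data, `mean_abs_error(held_out_prediction, observed_implicit)` would be computed for each held-out rotation size and compared across models.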

To understand why the competition model yielded superior predictions, we fit separate linear regressions to the implicit-explicit relationship measured during each rotation period. The regression slopes and 95% CIs are shown in Figure 4F (data). Remarkably, the measured implicit-explicit slope appeared to be constant across all rotation magnitudes in agreement with the competition theory (Figure 4H, competition). These measurements sharply contrasted with the SPE generalization model, which predicted that the regression slope would decline as the rotation magnitude decreased.

In summary, data in Experiment 1 were poorly described by an SPE learning model with aim-based generalization. While generalization may add to the correlations between implicit and explicit learning, its contribution is small relative to the competition theory. New analyses are described in Section 2.2 in the revised paper.

These analyses all considered how a negative relationship between implicit and explicit learning could emerge due to generalization (as opposed to competition). In our revised work, we consider an additional mechanism by which this inverse relationship could occur. Suppose that implicit learning is driven solely by SPEs as in earlier models. In this case, implicit learning should be immune to changes in explicit strategy. However, a participant who has a better implicit learning system will require less explicit re-aiming to reach a desired adaptation level. In other words, individuals with large SPE-driven learning may use less explicit strategy relative to those with less SPE-driven implicit learning. Like the competition model, this scenario would also yield a negative relationship between implicit and explicit learning, due to the way explicit strategies respond to variation in the implicit system. Put differently, the competition equation supposes that the implicit-explicit relationship arises because the implicit system responds to variability in explicit strategy, whereas an SPE learning model supposes that it arises because the explicit system responds to variability in implicit learning.

To model the latter possibility, suppose that the implicit system responds solely to an SPE. Total implicit learning is given by (Equation 5), and can be rewritten as $x_i^{ss} = p_i r$, where $p_i$ is a gain that depends on implicit learning properties ($a_i$ and $b_i$). Thus, implicit learning is centered on $p_i r$, but varies according to a normal distribution: $x_i^{ss} \sim N(p_i r, \sigma_i^2)$, where $\sigma_i$ represents the standard deviation in implicit learning. The target error that remains to drive explicit strategy is equal to the rotation minus implicit adaptation: $r - x_i^{ss}$. A negative relationship between implicit learning and explicit strategy will occur when explicit strategy responds in proportion to this error, $x_e^{ss} = p_e(r - x_i^{ss})$, where $p_e$ is the explicit system’s response gain.

Overall, this model predicts important pairwise relationships between implicit learning, explicit strategy, and total adaptation (equations provided on Lines 1577-1620 in the paper, and also in Figure 4). First, as noted, implicit learning and explicit adaptation will show a negative relationship. Second, increases in implicit learning will tend to increase total adaptation. Third, these increases in implicit learning will leave smaller errors to drive explicit learning, resulting in a negative relationship between explicit strategy and total learning. Figures 5A-C illustrate these pairwise relationships in our revised manuscript.
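The three pairwise predictions of the SPE learning model can be checked with a small simulation. This is a sketch under assumptions: the function names and all parameter values (rotation, gains, standard deviation, sample size) are hypothetical, and no measurement noise is added to explicit strategy, so the correlations come out at exactly ±1 and only their signs are meaningful.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def simulate_spe_model(n=500, r=30.0, p_i=0.5, sigma_i=3.0, p_e=0.8, seed=0):
    """SPE model: implicit varies across participants, explicit responds to it."""
    rng = random.Random(seed)
    # Implicit learning is drawn from N(p_i * r, sigma_i^2).
    implicit = [rng.gauss(p_i * r, sigma_i) for _ in range(n)]
    # Explicit strategy responds in proportion to the residual target error.
    explicit = [p_e * (r - xi) for xi in implicit]
    total = [xi + xe for xi, xe in zip(implicit, explicit)]
    return implicit, explicit, total


if __name__ == "__main__":
    implicit, explicit, total = simulate_spe_model()
    print("implicit vs explicit:", pearson(implicit, explicit))
    print("implicit vs total:   ", pearson(implicit, total))
    print("explicit vs total:   ", pearson(explicit, total))
```

In this sketch total adaptation equals $p_e r + (1 - p_e)\,x_i^{ss}$, so the positive implicit-total relationship requires an explicit gain $p_e < 1$, i.e., a strategy that does not fully cancel the residual error.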

How do these relationships compare to the competition equation, in which implicit learning is driven by target errors? Suppose that participants choose a strategy that scales with the rotation’s size according to $p_e r$ ($p_e$ is the explicit gain) but varies across participants (standard deviation, $\sigma_e$). Thus, $x_e^{ss}$ is given by a normal distribution $N(p_e r, \sigma_e^2)$. The target error which drives implicit learning is equal to the rotation minus explicit strategy. The competition theory proposes that the implicit system is driven in proportion to this error according to: $x_i^{ss} = p_i(r - x_e^{ss})$, where $p_i$ is an implicit learning gain that depends on implicit learning parameters $a_i$ and $b_i$ (see Equation 4). This model will produce a negative correlation between implicit learning and explicit strategy. People who use a larger strategy will exhibit greater total learning. Simultaneously, increases in strategy will leave smaller errors to drive implicit learning, resulting in a negative relationship between implicit learning and total learning. These 3 predictions are illustrated in Figures 5D-F in our revised manuscript; these relationships correspond to linear equations which are provided on Lines 1577-1620 in the paper, and also in Figure 5.
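The competition model's predictions can be simulated in the same way, with the direction of causation reversed. This is again a sketch under assumptions: function names and parameter values are hypothetical, and implicit learning is a noise-free function of explicit strategy, so only the signs of the correlations are informative.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def simulate_competition_model(n=500, r=30.0, p_e=0.5, sigma_e=3.0,
                               p_i=0.6, seed=0):
    """Competition model: explicit varies across participants, implicit responds."""
    rng = random.Random(seed)
    # Explicit strategy is drawn from N(p_e * r, sigma_e^2).
    explicit = [rng.gauss(p_e * r, sigma_e) for _ in range(n)]
    # Implicit learning is driven by the target error left after strategy.
    implicit = [p_i * (r - xe) for xe in explicit]
    total = [xi + xe for xi, xe in zip(implicit, explicit)]
    return implicit, explicit, total


if __name__ == "__main__":
    implicit, explicit, total = simulate_competition_model()
    print("implicit vs explicit:", pearson(implicit, explicit))
    print("explicit vs total:   ", pearson(explicit, total))
    print("implicit vs total:   ", pearson(implicit, total))
```

Here total adaptation equals $p_i r + (1 - p_i)\,x_e^{ss}$, so for $p_i < 1$ the explicit-total relationship is positive and the implicit-total relationship is negative, the opposite pattern to the SPE learning model.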

In sum, both an SPE learning model and a target error learning model could exhibit negative participant-level correlations between implicit learning and explicit learning (Figures 5A and 5D). However, they make opposing predictions concerning the relationships between total adaptation and each individual learning system. To test these predictions, we considered how total learning was related to implicit and explicit adaptation measured in the No PT Limit group in Experiment 3. These data are now shown in Figures 5G and H in our revised work.

Our observations closely agreed with the competition theory; increases in explicit strategy were associated with increases in total adaptation (Figure 5G, ρ=0.84, p<0.001), whereas increases in implicit learning were associated with decreases in total adaptation (Figure 5H, ρ=-0.70, p<0.001). We repeated similar analyses across additional data sets which also measured implicit learning via exclusion (i.e., no aiming) trials: (1) the 60° rotation condition (combined across gradual and abrupt groups) in Experiment 1, (2) the 60° rotation groups reported by Maresch et al. (2021), and (3) the 60° rotation group described by Tsay et al. (2021). We obtained the same result as in Experiment 3. Participants exhibited negative correlations between implicit learning and explicit strategy, positive correlations between explicit strategy and total adaptation, and negative correlations between implicit learning and total adaptation. These additional results are reported in Figure 5-Supplement 1 in the revised manuscript.

Thus, these additional studies also matched the competition model’s predictions, but rejected the SPE learning model on two counts: (1) the relationship between implicit learning and total adaptation was negative, not positive as predicted by the SPE model, and (2) the relationship between explicit learning and total adaptation was positive, not negative as predicted by the SPE model.