Competition between parallel sensorimotor learning systems

Abstract

Sensorimotor learning is supported by at least two parallel systems: a strategic process that benefits from explicit knowledge and an implicit process that adapts subconsciously. How do these systems interact? Does one system’s contributions suppress the other, or do they operate independently? Here, we illustrate that during reaching, implicit and explicit systems both learn from visual target errors. This shared error leads to competition such that an increase in the explicit system’s response siphons away resources that are needed for implicit adaptation, thus reducing its learning. As a result, steady-state implicit learning can vary across experimental conditions, due to changes in strategy. Furthermore, strategies can mask changes in implicit learning properties, such as its error sensitivity. These ideas, however, become more complex in conditions where subjects adapt using multiple visual landmarks, a situation which introduces learning from sensory prediction errors in addition to target errors. These two types of implicit errors can oppose each other, leading to another type of competition. Thus, during sensorimotor adaptation, implicit and explicit learning systems compete for a common resource: error.

Editor's evaluation

The interaction between implicit and explicit processes is central for motor learning. The present study builds upon diverse and sometimes seemingly conflicting data sets to propose a computational model, delineating a competing relationship between the explicit and implicit learning process during motor adaptation. The model provides a number of conceptual insights about the nature of error-based learning, not just for researchers in sensorimotor learning but also for those studying human learning in general.

https://doi.org/10.7554/eLife.65361.sa0Introduction

When our movements are perturbed, we become aware of our errors, and through our own strategy, or instructions from a coach, engage an explicit learning system to improve our outcome (Morehead et al., 2017; Mazzoni and Krakauer, 2006). This awareness, is not required to adapt; our brain also uses an implicit learning system that partially corrects behavior without our conscious awareness (Morehead et al., 2017; Mazzoni and Krakauer, 2006). How do these two systems interact during sensorimotor adaptation?

Suppose that both systems learn from the same error. In this case, when one system adapts, it will reduce the error that drives learning in the other system; thus, the two parallel systems will compete to ‘consume’ a common error. Alternatively, suppose the two systems learn from separate errors, and each produces an output to minimize its own error. In this case, when one system adapts to its error, it could change behavior in ways that paradoxically increase the other system’s error.

Current models suggest that adaptation is driven by two distinct error sources: a task error (Leow et al., 2020; Körding and Wolpert, 2004; Langsdorf et al., 2021), and a prediction error (Mazzoni and Krakauer, 2006; Tseng et al., 2007; Kawato, 1999). One leading theory suggests that the explicit system acts to decrease errors in task performance, while the implicit system acts to reduce errors in predicting sensory outcomes (Mazzoni and Krakauer, 2006; Taylor and Ivry, 2011; Wong and Shelhamer, 2012). In this model, strategies have no impact on implicit learning. A second theory suggests that task errors can drive learning in both systems (Leow et al., 2020; Kim et al., 2019; McDougle et al., 2015; Miyamoto et al., 2020). In this model, implicit and explicit systems will compete with one another.

Suppose implicit and explicit systems share at least one common error source. What will happen when experimental conditions enhance one’s explicit strategy? In this case, increases in explicit strategy will siphon away the error that the implicit system needs to adapt, thus reducing total implicit learning without directly changing implicit learning properties (e.g. its memory retention or sensitivity to error). This reduction in implicit learning creates the illusion that the implicit system was directly altered by the experimental manipulation, when in truth, it was only responding to changes in strategy.

Competitive interactions like this highlight the need to distinguish between an adaptive system’s learning properties such as its sensitivity to an error, and its learning timecourse, that is the contribution it makes to overall adaptation at any point in time. In a competitive system, an adaptive processes’ learning timecourse depends not only on its own learning properties, but also its competitors’ learning properties. In cases where implicit and explicit systems share an error source, one system’s behavior can be shaped not only by its past experience, but also by changes in the other system. Thus, competition may play an important role in savings (Haith et al., 2015; Coltman et al., 2019; Kojima et al., 2004; Medina et al., 2001; Mawase et al., 2014) and interference paradigms (Sing and Smith, 2010; Lerner et al., 2020; Caithness et al., 2004) where learning properties change over time. Measuring the interdependence between implicit and explicit learning may help to explain the disconnect between studies that have suggested acceleration in motor learning is subserved solely by explicit strategy (Haith et al., 2015; Huberdeau et al., 2019; Morehead et al., 2015; Avraham et al., 2020; Avraham et al., 2021), and studies that have pointed to concomitant changes in implicit learning systems (Leow et al., 2020; Yin and Wei, 2020; Albert et al., 2021).

Here, we begin by mathematically (McDougle et al., 2015; Miyamoto et al., 2020; Smith et al., 2006; Albert and Shadmehr, 2018; Thoroughman and Shadmehr, 2000) considering the extent to which implicit and explicit systems are engaged by task errors and prediction errors. The hypotheses make diverging predictions, which we test in various contexts. Our work suggests that in some contexts (Mazzoni and Krakauer, 2006; Taylor and Ivry, 2011), prediction errors and task errors both make important contributions to implicit learning (Results Part 3). In other contexts, the data suggest that the implicit system is primarily driven by task errors shared with the explicit system (Results Part 1). In this latter case, the competition theory explains why increases (Neville and Cressman, 2018; Benson et al., 2011) or decreases (Fernandez-Ruiz et al., 2011; Saijo and Gomi, 2010) in explicit strategy cause an opposite change in implicit learning. This model explains why in some cases implicit adaptation can saturate as perturbations grow (Neville and Cressman, 2018; Bond and Taylor, 2015; Tsay et al., 2021a), but not others (Tsay et al., 2021a; Salomonczyk et al., 2011). The model also explains why participants that utilize large explicit strategies can exhibit less implicit (Miyamoto et al., 2020) or procedural learning (Fernandez-Ruiz et al., 2011), than those who do not. Finally, the theory provides an alternate way to interpret implicit contributions to two learning hallmarks: savings (Haith et al., 2015) and interference (Lerner et al., 2020) (Results Part 2).

Altogether, our results illustrate that sensorimotor adaptation is shaped by competition between parallel learning systems, both engaged by task errors.

Results

In visuomotor rotation paradigms, participants move a cursor that travels along a rotated path (Figure 1A). This perturbation causes adaptation, resulting in both implicit recalibration (Figure 1A, implicit) and explicit (intentional) re-aiming (Figure 1A, aim) (Mazzoni and Krakauer, 2006; Taylor and Ivry, 2011; Taylor et al., 2014; Shadmehr et al., 1998).

Total implicit learning is shaped by competition with explicit strategy.

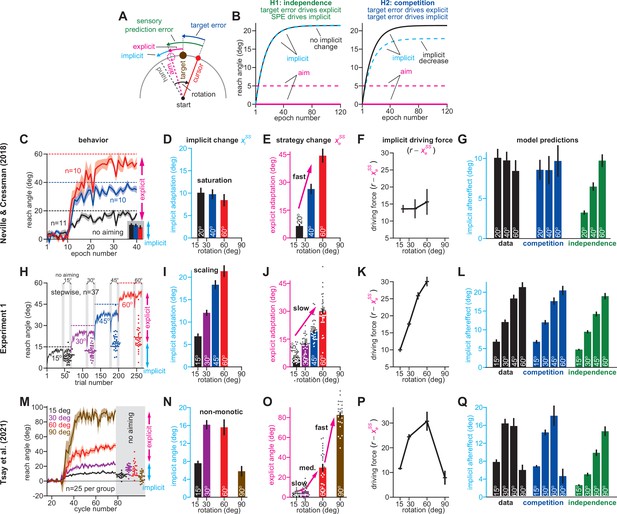

(A). Schematic of visuomotor rotation. Participants move from start to target. Hand path is composed of explicit (aim) and implicit corrections. Cursor path is perturbed by rotation. We explored two hypotheses: prediction error (H1, aim vs. cursor) vs. target error (H2, target vs. cursor) drives implicit learning. (B) Prediction error hypothesis predicts that enhancing aiming (dashed magenta) will not change implicit learning (black vs. dashed cyan) according to the independence equation. Target error hypothesis predicts that enhancing aiming (dashed magenta) will decrease implicit adaptation (black vs. dashed cyan). (C) Data reported by Neville and Cressman, 2018. Participants were exposed to either a 20°, 40°, or 60° rotation. Learning curves are shown. The “no aiming” inset shows implicit learning measured via exclusion trials at the end of adaptation. Explicit strategy was calculated as the voluntary reduction in reach angle during the no aiming period. (D) Implicit learning measured during no aiming period in Neville and Cressman yielded a ‘saturation’ phenotype. (E) Explicit strategies calculated in Neville & Cressman dataset by subtracting exclusion trial reach angles from the total adapted reach angle. (F) The implicit learning driving force in the competition theory: difference between rotation and explicit learning in Neville and Cressman. (G) Implicit learning predicted by the competition and independence models in Neville and Cressman. Models were fit assuming that the implicit learning gain was identical across rotation sizes. (H) Experiment 1. Subjects in the stepwise group (n = 37) experienced a 60° rotation gradually in four steps: 15°, 30°, 45°, and 60°. Implicit learning was measured via exclusion trials (points) twice in each rotation period (gray ‘no aiming’). (I) Total implicit learning calculated during each rotation period in the stepwise group yielded a ‘scaling’ phenotype. (J) Explicit strategies were calculated in the stepwise group by subtracting exclusion trial reach angles from the total adapted reach angle. (K) The implicit learning driving force in the competition theory: difference between rotation and explicit learning in the stepwise group. (L) Implicit learning predicted by the competition and independence models in the stepwise group. Models were fit assuming that implicit learning gain was constant across rotation size. (M) Data reported by Tsay et al., 2021a. Participants were exposed to either a 15°, 30°, 60°, or 90° rotation. Learning curves are shown. The “no aiming” inset shows implicit learning measured via exclusion trials at the end of adaptation. (N) Implicit learning measured during no aiming period in Tsay et al. yielded a ‘non-monotonic’ phenotype. (O) Explicit strategies calculated in Tsay et al. dataset by subtracting exclusion trial reach angles from the total adapted reach angle. (P) Implicit learning driving force in the competition theory: difference between rotation and explicit learning in Tsay et al. (Q) Total implicit learning predicted by the competition and independence models in Tsay et al. Models were fit assuming that the implicit learning gain was identical across rotation sizes. Error bars show mean ± SEM, except in the independence predictions in G, L, and Q; independence predictions show mean and standard deviation across 10,000 bootstrapped samples. Points in H, J, M, and O show individual participants.

-

Figure 1—source code 1

Figure 1 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig1-code1-v2.zip

Current models suggest that the rotation r creates two distinct error sources. One error source is the deviation between cursor and target: a target error (Leow et al., 2020; Körding and Wolpert, 2004; Langsdorf et al., 2021). Notably, this target error (Figure 1A, target error) is altered by both implicit (xi) and explicit (xe) adaptation:

In addition, a second error is created due to our expectation that the cursor should move toward where we aimed our movement: a sensory prediction error (SPE) (Mazzoni and Krakauer, 2006; Tseng et al., 2007; Kawato, 1999). SPE is the deviation between the aiming direction (the expected cursor motion) and where we observed the cursor’s actual motion (Figure 1A, sensory prediction error). Critically, because this error is anchored to our aim location, it changes over time in response to implicit adaptation alone:

How does the implicit learning system respond to these two error sources? State-space models describe implicit adaptation as a process of learning and forgetting (McDougle et al., 2015; Miyamoto et al., 2020; Smith et al., 2006; Albert and Shadmehr, 2018; Thoroughman and Shadmehr, 2000):

Forgetting is controlled by a retention factor (ai) which determines how strongly we retain the adapted state. Learning is controlled by error sensitivity (bi) which determines the amount we adapt in response to an error (e.g. an SPE or a target error).

Here, we will contrast two possibilities: (1) the implicit system responds primarily to target error, or (2) the implicit system responds primarily to SPE. In a target error learning system, explicit strategy will reduce the target error in Equation 1. This decrease in target error will lead to a competition between implicit and explicit systems, that is increasing explicit strategy reduces target error, which will then decrease implicit learning. Competition in a target error model will occur over the entire learning timecourse and can lead to unintuitive implicit learning phenotypes (Appendix 1.2). While these implicit behaviors can be observed at any point during adaptation, they are easiest to examine during steady-state adaptation (Appendix 1.1).

Consider how Equation 3 behaves in the steady-state condition. Like adapted behavior (Kim et al., 2019; Albert et al., 2021; Vaswani et al., 2015; Kim et al., 2018), Equation 3 approaches an asymptote with extended exposure to a rotation. This steady-state (Figure 1B, implicit) occurs when learning and forgetting counterbalance each other.

Consider a system where target errors alone drive implicit learning. In this system, total (steady-state) implicit learning is determined by Equations 1 and 3:

Equation 4 demonstrates a competition between implicit and explicit systems; the total amount of implicit adaptation (xiss) is driven by the difference between the rotation r and total explicit adaptation (xess).

Now consider a system where SPEs drive implicit learning. SPEs (Equation 2) are unaltered by strategy. In this case, total implicit learning is determined by Equations 2 and 3:

Equation 5 demonstrates an independence between implicit and explicit systems; the total amount of implicit adaptation depends solely on the rotation’s magnitude, not one’s explicit strategy.

Here, we explore how implicit learning systems respond to explicit strategy, and whether behavior is more consistent with competition or independence. Competition and independence can be studied at any point during the adaptation timecourse (Appendix 1). We will primarily examine steady-state learning, where the competition equation (Equation 4) and independence equation (Equation 5) make simple predictions. The critical insight is that in an independent system (SPE learning), increasing the explicit strategy (Figure 1B, magenta solid and dashed) does not alter implicit adaptation (Figure 1B, independence, compare black and cyan). However, in a competitive system (Equation 4), the same increase in strategy will indirectly decrease implicit learning (Figure 1B, competition, compare black and cyan).

To analyze these possibilities, we begin by examining how changes in explicit strategy alter implicit learning in response to variations in rotation magnitude, experimental instructions, rotation type, and at the individual participant level (Part 1). Next, we describe how competition between implicit and explicit systems could in principle mask changes in implicit learning (Part 2). Finally, we will examine studies which suggest implicit error sources vary across experimental conditions due to the presence and/or absence of multiple visual stimuli in the experimental workspace (Part 3).

Part 1: Measuring how implicit learning responds to changes in explicit strategy

Here, we measure how implicit learning and explicit strategy vary across several factors: (1) rotation size, (2) instructions, (3) gradual versus abrupt rotations, and (4) individual subjects. We will ask whether the variations in implicit and explicit learning are consistent with the competition or independence theories.

Implicit responses to rotation size suggest a competition with explicit strategy

Over extended exposure to a rotation, adaptation appears to saturate (Morehead et al., 2017; Albert et al., 2021; Vaswani et al., 2015; Kim et al., 2018). How does implicit learning contribute to steady-state saturation, and what learning model best describes its behavior?

In Neville and Cressman, 2018, participants adapted to a 20°, 40°, or 60° rotation (Figure 1C). As is common, adaptation reached a steady-state prior to eliminating the target error (Albert et al., 2021; Figure 1C, solid vs. dashed lines). To measure implicit learning, participants were instructed to reach to the target without aiming (Figure 1C, no aiming). The independence model (Equation 5) predicts that the implicit response should scale as the rotation increases. On the contrary, total implicit learning was insensitive to rotation size; it reached only 10° and remained constant despite a threefold increase in rotation magnitude (Figure 1D). To estimate explicit strategy, we subtracted the implicit learning measure from the total adapted response. Opposite to implicit learning, explicit strategy increased proportionally with the rotation’s size (Bond and Taylor, 2015; Tsay et al., 2021a; Figure 1E).

In the competition model, implicit learning is driven by the difference between the rotation and explicit strategy (r – xess in Equation 4). As a result, when an increase in rotation magnitude is matched by an equal increase in explicit strategy (Figure 1—figure supplement 1A, same), the implicit learning system’s driving force will remain constant (Figure 1—figure supplement 1B, same). This constant driving input leads to a phenotype where implicit learning appears to ‘saturate’ with increases in rotation size (Figure 1—figure supplement 1C, same).

To investigate whether this mechanism is consistent with the implicit response, we examined how explicit strategy and the implicit driving force varied with rotation size. As rotation size increased, explicit strategies increased substantially (Figure 1E). Under the competition model, these rapid changes in explicit strategy produced an implicit driving force that responded little to rotation magnitude; while the rotation increased by 40°, the driving force changed by less than 2.5° (Figure 1F). Thus, the competition Equation (Figure 1G, competition) suggested that implicit learning would not vary with rotation size, as we observed in the measured data (Figure 1G, data).

In other words, the competition model suggests that the implicit system can exhibit an unintuitive saturation when its driving input remains constant. The key prediction is that by altering explicit strategy, this driving input will change, changing the implicit response to rotation size. One possibility is to weaken the explicit system’s response to the rotation (Figure 1—figure supplement 1A, slower) which should increase the steady-state of the implicit system (Figure 1—figure supplement 1C, slower).

To test this idea, we used a stepwise rotation (Yin and Wei, 2020). In Experiment 1, participants (n = 37) adapted to a stepwise perturbation which started at 15° but increased to 60° in 15° increments (Figure 1H). Twice toward the end of each rotation block, we assessed implicit adaptation by instructing participants to aim directly to the target (Figure 1H, gray regions). Supplemental analysis suggested that the implicit system reached its steady-state during each learning period (Appendix 2), although this is not required to test the competition theory (Appendix 1.2). Critically, the stepwise rotation onset decreased explicit responses relative to the abrupt rotations used by Neville and Cressman, 2018; explicit strategies increased with a 94.9% gain (change in strategy divided by change in rotation) across the abrupt groups in Figure 1E, but only a 55.5% gain in the stepwise condition shown in Figure 1J. In the competition model, this reduction in strategy increased the implicit system’s driving input (Figure 1K). The increased driving input produced a “scaling” phenotype in the competition model’s implicit response (Figure 1L, competition) which closely matched the measured implicit data (Figure 1I; 1 L, data; rm-ANOVA, F(3,108)=99.9, p < 0.001, ηp2=0.735).

Thus, the implicit system can exhibit both saturation (Figure 1G) and scaling (Figure 1L), consistent with the competition model. Recent work by Tsay et al., 2021a suggests a third steady-state implicit phenotype: non-monotonicity. In their study, the authors examined a wider range in rotation size, 15° to 90° (Figure 1M). A no-aiming period revealed total implicit adaptation each group (n = 25/group). Curiously, whereas implicit learning increased between the 15° and 30° rotations, it appeared similar in the 60° rotation group, and then decreased in the 90° rotation group (Figure 1N). This non-monotonic behavior was inconsistent with the independence model where implicit learning is proportional to rotation size (Figure 1Q, independence).

To determine whether this non-monotonicity could be captured by the competition theory, we considered again how explicit re-aiming increased with rotation size (Figure 1O). We observed an intriguing pattern. When the rotation increased from 15° to 30°, explicit strategy responded with a very low gain (4.5%, change in strategy divided by change in rotation). An increase in rotation size to 60° was associated with a medium-sized gain (80.1%). The last increase to 90° caused a marked change in the explicit system: a 53.3° increase in explicit strategy (177.7% gain). Thus, explicit strategy increased more than the rotation had. Critically, this condition produces a decrease in the implicit driving input in the competition theory (Figure 1—figure supplement 1, faster). Overall, we estimated that this large variation in explicit learning gain (4.5–80.1% to 177.7%) should yield non-monotonic behavior in the implicit driving input (Figure 1P): an increase between 15° and 30°, no change between 30° and 60°, and a decrease between 60° and 90°. As a result, the competition theory (Figure 1Q, competition) exhibited a non-monotonic envelope, which closely tracked the measured data (Figure 1Q, data).

Unfortunately, there is a potential problem in our analysis: implicit and explicit learning measures were not independent, because explicit strategy was estimated using implicit reach angles (i.e. explicit learning equals total learning minus implicit learning). Did this bias our analysis towards the competition model? To answer this question, Equation 4 can be stated as xiss = pi(r – xess) where pi is the learning gain determined by the implicit system’s retention and error sensitivity (i.e. ai and bi). We can replace the explicit strategy (xess) appearing in this equation noting that xess = xTss – xiss, where xTss equals total steady-state adaptation. With this, the model relates implicit learning to total learning: xiss = pi(1 – pi)–1(r – xTss), as opposed to explicit learning, and can be used to test the competition model without correlated learning measures (see Appendix 3). We reexamined all three experiments in Figure 1, using total adaptation to predict implicit learning with the competition model (Figure 1—figure supplement 2). This alternate method yielded nearly identical predictions (Figure 1—figure supplement 2, ‘model-2’) as Equation 4 (Figure 1—figure supplement 2, ‘model-1’). Thus, the qualitative and quantitative correspondence between the competition model and the measured data was not due to how we operationalized implicit and explicit learning (see Appendix 3).

Collectively, these studies demonstrate that the implicit system can exhibit at least three distinct behavioral phenomena: saturation, scaling, or non-monotonicity. The competition model matched all three phenotypes, due to the implicit system’s response to explicit strategy. The SPE learning model described by the independence equation, however, could only produce a scaling phenotype (Figure 1I). Could the SPE learning model be altered to produce implicit learning phenotypes other than scaling? One possibility is that a saturation phenotype (Figure 1D) could be built into the SPE model by adding a restriction, that is an upper bound, on total implicit adaptation, as observed in studies where participants experience invariant error perturbations (Morehead et al., 2017; Kim et al., 2018). With that said, the 10° implicit responses observed across the three rotations in Neville and Cressman, 2018, are much lower than the 20°–25° ceiling suggested by recent error-clamp studies (Kim et al., 2018), and the 35–45° implicit responses observed in some standard rotation studies (Salomonczyk et al., 2011; Maresch et al., 2021). More importantly, a learning model with a rotation-insensitive upper bound on implicit learning would be inconsistent with the scaling (Figure 1I) and nonmonotonic (Figure 1N; see Appendix 6.6) phenotypes we observed. We will explore other extensions to this SPE model in several analyses in the Control analyses section below.

Increase in explicit strategy suppresses implicit learning

The competition model predicts that increasing explicit strategy will decrease implicit learning, even when the rotation size is the same. In contrast, the independence theory predicts that implicit learning will be insensitive to differences in explicit strategy (extensions to this model are considered in Control analyses).

To test these ideas, we considered another condition tested by Neville and Cressman, 2018 where participants were exposed to the same 20°, 40°, or 60° rotation, but received coaching instructions. The coaching sharply improved adaptation over the non-instructed group (Figure 2A, compare purple with black). To understand how implicit and explicit learning contributed to these changes, we analyzed the mean implicit and explicit reach angles measured across all three rotation sizes (each individual response is shown in Figure 2—figure supplement 1).

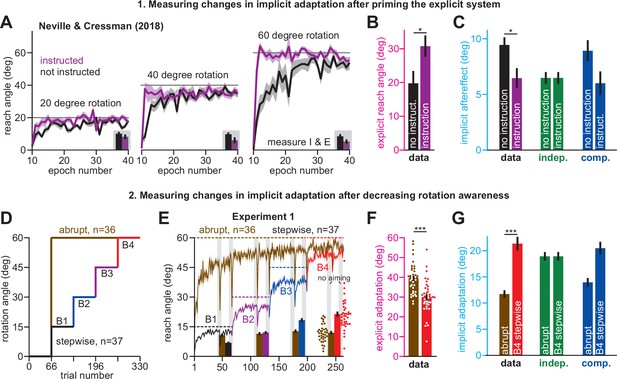

Increases or decreases in explicit strategy oppositely impact implicit adaptation.

(A) Neville and Cressman, 2018 tested participants in two conditions: an uninstructed condition (black) and an instructed condition (purple) where subjects were briefed about the upcoming rotation and its solution. Instruction increased the adaptation rate across three rotation sizes: 20°, 40°, and 60°. Insets in gray shaded area show implicit adaptation measured via exclusion trials at the end of adaptation. (B) Here, we show the average strategy across all rotation sizes in the instructed (black) and uninstructed (purple) conditions. Explicit strategy was calculated by subtracting implicit learning (exclusion trials) from total adaptation. Instruction increased explicit strategy use. (C) The data show implicit adaptation averaged across all three rotation sizes. The independent (SPE learning) and competition (target error learning) models were fit to these data assuming that implicit error sensitivity and retention were identical across rotation sizes and instruction conditions (i.e. identical ai and bi across all six groups). Error bars for model predictions refer to mean and standard deviation across 10,000 bootstrapped samples. (D) In Experiment 1 we tested participants in either an abrupt condition or a stepwise (gradual) condition. Here, we show the rotation schedule. (E) Here, we show learning curves in the abrupt and stepwise conditions in Experiment 1. Bars show implicit adaptation measured during each rotation period (four blocks total) via exclusion trials. Individual learning measures are shown in the terminal 60° learning period for both groups (points at bottom-right). (F) We calculated explicit strategies during the terminal 60° learning period by subtracting implicit learning measures from total adaptation (mean over last 20 trials). Gradual onset reduced explicit strategy use. (G) The data show total implicit learning measured in the 60° rotation period. The competition (blue) and independence (green) models were fit to the data assuming that the implicit learning parameters were the same across the abrupt and stepwise groups. Error bars for the model show the mean and standard deviation across 1,000 bootstrapped samples. Statistics in B, F, and G denote a two-sample t-test: *p < 0.05, ***p < 0.001. Error bars in A, B, C (data), E, F, and G (data) denote mean ± SEM. Points in E and F show individual participants.

-

Figure 2—source code 1

Figure 2 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig2-code1-v2.zip

Unsurprisingly, explicit adaptation was enhanced in the participants that received coaching instructions. Explicit re-aiming increased by approximately 10° (Figure 2B, t(61)=2.29, p = 0.026, d = 0.56). However, while instruction enhanced explicit strategy, it suppressed implicit learning, decreasing total implicit learning by approximately 32% (Figure 2C, data, t(61)=2.62, p = 0.011, d = 0.66). To interpret this implicit response, we fit the competition (Equation 4) and independence equations (Equation 5) to the behavior across all experimental conditions (six groups: 3 rotation magnitudes, 2 instruction conditions), while holding the implicit learning parameters in the model constant (i.e. holding ai and bi constant across all conditions).

As in Figure 1, implicit learning in the independence model does not respond to explicit strategy, and is not altered by instruction (Figure 2C, implicit learning, indep.). On the other hand, the competition model accurately suggested that total implicit learning would decrease by approximately 3° (data showed 2.98° decrease, model produced a 2.92° decrease) in response to increases in explicit strategy (Figure 2C, implicit learning, competition, t(61)=2.05, p = 0.045, d = 0.52). Altogether, the competition theory parsimoniously captured how the implicit system responded to explicit instruction (Figure 2C) as well as changes in rotation size (Figure 1G) with the same model parameter set (same ai and bi in the competition equation).

Decrease in explicit strategy enhances implicit learning

Next, we examined how implicit learning responds to decreases in explicit strategy. Yin and Wei, 2020 recently demonstrated that explicit strategies can be suppressed using gradual rotations. The competition theory predicts that decreasing explicit strategy will lead to greater implicit adaptation. We tested this prediction in Exp. 1. Participants were exposed to a 60° rotation, either abruptly (n = 36), or in a stepwise manner (n = 37) where perturbation magnitude increased by 15° across four distinct learning blocks (Figure 2D). We measured implicit and explicit learning during each block, as in Figure 1. To compare gradual and abrupt learning, we analyzed reach angles during the 4th learning block, where both groups experienced the 60° rotation size (Figure 2E).

As in Yin and Wei, 2020, participants in the stepwise condition exhibited a 10° reduction in explicit re-aiming (Figure 2F, two-sample t-test, t(71)=4.97, p < 0.001, d = 1.16). Reductions in strategy led to a decrease in total adaptation in the stepwise group by approximately 4°, relative to the abrupt group (Figure 2E, right-most gray region (last 20 trials); two-sample t-test, t(71)=3.33, p = 0.001, d = 0.78), but an increase in implicit learning by approximately 80% (Figure 2G, data, two-sample t-test, t(71)=6.4, p < 0.001, d = 1.5). Thus, the data presented a curious pattern; greater total adaptation in the abrupt condition was paradoxically associated with reduced implicit adaptation. As expected, these surprising patterns did not match the independence model (Figure 2G, indep.), in which implicit learning does not respond to changes in explicit strategy.

To test whether implicit learning patterns matched the competition model we fit Equation 4 to implicit and explicit reach angles measured in Blocks 1–4, across the stepwise and abrupt conditions, while holding the model’s implicit learning parameters (ai and bi) constant. The competition model correctly predicted that the decrease in strategy in the gradual condition should produce an increase in implicit learning (Figure 2G, comp., two-sample t-test, t(71)=4.97, p < 0.001, d = 1.16). In addition, the competition model predicted a decrease in total learning, consistent again with the data (the model yielded 53.47° total adaptation in abrupt, and 50.42° in gradual: values not provided in Figure 2). The model’s negative correlation between implicit learning and total adaptation occurred in two steps: (1) greater abrupt strategies increased overall adaptation, but (2) siphoned away target errors, reducing implicit adaptation.

We analyzed another hypothesis: changes in implicit adaptation were caused by variation in error sensitivity (e.g. greater implicit error sensitivity in the stepwise condition), rather than competition. Note, however, that the implicit learning gain, pi, is given by pi = bi(1 – ai+ bi)–1. Because the bi term appears in both numerator and denominator, total implicit learning varies slowly with changes in bi (Appendix 4). Accordingly, supplemental analyses (Appendix 4, Figure 2—figure supplement 2) showed that no change in bi could yield the 80% increase in stepwise implicit learning in Figure 2G, let alone the 46% increase in implicit learning in the no-instruction group in Figure 2C. Thus, while variation in implicit error sensitivity might contribute to changes in steady-learning learning, its role is minor compared to error competition.

In summary, we observed that explicit strategies could be suppressed by increasing the rotation gradually. Reductions in explicit strategy were associated with increased implicit adaptation (Figure 2G) as predicted by the competition theory. Furthermore, the same competition theory parameter set (i.e. same ai and bi, see Materials and methods) accurately matched the extent to which implicit learning responded to decreases in explicit strategy (Figure 2G) as well as increases in rotation size (Figure 1L). It is interesting to note that these implicit patterns are broadly consistent with the observation that gradual rotations improve procedural learning (Saijo and Gomi, 2010; Kagerer et al., 1997), although these earlier studies did not properly tease apart implicit and explicit adaptation (see the Saijo and Gomi analysis described in Appendix 5).

Implicit adaptation responds to between-subject differences in explicit adaptation

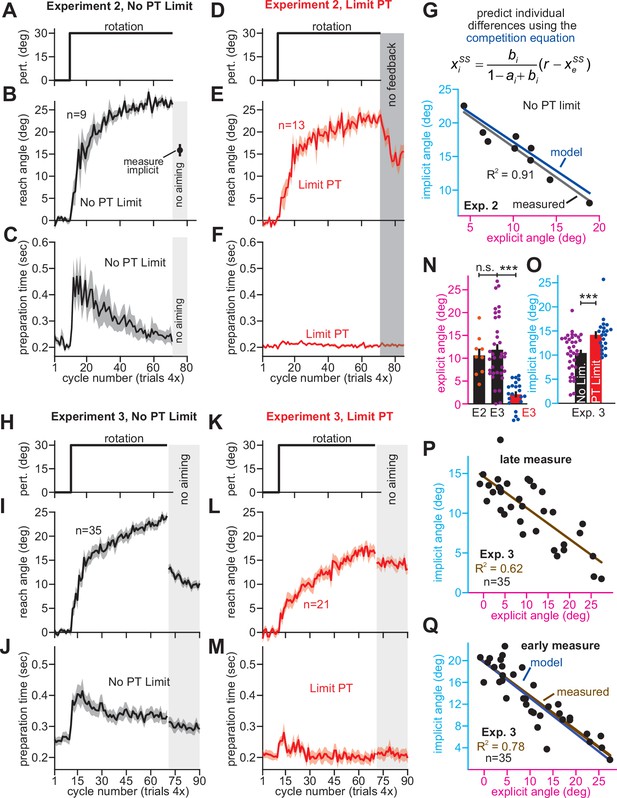

Use of explicit strategy is highly variable between individuals (Miyamoto et al., 2020; Fernandez-Ruiz et al., 2011; Bromberg et al., 2019). According to the competition theory (Equation 4), implicit and explicit learning will negatively co-vary according to a line whose slope and bias are determined by the properties of the implicit learning system (ai and bi). In Experiment 2, we tested this prediction. In one group, we limited preparation time to inhibit time-consuming explicit strategies (Fernandez-Ruiz et al., 2011; McDougle and Taylor, 2019; Figure 3D–F, Limit PT). In the other group, we imposed no preparation time constraints (Figure 3A–C, No PT Limit). We measured ai and bi in the Limit PT group and used these values to predict the implicit-explicit relationship across No PT Limit participants.

Strategy suppresses implicit learning across individual participants.

(A–C) In Experiment 2, participants in the No PT Limit (no preparation time limit) group adapted to a 30° rotation. The paradigm is shown in A. The learning curve is shown in B. Implicit learning was measured via exclusion trials (no aiming). Preparation time is shown in C (movement start minus target onset). (D–F) Same as in A–C, but in a limited preparation time condition (Limit PT). Participants in the Limit PT group had to execute movements with restricted preparation time (F). The task ended with a prolonged no visual feedback period where memory retention was measured (E, gray region). (G) Total implicit and explicit adaptation in each participant in the No PT Limit condition (points). Implicit learning measured during the terminal no aiming probe. Explicit learning represents difference between total adaptation (last 10 rotation cycles) and implicit probe. The black line shows a linear regression. The blue line shows the theoretical relationship predicted by the competition equation which assumes implicit system adapts to target error. The parameters for this model prediction (implicit error sensitivity and retention) were measured in the Limit PT group. (H–J) In Experiment 3, participants adapted to a 30° rotation using a personal computer in the No PT Limit condition. The paradigm is shown in H. The learning curve is shown in I. Implicit learning was measured at the end of adaptation over a 20-cycle period where participants were instructed to reach straight to the target without aiming and without feedback (no aiming seen in I). We measured explicit adaptation as difference between total adaptation and reach angle on first no aiming cycle. We measured ‘early’ implicit aftereffect as reach angle on first no aiming cycle. We measured ‘late’ implicit aftereffect as mean reach angle over last 15 no aiming cycles. (K–M) Same as in H–J, but for a Limit PT condition. (N) Explicit adaptation measured in the No PT Limit condition in Experiment 2 (E2), No PT Limit condition in Experiment (E3, black), and Limit PT condition in Experiment 3 (E3, red). (O) Late implicit learning in the Experiment 3 No PT Limit group (No Lim.) and Experiment 3 Limit PT group (PT Limit). (P) Correspondence between late implicit learning and explicit strategy in the Experiment 3 No PT Limit group. (Q) Same as in G but where model parameters are obtained from the Limit PT group in Experiment 3, and points represent subjects in the No PT Limit group in Experiment 3. Early implicit learning is used. Throughout all insets, error bars indicate mean ± SEM across participants. Statistics in N and O are two-sample t-tests: n.s. means p > 0.05, ***p < 0.001.

-

Figure 3—source code 1

Figure 3 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig3-code1-v2.zip

As expected, Limit PT participants dramatically reduced their reach latencies throughout the adaptation period (Figure 3F), whereas the No PT Limit participants exhibited a sharp increase in movement preparation time after perturbation onset (Figure 3C), indicating explicit re-aiming (Langsdorf et al., 2021; Haith et al., 2015; Albert et al., 2021; Fernandez-Ruiz et al., 2011; McDougle and Taylor, 2019). Consistent with explicit strategy suppression, learning proceeded more slowly and was less complete under the preparation time limit (compare Figure 3B&E; two-sample t-test on last 10 adaptation epochs: t(20)=3.27, p = 0.004, d = 1.42).

Next, we measured the retention factor ai during a terminal no feedback period (Figure 3E, dark gray, no feedback) and error sensitivity bi during the steady-state adaptation period. Steady-state implicit error sensitivity (note errors are small at steady-state creating high bi) was consistent with recent literature (Figure 3—figure supplement 1A-C). Together, this retention factor (ai = 0.943) and error sensitivity (bi = 0.35), produced a specific form of Equation 4, xi = 0.86 (30 – xe). We used this result to predict how implicit and explicit learning should vary across participants in the No PT Limit group (Figure 3G, blue line).

To measure implicit and explicit learning in the No PT Limit group, we instructed participants to move their hand through the target without any re-aiming at the end of the rotation period (Figure 3B, no aiming). The precipitous change in reaching angle revealed implicit and explicit components of adaptation (post-instruction reveals implicit; voluntary decrease in reach angle reveals explicit). We observed a striking correspondence between the No PT Limit implicit-explicit relationship (Figure 3G, black dot for each participant; ρ = −0.95) and that predicted by the competition equation (Figure 3G, blue). The slope and bias predicted by Equation 4 (–0.86 and 25.74°, respectively) differed from the measured linear regression by less than 5% (Figure 3G, black line, R2 = 0.91; slope is –0.9 with 95% CI [-1.16,–0.65] and intercept is 25.46° with 95% CI [22.54°, 28.38°]).

In addition, we also asked participants to verbally report their aiming angles prior to concluding the experiment. These responses were variable, with 25% reported in the incorrect direction. Because strategies are susceptible to sign-flipped errors (McDougle and Taylor, 2019), we assumed these misreported strategies represented the correct magnitude, but the incorrect sign, and thus took their absolute value. While reported explicit strategies were on average greater than our probe-based measure, and report-based implicit learning was on average smaller than our probe-based measure (Figure 3—figure supplement 2A&B; paired t-test, t(8)=2.59, p = 0.032, d = 0.7), the two report-based measures exhibited a strong correlation which aligned with the competition theory’s prediction (Figure 3—figure supplement 2C; R2 = 0.95; slope is –0.93 with 95% CI [-1.11,–0.75] and intercept is 25.51° with 95% CI [22.69°, 28.34°]).

In summary, individual participants exhibited an inverse relationship between implicit and explicit learning; participants who used large explicit strategies inadvertently suppressed their implicit learning, a pattern consistent with error-based competition.

Limiting reaction time strongly suppresses explicit strategy and increases implicit learning

Our analysis in Experiment 2 had two important limitations. First, the competition theory used implicit learning parameters measured under limited preparation time conditions (Leow et al., 2020; Fernandez-Ruiz et al., 2011; Leow et al., 2017): how effectively does this condition suppress explicit learning? Second, our individual-level implicit and explicit learning measures were intrinsically correlated because they both depended on probe-based reach angles (i.e. implicit is no aiming probe, and explicit is total learning minus no aiming probe).

To address these limitations, we conducted a laptop-based control experiment (Experiment 3). Participants (n = 35) adapted to a 30° rotation (Figure 3I), but this time, we measured implicit adaptation using the no-aiming instruction over an extended 20-cycle period (Figure 3I, no aiming). We calculated early (the first no-aiming cycle; Figure 3Q) and late (last 15 no-aiming cycles; Figure 3P) implicit learning measures. Explicit strategy was estimated by subtracting the first no-aiming cycle from total adaptation. Thus, our explicit strategy measure was not calculated using late implicit learning trials; these two measures were no longer spuriously correlated. Regardless, we still observed a strong relationship between explicit strategy and late implicit learning; greater strategy use was associated with reduced late implicit adaptation (Figure 3P, ρ = −0.78, p< 0.001).

Next, we repeated this experiment, but under limited preparation time conditions in a separate participant cohort (Figure 3L, Experiment 3, Limit PT, n = 21). As for the Limit PT group in Exp. 2, we imposed a strict bound on reaction time to suppress movement preparation time (compare Figure 3J&M). Once the rotation period ended, participants were told to stop re-aiming. The decrease in reach angle revealed each participant’s explicit strategy (Figure 3N). When no reaction time limit was imposed (No PT Limit), re-aiming totaled 11.86° (Figure 3N, black). In addition, we did not detect a statistically significant difference in re-aiming across Exps. 2 and 3 (t(42)=0.50, p = 0.621). As in earlier reports (Leow et al., 2020; Albert et al., 2021; Fernandez-Ruiz et al., 2011; Leow et al., 2017), limiting reaction time dramatically suppressed explicit strategy, yielding only 2.09° of re-aiming (Figure 3N, red). Thus, these data showed that our limited reaction time technique was highly effective at suppressing explicit strategy.

Consistent with the competition theory, suppressing explicit strategy increased implicit learning by approximately 40% (Figure 3O, No PT Limit vs. Limit PT, two-sample t-test, t(54)=3.56, p < 0.001, d = 0.98). We again used the Limit PT group’s behavior to estimate implicit learning parameters (ai and bi) as we did in Exp. 2 (Figure 3G). Using these parameters, the competition theory (Equation 4) predicted that implicit and explicit adaptation should be related by the line: xi = 0.658(30 – xe). As in Exp. 2, we observed a striking correspondence between this model (Figure 3Q, bottom, model) and the actual implicit-explicit relationship measured in participants in the No PT Limit group (Figure 3Q, bottom, points). The slope and bias predicted by Equation 4 (–0.665 and 19.95°, respectively) differed from the measured linear regression by less than 5% (Figure 3Q, bottom brown line, R2 = 0.78; slope is –0.63 with 95% CI [-0.74,–0.51] and intercept is 19.7° with 95% CI [18.2°, 21.3°]).

In summary, Exp. 3 provided additional evidence that implicit and explicit systems compete with one another at the individual-participant level. Participants who relied more on strategy exhibited reductions in implicit learning, as predicted by the competition theory. Moreover, by limiting preparation time on each trial, explicit strategies were strongly suppressed, allowing us to estimate the time course of the implicit system’s adaptation.

Control analyses

Implicit learning exhibits generalization: a decay in adaptation measured when subjects move to positions across the workspace (Hwang and Shadmehr, 2005; Krakauer et al., 2000; Fernandes et al., 2012). Implicit generalization is centered where participants aim (Day et al., 2016; McDougle et al., 2017). For this reason, implicit learning measured when aiming towards the target, can underapproximate total implicit learning. Subjects that aim more (larger strategy) can exhibit a larger reduction in measured implicit learning. Might this contribute to the negative implicit-explicit correlations in Exps. 1–3?

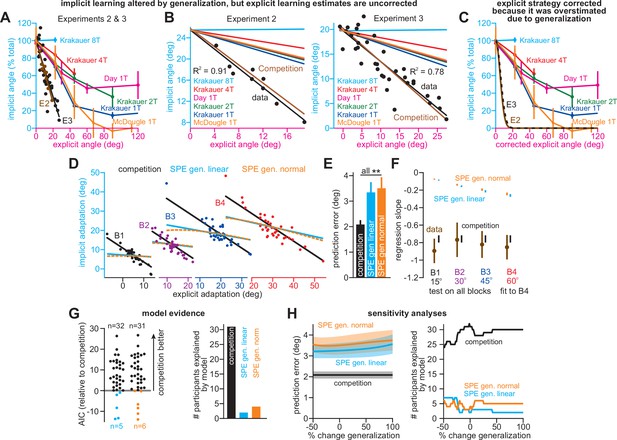

To test this idea, we compared our data to generalization curves measured in past studies (Krakauer et al., 2000; Day et al., 2016; McDougle et al., 2017; Figure 4). Absolute implicit responses are shown in Figure 4B, and normalized measures are shown in Figure 4A (see Appendix 6.1). Implicit learning in Exps. 2&3 declined 300% more rapidly than predicted by past generalization studies (Figure 4A&B). Moreover, this comparison in Figure 4A–C is not appropriate under the generalization hypothesis. In Exps. 2&3, explicit strategies are estimated as total learning minus implicit learning. If implicit learning measured at the target underapproximates total implicit learning measured at the aim location, then the explicit strategies we calculate will overapproximate the actual strategy used by each participant. We need to correct these strategies prior to comparing to past generalization curves (Appendix 6.2). The corrected generalization curves (Figure 4C, E2 and E3 lines) that produce the patterns in Figure 4A&B exhibited an unphysiological narrowing: their standard deviation (width) was 85% smaller than that reported in recent studies (Krakauer et al., 2000; Day et al., 2016; McDougle et al., 2017) (σ is about 5.5° versus 37.76° in McDougle et al., see Appendix 6.1). These same issues occurred in the group-level phenomena that we analyzed in Figures 1 and 2: no plausible generalization curve could explain the implicit response to instruction, rotation onset (abrupt/gradual), and rotation size (Appendices 6.4 and 6.5). As an example, the variations in implicit learning across abrupt and stepwise groups in Exp. 1 would require a generalization curve that is 90% narrower than recent estimates (McDougle et al., 2017) (see Appendix 6.4 and Figure 4—figure supplement 1; σ = 3.87° versus 37.76° in McDougle et al., 2017).

Correlations between implicit and explicit learning are consistent with competition, not SPE generalization.

(A) Aim-centered generalization could create the illusion that implicit and explicit systems compete. To evaluate this possibility, we compared the implicit-explicit relationship in Exps. 2 and 3 to generalization curves reported in Krakauer et al., 2000, Day et al., 2016, and McDougle et al., 2017. The 1T, 2T, 4T, and 8T labels correspond to the number of adaptation targets in Krakauer et al. The gold McDougle et al. curve is particularly relevant because the authors controlled aiming direction on generalization trials and counterbalanced CW and CCW rotations. Data in Exps. 2 and 3 are shown overlaid in the inset. Implicit learning declined about 300% more rapidly with increases in re-aiming than that observed by Day et al. The solid black and brown lines show the competition theory predictions. Implicit learning in Experiments 2 and 3 was normalized to its theoretical maximum, reached when re-aiming is equal to zero. The value used to normalize was determined via linear regression (25.5° in Exp. 2, 19.7° in Exp. 3). (B) Same as in A, but without normalizing implicit learning. Generalization curves were converted to degrees by multiplying the curves in A by the max. implicit learning value in Exp. 2 (25.5°) or Exp. 3 (19.7°). (C) The comparisons in A and B are not correct. Under the generalization hypothesis, each data point’s explicit strategy needs to be corrected according to generalization. This inset shows the true implicit-explicit generalization curve that would be required to produce the data in A and B. The E2 and E3 lines show the Exp. 2 and Exp. 3 curves. (D) Points show implicit and explicit learning measured in the stepwise individual participants studied in Exp. 1 (B1 is 15° period, B2 is 30° period, B3 is 45° period, and B4 is 60° period). Three models were fit to participant data in the 60° period. Competition model fit is shown in black. A linear generalization (SPE gen. linear) with slope set by McDougle et al. is shown in cyan. A Gaussian generalization (SPE gen. normal) with width set by McDougle et al. is shown in gold. Since models were fit to B4 data, the B1, B2, and B3 lines represent predicted behavior. (E) The prediction error (RMSE) in each model’s implicit learning curve across the held-out 15°, 30°, and 45° periods in D. (F) Linear regressions fit to each rotation block in (D). Brown points and lines (data) show the regression slope and 95% CI. The black (competition), cyan (SPE gen. linear), and gold (SPE gen. normal) are model predictions where lines are 95% CIs estimated via bootstrapping. (G) All three models in D–F were fit to individual participant behavior in the stepwise group. At left, the AIC for each model is compared to that of the competition model. At right, the total number of subjects best captured by each model is shown. (H) Same as E and G but where the generalization width was varied in a sensitivity analysis. We tested values between one-half the McDougle et al. generalization curve (–50%) and twice the McDougle et al. generalization curve (+100%). Error bars in E show mean ± SEM. Statistics in E are post-hoc tests following one-way rm-ANOVA: **p < 0.01.

-

Figure 4—source code 1

Figure 4 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig4-code1-v2.zip

We extended the independence model with implicit generalization and compared its behavior to the competition theory. The competition model is given by xiss = pi(r – xess), where pi is an implicit learning gain. The SPE generalization model is ximeasured = pirg(xess), where g(xess) encodes generalization (derivation in Appendix 6.2). We specified g(xess) with McDougle et al., 2017. We considered models where g(xess) was linear (Figure 4D–F, SPE gen. linear) and g(xess) was normal (SPE gen. normal). Then we fit each model’s pi to match implicit learning during the 60° stepwise rotation in Exp. 1. We used this gain to predict the implicit-explicit relationship across the three earlier learning periods (B1-B3 in Figure 4D). The generalization models yielded poor matches to the held-out data (model RMSE in Figure 4E, rm-ANOVA, F(2,72)=13.7, p < 0.001, ηp2 = 0.276). Further, a model comparison showed that competition best described individual subject data, minimizing AIC in 84% of stepwise participants (Figure 4G, Appendix 6.3). Poor SPE generalization model performance was not due to misestimating generalization curve properties; we conducted a sensitivity analysis in which we varied the generalization curve’s width. The competition model was superior across the entire range (Figure 4H, Appendix 6.3).

To understand why the competition theory alone generalized across rotation sizes, we fit linear regressions to the data in each rotation period. The regression slopes and 95% CIs are shown in Figure 4F (data). Remarkably, the measured implicit-explicit slope appeared to be constant across all rotation sizes. This invariance was directly consistent with the competition theory (Figure 4F, competition) which possesses an implicit gain pi that remains constant across rotations (like the data). But in generalization models (Figure 4F, generalization), the gain relating implicit and explicit learning is not constant; it changes as the rotation gets larger (see Appendix 6.3). In sum, data in Exps. 1–3 were poorly explained by an SPE model extended with generalization.

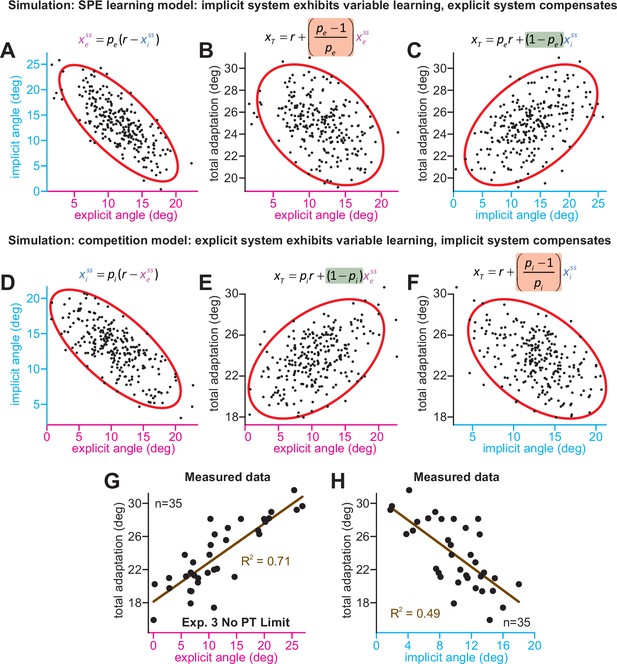

We considered one last control analysis. The competition equation predicts that implicit-explicit correlations are caused by the implicit system’s response to variations in strategy. An SPE learning model could create correlations the opposite way: individuals who possess less implicit learning compensate by increasing their explicit strategy. This scenario can be described by xess = pe(r – xiss) where pe is the explicit response gain. This model has three properties (Appendix 7.2). First, implicit and explicit learning will show a negative relationship (Figure 5A). Second, increases in implicit learning will tend to increase total adaptation (Figure 5C). Finally, increasing implicit learning leaves smaller errors to drive explicit strategy, resulting in a negative correlation between strategy and total adaptation (Figure 5B). While the competition model also predicts negative implicit-explicit correlations (Figure 5D), the other pairwise correlations differ (Appendix 7.1). Increases in explicit strategy lead to greater total learning (Figure 5E), but reduce the error which drives implicit learning, leading to a negative correlation between implicit learning and total adaptation (Figure 5F).

Implicit-explicit correlations with total adaptation match the competition theory.

The competition equation states that xiss = pi(r – xess), where pi is a scalar learning gain depending on ai and bi. The competition between steady-state implicit (xiss) and explicit (xess) adaptation predicted by this model is simulated in D across 250 hypothetical participants. The model pi is fit to data in Experiment 3. Total learning is given by xTss = xiss + xess. These two equations can be used to derive expressions relating total learning (xTss) to steady-state implicit (xiss) and explicit (xess) learning. In E, we show that the competition theory predicts a positive relationship between explicit learning and total adaptation (equation at top derived in Appendix 7, green denotes a positive gain). In F, we show that the competition theory predicts a negative relationship between implicit learning and total adaptation (equation at top derived in Appendix 7, red shading denotes negative gain). In (A–C), we consider an alternative model. Suppose that implicit learning is immune to explicit strategy and varies independently across participants. This is equivalent to the SPE learning model. But in this case, the explicit system could respond to variability in implicit learning via another competition equation: xess = pe(r – xiss). Here, pe is an explicit learning gain (must be less than one to yield a stable system). In A, we show the negative relationship between implicit and explicit adaptation predicted by this alternate SPE learning model. In B, we show that when the explicit system responds to implicit variability (SPE learning) there is a negative relationship between total adaptation and explicit strategy. The equation at top is derived in Appendix 7. In C, we show that the SPE learning model will yield a positive relationship between implicit learning and total adaptation. Equation at top derived in Appendix 7. (G) We measured the relationship between explicit strategy and total adaptation in Exp. 3 (No PT Limit group). Total learning exhibits a positive correlation with explicit strategy. (H) Same concept as in G, but here we show the relationship between total learning and implicit adaptation. The patterns in G and H are consistent with the competition theory (compare with E and F).

-

Figure 5—source code 1

Figure 5 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig5-code1-v2.zip

We analyzed these predictions in the No PT Limit group in Exp. 3 (Appendix 7.4). Our observations matched the competition theory; greater explicit strategy was associated with greater total adaptation (Figure 5G, ρ = 0.84, p < 0.001), whereas greater implicit learning was associated with lower total adaptation (Figure 5H, ρ = −0.70, p < 0.001). We repeated these analyses in other datasets (Appendix 7.4) that measured implicit learning with no-aiming probe trials: (1) 60° rotation groups (combined across gradual and abrupt groups) in Experiment 1, (2) 60° groups reported by Maresch et al., 2021 (combined across the CR, IR-E, and IR-EI groups), and (3) 60° rotation group in Tsay et al., 2021a These data matched the competition theory: negative implicit-explicit correlations (Figure 5—figure supplement 1G-I), positive explicit-total correlations (Figure 5—figure supplement 1D-F), and negative implicit-total correlations (Figure 5—figure supplement 1A-C).

In summary, while an SPE learning model could exhibit negative correlations between implicit and explicit adaptation, it does not predict a negative correlation between steady-state implicit learning and total adaptation (nor a positive relationship between steady-state explicit strategy and total adaptation), as we observed in the data. The data were consistent with the competition theory, where the implicit system responds to variations in explicit strategy. However, there is a critical caveat. The predictions outlined above assumed that implicit learning properties (contained within pi) are the same across every participant. This is unlikely to be true, and variation in pi across subjects (e.g. changes in error sensitivity) will undermine some correlations in Figure 5, particularly the relationship between implicit learning and total adaptation. This phenomenon and past studies where it appears to occur are treated in Appendix 8.

Part 2: Competition with explicit learning can mask changes in the implicit learning system

Here, we show that in the competition model, implicit learning may undergo savings, without changing its learning timecourse. Next, we limit preparation time to detect increases and decreases in implicit learning.

Two ways to interpret the implicit response in a savings paradigm

When participants are exposed to the same perturbation twice, they adapt more quickly the second time. This phenomenon is known as savings and is a hallmark of sensorimotor adaptation (Smith et al., 2006; Herzfeld et al., 2014; Zarahn et al., 2008). Multiple studies have attributed this process solely to changes in explicit strategy (Haith et al., 2015; Huberdeau et al., 2019; Morehead et al., 2015; Avraham et al., 2021; Huberdeau et al., 2015).

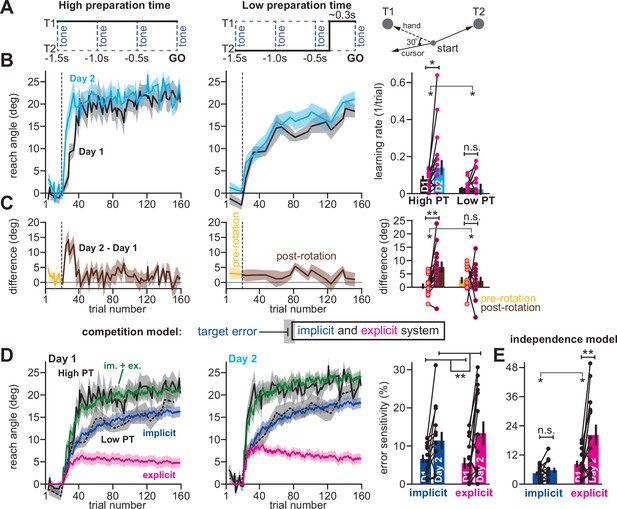

For example, in an earlier work (Haith et al., 2015), we trained participants (n = 14) to reach to one of two targets, coincident with an audio tone (Figure 6A). By shifting the displayed target approximately 300ms prior to tone onset on a minority of trials (20%), we forced participants to execute movements with limited preparation time (Low preparation time; Figure 6A, middle). On all other trials (80%) the target did not switch resulting in high preparation time movements (Figure 6A, left). We measured adaptation to a 30° rotation during high preparation time (Figure 6B, left) and low preparation time trials (Figure 6B, middle) across two separate exposures (Day 1 and Day 2).

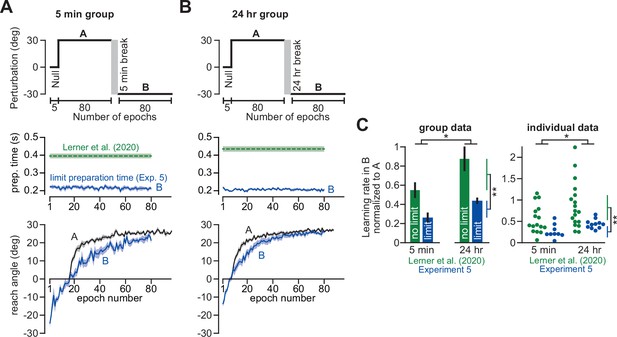

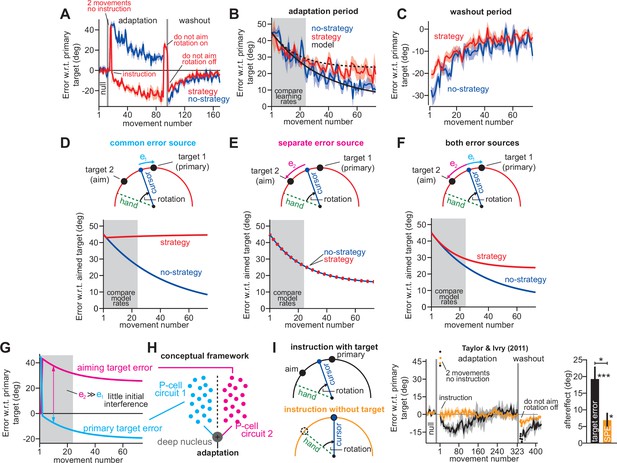

Competition predicts changes in implicit error sensitivity without changes in implicit learning rate.

(A) Haith et al., 2015 instructed participants to reach to Targets T1 and T2 (right). Participants were exposed to a 30° visuomotor rotation at Target T1 only. Participants reached to the target coincident with a tone. Four tones were played with a 500ms inter-tone-interval. On most trials (80%) the same target was displayed during all four tones (left, High preparation time or High PT). On some trials (20%) the target switched approximately 300ms prior to the fourth tone (middle, Low preparation time or Low PT). (B) On Day 1, participants adapted to a 30° visuomotor rotation (Day 1, black) followed by a washout period. On Day 2, participants again experienced a 30° rotation (Day 2, blue). At left, we show the reach angle expressed on High PT trials during Days 1 and 2. Dashed vertical line shows perturbation onset. At middle, we show the same but for Low PT trials. At right, we show learning rate on High and Low PT trials, during each block. (C) As an alternative to the rate measure shown at right in B, we calculated the difference between reach angle on Days 1 and 2. At left and middle, we show the learning curve differences for High and Low PT trials, respectively. At right, we show difference in learning curves before and after the rotation. ‘Pre-rotation’ shows the average of Day 2 – Day 1 prior to rotation onset. ‘Post-rotation’ shows the average of Day 2 – Day 1 after rotation onset. (D) We fit a state-space model to the learning curves in Days 1 and 2 assuming that target errors drove implicit adaptation. Low PT trials captured the implicit system (blue). High PT trials captured the sum of implicit and explicit systems (green). Explicit trace (magenta) is the difference between the High and Low PT predictions. At right, we show error sensitivities predicted by the model. (E) Same as in D, but for a state-space model where implicit learning is driven by SPE, not target error. Model-predicted error sensitivities are shown. Error bars across all insets show mean ± SEM, except for the learning rate in B which displays the median. Two-way repeated-measures ANOVA were used in B, C, D, and E. For B and C, exposure number and preparation time condition were main effects. For D and E exposure number and learning system (implicit vs explicit) were main effects. Significant interactions in B, C, and E prompted follow-up one-way repeated-measures ANOVA (to test simple main effects). Statistical bars where two sets of asterisks appear (at left and right) indicate interactions. Statistical bars with one centered set show main effects or simple main effects. Statistics: n.s. means p > 0.05, *p < 0.05, **p < 0.01.

-

Figure 6—source code 1

Figure 6 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig6-code1-v2.zip

To detect savings, we calculated the learning rate on low and high preparation time trials. Savings appeared to require high preparation time; learning rate increased during the second exposure on high preparation time trials, but not low preparation time trials (Figure 6B, right; two-way rm-ANOVA, preparation time by exposure number interaction, F(1,13)=5.29, p = 0.039; significant interaction followed by one-way rm-ANOVA across Days 1 and 2: high prep. time with F(1,13)=6.53, p = 0.024, ηp2=0.335; low preparation time with F(1,13)=1.11, p = 0.312, ηp2=0.079). To corroborate this rate analysis, we also measured savings via early changes in reach angle (first 5 rotation cycles) across Days 1 and 2 (Figure 6C, left and middle). Only high preparation time trials exhibited a statistically significant increase in reach angle, consistent with savings (Figure 6C, right; two-way rm-ANOVA, prep. time by exposure interaction, F(1,13)=13.79, p = 0.003; significant interaction followed by one-way rm-ANOVA across days: high prep. time with F(1,13)=11.84, p = 0.004, ηp2=0.477; low prep. time with F(1,13)=0.029, p = 0.867, ηp2=0.002).

Because explicit strategies can be suppressed by limiting movement preparation time under some conditions (Huberdeau et al., 2019; Fernandez-Ruiz et al., 2011; McDougle and Taylor, 2019), in our initial study we interpreted these data to mean that savings relied solely on time-consuming explicit strategies. Multiple studies have reached similar conclusions (Haith et al., 2015; Huberdeau et al., 2019; Morehead et al., 2015; Avraham et al., 2021; Huberdeau et al., 2015), suggesting that the implicit learning system is not improved by multiple exposures to a rotation.

However, the competition theory provides an alternate possibility: changes in the implicit learning system may occur but are hidden because of competition with explicit learning. To show this unintuitive phenomenon, we fit the competition model to individual participant behavior under the assumption that low preparation time trials relied solely on implicit adaptation, but high preparation time trials relied on both implicit and explicit adaptation. The model generated implicit (Figure 6D, blue) and explicit (Figure 6D, magenta) states that tracked the behavior well on high preparation time trials (Figure 6D, solid black line) and also low preparation time trials (Figure 6D, dashed black line).

Next, we considered the implicit and explicit error sensitivities estimated by the model, which are commonly linked to changes in learning rate (Coltman et al., 2019; Mawase et al., 2014; Lerner et al., 2020; Albert et al., 2021; Herzfeld et al., 2014). The model unmasked a surprising possibility: even though savings was observed only on high preparation time trials, but not low preparation time trials (Figure 6B&C), the model suggested that both the implicit and explicit systems exhibited a statistically significant increase in error sensitivity (Figure 6D, right; two-way rm-ANOVA, within-subject effect of exposure number, F(1,13)=10.14, p = 0.007, ηp2=0.438; within-subject effect of learning process, F(1,13)=0.051, p = 0.824, ηp2=0.004; exposure by learning process interaction, F(1,13)=1.24, p = 0.285).

In contrast, a model where the implicit system adapted to SPEs as opposed to target errors (the independence model) suggested that only the explicit system exhibited a statistically significant increase in error sensitivity (Figure 6E; two-way rm-ANOVA, learning process (i.e. implicit vs explicit) by exposure interaction, F(1,13)=7.016, p = 0.02; significant interaction followed by one-way rm-ANOVA across exposures: explicit system, F(1,13)=9.518, p = 0.009, ηp2=0.423; implicit system, F(1,13)=2.328, p = 0.151, ηp2=0.152).

In summary, when we reanalyzed our earlier data, the competition and independence theories suggested that our data could be explained by two contrasting hypothetical outcomes. If we assumed that implicit and explicit systems were independent, then only explicit learning contributed to savings, as we concluded in our original report. However, if we assumed that the implicit and explicit systems learned from the same error (competition model), then both implicit and explicit systems contributed to savings. Which interpretation is more parsimonious with measured behavior?

Competition with explicit strategy can alter measurement of implicit learning

The idea that implicit error sensitivity can increase without any change in implicit learning rate (Figure 6) is not intuitive. What the competition model suggests is that when the explicit system increases its learning rate as in Figure 6D, it leaves a smaller target error to drive implicit learning. However, despite this decrease in target error, low preparation time learning was similar on Days 1 and 2 (Figure 6B). Because we assumed that low preparation time learning relied on the implicit system, the competition theory required that the implicit system must have experienced an increase in error sensitivity to counterbalance the reduction in target error magnitude. In other words, though increase in implicit error sensitivity did not increase total implicit learning, it still contributed to savings. That is, had implicit error sensitivity remained the same, low preparation time learning would decrease on Day 2, and less overall savings would occur.

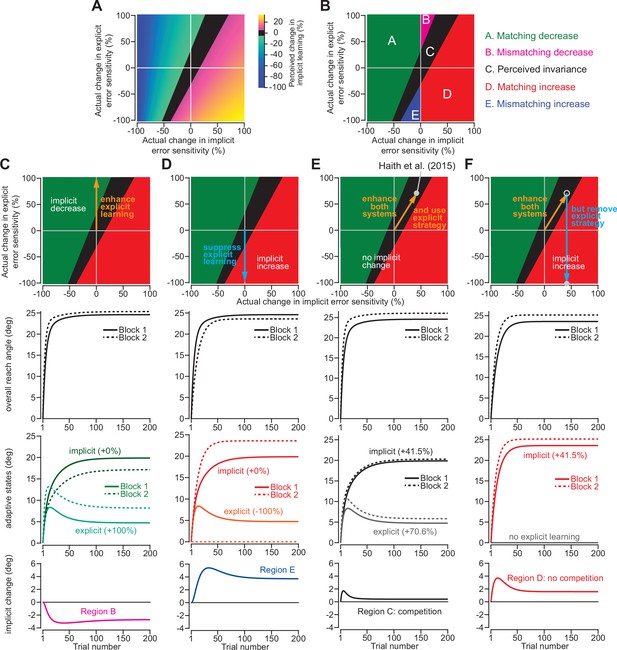

To understand how our ability to detect changes in implicit adaptation can be altered by explicit strategy we constructed a competition map (Figure 7A). Imagine that we want to compare behavior across two timepoints or conditions. Figure 7A shows how changes in implicit error sensitivity (x-axis) and explicit error sensitivity (y-axis) both contribute to measured implicit aftereffects (denoted by map colors), based on the competition equation (note that the origin denotes a 0% change in error sensitivity relative to Day 1 adaptation in Haith et al., 2015). The left region of the map (cooler colors) denotes combinations of implicit and explicit changes that decrease implicit adaptation. The right region of the map (hotter colors) denotes combinations that increase implicit adaptation. The middle black region represents combinations that manifest as a perceived invariance in implicit adaptation ( < 5% absolute change in implicit adaptation).

Changes in implicit adaptation depend on both implicit and explicit error sensitivity.

(A) Here we depict the competition map. The x-axis shows change in implicit error sensitivity between reference and test conditions. The y-axis shows change in explicit error sensitivity. Colors indicate the percent change in implicit adaptation (measured at steady-state) from the reference to test conditions. Black region denotes an absolute change less than 5%. The map was constructed with Equation 8. (B) The map can be described in terms of five different regions. In Region A (matching increase), implicit error sensitivity and total implicit adaption both increase in test condition. Region D is same, but for decreases in error sensitivity and total adaptation. In Region B (mismatching decrease), implicit learning decreases though its error sensitivity is higher or same. In Region E (mismatching increase), implicit learning increases though its error sensitivity is lower or same. Region C shows a perceived invariance where implicit adaptation changes less than 5%. (C) Row 1: effect of enhancing explicit learning. Row 2: total learning increases. Row 3: implicit and explicit learning shown in Blocks 1 and 2, where only difference is 100% increase in explicit error sensitivity. Row 4: change in implicit learning (Block 2–1). (D) Row 1: effect of suppressing explicit learning. Row 2: total learning decreases. Row 3: implicit and explicit learning shown in Blocks 1 and 2, where explicit error sensitivity decreases 100%. Row 4: implicit learning change (Block 2–1). (E) Row 1: model simulation for Haith et al., 2015. Row 2: Total learning increases. Row 3: implicit and explicit learning during Blocks 1 and 2 where implicit error sensitivity increases by 41.5% and explicit error sensitivity increases by 70.6%. Row 4: negligible change in implicit learning (Block 2–1). (F) Same as in E except here explicit strategy is suppressed during Blocks 1 and 2.

-

Figure 7—source code 1

Figure 7 analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig7-code1-v2.zip

This map defines several distinct areas (Figure 7B). Region A denotes a ‘matching’ decrease between implicit adaptation and error sensitivity; total implicit learning will decline across two separate learning periods due to a reduction in implicit error sensitivity. Region D is similar. Here, total implicit learning will increase across two separate learning periods due to an increase in implicit error sensitivity.

The other regions show less intuitive cases. In Region B, there is a ‘mismatching’ change in total implicit learning and implicit error sensitivity; here total implicit learning decreases even though implicit error sensitivity has increased or stayed the same. Likewise, in Region E, total implicit learning will increase across two separate learning periods, though implicit error sensitivity has decreased or stayed the same.

Indeed, we have already described these cases in Figure 2. For example, by enhancing the explicit system via coaching (Figure 2A–C), implicit learning decreased. This scenario is equivalent to moving up the y-axis of the map (Figure 7C, top). The same implicit system will decrease its output (Figure 7C, bottom) when normal levels of explicit strategy are increased (Figure 7C, middle). On the other hand, suppressing explicit strategy by gradually increasing the rotation (Figure 2D–G), or limiting reaction time (Figure 3N&O), increased implicit learning without changing any implicit learning properties. This scenario is equivalent to moving down the y-axis of the competition map (Figure 7D, top). The same implicit system will increase its output (Figure 7D, bottom) when normal levels of explicit strategy are then suppressed (Figure 7D, middle).

Now, let us consider the savings experiment in Figure 6. The competition theory predicted (Figure 6D) that explicit error sensitivity increased by approximately 70.6% during the second exposure, whereas the implicit system’s error sensitivity increased by approximately 41.5% (Figure 7E, middle). These changes in implicit and explicit adaptation describe a single point in the competition map, denoted by the gray circle in Figure 7E (top). This experiment occupies Region C, which indicates that despite the 41.5% increase in implicit error sensitivity, the total implicit learning will increase by less than 5% (Figure 7E, bottom). In other words, the competition model suggests the possibility that implicit learning improved between Exposures 1 and 2, but this change was hidden by a dramatic increase in explicit strategy (which suppressed implicit learning during Exposure 2).

To test this prediction, we can suppress explicit adaptation, thus eliminating competition (Figure 7F, middle). Such an intervention would move our experiment from Region C to Region D (Figure 7F, top) where we will observe greater change in the implicit process (Figure 7D, bottom). We examined this possibility in a new experiment.

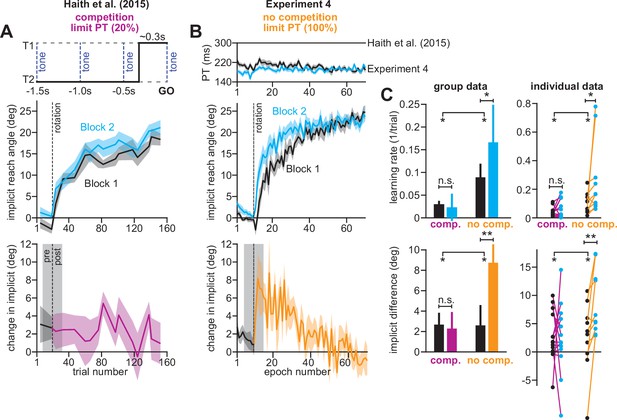

Savings in implicit learning is unmasked by suppression of explicit strategy

In Exp. 4 (Figure 8), participants experienced two 30° rotations, separated by washout trials with veridical feedback (mean reach angle over last three washout cycles was 0.55 ± 0.47°, one-sample t-test against zero, t(9)=1.16, p = 0.28; not shown in Figure 8). To suppress explicit strategy, we restricted reaction time on every trial, which in Exp. 3, greatly reduced explicit learning (Figure 3N; re-aiming decreases from 12° to about 2°). Under these reaction time constraints, participants exhibited reach latencies around 200ms (Figure 8B, top).

Removing explicit strategy reveals savings in implicit adaptation.

(A) Top: Low preparation time (Low PT) trials in Haith et al., 2015 used to isolate implicit learning. Middle: learning during Low PT in Blocks 1 and 2. Bottom: difference in Low PT learning between Blocks 1 and 2. (B) Similar to A, but here (Experiment 4) explicit learning was suppressed on every trial, as opposed to only 20% of trials. To suppress explicit strategy, we restricted reaction time on every trial. The reaction time during Blocks 1 and 2 is shown at top. At middle, we show how participants adapted to the rotation under constrained reaction time. At bottom, we show the difference between the learning curves in Blocks 1 and 2. These two periods were separated by washout cycles with veridical feedback (not shown). (C) Here, we measured savings in Haith et al. (20% of trials had reaction time limit) and Experiment 3 (100% of trials had reaction time limit). Top row: we quantify savings by fitting an exponential curve to each learning curve. Data are the rate parameter associated with the exponential. Left column shows group-level data (median). Right column shows individual participants. Bottom row: we quantify savings by comparing how Blocks 1 and 2 differed before perturbation onset (black), and after perturbation onset (purple and yellow). At left, error bars show mean ± SEM. At right, individual participants are shown. Error bars in A and B indicate mean ± SEM. Statistics in C show mixed-ANOVA (exposure number is within-subject factor, experiment type is between-subject factor). Significant interactions were observed both in rate (top) and angular (bottom) savings measure. Follow-up simple main effects were assessed via one-way repeated-measures ANOVA. Statistical bars where two sets of asterisks appear (at left and right) indicate interactions. Statistical bars with a centered set show simple main effects. Statistics: n.s. means p > 0.05, *p < 0.05, **p < 0.01.

-

Figure 8—source code 1

Figure 8 data and analysis code.

- https://cdn.elifesciences.org/articles/65361/elife-65361-fig8-code1-v2.zip

While occasionally limiting preparation time prevented savings in Haith et al., 2015 (Figure 8A, low preparation time on 20% of trials), inhibiting strategy use on every trial in Experiment 4 yielded the opposite outcome (Figure 8B). Low preparation time learning rates increased by more than 80% in Experiment 4 (Figure 8C top; mixed-ANOVA exposure number by experiment type interaction, F(1,22)=5.993, p = 0.023; significant interaction followed by one-way rm-ANOVA across exposures: Haith et al. with F(1,13)=1.109, p = 0.312, ηp2=0.079; Experiment 4 with F(1,9)=5.442, p = 0.045, ηp2=0.377). Statistically significant increases in reach angle were detected immediately following rotation onset in Experiment 4 (Figure 8B, bottom), but not our earlier data (Figure 8C, bottom; mixed-ANOVA exposure number by experiment interaction, F(1,22)=4.411, p = 0.047; significant interaction followed by one-way rm-ANOVA across exposures: Haith et al. with F(1,13)=0.029, p = 0.867, ηp2=0.002; Experiment 4 with F(1,9)=11.275, p = 0.008, ηp2=0.556).