Nonlinear circuits for naturalistic visual motion estimation

Abstract

Many animals use visual signals to estimate motion. Canonical models suppose that animals estimate motion by cross-correlating pairs of spatiotemporally separated visual signals, but recent experiments indicate that humans and flies perceive motion from higher-order correlations that signify motion in natural environments. Here we show how biologically plausible processing motifs in neural circuits could be tuned to extract this information. We emphasize how known aspects of Drosophila's visual circuitry could embody this tuning and predict fly behavior. We find that segregating motion signals into ON/OFF channels can enhance estimation accuracy by accounting for natural light/dark asymmetries. Furthermore, a diversity of inputs to motion detecting neurons can provide access to more complex higher-order correlations. Collectively, these results illustrate how non-canonical computations improve motion estimation with naturalistic inputs. This argues that the complexity of the fly's motion computations, implemented in its elaborate circuits, represents a valuable feature of its visual motion estimator.

https://doi.org/10.7554/eLife.09123.001eLife digest

Many animals have evolved the ability to estimate the speed and direction of visual motion. They use these estimates to judge their own motion, so that they can navigate through an environment, and to judge how other animals are moving, which allows them to avoid predators or detect prey.

In the 1950s, a physicist and a biologist used measurements of beetle behavior in response to visual stimuli to develop a model for how the brain estimates motion. The model became known as the Hassenstein-Reichardt correlator (HRC). The HRC and related models accurately predict the behavioral and neural responses of insects and mammals to many types of motion stimuli.

However, there are visual stimuli that generate motion percepts in fruit flies (and humans) that cannot be accounted for by the HRC. Are these differences between real brains and the HRC simply imperfections in visual circuits, whose neurons cannot perform idealized mathematical operations, or are these deviations intentional, somehow improving motion estimates? In other words: are the observed deviations a bug or a feature of visual circuits?

To address this question, Fitzgerald and Clark evaluated how different models of motion detection performed when presented with natural scenes. Natural scenes are fundamentally different from most stimuli used in lab, since they contain a rich set of regularities that are not present in simple stimuli. Fitzgerald and Clark compared the ability of the HRC, along with new, more general models, to estimate the speed and direction at which images moved across a screen. This revealed that many models could out-perform the HRC by taking advantage of regularities in natural scenes. Those models that were tuned to perform well with natural scenes could also predict the paradoxical motion percepts that were not predicted by the HRC. This suggests that visual circuits may have evolved to perform well with natural inputs, and the paradoxical motion percepts represent a feature of the real circuit, rather than a bug.

Models that performed well with natural inputs treated light and dark visual information differently. This different treatment of light and dark is a property of most visual systems, but not of the HRC or related models. In the future, these models of motion processing may help us understand how biological details of the fruit fly's visual circuits help it to estimate motion.

https://doi.org/10.7554/eLife.09123.002Introduction

A major goal in neuroscience is to understand how the brain computes behaviorally relevant stimulus properties from streams of incoming sensory data (Sejnowski et al., 1988). Visual motion guides behaviors across the animal kingdom. To navigate, many vertebrates and invertebrates use visual data to estimate the velocity of full field motion, and they use that estimate to judge their motion with respect to their environment (Sperry, 1950; Kalmus, 1964; Reichardt and Poggio, 1976; Orger et al., 2000). Spatially localized motion perception (Hubel and Wiesel, 1962; Barlow and Hill, 1963; Buchner, 1976) is also important, as it can indicate the presence of predators or prey in the environment (Reichardt et al., 1983; Gabbiani et al., 1999; Nordström et al., 2006; Zhang et al., 2012), and spatial velocity gradients allow animals to judge relative distances (Rogers and Graham, 1979; Srinivasan et al., 1991; Kral, 2003; Pick and Strauss, 2005). In principle, different algorithms could be used to estimate different types of motion. However, data suggest that many animals compute local motion over an array of spatially localized elementary motion detectors, or EMDs, and then differentially pool those signals for use in different behaviors and neural operations (Hubel and Wiesel, 1962; Barlow and Hill, 1963; Buchner, 1976; Britten et al., 1992; Gabbiani et al., 1999; Franz and Krapp, 2000; Rust et al., 2006).

The Hassenstein-Reichardt correlator (HRC) was introduced nearly sixty years ago to model the EMD underlying the beetle's optomotor response (Hassenstein and Reichardt, 1956). It has since provided numerous insights into motion-guided behaviors across a variety of insect species. The HRC's successes are most striking in flies, where the HRC accurately predicts a wide variety of behavioral and neural responses (Götz, 1968; Buchner, 1976; Egelhaaf and Borst, 1989; Haag et al., 2004), and even adaptation to stimulus statistics (Borst et al., 2005). The HRC's importance also extends to primates and vertebrates, where the EMDs are often described in terms of the motion energy model (Adelson and Bergen, 1985). In particular, although the HRC and motion energy models differ in terms of their intuition and neuronal bases, both models rely on the same mathematical fact about moving visual stimuli—motion causes pairs of spatially separated points to become correlated when a stimulus moves from one location towards the other (Adelson and Bergen, 1985; van Santen and Sperling, 1985). A simple algebraic identity shows that the HRC and motion energy models are computationally equivalent.

Research on Drosophila's motion detection system has progressed quickly in recent years. With an influx of novel genetic, anatomical, and physiological tools, Drosophila researchers are able to perform experiments that have revealed an intricate neural circuit whose details were not anticipated. For example, separate pathways process the motion of light and dark moving edges (Joesch et al., 2010; Clark et al., 2011; Maisak et al., 2013), and different neurons within these pathways coordinate the motion response depending on the velocity of motion (Ammer et al., 2015). Furthermore, connectomic analysis has revealed that more spatial and temporal channels converge onto the fly's motion computing neurons than had been predicted by the HRC's two-input architecture (Takemura et al., 2013). Going forward, it is critical that the field discovers which of these circuit details are computationally relevant and which are not. Since many of these details go beyond the HRC's premise, we must consider alternate theories if we hope to understand how circuit details contribute to motion estimation.

A large body of theoretical and experimental work supports the hypothesis that visual systems are tailored for functionality in the animal's natural behavioral context (Simoncelli and Olshausen, 2001). For example, photoreceptors adapt effectively across the ecological range of light levels (Juusola and Hardie, 2001), the excess number of OFF vs ON retinal ganglion cells matches the excess information of dark vs light contrasts in natural images (Ratliff et al., 2010), and several learning algorithms predict receptive fields similar to early cortical neurons when applied to natural images (Olshausen and Field, 1996; Bell and Sejnowski, 1997). These examples are special cases of the general hypothesis that the early visual system provides an efficient code for the natural visual environment, and recent research suggests that efficient coding accounts for certain aspects of higher-level coding and perception as well (Tkačik et al., 2010; Hermundstad et al., 2014; Yu et al., 2015).

Several recent studies have established connections between the biological algorithms used for visual motion estimation and the statistical demands of naturalistic motion estimation. Natural stimuli are intricately structured and light–dark asymmetric (Geisler, 2008), and a variety of low and high order correlations characterize motion in such an environment. Although the HRC and motion energy models only respond to pairwise correlations in their inputs (Adelson and Bergen, 1985; van Santen and Sperling, 1985), the Bayes optimal visual motion estimator also incorporates a variety of higher-order correlations of both even and odd order (Potters and Bialek, 1994; Fitzgerald et al., 2011). Accordingly, certain visual stimuli that contain only higher-order correlations induce motion percepts in both vertebrates and insects (Chubb and Sperling, 1988; Quenzer and Zanker, 1991; Zanker, 1993; Orger et al., 2000; Hu and Victor, 2010; Clark et al., 2014), and theoretical work shows that the correlations that characterize these stimuli can also improve motion estimation in natural environments (Clark et al., 2014). This demonstrates that neither the HRC nor the motion energy model can account for the totality of experimentally observed motion percepts and suggests that departures from these canonical models might improve motion estimation accuracy. Relatively little is known about the neural basis of these higher-order motion percepts, although several studies have suggested intriguing commonalities across insect and primate species (Clark et al., 2014; Nitzany et al., 2014).

Here we investigate whether the computational demands imposed by accurate motion estimation in natural environments can illuminate the unexpected details of Drosophila's motion estimation circuit or account for non-Reichardtian motion perception in flies. We study a sequence of five computational models, each of which considers a conceptually new aspect of the motion estimation problem. Since each model succeeds in improving estimation accuracy, these results provide a range of nonlinear circuit mechanisms that flies and other animals might incorporate into their motion estimators. We describe how observed elements of Drosophila's motion estimation circuitry could support such computations (Table 1). Importantly, four of the five models also predict the signs and approximate magnitudes of known non-Reichardtian motion percepts in flies. Since the models were tuned exclusively for estimation accuracy, these results support the view that non-Reichardtian motion percepts probe ethologically relevant aspects of biological motion estimators. More generally, our results posit normative interpretations for some unexpected aspects of the fly's motion estimation circuit and behavior and suggest that non-Reichardtian aspects of fly circuitry and behavior might be closely linked through the statistics of natural scenes.

The different models used in this paper, experimental results that support each model, and references for those results

| Model | Supporting evidence |

|---|---|

| Front-end nonlinearity | • Photoreceptors show nonlinear responses to contrast changes (Laughlin, 1989; Juusola and Hardie, 2001; Juusola and Hardie, 2001; van Hateren and Snippe, 2006) |

| • Some neurons in the early visual system have nonlinear responses that make their output signals nearly uniform (Laughlin, 1981) | |

| Weighted 4-quadrant model | • Visual processing is divided early into ON and OFF channels (Joesch et al., 2010; Clark et al., 2011; Behnia et al., 2014; Meier et al., 2014; Strother et al., 2014) |

| • The two output channels (T4/T5) are sensitive to light and dark edges (Maisak et al., 2013), but their inputs are incompletely rectified (Behnia et al., 2014) | |

| • Stimuli targeting the four quadrants are differentially represented in neural substrates (Clark et al., 2011; Joesch et al., 2013) | |

| Non-multiplicative nonlinearity | • Pure multiplication is not a trivial neural operation (Koch, 2004) |

| • Inputs to T4/T5 are nonlinearly transformed (Behnia et al., 2014), which also contributes to the biologically implemented non-multiplicative nonlinearity | |

| Unrestricted nonlinearity | • The direction-selective neurons T4 receive inputs from more than two types of neurons (Takemura et al., 2013; Ammer et al., 2015) |

| • T4 receives inputs from both its major input channels at overlapping points in space (Takemura et al., 2013) | |

| Extra input nonlinearity | • The direction-selective neuron T4 receives inputs from more than two discrete retinotopic locations (Takemura et al., 2013) |

Results

Flies incorporate motion signals that the HRC neglects

The HRC is the dominant model of motion computation in flies and other insects. In this paper we describe several generalizations of the HRC, but it is helpful to first review this canonical model. The HRC comprises three stages of processing. First, two different temporal filters (here, a low-pass filter and a high-pass filter ) are applied to each of two spatially filtered visual input streams (Figure 1A, ‘Materials and methods’). These four filtered signals are then paired and multiplied (Figure 1A). Finally, the HRC takes the difference between the two multiplied signals to obtain a mirror anti-symmetric motion estimator (Figure 1A). Because the HRC combines its two input channels via a multiplication operation, the average output of the HRC depends only on 2-point correlations in the visual stimulus. We thus refer to the HRC as a 2-point correlator, and we will return to this mathematical characterization of the HRC repeatedly throughout this work.

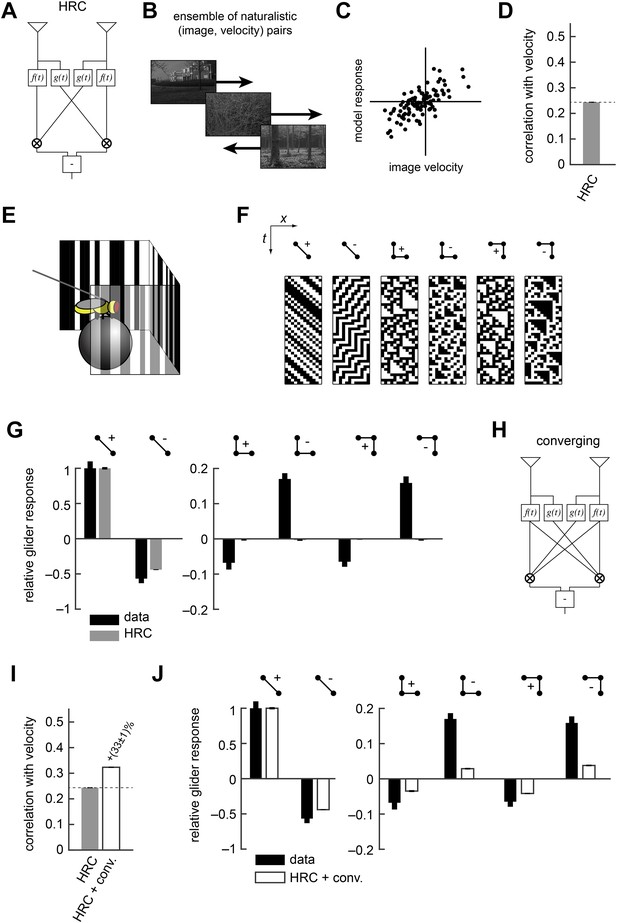

The Hassenstein-Reichardt correlator (HRC) model is an incomplete description of Drosophila's motion estimator.

(A) Diagram of the HRC model. (B) We assessed motion estimation performance across an ensemble of naturalistic motions, each of which consisted of a natural image (van Hateren and van der Schaaf, 1998) and a velocity chosen from a normal distribution. (C) We quantified model accuracy by comparing the model response to the true velocity using the mean squared error. (D) We summarized the error with the correlation coefficient between the model output and the true velocity. (E) In previous work (Clark et al., 2014), we used a panoramic display and spherical treadmill to measure the rotational responses of Drosophila to visual stimuli. (F) We presented flies with binary stimuli called gliders (Hu and Victor, 2010), which imposed specific 2-point and 3-point correlations (Clark et al., 2014). (G) Flies turned in response to 3-point glider stimuli, but these responses cannot be predicted by the standard HRC. (H) Diagram of the converging 3-point correlator, which is designed to detect higher-order motion signals like those found in 3-point glider stimuli. (I) Adding the converging 3-point correlator to the HRC improved motion estimation performance with naturalistic inputs. We optimized weighting coefficients to minimize the mean squared error over the ensemble of naturalistic motions and used cross-validation to protect against over-fitting. (J) This model predicted that Drosophila would weakly turn in response to 3-point glider stimuli.

No motion estimator is perfect for every stimulus, and this paper explores the hypothesis that evolution has tuned Drosophila's motion estimator for visual experiences that are likely to result from ordinary behavior in natural environments (Appendix 1). We assessed the accuracy of the HRC and other motion estimators by approximating naturalistic motion as the rigid translation of natural images (Clark et al., 2014), with a velocity distribution that mimicked Drosophila's natural behavior (Figure 1B, ‘Materials and methods’) (Katsov and Clandinin, 2008). We spatiotemporally filtered the input signals to simulate the responses of two neighboring photoreceptors (‘Materials and methods’). We quantified the performance of each model as the mean squared error between the input velocity and model output. However, we report each model's accuracy as the correlation coefficient between its output and the true velocity (Figure 1C), an intuitive metric that is equivalent to the mean squared error for correctly scaled model outputs (‘Materials and methods’). In isolation, the local HRC was weakly correlated with the velocity of motion (Figure 1D). Although the HRC's performance can be improved by averaging over space and time (Dror et al., 2001; Clark et al., 2014), this study explores how alternate nonlinear processing can improve motion estimation accuracy without sacrificing spatial or temporal resolution (Clark et al., 2014).

Researchers can probe a fly's motion estimate by measuring its behavioral optomotor turning response (Hassenstein and Reichardt, 1956; Götz and Wenking, 1973; Buchner, 1976; Reichardt and Poggio, 1976). We previously measured optomotor responses from flies walking on a spherical treadmill by recording their turning responses to various visual stimuli (Figure 1E) (Clark et al., 2014). We emphasized binary stimuli called gliders (Hu and Victor, 2010) (Figure 1F), which enforce spatiotemporal correlations to interrogate the fly's motion estimation algorithm. For example, 2-point gliders contain only 2-point correlations (first two stimuli, Figure 1F). Drosophila turned in response to these stimuli (Clark et al., 2014) (black bars, left, Figure 1G), and the HRC correctly predicted that flies would respond to both positive and negative 2-point correlations (gray bars, left, Figure 1G). On the other hand, 3-point gliders contain 3-point correlations without 2-point correlations (last four stimuli, Figure 1F). These stimuli generated motion responses in flies (black bars, right, Figure 1G) that the HRC could not explain (gray bars, right, Figure 1G). Thus, behavioral responses to glider stimuli show that the HRC is an incomplete description of fly motion estimation and provide a useful benchmark for evaluating alternate models.

In this study, we tune our models to optimize motion estimation accuracy, rather than to fit the behavioral data, for two main reasons. First, we want to explore the hypothesis that Drosophila's glider responses follow from performance optimization within biologically plausible circuit architectures. Second, we seek models that will generalize well across visual stimuli, and the measured glider responses under-constrain possible motion estimation models. It's useful to illustrate our procedure with a simple example. The HRC does not account for 3-point glider responses because it is insensitive to 3-point correlations. Nevertheless, 3-point correlations are present in natural stimuli (Clark et al., 2014; Nitzany and Victor, 2014), and their use might facilitate accurate motion estimation. We can explore this hypothesis by summing the HRC with a motion estimator designed to respond specifically to 3-point correlations. For instance, the mirror anti-symmetric ‘converging’ 3-point correlator multiplies one high-pass filtered signal with two low-pass filtered signals (Figure 1H) and mimics the converging structure present in certain glider stimuli (last two stimuli, Figure 1F). We tune the model for motion estimation accuracy by choosing the weights of the HRC and the converging 3-point correlator to minimize the mean squared error (‘Materials and methods’). The resulting model is more accurate than the HRC (Figure 1I) and it predicts that flies should respond to glider stimuli in the observed directions (Figure 1J, ‘Materials and methods’). Nevertheless, this simple model underestimates 3-point turning magnitudes (Figure 1J), indicating a discrepancy between the fly's motion estimator and this performance-optimized model.

In this study, we apply this same basic model building procedure to a series of increasingly general model architectures. There are four benefits to this approach. First, each model incorporates a type of computation that was neglected by earlier models. Thus, we can compare model accuracies to quantify how important various computations are for naturalistic motion estimation. Second, each model has a distinct biological interpretation in terms of Drosophila's motion estimation circuit (Table 1). This allows us to enumerate many directions for future experimental and computational research. Third, this set of models reveals several distinct principles of accurate naturalistic motion estimators, yet no single model illustrates every principle. Finally, by comparing the glider predictions of each model to behavioral data, we can gain insight into which principles underlie Drosophila's known glider responses.

Nonlinear preprocessing of HRC inputs improves estimation but poorly predicts responses to gliders

The HRC correlates pairs of photoreceptor signals (Figure 1A). We previously assumed that each photoreceptor's response was generated from incoming contrast signals through linear spatiotemporal filtering. However, real photoreceptors are linear only over a limited range of inputs (Laughlin, 1981; Juusola and Hardie, 2001) (Table 1). Our first model thus modifies the HRC by allowing the photoreceptor responses to become nonlinear (Figure 2A). More specifically, we consider models in which a static nonlinearity transforms the filtered contrast signals before a standard HRC is applied to the two input streams (Figure 2A, ‘Materials and methods’). Since the nonlinearity occurs before the HRC, we refer to this model as the front-end nonlinearity model. By nonlinearly transforming the contrast signals, the front-end nonlinearity model is able to reshape natural sensory statistics. In particular, linear photoreceptor signals inherit complex non-Gaussian statistics from their natural inputs (Figure 2B), but front-end nonlinearities (Figure 2C) can produce transformed signals with alternate statistics (Figure 2D, ‘Materials and methods’). Thus, optimal front-end nonlinearity models should reshape natural statistics into those that best suit the HRC. Previous studies have already demonstrated example front-end nonlinearity models that improve naturalistic motion processing by the HRC (Dror et al., 2001; Brinkworth and O'Carroll, 2009). Here we provide new theoretical insight into these improvements and their consequences for glider responses.

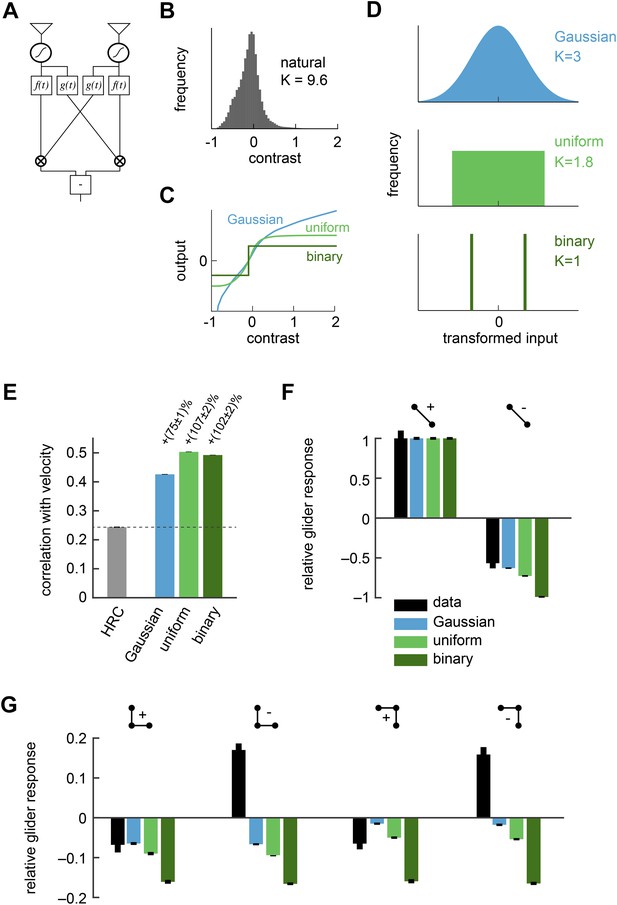

Front-end nonlinearities improved naturalistic motion estimation but did not reproduce the psychophysical results.

(A) Diagram of the front-end nonlinearity model. The nonlinearity occurs after the spatiotemporal filtering of photoreceptors but before the temporal filtering of the HRC. (B) The distribution of contrast signals after photoreceptor filtering had a kurtosis of 9.6. The kurtosis of unfiltered pixels in the image database was 7.8. (C) Three different nonlinearities that transformed this input distribution into a Gaussian distribution, a uniform distribution, and a binary distribution. (D) After these transformations, the kurtosis of the contrast signal was reduced to 3, 1.8, and 1, respectively. (E) Each front-end nonlinearity model improved the HRC's estimation accuracy, and uniform output signals worked best. (F, G) The front-end nonlinearity models reproduced the sign of the negative 2-point glider psychophysical responses but did not reproduce the pattern of psychophysical responses to 3-point gliders.

Although the statistics of natural images are complicated, the mean squared error between the HRC's output and the velocity of motion depends only on a few statistical quantities. Since the HRC is a 2-point correlator, the mean velocity signal decoded by an HRC is determined by the second-order statistics of the image ensemble (Dror et al., 2001). The variance of the motion signal comes from the square of a quadratic signal, and thus the noise statistics of the HRC depend on the fourth-order statistics of the image ensemble (Appendix 2). If the image ensemble is spatially uncorrelated, the situation simplifies further and the correlation between the estimated and true image velocity is determined entirely by the standardized fourth central moment of the input streams, a quantity known as kurtosis (Appendix 3). A larger kurtosis results in a larger error in the motion estimate. Note that some authors use ‘kurtosis’ to refer to the ‘excess kurtosis’, which shifts kurtosis values such that the Gaussian distribution has zero excess kurtosis. This shift is not relevant for our purposes. Because large positive contrasts are relatively probable, naturalistic inputs are highly kurtotic (kurtosis = 9.6 for the spatiotemporal filtering in our simulations) and are thus expected to hinder HRC performance (Figure 2B).

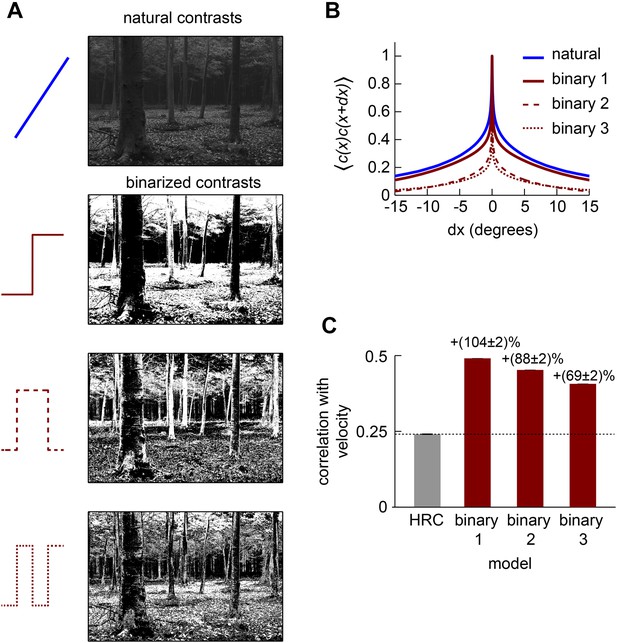

The Gaussian, uniform, and symmetric Bernoulli distributions have much lower kurtosis values (kurtosis = 3.0, 1.8, 1.0, respectively, Figure 2D). In fact, the symmetric Bernoulli distribution has the lowest kurtosis of any probability distribution (DeCarlo, 1997). When we transformed the HRC's inputs to have these statistics (‘Materials and methods’), we found that each nonlinearity substantially improved the accuracy of the HRC (Figure 2E). The contrast equalizing nonlinearity, which produces uniform outputs, performed best and also plays a prominent role in efficient coding theory (Laughlin, 1981). It is interesting that contrast equalization improved the accuracy of the HRC more than binarization (Figure 2E), even though it produced outputs with greater kurtosis. The reason for this is that natural images are spatially correlated, and the accuracy of the HRC over a general image ensemble depends on the ensemble's spatial correlation structure (Appendix 2). Binarization attenuated spatial correlations more strongly than contrast equalization over the natural image ensemble (Figure 2—figure supplement 1), and spatial correlations can enhance the performance of the HRC (Appendices 4, 5). Designing a nonlinearity that optimally sculpts the correlation structure of natural images is not simple and goes beyond the scope of this study.

Each front-end nonlinearity model is sensitive to a variety of higher-order correlations (Appendix 6). We thus tested whether accurate front-end nonlinearity models would predict Drosophila's glider response pattern. However, each front-end nonlinearity model performed poorly at this task (Figure 2F,G). None of the three models predicted that Drosophila would invert its response to positive and negative 3-point gliders (Figure 2G), even though they predicted that the 3-point glider responses would be nonzero. The simplest explanation for this observation is that the front-end nonlinearity models responded to fourth-order correlations that are common to the stimuli, rather than the third-order correlations that defined the glider stimuli and primarily drove the experimental response (Clark et al., 2014). Mechanistically, this result follows from the fact that the nonlinearities that reduced kurtosis (Figure 2C) were not strongly asymmetric around zero contrast (Appendix 6). The binarizing front-end nonlinearity model also failed to predict that Drosophila would respond less to negative 2-point glider stimuli than positive 2-point glider stimuli (Figure 2F). Since this effect was correctly predicted by the standard HRC (Figure 1G), this observation shows that accurate front-end nonlinearity models can distort the processing of 2-point correlations. Although the front-end nonlinearity model did not explain the phenomenon of fly glider perception, future work should investigate whether its merits make it functionally relevant for motion processing in other contexts or species.

Separating ON and OFF signals improves motion estimation and predicts responses to gliders

Instead of a front-end nonlinearity, Drosophila could use an alternative non-Reichardtian motion estimation strategy that reflects natural sensory statistics, without necessarily requiring nonlinear preprocessing. Previous computational analyses show that motion estimation strategies that distinguish light and dark information can enhance motion processing with natural inputs (Fitzgerald et al., 2011; Clark et al., 2014; Nitzany and Victor, 2014), and recent experiments indicate that flies use separate channels to process the motion of light and dark edges (Joesch et al., 2010; Clark et al., 2011; Behnia et al., 2014; Clark et al., 2014; Meier et al., 2014; Strother et al., 2014) (Table 1). Our next model explores the hypothesis that Drosophila segregates ON and OFF signals in order to facilitate naturalistic motion estimation (Clark et al., 2014) (Figure 3A, ‘Materials and methods’). There are four ways to pair the ON and OFF components of the two filtered signals that enter the HRC's multiplier. For example, one possibility is to pair the ON component of the low-pass filtered signals with the OFF component of the high-pass filtered signal. Since each pairing restricts the HRC's multiplier to a single quadrant of the Cartesian plane, we refer to these four signals as HRC-quadrants. If the quadrants are summed with equal weights, then this model is mathematically identical to the HRC (Hassenstein and Reichardt, 1956; Clark et al., 2011). Unequal weighting coefficients enable the motion estimator to prioritize some quadrants over others, and here we select quadrant weightings that minimize the mean squared error between the model output and velocity (Figure 3B, ‘Materials and methods’). More generally, we refer to any model that linearly combines the four HRC-quadrants as a weighted 4-quadrant model. The precise manner in which the four HRC-quadrants might map onto circuitry remains unclear; we do not suggest there exists separate circuitry for each quadrant. For instance, studies have identified only two motion-processing channels in the Drosophila brain, which might suggest that the fly only uses a subset of the quadrants (Eichner et al., 2011; Joesch et al., 2013; Maisak et al., 2013). On the other hand, each channel appears imperfectly selective for light vs dark signals (Behnia et al., 2014), which in principle enables these two channels to access all four quadrants (Table 1).

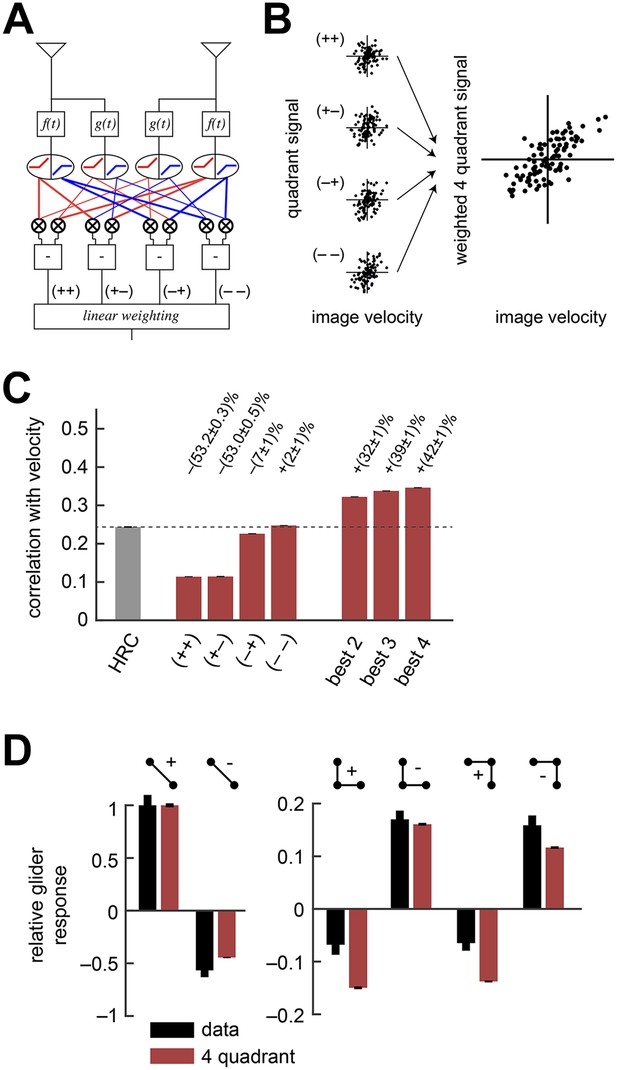

The weighted 4-quadrant model improved estimation performance and reproduced the directionality of psychophysical results.

(A) Diagram of the weighted 4-quadrant model. Similar to ON/OFF processing in the visual system, the weighted 4-quadrant model splits the four differentially filtered signals into positive and negative components. As in the HRC, these component signals are paired, multiplied, and subtracted to produce four mirror anti-symmetric signals. We refer to these signals as HRC-quadrants. The model output is a weighted sum of the quadrant signals. We identify quadrants by whether they respond to the positive or negative components of each filtered signal and denote the four quadrants as (+ +), (+ −), (− +), and (− −). In this notation, the first index refers to the sign of the low-pass filtered signal (emanating from ), and the second refers to the high-pass filtered signal (emanating from ). (B) We measured the response of each quadrant to naturalistic motions and chose the quadrant weightings to minimize the mean squared error between the model output and the true velocity. (C) Comparison of the estimation performance of individual quadrants, multiple quadrants, and the HRC. The best two quadrants were (− −) and (− +); the best three also included (+ −). (D) The performance-optimized weighted 4-quadrant model reproduced the signs and approximate magnitudes of the psychophysical results.

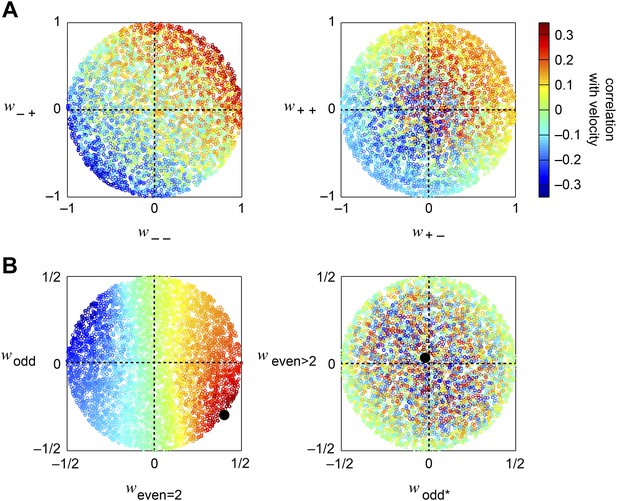

We began by examining how well individual quadrants predicted the velocity of motion. The four quadrants provided motion signals of strikingly different quality (first four red bars, Figure 3C). The most accurate quadrant correlated negative low-pass filtered signals with negative high-pass filtered signals ((− −) bar, Figure 3C). This isolated quadrant already outperformed the full HRC. The quadrant that correlated negative low-pass filtered signals with positive high-pass filtered signals also performed relatively well ((− +) bar, Figure 3C), whereas the quadrants that involved positive low-pass filtered signals performed poorly ((+ +) and (+ −) bars, Figure 3C). This shows that negative signals emanating from the low-pass filter better facilitate motion estimation, and the HRC's uniform weighting of all four quadrants is computationally detrimental.

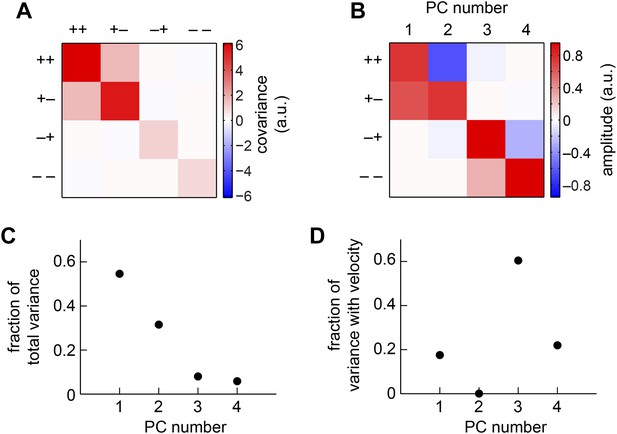

We next considered all subsets of two, three, or four quadrants. The best subsets for each number of predictors were nested, and the quadrants were incorporated in the order (i) (− −); (ii) (− +); (iii) (+ +); (iv) (+ −). Although all four quadrants enhanced the accuracy of the weighted 4-quadrant model, the benefit of each added quadrant decreased with the number of quadrants (Figure 3C). It is possible to reparameterize the weighted 4-quadrant model in a form that isolates the contributions of various higher-order correlations to the model's accuracy (Appendix 7). Interestingly, this parameterization showed that nearly all the accuracy of the weighted 4-quadrant model can be obtained by supplementing the HRC with a set of odd-ordered correlations that account for the asymmetry between positive and negative low-pass filtered signals (Figure 3—figure supplement 1, Appendix 8). Principal component analysis (PCA) did not reveal this simple interpretation of the model's computation (Appendix 9).

The performance-optimized weighted 4-quadrant model also offered an interesting interpretation of Drosophila's glider response pattern. First note that the model preserved the HRC's response pattern to 2-point glider stimuli (compare left subpanels of Figure 3D and Figure 1G). More interestingly, the model predicted behavioral responses to 3-point glider stimuli that matched the experimentally observed turning directions, and even the response magnitudes were similar between the model and the data (right, Figure 3D). Nevertheless, the model's predictions were imperfect. The primary qualitative discrepancy was that the model failed to predict that positive 3-point glider stimuli would generate smaller turning responses than negative 3-point glider stimuli. The simplest interpretation for this experimental result is that flies might incorporate both 3-point correlations and 4-point correlations into their motion estimation strategy. In particular, since the positive and negative 3-point glider stimuli have inverted 3-point correlations and matched 4-point correlations, third-order and fourth-order correlations would have the same sign for one parity and opposite signs for the other parity. This observation makes it easier to understand the glider predictions of the weighted 4-quadrant model. The optimized model does a good job accounting for the direction and approximate magnitude of the glider responses because it draws heavily on second-order and odd-order correlations, but it fails to predict the 3-point glider magnitude asymmetry because it finds little added utility in higher-order even correlations (Figure 3—figure supplement 1, Appendix 8). This failure stems from architectural limitations in the weighted 4-quadrant model (Figure 3—figure supplement 2), so it is important to consider alternate model classes.

Drosophila circuitry contains additional elements that might facilitate motion estimation

The previous section suggested that the segregation of light and dark signals by Drosophila's motion estimation circuitry might enhance naturalistic motion estimation in a manner that also generates the observed glider responses. In this section, we introduce three hierarchical models to investigate other features of Drosophila's circuit that might have functional consequences for the processing of natural stimuli and gliders (Table 1). We refrain from modifying the temporal filtering of the motion estimator, and we focus on its nonlinear architecture.

The first of these models recasts the HRC and the weighted 4-quadrant model in a more general architecture. This model is the class of mirror anti-symmetric models that apply a 2-dimensional nonlinearity to the low-pass filtered signal from one point in space and the high-pass filtered signal from a neighboring point in space (Figure 4A). Since the observed glider responses indicate that flies use higher-order correlations of both even and odd order, we model this 2-dimensional nonlinearity as a fourth-order polynomial (‘Materials and methods’). The HRC corresponds to the special case of this nonlinearity that multiplies the two inputs (left, Figure 4B). To emphasize how the model class in Figure 4A generalizes the HRC, we refer to it as the non-multiplicative nonlinearity model. In comparison, the weighted 4-quadrant model corresponds to a different nonlinearity that separately scales a pure multiplication in each quadrant of the Cartesian plane. Compared to the HRC, the optimized forms of both the weighted 4-quadrant model and the non-multiplicative nonlinearity model substantially attenuated positive low-pass filtered signals (middle and right, Figure 4B), though the non-multiplicative nonlinearity shows less attenuation. This model architecture provides enough flexibility to generate the glider response pattern (Figure 4—figure supplement 1).

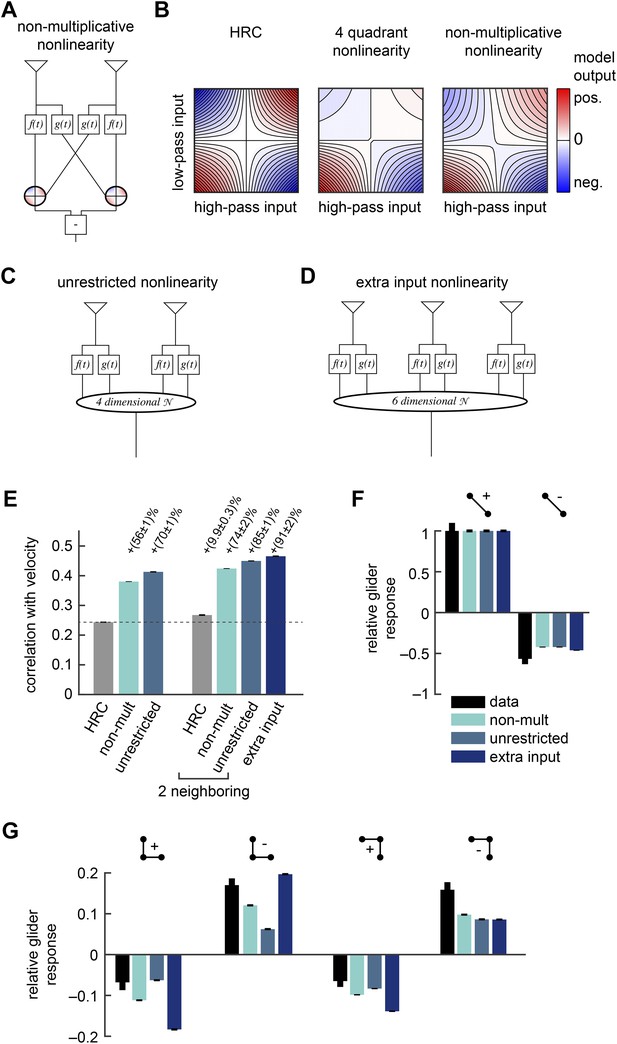

Several biologically motivated generalizations of the motion estimator further improved estimation performance without sacrificing glider responses.

See Table 1 for a description of the biological rationales behind these models. (A) The ‘non-multiplicative nonlinearity’ model substitutes a 2-dimensional nonlinearity for the pure multiplication of the HRC. Here, we approximated the nonlinearity with a fourth order polynomial. (B) Two-dimensional nonlinearities underlying the HRC, the weighted 4-quadrant model, and the non-multiplicative nonlinearity model. The latter models reflect optimized cases, in which the weighting coefficients maximized estimation performance with natural inputs. Iso-output lines are shown in each plot, and the horizontal and vertical limits are chosen to include 95% of the naturalistic input signals. (C) Another generalization, the ‘unrestricted nonlinearity’ model allows all 4 input signals to be combined nonlinearly. We approximate this 4-dimensional nonlinearity with a fourth-order polynomial. (D) A final generalization, the ‘extra input nonlinearity’ model, relaxes the restriction that the motion estimator only uses 2 spatial inputs. We approximate this 6-dimensional nonlinearity with a fourth-order polynomial. (E) Comparison of the estimation performance of these models to the HRC. We compare the extra input nonlinearity model to the average of two neighboring motion estimators. (F, G) The three models correctly predicted the directions of psychophysical responses. The pattern of 3-point responses differed somewhat across the models, and the extra input nonlinearity model was the first to predict a large asymmetry between positive and negative 3-point glider responses.

The non-multiplicative nonlinearity model relaxes some restrictions of the 4-quadrant model. This is prudent because the exact nonlinear transformations implemented by neural circuits in the Drosophila brain remain poorly understood. For example, T4 and T5 are the first direction-selective neurons in the fly brain (Maisak et al., 2013), but the mechanism by which they become direction-selective is not yet known. Furthermore, neurons upstream of T4 and T5 imperfectly segregate light and dark information (Behnia et al., 2014) and show overlap between the two motion pathways (Silies et al., 2013), suggesting that ON/OFF segregation may not be crisply realized. We will discuss this model's estimation accuracy and glider performance in the next section.

Drosophila's motion processing circuitry suggests two more generalizations of the non-multiplicative nonlinearity model. First, note that the non-multiplicative nonlinearity model inherits the HRC's assumption that each nonlinear unit only acts upon the low-pass filtered signal from one point in space and the high-pass filtered signal from the neighboring point (Figure 4A). In contrast, the converging 3-point correlator (Figure 1H) shows that the accuracy of motion estimation can sometimes be enhanced by nonlinearly combining both low-pass filtered signals (Figure 1I). Moreover, connectomic evidence conflicts with the non-multiplicative nonlinearity model's constraints, because each T4 cell receives synaptic connections from both the Mi1 cell and the Tm3 cells (T4's two major input channels) at overlapping points in space (Takemura et al., 2013). The unrestricted nonlinearity model removes this restriction of the non-multiplicative nonlinearity model by allowing a 4-dimensional nonlinearity to act on all four filtered signals (Figure 4C). Here, we again model this nonlinearity as a fourth-order polynomial (‘Materials and methods’). The unrestricted nonlinearity allows the motion estimator to nonlinearly combine multiple temporal channels from the same point in space. Recent experiments indicate that the Mi1 and Tm3 cells alone are insufficient to account for the motion processing of the T4 channel (Ammer et al., 2015). Future work might generalize the unrestricted nonlinearity model to include three or more temporal channels at each point in space.

The models presented so far operate only on a pair of neighboring photoreceptors, and the final generalization incorporates a third point in space. Averaging EMDs over space improves the accuracy of whole-field motion estimation (Dror et al., 2001), but Drosophila's neural circuitry suggests that it might adopt a more sophisticated strategy to combine signals across space. In particular, single T4 cells receive synaptic inputs from Mi1 cells and Tm3 cells from more than two retinotopic columns (Takemura et al., 2013). This arrangement could allow the circuit to incorporate higher-order correlations that are distributed across three or more spatial input channels. To explore whether this possibility has computational significance, we generalized the unrestricted nonlinearity model to provide unrestricted access to six temporal channels distributed across three points in space (Figure 4D). We refer to this model as the extra input nonlinearity model. We approximate its 6-dimensional nonlinearity as a fourth-order polynomial (‘Materials and methods’).

Elaborated circuit architectures improve motion estimation without sacrificing glider responses

Having introduced the rationale behind the non-multiplicative, unrestricted, and extra input nonlinearity models, it is straightforward to examine their performance as motion estimators. First note that the polynomial non-multiplicative nonlinearity model was a better motion estimator (Figure 4E) than the weighted 4-quadrant model (Figure 3C). This implies that some useful signatures of naturalistic motion are not made accessible by simply segregating ON and OFF motion signals. Interestingly, this performance improvement is largely due to 3-point correlations, and models that exclude fourth-order polynomial terms still outperform the weighted 4-quadrant model (Figure 4—figure supplement 2). Third-order correlations are only useful for motion estimation because of light–dark asymmetries in natural stimulus statistics (Fitzgerald et al., 2011; Clark et al., 2014), so this result implies that ON/OFF segregation provides an imperfect way to account for the complexity of light–dark asymmetries found in the natural world. The non-multiplicative nonlinearity model also made novel use of low-order correlations to improve its motion estimate (Appendix 10).

The three models are hierarchical because the non-multiplicative nonlinearity model is a special case of the unrestricted nonlinearity model, which is itself a special case of the extra input nonlinearity model. Thus, we expect each model to perform at least as well as its predecessor, but it is possible that some circuit elaborations will not introduce useful computational cues. Nevertheless, we found that that the unrestricted nonlinearity model performed better than the non-multiplicative nonlinearity model, and the extra input model performed better than the average of two neighboring unrestricted nonlinearity models (Figure 4E). Therefore, both models incorporated novel computational signatures with relevance for visual motion estimation. Although the relative improvements were fairly small, it's worth noting that the improvement from spatial averaging is also small, and it is possible that the fly brain builds an accurate motion estimator by combining a large number of weak predictors of motion.

Each of these three generalized models predicted 2-point glider responses (Figure 4F) that closely resembled the standard HRC (left, Figure 1G). Each model also correctly predicted the experimental turning directions to each of the 3-point glider stimuli (Figure 4G). The magnitudes of the 3-point glider turning responses did not unambiguously favor any of the three hierarchical models (Figure 4G) or the weighted 4-quadrant model (right, Figure 3D). Each model did better on some stimuli and worse on others. Nevertheless, the predicted glider responses did make several interesting points. First, the extra input nonlinearity model predicted a clear asymmetry between positive and negative 3-point gliders (Figure 4G). This shows that some of the even-ordered correlations found in 3-point glider stimuli have relevance for naturalistic motion estimation. Second, the observation that each model provides qualitatively similar glider response patterns illustrates that animals could use multiple nonlinear mechanisms to access ethologically relevant higher-order correlations. Future experiments should directly assess the functional relevance of the different models in the hierarchy. Finally, the qualitative agreement between all of these predictions and the experimental data supports the general hypothesis that glider responses could reflect underlying nonlinear mechanisms that facilitate motion estimation in natural environments.

The extra input nonlinearity model contains the conceptual content of the other considered models

In this paper, we sequentially introduced several models in order to isolate specific ideas about the relationships between Drosophila's behavior, its motion estimation circuit, and the statistical demands of accurate motion estimation in natural environments. The front-end nonlinearity model explored an interesting candidate principle for visual motion estimation, but it conflicted sharply with fly behavior (Figure 2G) and excluded the conceptual insights offered by other models. For example, the front-end nonlinearities we considered eliminated the asymmetry between light and dark contrasts (Figure 2D), removing the need for separate ON and OFF processing. However, the remaining models embodied ideas that are complementary rather than exclusive, and these models should not be thought of as competitors. Instead, we will show here that the final, most general model incorporates the variety of conceptual points that were initially illustrated by specific models.

The structure of the non-multiplicative nonlinearity models can be directly plotted (Figure 4B), but is not easy to visualize the 6-dimensional nonlinearity that defines the extra input nonlinearity model. We therefore need an alternate technique to illustrate its computations. We proceed by leveraging three ideas. First, a wide variety of visual motion estimators can be expanded as an abstract series of multipoint correlators (e.g., see Poggio and Reichardt, 1980, Fitzgerald et al., 2011, and Appendices 6, 7, 11), and it is straightforward to pictorially represent a multipoint correlator (e.g., see Figure 1A,H, and more to come). In the extra input nonlinearity model, this expansion is immediate because we have already parameterized its 6-dimensional nonlinearity as a polynomial. Importantly, this expansion should be considered at the algorithmic level (Marr and Poggio, 1976), and we do not suggest that the wiring of brain circuits will reflect a large number of higher-order correlators. To the contrary, a large number of higher-order multipoint correlators may be implemented implicitly by high-dimensional nonlinearities suggested by Drosophila's visual circuitry. Second, we note that certain multipoint correlators can be recombined into a 2-dimensional non-multiplicative nonlinearity that facilitates easy comparisons with the HRC and weighted 4-quadrant models (e.g., see Figure 4B). Taken together, these two points mean that we can represent the performance-optimized extra input nonlinearity model in terms of non-multiplicative nonlinearity models and multipoint correlators, each of which are easy to represent graphically.

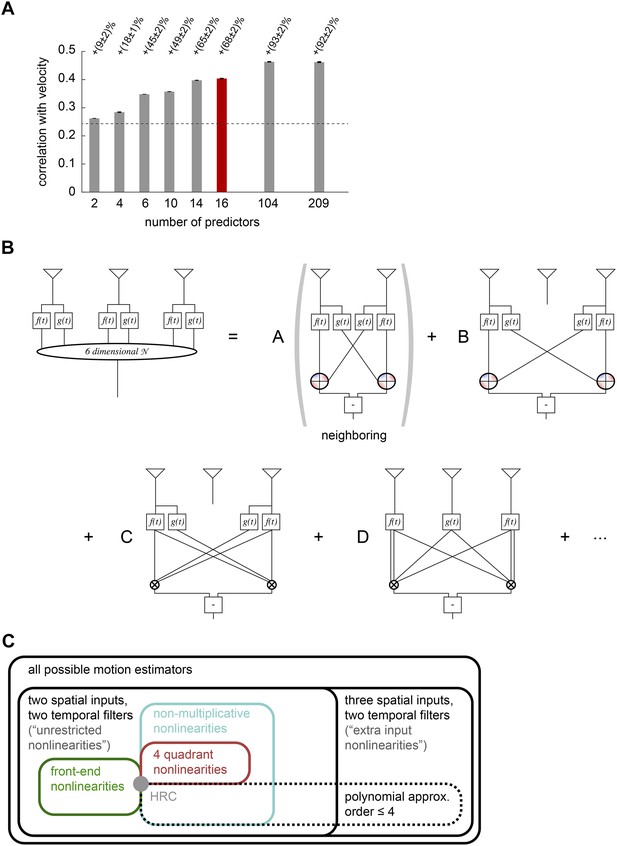

This graphical representation could be unwieldy because of the shear number of higher-order correlators in the model. Thus the third and final point is that we need a way to identify a relatively small number of terms that substantially improve the accuracy of motion estimation and illustrate the conceptual content of the model. To achieve this, we used lasso regression (Tibshirani, 1996) to identify models with fewer multipoint correlators that still enabled accurate motion estimation (‘Materials and methods’). This analysis revealed that fewer than half of all multipoint correlators were needed to account for the full accuracy of the extra input nonlinearity model (rightmost bars, Figure 5A). In fact, the accuracy of naturalistic motion estimation increased rapidly as the few correlators were sequentially added (left bars, Figure 5A), and a model that used 16 out of the 209 possible predictors was already able to produce 74% of the gain offered by the full extra input nonlinearity model (red bar, Figure 5A).

Computational interpretation of the extra input nonlinearity model.

(A) We used lasso regression to select subsets of predictors that might enable accurate estimation (see ‘Materials and methods’). With only 16 predictors, the model improved naturalistic performance over the HRC by 68%, and including fewer than half of the predictors improved it by the full 92%. The maximum number of predictors corresponds to the number of polynomial coefficients that were fit in the full model. (B) We visualized the 6-dimensional nonlinearity as the sum of several simpler computational modules. When only 16 predictors were used (red bar in (A)), the model used four distinct types of computations. In particular, the model included nearest-neighbor and next-nearest-neighbor non-multiplicative nonlinearities (top row). It also included a converging 3-point correlator from the two furthest photoreceptors and a 4-point correlator that combined three spatial inputs (bottom row). (C) Venn diagram illustrating the hierarchical nesting of models used in this paper. All models in this paper contain sets of parameters that reproduce the HRC (gray dot). The weighted 4-quadrant model is a subset of non-multiplicative nonlinearity models, which are themselves a subset of unrestricted nonlinearity models. The extra input nonlinearity encompasses all the models. When we approximated the nonlinearites with fourth order polynomials, we restricted them to a smaller portion of the model space. The 4-quadrant nonlinearities only overlapped with the fourth-order polynomial approximation at the HRC, because the weighted 4-quadrant model is infinite order when expanded as a polynomial (see Appendix 7).

The leading 16 predictors compactly illustrated how the extra input nonlinearity model recapitulates the conceptual advances offered by the other models (Figure 5B). Four of the predictors combine to implement a mirror-symmetric non-multiplicative nonlinearity model that acts on the first and second points in space (first term, Figure 5B). The dominant contribution to the nonlinearity is the HRC's multiplier, but an additional third-order term breaks the symmetry between positive and negative low-pass filtered signals (Figure 5—figure supplement 1). Thus, the extra input nonlinearity model approximately correlates neighboring points in space, as the HRC would suggest, but it differentially weights positive and negative low-pass filtered signals, like the weighted 4-quadrant model. It also replicates the main insight from the non-multiplicative nonlinearity model: the best treatment of asymmetric light and dark information need not be as simple as pure ON/OFF segregation. The model used another eight predictors to construct two more non-multiplicative nonlinearity models, one that surveyed the second and third points in space and another that surveyed the first and third points (first and second terms, Figure 5B, Figure 5—figure supplement 1). These components make the previously highlighted conceptual points and add the observation that spatial averaging improves estimates.

The final four predictors implemented two mirror anti-symmetric multipoint correlators (third and fourth terms, Figure 5B). In particular, two predictors went towards implementing a converging 3-point correlator that spanned the first and third spatial points (third term, Figure 5B). This estimator made the model's asymmetric treatment of light and dark signals more nuanced than permitted by the non-multiplicative nonlinearity model, and it also incorporated motion signals that combine multiple temporal signals from the same point in space. This latter point was the main conceptual motivation for the unrestricted nonlinearity model. Finally, the last two predictors implemented a 4-point correlator that combined temporal signals from three distinct points in space (fourth term, Figure 5B). This component reinforces the conceptual motivation for the extra input nonlinearity model and gives a concrete example of a computationally relevant higher-order correlator that is distributed across three points in space. It's interesting that the leading fourth-order correlator spanned three spatial points, because the extra input nonlinearity model was the first performance-optimized model that generated a substantially asymmetric response to positive and negative 3-point gliders (Figure 4G).

This paper set out with the goal of exploring whether the statistical demands of naturalistic motion estimation could provide a useful lens for interpreting features of Drosophila's behavior and neural circuitry that push beyond the canonical HRC. Although we have considered several interesting classes of visual motion estimators, the space of possible motion estimators is much larger (Figure 5C). For instance, these models have not explored the impact of temporal filter choice on naturalistic motion estimation. Nor have they assessed the possibility of more than two temporal filters, which is be suggested by anatomical (Takemura et al., 2013) and physiological (Ammer et al., 2015) experiments. More generally, the neural circuits contributing to Drosophila's motion estimator are still incompletely known, and the extent to which the fly brain's biological complexity reflects computational sophistication remains an open question. Theoretical considerations will be critical for resolving that question and pinpointing the most relevant principles underlying visual motion estimation.

Discussion

Ongoing research is providing an increasingly detailed picture of the anatomy and physiology of the visual circuitry that implements motion processing in Drosophila. Through the combination of genetic silencing experiments, connectomic analysis, and functional recordings, researchers have identified many individual neurons in the fly brain that contribute to visual motion processing (Silies et al., 2014). Although the HRC provided the initial theoretical impetus for these experiments, specific experimental outcomes have often been unanticipated. For instance, the fly brain contains multiple pathways that segregate different types of motion information (Joesch et al., 2010; Clark et al., 2011; Silies et al., 2013); its direction-selective neurons receive inputs from more than two neighboring points in visual space (Takemura et al., 2013); and the biological substrates for reverse-phi signals, which were fundamental to the formulation of the HRC, remain poorly understood (Clark et al., 2011; Tuthill et al., 2011; Joesch et al., 2013). Theoretical work to illuminate the computational significance of these various discrepancies is critical for understanding Drosophila's motion estimator.

The results presented in this paper provide a new theoretical perspective on these experimental results. While previous research has addressed how neural circuits could use four quadrants to carry out algebraic multiplication, here, the recurring theme of our models was that motion-processing circuits should treat light and dark signals differently for functional reasons. We first showed that visual systems could use ON and OFF processing channels that separately correlate light and dark signals to improve the accuracy of motion estimation (Figure 3). This model was inspired by the experimental observation that Drosophila's motion processing channels distinguish between light increments and decrements (Joesch et al., 2010; Clark et al., 2011), but this study is the first to explicitly demonstrate how such processing channels can improve the accuracy of motion estimation. Furthermore, our model shows that both the phi channels (i.e., the (+ +) and (− −) and quadrants) and the reverse-phi channels (i.e., the (+ −) and (− +) quadrants) can contribute productively to motion estimation in natural environments. Since many animals experience similar sensory statistics and ON and OFF visual processing channels are pervasive across visual systems (Schiller, 1992; Westheimer, 2007), these mechanisms might be very general. Ultimately, the performance gains from weighted quadrants were a consequence of statistical asymmetries between light and dark contrasts in natural images, and our models showed that neural circuits could perform even better if they made distinctions between light and dark signals that were subtler than simple ON/OFF segregation (Figure 4). Recent experimental evidence indicates that the fly's motion processing channels are imperfectly selective for ON vs OFF information (Silies et al., 2013; Behnia et al., 2014; Strother et al., 2014), and it is important that future experiments characterize such subtleties in the computations performed by these circuits.

Our most general model contained three spatial inputs and showed that spatial averaging of local motion detectors was suboptimal (Figure 4E). Anatomy suggests that single T4 cells receive inputs from several different retinotopic columns, and also from multiple neuron types in a single retinotopic column (Takemura et al., 2013). Our modeling suggests that these two forms of circuit heterogeneity could enhance motion estimation by facilitating computations that go beyond averaging to compute higher-order correlations that are distributed across multiple points in space (Figure 5B). Overall, our results demonstrate how the subtleties of neural circuit nonlinearities can improve motion detection with naturalistic inputs. It therefore seems likely that some of the complexities of Drosophila's circuitry are critical to its performance under natural conditions.

It is remarkable that our approximation of natural motion by the rigid translation of natural images revealed substantial utility for higher-order correlations in motion processing. Truly naturalistic motion would include spatial velocity gradients, occlusion, expansion, and contraction, yet the simplified naturalism we used to optimize our models already sufficed to account for many aspects of the fly's glider responses. This may be because the rotational optomotor response measured in the fly experiments is thought to be sensitive primarily to full-field rotations, which our naturalism emulates well. However, since other higher-order correlations may be associated with non-rigid translation (Nitzany and Victor, 2014), one might expect a different set of glider sensitivities to be optimal in the context of other motion-guided behaviors, such as looming responses (Gabbiani et al., 1999; Tammero and Dickinson, 2002; Card and Dickinson, 2008). Since a common elementary motion detector might underlie many or all motion-guided behaviors, incorporating more complex optic flow patterns may even diminish discrepancies between our models and Drosophila's behavior.

The approach of this study is also relevant to vertebrate vision, where researchers typically model motion estimation using the motion energy model (Adelson and Bergen, 1985). Like the HRC, the motion energy model only responds to 2-point correlations in the visual stimulus. Consequently, many of the theoretical considerations in this paper apply directly to the motion energy model. Furthermore, each of our computational models can be straightforwardly generalized to the architecture of the motion energy model. For example, one could incorporate non-multiplicative nonlinearities by replacing the squaring operation of the motion energy model with a more flexible nonlinearity. Nevertheless, the numerical benefits offered by each modification to the motion energy model might differ from those found for the HRC because the motion energy model and HRC use distinct spatial and temporal filtering. Such differences could in principle manifest themselves as a different pattern of predicted glider responses (Hu and Victor, 2010; Clark et al., 2014), but comparative electrophysiology experiments in macaques and dragonflies currently suggest that similarities between primate and insect motion processing are abundant (Nitzany et al., 2014).

Our models make predictions that are testable with new experiments. Researchers hypothesize that the T4 and T5 neurons in the fly lobula nonlinearly combine visual inputs across space and time to become the first direction-selective neurons in Drosophila's visual system (Maisak et al., 2013). In accordance with the HRC model, conventional wisdom says that these neurons will multiply their input channels. In contrast, we predict that T4 and T5 will combine their visual input streams with non-multiplicative nonlinearities that facilitate accurate motion estimation in natural sensory environments. It's crucial to note that subtle differences between biology's nonlinearity and a pure multiplication can correspond to substantial functional effects. In particular, the optimized nonlinearity that we found here (Figure 4B) is superficially similar a simple multiplication, yet its subtle distinctions manifest themselves by improving the local estimation accuracy of the HRC by an impressive margin (Figure 2D).

In this paper, we studied several simple models to most clearly illustrate the computational consequences of fundamental nonlinear circuit operations. Each of these operations individually provided a way for Drosophila to improve their motion estimation accuracy in natural environments, but they are not necessarily exclusive. For example, if a front-end nonlinearity does not fully remove the asymmetry between light and dark contrasts, then subsequent ON and OFF processing might further improve estimation accuracy. Similarly, non-multiplicative nonlinearities might enable an even better combination of ON and OFF signals for motion estimation. The general approach that we adopted here is to restrict the space of candidate models to those that have immediate biological relevance and to identify interesting models by optimizing the model's estimation accuracy over naturalistic stimuli. Future models should incorporate more biological details to better emulate the specifics of Drosophila's visual circuitry, which is rapidly being dissected through unprecedented anatomical, functional, and behavioral experiments (Silies et al., 2014).

Materials and methods

Simulated ensemble of naturalistic motions

Request a detailed protocolWe simulated the linear responses of neighboring photoreceptors to naturalistic motion using methods similar to previous work (Clark et al., 2014). We began with a database of natural images (van Hateren and van der Schaaf, 1998). We converted each natural image to a contrast scale, , where is the contrast at the spatial point , is its intensity, and is the average intensity across the image. Since we only consider horizontal motion, we emulated the spatial blurring of Drosophila's photoreceptors in the vertical dimension by filtering across rows with a Gaussian kernel (FWHM = 5.7°). We then took the central row of each filtered image to represent a one-dimension variant of the natural image, denoted . We applied reflective boundary conditions to generate images that covered 360° and down-sampled each resulting image to 1° pixels by averaging. Photoreceptor blurring from signals in the horizontal dimension depends on the velocity of motion. In particular, we model the response of the ith photoreceptor as

where is a causal exponential kernel (timescale = 10 ms), is a Gaussian kernel (FWHM = 5.7°), is the location of the ith photoreceptor, and is the velocity of motion.

Each naturalistic motion comprised a randomly selected one-dimensional natural image, an offset to set the initial location of the photoreceptors, and a velocity drawn from a zero-mean normal distribution with a standard deviation of 90°/s. In this manner, we simulated the responses of three horizontally adjacent photoreceptors (spaced by 5.1°) to 5 × 105 naturalistic motions (each with duration = 800 ms, time step = 5 ms). We then explicitly enforced left-right symmetry in the naturalistic ensemble by pairing each naturalistic motion with a new simulated motion, in which the natural image is reflected, the velocity is inverted, and the offset is chosen such that in the new naturalistic motion is exactly from its partner. The final symmetric ensemble thus consists of 106 naturalistic motions.

The HRC

Request a detailed protocolThe HRC applies two temporal filters to its photoreceptor inputs. We denote the kernels of the low-pass and high-pass filters as f and g, respectively, such that the output of a local HRC is

where * denotes convolution (Figure 1A). We consider the HRC's velocity estimate for a given naturalistic motion as its value at the final time point of the simulation. We model the filter kernels as

and

where = 20 ms and is comparable to lamina monopolar cell responses (Clark et al., 2011; Behnia et al., 2014). We built the alternate motion estimators considered in this work from the same four filtered signals, , always considering the estimator's output at the final time point as its velocity estimate. Thus, none of our models modified the spatial or temporal processing of the HRC, reflecting our emphasis on how nonlinear processing might be tuned for naturalistic motion estimation. The global output of an array of HRCs would be obtained by pooling signals across space. Here we focus on spatiotemporally local strategies for motion estimation and at most pool motion signals across two neighboring motion detectors.

Relationship between the mean squared error and the correlation coefficient

Request a detailed protocolWe evaluate motion estimators by the mean squared error between their output and the true velocity. To minimize the mean squared error of the HRC, we scale its output by , where is the correlation coefficient between the HRC's output and the velocity of motion, is the standard deviation of the velocity distribution, and is the standard deviation of the HRC's output. Once the HRC is scaled in this manner, its mean squared error is

More generally, this equation rewrites the mean squared error of any optimally scaled motion estimator in terms of its correlation coefficient with the velocity. All motion estimators considered in this paper are optimally scaled, and we find the correlation coefficient to be more intuitive than the mean squared error. We thus always report the performance of each motion estimator in terms of the correlation coefficient between the true and estimated velocity.

Model fitting procedure

Request a detailed protocolWe fit the linear weighting parameters in the models of Figures 1I, 3A, 4A,C,D to maximize the estimation accuracy over a simulated ensemble of naturalistic motions. The formulas provided in subsequent sections of the ‘Materials and methods’ will cast each motion estimation scheme as a linear combination of a variety of motion predictors,

where the are nonlinear combinations of that depend on the model architecture, and the wi are associated weighting coefficients. We chose the weights to minimize the mean squared error between the true and predicted velocity, which is the standard scenario considered by ordinary least-squares regression. The same weights maximize the correlation coefficient between the true and predicted velocity, and we typically present model accuracies as correlation coefficients.

We used twofold cross-validation to protect against over-fitting. In particular, we randomly divided the ensemble of naturalistic motions into a training set of 500,000 symmetrically paired examples and a testing set of the remaining 500,000 examples. We determined the weighting coefficients by minimizing the empirical error over the training set, and we reported accuracies over the test set. To estimate error bars for each model's accuracy, we computed twenty random divisions of the naturalistic motion ensemble and calculated the standard deviation of the estimation accuracy.

Model responses to glider stimuli

Request a detailed protocolWe generated 25 random instantiations of each glider stimulus considered by our previous experimental work (Figure 1F, duration = 3 s, update rate = 40 Hz, pixel size = 5°) (Hu and Victor, 2010; Clark et al., 2014). We evaluated the response of each model to these stimuli by averaging the outputs of 60 identical local motion estimators (each separated by 5.1°) over the last two seconds of visual stimulation. Glider predictions were equal and opposite for the left and right variants of the stimuli, so we pooled leftward and rightward stimuli in all figures (Figure 1F shows the rightward variants). We scaled each model's output such that the average response to the positive 2-point glider was 1. All figures associated with glider responses show the mean and standard error of each model's response across the 25 glider instantiations.

Front-end nonlinearity model

Request a detailed protocolThe model in Figure 2A replaces the linear photoreceptor signals, and , with nonlinear photoreceptor signals

where is some nonlinear function. Thus, the motion estimate from the front-end nonlinearity model is

To implement the contrast equalizing nonlinearity, we replaced values of by their rank-order (scaled and shifted to range between −1 and +1). Note that all were sorted together (i.e., including all spatial points, temporal points, and simulated naturalistic motions). When multiple had the same value, they were given the same rank. To implement binarizing nonlinearities, we again sorted the and found the values corresponding to the threshold locations. For example, to calculate the binarizing nonlinearity with two steps (Appendix 4): (i) we found the values corresponding to the 25th and 75th percentiles; (ii) signals below the 25th percentile or above the 75th percentiles were assigned the value of −1; and (iii) signals between 25th and 75th percentiles were assigned the value of +1. To implement the Gaussianizing nonlinearity, we again rank-ordered the (scaled to range between 0 and 1) and applied the inverse Gaussian cumulative distribution function to these ranks. The HRC is the special case of this model where the front-end nonlinearity is linear.

Weighted 4-quadrant model

Request a detailed protocolThe weighted 4-quadrant model in Figure 3A separately correlates bright and dark signals. Mathematically, it is

where are adjustable weights that parameterize the model,

is equal to when is positive and zero otherwise, and is equal to when is negative and zero otherwise. The HRC is the special case of this model where .

Non-multiplicative nonlinearity model

Request a detailed protocolThe non-multiplicative nonlinearity model in Figure 4A replaces the HRC's multiplication step with a more flexible two-dimension nonlinearity. In particular, it is

where we approximate the nonlinearity, , as a fourth-order polynomial

and are adjustable weights that parameterize the model. We include terms up to fourth order in this model to ensure that it is flexible enough to describe the published glider response data. In particular: (i) the second-order terms accommodate responses to 2-point glider stimuli; (ii) the third-order terms accommodate parity-inverting responses to 3-point glider stimuli; and (iii) the fourth-order terms enable the model to respond with unequal magnitude to positive and negative parity 3-point glider stimuli (Figure 4—figure supplement 1). Thus, this model has 14 parameters. The HRC is the special case of this model where only is nonzero.

Unrestricted nonlinearity model

Request a detailed protocolHere we model the 4-dimensional nonlinearity in Figure 4C as a fourth-order polynomial of the four filtered signals in the HRC. In general, this motion estimator is

where are adjustable weights that parameterize the model, and we set because this term has no utility for naturalistic motion estimation. Thus, this model has 69 parameters. The HRC is the special case of this model where , and all other parameters are zero.

Extra input nonlinearity model

Request a detailed protocolHere we model the 6-dimensional nonlinearity in Figure 4D as a fourth-order polynomial of the six filtered signals in two neighboring HRCs. In general, this motion estimator is

where are adjustable weights that parameterize the model, and we set because this term has no utility for naturalistic motion estimation. Thus, this model has 209 parameters. The average of two neighboring HRCs is the special case of this model where , and all other parameters are zero.

Lasso regression for predictor selection

Request a detailed protocolLasso regression augments the squared error with an L1 penalty on nonzero weighting coefficients that favors sparse solutions (Tibshirani, 1996). We used lasso regression to identify subsets of predictors that might enable accurate motion estimation (Figure 5A). Once we identified a predictor subset using lasso regression, we refit the nonzero model weights using ordinary least squares regression (i.e., without the weight penalty).

Appendix 1

Visual signatures of motion.

The pattern of light that stimulates the retina encodes information about the relative motion between the retina and its visual environment. The manner in which this information is encoded depends on the geometry of the photoreceptor array, the statistics of self-motion, and the statistics of the visual environment. The principal goal of this paper is to illustrate several ways that the brain's nonlinear processing of visual motion signals might be tuned to reflect specific features of the natural visual environment. We thus begin by enumerating some computational signatures of visual motion in natural environments, thereby exposing a diversity of stimulus features that visual system nonlinearities might aim to extract.

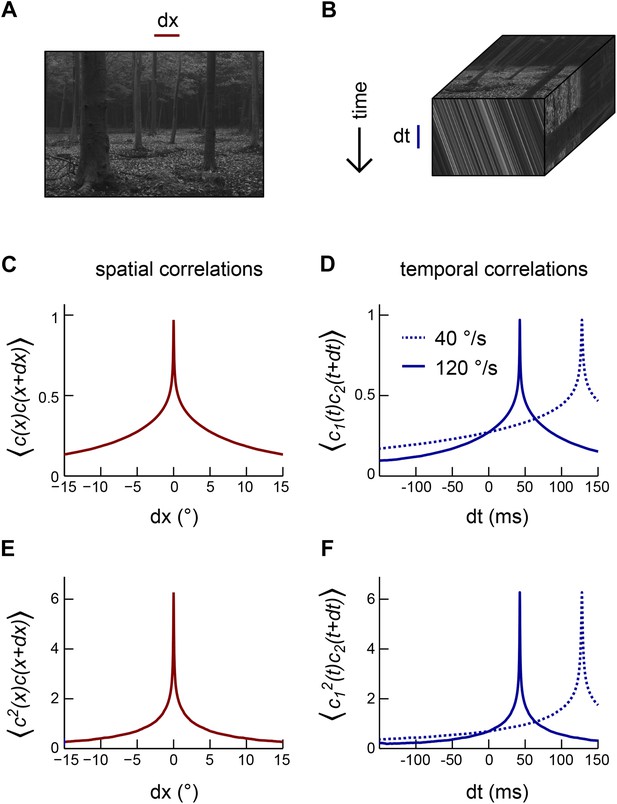

In the real world, animals encounter visual environments that are intricately structured and far from random (Appendix figure 1A) (Ruderman and Bialek, 1994; van Hateren and van der Schaaf, 1998; Geisler, 2008). When an animal rotates with constant angular velocity through the environment, the spatiotemporal response profile of the photoreceptor array encodes the velocity of self-motion through the slope of oriented streaks in space-time (front face, Appendix figure 1B) (Adelson and Bergen, 1985). Thus, a visual system with a dense array of noiseless photoreceptors could extract the angular velocity of an arbitrary image by computing the ratio of temporal and spatial derivatives (Potters and Bialek, 1994). The statistics of the image ensemble become relevant once multiple interpretations of the sensory world become logically consistent with the photoreceptor data. In particular, the optimal motion estimator depends on the statistics of the image ensemble when photoreceptors have noise (Potters and Bialek, 1994; Fitzgerald et al., 2011), and a nonzero spacing between photoreceptors introduces ambiguity via aliasing (Potters and Bialek, 1994). In these cases, the animal can use prior information regarding the sensory environment and its motion in order to weigh the plausibility of each sensory interpretation.

Motion transforms spatial correlations into temporal correlations.

(A) An example natural image (van Hateren and van der Schaaf, 1998). (B) When a natural image (top face) moves to the right, streaks in space-time (front face) indicate the direction and speed of the motion. Alternatively, motion influences the temporal correlation structure of visual signals (side face). (C) Second-order correlation function between pairs of spatially separated contrast signals (across the natural image ensemble [van Hateren and van der Schaaf, 1998]). (D) For constant velocity motion, the temporal correlation function between a pair of spatially separated points is shifted and stretched relative to the spatial correlation function. We separated the two points by Drosophila's photoreceptor spacing (5.1°). (E) Example third-order spatial correlation function involving two points in space. (F) As with pairwise correlations, higher-order temporal correlations between spatially separated visual signals are shifted and stretched (relative to higher-order spatial correlation functions) in a manner that indicates the speed and direction of motion.