A mechanism for hunchback promoters to readout morphogenetic positional information in less than a minute

Abstract

Cell fate decisions in the fly embryo are rapid: hunchback genes decide in minutes whether nuclei follow the anterior/posterior developmental blueprint by reading out positional information in the Bicoid morphogen. This developmental system is a prototype of regulatory decision processes that combine speed and accuracy. Traditional arguments based on fixed-time sampling of Bicoid concentration indicate that an accurate readout is impossible within the experimental times. This raises the general issue of how speed-accuracy tradeoffs are achieved. Here, we compare fixed-time to on-the-fly decisions, based on comparing the likelihoods of anterior/posterior locations. We found that these more efficient schemes complete reliable cell fate decisions within the short embryological timescales. We discuss the influence of promoter architectures on decision times and error rates, present concrete examples that rapidly readout the morphogen, and predictions for new experiments. Lastly, we suggest a simple mechanism for RNA production and degradation that approximates the log-likelihood function.

Introduction

From development to chemotaxis and immune response, living organisms make precise decisions based on limited information cues and intrinsically noisy molecular processes, such as the readout of ligand concentrations by specialized genes or receptors (Houchmandzadeh et al., 2002; Perry et al., 2012; Takeda et al., 2012; Marcelletti and Katz, 1992; Bowsher and Swain, 2014). Selective pressure in biological decision-making is often strong, for reasons that range from predator evasion to growth maximization or fast immune clearance. In development, early embryogenesis of insects and amphibians unfolds outside of the mother, which arguably imposes selective pressure for speed to limit the risks of predation and infection by parasitoids (O'Farrell, 2015). In Drosophila embryos, the first 13 cycles of DNA replication and mitosis occur without cytokinesis, resulting in a multinucleated syncytium containing about 6000 nuclei (O'Farrell et al., 2004). Speed is witnessed both by the rapid and synchronous cleavage divisions observed over the cycles, and the successive fast decisions on the choice of differentiation blueprints, which are made in less than 3 min (Lucas et al., 2018).

In the early fly embryo, the map of the future body structures is set by the segmentation gene hierarchy (Nüsslein-Volhard et al., 1984; Houchmandzadeh et al., 2002; Jaeger, 2011). The definition of the positional map starts by the emergence of two (anterior and posterior) regions of distinct hunchback (hb) expression, which are driven by the readout of the maternal Bicoid (Bcd) morphogen gradient (Houchmandzadeh et al., 2002, Figure 1a). hunchback spatial profiles are sharp and the variance in hunchback expression of nuclei at similar positions along the AP axis is small (Desponds et al., 2016; Lucas et al., 2018). Taken together, these observations imply that the short-time readout is accurate and has a low error. Accuracy ensures spatial resolution and the correct positioning of future organs and body structures, while low errors ensure reproducibility and homogeneity among spatially close nuclei. The amount of positional information available at the transcriptional locus is close to the minimal amount necessary to achieve the required precision (Gregor et al., 2007b; Porcher et al., 2010; Garcia et al., 2013; Petkova et al., 2019). Furthermore, part of the morphogenetic information is not accessible for reading by downstream mechanisms (Tikhonov et al., 2015), as information is channeled and lost through subsequent cascades of gene activity. In spite of that, by the end of nuclear cycle 14 the positional information encoded in the gap gene readouts is sufficient to correctly predict the position of each nucleus within 2% of the egg length (Petkova et al., 2019). Adding to the time constraints, mitosis resets the binding of transcription factors (TF) during the phase of synchronous divisions (Lucas et al., 2018), suggesting that the decision about the nuclei’s position is made by using information accessible within one nuclear cycle. Experiments additionally show that during the nuclear cycles 10–13 the positional information encoded by the Bicoid gradient is read out by hunchback promoters precisely and within 3 min (Lucas et al., 2018).

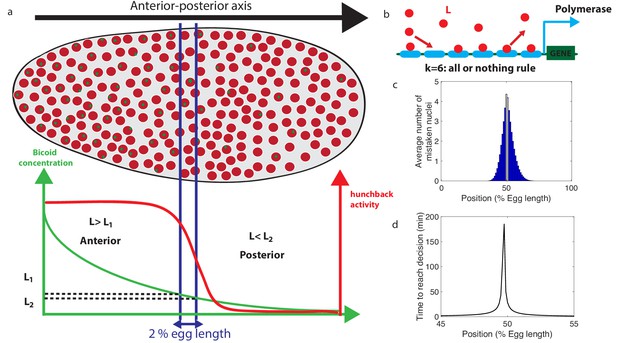

Decision between anterior and posterior developmental blueprints.

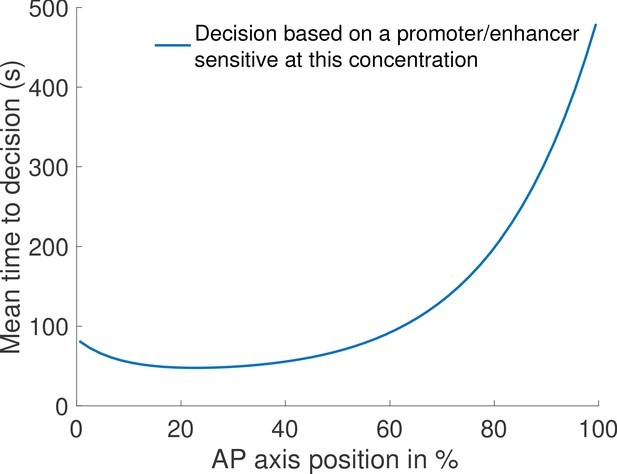

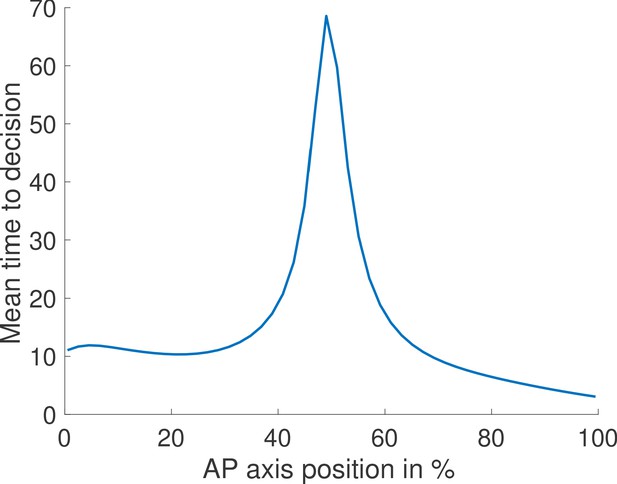

(a) The early Drosophila embryo and the Bicoid morphogen gradient. The cartoon shows a projection on one plane of the embryo at nuclear cycles 10–13, when nuclei (red dots) have migrated to the surface of the embryo (O'Farrell, 2015). The activity of the hunchback gene decreases along the Anterior-Posterior (AP) axis. The green dots represent active transcription loci. The average concentration L(x) of maternal Bicoid decreases exponentially along the AP axis by about a factor five from the anterior (left) to the posterior (right) ends. Between the blue lines lies the boundary region. Its width δx is 2% of the egg length. hunchback activity decreases along the AP axis and undergoes a sharp drop around the boundary region. The half-maximum Bcd concentration position in WT embryos is shifted by about 5% of the egg-length toward the anterior with respect to the mid-embryo position (Struhl et al., 1992). We consider this hunchback half maximum expression as a reference point when describing the AP axis of the embryo. The hunchback readout defines the cell fate decision whether each nucleus will follow an anterior or the posterior gene expression program. (b) A typical promoter structure contains six binding sites for Bicoid molecules present at concentration L. indicates that the gene is active only when all binding sites are occupied, defining an all-or-nothing promoter architecture. Other forms and details of the promoter structure will be discussed in Figure 2 and Figure 3. (c) The average number of nuclei making a mistake in the decision process as a function of the egg length position at cycle 11. For a fixed-time decision process completed within seconds (and , that is, all-or-nothing activation scheme), a large number of nuclei make the wrong decision (full blue bars). seconds is the duration of the interphase of nuclear cycle 11 (Tran et al., 2018). For nuclei located in the boundary region either answer is correct so that we leave these bars unfilled. Most errors happen close to the boundary, as intuitively expected. See Appendix 1 for a detailed description of how the error is computed for the fixed-time decision strategy. (d) The time needed to reach the standard error probability of 32% (Gregor et al., 2007a) for the same process as in panel c (see also the subsection 'How many nuclei make a mistake?’) as a function of egg length position. Decisions are easy away from the center but the time required for an accurate decision soars close to the boundary up to 50 min – much longer than the embryological times. Parameters for panels (c) and (d) are six binding sites that bind and unbind Bicoid without cooperaivity and a diffusion limited on rate per site , and an unbinding rate per site that lead to half activation in the boundary region.

Effective speed-accuracy tradeoffs are not specific to developmental processes, but are shared by a large number of sensing processes (Rinberg et al., 2006; Heitz and Schall, 2012; Chittka et al., 2009). This generality has triggered interest in quantitative limits and mechanisms for accuracy. Berg and Purcell derived the seminal tradeoff between integration time and readout accuracy for a receptor evaluating the concentration of a ligand (Berg and Purcell, 1977) based on its average binding occupancy. Later studies showed that this limit takes more complex forms when rebinding events of detached ligands (Bialek and Setayeshgar, 2005; Kaizu et al., 2014) or spatial gradients (Endres and Wingreen, 2008) are accounted for. The accuracy of the averaging method in Berg and Purcell, 1977 can be improved by computing the maximum likelihood estimate of the time series of receptor occupancy for a given model (Endres and Wingreen, 2009; Mora and Wingreen, 2010). However, none of these approaches can result in a precise anterior-posterior (AP) decision for the hunchback promoter in the short time of the early nuclear cycles, which has led to the conclusion that there is not enough time to apply the fixed-time Berg-Purcell strategy with the desired accuracy (Gregor et al., 2007a). Additional mechanisms to increase precision (including internuclear diffusion) do yield a speed-up (Erdmann et al., 2009; Aquino et al., 2016), yet they are not sufficient to meet the 3-min challenge. The issue of the embryological speed-accuracy tradeoff remains open.

The approaches described above are fixed-time strategies of decision-making, that is, they assume that decisions are made at a pre-defined deterministic time T that is set long enough to achieve the desired level of error and accuracy. As a matter of fact, fixing the decision time is not optimal because the amount of available information depends on the specific statistical realization of the noisy signal that is being sensed. The time of decision should vary accordingly and therefore depend on the realization. This intuition was formalized by A. Wald by his Sequential Probability Ratio Test (SPRT) (Wald, 1945a). SPRT achieves optimality in the sense that it ensures the fastest decision strategy for a given level of expected error. The adaption of the method to biological sensing posits that the cell discriminates between two hypothetical concentrations by accumulating information through binding events, and by computing on the fly the ratio of the likelihoods (or appropriate proxies) for the two concentrations to be discriminated (Siggia and Vergassola, 2013). When the ratio ‘strongly’ favors one of the two hypotheses, a decision is triggered. The strength of the evidence required for decision-making depends on the desired level of error. For a given level of precision, the average decision time can be substantially shorter than for traditional averaging algorithms (Siggia and Vergassola, 2013). SPRT has also been proposed as an efficient model of decision-making in the fields of social interactions and neuroscience (Gold and Shadlen, 2007; Marshall et al., 2009) and its connections with non-equilibrium thermodynamics are discussed in Roldán et al., 2015.

Wald’s approach is particularly appealing for biological concentration readouts since many of them, including the anterior-posterior decision faced by the hunchback promoter, appear to be binary decisions. Our first goal here is to specifically consider the paradigmatic example of the hunchback promoter and elucidate the degree of speed-up that can be achieved by decisions on the fly. Second, we investigate specific implementations of the decision strategy in the form of possible hunchback promoter architectures. We specifically ask how cooperative TF binding affects the sensing limits. Our results have implications beyond fly development and are generally relevant to regulatory processes. We identify promoter architectures that, by approximating Wald’s strategy, do satisfy several key experimental constraints and reach the experimentally observed level of accuracy of hunchback expression within the (apparently) very stringent time limits of the early nuclear cycles.

Results

Methodological setup

The decision process of the anterior vs posterior hunchback expression

The problem faced by nuclei in their decision of anterior vs posterior developmental fate is sketched in Figure 1a. By decision we mean that nuclei commit to a cell fate through a process that is mainly irreversible leading to one of two classes of cell states that correspond to either the anterior or the posterior regions of the embryo, based on positional information acquired through gene activity. We limit our investigation of promoter architectures to the six experimentally identified Bicoid-binding sites (Figure 1b). We do not consider the known Hunchback-binding sites because before nuclear cycle 13 there is little time to produce sufficient concentrations of zygotic proteins for a significant feedback effect and the measured maternal hunchback profile has not been shown to alter anterior-posterior decision-making. Following the observation that Bcd readout is the leading factor in nuclei fate determination (Ochoa-Espinosa et al., 2009), we also neglect the role of other maternal gradients, for example Caudal, Zelda or Capicua (Jiménez et al., 2000; Sokolowski et al., 2012; Tran et al., 2018; Lucas et al., 2018), since the readout of these morphogens can only contribute additional information and decrease the decision time. We focus on the proximal promoter since no active enhancers have been identified for the hunchback locus in nuclear cycles 11–13 (Perry et al., 2011). Our results can be generalized to enhancers (Hannon et al., 2017), the addition of which only further improves the speed-accuracy efficacy, as we explicitly show for a simple model of Bicoid activated enhancers in the section ’Joint dynamics of Bicoid enhancer and promoter’. Since our goal is to show that accurate decisions can be made rapidly, we focus on the worst case decision-making scenario: the positional information (Wolpert et al., 2015) is gathered through a readout of the Bicoid concentration only, and the decision is assumed to be made independently in each nucleus. Having additional information available and/or coupling among nuclei can only strengthen our conclusion.

The profile of the average concentration of the maternal morphogen Bicoid is well represented by an exponential function that decreases from the anterior toward the posterior of the embryo : , where x is the position along the anterior-posterior axis measured in terms of percentage egg-length (EL), and x0 is the position of half maximum hb expression corresponding to L0 Bcd concentration. The decay length corresponds to 20% EL (Gregor et al., 2007a). Nuclei convert the graded Bicoid gradient into a sharp border of hunchback expression (Figure 1a), with high and low expressions of the hunchback promoter at the left and the right of the border respectively (Driever and Nüsslein-Volhard, 1988; Struhl et al., 1992; Crauk and Dostatni, 2005; Gregor et al., 2007b; Houchmandzadeh et al., 2002; Porcher et al., 2010; Garcia et al., 2013; Lucas et al., 2013; Tran et al., 2018; Lucas et al., 2018). We define the border region of width δx symmetrically around x0 by the dashed lines in Figure 1a. δx is related to the positional resolution (Erdmann et al., 2009; Tran et al., 2018) of the anterior-posterior decision: it is the minimal distance measured in percentages of egg-length between two nuclei’s positions, at which the nuclei can distinguish the Bcd concentrations. Although this value is not known exactly, a lower bound is estimated as EL (Gregor et al., 2007a), which corresponds to the width of one nucleus.

We denote the Bcd concentration at the anterior (respectively posterior) boundary of the border region by L1 (respectively L2) (see Figure 1a). At each position x, nuclei compare the probability that the local concentration is greater than L1 (anterior) or smaller than L2 (posterior). By using current best estimates of the parameters (see Appendix 1), a classic fixed-time-decision integration process and an integration time of 270 s (the duration of the interphase in nuclear cycle 11 [Tran et al., 2018]), we compute in Figure 1c the probability of error per nucleus for each position in the embryo (see Appendix 2 for details). As expected, errors occur overwhelmingly in the vicinity of the border region, where the decision is the hardest (Figure 1c). For nuclei located within the border region, both anterior and posterior decisions are correct since the nuclei lie close to both regions. It follows that, although the error rate can formally be computed in this region, the errors do not describe positional resolution mistakes and do not contribute to the total error (white zone in Figure 1c).

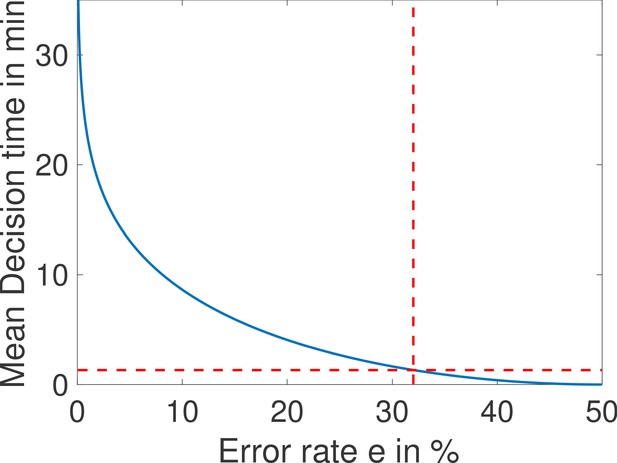

In view of Figure 1c and to simplify further analysis we shall focus on the boundaries of the border region : each nucleus discriminates between hypothesis 1 – the Bcd concentration is , and hypothesis 2 – the Bcd concentration is . To achieve a positional resolution of EL, nuclei need to be able to discriminate between differences in Bcd concentrations on the order of 10%. In addition to the variation in Bcd concentration estimates that are due to biological precision, concentration estimated using many trials follows a statistical distribution. The central limit theorem suggests that this distribution is approximately Gaussian. This assumption means that the probability that the Bcd concentration estimate deviates from the actual concentration by more than the prescribed 10% positional resolution is 32% (see the subsection 'How many nuclei make a mistake?’ for variations on the value and arguments on the error rate). In Figure 1d, we show that the time required under a fixed-time-decision strategy for a promoter with six binding sites to estimate the Bcd concentration within the 32% Gaussian error rate (Gregor et al., 2007a) close to the boundary is much longer than 270 s, minutes (see Appendix 2 for details of the calculation). The activation rule for the promoter architecture in the figure is that all binding sites need to be bound for transcription initiation.

Identifying fast decision promoter architectures

The promoter model

We model the hb promoter as six Bcd binding sites (Schröder et al., 1988; Driever et al., 1989; Struhl et al., 1989; Ochoa-Espinosa et al., 2005) that determine the activity of the gene (Figure 2a). Bcd molecules bind to and unbind from each of the sites with rates μi and νi, which are allowed to be different for each site. For simplicity, the gene can only take two states : either it is silenced (OFF) and mRNA is not produced, or the gene is activated (ON) and mRNA is produced at full speed. While models that involve different levels of polymerase loading are biologically relevant and interesting, the simplified model allows us to gain more intuition and follows the worst-case scenario logic that we discussed in the previous subsection ’The decision process of the anterior vs posterior hunchback expression’. The same remark applies for the wide variety of promoter architectures considered in previous works (Estrada et al., 2016; Tran et al., 2018). In particular, we assume that only the number of sites that are bound matters for gene activation (and not the specific identity of the sites). Such architectures are again a subset of the range of architectures considered in Estrada et al., 2016; Tran et al., 2018.

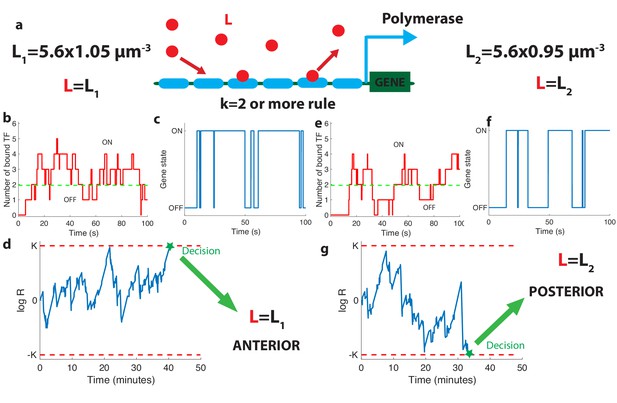

The relation between promoter structure and on-the-fly decision-making.

(a). Using six Bicoid binding sites, the promoter decides between two hypothetical Bcd concentrations and , given the actual (unknown) concentration L in the nucleus. The number of occupied Bicoid sites fluctuates with time (b) and we assume the gene is expressed (c) when the number of occupied Bicoid binding sites on the promoter is (green dashed line, here ). The gene activity defines a non-Markovian telegraph process. The ratio of the likelihoods that the time trace of this telegraph process is generated by vs is the log-likelihood ratio used for decision-making (d). The log-likelihood ratio undergoes random excursions until it reaches one of the two decision boundaries (K, −K). In d. the actual concentration is and the log-likelihood ratio hits the upper barrier and makes the right decision. When , less Bicoid-binding sites are occupied (e) and the gene is less likely to be expressed (f), resulting in a negative drift in the log-likelihood ratio, which directs the random walk to the lower boundary −K and the decision (g). We consider that all six binding sites bind Bicoid independently and are identical with binding rate per site and unbinding rate per site , , , for panels (b, c, d) and (e, f, g).

The dynamics of our model is a Markov chain with seven states with probability Pi corresponding to the number of sites occupied: from all sites unoccupied (probability P0) to all six sites bound by Bcd molecules (probability P6). The minimum number k of bound Bicoid sites required to activate the gene divides this chain into the two disjoint and complementary subsets of active states (, for which the gene is activated) and inactive states (, for which the gene is silenced) as illustrated in Figure 2b and d.

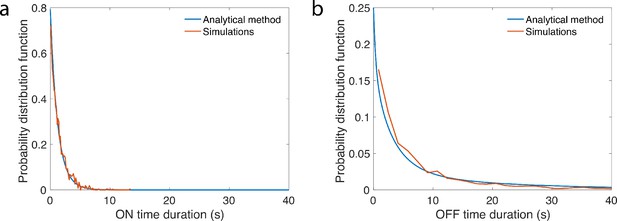

As Bicoid ligands bind and unbind the promoter (Figure 2b), the gene is successively activated and silenced (Figure 2c). This binding/unbinding dynamics results in a series of OFF and ON activation times that constitute all the information about the Bcd concentration available to downstream processes to make a decision. We note that the idea of translating the statistics of binding-unbinding times into a decision remains the same as in the Berg-Purcell approach, where only the activation times are translated into a decision (and not the deactivation times). The promoter architecture determines the relationship between Bcd concentration and the statistics of the ON-OFF activation time series, which makes it a key feature of the positional information decision process. Following (Siggia and Vergassola, 2013), we model the decision process as a Sequential Probability Ratio Test (SPRT) based on the time series of gene activation. At each point in time, SPRT computes the likelihood P of the observed time series under both hypotheses and and takes their ratio : . The logarithm of undergoes stochastic changes until it reaches one of the two decision threshold boundaries K or −K (symmetric boundaries are used here for simplicity) (Figure 2d). The decision threshold boundaries K are set by the error rate e for making the wrong decision between the hypothetical concentrations : (see Siggia and Vergassola, 2013 and Appendix 3). The choice of K or e depends on the level of reproducibility desired for the decision process. We set , corresponding to the widely used error rate of being more than one standard deviation away from the mean of the estimate for the concentration assumed to be unbiased in a Gaussian model (see the subsection ’The decision process of the anterior vs posterior hunchback expression’). The statistics of the fluctuations in likelihood space are controlled by the values of the Bcd concentrations: when Bcd concentration is low, small numbers of Bicoid ligands bind to the promoter (Figure 2e) and the hb gene spends little time in the active expression state (Figure 2f), which leads to a negative drift in the process and favors the lower one of the two possible concentrations (Figure 2g).

Mean decision time: connecting drift-diffusion and Wald’s approaches

In this section, we develop new methods to determine the statistics of gene switches between the OFF and ON expression states. Namely, by relating Wald’s approach (Wald, 1945a) with drift-diffusion, we establish the equality between the drift and diffusion coefficients in decision making space for difficult decision problems, that is, when the discrimination is hard, we elucidate the reason underlying the equality. That allows us to effectively determine long-term properties of the likelihood log-ratio and compute mean decision times for complex architectures.

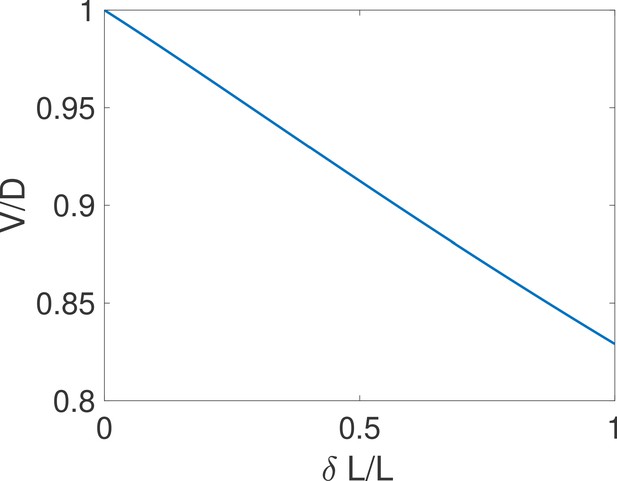

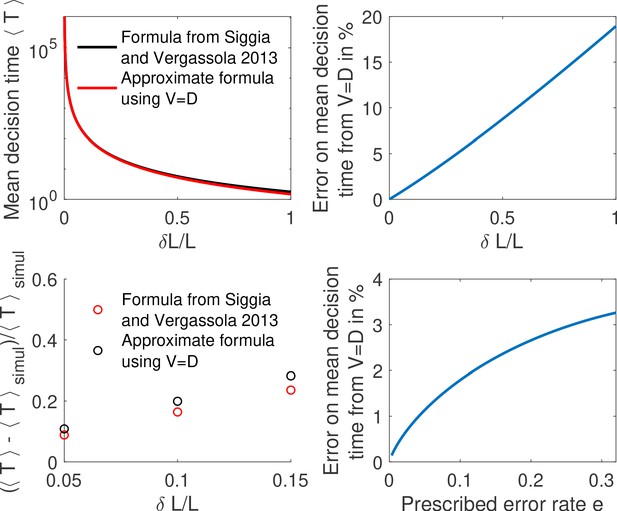

A gene architecture consists of N binding sites and is represented by N + 1 Markov states corresponding to the number of bound TF, and the rates at which they bind or unbind (Figure 3a). For a given architecture, the dynamics of binding/unbinding events and the rules for activation define the two probability distributions and for the duration of the OFF and ON times, respectively (Figure 3b). The two series are denoted and , where and are the number of switching events in time t from OFF to ON and vice versa. For those cases where the two concentrations L1 and L2 are close and the discrimination problem is difficult (which is the case of the Drosophila embryo), an accurate decision requires sampling over many activation and deactivation events to achieve discrimination. The logarithm of the ratio can then be approximated by a drift–diffusion equation: , where V is the constant drift, that is, the bias in favor of one of the two hypotheses, D is the diffusion constant and a standard Gaussian white noise with zero mean and delta-correlated in time (Wald, 1945a; Siggia and Vergassola, 2013). The decision time for the case of symmetric boundaries , is given by the mean first-passage time for this biased random walk in the log-likelihood space (Redner, 2001; Siggia and Vergassola, 2013):

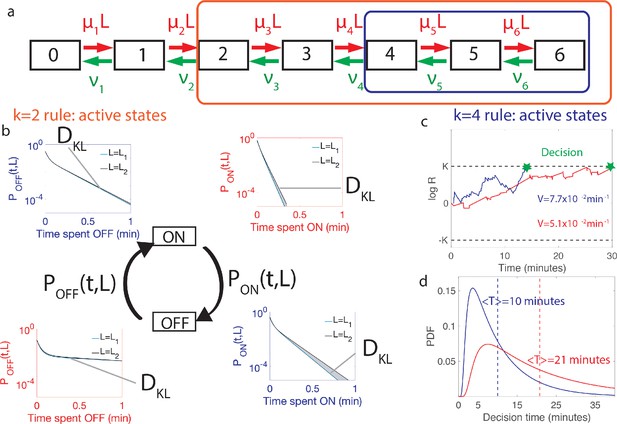

Comparing the performance of two promoter activation rules.

(a) The dynamics of the six Bcd binding site promoter is represented by a seven state Markov chain where the state number indicates the number of occupied Bicoid-binding sites. The boxes indicate the states in which the gene is expressed for the 2-or-more activation rule (red box and red in panels b-d) where the gene is active when 2-or-more TF are bound and the 4-or-more activation rule (blue box and blue in panels b-d) where the gene is active when 4-or-more TF are bound. (b) The dynamics of TF binding translates into bursting and inactive periods of gene activity. The OFF and ON time distributions are different for the two hypothetical concentrations (blue boxes for and red boxes for ). The Kullback-Leibler divergence between the distributions for the two hypothetical concentrations () sets the decision time and is related to the difference in the area below the two distributions. For the activation rule, the OFF time distributions are similar for the two hypothetical concentrations but the ON times distributions are very different. The ON times are more informative for the activation rule than the activation rule (c) The drift V of the log-likelihood ratio characterizes the deterministic bias in the decision process. The differences in (b) translate into larger drift for for the same binding/unbinding dynamics. (d) The distribution of decision times (calculated as the first-passage time of the log-likelihood random walk) decays exponentially for long times. Higher drift leads to on average faster decisions than for the activation rule (mean decision times are shown in dashed lines). For all panels the six binding sites are independent and identical with , , , and for all binding sites.

Note that in this approximation all the details of the promoter architecture are subsumed into the specific forms of the drift V and the diffusion D.

Drift. We assume for simplicity that the time series of OFF and ON times are independent variables (when this assumption is relaxed, see Appendix 8). This assumption is in particular always true when gene activation only depends on the number of bound Bicoid molecules. Under these assumptions, we can apply Wald’s equality (Wald, 1945b; Durrett, 2010) to the log-likelihood ratio, . Wald considered the sum of a random number M of independent and identically distributed (i.i.d.) variables. The equality that he derived states that if M is independent of the outcome of variables with higher indices (i.e. M is a stopping time), then the average of the sum is the product .

Wald’s equality applies to our likelihood sum ( of the likelihoods, where M is the number of ON and OFF times before a given (large) time t). We conclude the drift of the log-likelihood ratio, , is inversely proportional to , where is the mean of the distribution of ON times and is the mean of the distribution of OFF times . The term determines the average speed at which the system completes an activation/deactivation cycle, while the average describes how much deterministic bias the system acquires on average per activation/deactivation cycle. The latter can be re-expressed in terms of the Kullback-Leibler divergence between the distributions of the OFF and ON times calculated for the actual concentration L and each one of the two hypotheses, L1 and L2 :

Equation 2 quantifies the intuition that the drift favors the hypothetical concentration with the time distribution which is the closest to that of the real concentration L (Figure 3b).

Diffusivity : Why it is more involved to calculate and how we circumvent it. While the drift has the closed simple form in Equation 2, the diffusion term is not immediately expressed as an integral. The qualitative reason is as follows. Computing the likelihood of the two hypotheses requires computing a sum where the addends are stochastic (ratios of likelihoods) and the number of terms is also stochastic (the number of switching events). These two random variables are correlated: if the number of switching events is large, then the times are short and the likelihood is probably higher for large concentrations. While the drift is linear in the above sum (so that the average of the sum can be treated as shown above), the diffusivity depends on the square of the sum. The diffusivity involves then the correlation of times and ratios (Carballo-Pacheco et al., 2019), which is harder to obtain as it depends a priori on the details of the binding site model (see the subsection ’Equality between drift and diffusivity’ and the subsection ’When are correlations between the times of events leading to decision important?’ of Appendix 3 for details).

We circumvent the calculation of the diffusivity by noting that the same methods used to derive Equation (1) also yield the probability of first absorption at one of the two boundaries, say +K (see the subsection ’Equality between drift and diffusivity’ of Appendix 3):

By imposing , we obtain and the comparison with the expression of leads to the equality .

The above equality is expected to hold for difficult decisions only. Indeed, drift-diffusion is based on the continuity of the log-likelihood process and Wald’s arguments assume the absence of substantial jumps in the log-likelihood over a cycle. In other words, the two approaches overlap if the hypotheses to be discriminated are close. For very distinct hypotheses (easy discrimination problems), the two approaches may differ from the actual discrete process of decision and among themselves. We expect then that holds only for hypotheses that are close enough, which is verified by explicit examples (see the subsection ’Equality between drift and diffusivity’ of Appendix 3). The Appendix subsection also verifies by expanding the general expression of V and D for close hypotheses. The origin of the equality is discussed below.

Using , we can reduce the general formula Equation (1) to

which is formula 4.8 in Wald, 1945a and it is the expression that we shall be using (unless stated otherwise) in the remainder of the paper.

The additional consequence of the equality is that the argument of the hyperbolic tangent in Equation (1) is . It follows that for any problem where the error , the argument of the hyperbolic tangent is large and the decision time is weakly dependent on deviations to that occur when the two hypotheses differ substantially. A concrete illustration is provided in the subsection ’The first passage time to decision’ of Appendix 3.

Single binding site example. As an example of the above equations, we consider the simplest possible architecture with a single binding site (), where the gene activation and de-activation processes are Markovian. In this case, the de-activation rate ν is independent of TF concentration and the activation rate is exponentially distributed . We can explicitly calculate the drift (Equation 2) and expand it for and , at leading order in . Inserting the resulting expression into Equation 4, we conclude that

decreases with increasing relative TF concentration difference and gives a very good approximation of the complete formula (see Appendix 3—figure 2a–c with different values of ).

Equations 2 and 4 greatly reduce the complexity of evaluating the performance of architectures, especially when the number of binding sites is large. Alternatively, computing the correlation of times and log-likelihoods would be increasingly demanding as the size of the gene architecture transfer matrices increase. As an illustration, Figure 3 compares the performance of different activation strategies : the 2-or-more rule (), which requires at least two Bcd-binding sites to be occupied for hb promoter activation (Figure 3a–d in blue), and the 4-or-more rule () (Figure 3a–d in red) for fixed binding and unbinding parameters. Figure 3c shows that stronger drifts lead to faster decisions. The full decision time probability distribution is computed from the explicit formula for its Laplace transform (Siggia and Vergassola, 2013, Figure 3d). With the rates chosen for Figure 3, the rule leads to an ON time distribution that varies strongly with the concentration, making it easier to discriminate between similar concentrations: it results in a stronger average drift that leads to a faster decision than (Figure 3d).

What is the origin of the equality? The special feature of the SPRT random process is that it pertains to a log-likelihood. This is at the core of the equality that we found above. First, note that the equality is dimensionally correct because log-likelihoods have no physical dimensions so that both V and D have units of . Second, and more important, log-likelihoods are built by Bayesian updating, which constrains their possible variations. In particular, given the current likelihoods and at time t for the two concentrations L1 and L2 and the respective probabilities and of the two hypotheses, it must be true that the expected values after a certain time remain the same if the expectation is taken with respect to the current (see, e.g. Reddy et al., 2016). In formulae, this implies that the average variation of the probability over a given time that is

should vanish (see the subsection 'Equality between drift and diffusivity’ of Appendix 3 for a derivation). Here, is the expected variation of under the assumption that hypothesis 1 is true and is the same quantity but under the assumption that hypothesis 2 is true. We notice now that , where is the log-likelihood, and that the drift-diffusion of the log-likelihood implies that , and . By using that and , we finally obtain that

and imposing yields the equality . Note that the above derivation holds only for close hypotheses, otherwise the velocity and the diffusivity under the two hypotheses do not coincide.

Additional embryological constraints on promoter architectures

In addition to the requirements imposed by their performance in the decision process (green dashed line in Figure 4a), promoter architectures are constrained by experimental observations and properties that limit the space of viable promoter candidates for the fly embryo. A discussion about their possible function and their relation to downstream decoding processes is deferred to the final section.

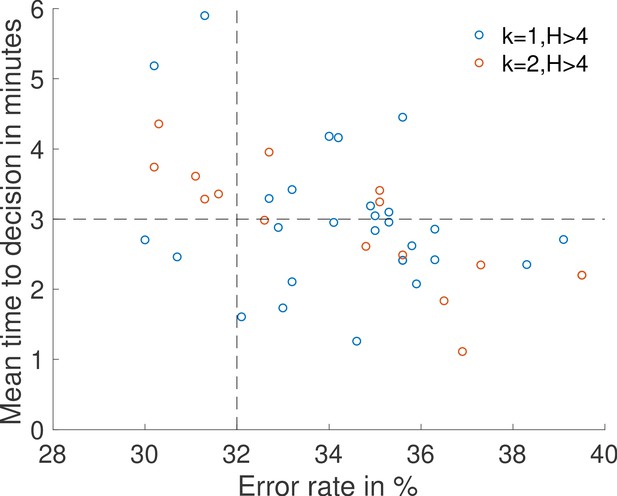

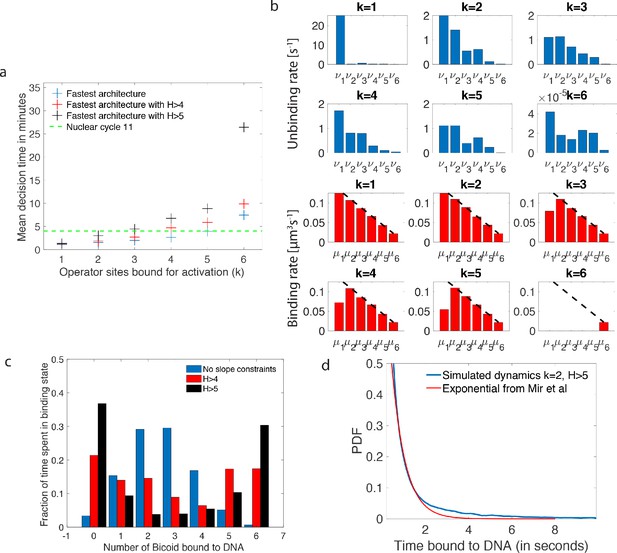

Performance, constraints and statistics of fastest decision-making architectures.

(a) Mean decision time for discriminating between two concentrations with and . Results shown for the fastest decision-making architectures for different activation rules and steepness constraints. For a given activation rule (k), we optimize over all values of ON rates and OFF rates (see Figure 3a) within the diffusion limit ( per site), constraining the steepness H and probability of nuclei to be active at the boundary (see the paragraph ’Additional embryological constraints on promoter architectures’). The green lines denotes the interphase duration of nuclear cycle 11 and even for the strongest constraints () we identify architectures that make an accurate decision within this time limit. (b) The unbinding rates (blue) and binding rates (red) of the fastest decision-making architectures with – all these regulatory systems require cooperativity in TF binding to the promoter-binding sites. The dashed line on the ON rates plots shows the upper bound set by the diffusion limit. (c) Histogram of the probability distribution of the time spent in different Bcd-binding site occupancy states for the fastest decision-making architecture for and no constraints on the slope (blue), (red) and (black). (d) Probability distribution of the time spent bound to th DNA by Bicoid molecules for the fastest decision-making architecture with and . Our prediction is compared to the exponential distribution with parameters fit by Mir et al., 2017, for the specific binding at the boundary. While the distributions are close, our simulated distribution is not exponential, as expected for the 6-binding site architecture. The non-exponential behavior in the experimental curve is likely masked by the convolution with non-specific binding. We use the boundary region concentration (see panel b, for rates).

First, we require that the average probability for a nucleus to be active in the boundary region is equal to 0.5, as it is experimentally observed (Lucas et al., 2013; Figure 1a). This requirement mainly impacts and constrains the ratio between binding rates and unbinding rates .

Second, there is no experimental evidence for active search mechanisms of Bicoid molecules for its targets. It follows that, even in the best case scenario of a Bcd ligand in the vicinity of the promoter always binding to the target, the binding rate is equal to the diffusion limited arrival rate (Appendix 1). As a result, the binding rates are limited by diffusion arrivals and the number of available binding sites: (black dashed line in Figure 4b), where L is the concentration of Bicoid. This sets the timescale for binding events. In Appendix 1, we explore the different measured values and estimates of parameters defining the diffusion limit and their influence on the decision time (see Appendix 1—table 1 for all the predictions).

Third, as shown in Figure 1, the hunchback response is sharp, as quantified by fitting a Hill function to the expression level vs position along the egg length. Specifically, the hunchback expression (in arbitrary units) is well approximated as a function of the Bicoid concentration by the Hill function , where is the Bcd concentration at the half-maximum hb expression point and H is the Hill coefficient (Figure 1a). Experimentally, the measured Hill coefficient for mRNA expression from the WT hb promoter is (Lucas et al., 2018; Tran et al., 2018). Recent work (Tran et al., 2018) suggests that these high values might not be achieved by Bicoid-binding sites only. Given current parameter estimates and an equilibrium binding model, (Tran et al., 2018) shows that a Hill coefficient of 7 is not achievable within the duration of an early nuclear cycle ( min). That points at the contribution of other mechanisms to pattern steepness. Given these reasons (and the fact that we limit ourselves only to a model with six equilibrium Bcd-binding sites only), we shall explore the space of possible equilibrium promoter architectures limiting the steepness of our profiles to Hill coefficients .

Numerical procedure for identifying fast decision-making architectures

Using Equations 2 and 4, we explore possible hb promoter architectures and activation rules to find the ones that minimize the time required for an accurate decision, given the constraints listed in the paragraph ‘Additional embryological constraints on promoter architectures’. We optimize over all possible binding rates (μ1 is the binding rate of the first Bcd ligand and the binding rate of the last Bcd ligand when 5 Bcd ligands are already bound to the promoter), and the unbinding rates (ν1 is the unbinding rate of a single Bcd ligand bound to the promoter and is the unbinding rate of all Bcd ligands when all six Bcd-binding sites are occupied). We also explore different activation rules by varying the minimal number of Bcd ligands k required for activation in the k-or-more activation rule (Estrada et al., 2016; Tran et al., 2018). We use the most recent estimates of biological constants for the hb promoter and Bcd diffusion (see Appendix 1) and set the error rate at the border to 32% (Gregor et al., 2007b; Petkova et al., 2019). Reasons for this choice were given in the subsection ‘The decision process of the anterior vs posterior hunchback expression’ and will be revisited in the subsection ‘How many nuclei make a mistake?’, where we shall introduce some embryological considerations on the number of nuclei involved in the decision process and determine the error probability accordingly. The optimization procedure that minimized the average decision time for different values of k and H is implemented using a mixed strategy of multiple random starting points and steepest gradient descent (Figure 4a).

Logic and properties of the identified fast decision architectures

The main conclusion we reach using the methodology presented in the 'Methodological setup' section is that there exist promoter architectures that reach the required precision within a few minutes and satisfy all the additional embryological constraints that were discussed previously (Figure 4a). The fastest promoters (blue crosses in Figure 4a) reach a decision within the time of nuclear cycle 11 (green line in Figure 4a) for a wide range of activation rules. Even imposing steep readouts () allows us to identify relatively fast promoters, although imposing the nuclear cycle time limit, pushes the activation rule to smaller k. Interestingly, we find that the fastest architectures identified perform well over a range of high enough concentrations (Appendix 4—figure 1). The optimal architectures differ mainly by the distribution of their unbinding rates (Figure 4b). We shall now discuss their properties, namely the binding times of Bicoid molecules to the DNA binding sites, and the dependence of the promoter activity on various features, such as activation rules and the number of binding sites in detail. Together, these results elucidate the logic underlying the process of fast decision-making.

How many nuclei make a mistake?

The precision of a stochastic readout process is defined by two parameters: the resolution of the readout , and the probability of error, which sets the reproducibility of the readout. In Figure 4, we have used the statistical Gaussian error level (32%) to obtain our results. However, the error level sets a crucial quantity for a developing organism and it is important to connect it with the embryological process, namely how many nuclei across the embryo will fail to properly decide (whether they are positioned in the anterior or in the posterior part of the embryo). To make this connection, we compute this number for a given average decision time t and we integrate the error probability along the AP axis to obtain the error per nucleus . The expected number of nuclei that fail to correctly identify their position is given by , where c is the nuclear cycle and we have neglected the loss due to yolk nuclei remaining in the bulk and arresting their divisions after cycle 10 (Foe and Alberts, 1983). Assuming a 270 s readout time – the total interphase time of nuclear cycle 11 (Tran et al., 2018) – for the fastest architecture identified above and an error rate of 32%, we find that , that is an essentially fail-proof mechanism. This number can be compared with >30 nuclei in the embryo that make an error in a read-out in a Berg-Purcell-like fixed-time scheme (integrated blue area in Figure 1c).

Conversely, for a given architecture, reducing the error level increases drastically the mean first-passage time to decision: the mean time for decision as a function of the error rate for the fastest architecture identified with and is shown in Appendix 2—figure 1. The decision can be made in about a minute for but requires on average 10 min for (Appendix 2—figure 1). Note that, because the mean first-passage depends simply on the inverse of the drift per cycle (Equation 4), the relative performance of two architectures is the same for any error rate so that the fastest architectures identified in Figure 4a are valid for all error levels.

Just like for the fixed-time strategy (Figure 1c and d), nuclei located in the mid-embryo region are more likely to make mistakes and take longer on average to trigger a decision (Appendix 4—figure 2).

Residence times among the various states

As shown in Tran et al., 2018, high Hill coefficients in the hunchback response are associated with frequent visits of the extreme expression states where available binding sites are either all empty (state 0), or all occupied (state 6). Figure 4c provides a concrete illustration by showing the distribution of residence times for the promoter architectures that yield the fastest decision times for and no constraints (blue bars), (red bars) and (black bars). When there are no constraints on the slope of the hunchback response, the most frequently occupied states are close to the ON-OFF transition (2 and 3 occupied binding sites in Figure 4c) to allow for fast back and forth between the active and inactive states of the gene and thereby gather information more rapidly by reducing (see formulae 2 and 4).

We notice that for higher Hill coefficients, the system transits quickly through the central states (in particular states with 3 and 4 occupied Bcd sites, Figure 4 red and black bars). As expected for high Hill coefficients, such dynamics requires high cooperativity. Cooperativity helps the recruitment of extra transcription factors once one or two of them are already bound and thus speeds up the transitioning through the states with 2, 3 and 4 occupied binding sites. An even higher level of cooperativity is required to make TF DNA binding more stable when 5 or 6 of them are bound, reducing the OFF rates or (Figure 4b).

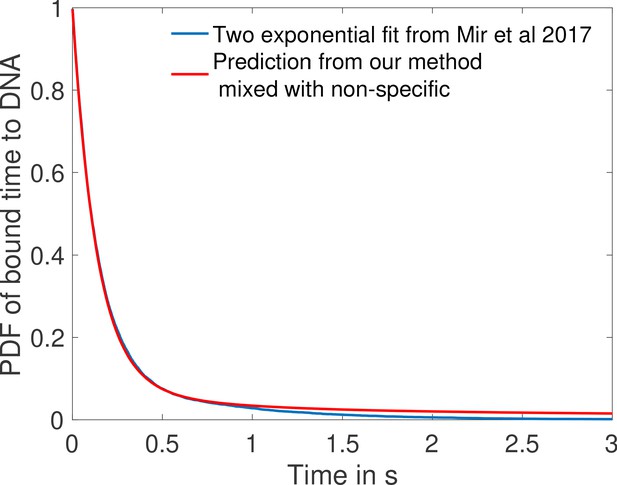

The (short) binding times of Bicoid on DNA

The distribution of times spent bound to DNA of individual Bicoid molecules is shown in Figure 4d obtained from Monte Carlo simulations using rates from the fastest architecture with and . We find an exponential decay, an average bound time of about 7.1 s and a median around 0.5 s. Our median-time-bound prediction is of the same order of magnitude as the observed bound times seen in recent experiments by Mir et al., 2017; Mir et al., 2018, who found short (mean ∼0.629 s and median ∼0.436 s based on exponential fits), yet quite distinguishable from background, bound times to DNA. These results were considered surprising because it seemed unclear how such short binding events could be consistent with the processing of ON and OFF gene switching events. Our results show that such short binding times may actually be instrumental in achieving the tradeoff between accuracy and speed, and rationalize how longer activation events are still achieved despite the fast binding and unbinding. High cooperativity architectures lead to non-exponential bound times to DNA (Figure 4d) for which the typical bound time (median) is short but the tail of the distribution includes slower dynamics that can explain longer activation events (the mean is much larger than the median). This result suggests that cells can use the bursty nature of promoter architectures to better discriminate between TF concentrations.

In Mir et al., 2017, the raw distribution comprises both non-specific and specific binding and cannot be directly compared to simulation results. Instead, we use the largest of the two exponents fit for the boundary region (Mir et al., 2017), which should correspond to specific binding. The agreement between the distributions in Figure 4d is overall good, and we ascribe discrepancies to the fact that (Mir et al., 2017) fit two exponential distributions assuming the observed times were the convolution of exponential specific and non-specific binding times. Yet the true specific binding time distribution is likely not exponential, e.g. due to the effect of binding sites having different binding affinities. We show in Appendix 5—figure 1 that the two distributions are very similar and hard to distinguish once they are mixed with the non-specific part of the distribution.

Activation rules

In the parameter range of the early fly embryo, the fastest decision-making architectures share the one-or-more () activation rule : the promoter switches rapidly between the ON and OFF expression states and the extra binding sites are used for increasing the size of the target rather than building a more complex signal. Architectures with and activation rules can make decisions in less than 270 s and satisfy all the required biological constraints. Generally speaking, our analysis predicts that fast decisions require a small number of Bicoid-binding sites (less than three) to be occupied for the gene to be active. The advantage of the or activation rules is that the ON and OFF times are on average longer than for , which makes the downstream processing of the readout easier. We do not find any architecture satisfying all the conditions for the activation rules, although we cannot exclude there could be some architectures outside of the subset that we managed to sample, especially for the activation rules where we did identify some promoter structures that are close to the time constraint.

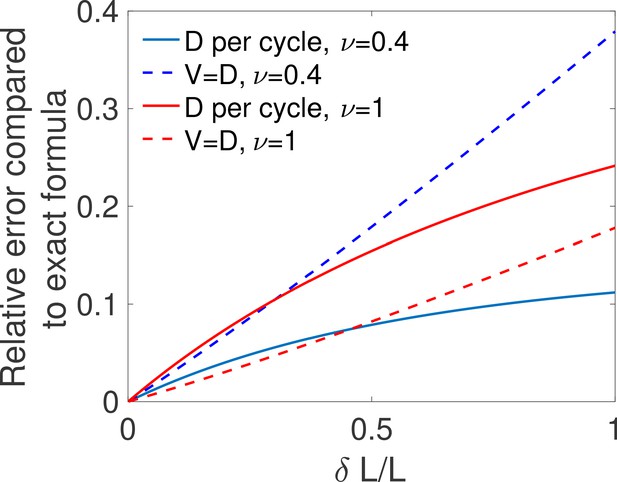

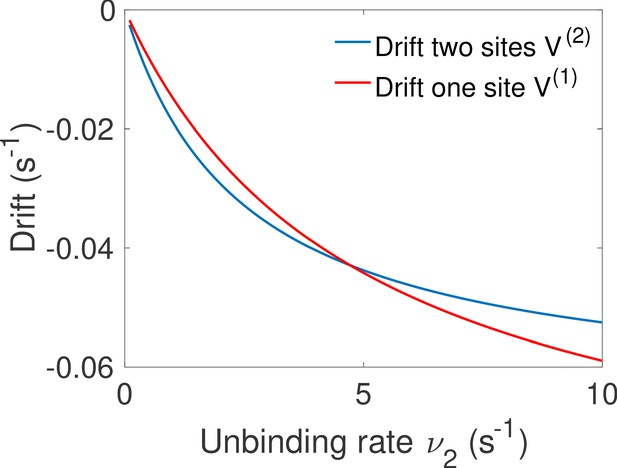

Activation rules with higher k can give higher information per cycle for the ON rate, yet they do not seem to lead to faster decisions because of the much longer duration of the cycles. To gain insight on how the tradeoff between fast cycles and information affects the efficiency of activation rules, we consider architectures with only two binding sites, which lend to analytical understanding (Figure 5a and b). Both of these considered architectures are out of equilibrium and require energy consumption (as opposed to the two equilibrium architectures of Figure 5c and d).

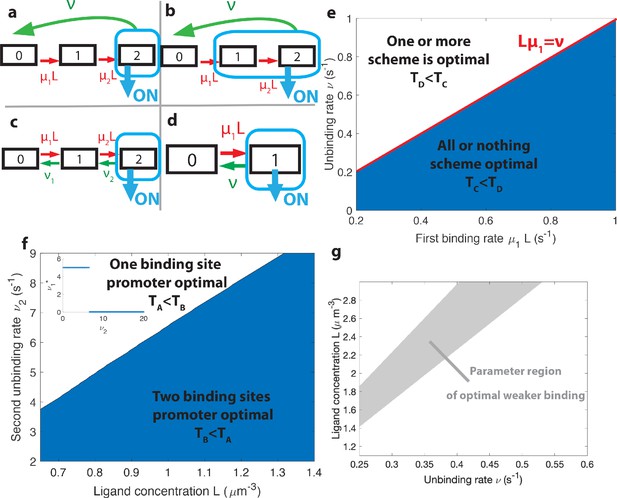

The effects of different promoter architectures on the mean decision time.

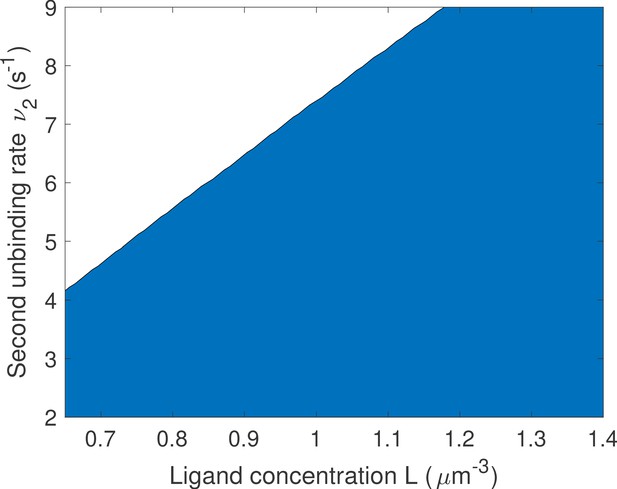

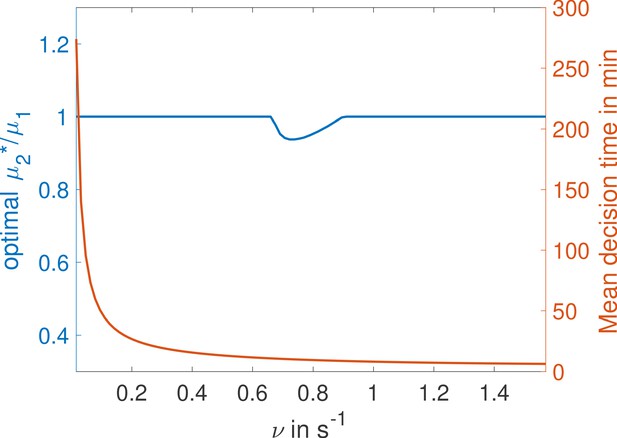

We compare promoters of different complexity: the all-or-nothing out-of-equilibrium model (a), the 1-or-more out-of-equilibrium model (b), the two binding site all-or-nothing equilibrium model (c) and the one binding site equilibrium model (d). (e) Comparison of the mean decision time between (a) and (b) activation schemes for the two binding site out-of-equilibrium models as a function of the unbinding rate ν and binding rate μ1. The binding rate μ2 is fixed to . The fastest decision-making solution associates the second binding with slower variables to maximize V. Along the line the activation rules and perform at the same speed. . (f) Comparison of the mean decision time for equilibrium architectures with one (d) and two (c) equilibrium TF-binding sites. are optimized at fixed , . We set a maximum value of for μ1 and μ2, corresponding to the diffusion limited arrival at the binding site. For ν1, the maximum value of 5 s−1 corresponds to the inverse minimum time required to read the presence of a ligand, or to differentiate it from unspecific binding of other proteins. Additional binding sites are beneficial at high ligand concentrations and for small unbinding rates. In the blue region, the fastest mean decision time for a fixed accuracy assuming equilibrium binding, comes from a two binding site architecture with a non-zero first unbinding rate. In the white region, one of the binding rates →0 (see inset), which reduces to a one binding site model. . (g) Weaker binding sites can lead to faster decision times within a range of parameters (gray stripe). We consider the activation scheme with two binding sites (b). For fixed L (x axis), ν (y axis) and , we optimize over μ2 while setting (context of diffusion limited first and second bindings). The gray regions corresponds to parameters for which the optimal second unbinding rate and the second binding is weak. In the white region . For all panels , , .

When is 1-or-more faster than all-or-nothing activation?

A first model has the promoter consisting of two binding sites with the all-or-nothing rule (Figure 5a). We consider the mathematically simpler, although biologically more demanding, situation where TFs cannot unbind independently from the intermediate states – once one TF binds, all the binding sites need to be occupied before the promoter is freed by the unbinding with rate ν of the entire complex of TFs. This situation can be formulated in terms of a non-equilibrium cycle model, depicted for two binding sites in Figure 5a. The activation time is a convolution of the exponential distributions . In the simple case, when the two binding rates are similar (), the OFF times follow a Gamma distribution and the drift and diffusion can be computed analytically (see Appendix 4). When the two binding rates are not similar the drift and diffusion must be obtained by numerical integration (see Appendix 4).

In the first model described above (Figure 5a), deactivation times are independent of the concentration and do not contribute to the information gained per cycle and, as a result, to . To explore the effect of deactivation time statistics on decision times, we consider a cycle model where the gene is activated by the binding of the first TF (the 1-or-more rule) and deactivation occurs by complete unbinding of the TFs complex (Figure 5b). The resulting activation times are exponentially distributed and contribute to drift and diffusion as in the simple two state promoter model (Figure 5d). The deactivation times are a convolution of the concentration-dependent second binding and the concentration-independent unbinding of the complex and their probability distribution is . Drift and diffusion can be obtained analytically (Appendix 4). The concentration-dependent deactivation times prove informative for reducing the mean decision time at low TF concentrations but increase the decision time at high TF concentrations compared to the simplest irreversible binding model. In the limit of unbinding times of the complex () much larger than the second binding time (), no information is gained from deactivation times. In the limit of , the model reduces to a one binding site exponential model and the two architectures (Figure 5b and d) have the same asymptotic performance.

Within the irreversible schemes of Figure 5a and Figure 5b, the average time of one activation/deactivation cycle is the same for the all-or-nothing and 1-or-more activation schemes. The difference in the schemes comes from the information gained in the drift term , which begs the question : is it more efficient to deconvolve the second binding event from the first one within the all-or-nothing activation scheme, or from the deactivation event in the activation scheme?

In general, the convolution of two concentration-dependent events is less informative than two equivalent independent events, and more informative than a single binding event. For small concentrations L, activation events are much longer than deactivation events. In the scheme, OFF times are dominated by the concentration-dependent step and the two activation events can be read independently. This regime of parameters favors the rule (Figure 5e). However, when the concentration L is very large the two binding events happen very fast and for , in the scheme, it is hard to disentangle the binding and the unbinding events. The information gained in the second binding event goes to 0 as and the one-or-more activation scheme (Figure 5b) effectively becomes equivalent to a single binding site promoter (Figure 5d), making the all-or-nothing activation (Figure 5a) scheme more informative (Figure 5e). The fastest decision time architecture systematically convolves the second binding event with the slowest of the other reactions (Figure 5e), with the transition between the two activation schemes when the other reactions have exactly equal rates ( line in Figure 5e) (see Appendix 6 for a derivation).

How the number of binding sites affects decisions

The above results have been obtained with six binding sites. Motivated by the possibility of building synthetic promoters (Park et al., 2019) or the existence of yet undiscovered binding sites, we investigate here the role of the number of binding sites. Our results suggest that the main effect of additional binding sites in the fly embryo is to increase the size of the target (and possibly to allow for higher cooperativity and Hill coefficients). To better understand the influence of the number of binding sites on performance at the diffusion limit, we compare a model with one binding site (Figure 5d) to a reversible model with two binding sites where the gene is activated only when both binding sites are bound (all-or-nothing , Figure 5c). Just like for the six binding site architectures, we describe this two binding site reversible model by using the transition matrix of the Markov chain and calculate the total activation time .

For fixed values of the real concentration L, the two hypothetical concentrations L1 and L2, the error e and the second off-rate , we optimize the remaining parameters μ1, μ2 and ν1 for the shortest average decision time.

For high gene deactivation rates , the fastest decision time is achieved by a promoter with one binding site (Figure 5f): once one ligand has bound, the promoter never goes back to being completely unbound (=0 in Figure 5c) but toggles between one and two bound TF (Figure 5d with and ). For lower values of gene deactivation rates , there is a sharp transition to a minimal solution using both binding sites. In the all-or-nothing activation scheme that is used here, the distribution of deactivation times is ligand independent and the concentration is measured only through the distribution of activation times, which is the convolution of the distributions of times spent in the 0 and 1 states before activation in the two state. For very small deactivation rates, it is more informative to 'measure’ the ligand concentration by accumulating two binding events every time the gene has to go through the slow step of deactivating (Figure 5c). However, for large deactivation rates little time is 'lost’ in the uninformative expressing state and there is no need to try and deconvolve the binding events but rather use direct independent activation/deactivation statistics from a single binding site promoter (Figure 5d, see Appendix 7 for a more detailed calculation).

The role of weak binding sites

An important observation about the strength of the binding sites that emerge from our search is that the binding rates are often below the diffusion limit (see black dashed line in Figure 4b) : some of the ligands reach the receptor, they could potentially bind but the decision time decreases if they do not. In other words, binding sites are 'weak’ and, since this is also a feature of many experimental promoters (Gertz et al., 2009), the purpose of this section is to investigate the rationale for this observation by using the models described in Figure 5.

Naively, it would seem that increasing the binding rate can only increase the quality of the readout. This statement is only true in certain parameter regimes, and weaker binding sites can be advantageous for a fast and precise readout. To provide concrete examples, we fix the deactivation rate ν and the first binding rate μ1 in the 1-or-more irreversible binding model of Figure 5b and we look for the unbinding rate that leads to the fastest decision. We consider a situation where the two binding sites are not interchangeable and binding must happen in a specific order. In this case, the diffusion limit states that if the first binding is strong and happens at the diffusion limit. We optimize the mean decision time for (see Appendix 9—figure 1 for an example) and find a range of parameters where the fastest-decision value is not as fast as parameter range allows (Figure 5g). We note that this weaker binding site that results in fast decision times can only exist within a promoter structure that features cooperativity. In this specific case, the first binding site needs to be occupied for the second one to be available. If the two binding sites are independent, then the diffusion limit is and the fastest solution always has the fastest possible binding rates.

Predictions for Bicoid-binding sites mutants

In addition to results for wild type embryos, our approach also yields predictions that could be tested experimentally by using synthetic hb promoters with a variable numbers of Bicoid-binding sites (Figure 6a). For any of the fast decision-making architectures identified and activation rules chosen, we can compute the effects of reducing the number of binding sites. Specifically, our predictions for the activation rule and in Figure 6b can be compared to FISH or fluorescent live imaging measurements of the fraction of active loci at a given position along the anterior-posterior axis. Bcd-binding site mutants of the WT promoter have been measured by immunostaining in cycle 14 (Park et al., 2019), although mRNA experiments in earlier cell cycles of well characterized mutants are needed to provide for a more quantitative comparison.

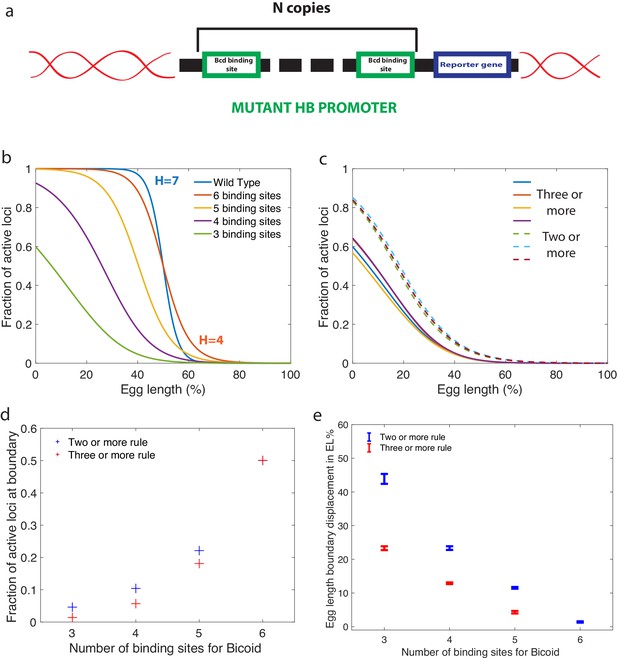

Predictions for experiments with synthetic hb promoters.

(a) We consider experiments involving mutant Drosophilae where a copy of a subgroup of the Bicoid-binding sites of the hunchback promoter is inserted into the genome along with a reporter gene to measure its activity. (b) The prediction for the activation profile across the embryo for wild type and mutants for the fastest decision time architecture for and . (c) The gene activation profile for several architectures for and (full lines) and (dashed lines) that results in mean decision times < 3 minutes. Groups of profiles gather in two distinct clusters. (d) Fraction of genes that are active on average at the expression boundary using the minimal architecture identified for and as a function of the number of binding sites in the hb promoter. Predictions for the six-binding site cases coincide because having half the nuclei active at the boundary is a requirement in the search for valid architectures. (e) Predicted displacement of the boundary region defined as the site of half hunchback expression in terms of egg length as a function of the number of binding sites. The architectures shown result in the fastest decisions for and and . Error bar width is the standard deviation of the various architectures that are close to minimal . For all panels, has an exponentially decreasing profile with decay length one fifth of total egg length with at the boundary. Parameters are given in Appendix 10.

An important consideration for the comparison to experimental data is that there is a priori no reason for the hb promoter to have an optimal architecture. We do find indeed many architectures that satisfy all the experimental constraints and are not the fastest decision-making but 'good enough’ hb promoters. A relevant question then is whether or not similarity in performance is associated with similarity in the microscopic architecture. This point is addressed in Figure 6c, where we compare the fraction of active loci along the AP axis using several constraint-conforming architectures for and the and activation rules. The plot shows that solutions corresponding to the same activation rule are clustered together and quite distinguishable from the rest. This result suggests that the precise values of the binding and unbinding constants are not important for satisfying the constraints, that many solutions are possible, and that FISH or MS2 imaging experiments can be used to distinguish between different activation rules. The fraction of active loci in the boundary region is an even simpler variable that can differentiate between different activation rules (Figure 6d). Lastly, we make a prediction for the displacement of the anterior-posterior boundary in mutants, showing that a reduced numbers of Bcd sites results in a strong anterior displacement of the hb expression border compared to six binding sites, regardless of the activation rule (Figure 6e). Error bars in Figure 6e, that correspond to different close-to-fastest architectures, confirm that these share similar properties and different activation rules are distinguishable.

Joint dynamics of Bicoid enhancer and promoter

The Bicoid transcription factor has been shown to target more than a thousand enhancer loci in the Drosophila embryo with a wide concentration range of sensitivities (Driever and Nüsslein-Volhard, 1988; Struhl et al., 1989; Hannon et al., 2017). Enhancers are of special interest because they can be located far away from the promoter (Ribeiro et al., 2010; Krivega and Dean, 2012) and perform a statistically independent sample of the concentration that is later combined with that of the promoter. Evidence suggests that promoter-based conformational changes can be stable over long times (Fukaya et al., 2016), which mimics information storage during a process of signal integration. To explore these effects, we consider a simple model of enhancer dynamics where a Bicoid-specific enhancer switches between two states ON and OFF independently of the promoter dynamics. We assume a simple rule for the gene activity: the gene is transcribed when both the promoter and the enhancer are ON. As an example, we consider an enhancer made of one binding site so that the ON rate of the enhancer is limited by the diffusion rate . As an example, we perform a parameter search for the promoter activation rule (see Appendix 12), while still assuming that about half the nuclei are active at the boundary and a required Hill coefficient greater than 4, looking for architectures yielding the shortest decision time for an error rate of 32% for the 10% relative concentration difference discrimination problem. We find that the enhancer improves the performance of the readout, reducing the time to decision by about . We find that adding extra binding sites to the promoter increases the computing power of the enhancer-promoter system and can reduce the time to decision to about 60% of the performance of the best architectures without enhancers.

Estimating the log-likelihood function with RNA concentrations

To illustrate how a biochemical network can approximate the calculation of the log-likelihood, we consider the case of the fastest architecture identified in the paragraph ’Numerical procedure for identifying fast decision-making architectures’ with and . In Figure 7a, we show the contributions of the OFF times (blue) and the ON times (red) to the log-likelihood for this architecture. We notice that the behavior of the log-likelihood contributions at long times is simply linear in time with a positive rate for ON times and a negative rate for OFF times. Conversely, short ON times contribute negatively to the log-likelihood while short OFF times contribute positively to the log-likelihood (Figure 7a). This observation suggests a simple model of RNA production with delay to approximate the computation of the log-likelihood. We consider a model of RNA production with five parameters (Figure 7b, details of the model are given in Appendix 11). We assume that when the promoter is in the ON state, polymerase is loaded and RNA is transcribed at a constant rate while when the promoter is in the OFF state, RNA is produced at a lower basal rate . In order to approximate the linear behavior of the log-likelihood function at long times we suggest the existence of an enzyme actively degrading hunchback RNA (Chanfreau, 2017) and include in our model of RNA production the delay and inertia associated with promoter dynamics and polymerase loading. RNA levels fluctuate and trigger decisions when the concentration is greater than a threshold (anterior decision) or lower than a threshold (posterior decision). Since many forms of switch have already been presented in the literature (Goldbeter and Koshland, 1981; Tyson et al., 2003; Ozbudak et al., 2004; Siggia and Vergassola, 2013; Sandefur et al., 2013), we shall concentrate on the log-likelihood calculation and refer to previous references for the implementation of a decision when reaching a threshold.

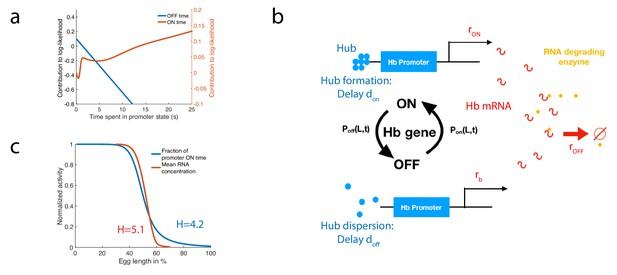

A model of RNA production and degradation approximates the contributions to log-likelihood.

(a) The log-likelihood of different times spent ON (red) and OFF (blue) for the fastest architecture identified with , assuming . The log-likelihood varies linearly with time for long times. (b) The hunchback promoter switches from ON to OFF and from OFF to ON according to the time distributions determined by its gene architecture and activation rule. When ON, after a delay associated with the formation of a cluster or hub (Cho et al., 2016b; Cho et al., 2016a; Mir et al., 2018), RNA is being produced at rate . When OFF, after a delay , the gene switches to basal rate . Hunchback RNA is being degraded actively by an enzyme at rate . The RNA is in excess for this reaction. (c) The model of RNA production with delay that yields an error of less than 32% in less than 3 min produces a profile of RNA production with high Hill coefficient (red lines, renormalized RNA profile) that is higher than the Hill coefficient of renormalized gene activity (blue line). Parameters for promoter activity are those of the fastest architecture identified with and , parameters for RNA production are , , s-1, s-1, s-1.

We look for parameters that satisfy both a high speed and high accuracy requirement for a decision between two points located 2% egg lengths apart across the mid-embryo boundary. For the fastest architecture identified with and , we identify parameters that satisfy and a mean decision time min (see Appendix 11—figure 1). We check that this model produces a profile of RNA that is consistent with the observed high Hill coefficient (Figure 7c). Interestingly, we find that for this particular set of parameters the RNA profile Hill coefficient is increased by the delayed transcription dynamics and the active degradation from (blue line in Figure 7c) up to (red line in Figure 7c, details of the calculation are given in Appendix 11). This result could shed new light on the fundamental limits to Hill coefficients in the context of cooperative TF binding (Estrada et al., 2016; Tran et al., 2018) and provide a possible mechanism to explain how mRNA profiles can reach higher steepness than the corresponding TF activities do. We also looked for parameter sets that approximate the log-likelihood for the optimal architecture identified for and and find several candidates that fall close to the requirement of speed and accuracy (Appendix 11—figure 1). Together these results show that implementing the log-likelihood using a molecular circuit with a hb promoter is possible. They do not show this is what is happening in the embryo itself.

Discussion

The issue of precision in the Bicoid readout by the hunchback promoter has a long history (Nüsslein-Volhard and Wieschaus, 1980; Tautz, 1988). Recent interest was sparked by the argument that the amount of information at the hunchback locus available during one nuclear cycle is too small for the observed 2% EL distance between neighboring nuclei that are able to make reproducible distinct decisions (Gregor et al., 2007b). By using updated estimates of the biophysical parameters (Porcher et al., 2010; Tran et al., 2018), and the Berg-Purcell error estimation, we confirm that establishing a boundary with 2% variability between neighbouring nuclei would take at least about 13.4 min – roughly the non-transient expression time in nuclear cycle 14 (Lucas et al., 2018; Tran et al., 2018) (Appendix 1). This holds for a single Bicoid-binding site. An intuitive way to achieve a speed up is to increase the number of binding sites: multiple occupancy time traces are thereby made available, which provides a priori more information on the Bicoid concentration.

Possible advantages of multiple sites are not so easy to exploit, though. First, the various sites are close and their respective bindings are correlated (Kaizu et al., 2014), so that their respective occupancy time traces are not independent. That reduces the gain in the amount of information. Second, if the activation of gene expression requires the joint binding of multiple sites, the transition to the active configuration takes time. The overall process may therefore be slowed down with respect to a single binding site model, in spite of the additional information. Third, and most importantly, information is conveyed downstream via the expression level of the gene, which is again a single time trace. This channeling of the multiple sites’ occupancy traces into the single time trace of gene expression makes gene activation a real information bottleneck for concentration readout. All these factors can combine and even lead to an increase in the decision time. To wit, an all-or-nothing equilibrium activation model with six identical binding sites functioning at the diffusion limit and no cooperativity takes about 38 min to achieve the same above accuracy. In sum, the binding site kinetics and the gene activation rules are essential to harness the potential advantage of multiple binding sites.

Our work addresses the question of which multisite promoters architecture minimize the effects of the activation bottleneck. Specifically, we have shown that decision schemes based on continuous updating and variable decision times significantly improve speed while maintaining the desired high readout accuracy. This should be contrasted to previously considered fixed-time integration strategies. In the case of the hunchback promoter in the fly embryo, the continuous update schemes achieve the 2% EL positional resolution in less than 1 min, always outperforming fixed-time integration strategies for the same promoter architecture (see Appendix 1—table 1). While 1 min is even beyond what is required for the fly embryo, this margin in speed allows to accommodate additional constraints, viz. steep spatial boundary and biophysical constraints on kinetic parameters. Our approach ultimately yields many promoter architectures that are consistent with experimental observables in fly embryos, and results in decision times that are compatible with a precise readout even for the fast nuclear cycle 11 (Lucas et al., 2018; Tran et al., 2018).

Several arguments have been brought forward to suggest that the duration of a nuclear cycle is the limiting time period for the readout of Bicoid concentration gradient. The first one concerns the reset of gene activation and transcription factor binding during mitosis. In that sense, any information that was stored in the form of Bicoid already bound to the gene is lost. The second argument is that the hunchback response integrated over a single nuclear cycle is already extremely precise. However, none of these imply that the hunchback decision is made at a fixed-time (corresponding to mitosis) so that strategies involving variable decision times are quite legitimate and consistent with all the known phenomenology.

We have performed our calculations in a worst-case scenario. First, we did not consider averaging of the readout between neighbouring nuclei. While both protein (Gregor et al., 2007a) and mRNA concentrations (Little et al., 2013) are definitely averaged, and it has been shown theoretically that averaging can both increase and decrease (Erdmann et al., 2009) readout variability between nuclei, we do not take advantage of this option. The fact that we achieve less than 3 min in nuclear cycle 11, demonstrates that averaging is a priori dispensable. Second, we demand that the hunchback promoter results in a readout that gives the positional resolution observed in nuclear cycle 14, in the time that the hunchback expression profile is established in nuclear cycle 11. The reason for this choice is twofold. On the one hand, we meant to show that such a task is possible, making feasible also less constrained set-ups. On the other hand, the hunchback expression border established in nuclear cycle 11 does not move significantly in later nuclear cycles in the WT embryo, suggesting that the positional resolution in nuclear cycle 11 is already sufficient to reach the precision of later nuclear cycles. The positional resolution that can be observed in nuclear cycle 11 at the gene expression level is EL (Tran et al., 2018), but this is also due to smaller nuclear density.

Two main factors generally affect the efficiency of decisions: how information is transmitted and how available information is decoded and exploited. Decoding depends on the representation of available information. Our calculations have considered the issue of how to convey information across the bottleneck of gene activation, under the constraint of a given Hill coefficient. The latter is our empirical way of taking into account the constraints imposed by the decoding process. High Hill coefficients are a very convenient way to package and represent positional information: decoding reduces to the detection of a sharp transition, an edge in the limit of very high coefficients. The interpretation of the Hill coefficient as a decoding constraint is consistent with our results that an increase in the coefficient slows down the decision time. The resulting picture is that promoter architecture results from a balance between the constraints imposed by a quick and accurate readout and those stemming from the ease of its decoding. The very possibility of a balance is allowed by the main conclusion demonstrated here that promoter structures can go significantly below the time limit imposed by the duration of the early nuclear cycles. That leaves room for accommodating other features without jeopardising the readout timescale. While the constraint of a fixed Hill coefficient is an effective way to take into account constraints on decoding, it will be of interest to explore in future work if and how one can go beyond this empirical approach. That will require developing a joint description for transmission and decoding via an explicit modeling of the mechanisms downstream of the activation bottleneck.

Recent work has shed light on the role of out of equilibrium architectures on steepness of response (Estrada et al., 2016) and gradient establishment (Tran et al., 2018; Park et al., 2019). Here, we showed that equilibrium architectures perform very well and achieve short decision times, and that out of equilibrium architectures do not seem to significantly improve the performance of promoters, except for making some switches from gene states a bit faster. Non-equilibrium effect can, however, increase the Hill coefficient of the response without adding extra binding sites, which is useful for the downstream readout of positional information that we formulated above as decoding.