A connectome and analysis of the adult Drosophila central brain

Abstract

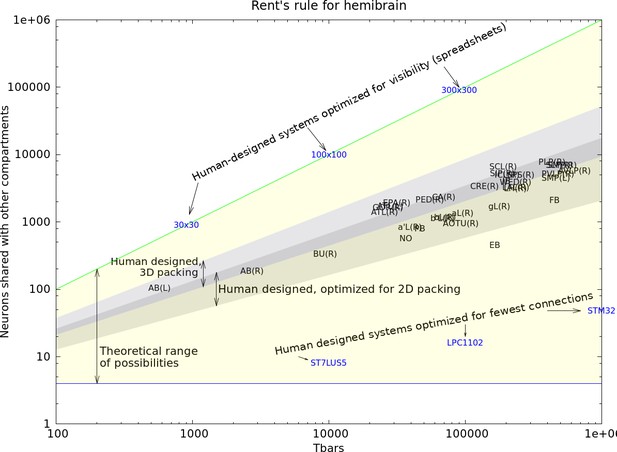

The neural circuits responsible for animal behavior remain largely unknown. We summarize new methods and present the circuitry of a large fraction of the brain of the fruit fly Drosophila melanogaster. Improved methods include new procedures to prepare, image, align, segment, find synapses in, and proofread such large data sets. We define cell types, refine computational compartments, and provide an exhaustive atlas of cell examples and types, many of them novel. We provide detailed circuits consisting of neurons and their chemical synapses for most of the central brain. We make the data public and simplify access, reducing the effort needed to answer circuit questions, and provide procedures linking the neurons defined by our analysis with genetic reagents. Biologically, we examine distributions of connection strengths, neural motifs on different scales, electrical consequences of compartmentalization, and evidence that maximizing packing density is an important criterion in the evolution of the fly’s brain.

eLife digest

Animal brains of all sizes, from the smallest to the largest, work in broadly similar ways. Studying the brain of any one animal in depth can thus reveal the general principles behind the workings of all brains. The fruit fly Drosophila is a popular choice for such research. With about 100,000 neurons – compared to some 86 billion in humans – the fly brain is small enough to study at the level of individual cells. But it nevertheless supports a range of complex behaviors, including navigation, courtship and learning.

Thanks to decades of research, scientists now have a good understanding of which parts of the fruit fly brain support particular behaviors. But exactly how they do this is often unclear. This is because previous studies showing the connections between cells only covered small areas of the brain. This is like trying to understand a novel when all you can see is a few isolated paragraphs.

To solve this problem, Scheffer, Xu, Januszewski, Lu, Takemura, Hayworth, Huang, Shinomiya et al. prepared the first complete map of the entire central region of the fruit fly brain. The central brain consists of approximately 25,000 neurons and around 20 million connections. To prepare the map – or connectome – the brain was cut into very thin 8 nm slices and photographed with an electron microscope. A three-dimensional map of the neurons and connections in the brain was then reconstructed from these images using machine learning algorithms. Finally, Scheffer et al. used the new connectome to obtain further insights into the circuits that support specific fruit fly behaviors.

The central brain connectome is freely available online for anyone to access. When used in combination with existing methods, the map will make it easier to understand how the fly brain works, and how and why it can fail to work correctly. Many of these findings will likely apply to larger brains, including our own. In the long run, studying the fly connectome may therefore lead to a better understanding of the human brain and its disorders. Performing a similar analysis on the brain of a small mammal, by scaling up the methods here, will be a likely next step along this path.

Introduction

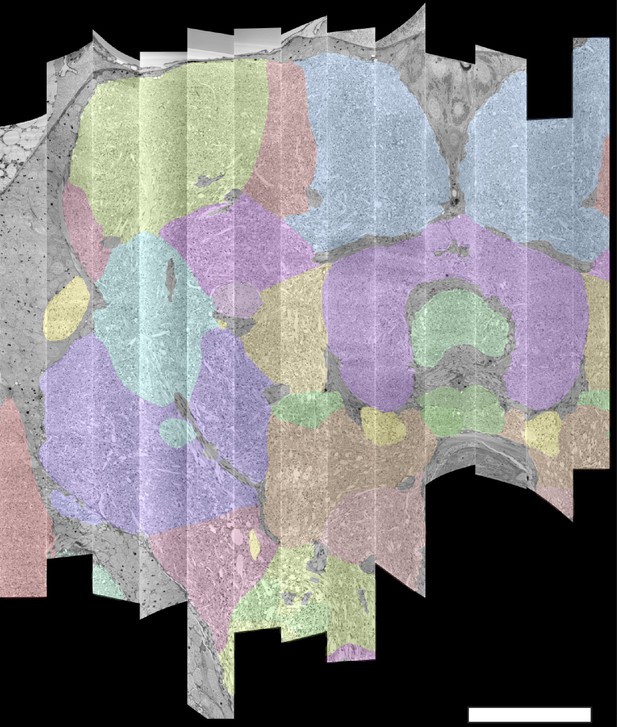

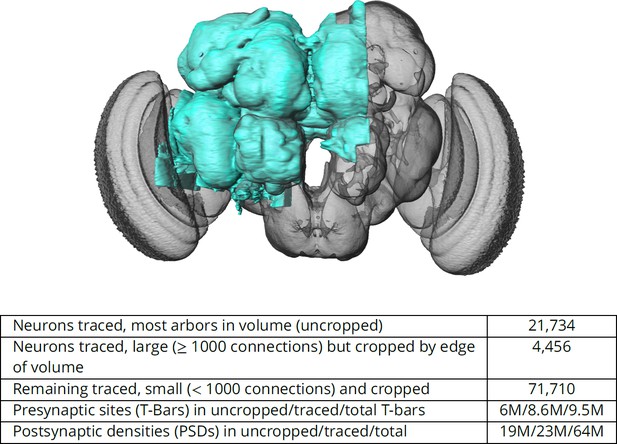

The connectome we present is a dense reconstruction of a portion of the central brain (referred to here as the hemibrain) of the fruit fly, Drosophila melanogaster, as shown in Figure 1. This region was chosen since it contains all the circuits of the central brain (assuming bilateral symmetry), and in particular contains circuits critical to unlocking mysteries involving associative learning in the mushroom body, navigation and sleep in the central complex, and circadian rhythms among clock circuits. The largest dense reconstruction to date, it contains around 25,000 neurons, most of which were rigorously clustered and named, with about 20 million chemical synapses between them, plus portions of many other neurons truncated by the boundary of the data set (details in Figure 1). Each neuron is documented at many levels - the detailed voxels that constitute it, a skeleton with segment diameters, its synaptic partners and the location of most of their synapses.

The hemibrain and some basic statistics.

The highlighted area shows the portion of the central brain that was imaged and reconstructed, superimposed on a grayscale representation of the entire Drosophila brain. For the table, a neuron is traced if all its main branches within the volume are reconstructed. A neuron is considered uncropped if most arbors (though perhaps not the soma) are contained in the volume. Others are considered cropped. Note: (1) our definition of cropped is somewhat subjective; (2) the usefulness of a cropped neuron depends on the application; and (3) some small fragments are known to be distinct neurons. For simplicity, we will often state that the hemibrain contains ≈25K neurons.

Producing this data set required advances in sample preparation, imaging, image alignment, machine segmentation of cells, synapse detection, data storage, proofreading software, and protocols to arbitrate each decision. A number of new tests for estimating the completeness and accuracy were required and therefore developed, in order to verify the correctness of the connectome.

These data describe whole-brain properties and circuits, and are accompanied by new methods to classify cell types based on connectivity. Computational compartments are now more carefully defined, synaptic circuits are conclusively identified, and each neuron is annotated by name and putative cell type, making this the first complete census of neuropils, tracts, cells, and connections in this portion of the brain. We compare the statistics and structure of different brain regions, and of the brain as a whole, without the confounds introduced by studying different circuitry in different animals.

All data are publicly available through web interfaces. This includes a browser interface, NeuPrint (Clements et al., 2020), designed so that any interested user can query the hemibrain connectome even without specific training. NeuPrint can query the connectivity, partners, connection strengths and morphologies of all specified neurons, thus making identification of upstream and downstream partners both orders of magnitude easier, and significantly more confident, compared to existing genetic methods. In addition, for those who are willing to program, the full data set (the gray scale voxels, the segmentation and proofreading results, skeletons, and graph model of connectivity) is also available through publicly accessible application program interfaces (APIs).

This effort differs from previous EM reconstructions in its social and collaborative aspects. Previous reconstructions were either dense in much smaller EM volumes (such as Meinertzhagen and O'Neil, 1991; Helmstaedter et al., 2013; Takemura et al., 2017) or sparse in larger volumes (such as Eichler et al., 2017 or Zheng et al., 2018). All have concentrated on the reconstruction of specific circuits to answer specific questions. When the same EM volume is used for many such efforts, as has occurred in the Drosophila larva and the full adult fly brain, this leads to an overall reconstruction that is the union of many individual efforts (Saalfeld et al., 2009). The result is inconsistent coverage of the brain, with some regions well reconstructed and others missing entirely. In contrast, here we have analyzed the entire volume, not just the subsets of interest to specific groups of researchers with the expertise to tackle EM reconstruction. We are making these data available without restriction, with only the requirement to cite the source. This allows the benefits of known circuits and connectivity to accrue to the field as a whole, a much larger audience than those with expertise in EM reconstruction. This is analogous to progress in genomics, which transitioned from individual groups studying subsets of genes, to publicly available genomes that can be queried for information about genes of choice (Altschul et al., 1990).

One major benefit to this effort is to facilitate research into the circuits of the fly’s brain. A common question among researchers, for example, is the identity of upstream and downstream (respectively input and output) partners of specific neurons. Previously, this could only be addressed by genetic trans-synaptic labeling, such as trans-Tango (Talay et al., 2017), or by sparse tracing in previously imaged EM volumes (Zheng et al., 2018). However, the genetic methods may give false positives and negatives, and both alternatives require specialized expertise and are time consuming, often taking months of effort. Now, for any circuits contained in our volume, a researcher can obtain the same answers in seconds by querying a publicly available database.
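The partner query described above amounts to a lookup in a weighted, directed graph. The following is a minimal sketch of that idea on an in-memory toy edge list; the neuron names, the synapse counts, and the helper functions `build_partner_index` and `partners` are hypothetical illustrations, not part of NeuPrint or its API.

```python
from collections import defaultdict

# Hypothetical toy connectivity: (presynaptic, postsynaptic, synapse count).
# The names are placeholders, not hemibrain identifiers.
EDGES = [
    ("A", "B", 12),
    ("A", "C", 3),
    ("B", "C", 7),
    ("C", "A", 1),
    ("D", "B", 5),
]

def build_partner_index(edges):
    """Index a weighted edge list by presynaptic and by postsynaptic neuron."""
    downstream = defaultdict(dict)  # pre -> {post: count}
    upstream = defaultdict(dict)    # post -> {pre: count}
    for pre, post, count in edges:
        downstream[pre][post] = downstream[pre].get(post, 0) + count
        upstream[post][pre] = upstream[post].get(pre, 0) + count
    return upstream, downstream

def partners(index, neuron, min_count=1):
    """Return partners of `neuron`, strongest connection first."""
    hits = [(n, c) for n, c in index.get(neuron, {}).items() if c >= min_count]
    return sorted(hits, key=lambda item: (-item[1], item[0]))

upstream, downstream = build_partner_index(EDGES)
print(partners(upstream, "B"))    # inputs to B:  [('A', 12), ('D', 5)]
print(partners(downstream, "A"))  # outputs of A: [('B', 12), ('C', 3)]
```

The `min_count` threshold reflects a common practical choice of ignoring very weak connections; the value to use depends on the question being asked.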

Another major benefit of dense reconstruction is its exhaustive nature. Genetic methods such as stochastic labeling may miss certain cell types, and counts of cells of a given type are dependent on expression levels, which are always uncertain. Previous dense reconstructions have demonstrated that existing catalogs of cell types are incomplete, even in well-covered regions (Takemura et al., 2017). In our hemibrain sample, we have identified all the cells within the reconstructed volume, thus providing a complete and unbiased census of all cell types in the fly’s central brain (at least in this single female), and a precise count of the cells of each type.

Another scientific benefit lies in an analysis without the uncertainty of pooling data obtained from different animals. The detailed circuitry of the fly’s brain is known to depend on nutritional history, age, and circadian rhythm. Here, these factors are held constant, as are the experimental methods, facilitating comparison between different fly brain regions in this single animal. Evaluating stereotypy across animals will of course eventually require additional connectomes.

Previous reconstructions of compartmentalized brains have concentrated on particular regions and circuits. The mammalian retina (Helmstaedter et al., 2013) and cortex (Kasthuri et al., 2015), and insect mushroom bodies (Eichler et al., 2017; Takemura et al., 2017) and optic lobes (Takemura et al., 2015) have all been popular targets. Additional studies have examined circuits that cross regions, such as those for sensory integration (Ohyama et al., 2015) or motion vision (Shinomiya et al., 2019).

So far lacking are systematic studies of the statistical properties of computational compartments and their connections. Neural circuit motifs have been studied (Song et al., 2005), but only those restricted to small motifs and at most a few cell types, usually in a single portion of the brain. Many of these results are in mammals, leading to questions of whether they also apply to invertebrates, and whether they extend to other regions of the brain. While there have been efforts to build reduced, but still accurate, electrical models of neurons (Marasco et al., 2012), none of these to our knowledge have used the compartment structure of the brain.

What is included

Table 1 shows the hierarchy of the named brain regions that are included in the hemibrain. Table 2 shows the primary regions that are at least 50% included in the hemibrain sample, their approximate size, and their completion percentage. Our names for brain regions follow the conventions of Ito et al., 2014 with the addition of ‘(L)’ or ‘(R)’ to indicate whether the region (most of which occur on both sides of the fly) has its cell bodies in the left or right, respectively. The mushroom body (Tanaka et al., 2008; Aso et al., 2014) and central complex (Wolff et al., 2015; Wolff and Rubin, 2018) are further divided into finer compartments.

Brain regions contained and defined in the hemibrain, following the naming conventions of Ito et al., 2014 with the addition of (R) and (L) to specify the side of the soma for that region.

Italics indicate master regions not explicitly defined in the hemibrain. Region LA is not included in the volume. The regions are hierarchical, with the more indented regions forming subsets of the less indented. The only exceptions are dACA, lACA, and vACA which are considered part of the mushroom body but are not contained in the master region MB.

| Region | Name | Region | Name | Region | Name |
|---|---|---|---|---|---|
| OL(R) | Optic lobe | CX | Central complex | LH(R) | Lateral horn |
| LA | Lamina | FB | Fan-shaped body | | |
| ME(R) | Medulla | FBl1 | Fan-shaped body layer 1 | SNP(R)/(L) | Superior neuropils |
| AME(R) | Accessory medulla | FBl2 | Fan-shaped body layer 2 | SLP(R) | Superior lateral protocerebrum |
| LO(R) | Lobula | FBl3 | Fan-shaped body layer 3 | SIP(R)/(L) | Superior intermediate protocerebrum |
| LOP(R) | Lobula plate | FBl4 | Fan-shaped body layer 4 | SMP(R)/(L) | Superior medial protocerebrum |
| | | FBl5 | Fan-shaped body layer 5 | | |
| MB(R)/(L) | Mushroom body | FBl6 | Fan-shaped body layer 6 | INP | Inferior neuropils |
| CA(R)/(L) | Calyx | FBl7 | Fan-shaped body layer 7 | CRE(R)/(L) | Crepine |
| dACA(R) | Dorsal accessory calyx | FBl8 | Fan-shaped body layer 8 | RUB(R)/(L) | Rubus |
| lACA(R) | Lateral accessory calyx | FBl9 | Fan-shaped body layer 9 | ROB(R) | Round body |
| vACA(R) | Ventral accessory calyx | EB | Ellipsoid body | SCL(R)/(L) | Superior clamp |
| PED(R) | Pedunculus | EBr1 | Ellipsoid body zone r1 | ICL(R)/(L) | Inferior clamp |
| a’L(R)/(L) | Alpha prime lobe | EBr2r4 | Ellipsoid body zone r2r4 | IB | Inferior bridge |
| a’1(R) | Alpha prime lobe compartment 1 | EBr3am | Ellipsoid body zone r3am | ATL(R)/(L) | Antler |
| a’2(R) | Alpha prime lobe compartment 2 | EBr3d | Ellipsoid body zone r3d | | |
| a’3(R) | Alpha prime lobe compartment 3 | EBr3pw | Ellipsoid body zone r3pw | AL(R)/(L) | Antennal lobe |
| aL(R)/(L) | Alpha lobe | EBr5 | Ellipsoid body zone r5 | | |
| a1(R) | Alpha lobe compartment 1 | EBr6 | Ellipsoid body zone r6 | VMNP | Ventromedial neuropils |
| a2(R) | Alpha lobe compartment 2 | AB(R)/(L) | Asymmetrical body | VES(R)/(L) | Vest |
| a3(R) | Alpha lobe compartment 3 | PB | Protocerebral bridge | EPA(R)/(L) | Epaulette |
| gL(R)/(L) | Gamma lobe | PB(R1) | PB glomerulus R1 | GOR(R)/(L) | Gorget |
| g1(R) | Gamma lobe compartment 1 | PB(R2) | PB glomerulus R2 | SPS(R)/(L) | Superior posterior slope |
| g2(R) | Gamma lobe compartment 2 | PB(R3) | PB glomerulus R3 | IPS(R)/(L) | Inferior posterior slope |
| g3(R) | Gamma lobe compartment 3 | PB(R4) | PB glomerulus R4 | | |
| g4(R) | Gamma lobe compartment 4 | PB(R5) | PB glomerulus R5 | PENP | Periesophageal neuropils |
| g5(R) | Gamma lobe compartment 5 | PB(R6) | PB glomerulus R6 | SAD | Saddle |
| b’L(R)/(L) | Beta prime lobe | PB(R7) | PB glomerulus R7 | AMMC | Antennal mechanosensory and motor center |
| b’1(R) | Beta prime lobe compartment 1 | PB(R8) | PB glomerulus R8 | FLA(R) | Flange |
| b’2(R) | Beta prime lobe compartment 2 | PB(R9) | PB glomerulus R9 | CAN(R) | Cantle |
| bL(R)/(L) | Beta lobe | PB(L1) | PB glomerulus L1 | PRW | Prow |
| b1(R) | Beta lobe compartment 1 | PB(L2) | PB glomerulus L2 | | |
| b2(R) | Beta lobe compartment 2 | PB(L3) | PB glomerulus L3 | GNG | Gnathal ganglia |
| | | PB(L4) | PB glomerulus L4 | | |
| LX(R)/(L) | Lateral complex | PB(L5) | PB glomerulus L5 | Major fiber bundles | |
| BU(R)/(L) | Bulb | PB(L6) | PB glomerulus L6 | AOT(R) | Anterior optic tract |
| LAL(R)/(L) | Lateral accessory lobe | PB(L7) | PB glomerulus L7 | GC | Great commissure |
| GA(R) | Gall | PB(L8) | PB glomerulus L8 | GF(R) | Giant Fiber (single neuron) |
| | | PB(L9) | PB glomerulus L9 | mALT(R)/(L) | Medial antennal lobe tract |
| VLNP(R) | Ventrolateral neuropils | NO | Noduli | POC | Posterior optic commissure |
| AOTU(R) | Anterior optic tubercle | NO1(R)/(L) | Nodulus 1 | | |
| AVLP(R) | Anterior ventrolateral protocerebrum | NO2(R)/(L) | Nodulus 2 | | |
| PVLP(R) | Posterior ventrolateral protocerebrum | NO3(R)/(L) | Nodulus 3 | | |
| PLP(R) | Posterior lateral protocerebrum | | | | |
| WED(R) | Wedge | | | | |

Regions with ≥50% included in the hemibrain, sorted by completion percentage.

The approximate percentage of the region included in the hemibrain volume is shown as ‘%inV’. ‘T-bars’ gives a rough estimate of the size of the region. ‘comp%’ is the fraction of the post-synaptic densities (PSDs) contained in the brain region for which both the PSD and the corresponding T-bar are in neurons marked ‘Traced’.

| Name | %inV | T-bars | comp% | Name | %inV | T-bars | comp% |
|---|---|---|---|---|---|---|---|
| PED(R) | 100% | 54805 | 85% | aL(R) | 100% | 95375 | 84% |
| b’L(R) | 100% | 67695 | 83% | bL(R) | 100% | 71112 | 83% |
| gL(R) | 100% | 176785 | 83% | a’L(R) | 100% | 39091 | 82% |
| EB | 100% | 164286 | 81% | bL(L) | 56% | 58799 | 81% |
| NO | 100% | 36722 | 79% | b’L(L) | 88% | 57802 | 78% |
| gL(L) | 55% | 133256 | 76% | CA(R) | 100% | 69517 | 73% |
| AB(R) | 100% | 2734 | 65% | aL(L) | 51% | 44803 | 62% |
| FB | 100% | 451031 | 62% | AL(R) | 83% | 501004 | 59% |
| AB(L) | 100% | 572 | 57% | PB | 100% | 46557 | 55% |
| AME(R) | 100% | 6045 | 51% | BU(R) | 100% | 9385 | 46% |
| CRE(R) | 100% | 137946 | 40% | AOTU(R) | 100% | 92578 | 38% |
| LAL(R) | 100% | 234388 | 38% | SMP(R) | 100% | 510937 | 34% |
| PVLP(R) | 100% | 475219 | 30% | ATL(R) | 100% | 25472 | 29% |
| SPS(R) | 100% | 253818 | 29% | ATL(L) | 100% | 28153 | 29% |
| VES(R) | 84% | 157168 | 29% | IB | 100% | 200447 | 28% |
| CRE(L) | 90% | 132656 | 28% | SIP(R) | 100% | 187493 | 26% |
| BU(L) | 52% | 7014 | 26% | GOR(R) | 100% | 27140 | 26% |
| WED(R) | 100% | 232898 | 25% | SMP(L) | 100% | 460784 | 26% |
| EPA(R) | 100% | 31438 | 26% | PLP(R) | 100% | 429949 | 26% |
| AVLP(R) | 100% | 630538 | 23% | ICL(R) | 100% | 202549 | 23% |
| SLP(R) | 100% | 487795 | 23% | LO(R) | 64% | 855251 | 22% |
| SCL(R) | 100% | 189569 | 22% | GOR(L) | 60% | 19558 | 21% |
| LH(R) | 100% | 231662 | 19% | CAN(R) | 68% | 6512 | 16% |
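To make the ‘comp%’ definition concrete, here is a minimal sketch of how such a figure could be computed from a list of connections in one region. The function name `completion_percent`, the input layout, and the toy data are assumptions made for illustration; they do not describe the project's actual tooling.

```python
def completion_percent(connections, traced):
    """Percentage of PSDs whose neuron and whose T-bar's neuron are both traced.

    connections: iterable of (tbar_neuron, psd_neuron) pairs, one pair per PSD.
    traced: set of neuron ids marked 'Traced'.
    """
    connections = list(connections)
    if not connections:
        return 0.0
    complete = sum(
        1 for pre, post in connections if pre in traced and post in traced
    )
    return 100.0 * complete / len(connections)

# Hypothetical region with five PSDs; "f1" and "f2" stand for untraced fragments.
region_psds = [("n1", "n2"), ("n1", "f1"), ("n2", "n3"), ("f2", "n3"), ("n3", "n1")]
traced_neurons = {"n1", "n2", "n3"}
print(completion_percent(region_psds, traced_neurons))  # 60.0
```

A PSD counts as complete only when both ends of the connection are traced, which is why a region can be fully inside the volume (100% in ‘%inV’) and still show a low ‘comp%’.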

Appendix 1—table 6 provides the list of identified neuron types and their naming schemes. These include newly identified sensory inputs and motor outputs.

The nature of the proofreading process allows us to improve the data even after their initial publication. Our initial data release was version v1.0 (Xu et al., 2020b). Version v1.1 is now available, including improvements such as better accuracy, more consistent cell naming and typing, and inclusion of anatomical names for central complex neurons. The old version(s) remain online and available, to allow reproducibility of older analyses, but we strongly recommend all new analyses use the latest version. The analyses in this article, and in the corresponding articles on the mushroom body and central complex, are based on version v1.1, unless otherwise noted.

What is not included

This research focused on the neurons of the brain and the chemical synapses between them. Every step in our process, from staining and sample preparation through segmentation and proofreading, has been optimized with this goal in mind. While neurons and their chemical synapses are critical to brain operation, they are far from the full story. Other contributors, known to be important, could not be included in our study, largely for technical reasons. Among these are gap junctions, glia, and structures internal to the cell such as mitochondria. Gap junctions, or electrical connections between neurons, are difficult to reliably detect by FIB-SEM under the best of circumstances and not detectable at the low (for EM) resolution needed to complete this study in a reasonable amount of time. Their contribution to the connectome will need to be established through other means (see the section on future research). Glial cells were difficult to segment, due to both staining differences and convoluted morphologies. We identified the volumes where they exist (a glia ‘mask’, which allows these regions to be color-coded when viewed in Neuroglancer) but did not separate them into cells. Structures internal to the neurons, except for synapses, are not considered here even though many are visible in our EM preparation. The most obvious example is mitochondria. Again, we have identified many of them so we could evaluate their effect on segmentation, but they are not included in our connectome. Finally, autapses (synapses from a neuron onto itself) are known to exist in Drosophila, but are sufficiently rare that they fall well below the rate of false positives in our automated synapse detection. Therefore most of the putative autapses are false positives, and we do not include them in our connectivity data.

Differences from connectomes of vertebrates

Most accounts of neurobiology define the operation of the mammalian nervous system with, at most, only passing reference to invertebrate brains. Fly (or other insect) nervous systems differ from those of vertebrates in several aspects (Meinertzhagen, 2016b). Some main differences include:

Most synapses are polyadic. Each synapse structure comprises a single presynaptic release site and, adjacent to this, several neurites expressing neurotransmitter receptors. An element, T-shaped and typically called a T-bar in flies, marks the site of transmitter release into the cleft between cells. This site typically abuts the neurites of several other cells, where a postsynaptic density (PSD) marks the receptor location.

Most neurites are neither purely axonic nor dendritic, but have both pre- and postsynaptic partners, a feature that may be more prominent in mammalian brains than recognized (Morgan and Lichtman, 2020). Within a single brain region, however, neurites are frequently predominantly dendritic (postsynaptic) or axonic (presynaptic).

Unlike some synapses in mammals, EM imagery (at least as we have acquired and analyzed it here) fails to reveal obvious information about whether a synapse is excitatory or inhibitory.

The soma or cell body of each fly neuron resides in a rind (the cell body layer) on the periphery of the brain, mostly disjoint from the main neurites innervating the internal neuropil. As a result, unlike vertebrate neurons, no synapses form directly on the soma. The neuronal process between the soma and the first branch point is called the cell body fiber (CBF), which is likewise not involved in the synaptic transmission of information.

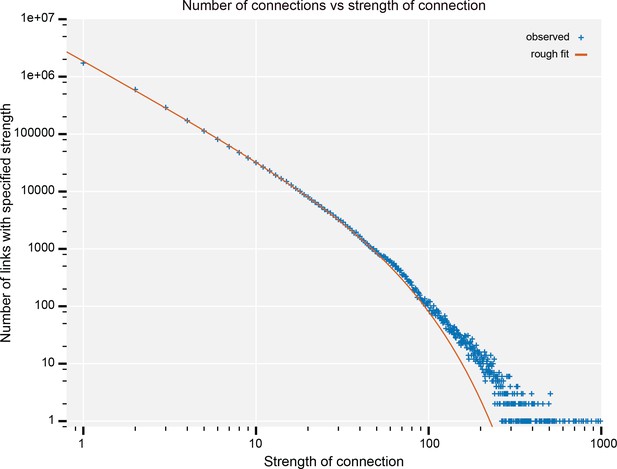

Synapse sizes are much more uniform than those of mammals. Stronger connections are formed by increasing the number of synapses in parallel, not by forming larger synapses, as in vertebrates. In this paper, we will refer to the ‘strength’ of a connection as the synapse count, even though we acknowledge that we lack information on the relative activity and strength of the synapses, and thus a true measure of their coupling strength.

The brain is small, about 250 μm per side, and is roughly the same size as the dendritic arbor of a single pyramidal neuron in the mammalian cortex.

Axons of fly neurons are not myelinated.

Some fly neurons rely on graded transmission (as opposed to spiking), without obvious anatomical distinction. Some neurons even switch between graded and spiking operation (Pimentel et al., 2016).
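Two of the points above, polyadic synapses and connection ‘strength’ defined as a synapse count, translate directly into a simple data structure. The sketch below is an illustration under stated assumptions: the `TBar` class, the function `connection_strengths`, and the toy neuron names are hypothetical. Putative autapses are skipped, consistent with their exclusion from the connectivity data.

```python
from collections import Counter
from dataclasses import dataclass, field

@dataclass
class TBar:
    """One presynaptic release site and the neurons owning its PSDs."""
    pre: str
    posts: list = field(default_factory=list)

def connection_strengths(tbars):
    """Count PSDs per (pre, post) neuron pair, skipping putative autapses."""
    counts = Counter()
    for tbar in tbars:
        for post in tbar.posts:
            if post != tbar.pre:
                counts[(tbar.pre, post)] += 1
    return counts

# Hypothetical polyadic synapses: each T-bar abuts several postsynaptic neurons.
tbars = [
    TBar("A", ["B", "C", "D"]),
    TBar("A", ["B", "A"]),  # the A -> A entry is treated as a false positive
    TBar("B", ["C"]),
]
strengths = connection_strengths(tbars)
print(strengths[("A", "B")])  # 2
```

Because synapse sizes are comparatively uniform, counting PSDs in this way is the proxy for connection strength used throughout, with the caveat already noted that it ignores the activity and efficacy of individual synapses.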

Connectome reconstruction

Producing a connectome comprising reconstructed neurons and the chemical synapses between them required several steps. The first step, preparing a fly brain and imaging half of its center, produced a dataset consisting of 26 teravoxels of data, each with 8 bits of grayscale information. We applied numerous machine-learning algorithms and over 50 person-years of proofreading effort over ≈2 calendar years to extract a variety of more compact and useful representations, such as neuron skeletons, synapse locations, and connectivity graphs. These are much smaller than the raw grayscale data. For example, the connectivity could be reasonably summarized by a graph with ≈25,000 nodes and ≈3 million edges. Even when the connections were assigned to different brain regions, such a graph took only 26 MB, still large but roughly a million-fold reduction in data size.
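The million-fold figure follows from simple arithmetic on the numbers quoted above. The short sketch below works it through, assuming decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and one byte per 8-bit voxel; the derived per-node and per-edge averages are illustrative back-of-envelope values, not reported measurements.

```python
voxels = 26e12        # 26 teravoxels of raw image data
bytes_per_voxel = 1   # 8 bits of grayscale per voxel
raw_bytes = voxels * bytes_per_voxel

graph_bytes = 26e6    # 26 MB connectivity graph (decimal megabytes assumed)
reduction = raw_bytes / graph_bytes

nodes, edges = 25_000, 3_000_000
mean_edges_per_node = edges / nodes     # average number of outgoing edges
bytes_per_edge = graph_bytes / edges    # average storage cost of one edge

print(f"raw data: {raw_bytes / 1e12:.0f} TB")
print(f"reduction: {reduction:.0e}x")
print(f"mean edges per node: {mean_edges_per_node:.0f}")
print(f"bytes per edge: {bytes_per_edge:.1f}")
```

Under these assumptions the raw data occupy about 26 TB, each neuron has on average 120 outgoing edges, and each edge costs under 9 bytes of storage.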

Many of the supporting methods for this reconstruction have been recently published. Here, we briefly survey each major area, with more details reported in the companion papers. Major advances include:

New methods to fix and stain the sample, preparing a whole fly brain with well-preserved subcellular detail particularly suitable for machine analysis.

Methods that have enabled us to collect the largest EM dataset yet using Focused Ion Beam Scanning Electron Microscopy (FIB-SEM), resulting in isotropic data with few artifacts, features that significantly sped up reconstruction.

A coarse-to-fine, automated flood-filling network segmentation pipeline applied to image data normalized with cycle-consistent generative adversarial networks, and an aggressive automated agglomeration regime enabled by advances in proofreading.

A new hybrid synapse prediction method, using two differing underlying techniques, for accurate synapse prediction throughout the volume.

New top-down proofreading methods that utilize visualization and machine learning to achieve orders of magnitude faster reconstruction compared with previous approaches in the fly’s brain.

Each of these is explained in more detail in the following sections and, where necessary, in the appendix. The companion papers are ‘The connectome of the Drosophila melanogaster mushroom body: implications for function’ (Li et al., 2020) and ‘A complete synaptic-resolution connectome of the Drosophila melanogaster central complex’ by Jayaraman, et al.

Image stack collection

The first steps, fixing and staining the specimen, have been accomplished taking advantage of three new developments. These improved methods allow us to fix and stain a full fly’s brain but nevertheless recover neurons as round profiles with darkly stained synapses, suitable for machine segmentation and automatic synapse detection. We started with a 5-day-old female of wild-type Canton S strain G1 x w1118, raised on a 12 hr day/night cycle. 1.5 hr after lights-on, we used a custom-made jig to microdissect the brain, which was then fixed and embedded in Epon, an epoxy resin. We then enhanced the electron contrast by staining with heavy metals, and progressively lowered the temperature during dehydration of the sample. Collectively, these methods optimize morphological preservation, allow full-brain preparation without distortion (unlike fast freezing methods), and provide increased staining intensity that speeds the rate of FIB-SEM imaging (Lu et al., 2019).

The hemibrain sample is roughly 250 × 250 × 250 μm, larger than can be imaged by FIB-SEM without introducing milling artifacts. Therefore, we subdivided our epoxy-embedded samples into 20-μm-thick slabs, both to avoid artifacts and to allow imaging in parallel (each slab can be imaged in a different FIB machine) for increased throughput. To be effective, the cut surfaces of the slabs must be smooth at the ultrastructural level and have only minimal material loss. Specifically, for connectomic research, all long-distance processes must remain traceable across sequential slabs. We used an improved version of our previously published ‘hot-knife’ ultrathick sectioning procedure (Hayworth et al., 2015), which uses a heated, oil-lubricated diamond knife, to section the Drosophila brain into 37 sagittal slabs of 20 μm thickness with an estimated material loss between consecutive slabs of only ∼30 nm – sufficiently small to allow tracing of long-distance neurites. Each slab was re-embedded, mounted, and trimmed, then examined in 3D with X-ray tomography to check for sample quality and establish a scale factor for Z-axis cutting by FIB. The resulting slabs were FIB-SEM imaged separately (often in parallel, in different FIB-SEM machines), and the resulting volume datasets were stitched together computationally.

Connectome studies come with clearly defined resolution requirements – the finest neurites must be traceable by humans and should be reliably segmented by automated algorithms (Januszewski et al., 2018). In Drosophila, the very finest neural processes are usually 50 nm but can be as little as 15 nm (Meinertzhagen, 2016a). This fundamental biological dimension determines the minimum isotropic resolution requirements for tracing neural circuits. To meet the demand for high isotropic resolution and large volume imaging, we chose the FIB-SEM imaging platform, which offers high isotropic resolution (<10 nm in x, y, and z), minimal artifacts, and robust image alignment. The high-resolution and isotropic dataset possible with FIB-SEM has substantially expedited the Drosophila connectome pipeline. Compared to serial-section imaging, with its sectioning artifacts and inferior Z-axis resolution, FIB-SEM offers high-quality image alignment, a smaller number of artifacts, and isotropic resolution. This allows higher quality automated segmentation and makes manual proofreading and correction easier and faster.

At the beginning, deficiencies in imaging speed and system reliability of any commercial FIB-SEM system capped the maximum possible image volume to less than 0.01% of a full fly brain, problems that persist even now. To remedy them, we redesigned the entire control system, improved the imaging speed more than 10×, and created innovative solutions addressing all known failure modes, which thereby expanded the practical imaging volume of conventional FIB-SEM by more than four orders of magnitude, while maintaining an isotropic resolution of 8 × 8 × 8 nm voxels (Xu et al., 2017; Xu et al., 2019). In order to overcome the aberration of a large field of view (up to 300 μm wide), we developed a novel tiling approach without sample stage movement, in which the imaging parameters of each tile are individually optimized through an in-line auto focus routine without overhead (Xu et al., 2020a). After numerous improvements, we have transformed the conventional FIB-SEM from a laboratory tool that is unreliable for more than a few days of imaging to a robust volume EM platform with effective long-term reliability, able to perform years of continuous imaging without defects in the final image stack. Imaging time, rather than FIB-SEM reliability, is now the main impediment to obtaining even larger volumes.

In our study here, the Drosophila 'hemibrain', 13 consecutive hot-knifed slabs were imaged using two customized enhanced FIB-SEM systems, in which an FEI Magnum FIB column was mounted at 90° upon a Zeiss Merlin SEM. After data collection, streaking artifacts generated by secondary electrons along the FIB milling direction were computationally removed using a mask in the frequency domain. The image stacks were then aligned using a customized version of the software platform developed for serial section transmission electron microscopy (Zheng et al., 2018; Khairy et al., 2018), followed by binning along the z-axis to form the final 8 × 8 × 8 nm³ voxel datasets. Milling thickness variations in the aligned series were compensated using a modified version of the method described by Hanslovsky et al., 2017, with the absolute scale calibrated by reference to the MicroCT images.
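The idea behind frequency-domain streak removal can be sketched as follows. Stripes that are constant along one image axis concentrate their energy on a single line of the 2D Fourier spectrum, so zeroing that line (except near the origin, to preserve overall brightness and slow gradients) suppresses them. This is a minimal illustration only: the function `remove_streaks`, the `keep_low` parameter, the choice of axis, and the synthetic test image are all assumptions, and the mask actually used for the hemibrain data is not specified here.

```python
import numpy as np

def remove_streaks(image, keep_low=2):
    """Suppress stripes that are constant along axis 0 (assumed streak direction)."""
    height, width = image.shape
    spectrum = np.fft.fft2(image)
    # Integer spatial frequencies along axis 1 (x).
    kx = np.fft.fftfreq(width) * width
    # Stripes constant along axis 0 live entirely in the zero-frequency row.
    mask = np.ones((height, width))
    mask[0, np.abs(kx) > keep_low] = 0.0
    return np.fft.ifft2(spectrum * mask).real

# Synthetic example: a smooth 2D pattern plus vertical stripes.
y, x = np.mgrid[0:64, 0:64]
clean = np.sin(2 * np.pi * 3 * y / 64) * np.cos(2 * np.pi * 2 * x / 64)
streaks = 0.5 * np.sin(2 * np.pi * 20 * x / 64)
restored = remove_streaks(clean + streaks)
print(np.abs(restored - clean).max())
```

In this synthetic case the stripes and the underlying pattern occupy disjoint frequencies, so the restoration is essentially exact. Real images also have legitimate content on the masked line, so a practical mask trades streak suppression against loss of genuine structure.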

The 20 μm slabs generated by the hot-knife sectioning were re-embedded in larger plastic tabs prior to FIB-SEM imaging. To correct for the warping of the slab that can occur in this process, methods adapted from Kainmueller (Kainmueller et al., 2008) were used to find the tissue-plastic interface and flatten each slab’s image stack.

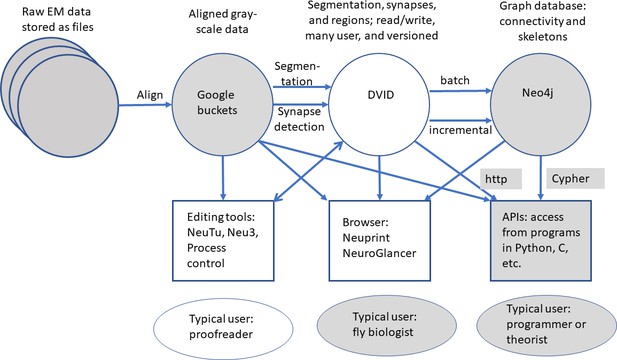

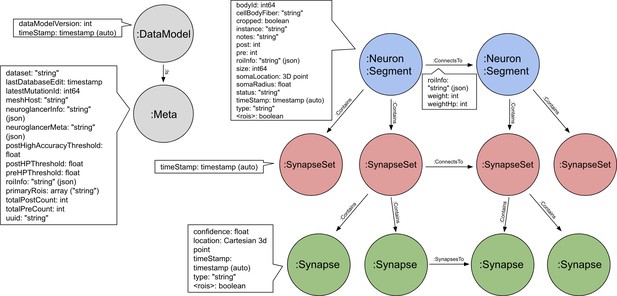

The series of flattened slabs was then stitched using a custom method for large-scale deformable registration to account for deformations introduced during sectioning, imaging, embedding, and alignment (Saalfeld et al. in prep). These volumes were then contrast adjusted using slice-wise contrast limited adaptive histogram equalization (CLAHE) (Pizer et al., 1987), and converted into a versioned database (Distributed, Versioned, Image-oriented Database, or DVID) (Katz and Plaza, 2019), which formed the raw data for the reconstruction, as illustrated in Figure 2.

Automated segmentation

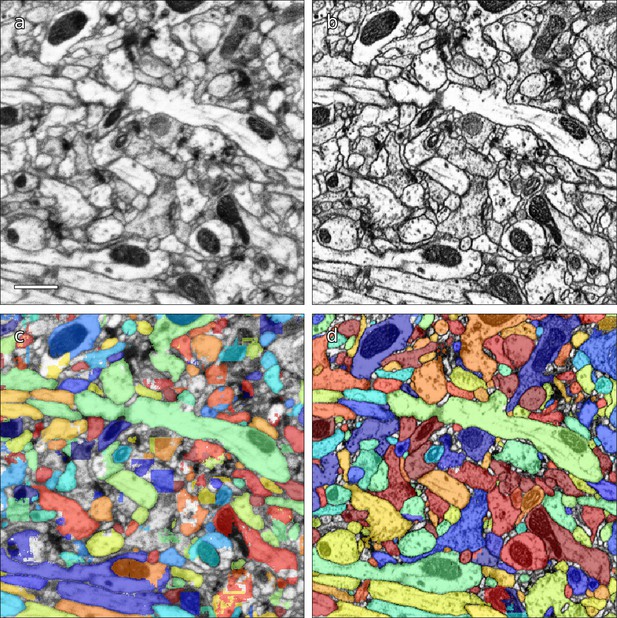

Computational reconstruction of the image data was performed using flood-filling networks (FFNs) trained on roughly five billion voxels of volumetric ground truth contained in two tabs of the hemibrain dataset (Januszewski et al., 2018). Initially, the FFNs generalized poorly to other tabs of the hemibrain, whose image content had different appearances. Therefore, we adjusted the image content to be more uniform using cycle-consistent generative adversarial networks (CycleGANs) (Zhu et al., 2017). Specifically, ‘generator’ networks were trained to alter image content such that a second ‘discriminator’ network was unable to distinguish between image patches sampled from, for example, a tab that contained volumetric training data versus a tab that did not. A cycle-consistency constraint was used to ensure that the image transformations preserved ultrastructural detail. The improvement is illustrated in Figure 3. Overall, this allowed us to use the training data from just two slabs, as opposed to needing training data for each slab.
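For reference, the cycle-consistency constraint in the cited CycleGAN work (Zhu et al., 2017) is usually written as the loss below. The symbols are those of that formulation, not of this paper: G maps images from domain X (for example, a tab without training data) to domain Y (a tab with training data), and F maps back. The exact loss weighting used for the hemibrain is not stated here, so this is only the general form.

```latex
\mathcal{L}_{\mathrm{cyc}}(G, F) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\bigl[\lVert F(G(x)) - x \rVert_1\bigr]
  + \mathbb{E}_{y \sim p_{\mathrm{data}}(y)}\bigl[\lVert G(F(y)) - y \rVert_1\bigr]
```

Minimizing this term penalizes any transformation that cannot be undone, which is why ultrastructural detail tends to be preserved.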

Examples of results of CycleGAN processing.

(a) Original EM data from tab 34 at a resolution of 16 nm per voxel, (b) EM data after CycleGAN processing, (c–d) FFN segmentation results with the 16 nm model applied to original and processed data, respectively. Scale bar in (a) represents 1 μm.

FFNs were applied to the CycleGAN-normalized data in a coarse-to-fine manner at 32 × 32 × 32 nm³ and 16 × 16 × 16 nm³, and to the CLAHE-normalized data at the native 8 × 8 × 8 nm³ resolution, in order to generate a base segmentation that was largely over-segmented. We then agglomerated the base segmentation, also using FFNs. We aggressively agglomerated segments despite introducing a substantial number of erroneous mergers. This differs from previous algorithms, which studiously avoided merge errors since they were so difficult to fix. Here, advances in proofreading methodology described later in this report enabled efficient detection and correction of such mergers.
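The trade-off between conservative and aggressive agglomeration can be illustrated with a toy sketch. It assumes each candidate pair of base segments already carries a merge score; the scores, the thresholds, and the `agglomerate` helper are hypothetical. In the actual pipeline the merge decisions come from FFNs, not from a fixed threshold.

```python
def agglomerate(num_segments, scored_pairs, threshold):
    """Merge every candidate pair whose score meets the threshold (union-find).

    scored_pairs: iterable of (segment_a, segment_b, score); scores are
    hypothetical stand-ins for a learned merge decision.
    Returns one label per base segment; equal labels mean "same object".
    """
    parent = list(range(num_segments))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for a, b, score in scored_pairs:
        if score >= threshold:
            ra, rb = find(a), find(b)
            if ra != rb:
                parent[max(ra, rb)] = min(ra, rb)
    return [find(i) for i in range(num_segments)]


# Five base segments with made-up pair scores.
pairs = [(0, 1, 0.95), (1, 2, 0.60), (3, 4, 0.90), (2, 3, 0.40)]
conservative = agglomerate(5, pairs, threshold=0.9)  # fewer merges, more splits left
aggressive = agglomerate(5, pairs, threshold=0.5)    # more merges, some may be false
print(conservative, aggressive)
```

Lowering the threshold joins more fragments per object, at the cost of accepting weaker evidence that may produce false mergers to be fixed during proofreading.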

We evaluated the accuracy of the FFN segmentation of the hemibrain using metrics for expected run length (ERL) and false merge rate (Januszewski et al., 2018). The base segmentation (i.e. the automated reconstruction prior to agglomeration) achieved an ERL of 163 μm with a false merge rate of 0.25%. After (automated) agglomeration, run length increased to 585 μm but with a false merge rate of 27.6% (i.e. nearly 30% of the path length was contained in segments with at least one merge error). We also evaluated a subset of neurons in the volume, ∼500 olfactory PNs and mushroom body KCs chosen to roughly match the evaluation performed in Li et al., 2019, which yielded an ERL of 825 μm at a 15.9% false merge rate.
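As a rough illustration of how these two numbers relate, the sketch below computes both from a list of skeleton pieces. It is a simplified reading of the metrics, not the evaluation code used for the hemibrain: it assumes the ground-truth path has already been cut into pieces by the segmentation, that pieces lying in a segment with a merge error contribute zero run length, and that the piece lengths shown are invented.

```python
def run_length_metrics(pieces):
    """pieces: list of (path_length_um, has_merge_error) per skeleton piece.

    Returns (expected_run_length_um, false_merge_rate). A point picked
    uniformly along the path lands in a piece with probability proportional
    to its length, so the expected error-free run is sum(l^2) / total.
    """
    total = sum(length for length, _ in pieces)
    erl = sum(length * length for length, merged in pieces if not merged) / total
    merge_rate = sum(length for length, merged in pieces if merged) / total
    return erl, merge_rate


# Invented example: 200 um of path, 50 um of it inside a falsely merged segment.
pieces = [(100.0, False), (50.0, False), (50.0, True)]
erl, merge_rate = run_length_metrics(pieces)
print(f"ERL = {erl} um, false merge rate = {merge_rate:.0%}")
```

The sketch shows why agglomeration can raise ERL and the false merge rate together: joining pieces lengthens error-free runs, while any false merger removes all of its path length from the sum.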

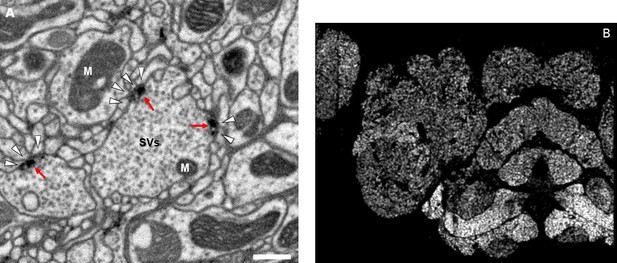

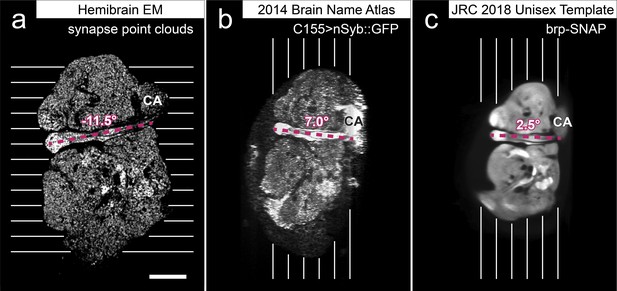

Synapse prediction

Accurate synapse identification is central to our analysis, given that synapses both form a critical component of a connectome and are required for prioritizing and guiding the proofreading effort. Synapses in Drosophila are typically polyadic, with a single presynaptic site (a T-bar) contacted by multiple receiving dendrites (most with PSDs) as shown in Figure 4A. Initial synapse prediction revealed that there are over 9 million T-bars and 60 million PSDs in the hemibrain. Manually validating each one, assuming a rate of 1000 connections annotated per trained person per day, would have taken more than 230 working years. Given this infeasibility, we developed machine learning approaches to predict synapses as detailed below. The results of our prediction are shown in Figure 4B, where the predicted synapse sites clearly delineate many of the fly brain regions.
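The estimate of manual effort can be reproduced with a short calculation. Two assumptions here are not stated in the text: each PSD is counted as one connection to validate, and a working year is taken as 260 days (52 weeks of 5 days).

```python
PSD_COUNT = 60_000_000         # from the text: about 60 million PSDs
RATE_PER_PERSON_DAY = 1000     # from the text: connections annotated per person per day
WORKING_DAYS_PER_YEAR = 260    # assumption: 52 weeks x 5 working days

person_days = PSD_COUNT / RATE_PER_PERSON_DAY
working_years = person_days / WORKING_DAYS_PER_YEAR
print(f"{person_days:.0f} person-days, about {working_years:.1f} working years")
```

Under these assumptions the result is a little under 231 working years, consistent with the figure of more than 230 quoted above.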

Well-preserved membranes, darkly stained synapses, and smooth round neurite profiles are characteristics of the hemibrain sample.

Panel (A) shows polyadic synapses, with a red arrow indicating the presynaptic T-bar, and white triangles pointing to the PSDs. We identified in total 64 million PSDs and 9.5 million T-bars in the hemibrain volume (Figure 1). Thus the average number of PSDs per T-bar in our sample is 6.7. Mitochondria (‘M’), synaptic vesicles (‘SV’), and the scale bar (0.5 μm) are shown. Panel (B) shows a horizontal cross section through a point cloud of all detected synapses. This EM point cloud defines many of the compartments in the fly’s brain, much like an optical image obtained using antibody nc82 (an antibody against Bruchpilot, a component protein of T-bars) to stain synapses. This point cloud is used to generate the transformation from our sample to the standard Drosophila brain.

Given the size of the hemibrain image volume, a major challenge from a machine learning perspective is the range of varying image statistics across the volume. In particular, model performance can quickly degrade in regions of the data set with statistics that are not well-captured by the training set (Buhmann et al., 2019).

To address this challenge, we took an iterative approach to synapse prediction, interleaving model re-training with manual proofreading, all based on previously reported methods (Huang et al., 2018). Initial prediction, followed by proofreading, revealed a number of false positive predictions from structures such as dense core vesicles which were not well-represented in the original training set. A second filtering network was trained on regions causing such false positives, and used to prune back the original set of predictions. We denote this pruned output as the ‘initial’ set of synapse predictions.

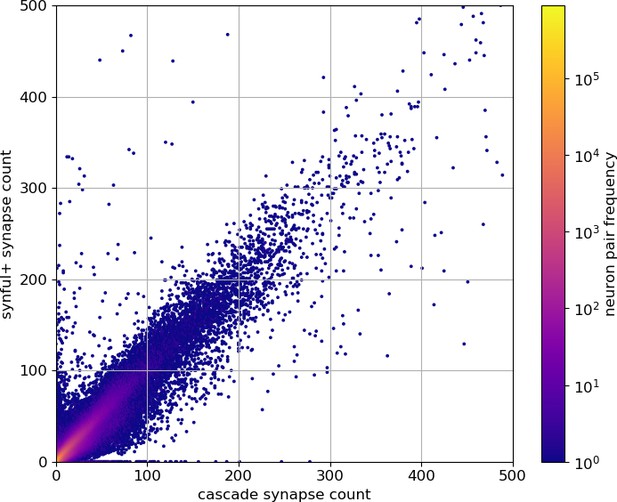

Based on this initial set, we began collecting human-annotated dense ground-truth cubes throughout the various brain regions of the hemibrain, to assess variation in classifier performance by brain region. From these cubes, we determined that although many regions had acceptable precision, there were some regions in which recall was lower than desired. Consequently, a subset of cubes available at that time was used to train a new classifier focused on addressing recall in the problematic regions. This new classifier was used in an incremental (cascaded) fashion, primarily by adding additional predictions to the existing initial set. This gave better performance than complete replacement using only the new classifier, with the resulting predictions able to improve recall while largely maintaining precision.
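The effect of adding predictions from a second classifier can be made concrete with the standard definitions of precision and recall. The counts below are hypothetical, chosen only to show the pattern described above (recall rises while precision stays roughly level); they are not measurements from the ground-truth cubes.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Standard definitions, computed against dense ground-truth annotations."""
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return precision, recall


# Hypothetical cube containing 120 annotated synapses.
initial = precision_recall(true_positives=80, false_positives=10, false_negatives=40)
# After adding the second classifier's predictions to the initial set:
cascaded = precision_recall(true_positives=105, false_positives=15, false_negatives=15)
print(initial, cascaded)
```

In this invented case, the cascade recovers 25 previously missed synapses at the cost of 5 extra false positives.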

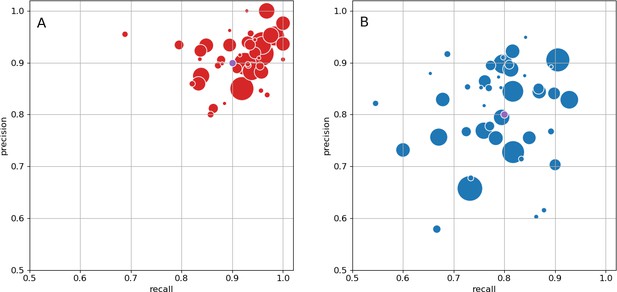

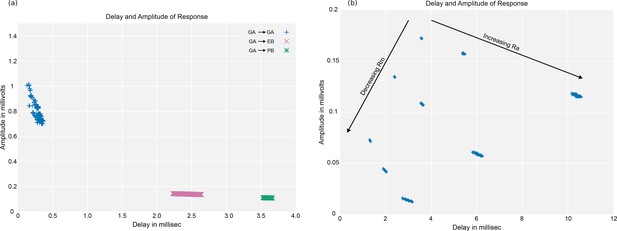

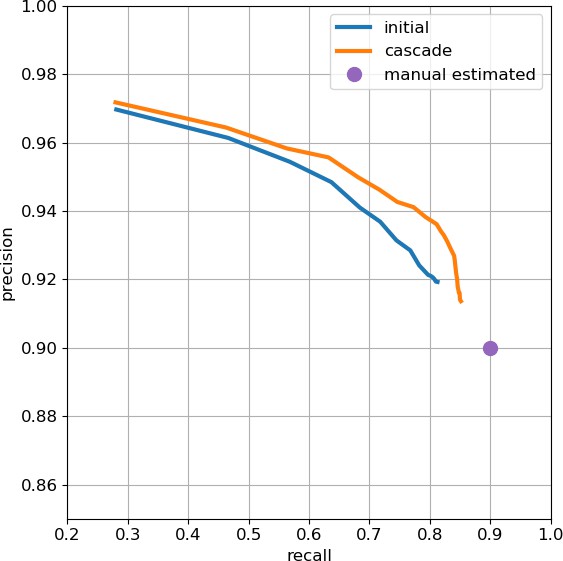

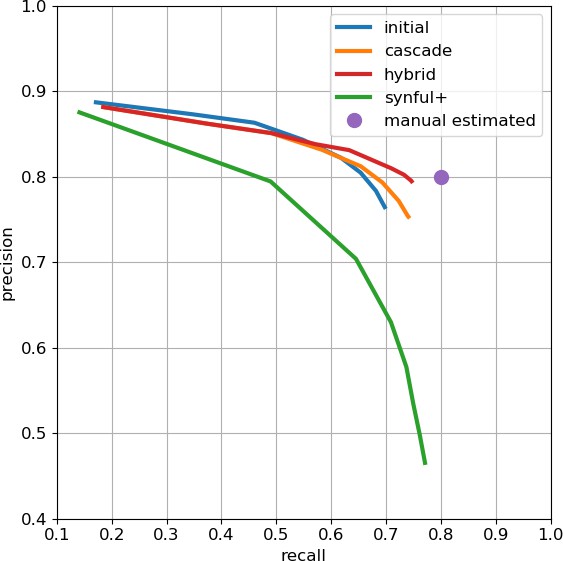

As an independent check on synapse quality, we also trained a separate classifier (Buhmann et al., 2019), using a modified version of the ‘synful’ software package. Both synapse predictors give a confidence value associated with each synapse, a measure of how firmly the classifier believes the prediction to be a true synapse. We found that we were able to improve recall by taking the union of the two predictors’ most confident synapses, and similarly improve precision by removing synapses that were low confidence in both predictions. Figure 5A and B show the results, illustrating the precision and recall obtained in each brain region.
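One way to read this combination rule is sketched below. The thresholds, the synapse identifiers, and the tie-breaking rule for intermediate cases are all assumptions made for illustration. The sketch also assumes that predictions from the two classifiers have already been matched to the same candidate sites, a step not shown here.

```python
def combine_predictions(conf_a, conf_b, high=0.8, low=0.3):
    """conf_a / conf_b map a candidate synapse id to each predictor's confidence.

    A candidate missing from one predictor is treated as confidence 0.0.
    `high` and `low` are hypothetical thresholds.
    """
    kept = set()
    for syn in set(conf_a) | set(conf_b):
        a = conf_a.get(syn, 0.0)
        b = conf_b.get(syn, 0.0)
        if a >= high or b >= high:
            kept.add(syn)   # union of the most confident predictions (helps recall)
        elif a < low and b < low:
            continue        # low confidence in both: removed (helps precision)
        elif syn in conf_a:
            kept.add(syn)   # assumption: otherwise defer to the primary predictor
    return kept


primary = {"s1": 0.9, "s2": 0.5, "s3": 0.2}
secondary = {"s3": 0.1, "s4": 0.85, "s5": 0.4}
print(sorted(combine_predictions(primary, secondary)))
```

Here `s4` is gained from the second predictor and `s3` is dropped because neither predictor is confident in it.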

Precision and recall for synapse prediction, panel (A) for T-bars, and panel (B) for synapses as a whole including the identification of PSDs.

T-bar identification is better than PSD identification since this organelle is both more distinct and typically occurs in larger neurites. Each dot is one brain region. The size of the dot is proportional to the volume of the region. Human proofreaders typically achieve 0.9 precision/recall on T-bars and 0.8 precision/recall on PSDs, indicated in purple. Data available in Figure 5—source data 1–2.


Figure 5—source data 1

Data for Figure 5A.

Column A: precision; column B: recall; column C: region size.

- https://cdn.elifesciences.org/articles/57443/elife-57443-fig5-data1-v3.csv


Figure 5—source data 2

Data for Figure 5B.

Column A: precision; column B: recall; column C: region size.

- https://cdn.elifesciences.org/articles/57443/elife-57443-fig5-data2-v3.csv

Proofreading

Since machine segmentation is not perfect, we made a concerted effort to fix the errors remaining at this stage by several passes of human proofreading. Segmentation errors can be roughly grouped into two classes - ‘false merges’, in which two separate neurons are mistakenly merged together, and ‘false splits’, in which a single neuron is mistakenly broken into several segments. Enabled by advances in visualization and semi-automated proofreading using our Neu3 tool (Hubbard et al., 2020), we first addressed large false mergers. A human examined each putative neuron and determined if it had an unusual morphology suggesting that a merge might have occurred, a task still much easier for humans than machines. If judged to be a false merger, the operator identified discrete points that should be on separate neurons. The shape was then resegmented in real time allowing users to explore other potential corrections. Neurons with more complex problems were then scheduled to be re-checked, and the process repeated until few false mergers remained.

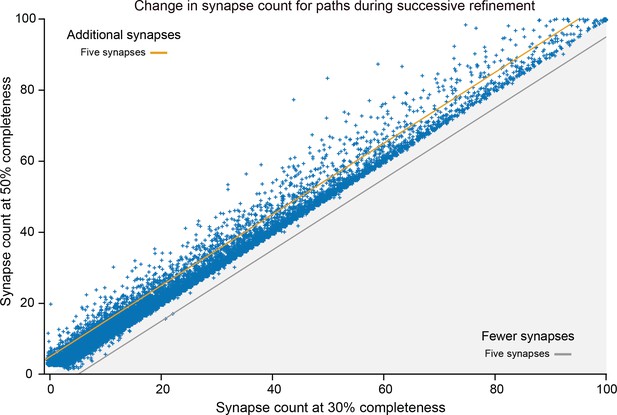

In the next phase, the largest remaining pieces were merged into neuron shapes using a combination of machine-suggested edits (Plaza, 2014) and manual intuition, until the main shape of each neuron emerged. This requires relatively few proofreading decisions and has the advantage of producing an almost complete neuron catalog early in the process. As discussed below, in the section on validation, emerging shapes were compared against genetic/optical image libraries (where available) and against other neurons of the same putative type, to guard against large missing or superfluous branches. These procedures (which focused on higher-level proofreading) produced a reasonably accurate library of the main branches of each neuron, and a connectome of the stronger neuronal pathways. At this point, there were still considerable variations among the brain regions, with greater completeness achieved in regions where the initial segmentation performed better.

Finally, to achieve the highest reconstruction completeness possible in the time allotted, and to enable confidence in weaker neuronal pathways, proofreaders connected remaining isolated fragments (segments) to already constructed neurons, using NeuTu (Zhao et al., 2018) and Neu3 (Hubbard et al., 2020). The fragments that would result in largest connectivity changes were considered first, exploiting automatic guesses through focused proofreading where possible. Since proofreading every small segment is still prohibitive, we tried to ensure a basic level of completeness throughout the brain with special focus in regions of particular biological interest such as the central complex and mushroom body.
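The prioritization described here can be sketched as a simple ordering. The sketch uses the number of synapses carried by a fragment as a proxy for the connectivity change its attachment would cause; that proxy, the fragment names, and the counts are assumptions for illustration, not the actual scoring used in focused proofreading.

```python
def proofreading_order(fragments):
    """fragments: dict of fragment id -> (n_tbars, n_psds).

    Returns fragment ids sorted so that those carrying the most synapses,
    and hence likely to change connectivity the most, come first.
    """
    return sorted(fragments, key=lambda frag: sum(fragments[frag]), reverse=True)


# Hypothetical orphan fragments with their synapse counts.
orphans = {"frag_a": (2, 10), "frag_b": (0, 3), "frag_c": (5, 40)}
print(proofreading_order(orphans))
```

Working down such a list until the allotted time runs out gives the largest gain in completeness per proofreading decision.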

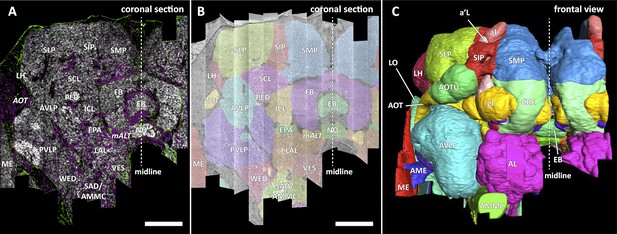

Defining brain regions

In a parallel effort to proofreading, the sample was annotated with discrete brain regions. Our progression in mapping the cells and circuits of the fly’s brain bears formal parallels to the history of mapping the earth, with many territories that are named and with known circuits, and others that still lack all or most of these. For the hemibrain dataset, the regions are based on the brain atlas in Ito et al., 2014. The dataset covers most of the right hemisphere of the brain, except the optic lobe (OL), periesophageal neuropils (PENP) and gnathal ganglia (GNG), as well as part of the left hemisphere (Table 2). It covers about 36% of all synaptic neuropils by volume, and 54% of the central brain neuropils. We examined innervation patterns, synapse distribution, and connectivity of reconstructed neurons to define the neuropils as well as their boundaries on the dataset. We also made necessary, but relatively minor, revisions to some boundaries by considering anatomical features that had not been known during the creation of previous brain maps, while following the existing structural definitions (Ito et al., 2014). We also used information from synapse point clouds, a predicted glial mask, and a predicted fiber bundle mask to determine boundaries of the neuropils (Figure 6A). The brain regions of the fruit fly (Figure 6, B and C) include synaptic neuropils and non-synaptic fiber bundles. The non-synaptic cell body layer on the brain surface, which contains cell bodies of the neurons and some glia, surrounds these structures. The synaptic neuropils can be further categorized into two groups: delineated and diffuse neuropils. The delineated neuropils have distinct boundaries throughout their surfaces, often accompanied by glial processes, and have clear internal structures in many cases. They include the antennal lobe (AL), bulb (BU), as well as the neuropils in the optic lobe (OL), mushroom body (MB), and central complex (CX). 
Remaining are the diffuse neuropils, sometimes referred to as terra incognita, since most have been less investigated than the delineated neuropils.

Division of the sample into brain regions.

(A) A vertical section of the hemibrain dataset with synapse point clouds (white), predicted glial tissue (green), and predicted fiber bundles (magenta). (B) Grayscale image overlaid with segmented neuropils at the same level as (A). (C) A frontal view of the reconstructed neuropils. Scale bar: (A, B) 50 μm.

Diffuse (terra incognita) neuropils

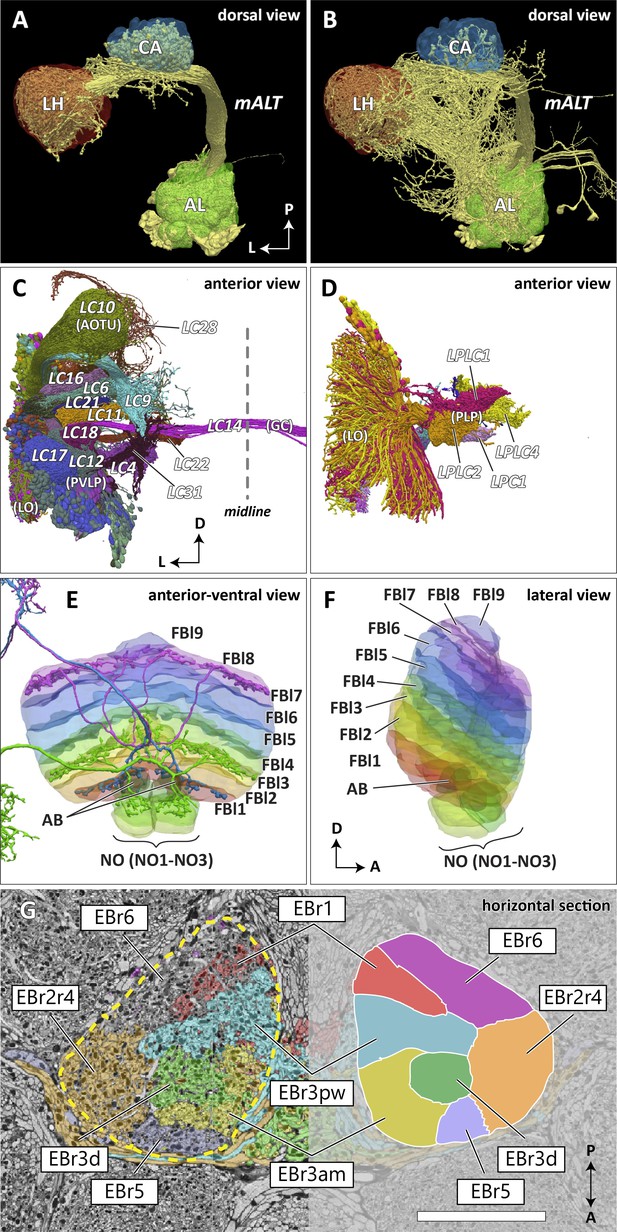

In the previous brain atlas of 2014, boundaries of some terra incognita neuropils were somewhat arbitrarily determined, due to a lack of precise information of the landmark neuronal structures used for the boundary definition. In the hemibrain data, we adjusted these boundaries to trace more faithfully the contours of the structures that are much better clarified by the EM-reconstructed data. Examples include the lateral horn (LH), ventrolateral neuropils (VLNP), and the boundary between the crepine (CRE) and lateral accessory lobe (LAL). The LH has been defined as the primary projection target of the olfactory projection neurons (PNs) from the antennal lobe (AL) via several antennal lobe tracts (ALTs) (Ito et al., 2014; Pereanu et al., 2010). The boundary between the LH and its surrounding neuropils is barely visible with synaptic immunolabeling such as nc82 or predicted synapse point clouds, as the synaptic contrast in these regions is minimal. The olfactory PNs can be grouped into several classes, and the projection sites of the uniglomerular PNs that project through the medial ALT (mALT), the thickest fiber bundle between the AL and LH, give the most conservative and concrete boundary of the ‘core’ LH (Figure 7A). Multiglomerular PNs, on the other hand, project to much broader regions, including the volumes around the core LH (Figure 7B). These regions include areas which are currently considered parts of the superior lateral protocerebrum (SLP) and posterior lateral protocerebrum (PLP). Since the ‘core’ LH roughly approximates the shape of the traditional LH, and the boundaries given by the multiglomerular PNs are rather diffused, in this study we assumed the core to be the LH itself. Of course, the multiglomerular PNs convey olfactory information as well, and therefore the neighboring parts of the SLP and PLP to some extent also receive inputs from the antennal lobe. 
These regions might be functionally distinct from the remaining parts of the SLP or PLP, but they are not explicitly separated from those neuropils in this study.

Reconstructed brain regions and substructures.

(A, B) Dorsal views of the olfactory projection neurons (PNs) and the innervated neuropils, AL, CA, and LH. Uniglomerular PNs projecting through the mALT are shown in (A), and multiglomerular PNs are shown in (B). (C, D) Columnar visual projection neurons. Each subtype of cells is color coded. LC cells are shown in (C), and LPC, LLPC, and LPLC cells are shown in (D). (E, F) The nine layers of the fan-shaped body (FB), along with the asymmetrical bodies (AB) and the noduli (NO), displayed as an anterior-ventral view (E), and a lateral view (F). In (E), three FB tangential cells (FB1D (blue), FB3A (green), FB8H (purple)) are shown as markers of the corresponding layers (FBl1, FBl3, and FBl8, respectively). (G) Zones in the ellipsoid body (EB) defined by the innervation patterns of different types of ring neurons. In this horizontal section of the EB, the left side shows the original grayscale data, and the seven ring neuron zones (see Table 1) are color-coded. The right side displays the seven segmented zones based on the innervation pattern, in a slightly different section. Scale bar: 20 μm.

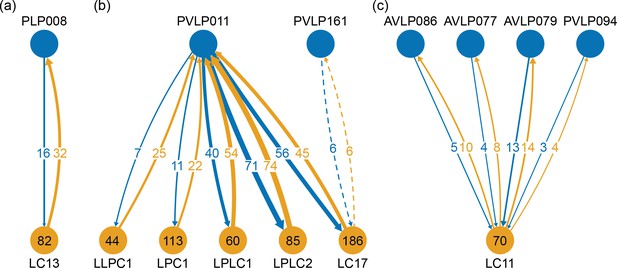

The VLNP is located in the lateral part of the central brain and receives extensive inputs from the optic lobe through various types of the visual projection neurons (VPNs). Among them, the projection sites of the lobula columnar (LC), lobula plate columnar (LPC), lobula-lobula plate columnar (LLPC), and lobula plate-lobula columnar (LPLC) cells form characteristic glomerular structures, called optic glomeruli (OG), in the AOTU, PVLP, and PLP (Klapoetke et al., 2017; Otsuna and Ito, 2006; Panser et al., 2016; Wu et al., 2016). We exhaustively identified columnar VPNs and found 41 types of LC, two types of LPC, six types of LLPC, and three types of LPLC cells (including sub-types of previously identified types). The glomeruli of these pathways were used to determine the medial boundary of the PVLP and PLP, following existing definitions (Ito et al., 2014), except for a few LC types which do not form glomerular terminals. The terminals of the reconstructed LC cells and other lobula complex columnar cells (LPC, LLPC, LPLC) are shown in Figure 7C and D, respectively.

In the previous paper (Ito et al., 2014), the boundary between the CRE and LAL was defined as the line roughly corresponding to the posterior-ventral surface of the MB lobes, since no other prominent anatomical landmarks were found around this region. In this dataset, we found several glomerular structures surrounding the boundary both in the CRE and LAL. These structures include the gall (GA), rubus (RUB), and round body (ROB). Most of them turned out to be projection targets of several classes of central complex neurons, implying the ventral CRE and dorsal LAL are closely related in their function. We re-determined the boundary so that each of the glomerular structures would not be divided into two, while keeping the overall architecture and definition of the CRE and LAL. The updated boundary passes between the dorsal surface of the GA and the ventral edge of the ROB. Other glomerular structures, including the RUB, are included in the CRE.

Delineated neuropils

Substructures of the delineated neuropils have also been added to the brain region map in the hemibrain. The asymmetrical bodies (AB) were added as the fifth independent neuropil of the CX (Wolff and Rubin, 2018). The AB is a small synaptic volume adjacent to the ventral surface of the fan-shaped body (FB) that has historically been included in the FB (Ito et al., 2014). The AB has been described as a Fasciclin II (FasII)-positive structure that exhibits left-right structural asymmetry by Pascual et al., 2004, who reported that most flies have their AB only in the right hemisphere, while a small proportion (7.6%) of wild-type flies have their AB on both sides. In the hemibrain dataset, the pair of ABs is situated on both sides of the midline, but the left AB is notably smaller than the right AB (right: 1679 μm³, left: 526 μm³), still showing an obvious left-right asymmetry. The asymmetry is consistent with light microscopy data (Wolff and Rubin, 2018), though the absolute sizes differ, with the light data showing averages (n = 21) of 522 μm³ for the right and 126 μm³ for the left. The AB is especially strongly connected to the neighboring neuropil, the FB, by neurons including vDeltaA_a (anatomical name AF in Wolff and Rubin, 2018), while it also houses both pre- and postsynaptic terminals of the CX output neurons such as the subset of FS4A and FS4B neurons that project to AB. These anatomical observations imply that the AB is a ventralmost annexed part of the FB, although this possibility is neither developmentally nor phylogenetically proven.

The round body (ROB) is also a small round synaptic structure situated on the ventral limit of the crepine (CRE), close to the β lobe of the MB (Lin et al., 2013; Wolff and Rubin, 2018). It is a glomerulus-like structure and one of the foci of the CX output neurons, including the PFR (protocerebral bridge – fan-shaped body – round body) neurons. It is classified as a substructure of the CRE along with other less-defined glomerular regions in the neuropil, many of which also receive signals from the CX. Among these, the most prominent one is the rubus (RUB). The ROB and RUB are two distinct structures; the RUB is embedded completely within the CRE, while the ROB is located on the ventrolateral surface of the CRE. The lateral accessory lobe (LAL), neighboring the CRE, also houses similar glomerular terminals, and the gall (GA) is one of them. While the ROB and GA have relatively clear boundaries separating them from the surrounding regions, they may not qualify as independent neuropils because of their small size and the structural similarities with the glomerulus-like terminals around them. They may be comparable with other glomerular structures such as the AL glomeruli and the optic glomeruli in the lateral protocerebrum, both of which are considered as substructures of the surrounding neuropils.

Substructures of independent neuropils are also defined using neuronal innervations. The five MB lobes on the right hemisphere are further divided into 15 compartments (α1–3, α’1–3, β1–2, β’1–2, and γ1–5) (Tanaka et al., 2008; Aso et al., 2014) by the mushroom body output neurons (MBONs) and dopaminergic neurons (DANs). Our compartment boundaries were defined by approximating the innervation of these neurons. Although the innervating regions of the MBONs and DANs do not perfectly tile the entire lobes, the compartments have been defined to tile the lobes, so that every synapse in the lobes belongs to one of the 15 compartments.

The anatomy of the central complex is discussed in detail in the companion paper ‘A complete synaptic-resolution connectome of the Drosophila melanogaster central complex’. Here, we summarize the division of its neuropils into compartments.

The FB is subdivided into nine horizontal layers (FBl1-9) (Figure 7E and F) as already illustrated (Wolff et al., 2015). The layer boundaries in our dataset were determined by the pattern of innervation of 574 FB tangential cells, which form nine groups depending on the dorsoventral levels they innervate in the FB. Since tangential cells overlap somewhat, and do not entirely respect the layer boundaries, these boundaries were chosen to maximize the containment of the tangential arbors within their respective layers.
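The idea of choosing a boundary to maximize containment can be shown with a one-dimensional toy problem. The real boundaries are surfaces in three dimensions, so this is only a sketch of the criterion. The coordinates are invented, and each arbor is reduced to sample points along a single dorsoventral axis.

```python
def best_boundary(lower_points, upper_points):
    """Find the cut that keeps the most arbor points inside their own layer.

    lower_points / upper_points: dorsoventral coordinates of arbor sample
    points from cells assigned to the lower and upper layer, respectively.
    Candidate cuts are midpoints between adjacent coordinates; the first
    best-scoring cut is returned together with its score.
    """
    candidates = sorted(set(lower_points) | set(upper_points))
    best_cut, best_score = None, -1
    for below, above in zip(candidates, candidates[1:]):
        cut = (below + above) / 2
        score = sum(p < cut for p in lower_points) + sum(p > cut for p in upper_points)
        if score > best_score:
            best_cut, best_score = cut, score
    return best_cut, best_score


# Invented coordinates: the two groups overlap slightly around 5-6.
lower = [1.0, 2.0, 3.0, 4.0, 6.0]
upper = [5.0, 7.0, 8.0, 9.0]
print(best_boundary(lower, upper))
```

Because the two groups overlap, no cut contains every point; the chosen cut leaves one of the nine points outside its layer.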

The EB is likewise subdivided into zones by the innervating patterns of the EB ring neurons, the most prominent class of neurons innervating the EB. The ring neurons have six subtypes, ER1-ER6, and each projects to specific zones of the EB. Among them, the regions innervated by ER2 and ER4 are mutually exclusive but highly intermingled, so these regions are grouped together into a single zone (EBr2r4). ER3 has the most neurons among the ring neuron subtypes and is further grouped into five subclasses (ER3a, d, m, p, and w). While each subclass projects to a distinct part of the EB, the innervation patterns of the subclasses ER3a and ER3m, and also ER3p and ER3w, are very similar to each other. The region innervated by ER3 is, therefore, subdivided into three zones, including EBr3am, EBr3pw, and EBr3d. Along with the other three zones, EBr1, EBr5, and EBr6 (innervated by ER1, ER5, and ER6), the entire EB is subdivided into seven non-overlapping zones (Figure 7G). Unlike other zones, EBr6 is innervated only sparsely by the ER6 cells, with the space filled primarily by synaptic terminals of other neuron types, including the extrinsic ring neurons (ExR). Omoto et al., 2017 segmented the EB into five domains (EBa, EBoc, EBop, EBic, EBip) by the immunolabeling pattern of DN-cadherin, and each type of the ring neurons may innervate more than one domain in the EB. Our results show that the innervation pattern of each ring neuron subtype is highly compartmentalized at the EM level and the entire neuropil can be sufficiently subdivided into zones based purely on the neuronal morphologies. The neuropil may be subdivided differently if other neuron types, such as the extrinsic ring neurons (ExR) (Omoto et al., 2018), are recruited as landmarks.

Quality of the brain region boundaries

Since many of the terra incognita neuropils are not clearly partitioned from each other by solid boundaries such as glial walls, it is important to evaluate whether the current boundaries reflect anatomical and functional compartments of the brain. To check our definitions, which are mostly based on morphology, we compute metrics for each boundary between any two adjacent neuropil regions. The first is the area of each boundary, in square microns, as shown in Figure 8A. The map shows results for brain regions that lie more than 75% within the hemibrain volume, restricted to right-side regions with the exception of the asymmetric AB(L). By restricting our analysis to the right part of the hemibrain, we aim to minimize the effect of smaller, traced-but-truncated neuron fragments on our metric.

Quality checks of the brain compartments.

(A) Areas of the boundaries (in square microns) between adjacent neuropils, indicated on a log scale. (B) The number of excess crossings normalized by the area of neuropil boundary. Larger dots indicate a more uncertain boundary. Data available in Figure 8—source data 1.


Figure 8—source data 1

Data for Figure 8.

Column A: index number; column B: first ROI name; column C: second ROI name; column D: boundary area in square microns; column E: number of neuron crossings; column F: number of distinct neurons that cross; column G: (crossings - number of neurons) per area.

- https://cdn.elifesciences.org/articles/57443/elife-57443-fig8-data1-v3.csv

Next, for each boundary, we compute the number of ‘excess’ neuron crossings by traced neurons, where excess crossings are defined as 0 for a neuron that does not cross the boundary, and n − 1 for a neuron that crosses the same boundary n times. There is no contribution to the metric from neurons that cross a boundary once, since most such crossings are inevitable no matter where the boundary is placed. Figure 8B shows the number of excess crossings normalized by the area of boundary. A bigger dot indicates a potentially less well-defined boundary.
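A minimal sketch of this metric follows. It takes the excess for a neuron that crosses a boundary n times to be n − 1, which matches column G of the source data for Figure 8, (crossings - number of neurons) per area. The crossing counts and boundary area are invented for illustration.

```python
def excess_crossings_per_area(crossings_per_neuron, boundary_area_um2):
    """crossings_per_neuron: times each traced neuron crosses one boundary.

    A neuron crossing n >= 1 times contributes n - 1; a neuron that never
    crosses contributes 0. The sum is normalized by the boundary area.
    """
    excess = sum(max(n - 1, 0) for n in crossings_per_neuron)
    return excess / boundary_area_um2


# Five hypothetical neurons at a boundary of 100 square microns.
counts = [0, 1, 1, 3, 2]
score = excess_crossings_per_area(counts, boundary_area_um2=100.0)
print(score)
```

In this example there are 7 crossings by 4 crossing neurons, giving 3 excess crossings.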

We spot checked many of the instances and in general note that the brain regions with high excess crossings per area, such as those in SNP, INP and VLNP, tend to have less well-defined boundaries. In particular, the boundaries at SMP/CRE, CRE/LAL, SMP/SIP, and SIP/SLP have worse scores, indicating these boundaries may not reflect actual anatomical and functional segregation of the neuropils. These brain regions were defined based on the arborization patterns of characteristic neuron types, but because neurons in the terra incognita neuropils tend to be rather heterogeneous, there are many other neuron types that do not follow these boundaries. The boundary between the FB and the AB also has a high excess crossing score, suggesting the AB is tightly linked to the neighboring FB.

Insights for a whole-brain remapping

The current brain regions based on Ito et al., 2014 contain a number of arbitrary determinations of brain regions and their boundaries in the terra incognita neuropils. In this study, we tried to solidify the ambiguous boundaries as much as possible using the information from the reconstructed neurons. However, large parts of the left hemisphere and the subesophageal zone (SEZ) are missing from the hemibrain dataset, and neurons innervating these regions are not sufficiently reconstructed. This incompleteness of the dataset is the main reason that we did not alter the previous map drastically and kept all the existing brain regions even if their anatomical and functional significance is not obvious. Once a complete EM volume of the whole fly brain is imaged and most of its 100,000 neurons are reconstructed, the entire brain can be re-segmented from scratch with more comprehensive anatomical information. Arbitrary or artificial neuropil boundaries will thereby be minimized, if not avoided, in a new brain map. Anatomy-based neuron segmentation strategies such as NBLAST may be used as neutral methods to revise the neuropils and their boundaries. Any single method, however, is not likely to produce consistent boundaries throughout the brain, especially in the terra incognita regions. It may be necessary to use different methods and criteria to segment the entire brain into reasonable brain regions. Such a new map would need discussion in a working group, and approval from the community in advance (as did the previous map [Ito et al., 2014]), insofar as it would replace the current map and therefore require a major revision of the neuron mapping scheme.

Cell type classification

Defining cell types for groups of similar neurons is a time-honored means to help to understand the anatomical and functional properties of a circuit. Presumably, neurons of the same type have similar circuit roles. However, the definition of what is a distinct cell type and the exact delineation between one cell type and another remains inherently subjective and represents a classic taxonomic challenge, pitting ‘lumpers’ against ‘splitters’. Therefore, despite our best efforts, we recognize that our typing of cells may not be identical to that proposed by other experts. We expect future revisions to cell type classification, especially as additional dense connectome data become available.

One common method of cell type classification, used in flies, exploits the GAL4 system to highlight the morphology of neurons having similar gene expression (Jenett et al., 2012). Since these genetic lines are imaged using fluorescence and confocal microscopy, we refer to them as ‘light lines’. Where they exist and are sufficiently sparse, light lines provide a key method for identifying types by grouping morphologically similar neurons together. However, there are no guarantees of coverage, and it is difficult to distinguish between neurons of very similar morphology but different connectivity.

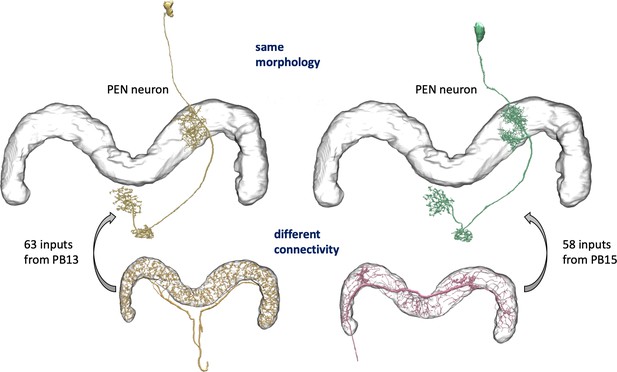

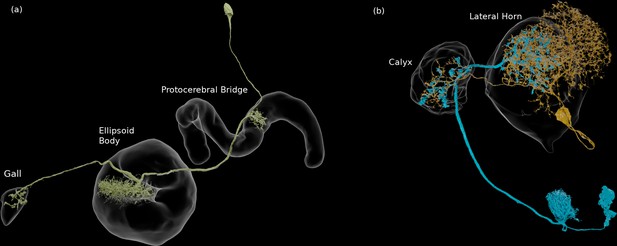

We enhanced the classic view of morphologically distinct cell types by defining distinct cell types (or sub-types) based on both morphology and connectivity. Connectivity-based clustering often reveals clear cell type distinctions, even when genetic markers have yet to be found, or when the neuronal morphologies of different types are hardly distinguishable in optical images. For example, the two PEN (protocerebral bridge - ellipsoid body - noduli) neurons have very similar forms but quite distinct inputs (Figure 9; Turner-Evans et al., 2019). Confirming their differences, PEN1 and PEN2 neurons have in fact been shown to have different functional activity (Green et al., 2017).

An example of two neurons with very similar shapes but differing connectivities.

PEN1 is on the left, PEN2 on the right.

Based on our previous definition of cell type, many neurons exhibit a unique morphology or connectivity pattern at least within one hemisphere of the brain (with a matching type in the other hemisphere in most cases). Because our hemibrain volume covers only the right-side examples of ipsilaterally-projecting neurons, and the contralateral arborizations of bilaterally-projecting neurons arising from the left side of the brain were in practice very difficult to match to neurons in the right side, many partial neurons were therefore left uncategorized. As a result, many neuron types consisting of a distinct morphology and connectivity have only a single example in our reconstruction.

It is possible to provide coarser groupings of neurons. For instance, most cell types are grouped by their cell body fiber representing a distinct clonal unit, which we discuss in more detail below. Furthermore, each neuron can be grouped with neurons that innervate similar brain regions. In this paper, we do not explicitly formalize this higher level grouping, but data on the innervating brain regions can be readily mined from the dataset.

Methodology for assigning cell types and nomenclature

Assigning types and names to the more than 20,000 reconstructed cells was a difficult undertaking. Fewer than 20% of neuron types found in our data have been described in the literature, and half of our neurons have no previously annotated type. Adding to the complexity, prior work focused on morphological similarities and differences, but here we have, for the first time, connectivity information to assist in cell typing as well.

Many cell types in well-explored regions have already been described and named in the literature, but existing names can be both inconsistent and ambiguous. The same cell type is often given different names in different publications, and conversely, the same name, such as PN for projection neuron, is used for many different cell types. Nonetheless, for cell types already named in the literature (which we designate as published cell types, many indexed, with their synonyms, at http://virtualflybrain.org), we have tried to use existing names. In a few cases, using existing names created conflicts, which we have had to resolve. ‘R1’, for example, has long been used both for photoreceptor neurons innervating the lamina and medulla, and for ring neurons in the ellipsoid body of the central complex. Similarly, ‘LN’ has been used to refer to lateral neurons in the circadian clock system, ‘local neurons’ in the antennal lobe, and LAL-Nodulus neurons in the central complex. To resolve these conflicts, the ellipsoid body ring neurons are now named ‘ER1’ instead of ‘R1’, and the nodulus neurons are now ‘LNO’ and ‘GLNO’ instead of ‘LN’ and ‘GLN’. The names of the antennal lobe local neurons are always preceded by lowercase letters for their cell body locations to differentiate them from the clock neuron names, for example, lLN1 versus LNd. Similarly, ‘dorsal neurons’ of the circadian clock system and ‘descending neurons’ in general, both previously abbreviated as ‘DN’, are distinguished by the characters that follow the abbreviation: numbers for the clock neurons (e.g. DN1) and letters for descending neurons (e.g. DNa01).
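The disambiguation conventions described above can be expressed as a small set of pattern rules. The following is only an illustrative sketch: the rule table, the function name `disambiguate`, and the category labels are our own assumptions for this example, and the patterns cover only the specific name conflicts mentioned in the text, not the full nomenclature.

```python
import re

# Hypothetical rule table; order matters because 'LNO' also starts with 'LN'.
RULES = [
    (re.compile(r"^ER\d"), "ellipsoid body ring neuron"),
    (re.compile(r"^G?LNO"), "nodulus neuron (central complex)"),
    (re.compile(r"^[a-z]+LN"), "antennal lobe local neuron"),
    (re.compile(r"^LN"), "clock lateral neuron"),
    (re.compile(r"^DN\d"), "clock dorsal neuron"),
    (re.compile(r"^DN[A-Za-z]"), "descending neuron"),
]


def disambiguate(name: str) -> str:
    """Return the category implied by the naming convention, if any."""
    for pattern, label in RULES:
        if pattern.search(name):
            return label
    # Names such as the old, ambiguous 'R1' match none of the rules.
    return "unresolved"


for example in ["ER1", "lLN1", "LNd", "LNO", "DN1", "DNa01", "R1"]:
    print(example, "->", disambiguate(example))
```

The old ambiguous name ‘R1’ deliberately falls through to "unresolved", which mirrors the reason the renaming was needed.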

Overall, we defined a ‘type’ of neurons as either a single cell or a group of cells that have a very similar cell body location, morphology, and pattern of synaptic connectivity. We were able to trace from arborizations to the cell bodies for 15,912 neurons in the hemibrain volume, ≈85% of which are located in the right side of the brain while the rest are in the medialmost part of the left-side brain.

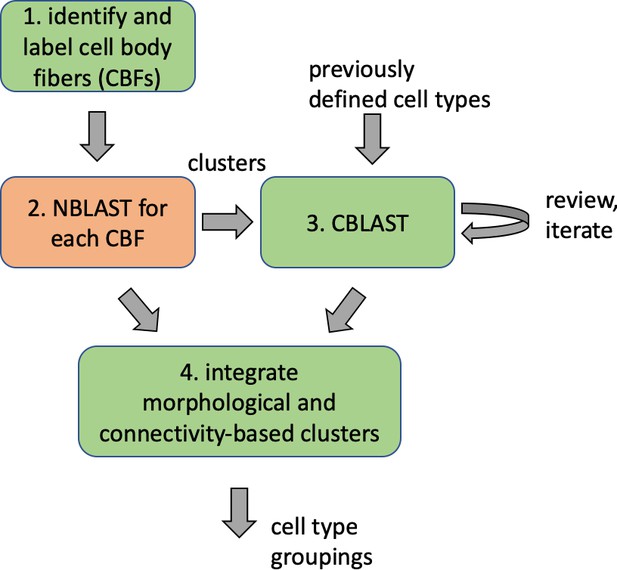

We classified these neurons in several steps. The first step classified all cells by their lineage, grouping neurons according to their bundle of cell body fibers (CBFs). Neuronal cell bodies are located in the cell body layer that surrounds the brain, and each neuron projects a single CBF towards synaptic neuropils. In the central brain, cell bodies of clonally related neurons deriving from a single stem cell (called a neuroblast in the insect brain) tend to form clusters, from each of which arises one or several bundles of CBFs. Comparing the location, trajectory, and the combined arborization patterns of all the neurons that arise from a particular CBF with the light microscopy (LM) image data of the neuronal progeny that derive from single neuroblasts (Ito et al., 2013; Yu et al., 2013), we confirmed that the neurons of each CBF group belong to a single lineage.

We carefully examined the trajectory and origins of CBFs of the 15,752 neurons on the right central brain and identified 192 distinct CBF bundles. Neurons arising from four specific CBF bundles arborize primarily in the contralateral brain side, which is not fully covered in the hemibrain volume. We characterized these neurons using the arborization patterns in the right-side brain that are formed by the neurons arising from the left-side CBFs.

The CBF bundles and associated neuronal cell body clusters were named according to their location (split into eight sectors of the brain surface with the combination of Anterior/Posterior, Ventral/Dorsal, and Medial/Lateral) and a number within the sector given according to the size of cell population. Thus, CBF group ADM01 is the group with the largest number of neurons in the Anterior Dorsal Medial sector of the brain’s surface (see the cellBodyFiber field of the Neuprint database explained later). For the neurons of the four CBF bundles that arborize primarily in the contralateral brain side - AVM15, 18, 19, and PVM10 - we indicated CBF information in the records of the left-side neurons.
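As a minimal sketch of this naming scheme, the code below assigns sector-plus-rank names to bundles, with rank 01 going to the bundle with the most neurons in its sector. The bundle identifiers and neuron counts are invented for illustration, and the tie-breaking rule (by identifier) is an assumption, since the text does not say how ties were handled.

```python
def name_bundles(bundles):
    """Map bundle id -> name such as 'ADM01'.

    bundles: dict of bundle id -> (sector code, number of neurons), where the
    sector code combines Anterior/Posterior, Ventral/Dorsal and Medial/Lateral
    initials (e.g. 'ADM' for Anterior Dorsal Medial).
    """
    by_sector = {}
    for bundle_id, (sector, n_neurons) in bundles.items():
        by_sector.setdefault(sector, []).append((n_neurons, bundle_id))

    names = {}
    for sector, members in by_sector.items():
        # Largest population first; ties broken by id (assumption).
        members.sort(key=lambda item: (-item[0], item[1]))
        for rank, (_, bundle_id) in enumerate(members, start=1):
            names[bundle_id] = f"{sector}{rank:02d}"
    return names


# Hypothetical bundles and counts, for illustration only.
example = {
    "bundle_a": ("ADM", 120),
    "bundle_b": ("ADM", 300),
    "bundle_c": ("PVM", 40),
}
print(name_bundles(example))
```

Here `bundle_b` receives the name ADM01 because it has the largest population among the Anterior Dorsal Medial bundles in the toy input.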

Among the 192 bundles, 155 matched the CBF bundles of 92 known and six newly identified clonal units (Ito et al., 2013; Yu et al., 2013), where a clonal unit is a population of neurons and neuronal circuits derived from a single stem cell. The remaining 37 CBF bundles are minor populations and most likely of embryonic origin. In addition, we found 80 segregated cell body fiber bundles (SCB001-080, totalling 112 cells) with only one or two neurons per bundle. Many of them are also likely of embryonic origin.

We were able to identify another 6682 neurons that were not traced up to their cell bodies. For the neurons that arise from the contralateral side, we gave matching neuron names and associated CBF information, provided their specific arborization patterns gave us convincing identity information by comparison with cells that we identified in the right side of the brain. For the neurons arising from the ventralmost part of the brain outside of the hemibrain volume, we identified and gave them names if we could find convincingly specific arborization patterns, even if the CBF and cell body location data were missing. Sensory neurons that project to the specific primary sensory centers were also identified insofar as possible. In total, we typed and named 22,594 neurons.
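The counts quoted in this section appear to be mutually consistent, which can be checked with simple arithmetic. The variable names below are ours; the numbers are taken from the text.

```python
# Neurons traced to a cell body, and neurons identified without one.
traced_to_cell_body = 15_912
not_traced_to_cell_body = 6_682
total_typed = traced_to_cell_body + not_traced_to_cell_body
print(total_typed)  # 22594, the total number of typed and named neurons

# CBF bundles: those matched to clonal units and the remainder.
all_bundles = 192
matched_bundles = 155
print(all_bundles - matched_bundles)  # 37 minor bundles

# Clonal units matched: previously known plus newly identified.
print(92 + 6)  # 98
```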

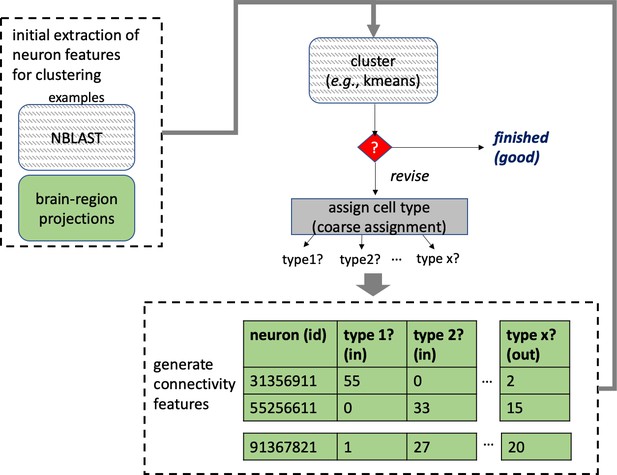

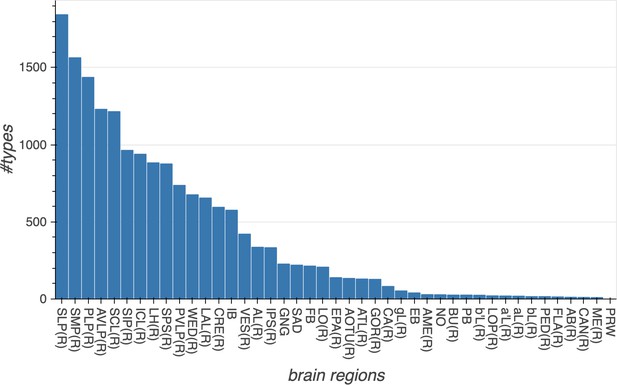

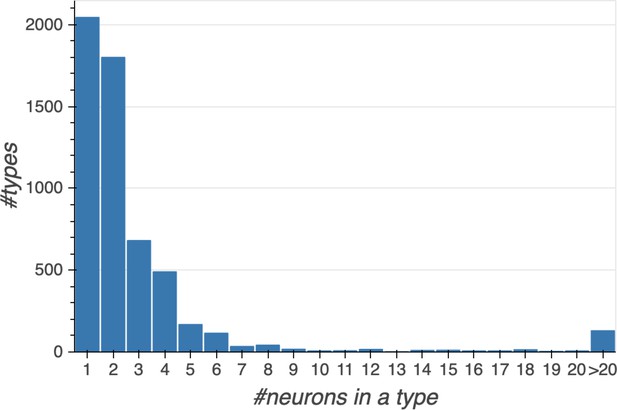

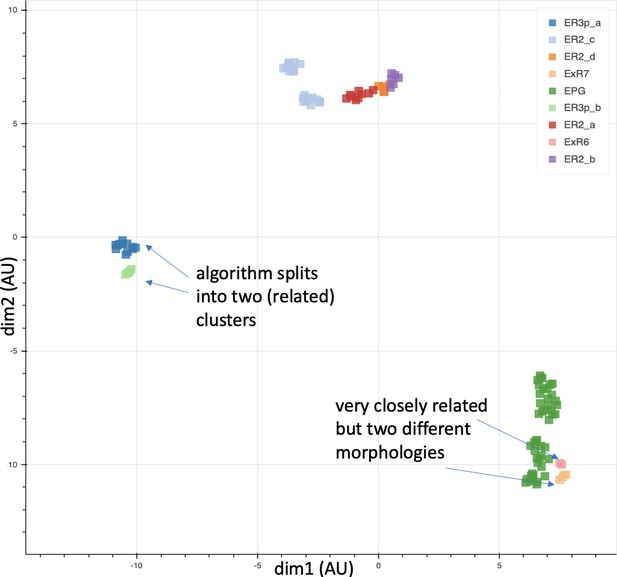

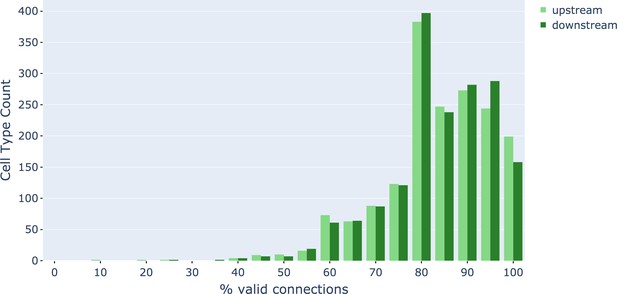

Different stem cells sometimes give rise to neurons with very similar morphologies. We classified these as different types because of their distinct developmental origin and slightly different locations of their cell bodies and CBFs. Thus, the next step in neuron typing was to cluster neurons within each CBF group. This process consisted of three further steps, as shown in Figure 10. First, we used NBLAST (Costa et al., 2016) to subject all the neurons of a particular CBF group to morphology-based clustering. Next, we used CBLAST, a new tool to cluster neurons based on synaptic connectivity (see the next section). This step is an iterative process, using neuron morphology as a template, regrouping neurons after more careful examination of neuron projection patterns and their connections. Neurons with similar connectivity characteristics but with distinguishable shapes were categorized into different morphology types. Those with practically indistinguishable shapes but with different connectivity characteristics were categorized into connectivity types within a morphology type. Finally, we validated the cell typing with extensive manual review and visual inspection. This review allowed us both to confirm cell type identity and help ensure neuron reconstruction accuracy. In total we identified 5229 morphology types and 5609 connectivity types in the hemibrain dataset. (See Table 3 for the detailed numbers and Appendix 1—table 6 for naming schemes for various neuron categories.)
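The nesting of connectivity types within morphology types can be illustrated with a toy sketch. It does not reproduce NBLAST or CBLAST; it assumes each neuron has already been given a morphology label and a connectivity label (all labels below are hypothetical) and only shows how the two counts relate.

```python
from collections import defaultdict


def count_types(labels):
    """Count morphology types and connectivity types.

    labels: dict of neuron id -> (morphology label, connectivity label).
    A connectivity type is a distinct connectivity label *within* one
    morphology type, so there are never fewer connectivity types than
    morphology types.
    """
    conn_by_morph = defaultdict(set)
    for morph, conn in labels.values():
        conn_by_morph[morph].add(conn)
    n_m_types = len(conn_by_morph)
    n_c_types = sum(len(conns) for conns in conn_by_morph.values())
    return n_m_types, n_c_types


# Hypothetical labels: morphology type 'M1' splits into two connectivity types.
toy = {
    1: ("M1", "a"),
    2: ("M1", "a"),
    3: ("M1", "b"),
    4: ("M2", "a"),
}
print(count_types(toy))  # (2, 3)
```

This is the same relationship seen in the reported totals, where the number of connectivity types (5609) exceeds the number of morphology types (5229).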

Summary of the numbers and types of the neurons in the hemibrain EM dataset.

m-types is the number of morphology types; c-types the number of connectivity types; and c/t the average number of cells per connectivity type. Brain regions with repetitive array architecture tend to have higher average numbers of cells per type (see Figure 12). The cell number includes ≈4000 neurons on the contralateral side, and the percentage of contralateral cells varies between 0 and ≈50% depending on the category. For example, the central complex includes neurons on both sides of the brain, the mushroom body neurons are identified mostly on the right side, and many left-side antennal lobe sensory neurons are included as they tend to terminate bilaterally. Because of these differences, the figures shown above do not indicate the number of cells (or cell number per type) per brain side.

| Brain regions (neuropils) or neuron types | Cells | m-types | c-types | c/t | Notes |
|---|---|---|---|---|---|
| Central complex neuropil neurons | 2826 | 224 | 262 | 10.8 | |
| Mushroom body neuropil neurons | 2315 | 72 | 80 | 28.9 | Including MB-associated DANs |
| Mushroom body neuropil neurons | 2003 | 51 | 51 | 39.3 | Excluding MB-associated DANs |
| Dopaminergic neurons (DANs) | 335 | 35 | 43 | 7.8 | Including MB-associated DANs |
| Dopaminergic neurons (DANs) | 23 | 14 | 14 | 1.7 | Excluding MB-associated DANs |
| Octopaminergic neurons | 19 | 10 | 10 | 1.9 | |
| Serotonergic (5HT) neurons | 9 | 5 | 5 | 1.8 | |
| Peptidergic and secretory neurons | 51 | 12 | 14 | 3.6 | |
| Circadian clock neurons | 27 | 7 | 7 | 3.9 | |
| Fruitless gene expressing neurons | 84 | 29 | 30 | 2.8 | |
| Visual projection neurons and lobula intrinsic neurons | 3723 | 160 | 160 | 23.3 | |
| Descending neurons | 103 | 51 | 51 | 2.0 | |
| Sensory associated neurons | 2768 | 67 | 67 | 41.3 | |
| Antennal lobe neuropil neurons | 604 | 284 | 294 | 2.1 | |
| Lateral horn neuropil neurons | 1496 | 517 | 683 | 2.2 | |
| Anterior optic tubercle neuropil neurons | 243 | 77 | 80 | 3.0 | |
| Antler neuropil neurons | 81 | 45 | 45 | 1.8 | |
| Anterior ventrolateral protocerebrum neuropil neurons | 1276 | 596 | 629 | 2.0 | |
| Clamp neuropil neurons | 746 | 364 | 382 | 2.0 | |
| Crepine neuropil neurons | 333 | 108 | 115 | 2.9 | |
| Inferior bridge neuropil neurons | 264 | 119 | 119 | 2.2 | |
| Lateral accessory lobe neuropil neurons | 429 | 204 | 206 | 2.1 | |
| Posterior lateral protocerebrum neuropil neurons | 480 | 255 | 260 | 1.8 | |
| Posterior slope neuropil neurons | 621 | 303 | 311 | 2.0 | |
| Posterior ventrolateral protocerebrum neuropil neurons | 348 | 151 | 156 | 2.2 | |
| Saddle neuropil and antennal mechanosensory and motor center neurons | 219 | 96 | 99 | 2.2 | |
| Superior lateral protocerebrum neuropil neurons | 1096 | 468 | 494 | 2.2 | |
| Superior intermediate protocerebrum neuropil neurons | 220 | 90 | 92 | 2.4 | |
| Superior medial protocerebrum neuropil neurons | 1494 | 605 | 629 | 2.4 | |
| Vest neuropil neurons | 137 | 84 | 85 | 1.6 | |
| Wedge neuropil neurons | 559 | 212 | 230 | 2.4 | |
| Total | 22,594 | 5229 | 5609 | 4.0 | |
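The c/t column is the number of cells divided by the number of connectivity types, rounded to one decimal place. As a short worked check on three rows of the table (the function name `cells_per_type` is ours):

```python
def cells_per_type(cells: int, c_types: int) -> float:
    """Average number of cells per connectivity type, to one decimal."""
    return round(cells / c_types, 1)


# (cells, c-types) pairs copied from the table.
rows = {
    "Central complex neuropil neurons": (2826, 262),
    "Mushroom body neuropil neurons (including MB-associated DANs)": (2315, 80),
    "Total": (22594, 5609),
}

for label, (cells, c_types) in rows.items():
    print(f"{label}: {cells_per_type(cells, c_types)}")
```

These three rows give 10.8, 28.9, and 4.0, matching the tabulated values.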

In spite of the general rule that neurons arising from different stem cells are classified as different types, we assigned the same neuron type name to neurons of different lineages in the following four cases.

Mushroom body intrinsic neurons called Kenyon cells, which are formed by a set of four near-identical neuroblasts (Ito et al., 1997) (see also the accompanying MB paper).

Columnar neurons of the central complex, where neurons arising from different stem cells form a repetitive column-like arrangement and are near identical in terms of connectivity with tangential neurons (Hanesch et al., 1989; Wolff et al., 2015; Wolff and Rubin, 2018) (and the accompanying CX paper).

The PAM cluster of the dopaminergic neurons, where one of the hemilineages of the two clonal units forms a near-identical set of neurons (Lee et al., 2020) (accompanying MB paper).

Cell body fiber groupings for neurons of the lateral horn, where systematic neuron names have already been given based on the light microscopy analysis (Frechter et al., 2019), which did not allow for the precise segregation of very closely situated CBF bundles. Individual cell types exist within the same lineage, however.

‘Lumping’ versus ‘splitting’ is a difficult problem for classification. Following the experience of taxonomy, we opted for splitting when we could not obtain convincing identity information, a decision designed to ease the task of future researchers. If we split two similar neuron types into Type 1 and Type 2, then there is a chance future studies might conclude that they are actually subsets of a common cell type. If so, then at that time we can simply merge the two types as Type 1, leave the other type name unused, and publish a lookup table of the lumping process to keep track of the names that have been merged. The preceding studies can then be re-interpreted as analyses of particular subsets of a common neuron type. If, on the contrary, we lump the two similar neurons into a common type, and a later study finds they are actually a mixture of two neuron types, then it would not be possible to determine which of the two neuron types, or a mixture of them, was analyzed in preceding studies.
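The merge-with-lookup-table idea can be sketched as a small registry that retires one name and records where it went. This is a hypothetical illustration of the bookkeeping only; the class and method names are our own and do not correspond to any released tool.

```python
class TypeRegistry:
    """Track active type names and a lookup table of merged (retired) names."""

    def __init__(self, names):
        self.active = set(names)
        self.merged_into = {}  # retired name -> surviving name

    def merge(self, keep: str, retire: str) -> None:
        """Lump `retire` into `keep`, leaving the retired name unused."""
        if keep not in self.active or retire not in self.active:
            raise KeyError("both names must be active types")
        self.active.remove(retire)
        self.merged_into[retire] = keep

    def resolve(self, name: str) -> str:
        """Follow the lookup table to the current name for a type."""
        while name in self.merged_into:
            name = self.merged_into[name]
        return name


registry = TypeRegistry(["Type 1", "Type 2"])
registry.merge(keep="Type 1", retire="Type 2")
print(registry.resolve("Type 2"))  # Type 1
print(sorted(registry.active))     # ['Type 1']
```

An earlier study that reported results for "Type 2" can still be resolved to the merged type, whereas no comparable table could recover which subtype a study used if the types had been lumped from the start.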

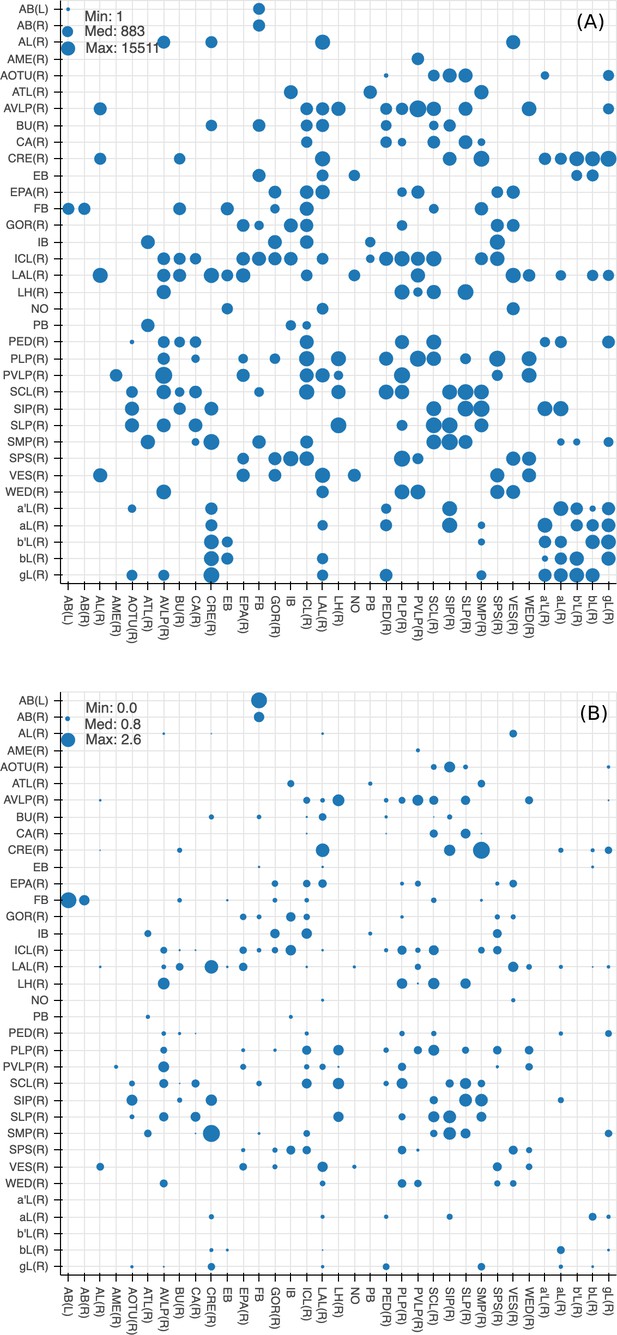

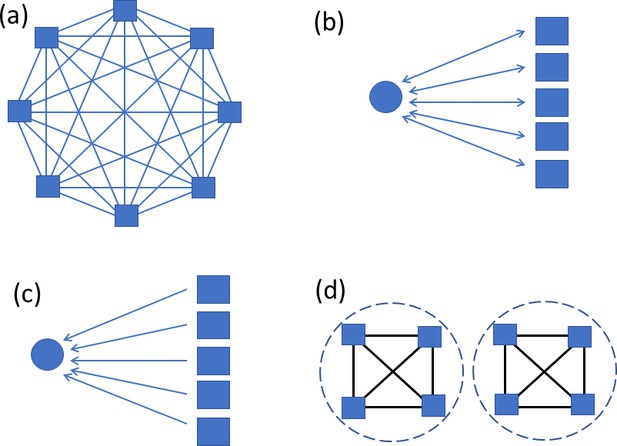

In the hemibrain, using the defined brain regions (neuropils) and reference to known expression driver strains, we were able to assign a cell type to many cells. Where possible, we matched previously defined cell types with those labeled in light data using a combination of Neuprint, an interactive analysis tool (described later), Color_MIP_mask search (Otsuna et al., 2018), and human recognition to find the matching cell types, especially in well-explored neuropils such as the mushroom body and central complex, where abundant cell type information was already available and where we are more confident in our anatomical expertise (see the accompanying MB and CX papers). Even though most of the cell types in the MB and CX were already known, we still found new cell types in these regions, an important vindication of our methods. In these cases, we tried to name them using the existing schemes for these regions, and further refined these morphological groupings with relevant information on connectivity.