Likelihood approximation networks (LANs) for fast inference of simulation models in cognitive neuroscience

Abstract

In cognitive neuroscience, computational modeling can formally adjudicate between theories and affords quantitative fits to behavioral/brain data. Pragmatically, however, the space of plausible generative models considered is dramatically limited by the set of models with known likelihood functions. For many models, the lack of a closed-form likelihood typically impedes Bayesian inference methods. As a result, standard models are evaluated for convenience, even when other models might be superior. Likelihood-free methods exist but are limited by their computational cost or their restriction to particular inference scenarios. Here, we propose neural networks that learn approximate likelihoods for arbitrary generative models, allowing fast posterior sampling with only a one-off cost for model simulations that is amortized for future inference. We show that these methods can accurately recover posterior parameter distributions for a variety of neurocognitive process models. We provide code allowing users to deploy these methods for arbitrary hierarchical model instantiations without further training.

eLife digest

Cognitive neuroscience studies the links between the physical brain and cognition. Computational models that attempt to describe the relationships between the brain and specific behaviours quantitatively are becoming increasingly popular in this field. This approach may help determine the causes of certain behaviours and make predictions about what behaviours will be triggered by specific changes in the brain.

Many of the computational models used in cognitive neuroscience are built based on experimental data. A good model can predict the results of new experiments given a specific set of conditions with few parameters. Candidate models are often called ‘generative’: models that can simulate data. However, typically, cognitive neuroscience studies require going the other way around: they need to infer models and their parameters from experimental data. Ideally, it should also be possible to properly assess the remaining uncertainty over the parameters after having access to the experimental data. To facilitate this, the Bayesian approach to statistical analysis has become popular in cognitive neuroscience.

Common software tools for Bayesian estimation require a ‘likelihood function’, which measures how well a model fits experimental data for given values of the unknown parameters. A major obstacle is that for all but the most common models, obtaining precise likelihood functions is computationally costly. In practice, this requirement limits researchers to evaluating and comparing a small subset of neurocognitive models for which a likelihood function is known. As a result, it is convenience, rather than theoretical interest, that guides this process.

In order to provide one solution for this problem, Fengler et al. developed a method that allows users to perform Bayesian inference on a larger number of models without high simulation costs. This method uses likelihood approximation networks (LANs), a computational tool that can estimate likelihood functions for a broad class of models of decision making, allowing researchers to estimate parameters and to measure how well models fit the data. Additionally, Fengler et al. provide both the code needed to build networks using their approach and a tutorial for users.

The new method, along with the user-friendly tool, may help to discover more realistic brain dynamics underlying cognition and behaviour as well as alterations in brain function.

Introduction

Computational modeling has gained traction in cognitive neuroscience in part because it can guide principled interpretations of functional demands of cognitive systems while maintaining a level of tractability in the production of quantitative fits of brain-behavior relationships. For example, models of reinforcement learning (RL) are frequently used to estimate the neural correlates of the exploration/exploitation tradeoff, of asymmetric learning from positive versus negative outcomes, or of model-based vs. model-free control (Schönberg et al., 2007; Niv et al., 2012; Frank et al., 2007; Zajkowski et al., 2017; Badre et al., 2012; Daw et al., 2011b). Similarly, models of dynamic decision-making processes are commonly used to disentangle the strength of the evidence for a given choice from the amount of that evidence needed to commit to any choice, and how such parameters are impacted by reward, attention, and neural variability across species (Rangel et al., 2008; Forstmann et al., 2010; Krajbich and Rangel, 2011; Frank et al., 2015; Yartsev et al., 2018; Doi et al., 2020). Parameter estimates might also be used as a theoretically driven method to reduce the dimensionality of brain/behavioral data that can be used for prediction of, for example, clinical status in computational psychiatry (Huys et al., 2016).

Interpreting such parameter estimates requires robust methods that can estimate their generative values, ideally including their uncertainty. For this purpose, Bayesian statistical methods have gained traction. The basic conceptual idea in Bayesian statistics is to treat parameters θ and data $\mathbf{x}$ as stemming from a joint probability model $p(\theta, \mathbf{x})$. Statistical inference proceeds by using Bayes’ rule,

$$p(\theta | \mathbf{x}) = \frac{p(\mathbf{x} | \theta)\, p(\theta)}{p(\mathbf{x})},$$

to get at $p(\theta | \mathbf{x})$, the posterior distribution over parameters given data. $p(\theta)$ is known as the prior distribution over the parameters θ, and $p(\mathbf{x})$ is known as the evidence or just probability of the data (a quantity of importance for model comparison). The term $p(\mathbf{x} | \theta)$, the probability (density) of the dataset $\mathbf{x}$ given parameters θ, is known as the likelihood (in accordance with usual notation, we will also write $\ell(\theta | \mathbf{x})$ in the following). It is common in cognitive science to represent likelihoods as $p(\mathbf{x} | \theta, s)$, where $s$ specifies a particular stimulus. We suppress $s$ in our notation, but note that our approach easily generalizes when explicitly conditioning on trial-wise stimuli. Bayesian parameter estimation is a natural way to characterize uncertainty over parameter values. In turn, it provides a way to identify and probe parameter tradeoffs. While we often do not have access to $p(\theta | \mathbf{x})$ directly, we can draw samples from it instead, for example, via Markov Chain Monte Carlo (MCMC) (Robert and Casella, 2013; Diaconis, 2009; Robert and Casella, 2011).
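As a minimal sketch of how MCMC can draw posterior samples using only the product of likelihood and prior (the evidence cancels in the acceptance ratio), consider a random-walk Metropolis sampler for the mean of a Gaussian with unit variance. The toy model, the prior, the step size, and all function names are illustrative assumptions and are not part of the methods described in this paper.

```python
import math
import random


def log_likelihood(theta, data):
    """Log p(x | theta) for i.i.d. Gaussian data with mean theta and unit variance."""
    return sum(-0.5 * (x - theta) ** 2 - 0.5 * math.log(2 * math.pi) for x in data)


def log_prior(theta):
    """Log density of a broad Gaussian prior N(0, 10^2) (an arbitrary choice)."""
    return -0.5 * (theta / 10.0) ** 2 - math.log(10.0 * math.sqrt(2 * math.pi))


def metropolis(data, n_samples=5000, step=0.5, seed=0):
    """Random-walk Metropolis; only the unnormalized posterior is ever evaluated."""
    rng = random.Random(seed)
    theta = 0.0
    log_post = log_likelihood(theta, data) + log_prior(theta)
    samples = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)
        log_post_prop = log_likelihood(proposal, data) + log_prior(proposal)
        # Accept with probability min(1, p(proposal | x) / p(theta | x)).
        if rng.random() < math.exp(min(0.0, log_post_prop - log_post)):
            theta, log_post = proposal, log_post_prop
        samples.append(theta)
    return samples


data_rng = random.Random(1)
data = [data_rng.gauss(2.0, 1.0) for _ in range(50)]
samples = metropolis(data)
kept = samples[1000:]  # discard an initial burn-in period
posterior_mean = sum(kept) / len(kept)
```

With a weak prior, the posterior mean should sit close to the sample mean of the data. Note that the sampler calls `log_likelihood` once per proposal, which is why the cost of a single likelihood evaluation dominates inference time.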

Bayesian estimation of the full posterior distributions over model parameters contrasts with maximum likelihood estimation (MLE) methods that are often used to extract single best parameter values, without considering their uncertainty or whether other parameter estimates might give similar fits. Bayesian methods naturally extend to settings that assume an implicit hierarchy in the generative model in which parameter estimates at the individual level are informed by the distribution across a group, or even to assess within an individual how trial-by-trial variation in (for example) neural activity can impact parameter estimates (commonly known simply as hierarchical inference). Several toolboxes exist for estimating the parameters of popular models like the drift diffusion model (DDM) of decision-making and are widely used by the community for this purpose (Wiecki et al., 2013; Heathcote et al., 2019; Turner et al., 2015; Ahn et al., 2017). Various studies have used these methods to characterize how variability in neural activity, and manipulations thereof, alters learning and decision parameters that can quantitatively explain variability in choice and response time distributions (Cavanagh et al., 2011; Frank et al., 2015; Herz et al., 2016; Pedersen and Frank, 2020).

Traditionally, however, posterior sampling or MLE for such models required analytical likelihood functions: a closed-form mathematical expression providing the likelihood of observing specific data (reaction times and choices) for a given model and parameter setting. This requirement limits the application of any likelihood-based method to a relatively small subset of cognitive models chosen for convenience rather than theoretical interest. Consequently, model comparison and estimation exercises are constrained, as many important but likelihood-free models are effectively untestable or require weeks to process a single model formulation. Testing any slight adjustment to the generative model (e.g., different hierarchical grouping or splitting conditions) requires a repeated time investment of the same order. For illustration, we focus on the class of sequential sampling models (SSMs) applied to decision-making scenarios, with the most common variants of the DDM. The approach is, however, applicable to any arbitrary domain.

In the standard DDM, a two-alternative choice decision is modeled as a noisy accumulation of evidence toward one of two decision boundaries (Ratcliff and McKoon, 2008). This model is widely used as it can flexibly capture variations in choice, error rates, and response time distributions across a range of cognitive domains and its parameters have both psychological and neural implications. While the likelihood function is available for the standard DDM and some variants including inter-trial variability of its drift parameter, even seemingly small changes to the model form, such as dynamically varying decision bounds (Cisek et al., 2009; Hawkins et al., 2015) or multiple-choice alternatives (Krajbich and Rangel, 2011), are prohibitive for likelihood-based estimation, and instead require expensive Monte Carlo (MC) simulations, often without providing estimates of uncertainty across parameters.
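To make the notion of a simulator concrete, the following is a minimal sketch of a simple DDM simulated with an Euler–Maruyama scheme. The parameter names (`v` for drift, `a` for boundary separation, `w` for relative starting point, `ndt` for non-decision time), the step size, and the handling of timeouts are assumptions made for illustration; this is not the simulator code used in the paper.

```python
import math
import random


def simulate_ddm(v, a, w, ndt, n_trials=1000, dt=0.001, max_t=10.0, seed=0):
    """Simulate (rt, choice) pairs from a simple DDM via Euler-Maruyama.

    v: drift rate, a: boundary separation (bounds at 0 and a),
    w: relative starting point in (0, 1), ndt: non-decision time in seconds.
    Choices are coded +1 (upper bound) and -1 (lower bound).
    """
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    trials = []
    for _ in range(n_trials):
        x, t = w * a, 0.0
        while 0.0 < x < a and t < max_t:
            x += v * dt + sqrt_dt * rng.gauss(0.0, 1.0)  # unit diffusion noise
            t += dt
        # Rare trials that time out at max_t are counted as lower-bound
        # responses in this sketch.
        choice = 1 if x >= a else -1
        trials.append((t + ndt, choice))
    return trials


trials = simulate_ddm(v=1.0, a=1.5, w=0.5, ndt=0.3, n_trials=500)
p_upper = sum(1 for _, c in trials if c == 1) / len(trials)
```

For these settings, the standard first-passage result for a unit-noise diffusion gives an upper-bound choice probability of roughly 0.82, which `p_upper` should approximate up to sampling and discretization error.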

In the last decade and a half, approximate Bayesian computation (ABC) algorithms have grown to prominence (Sisson et al., 2018). These algorithms enable one to sample from posterior distributions over model parameters, where models are defined only as simulators, which can be used to construct empirical likelihood distributions (Sisson et al., 2018). ABC approaches have enjoyed successful application across life and physical sciences (e.g., Akeret et al., 2015), and notably, in cognitive science (Turner and Van Zandt, 2018), enabling researchers to estimate theoretically interesting models that were heretofore intractable. However, while there have been many advances that avoid information loss in the posterior distributions (Turner and Sederberg, 2014; Holmes, 2015), such ABC methods typically require many simulations to generate synthetic or empirical likelihood distributions, and hence can be computationally expensive (in some cases prohibitively so: it can take weeks to estimate parameters for a single model). This issue is further exacerbated when embedded within a sequential MCMC sampling scheme, which is needed for unbiased estimates of posterior distributions. For example, one typically needs to simulate between 10,000 and 100,000 times (the exact number varies depending on the model) for each proposed combination of parameters (i.e., for each sample along a Markov chain, which may itself contain tens of thousands of samples), after which the simulations are discarded. This situation is illustrated in Figure 1, where the red arrows point at the computations involved in the approach suggested by Turner et al., 2015.

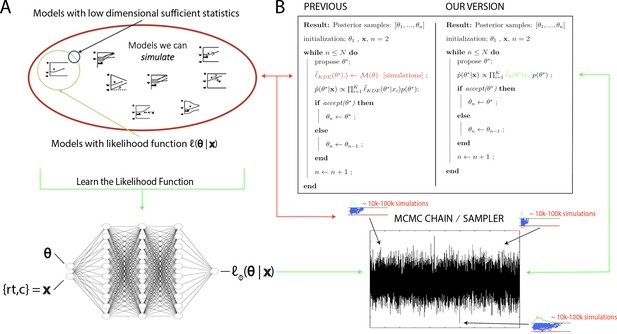

High-level overview of the proposed methods.

(A) The space of theoretically interesting models in the cognitive neurosciences (red) is much larger than the space of mechanistic models with analytical likelihood functions (green). Traditional approximate Bayesian computation (ABC) methods require models that have low-dimensional sufficient statistics (blue). (B) illustrates how likelihood approximation networks can be used in lieu of online simulations for efficient posterior sampling. The left panel shows the predominant 'probability density approximation' (PDA) method used for ABC in the cognitive sciences (Turner et al., 2015). For each step along a Markov chain, 10K–100K simulations are required to obtain a single likelihood estimate. The right panel shows how we can avoid the simulation steps during inference using amortized likelihood networks that have been pretrained using empirical likelihood functions (operationally in this paper: kernel density estimates and discretized histograms).

To address this type of issue, the statistics and machine learning communities have increasingly focused on strategies for the amortization of simulation-based computations (Gutmann et al., 2018; Papamakarios and Murray, 2016; Papamakarios et al., 2019a; Lueckmann et al., 2019; Radev et al., 2020b; Radev et al., 2020a; Gonçalves et al., 2020; Järvenpää et al., 2021). The aim is generally to use model simulations upfront and learn a reusable approximation of the function of interest (targets can be the likelihood, the evidence, or the posterior directly).

In this paper, we develop a general ABC method (and toolbox) that allows users to perform inference on a significant number of neurocognitive models without repeatedly incurring substantial simulation costs. To motivate our particular approach and situate it in the context of other methods, we outline the following key desiderata:

1. The method needs to be easily and rapidly deployable to end users for Bayesian inference on various models hitherto deemed computationally intractable. This desideratum naturally leads us to an amortization approach, where end users can benefit from costs incurred upfront.

2. Our method should be sufficiently flexible to support arbitrary inference scenarios, including hierarchical inference, estimation of latent covariate (e.g., neural) processes on the model parameters, arbitrary specification of parameters across experimental conditions, and without limitations on dataset sizes. This desideratum leads us to amortize the likelihood functions, which (unlike other amortization strategies) can be immediately applied to arbitrary inference scenarios without further cost.

3. We desire approximations that do not a priori sacrifice covariance structure in the parameter posteriors, a limitation often induced for tractability in variational approaches to approximate inference (Blei et al., 2017).

4. End users should have access to a convenient interface that integrates the new methods seamlessly into preexisting workflows. The aim is to allow users to get access to a growing database of amortized models through this toolbox and enable increasingly complex models to be fit to experimental data routinely, with minimal adjustments to the user’s working code. For this purpose, we will provide an extension to the widely used HDDM toolbox (Wiecki et al., 2013; Pedersen and Frank, 2020) for parameter estimation of DDM and RL models.

5. Our approach should exploit modern computing architectures, specifically parallel computation. This leads us to focus on the encapsulation of likelihoods into neural network (NN) architectures, which will allow batch processing for posterior inference.

Guided by these desiderata, we developed two amortization strategies based on NNs and empirical likelihood functions. Rather than simulate during inference, we instead train NNs as parametric function approximators to learn the likelihood function from an initial set of a priori simulations across a wide range of parameters. By learning (log) likelihood functions directly, we avoid posterior distortions that result from inappropriately chosen (user-defined) summary statistics and corresponding distance measures as applied in traditional ABC methods (Sisson et al., 2018). Once trained, likelihood evaluation only requires a forward pass through the NN (as if it were an analytical likelihood) instead of necessitating simulations. Moreover, any algorithm can be used to facilitate posterior sampling, MLE or maximum a posteriori estimation (MAP).

For generality, and because they each have their advantages, we use two classes of architectures, multilayered perceptrons (MLPs) and convolutional neural networks (CNNs), and two different posterior sampling methods (MCMC and importance sampling). We show proofs of concepts using posterior sampling and parameter recovery studies for a range of cognitive process models of interest. The trained NNs provide the community with a (continually expandable) bank of encapsulated likelihood functions that facilitate consideration of a larger (previously computationally inaccessible) set of cognitive models, with orders of magnitude speedup relative to simulation-based methods. This speedup is possible because costly simulations only have to be run once per model upfront and henceforth be avoided during inference: previously executed computations are amortized and then shared with the community.

Moreover, we develop standardized amortization pipelines that allow the user to apply this method to arbitrary models, requiring them to provide only a functioning simulator of their model of choice.

In the 'Approximate Bayesian Computation' section, we situate our approach in the greater context of ABC, with a brief review of online and amortization algorithms. The subsequent sections describe our amortization pipelines, including two distinct strategies, as well as their suggested use cases. The 'Test beds' section provides an overview of the (neuro)cognitive process models that comprise our benchmarks. The 'Results' section shows proof-of-concept parameter recovery studies for the two proposed algorithms, demonstrating that the method accurately recovers both the posterior mean and variance (uncertainty) of generative model parameters, and that it does so at a runtime speed of orders of magnitude faster than traditional ABC approaches without further training. We further demonstrate an application to hierarchical inference, in which our trained networks can be imported into widely used toolboxes for arbitrary inference scenarios. In the 'Discussion' and the last section, we further situate our work in the context of other ABC amortization strategies and discuss the limitations and future work.

Approximate Bayesian computation

ABC methods apply when one has access to a parametric stochastic simulator (also referred to as generative model), but, unlike the usual setting for statistical inference, no access to an explicit mathematical formula for the likelihood of observations given the simulator’s parameter setting.

While likelihood functions for a stochastic simulator can in principle be mathematically derived, this can be exceedingly challenging even for some historically famous models such as the Ornstein–Uhlenbeck (OU) process (Lipton and Kaushansky, 2018) and may be intractable in many others. Consequently, the statistical community has increasingly developed ABC tools that enable posterior inference of such ‘likelihood-free’ stochastic models while completely bypassing any likelihood derivations (Cranmer et al., 2020).

Given a parametric stochastic simulator model $\mathcal{M}$ and dataset $\mathbf{x}$, instead of exact inference based on $p(\theta | \mathbf{x})$, these methods attempt to draw samples from an approximate posterior $\tilde{p}(\theta | \mathbf{x})$. Consider the following general equation:

$$\tilde{p}(\theta | \mathbf{x}) \propto \int K_h(\lVert s - s_{obs} \rVert)\, p(s | \theta)\, p(\theta)\, ds,$$

where $s$ refers to sufficient statistics (roughly, summary statistics of a dataset that retain sufficient information about the parameters of the generative process). $K_h$ refers to a kernel-based distance measure/cost function, which evaluates a probability density function for a given distance between the observed and expected summary statistics $\lVert s - s_{obs} \rVert$. The parameter $h$ (commonly known as bandwidth parameter) modulates the cost gradient. Higher values of $h$ lead to more graceful decreases in cost (and therefore a worse approximation of the true posterior).

By generating simulations, one can use such summary statistics to obtain approximate likelihood functions, denoted as $\tilde{p}(\mathbf{x} | \theta)$, where approximation error can be mitigated by generating large numbers of simulations. The caveat is that the amount of simulation runs needed to achieve a desired degree of accuracy in the posterior can render such techniques computationally infeasible.
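The kernel-weighted scheme described above can be sketched as a simple importance-weighted ABC estimator. The following is an illustrative toy, assuming a Gaussian simulator, the sample mean as summary statistic, a Gaussian kernel with bandwidth `h`, and a uniform prior; none of these choices are taken from the paper.

```python
import math
import random


def simulate(theta, n, rng):
    """Toy stochastic simulator: n draws from N(theta, 1)."""
    return [rng.gauss(theta, 1.0) for _ in range(n)]


def summary(data):
    """Summary statistic; the sample mean is sufficient for this toy model."""
    return sum(data) / len(data)


def gaussian_kernel(distance, h):
    """Kernel density at a given distance; larger h tolerates larger mismatches."""
    return math.exp(-0.5 * (distance / h) ** 2) / (h * math.sqrt(2 * math.pi))


def kernel_abc_mean(observed, h=0.1, n_draws=5000, seed=0):
    """Approximate posterior mean: prior draws weighted by the kernel."""
    rng = random.Random(seed)
    s_obs = summary(observed)
    weighted_sum, total_weight = 0.0, 0.0
    for _ in range(n_draws):
        theta = rng.uniform(-5.0, 5.0)  # draw from the (assumed) uniform prior
        s = summary(simulate(theta, len(observed), rng))  # fresh simulation per draw
        weight = gaussian_kernel(abs(s - s_obs), h)
        weighted_sum += theta * weight
        total_weight += weight
    return weighted_sum / total_weight


obs_rng = random.Random(42)
observed = simulate(1.5, 30, obs_rng)
abc_posterior_mean = kernel_abc_mean(observed)
```

Every prior draw requires a new simulated dataset, and most draws receive negligible weight. This is the simulation burden that grows quickly with the number of parameters and the desired accuracy.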

With a focus on amortization, our goal is to leverage some of the insights and developments in the ABC community to develop NN architectures that can learn approximate likelihoods deployable for any inference scenario (and indeed any inference method, including MCMC, variational inference, or even MLE) without necessitating repeated training. We next describe our particular approach and return to a more detailed comparison to existing methods in the 'Discussion' section.

Learning the likelihood with simple NN architectures

In this section, we outline our approach to amortization of computational costs of large numbers of simulations required by traditional ABC methods. Amortization approaches incur a one-off (potentially quite large) simulation cost to enable cheap, repeated inference for any dataset. Recent research has led to substantial developments in this area (Cranmer et al., 2020). The most straightforward approach is to simply simulate large amounts of data and compile a database of how model parameters are related to observed summary statistics of the data (Mestdagh et al., 2019). This database can then be used during parameter estimation in empirical datasets using a combination of nearest-neighbor search and local interpolation methods. However, this approach suffers from the curse of dimensionality with respect to storage demands (a problem that is magnified with increasing model parameters). Moreover, its reliance on summary statistics (Sisson et al., 2018) does not naturally facilitate flexible reuse across inference scenarios (e.g., hierarchical models, multiple conditions while fixing some parameters across conditions, etc.).

To fulfill all desiderata outlined in the introduction, we focus on directly encapsulating the likelihood function over empirical observations of a simulation model so that likelihood evaluation is substantially cheaper than constructing (empirical) likelihoods via model simulation online during inference. Such empirical observations could be, for example, trial-wise choices and reaction times (RTs). This strategy then allows for flexible reuse of such approximate likelihood functions in a large variety of inference scenarios applicable to common experimental design paradigms. Specifically, we encapsulate as a feed-forward NN, which allows for parallel evaluation by design. We refer to these networks as likelihood approximation networks (LANs).

Figure 1 spells out the setting (A) and usage (B) of such a method. The LAN architectures used in this paper are simple, small in size, and are made available for download for local usage. While this approach does not allow for instantaneous posterior inference, it does considerably reduce computation time (by up to three orders of magnitude; see ‘Run time’ section) when compared to approaches that demand simulations at inference. Notably, this approach also substantially speeds up inference even for models that are not entirely likelihood free but nevertheless require costly numerical methods to obtain likelihoods. Examples include the full-DDM with inter-trial variability in parameters (for which likelihoods can be obtained via numerical integration, as is commonly done in software packages such as HDDM, but at substantial cost), as well as generalized DDMs that rely on other numerical methods (Shinn et al., 2020; Drugowitsch, 2016). At the same time, we maintain the high degree of flexibility with regard to deployment across arbitrary inference scenarios. As such, LANs can be treated as a highly flexible plug-in to existing inference algorithms and remain conceptually simple and lightweight.

Before elaborating our LAN approach, we briefly situate it in the context of some related work.

One branch of literature that interfaces ABC with deep learning attempts to amortize posterior inference directly in end-to-end NNs (Radev et al., 2020b; Radev et al., 2020a; Papamakarios and Murray, 2016; Papamakarios et al., 2019a; Gonçalves et al., 2020). Here, NN architectures are trained with a large number of simulated datasets to produce posterior distributions over parameters, and once trained, such networks can be applied to directly estimate parameters from new datasets without the need for further simulations. However, the goal to directly estimate posterior parameters from data requires the user to first train a NN for the very specific inference scenario in which it is applied empirically. Such approaches are not easily deployable if a user wants to test multiple inference scenarios (e.g., parameters may vary as a function of task condition or brain activity, or in hierarchical frameworks), and consequently, they do not achieve our second desideratum needed for a user-friendly toolbox, making inference cheap and simple.

We return to discuss the relative merits and limitations of these and further alternative approaches in the 'Discussion' section.

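Formally, we use model simulations to learn a function $f_\Phi(\mathbf{x}, \theta)$, where $f_\Phi(\cdot)$ is the output of a NN with parameter vector $\Phi$ (weights, biases). The function $f_\Phi(\mathbf{x}, \theta)$ is used as an approximation $\hat{p}(\mathbf{x} | \theta)$ of the likelihood function $p(\mathbf{x} | \theta)$. Once learned, we can use such $f_\Phi$ as a plug-in to Bayes' rule:

$$\hat{p}(\theta | \mathbf{x}) \propto \prod_{i=1}^{N} f_\Phi(\mathbf{x}_i, \theta)\, p(\theta).$$

To perform posterior inference, we can now use a broad range of existing MC or MCMC algorithms. We note that, fulfilling our second desideratum, an extension to hierarchical inference is as simple as plugging in our NN into a probabilistic model of the form

$$p(\theta_1, \ldots, \theta_n, \alpha, \beta | \mathbf{x}_1, \ldots, \mathbf{x}_n) \propto \prod_{i=1}^{n} \left[ p(\theta_i | \alpha, \beta) \prod_{j=1}^{N_i} f_\Phi(\mathbf{x}_i^j, \theta_i) \right] p(\alpha, \beta | \gamma),$$

where $\mathbf{x}_i^j$ denotes the $j$th of $N_i$ datapoints in the $i$th dataset, α, β are generic group-level global parameters, and γ serves as a generic fixed hyperparameter vector.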
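A minimal sketch of this plug-in idea, written in log space: any function that returns a per-datapoint log-likelihood can be dropped into a single-level or a hierarchical log posterior without modification. Here `stand_in_lan` is a hypothetical placeholder (a unit-variance Gaussian) for a trained network, and the group-level distribution and flat priors are arbitrary illustrative choices.

```python
import math


def normal_logpdf(x, mean, sd):
    """Log density of N(mean, sd^2) at x."""
    return -0.5 * ((x - mean) / sd) ** 2 - math.log(sd) - 0.5 * math.log(2 * math.pi)


def stand_in_lan(x, theta):
    """Placeholder for a trained network's log-likelihood output (here N(theta, 1))."""
    return normal_logpdf(x, theta, 1.0)


def flat(*args):
    """Improper flat (hyper)prior in log space, for illustration only."""
    return 0.0


def log_posterior(theta, data, log_lik_fn, log_prior_fn):
    """Unnormalized log posterior: per-datapoint log-likelihoods plus log prior."""
    return sum(log_lik_fn(x, theta) for x in data) + log_prior_fn(theta)


def hierarchical_log_posterior(thetas, alpha, beta, datasets,
                               log_lik_fn, log_group_fn, log_hyper_fn):
    """The same likelihood function is reused for every dataset in the hierarchy."""
    total = log_hyper_fn(alpha, beta)
    for theta_i, data_i in zip(thetas, datasets):
        total += log_group_fn(theta_i, alpha, beta)  # group-level term for theta_i
        total += sum(log_lik_fn(x, theta_i) for x in data_i)
    return total


single = log_posterior(0.0, [0.0, 1.0], stand_in_lan, flat)
hier = hierarchical_log_posterior(
    thetas=[0.0, 1.0], alpha=0.5, beta=1.0, datasets=[[0.0], [1.0]],
    log_lik_fn=stand_in_lan, log_group_fn=normal_logpdf, log_hyper_fn=flat,
)
```

The design point is that `log_lik_fn` is the only model-specific component. Replacing the placeholder with a trained network would leave both posterior functions unchanged.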

We provide proofs of concepts for two types of LANs. While we use MLPs and convolutional neural networks (CNNs), of conceptual importance is the distinction between the two problem representations they tackle, rather than the network architectures per se.

The first problem representation, which we call the pointwise approach, considers the function $f_\Phi(\mathbf{x}, \theta)$, where θ is the parameter vector of a given stochastic simulator model, and $\mathbf{x}$ is a single datapoint (trial outcome). The pointwise approach is a mapping from the input dimension $|\Theta| + |\mathbf{x}|$, where $|\cdot|$ refers to the cardinality, to the one-dimensional output. The output is simply the log-likelihood of the single datapoint given the parameter vector θ. As explained in the next section, for this mapping we chose simple MLPs.

The second problem representation, which we will refer to as the histogram approach, instead aims to learn a function $f_\Phi(\cdot | \theta)$, which maps a parameter vector θ to the likelihood over the full (discretized) dataspace (i.e., the likelihood of the entire RT distributions at once). We represent the output space as an outcome histogram with dimensions $n \times m$ (where in our applications $n$ is the number of bins for a discretization of reaction times, and $m$ refers to the number of distinct choices). Thus, our mapping has input dimension $|\Theta|$ and output dimension $n \times m$. Representing the problem this way, we chose CNNs as the network architecture.
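The difference between the two representations can be sketched by how a dataset log-likelihood would be assembled from each. The two "networks" below are hypothetical uniform stand-ins that ignore θ, and the bin count, maximum RT, and choice coding are assumptions made only for this illustration.

```python
import math


def pointwise_dataset_loglik(log_lik_fn, theta, data):
    """Pointwise representation: one network call per (theta, datapoint) pair."""
    return sum(log_lik_fn(theta, rt, choice) for rt, choice in data)


def histogram_dataset_loglik(hist_fn, theta, data, n_bins, max_rt, choices):
    """Histogram representation: one network call returns all n x m cell probabilities."""
    probs = hist_fn(theta)  # nested list of shape [n_bins][len(choices)]
    bin_width = max_rt / n_bins
    total = 0.0
    for rt, choice in data:
        row = min(int(rt / bin_width), n_bins - 1)  # RTs beyond max_rt go in the last bin
        col = choices.index(choice)
        total += math.log(probs[row][col])
    return total


# Hypothetical stand-ins for trained networks; both are uniform and ignore theta.
N_BINS, MAX_RT, CHOICES = 4, 2.0, [-1, 1]


def toy_pointwise_net(theta, rt, choice):
    return math.log(1.0 / (MAX_RT * len(CHOICES)))  # log-density over (rt, choice)


def toy_histogram_net(theta):
    cell = 1.0 / (N_BINS * len(CHOICES))  # probability mass per histogram cell
    return [[cell] * len(CHOICES) for _ in range(N_BINS)]


data = [(0.4, 1), (0.9, -1), (1.7, 1)]
theta = [1.0, 1.5, 0.5, 0.3]
ll_pointwise = pointwise_dataset_loglik(toy_pointwise_net, theta, data)
ll_histogram = histogram_dataset_loglik(
    toy_histogram_net, theta, data, N_BINS, MAX_RT, CHOICES
)
```

In this toy, the two values differ by exactly the log bin width per datapoint, because the pointwise output is a density whereas each histogram cell holds a probability mass.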

In each of the above cases, we pursued the architectures that seemed to follow naturally, without any commitment to their optimality. The pointwise approach operates on low-dimensional inputs and outputs. With network evaluation speed being of primary importance to us, we chose a relatively shallow MLP to learn this mapping, given that it was expressive enough. However, when learning a mapping from a parameter vector θ to the likelihood over the full dataspace, as in the histogram approach, we map a low-dimensional data manifold to a much higher-dimensional one. Using an MLP for this purpose would imply that the number of NN parameters needed to learn this function would be orders of magnitude larger than using a CNN. This would not only make forward passes through the network slower but also increase the propensity to overfit on an identical data budget.

The next two sections will give some detail regarding the networks chosen for the pointwise and histogram LANs. Figure 1 illustrates the general idea of our approach while Figure 2 gives a conceptual overview of the exact training and inference procedure proposed. These details are thoroughly discussed in the last section of the paper.

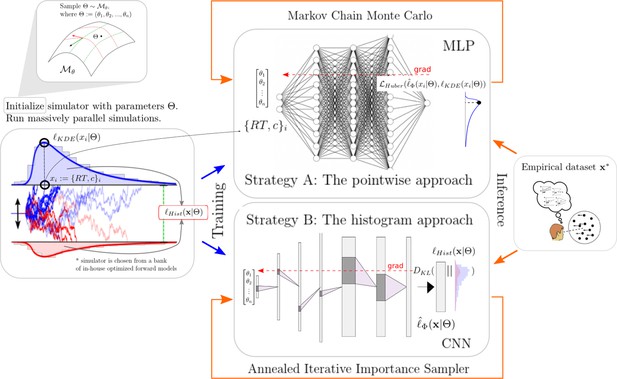

High-level overview of our approaches.

For a given model $\mathcal{M}$, we sample model parameters θ from a region of interest (left 1) and run 100k simulations (left 2). We use those simulations to construct a kernel density estimate-based empirical likelihood, and a discretized (histogram-like) empirical likelihood. The combination of parameters and the respective likelihoods is then used to train the likelihood networks (right 1). Once trained, we can use the multilayered perceptron and convolutional neural network for posterior inference given an empirical/experimental dataset (right 2).

Pointwise approach: learn likelihoods of individual observations with MLPs

As a first approach, we use simple MLPs to learn the likelihood function of a given stochastic simulator. The network learns the mapping $\{\theta, \mathbf{x}\} \mapsto \log \ell(\theta | \mathbf{x})$, where θ represents the parameters of our stochastic simulator, $\mathbf{x}$ are the simulator outcomes (in our specific examples below, $\mathbf{x}$ refers to the tuple $(rt, c)$ of reaction times and choices), and $\log \ell(\theta | \mathbf{x})$ is the log-likelihood of the respective outcome. The trained network then serves as the approximate likelihood function $\log \hat{\ell}(\theta | \mathbf{x})$. We emphasize that the network is trained as a function approximator to provide us with a computationally cheap likelihood estimate, not to provide a surrogate simulator. Biological plausibility of the network is therefore not a concern when it comes to architecture selection. Fundamentally, this approach attempts to learn the log-likelihood via a nonlinear regression, for which we chose an MLP. Log-likelihood labels for training were derived from empirical likelihood functions (details are given in the 'Materials and methods' section), which in turn were constructed as kernel density estimates (KDEs). The construction of KDEs roughly followed Turner et al., 2015. We chose the Huber loss function (details are given in the 'Materials and methods' section) because it is more robust to outliers and thus less susceptible to distortions that can arise in the tails of distributions.
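The two ingredients of the pointwise training target can be sketched as follows: a KDE that turns simulated outcomes into an empirical log-likelihood label, and a Huber loss that compares a network prediction with that label. This is a simplified illustration with a plain Gaussian kernel on a one-dimensional outcome; the bandwidth, the density floor, and the `delta` threshold are assumptions, and the paper's actual KDE construction is described in its 'Materials and methods' section.

```python
import math


def kde_log_density(x, samples, bandwidth):
    """Gaussian KDE log-density at x, usable as an empirical log-likelihood label."""
    norm = len(samples) * bandwidth * math.sqrt(2 * math.pi)
    density = sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples) / norm
    return math.log(max(density, 1e-29))  # arbitrary floor avoids log(0) in the tails


def huber_loss(prediction, target, delta=1.0):
    """Quadratic for small residuals, linear for large ones."""
    r = abs(prediction - target)
    return 0.5 * r * r if r <= delta else delta * (r - 0.5 * delta)


simulated_rts = [0.52, 0.61, 0.58, 0.75, 0.66, 0.49, 0.90, 0.71]
label = kde_log_density(0.6, simulated_rts, bandwidth=0.05)
tail_label = kde_log_density(5.0, simulated_rts, bandwidth=0.05)

small_residual = huber_loss(label + 0.5, label)   # in the quadratic regime
large_residual = huber_loss(label + 3.0, label)   # in the linear regime
```

Labels far in the tails (such as `tail_label`) can be extreme and noisy. Under a squared-error loss a residual of 3 would cost 4.5, whereas the Huber loss with `delta=1` charges 2.5, which limits the influence of such points on training.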

Histogram approach: learn likelihoods of entire dataset distributions with CNNs

Our second approach is based on a CNN architecture. Whereas the MLP learned to output a single scalar likelihood output for each datapoint (‘trial’, given a choice, reaction time, and parameter vector), the goal of the CNN was to evaluate, for the given model parameters, the likelihood of an arbitrary number of datapoints via one forward pass through the network. To do so, the output of the CNN was trained to produce a probability distribution over a discretized version of the dataspace, given a stochastic model and parameter vector. The network learns the mapping $\{\theta\} \mapsto \hat{\ell}(\theta | \cdot)$, evaluated over all cells of the discretized dataspace.

As a side benefit, in line with the methods proposed by Lueckmann et al., 2019; Papamakarios et al., 2019b, the CNN can in fact act as a surrogate simulator; for the purpose of this paper, however, we do not exploit this possibility. Since here we attempt to learn distributions, labels were simple binned empirical likelihoods, and as a loss function we chose the Kullback–Leibler (KL) divergence between the network's output distribution and the label distribution (details are given in the 'Materials and methods' section).
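A minimal sketch of such a distributional loss, assuming the network emits one logit per histogram cell that is normalized with a softmax, and assuming the direction KL(label || output). Both assumptions are made for illustration; the exact formulation used in the paper is given in its 'Materials and methods' section.

```python
import math


def softmax(logits):
    """Normalize raw network outputs into a probability distribution."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(label, output, eps=1e-12):
    """KL(label || output) over flattened histogram cells; 0 * log 0 is treated as 0."""
    return sum(p * math.log(p / max(q, eps)) for p, q in zip(label, output) if p > 0)


# Flattened toy histogram with four cells (e.g., 2 RT bins x 2 choices).
label_hist = [0.1, 0.4, 0.3, 0.2]
network_out = softmax([0.0, 1.0, 0.5, 0.2])
loss = kl_divergence(label_hist, network_out)
```

The loss is zero only when the output distribution matches the binned empirical likelihood in every cell with non-zero label mass.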

Training specifics

Most of the specifics regarding training procedures are discussed in detail in the 'Materials and methods' section; however, we mention some aspects here to aid readability.

We used 1.5 M and 3 M parameter vectors (based on 100k simulations each) to train the MLP and CNN approach, respectively. We chose these numbers consistently across all models and in fact trained on fewer examples for the MLP only due to RAM limitations we faced on our machines (which in principle can be circumvented). These numbers are purposely high (but in fact quite achievable with access to a computing cluster; simulations for each model took on the order of hours only) since we were interested in a workable proof of concept. We did not investigate the systematic minimization of training data; however, some crude experiments indicate that a decrease by an order of magnitude did not seriously affect performance.

We emphasize that this is in line with the expressed philosophy of our approach. The point of amortization is to throw a lot of resources at the problem once so that downstream inference is made accessible even on basic setups (a usual laptop). In case simulators are prohibitively expensive even for reasonably sized computer clusters, minimizing training data may gain more relevance. In such scenarios, training the networks will be very cheap compared to simulation time, which implies that retraining with progressively more simulations until one observes asymptotic test performance is a viable strategy.

Test beds

We chose variations of SSMs common in the cognitive neurosciences as our test bed (Figure 3). The range of models we consider permits great flexibility in allowable data distributions (choices and response times). We believe that initial applications are most promising for such SSMs because (1) analytical likelihoods are available for the most common variants (and thus provide an upper-bound benchmark for parameter recovery) and (2) there exist many other interesting variants for which no analytic solution exists.

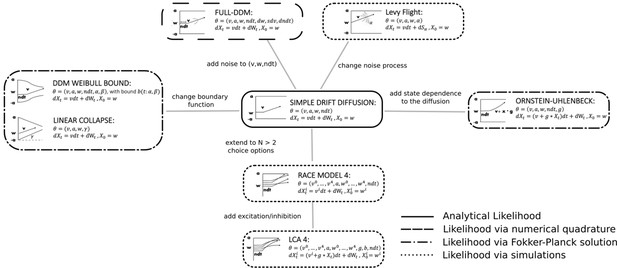

Pictorial representation of the stochastic simulators that form our test bed.

Our point of departure is the standard simple drift diffusion model (DDM) due to its analytical tractability and its prevalence as the most common sequential sampling model (SSM) in cognitive neuroscience. By systematically varying different facets of the DDM, we test our likelihood approximation networks (LANs) across a range of SSMs for parameter recovery, goodness of fit (posterior predictive checks), and inference runtime. We divide the resulting models into four classes as indicated by the legend. We consider the simple DDM in the analytical likelihood (solid line) category. Strictly speaking, its likelihood involves an infinite sum and thus demands an approximation algorithm, introduced by Navarro and Fuss, but this algorithm is fast enough that it is not a computational bottleneck. The full-DDM needs numerical quadrature (dashed line) to integrate over variability parameters, which inflates the evaluation time by 1–2 orders of magnitude compared to the simple DDM. Similarly, likelihood approximations have been derived for a range of models using the Fokker–Planck equations (dotted-dashed line), which again incurs non-negligible evaluation cost. Finally, for some models no approximations exist and we need to resort to computationally expensive simulations for likelihood estimates (dotted line). Amortizing computations with LANs can substantially speed up inference for all but the analytical likelihood category (but see the 'Runtime' section for how it can provide a speedup even in that case for large datasets).

We note that there is an intermediate case in which numerical methods can be applied to obtain likelihoods for a broader class of models (e.g., Shinn et al., 2020). These methods are nevertheless computationally expensive and do not necessarily afford rapid posterior inference. Therefore, amortization via LANs is attractive even for these models. Figure 3 further outlines this distinction.

We emphasize that our methods are quite general, and any model that generates discrete choices and response times from which simulations can be drawn within a reasonable amount of time can be suitable for the amortization techniques discussed in this paper (given that the model has parameter vectors of dimension roughly less than 15). In fact, LANs are not restricted to models of reaction time and choice to begin with, even though we focus on these as test beds.

As a general principle, all models tested below are based on stochastic differential equations (SDEs) of the following form:

$$dX_{\tau+t} = a(t, x)\,dt + b(t, x)\,dB_t, \qquad X_\tau = w,$$

where we are concerned with the probabilistic behavior of the particle (or vector of particles) $X$. The behavior of this particle is driven by $a(t, x)$, an underlying drift function, $b(t, x)$, an underlying noise transformation function, $B_t$, an incremental noise process, and $X_\tau = w$, a starting point.

Of interest to us are specifically the properties of the first-passage-time distributions (FPTDs) for such processes, which are needed to compute the likelihood of a given response time/choice pair $\{r, c\}$. In these models, the exit region of the particle (i.e., the specific boundary it reaches) determines the choice, and the time point of that exit determines the response time. The joint distribution of choices and reaction times is referred to as an FPTD.

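Given some exit region $\mathcal{E}$, such FPTDs are formally defined as

$$f_{\mathcal{E}}(t) = p\left(\inf\{s : X_s \in \mathcal{E}\} = t\right).$$

In words, a first passage time, for a given exit region, is defined as the first time point of entry into the exit region, and the FPTD is the probability of such an exit happening at any specified time (Ratcliff, 1978; Feller, 1968). Partitioning the exit region into subsets $\mathcal{E}_1, \ldots, \mathcal{E}_n$ (e.g., representing $n$ choices), we can now define the set of defective distributions

$$\{f_{\mathcal{E}_1}(t; \theta), \ldots, f_{\mathcal{E}_n}(t; \theta)\},$$

where $\theta \in \Theta$ describes the collection of parameters driving the process. For every $\mathcal{E}_i$,

$$\int_{[0, +\infty)} f_{\mathcal{E}_i}(t; \theta)\,dt = P(X \text{ exits into } \mathcal{E}_i) = P(i \text{ gets chosen}).$$

The $f_{\mathcal{E}_i}$ jointly define the FPTD such that

$$\sum_{i=1}^{n} \int_{[0, +\infty)} f_{\mathcal{E}_i}(t; \theta)\,dt = 1.$$

These functions $f_{\mathcal{E}_i}$ jointly serve as the likelihood function s.t.

$$\ell(\theta; \{r, c\}) = f_{\mathcal{E}_c}(r; \theta).$$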

For illustration, we specialize the general model formulation above to the standard DDM. Details regarding the other models in our test bed are relegated to the 'Materials and methods' section.

To obtain the DDM from the general equation above, we set $a(t, x) = v$ (a fixed drift across time), $b(t, x) = 1$ (a fixed noise variance across time), and $\Delta B \sim \mathcal{N}(0, \Delta t)$. The DDM applies to the two-alternative decision case, where each decision corresponds to the particle crossing an upper or a lower fixed boundary. Hence, $\mathcal{E}_1 = \{a\}$ and $\mathcal{E}_2 = \{-a\}$, where $a$ is a parameter of the model. The DDM also includes a normalized starting point $w$ (capturing potential response biases or priors), and finally a nondecision time τ (capturing the time for perceptual encoding and motor output). Hence, the parameter vector for the DDM is then $\theta = (v, a, w, \tau)$. The SDE is defined as

$$dX_{\tau+t} = v\,dt + dB_t, \qquad X_\tau = w.$$

The DDM serves principally as a basic proof of concept for us, in that it is a model for which we can compute the exact likelihoods analytically (Feller, 1968; Navarro and Fuss, 2009).
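
As an illustration of what a stochastic simulator for this model can look like, here is a minimal Euler–Maruyama sketch in plain Python. It is not the optimized simulator used for the experiments. The parameter names (`v`, `a`, `w`, `ndt`), the symmetric bounds at ±a, the mapping of the normalized starting point to `a * (2w - 1)`, and the step size are assumptions of this sketch.

```python
import math
import random


def simulate_ddm(v, a, w, ndt, dt=1e-3, max_t=20.0, rng=random):
    """Simulate one DDM trial and return (rt, choice), choice in {-1, 1}.

    Assumes bounds at -a and +a, unit noise, and a start at a * (2w - 1),
    so that w = 0.5 is unbiased. These conventions are assumptions.
    """
    x = a * (2.0 * w - 1.0)
    t = 0.0
    sqrt_dt = math.sqrt(dt)
    while abs(x) < a and t < max_t:
        # Euler-Maruyama step: drift * dt plus Gaussian noise with variance dt.
        x += v * dt + sqrt_dt * rng.gauss(0.0, 1.0)
        t += dt
    # On a boundary crossing the sign of x identifies the boundary; trials
    # truncated at max_t are assigned to the nearer boundary by the same rule.
    choice = 1 if x > 0 else -1
    return ndt + t, choice


# Simulate a small dataset with a strong positive drift.
rng = random.Random(1)
trials = [simulate_ddm(v=2.0, a=1.0, w=0.5, ndt=0.3, rng=rng) for _ in range(200)]
upper = sum(1 for _, c in trials if c == 1) / len(trials)
print(f"proportion of upper-boundary choices: {upper:.2f}")
```

Repeating such simulations many times per parameter vector yields the empirical choice/RT distributions from which training labels can be built.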

The other models chosen for our test bed systematically relax some of the fixed assumptions of the basic DDM, as illustrated in Figure 3.

We note that many new models can be constructed from the components tested here. As an example of this modularity, we introduce inhibition/excitation to the race model, which gives us the leaky competing accumulator (LCA) (Usher and McClelland, 2001). We could then further extend this model by introducing parameterized bounds. We could introduce reinforcement learning parameters to a DDM (Pedersen and Frank, 2020) or in combination with any of the other decision models. Again we emphasize that while these diffusion-based models provide a large test bed for our proposed methods, applications are in no way restricted to this class of models.

Results

Networks learn likelihood function manifolds

Across epochs of training, both training and validation loss decrease rapidly and remain low (Figure 4A) suggesting that overfitting is not an issue, which is sensible in this context. The low validation loss further shows that the network can interpolate likelihoods to specific parameter values it has not been exposed to (with the caveat that it has to be exposed to the same range; no claims are made about extrapolation).

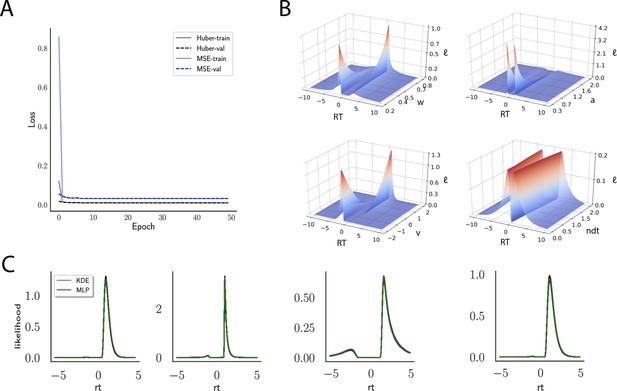

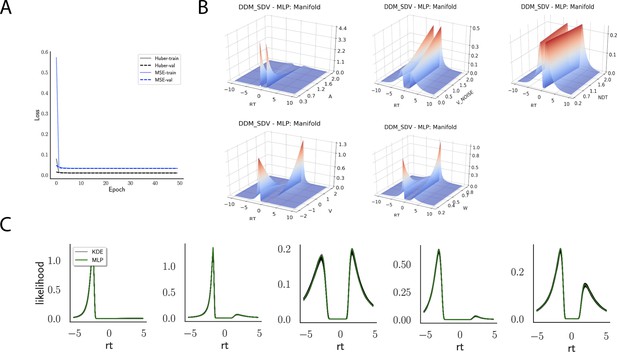

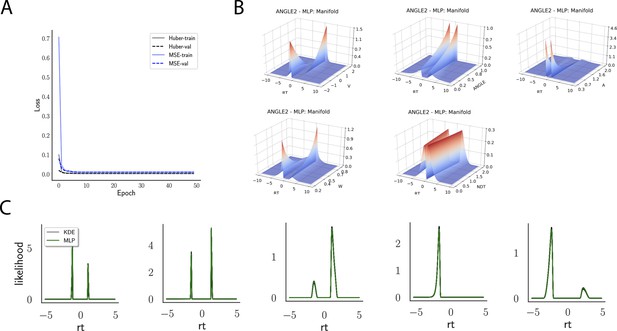

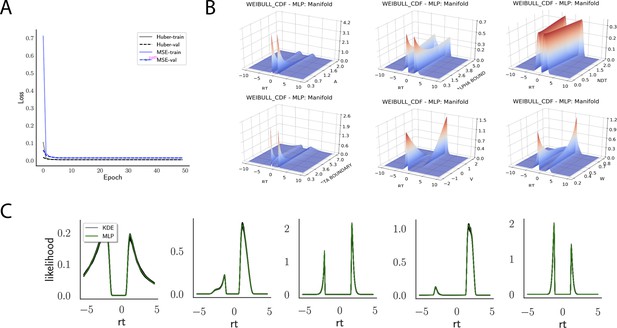

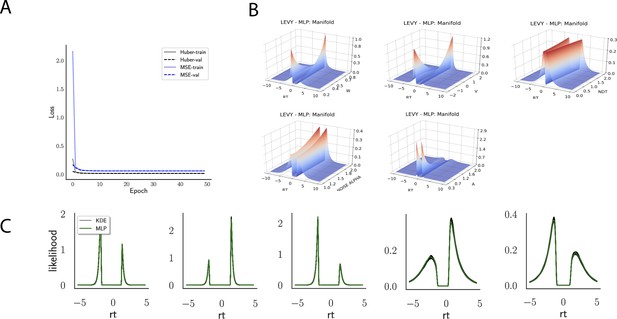

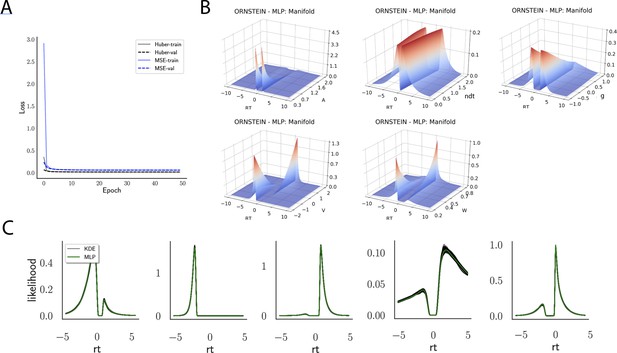

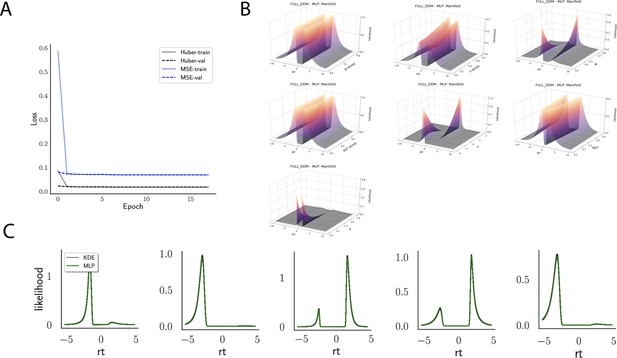

Likelihoods and manifolds: DDM.

(A) shows the training and validation loss for the multilayered perceptron (MLP) for the drift diffusion model across epochs. Training was driven by the Huber loss. The MLP learned the mapping $\{\theta, r, c\} \mapsto \log \ell(\theta; \{r, c\})$, that is, the log-likelihood of a single choice/RT datapoint given the parameters. Training error declines rapidly, and validation loss trails training loss without further detriment (no overfitting). Please see Figure 2 and the 'Materials and methods' section for more details about training procedures. (B) illustrates the marginal likelihood manifolds for choices and RTs by varying one parameter in the trained region. Reaction times are mirrored for choice options −1 and 1, respectively, to aid visualization. (C) shows MLP likelihoods in green for four random parameter vectors, overlaid on top of a sample of 100 kernel density estimate (KDE)-based empirical likelihoods derived from 100k samples each. The MLP mirrors the KDE likelihoods despite not having been explicitly trained on these parameters. Moreover, the MLP likelihood sits firmly at the mean of the sample of 100 KDEs. Negative and positive reaction times are to be interpreted as for (B).

Indeed, a simple interrogation of the learned likelihood manifolds shows that they smoothly vary in an interpretable fashion with respect to changes in generative model parameters (Figure 4B). Moreover, Figure 4C shows that the MLP likelihoods mirror those obtained by KDEs using 100,000 simulations, even though the model parameter vectors were drawn randomly and thus not trained per se. We also note that the MLP likelihoods appropriately filter out simulation noise (random fluctuations in the KDE empirical likelihoods across separate simulation runs of 100k samples each). This observation can also be gleaned from Figure 4C, which shows the learned likelihood to sit right at the center of the sampled KDEs (note that for each subplot 100 such KDEs were used). One perspective on this is to consider the MLP likelihoods as equivalent to KDE likelihoods derived from a much larger number of underlying samples and interpolated. The results for the CNN (not shown to avoid redundancy) mirror the MLP results. Finally, while Figure 4 depicts the learned likelihood for the simple DDM for illustration purposes, the same conclusions apply to the learned manifolds for all of the tested models (as shown in Appendix 1—figures 1–6). Indeed, inspecting those manifolds helps in interpreting the dynamics of the underlying models, how they differ from each other, and the corresponding RT distributions that can be captured.
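
For readers unfamiliar with KDE-based empirical likelihoods, the sketch below shows one simple way to evaluate such a likelihood for a single choice/RT datapoint from a set of simulations. It is illustrative only: the fixed bandwidth, the Gaussian kernel applied to raw RTs, and the density floor are assumptions and need not match the procedure described in the 'Materials and methods' section.

```python
import math


def kde_loglik(rt, choice, sim_rts, sim_choices, bandwidth=0.05):
    """Gaussian-KDE estimate of the log-likelihood of one (rt, choice) pair.

    The density for each choice is 'defective': it integrates to the
    simulated proportion of that choice rather than to 1.
    """
    n = len(sim_rts)
    norm = 1.0 / (n * bandwidth * math.sqrt(2.0 * math.pi))
    dens = 0.0
    for s_rt, s_c in zip(sim_rts, sim_choices):
        if s_c == choice:
            z = (rt - s_rt) / bandwidth
            dens += math.exp(-0.5 * z * z)
    # Floor the density (arbitrary value) so that regions without
    # simulated data still give a finite log-likelihood.
    return math.log(max(dens * norm, 1e-29))


# Toy simulations: half of the trials end at each boundary, all at RT = 1.0.
sim_rts = [1.0] * 10
sim_choices = [1] * 5 + [-1] * 5
print(kde_loglik(1.0, 1, sim_rts, sim_choices))   # high density at the simulated RT
print(kde_loglik(3.0, 1, sim_rts, sim_choices))   # floored far away from any simulation
```

Because each call depends on one finite set of simulations, repeated runs give slightly different values; the network trained on many such targets effectively averages over this noise.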

Parameter recovery

Benchmark: analytical likelihood available

While the above inspection of the learned manifolds is promising, a true test of the method is to determine whether one can perform proper inference of generative model parameters using the MLP and CNN. Such parameter recovery exercises are typically performed to determine whether a given model is identifiable for a given experimental setting (e.g., number of trials, conditions, etc.). Indeed, when parameters are collinear, recovery can be imperfect even if the estimation method itself is flawless (Wilson and Collins, 2019; Nilsson et al., 2011; Daw, 2011a). A Bayesian estimation method, however, should properly assign uncertainty to parameter estimates in these circumstances, and hence it is also important to evaluate the posterior variances over model parameters.

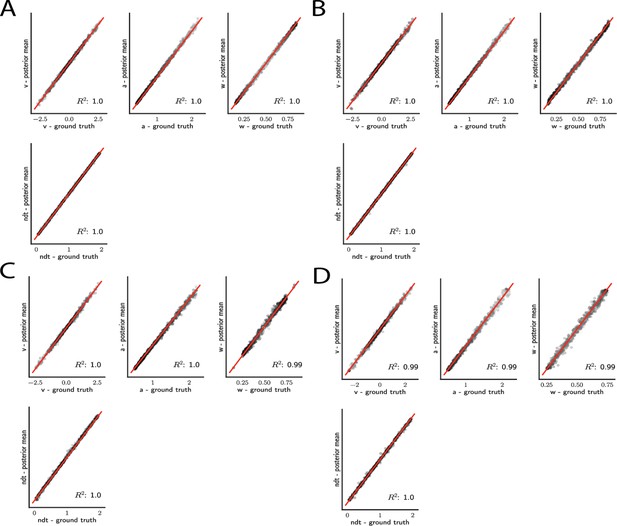

Thus as a benchmark, we first consider the basic DDM for which an arbitrarily close approximation to the analytical likelihood is available (Navarro and Fuss, 2009). This benchmark allows us to compare parameter recovery given (1) the analytical likelihood, (2) an approximation to the likelihood specified by training an MLP on the analytical likelihood (thus evaluating the potential loss of information incurred by the MLP itself), (3) an approximation to the likelihood specified by training an MLP on KDE-based empirical likelihoods (thus evaluating any further loss incurred by the KDE reconstruction of likelihoods), and (4) an approximate likelihood resulting from training the CNN architecture, on empirical histograms. Figure 5 shows the results for the DDM.

Simple drift diffusion model parameter recovery results for (A) analytical likelihood (ground truth), (B) multilayered perceptron (MLP) trained on analytical likelihood, (C) MLP trained on kernel density estimate (KDE)-based likelihoods (100K simulations per KDE), and (D) convolutional neural network trained on binned likelihoods.

The results represent posterior means, based on inference over datasets of size ‘trials’. Dot shading is based on parameter-wise normalized posterior variance, with lighter shades indicating larger posterior uncertainty of the parameter estimate.

For the simple DDM and analytical likelihood, parameters are nearly perfectly recovered given datapoints (‘trials’) (Figure 5A). Notably, these results are mirrored when recovery is performed using the MLP trained on the analytical likelihood (Figure 5B). This finding corroborates, as visually suggested by the learned likelihood manifolds, the conclusion that globally the likelihood function was well behaved. Moreover, only slight reductions in recoverability were incurred when the MLP was trained on the KDE likelihood estimates (Figure 5C), likely due to the known small biases in KDE itself (Turner et al., 2015). Similar performance is achieved using the CNN instead of MLP (Figure 5D).

As noted above, an advantage of Bayesian estimation is that we obtain an estimate of the posterior uncertainty in estimated parameters. Thus, a more stringent requirement is to additionally recover the correct posterior variance for a given dataset and model . One can already see visually in Figure 5C, D that posterior uncertainty is larger when the mean is further from the ground truth (lighter shades of gray indicate higher posterior variance). However, to be more rigorous one can assess whether the posterior variance is precisely what it should be.

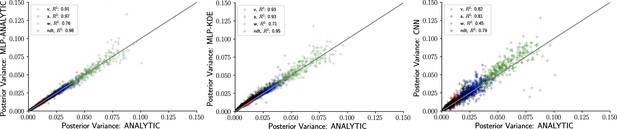

The availability of an analytical likelihood for the DDM, together with our use of sampling methods (as opposed to variational methods that can severely bias posterior variance), allows us to obtain the ‘ground truth’ uncertainty in parameter estimates. Figure 6 shows that sampling from an MLP trained on analytical likelihoods, an MLP trained on KDE-based likelihoods, and a CNN all yield excellent recovery of the variance. For an additional run that involved larger datasets, we observed a consistent decrease in posterior variance across all methods (not shown), as expected.

Inference using likelihood approximation networks (LANs) recovers posterior uncertainty.

Here, we leverage the analytic solution for the drift diffusion model to plot the ‘ground truth’ posterior variance on the x-axis, against the posterior variance from the LANs on the y-axis. (Left) Multilayered perceptrons (MLPs) trained on the analytical likelihood. (Middle) MLPs trained on kernel density estimate-based empirical likelihoods. (Right) Convolutional neural networks trained on binned empirical likelihoods. Datasets were equivalent across methods for each model (left to right) and involved samples.

No analytical likelihood available

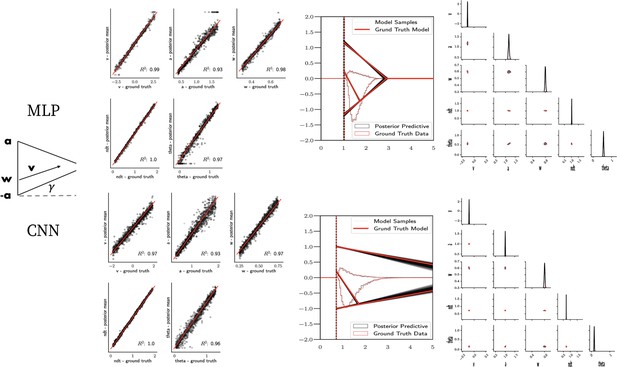

As a proof of concept for the more general ABC setting, we show parameter recovery results for two nonstandard models, the linear collapse (LC) and Weibull models, as described in the 'Test bed' section. The results are summarized in Figures 7 and 8 and described in more detail in the following two paragraphs.

Linear collapse model parameter recovery and posterior predictives.

(Left) Parameter recovery results for the multilayered perceptron (top) and convolutional neural network (bottom). (Right) Posterior predictive plots for two representative datasets. Model samples of all parameters (black) match those from the true generative model (red), but one can see that for the lower dataset, the bound trajectory is somewhat more uncertain (more dispersion of the bound). In both cases, the posterior predictive (black histograms) is shown as predicted choice proportions and RT distributions for upper and lower boundary responses, overlaid on top of the ground truth data (red; hardly visible since overlapping/matching).

Weibull model parameter recovery and posterior predictives.

(Left) Parameter recovery results for the multilayered perceptron (top) and convolutional neural network (bottom). (Right) Posterior predictive plots for two representative datasets in which parameters were poorly estimated (denoted in blue on the left). In these examples, model samples (black) recapitulate the generative parameters (red) for the nonboundary parameters, and the recovered bound trajectory is poorly estimated relative to the ground truth, despite excellent posterior predictives in both cases (RT distributions for upper and lower boundary, same scheme as Figure 7). Nevertheless, one can see that the net decision boundary is adequately recovered within the range of the RT data that are observed. Across all datasets, the net boundary is well recovered within the range of the data observed, and somewhat less so outside of the data, despite poor recovery of individual Weibull parameters α and β.

Parameter recovery

Figure 7 shows that both the MLP and CNN methods consistently yield very good to excellent parameter recovery performance for the LC model, with parameter-wise regression coefficients globally above . As shown in Figure 8, parameter recovery for the Weibull model is less successful, however, particularly for the Weibull collapsing bound parameters. The drift parameter , the starting point bias , and the nondecision time are estimated well; however, the boundary parameters , α, and β are less well recovered by the posterior mean. Judging by the parameter recovery plot, the MLP seems to perform slightly less well on the boundary parameters when compared to the CNN.
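
One way to quantify parameter-wise recovery is the R² of a simple linear regression of posterior means on ground truth values, which equals the squared Pearson correlation. The sketch below assumes this reading of "parameter-wise regression coefficients"; the function name and the toy values are hypothetical.

```python
def recovery_r2(true_vals, post_means):
    """Squared Pearson correlation between ground truth and posterior means."""
    n = len(true_vals)
    mx = sum(true_vals) / n
    my = sum(post_means) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(true_vals, post_means))
    sxx = sum((x - mx) ** 2 for x in true_vals)
    syy = sum((y - my) ** 2 for y in post_means)
    return sxy * sxy / (sxx * syy)


# Hypothetical ground truth values and posterior means for one parameter.
truth = [0.5, 1.0, 1.5, 2.0]
well_recovered = [0.52, 0.97, 1.55, 1.96]
print(recovery_r2(truth, well_recovered))  # close to 1 for good recovery
```

A value near 1 indicates that posterior means track the generative parameters closely; as the Weibull results show, a low value can reflect identifiability issues rather than a poor fit.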

To interrogate the source of the poor recovery of α and β parameters, we considered the possibility that the model itself may have issues with identifiability, rather than poor fit. Figure 8 shows that indeed, for two representative datasets in which these parameters are poorly recovered, the model nearly perfectly reproduces the ground truth data in the posterior predictive RT distributions. Moreover, we find that whereas the individual Weibull parameters are poorly recovered, the net boundary is very well recovered, particularly when evaluated within the range of the observed dataset. This result is reminiscent of the literature on sloppy models (Gutenkunst et al., 2007), where sloppiness implies that various parameter configurations can have the same impact on the data. Moreover, two further conclusions can be drawn from this analysis. First, when fitting the Weibull model, researchers should interpret the bound trajectory as a latent parameter rather than the individual α and β parameters per se. Second, the Weibull model may be considered as viable only if the estimated bound trajectory varies sufficiently within the range of the empirical RT distributions. If the bound is instead flat or linearly declining in that range, the simple DDM or LC models may be preferred, and their simpler form would imply that they would be selected by any reasonable model comparison metric. Lastly, given our results the Weibull model could likely benefit from reparameterization if the desire is to recover individual parameters rather than the bound trajectory . Given the common use of this model in collapsing bound studies (Hawkins et al., 2015) and that the bound trajectories are nevertheless interpretable, we leave this issue for future work.

The Appendix shows parameter recovery studies on a number of other stochastic simulators with non-analytical likelihoods, described in the 'Test beds' section. The appendices show tables of parameter-wise recovery for all models tested. In general, recovery ranges from good to excellent. Given the Weibull results above, we attribute the weaker recovery for some of these models to identifiability issues and specific dataset properties rather than to the method per se.

We note that our parameter recovery studies here are in general constrained to the simplest inference setting, equivalent to a single-subject, single-condition experimental design. Moreover, we use uninformative priors for all parameters of all models. Thus, these results provide a lower bound on parameter recoverability, provided of course that the datasets were generated from the valid parameter ranges on which the networks were trained; see the 'Hierarchical inference' section for how recovery can benefit from more complex experimental designs with additional task conditions, which more faithfully represent the typical inference scenario deployed by cognitive neuroscientists.

Lastly, we offer some general remarks about parameter recovery performance. A few factors can negatively impact how well one can recover parameters. First, if the model generally suffers from identifiability issues, the resulting tradeoffs in the parameters can lead the MCMC chain to get stuck on the boundary for one or more parameters. This issue is endemic to all models and unrelated to likelihood-free methods or LANs, and is best addressed through reparameterization (or a different experimental design that can disentangle model parameters). Second, if the generative parameters of a dataset are too close to (or beyond) the bounds of the trained parameter space, we may also end up with a chain that gets stuck on the boundary of the parameter space.
We confronted this problem by training on parameter spaces that yield response time distributions that are broader than typically observed experimentally for models of this class, while also excluding obviously defective parameter setups. Defective parameter setups were defined in the context of our applications as parameter vectors that generate data that never allow one or the other choice to occur (as in , data that concentrates more than half of the reaction times within a single 1 ms bin and data that generated mean reaction times beyond 10 s). These guidelines were chosen as a mix of basic rationale and domain knowledge regarding usual applications of DDMs to experimental data. As such, the definition of defective data may depend on the model under consideration.

Runtime

A major motivation for this work is the amortization of network training time during inference, affording researchers the ability to test a variety of theoretically interesting models for linking brain and behavior without large computational cost. To quantify this advantage, we provide some results on the posterior sampling runtimes using (1) the MLP with slice sampling (Neal, 2003) and (2) CNN with iterated importance sampling.

The MLP timings are based on slice sampling (Neal, 2003), with a minimum of samples. The sampler was stopped at some , for which the Geweke statistic (Geweke, 1992) indicated convergence (the statistic was computed once every 100 samples for ). Using an alternative sampler, based on differential evolution Markov chain Monte Carlo (DEMCMC) and stopped when the Gelman–Rubin (Gelman and Rubin, 1992), yielded very similar timing results and was omitted in our figures.
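
The convergence check mentioned above can be illustrated with a simplified Geweke-style z-score that compares the mean of an early chain segment with the mean of a late segment. This is a sketch under simplifying assumptions: it uses plain sample variances, whereas the original diagnostic uses spectral density estimates to account for autocorrelation, and the segment fractions are conventional defaults rather than values taken from this paper.

```python
import math
import random
import statistics


def geweke_z(chain, first=0.1, last=0.5):
    """Simplified Geweke z-score comparing early and late chain segments.

    Uses plain sample variances; the original diagnostic uses spectral
    density estimates to account for autocorrelation.
    """
    n = len(chain)
    head = chain[: int(first * n)]
    tail = chain[n - int(last * n):]
    var_head = statistics.variance(head) / len(head)
    var_tail = statistics.variance(tail) / len(tail)
    diff = statistics.mean(head) - statistics.mean(tail)
    return diff / math.sqrt(var_head + var_tail)


# A stationary chain versus a chain that is still drifting.
rng = random.Random(0)
stationary = [rng.gauss(0.0, 1.0) for _ in range(2000)]
drifting = [0.01 * i + rng.gauss(0.0, 1.0) for i in range(2000)]
print(abs(geweke_z(stationary)) < abs(geweke_z(drifting)))  # True
```

In a sampler loop, such a score would be recomputed periodically and sampling stopped once its magnitude falls below a chosen threshold.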

For the reported importance sampling runs, we used 200K importance samples per iteration, starting with γ values of 64, which was first reduced to 1 where in iteration , , before a stopping criterion based on relative improvement of the confusion metric was used.

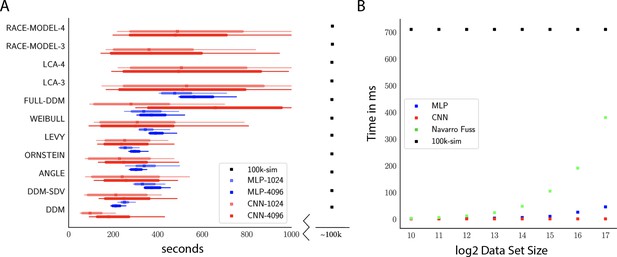

Figure 9A shows that all models can be estimated in the order of hundreds of seconds (minutes), comprising a speed improvement of at least two orders of magnitude compared to traditional ABC methods using KDE during inference (i.e., the PDA method motivating this work; Turner et al., 2015). Indeed, this estimate is a lower bound on the speed improvement: we extrapolate only the observed difference between network evaluation and online simulations, ignoring the additional cost of constructing and evaluating the KDE-based likelihood. We decided to use this benchmark because it provides a fairer comparison to more recent PDA approaches in which the KDE evaluations can be sped up considerably (Holmes, 2015).

Computation times.

(A) Comparison of sampler timings for the multilayered perceptron (MLP) and convolutional neural network (CNN) methods, for datasets of size 1024 and 4096 (respectively MLP-1024, MLP-4096, CNN-1024, CNN-4096). For comparison, we include a lower-bound estimate of the sample timings using the traditional PDA approach during online inference (using 100k online simulations for each parameter vector). We used 100k simulations because we found this number to be required for sufficiently smooth likelihood evaluations, and it is the number of simulations used to train our networks; fewer samples can of course be used at the cost of worse estimation, and with only marginal speedup since the resulting noise in likelihood evaluations tends to prevent chain mixing; see Holmes, 2015. We arrive at 100k seconds via simple arithmetic. It took our slice samplers on average approximately 200k likelihood evaluations to arrive at 2000 samples from the posterior. Taking 500 ms × 200,000 gives the reported number. Note that this is a generous but rough estimate since the cost of data simulation varies across simulators (and is usually quite a bit higher than for the drift diffusion model [DDM] simulator). Note further that these timings scale linearly with the number of participants and task conditions for the online method, but not for likelihood approximation networks, where they can in principle be parallelized. (B) compares the timings for obtaining a single likelihood evaluation for a given dataset. MLP and CNN refer to TensorFlow implementations of the corresponding networks. Navarro Fuss refers to a Cython (Behnel et al., 2010) (CPU) implementation of the algorithm suggested by Navarro and Fuss, 2009 for fast evaluation of the analytical likelihood of the DDM. 100k-sim refers to the time it took a highly optimized Cython (CPU) version of a DDM sampler to generate 100k simulations (averaged across 100 parameter vectors).
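
The arithmetic behind the online-simulation estimate can be spelled out as follows. The 500 ms and 200k figures come from the caption above; the LAN runtime of 500 s is a hypothetical stand-in for "hundreds of seconds" and is used only to illustrate the order-of-magnitude comparison.

```python
import math

seconds_per_evaluation = 0.5      # ~500 ms to run 100k online simulations
evaluations_per_run = 200_000     # likelihood evaluations for ~2000 posterior samples

total_seconds = seconds_per_evaluation * evaluations_per_run
hours = total_seconds / 3600.0
print(total_seconds)              # 100000.0
print(round(hours, 1))            # 27.8

lan_seconds = 500.0               # hypothetical LAN runtime ("hundreds of seconds")
speedup = total_seconds / lan_seconds
print(speedup, math.log10(speedup))  # a factor of 200, i.e. over two orders of magnitude
```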

Notably, due to its potential for parallelization (especially on GPUs), our NN methods can even induce performance speedups relative to analytical likelihood evaluations. Indeed, Figure 9B shows that as the dataset grows runtime is significantly faster than even a highly optimized cython implementation of the Navarro Fuss algorithm (Navarro and Fuss, 2009) for evaluation of the analytic DDM likelihood. This is also noteworthy in light of the full-DDM (as described in the 'Test bed' section), for which it is currently common to compute the likelihood term via quadrature methods, in turn based on repeated evaluations of the Navarro Fuss algorithm. This can easily inflate the evaluation time by 1–2 orders of magnitude. In contrast, evaluation times for the MLP and CNN are only marginally slower (as a function of the slightly larger network size in response to higher dimensional inputs). We confirm (omitted as separate figure) from experiments with the HDDM Python toolbox that our methods end up approximately times faster for the full-DDM than the current implementation based on numerical integration, maintaining comparable parameter recovery performance. We strongly suspect there to be additional remaining potential for performance optimization.

Hierarchical inference

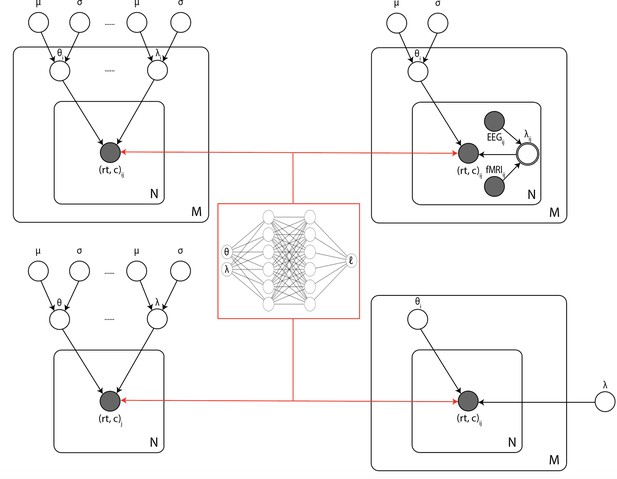

One of the principal benefits of LANs is that they can be directly extended – without further training – to arbitrary hierarchical inference scenarios, including those in which (1) individual participant parameters are drawn from group distributions, (2) some parameters are pooled and others separated across task conditions, and (3) neural measures are estimated as regressors on model parameters (Figure 10). Hierarchical inference is critical for improving parameter estimation particularly for realistic cognitive neuroscience datasets in which thousands of trials are not available for each participant and/or where one estimates impacts of noisy physiological signals onto model parameters (Wiecki et al., 2013; Boehm et al., 2018; Vandekerckhove et al., 2011; Ratcliff and Childers, 2015).

Illustration of the common inference scenarios applied in the cognitive neurosciences and enabled by our amortization methods.

The figure uses standard plate notation for probabilistic graphical models. White single circles represent random variables, white double circles represent variables computed deterministically from their inputs, and gray circles represent observations. For illustration, we split the parameter vector of our simulator model (which we call θ in the rest of the paper) into two parts θ and λ since some, but not all, parameters may sometimes vary across conditions and/or come from a global distribution. (Upper left) Basic hierarchical model across M participants, with N observations (trials) per participant. Parameters for individuals are assumed to be drawn from group distributions. (Upper right) Hierarchical models that further estimate the impact of trial-wise neural regressors onto model parameters. (Lower left) Nonhierarchical, standard model estimating one set of parameters across all trials. (Lower right) Common inference scenario in which a subset of parameters (θ) are estimated to vary across conditions M, while others (λ) are global. Likelihood approximation networks can be immediately repurposed for all of these scenarios (and more) without further training.
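
To illustrate why no retraining is needed, the sketch below assembles an unnormalized log-posterior for the basic hierarchical scenario (participant parameters drawn from a group distribution) around an arbitrary trial-level log-likelihood function. It does not use the HDDM interface; the function names, the single-parameter setup, the normal group distribution, and the flat hyperpriors are assumptions made for brevity.

```python
import math


def normal_logpdf(x, mu, sigma):
    """Log-density of a normal distribution."""
    return -0.5 * math.log(2.0 * math.pi * sigma ** 2) - 0.5 * ((x - mu) / sigma) ** 2


def hierarchical_logpost(group_mu, group_sigma, subject_params, data, trial_loglik):
    """Unnormalized log-posterior for one parameter with a group-level prior.

    subject_params: one parameter value per participant
    data: per participant, a list of (rt, choice) trials
    trial_loglik: callable (param, rt, choice) -> log-likelihood, for
        example a trained network; any function with this signature works.
    Flat priors on group_mu and group_sigma are assumed for brevity.
    """
    if group_sigma <= 0.0:
        return -math.inf
    total = 0.0
    for theta, trials in zip(subject_params, data):
        # Participant-level parameter drawn from the group distribution.
        total += normal_logpdf(theta, group_mu, group_sigma)
        # Trial likelihoods come from the same pretrained function.
        total += sum(trial_loglik(theta, rt, c) for rt, c in trials)
    return total


# Toy stand-in for a learned likelihood: RTs are normal around the parameter.
def toy_loglik(theta, rt, choice):
    return normal_logpdf(rt, theta, 1.0)


data = [[(1.0, 1)], [(2.0, -1)]]
print(hierarchical_logpost(1.5, 1.0, [1.0, 2.0], data, toy_loglik))
```

The trial-level function is only ever called with a parameter vector and a datapoint, so the same trained approximation can be reused whatever structure is placed on top of the parameters.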

To provide a proof of concept, we developed an extension to the HDDM Python toolbox (Wiecki et al., 2013), widely used for hierarchical inference of the DDM applied to such settings. Lifting the restriction of previous versions of HDDM to only DDM variants with analytical likelihoods, we imported the MLP likelihoods for all two-choice models considered in this paper. Note that GPU-based computation is supported out of the box, which can easily be exploited with minimal overhead using free versions of Google’s Colab notebooks. We generally observed GPUs to improve speed approximately fivefold over CPU-based setups for the inference scenarios we tested. Preliminary access to this interface and corresponding instructions can be found at https://github.com/lnccbrown/lans/tree/master/hddmnn-tutorial.

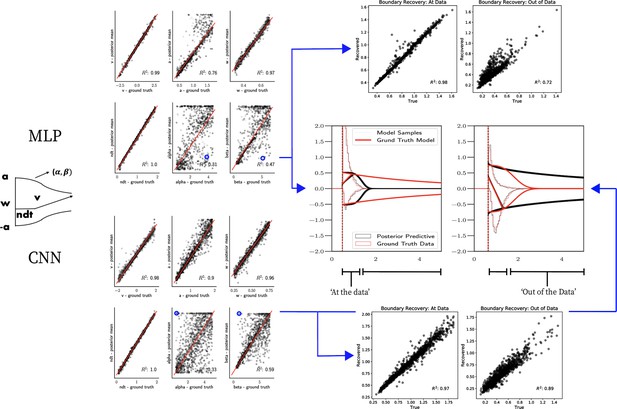

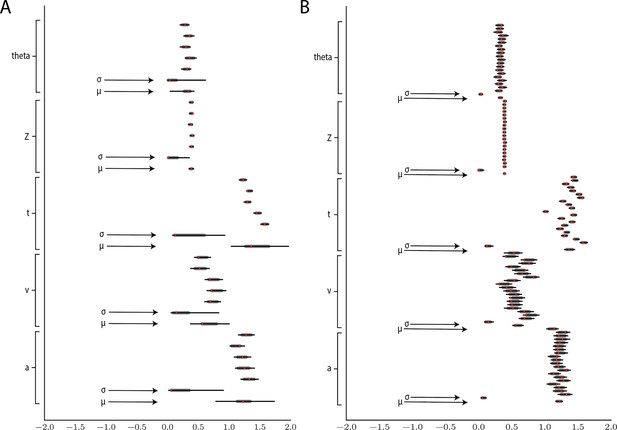

Figure 11 shows example results from hierarchical inference using the LC model, applied to synthetic datasets comprising 5 and 20 subjects (a superset of participants). Recovery of individual parameters was adequate even for five participants, and we also observe the expected improvement of recovery of the group-level parameters μ and σ for 20 participants.

Hierarchical inference results using the multilayered perceptron likelihood imported into the HDDM package.

(A) Posterior inference for the linear collapse model on a synthetic dataset with 5 participants and 500 trials each. Posterior distributions are shown with caterpillar plots (thick lines correspond to percentiles, thin lines correspond to percentiles) grouped by parameters (ordered from above . Ground truth simulated values are denoted in red. (B) Hierarchical inference for synthetic data comprising 20 participants and 500 trials each. μ and σ indicate the group-level mean and variance parameters. Estimates of group-level posteriors improve with more participants as expected with hierarchical methods. Individual-level parameters are highly accurate for each participant in both scenarios.

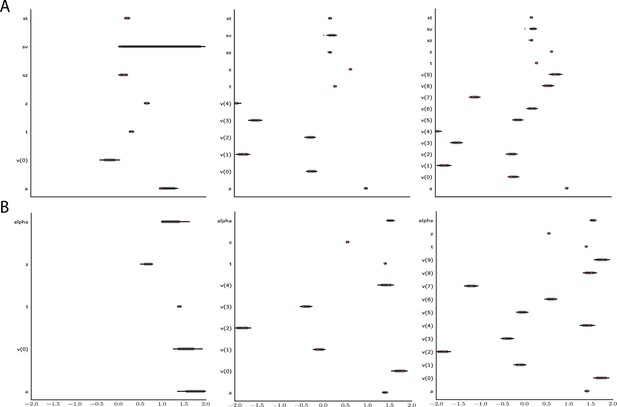

Figure 12 shows an example that illustrates how parameter recovery is affected when a dataset contains multiple experimental conditions (e.g., different difficulty levels). It is common in such scenarios to allow task conditions to affect a single model parameter or a subset of parameters (in the cases shown, the drift), while other model parameters are pooled across conditions. As expected, for both the full-DDM (A) and the Levy model (B), the estimation of global parameters is improved when increasing the number of conditions from 1 to 5 to 10 (left to right, where the former are subsets of the latter datasets). These experiments confirm that one can more confidently estimate parameters that are otherwise difficult to estimate such as the noise α in the Levy model and the standard deviation of the drift in the full-DDM.

Effect of multiple experimental conditions on inference.

The panel shows an example of posterior inference for 1 (left), 5 (middle), and 10 (right) conditions. (A) and (B) refer to the full drift diffusion model (DDM) and Levy model, respectively. The drift parameter is estimated to vary across conditions, while the other parameters are treated as global across conditions. Inference tends to improve for all global parameters when adding experimental conditions. Importantly, this is particularly evident for parameters that are otherwise notoriously difficult to estimate, such as the trial-by-trial variance in drift in the full-DDM and α (the noise distribution in the Levy model). Red stripes show the ground truth values of the given parameters.

Both of these experiments indicate that our MLPs provide approximate likelihoods that behave as expected from proper analytical methods, while also demonstrating their robustness to other samplers (i.e., we used HDDM slice samplers without further modification for all models).

We expect that proper setting of prior distributions (uniform in our examples) and further refinements to the slice sampler settings (to help mode discovery) can improve these results even further. We include only the MLP method in this section since it is most immediately amenable to the kind of trial-by-trial-level analysis that HDDM is designed for. We plan to investigate the feasibility of incorporating the CNN method into HDDM in future projects.

Discussion

Our results demonstrate the promise and potential of amortized LANs for Bayesian parameter estimation of neurocognitive process models. Learned manifolds and parameter recovery experiments showed successful inference using a range of network architectures and posterior sampling algorithms, demonstrating the robustness of the approach.

Although these methods are extendable to any model of similar complexity, we focused here on a class of SSMs, primarily because the most popular of them – the DDM – has an analytic solution, and is often applied to neural and cognitive data. Even slight departures from the standard DDM framework (e.g., dynamic bounds or changes in the noise distribution) are often not considered for full Bayesian inference due to the computational complexity associated with traditional ABC methods. We provide access to the learned likelihood functions (in the form of network weights) and code to enable users to fit a variety of such models with orders of magnitude speedup (minutes instead of days). In particular, we provided an extension to the commonly used HDDM toolbox (Wiecki et al., 2013) that allows users to apply these models to their own datasets immediately. We also provide access to code that would allow users to train their own likelihood networks and perform recovery experiments, which can then be made available to the community.

We offered two separate approaches with their own relative advantages and weaknesses. The MLP is suited for evaluating likelihoods of individual observations (choices, response times) given model parameters, and as such can be easily extended to hierarchical inference settings and trial-by-trial regression of neural activity onto model parameters. We showed that importing the MLP likelihood functions into the HDDM toolbox affords fast inference over a variety of models without tractable likelihood functions. Moreover, these experiments demonstrated that use of the NN likelihoods even confers a performance speedup over the analytical likelihood function – particularly for the full-DDM, which otherwise required numerical methods on top of the analytical likelihood function for the simple DDM.
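The trial-wise character of the MLP can be sketched as a forward pass that maps one parameter vector plus each observation (response time, choice) to one log-likelihood per trial. The sketch below uses random, untrained weights purely to show the data flow; the layer sizes, tanh activations, parameter ordering, and the name `mlp_loglik` are assumptions and do not reproduce the published networks.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, untrained weights: a real LAN would load trained weights instead.
# Input = 4 model parameters + (rt, choice); output = one log-likelihood per trial.
sizes = [6, 32, 32, 1]
weights = [rng.normal(0, 0.3, size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

def mlp_loglik(theta, rt, choice):
    """Trial-wise log-likelihoods for one parameter vector and many trials."""
    n_trials = rt.shape[0]
    x = np.column_stack([np.tile(theta, (n_trials, 1)), rt, choice])
    for w, b in zip(weights[:-1], biases[:-1]):
        x = np.tanh(x @ w + b)
    return (x @ weights[-1] + biases[-1]).ravel()

theta = np.array([1.0, 1.5, 0.5, 0.3])     # illustrative parameter values
rt = rng.uniform(0.4, 3.0, size=500)
choice = rng.choice([-1.0, 1.0], size=500)

per_trial = mlp_loglik(theta, rt, choice)  # shape (500,)
dataset_loglik = per_trial.sum()           # quantity a sampler would consume
```

Since the dataset log-likelihood is a plain sum over trials, the parameter vector can differ from trial to trial (for example, as a regression on a neural covariate) without retraining the network.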

Conversely, the CNN approach is well suited for estimating likelihoods across parameters for entire datasets in parallel, as implemented with importance sampling. More generally, any sequential MC method may be applied instead, which implies potential for further improvements. These methods offer a more robust path to sampling from multimodal posteriors compared to MCMC, at the cost of the curse of dimensionality, rendering them potentially less useful for highly parameterized problems, such as those that require hierarchical inference. Moreover, representing the problem directly as one of learning probability distributions and enforcing the appropriate constraints by design endows the CNN approach with a certain conceptual advantage. Finally, we note that in principle (with further improvements) trial-level inference is possible with the CNN approach, and vice versa, importance sampling can be applied to the MLP approach.
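The importance sampling step can be illustrated with a minimal self-normalized sketch, in which many candidate parameter values are scored against the whole dataset at once. A Gaussian likelihood stands in for the dataset-level likelihood that a trained CNN would return, and the proposal, the uniform prior bounds, and the sample size are illustrative assumptions rather than the settings used in this paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy dataset: 50 observations from N(theta_true, 1). This Gaussian likelihood
# is a stand-in for the dataset-level likelihood a trained CNN would return.
theta_true = 1.0
x = rng.normal(theta_true, 1.0, size=50)

def dataset_loglik(theta):
    """Log-likelihood of the whole dataset for each candidate theta."""
    resid = x[None, :] - theta[:, None]
    return (-0.5 * np.log(2 * np.pi) - 0.5 * resid**2).sum(axis=1)

# Proposal N(0, 2^2); uniform prior on [-5, 5] (both are illustrative choices).
n_samples = 20_000
theta = rng.normal(0.0, 2.0, size=n_samples)
log_q = -0.5 * np.log(2 * np.pi * 4.0) - 0.5 * (theta / 2.0) ** 2
log_prior = np.where(np.abs(theta) <= 5.0, -np.log(10.0), -np.inf)

# Self-normalized importance weights, computed stably in log space.
log_w = log_prior + dataset_loglik(theta) - log_q
w = np.exp(log_w - log_w.max())
w /= w.sum()

post_mean = np.sum(w * theta)   # weighted posterior mean estimate
ess = 1.0 / np.sum(w**2)        # effective sample size
```

The effective sample size gives a rough indication of how well the proposal covers the posterior; it tends to shrink quickly as the number of parameters grows, which is the dimensionality cost noted above.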

In this work, we employed sampling methods (MCMC and importance sampling) for posterior inference because in the limit they are well known to allow for accurate estimation of posterior distributions on model parameters, including not only mean estimates but their variances and covariances. Accurate estimation of posterior variances is critical for any hypothesis testing scenario because it allows one to be confident about the degree of uncertainty in parameter estimates. Indeed, for the simple DDM, we showed that posterior inference using our networks yielded nearly perfect estimation of the variances of model parameters (which are available due to the analytic solution). Of course, our networks can also be deployed for other estimation methods even more rapidly: they can be immediately used for MLE via gradient descent or within other approximate inference methods, such as variational inference (see Acerbi, 2020 for a related approach).

Other approaches exist for estimating generalized diffusion models. A recent example, not discussed thus far, is the pyDDM Python toolbox (Shinn et al., 2020), which allows MLE of generalized drift diffusion models. The underlying solver is based on the Fokker–Planck equations, which allow access to approximate likelihoods (where the degree of approximation is traded off with computation time/discretization granularity) for a flexible class of diffusion-based models, notably allowing arbitrary evidence trajectories, starting point, and nondecision time distributions. However, to incorporate trial-by-trial effects would severely inflate computation time (on the order of the number of trials) since the solver would have to operate on a trial-by-trial level. Moreover, any model that is not driven by Gaussian diffusion, such as the Levy model we considered here or the linear ballistic accumulator, is out of scope with this method. In contrast, LANs can be trained to estimate any such model, limited only by the identifiability of the generative model itself. Finally, pyDDM does not afford full Bayesian estimation and thus quantification of parameter uncertainty and covariance.

We moreover note that our LAN approach can be useful even if the underlying simulation model admits other likelihood approximations, regardless of trial-by-trial effect considerations, since a forward pass through a LAN may be speedier. Indeed, we observed substantial speedups in HDDM when applying our LAN method to the full-DDM, for which numerical methods were previously needed to integrate over inter-trial variability.

We emphasize that our test bed application to SSMs does not delimit the scope of application of LANs. Neither are reasonable architectures restricted to MLPs and CNNs (see Lueckmann et al., 2019; Papamakarios et al., 2019b for related approaches that use completely different architectures). Models with high-dimensional (roughly >15) parameter spaces may present a challenge for our global amortization approach due to the curse of dimensionality. Further, models with discrete parameters of high cardinality may equally present a given network with training difficulties. In such cases, other methods may be preferred over likelihood amortization generally (e.g., Acerbi, 2020); given that this is an open and active area of research, we can expect surprising developments that may in fact turn the tide again in the near future.

Despite some constraints, this still leaves a vast array of models in reach for LANs, of which our test bed can be considered only a small beginning.

By focusing on LANs, our approach affords the flexibility of networks serving as plug-ins for hierarchical or arbitrarily complex model extensions. In particular, the networks can be immediately transferred, without further training, to arbitrary inference scenarios in which researchers may be interested in evaluating links between neural measures and model parameters, and in comparing various assumptions about whether parameters are pooled or split across experimental manipulations. This flexibility in turn sets our methods apart from other amortization and NN-based ABC approaches offered in the statistics, machine learning, and computational neuroscience literature (Papamakarios and Murray, 2016; Papamakarios et al., 2019a; Gonçalves et al., 2020; Lueckmann et al., 2019; Radev et al., 2020b), while staying conceptually extremely simple. Instead of focusing on extremely fast inference for very specialized inference scenarios, our approach focuses on achieving speedy inference while not implicitly compromising modeling flexibility through the amortization step.

Closest to our approach is the work of Lueckmann et al., 2019, and Papamakarios et al., 2019b, both of which attempt to target the likelihood with a neural density estimator. While flexible, both approaches imply the usage of summary statistics, instead of a focus on trial-wise likelihood functions. Our work can be considered a simple alternative with explicit focus on trial-wise likelihoods.

Besides deep learning-based approaches, another major machine learning-inspired branch of the ABC literature concerns log-likelihood and posterior approximations via Gaussian process surrogates (GPSs) (Meeds and Welling, 2014; Järvenpää et al., 2018; Acerbi, 2020). A major benefit of GPSs lies in the ability for clever training data selection via active learning since such GPSs allow uncertainty quantification out of the box, which in turn can be utilized for the purpose of targeting high-uncertainty regions in parameter space. GPS-based computations scale with the number of training examples, however, which makes them much more suitable for minimizing the computational cost for a given inference scenario than for facilitating global amortization as we suggest in this paper (for which one usually needs larger sets of training data than can traditionally be handled efficiently by GPS). Again, when our approach is applicable, it will offer vastly greater flexibility once a LAN is trained.

Limitations and future work

There are several limitations of the methods presented in this paper, which we hope to address in future work. While allowing for great flexibility, the MLP approach suffers from the drawback that we do not enforce (or exploit) the constraint that the learned likelihood is a valid probability distribution over the data, and hence the networks have to learn this constraint implicitly and approximately. Enforcing this constraint has the potential to improve estimation of tail probabilities (a known issue for KDE approaches to ABC more generally; Turner et al., 2015).
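For a likelihood over response times and choices, being a valid probability distribution means that the density integrates to one over response times, summed over choices. One possible diagnostic, offered here as a sketch and not as a procedure used in this paper, is to integrate the exponentiated output numerically at a fixed parameter vector. In the example below, `approx_loglik` is a hypothetical stand-in with a known normalized density (not a trained network), and `total_mass` is an illustrative helper name.

```python
import numpy as np

def approx_loglik(rt, choice, ndt=0.3, p_upper=0.7):
    """Stand-in for a learned log-likelihood over (rt, choice); not a trained LAN.

    RT follows a shifted Gamma(shape=2, rate=2) density, split across two choices.
    """
    s = np.clip(rt - ndt, 1e-12, None)
    log_f = np.log(4.0) + np.log(s) - 2.0 * s
    log_f = np.where(rt > ndt, log_f, -np.inf)   # zero density before ndt
    log_p = np.where(choice == 1, np.log(p_upper), np.log(1.0 - p_upper))
    return log_f + log_p

def total_mass(loglik_fn, t_max=20.0, n_grid=20001):
    """Trapezoid integral over rt, summed over both choices; should be ~1."""
    rt = np.linspace(0.0, t_max, n_grid)
    mass = 0.0
    for choice in (-1, 1):
        dens = np.exp(loglik_fn(rt, np.full(rt.shape, choice)))
        mass += np.sum(0.5 * (dens[1:] + dens[:-1]) * np.diff(rt))
    return mass

mass = total_mass(approx_loglik)
```

Applied to a trained network, a total mass that departs noticeably from one in some parameter region would flag where the implicit constraint is only loosely learned.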

The CNN encapsulation exploits the fact that the likelihood is a valid probability distribution; however, it makes estimation of trial-by-trial effects more resource hungry. We plan to investigate the potential of the CNN for trial-by-trial estimation in future research.

A potential solution that combines the strengths of both the CNN and MLP methods is to utilize mixture density networks to encapsulate the likelihood functions. We are currently exploring this avenue. Mixture density networks have been successfully applied in the context of ABC (Papamakarios and Murray, 2016); however, training can be unstable without extra care (Guillaumes, 2017). Similarly, invertible flows (Rezende and Mohamed, 2015) and/or mixture density networks (Bishop, 1994) may be used to learn likelihood functions (Papamakarios et al., 2019b; Lueckmann et al., 2019); however, the philosophy remains focused on distributions of summary statistics for single datasets. While impressive improvements have materialized at the intersection of ABC and deep learning methods (Papamakarios et al., 2019a; Greenberg et al., 2019; Gonçalves et al., 2020) (showing some success with posterior amortization for models up to 30 parameters, but restricted to a local region of high posterior density in the resulting parameter space), generally less attention has been paid to amortization methods that are not only of case-specific efficiency but sufficiently modular to serve a large variety of inference scenarios (e.g., Figure 10). This is an important gap that we believe the popularization of the powerful ABC framework in the domain of experimental science hinges upon. A second and short-term avenue for future work is the incorporation of our presented methods into the HDDM Python toolbox (Wiecki et al., 2013) to extend its capabilities to a larger variety of SSMs. Initial work in this direction has been completed, the alpha version of the extension being available in the form of a tutorial at https://github.com/lnccbrown/lans/tree/master/hddmnn-tutorial.

Our current training pipeline can be further optimized on two fronts. First, no attempt was made to minimize the size of the network needed to reliably approximate likelihood functions so as to further improve computational speed. Second, little attempt was made to optimize the amount of training provided to networks. For the models explored here, we found it sufficient to simply train the networks on a very large number of simulated datapoints such that interpolation across the manifold was possible. However, as model complexity increases, it would be useful to obtain a measure of the networks’ uncertainty over likelihood estimates for any given parameter vector. Such uncertainty estimates would be beneficial for multiple reasons. One such benefit would be to provide a handle on the reliability of sampling, given the parameter region. Moreover, such uncertainty estimates could be used to guide the online generation of training data to train the networks in regions with high uncertainty. At the intersection of ABC and NNs, active learning has been explored via uncertainty estimates based on network ensembles (Lueckmann et al., 2019). We plan to additionally explore the use of Bayesian NNs, which provide uncertainty over their weights, for this purpose (Neal, 1995).
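The idea of using ensemble disagreement to guide where new training data are simulated can be sketched as follows. This is a toy illustration under stated assumptions: degree-5 polynomial fits on bootstrap resamples stand in for independently trained networks, the quadratic target stands in for simulation-based log-likelihoods, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def simulate_target(theta):
    """Toy noisy training target standing in for simulation-based log-likelihoods."""
    return -theta**2 + rng.normal(0.0, 0.1, size=theta.shape)

# Training data only cover theta in [-1, 1].
theta_train = rng.uniform(-1.0, 1.0, size=200)
y_train = simulate_target(theta_train)

# Ensemble of degree-5 polynomial fits on bootstrap resamples; each fit is a
# cheap stand-in for one independently trained network.
n_members = 10
members = []
for _ in range(n_members):
    idx = rng.integers(0, theta_train.size, size=theta_train.size)
    members.append(np.polyfit(theta_train[idx], y_train[idx], deg=5))

# Disagreement across members as an uncertainty estimate on candidate parameters.
candidates = np.linspace(-2.0, 2.0, 81)
preds = np.stack([np.polyval(c, candidates) for c in members])
uncertainty = preds.std(axis=0)

# Active-learning step: simulate next where the ensemble disagrees most.
next_theta = candidates[np.argmax(uncertainty)]
```

In this toy setting the ensemble agrees inside the training range and diverges outside it, so the selected point falls in the region the training data did not cover.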