Mapping brain-behavior space relationships along the psychosis spectrum

Abstract

Difficulties in advancing effective patient-specific therapies for psychiatric disorders highlight a need to develop a stable neurobiologically grounded mapping between neural and symptom variation. This gap is particularly acute for psychosis-spectrum disorders (PSD). Here, in a sample of 436 PSD patients spanning several diagnoses, we derived and replicated a dimensionality-reduced symptom space across hallmark psychopathology symptoms and cognitive deficits. In turn, these symptom axes mapped onto distinct, reproducible brain maps. Critically, we found that multivariate brain-behavior mapping techniques (e.g. canonical correlation analysis) do not produce stable results with current sample sizes. However, we show that a univariate brain-behavioral space (BBS) can resolve stable individualized prediction. Finally, we show a proof-of-principle framework for relating personalized BBS metrics with molecular targets via serotonin and glutamate receptor manipulations and neural gene expression maps derived from the Allen Human Brain Atlas. Collectively, these results highlight a stable and data-driven BBS mapping across PSD, which offers an actionable path that can be iteratively optimized for personalized clinical biomarker endpoints.

Introduction

Mental health conditions cause profound disability, yet most treatments offer limited efficacy across psychiatric symptoms (Tohen et al., 2003; McEvoy et al., 2007; aan het Rot et al., 2010; Cipriani et al., 2018). A key step toward developing more effective therapies for specific psychiatric symptoms is reliably mapping them onto underlying neural targets. This goal is particularly challenging because neuropsychiatric diagnoses and consequently drug development still operate under ‘legacy’ categorical constraints, which were not quantitatively informed by neural data.

Critically, diagnostic systems in psychiatry (e.g. the Diagnostic and Statistical Manual of Mental Disorders (DSM) [American Psychiatric Association, 1994]) were built to aid clinical consensus, but were not designed to guide quantitative mapping of symptoms onto neural alterations (Phillips et al., 2008; Gillihan and Parens, 2011). Consequently, the current diagnostic system cannot optimally map onto patient-specific brain-behavioral alterations. This challenge is particularly evident across psychosis spectrum disorders (PSD), where there is notable shared symptom variation across distinct DSM diagnostic categories, including schizophrenia (SZP), schizo-affective disorder (SADP), and bipolar disorder with psychosis (BPP). For instance, despite BPP being a distinct diagnosis, BPP patients exhibit psychosis symptoms and neural alterations that resemble those in SZP but are attenuated (e.g. thalamic functional connectivity (FC) [Anticevic et al., 2014]). It is essential to quantitatively map such shared clinical variation onto common neural alterations, to circumvent categorical constraints for biomarker development (Casey et al., 2013; Phillips et al., 2008; Gillihan and Parens, 2011) – a key goal for development of neurobiologically informed personalized therapies (Anticevic et al., 2014; Casey et al., 2013).

Recognizing the limitations of categorical frameworks, the NIMH's Research Domain Criteria (RDoC) initiative introduced dimensional mapping of functional domains on to neural circuits (Insel, 2014). This motivated cross-diagnostic multisite studies for mapping PSD symptom and neural variation (Tamminga et al., 2014; Koutsouleris et al., 2018; Casey et al., 2018; Di Martino et al., 2014). Multivariate neurobehavioral analyses across PSD and mood spectra reported brain-behavioral relationships across diagnoses, with the goal of informing individualized treatment (Drysdale et al., 2017). These studies attempted to address the challenge of moving beyond traditional a priori clinical scales, which provide composite scores (Cronbach and Meehl, 1955) that may not optimally capture neural variation (Gillihan and Parens, 2011; Barch et al., 2013). For instance, despite many data-driven dimensionality-reduction symptom studies (van der Gaag et al., 2006a; Lindenmayer et al., 1994; Emsley et al., 2003; Dollfus et al., 1996; Blanchard and Cohen, 2006; Chen et al., 2020; Lefort-Besnard et al., 2018), a common approach in PSD neuroimaging research is still to sum ‘positive’ or ‘negative’ psychosis symptoms into a single score for relating to neural measures (Ji et al., 2019a; Anticevic et al., 2014). Importantly, neural alterations in PSD may reflect a more complex weighted combination of symptoms than a priori composite scores.

While multivariate neurobehavioral studies offer a way to address this, they risk failing to replicate due to overfitting (Dinga et al., 2019), which arises from the high dimensionality of behavioral and neural features relative to a comparatively limited number of subjects (Helmer et al., 2020). Notably, current state-of-the-art large-scale clinical neuroimaging studies have target enrollment totals of ∼200–600 subjects (https://www.humanconnectome.org/disease-studies). We therefore wanted to test whether a reproducible neurobehavioral geometry can be derived with a sample size that is on par with current consortia studies in psychiatry.

We hypothesized that a linearly weighted low-dimensional symptom solution (capturing key disease-relevant information) may produce a robust and reproducible univariate brain-behavioral mapping. Indeed, recent work used dimensionality reduction methods successfully to compute a neural mapping across canonical SZP symptoms (Chen et al., 2020). However, it remains unknown if this approach generalizes across PSD. Moreover, it is unknown if incorporating cognitive assessment, a hallmark and untreated PSD symptom (Barch et al., 2013), explains neural feature variation that is distinct from canonical PSD symptoms. Finally, prior work has not tested if a low-dimensional symptom-neural mapping can be predictive at the single patient level – a prerequisite for individualized clinical endpoints.

To inform these gaps, we first tested two key questions: (i) Can data-reduction methods reliably reveal symptom axes across PSD that include both canonical symptoms and cognitive deficits? (ii) Do these lower dimensional symptom axes map onto a reproducible brain-behavioral solution across PSD? Specifically, we combined fMRI-derived resting-state measures with psychosis and cognitive symptoms (Canuso et al., 2008; Kay et al., 1987) obtained from a public multi-site cohort of 436 PSD patients and 202 healthy individuals collected by the Bipolar-Schizophrenia Network for Intermediate Phenotypes (BSNIP-1) consortium across six sites in North America (Tamminga et al., 2014). The dataset included 150 patients formally diagnosed with BPP, 119 patients diagnosed with SADP, and 167 patients diagnosed with SZP (Appendix 1—table 2). This cohort enabled cross-site symptom-neural comparisons across multiple psychiatric diagnostic categories, which we then mapped onto specific neural circuits. First, we tested if dimensionality reduction of PSD symptoms revealed a stable solution for individual patient prediction. Next, we tested if this low-dimensional symptom solution yields novel, stable, and statistically robust neural mapping compared to canonical composite symptom scores or DSM diagnoses. We then tested if the computed symptom-neural mapping is reproducible across symptom axes and actionable for individual patient prediction. Finally, we anchored the derived symptom-relevant neural maps by computing their similarity against mechanistically-informed neural maps. Here we used independently collected pharmacological fMRI maps from healthy adults in response to putative PSD receptor treatment targets (glutamate via ketamine and serotonin via LSD) (Preller et al., 2018; Anticevic et al., 2015). We also computed transcriptomic maps from the Allen Human Brain Atlas (AHBA) (Hawrylycz et al., 2012; Burt et al., 2018) for genes implicated in PSD.
The primary purpose of this study was to derive a robust and reproducible symptom-neural geometry across symptom domains in chronic PSD that can be resolved at the single-subject level and mechanistically linked to molecular benchmarks. This necessitated the development of novel methods and rigorous power analytics (as we found that existing multivariate methods are drastically underpowered [Helmer et al., 2020]), which we have also presented in the paper for full transparency and reproducibility. This approach can be iteratively improved upon to inform neural mechanisms underlying PSD symptom variation, and in turn applied to other psychiatric neuro-behavioral spectra.

To our knowledge, no study to date has mapped a data-reduced symptom geometry encompassing a transdiagnostic PSD cohort across combined cognitive and psychopathology symptom domains, with demonstrable statistical stability and reproducibility at the single subject level. Additionally, this symptom geometry can be mapped robustly onto neural data to achieve reproducible group-level effects as well as individual-level precision following neural feature selection. Furthermore, while other studies have evaluated relationships between neural maps and complementary molecular datasets (e.g. transcriptomics), no study to our knowledge has benchmarked single-subject selected neural maps using reproducible neurobehavioral features against both pharmacological neuroimaging and gene expression maps that may inform single subject selection for targeted treatment. Collectively, this study used data-driven dimensionality-reduction methods to derive reproducible symptom dimensions across 436 PSD patients and mapped them onto novel and robust neuroimaging features. These effects were then benchmarked against molecular imaging targets, outlining an actionable path toward personalized clinical neuro-behavioral endpoints.

Results

The key questions and results of this study are summarized in Appendix 1—table 5 and an overview of the workflow is presented in Appendix 1—figure 1. Additionally, for convenient reference, a glossary of the key terms and abbreviations used throughout the study is provided in Appendix 1—table 1.

Dimensionality-reduced PSD symptom variation is stable and reproducible

First, to evaluate PSD symptom variation, we examined core PSD psychopathology metrics captured by two instruments: the Brief Assessment of Cognition in Schizophrenia (BACS) and the Positive and Negative Syndrome Scale (PANSS). We refer to these 36 items as ‘symptom measures’ throughout the rest of the paper. We observed group mean differences across DSM diagnoses (Figure 1A, p<0.05 Bonferroni corrected); however, symptom measure distributions revealed notable overlap that crossed diagnostic boundaries (Tamminga et al., 2014; Keshavan et al., 2011). Furthermore, we observed marked collinearity between symptom measures across the PSD sample (Figure 1B), indicating that a dimensionality-reduced solution may sufficiently capture meaningful variation in this symptom space. We hypothesized that such a dimensionality-reduced symptom solution may improve PSD symptom-neural mapping as compared to pre-existing symptom scales.

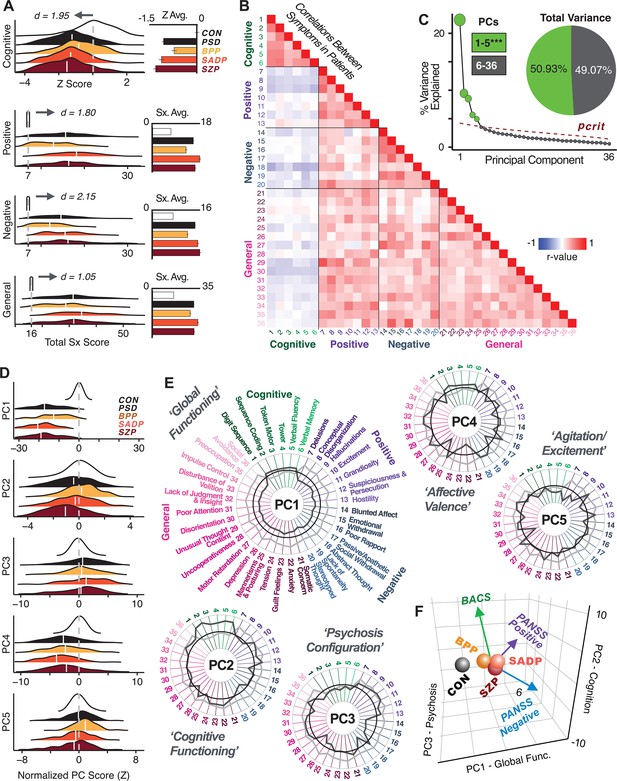

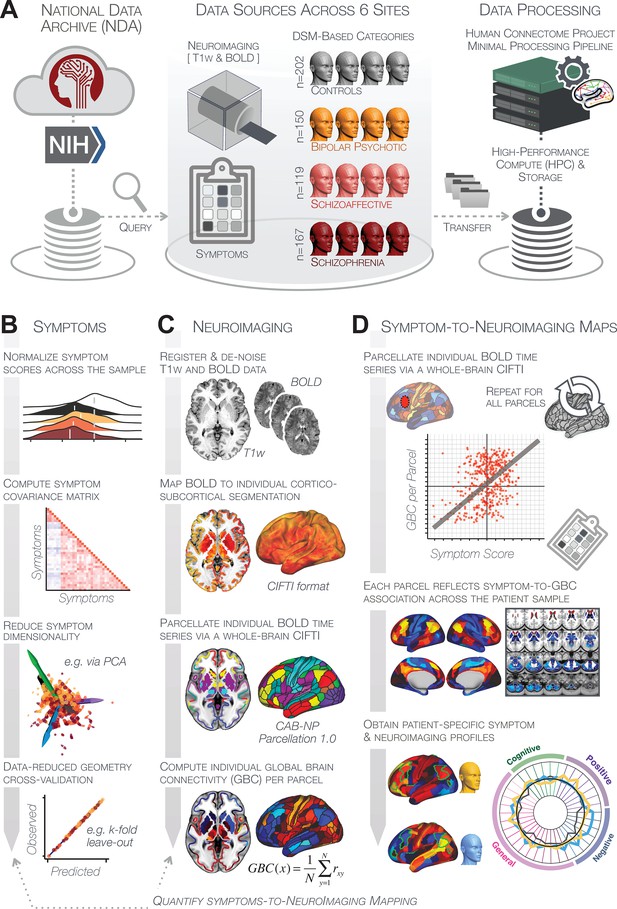

Quantifying data-driven low-dimensional variation of cross-diagnostic psychosis spectrum disorder (PSD) symptoms and cognitive deficits.

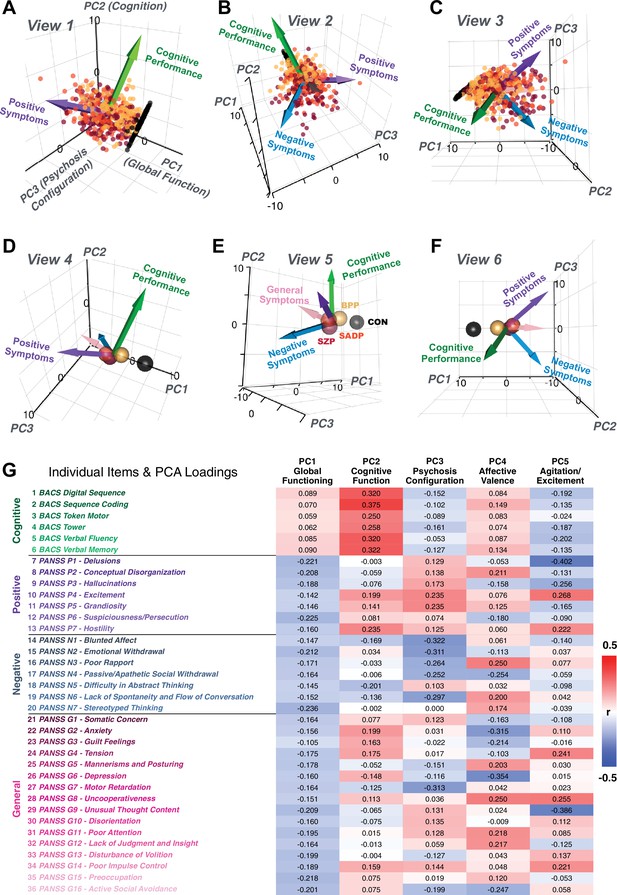

(A) Distributions of symptom scores for each of the DSM diagnostic groups across core psychosis symptom measures (PANSS positive, negative, and general symptoms tracking illness severity) and cognitive deficits (BACS composite cognitive performance). BPP: bipolar disorder with psychosis (yellow, N = 150); SADP: schizo-affective disorder (orange, N = 119); SZP: schizophrenia (red, N = 167); All PSD patients (black, N = 436); Controls (white, N = 202). Bar plots show group means; error bars show standard deviations. (B) Correlations between 36 symptom measures across all PSD patients (N = 436). (C) Screeplot shows the % variance explained by each of the principal components (PCs) from a PCA performed using all 36 symptom measures across 436 PSD patients. The size of each point is proportional to the variance explained. The first five PCs (green) survived permutation testing (p<0.05, 5000 permutations). Together they capture 50.93% of all symptom variance (inset). (D) Distribution plots showing subject scores for the five significant PCs for each of the clinical groups, normalized relative to the control group. Note that control subjects (CON) were not used to derive the PCA solution; however, all subjects, including CON, can be projected into the data-reduced symptom geometry. (E) Loading profiles shown in dark gray for the 36 PANSS/BACS symptom measures on the five significant PCs. Each PC (‘Global Functioning’, ‘Cognitive Functioning’, ‘Psychosis Configuration’, ‘Affective Valence’, ‘Agitation/Excitement’) was named based on the pattern of loadings on symptom measures. See Appendix 1—figure 2G for numerical loading values. The PSD group mean score on each symptom measure is also shown, in light gray (scaled to fit on the same radarplots). Note that the group mean configuration resembles the PC1 loading profile most closely (as PC1 explains the most variance in the symptom measures). (F) PCA solution shown in the coordinate space defined by the first three PCs. 
Colored arrows show a priori composite PANSS/BACS vectors projected into the PC1-3 coordinate space. The a priori composite symptom vectors do not directly align with data-driven PC axes, highlighting that PSD symptom variation is not captured fully by any one aggregate a priori symptom score. Spheres denote centroids (i.e. center of mass) for each of the patient diagnostic groups and control subjects. Alternative views showing individual patients and controls projected into the PCA solution are shown in Appendix 1—figure 2A-F.

Here, we report results from a principal component analysis (PCA) as it produces a deterministic solution with orthogonal axes (i.e. no a priori number of factors needs to be specified) and explains all variance in symptom measures. Results were highly consistent with prior symptom-reduction studies in PSD: we identified five significant PCs (Figure 1C), which together captured 50.93% of all variance (see Materials and methods and Appendix 1—figure 2; Chen et al., 2020).
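As an illustration of how a PCA with permutation-based selection of significant components could be set up, the following is a minimal sketch. It is not the authors' pipeline: the function name `pca_permutation_test`, the column-wise shuffling null, the number of permutations, and the synthetic data are all assumptions made for this example.

```python
import numpy as np

def pca_permutation_test(X, n_perm=200, seed=0):
    """Return variance explained per PC and a permutation p-value for each PC.

    X is a (subjects x measures) array. The null is built by shuffling each
    measure independently across subjects (an assumed null model).
    """
    rng = np.random.default_rng(seed)
    # z-score every measure so that each contributes equally to the PCA
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

    def variance_explained(M):
        s = np.linalg.svd(M - M.mean(axis=0), compute_uv=False)
        return s**2 / np.sum(s**2)

    observed = variance_explained(Xz)
    null = np.empty((n_perm, observed.size))
    for i in range(n_perm):
        # shuffling each column separately removes between-measure correlations
        shuffled = np.column_stack([rng.permutation(col) for col in Xz.T])
        null[i] = variance_explained(shuffled)
    # compare the k-th observed component with the k-th null component
    p_values = (1 + np.sum(null >= observed, axis=0)) / (n_perm + 1)
    return observed, p_values

# Synthetic stand-in data (hypothetical): 300 subjects, 12 measures, 2 latent factors
rng = np.random.default_rng(1)
latent = rng.normal(size=(300, 2))
weights = rng.normal(size=(2, 12))
X_demo = latent @ weights + 0.5 * rng.normal(size=(300, 12))
var_exp, p_vals = pca_permutation_test(X_demo)
```

Components whose p-value falls below the chosen threshold would be retained; the cumulative sum of `var_exp` over those components gives the total variance they capture.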

The key innovation here is the combined analysis across PSD diagnoses of core PSD symptoms and cognitive deficits, a fundamental PSD feature (Barch et al., 2013). The five PCs revealed few distinct boundaries between DSM categories (Figure 1D). Notably, for PCs 2-5 we observed substantial overlap in PC scores between controls and patients for all DSM diagnostic groups. This overlap is not unexpected, given that behaviors measured by the BACS and PANSS (e.g. mood, cognition) occur to some extent in ‘healthy’ individuals not meeting DSM diagnostic criteria (Verdoux and van Os, 2002; Nuevo et al., 2012; Kelleher and Cannon, 2011; Stefanis et al., 2002). In contrast, PC1 showed marked differentiation between PSD and CON, reflecting global functioning which was reduced across DSM diagnostic groups.

Figure 1E highlights loading configurations of symptom measures forming each PC. To aid interpretation, we assigned a name for each PC based on its most strongly weighted symptom measures. This naming is qualitative but informed by the pattern of loadings of the original 36 symptom measures. For example, PC1 was highly consistent with a general impairment dimension (i.e. ‘Global Functioning’); PC2 reflected variation in cognition (i.e. ‘Cognitive Functioning’); PC3 indexed a complex configuration of psychosis-spectrum relevant items (i.e. ‘Psychosis Configuration’); PC4 captured variation in mood and anxiety related items (i.e. ‘Affective Valence’); finally, PC5 reflected variation in arousal and level of excitement (i.e. ‘Agitation/Excitation’). For instance, a generally impaired patient would have a highly negative PC1 score, which would reflect low performance on cognition and elevated scores on most other symptoms. Conversely, an individual with a high positive PC3 score would exhibit delusional, grandiose, and/or hallucinatory behavior, whereas a person with a negative PC3 score would exhibit motor retardation, social avoidance, and possibly a withdrawn emotional state with blunted affect (Gelenberg, 1976). Comprehensive loadings for all five PCs are shown in Appendix 1—figure 1. Figure 1F highlights the mean of each of the three diagnostic groups (colored spheres) and healthy controls (black sphere) projected into a three-dimensional orthogonal coordinate system for PCs 1,2 and 3 (x,y,z axes respectively; alternative views of the three-dimensional coordinate system with all patients projected are shown in Appendix 1—figure 1). Critically, PC axes were not parallel with traditional aggregate symptom scales. For instance, PC3 is angled at ∼45° to the dominant direction of PANSS Positive and Negative symptom variation (purple and blue arrows respectively in Figure 1F).

PC3 loads most strongly onto hallmark symptoms of PSD (including strong positive loadings onto PANSS Positive symptoms and strong negative loadings onto most PANSS Negative symptoms). Therefore, we focus on PC3 as an opportunity to quantify a fully data-driven dimension of symptom variation that is highly characteristic of the PSD patient population. Additionally, this bi-directional symptom axis captured shared variance from additional symptoms, such as PANSS General items and cognition. PC3 provides an empirical demonstration that using a data-driven dimensionality-reduced solution (via PCA) can reveal novel symptom patterns underlying PSD psychopathology.

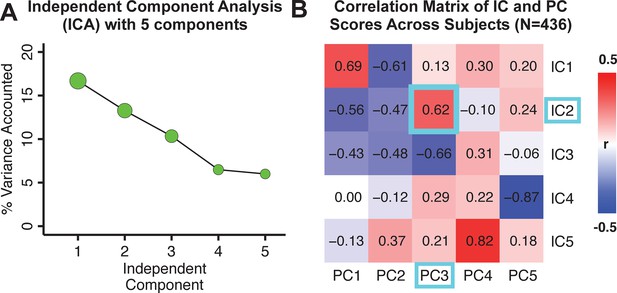

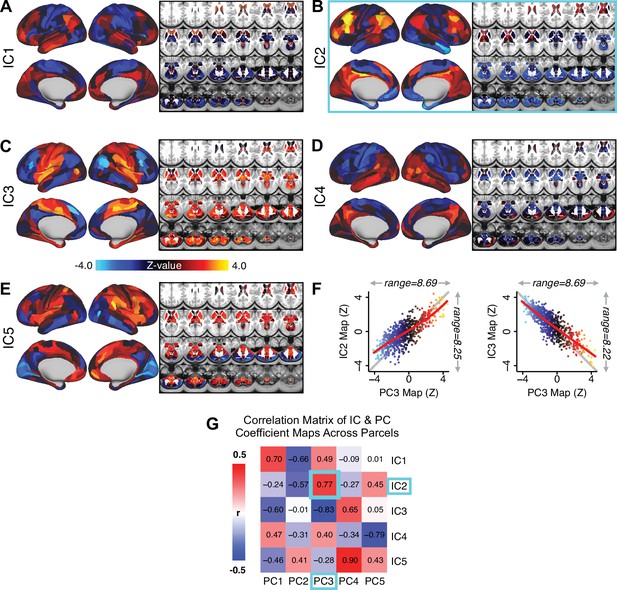

Notably, independent component analysis (ICA), an alternative dimensionality reduction procedure which does not enforce component orthogonality, produced similar effects for this PSD sample (see Appendix 1 - Note 1 and Appendix 1—figure 3A). Certain pairs of components between the PCA and ICA solutions appear to be highly similar and directly comparable (IC5 and PC4; IC4 and PC5) (Appendix 1—figure 3B). On the other hand, PCs 1–3 and ICs 1–3 do not exhibit a one-to-one mapping. For example, PC3 appears to correlate positively with IC2 and equally strongly negatively with IC3, suggesting that these two ICs are oblique to the PC and perhaps reflect symptom variation that is explained by a single PC. The orthogonality of the PCA solution forces the resulting components to capture maximally separated, unique symptom variance, which in turn maps robustly onto unique neural circuits. We observed that the data may be distributed in such a way that highly correlated independent components emerge in the ICA, which do not maximally separate the symptom variance associated with neural variance. We demonstrate this by plotting the relationship between parcel beta coefficients for the PC3 map versus the IC2 and IC3 maps (Appendix 1—figure 11G).

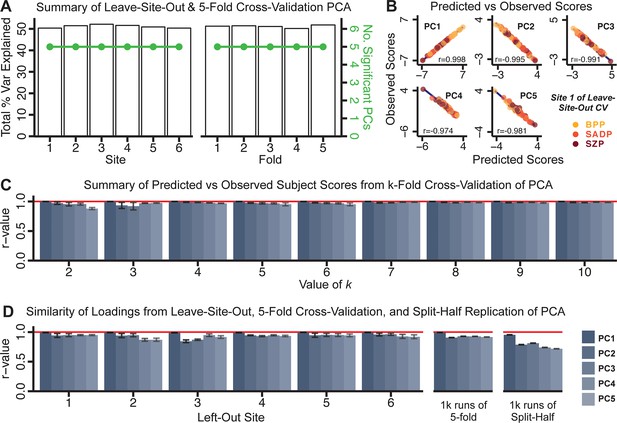

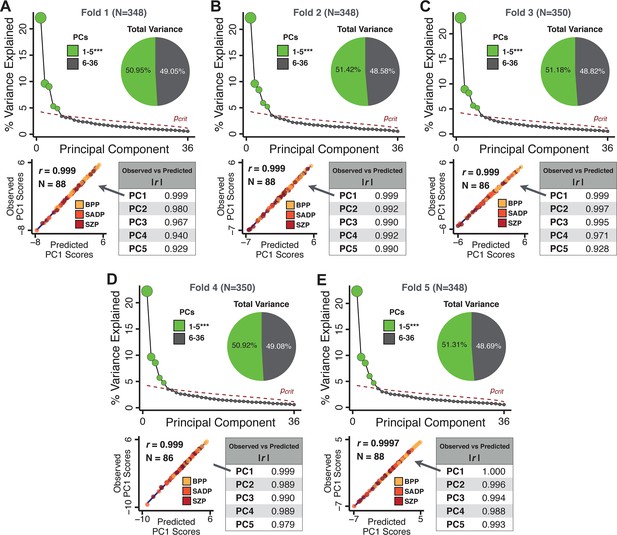

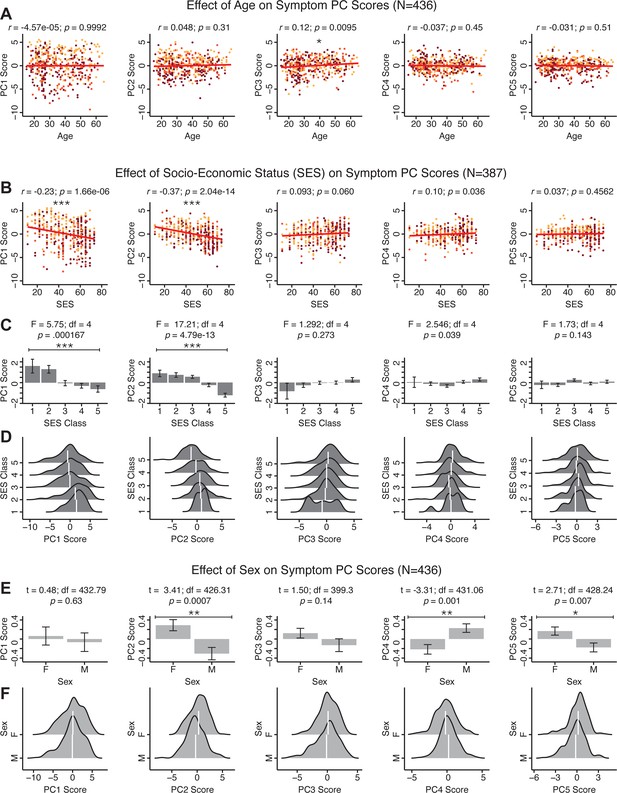

Next, we show that the PCA solution was highly stable when tested across sites and k-fold cross-validations, and was reproducible in independent split-half samples. First, we show that the symptom-derived PCA solution remained highly robust across all sites (Figure 2A) and 5-fold cross-validation iterations (see Materials and methods). The total proportion of variance explained as well as the total number of significant PCs remained stable (Figure 2A). Second, PCA loadings accurately and reliably computed the scores of subjects in a hold-out sample (Figure 2B). Specifically, for each left-out site, we tested if single-subject predicted scores were similar to the originally observed scores of these same subjects in the full PCA (i.e. performed with all 436 subjects). This similarity was high for all sites (Appendix 1—figure 4). We also verified that the predicted-versus-observed PCA scores remained similar via k-fold cross-validation (for k = 2 to 10, Figure 2C and Appendix 1—figure 3). Finally, across all five PCs, predicted-to-observed similarity of PCA loadings was very high. Moreover, PCA loadings were stable when testing via leave-site-out analysis and 5-fold cross-validation. We furthermore demonstrated the reproducibility of the solution using 1000 independent split-half replications (Figure 2D). For each run of split-half replication, PSD subjects were split into two samples and a PCA was performed independently for each sample. The loadings from both PCAs were then compared using Pearson’s correlation. Importantly, results were not driven by medication status or dosage (Appendix 1—figure 6). Collectively, these data reduction analyses strongly support a stable and reproducible low-rank PSD symptom geometry.
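The two stability checks described above (held-out score prediction and split-half loading comparison) can be sketched as follows. This is a simplified illustration under stated assumptions, not the study's code: the helper names (`fit_pca`, `project`, `heldout_score_similarity`, `split_half_loading_similarity`) are hypothetical, components are matched by rank order, and absolute correlations are used because the sign of a PC is arbitrary.

```python
import numpy as np

def fit_pca(X, n_components):
    """Fit a PCA on z-scored measures; return the means, SDs, and loadings."""
    mu, sd = X.mean(axis=0), X.std(axis=0, ddof=1)
    _, _, Vt = np.linalg.svd((X - mu) / sd, full_matrices=False)
    return mu, sd, Vt[:n_components].T      # loadings: measures x components

def project(X, mu, sd, loadings):
    """Compute PC scores for subjects using a previously fitted solution."""
    return ((X - mu) / sd) @ loadings

def heldout_score_similarity(X, test_idx, n_components=5):
    """Per PC, correlate scores predicted from a training-only PCA with
    the scores the same held-out subjects obtain in the full-sample PCA."""
    train_idx = np.setdiff1d(np.arange(len(X)), test_idx)
    observed = project(X[test_idx], *fit_pca(X, n_components))
    predicted = project(X[test_idx], *fit_pca(X[train_idx], n_components))
    return np.array([abs(np.corrcoef(observed[:, k], predicted[:, k])[0, 1])
                     for k in range(n_components)])

def split_half_loading_similarity(X, n_components=5, seed=0):
    """Run an independent PCA in each random half and correlate the loadings."""
    perm = np.random.default_rng(seed).permutation(len(X))
    h1, h2 = perm[: len(X) // 2], perm[len(X) // 2:]
    l1 = fit_pca(X[h1], n_components)[2]
    l2 = fit_pca(X[h2], n_components)[2]
    return np.array([abs(np.corrcoef(l1[:, k], l2[:, k])[0, 1])
                     for k in range(n_components)])

# Synthetic stand-in data (hypothetical): 400 subjects, 10 measures, 2 factors
rng = np.random.default_rng(0)
w1 = np.array([1, 1, 1, 1, 1, -1, -1, -1, -1, -1], dtype=float)
w2 = np.array([1, -1, 1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
f = rng.normal(size=(400, 2))
X_demo = 2 * np.outer(f[:, 0], w1) + np.outer(f[:, 1], w2) + rng.normal(size=(400, 10))
score_r = heldout_score_similarity(X_demo, test_idx=np.arange(80), n_components=2)
loading_r = split_half_loading_similarity(X_demo, n_components=2)
```

Repeating `split_half_loading_similarity` over many seeds and averaging would approximate the repeated split-half procedure; a leave-site-out variant would pass the indices of one site as `test_idx`.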

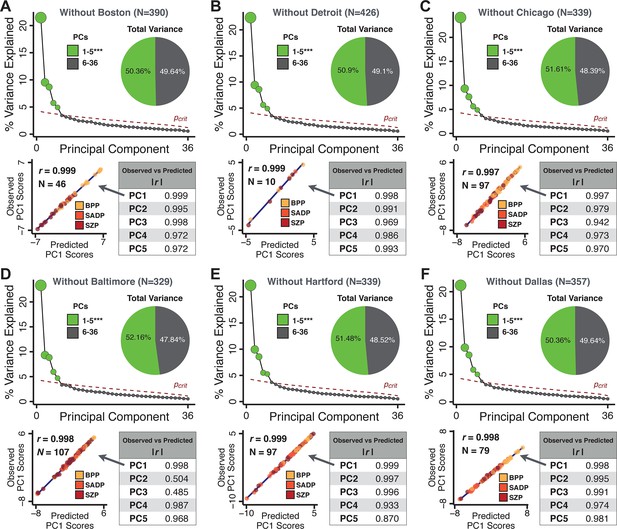

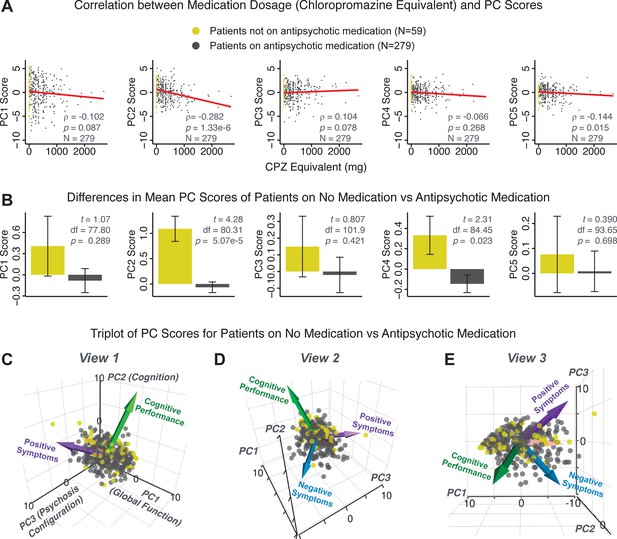

Dimensionality reduction of PSD symptom measures is highly stable and reproducible.

(A) PCA solutions for leave-one-site-out cross-validation (left) and 5-fold bootstrapping (right) explain a consistent total proportion of variance with a consistent number of significant PCs after permutation testing. Full results available in Appendix 1—figure 4 and Appendix 1—figure 5. (B) Predicted versus observed single subject PC scores for all five PCs are shown for an example site (shown here for Site 1). (C) Mean correlations between predicted and observed PC scores across all patients calculated via k-fold bootstrapping for k = 2–10. For each k iteration, patients were randomly split into k folds. For each fold, a subset of patients was held out and PCA was performed on the remaining patients. Predicted PC scores for the held-out sample were computed from the PCA obtained from the retained samples. Original observed PC scores for the held-out sample were then correlated with the predicted PC scores derived from the retained sample. (D) Mean correlations between predicted and observed symptom measure loadings are shown for leave-one-site-out cross-validation (left), across 1000 runs of 5-fold cross-validation (middle), and 1000 split-half replications (right). For split-half replication, loadings were compared between the PCA performed in each independent half sample. Note: for panels C-D, correlation values were averaged across all k runs, all six leave-site-out runs, or all 1000 runs of the fivefold cross-validation and split-half replications. Error bars indicate the standard error of the mean.

Dimensionality-reduced PSD symptom geometry reveals novel and robust neurobehavioral relationships

Next, we tested if the dimensionality-reduced symptom geometry can identify robust and novel patterns of neural variation across PSD patients. We opted to use global brain connectivity (GBC), a summary FC metric, to measure neural covariance because it yields a parsimonious measure reflecting how globally coupled an area is to the rest of the brain (Cole et al., 2010; see Materials and methods). Furthermore, we selected GBC because: (i) the metric is agnostic regarding the location of dysconnectivity as it weights each area equally; (ii) it yields an interpretable dimensionality-reduction of the full FC matrix; (iii) unlike the full FC matrix or other abstracted measures, GBC produces a neural map, which can be related to other independent neural maps (e.g. gene expression or pharmacology maps, discussed below). Furthermore, GBC has been shown to be sensitive to altered patterns of global neural connectivity in PSD cohorts (Anticevic et al., 2013; Fornito et al., 2011; Hahamy et al., 2014; Cole et al., 2011) as well as in models of psychosis (Preller et al., 2018; Driesen et al., 2013).
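To make the GBC metric concrete, here is a minimal sketch that assumes GBC is computed as the mean Fisher z-transformed Pearson correlation between each area's time series and every other area's time series. The exact preprocessing and definition are given in the Materials and methods, so the function name `global_brain_connectivity` and the details below are assumptions for illustration only.

```python
import numpy as np

def global_brain_connectivity(ts):
    """ts: (n_areas, n_timepoints) array. Returns one GBC value per area."""
    fc = np.corrcoef(ts)                 # area-by-area Pearson FC matrix
    np.fill_diagonal(fc, np.nan)         # exclude each area's self-connection
    z = np.arctanh(fc)                   # Fisher r-to-z transform
    return np.nanmean(z, axis=1)         # mean coupling with all other areas

# Synthetic stand-in data (hypothetical): areas 0-3 share a common signal,
# areas 4-5 are independent noise
rng = np.random.default_rng(0)
shared = rng.normal(size=500)
ts_demo = rng.normal(size=(6, 500))
ts_demo[:4] += 2 * shared
gbc_demo = global_brain_connectivity(ts_demo)
```

Because every other area contributes equally to the average, the result is a single value per area, which is what allows GBC to be displayed as a brain map and compared with other maps.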

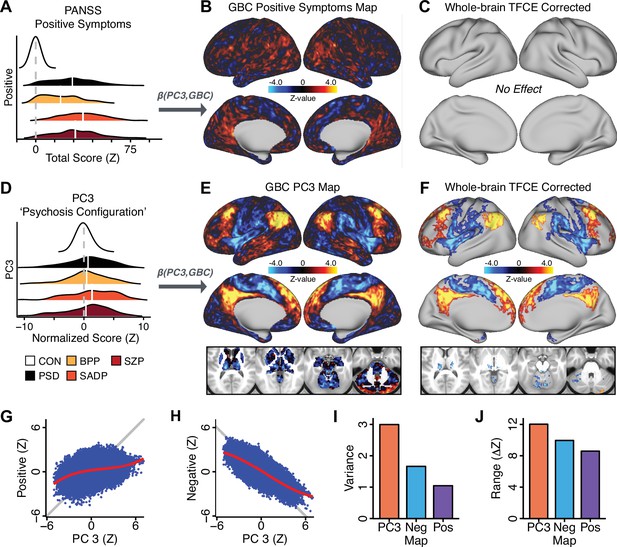

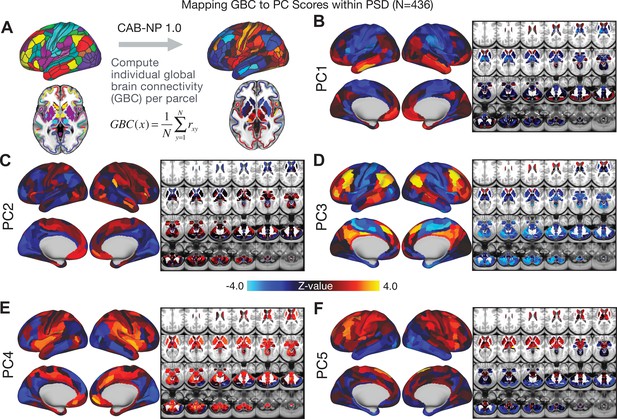

All five PCs captured unique patterns of GBC variation across the PSD sample (Appendix 1—figure 9), which were not observed in CON (Appendix 1—figure 10). Again we highlight the hallmark ‘Psychosis Configuration’ dimension (i.e. PC3), here to illustrate the benefit of the low-rank PSD symptom geometry for symptom-neural mapping relative to traditional aggregate PANSS symptom scales. The relationship between total PANSS Positive scores and GBC across N = 436 PSD patients (Figure 3A) was statistically modest (Figure 3B) and no areas survived whole-brain type-I error protection (Figure 3C, p<0.05). In contrast, regressing PC3 scores onto GBC across N = 436 patients revealed a robust symptom-neural map (Figure 3E–F), which survived whole-brain type-I error protection. Of note, the PC3 ‘Psychosis Configuration’ axis is bi-directional whereby individuals who score either highly positively or negatively are symptomatic. Therefore, a high positive PC3 score was associated with both reduced GBC across insular and superior dorsal cingulate cortices, thalamus, and anterior cerebellum and elevated GBC across precuneus, medial prefrontal, inferior parietal, superior temporal cortices, and posterior lateral cerebellum – consistent with the default-mode network (Fox et al., 2005). A high negative PC3 score would exhibit the opposite pattern. Critically, this robust symptom-neural mapping emerged despite no differences between mean diagnostic group PC3 scores (Figure 3D). These two diverging directions may be captured separately in the ICA solution, when orthogonality is not enforced (Appendix 1—figure 12). Moreover, the PC3 symptom-neural map exhibited improved statistical properties relative to other GBC maps computed from traditional aggregate PANSS symptom scales (Figure 3G–J).
Notably, the PC2 – Cognitive Functioning dimension, which captured a substantial proportion of cognitive performance-related symptom variance independent of other symptom axes, revealed a circuit that was moderately (anti-)correlated with other PC maps but strongly anti-correlated with the BACS composite cognitive deficit map (r = −0.81, Appendix 1—figure 8O). This implies that the PC2 map reflects unique neural circuit variance that is relevant for cognition, independent of the other PC symptom dimensions.

Dimensionality-reduced symptom variation reveals robust neurobehavioral mapping.

(A) Distributions of total PANSS Positive symptoms for each of the clinical diagnostic groups normalized relative to the control group (white = CON; black = all PSD patients; yellow = BPP; orange = SADP; red = SZP). (B) Map showing the relationship between the aggregate PANSS Positive symptom score for each patient regressed onto global brain connectivity (GBC) across all patients (N = 436). (C) No regions survived non-parametric family-wise error (FWE) correction at p<0.05 using permutation testing with threshold-free cluster enhancement (TFCE). (D) Distributions of scores for PC3 ‘Psychosis Configuration’ across clinical groups, again normalized to the control group. (E) Map showing the relationship between the PC3 ‘Psychosis Configuration’ score for each patient regressed onto GBC across all patients (N = 436). (F) Regions surviving p<0.05 FWE whole-brain correction via TFCE showed clear and robust effects. (G) Comparison between the Psychosis Configuration symptom score versus the aggregate PANSS Positive symptom score GBC map for every datapoint in the neural map (i.e. greyordinate in the CIFTI map). The sigmoidal pattern indicates an improvement in the Z-statistics for the Psychosis Configuration symptom score map (panel E) relative to the aggregate PANSS Positive symptom map (panel B). (H) A similar effect was observed when comparing the Psychosis Configuration GBC map relative to the PANSS Negative symptoms GBC map (Appendix 1—figure 8). (I) Comparison of the variances for the Psychosis Configuration, PANSS Negative and PANSS Positive symptom map Z-scores. (J) Comparison of the ranges between the Psychosis Configuration, Negative and Positive symptom map Z-scores. Symptom-neural maps for all five PCs and all four traditional symptom scales (BACS and PANSS subscales) are shown in Appendix 1—figure 8.

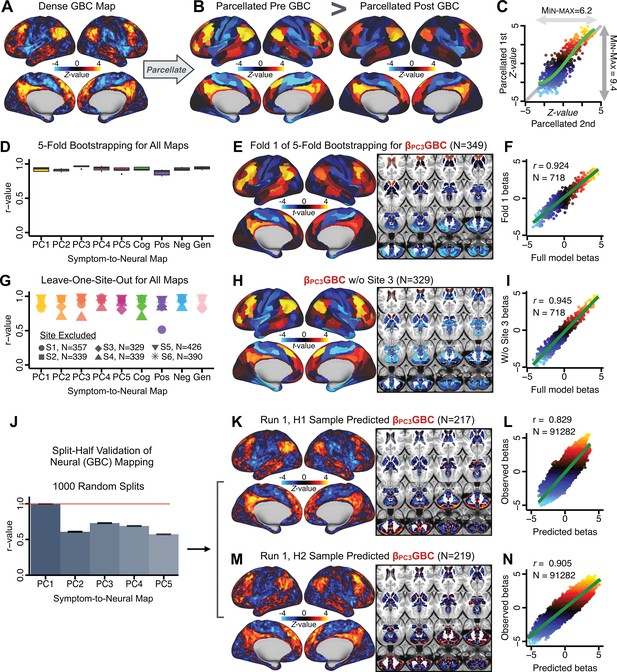

Univariate neurobehavioral map of psychosis configuration is reproducible

After observing improved symptom-neural PC3 statistics, we tested if these neural maps replicate. Recent attempts to derive stable symptom-neural mapping using multivariate techniques, while inferentially valid, have not replicated (Dinga et al., 2019). This fundamentally relates to the tradeoff between the sample size needed to resolve multivariate neurobehavioral solutions and the size of the feature space. To mitigate the feature size issue we re-computed the maps using a functionally-derived whole-brain parcellation via the recently-validated CAB-NP atlas (Glasser et al., 2016; Ji et al., 2019b; Materials and methods). Here, a functional parcellation is a principled way of neural feature reduction (to 718 parcels) that can also appreciably boost signal-to-noise (Glasser et al., 2016; Ji et al., 2019b). Indeed, parcellating the full-resolution ‘dense’ resting-state signal for each subject prior to computing GBC statistically improved the group-level symptom-neural maps compared to parcellating after computing GBC (Figure 4A–C, all maps in Appendix 1—figure 9). Results demonstrate that the univariate symptom-neural mapping was indeed stable across fivefold bootstrapping, leave-site-out, and split-half cross-validations (Figure 4D–N, see Appendix 1 - Note 2), yielding consistent symptom-neural PC3 maps. Importantly, the symptom-neural maps computed using ICA showed comparable results (Appendix 1—figure 12).
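The univariate mapping and its split-half replication can be sketched as below. This is an illustrative simplification rather than the study's implementation: it assumes each parcel's GBC is modeled as a linear function of a z-scored symptom score with no covariates, and the names `symptom_neural_map` and `split_half_map_similarity` are hypothetical. Inference with permutation testing and TFCE is not shown.

```python
import numpy as np

def symptom_neural_map(gbc, score):
    """gbc: (n_subjects, n_parcels); score: (n_subjects,).
    Returns the least-squares slope of GBC on the z-scored score per parcel."""
    x = (score - score.mean()) / score.std(ddof=1)
    y = gbc - gbc.mean(axis=0)
    return x @ y / (x @ x)               # one slope for every parcel at once

def split_half_map_similarity(gbc, score, seed=0):
    """Estimate the map independently in two random halves and correlate
    the two maps across parcels."""
    perm = np.random.default_rng(seed).permutation(len(score))
    h1, h2 = perm[: len(perm) // 2], perm[len(perm) // 2:]
    m1 = symptom_neural_map(gbc[h1], score[h1])
    m2 = symptom_neural_map(gbc[h2], score[h2])
    return np.corrcoef(m1, m2)[0, 1]

# Synthetic stand-in data (hypothetical): 400 subjects, 50 parcels
rng = np.random.default_rng(0)
true_map = rng.normal(size=50)
score_demo = rng.normal(size=400)
gbc_demo = np.outer(score_demo, true_map) + rng.normal(size=(400, 50))
est_map = symptom_neural_map(gbc_demo, score_demo)
r_split = split_half_map_similarity(gbc_demo, score_demo)
```

Averaging `r_split` over many random splits gives a summary of map reproducibility; leaving out one site or one fold instead of half the sample follows the same pattern.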

Parcellated symptom-neural GBC maps reflecting psychosis configuration are statistically robust and reproducible.

(A) Z-scored PC3 Psychosis Configuration GBC neural map at the ‘dense’ (full CIFTI resolution) level. (B, C) Neural data parcellated using a whole-brain functional partition (Ji et al., 2019b) before computing subject-level GBC yielded stronger statistical values in the Z-scored Psychosis Configuration GBC neural map as compared to when parcellation was performed after computing GBC for each subject. (D) Summary of similarities between all symptom-neural maps (PCs and traditional symptom scales) across fivefold cross-validation. Boxplots show the range of r values between maps for each fold and the full model. (E) Normalized map from regression of individual patients’ PC3 scores onto parcellated GBC data, shown here for a subset of patients from Fold 1 out of 5 (N = 349). The greater the magnitude of the coefficient for a parcel, the stronger the statistical relationship between GBC of that parcel and PC3 score. (F) Correlation between the value of each parcel in the regression model computed using patients in Fold 1 and the full PSD sample (N = 436) model indicates that the leave-one-fold-out map was highly similar to the map obtained from the full PSD sample model (r = 0.924). (G) Summary of leave-one-site-out regression for all symptom-neural maps. Regression of PC symptom scores onto parcellated GBC data, each time leaving out subjects from one site, resulted in highly similar maps. This highlights that the relationship between PC3 scores and GBC is robust and not driven by a specific site. (H) Map for all PSD patients except those from one site. As an example, Site 3 is excluded here given that it recruited the most patients (and therefore may have the greatest statistical impact on the full model). (I) Correlation between the value of each parcel in the regression model computed using all patients minus Site 3, and the full PSD sample model. (J) Split-half replication of the map. Bar plots show the mean correlation across 1000 runs; error bars show standard error.
Note that the split-half effect for PC1 was exceptionally robust. The split-half consistency for PC3, while lower, was still highly robust and well above chance. (K) Map from PC3-to-GBC regression for the first half (H1) patients, shown here for one exemplar run out of 1000 split-half validations. (L) Correlation across 718 parcels between the H1 predicted coefficient map (i.e. panel K) and the observed coefficient map for H1. (M–N) The same analysis as K-L is shown for patients in H2, indicating a striking consistency in the Psychosis Configuration map correspondence.

A multivariate PSD neurobehavioral solution can be computed but is not reproducible with the present sample size

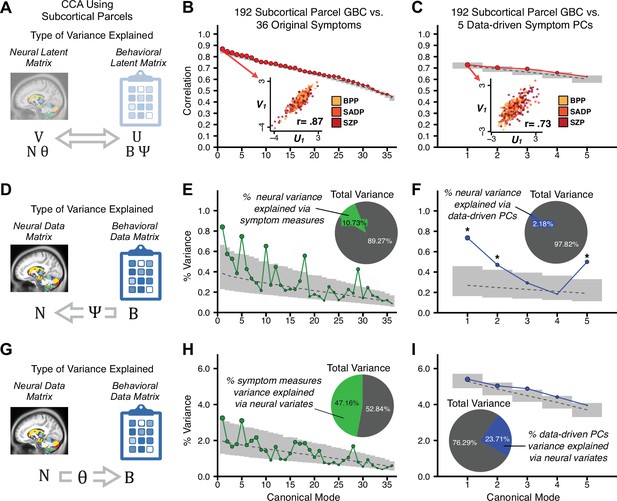

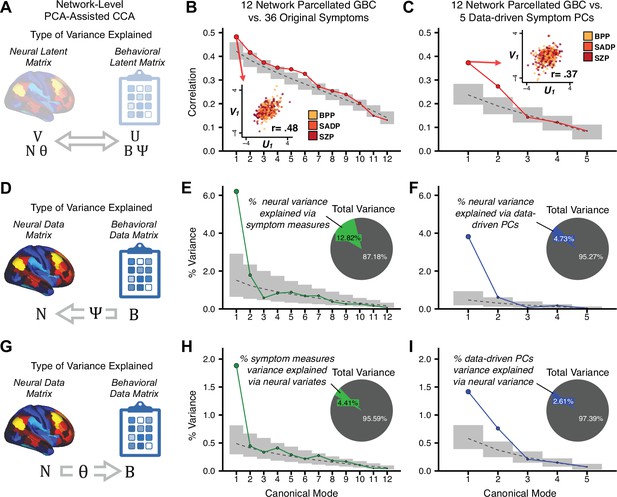

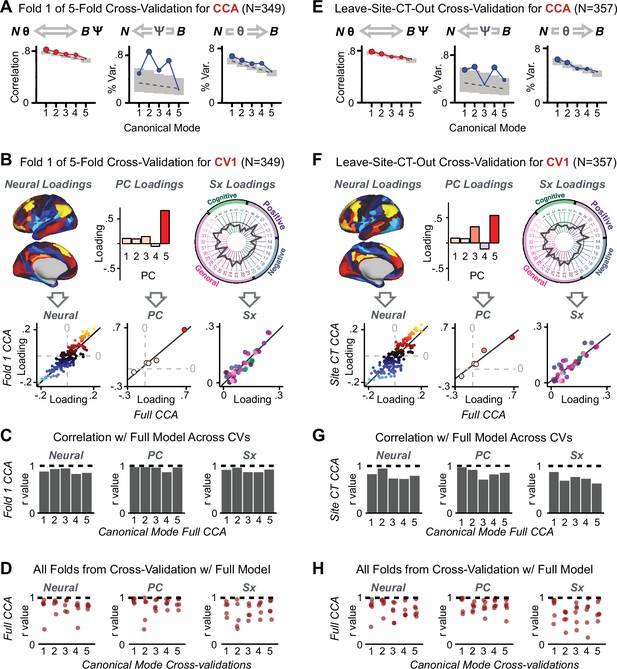

Several recent studies have reported ‘latent’ neurobehavioral relationships using multivariate statistics (Xia et al., 2018; Drysdale et al., 2017; Yu et al., 2019), which would be preferable because they simultaneously solve for maximal covariation across neural and behavioral features. Given the possibility of deriving a stable multivariate effect, here we tested if results improve with canonical correlation analysis (CCA) (Hardoon et al., 2004; Figure 5A).

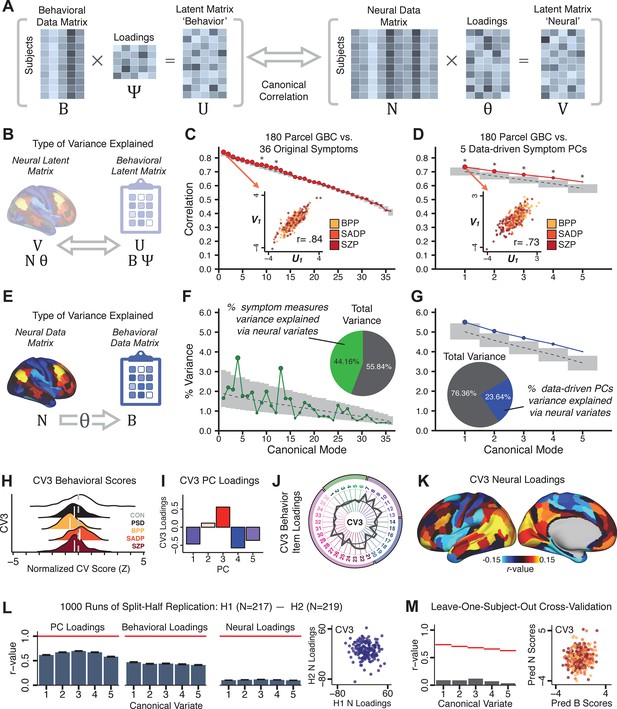

Multivariate symptom-neural feature mapping using canonical correlation analysis (CCA).

(A) Schematic of CCA data (B, N), transformation (, ), and transformed ‘latent’ (U, V) matrices. Here, each column in U and V is referred to as a canonical variate (CV); each corresponding pair of CVs (e.g. U1 and V1) is referred to as a canonical mode. (B) CCA maximized correlations between the CVs (U and V). (C) Screeplot showing canonical modes obtained from 180 neural features (cortical GBC symmetrized across hemispheres) and 36 symptom measures (‘180 vs. 36 CCA’). Inset illustrates the correlation (r = 0.85) between the CVs of the first mode, U1 and V1 (note that the correlation was not driven by a separation between diagnoses). Modes 9 and 12 remained significant after FDR correction. (D) CCA computed with 180 neural features and five PC symptom features (‘180 vs. 5 CCA’). Here, all modes remained significant after FDR correction. Dashed black line shows the null calculated via a permutation test with 5000 shuffles; gray bars show 95% confidence interval. (E) Correlation between B and N reflects how much of the symptom variation can be explained by the latent neural features. (F) Proportion of symptom variance explained by each of the neural CVs in the 180 vs. 36 CCA. Inset shows the total proportion of behavioral variance explained by the neural CVs. (G) Proportion of total symptom variance explained by each of the neural CVs in the 180 vs. 5 CCA. While CCA using symptom PCs has fewer dimensions and thus accounts for lower total variance (see inset), each neural variate explains a higher amount of symptom variance than in F, suggesting that CCA could be optimized by first obtaining a low-rank symptom solution. Dashed black line indicates the null calculated via a permutation test with 5000 shuffles; gray bars show 95% confidence interval. Neural variance explained by symptom CVs is plotted in Appendix 1—figure 15. (H) Distributions of CV3 scores from the 180 vs. 5 CCA are shown here as an example of characterizing CV configurations. 
Scores for all diagnostic groups are normalized to CON. Additionally, (I) symptom canonical factor loadings, (J) loadings of the original 36 symptom measures, and (K) neural canonical factor loadings for CV3 are shown. (L) Within-sample CCA cross-validation appeared robust (see Appendix 1—figure 17). However, a split-half replication of the 180 vs. 5 CCA (using two independent non-overlapping samples) was not reliable. Bar plots show the mean correlation for each CV between the first half (H1) and the second half (H2) CCA, each computed across 1000 runs. Left: split-half replication of the symptom PC loadings matrix ; Middle: individual symptom measure loadings; Right: the neural loadings matrix , which in particular was not stable. Error bars show the standard error of the mean. Scatterplot shows the correlation between CV3 neural loadings for H1 vs. H2 for one example CCA run, illustrating lack of reliability. (M) Leave-one-subject-out cross-validation further highlights CCA instability.

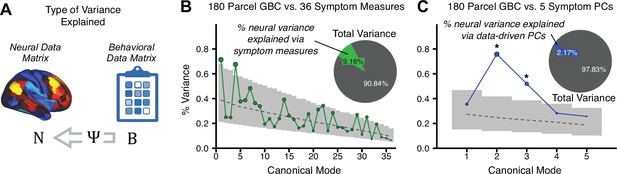

To evaluate if the number of neural features affects the solution, we computed CCA using GBC from: (i) 180 symmetrized cortical parcels; (ii) 359 bilateral subcortex-only parcels; (iii) 192 symmetrized subcortical parcels; (iv) 12 functional networks, all from the brain-wide cortico-subcortical CAB-NP parcellation (Glasser et al., 2016; Ji et al., 2019b). We did not compute a solution using 718 bilateral whole-brain parcels, as this exceeded the number of samples and rendered the CCA insolvable. Notably, the 359 subcortex-only solution did not produce stable results according to any criterion, whereas the 192 symmetrized subcortical features (Appendix 1—figure 13) and 12 network-level features (Appendix 1—figure 14) solutions captured statistically modest effects relative to the 180 symmetrized cortical features (Figure 5B–D). Therefore, we characterized the 180-parcel solution further. We examined two CCA solutions using these 180 neural features in relation to symptom scores: (i) all 36 item-level symptom measure scores from the PANSS/BACS (‘180 vs. 36 CCA’, Figure 5C,F); and (ii) five PC symptom scores (‘180 vs. 5 CCA’, Figure 5D,G, see Materials and methods). Only 2 out of 36 modes for the 180 vs. 36 CCA solution survived permutation testing and false discovery rate (FDR) correction (Figure 5C). In contrast, all 5 modes of the 180 vs. 5 CCA survived (Figure 5D). Critically, we found that no single CCA mode computed on item-level symptom measures captured more variance than the CCA modes derived from PC symptom scores, suggesting that the PCA-derived dimensions capture more neurally relevant variation than any one single clinical item (Figure 5E–G). Additional CCA details are presented in Appendix 1 - Note 3 and Appendix 1—figure 15.
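The mode-wise permutation test and FDR correction described above can be illustrated with a minimal NumPy sketch. This is not the authors' pipeline: the QR-plus-SVD route to the canonical correlations, the function names, the mode-wise comparison against the shuffled null, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def canonical_correlations(X, Y):
    """Canonical correlations of two subjects-by-features matrices (QR + SVD)."""
    Qx, _ = np.linalg.qr(X - X.mean(axis=0))
    Qy, _ = np.linalg.qr(Y - Y.mean(axis=0))
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return np.clip(s, 0.0, 1.0)

def permutation_pvalues(X, Y, n_perm=200, seed=0):
    """Mode-wise p-values: how often a row-shuffled Y matches or beats each mode."""
    rng = np.random.default_rng(seed)
    observed = canonical_correlations(X, Y)
    exceed = np.zeros_like(observed)
    for _ in range(n_perm):
        shuffled = Y[rng.permutation(len(Y))]  # breaks the subject pairing
        exceed += canonical_correlations(X, shuffled) >= observed
    return observed, (exceed + 1) / (n_perm + 1)

def benjamini_hochberg(pvals, alpha=0.05):
    """Boolean mask of modes that survive Benjamini-Hochberg FDR correction."""
    p = np.asarray(pvals, dtype=float)
    order = np.argsort(p)
    passed = p[order] <= alpha * np.arange(1, p.size + 1) / p.size
    n_reject = np.nonzero(passed)[0].max() + 1 if passed.any() else 0
    reject = np.zeros(p.size, dtype=bool)
    reject[order[:n_reject]] = True
    return reject

# Toy data (hypothetical): 120 subjects, 10 neural and 3 symptom features,
# with one planted shared dimension.
rng = np.random.default_rng(1)
neural = rng.normal(size=(120, 10))
symptoms = rng.normal(size=(120, 3))
symptoms[:, 0] = neural[:, 0] + 0.5 * rng.normal(size=120)
r, p = permutation_pvalues(neural, symptoms)
significant = benjamini_hochberg(p)
```

A significant mode in this in-sample test says nothing about whether the loadings replicate in new subjects, which is the question addressed by the split-half analysis.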

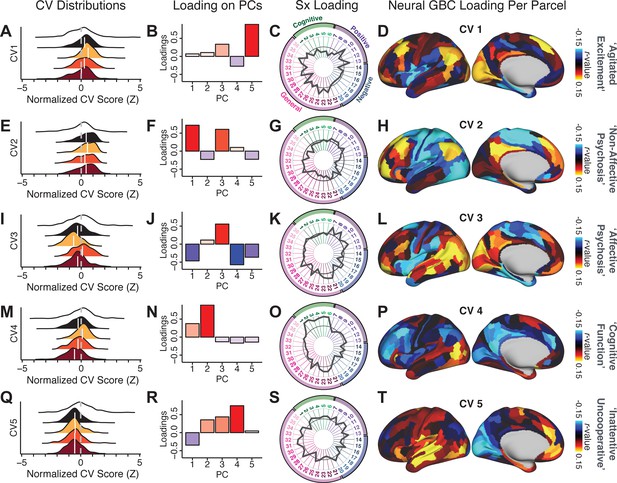

We highlight here an example canonical variate, CV3, across both symptom and neural effects, with data for all CVs shown in Appendix 1—figure 16. CV3 scores across diagnostic groups, normalized to controls, are shown in Figure 5H, and the loadings of CV3 onto each PC are shown in Figure 5I. The negative loadings onto PCs 1, 4, and 5 and the high positive loading onto PC3 in Figure 5I indicate that CV3 captures some shared variation across symptom PCs. This can also be visualized by computing how CV3 projects onto the original 36 symptom measures (Figure 5J). Finally, the neural loadings for CV3 are shown in Figure 5K.

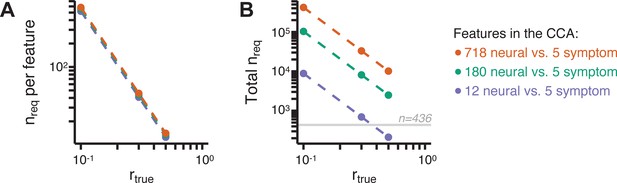

Lastly, we tested if the 180 vs. 5 CCA solution is stable and reproducible, as done with PC-to-GBC univariate results. The CCA solution was robust when tested with k-fold and leave-site-out cross-validation (Appendix 1—figure 17) likely because these methods use CCA loadings derived from the full sample. However, the CCA loadings did not replicate in non-overlapping split-half samples (Figure 5L, see Appendix 1 - Note 4). Moreover, a leave-one-subject-out cross-validation revealed that removing a single subject from the sample affected the CCA solution such that it did not generalize to the left-out subject (Figure 5M). This is in contrast to the PCA-to-GBC univariate mapping, which was substantially more reproducible for all attempted cross-validations relative to the CCA approach. This is likely because substantially more power is needed to resolve a stable multivariate neurobehavioral effect with this many features (Dinga et al., 2019). Indeed, a multivariate power analysis using 180 neural features and five symptom features and assuming a true canonical correlation of suggests that a minimal sample size of is needed to sufficiently detect the effect (Helmer et al., 2020), which is an order of magnitude greater than the available sample size (Appendix 1—figure 18). Therefore, we leverage the univariate symptom-neural result for subsequent subject-specific model optimization and comparisons to molecular neuroimaging maps.
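The split-half logic can be sketched as follows. This is a simplified variant, not the exact procedure used in the paper: it fits a map independently in two random non-overlapping halves and correlates the two maps across parcels. The mass-univariate slope estimator, the function names, and the synthetic data are assumptions. Any other map estimator, such as CCA neural loadings, could be passed in as `fit_map`.

```python
import numpy as np

def univariate_beta_map(gbc, scores):
    """Slope of each parcel's GBC regressed on a symptom score (one model per parcel)."""
    g = gbc - gbc.mean(axis=0)
    s = scores - scores.mean()
    return (s @ g) / (s @ s)

def split_half_similarity(gbc, scores, fit_map=univariate_beta_map, n_runs=100, seed=0):
    """Mean and standard error of the H1-vs-H2 map correlation over random splits."""
    rng = np.random.default_rng(seed)
    n = len(scores)
    r = np.empty(n_runs)
    for i in range(n_runs):
        perm = rng.permutation(n)
        h1, h2 = perm[: n // 2], perm[n // 2:]  # non-overlapping halves
        map1 = fit_map(gbc[h1], scores[h1])
        map2 = fit_map(gbc[h2], scores[h2])
        r[i] = np.corrcoef(map1, map2)[0, 1]
    return r.mean(), r.std(ddof=1) / np.sqrt(n_runs)

# Synthetic example (hypothetical): 200 subjects, 50 parcels, one true map.
rng = np.random.default_rng(0)
true_map = rng.normal(size=50)
scores = rng.normal(size=200)
gbc = np.outer(scores, true_map) + rng.normal(size=(200, 50))
mean_r, sem_r = split_half_similarity(gbc, scores)
```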

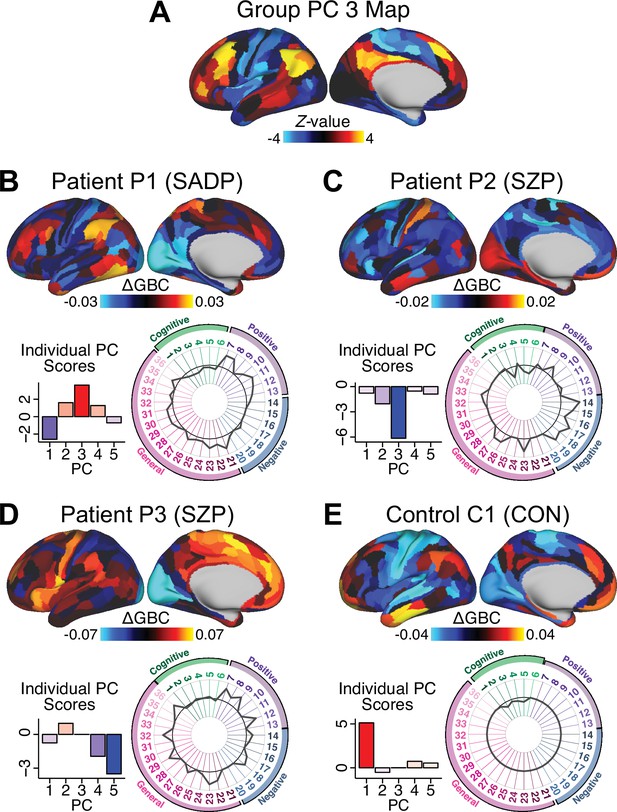

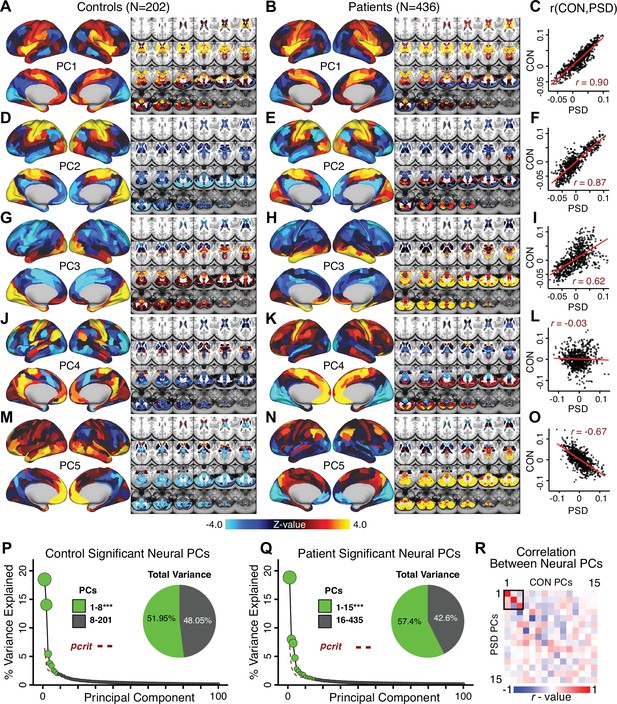

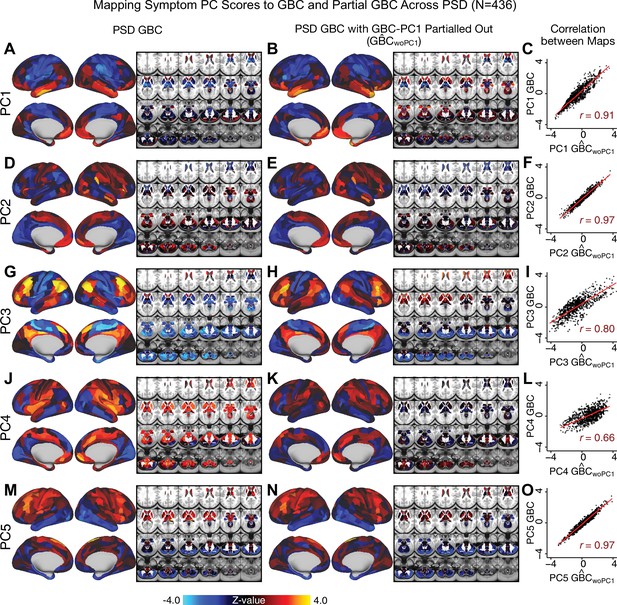

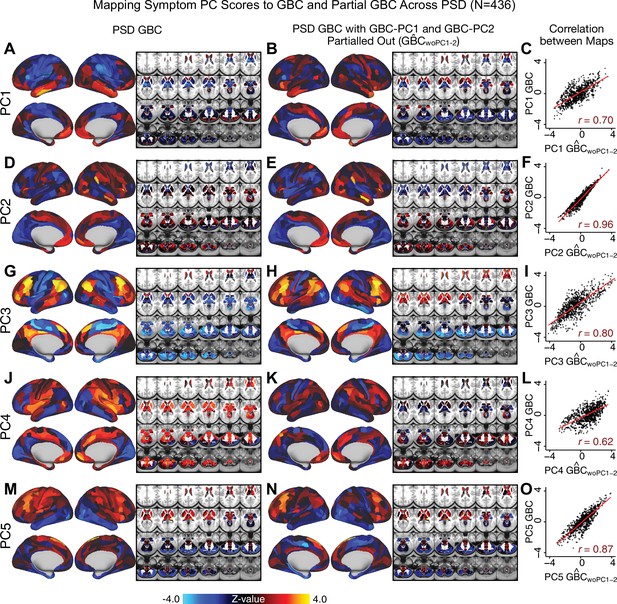

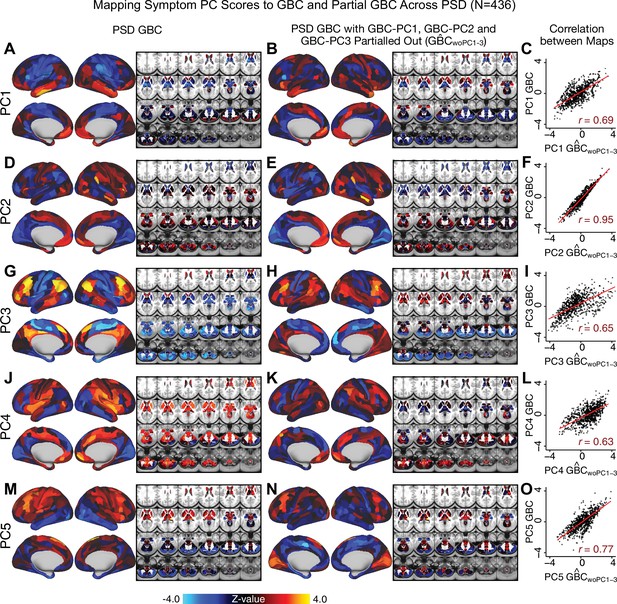

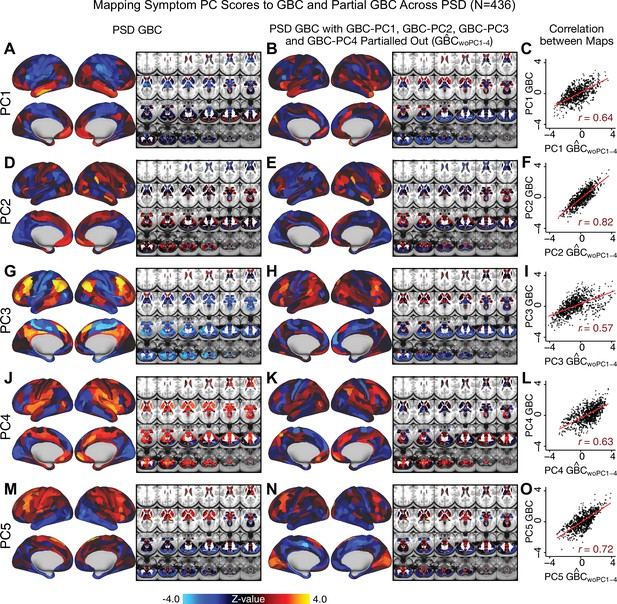

A major proportion of overall neural variance may not be relevant for psychosis symptoms

Most studies look for differences between clinical and control groups, but to our knowledge no study has tested whether both PSD and healthy controls actually share a major portion of neural variance that may be present across all people. If the bulk of the neural variance is similar across both PSD and CON groups, then including this clinically irrelevant neural signal might obscure clinically relevant neurobehavioral relationships. To test this, we examined the shared variance structure of the neural signal for all PSD patients (N = 436) and all controls (N = 202) independently by conducting a PCA on the GBC maps (see Materials and methods). Patients’ and controls’ neural signals were highly similar for each of the first three neural PCs (>30% of all neural variance in each group) (Appendix 1—figure 19A–J). These neural PCs may reflect a ‘core’ shared symptom-irrelevant neural variance that generalizes across all people. These data suggest that the bulk of neural variance in PSD patients may actually not be symptom-relevant, which highlights the importance of optimizing symptom-neural mapping. Under this assumption, removing the shared neural variance between PSD and CON should not drastically affect the reported symptom-neural univariate mapping solution, because this common variance does not map to clinical features. Thus, we conducted a PCA using the parcellated GBC data from all 436 PSD and 202 CON (see Materials and methods), which we will refer to as ‘GBC-PCA’ to avoid confusion with the symptom/behavioral PCA. This GBC-PCA resulted in 637 independent GBC-PCs. Since PCs are orthogonal to each other, we then partialled out the variance attributable to GBC-PC1 from the PSD data by reconstructing the PSD GBC matrix using only scores and coefficients from the remaining 636 GBC-PCs (). We then reran the univariate regression as described in Figure 3, using the same five symptom PC scores across 436 PSD (Appendix 1—figure 20). 
Removing the first PC of shared neural variance (which accounted for about 15.8% of the total GBC variance across CON and PSD) from PSD data attenuated the statistics slightly (not unexpected, as the variance was by definition reduced) but otherwise did not strongly affect the univariate mapping solution. We next repeated the symptom-neural regression with the first two GBC-PCs partialled out of the PSD data (Appendix 1—figure 21), with the first three GBC-PCs partialled out (Appendix 1—figure 22), and with the first four GBC-PCs partialled out (Appendix 1—figure 23). The symptom-neural maps remain fairly robust, although the similarity with the original maps does drop as more common neural variance is partialled out.
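The reconstruction step can be sketched with an SVD-based PCA. This is a minimal illustration under stated assumptions rather than the authors' code: the function name, the choice to add the pooled mean back after reconstruction, and the toy data are hypothetical. With 638 subjects, centering leaves at most 637 non-trivial components, in line with the 637 GBC-PCs reported above.

```python
import numpy as np

def remove_leading_pcs(gbc, n_remove=1):
    """Rebuild a subjects-by-parcels matrix without its first n_remove PCs."""
    mean = gbc.mean(axis=0)
    U, S, Vt = np.linalg.svd(gbc - mean, full_matrices=False)
    scores = U * S                                   # subject scores on each PC
    cleaned = scores[:, n_remove:] @ Vt[n_remove:] + mean  # adding the mean back is an assumption
    frac_removed = float((S[:n_remove] ** 2).sum() / (S ** 2).sum())
    return cleaned, frac_removed

# Toy data (hypothetical): 60 pooled subjects, 20 parcels, one strong shared component.
rng = np.random.default_rng(0)
gbc = rng.normal(size=(60, 20)) + 3.0 * rng.normal(size=(60, 1))
is_patient = np.arange(60) < 40
cleaned, frac = remove_leading_pcs(gbc, n_remove=1)
psd_cleaned = cleaned[is_patient]                    # rows then used for the symptom regression
```

The PCA is fit on the pooled patient and control data, and only the patient rows of the reconstructed matrix are carried forward to the symptom-neural regression.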

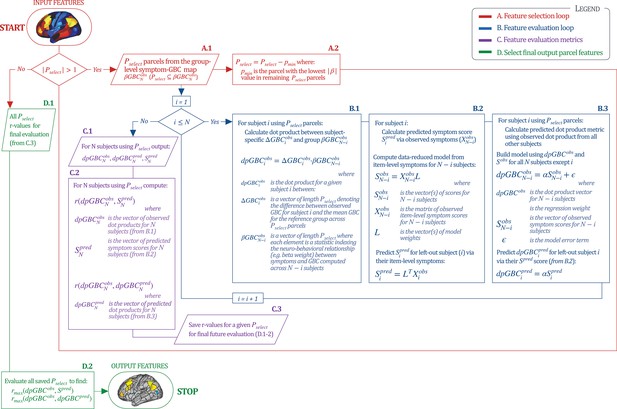

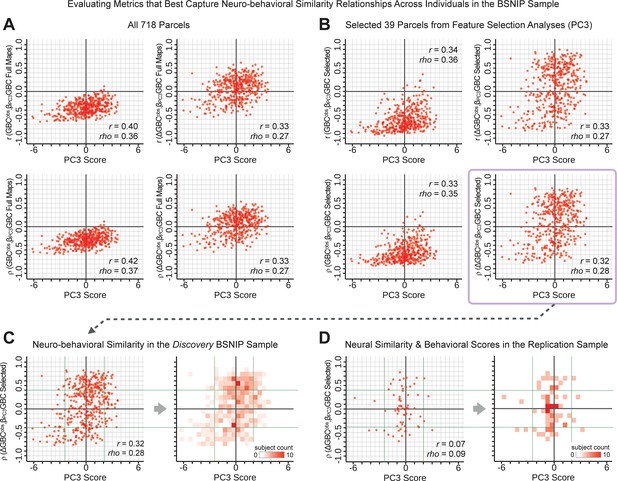

Optimizing symptom-neural mapping features for personalized prediction via dimensionality-reduced symptoms

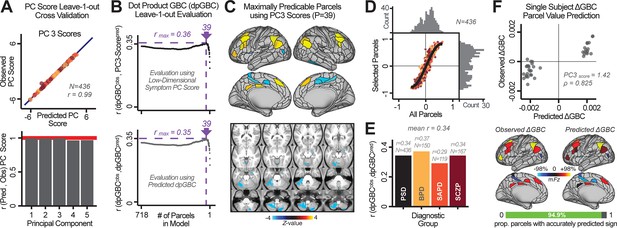

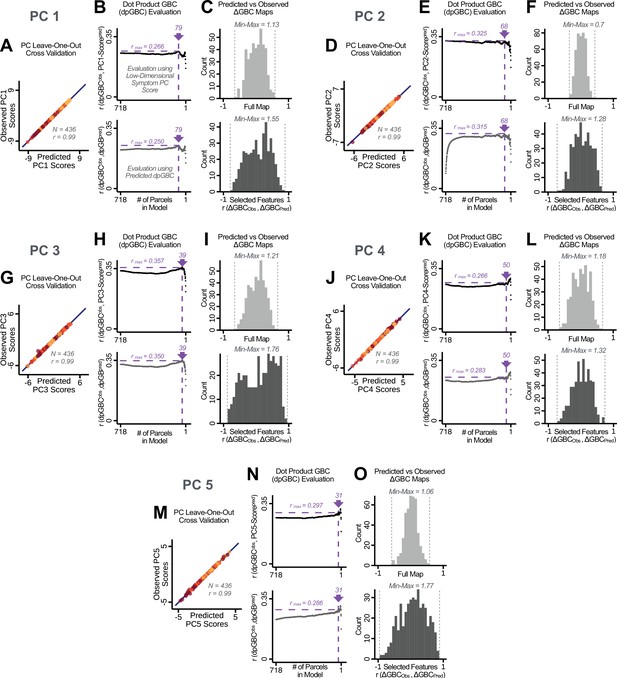

Above we demonstrated that symptom PC scores can be reliably computed across sites and cross-validation approaches (Figure 2). Next, we show that leave-one-subject-out cross-validation yields reliable effects for the low-rank symptom PCA solution (Figure 6A). This stable single-subject PC score prediction provides the basis for testing if the derived neural maps can yield an individually reliable subset of features. To this end, we developed a framework for univariate neural feature selection (Appendix 1—figure 23) based on PC scores (e.g. PC3 score). Specifically, we computed a dot product GBC metric () that provides an index of similarity between an individual topography relative to a ‘reference’ group-level map (see Materials and methods and Appendix 1—figure 23). Using this index via a feature selection step-down regression, we found a subset of parcels for which the symptom-neural statistical association was maximal (Figure 6A). For PC3, we found maximally predictive parcels out of the group neural map. Specifically, the relationship between PC3 symptom scores and values across subjects was maximal (Figure 6B, top panel, ) as was the relationship between predicted vs. observed (Figure 6B, bottom panel, ) (see Appendix 1—figure 25 for all PCs). Importantly, the ‘subset’ feature map (i.e. , Figure 6C) exhibited improved statistical properties relative to the full map (i.e. , Figure 6D). Furthermore, the relationship between observed vs. predicted subset feature maps (i.e. ) was highly consistent across DSM diagnoses (Figure 6E). Finally, a single patient is highlighted for whom the correlation between predicted and observed subset feature maps was high (i.e. , Figure 6F), demonstrating that the dimensionality-reduced symptom scores can be used to quantitatively optimize symptom-neural map features for individuals.
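A rough sketch of a dot-product index combined with step-down feature selection is given below. The full procedure is described in the Materials and methods and the appendix, so the details here are assumptions: parcels are ranked by the absolute value of the full-sample coefficient, the reference map for each subject is re-estimated with that subject held out, and the subset size is chosen to maximize the correlation between symptom scores and the index. All names and the synthetic data are hypothetical.

```python
import numpy as np

def beta_map(delta_gbc, scores):
    """Per-parcel slope of GBC regressed on the symptom score."""
    s = scores - scores.mean()
    g = delta_gbc - delta_gbc.mean(axis=0)
    return (s @ g) / (s @ s)

def step_down_selection(delta_gbc, scores):
    """Shrink the parcel set from all parcels to one; keep the most predictive size."""
    n, n_parcels = delta_gbc.shape
    # Row i holds the reference map estimated without subject i (leave-one-out).
    loo_beta = np.stack([
        beta_map(np.delete(delta_gbc, i, axis=0), np.delete(scores, i))
        for i in range(n)
    ])
    # Assumed ranking rule: largest absolute full-sample coefficient first.
    order = np.argsort(-np.abs(beta_map(delta_gbc, scores)))
    r_by_size = np.empty(n_parcels)
    for size in range(n_parcels, 0, -1):
        keep = order[:size]
        # Dot product of each subject's map with their held-out reference map.
        dp = np.einsum("ij,ij->i", delta_gbc[:, keep], loo_beta[:, keep])
        r_by_size[size - 1] = np.corrcoef(scores, dp)[0, 1]
    best = int(np.argmax(r_by_size)) + 1
    return order[:best], r_by_size

# Synthetic example (hypothetical): only the first 5 of 30 parcels carry signal.
rng = np.random.default_rng(0)
n_subj, n_parcels = 150, 30
true_beta = np.zeros(n_parcels)
true_beta[:5] = 1.0
pc_scores = rng.normal(size=n_subj)
gbc = np.outer(pc_scores, true_beta) + rng.normal(size=(n_subj, n_parcels))
delta_gbc = gbc - gbc.mean(axis=0)                   # deviation from the group mean
selected, r_by_size = step_down_selection(delta_gbc, pc_scores)
```

Because the ranking in this sketch uses the full sample, it is mildly optimistic. A stricter variant would also rank parcels inside each leave-one-out fold.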

Optimizing neural feature selection to inform single-subject prediction via a low-dimensional symptom solution.

(A) Leave-one-out cross-validation for the symptom PCA analyses indicates robust individual score prediction. Top panel: Scatterplot shows the correlation between each subject's predicted PC3 score from a leave-one-out PCA model and their observed PC3 score from the full-sample PCA model, r = 0.99. Bottom panel: Correlation between predicted and observed individual PC scores was above 0.99 for each of the significant PCs (see Figure 1). The red line indicates r = 1. (B) We developed a univariate step-down feature selection framework to obtain the most predictive parcels using a subject-specific approach via the index. Specifically, the ‘observed’ patient-specific was calculated using each patient’s (i.e. the patient-specific GBC map vs. the group mean GBC for each parcel) and the ‘reference’ symptom-to-GBC PC3 map (described in Figure 4B) [ = ]. See Materials and methods and Appendix 1—figure 24 for complete feature selection procedure details. In turn, we computed the predicted index for each patient by holding their data out of the model and predicting their score (). We used two metrics to evaluate the maximally predictive feature subset: (i) The correlation between PC3 symptom score and across all N = 436, which was maximal for parcels [r = 0.36, purple arrow]; (ii) The correlation between and , which also peaked at parcels [r = 0.35, purple arrow]. (C) The maximally predictive parcels from the map are highlighted (referred to as the ‘selected’ map). (D) Across all N = 436 patients we evaluated if the selected parcels improve the statistical range of similarities between the and the reference for each patient. For each subject the value on the X-axis reflects a correlation between their map and the map across all 718 parcels; the Y-axis reflects a correlation between their map and the map only within the ‘selected’ 39 parcels. The marginal histograms show the distribution of these values across subjects. 
(E) Each DSM diagnostic group showed comparable correlations between predicted and observed values. The r-value shown for each group is a correlation between the and vectors, each of length N. (F) Scatterplot for a single patient with a positive behavioral loading ( score = 1.42) and also with a high correlation between predicted versus observed values for the ‘selected’ 39 parcels (). Right panel highlights the observed vs. predicted map for this patient, indicating that 94.9% of the parcels were predicted in the correct direction (i.e. in the correct quadrant).
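The leave-one-out check of the symptom PCA summarized in panel A can be sketched as follows. This is an illustrative reconstruction, not the authors' implementation: standardizing each measure with training-set statistics, aligning component signs to the full-sample model, and the synthetic data are assumptions.

```python
import numpy as np

def fit_pca(data, n_components):
    """Mean, scale, and leading loadings of a PCA on standardized columns."""
    mean, std = data.mean(axis=0), data.std(axis=0, ddof=1)
    _, _, vt = np.linalg.svd((data - mean) / std, full_matrices=False)
    return mean, std, vt[:n_components]

def loo_pc_scores(data, n_components):
    """Observed (full-model) and predicted (held-out) PC scores for every subject."""
    mean, std, full_vt = fit_pca(data, n_components)
    observed = ((data - mean) / std) @ full_vt.T
    predicted = np.empty_like(observed)
    for i in range(len(data)):
        m, s, vt = fit_pca(np.delete(data, i, axis=0), n_components)
        # PC signs are arbitrary, so flip each held-out component to match the full model.
        signs = np.sign(np.sum(vt * full_vt, axis=1))
        predicted[i] = ((data[i] - m) / s) @ (vt * signs[:, None]).T
    return observed, predicted

# Synthetic example (hypothetical): 80 subjects, 8 measures driven by two factors.
rng = np.random.default_rng(0)
f1, f2 = rng.normal(size=(2, 80))
symptoms = np.hstack([
    f1[:, None] + 0.3 * rng.normal(size=(80, 5)),
    f2[:, None] + 0.3 * rng.normal(size=(80, 3)),
])
observed, predicted = loo_pc_scores(symptoms, n_components=2)
r_per_pc = [np.corrcoef(observed[:, k], predicted[:, k])[0, 1] for k in range(2)]
```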

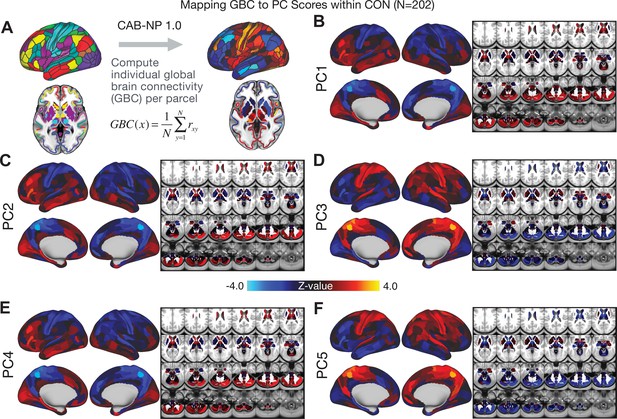

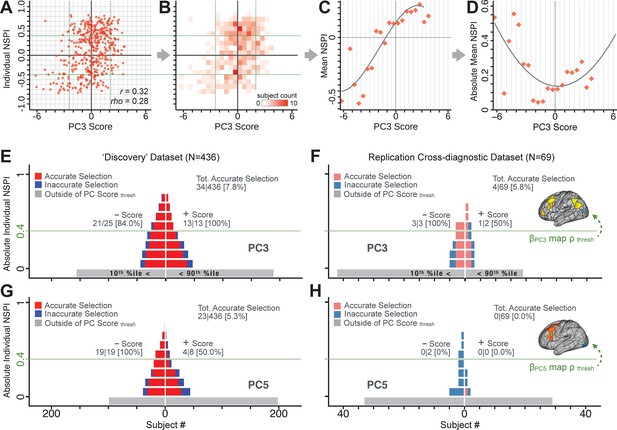

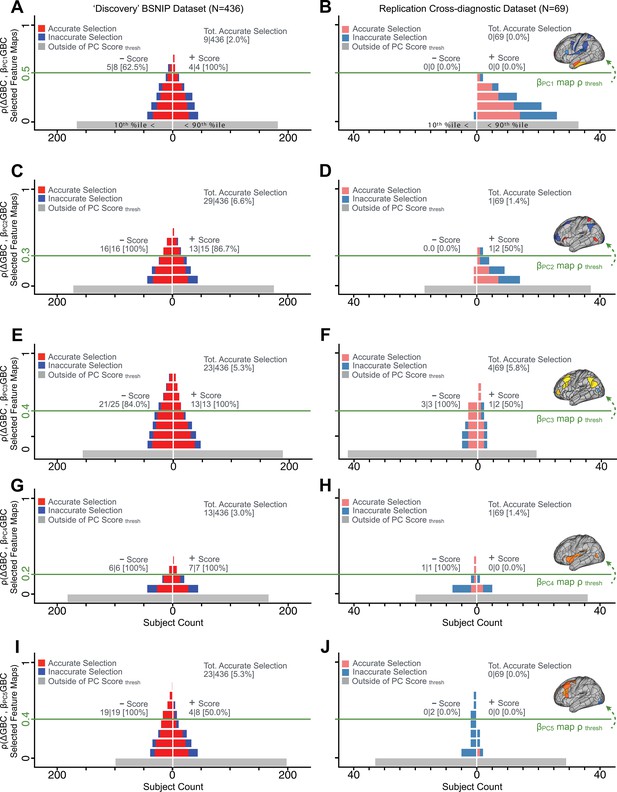

Single patient evaluation via neurobehavioral target map similarity

We showed a quantitative framework for computing a neurobehavioral model at the single-patient level. This brain-behavior space (BBS) model was optimized along a single dimensionality-reduced symptom PC axis. Next, we tested a hybrid patient selection strategy by first imposing a PC-based symptom threshold, followed by a target neural similarity threshold driven by the most highly predictive symptom-neural map features. This is described in Materials and methods, Appendix 1 - Note 5, and Appendix 1—figure 26–27. We found that for patients with a high (either positive or negative) PC symptom score the symptom-neural relationship was robust (Appendix 1—figure 26A-D), and these patients could be reliably selected for that particular dimension (Appendix 1—figure 26E,G). Conversely, patients with a low absolute PC score showed a weak relationship with symptom-relevant neural features. This is intuitive because these individuals do not vary along the selected PC symptom axis. We also tested this patient selection strategy on an independent cross-diagnostic ‘replication’ sample, which yielded consistent results (Appendix 1—figure 26F,H). Collectively, these results show that data-driven symptom scores can pinpoint individual patients for whom neural variation strongly maps onto a target neural reference map. These data also highlight that both symptom and neural information for an independent patient can be quantified in the reference ‘discovery’ BBS using their symptom data alone.

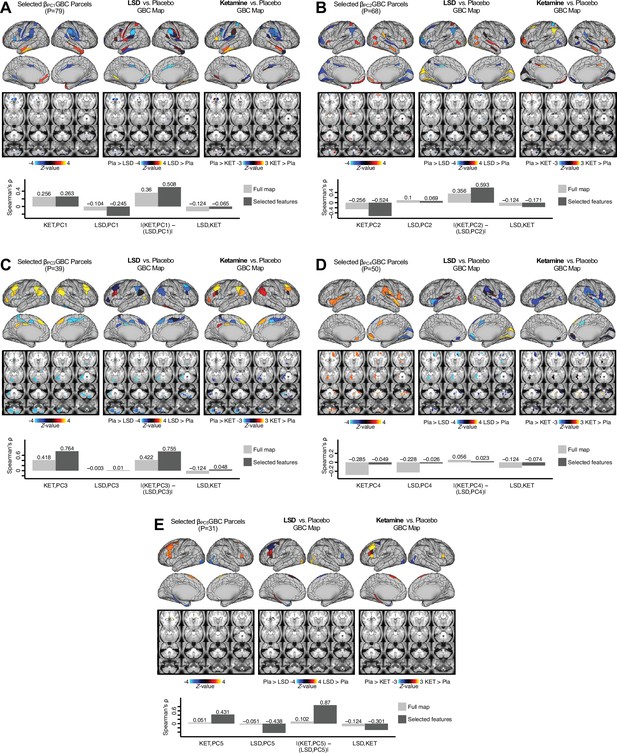

Subject-specific PSD neurobehavioral features track neuropharmacological map patterns

Next, we use the personalized BBS selection in a proof-of-concept framework for informing the molecular mechanisms of possible treatment response by relating subject-specific BBS to independently acquired pharmacological neuroimaging maps. Here, we examine two mechanisms implicated in PSD neuropathology via ketamine, an N-methyl-D-aspartate (NMDA) receptor antagonist (Krystal et al., 1994), and lysergic acid diethylamide (LSD), primarily a serotonin receptor agonist (Preller et al., 2018; González-Maeso et al., 2007; Egan et al., 1998). We first quantified individual subjects’ BBS ‘locations’ in the established reference neurobehavioral geometry. The radarplot in Figure 7A shows original symptoms whereas Figure 7B shows maps for two patients from the replication dataset (denoted here with and , see Appendix 1—figure 11 for other example patients). Both of these patients exceeded the neural and behavioral BBS selection indices for PC3 (defined independently in the ‘discovery’ dataset, Appendix 1—figure 26C). Furthermore, both patients exhibited neurobehavioral variation in line with their expected locations in the BBS geometry. Specifically, patient from the replication dataset scored highly negatively on the PC3 axis defined in the ‘discovery’ PSD sample (Figure 7A). In contrast, patient scored positively on the PC3 axis. Importantly, the correlation between the map for each patient and the group-reference was directionally consistent with their symptom PC score (Figure 7B–C).

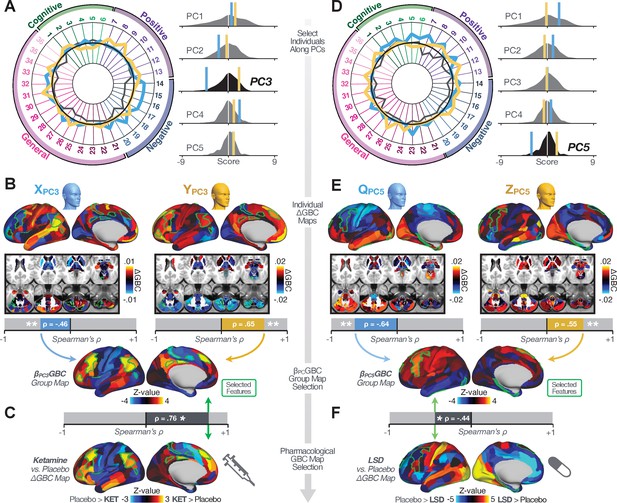

Leveraging subject-specific brain-behavioral maps for molecular neuroimaging target selection.

(A) Data for two individual patients from the replication dataset are highlighted for PC3: (blue) and (yellow). Both of these patients scored above the neural and behavioral thresholds for PC3 defined in the ‘discovery’ PSD dataset. Patient loads highly negatively on the PC3 axis and Patient loads highly positively. Density plots show the projected PC scores for Patients and overlaid on distributions of PC scores from the discovery PSD sample. (B) Neural maps show cortical and subcortical for the two patients and specifically reflecting a difference from the mean PC3. The similarity of and the map within the most predictive neural parcels for PC3 (outlined in green). Note that the sign of neural similarity to the reference PC3 map and the sign of the PC3 score are consistent for these two patients. (C) The selected PC3 map (parcels outlined in green) is spatially correlated to the neural map reflecting the change in GBC after ketamine administration (ρ = 0.76, Materials and methods). Note that Patient who exhibits that is anti-correlated to the ketamine map also expresses depressive mood symptoms (panel A). This is consistent with the possibility that this person may clinically benefit from ketamine administration, which may elevate connectivity in areas where they show reductions (Berman et al., 2000). In contrast, Patient may exhibit an exacerbation of their psychosis symptoms given that their is positively correlated with the ketamine map. (D) Data for two individual patients from the discovery dataset are highlighted for PC5: (blue) and (yellow). Note that no patients in the replication dataset were selected for PC5 so both of these patients were selected from the ‘discovery’ PSD dataset for illustrative purposes. Patient loads highly negatively on the PC5 axis and Patient loads highly positively. Density plots show the projected PC scores for Patients and overlaid on distributions of PC scores from the discovery PSD sample. 
(E) Neural maps show cortical and subcortical for Patients and , which are highly negatively and positively correlated with the selected PC5 map respectively. (F) The selected PC5 map (parcels outlined in green) is spatially anti-correlated with the LSD response map (ρ = −0.44, see Materials and methods), suggesting that circuits modulated by LSD (i.e. serotonin, in particular 5-HT2A) may be relevant for the PC5 symptom expression. Here, a serotonin receptor agonist may modulate the symptom-neural profile of Patient , whereas an antagonist may be effective for Patient .

We then tested if the single-subject BBS selection could be quantified with respect to a neural map reflecting glutamate receptor manipulation, a hypothesized mechanism underlying PSD symptoms (Moghaddam and Javitt, 2012). Specifically, we used an independently collected ketamine infusion dataset, collected in healthy adult volunteers during resting-state fMRI (Anticevic et al., 2012a). As with the clinical data, here we computed a map reflecting the effect of ketamine on GBC relative to placebo (Materials and methods). The maximally predictive PC3 parcels exhibited high spatial similarity with the ketamine map (ρ = 0.76, see Materials and methods), indicating that the pattern induced by ketamine tracks with the GBC pattern reflecting PC3 symptom variation.
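The spatial similarity reported as ρ can be illustrated with a rank correlation restricted to the selected parcels. This is a minimal sketch: the function names, the no-ties ranking shortcut, and the toy maps are assumptions, and the real maps would come from the PC3 regression and the ketamine-versus-placebo contrast.

```python
import numpy as np

def ordinal_ranks(x):
    """Ranks 0..n-1 (assumes no tied values, which is typical for continuous maps)."""
    ranks = np.empty(len(x))
    ranks[np.argsort(x)] = np.arange(len(x))
    return ranks

def spatial_spearman(map_a, map_b, parcels=None):
    """Spearman correlation between two parcel maps, optionally within a parcel subset."""
    a = np.asarray(map_a, dtype=float)
    b = np.asarray(map_b, dtype=float)
    if parcels is not None:
        a, b = a[parcels], b[parcels]
    return float(np.corrcoef(ordinal_ranks(a), ordinal_ranks(b))[0, 1])

# Toy maps (hypothetical): 718 parcels, with the 39 strongest reference parcels selected.
rng = np.random.default_rng(0)
reference_map = rng.normal(size=718)
drug_map = reference_map + rng.normal(size=718)      # stand-in for a drug-vs-placebo map
selected = np.argsort(-np.abs(reference_map))[:39]
rho = spatial_spearman(reference_map, drug_map, parcels=selected)
```

The same function can be applied to an individual patient's map in place of the reference map, which gives the sign of that patient's similarity to the pharmacological map.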

Critically, because is negatively loaded on the PC3 symptom axis, an NMDA receptor antagonist like ketamine may modulate symptom-relevant circuits in a way that reduces similarity with the PC3 map. This may in turn have an impact on the PC3-like symptoms. Consistent with this hypothesis, expresses predominantly depressive symptoms (Figure 7A), and ketamine has been shown to act as an anti-depressant (Berman et al., 2000). This approach can be applied for patients that load along another axis, such as PC5. Figure 7D–E shows the symptom and neural data for two patients who met thresholds for PC5 selection (Appendix 1—figure 26C). Notably, the selected PC5 map is anti-correlated with a map reflecting LSD vs. placebo effects (Preller et al., 2018) (ρ = −0.44, Figure 7F). Hence areas modulated by LSD may map onto behavioral variation along PC5. Consequently, serotonergic modulation may be effective for treating and , via an antagonist or an agonist respectively. These differential similarities between pharmacological response maps and BBS maps (Appendix 1—figure 29) can be refined for quantitative patient segmentation.

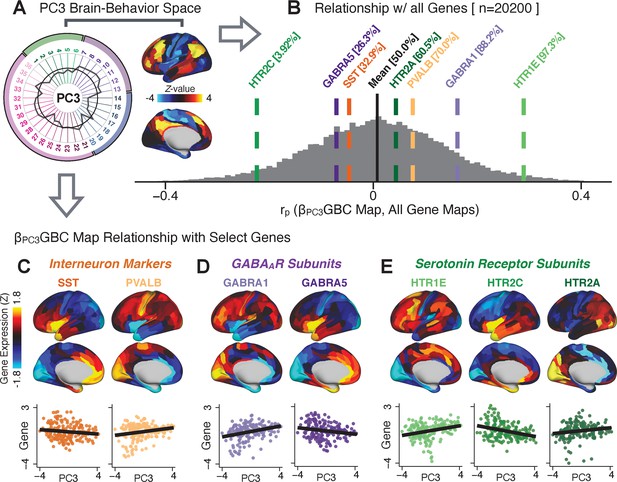

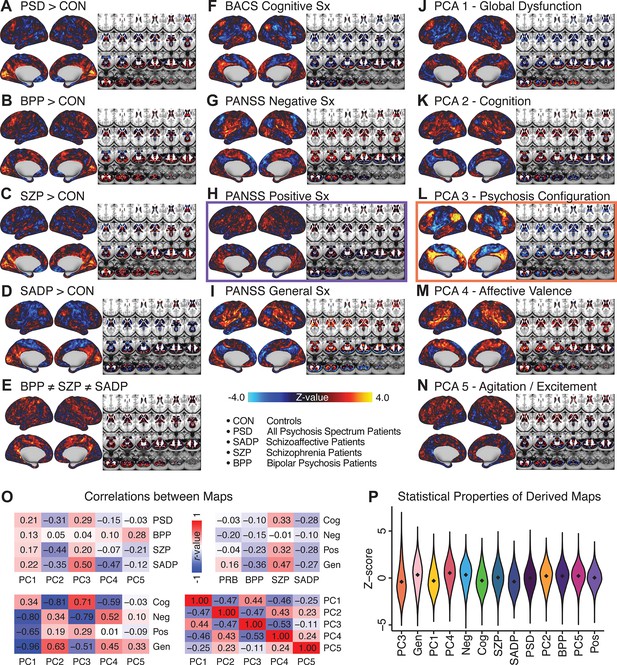

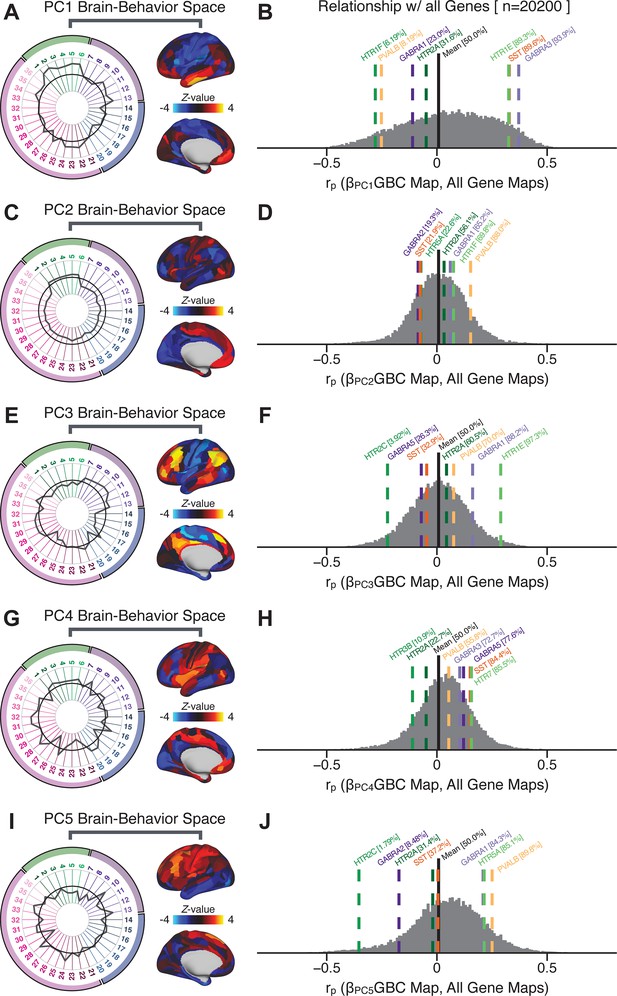

Group-level PSD neurobehavioral features track neural gene expression patterns

To further inform the molecular mechanisms underlying the derived BBS results, we compared them with neural gene expression profiles derived from the Allen Human Brain Atlas (AHBA) (Hawrylycz et al., 2012; Burt et al., 2018; Figure 8A, Materials and methods). Specifically, we tested if BBS cortical topographies, which reflect stable symptom-neural mapping along PSD, covary with the expression of genes implicated in PSD neuropathology. We focus here on serotonin receptor subunits (HTR1E, HTR2C, HTR2A), GABA receptor subunits (GABRA1, GABRA5), and the interneuron markers somatostatin (SST) and parvalbumin (PVALB). Serotonin agonists such as LSD have been shown to induce PSD-like symptoms in healthy adults (Preller et al., 2018) and the serotonin antagonism of ‘second-generation’ antipsychotics is thought to contribute to their efficacy in targeting broad PSD symptoms (Geyer and Vollenweider, 2008; Meltzer et al., 2012; Abi-Dargham et al., 1997). Abnormalities in GABAergic interneurons, which provide inhibitory control in neural circuits, may contribute to cognitive deficits in PSD (Benes and Berretta, 2001; Inan et al., 2013; Dienel and Lewis, 2019) and additionally lead to downstream excitatory dysfunction that underlies other PSD symptoms (Lisman et al., 2008; Grace, 2016). In particular, a loss of prefrontal parvalbumin-expressing fast-spiking interneurons has been implicated in PSD (Enwright III et al., 2018; Lodge et al., 2009; Beasley and Reynolds, 1997; Lewis et al., 2012). Figure 8B shows the distribution of correlations between the PC3 map and the cortical expression patterns of 20,200 available AHBA genes (results for other PCs are shown in Appendix 1—figure 30). Seven genes of interest are highlighted, along with their cortical expression topographies and their similarity with the PC3 BBS map (Figure 8C–E). This BBS-to-gene mapping can potentially reveal novel therapeutic molecular targets for neurobehavioral variation. 
For example, the HTR1E gene, which encodes the serotonin 5-HT1E receptor, is highly correlated with the PC3 BBS map. This could drive further development of novel selective ligands for this receptor, which are not currently available (Kitson, 2007).
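The gene-to-BBS comparison amounts to correlating one cortical map with every gene expression map and then locating genes of interest within the resulting distribution. The sketch below illustrates this with random stand-in data. The function names, array shapes, and toy values are assumptions, and the actual AHBA preprocessing is described in Burt et al., 2018.

```python
import numpy as np

def gene_map_correlations(bbs_map, expression):
    """Pearson r between one map (n_parcels,) and each gene map (n_genes, n_parcels)."""
    b = (bbs_map - bbs_map.mean()) / bbs_map.std()
    e = expression - expression.mean(axis=1, keepdims=True)
    e = e / expression.std(axis=1, keepdims=True)
    return e @ b / bbs_map.size

def percentile_rank(r_all, gene_index):
    """Percentage of genes with a lower correlation than the gene of interest."""
    return float((r_all < r_all[gene_index]).mean() * 100)

# Stand-in data (hypothetical): 180 symmetrized cortical parcels, 1000 genes,
# with gene 0 built to resemble the BBS map.
rng = np.random.default_rng(0)
bbs_map = rng.normal(size=180)
expression = rng.normal(size=(1000, 180))
expression[0] = bbs_map + 0.5 * rng.normal(size=180)
r_all = gene_map_correlations(bbs_map, expression)
pct = percentile_rank(r_all, 0)
```

A gene near the 0th or 100th percentile of `r_all` sits in a tail of the distribution, as described for HTR2C and HTR1E in Figure 8B.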

Psychosis spectrum symptom-neural maps track neural gene expression patterns computed from the Allen Human Brain Atlas (AHBA).

(A) The symptom loadings and the associated neural map jointly reflect the PC3 brain-behavioral space (BBS) profile, which can be quantitatively related to human cortical gene expression patterns obtained from the AHBA (Burt et al., 2018). (B) Distribution of correlation values between the PC3 BBS map and ∼20,000 gene expression maps derived from the AHBA dataset. Specifically, AHBA gene expression maps were obtained using DNA microarrays from six postmortem brains, capturing gene expression topography across cortical areas. These expression patterns were then mapped onto the cortical surface models derived from the AHBA subjects’ anatomical scans and aligned with the Human Connectome Project (HCP) atlas, described in prior work and methods (Burt et al., 2018). Note that because no significant inter-hemispheric differences were found in cortical gene expression, all results were symmetrized to the left hemisphere, resulting in 180 parcels. We focused on a select number of psychosis-relevant genes – namely genes coding for the serotonin and GABA receptor subunits and interneuron markers. Seven genes of interest are highlighted with dashed lines. Note that the expression pattern of HTR2C (green dashed line) is at the low negative tail of the entire distribution, that is, highly anti-correlated with the PC3 BBS map. Conversely, GABRA1 and HTR1E are on the far positive end, reflecting a highly similar gene-to-BBS spatial pattern. (C) Upper panels show gene expression patterns for two interneuron marker genes, somatostatin (SST) and parvalbumin (PVALB). Positive (yellow) regions show areas where the gene of interest is highly expressed, whereas negative (blue) regions indicate low expression values. Lower panels highlight all gene-to-BBS map spatial correlations where each value is a symmetrized cortical parcel (180 in total) from the HCP atlas parcellation. 
(D) Gene expression maps and spatial correlations with the PC3 BBS map for two GABAA receptor subunit genes: GABRA1 and GABRA5. (E) Gene expression maps and spatial correlations with the PC3 BBS map for three serotonin receptor subunit genes: HTR1E, HTR2C, and HTR2A.

Discussion

We found a robust and reproducible symptom-neural mapping across the psychosis spectrum that emerged from a low-dimensional symptom solution. Critically, this low-rank symptom solution was predictive of a neural circuit pattern, which reproduced at the single-subject level. In turn, we show that the derived PSD symptom-neural feature maps exhibit spatial correspondence with independent pharmacological and gene expression neural maps that are directly relevant for PSD neurobiology. We demonstrate, for the first time in the literature, a transdiagnostic data-reduced PSD symptom geometry across hallmark psychopathology symptoms and cognitive measures that shows stable and robust relationships with neural connectivity at single-subject-level precision. Critically, we anchor these symptom-neural maps to mechanistically informed molecular imaging benchmarks that can be linked to relevant pathophysiology, and furthermore demonstrate how these methods can be combined to inform personalized patient selection decisions.

Deriving an individually predictive low-dimensional symptom representation across the psychosis spectrum

The psychosis spectrum is associated with notable clinical heterogeneity, such as deficits in cognition as well as altered beliefs (i.e. delusions), perception (i.e. hallucinations), and affect (i.e. negative symptoms) (Lefort-Besnard et al., 2018). This heterogeneity is captured by clinical instruments that quantify PSD symptoms across dozens of specific questions and ratings. This yields a high-dimensional symptom space that is intractable for reliable mapping of neural circuits (Helmer et al., 2020). Here we show that a low-rank solution captures principal axes of PSD symptom variation, a finding in line with prior work in SZP (van der Gaag et al., 2006a; van der Gaag et al., 2006b; Lindenmayer et al., 1994; Emsley et al., 2003; White et al., 1997; Dollfus et al., 1996; Blanchard and Cohen, 2006; Chen et al., 2020; Lefort-Besnard et al., 2018).

These results highlight two key observations: (i) Existing symptom reduction studies (even those in SZP specifically) have not evaluated solutions that include cognitive impairment – a hallmark deficit across the psychosis spectrum (Barch et al., 2013). Here we show that cognitive performance captures a notable portion of the symptom variance independent of other axes. We observed that cognitive variation captured 10% of PSD sample variance even after accounting for ‘Global Functioning’ psychopathology. (ii) No study has quantified cognitive deficit variation along with core psychosis symptoms via dimensionality reduction across multiple PSD diagnoses. While existing studies have evaluated stability of data-reduced solutions within a single DSM category (van der Gaag et al., 2006a; Lindenmayer et al., 1995; Lefort-Besnard et al., 2018), current results show that dimensionality-reduced PSD symptom solutions can be reproducibly obtained across DSM diagnoses.

Importantly, on each data-reduced symptom axis, some subset of PSD patients received a score near zero. This does not imply that these patients were unimpaired; rather, the symptom configurations for these patients were orthogonal to variation along this specific axis. While in the current paper we demonstrate individual selection based on symptom scores along a single axis, it may also be possible to use a combination of several symptom dimensions to further inform individual treatment selection. For example, for patients with above-threshold symptom scores along several dimensions, a combination of these PCs may be more predictive of their neural features and in turn be a better indication of treatment selection for these individuals. Alternatively, the symptom dimensions can be used as exclusionary criteria for selectively picking out individuals along only one particular axis (e.g. filtering out patients above-threshold on PCs 1, 3, 4, and 5 to selectively study patients with only severe PC2 cognitive deficits). Additionally, the ICA solution revealed oblique axes which are linear combinations of the PCs, for example IC2, which is oblique to PCs 1, 2, and 3 (Appendix 1—figure 3). However, these ICs do not result in as robust or unique a neural circuit mapping as do the PCs, as shown in Appendix 1—figure 12. The observation that PSD are associated with multiple complex symptom dimensions highlights an important intuition that may extend to other mental health spectra. Additionally, the PSD symptom axes reported here are neither definitive nor exhaustive. In fact, close to 50% of all clinical variance was not captured by the symptom PCA – an observation often overlooked in symptom data-reduction studies, which focus on attaining ‘predictive accuracy’. Such studies rarely consider how much variance remains unexplained in the final data-reduced model and, relatedly, if the proportion of explained variance is reproducible across samples. 
This is a key property for reliable symptom-to-neural mapping. Thus, we tested if this reproducible low-dimensional PSD symptom space robustly mapped onto neural circuit patterns.
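The two quantities discussed above, the proportion of symptom variance left unexplained by the retained PCs and the reproducibility of the solution across samples, can be illustrated with a minimal sketch. This is not the analysis code used in this study: the data are synthetic, the item count `n_items`, the latent scales, and the noise level are hypothetical, and the split-half comparison assumes that components keep the same order in both halves.

```python
import numpy as np

def pca(X, n_components):
    """PCA via SVD on z-scored columns.

    Returns loadings (items x components) and the fraction of total
    variance explained by each retained component.
    """
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)
    var_ratio = S**2 / np.sum(S**2)
    return Vt[:n_components].T, var_ratio[:n_components]

rng = np.random.default_rng(0)
n_patients, n_items, n_pcs = 436, 36, 5  # n_items is a hypothetical item count

# Synthetic stand-in for the symptom matrix: five latent axes plus item-level noise.
latent = rng.normal(size=(n_patients, n_pcs)) * np.array([3.0, 2.5, 2.0, 1.5, 1.2])
mixing = rng.normal(size=(n_pcs, n_items))
X = latent @ mixing + rng.normal(scale=4.0, size=(n_patients, n_items))

# Full-sample solution and the share of variance the retained PCs leave unexplained.
loadings, explained = pca(X, n_pcs)
unexplained = 1.0 - explained.sum()

# Split-half check: refit in two random halves and compare loadings.
# Component sign is arbitrary in PCA, so absolute correlations are compared.
perm = rng.permutation(n_patients)
half_a, half_b = perm[: n_patients // 2], perm[n_patients // 2 :]
load_a, expl_a = pca(X[half_a], n_pcs)
load_b, expl_b = pca(X[half_b], n_pcs)
loading_r = [abs(np.corrcoef(load_a[:, k], load_b[:, k])[0, 1]) for k in range(n_pcs)]

print("variance unexplained by retained PCs:", round(unexplained, 3))
print("explained variance per half:", expl_a.sum().round(3), expl_b.sum().round(3))
print("split-half loading |r| per PC:", np.round(loading_r, 2))
```

Reporting `unexplained` alongside the split-half agreement makes explicit both how much clinical variation the low-dimensional solution omits and whether that proportion is stable across samples.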

Leveraging a robust low-dimensional symptom representation for mapping brain-behavior relationships

We show that the dimensionality-reduced symptom space improved the mapping onto neural circuit features (i.e. GBC), as compared to a priori item-level clinical symptom measures (Figure 3). This symptom-neural mapping was highly reproducible across various cross-validation procedures and split-half replication (Figure 4). The observed statistical symptom-neural improvement after dimensionality reduction suggests that data-driven clinical variation more robustly covaried with neural features. As noted, the low-rank symptom axes generalized across DSM diagnoses. Consequently, the mapping onto neural features (i.e. GBC) may have been restricted if only a single DSM category or clinical item was used. Importantly, as noted, traditional clinical scales are uni-directional (i.e. zero is asymptomatic, hence there is an explicit floor). Here, we show that data-driven symptom axes (e.g. PC3) were associated with bi-directional variation (i.e. no explicit floor effect). Put differently, patients who score highly on either end of these data-driven axes are severely symptomatic but in very different ways. If these axes reflect clinically meaningful phenomena at both tails then they should more robustly map to neural feature variation, which is in line with reported effects. Therefore, by definition, the derived map for each of the PCs will reflect the neural circuitry that may be modulated by the behaviors that vary along that PC (but not others). For example, we named the PC3 axis ‘Psychosis Configuration’ because of its strong loadings onto conventional ‘positive’ and ‘negative’ PSD symptoms. This PC3 ‘Psychosis Configuration’ showed strong positive variation along neural regions that map onto the canonical default mode network (DMN), which has frequently been implicated in PSD (Fryer et al., 2013; Anticevic et al., 2012b; Ongür et al., 2010; Woodward et al., 2011; Baker et al., 2014; Meda et al., 2014), suggesting that individuals with severe ‘positive’ symptoms exhibit broadly elevated connectivity with regions of the DMN. Conversely, this bi-directional ‘Psychosis Configuration’ axis also showed strong negative variation along neural regions that map onto the sensory-motor and associative control regions, also strongly implicated in PSD (Ji et al., 2019a; Anticevic et al., 2014). The ‘bi-directionality’ property of the PC symptom-neural maps may thus be desirable for identifying neural features that support individual patient selection. For instance, it may be possible that PC3 reflects residual untreated psychosis symptoms in this chronic PSD sample, which may reveal key treatment neural targets. In support of this circuit being symptom-relevant, it is notable that we observed a mild association between GBC and PC scores in the CON sample (Appendix 1—figure 10). The symptom-neural mapping of dimensionality-reduced symptom scores also produced notable neural maps along other PC axes (Appendix 1—figure 9). Higher PC1 scores, indicating higher general functioning, may be associated with lower global connectivity in sensory/cingulate cortices and thalamus, but higher global connectivity with the temporal lobe. Higher PC2 scores, indicating better cognitive functioning, were positively associated with higher medial prefrontal and cerebellar GBC, and negatively with visual cortices and striatum. Importantly, the unique neural circuit variance shown in the PC2 map suggests that there are PSD patients with more (or less) severe cognitive deficits independent of any other symptom axis, which would be in line with the observation that these symptoms are not treatable with antipsychotic medication (and therefore should not correlate with symptoms that are treatable by such medications; i.e. PC3). Of note, the statistics in this map were relatively moderate, opening up the possibility that a more targeted measure of neural connectivity (e.g. seed FC, see below) would reveal a robust circuit map for this particular symptom dimension. Given the key role of cognitive deficits in PSD (Barch et al., 2013), this will be a particularly important direction to pursue, especially in prodromal or early-stage individuals where cognitive dysfunction has been shown to predict illness trajectories (Fusar-Poli et al., 2012; Bora et al., 2014; Bora, 2015; Antshel et al., 2017). The PC4 – Affective Valence axis was positively associated with higher GBC in cingulate and sensori-motor cortices and subcortically with the thalamus, basal ganglia, and anterior cerebellum, which may be implicated in the deficits in social functioning and affective aspects of this symptom dimension. Lastly, positive scores on PC5, which we named ‘Agitation/Excitement’, were associated with broad elevated global connectivity in the frontal associative and sensori-motor cortices, thalamus, and basal ganglia. Interestingly, however, the nucleus accumbens (as well as hippocampus, amygdala, cerebellum, and temporal lobe) appear to be negatively associated with PC5 score (i.e. more severe grandiosity, unusual thought, delusional and disorganized thinking), illustrating another example of a bi-directional axis.

Deriving individually actionable brain-behavior mapping across the psychosis spectrum

Deriving a neurobehavioral mapping that is resolvable and stable at the individual patient level is a necessary benchmark for deploying symptom-neural ‘biomarkers’ in a clinically useful way. Therefore, there is increasing attention placed on the importance of achieving reproducibility in the psychiatric neuroimaging literature (Noble et al., 2019; Balsters et al., 2016; Cao et al., 2019; Woo et al., 2017), which becomes especially important for individualized symptom-neural mapping. Recently, several efforts have deployed multivariate methods to quantify symptom-neural relationships (Drysdale et al., 2017; Xia et al., 2018; Smith et al., 2015; Moser et al., 2018; Rodrigue et al., 2018; Yu et al., 2019), highlighting how multivariate techniques may perhaps provide clinically innovative insights. However, such methods face the risk of overfitting for high-dimensional but underpowered datasets (Dinga et al., 2019), as recently shown via formal generative modeling (Helmer et al., 2020).

Here, we attempted to use multivariate solutions (i.e. CCA) to quantify symptom and neural feature co-variation. In principle, CCA is well-suited to address the brain-behavioral mapping problem. However, symptom-neural mapping using CCA in our sample was not reproducible even when using a low-dimensional symptom solution and parcellated neural data as a starting point. Therefore, while CCA (and related multivariate methods such as partial least squares) are theoretically appropriate and may be helped by regularization methods such as sparse CCA, in practice many available psychiatric neuroimaging datasets may not provide sufficient power to resolve stable multivariate symptom-neural solutions (Helmer et al., 2020). A key pressing need for forthcoming studies will be to use multivariate power calculators to inform sample sizes needed for resolving stable symptom-neural geometries at the single subject level. Of note, although we were unable to derive a stable CCA in the present sample, this does not imply that the multivariate neurobehavioral effect cannot be reproduced with larger effect sizes and/or sample sizes. Critically, this does highlight the importance of power calculations prior to computing multivariate brain-behavioral solutions (Helmer et al., 2020).
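The overfitting risk described above can be illustrated with a minimal sketch on pure noise. This is not the CCA pipeline used in this study: it is an unregularized CCA written directly in NumPy, and the sample sizes and feature counts (`n_train`, `n_test`, `n_neural`, `n_symptom`) are hypothetical. Because the two synthetic feature sets are independent by construction, any in-sample canonical correlation reflects overfitting alone.

```python
import numpy as np

def cca_first_mode(X, Y):
    """First canonical mode of an unregularized CCA via QR whitening and SVD.

    Returns weight vectors for X and Y and the in-sample canonical correlation.
    Requires more samples than features in both X and Y.
    """
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    Qx, Rx = np.linalg.qr(Xc)
    Qy, Ry = np.linalg.qr(Yc)
    U, S, Vt = np.linalg.svd(Qx.T @ Qy, full_matrices=False)
    wx = np.linalg.solve(Rx, U[:, 0])   # maps X features to the first canonical variate
    wy = np.linalg.solve(Ry, Vt[0])     # maps Y features to the first canonical variate
    return wx, wy, S[0]

rng = np.random.default_rng(1)
n_train, n_test = 200, 200          # hypothetical sample sizes
n_neural, n_symptom = 180, 5        # hypothetical feature counts

# Independent noise: there is no true symptom-neural relationship to find.
X = rng.normal(size=(n_train + n_test, n_neural))
Y = rng.normal(size=(n_train + n_test, n_symptom))

wx, wy, r_train = cca_first_mode(X[:n_train], Y[:n_train])

# Apply the training weights to held-out subjects.
r_test = np.corrcoef(X[n_train:] @ wx, Y[n_train:] @ wy)[0, 1]

print("in-sample canonical correlation:", round(float(r_train), 3))
print("held-out correlation:", round(float(r_test), 3))
```

When the number of neural features approaches the number of subjects, the in-sample correlation is high even for unrelated data, whereas the held-out correlation is not. This gap is the reason out-of-sample evaluation and power calculations are needed before interpreting a multivariate solution.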

Consequently, we tested if a low-dimensional symptom solution can be used in a univariate symptom-neural model to optimize individually predictive features. Indeed, we found that a univariate brain-behavioral space (BBS) relationship can result in neural features that are stable for individualized prediction. Critically, we found that if a patient exhibited a high PC symptom score, they were more likely to exhibit a neural ΔGBC topography similar to the corresponding PC symptom-neural map. This suggests that optimizing such symptom-neural mapping solutions (and ultimately extending them to multivariate frameworks) can inform cross-diagnostic patient segmentation with respect to symptom-relevant neural features. Importantly, this could directly inform patient identification based on neural targets that are of direct symptom relevance for clinical trial design.
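A minimal sketch of this univariate logic follows, under stated assumptions. It is not the analysis code used in this study: the data are synthetic, the parcel count and effect size are hypothetical, ΔGBC is defined here as each held-out patient's deviation from the training-sample mean, and similarity is taken as the spatial Pearson correlation with the group map.

```python
import numpy as np

rng = np.random.default_rng(2)
n_patients, n_parcels = 436, 718    # n_parcels is a hypothetical parcel count

# Synthetic data: one symptom PC score per patient modulates a fixed spatial pattern.
pc_score = rng.normal(size=n_patients)
true_map = rng.normal(size=n_parcels)
gbc = 0.3 * np.outer(pc_score, true_map) + rng.normal(size=(n_patients, n_parcels))

# Derive the map in one half and evaluate individuals in the other half.
perm = rng.permutation(n_patients)
train, test = perm[: n_patients // 2], perm[n_patients // 2 :]

def univariate_map(scores, data):
    """Per-parcel OLS slope of data on scores (one regression per parcel)."""
    s = scores - scores.mean()
    d = data - data.mean(axis=0)
    return (s @ d) / (s @ s)

def row_corr(rows, ref):
    """Pearson correlation of each row with a reference vector."""
    r = rows - rows.mean(axis=1, keepdims=True)
    v = ref - ref.mean()
    return (r @ v) / (np.linalg.norm(r, axis=1) * np.linalg.norm(v))

group_map = univariate_map(pc_score[train], gbc[train])

# Assumption: delta GBC is the deviation from the training-sample mean.
delta_gbc = gbc[test] - gbc[train].mean(axis=0)
similarity = row_corr(delta_gbc, group_map)

# Do patients with higher PC scores show delta GBC maps more similar to the group map?
r_sim_score = np.corrcoef(similarity, pc_score[test])[0, 1]
print("correlation between map similarity and PC score:", round(float(r_sim_score), 3))
```

Deriving the map and scoring individuals in separate halves avoids the circularity of evaluating patients against a map that was estimated from their own data.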

Utilizing independent molecular neuroimaging maps to ‘benchmark’ symptom-relevant neural features

Selecting single patients via stable symptom-neural mapping of BBS solutions is necessary for individual patient segmentation, which may ultimately inform treatment indication. However, it is also critical to be able to relate the derived symptom-neural maps to a given mechanism. Here we highlight two ways to ‘benchmark’ the derived symptom-neural maps by calculating their similarity against independent pharmacological neuroimaging and gene expression maps. We show a proof-of-principle framework for quantifying derived symptom-neural reference maps with two PSD-relevant neuropharmacological manipulations derived in healthy adults via LSD and ketamine. These analyses revealed that selecting single patients, via the derived symptom-neural mapping solution, can yield above-chance quantitative correspondence to a given molecular target map. These data highlight an important effect: it is possible to construct a ‘strong inference’ (Platt, 1964) evaluation of single patients’ differential similarity to one molecular target map versus another. For instance, this approach could be applied to maps associated with already approved PSD treatments (such as clozapine, olanzapine, or chlorpromazine [Lally and MacCabe, 2015; Miyamoto et al., 2005]) to identify patients with symptom-neural configurations that best capture available treatment-covarying neural targets.
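The differential-similarity idea can be sketched as follows. This is an illustration under stated assumptions and not the procedure used in this study: the two target maps are random stand-ins for independent molecular maps, the parcel count is hypothetical, and contrasting Fisher z-transformed spatial correlations is one simple choice of comparison statistic.

```python
import numpy as np

def spatial_r(a, b):
    """Spatial Pearson correlation between two parcel-wise maps."""
    return float(np.corrcoef(a, b)[0, 1])

rng = np.random.default_rng(3)
n_parcels = 718                       # hypothetical parcel count

# Random stand-ins for two independent molecular target maps.
target_map_a = rng.normal(size=n_parcels)
target_map_b = rng.normal(size=n_parcels)

# Synthetic patient whose delta GBC map partially resembles target A only.
patient_delta = 0.5 * target_map_a + rng.normal(size=n_parcels)

r_a = spatial_r(patient_delta, target_map_a)
r_b = spatial_r(patient_delta, target_map_b)

# Assumption: contrast the two similarities on the Fisher z scale.
differential = np.arctanh(r_a) - np.arctanh(r_b)

print("similarity to target A:", round(r_a, 3))
print("similarity to target B:", round(r_b, 3))
print("differential similarity (z scale):", round(float(differential), 3))
```

In real data, significance testing of such spatial correlations would need a null model that accounts for spatial autocorrelation in brain maps, which this sketch omits.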

Relatedly, AHBA gene expression maps (Burt et al., 2018) may provide an a priori benchmark for treatment targets that may be associated with a given receptor profile. Here we show that identified BBS maps exhibit spatial correspondence with neural gene expression maps implicated in PSD – namely serotonin, GABA and interneuron gene expression. This gene-to-BBS mapping could then be used to select those patients who exhibit high correspondence to a given gene expression target.

Collectively, this framework could inform empirically testable treatment selection methods (e.g. a patient may benefit from ketamine, but not serotonin agonists such as LSD/psilocybin). In turn, this independent molecular benchmarking framework could be extended to other approaches (e.g. positron emission tomography (PET) maps reflecting specific neural receptor density patterns [Farde et al., 1986; Arakawa et al., 2008]) and iteratively optimized for quantitative patient-specific selection against actionable molecular targets.

Considerations for generalizing solutions across time, severity, and mental health spectra

There are several constraints of the current result that require future optimization – namely the ability to generalize across time (early course vs. chronic patients), across a range of symptom severity (e.g. severe psychotic episode or persistent low-severity psychosis) and across distinct symptom spectra (e.g. mood). This applies to both the low-rank symptom solution and the resulting symptom-neural mapping. It is possible that the derived lower-dimensional symptom solution, and consequently the symptom-neural mapping solution, exhibits either time-dependent (i.e. state) or severity-dependent (i.e. trait) re-configuration. Relatedly, medication dose, type, and timing may also impact the solution. Another important aspect that will require further characterization is the possibility of oblique axes in the symptom-neural geometry. While orthogonal axes derived via PCA were appropriate here and similar to the ICA-derived axes in this solution, it is possible that oblique dimensions more clearly reflect the geometry of other psychiatric spectra and/or other stages in disease progression. For example, oblique components may better capture dimensions of neurobehavioral variation in a sample of prodromal individuals, as these patients are exhibiting early-stage psychosis-like symptoms and may show signs of diverging along different trajectories.

Critically, these factors should constitute key extensions of an iteratively more robust model for individualized symptom-neural mapping across the PSD and other psychiatric spectra. Relatedly, it will be important to identify the ‘limits’ of a given BBS solution – namely a PSD-derived effect may not generalize into the mood spectrum (i.e. both the symptom space and the resulting symptom-neural mapping are orthogonal). It will be important to evaluate if this framework can be used to initialize symptom-neural mapping across other mental health symptom spectra, such as mood/anxiety disorders.

These types of questions will require longitudinal and clinically diverse studies that start prior to the onset of full-blown symptoms (e.g. the North American Prodrome Longitudinal Study (NAPLS) [Addington et al., 2012; Seidman et al., 2010]). A corollary of this point is that ∼50% of unexplained symptom variance in the current PCA solution necessitates larger samples with adequate power to map this subtle, but perhaps clinically essential, PSD variation.