Sampling motion trajectories during hippocampal theta sequences

Abstract

Efficient planning in complex environments requires that uncertainty associated with current inferences and possible consequences of forthcoming actions is represented. Representation of uncertainty has been established in sensory systems during simple perceptual decision making tasks but it remains unclear if complex cognitive computations such as planning and navigation are also supported by probabilistic neural representations. Here, we capitalized on gradually changing uncertainty along planned motion trajectories during hippocampal theta sequences to capture signatures of uncertainty representation in population responses. In contrast with prominent theories, we found no evidence of encoding parameters of probability distributions in the momentary population activity recorded in an open-field navigation task in rats. Instead, uncertainty was encoded sequentially by sampling motion trajectories randomly and efficiently in subsequent theta cycles from the distribution of potential trajectories. Our analysis is the first to demonstrate that the hippocampus is well equipped to contribute to optimal planning by representing uncertainty.

Editor's evaluation

This paper will be of interest to neuroscientists interested in predictive coding and planning. It presents a novel analysis of hippocampal place cells during exploration of an open arena. It performs a comprehensive comparison of real and synthetic data to determine which encoding model best explains population activity in the hippocampus.

https://doi.org/10.7554/eLife.74058.sa0

Introduction

Model-based planning and predictions are necessary for flexible behavior in a range of cognitive tasks. In particular, navigation is a domain that is ecologically highly relevant not only for humans but for rodents as well, which established a field for parallel investigation of the theory of planning, the underlying cognitive computations, and their neural underpinnings (Hunt et al., 2021; Mattar and Lengyel, 2022). Importantly, predictions extending into the future have to cope with uncertainty coming from multiple sources: uncertainty in the current state of the environment (our current location relative to a dangerous spot, the satiety of a predator or the actual geometry of the environment) and the availability of multiple future options when evaluating upcoming choices (Glimcher, 2003; Redish, 2016). Whether and how this planning-related uncertainty is represented in the brain is not known.

The hippocampus has been established as one of the brain areas critically involved in both spatial navigation and more abstract planning (O’Keefe and Nadel, 1978; Miller et al., 2017). Recent progress in recording techniques and analysis methods largely contributed to understanding of the neuronal mechanisms underlying such computations (Pfeiffer and Foster, 2013; Kay et al., 2020). A crucial insight gained about the neural code underlying navigation is that neuron populations in the hippocampus represent the trajectory of the animal on multiple time scales: Not only the current position of the animal can be read out at the behavioral time scale (O’Keefe and Nadel, 1978; Wilson and McNaughton, 1993), but also trajectories starting in the past and ending in the near future are repeatedly expressed on a shorter time scale at accelerated speed during individual cycles of the 6–10 Hz theta oscillation (theta sequences, Foster and Wilson, 2007, Figure 1a). Moreover, features characteristic of planning can be identified in the properties of encoded trajectories, such as their dependence on the immediate context the animal is in, and on the span of the current run, future choices and rewards (Johnson and Redish, 2007; Gupta et al., 2012; Wikenheiser and Redish, 2015; Tang et al., 2021; Zheng et al., 2020). These data provide strong support for a computational framework where planning relies on sequential activity patterns in the hippocampus delineating future locations based on the current beliefs of the animal (Stachenfeld et al., 2017; Miller et al., 2017). Whether hippocampal computations also take into account the uncertainty associated with planning and thus the population activity represents the uncertainty of the encoded trajectories has not been studied yet.

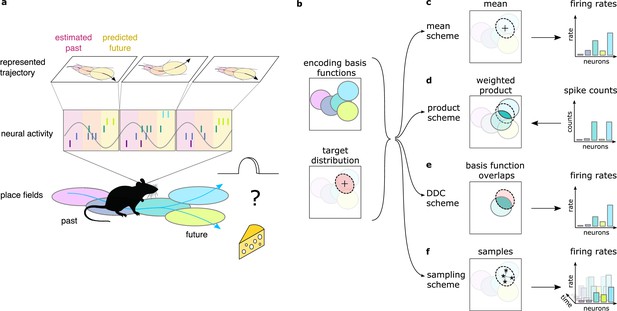

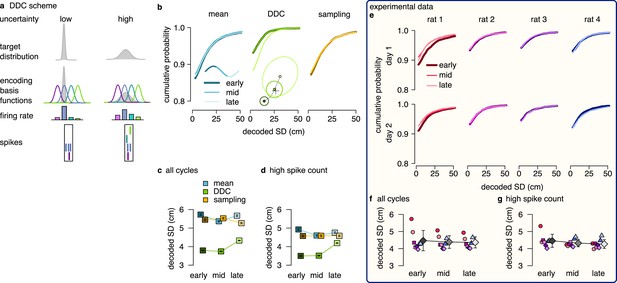

Theta sequences, uncertainty and variability.

(a) Schematic showing the way hippocampal place cell activity represents possible trajectories in subsequent theta cycles during navigation. (b-f) Schemes for representing a target probability distribution (b, bottom) by the activity of a population of neurons each associated with an encoding basis function (related to their place field, Methods; b, top). (c) In the mean scheme the firing rates of the neurons (right, colored bars) are defined as the value of their basis functions at the mean of the target distribution (left, cross). (d) Similar to other schemes, the product scheme also defines a mapping between the probability distribution and the activity of the neuron population but it is easier to understand this mapping in the reverse direction (arrow): from the spike counts (right) to the represented distribution (left). The spike count of each neuron (right) can be considered as its vote for the contribution of its tuning curve to the represented distribution, and ultimately the target distribution is approximated as the weighted product of these tuning curves (left). (e) In the DDC scheme the firing rate of each neuron (right) is defined by the overlap between the target distribution and the basis function (left). (f) In the sampling scheme the firing rate of the neurons at each point in time (right) equals the value of their basis functions at the location sampled from the target distribution (left, asterisks).

Neuronal representations of uncertainty have been extensively studied in sensory systems (Ma et al., 2006; Orbán et al., 2016; Vértes and Sahani, 2018; Walker et al., 2020). Schemes for representing uncertainty fall into three broad categories (Figure 1d–f). In the first two categories (product and Distributed Distributional Code, DDC, schemes), the firing rate of a population encodes a complete probability distribution over spatial locations instantaneously at any given time by representing the parameters of the distribution (Wainwright and Jordan, 2007, Methods). In the product scheme, the firing rates of neurons encode a probability distribution through the product of the tuning functions (basis functions, Methods) of the coactive neurons (Ma et al., 2006; Pouget et al., 2013, Figure 1d). In contrast, in the DDC scheme a population of neurons represents a probability distribution by signalling the overlap between the distribution and the basis function of individual neurons (Zemel et al., 1998; Vértes and Sahani, 2018, Figure 1e). In the third category, the sampling scheme, the population activity represents a single value sampled stochastically from the target distribution. In this case uncertainty is represented sequentially by the across-time variability of the neuronal activity (Fiser et al., 2010, Figure 1f). These coding schemes provide a firm theoretical background to investigate the representation of uncertainty in the hippocampus. Importantly, all these schemes have been developed for static features, where the represented features do not change in time (Ma et al., 2006; Orbán et al., 2016; but see Kutschireiter et al., 2017). In contrast, trajectories represented in the hippocampus encode the temporally changing position of the animal. Here, we extend the coding schemes to accommodate the encoding of uncertainty associated with dynamic motion trajectories and investigate their neuronal signatures in rats navigating an open environment.
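To make the distinction between the four schemes concrete, the following is a minimal one-dimensional sketch, not the implementation used in the paper. It assumes Gaussian basis functions of a common width, a Gaussian target distribution and a flat prior; the function names, the `gain` parameter and the grid of place field centres are hypothetical choices made for illustration only.

```python
import numpy as np

def basis_values(x, centers, width):
    """Gaussian basis function of every neuron evaluated at location x."""
    return np.exp(-0.5 * ((x - centers) / width) ** 2)

def mean_scheme(mu, sd, centers, width, gain=10.0):
    # Mean scheme: rates depend only on the mean of the target; sd is ignored.
    return gain * basis_values(mu, centers, width)

def ddc_scheme(mu, sd, centers, width, gain=10.0):
    # DDC scheme: rate = overlap (expectation) of each basis function under
    # the target distribution; closed form for a Gaussian target.
    total_var = width ** 2 + sd ** 2
    overlap = width / np.sqrt(total_var) * np.exp(
        -0.5 * (mu - centers) ** 2 / total_var)
    return gain * overlap

def sampling_scheme(mu, sd, centers, width, rng, gain=10.0):
    # Sampling scheme: basis functions evaluated at one stochastic sample.
    return gain * basis_values(rng.normal(mu, sd), centers, width)

def product_readout(spike_counts, centers, width):
    # Product scheme, read from spike counts to the represented distribution:
    # a product of Gaussian tuning curves raised to the spike counts is
    # itself Gaussian, with SD shrinking as the total spike count grows.
    total = spike_counts.sum()
    return (spike_counts * centers).sum() / total, width / np.sqrt(total)

centers = np.linspace(0.0, 2.0, 21)      # hypothetical field centres (m)
rng = np.random.default_rng(0)
narrow = ddc_scheme(1.0, 0.05, centers, width=0.1)
wide = ddc_scheme(1.0, 0.40, centers, width=0.1)
one_sample = sampling_scheme(1.0, 0.40, centers, 0.1, rng)
```

In this sketch a wider target distribution recruits more neurons in the DDC scheme, leaves the mean scheme unchanged, and appears only as cycle-to-cycle variability in the sampling scheme.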

Probabilistic planning in an open field entails the representation of subjective beliefs about the past, present, and future states, which requires that a continuous probability distribution over possible locations is represented. Previous studies investigated prospective codes during theta sequences in a constrained setting, in which binary decisions were required in a spatial navigation task (Johnson and Redish, 2007; Kay et al., 2020; Tang et al., 2021). These studies found that alternative choices were encoded sequentially in distinct theta cycles suggesting a sampling based representation. However, the dominant source of future uncertainty in these tasks is directly associated with the binary choice of the animal (left or right) and it remains unclear whether this generalizes to other sources of uncertainty relevant in open field navigation. In particular, it has been widely reported that the hippocampal spatial code has different properties in linear tracks, where the physical movement of the animal is constrained by the environment, than during open-field navigation (Buzsáki, 2005). Moreover, these previous studies did not attempt to test the consistency of the hippocampal code with alternative schemes for representing uncertainty. Thus, the way hippocampal populations contribute to probabilistic planning during general open-field navigation remains an open question.

In the present paper, we propose that the hippocampus is performing probabilistic inference in a model that represents the temporal dependencies between spatial locations. Using a computational model, we demonstrate that key features of the hippocampal single neuron and population activity are compatible with representing uncertainty of motion trajectories in the population activity during theta sequences. Further, by developing novel computational measures, we pitch three alternative schemes of uncertainty representation and a scheme that lacks the capacity to represent uncertainty, the mean scheme (Figure 1c), against each other and demonstrate that hippocampal activity does not show the hallmarks of schemes encoding probability distributions instantaneously. Instead, we demonstrate that the large and structured trial to trial variability between subsequent theta cycles is consistent with stochastic sampling from potential future trajectories but not with a scheme ignoring the uncertainty by representing only the most likely trajectory. Finally we confirm previous results in simpler mazes by showing that the trajectories sampled in subsequent theta cycles tend to be anti-correlated, a signature of efficient sampling algorithms. These results demonstrate that the brain employs probabilistic computations not only in sensory areas during perceptual decision making but also in associative cortices during naturalistic, high-level cognitive processes.

Results

Neural variability increases within theta cycle

A key insight of probabilistic computations is that during planning uncertainty increases as trajectories proceed into the more distant future (Murphy, 2012; Sezener et al., 2019). As a consequence, if planned trajectories are encoded in individual theta sequences, the uncertainty associated with the represented positions increases within a theta cycle (Figure 1a). This systematic change in the uncertainty of the represented position during theta cycles is a crucial observation that enabled us to investigate the neuronal signatures of uncertainty during hippocampal theta sequences. For this, we analyzed a previously published dataset (Pfeiffer and Foster, 2013). Briefly, rats were trained to collect food reward in a 2×2 m open arena from one of the 36 uniformly distributed food wells, alternating between random foraging and a spatial memory task (Pfeiffer and Foster, 2013). The position of the animal was recorded via a pair of distinctly coloured head-mounted LED lights. Goal-directed navigation in an open arena requires continuous monitoring and online correction of the deviations between the intended and actual motion trajectories. While sequences during both sharp waves and theta oscillations have been implicated in planning, here we focused on theta sequences as they are more strongly tied to the current position and thus averaging over many thousands of theta cycles can provide the necessary statistical power to identify the neuronal correlate of uncertainty representation.

Activity of hippocampal neurons was recorded by 20 tetrodes targeted to dorsal hippocampal area CA1 (Pfeiffer and Foster, 2013). Individual neurons typically had location-related activity (Figure 2b, see also Figure 4—figure supplement 1a), but their spike trains were highly variable (Skaggs et al., 1996; Fenton and Muller, 1998, Figure 2a). We used the empirical tuning curves (i.e., place fields) and a Bayesian decoder (Zhang et al., 1998) to estimate the represented spatial position from the spike trains of the recorded population in overlapping 20ms time bins. Theta oscillations partition time into discrete segments and analysis was performed in these cycles separately (Figure 2c). Despite the large number of recorded neurons (68–242 putative excitatory cells in 8 sessions from 4 rats), position decoding had a limited accuracy (Fisher lower bound on the decoding error in 20ms bins: 16–30 cm vs. typical trajectory length ∼20 cm). Yet, in high spike count cycles we could approximately reconstruct the trajectories encoded in individual theta cycles (Figure 2c). We then compared the reconstructed trajectories to the actual trajectory of the animal. We observed substantial deviation between the decoded trajectories and the motion trajectory of the animal: decoded trajectories typically started near the actual location of the animal and then proceeded forward (Foster and Wilson, 2007) often departing in both directions from the actual motion trajectory (Kay et al., 2020; Figure 2c).
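The decoding step can be illustrated with a minimal sketch of a Bayesian decoder in the spirit of Zhang et al., 1998. It assumes independent Poisson spiking and a flat prior over spatial bins; the function name `decode_position`, the array layout and the small constant `eps` are hypothetical and not taken from the original analysis code.

```python
import numpy as np

def decode_position(spike_counts, rate_maps, bin_dur=0.02, eps=1e-9):
    """Posterior over spatial bins for one time bin.

    spike_counts: (n_cells,) spike counts in the time bin
    rate_maps:    (n_cells, n_bins) empirical tuning curves in Hz
    bin_dur:      duration of the time bin in seconds (20 ms here)
    """
    log_rates = np.log(rate_maps + eps)        # eps avoids log(0)
    # Poisson log-likelihood up to a constant that does not depend on x.
    log_post = spike_counts @ log_rates - bin_dur * rate_maps.sum(axis=0)
    log_post -= log_post.max()                 # numerical stability
    post = np.exp(log_post)
    return post / post.sum()

# Toy example: two cells with fields at opposite ends of three spatial bins.
rate_maps = np.array([[20.0, 1.0, 1.0],
                      [1.0, 1.0, 20.0]])
posterior = decode_position(np.array([3, 0]), rate_maps)
decoded_bin = int(np.argmax(posterior))
```

With few spikes per 20 ms bin the posterior is broad, which is in line with the limited decoding accuracy reported above.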

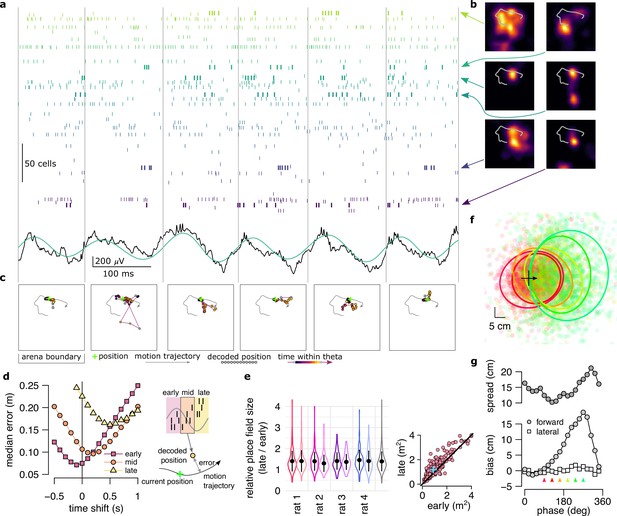

Neural variability increases within theta cycle.

(a) Example spiking activity of 250 cells (top) and raw (black) and theta filtered (green) local field potential (bottom) for 6 consecutive theta cycles (vertical lines). (b) Place fields of 6 selected cells in a 2×2 m open arena. Gray line indicates the motion trajectory during the run episode analysed in a-c. (c) Position decoded in overlapping 20 ms time bins with 5 ms shift (circles) during the 6 theta cycles shown in a. Time within theta cycle is color coded, gray indicates bins on the border of two cycles. Gray line shows the motion trajectory during the run episode, green crosses indicate the location of the animal in each cycle. (d) Decoding error for early, mid and late phase spikes (inset, top) calculated as a function of the time shift of the animal’s position along its motion trajectory (inset, right). For the analysis in panels d-e each theta cycle was divided into 3 parts with equal spike counts. (e) Relative place field size in late versus early theta spikes for the eight sessions (error bars: SD across n=80-263 cells). Gray bar: average and SD across all sessions (n=1264 cells). Inset: Place field size (ratemap area above 10% of the max firing rate) estimated from late vs. early theta spikes in an example session (individual dots correspond to individual place cells, blue cross: median). Only putative excitatory cells are included. To estimate the ratemaps, we shifted the reference positions by the time shift that minimized the decoding error for the given theta phase (see panel d). (f) Decoded positions (dots, in 20 ms bins with 5 ms shift) relative to the instantaneous position and motion direction (cross), and 0.5 confidence interval (CI) ellipses for six different theta phases (color, as in panel g). (g) Bias (bottom, mean of the decoded positions) and spread (top, see Methods) of decoded positions as a function of theta phase for an example session. Panels d,f,g show data from theta cycles with the highest 10% of spike counts.

To systematically analyse how this deviation depends on the theta phase, we sorted spikes into three classes (early, mid and late). For any given class, we decoded position from the spikes and compared it to the position of the animal shifted forward and backward along the motion trajectory. Time shift dependence of the accuracy of the decoders reveals the most likely portion of the trajectory the given class encodes (Figure 2d, see also Figure 4—figure supplement 2). For early spikes, the minimum of the average decoding error was shifted backward by ∼100ms, while for late spikes +500ms forward shift minimized the decoding error. Note that the position identified by the minima can only establish positions relative to the LED used to record the position of the animal. The relative shifts in the minima of the decoding error across different phases of theta are not affected by the arbitrariness of the positioning of the LED sources. The observed systematic biases indicate that theta sequences tended to start slightly behind the animal and extended into the near future (Foster and Wilson, 2007; Gupta et al., 2012).
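The time-shift analysis can be sketched as follows. This is an illustrative reconstruction under simple assumptions (linear interpolation of the tracked trajectory, mean Euclidean error), with the hypothetical function name `shift_error_curve`; it is not the original analysis code.

```python
import numpy as np

def shift_error_curve(decoded_xy, decode_times, traj_times, traj_xy, shifts):
    """Mean decoding error as a function of the time shift of the reference.

    decoded_xy:   (n, 2) positions decoded from one class of spikes
    decode_times: (n,) times of the decoded bins (s)
    traj_times:   (m,) time stamps of the tracked motion trajectory (s)
    traj_xy:      (m, 2) tracked positions
    shifts:       candidate time shifts (s); positive values look forward
    """
    errors = []
    for dt in shifts:
        t = decode_times + dt
        # Position of the animal shifted by dt along its own trajectory.
        ref = np.column_stack(
            [np.interp(t, traj_times, traj_xy[:, k]) for k in range(2)])
        errors.append(np.mean(np.linalg.norm(decoded_xy - ref, axis=1)))
    return np.array(errors)

# Toy example: the decoded positions lead the animal by 0.5 s on a straight run.
t = np.linspace(0.0, 10.0, 1001)
traj = np.column_stack([t, np.zeros_like(t)])      # 1 m/s along x
dec_t = np.linspace(2.0, 8.0, 50)
dec_xy = np.column_stack([dec_t + 0.5, np.zeros_like(dec_t)])
shifts = np.array([-0.5, 0.0, 0.5])
errors = shift_error_curve(dec_xy, dec_t, t, traj, shifts)
best_shift = shifts[np.argmin(errors)]
```

The shift that minimizes the curve indicates which portion of the trajectory a given class of spikes most likely encodes, and the height of the minimum measures the residual deviation at that shift.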

Further, the minima of the decoding errors showed a clear tendency: position decoded from later phases displayed larger average deviation from the motion trajectory of the animal (Figure 2d). At the single neuron level, increased deviations at late theta phases resulted in the expansion of place fields (Skaggs et al., 1996): Place fields were more compact when estimated from early-phase spikes than those calculated from late-phase activity (Figure 2e). At the population level, larger deviation between the motion trajectory and the late phase-decoded future positions can be attributed to the increased variability in encoded possible future locations. Indeed, when we aligned the decoded locations relative to the current location and motion direction of the animal, we observed that the spread of the decoded locations increased in parallel with the forward progress of their mean within theta cycles (Figure 2f, g). Taken together, this analysis demonstrated that the variability of the decoded positions is larger at the end of the theta cycle when encoding more uncertain future locations than at the beginning of the cycle when representing the past position.
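The alignment of decoded locations to the animal's position and motion direction can be sketched as below. The exact definition of spread is given in the Methods and is not reproduced here; this sketch assumes, for illustration only, that spread is the root-mean-square distance of the aligned positions from their mean. The function names are hypothetical.

```python
import numpy as np

def to_egocentric(decoded_xy, animal_xy, heading):
    """Express decoded positions relative to the animal.

    decoded_xy, animal_xy: (n, 2) arrays; heading: (n,) or scalar, radians.
    Returns (n, 2): forward (along motion direction) and lateral offsets.
    """
    d = decoded_xy - animal_xy
    c, s = np.cos(heading), np.sin(heading)
    forward = d[:, 0] * c + d[:, 1] * s
    lateral = -d[:, 0] * s + d[:, 1] * c
    return np.column_stack([forward, lateral])

def bias_and_spread(rel_xy):
    """Bias: mean aligned position. Spread: RMS distance from that mean
    (an assumed definition, see the lead-in)."""
    bias = rel_xy.mean(axis=0)
    spread = np.sqrt(np.mean(np.sum((rel_xy - bias) ** 2, axis=1)))
    return bias, spread

# Toy example: animal heading along +y, decoded positions 10 cm ahead.
animal = np.zeros((4, 2))
decoded = animal + np.array([0.0, 0.1])
rel = to_egocentric(decoded, animal, np.pi / 2)
bias, spread = bias_and_spread(rel)
```

Computing these two quantities separately for each theta phase bin gives curves of the kind shown in Figure 2g.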

The observed increase of the variability across theta cycles could be a direct consequence of encoding variable two-dimensional trajectories (Figure 4—figure supplement 2a, b) or it may be a signature of the neuronal representation of uncertainty. In the next sections, we set up a synthetic dataset to investigate the theta sequences and their variability in the four alternative coding schemes.

Synthetic data: testbed for discriminating the encoding schemes

To analyse the distinctive properties of the different coding schemes and to develop specific measures capable of discriminating them, we generated a synthetic dataset in which both the behavioral component and the neural component could be precisely controlled. The behavioral component was matched to the natural movement statistics of rats during navigating a 2D environment. The neural component was constructed such that it could accommodate the alternative encoding schemes for prospective representations during navigation.

Our synthetic dataset had three consecutive levels. First, we simulated a planned trajectory for the rat in a two-dimensional open arena by allowing smooth changes in the speed and motion direction (Methods, Figure 3—figure supplement 1a, b). Second, similar to the real situation, the simulated animal did not have access to its true position in a given theta cycle, but had to infer it from the sensory inputs it had observed in the past (Methods). To perform this inference and predict its future position, the animal used a model of its own movements in the environment. In this dynamical generative model, the result of the inference is a posterior distribution over possible trajectories starting a fixed number of steps back in the past and ending $n_f$ steps forward in the future. To generate the motion trajectory of the animal, noisy motor commands were calculated from the difference between its planned trajectory and its inferred current position (Methods, Figure 3—figure supplement 1a, b). The kinematics were matched between the simulated and experimental animals (Figure 3—figure supplement 2).

Third, in our simulations the hippocampal population activity encoded an inferred trajectory at an accelerated speed in each theta cycle such that the trajectory started in the past at the beginning of the simulated theta cycle and led to the predicted future states (locations) by the end of the theta cycle (Figure 3a–c). To approximately match the size of the synthetic and experimental data, we simulated the activity of 200 hippocampal pyramidal cells (place cells). Firing rates of pyramidal cells depended on the encoded spatial location using one of the four different coding schemes (Methods): In the mean code, the population encoded the single most likely trajectory without representing uncertainty. In the product and DDC schemes, a snapshot of the population activity at any given theta phase encoded the estimated past or predicted future part of the trajectory in the form of a probability distribution (Methods). Finally, in the sampling scheme, in each theta cycle a single trajectory was sampled stochastically from the distribution of possible trajectories (Figure 3—figure supplement 1). Spikes were generated from the firing rates independently across neurons via an inhomogeneous Poisson process. The posterior distribution was updated every ∼100 ms, matching the length of theta cycles. Importantly, all four encoding schemes yielded single neuron and population activity dynamics consistent with the known features of hippocampal population activity including spatial tuning, phase precession (Figure 3d) and theta sequences (Figure 3e, see also Figure 3—figure supplement 3).
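The spike generation step can be sketched as follows for the case where a single location is encoded at each moment (as in the mean or sampling scheme). This is a simplified illustration, not the simulation code of the paper: it assumes isotropic Gaussian place fields and approximates the inhomogeneous Poisson process by independent Poisson counts in short time steps; `simulate_spikes` and all parameter values are hypothetical.

```python
import numpy as np

def simulate_spikes(encoded_xy, centers, width, peak_rate, dt, rng):
    """Poisson spike counts for a sequence of encoded locations.

    encoded_xy: (n_steps, 2) locations encoded within a theta cycle
    centers:    (n_cells, 2) place field centres
    width:      place field SD (same units as positions)
    peak_rate:  firing rate at the field centre (Hz)
    dt:         duration of one time step (s)
    Returns an (n_steps, n_cells) array of spike counts.
    """
    diff = encoded_xy[:, None, :] - centers[None, :, :]
    dist2 = (diff ** 2).sum(axis=2)
    rates = peak_rate * np.exp(-0.5 * dist2 / width ** 2)
    # Counts are drawn independently across neurons and time steps.
    return rng.poisson(rates * dt)

rng = np.random.default_rng(1)
centers = rng.uniform(0.0, 2.0, size=(200, 2))       # 200 cells, 2x2 m arena
trajectory = np.column_stack([np.linspace(0.9, 1.1, 20), np.full(20, 1.0)])
counts = simulate_spikes(trajectory, centers, width=0.15,
                         peak_rate=15.0, dt=0.005, rng=rng)
```

For the product and DDC schemes the firing rates would instead be computed from the whole distribution at each theta phase, while the Poisson step would stay the same.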

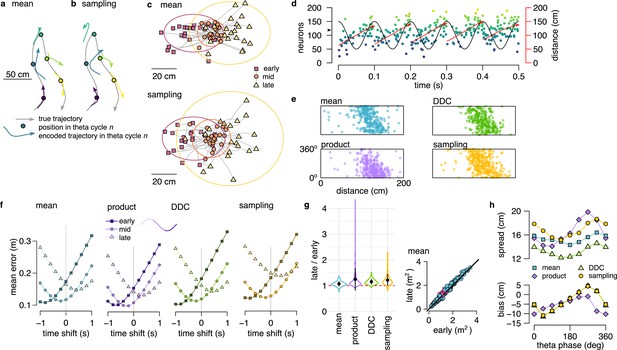

Theta sequences in simulated data.

(a) Motion trajectory of the simulated rat (gray, 10 s) together with its own inferred and predicted most likely (mean) trajectory segments (colored arrows) in five locations separated by 2 s (filled circles). These trajectories were represented in theta cycles using one of the four alternative schemes. (b) Same as panel a, but trajectories sampled from the posterior distribution. (c) Example represented trajectories aligned to the instantaneous position and direction of the simulated animal in the mean (top) and in the sampling (bottom) scheme. Ellipses indicate 50% CI of all theta cycles. Color code indicates start (red), mid (orange) and end (yellow) of the trajectories. (d) Simulated activity of 200 place cells sorted according to the location of their place fields on a linear track (200x10 cm) during an idealized 10 Hz theta oscillation using the mean encoding scheme. Red lines show the 1-dimensional trajectories represented in each theta cycle. Note that the overlap between trajectories is larger here than in panels a-b, because, for clarity, only trajectories at every 20th theta cycle are shown there. (e) Theta phase of spikes of an example simulated neuron (arrowhead in panel d) as a function of the animal’s position in the four coding schemes. (f) Decoding error from early, mid and late phase spikes (highest 5% spike count cycles) as a function of the time shift of the simulated animal’s position in the mean, product, DDC and sampling schemes. (g) Relative place field size in late versus early theta spikes for the four different encoding schemes (error bars: SD over 200 cells). Inset: place field size estimated from late vs. early theta spikes in the mean scheme. Median is indicated with red cross. (h) Decoding bias (bottom) and spread (top) as a function of theta phase for the four different encoding schemes. Decoding was performed in 120° bins with 45° shifts. All theta cycles are included in this analysis, as focusing on the highest spike count cycles highly influences these quantities in the product model.

After generating the synthetic datasets, we investigated how positional information is represented during theta sequences in each of the four alternative coding schemes. We decoded the synthetic population activity in early, mid and late theta phases and compared the estimated position with the actual trajectory of the simulated animal. The deviation between the decoded position and the motion trajectory increased throughout the theta cycle irrespective of the coding scheme (Figure 3f) due to the combined effects of the divergence of possible future trajectories and the increased variability of the encoded locations. Moreover, place fields were significantly larger when estimated from late than early-phase spikes in all four encoding schemes (Figure 3g). Finally, when aligned to the current position and motion direction of the simulated animal, the spread of the decoded locations increased with the advancement of their mean within theta cycles in all four coding schemes (Figure 3h).

Our analyses thus confirmed that the disproportionate increase in the variability of the encoded locations at late theta phase is consistent with encoding trajectories in a dynamical model of the environment irrespective of the representation of the uncertainty. These synthetic datasets provide a functional interpretation of the hippocampal population activity during theta oscillations and offer a unique testbed for contrasting different probabilistic encoding schemes. In the following sections, we will identify hallmarks for each of the encoding schemes of uncertainty and use these hallmarks to discriminate them through the analysis of neural data (Figure 4a).

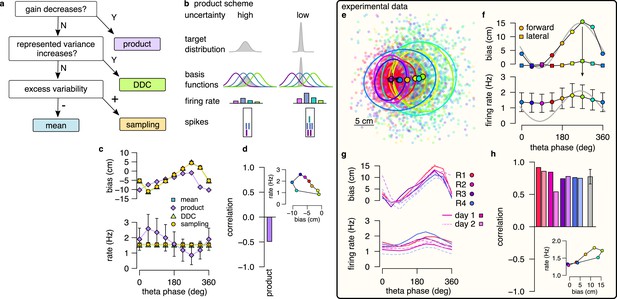

Product scheme: population gain decreases with uncertainty.

(a) Decision-tree for identifying the encoding scheme. (b) Schematic of encoding a high-uncertainty (left) and a low-uncertainty (right) target distribution using the product scheme with 4 neurons. The variance is represented by the gain of the population. (c) Population firing rate (bottom) and forward decoding bias (top) as a function of theta phase for the four schemes in synthetic data. Only the product scheme predicts a systematic change in the firing rate. Error bars show SD across n=17990 theta cycles. (d) Correlation between firing rate and forward bias for the product scheme. Inset: Firing rate as a function of forward bias in the product scheme. Color code is the same as in f. (e) Decoded position relative to the location and motion direction of the animal (black arrow) at eight different theta phases in an example recording session. Filled circles indicate mean, ellipses indicate 50% CI of the data. (f) Forward decoding bias of the decoded position (top) and population firing rate (bottom) as a function of theta phase in an example recording session. Gray line in top and bottom show cosine fit to the forward decoding bias. Error bars show SD across n=9264 theta cycles. (g) Decoding bias (top) and firing rate (bottom) for all animals and sessions (line type). (h) Correlation between firing rate and forward bias for all recorded sessions. Gray bar: mean and SD across the eight sessions. Inset: Firing rate as a function of forward decoding bias in an example session. Bias in (e-g) was calculated using the 5% highest spike count theta cycles. Population firing rate plots show average over all cycles. In this figure, decoding was performed in 120° bins with 45° shifts.

Testing the product scheme: gain

To discriminate the product scheme from other representations, we capitalize on the specific relationship between response intensity of neurons and uncertainty of the represented variable. In a product representation, a probability distribution over a feature, such as the position, is encoded by the product of the neuronal basis functions (Methods). When the basis functions are localized, as in the case of hippocampal place fields, the width of the encoded distribution tends to decrease with the total number of spikes in the population (Ma et al., 2006, Figure 4b, Appendix 1, Figure 4—figure supplement 1). Therefore, we propose using the systematic decrease of the population firing rate (gain) with increasing uncertainty as a hallmark of the product scheme.
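The inverse relationship between total spike count and encoded width can be made explicit in a simplified setting. The derivation below is a sketch under assumptions that are not part of the text above: one-dimensional Gaussian tuning curves $\varphi_i$ with centres $c_i$ and a common width $\sigma$, spike counts $s_i$, and a flat prior. The symbols $S$ and $\bar{c}$ are introduced here for illustration.

```latex
\begin{align}
\varphi_i(x) &= \exp\!\left(-\frac{(x-c_i)^2}{2\sigma^2}\right), \\
q(x \mid s) &\propto \prod_i \varphi_i(x)^{s_i}
  = \exp\!\left(-\frac{1}{2\sigma^2}\sum_i s_i\,(x-c_i)^2\right)
  \propto \exp\!\left(-\frac{S\,(x-\bar{c})^2}{2\sigma^2}\right), \\
S &= \sum_i s_i, \qquad
\bar{c} = \frac{1}{S}\sum_i s_i\,c_i, \qquad
\operatorname{Var}_q[x] = \frac{\sigma^2}{S}.
\end{align}
```

Under these assumptions the represented distribution is Gaussian with a variance inversely proportional to the total spike count $S$, so encoding higher uncertainty requires fewer spikes.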

We first tested for the specificity of the co-modulation of the population gain with uncertainty to the product scheme: we compared gain modulation in the four different coding schemes in our synthetic dataset. In each of the coding schemes, we identified the theta phase with the maximal uncertainty by the maximal forward bias in encoded positions. For this, we decoded positions from spikes using eight overlapping 120° windows in theta cycles and defined the end of the theta cycles based on the maximum of the average forward bias (Figure 4c, top). Then we calculated the average number of spikes in a given 120° window as a function of the theta phase. We found that the product scheme predicted a systematic, ∼threefold modulation of the firing rate within the theta cycle (Figure 4c, bottom). The peak of the firing rate coincided with the start of the encoded trajectory, where the uncertainty is minimal, and the correlation between the firing rate and the forward bias was negative (Figure 4d). The three other coding schemes did not constrain the firing rate of the population to represent probabilistic quantities, and thus the firing rate was independent of the theta phase or the encoded uncertainty.
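The windowing and the correlation measure can be sketched as below. The sketch assumes that spike phases are given in degrees and that the forward bias has already been decoded for each window; the function names are hypothetical and the toy numbers are not data.

```python
import numpy as np

def phase_window_counts(spike_phases_deg, n_windows=8, width=120.0, shift=45.0):
    """Spike counts in overlapping circular theta-phase windows.

    Window k covers [k * shift, k * shift + width) degrees, wrapping at 360.
    """
    starts = shift * np.arange(n_windows)
    phases = np.asarray(spike_phases_deg, dtype=float)
    rel = (phases[:, None] - starts[None, :]) % 360.0
    return (rel < width).sum(axis=0)

def gain_bias_correlation(rate_by_phase, forward_bias_by_phase):
    """Pearson correlation between population rate and forward bias
    across phase windows. The product scheme predicts a negative value."""
    return np.corrcoef(rate_by_phase, forward_bias_by_phase)[0, 1]

# Toy example with four spikes in one theta cycle.
counts = phase_window_counts([10.0, 50.0, 130.0, 350.0])
# Toy profile in which the rate falls as the forward bias grows.
r = gain_bias_correlation(np.array([3.0, 2.0, 1.0]), np.array([0.0, 1.0, 2.0]))
```

The sign of this correlation is the quantity compared between the synthetic schemes and the recorded data.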

After demonstrating the specificity of uncertainty-related gain modulation to the product scheme, we returned to the experimental dataset and applied the same analysis to neuronal activity recorded from freely navigating rats. We first decoded the spikes during theta oscillation falling in eight overlapping 120° windows and aligned the decoded locations relative to the animals’ position and motion direction. We confirmed that the encoded location varied systematically within the theta cycle from the beginning towards the end of the theta cycle both when considering all theta cycles (Figure 4—figure supplement 2) and when constraining the analysis to theta cycles with the highest 5% spike count (Figure 4e, f; Figure 2f, g). Maximal population firing rate coincided with the end of the theta sequences, which corresponds to future positions characterized by the highest uncertainty (Figure 4f). Thus, a positive correlation emerged between the represented uncertainty and the population gain (Figure 4h). This result was consistent across recording sessions and animals (Figure 4g, h) and was also confirmed in an independent dataset where rats were running on a linear track (Figure 4—figure supplement 3a–d; Grosmark et al., 2016).

This observation is in sharp contrast with the prediction of the product encoding scheme, where the maximum of the firing rate should be near the beginning of the theta sequences (Figure 4c). The other encoding schemes are neutral about the theta modulation of the firing rate, and therefore they are all consistent with the observed small phase-modulation of the firing rate.

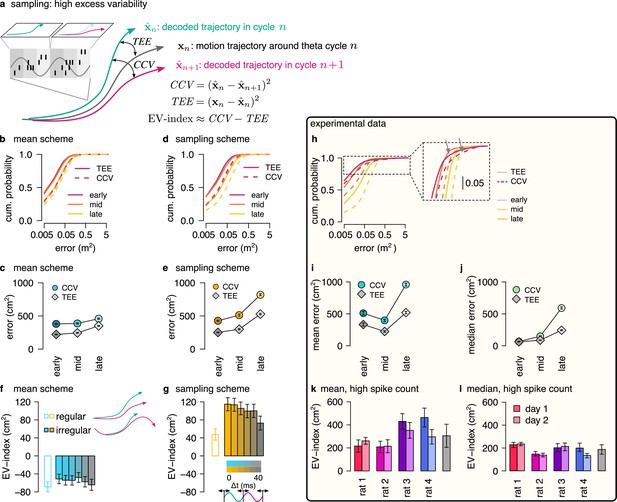

Testing the DDC scheme: diversity

Next, we set out to discriminate the DDC scheme from the mean and the sampling schemes. In the DDC scheme, neuronal firing rate represents the overlap of the basis functions with the encoded distribution (Figure 1e; Zemel et al., 1998; Vértes and Sahani, 2018). Intuitively, in this scheme the diversity of the co-active neurons increases as the variance of the encoded distribution increases: when the encoded variance is small, only a small fraction of basis functions will overlap with the distribution, thus limiting the number of neurons participating in encoding (Figure 5a). Conversely, a diverse set of neurons becomes co-active when encoding a distribution of high variance (Figure 5a). Therefore, the set of active neurons reflects the width of the encoded probability distribution. This feature of the population code carries information about uncertainty, which can thus be decoded from the population activity (Figure 5—figure supplement 1b). We used a maximum likelihood decoder to estimate the first two moments (mean and SD) of the encoded distribution (Methods, Equation 19). Intuitively, increased uncertainty at later stages of the trajectory is expected to be reflected in a parallel increase in the decoded SD, and we propose to use this systematic increase of decoded SD as a hallmark of DDC encoding.
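The idea of decoding both the mean and the SD from spike counts can be illustrated with a simplified one-dimensional sketch. It is not Equation 19 of the Methods: it assumes Gaussian basis functions of a common width, a Gaussian encoded distribution, independent Poisson spike counts, and a brute-force grid search over candidate parameters. All function names and parameter values are hypothetical.

```python
import numpy as np

def ddc_expected_counts(mu, sd, centers, width, gain):
    """Expected spike counts when each neuron signals the overlap between
    its Gaussian basis function and a Gaussian N(mu, sd^2)."""
    total_var = width ** 2 + sd ** 2
    return gain * width / np.sqrt(total_var) * np.exp(
        -0.5 * (mu - centers) ** 2 / total_var)

def decode_mean_sd(counts, centers, width, gain, mu_grid, sd_grid):
    """Maximum likelihood estimate of (mean, SD) on a parameter grid."""
    best, best_ll = (None, None), -np.inf
    for mu in mu_grid:
        for sd in sd_grid:
            lam = ddc_expected_counts(mu, sd, centers, width, gain)
            # Poisson log-likelihood up to a term independent of (mu, sd).
            ll = np.sum(counts * np.log(lam + 1e-12) - lam)
            if ll > best_ll:
                best, best_ll = (mu, sd), ll
    return best

centers = np.linspace(-1.0, 2.0, 31)
mu_grid = np.linspace(0.0, 1.0, 11)
sd_grid = np.array([0.05, 0.1, 0.2, 0.4])
rng = np.random.default_rng(2)
counts = rng.poisson(ddc_expected_counts(0.3, 0.2, centers, 0.15, 20.0))
mu_hat, sd_hat = decode_mean_sd(counts, centers, 0.15, 20.0, mu_grid, sd_grid)
```

If the population used a mean or sampling code instead, the same decoder would still return an SD estimate, but that estimate should not grow systematically with theta phase, which is the contrast exploited in the analysis.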

DDC scheme: diversity increases with uncertainty.

(a) Schematic of encoding a narrow (left) and a wide (right) distribution with spike-based DDC using four neurons. Intuitively, the standard deviation (SD) is represented by the diversity of the co-active neurons. (b) Cumulative distribution function (CDF, across theta cycles) of the decoded SD from spikes at different theta phases for the mean (left), DDC (middle) and sampling schemes (right) in the simulated dataset. (c) Mean and SE (across n=14954 theta cycles) of decoded SD as a function of theta phase for the different schemes in the simulated dataset. Only the DDC code predicts a slight, but significant increase in the decoded SD at late theta phases. (d) Same as panel c, calculated from theta cycles with higher than median spike count (SE across n=7214 theta cycles). (e) CDF of the decoded SD from spikes in early, mid and late phase of the theta cycles (across all cycles) for the analysed sessions. (f, g) Mean of the decoded SD for each animal from early, mid and late theta spikes using all theta cycles (f) or theta cycles with higher than median spike count (g). Grey symbols show mean and SD across sessions. See also Figure 5—figure supplement 2 for similar analysis using the estimated encoding basis functions instead of the empirical tuning curves for decoding and Figure 5—figure supplement 3 for the predictions of the product scheme.

First, we turned to our synthetic dataset to demonstrate that systematic changes in decoded SD are specific to the DDC scheme. In each of the three remaining coding schemes (mean, DDC and sampling), we divided the population activity into three distinct windows relative to the theta cycle (early, mid, and late). We decoded the mean and the SD of the encoded distribution of trajectories (Figure 5b inset) using the empirical tuning curves in each theta cycle and analysed the systematic changes in the decoded SD values from early to late theta phase (Methods). We found a systematic and significant increase in the decoded SD in the DDC scheme from early to late theta phases (one-sided, two sample Kolmogorov-Smirnov test, ), whereas the decoded SD was independent of the theta phase for the mean and the sampling schemes (KS test, mean scheme: , sampling scheme: , Figure 5b, c). This result was robust against using the theta cycles with higher than median spike count for the analysis (Figure 5d, mean: , DDC: , sampling: ) or against using the estimated basis functions instead of the empirical tuning curves for decoding (Figure 5—figure supplement 2b; Appendix 2). Thus, our analysis of synthetic data demonstrated that the decoded SD is a reliable measure to discriminate the DDC scheme from sampling or mean encoding.

After testing on synthetic data, we repeated the same analysis on the experimental dataset. We divided each theta cycle into three windows of equal spike counts (early, mid, and late) and decoded the mean and the SD of the encoded trajectories from the population activity in each window. We found that the decoded SDs had a nearly identical distribution at the three theta phases for all recording sessions (Figure 5e). The mean of the decoded SD did not change significantly or consistently across the recording sessions, either when we analysed all theta cycles (early vs. late, KS test for all sessions, Figure 5e, f), when we constrained the analysis to the half of the cycles with higher than median spike count (KS test, for all sessions, Figure 5g), or when we used the estimated encoding basis functions instead of the empirical tuning curves for decoding (Figure 5—figure supplement 2d). We obtained similar results in a different dataset with rats running on a linear track (Figure 4—figure supplement 3e-i; Grosmark et al., 2016). We conclude that there are no signatures of the DDC encoding scheme in the hippocampal population activity during theta oscillation. Taken together with the findings on the product scheme, our results indicate that hippocampal neurons encode individual trajectories rather than entire distributions during theta sequences.
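Dividing a theta cycle into windows of equal spike count, as opposed to equal phase width, can be sketched as follows. This is only an illustration: the function name and the input layout (one phase and one cell index per spike) are assumptions, and the decoding step applied to each window is not shown.

```python
import numpy as np

def split_equal_counts(spike_phases, spike_cells, n_windows=3):
    """Split the spikes of one theta cycle into windows holding near-equal spike counts.

    spike_phases: theta phase of each spike within the cycle (any monotonic unit).
    spike_cells:  index of the cell that emitted each spike.
    Returns a list of (phases, cells) pairs ordered from early to late.
    """
    spike_phases = np.asarray(spike_phases)
    spike_cells = np.asarray(spike_cells)
    order = np.argsort(spike_phases, kind="stable")  # sort spikes by phase
    chunks = np.array_split(order, n_windows)        # near-equal sized index groups
    return [(spike_phases[idx], spike_cells[idx]) for idx in chunks]

# Hypothetical cycle with ten spikes from five cells
phases = np.array([310, 20, 45, 100, 170, 175, 200, 260, 300, 350])
cells = np.array([4, 0, 0, 1, 2, 1, 2, 3, 3, 4])
early, mid, late = split_equal_counts(phases, cells)
```

With equal-count windows each decoding step uses a similar amount of data, so differences in decoded SD between windows are less likely to reflect differences in spike count alone.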

Testing the sampling scheme: excess variability

Both the mean scheme and the sampling scheme represent individual trajectories but only the sampling scheme is capable of representing uncertainty. Therefore, a critical question concerns if the two schemes can be distinguished based on the population activity during theta sequences. Sampling-based codes are characterized by large and structured trial-to-trial neuronal variability (Orbán et al., 2016). Our results showed a systematic increase in the variability of the decoded location at phases of theta oscillation that correspond to later portions of the trajectory associated with higher uncertainty. This parallel increase of variability and uncertainty could be taken as evidence towards the sampling scheme. However, we demonstrated that the systematic theta phase-dependence of the neural variability and the variability of the encoded trajectories is a general feature of predictions in a dynamical model characterizing both the sampling and the mean schemes (Figure 3). In the sampling scheme, the cycle-to-cycle variability is further increased by the sampling variance, that is the stochasticity of the represented trajectory, such that the magnitude of excess variance is proportional to uncertainty. In order to discriminate sampling from the mean scheme we designed a measure, excess variability, that can identify the additional variance resulting from sampling. For this, we partitioned the variability of encoded trajectories such that the full magnitude of variability across theta cycles (cycle-to-cycle variability, CCV) is compared to the amount of variability expected from the animal’s own uncertainty (trajectory encoding error, TEE, Figure 6a, Methods).

Sampling scheme: excess variability increases with uncertainty.

(a) To discriminate sampling from mean encoding, we defined the EV-index which measures the magnitude of cycle-to-cycle variability (CCV) relative to the trajectory encoding error (TEE). (b) Cumulative distribution of CCV (dashed) and TEE (solid) across theta cycles for early, mid and late theta phase (colors) in the mean scheme using simulated data. Note the logarithmic x axis. (c) Mean CCV and TEE calculated from early, mid and late phase spikes in the mean scheme. (d-e) Same as (b-c) for the sampling scheme. (f-g) The EV-index for the mean (f) and sampling (g) schemes when simulating regular or irregular theta-trajectories (left inset) and applying various amounts of jitter for segmenting the theta cycles (color code, right inset). Error bars show SE across n=5300 (regular) and n=4529 (irregular) theta cycle-pairs. (h) Cumulative distribution of CCV (dashed) and TEE (solid) across theta cycles for early, mid, and late theta phase (colors) for an example recording session. Note the logarithmic x axis. Arrows in inset highlight atypically large errors occurring mostly at early theta phase. (i-j) Mean (i) and median (j) of CCV and TEE calculated from early, mid and late phase spikes for the session shown in h. (k-l) EV-index calculated for all analysed sessions (color) and across session mean and SD (gray) using the mean (k) or the median (l) across theta cycles. Error bars show SE in k and 5% and 95% confidence interval in l across n=3098-6558 theta cycle-pairs. p-values are shown in Table 3. Here, we analysed only theta cycles with higher than median spike count. See Figure 6—figure supplement 1 for similar analysis including all theta cycles and Figure 5—figure supplement 3 for the EV-index calculated using the product and DDC schemes.

We assessed excess variability in our synthetic dataset using either the sampling or the mean representational schemes, that is encoding either sampled trajectories or mean trajectories. Specifically, we decoded the population activity in three separate windows of the theta cycle (early, mid, and late) using a standard static Bayesian decoder and computed the difference between the decoded locations across subsequent theta cycles (cycle-to-cycle variability, CCV) and the difference between the decoded position and the true location of the animal (trajectory encoding error, TEE). As expected, both TEE and CCV increased from early to late theta phase both for mean (Figure 6b, c) and sampling codes (Figure 6d, e, Methods). Our analysis confirmed that it is the magnitude of the increase that is the most informative of the identity of the code: the increase of CCV within the theta cycle is steeper than that of TEE in the case of sampling (Figure 6e), whereas the increase of TEE is steeper than that of CCV in the mean encoding scheme (Figure 6c, Methods). To evaluate this distinction in population responses, we quantified the difference between the rate of change of CCV and TEE using the excess variability index (EV-index). The EV-index was negative for mean (Methods, Figure 6f) and positive for sampling schemes (Figure 6g). To test the robustness of the EV-index against various factors influencing the cycle-to-cycle variability, we analyzed potential confounding factors. First, to compensate for the potentially large decoding errors during low spike count theta cycles, we calculated the EV-index both using all theta cycles (Figure 6—figure supplement 1e, f) and using only theta cycles with high spike count (Figure 6f, g). Second, we randomly varied the speed and the length of the encoded trajectories (irregular vs. regular trajectories, Figure 6f inset, Methods). Third, we introduced a jitter to the boundaries of the theta cycles in order to model our uncertainty about cycle boundaries in experimental data (jitter 0–40 ms, Figure 6g inset, Methods). We found that the EV-index was robust against these manipulations, reliably discriminating sampling-based codes from mean codes across a wide range of parameters. Thus, the EV-index can distinguish sampling-related excess variability from variability resulting from other sources.
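The logic of comparing CCV with TEE can be made concrete with a toy sketch. The exact definition of the EV-index is given in the Methods and is not reproduced here; the version below is an assumed stand-in that uses squared Euclidean distances and simply contrasts the early-to-late increase of CCV with that of TEE. Positions are assumed to be expressed relative to the animal, and all names and toy values are hypothetical.

```python
import numpy as np

def ccv_tee(decoded, true_pos):
    """Mean CCV and TEE per theta phase window.

    decoded, true_pos: arrays of shape (n_cycles, n_phases, 2).
    CCV: squared distance between positions decoded in subsequent cycles.
    TEE: squared distance between decoded and true position.
    """
    decoded = np.asarray(decoded, dtype=float)
    true_pos = np.asarray(true_pos, dtype=float)
    ccv = np.sum((decoded[1:] - decoded[:-1]) ** 2, axis=-1).mean(axis=0)
    tee = np.sum((decoded - true_pos) ** 2, axis=-1).mean(axis=0)
    return ccv, tee

def ev_index_sketch(ccv, tee):
    """Assumed stand-in for the EV-index: growth of CCV minus growth of TEE."""
    return float((ccv[-1] - ccv[0]) - (tee[-1] - tee[0]))

phases = np.array([0.0, 1.0, 2.0])  # early, mid, late look-ahead (arbitrary units)
n_cycles = 4
true_pos = np.zeros((n_cycles, 3, 2))

# Same biased trajectory in every cycle: error grows, variability does not.
persistent = np.zeros((n_cycles, 3, 2))
persistent[:, :, 0] = phases

# Trajectories alternating around the true path: variability grows faster than error.
signs = np.array([1.0, -1.0, 1.0, -1.0])
alternating = np.zeros((n_cycles, 3, 2))
alternating[:, :, 0] = signs[:, None] * phases[None, :]

ev_persistent = ev_index_sketch(*ccv_tee(persistent, true_pos))
ev_alternating = ev_index_sketch(*ccv_tee(alternating, true_pos))
```

In this toy case the persistent error yields a negative value and the alternating trajectories a positive one, mirroring the sign pattern reported for the mean and sampling schemes.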

We repeated the same analysis on the dataset recorded from rats exploring the 2D spatial arena. We calculated cycle-to-cycle variability and trajectory encoding error and found that typically both the error and the variability increased during theta (Figure 6h, inset). However, at early theta phase the distributions had high positive skewness due to a few outliers displaying extremely large errors (Figure 6h, arrows in the inset). The outliers could reflect an error in the identification of the start of the theta cycles: the resulting erroneous assignment of spikes that encode a different trajectory would increase the error in the estimation of the starting position of the trajectory. To mitigate this effect, we calculated the EV-index using both the mean and the median across all theta cycles (Figure 6—figure supplement 1j, k) or including only high spike count cycles (Figure 6k, l). We found that the EV-index was consistently and significantly positive for all recording sessions. This analysis supports the view that the large cycle-to-cycle variability of the encoded trajectories during hippocampal theta sequences (Gupta et al., 2012) is consistent with random sampling from the posterior distribution of possible trajectories in a dynamic generative model.

The consistently positive EV-index across all recording sessions signified that variance in the measured responses was higher at the end of the theta cycle than what would be expected from a scheme not encoding uncertainty. In fact, the magnitude of the EV-index was substantially larger when evaluated on real data than in any of our synthetic datasets, including datasets where additional structured variability was introduced through randomly changing the speed or the length of the encoded trajectories (irregular trajectories, Figure 6g) or through additional randomness in the cycle boundaries (jitter in Figure 6g; cf. Figure 6k). A potential source for higher excess variability could be task-dependent switching between multiple coexisting maps (Kelemen and Fenton, 2010; Jezek et al., 2011). However, we found that most neurons had identical spatial tuning in the two phases of the task (random foraging versus goal directed navigation; Figure 6—figure supplement 2a–c) and the remaining cells typically showed relatively minor change in their spatial activity across task phases (Figure 6—figure supplement 2; see also Pfeiffer, 2022). Thus, multiple maps are not responsible for the large EV-index. Furthermore, we did not find consistent differences in the EV-index evaluated in the random foraging versus the goal directed search phase of the task (Figure 6—figure supplement 2), implying that specific goals during planning do not modulate the uncertainty of trajectories during theta sequences. Finally, large variability could be indicative of efficient sampling algorithms where correlations between subsequent samples are actively suppressed (MacKay, 2003; Kay et al., 2020; Echeveste et al., 2020). In the final section, we tested this possibility using real and simulated data.

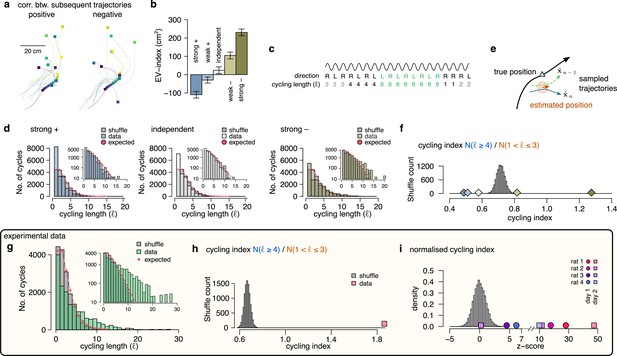

Signature of efficient sampling: generative cycling

The efficiency of a sampling process can be characterized by the number of samples required to cover the target probability distribution. The efficiency can be increased when subsequent samples are generated from distant parts of the distribution, making the samples anti-correlated. To test the hypothesis that the magnitude of the EV-index may be indicative of the magnitude of correlation between the samples, we first generated five datasets varying in the degree of correlation between the endpoints of trajectories sampled in subsequent theta cycles (Figure 7a; Methods) and calculated the EV-index for all these datasets. We found that the EV-index varied consistently with the sign and the magnitude of the correlation between the sampled trajectories: the EV-index was negative when positive correlations between the subsequent trajectories reduced the cycle-to-cycle variability (Figure 7b). In this case, persistent sampling biases could not be distinguished from erroneous inference (Beck et al., 2012) and sampling became very similar to the mean scheme. Conversely, the EV-index was positive for independent or anti-correlated samples with a substantial increase in the magnitude of the EV-index for strongly anti-correlated samples (Figure 7b). Thus, the large EV-index observed in the data is consistent with an efficient sampling algorithm that preferentially collects diverging trajectories in subsequent theta cycles.
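Why the correlation between subsequent samples controls cycle-to-cycle variability can be seen in a one-dimensional toy example. The sketch below assumes a first-order autoregressive process with unit marginal variance as a stand-in for the sequence of trajectory endpoints; this is an illustrative choice and not the procedure described in the Methods. For such a process the expected squared difference between subsequent samples is 2(1 - rho).

```python
import numpy as np

def ar1_samples(n, rho, rng):
    """Unit-variance AR(1) sequence with lag-one correlation rho."""
    x = np.empty(n)
    x[0] = rng.standard_normal()
    noise = rng.standard_normal(n) * np.sqrt(1.0 - rho ** 2)
    for t in range(1, n):
        x[t] = rho * x[t - 1] + noise[t]
    return x

def mean_sq_step(x):
    """Mean squared difference between subsequent samples (a CCV analogue)."""
    return float(np.mean(np.diff(x) ** 2))

rng = np.random.default_rng(0)
n = 20000
step_pos = mean_sq_step(ar1_samples(n, 0.8, rng))    # positively correlated samples
step_ind = mean_sq_step(ar1_samples(n, 0.0, rng))    # independent samples
step_neg = mean_sq_step(ar1_samples(n, -0.8, rng))   # anti-correlated samples
```

All three sequences share the same marginal distribution, so the error relative to the true value is unchanged, while the step-to-step variability is smallest for positive and largest for negative correlations.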

Signature of efficient sampling: generative cycling.

(a) Examples of sampled trajectories with positive (left) and negative (right) correlation between the direction of subsequent trajectory endpoints (squares) relative to the current position (circles). (b) EV-index calculated with different amount of correlation between the subsequent trajectories. Error bars show SE across n=17989 theta cycle-pairs. (c) Schematic showing the measurement of the cycling length . Top: theta oscillation. Middle: relative direction (L: left; R: right) of the sampled trajectories in each cycle. Bottom: cycling length () defined as the number of cycles with consistent alternation between left and right samples. (d) Histograms of the cycling length (, see panel c) on simulated data (colors) with strong positive correlations (left), no correlations (middle) or with strong negative correlations (right). Gray shows the distribution after randomly shuffling the data across theta cycles, red shows the theoretical distribution assuming iid directions. Insets show the same distribution using logarithmic y-axes. (e) Schematic illustrating how difference between the true position (blue triangle) and the estimated position (brown arrowhead) can induce apparent correlations between alternating trajectories. Brown arrow represents the animal’s own position and motion direction estimate with the green circle illustrating its uncertainty. Green and blue lines depict two hypothetical trajectories alternating around the animal’s own position estimate (brown) but falling to the same side of its measured trajectory (black). (f) Cycling index () in simulated data with different amount of correlation between the subsequent trajectories (colors) versus shuffled data (gray, 10,000 permutations). (g) Histograms of the cycling length () in an example session (green, R1D2) versus shuffle control (gray) and the theoretical distribution assuming iid directions (red). Inset shows the same histogram on a logarithmic scale. 
(h) Cycling index for the dataset shown in g (pink symbol) versus shuffled data (gray, 10,000 permutations). (i) z-scored cycling index for all experimental sessions (colors) versus shuffle control (gray).

To more directly test the hypothesis that subsequently encoded trajectories are anti-correlated, we explored if future portions of decoded trajectories tend to visit distinct parts of the environment. If sampling is optimized to produce anti-correlated samples then future portions of trajectories are expected to alternate around the real trajectories more intensely than positively correlated trajectories. To formulate this, we calculated the duration of the consistent cycling periods (cycling length, , Figure 7c; Kay et al., 2020). In our synthetic dataset, we found that the cycling length took the value one more frequently than in the shuffle control, indicating the absence of alternation even when the samples were strongly anti-correlated (Figure 7d). We identified that the source of this bias is the difference between the observed true position of the animal and the animal’s subjective location estimate (Figure 7e). Specifically, even when the sampled trajectories alternate around the estimated position, the alternating trajectories often fall on the same side of the real trajectory of the animal if the true and the estimated position do not coincide (Figure 7e). Indeed, the bias was eliminated when we calculated the alternation of the encoded trajectories with respect to the animal’s internal location estimate (Figure 7—figure supplement 1). Note that this bias is not present in simpler, 1-dimensional environments when the position of the animal is constrained to a linear corridor (Kay et al., 2020). To compensate for this bias, we introduced the cycling index, that ignores the repeats () and measures the prevalence of long () cycling periods relative to the short alternations (; Figure 7f). We validated the cycling index on synthetic data by showing that it correctly distinguishes strongly correlated and anti-correlated settings.
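The measurement of cycling length from a sequence of left/right labels can be sketched as below. The convention used here is an assumption made for illustration: a cycling period is a maximal run of theta cycles in which the direction flips on every cycle, so a repeated direction closes the current run and an isolated repeat has length one. The cycling index itself is not implemented because its thresholds are defined elsewhere in the paper.

```python
def cycling_lengths(directions):
    """Lengths of maximal runs in which the direction alternates on every cycle.

    directions: sequence of labels, one per theta cycle (e.g. "L" or "R").
    """
    lengths = []
    run = 1
    for prev, cur in zip(directions, directions[1:]):
        if cur != prev:
            run += 1             # alternation continues
        else:
            lengths.append(run)  # a repeat ends the current cycling period
            run = 1
    if len(directions) > 0:
        lengths.append(run)      # close the final period
    return lengths

# Hypothetical label sequence: one repeat, a four-cycle alternation, another repeat
example = cycling_lengths("LLRLRR")
```

A histogram of these lengths, compared with the same histogram after shuffling the labels across cycles, gives the kind of comparison shown in Figure 7d and g.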

Finally, we analysed the cycling behavior in the experimental data. We found that, similar to our synthetic datasets, repeats () were relatively overrepresented in the data compared to short alternations (, Figure 7g). However, the distribution of cycling length had a long tail and long periods of alternations () were frequent (Figure 7g, see also Figure 7—figure supplement 1c) resulting in a cycling index significantly larger than in shuffle control (Figure 7h). To standardize the cycling index for all sessions, we z-scored it using the corresponding shuffle distribution and found that the normalized cycling index was significantly positive for 7 of the 8 recording sessions (Figure 7i) and not different from random in the remaining session. This finding indicates that hypothetical trajectories encoded in subsequent theta cycles tend to alternate between different directions relative to the animal’s true motion trajectory, which is a signature of efficient sampling from the underlying probability distribution.
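Standardizing a statistic against its shuffle distribution can be sketched as follows. The function name, the number of permutations and the example statistic (fraction of cycle pairs in which the direction flips) are assumptions chosen for illustration; the cycling index used in the paper would be passed in place of the example statistic.

```python
import numpy as np

def shuffle_zscore(values, statistic, n_perm=1000, seed=0):
    """z-score of statistic(values) relative to its distribution under shuffling."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values)
    observed = statistic(values)
    # Null distribution: recompute the statistic after permuting the order of cycles
    null = np.array([statistic(rng.permutation(values)) for _ in range(n_perm)])
    return float((observed - null.mean()) / null.std(ddof=1))

def alternation_fraction(directions):
    """Fraction of subsequent cycle pairs with a change of direction."""
    return float(np.mean(directions[1:] != directions[:-1]))

# Hypothetical perfectly alternating sequence of left (-1) and right (+1) samples
alternating = np.tile([1, -1], 100)
z = shuffle_zscore(alternating, alternation_fraction)
```

Shuffling across theta cycles preserves the overall proportion of left and right samples while destroying their temporal order, so a large positive z-score indicates more alternation than expected from that proportion alone.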

Analysis of signatures using model-free trajectories

The core of our analysis was a generative model that we used to obtain distributions of potential trajectories in order to identify signatures of alternative coding schemes. To test if specific properties of the generative model affect these signatures, we designed an analysis in which the same signatures could be tested in a model-free manner. The original generative model directly provided a way to assess the distributions of past, current, and future potential positions using probabilistic inference in the model. In the model-free version, we used only the recorded motion trajectories to construct an empirical distribution of hypothetical trajectories at each point in the motion trajectory of the animal. Specifically, for a particular target point on a recorded motion trajectory, we sampled multiple real trajectory segments with similar starting speed and aligned the start of these trajectories with the start of the target trajectory by shifting and rotating the sampled segments (Figure 7—figure supplement 2a). This alignment resulted in a bouquet of trajectories for each actual point along the motion trajectory that outlined an empirical distribution of hypothetical future positions and speeds (Figure 7—figure supplement 2a).
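The alignment step, shifting and rotating a recorded segment so that it starts at the target point with the target heading, can be sketched as below. This is a minimal illustration under stated assumptions: segments are arrays of 2D positions, the heading of a segment is taken from its first displacement (which must be non-zero), and the selection of segments by starting speed is omitted. The function name is hypothetical.

```python
import numpy as np

def align_segment(segment, target_start, target_heading):
    """Shift and rotate a (T, 2) trajectory segment onto a target start and heading.

    target_heading is in radians, measured counter-clockwise from the x axis.
    """
    seg = np.asarray(segment, dtype=float)
    rel = seg - seg[0]                           # shift so the segment starts at the origin
    heading = np.arctan2(rel[1, 1], rel[1, 0])   # heading from the first displacement
    angle = target_heading - heading
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s], [s, c]])            # counter-clockwise rotation matrix
    return rel @ rot.T + np.asarray(target_start, dtype=float)

# Hypothetical segment initially heading east, re-aligned to start at the origin heading north
segment = np.array([[1.0, 1.0], [2.0, 1.0], [3.0, 2.0]])
aligned = align_segment(segment, target_start=(0.0, 0.0), target_heading=np.pi / 2)
```

Applying this to many segments with similar starting speed would produce the bouquet of hypothetical futures anchored at a single point of the real trajectory.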

We used the empirical, model-free distributions of motion trajectories to synthesize spiking data using the four different coding schemes. We then showed that the forward bias and the spread of the decoded locations changed systematically within the theta cycle (Figure 7—figure supplement 2b, c) indicating that theta sequences are similar in this model-free dataset to real data (Figure 1d, f and g). Next, we repeated identical analyses that were performed with the original, model-based synthetic trajectories. Our analyses demonstrated that the signatures we introduced to test alternative coding schemes are robust against changes in the way distributions of planned trajectories are constructed (Figure 7—figure supplement 2c–g).

Discussion

In this paper, we demonstrated that the statistical structure of the sequential neural activity during theta oscillation in the hippocampus is consistent with repeatedly performing inference and predictions in a dynamical generative model. Further, we have established a framework to directly contrast competing hypotheses about the way probability distributions can be represented in neural populations. Importantly, new measures were developed to dissociate alternative coding schemes and to identify their hallmarks in the population activity. Reliability and robustness of these measures were validated on multiple synthetic data sets that were designed to match the statistics of neuronal and behavioral activity of recorded animals. Our analysis demonstrated that the neural code in the hippocampus shows hallmarks of probabilistic planning by representing information about uncertainty associated with the encoded positions. Specifically, our analysis has shown that the hippocampal population code displays the signature of efficient sampling of possible motion trajectories but could not identify the hallmarks of alternative proposed schemes for coding uncertainty.

Planning and dynamical models

Hippocampal activity sequences both during theta oscillation and sharp waves have been implicated in planning. During sharp waves, sequential activation of place cells can outline extended trajectories up to 10 m long (Davidson et al., 2009), providing a mechanism suitable for selecting the correct path leading to goal locations (Pfeiffer and Foster, 2013; Mattar and Daw, 2018; Widloski and Foster, 2022). During theta oscillation, sequences typically cover a few tens of centimeters (Figure 2; Wikenheiser and Redish, 2015) and are thus more suitable for surveilling the immediate consequences of the imminent actions. Monitoring future consequences of actions at multiple time scales is a general strategy for animals, humans and autonomous artificial agents alike (Neftci and Averbeck, 2019).

In our model, the dominant source of uncertainty represented by the hippocampal population activity was the stochasticity of the animal’s forthcoming choices. Indeed, it has been observed that before the decision point in a W-maze the hippocampus also represents hypothetical trajectories not actually taken by the animal (Redish, 2016; Kay et al., 2020; Tang et al., 2021). Here, we generalized this observation to an open-field task and found that monitoring alternative paths is not restricted to decision points but the hippocampus constantly monitors consequences of alternative choices. Disentangling the potential contribution of other sources of uncertainty, including ambiguous sensory inputs (Jezek et al., 2011) or unpredictable environments to hippocampal representations requires analysing population activity during more structured experimental paradigms (Kelemen and Fenton, 2010; Miller et al., 2017).

Although representation of alternative choices in theta sequences is well supported by data, it is not clear how much the encoded trajectories are influenced by the current policy of the animal. On one hand, prospective coding, reversed sequences during backward travel or the modulation of the sequences by goal location indicate that current context influences the content and dynamics of the sequences (Frank et al., 2000; Johnson and Redish, 2007; Cei et al., 2014; Wikenheiser and Redish, 2015). On the other hand, theta sequences represent alternatives with equal probability in binary decision tasks and the content of the sequences is not predictive about the future choice of the animal (Kay et al., 2020; Tang et al., 2021). Our finding that there is no consistent difference between the EV-index in home versus away trials (Figure 6—figure supplement 2) is also consistent with the idea that trajectories are sampled from a wide distribution not influenced strongly by the current goal or policy of the animal. Sampling from a relatively wide proposal distribution is a general motif utilized by several sampling algorithms (MacKay, 2003). Brain areas beyond the hippocampus, such as the prefrontal cortex, might perform additional computations on the array of trajectories sampled during theta oscillations, including rejection of the samples not consistent with the current policy (Tang et al., 2021). The outcome of the sampling process can have a profound effect on future behavior as selective abolishment of theta sequences by pharmacological manipulations impairs behavioral performance (Robbe et al., 2006).

Compared to previous models simulating hippocampal place cell activity (McClain et al., 2019; Chadwick et al., 2015), a major novelty in our approach was that the animal was not assumed to know its own spatial location. Instead, the animal had to estimate its own position and motion trajectory using a probabilistic generative model of the environment. Consequently, the neuronal activity in our simulations was driven by the estimated rather than the true positions. This probabilistic perspective allowed us to identify the sources of the variability of theta sequences, to define quantities (CCV, TEE and EV-index) that can discriminate between sampling and mean schemes and to recognise the origin of biases in generative cycling. Although the fine details of the model are not crucial for our results (Figure 7—figure supplement 2) and our specific parameter choices were motivated mainly to achieve a good match with the real motion and neural data (Figure 3—figure supplements 2 and 3), a probabilistic generative model was necessary for these insights and for the consistent implementation of the inference process. Alternative frameworks, such as the successor representations (Dayan, 1993; Stachenfeld et al., 2017) provide only aggregate predictions in the form of expected future state occupancy averaged across time and intermediate states instead of temporally and spatially detailed predictions on specific future states necessary for generating hypothetical trajectories (Johnson and Redish, 2005; Kay et al., 2020).

We found that excess variability in the experimental data was higher than that in the simulated data sampling independent trajectories in subsequent theta cycles. This high EV-index is consistent with preferentially selecting samples from the opposite lobes of the target distribution (MacKay, 2003) via generative cycling (Kay et al., 2020) leading to low autocorrelation of the samples. Recurrent neural networks can be trained to generate samples with rapidly decaying auto-correlation (Echeveste et al., 2020). Interestingly, these networks were shown to display strong oscillatory activity in the gamma band (Echeveste et al., 2020). Concurrent gamma and theta band activities are characteristic of the hippocampus (Colgin, 2016), which indicates that network mechanisms underlying efficient sampling might be exploited by the hippocampal circuitry. Efficient sampling of trajectories instead of static variables could necessitate multiple interacting oscillations where individual trajectories evolve during multiple gamma cycles and alternative trajectories are sampled in subsequent theta cycles.

Circuit mechanisms

Recent theoretical studies established that recurrent neural networks can implement complex nonlinear dynamics (Mastrogiuseppe and Ostojic, 2018; Vyas et al., 2020) including sampling from the posterior distribution of a static generative model (Echeveste et al., 2020). External inputs to the network can efficiently influence the trajectories emerging in the network either by changing the internal state and initiating a new sequence (Kao et al., 2021) or by modulating the internal dynamics influencing the transition structure between the cell assemblies or the represented spatial locations (Mante et al., 2013; Stroud et al., 2018). We speculate that the recurrent network of the CA3 subfield could serve as a neural implementation of the dynamical generative model with inputs from the entorhinal cortex providing strong contextual signals selecting the right map and conveying landmark information necessary for periodic resets at the beginning of the theta cycles.

Although little is known about the role of entorhinal inputs to the CA3 subfield during theta sequences, inputs to the CA1 subfield show functional segregation consistent with this idea. Specifically, inputs to the CA1 network from the entorhinal cortex and from the CA3 region are activated dominantly at distinct phases of the theta cycle and are associated with different bands of gamma oscillations reflecting the engagement of different local micro-circuits (Colgin et al., 2009; Schomburg et al., 2014). The entorhinal inputs are most active at early theta phases when they elicit fast gamma oscillations (Schomburg et al., 2014) and these inputs might contribute to the reset of the sequences (Fernández-Ruiz et al., 2017). The initial part of the theta cycle, which shows transient backward sequences (Wang et al., 2020), could reflect the effect of external inputs resetting the local network dynamics. Conversely, CA3 inputs to CA1 are coupled to local slow gamma rhythms preferentially occurring at later theta phases, associated with prospective coding and relatively long, temporally compressed paths (Schomburg et al., 2014; Bieri et al., 2014) potentially following the trajectory outlined by the CA3 place cells.

Representations of uncertainty

Uncertainty representation implies that not only a best estimate of an inferred quantity is maintained in the population but properties of a full probability distribution can also be recovered from the activity. Machine learning provides two major classes of computational methods to represent probability distributions: (1) instantaneous representations, which rely on a set of parameters to encode a probability distribution; and (2) sequential representations, which collect samples from the distribution (MacKay, 2003). Accordingly, theories of neural probabilistic computations fall into these categories: the product scheme and DDC represent uncertainty instantaneously, whereas sampling represents it sequentially (Pouget et al., 2013; Savin and Deneve, 2014). Our analysis did not find evidence for representing probability distributions instantaneously during hippocampal theta sequences. Instead, our data are consistent with representing a single location at any given time where uncertainty is encoded sequentially by the variability of the represented locations across time.

Importantly, it is not possible to accurately recover the represented position of the animal from the observed spikes in any of the coding schemes: one can only estimate it with a finite precision, summarized by a posterior distribution over the possible positions. Thus, decoding noisy neural activity naturally leads to a posterior distribution. However, this does not imply that a probability distribution was actually encoded in the population activity (Zemel et al., 1998; Lange et al., 2020). Specifically, when a scalar variable is encoded in the spiking activity of neurons using an exponential family likelihood function, the resulting code is a linear probabilistic population code (PPC; Ma et al., 2006). In fact, we used an exponential family likelihood function (Poisson) in our mean and sampling schemes, so these schemes belong, by definition, to the PPC family. However, the PPC should not be confused with our product scheme, where a target distribution, rather than a single variable, is encoded in the noisy population activity (Lange et al., 2020).
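The point that decoding always yields a posterior, even when a single position is encoded, can be illustrated with a minimal sketch. It assumes independent Poisson spiking, a flat prior, and hypothetical Gaussian tuning curves on a one-dimensional track; the field centers, widths, peak rate, time window, and spike counts are illustrative placeholders rather than values from the recordings.

```python
import math

def gaussian_tuning(x, center, width=10.0, peak_rate=20.0):
    """Hypothetical place-field tuning curve (Hz) at position x (cm)."""
    return peak_rate * math.exp(-0.5 * ((x - center) / width) ** 2)

def decode_posterior(spike_counts, centers, grid, dt=0.1):
    """Posterior over position for independent Poisson spiking and a flat prior."""
    log_post = []
    for x in grid:
        ll = 0.0
        for k, c in zip(spike_counts, centers):
            rate = gaussian_tuning(x, c) * dt   # expected spike count in the window
            ll += k * math.log(rate) - rate     # Poisson log-likelihood up to a constant
        log_post.append(ll)
    m = max(log_post)                           # subtract the maximum for stability
    weights = [math.exp(l - m) for l in log_post]
    z = sum(weights)
    return [w / z for w in weights]

centers = [10.0 * i for i in range(11)]           # field centers at 0, 10, ..., 100 cm
grid = [float(x) for x in range(101)]             # candidate positions, 1 cm apart
spike_counts = [0, 0, 0, 0, 1, 2, 1, 0, 0, 0, 0]  # illustrative counts, not recorded data

posterior = decode_posterior(spike_counts, centers, grid)
map_position = grid[posterior.index(max(posterior))]
post_mean = sum(p * x for p, x in zip(posterior, grid))
post_sd = math.sqrt(sum(p * (x - post_mean) ** 2 for p, x in zip(posterior, grid)))
```

In this sketch the posterior has a finite width although the spike counts were specified for a single underlying position, so the width reflects decoding precision rather than an encoded distribution.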

To test the product scheme, we used the population gain as a hallmark. We found that the gain varied systematically, but the variance was not consistent with the basic statistical principle that, on average, uncertainty accumulates when predicting future states. An alternative test would be to estimate both the encoding basis functions and the represented distributions, as proposed by Ma et al., 2006, but this would require precise estimation of stimulus-dependent correlations among the neurons.

We also did not find evidence for representing distributions via the DDC scheme during theta sequences. However, the lack of evidence for instantaneous representation of probability distributions in the hippocampus does not rule out that these schemes might be effectively employed by other neuronal systems (Pouget et al., 2013). In particular, on the behavioral time scale when averaging over many theta cycles, the sampling and DDC schemes become equivalent: when we calculate the average firing rate of a neuron that uses the sampling scheme across several theta cycles, it becomes the expectation of its associated encoding basis function under the represented distribution. This way, sampling alternative trajectories in each theta cycle can be interpreted as DDC on the behavioral time scale with all computational advantages of this coding scheme (Vértes and Sahani, 2019). Similarly, sampling potential future trajectories at the theta time scale naturally explains the emergence of successor representations on the behavioral time scale (Stachenfeld et al., 2017).
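The equivalence between sampling and DDC on the behavioral time scale can be checked numerically. The sketch below assumes a one-dimensional Gaussian represented distribution and a hypothetical Gaussian encoding basis function normalized to a peak of 1; all numerical values are placeholders. It compares the activity averaged over many theta cycles, each encoding one sample, with the closed-form expectation of the basis function.

```python
import math
import random

def basis(x, center=0.0, width=10.0):
    """Hypothetical Gaussian encoding basis function with peak value 1."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)

def sampling_average(mu, sigma, n_cycles, rng):
    """Average activity when one position sample is encoded per theta cycle."""
    return sum(basis(rng.gauss(mu, sigma)) for _ in range(n_cycles)) / n_cycles

def ddc_expectation(mu, sigma, center=0.0, width=10.0):
    """Closed-form expectation of the Gaussian basis under N(mu, sigma^2)."""
    total_var = width ** 2 + sigma ** 2
    return width / math.sqrt(total_var) * math.exp(-0.5 * (mu - center) ** 2 / total_var)

rng = random.Random(0)
mu, sigma = 5.0, 8.0          # mean and s.d. of the represented distribution (cm)
sampled = sampling_average(mu, sigma, n_cycles=20000, rng=rng)
expected = ddc_expectation(mu, sigma)
```

With enough cycles, `sampled` approaches `expected`, which is the sense in which cycle-by-cycle sampling behaves like a DDC code after temporal averaging.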

In standard sampling codes, individual neurons correspond to variables and their activity (membrane potential or firing rate) represents the value of the represented variable, which is very efficient for sampling from complex, high-dimensional distributions (Fiser et al., 2010; Orbán et al., 2016). Here, we take a population coding approach in which individual neurons are associated with encoding basis functions and the population activity collectively encodes the value of the variable (Zemel et al., 1998; Savin and Deneve, 2014). This scheme allows the hippocampal activity to efficiently encode the value of a low-dimensional variable at high temporal precision.

Recurrent networks can implement parallel chains of sampling from the posterior distribution of static (Savin and Deneve, 2014) or dynamic (Kutschireiter et al., 2017) generative models. Similar to the DDC scheme, these implementations would also encode uncertainty by an increase in the diversity of the co-active neurons. Thus, our data indicate that the hippocampus avoids sampling multiple, parallel chains for representing uncertainty in dynamical models and instead multiplexes sampling in time by collecting several samples in succession at an accelerated temporal scale.

Our analysis leveraged the systematic increase in the uncertainty of the predicted states with time in dynamical models. The advantage of this approach is that we could analyse thousands of theta cycles, many more than the typical number of trials in behavioral experiments where uncertainty is varied by manipulating stimulus parameters (e.g. image contrast; Orbán et al., 2016; Walker et al., 2020). Uncertainty could also be manipulated during navigation by changing the amount of available sensory information (Zhang et al., 2014), introducing ambiguity regarding the spatial context (Jezek et al., 2011) or manipulating the volatility of the environment (Miller et al., 2017). Our analysis predicts that the variability across theta cycles will increase systematically after any manipulation that increases uncertainty, regardless of the nature of the manipulation or the shape of the environment. These experiments would also allow a more direct test of our theory by comparing changes in the neuronal activity with a behavioral readout of subjective uncertainty.

Methods

Theory

To study the neural signatures of the probabilistic coding schemes during hippocampal theta sequences, we developed a coherent theoretical framework which assumes that the hippocampus implements a dynamical generative model of the environment. The animal uses this model to estimate its current spatial location and predict possible consequences of its future actions. Since multiple possible positions are consistent with recent sensory inputs and multiple options are available to choose from, representing these alternative possibilities, and their respective probabilities, in the neuronal activity is beneficial for efficient computations. Within this framework, we interpreted trajectories represented by the sequential population activity during theta oscillation as inferences and predictions in the dynamical generative model.

We defined three hierarchical levels for this generative process (Figure 3—figure supplement 1a). (1) We modeled the generation of smooth planned trajectories in the two-dimensional box, similar to the experimental setup, with a stochastic process. These trajectories represented the intended locations for the animal at discrete time steps. (2) We formulated the generation of motion trajectories via motor commands that are calculated as the difference between the planned trajectory and the position estimated from the sensory inputs. Again, this component was assumed to be stochastic due to noisy observations and motor commands. Calculating the correct motor command required the animal to update its position estimate at each time step, and we assumed that the animal also maintained a representation of its inferred past and predicted future trajectory. (3) We modeled the generative process that encodes the represented trajectories in neural activity. The activity of a population of hippocampal neurons was assumed to be generated by one of the four different encoding schemes described below.

We implemented this generative model to synthesize both locomotion and neural data and used it to test contrasting predictions of different encoding schemes. Importantly, the specific algorithm we used to synthesize motion trajectories and perform inference is not assumed to correspond to the algorithmic steps implemented in the hippocampal network; it only provides sample trajectories from the distribution with the right summary statistics. The flexibility of this hierarchical framework enabled us to qualitatively match the experimental data at both the behavioral (Figure 3—figure supplement 2) and the neural (Figure 3—figure supplement 3) level. In the following paragraphs, we describe these levels in detail.

Generation of smooth planned trajectory

The planned trajectory was established at a temporal resolution corresponding to the length of a theta cycle, s, and spanned a length s providing a set of planned positions, for any given theta cycle (see Table 1 for a list of frequently used symbols). The planned trajectories were initialized from the center of a hypothetical 2 m×2 m box with a random initial velocity. Magnitude and direction of the velocity in subsequent steps were jointly sampled from their respective probability distributions. Specifically, at time step we first updated the direction of motion by adding a random two-dimensional vector of length cm/s to the velocity . Next, we changed the speed, the magnitude of , according to an Ornstein-Uhlenbeck process:

Table 1. Summary of the symbols used in the model.

| Symbol | Meaning |
|---|---|
| | index of time step in the generative model measured as the number of theta cycles |
| | position at theta cycle n (two-dimensional) |
| | sensory input (two-dimensional) |
| | motor command (two-dimensional) |
| | planned position (-dimensional) |
| | planned position (two-dimensional) |
| | past sensory input until theta cycle n |
| | mean of the filtering posterior |
| | covariance of the filtering posterior |
| | theta phase |
| | trajectory of the animal around theta cycle n |
| | posterior mean trajectory at theta cycle n |
| | posterior variance of trajectory at theta cycle n |
| | trajectory sampled from |
| | encoding basis function of cell i - firing rate as a function of the encoded position |
| | empirical tuning curve of cell i - firing rate as a function of the real position |
| | firing rate of cell i |
| | spikes recorded in theta cycle n encoding trajectory xn |
| | trajectory decoded from the observed spikes assuming direct encoding (Equation 18) |
| | estimated trajectory mean assuming DDC encoding (Equation 19) |
| | estimated trajectory variance assuming DDC encoding (Equation 19) |

where denotes the speed in theta cycle . We used the parameters cm/s, s, with cm/s and when and otherwise. The planned trajectory was generated by discretized integration of the velocity signal:

When the planned trajectory reached the boundary of the box, it was reflected from the wall by inverting the component of the velocity vector perpendicular to the wall. The parameters of the planned trajectory were chosen so that the movement of the simulated animal approximated that of the real rats (Figure 3—figure supplement 2).
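The three steps above (perturbing the heading, updating the speed with an Ornstein-Uhlenbeck process, and integrating the velocity with reflection at the walls) can be sketched as follows. This is a minimal illustration, not the simulation code used in the study: the time step, the length of the turning vector, and the mean, time constant, and noise level of the speed process are hypothetical placeholders, and the speed update uses a plain Euler-Maruyama discretization.

```python
import math
import random

def planned_trajectory(n_steps, rng, dt=0.1, box=200.0,
                       turn_len=5.0, mean_speed=15.0, tau=2.0, noise_sd=5.0):
    """Smooth random walk in a square box (cm); all defaults are placeholders."""
    pos = [box / 2.0, box / 2.0]                       # start at the box center
    angle = rng.uniform(0.0, 2.0 * math.pi)
    vel = [mean_speed * math.cos(angle), mean_speed * math.sin(angle)]
    path = [tuple(pos)]
    for _ in range(n_steps):
        # 1) perturb the heading by adding a random vector of fixed length
        phi = rng.uniform(0.0, 2.0 * math.pi)
        vel = [vel[0] + turn_len * math.cos(phi), vel[1] + turn_len * math.sin(phi)]
        # 2) Ornstein-Uhlenbeck update of the speed (Euler-Maruyama step)
        speed = math.hypot(vel[0], vel[1])
        new_speed = (speed + (mean_speed - speed) * dt / tau
                     + noise_sd * math.sqrt(dt) * rng.gauss(0.0, 1.0))
        new_speed = max(new_speed, 0.0)                # speed cannot be negative
        scale = new_speed / speed if speed > 0.0 else 0.0
        vel = [vel[0] * scale, vel[1] * scale]
        # 3) integrate the velocity and reflect at the walls
        for d in range(2):
            pos[d] += vel[d] * dt
            if pos[d] < 0.0:
                pos[d], vel[d] = -pos[d], -vel[d]
            elif pos[d] > box:
                pos[d], vel[d] = 2.0 * box - pos[d], -vel[d]
        path.append(tuple(pos))
    return path

path = planned_trajectory(2000, random.Random(0))
```

Calling the function repeatedly with different random generators from the same starting state gives alternative hypothetical plans, which is the property used later for forward sampling.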

Importantly, in any theta cycle multiple hypothetical planned trajectories could be generated by resampling the motion direction and the noise term, in Equation 1. Moreover, these planned trajectories can be elongated by recursively applying Equations 1 and 2. The planned trajectory influenced the motion of the simulated animal through the external control signal (motor command), as described in the next section.

Generation of motion trajectories

We assumed that the simulated animal aims to follow the planned trajectory but does not have direct access to its own location. Therefore, the animal was assumed to infer its location from noisy sensory inputs. To follow the planned trajectory, the simulated animal calculated motor commands to minimize the deviation between its planned and estimated locations.

To describe the transition between physical locations, , we formulated a model where transitions were a result of motor commands, . For this, we adopted the standard linear Gaussian state space model:

where represented motor noise. The animal only had access to the location-dependent, but noisy sensory observations, :

where is the sensory noise and and are diagonal noise covariance matrices with cm2 and cm2. The small motor variance was necessary for smooth movements since motor errors accumulate across time (Figure 3—figure supplement 2). Conversely, large sensory variance was efficiently reduced by combining sensory information across different time steps (see below, Equation 6).

Since the location, , was not observed, inference was required to calculate the motor command. This inference relied on estimates of the location in earlier theta cycles, the motor command, and the current sensory observation. The estimated location was represented by the Gaussian filtering posterior:

This posterior is characterized by the mean estimated location and a covariance, , which quantifies the uncertainty of the estimate. These parameters were updated in each time step (theta cycle) using the standard Kalman filter algorithm (Murphy, 2012):

where is the Kalman gain matrix.
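As an illustration of the filtering step, the sketch below runs a scalar Kalman filter on one coordinate. It assumes the simplest form of a linear Gaussian state space model, in which the next position is the current position plus the motor command plus motor noise and the observation is the position plus sensory noise; with diagonal, isotropic covariances the two coordinates can then be filtered independently. The noise variances `q` and `r` and the constant motor command are hypothetical placeholders.

```python
import random

def kalman_step(mu, var, u, y, q, r):
    """One scalar Kalman update for x_next = x + u + eps, y = x_next + xi."""
    mu_pred = mu + u                      # predict with the known motor command
    var_pred = var + q                    # motor noise inflates the uncertainty
    gain = var_pred / (var_pred + r)      # Kalman gain
    mu_new = mu_pred + gain * (y - mu_pred)
    var_new = (1.0 - gain) * var_pred
    return mu_new, var_new

def run_filter(n_steps, q=0.25, r=100.0, seed=1):
    """Simulate one coordinate and return the final variance and two RMS errors."""
    rng = random.Random(seed)
    x, mu, var = 0.0, 0.0, r
    sq_err_filter = sq_err_sensor = 0.0
    for _ in range(n_steps):
        u = 1.0                                   # placeholder motor command (cm)
        x = x + u + rng.gauss(0.0, q ** 0.5)      # true motion with motor noise
        y = x + rng.gauss(0.0, r ** 0.5)          # noisy sensory observation
        mu, var = kalman_step(mu, var, u, y, q, r)
        sq_err_filter += (mu - x) ** 2
        sq_err_sensor += (y - x) ** 2
    return var, (sq_err_filter / n_steps) ** 0.5, (sq_err_sensor / n_steps) ** 0.5

final_var, rmse_filter, rmse_sensor = run_filter(5000)
```

Under these assumptions the posterior variance settles at a value well below the sensory variance, illustrating how combining observations across time steps reduces a large sensory uncertainty.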

The motor command, , was calculated by low-pass filtering the deviation between the planned position, , and the estimated position of the animal (posterior mean, ):

with ensuring sufficiently smooth motion trajectories (Figure 3—figure supplement 2). Relationship between the planned position , actual position , the sensory input , and the estimated location is depicted in Figure 3—figure supplement 1b.
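The closed loop between plan, motor command, motion, and position estimate can be sketched in one dimension. The exact form of the low-pass filter is not reproduced here; the sketch assumes a first-order filter with a hypothetical smoothing constant `alpha`, a planned trajectory moving at constant speed, and the same placeholder noise variances as a simple additive state space model would use.

```python
import random

def simulate_tracking(n_steps, alpha=0.5, q=0.25, r=100.0, speed=2.0, seed=2):
    """1D closed loop: plan -> motor command -> noisy motion -> Kalman estimate."""
    rng = random.Random(seed)
    x = mu = 0.0            # true and estimated position (cm)
    var = 5.0               # initial posterior variance (cm^2)
    u = speed               # previous motor command
    sq_track = 0.0
    for n in range(n_steps):
        planned_next = speed * (n + 1)              # hypothetical planned position
        # low-pass filter the deviation between the plan and the current estimate
        u = alpha * u + (1.0 - alpha) * (planned_next - mu)
        x = x + u + rng.gauss(0.0, q ** 0.5)        # motion with motor noise
        y = x + rng.gauss(0.0, r ** 0.5)            # noisy sensory input
        mu_pred, var_pred = mu + u, var + q         # Kalman prediction
        gain = var_pred / (var_pred + r)
        mu = mu_pred + gain * (y - mu_pred)         # Kalman correction
        var = (1.0 - gain) * var_pred
        sq_track += (x - planned_next) ** 2
    return (sq_track / n_steps) ** 0.5

rms_tracking_error = simulate_tracking(5000)
```

Because the command is computed from the estimated rather than the true position, the tracking error in this sketch is dominated by the estimation error of the filter and stays below the raw sensory noise level.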

To make predictions about future positions, we defined the subjective trajectory of the animal, the distribution of trajectories consistent with all past observations and motor commands: . This subjective trajectory is associated with a particular theta cycle: since it is estimated in a cycle-by-cycle manner, we use the index to distinguish trajectories at different cycles. Here is a trajectory starting steps in the past and ending steps ahead in the future (Figure 3—figure supplement 1c, Table 1). We call the distribution the trajectory posterior. We sampled trajectories from the posterior distribution by starting each trajectory from the posterior of the current position (filtering posterior, Equation 5) and proceeding first backward, sampling from the conditional smoothing posterior, and then forward, sampling from the generative model.

To sample the past component of the trajectory (), we capitalized on the following relationship:

where the first factor on the right-hand side of Equation 8 is the filtering posterior (Equation 5) and the second factor is defined by the generative process ( (Equation 3) ) and . We started each trajectory by sampling its first point independently from the filtering posterior (Equation 5) and applied Equation 8 recursively to elongate the trajectory backward in time.
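The backward recursion can be sketched for one coordinate. The sketch assumes Gaussian filtering posteriors and an additive transition in which the next position equals the previous position plus the motor command plus motor noise of variance `q`; the function names and arguments are illustrative. Multiplying the filtering posterior of the earlier position by the transition density and conditioning on the sampled later position gives another Gaussian, from which the earlier position is drawn.

```python
import random

def backward_conditional(mu_prev, var_prev, u_prev, x_next, q):
    """Mean and variance of the earlier position given the sampled later one."""
    gain = var_prev / (var_prev + q)
    mean = mu_prev + gain * (x_next - u_prev - mu_prev)
    var = (1.0 - gain) * var_prev
    return mean, var

def sample_past(filter_means, filter_vars, commands, q, rng):
    """Sample a trajectory backward, starting from the latest filtering posterior.

    commands[k] is the motor command that moved the animal from step k to k + 1.
    """
    x = rng.gauss(filter_means[-1], filter_vars[-1] ** 0.5)
    path = [x]
    for k in range(len(filter_means) - 2, -1, -1):
        mean, var = backward_conditional(filter_means[k], filter_vars[k],
                                         commands[k], x, q)
        x = rng.gauss(mean, var ** 0.5)
        path.append(x)
    path.reverse()                      # return in chronological order
    return path

rng = random.Random(4)
past = sample_past([0.0, 1.0, 2.0, 3.0], [5.0, 5.0, 5.0, 5.0],
                   [1.0, 1.0, 1.0], q=0.25, rng=rng)
```

Each backward step has a smaller variance than the corresponding filtering posterior, because the already sampled later position constrains where the animal could have been.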

To generate samples in the forward direction () we implemented an ancestral sampling approach. First, a hypothetical planned trajectory was generated as in Equations 1 and 2 starting from the last planned location . Next, we calculated the hypothetical future motor command, based on the difference between the next planned location and current prediction for , as in Equation 7. Finally, we sampled the next predicted position from the distribution

To elongate the trajectory further into the future we repeated this procedure multiple times.
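The forward procedure can be sketched in the same one-dimensional setting. The sketch assumes a hypothetical plan that advances at a fixed speed with Gaussian jitter, a first-order low-pass filter for the motor command, and additive motor noise; none of the parameter values are taken from the study. Each sampled trajectory starts from the filtering posterior, and the spread across samples is measured at each lookahead step.

```python
import random

def sample_future(mu, var, plan_start, n_ahead, rng,
                  alpha=0.5, q=0.25, plan_speed=2.0, plan_noise_sd=1.0):
    """Ancestral sample of future positions from one theta cycle (1D sketch)."""
    x = rng.gauss(mu, var ** 0.5)           # start from the filtering posterior
    planned, u = plan_start, plan_speed
    path = []
    for _ in range(n_ahead):
        planned += plan_speed + rng.gauss(0.0, plan_noise_sd)   # hypothetical plan
        u = alpha * u + (1.0 - alpha) * (planned - x)           # hypothetical command
        x = x + u + rng.gauss(0.0, q ** 0.5)                    # predicted position
        path.append(x)
    return path

def spread_by_lookahead(n_samples=2000, n_ahead=10, seed=3):
    """Standard deviation across sampled trajectories at each lookahead step."""
    rng = random.Random(seed)
    paths = [sample_future(0.0, 5.0, 0.0, n_ahead, rng) for _ in range(n_samples)]
    spreads = []
    for k in range(n_ahead):
        vals = [p[k] for p in paths]
        m = sum(vals) / n_samples
        spreads.append((sum((v - m) ** 2 for v in vals) / n_samples) ** 0.5)
    return spreads

spreads = spread_by_lookahead()
```

In this sketch the spread at the farthest lookahead exceeds the spread at the first predicted step, reflecting the accumulation of uncertainty about the plan and the motor noise over successive steps.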