Effects of noise and metabolic cost on cortical task representations

eLife assessment

This work provides a valuable analysis of the effect of two commonly used hyperparameters, noise amplitude and firing rate regularization, on the representations of relevant and irrelevant stimuli in trained recurrent neural networks (RNNs). The results suggest an interesting interpretation of prefrontal cortex (PFC) dynamics, based on comparisons to previously published data from the same lab, in terms of decreasing metabolic cost during learning. The evidence indicating that the mechanisms identified in the RNNs are the same ones operating in PFC was considered incomplete, but could potentially be bolstered by additional analyses and appropriate revisions.

https://doi.org/10.7554/eLife.94961.2.sa0Valuable: Findings that have theoretical or practical implications for a subfield

- Landmark

- Fundamental

- Important

- Valuable

- Useful

Incomplete: Main claims are only partially supported

- Exceptional

- Compelling

- Convincing

- Solid

- Incomplete

- Inadequate

During the peer-review process the editor and reviewers write an eLife Assessment that summarises the significance of the findings reported in the article (on a scale ranging from landmark to useful) and the strength of the evidence (on a scale ranging from exceptional to inadequate). Learn more about eLife Assessments

Abstract

Cognitive flexibility requires both the encoding of task-relevant and the ignoring of task-irrelevant stimuli. While the neural coding of task-relevant stimuli is increasingly well understood, the mechanisms for ignoring task-irrelevant stimuli remain poorly understood. Here, we study how task performance and biological constraints jointly determine the coding of relevant and irrelevant stimuli in neural circuits. Using mathematical analyses and task-optimized recurrent neural networks, we show that neural circuits can exhibit a range of representational geometries depending on the strength of neural noise and metabolic cost. By comparing these results with recordings from primate prefrontal cortex (PFC) over the course of learning, we show that neural activity in PFC changes in line with a minimal representational strategy. Specifically, our analyses reveal that the suppression of dynamically irrelevant stimuli is achieved by activity-silent, sub-threshold dynamics. Our results provide a normative explanation as to why PFC implements an adaptive, minimal representational strategy.

Introduction

How systems solve complex cognitive tasks is a fundamental question in neuroscience and artificial intelligence (Rigotti et al., 2013; Yang et al., 2019; Wang et al., 2018; Silver et al., 2016; Jensen et al., 2023). A key aspect of complex tasks is that they often involve multiple types of stimuli, some of which can even be irrelevant for performing the correct behavioral response (Freedman et al., 2001; Mante et al., 2013; Parthasarathy et al., 2017) or predicting reward (Bernardi et al., 2020; Chadwick et al., 2023). Over the course of task exposure, subjects must typically identify which stimuli are relevant and which are irrelevant. Examples of irrelevant stimuli include those that are irrelevant at all times in a task (Freedman et al., 2001; Chadwick et al., 2023; Duncan, 2001; Rainer et al., 1998; Stokes et al., 2013) – which we refer to as static irrelevance – and stimuli that are relevant at some time points but are irrelevant at other times in a trial – which we refer to as dynamic irrelevance (e.g. as is often the case for context-dependent decision-making tasks Mante et al., 2013; Flesch et al., 2022; Monsell, 2003; Braver, 2012). Although tasks involving irrelevant stimuli have been widely used, it remains an open question as to how different types of irrelevant stimuli should be represented, in combination with relevant stimuli, to enable optimal task performance.

One may naively think that statically irrelevant stimuli should always be suppressed. However, stimuli that are currently irrelevant may be relevant in a future task. Furthermore, it is unclear whether dynamically irrelevant stimuli should be suppressed at all since the information is ultimately needed by the circuit. It may therefore be beneficial for a neural circuit to represent irrelevant information as long as no unnecessary costs are incurred and task performance remains high. Several factors could have a strong impact on whether irrelevant stimuli affect task performance. For example, levels of neural noise in the circuit as well as energy constraints and the metabolic costs of overall neural activity (Flesch et al., 2022; Whittington et al., 2022; Sussillo et al., 2015; Orhan and Ma, 2019; Löwe, 2023; Cueva and Wei, 2018; Luo et al., 2023; Kao et al., 2021; Deneve et al., 2001; Barak et al., 2013) can affect how stimuli are represented in a neural circuit. Indeed, both noise and metabolic costs are factors that biological circuits must contend with (Tomko and Crapper, 1974; Laughlin, 2001; Churchland et al., 2006; Hasenstaub et al., 2010). Despite these considerations, models of neural population codes, including hand-crafted models and optimized artificial neural networks, typically use only a very limited range of the values of such factors (Wang et al., 2018; Mante et al., 2013; Sussillo et al., 2015; Barak et al., 2013; Cueva et al., 2020; Driscoll et al., 2022; Song et al., 2016; Echeveste et al., 2020; Stroud et al., 2021) (but also see Yang et al., 2019; Orhan and Ma, 2019). Therefore, despite the success of recent comparisons between neural network models and experimental recordings (Wang et al., 2018; Mante et al., 2013; Sussillo et al., 2015; Cueva and Wei, 2018; Barak et al., 2013; Cueva et al., 2020; Echeveste et al., 2020; Stroud et al., 2021; Lindsay, 2021), we may only be recovering very few out of a potentially large range of different representational strategies that neural networks could exhibit (Schaeffer et al., 2022).

One challenge for distinguishing between different representational strategies, particularly when analyzing experimental recordings, is that some stimuli may simply be represented more strongly than others. In particular, we might expect stimuli to be strongly represented in cortex a priori if they have previously been important to the animal. Indeed, being able to represent a given stimulus when learning a new task is likely a prerequisite for learning whether it is relevant or irrelevant in that particular context (Rigotti et al., 2013; Bernardi et al., 2020). Previously, it has been difficult to distinguish between whether a given representation existed a priori or emerged as a consequence of learning because neural activity is typically only recorded after a task has already been learned. A more relevant question is how the representation changes over learning (Chadwick et al., 2023; Reinert et al., 2021; Durstewitz et al., 2010; Schuessler et al., 2020; Costa et al., 2017), which provides insights into how the specific task of interest affects the representational strategy used by an animal or artificial network (Richards et al., 2019).

To resolve these questions, we optimized recurrent neural networks on a task that involved two types of irrelevant stimuli. One feature of the stimulus was statically irrelevant, and another feature of the stimulus was dynamically irrelevant. We found that, depending on the neural noise level and metabolic cost that was imposed on the networks during training, a range of representational strategies emerged in the optimized networks, from maximal (representing all stimuli) to minimal (representing only relevant stimuli). We then compared the strategies of our optimized networks with learning-resolved recordings from the prefrontal cortex (PFC) of monkeys exposed to the same task. We found that the representational geometry of the neural recordings changed in line with the minimal strategy. Using a simplified model, we derived mathematically how the strength of relevant and irrelevant coding depends on the noise level and metabolic cost. We then confirmed our theoretical predictions in both our task-optimized networks and neural recordings. By reverse-engineering our task-optimized networks, we also found that activity-silent, sub-threshold dynamics led to the suppression of dynamically irrelevant stimuli, and we confirmed predictions of this mechanism in our neural recordings.

In summary, we provide a mechanistic understanding of how different representational strategies can emerge in both biological and artificial neural circuits over the course of learning in response to salient biological factors such as noise and metabolic costs. These results in turn explain why PFC appears to employ a minimal representational strategy by filtering out task-irrelevant information.

Results

A task involving relevant and irrelevant stimuli

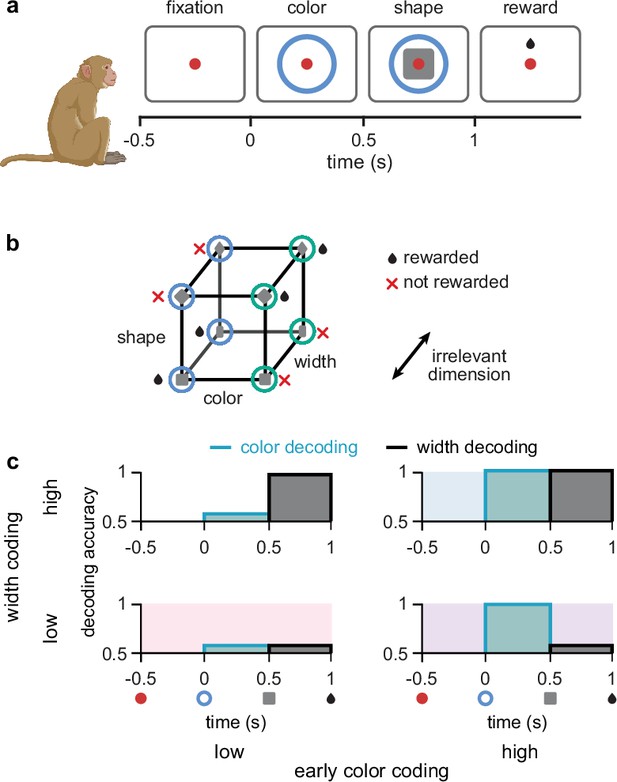

We study a task used in previous experimental work that uses a combination of multiple, relevant and irrelevant stimuli (Wójcik, 2023; Figure 1a). The task consists of an initial ‘fixation’ period, followed by a ‘color’ period, in which one of two colors are presented. After this, in the ‘shape’ period, either a square or diamond shape is presented (while the color stimulus stays on), such that the width of the shape can be either thick or thin. After this, the stimuli disappear and reward is delivered according to an XOR structure between color and shape (Figure 1b). Note that the width of the shape is not predictive of reward, and it is therefore an irrelevant stimulus dimension (Figure 1b). As width information is always irrelevant when it is shown, its irrelevance is static. In contrast, color is relevant during the shape period but could be ignored during the color period without loss of performance. Hence its irrelevance is dynamic.

Task design and irrelevant stimulus representations.

(a) Illustration of the timeline of task events in a trial with the corresponding displays and names of task periods. Red dot shows fixation ring, blue (or green) circles appear during the color and shape periods, gray squares (or diamonds) appear during the shape period, and a juice reward is given during the reward period for rewarded combinations of color and shape stimuli (see panel b). No behavioral response was required for the monkeys as it was a passive object–association task (Wójcik, 2023). (b) Schematic showing that rewarded conditions of color and shape stimuli follows an XOR structure. In addition, the width of the shape was not predictive of reward and was thus an irrelevant stimulus dimension. (c) Schematic of four possible representational strategies, as indicated by linear decoding of population activity, for the task shown in panels a and b. Turquoise lines with shading show early color decoding and black lines with shading show width decoding. Strategies are split according to whether early color decoding is low (left column) or high (right column), and whether width decoding is low (bottom row) or high (top row).

Due to the existence of multiple different forms of irrelevant stimuli, there exist multiple different representational strategies for a neural circuit solving the task in Figure 1a. These representational strategies can be characterized by assessing the extent to which different stimuli are linearly decodable from neural population activity (Bernardi et al., 2020; Stokes et al., 2013; Meyers et al., 2008; King and Dehaene, 2014). We use linear decodability because it only requires the computation of simple weighted sums of neural responses, and as such, it is a widely accepted criterion for the usefulness of a neural representation (DiCarlo and Cox, 2007). Moreover, while representational strategies can differ along several dimensions in this task (e.g. the decodability of color or shape during the shape period – both of which are task-relevant Wójcik, 2023), our main focus here is on the two dimensions that specifically control the representation of irrelevant stimuli. Therefore, depending on whether each of the irrelevant stimuli are linearly decodable during their respective period of irrelevance, we distinguish four different (extreme) strategies, ranging from a ‘minimal strategy’, in which the irrelevant stimuli are only weakly represented (Figure 1c, bottom left; pink shading), to a ‘maximal strategy’, in which both irrelevant stimuli are strongly represented (Figure 1c, top right; blue shading).

Stimulus representations in task-optimized recurrent neural networks

To understand the factors determining which representational strategy neural circuits employ to solve this task, we optimized recurrent neural networks to perform the task (Figure 2a; see also Neural network models). The neural activities in these stochastic recurrent networks evolved according to

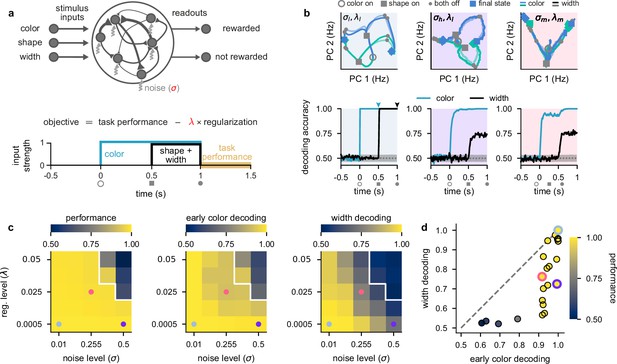

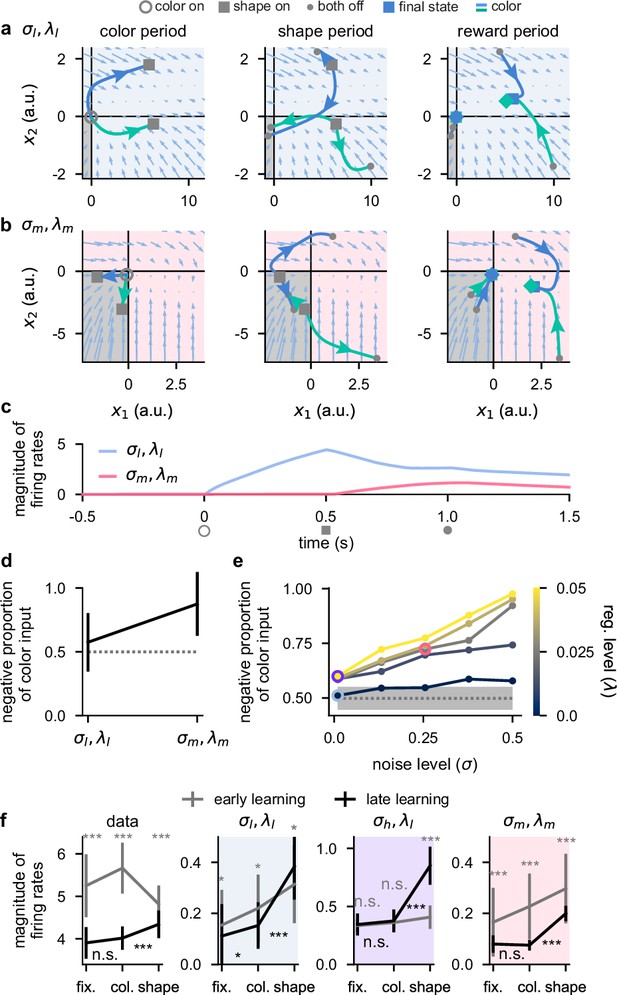

Stronger levels of noise and firing rate regularization lead to suppression of task-irrelevant stimuli in optimized recurrent networks.

(a) Top: illustration of a recurrent neural network model where each neuron receives independent white noise input with strength σ (middle). Color, shape, and width inputs are delivered to the network via three input channels (left). Firing rate activity is read out into two readout channels (either rewarded or not rewarded; right). All recurrent weights in the network, as well as weights associated with the input and readout channels, were optimized (Neural network models). Bottom: cost function used for training the recurrent neural networks (Equation 4) and timeline of task events within a trial for the recurrent neural networks (Figure 1a). Yellow shading on time axis shows the time period in which the task performance term enters the cost function (Equation 4). (b) Top: neural firing rate trajectories in the top two PCs for an example network over the course of a trial (from color stimulus onset) for a particular noise (σ) and regularization (λ) regime. Open gray circles indicate color onset, filled gray squares indicate shape onset, filled gray circles indicate offset of both stimuli, and colored thick and thin squares and diamonds indicate the end of the trial at 1.5 s for all stimulus conditions. Pale and brightly colored trajectories indicate the two width conditions. We show results for networks exposed to low noise and low regularization (; left, pale blue shading), high noise and low regularization (; middle, purple shading), and medium noise and medium regularization (; right, pink shading). Bottom: performance of a linear decoder (mean over 10 networks) trained at each time point within the trial to decode color (turquoise) or width (black) from neural firing rate activity for each noise and regularization regime. Dotted gray lines and shading show mean ± 2 s.d. of chance level decoding based on shuffling trial labels 100 times. (c) Left: performance of optimized networks determined as the mean performance over all trained networks during the reward period (a, bottom; yellow shading) for all noise (σ, horizontal axis) and regularization levels (λ, vertical axis) used during training. Pale blue, pink, and purple dots indicate parameter values that correspond to the dynamical regimes shown in panel b and Figure 1c with the same background coloring. For parameter values above the white line, networks achieved a mean performance of less than 0.95. Middle: early color decoding determined as mean color decoding over all trained networks at the end of the color period (b, bottom left, turquoise arrow) using the same plotting scheme as the left panel. Right: width decoding determined as mean width decoding over all trained networks at the end of the shape period (b, bottom left, black arrow) using the same plotting scheme as the left panel. (d) Width decoding plotted against early color decoding for all noise and regularization levels and colored according to performance. Pale blue, pink, and purple highlights indicate the parameter values shown with the same colors in panel c.

where corresponds to the vector of ‘sub-threshold’ activities of the neurons in the network, is their momentary firing rates which is a rectified linear function (ReLU) of the sub-threshold activity, τ=50 ms is the effective time constant, and denotes the inputs to the network encoding the three stimulus features as they become available over the course of the trial (Figure 2a, bottom). The optimized parameters of the network were , the recurrent weight matrix describing connection strengths between neurons in the network (Figure 2a, top; middle), , the feedforward weight matrix describing connections from the stimulus inputs to the network (Figure 2a, top; left; see also Neural network models), and , a stimulus-independent bias. Importantly, σ is the standard deviation of the neural noise process (Figure 2a, top; pale gray arrows; with being a sample from a Gaussian white noise process with mean 0 and variance 1), and as such represents a fundamental constraint on the operation of the network. The output of the network was given by

with optimized parameters , the readout weights (Figure 2a, top; right), and , a readout bias.

We optimized networks for a canonical cost function (Orhan and Ma, 2019; Driscoll et al., 2022; Song et al., 2016; Stroud et al., 2021; Masse et al., 2019; Figure 2a, bottom; Network optimization):

The first term in Equation 4 is a task performance term measuring the cross-entropy loss between the one-hot encoded target choice, , and the network’s output probabilities, , during the reward period, (Figure 2a, bottom; yellow shading). The second term in Equation 4 is a firing rate regularization term. This regularization term can be interpreted as a form of energy or metabolic cost (Whittington et al., 2022; Kao et al., 2021; Cueva et al., 2020; Masse et al., 2019; Schimel et al., 2023) measured across the whole trial, T, because it penalizes large overall firing rates. Therefore, optimizing this cost function encourages networks to not only solve the task, but to do so using low overall firing rates. How important it is for the network to keep firing rates low is controlled by the ‘regularization’ parameter λ. Critically, we used different noise levels σ and regularization strengths λ (Figure 2a, highlighted in red) to examine how these two constraints affected the dynamical strategies employed by the optimized networks to solve the task and compared them to our four hypothesized representational strategies (Figure 1c). We focused on these two parameters because they are both critical factors for real neural circuits and a priori can be expected to have important effects on the resulting circuit dynamics. For example, metabolic costs will constrain the overall level of firing rates that can be used to solve the task while noise levels directly affect how reliably stimuli can be encoded in the network dynamics. For the remainder of this section, we analyze representational strategies utilized by networks after training. In subsequent sections, we analyze learning-related changes in both our optimized networks and neural recordings.

We found that networks trained in a low noise–low firing rate regularization setting (which we denote by ) employed a maximal representational strategy (Figure 2b, left column; pale blue shading). Trajectories in neural firing rate space diverged for the two different colors as soon as the color stimulus was presented (Figure 2b, top left; blue and green trajectories from open gray circle to gray squares), which resulted in high color decoding during the color period (Figure 2b, bottom left; turquoise line; Linear decoding). During the shape period, the trajectories corresponding to each color diverged again (Figure 2b, top left; trajectories from gray squares to filled gray circles), such that all stimuli were highly decodable, including width (Figure 2b, bottom left; black line, Figure 2—figure supplement 1a). After removal of the stimuli during the reward period, trajectories converged to one of two parts of state space according to the XOR task rule – which is required for high performance (Figure 2b, top left; trajectories from filled gray circles to colored squares and diamonds). Because early color and width were highly decodable in these networks trained with a low noise and low firing rate regularization, the dynamical strategy they employed corresponds to the maximal representational regime (Figure 1c, blue shading).

We next considered the setting of networks that solve this task while being exposed to a high level of neural noise (which we denote by ; Figure 2b, middle column; purple shading). In this setting, we also observed neural trajectories that diverged during the color period (Figure 2b, top middle; gray squares are separated), which yielded high color decoding during the color period (Figure 2b, bottom middle; turquoise line). However, in contrast to networks with a low level of neural noise (Figure 2b, left), width was poorly decodable during the shape period (Figure 2b, bottom middle; black line). Therefore, for networks challenged with higher levels of neural noise, the irrelevant stimulus dimension of width is represented more weakly (Figure 1c, purple shading). A similar representational strategy was also observed in networks that were exposed to a low level of noise but a high level of firing rate regularization (Figure 2—figure supplement 1c, black lines).

Finally, we considered the setting of networks that solve this task while being exposed to medium levels of both neural noise and firing rate regularization (which we denote by ; Figure 2b, right column; pink shading). In this setting, neural trajectories diverged only weakly during the color period (Figure 2b, top middle; gray squares on-top of one another), which yielded relatively poor (non-ceiling) color decoding during the color period (Figure 2b, bottom right; turquoise line). Nevertheless, color decoding was still above chance despite the neural trajectories strongly overlapping in the two-dimensional state space plot in Figure 2b, top right, because these trajectories became separable in the full-dimensional state space of these networks. Additionally, width decoding was also poor during the shape period (Figure 2b, bottom right; black line). Therefore, networks that were challenged with increased levels of both neural noise and firing rate regularization employed dynamics in line with a minimal representational strategy by only weakly representing irrelevant stimuli (Figure 1c, pink shading).

To gain a more comprehensive picture of the full range of dynamical solutions that networks can exhibit, we performed a grid search over multiple different levels of noise and firing rate regularization. Nearly all parameter values allowed networks to achieve high performance (Figure 2c, left), except when both the noise and regularization levels were high (Figure 2c, left; parameter values above white line). Importantly, all of the dynamical regimes that we showed in Figure 2b achieved similarly high performances (Figure 2c, left; pale blue, purple, and pink dots).

When looking at early color decoding (defined as color decoding at the end of the color period; Figure 2b, bottom left, turquoise arrow) and width decoding (defined as width decoding at the end of the shape period; Figure 2b, bottom left, black arrow), we saw a consistent pattern of results. Early color decoding was high only when either the lowest noise level was used (Figure 2c, middle; column) or when the lowest regularization level was used (Figure 2c, middle; row). In contrast, width decoding was high only when both the level of noise and regularization were small (Figure 2c, right; bottom left corner). Otherwise, width decoding became progressively worse as either the noise or regularization level was increased and achieved values that were typically lower than the corresponding early color decoding (Figure 2c, compare right with middle). This pattern becomes clearer when we plot width decoding against early color decoding (Figure 2d). No set of parameters yielded higher width decodability compared to early color decodability (Figure 2d, no data point above the identity line). This means that we never observed the fourth dynamical regime we hypothesized a priori, in which width decoding would be high and early color decoding would be low (Figure 1c, top left). Therefore, information with static irrelevance (width) was more strongly suppressed compared to information whose irrelevance was dynamic (color). We also note that we never observed pure chance levels of decoding of color or width during stimulus presentation. This is likely because it is challenging for recurrent neural networks to strongly suppress their inputs and it may also be the case that other hyperparameter regimes more naturally lead to stronger suppression of inputs (we discuss this second possibility later; e.g. Figure 5).

Comparing learning-related changes in stimulus representations in neural networks and primate lateral PFC

To understand the dynamical regime employed by PFC, we analyzed a dataset (Wójcik, 2023) of multi-channel recordings from lateral prefrontal cortex (lPFC) in two monkeys exposed to the task shown in Figure 1a. These recordings yielded 376 neurons in total across all recording sessions and both animals (Experimental materials and methods). Importantly, for understanding the direction in which neural geometries changed over learning, recordings commenced in the first session in which the animals were exposed to the task – i.e., the recordings spanned the entirety of the learning process. For our analyses, we distinguished between the first half of recording sessions (Figure 3a, gray; ‘early learning’) and the second half of recording sessions (Figure 3a, black; ‘late learning’). A previous analysis of this dataset showed that, over the course of learning, the XOR representation of the task comes to dominate the dynamics during the late shape period of the task (Wójcik, 2023). Here, however, we focus on relevant and irrelevant task variable coding during the stimulus periods and compare the recordings to the dynamics of task-optimized recurrent neural networks. Also, in line with the previous study (Wójcik, 2023), we do not examine the reward period of the task because the one-to-one relationship between XOR and reward in the data (which is not present in the models) will likely lead to trivial differences in XOR representations in the reward period between the data and models.

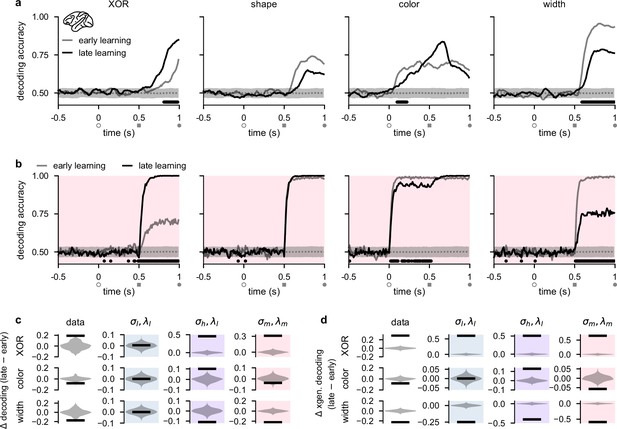

Stimulus representations in primate lateral prefrontal cortex (lPFC) correspond to a minimal representational strategy.

(a) Performance of a linear decoder trained at each time point to predict either XOR (far left), shape (middle left), color (middle right), or width (far right) from neural population activity in lPFC (Experimental materials and methods and Linear decoding). We show decoding results separately from the first half of sessions (gray, ‘early learning’) and the second half of sessions (black, ‘late learning’). Dotted gray lines and shading show mean ± 2 s.d. of chance level decoding based on shuffling trial labels 100 times. Horizontal black bars show significant differences between early and late decoding using a two-sided cluster-based permutation test and a significance threshold of 0.05 (Statistics). Open gray circles, filled gray squares, and filled gray circles on the horizontal indicate color onset, shape onset, and offset of both stimuli, respectively. (b) Same as panel a but for decoders trained on neural activity from optimized recurrent neural networks in the regime (Figure 2b–d, pink). (c) Black horizontal lines show the mean change between early and late decoding during time periods when there were significant differences between early and late decoding in the data (horizontal black bars in panel a) for XOR (top row), color (middle row), and width (bottom row). (No significant differences in shape decoding were observed in the data; cf. panel a.) Violin plots show chance differences between early and late decoding based on shuffling trial labels 100 times. We show results for the data (far left column), networks (middle left column, pale blue shading), networks (middle right column, purple shading), and networks (far right column, pink shading). (d) Same as panel c but we show results using cross-generalized decoding (Bernardi et al., 2020) during the same time periods as those used in panel c (Linear decoding).

Similar to our analyses of our recurrent network models (Figure 2b, bottom), we performed population decoding of the key task variables in the experimental data (Figure 3a, see also Linear decoding). We found that the decodability of the XOR relationship between task-relevant stimuli that determined task performance (Figure 1b) significantly increased during the shape period over the course of learning, consistent with the animals becoming more familiar with the task structure (Figure 3a, far left; compare gray and black lines). We also found that shape decodability during the shape period decreased slightly, but not significantly, over learning (Figure 3a, middle left; compare gray and black lines from gray square to gray circle), while color decodability during the shape period increased slightly (Figure 3a, middle right; compare gray and black lines from gray square to gray circle). Importantly, however, color decodability significantly decreased during the color period (when it is ‘irrelevant’; Figure 3a, middle right; compare gray and black lines), and width decodability significantly decreased during the shape period (Figure 3a, far right; compare gray and black lines). Neural activities in lPFC thus appear to change in line with the minimal representational strategy over the course of learning, consistent with recurrent networks trained in the regime (Figure 1c, pink and Figure 2b, bottom, pink).

We then directly compared these learning-related changes in stimulus decodability from lPFC with those that we observed in our task-optimized recurrent neural networks (Figure 2). We found that the temporal decoding dynamics of networks trained with medium noise and firing rate regularization (; Figure 2b–d, pink shading and pink dots) exhibited decoding dynamics most similar to those that we observed in lPFC. Specifically, XOR decodability significantly increased after shape onset, consistent with the networks learning the task (Figure 3b, far left; compare gray and black lines). We also found that shape and color decodability did not significantly change during the shape period (Figure 3b, middle left and middle right; compare gray and black lines from gray square to gray circle). Importantly, however, color decodability significantly decreased during the color period (when it is ‘irrelevant’; Figure 3b, middle right; compare gray and black lines from open gray circle to gray square), and width decodability significantly decreased during the shape period (Figure 3a, far right; compare gray and black lines). Other noise and regularization settings yielded temporal decoding that displayed a poorer resemblance to the data (Figure 2—figure supplement 1). For example, , and networks exhibited almost no changes in decodability during the color and shape periods (Figure 2—figure supplement 1a and c) and networks exhibited increased XOR, shape, and color decodability at all times after stimulus onset while width decodability decreased during the shape period (Figure 2—figure supplement 1b). We also found that if regularization is driven to very high levels, color and shape decoding becomes weak during the shape period while XOR decoding remains high (Figure 2—figure supplement 1d). Therefore, such networks effectively perform a pure XOR computation during the shape period.

We also note that the model does not perfectly match the decoding dynamics seen in the data. For example, although not significant, we observed a decrease in shape decoding and an increase in color decoding in the data during the shape period whereas the model only displayed a slight (non-significant) increase in decodability of both shape and color during the same time period. These differences may be due to fundamentally different ways that brains encode sensory information upstream of PFC, compared to the more simplistic abstract sensory inputs used in models (see Discussion).

To systematically compare learning-related changes in the data and models, we analyzed time periods when there were significant changes in decoding in the data over the course of learning (Figure 3a, horizontal black bars). This yielded a substantial increase in XOR decoding during the shape period, and substantial decreases in color and width decoding during the color and shape periods, respectively, in the data (Figure 3c, far left column). During the same time periods in the models, networks in the regime exhibited no changes in decoding (Figure 3c, middle left column; blue shading). In contrast, networks in the regime exhibited substantial increases in XOR and color decoding, and a substantial decrease in width decoding (Figure 3c, middle right column; purple shading). Finally, in line with the data, networks in the regime exhibited a substantial increase in XOR decoding, and substantial decreases in both color and width decoding (Figure 3c, middle right column; pink shading).

In addition to studying changes in traditional decoding, we also studied learning-related changes in ‘cross-generalized decoding’ which provides a measure of how factorized the representational geometry is across stimulus conditions (Bernardi et al., 2020; Figure 3—figure supplement 1). (For example, for evaluating cross-generalized decoding for color, a color decoder trained on square trials would be tested on diamond trials, and vice versa Bernardi et al., 2020; see also Linear decoding.) Using this measure, we found that changes in decoding were generally more extreme over learning and that models and data bore a stronger resemblance to one another compared with traditional decoding. Specifically, all models and the data showed a strong increase in XOR decoding (Figure 3d, top row, ‘XOR’) and a strong decrease in width decoding (Figure 3d, bottom row, ‘width’). However, only the data and networks showed a decrease in color decoding (Figure 3d, compare middle far left and middle far right), whereas networks showed no change in color decoding (Figure 3d, middle left; blue shading) and networks showed an increase in color decoding (Figure 3d, middle right; purple shading).

Beside studying the effects of input noise and firing rate regularization, we also examined the effects of different strengths of the initial stimulus input connections prior to training (Flesch et al., 2022). In line with previous studies (Yang et al., 2019; Orhan and Ma, 2019; Stroud et al., 2021; Masse et al., 2019; Dubreuil et al., 2022), for all of our previous results, the initial input weights were set to small random values prior to training (Network optimization). We found that changing these weights had similar effects to changing neural noise (with the opposite sign). Specifically, when input weights were set to 0 before training, initial decoding was at chance levels and only increased with learning for XOR, shape, and color (whereas width decoding hardly changed with learning and remained close to chance levels; Figure 3—figure supplement 2) – analogously to the regime (Figure 2—figure supplement 1b). In contrast, when input weights were set to large random values prior to training, initial decoding was at ceiling levels and did not significantly change over learning during the color and shape periods for any of the task variables (Figure 3—figure supplement 3) – similar to what we found in the regime (Figure 2—figure supplement 1a). Thus, neither extremely small nor extremely large initial input weights were consistent with the data that exhibited both increases and decreases in decodability of task variables over learning (Figure 3a).

Theoretical predictions for strength of relevant and irrelevant stimulus coding

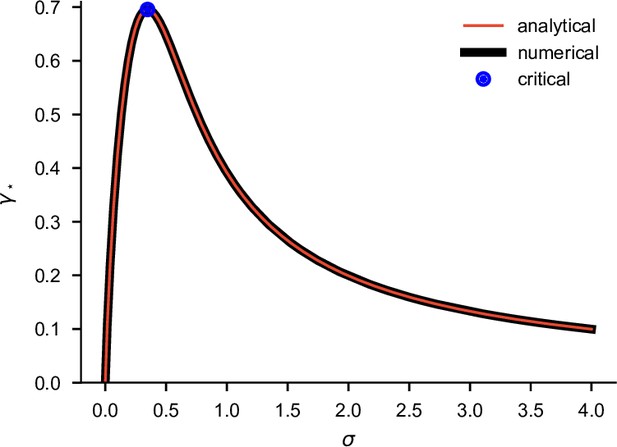

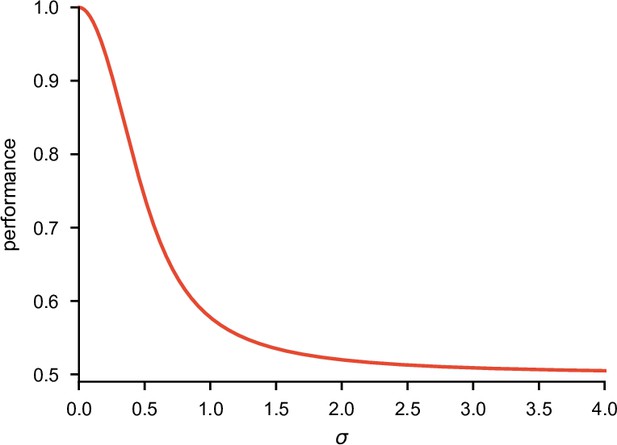

To gain a theoretical understanding of how irrelevant stimuli should be processed in a neural circuit, we performed a mathematical analysis of a minimal linear model that only included a single relevant stimulus and a single statically irrelevant stimulus (see also Appendix 1, Mathematical analysis of relevant and irrelevant stimulus coding in a linear network). Although this analysis applies to a simpler task compared with that faced by our neural networks and animals, crucially it still allows us to understand how relevant and irrelevant coding depend on noise and metabolic constraints. Our mathematical analysis suggested that the effects of noise and firing rate regularization on the performance and metabolic cost of a network can be understood via three key aspects of its representation: the strength of neural responses to the relevant stimulus (‘relevant coding’), the strength of responses to the irrelevant stimulus (‘irrelevant coding’), and the overlap between the population responses to relevant and irrelevant stimuli (‘overlap’; Figure 4a). In particular, maximizing task performance (i.e. the decodability of the relevant stimulus) required relevant coding to be strong, irrelevant coding to be weak, and the overlap between the two to be small (Figure 4b, top; see also Appendix 1, The performance of the optimal linear decoder). This ensures that the irrelevant stimulus interferes minimally with the relevant stimulus. In contrast, to reduce a metabolic cost (such as we considered previously in our optimized recurrent networks, see Equation 4 and Figure 2), both relevant and irrelevant coding should be weak (Figure 4b, bottom; see also Appendix 1, Metabolic cost).

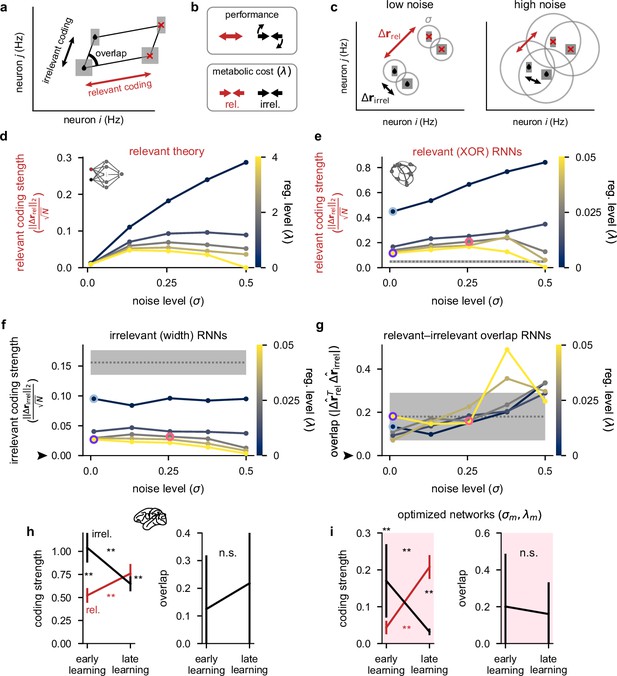

Theoretical predictions for strength of relevant and irrelevant stimulus coding and comparison to lateral prefrontal cortex (lPFC) recordings.

(a) Schematic of activity in neural state space for two neurons for a task involving two relevant (black drops vs. red crosses) and two irrelevant stimuli (thick vs. thin squares). Strengths of relevant and irrelevant stimulus coding are shown with red and black arrows, respectively, and the overlap between relevant and irrelevant coding is also shown (‘overlap’). (b) Schematic of our theoretical predictions for the optimal setting of relevant (red arrows) and irrelevant (black arrows) coding strengths when either maximizing performance (top) or minimizing a metabolic cost with strength λ (bottom; see Equation 4). (c) Schematic of our theoretical predictions for the strength of relevant (; black drops vs. red crosses; red arrows) and irrelevant (; thick vs. thin squares; black arrows) coding when jointly optimizing for both performance and a metabolic cost (cf. panel b). In the low noise regime (left), relevant conditions are highly distinguishable and irrelevant conditions are poorly distinguishable as well as strongly orthogonal to the relevant conditions. In the high noise regime (right), all conditions are poorly distinguishable. (d) Our theoretical predictions (Equation S34) for the strength of relevant coding (, see panel c) as a function of the noise level σ (horizontal axis) and firing rate regularization strength λ (colorbar). (e) Same as panel d but for our optimized recurrent neural networks (Figure 2) where we show the strength of relevant (XOR) coding (Measuring stimulus coding strength). Pale blue, purple, and pink highlights correspond to the noise and regularization strengths shown in Figure 2c and d. Gray dotted line and shading shows mean ±2 s.d. (over 250 networks; 10 networks for each of the 25 different noise and regularization levels) of prior to training. (f) Same as panel e but for the strength of irrelevant (width) coding (). The black arrow indicates the theoretical prediction of 0 irrelevant coding. (g) The absolute value of the normalized dot product (overlap) between relevant and irrelevant representations (, i.e. 0 implies perfect orthogonality and 1 implies perfect overlap) for our optimized recurrent neural networks. The black arrow indicates the theoretical prediction of 0 overlap. (h) Left: coding strength (length of arrows in panel a; Measuring stimulus coding strength) for relevant (XOR; red) and irrelevant (width; black) stimuli during early and late learning for our lPFC recordings. Right: the absolute value of the overlap between relevant and irrelevant representations for our lPFC recordings (0 implies perfect orthogonality and 1 implies perfect overlap). Error bars show the mean ± 2 s.d. over 10 non-overlapping splits of the data. (i) Same as panel h but for the optimized recurrent neural networks in the regime (see pink dots in Figure 2). Error bars show the mean ± 2 s.d. over 10 different networks. p-Values resulted from a two-sided Mann–Whitney U test (*, p<0.05; **, p<0.01; n.s., not significant; see Statistics).

In combination, when decoding performance and metabolic cost are jointly optimized, as in our task-optimized recurrent networks (Figure 2), our theoretical analyses suggested that performance should decrease with both the noise level σ and the strength of firing rate regularization λ in an approximately interchangeable way, and metabolic cost should increase with σ but decrease with λ (Appendix 1, Qualitative predictions about optimal parameters). We also found that the strength of relevant coding should decrease with λ, but its dependence on σ was more nuanced. For small σ, the performance term effectively dominates the metabolic cost (Figure 4b, top) and the strength of relevant coding should increase with σ. However, if σ is too high, the strength of relevant coding starts decreasing otherwise a disproportionately high metabolic cost must be incurred to achieve high performance (Figure 4c,d). Our mathematical analysis also suggested that irrelevant coding and relevant–irrelevant overlap could in principle depend on the noise and metabolic cost strength – particularly if performing noisy optimization where the curvature of the cost function can be relatively shallow around the minimum (Appendix 1, Qualitative predictions about optimal parameters). Therefore, practically, we also expect that irrelevant coding should be mostly dependent (inversely) on λ, but relevant–irrelevant overlap should mostly depend on σ (Appendix 1, Curvature of the loss function around the optimum). These theoretical predictions were confirmed by our recurrent neural network simulations (Figure 4e–g).

We next measured, in both recorded and simulated population responses, the three aspects of population responses that our theory identified as being key in determining the performance and metabolic cost of a network (Figure 4a; Measuring stimulus coding strength). We found a close correspondence in the learning-related changes of these measures between our lPFC recordings (Figure 4h) and optimized recurrent networks (Figure 4i). In particular, we found that the strength of relevant (XOR) coding increased significantly over the course of learning (Figure 4h and i, left; red). The strength of irrelevant (width) coding decreased significantly over the course of learning (Figure 4h and i, left; black), such that it became significantly smaller than the strength of relevant coding (Figure 4h and i, left; compare red and black at ‘late learning’). Finally, relevant and irrelevant directions were always strongly orthogonal in neural state space, and the level of orthogonality did not significantly change with learning (Figure 4h and i, right). Although we observed no learning-related changes in overlap, it may be that for stimuli that are more similar than the relevant and irrelevant features we studied here (XOR and width), the overlap between these features may decrease over learning rather than simply remaining small.

Activity-silent, sub-threshold dynamics lead to the suppression of dynamically irrelevant stimuli

While the representation of statically irrelevant stimuli can be suppressed by simply weakening the input connections conveying information about it to the network, the suppression of dynamically irrelevant stimuli requires a mechanism that alters the dynamics of the network (since this information ultimately needs to be used by the network to achieve high performance). To gain some intuition about this mechanism, we first analyzed two-neuron networks trained on the task. To demonstrate the basic mechanism of suppression of dynamically irrelevant stimuli (i.e., weak early color coding), we compared the (Figure 5a) and (Figure 5b) regimes for these networks, as these corresponded to the minimal and maximal amount of suppression of dynamically irrelevant stimuli (Figure 2c).

Activity-silent, sub-threshold dynamics lead to the suppression of dynamically irrelevant stimuli.

(a) Sub-threshold neural activity ( in Equation 1) in the full state space of an example two-neuron network over the course of a trial (from color onset) trained in the regime. Pale blue arrows show flow field dynamics (direction and magnitude of movement in the state space as a function of the momentary state). Open gray circles indicate color onset, gray squares indicate shape onset, filled gray circles indicate offset of both stimuli, and colored squares and diamonds indicate the end of the trial at 1.5 s. We plot activity separately for the three periods of the task (color period, left; shape period, middle; reward period, right). We plot dynamics without noise for visual clarity. (b) Same as panel a but for a network trained in the regime. (c) Momentary magnitude of firing rates (; i.e. momentary metabolic cost, see Equation 4) for the two-neuron networks from panels a (blue line) and b (pink line). (d) Mean ± 2 s.d. (over 10 two-neuron networks) proportion of color inputs that have a negative sign for the two noise and regularization regimes shown in panels a–c. Gray dotted line shows chance level proportion of negative color input. (e) Mean (over 10 fifty-neuron networks) proportion of color inputs that have a negative sign for all noise (horizontal axis) and regularization (colorbar) strengths shown in Figure 2c and d. Pale blue, purple, and pink highlights correspond to the noise and regularization strengths shown in Figure 2c and d. Gray dotted line and shading shows mean ± 2 s.d. (over 250 networks; 10 networks for each of the 25 different noise and regularization levels) of the proportion of negative color input prior to training (i.e. the proportion of negative color input expected when inputs are drawn randomly from a Gaussian distribution; Network optimization). (f) Momentary magnitude of firing rates () for our lateral prefrontal cortex (lPFC) recordings (far left) and 50-neuron networks in the regime (middle left, blue shading), regime (middle right, purple shading), and regime (far right, pink shading). Error bars show the mean ± 2 s.d. over either 10 non-overlapping data splits for the data or over 10 different networks for the models. p-Values resulted from a two-sided Mann–Whitney U test (*, p<0.05; **, p<0.01; ***, p<0.001; n.s., not significant; see Statistics).

We examined trajectories of sub-threshold neural activity (Equation 1) in the full two-neuron state space (Figure 5a and b, blue and green curves). We distinguished between the negative quadrant of state space, which corresponds to the rectified part of the firing rate nonlinearity (Figure 5a and b, bottom left gray quadrant; Equation 2), and the rest of state space. Importantly, if sub-threshold activity lies within the negative quadrant at some time point, both neurons in the network have zero firing rate and consequently a decoder cannot decode any information from the firing rate activity and the network exhibits no metabolic cost (Equation 4). Therefore, we reasoned that color inputs may drive sub-threshold activity so that it lies purely in the negative quadrant of state space so that no metabolic cost is incurred during the color period (akin to nonlinear gating Flesch et al., 2022; Miller and Cohen, 2001). Later in the trial, when these color inputs are combined with the shape inputs, activity may then leave the negative quadrant of state space so that the network can perform the task.

We found that for the regime, there was typically at least one set of stimulus conditions for which sub-threshold neural activity evolved outside the negative quadrant of state space during any task period (Figure 5a). Consequently, color was decodable to a relatively high level during the color period (Figure 5—figure supplement 1a, middle right) and this network produced a relatively high metabolic cost throughout the task (Figure 5c, blue line). In contrast, for networks in the regime, the two color inputs typically drove neural activity into the negative quadrant of state space during the color period (Figure 5b, left). Therefore, during the color period, the network produced zero firing rate activity (Figure 5c, pink line from open gray circle to gray square). Consequently, color was poorly decodable (Figure 5—figure supplement 1b, middle right; black line) and the network incurred no metabolic cost during the color period. Thus, color information was represented in a sub-threshold, ‘activity-silent’ (Epsztein et al., 2011) state during the color period. However, during the shape and reward periods later in the trial, the color inputs, now in combination with the shape inputs, affected the firing rate dynamics and the neural trajectories explored the full state space in a similar manner to the network (Figure 5b, middle and right panels). Indeed, we also found that color decodability increased substantially during the shape period in the network (Figure 5—figure supplement 1b, middle right; black line). This demonstrates how color inputs can cause no change in firing rates during the color period when they are irrelevant, and yet these same inputs can be utilized later in the trial to enable high task performance (Figure 5—figure supplement 1a and b, far left). While we considered individual example networks in Figure 5a–c, color inputs consistently drove neural activity into the negative quadrant of state space across repeated training of networks but not for networks (Figure 5d).

Next, we performed the same analysis as Figure 5d on the large (50-neuron) networks that we studied previously (Figures 2—4). Similar to the two-neuron networks, we found that large networks in the regime did not exhibit more negative color inputs than would be expected by chance (Figure 5e, pale blue highlighted point) – consistent with the high early color decoding we found previously in these networks (Figure 2c, middle, pale blue highlighted point). However, when increasing either the noise or regularization level, the proportion of negative color inputs increased above chance such that for the highest noise and regularization level, nearly all color inputs were negative (Figure 5e). We also found a strong negative correlation between the proportion of negative color input and the level of early color decoding that we found previously in Figure 2c (Figure 5—figure supplement 1c, ). This suggests that color inputs that drive neural activity into the rectified part of the firing rate nonlinearity, and thus generate purely sub-threshold activity-silent dynamics, is the mechanism that generates weak early color coding during the color period in these networks. We also examined whether these results generalized to networks that use a sigmoid (as opposed to a ReLU) nonlinearity. To do this, we trained networks with a nonlinearity (shifted for a meaningful comparison with ReLU, so that the lower bound on firing rates was 0, rather than –1) and found qualitatively similar results to the ReLU networks. In particular, color inputs drove neural activity toward 0 firing rate during the color period in networks but not in networks (Figure 5—figure supplement 2, compare a and b), which resulted in a lower metabolic cost during the color period for networks compared to networks (Figure 5—figure supplement 2c). This was reflected in color inputs being more strongly negative in networks compared to networks which only showed chance levels of negative color inputs (Figure 5—figure supplement 2d).

We next sought to test whether this mechanism could also explain the decrease in color decodability over learning that we observed in the lPFC data. To do this, we measured the magnitude of firing rates in the fixation, color, and shape periods for both early and late learning (note that the magnitude of firing rates coincides with our definition of the metabolic cost; Equation 4 and Figure 5c). To decrease metabolic cost over learning, we would expect two changes: firing rates should decrease with learning and firing rates should not significantly increase from the fixation to the color period after learning (Figure 5c, pink line). Indeed, we found that firing rates decreased significantly over the course of learning in all task periods (Figure 5f, far left; compare gray and black lines), and this decrease was most substantial during the fixation and color periods (Figure 5f, far left). We also found that after learning, firing rates during the color period were not significantly higher than during the fixation period (Figure 5f, far left; compare black error bars during fixation and color periods). During the shape period however, firing rates increased significantly compared to those during the fixation period (Figure 5f, far left; compare black error bars during fixation and shape periods). Therefore, the late learning dynamics of the data are in line with what we saw for the optimized two-neuron network (Figure 5c, pink line).

We then compared these results from our neural recordings with the results from our large networks trained in different noise and regularization regimes. We found that for networks, over the course of learning, firing rates decreased slightly during the fixation and color periods but actually increased during the shape period (Figure 5f, middle left pale blue shading; compare gray and black lines). Additionally, after learning, firing rates increased between fixation and color periods and between color and shape periods (Figure 5f, middle left pale blue shading; compare black error bars). For networks, over the course of learning, we found that firing rates did not significantly change during the fixation and color periods but increased significantly during the shape period (Figure 5f, middle right purple shading; compare gray and black lines). Furthermore, after learning, firing rates did not change significantly between fixation and color periods but did increase significantly between color and shape periods (Figure 5f, middle right purple shading; compare black error bars). Finally, for the networks, we found a pattern of results that was most consistent with the data. Firing rates decreased over the course of learning in all task periods (Figure 5f, far right pink shading; compare gray and black lines) and the decrease in firing rates was most substantial during the fixation and color periods. After learning, firing rates did not change significantly from fixation to color periods but did increase significantly during the shape period (Figure 5f, far right; compare black error bars).

To investigate whether these findings depended on our random network initialization prior to training, we also compared late learning firing rates to firing rates that resulted from randomly shuffling the color, shape, and width inputs (which emulates alternative tasks where different combinations of color, shape, and width are relevant). For example, the relative strengths of the three inputs to the network prior to training on this task may affect how the firing rates change over learning. Under this control, we also found that networks bore a close resemblance to the data (Figure 5—figure supplement 1d, compare black lines to blue lines).

Discussion

Comparing the neural representations of task-optimized networks with those observed in experimental data has been particularly fruitful in recent years (Wang et al., 2018; Mante et al., 2013; Sussillo et al., 2015; Barak et al., 2013; Cueva et al., 2020; Echeveste et al., 2020; Stroud et al., 2021; Lindsay, 2021). However, networks are typically optimized using only a very limited range of hyperparameter values (Wang et al., 2018; Mante et al., 2013; Sussillo et al., 2015; Barak et al., 2013; Cueva et al., 2020; Driscoll et al., 2022; Song et al., 2016; Echeveste et al., 2020; Stroud et al., 2021). Instead, here we showed that different settings of key, biologically relevant hyperparameters such as noise and metabolic costs, can yield a variety of qualitatively different dynamical regimes that bear varying degrees of similarity with experimental data. In general, we found that increasing levels of noise and firing rate regularization led to increasing amounts of irrelevant information being filtered out in the networks. Indeed, filtering out of task-irrelevant information is a well-known property of the PFC and has been observed in a variety of tasks (Freedman et al., 2001; Duncan, 2001; Cueva et al., 2020; Reinert et al., 2021; Miller and Cohen, 2001; Rainer and Miller, 2002; Asaad et al., 2000; Everling et al., 2002). We provide a mechanistic understanding of the specific conditions that lead to stronger filtering of task-irrelevant information. We predict that these results should also generalize to richer, more complex cognitive tasks that may, for example, require context switching (Flesch et al., 2022; Reinert et al., 2021; Asaad et al., 2000) or invoke working memory (Freedman et al., 2001; Rainer et al., 1998; Asaad et al., 2000). Indeed, filtering out of task-irrelevant information in the PFC has been observed in such tasks (Freedman et al., 2001; Rainer et al., 1998; Flesch et al., 2022; Reinert et al., 2021; Asaad et al., 2000; Mack et al., 2020).

Our results are also likely a more general finding of neural circuits that extend beyond the PFC. In line with this, it has previously been shown that strongly regularized neural network models trained to reproduce monkey muscle activities during reaching bore a stronger resemblance to neural recordings from primary motor cortex compared to unregularized models (Sussillo et al., 2015). In related work on motor control, recurrent networks controlled by an optimal feedback controller recapitulated key aspects of experimental recordings from primary motor cortex (such as orthogonality between preparatory and movement neural activities) when the control input was regularized (Kao et al., 2021). Additionally, regularization of neural firing rates, and its natural biological interpretation as a metabolic cost, has recently been shown to be a key ingredient for the formation of grid cell-like response profiles in artificial networks (Whittington et al., 2022; Cueva and Wei, 2018).

By showing that PFC representations changed in line with a minimal representational strategy, our results are in line with various studies showing low-dimensional representations under a variety of tasks in the PFC and other brain regions (Rainer et al., 1998; Flesch et al., 2022; Cueva et al., 2020; Ganguli et al., 2008; Sohn et al., 2019). This is in contrast to several previous observations of high-dimensional neural activity in PFC (Rigotti et al., 2013; Bernardi et al., 2020). Both high- and low-dimensional regimes confer distinct yet useful benefits: high-dimensional representations allow many behavioral readouts to be generated, thereby enabling highly flexible behavior (Rigotti et al., 2013; Flesch et al., 2022; Barak et al., 2013; Enel et al., 2016; Maass et al., 2002; Fusi et al., 2016), whereas low-dimensional representations are more robust to noise and allow for generalization across different stimuli (Flesch et al., 2022; Barak et al., 2013; Fusi et al., 2016). These two different representational strategies have previously been studied in models by setting the initial network weights to either small values (to generate low-dimensional ‘rich’ representations) or large values (Flesch et al., 2022) (to generate high-dimensional ‘lazy’ representations). However, in contrast to previous approaches, we studied the more biologically plausible effects of firing rate regularization (i.e. a metabolic cost; see also the supplement of Flesch et al., 2022) on the network activities over the course of learning and compared them to learning-related changes in PFC neural activities. Firing rate regularization will cause neural activities to wax and wane as networks are exposed to new tasks depending upon the stimuli that are currently relevant. In line with this, it is conceivable that prolonged exposure to a task that has a limited number of stimulus conditions, some of which can even be generalized over (as was the case for our task), encourages more low-dimensional dynamics to form (Wójcik, 2023; Flesch et al., 2022; Dubreuil et al., 2022; Fusi et al., 2016; Musslick, 2017; Mastrogiuseppe and Ostojic, 2018). In contrast, tasks that use a rich variety of stimuli (that may even dynamically change over the task Wang et al., 2018; Jensen et al., 2023; Heald et al., 2021), and which do not involve generalization across stimulus conditions, may more naturally lead to high-dimensional representations (Rigotti et al., 2013; Barak et al., 2013; Dubreuil et al., 2022; Fusi et al., 2016; Mastrogiuseppe and Ostojic, 2018; Bartolo et al., 2020). It would therefore be an important future question to understand how our results also depend on the task being studied as some tasks may more naturally lead to the ‘maximal’ representational regime (Barak et al., 2013; Dubreuil et al., 2022; Mastrogiuseppe and Ostojic, 2018; Figures 1—3 and 5, blue shading).

A previous analysis of the same dataset that we studied here focused on the late parts of the trial (Wójcik, 2023). In particular, they found that the final result of the computation needed for the task, the XOR operation between color and shape, emerges and eventually comes to dominate lPFC representations over the course of learning in the late shape period (Wójcik, 2023). Our analysis goes beyond this by studying mechanisms of suppression of both static and dynamically irrelevant stimuli across all task periods and how different levels of neural noise and metabolic cost can lead to qualitatively different representations of irrelevant stimuli in task-optimized recurrent networks. Other previous studies focused on characterizing the representation of several task-relevant (Rigotti et al., 2013; Bernardi et al., 2020; Stokes et al., 2013) – and, in some cases, -irrelevant (Flesch et al., 2022) – variables over the course of individual trials. Characterizing how key aspects of neural representations change over the course of learning, as we did here, offers unique opportunities for studying the functional objectives shaping neural representations (Richards et al., 2019).

There were several aspects of the data that were not well captured by our models. For example, during the shape period, decodability of shape decreased while decodability of color increased (although not significantly) in our neural recordings (Figure 3a). These differences in changes in decoding may be due to fundamentally different ways that brains encode sensory information upstream of PFC, compared to our models. For example, shape and width are both geometric features of the stimulus, whose encoding is differentiated from that of color already at early stages of visual processing (Kandel, 2000). Such a hierarchical representation of inputs may automatically lead to the (un)learning about the relevance of width (which the model reproduced) generalizing to shape, but not to color. In contrast, inputs in our model used a non-hierarchical one-hot encoding (Figure 2), which did not allow for such generalization. Moreover, in the data, we may expect width to be a priori more strongly represented than color or shape because it is a much more potent sensory feature. In line with this, in our neural recordings, we found that width was very strongly represented in early learning compared to the other stimulus features (Figure 3a, far right) and width always yielded high cross-generalized decoding – even after learning (Figure 3—figure supplement 1a, far right). Nevertheless, studying changes in decoding over learning, rather than absolute decoding levels, allowed us to focus on features of learning that do not depend on the details of upstream sensory representations of stimuli. Future studies could incorporate aspects of sensory representations that we ignored here by using stimulus inputs with which the model more faithfully reproduces the experimentally observed initial decodability of stimulus features.

In line with previous studies (Yang et al., 2019; Whittington et al., 2022; Sussillo et al., 2015; Orhan and Ma, 2019; Kao et al., 2021; Cueva et al., 2020; Driscoll et al., 2022; Song et al., 2016; Stroud et al., 2021; Masse et al., 2019; Schimel et al., 2023), we operationalized metabolic cost in our models through L2 firing rate regularization. This cost penalizes high overall firing rates. There are however alternative conceivable ways to operationalize a metabolic cost; e.g., L1 firing rate regularization has been used previously when optimizing neural networks and promotes more sparse neural firing (Yang et al., 2019). Interestingly, although our L2 is generally conceived to be weaker than L1 regularization, we still found that it encouraged the network to use purely sub-threshold activity in our task. The regularization of synaptic weights may also be biologically relevant (Yang et al., 2019) because synaptic transmission uses the most energy in the brain compared to other processes (Faria-Pereira and Morais, 2022; Harris et al., 2012). Additionally, even sub-threshold activity could be regularized as it also consumes energy (although orders of magnitude less than spiking Zhu et al., 2019). Therefore, future work will be needed to examine how different metabolic costs affect the dynamics of task-optimized networks.

We build on several previous studies that have also analyzed learning-related changes in PFC activity (Wójcik, 2023; Reinert et al., 2021; Durstewitz et al., 2010; Bartolo et al., 2020) – although these studies typically used reversal-learning paradigms in which animals are already highly task proficient and the effects of learning and task switching are inseparable. For example, in a rule-based categorization task in which the categorization rule changed after learning an initial rule, neurons in mouse PFC adjusted their selectivity depending on the rule such that currently irrelevant information was not represented (Reinert et al., 2021). Similarly, neurons in rat PFC transition rapidly from representing a familiar rule to representing a completely novel rule through trial-and-error learning (Durstewitz et al., 2010). Additionally, the dimensionality of PFC representations was found to increase as monkeys learned the value of novel stimuli (Bartolo et al., 2020). Importantly, however, PFC representations did not distinguish between novel stimuli when they were first chosen. It was only once the value of the stimuli were learned that their representations in PFC were distinguishable (Bartolo et al., 2020). These results are consistent with our results where we found poor XOR decoding during the early stages of learning which then increased over learning as the monkeys learned the rewarded conditions (Figure 3a, far left). However, we also observed high decoding of width during early learning which was not predictive of reward (Figure 3a, far right). One key distinction between our study and that of Bartolo et al., 2020, is that our recordings commenced from the first trial the monkeys were exposed to the task. In contrast, in Bartolo et al., 2020, the monkeys were already highly proficient at the task and so the neural representation of their task was already likely strongly task specific by the time recordings were taken.

In line with our approach here, several recent studies have also examined the effects of different hyperparameter settings on the solution that optimized networks exhibit. One study found that decreasing regularization on network weights led to more sequential dynamics in networks optimized to perform working memory tasks (Orhan and Ma, 2019). Another study found that the number of functional clusters that a network exhibits does not depend strongly on the strength of (L1 rate or weight) regularization, but did depend upon whether the single neuron nonlinearity saturates at high firing rates (Yang et al., 2019). It has also been shown that networks optimized to perform path integration can exhibit a range of different properties, from grid cell-like receptive fields to distinctly non grid cell-like receptive fields, depending upon biologically relevant hyperparameters – including noise and regularization (Whittington et al., 2022; Cueva and Wei, 2018; Schaeffer et al., 2022). Indeed, in addition to noise and regularization, various other hyperparameters have also been shown to affect the representational strategy used by a circuit, such as the firing rate nonlinearity (Yang et al., 2019; Whittington et al., 2022; Schaeffer et al., 2022) and network weight initialization (Flesch et al., 2022; Schaeffer et al., 2022). It is therefore becoming increasingly clear that analyzing the interplay between learning and biological constraints will be key for understanding the computations that various brain regions perform.

Methods

Experimental materials and methods

Experimental methods have been described previously (Wójcik, 2023). The experiments were conducted in line with the Animals (Scientific Procedures) Act 1986 of the UK and licensed by a Home Office Project License obtained after review by Oxford University’s Animal Care and Ethical Review committee. The procedures followed the standards set out in the European Community for the care and use of laboratory animals (EUVD, European Union directive 86/609/EEC). Briefly, two adult male rhesus macaques (monkey 1 and monkey 2) performed a passive object–association task (Figure 1a and b; see the main text ‘A task involving relevant and irrelevant stimuli’ for a description of the task). Neural recordings commenced from the first session the animals were exposed to the task. All trials with fixation errors were excluded. The dataset contained on average 237.9 (s.d. = 23.9) and 104.8 (s.d. = 2.3) trials for each of the eight conditions for monkeys 1 and 2, respectively. Data were recorded from the ventral and dorsal lPFC over a total of 27 daily sessions across both monkeys which yielded 146 and 230 neurons for monkey 1 and monkey 2, respectively. To compute neural firing rates, we convolved binary spike trains with a Gaussian kernel with a standard deviation of 50 ms. In order to characterize changes in neural dynamics over learning, analyses were performed separately on the first half of sessions (‘early learning’; 9 and 5 sessions from monkey 1 and monkey 2, respectively) and the second half of sessions (‘late learning’; 8 and 5 sessions from monkey 1 and monkey 2, respectively; Figures 3—5 and Figure 3—figure supplement 1a). This experiment was only performed once in these two animals.

Neural network models

The dynamics of our simulated networks evolved according to Equations 1 and 2 and are repeated here:

where corresponds to the vector of ‘sub-threshold’ activities of the neurons in the network, is their momentary firing rates, obtained as a rectified linear function (ReLU) of their sub-threshold activities (Equation 6; except for the networks of Figure 5—figure supplement 2 in which we used a nonlinearity to examine the generalizability of our results), τ=50 ms is the effective time constant, is the recurrent weight matrix, denotes the total stimulus input, is a stimulus-independent bias, σ is the standard deviation of the neural noise process, is a sample from a Gaussian white noise process with mean 0 and variance 1, , and are color, shape, and width input weights, respectively, and , and are one-hot encodings of color, shape, and width inputs, respectively.

All simulations started at s and lasted until s, and consisted of a fixation (–0.5 to 0 s), color (0–0.5 s), shape (0.5–1 s), and reward (1–1.5 s) period (Figures 1a and 2a). The initial condition of neural activity was set to . In line with the task, elements of were set to 0 outside the color and shape periods, and elements of both and were set to 0 outside the shape period. All networks used N=50 neurons (except for Figure 5a–d and Figure 5—figure supplement 1a and b which used N=2 neurons). We solved the dynamics of Equations 5–7 using a first-order Euler–Maruyama approximation with a discretization time step of 1 ms.

Network optimization

Choice probabilities were computed through a linear readout of network activities:

where are the readout weights and is a readout bias. To measure network performance, we used a canonical cost function (Yang et al., 2019; Orhan and Ma, 2019; Driscoll et al., 2022; Song et al., 2016; Stroud et al., 2021; Masse et al., 2019; Equations 3 and 4). We repeat the cost function from the main text here:

where the first term is a task performance term which consists of the cross-entropy loss between the one-hot encoded target choice, (based on the stimuli of the given trial, as defined by the task rules, Figure 1b), and the network’s readout probabilities, (Equation 8). Note that we measure total classification performance (cross-entropy loss) during the reward period (integral in the first term runs from t=1.0 to t=1.5; Figure 2a, bottom; yellow shading), as appropriate for the task. The second term in Equation 9 is a widely used (Yang et al., 2019; Whittington et al., 2022; Sussillo et al., 2015; Orhan and Ma, 2019; Kao et al., 2021; Driscoll et al., 2022; Song et al., 2016; Stroud et al., 2021; Masse et al., 2019) L2 regularization term (with strength λ) applied to the neural firing rates throughout the trial (integral in the second term runs from to t=1.5).

We initialized the free parameters of the network (the elements of , and ) by sampling (independently) from a normal distribution of mean 0 and standard deviation . There were two exceptions to this: we also investigated the effects of initializing the elements of the input weights () to either 0 (Figure 3—figure supplement 2) or sampling their elements from a normal distribution of mean 0 and standard deviation (Figure 3—figure supplement 3). After initialization, we optimized these parameters using gradient descent with Adam (Kingma and Ba, 2014), where gradients were obtained from backpropagation through time. We used a learning rate of 0.001 and trained networks for 1000 iterations using a batch size of 10. For each noise σ and regularization level λ (see Table 1), we optimized 10 networks with different random initializations of the network parameters.

Analysis methods

Here, we describe methods that we used to analyze neural activities. No data were excluded from our analyses. Whenever applicable, the same processing and analysis steps were applied to both experimentally recorded and model simulated data. All neural firing rates were sub-sampled at a 10 ms resolution and, unless stated otherwise, we did not trial-average firing rates before performing analyses. Analyses were either performed at every time point in the trial (Figures 2b and 3a, b, Figure 5a–c, Figure 2—figure supplement 1, Figure 3—figure supplements 1–3, and Figure 5—figure supplement 1a and b), at the end of either the color (Figure 2c and d, and Figure 5—figure supplement 1c; ‘early color decoding’) or shape periods (Figure 2c and d, ‘width decoding’), during time periods of significant changes in decoding over learning in the data (Figure 3c and d), during the final 100 ms of the shape period (Figure 4e–i), or during the final 100 ms of the fixation, color, and shape periods (Figure 5f and Figure 5—figure supplement 1d).

Linear decoding

For decoding analyses (Figures 2b–d–3, Figure 2—figure supplement 1, Figure 3—figure supplements 1–3, and Figure 5—figure supplement 1a–c), we fitted decoders using linear support vector machines to decode the main task variables: color, shape, width, and the XOR between color and shape. We measured decoding performance in a cross-validated way, using separate sets of trials to train and test the decoders, and we show results averaged over 10 random 1:1 train:test splits. For firing rates resulting from simulated neural networks, we used 10 trials for both the train and test splits. Chance level decoding was always 0.5 as all stimuli were binary.

For showing changes in decoding over learning (Figure 3c and d), we identified time periods of significant changes in decoding during the color and shape periods in the data (Figure 3a, horizontal black bars; see Statistics), and show the mean change in decoding during these time periods for both the data and models (Figure 3c, horizontal black lines). We used the same time periods when showing changes in cross-generalized decoding over learning (Figure 3d, see below).

For cross-generalized decoding (Bernardi et al., 2020; Figure 3d and Figure 3—figure supplement 1), we used the same decoding approach as described above, except that cross-validation was performed across trials corresponding to different stimulus conditions. Specifically, following the approach outlined in Bernardi et al., 2020, because there were three binary stimuli in our task (color, shape, and width), there were eight different trial conditions (Figure 1b). Therefore, for each task variable (we focused on color, shape, width, and the XOR between color and shape), there were six different ways of choosing two of the four conditions corresponding to each of the two possible stimuli for that task variable (e.g. color 1 vs. color 2). For example, when training a decoder to decode color, there were six different ways of choosing two conditions corresponding to color 1, and six different ways of choosing two conditions corresponding to color 2 (the remaining four conditions were then used for testing the decoder). Therefore, for each task variable, there were 6×6 = 36 different ways of creating training and testing sets that corresponded to different stimulus conditions. We then took the mean decoding accuracy we obtained across all 36 different training and testing sets.

Measuring stimulus coding strength

In line with our mathematical analysis (Mathematical analysis of relevant and irrelevant stimulus coding in a linear network, and in particular Equation S1) and in line with previous studies (Mante et al., 2013; Dubreuil et al., 2022), to measure the strength of stimulus coding for relevant and irrelevant stimuli, we fitted the following linear regression model