A unified internal model theory to resolve the paradox of active versus passive self-motion sensation

Abstract

Brainstem and cerebellar neurons implement an internal model to accurately estimate self-motion during externally generated (‘passive’) movements. However, these neurons show reduced responses during self-generated (‘active’) movements, indicating that predicted sensory consequences of motor commands cancel sensory signals. Remarkably, the computational processes underlying sensory prediction during active motion and their relationship to internal model computations during passive movements remain unknown. We construct a Kalman filter that incorporates motor commands into a previously established model of optimal passive self-motion estimation. The simulated sensory error and feedback signals match experimentally measured neuronal responses during active and passive head and trunk rotations and translations. We conclude that a single sensory internal model can combine motor commands with vestibular and proprioceptive signals optimally. Thus, although neurons carrying sensory prediction error or feedback signals show attenuated modulation, the sensory cues and internal model are both engaged and critically important for accurate self-motion estimation during active head movements.

https://doi.org/10.7554/eLife.28074.001

eLife digest

When seated in a car, we can detect when the vehicle begins to move even with our eyes closed. Structures in the inner ear called the vestibular, or balance, organs enable us to sense our own movement. They do this by detecting head rotations, accelerations and gravity. They then pass this information on to specialized vestibular regions of the brain.

Experiments using rotating chairs and moving platforms have shown that passive movements – such as car journeys and rollercoaster rides – activate the brain’s vestibular regions. But recent work has revealed that voluntary movements – in which individuals start the movement themselves – activate these regions far less than passive movements. Does this mean that the brain ignores signals from the inner ear during voluntary movements? Another possibility is that the brain predicts in advance how each movement will affect the vestibular organs in the inner ear. It then compares these predictions with the signals it receives during the movement. Only mismatches between the two activate the brain’s vestibular regions.

To test this theory, Laurens and Angelaki created a mathematical model that compares predicted signals with actual signals in the way the theory proposes. The model accurately predicts the patterns of brain activity seen during both active and passive movement. This reconciles the results of previous experiments on active and passive motion. It also suggests that the brain uses similar processes to analyze vestibular signals during both types of movement.

These findings can help drive further research into how the brain uses sensory signals to refine our everyday movements. They can also help us understand how people recover from damage to the vestibular system. Most patients with vestibular injuries learn to walk again, but have difficulty walking on uneven ground. They also become disoriented by passive movement. Using the model to study how the brain adapts to loss of vestibular input could lead to new strategies to aid recovery.

https://doi.org/10.7554/eLife.28074.002

Introduction

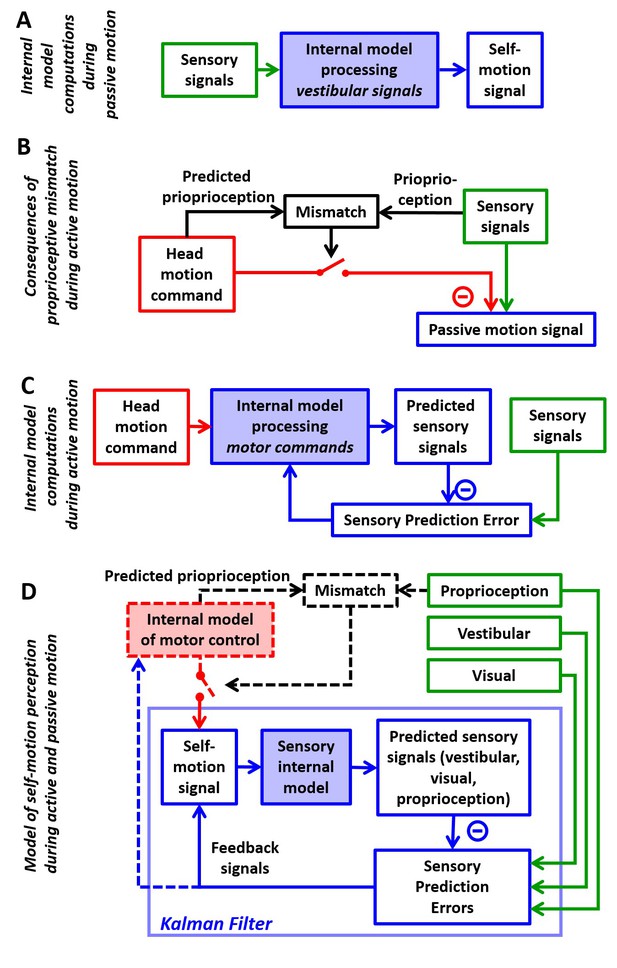

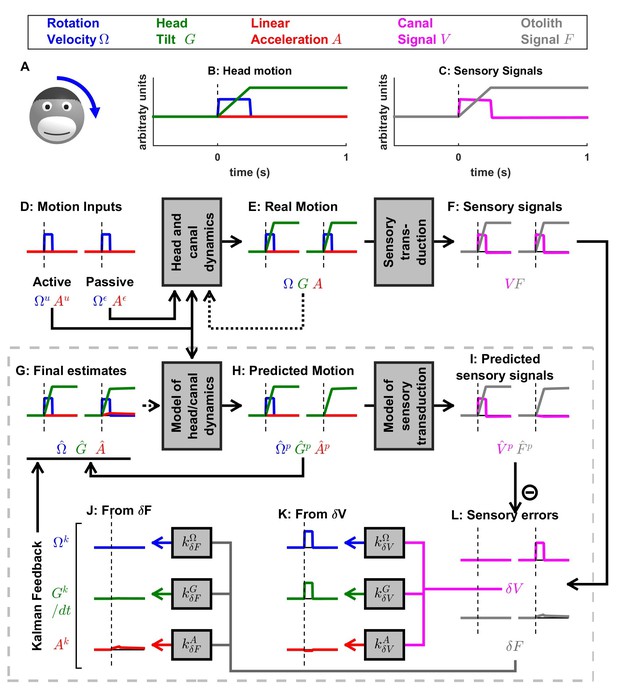

For many decades, research on vestibular function has used passive motion stimuli generated by rotating chairs, motion platforms or centrifuges to characterize the responses of the vestibular motion sensors in the inner ear and the subsequent stages of neuronal processing. This research has revealed elegant computations by which the brain uses an internal model to overcome the dynamic limitations and ambiguities of the vestibular sensors (Figure 1A; Mayne, 1974; Oman, 1982; Borah et al., 1988; Glasauer, 1992; Merfeld, 1995; Glasauer and Merfeld, 1997; Bos et al., 2001; Zupan et al., 2002; Laurens, 2006; Laurens and Droulez, 2007; Laurens and Droulez, 2008; Laurens and Angelaki, 2011; Karmali and Merfeld, 2012; Lim et al., 2017). These computations are closely related to internal model mechanisms that underlie motor control and adaptation (Wolpert et al., 1995; Körding and Wolpert, 2004; Todorov, 2004; Chen-Harris et al., 2008; Berniker et al., 2010; Berniker and Kording, 2011; Franklin and Wolpert, 2011; Saglam et al., 2011; 2014). Neuronal correlates of the internal model of self-motion have been identified in brainstem and cerebellum (Angelaki et al., 2004; Shaikh et al., 2005; Yakusheva et al., 2007, 2008, 2013, Laurens et al., 2013a, 2013b).

Internal model computations for self-motion estimation.

(A) Previous studies based on passive stimuli have proposed that vestibular sensory signals are processed by an internal model to compute optimal estimates of self-motion. (B) Other studies (Roy and Cullen, 2004) have shown that the cancellation of central vestibular responses during active motion is gated by mismatches between predicted and actual neck proprioceptive signals, and interpreted central vestibular responses as a passive motion signal. (C) Brooks and Cullen (Brooks et al., 2015; Brooks and Cullen, 2013) have proposed that an internal model processes motor commands to compute sensory predictions during active motion and that central vestibular neurons encode sensory prediction errors. (D) Framework proposed in this study, in which the internal models in (A) and (C) are in fact identical and interactions between head motion commands and sensory signals are modeled as a Kalman filter (blue) that computes optimal self-motion estimates during both passive and active motions. For simplicity, we have not included how head motion commands are generated (red), how head movements are executed, as well as the contribution of feedback to error correction and motor learning (dashed blue arrow). In line with B, the proprioceptive gating mechanism in D is shown as a switch controlling the transmission of head motion commands to the Kalman filter. Solid lines in (D): computations modeled as a Kalman filter. Broken lines in (D): additional computations that are only discussed (but not modeled) in the present study. Note that the ‘self-motion signal’ box in D stands for both the predicted motion and final self-motion estimate (Figure 1—figure supplement 2).

In the past decade, a few research groups have also studied how brainstem and cerebellar neurons modulate during active, self-generated head movements. Strikingly, several types of neurons, well-known for responding to vestibular stimuli during passive movement, lose or reduce their sensitivity during self-generated movement (Gdowski et al., 2000; Gdowski and McCrea, 1999; Marlinski and McCrea, 2009; McCrea et al., 1999; McCrea and Luan, 2003; Roy and Cullen, 2001; 2004; Brooks and Cullen, 2009; 2013; 2014; Brooks et al., 2015; Carriot et al., 2013). In contrast, vestibular afferents respond indiscriminately to active and passive stimuli (Cullen and Minor, 2002; Sadeghi et al., 2007; Jamali et al., 2009). These properties resemble sensory prediction errors in other sensorimotor functions such as fish electrosensation (Requarth and Sawtell, 2011; Kennedy et al., 2014) and motor control (Tseng et al., 2007; Shadmehr et al., 2010). Yet, a consistent quantitative account has been lacking. Initial experiments and reviews implicated proprioceptive switches (Figure 1B; Roy and Cullen, 2004; Cullen et al., 2011; Cullen, 2012; Carriot et al., 2013; Brooks and Cullen, 2014). More recently, elegant experiments by Brooks and colleagues (Brooks and Cullen, 2013; Brooks et al., 2015) suggested that the brain predicts how self-generated motion activates the vestibular organs and subtracts these predictions from afferent signals to generate sensory prediction errors (Figure 1C). However, the computational processes underlying this sensory prediction have remained unclear.

Confronting the findings of studies utilizing passive and active motion stimuli leads to a paradox: central vestibular neurons encode self-motion signals computed by feeding vestibular signals through an internal model during passive motion (Figure 1A), but during active motion, efference copies of motor commands, also transformed by an internal model (Figure 1C), attenuate the responses of the same neurons. Thus, a highly influential interpretation is that the elaborate internal model characterized with passive stimuli would only be useful in situations that involve unexpected (passive) movements but would be unused during normal activities, because either its input or its output (Figure 1—figure supplement 1) would be suppressed during active movement. Here, we propose an alternative: the internal model that processes vestibular signals (Figure 1A) and the internal model that generates sensory predictions during active motion (Figure 1C) are identical. In support of this theory, we show that the processing of motor commands must involve an internal model of the physical properties of the vestibular sensors, identical to the computations described during passive motion; otherwise, accurate self-motion estimation would be severely compromised during actively generated movements.

The essence of the theory developed previously for passive movements is that the brain uses an internal representation of the laws of physics and sensory dynamics (which has been elegantly modeled as forward internal models of the sensors) to process vestibular signals. In contrast, although it is understood that transforming head motor commands into sensory predictions is likely to also involve internal models, no explicit mathematical implementation has ever been proposed for explaining the response attenuation in central vestibular areas. A survey of the many studies by Cullen and colleagues even questions the origin and function of the sensory signals canceling vestibular afferent activity, as early studies emphasized a critical role of neck proprioception in gating the cancellation signal (Figure 1B, Roy and Cullen, 2004), whereas follow-up studies proposed that the brain computes sensory prediction errors, without ever specifying whether the implicated forward internal models involve vestibular or proprioceptive cues (Figure 1C, Brooks et al., 2015). This lack of quantitative analysis has obscured the simple solution, which is that transforming motor commands into sensory predictions requires exactly the same forward internal model that has been used to model passive motion. We show that all previous experimental findings during both active and passive movements can be explained by a single sensory internal model that is used to generate optimal estimates of self-motion (Figure 1D, ‘Kalman filter’). Because we focus on sensory predictions and self-motion estimation, we do not model in detail the motor control aspects of head movements and we consider the proprioception gating mechanism as a switch external to the Kalman filter, similar to previous studies (Figure 1D, black dashed lines and red switch).

We use the framework of the Kalman filter (Figure 1D; Figure 1—figure supplement 2; Kalman, 1960), which represents the simplest and most commonly used mathematical technique to implement statistically optimal dynamic estimation and explicitly computes sensory prediction errors. We build a quantitative Kalman filter that integrates motion signals originating from motor, canal, otolith, vision and neck proprioceptor signals during active and passive rotations, tilts and translations. We show how the same internal model must process both active and passive motion stimuli, and we provide quantitative simulations that reproduce a wide range of behavioral and neuronal responses, while simultaneously demonstrating that the alternative models (Figure 1—figure supplement 1) do not. These simulations also generate testable predictions, in particular which passive stimuli should induce sensory errors and which should not, that may motivate future studies and guide interpretation of experimental findings. Finally, we summarize these internal model computations into a schematic diagram, and we discuss how various populations of brainstem and cerebellar neurons may encode the underlying sensory error or feedback signals.

Results

Overview of Kalman filter model of head motion estimation

The structure of the Kalman filter in Figure 1D is shown in greater detail in Figure 1—figure supplement 2 and described in Materials and methods. In brief, a Kalman filter (Kalman, 1960) is based on a forward model of a dynamical system, defined by a set of state variables that are driven by their own dynamics, motor commands and internal or external perturbations. A set of sensors, grouped in a variable $S$, provide sensory signals that reflect a transformation of the state variables. Note that $S$ may provide ambiguous or incomplete information, since some sensors may measure a mixture of state variables, and some variables may not be measured at all.

The Kalman filter uses the available information to track an optimal internal estimate of the state variable $X$. At each time $t$, the Kalman filter computes a preliminary estimate (also called a prediction, $\hat{X}^p(t)$) and a corresponding predicted sensory signal $\hat{S}^p(t)$. In general, the resulting state estimate $\hat{X}^p(t)$ and the predicted sensory signal $\hat{S}^p(t)$ may differ from the real values $X(t)$ and $S(t)$. These errors are reduced using sensory information, as follows (Figure 1—figure supplement 2B): First, the prediction $\hat{S}^p(t)$ and the sensory input $S(t)$ are compared to compute a sensory error $\delta S(t) = S(t) - \hat{S}^p(t)$. Second, sensory errors are transformed into a feedback $X^k(t) = K \, \delta S(t)$, where $K$ is a matrix of feedback gains, whose dimensionality depends on both the state variable and the sensory inputs. Thus, an improved estimate at time $t$ is $\hat{X}(t) = \hat{X}^p(t) + X^k(t)$. The feedback gain matrix $K$ determines how sensory errors improve the final estimate (see Supplementary methods, ‘Kalman filter algorithm’ for details).
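The correction step described above (prediction, sensory error, feedback, improved estimate) can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the study: the function name `kalman_correct`, the toy two-state system and the gain values are all hypothetical, and the gain matrix is supplied by hand rather than computed by the Kalman filter algorithm.

```python
def kalman_correct(x_pred, s, predict_sensors, gain):
    """One correction step: prediction -> sensory error -> feedback -> estimate."""
    s_pred = predict_sensors(x_pred)                       # predicted sensory signal
    error = [si - spi for si, spi in zip(s, s_pred)]       # sensory error
    feedback = [sum(k * e for k, e in zip(row, error))     # feedback = gain . error
                for row in gain]
    x_est = [xp + fb for xp, fb in zip(x_pred, feedback)]  # improved estimate
    return x_est, error, feedback


# Hypothetical toy system: two state variables and one sensor that measures
# their difference, so the sensor alone cannot identify both variables.
def predict_sensors(x):
    return [x[0] - x[1]]


K = [[0.9], [0.1]]  # illustrative gains (one row per state variable), chosen by hand

# Prediction is zero but the sensor reads 1.0: the error drives the estimate.
x_est, error, feedback = kalman_correct([0.0, 0.0], [1.0], predict_sensors, K)

# Prediction already explains the sensor reading: no error, no feedback.
x_same, error_same, feedback_same = kalman_correct([1.0, 0.0], [1.0], predict_sensors, K)
```

In the second call the sensory error is zero, so the final estimate equals the prediction, which is the situation the text associates with unperturbed active motion.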

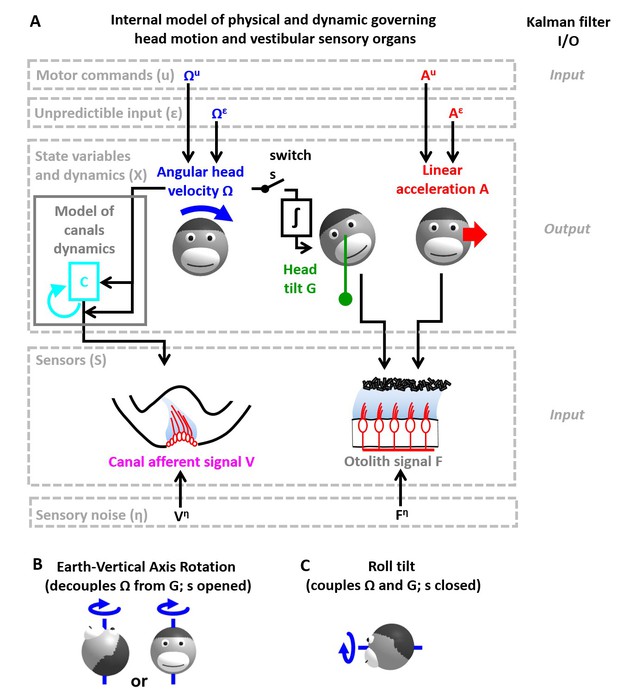

Figure 2 applies this framework to the problem of estimating self-motion (rotation, tilt and translation) using vestibular sensors, with two types of motor commands: angular velocity ($\Omega^u$) and translational acceleration ($A^u$), with corresponding unpredicted inputs, $\Omega^\varepsilon$ and $A^\varepsilon$ (Figure 2A) that represent passive motion or motor error (see Discussion: ‘Role of the vestibular system during active motion: fundamental, ecological and clinical implications’). The sensory signals ($S$) we consider initially encompass the semicircular canals (rotation sensors that generate a sensory signal $V$) and the otolith organs (linear acceleration sensors that generate a sensory signal $F$) – proprioception is also added in subsequent sections. Each of these sensors has distinct properties, which can be accounted for by the internal model of the sensors. The semicircular canals exhibit high-pass dynamic properties, which are modeled by another state variable $C$ (see Supplementary methods, ‘Model of head motion and vestibular sensors’). The otolith sensors exhibit negligible dynamics, but are fundamentally ambiguous: they sense gravitational as well as linear acceleration – a fundamental ambiguity resulting from Einstein’s equivalence principle [Einstein, 1907; modeled here as $G$ and $A$; note that $G$ and $A$ are expressed in comparable units; see Materials and methods; 'Simulation parameters']. Thus, in total, the state variable $X$ has 4 degrees of freedom (Figure 2A): angular velocity $\Omega$ and linear acceleration $A$ (which are the input/output variables directly controlled), as well as $C$ (a hidden variable that must be included to model the dynamics of the semicircular canals) and tilt position $G$ (another hidden variable that depends on rotations $\Omega$, necessary to model the sensory ambiguity of the otolith organs).
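The otolith ambiguity described above can be illustrated with a short sketch. It assumes, for illustration only, that the otolith signal is the sum of tilt and linear acceleration expressed in comparable units, and that the canal signal is angular velocity minus the hidden canal state; the function names, the sign conventions and the numerical values are hypothetical.

```python
def canal_signal(omega, c):
    """Canal signal: angular velocity minus the hidden canal state (assumed form)."""
    return omega - c


def otolith_signal(g, a):
    """Otolith signal: tilt plus linear acceleration, in comparable units (assumed form)."""
    return g + a


# Two hypothetical situations that activate the otoliths identically.
tilt = {"omega": 0.5, "c": 0.0, "g": 0.1, "a": 0.0}         # head rotating into a tilt
translation = {"omega": 0.0, "c": 0.0, "g": 0.0, "a": 0.1}  # pure linear acceleration

f_tilt = otolith_signal(tilt["g"], tilt["a"])
f_translation = otolith_signal(translation["g"], translation["a"])

v_tilt = canal_signal(tilt["omega"], tilt["c"])
v_translation = canal_signal(translation["omega"], translation["c"])
```

The two otolith signals are equal while the canal signals differ, which is why the model needs the tilt state and the canal signal together to separate tilt from translation.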

Application of the Kalman filter algorithm to optimal self-motion estimation using an internal model with four state variables and two vestibular sensors.

(A) Schematic diagram of the model. Inputs to the model include motor commands, unexpected perturbations, as well as sensory signals. Motor commands during active movements, that is angular velocity ($\Omega^u$) and translational acceleration ($A^u$), are known by the brain. Unpredicted internal or external factors such as external (passive) motion are modeled as variables $\Omega^\varepsilon$ and $A^\varepsilon$. The state variable $X$ has 4 degrees of freedom: angular velocity $\Omega$, tilt position $G$, linear acceleration $A$ and a hidden variable $C$ used to model the dynamics of the semicircular canals (see Materials and methods). Two sensory signals are considered: semicircular canals (rotation sensors that generate a signal $V$) and the otolith organs (linear acceleration sensors that generate a signal $F$). Sensory noise ($V^\eta$ and $F^\eta$) is illustrated here but omitted from all simulations for simplicity. (B, C) Illustration of rotations around earth-vertical (B) and earth-horizontal (C) axes.

The Kalman filter computes optimal estimates $\hat{\Omega}$, $\hat{G}$, $\hat{A}$ and $\hat{C}$ based on motor commands and sensory signals. Note that we do not introduce any tilt motor command, as tilt is assumed to be controlled only indirectly through rotation commands ($\Omega^u$). For simplicity, we restrict self-motion to a single axis of rotation (e.g. roll) and a single axis of translation (inter-aural). The model can simulate either rotations in the absence of head tilt (e.g. rotations around an earth-vertical axis: EVAR, Figure 2B) or tilt (Figure 2C, where tilt is the integral of rotation velocity, $G = \int \Omega \, dt$) using a switch (but see Supplementary methods, ‘Three-dimensional Kalman filter’ for a 3D model). Sensory errors are used to correct internal motion estimates using the Kalman gain matrix, such that the Kalman filter as a whole performs optimal estimation. In theory, the Kalman filter includes a total of eight feedback signals, corresponding to the combination of two sensory (canal and otolith) errors and four internal states ($\Omega$, $G$, $A$ and $C$). From those eight feedback signals, two are always negligible (Table 2; see also Supplementary methods, ‘Kalman feedback gains’).

We will show how this model performs optimal estimation of self-motion using motor commands and vestibular sensory signals in a series of increasingly complex simulations. We start with a very short (0.2 s) EVAR stimulus, where canal dynamics are negligible (Figure 3), followed by a longer EVAR that highlights the role of an internal model of the canals (Figure 4). Next, we consider the more complex tilt and translation movements that require all four state variables to demonstrate how canal and otolith errors interact to disambiguate otolith signals (Figures 5 and 6). Finally, we extend our model to simulate independent movement of the head and trunk by incorporating neck proprioceptive sensory signals (Figure 7). For each motion paradigm, identical active and passive motion simulations will be shown side by side in order to demonstrate how the internal model integrates sensory information and motor commands. We show that the Kalman feedback plays a preeminent role, which explains why lots of brain machinery is devoted to its implementation (see Discussion). For convenience, all mathematical notations are summarized in Table 1. For Kalman feedback gain nomenclature and numerical values, see Table 2.

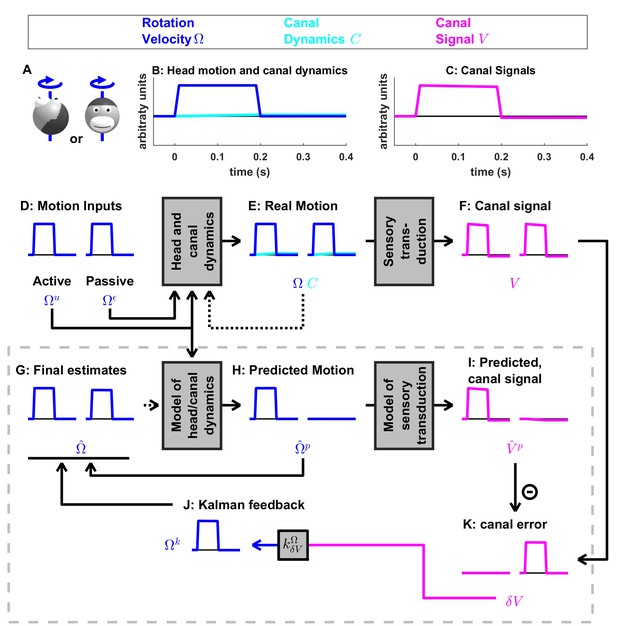

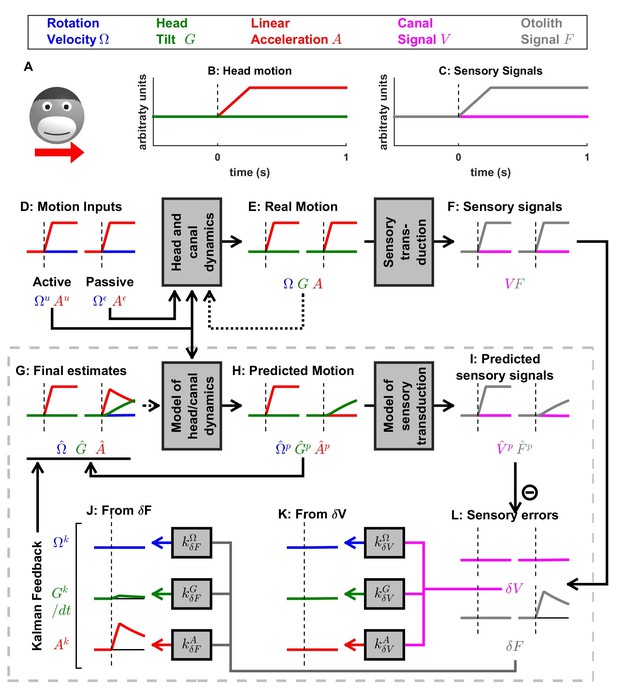

Short duration rotation around an earth-vertical axis (as in Figure 2B).

(A) Illustration of the stimulus, lasting 200 ms. (B,C) Time course of motion variables and sensory (canal) signals. (D–K) Simulated variables during active (left panels) and passive motion (right panels). Only the angular velocity state variable $\Omega$ is shown (tilt position $G$ and linear acceleration $A$ are not considered in this simulation, and the hidden variable $C$ is equal to zero). Continuous arrows represent the flow of information during one time step, and broken arrows the transfer of information from one time step to the next. (J) Kalman feedback. For clarity, the Kalman feedback is shown during passive motion only (it is always zero during active movements in the absence of any perturbation and noise). The box defined by dashed gray lines illustrates the Kalman filter computations. For the rest of mathematical notations, see Table 1.

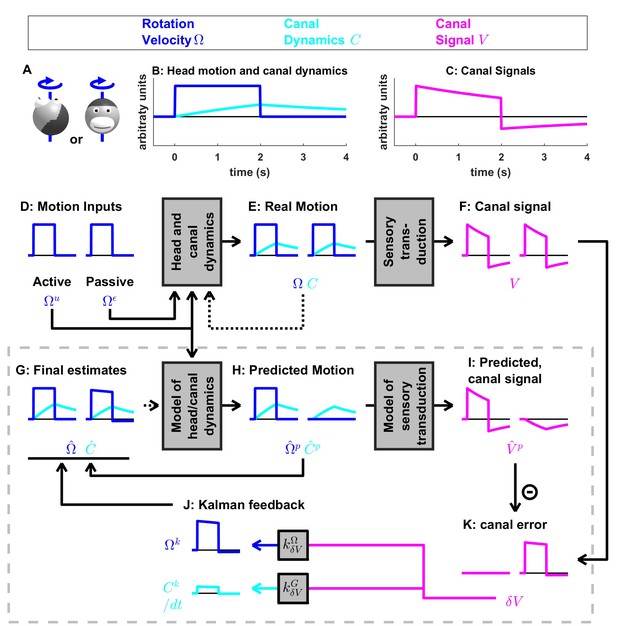

Medium-duration rotation around an earth-vertical axis, demonstrating the role of the internal model of canal dynamics.

(A) Illustration of the stimulus lasting 2 s. (B,C) Time course of motion variables and sensory (canal) signals. (D–K) Simulated variables during active (left panels) and passive motions (right panels). Two state variables are shown: the angular velocity $\Omega$ (blue) and canal dynamics $C$ (cyan). Continuous arrows represent the flow of information during one time step, and broken arrows the transfer of information from one time step to the next. (J) Kalman feedback. For clarity, the Kalman feedback (reflecting feedback from the canal error signal to the two state variables) is shown during passive motion only (it is always zero during active movements in the absence of any perturbation and noise). All simulations use a canal time constant of 4 s. Note that, because of the integration, the illustrated feedback is scaled by a factor $1/\delta t$; see Supplementary methods, ‘Kalman feedback gains’. The box defined by dashed gray lines illustrates the Kalman filter computations. For the rest of mathematical notations, see Table 1.

Simulation of short duration head tilt.

(A) Illustration of the stimulus lasting 0.2 s. (B,C) Time course of motion variables and sensory (canal and otolith) signals. (D–L) Simulated variables during active (left panels) and passive motions (right panels). Three state variables are shown: the angular velocity $\Omega$ (blue), tilt position $G$ and linear acceleration $A$. Continuous arrows represent the flow of information during one time step, and broken arrows represent the transfer of information from one time step to the next. (J, K) Kalman feedback (shown during passive motion only). Two error signals ($\delta V$: canal error; $\delta F$: otolith error) are transformed into feedback to state variables $\Omega^k$: blue, $G^k$: green, $A^k$: red (variable $C^k$ is not shown, but see Figure 5—figure supplement 1 for simulations of a 2-s tilt). Feedback originating from $\delta V$ is shown in (J) and from $\delta F$ in (K). The feedback to $G$ is scaled by a factor $1/\delta t$ (see Supplementary methods, ‘Kalman feedback gains’). Note that in this simulation we consider an active ($\Omega^u$) or passive ($\Omega^\varepsilon$) rotation velocity as input. The tilt itself is a consequence of the rotation, and not an independent input. The box defined by dashed gray lines illustrates the Kalman filter computations. For the rest of mathematical notations, see Table 1.

Simulation of short duration translation.

Same legend as Figure 5. Note that the otolith signal $F$ is identical in Figures 5 and 6: in terms of sensory inputs, these simulations differ only in the canal signal.

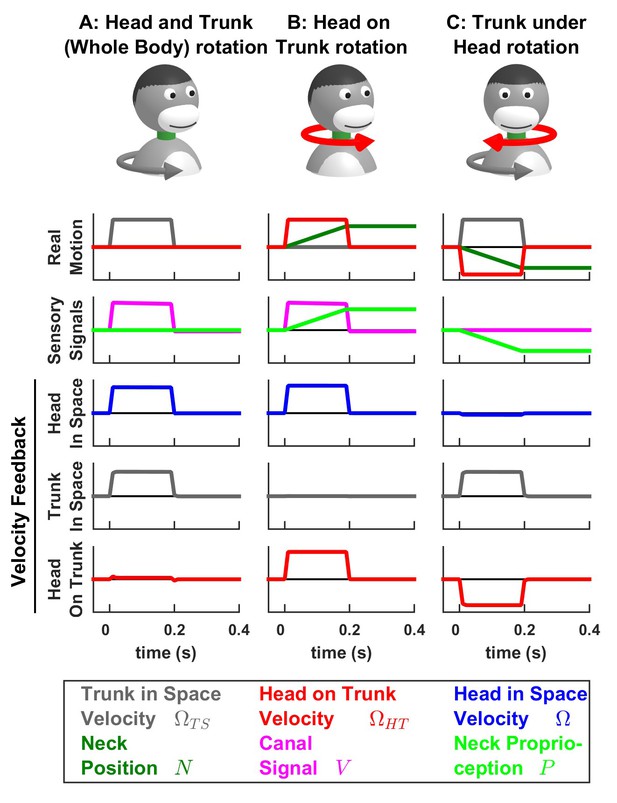

Simulations of passive trunk and head movements.

We use a variant of the Kalman filter model (see Supplementary methods) that tracks the velocity of both head and trunk (trunk in space: gray; head in space: blue; head on trunk: red) based on semicircular canal and neck proprioception signals. The real motion (first line), sensory signals (second line) and velocity feedback signals (third to fifth lines) are shown during (A) passive whole head and trunk rotation, (B) passive head on trunk rotation, and (C) passive trunk under head rotation. See Figure 7—figure supplement 1–3 for other variables and simulations of active motion.

List of motion variables and mathematical notations.

https://doi.org/10.7554/eLife.28074.026

| Symbol | Meaning |
|---|---|
| Motion variables | |
| $\Omega$ | Head rotation velocity (in space) |
| $G$ | Head tilt |
| $A$ | Linear acceleration |
| $C$ | Canal dynamics |
| $\Omega_{TS}$ | Trunk in space rotation velocity (variant of the model) |
| $\Omega_{HT}$ | Head on trunk rotation velocity (variant of the model) |
| $N$ | Neck position (variant of the model) |
| $X$ | Matrix containing all motion variables in a model |
| Sensory variables | |
| $V$ | Semicircular canal signal |
| $F$ | Otolith signal |
| $P$ | Neck proprioceptive signal |
| $Vis$ | Visual rotation signal |
| $S$ | Matrix containing all sensory variables in a model |
| Accents and superscripts (motion variables) | |
| $X$ | Real value of a variable |
| $\hat{X}$ | Final estimate |
| $\hat{X}^p$ | Predicted (or preliminary) estimate |
| $X^u$ | Motor command affecting the variable |
| $X^\varepsilon$ | Perturbation or motor error affecting the variable (standard deviation $\sigma_X$) |
| $\sigma_X$ | Standard deviation of $X^\varepsilon$ |
| $X^k$ | Kalman feedback on the variable |
| Accents and superscripts (sensory variables) | |
| $S$ | Real value of a variable |
| $\hat{S}^p$ | Predicted value |
| $S^\eta$ | Sensory noise |
| $\sigma_S$ | Standard deviation of $S^\eta$ |
| $\delta S$ | Sensory error |
| $k^S_X$ | Kalman gain of the feedback from $S$ to a motion variable $X$ |
| Other | |
| $\delta t$ | Time step used in the simulations |
| $M^T$ | Transpose of a matrix $M$ |
| $\tau_c$ | Time constant of the semicircular canals |

Kalman feedback gains during EVAR and tilt/translation.

Some feedback gains are constant, independent of $\delta t$, while others scale with $\delta t$ (see Supplementary methods, ‘Feedback gains’ for explanations). Gains that have negligible impact on the motion estimates are indicated in normal fonts, others with profound influence are indicated in bold. The feedback gains transform error signals into feedback signals.

| Feedback type | Gain | Gains during EVAR | Gains during tilt | Notes |
|---|---|---|---|---|
| Canal feedbacks | $k^V_\Omega$ | 0.94 | 0.94 | |
| | $k^V_C$ | 0.19 $\delta t$ | 0.23 $\delta t$ | Integrated over time |
| | $k^V_G$ | 0.00 | 0.90 $\delta t$ | |
| | $k^V_A$ | 0.00 | -0.90 $\delta t$ | Negligible |
| Otolith feedbacks | $k^F_\Omega$ | - | 0.00 | Negligible |
| | $k^F_C$ | - | 0.14 $\delta t$ | Integrated over time |
| | $k^F_G$ | - | 0.76 $\delta t$ | |
| | $k^F_A$ | - | 0.99 | |

Kalman feedback gains during head and neck rotation.

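As in Table 2, some feedback gains are constant and independent of $\delta t$, while some others scale with or inversely to $\delta t$ (see Supplementary methods, ‘Feedback gains of the model of head and neck motion’ for explanations). Gains that have negligible impact on the motion estimates are indicated in normal fonts, others with profound influence are indicated in bold. The feedback gains $k^V_\Omega$ and $k^P_\Omega$ are computed as $k^V_\Omega = k^V_{\Omega_{TS}} + k^V_{\Omega_{HT}}$ and $k^P_\Omega = k^P_{\Omega_{TS}} + k^P_{\Omega_{HT}}$.

| Feedback type | Gain | Value | Notes |
|---|---|---|---|
| Canal feedbacks | $k^V_{\Omega_{TS}}$ | 0.85 | |
| | $k^V_{\Omega_{HT}}$ | 0.10 | |
| | $k^V_N$ | 0.05 $\delta t$ | Negligible |
| | $k^V_C$ | 0.22 $\delta t$ | Integrated over time |
| | $k^V_\Omega$ | 0.95 | |
| Proprioceptive feedbacks | $k^P_{\Omega_{TS}}$ | -0.84/$\delta t$ | |
| | $k^P_{\Omega_{HT}}$ | 0.89/$\delta t$ | |
| | $k^P_N$ | 0.94 | |
| | $k^P_C$ | 0.03 | Negligible |
| | $k^P_\Omega$ | 0.05/$\delta t$ | Negligible |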

Passive motion induces sensory errors

In Figure 3, we simulate rotations around an earth-vertical axis (Figure 3A) with a short duration (0.2 s, Figure 3B), chosen to minimize canal dynamics ($C \approx 0$, Figure 3B, cyan) such that the canal response matches the velocity stimulus ($V \approx \Omega$, compare magenta curve in Figure 3C with blue curve in Figure 3B). We simulate active motion (Figure 3D–K, left panels), where $\Omega^u = \Omega$ (Figure 3D) and $\Omega^\varepsilon = 0$ (not shown), as well as passive motion (Figure 3D–K, right panels), where $\Omega^u = 0$ (Figure 3D) and $\Omega^\varepsilon = \Omega$ (not shown). The rotation velocity stimulus ($\Omega$, Figure 3E, blue) and canal activation ($V$, Figure 3F, magenta) are identical in both active and passive stimulus conditions. As expected, the final velocity estimate $\hat{\Omega}$ (output of the filter, Figure 3G, blue) is equal to the stimulus $\Omega$ (Figure 3E, blue) during both passive and active conditions. Thus, this first simulation is meant to emphasize differences in the flow of information within the Kalman filter, rather than differences in performance between passive and active motions (which is identical).

The fundamental difference between active and passive motions resides in the prediction of head motion (Figure 3H) and sensory canal signals (Figure 3I). During active motion, the motor command $\Omega^u$ (Figure 3D) is converted into a predicted rotation $\hat{\Omega}^p$ (Figure 3H) by the internal model, and in turn into a predicted canal signal $\hat{V}^p$ (Figure 3I). Of course, in this case, we have purposely chosen the rotation stimulus to be so short (0.2 s) that canal afferents reliably encode the rotation stimulus ($V \approx \Omega$; compare Figure 3F and E, left panels) and the internal model of canal dynamics has a negligible contribution; that is, $\hat{V}^p \approx \hat{\Omega}^p$ (compare Figure 3I and H, left panels). Because the canal sensory error is null, that is $\delta V = V - \hat{V}^p \approx 0$ (Figure 3K, left panel), the Kalman feedback pathway remains silent (not shown) and the net motion estimate is unchanged compared to the prediction, that is, $\hat{\Omega} = \hat{\Omega}^p$. In conclusion, during active rotation (and in the absence of perturbations, motor or sensory noise), motion estimates are generated entirely based on an accurate predictive process, in turn leading to an accurate prediction of canal afferent signals. In the absence of sensory mismatch, these estimates don’t require any further adjustment.

In contrast, during passive motion the predicted rotation is null ($\hat{\Omega}^p = 0$, Figure 3H, right panel), and therefore the predicted canal signal is also null ($\hat{V}^p = 0$, Figure 3I, right panel). Therefore, canal signals during passive motion generate a sensory error $\delta V = V$ (Figure 3K, right panel). This sensory error is converted into a feedback signal $\Omega^k$ (Figure 3J) with a Kalman gain $k^V_\Omega$ (feedback from canal error $\delta V$ to angular velocity estimate $\hat{\Omega}$) that is close to 1 (Table 2; note that this value represents an optimum and is computed by the Kalman filter algorithm). The final motion estimate is generated by this feedback, that is $\hat{\Omega} = \Omega^k = k^V_\Omega \, \delta V \approx \Omega$.

These results illustrate the fundamental rules of how active and passive motion signals are processed by the Kalman filter (and, as hypothesized, the brain). During active movements, motion estimates are generated by a predictive mechanism, where motor commands are fed into an internal model of head motion. During passive movement, motion estimates are formed based on feedback signals that are themselves driven by sensory canal signals. In both cases, specific nodes in the network are silent (e.g. predicted canal signal during passive motion, Figure 3I; canal error signal during active motion, Figure 3K), but the same network operates in unison under all stimulus conditions. Thus, depending on whether the neuron recorded by a microelectrode in the brain carries predicted, actual or error sensory signals, differences in neural response modulation are expected between active and passive head motion. For example, if a cell encodes canal error exclusively, it will show maximal modulation during passive rotation, and no modulation at all during active head rotation. If a cell encodes mixtures of canal sensory error and actual canal sensory signals (e.g. through a direct canal afferent input), then there will be non-zero, but attenuated, modulation during active, compared to passive, head rotation. Indeed, a range of response attenuation has been reported in the vestibular nuclei (see Discussion).
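The contrast between the two information flows can be reproduced with a one-variable sketch. It assumes a stimulus short enough that the canal signal equals head velocity, uses the canal-to-velocity gain of 0.94 listed in Table 2, and ignores noise. The function names and the mixing weights of the example cell are hypothetical and only illustrate how a neuron carrying both error and afferent signals would show attenuated, non-zero modulation during active motion.

```python
K_CANAL_TO_VELOCITY = 0.94  # gain from canal error to velocity estimate (Table 2)


def short_rotation(motor_command, perturbation, k=K_CANAL_TO_VELOCITY):
    """Single time point of a short rotation; canal dynamics are ignored."""
    omega = motor_command + perturbation   # real head velocity
    canal = omega                          # short stimulus: canal follows velocity
    omega_pred = motor_command             # prediction from the motor command
    canal_pred = omega_pred                # predicted canal signal
    error = canal - canal_pred             # canal sensory error
    feedback = k * error                   # Kalman feedback to the velocity estimate
    omega_hat = omega_pred + feedback      # final estimate
    return {"omega": omega, "canal": canal, "prediction": omega_pred,
            "error": error, "feedback": feedback, "estimate": omega_hat}


def cell_response(result, w_error, w_afferent):
    """Hypothetical neuron mixing canal error and raw canal afferent input."""
    return w_error * result["error"] + w_afferent * result["canal"]


active = short_rotation(motor_command=1.0, perturbation=0.0)
passive = short_rotation(motor_command=0.0, perturbation=1.0)

# A pure error cell is silent during active motion; a mixed cell is attenuated.
pure_active = cell_response(active, 1.0, 0.0)
pure_passive = cell_response(passive, 1.0, 0.0)
mixed_active = cell_response(active, 0.7, 0.3)
mixed_passive = cell_response(passive, 0.7, 0.3)
```

Both conditions yield a velocity estimate close to the true velocity, but through different routes: the prediction carries the estimate during active motion and the feedback carries it during passive motion.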

We emphasize that in Figure 3 we chose a very short-duration (0.2 s) motion profile, for which semicircular canal dynamics are negligible and the sensor can accurately follow the rotation velocity stimulus. We now consider more realistic rotation durations, and demonstrate how predictive and feedback mechanisms interact for accurate self-motion estimation. Specifically, canal afferent signals attenuate (because of their dynamics) during longer duration rotations – and this attenuation is already sizable for rotations lasting 1 s or longer. We next demonstrate that the internal model of canal dynamics must be engaged for accurate rotation estimation, even during purely actively generated head movements.

Internal model of canals

We now simulate a longer head rotation, lasting 2 s (Figure 4A,B, blue). The difference between the actual head velocity and the average canal signal is modeled as an internal state variable $C$, which follows low-pass dynamics (see Supplementary methods, ‘Model of head motion and vestibular sensors’). At the end of the 2 s rotation, the value of $C$ reaches its peak at ~40% of the rotation velocity (Figure 4B, cyan), modeled to match precisely the afferent canal signal $V$, which decreases by a corresponding amount (Figure 4C). Note that $C$ persists when the rotation stops, matching the canal aftereffect ($V = -C < 0$ after t > 2 s). Next, we demonstrate how the Kalman filter uses the internal variable $\hat{C}$ to compensate for canal dynamics.
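The ~40% figure follows directly from first-order low-pass dynamics with a 4 s time constant. The sketch below is a simplified illustration under stated assumptions: the stimulus is a constant-velocity step of unit amplitude rather than the profile used in Figure 4, the hidden canal state is integrated with a simple Euler update, the time step of 0.01 s is chosen arbitrarily, and the canal signal is taken as velocity minus the hidden state. The function and variable names are hypothetical.

```python
TAU_C = 4.0   # canal time constant in seconds (value stated in the text)
DT = 0.01     # simulation time step in seconds (assumed for this sketch)


def simulate_canal(omega_profile, tau=TAU_C, dt=DT):
    """Return traces of the hidden canal state and of the canal signal."""
    c = 0.0
    c_trace, v_trace = [], []
    for omega in omega_profile:
        c += dt / tau * (omega - c)   # hidden state low-pass filters the velocity
        c_trace.append(c)
        v_trace.append(omega - c)     # canal signal = velocity minus hidden state
    return c_trace, v_trace


# 2 s of rotation at unit velocity, followed by 1 s at rest.
omega_profile = [1.0] * 200 + [0.0] * 100
c_trace, v_trace = simulate_canal(omega_profile)

c_end = c_trace[199]      # hidden state at the end of the rotation
v_end = v_trace[199]      # canal signal at the end of the rotation
v_after = v_trace[200]    # canal signal just after the rotation stops
```

In continuous time the hidden state after 2 s equals $1 - e^{-2/4} \approx 0.39$, so the canal signal has dropped to about 61% of the true velocity, and it becomes negative once the rotation stops, which corresponds to the aftereffect.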

During active motion, the motor command (Figure 4D) is converted into an accurate prediction of head velocity (Figure 4H, blue). Furthermore, is also fed through the internal model of the canals to predict (Figure 4H, cyan). By combining the predicted internal state variables and , the Kalman filter computes a canal prediction that follows the same dynamics as (compare Figure 4F and I, left panels). Therefore, as in Figure 3, the resulting sensory mismatch is and the final estimates (Figure 4G) are identical to the predicted estimates (Figure 4H). Thus, the Kalman filter maintains an accurate rotation estimate by feeding motor commands through an internal model of the canal dynamics. Note, however, that because in this case (compare magenta curve in Figure 4F and blue curve in Figure 4E, left panels), (compare magenta curve in Figure 4I and blue curve in Figure 4H, left panels). Thus, the sensory mismatch can only be null under the assumption that motor commands have been processed through the internal model of the canals. But before we elaborate on this conclusion, let’s first consider passive stimulus processing.

During passive motion, the motor command is equal to zero. First, note that the final estimate is accurate (Figure 4G), as in Figure 3G, although canal afferent signals don’t encode accurately. Second, note that the internal estimate of canal dynamics (Figure 4G) and the corresponding prediction (; Figure 4H) are both accurate (compare with Figure 4E). This occurs because the canal error (Figure 4K) is converted into a second feedback, , (Figure 4J, cyan), which updates the internal estimate (see Supplementary methods, ‘Velocity Storage’). Finally, in contrast to Figure 3, the canal sensory error (Figure 4K) does not follow the same dynamics as (Figure 4C,F), but is (as it should be) equal to (Figure 4B). This happens because, through a series of steps ( = - in Figure 4I and in Figure 4K), is added to the vestibular signal to compute . This leads to the final estimate (Figure 4G). Model simulations during even longer duration rotations and visual-vestibular interactions are illustrated in Figure 4—figure supplement 1. Thus, the internal model of canal dynamics improves the rotation estimate during passive motion. Remarkably, this is important not only during very long duration rotations (as is often erroneously presumed), but also during short stimuli lasting 1–2 s, as illustrated with the simulations in Figure 4.

We now return to the actively generated head rotations to ask the important question: What would happen if the brain didn’t use an internal model of canal dynamics? We simulated motion estimation where canal dynamics were removed from the internal model used by the Kalman filter (Figure 4—figure supplement 2). During both active and passive motion, the net estimate is inaccurate as it parallels, exhibiting a decrease over time and an aftereffect. In particular, during active motion, the motor commands provide accurate signals , but the internal model of the canals fails to convert them into a correct prediction , resulting in a sensory mismatch. This mismatch is converted into a feedback signal that degrades the accurate prediction such that the final estimate is inaccurate. These simulations highlight the role of the internal model of canal dynamics, which continuously integrates rotation information in order to anticipate canal afferent activity during both active and passive movements. Without this sensory internal model, active movements would result in sensory mismatch, and the brain could either transform this mismatch into sensory feedback, resulting in inaccurate motion estimates, or ignore it and lose the ability to detect externally generated motion or movement errors. Note that the impact of canal dynamics is significant even during natural short-duration and high-velocity head rotations (Figure 4—figure supplement 3). Thus, even though particular nodes (neurons) in the circuit (e.g. vestibular and rostral fastigial nuclei cells presumably reflecting either or in Figures 3 and 4; see Discussion) are attenuated or silent during active head rotations, efference copies of motor commands must always be processed through the internal model of the canals – motor commands cannot directly drive appropriate sensory prediction errors. This intuition has remained largely unappreciated by studies comparing how central neurons modulate during active and passive rotations – a misunderstanding that has led to a fictitious dichotomy belittling important insights gained by decades of studies using passive motion stimuli (see Discussion).
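The role of the internal canal model during active rotation can be illustrated with a scalar sketch. The assumptions are hypothetical and are not the paper's simulation: a constant-velocity 2 s active rotation, a first-order canal with a 4 s time constant, and a unit feedback gain in both cases.

```python
def active_rotation(use_canal_model, rotation_s=2.0, tau_s=4.0, dt=0.001, feedback_gain=1.0):
    """Return (canal_error, final_estimate) at the end of an active rotation."""
    motor_command = 1.0       # efference copy, assumed equal to the true head velocity
    canal_state = 0.0         # true low-pass canal state
    predicted_state = 0.0     # internal-model copy of that state
    for _ in range(int(round(rotation_s / dt))):
        canal_state += dt * (motor_command - canal_state) / tau_s
        if use_canal_model:
            # The motor command is fed through the internal model of the canals
            predicted_state += dt * (motor_command - predicted_state) / tau_s
    canal_signal = motor_command - canal_state
    predicted_canal = motor_command - predicted_state
    canal_error = canal_signal - predicted_canal
    final_estimate = motor_command + feedback_gain * canal_error
    return canal_error, final_estimate


error_with_model, estimate_with_model = active_rotation(use_canal_model=True)
error_without_model, estimate_without_model = active_rotation(use_canal_model=False)
```

In this sketch the sensory mismatch is null only when the motor command passes through the canal model. Without it, a negative mismatch appears, and feeding it back pulls the final estimate toward the attenuated canal signal.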

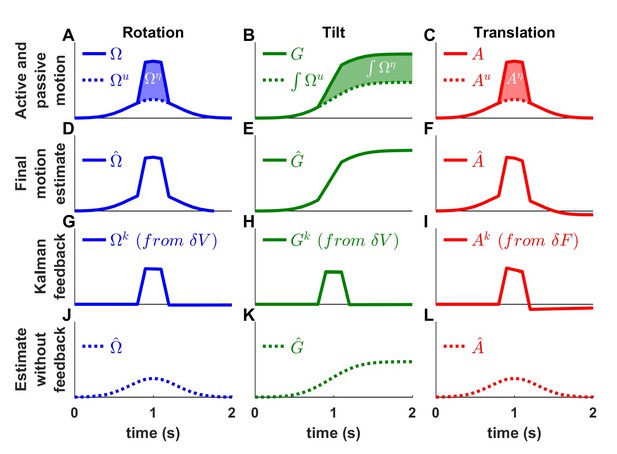

Active versus passive tilt

Next, we study the interactions between rotation, tilt and translation perception. We first simulate a short duration (0.2 s) roll tilt (Figure 5A; with a positive tilt velocity , Figure 5B, blue). Tilt position (, Figure 5B, green) ramps during the rotation and then remains constant. As in Figure 3, canal dynamics are negligible (; Figure 5F, magenta) and the final rotation estimate is accurate (Figure 5G, blue). Also similar to Figure 3, is carried by the predicted head velocity node during active motion (; ) and by the Kalman feedback node during passive motion (; ). That is, the final rotation estimate, which is accurate during both active and passive movements, is carried by different nodes (thus, likely different cell types; see Discussion) within the neural network.

When rotations change orientation relative to gravity, another internal state (tilt position , not included in the simulations of Figures 3 and 4) and another sensor (otolith organs; since in this simulation; Figure 5F, black) are engaged. During actively generated tilt movements, the rotation motor command () is temporally integrated by the internal model (see of Supplementary methods, ‘Kalman filter algorithm developed’), generating an accurate prediction of head tilt (Figure 5H, left panel, green). This results in a correct prediction of the otolith signal (Figure 5I, grey) and therefore, as in previous simulations of active movement, the sensory mismatch for both the canal and otolith signals (Figure 5L, magenta and gray, respectively) and feedback signals (not shown) are null; and the final estimates, driven exclusively by the prediction, are accurate; and .

During passive tilt, the canal error, , is converted into Kalman feedback that updates (Figure 5K, blue) and (not shown here; but see Figure 5—figure supplement 1 for 2 s tilt simulations), as well as the two other state variables ( and ). Specifically, the feedback from to (updates the predicted tilt and is temporally integrated by the Kalman filter (; see Supplementary methods, ‘Passive Tilt’; Figure 5K, green). The feedback signal from to has a minimal impact, as illustrated in Figure 5K, red (see also Supplementary methods, ‘Kalman feedback gains’ and Table 2).

Because efficiently updates the tilt estimate , the otolith error is close to zero during passive tilt (Figure 5L, gray; see Supplementary methods, ‘Passive Tilt’) and therefore all feedback signals originating from (Figure 5J) play a minimal role (see Supplementary methods, ‘Passive Tilt’) during pure tilt (this is the case even for longer duration stimuli; Figure 5—figure supplement 1). This simulation highlights that, although tilt is sensed by the otoliths, passive tilt doesn’t induce any sizeable otolith error. Thus, unlike neurons tuned to canal error, the model predicts that those cells tuned to otolith error will not modulate during either passive or actively-generated head tilt. Therefore, cells tuned to otolith error would respond primarily during translation, and not during tilt, and would thus be identified as ‘translation-selective’. Furthermore, the model predicts that those neurons tuned to passive tilt (e.g. Purkinje cells in the caudal cerebellar vermis; Laurens et al., 2013b) likely reflect a canal error that has been transformed into a tilt velocity error (Figure 5L, magenta). Thus, the model predicts that tilt-selective Purkinje cells should encode tilt velocity, and not tilt position, a prediction that remains to be tested experimentally (see Discussion).

Otolith errors are interpreted as translation and tilt with distinct dynamics

Next, we simulate a brief translation (Figure 6). During active translation, we observe, as in previous simulations of active movements, that the predicted head motion matches the sensory (otolith in this case: ) signals ( and ). Therefore, as in previous simulations of active motion, the sensory prediction error is zero (Figure 6L) and the final estimate is equal to, and driven by, the prediction (; Figure 6G, red).

During passive translation, the predicted acceleration is null (, Figure 6H, red), similar to passive rotation in Figures 3 and 4. However, a sizeable tilt signal ( and , Figure 6G,H, green), develops over time. This (erroneous) tilt estimate can be explained as follows: soon after translation onset (vertical dashed lines in Figure 6B–J), is close to zero. The corresponding predicted otolith signal is also close to zero (), leading to an otolith error (Figure 6L, right, gray). Through the Kalman feedback gain matrix, this otolith error, , is converted into: (1) an acceleration feedback (Figure 6J, red) with gain (the close to unity feedback gain indicates that otolith errors are interpreted as acceleration: ; note however that the otolith error vanishes over time, as explained next); and (2) a tilt feedback (Figure 6J, green), with . This tilt feedback, although too weak to have any immediate effect, is integrated over time (; see Figure 5 and Supplementary methods, ‘Somatogravic effect’), generating the rising tilt estimate (Figure 6G, green) and (Figure 6H, green).

The fact that the Kalman gain feedback from the otolith error to the internal state generates the somatogravic effect is illustrated in Figure 6—figure supplement 1, where a longer acceleration (20 s) is simulated. At the level of final estimates (perception), these simulations predict the occurrence of tilt illusions during sustained translation (somatogravic illusion; Graybiel, 1952; Paige and Seidman, 1999). Further simulations show how activation of the semicircular canals without a corresponding activation of the otoliths (e.g. during combination of tilt and translation; Angelaki et al., 2004; Yakusheva et al., 2007) leads to an otolith error (Figure 6—figure supplement 2) and how signals from the otoliths (that sense indirectly whether or not the head rotates relative to gravity) can also influence the rotation estimate at low frequencies (Figure 6—figure supplement 3; this property has been extensively evaluated by Laurens and Angelaki, 2011). These simulations demonstrate that the Kalman filter model efficiently simulates all previous properties of both perception and neural responses during passive tilt and translation stimuli (see Discussion).
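The two feedback routes of the otolith error during sustained passive translation can be sketched in one dimension. This is a simplified illustration under assumptions that are not from the text: the tilt estimate is expressed in acceleration units, sign conventions are ignored, the acceleration gain is set to 1, and the tilt gain of 0.5 per second is hypothetical.

```python
def passive_translation(accel=1.0, duration_s=20.0, accel_gain=1.0,
                        tilt_gain_per_s=0.5, dt=0.01):
    """Return (time, otolith_error, accel_estimate, tilt_estimate) samples."""
    tilt_estimate = 0.0  # the head is actually upright throughout
    history = []
    for step in range(int(round(duration_s / dt))):
        otolith_signal = accel             # actual tilt is zero, so only acceleration
        predicted_otolith = tilt_estimate  # predicted acceleration is null (passive)
        otolith_error = otolith_signal - predicted_otolith
        accel_estimate = accel_gain * otolith_error  # near-unity acceleration feedback
        history.append((step * dt, otolith_error, accel_estimate, tilt_estimate))
        # Weak tilt feedback, integrated over time
        tilt_estimate += dt * tilt_gain_per_s * otolith_error
    return history


history = passive_translation()
onset, late = history[0], history[-1]
```

At onset the error is interpreted entirely as acceleration. As the integrated tilt feedback accumulates, the otolith error and the acceleration estimate vanish while the tilt estimate grows, which is the pattern the somatogravic illusion would follow under these assumptions.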

Neck proprioceptors and encoding of trunk versus head velocity

The model analyzed so far has considered only vestibular sensors. Nevertheless, active head rotations often also activate neck proprioceptors, when there is an independent rotation of the head relative to the trunk. Indeed, a number of studies (Kleine et al., 2004; Brooks and Cullen, 2009; 2013; Brooks et al., 2015) have identified neurons in the rostral fastigial nuclei that encode the rotation velocity of the trunk. These neurons receive convergent signals from the semicircular canals and neck muscle proprioception and, accordingly, are named ‘bimodal neurons’, to contrast with ‘unimodal neurons’, which encode passive head velocity. Because the bimodal neurons do not respond to active head and trunk movements (Brooks and Cullen, 2013; Brooks et al., 2015), they likely encode feedback signals related to trunk velocity. We developed a variant of the Kalman filter to model both unimodal and bimodal neuron types (Figure 7; see also Supplementary methods and Figure 7—figure supplement 1–3).

The model tracks the velocity of the trunk in space and the velocity of the head on the trunk as well as neck position (). Sensory inputs are provided by the canals (that sense the total head velocity, ), and proprioceptive signals from the neck musculature (), which are assumed to encode neck position (Chan et al., 1987).

In line with the simulations presented above, we find that, during active motion, the predicted sensory signals are accurate. Consequently, the Kalman feedback pathways are silent (Figure 7—figure supplement 1–3; active motion is not shown in Figure 7). In contrast, passive motion induces sensory errors and Kalman feedback signals. The velocity feedback signals (elaborated in Figure 7—figure supplement 1–3) have been re-plotted in Figure 7, where we illustrate head in space (blue), trunk in space (gray), and head on trunk (red) velocity (neck position feedback signals are only shown in Figure 7—figure supplement 1–3).

During passive whole head and trunk rotation, where the trunk rotates in space (Figure 7A, Real motion: , grey) and the head moves together with the trunk (head on trunk velocity , red, head in space , blue), we find that the resulting feedback signals accurately encode these rotation components (Figure 7A, Velocity Feedback; see also Figure 7—figure supplement 1). During head on trunk rotation (Figure 7B, Figure 7—figure supplement 2), the Kalman feedback signals accurately encode the head on trunk (red) or in space (blue) rotation, and the absence of trunk in space rotation (gray). Finally, during trunk under head rotation that simulates a rotation of the trunk while the head remains fixed in space, resulting in a neck counter-rotation, the various motion components are accurately encoded by Kalman feedback (Figure 7C, Figure 7—figure supplement 3). We propose that unimodal and bimodal neurons reported by Brooks and Cullen (2009; 2013) encode feedback signals about the velocity of the head in space (, Figure 7, blue) and of the trunk in space (, Figure 7, gray), respectively. Furthermore, in line with experimental findings (Brooks and Cullen, 2013), these feedback pathways are silent during self-generated motion.
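The three passive paradigms can be summarized by the kinematic relation that head-in-space velocity is the sum of trunk-in-space and head-on-trunk velocity. The sketch below uses hypothetical unit velocities and idealized feedback that equals the true motion components when passive and is null when active. It does not reproduce the Kalman filter itself.

```python
# Hypothetical unit-velocity paradigms: (trunk in space, head on trunk)
PARADIGMS = {
    "whole_body": (1.0, 0.0),
    "head_on_trunk": (0.0, 1.0),
    "trunk_under_head": (1.0, -1.0),  # neck counter-rotation keeps the head fixed
}


def velocity_feedback(paradigm, active=False):
    """Idealized velocity feedback for each motion component."""
    trunk_in_space, head_on_trunk = PARADIGMS[paradigm]
    head_in_space = trunk_in_space + head_on_trunk
    if active:
        # Feedback pathways are silent during self-generated motion
        return {"head_in_space": 0.0, "trunk_in_space": 0.0, "head_on_trunk": 0.0}
    return {
        "head_in_space": head_in_space,    # proposed correlate: unimodal neurons
        "trunk_in_space": trunk_in_space,  # proposed correlate: bimodal neurons
        "head_on_trunk": head_on_trunk,
    }


table = {name: velocity_feedback(name) for name in PARADIGMS}
```

Under this idealization, the unimodal correlate is silent only during trunk-under-head rotation and the bimodal correlate is silent only during head-on-trunk rotation.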

The Kalman filter makes further predictions that are entirely consistent with experimental results. First, it predicts that proprioceptive error signals during passive neck rotation encode velocity (Figure 7—figure supplement 3L; see Supplementary methods, ‘Feedback signals during neck movement’). Thus, the Kalman filter explains the striking result that the proprioceptive responses of bimodal neurons encode trunk velocity (Brooks and Cullen, 2009; 2013), even if neck proprioceptors encode neck position. Note that neck proprioceptors likely encode a mixture of neck position and velocity at high frequencies (Chan et al., 1987; Mergner et al., 1991); and additional simulations (not shown) based on this hypothesis yield similar results as those shown here. We used here a model in which neck proprioceptors encode position for simplicity, and in order to demonstrate that Kalman feedback signals encode trunk velocity even when proprioceptive signals encode position.

Second, the model predicts another important property of bimodal neurons: their response gains to both vestibular (during sinusoidal motion of the head and trunk together) and proprioceptive (during sinusoidal motion of the trunk when the head is stationary) stimulation vary identically if a constant rotation of the head relative to the trunk is added, as an offset, to the sinusoidal motion (Brooks and Cullen, 2009). We propose that this offset head rotation extends or contracts individual neck muscles and affects the signal to noise ratio of neck proprioceptors. Indeed, simulations shown in Figure 7—figure supplement 4 reproduce the effect of head rotation offset on bimodal neurons. In agreement with experimental findings, we also find that simulated unimodal neurons are not affected by these offsets (Figure 7—figure supplement 4).

Finally, the model also predicts the dynamics of trunk and head rotation perception during long-duration rotations (Figure 7—figure supplement 5), which has been established by behavioral studies (Mergner et al., 1991).

Alternative models of interaction between active and passive motions

The theoretical framework of the Kalman filter asserts that the brain uses a single internal model to process copies of motor commands and sensory signals. But could alternative computational schemes, involving distinct internal models for motor and sensory signals, explain neuronal and behavioral responses during active and passive motions? Here, we consider three possibilities, illustrated in Figure 1—figure supplement 1. First, that the brain computes head motion based on motor commands only and suppresses vestibular sensory inflow entirely during active motion (Figure 1—figure supplement 1A). Second, that a ‘motor’ internal model and a ‘sensory’ internal model run in parallel, and that central neurons encode the difference between their outputs – which would represent a motion prediction error instead of a sensory prediction error, as proposed by the Kalman filter framework (Figure 1—figure supplement 1B). Third, that the brain computes sensory prediction errors based on sensory signals and the output of the ‘motor’ internal model, and then feeds these errors into the ‘sensory’ internal model (Figure 1—figure supplement 1C).

We first consider the possibility that the brain simply suppresses vestibular sensory inflow. Experimental evidence against this alternative comes from recordings performed when passive motion is applied concomitantly to an active movement (Brooks and Cullen, 2013; 2014; Carriot et al., 2013). Indeed, neurons that respond during passive but not active motion have been found to encode the passive component of combined passive and active motions, as expected based on the Kalman framework. We present corresponding simulation results in Figure 8. We simulate a rotation movement (Figure 8A), where an active rotation (, Gaussian velocity profile) is combined with a passive rotation (, trapezoidal profile), a tilt movement (Figure 8B; using similar velocity inputs, and , where the resulting active and passive tilt components are and ), and a translation movement (Figure 8C). We find that, in all simulations, the final motion estimate (Figure 8D–F; , and , respectively) matches the combined active and passive motions (, and , respectively). In contrast, the Kalman feedback signals (Figure 8G–I) specifically encode the passive motion components. Specifically, the rotation feedback (, Figure 8G) is identical to the passive rotation (Figure 8A). As in Figure 5, the tilt feedback (, Figure 8H) encodes tilt velocity, also equal to (Figure 8A). Finally, the linear acceleration feedback (, Figure 8I) follows the passive acceleration component, although it decreases slightly with time because of the somatogravic effect. Thus, Kalman filter simulations confirm that neurons that encode sensory mismatch or Kalman feedback should selectively follow the passive component of combined passive and active motions.

Interaction of active and passive motion.

Active movements (Gaussian profiles) and passive movements (short trapezoidal profiles) are superimposed. (A) Active () and passive () rotations. (B) Head tilt resulting from active and passive rotations (the corresponding tilt components are and ). (C) Active () and passive () translations. (D-F) Final motion estimates (equal to the total motion). (G-I) The Kalman feedback corresponds to the passive motion component. (J-L) Final estimates computed by inactivating all Kalman feedback pathways. These simulations represent the motion estimates that would be produced if the brain suppressed sensory inflow during active motion. The simulations contradict the alternative scheme of Figure 1—figure supplement 1A.

What would happen if, instead of computing sensory prediction errors, the brain simply discarded vestibular sensory (or feedback) signals during active motion? We repeat the simulations of Figure 8A–I after removing the vestibular sensory input signals from the Kalman filter. We find that the net motion estimates encode only the active movement components (Figure 8J–L; , and ) – thus, not accurately estimating the true movement. Furthermore, as a result of the sensory signals being discarded, all sensory errors and Kalman feedback signals are null. These simulations indicate that suppressing vestibular signals during active motion would prevent the brain from detecting passive motion occurring during active movement (see Discussion, ‘Role of the vestibular system during active motion: ecological, clinical and fundamental implications’), in contradiction with experimental results.
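The contrast between intact and suppressed sensory inflow can be sketched for a superimposed active and passive rotation. The profile shapes follow the description above (Gaussian active, trapezoidal passive), but all parameter values, the unit feedback gain, and the neglect of canal dynamics are assumptions made for illustration.

```python
import math


def gaussian(t, center=1.0, width=0.2):
    """Hypothetical active velocity profile."""
    return math.exp(-0.5 * ((t - center) / width) ** 2)


def trapezoid(t, start=0.8, ramp=0.1, plateau=0.3, height=0.5):
    """Hypothetical passive velocity profile."""
    rise_end = start + ramp
    fall_start = rise_end + plateau
    fall_end = fall_start + ramp
    if t <= start or t >= fall_end:
        return 0.0
    if t < rise_end:
        return height * (t - start) / ramp
    if t <= fall_start:
        return height
    return height * (fall_end - t) / ramp


def simulate(sensory_inflow=True, feedback_gain=1.0, dt=0.01, total_s=2.0):
    """Return (time, active, passive, feedback, final_estimate) samples."""
    rows = []
    for step in range(int(round(total_s / dt))):
        t = step * dt
        active, passive = gaussian(t), trapezoid(t)
        predicted = active  # prediction driven by the motor command only
        if sensory_inflow:
            canal_error = (active + passive) - predicted
        else:
            canal_error = 0.0  # discarded sensory signals leave no error to feed back
        feedback = feedback_gain * canal_error
        rows.append((t, active, passive, feedback, predicted + feedback))
    return rows


intact = simulate(sensory_inflow=True)
suppressed = simulate(sensory_inflow=False)
```

With inflow intact, the feedback isolates the passive component and the final estimate equals the total motion. With inflow suppressed, the estimate follows the active component alone and the passive perturbation goes undetected.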

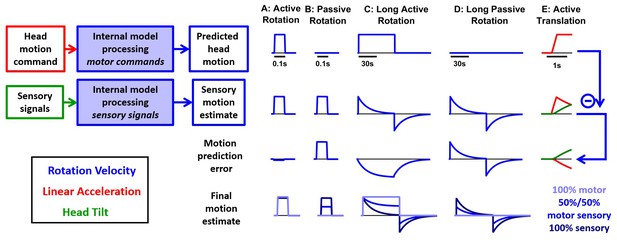

Next, we simulate (Figure 9) the alternative model of Figure 1—figure supplement 1B, where the motor commands are used to predict head motion (Figure 9, first row) while the sensory signals are used to compute a self-motion estimate (second row). According to this model, these two signals would be compared to compute a motion prediction error instead of a sensory prediction error (third row; presumably represented in the responses of central vestibular neurons). We first simulate short active and passive rotations (Figure 9A,B; same motion as in Figure 3). During active rotation (Figure 9A), both the motor prediction and the sensory self-motion estimate are close to the real motion and therefore the motion prediction error is null (Figure 9A, third row). In contrast, the sensory estimate is not cancelled during passive rotation, leading to a non-zero motion prediction error (Figure 9B, third row). Thus, the motion prediction errors in Figure 9A,B resemble the sensory prediction errors predicted by the Kalman filter in Figure 3 and may explain neuronal responses recorded during brief rotations.

Simulations of the alternative scheme where motor commands cancel the output of a sensory internal model.

In this figure, we consider an alternative scheme (Figure 1—figure supplement 1B), where the motor commands (first row), which are assumed to encode head angular velocity and linear acceleration (as in the Kalman filter model), are used to cancel the output of a ‘sensory only’ internal model (second row, also identical to the Kalman filter model) to compute motion prediction errors (third row), instead of sensory prediction errors, as in the Kalman filter model. (A) During a short active rotation (same as in Figure 3), both the motor prediction and the sensory self-motion estimate are close to the real motion and therefore the motor prediction cancels the sensory estimate accurately. (B) Similarly to Figure 3, the motor prediction is null and the sensory estimate is not cancelled during passive rotation. (C) During long-duration active rotation (same motion as in Figure 4—figure supplement 1A,B), the motor prediction (top row) does not match the sensory signal (second row), resulting in a substantial prediction error (third row). This contrasts with Kalman filter simulations, where no sensory prediction errors occur during active motion. (D) During long-duration passive motion, results agree with Kalman filter predictions. (E) During active translation (same motion as in Figure 6), the somatogravic effect would induce a tilt illusion (green) and an underestimation of linear acceleration (red), again leading to motion prediction errors. Thus, although the predictions of this alternative model resemble those of the Kalman filter during short active rotations, they differ during long rotations or active translations and are contradicted by experimental observations. The last row shows the final self-motion estimate obtained by computing a weighted average of the predicted head motion and sensory motion estimates. Three different weights are considered: 100% motor signals (light blue), 100% sensory estimates (dark blue) or 50%/50% (blue).

However, this similarity breaks down when simulating a long-duration active or passive rotation (Figure 9C,D; same motion as in Figure 4—figure supplement 1A,B). The motor prediction of rotation velocity would remain constant during 1 min of active rotation (Figure 9C, first row), whereas the sensory estimate would decrease over time and exhibit an aftereffect (Figure 9C, second row). This would result in a substantial difference between the motor prediction and the sensory estimate (Figure 9C, third row) during active motion. This contrasts with Kalman filter simulations, where no sensory prediction errors occur during active motion.

A similar difference would also be seen during active translation (Figure 9E; same motion as in Figure 6). While the motion prediction (first row) would encode the active translation, the sensory estimate (second row) would be affected by the somatogravic effect (as in Figure 6), which causes the linear acceleration signal (red) to be replaced by a tilt illusion (green), also leading to motion prediction errors (third row). In contrast, the Kalman filter predicts that no sensory prediction error should occur during active translation.

These simulations indicate that processing motor and vestibular information independently would lead to prediction errors that would be avoided by the Kalman filter. Beyond theoretical arguments, this scheme may be rejected based on behavioral responses: Both rotation perception and the vestibulo-ocular reflex (VOR) decrease during sustained passive rotations, but persist indefinitely during active rotation (macaques: Solomon and Cohen, 1992; humans: Guedry and Benson, 1983; Howard et al., 1998; Jürgens et al., 1999). In fact, this scheme cannot account for experimental findings, even if we consider different weighting for how the net self-motion signal is constructed from the independent motor and sensory estimates (Figure 9, bottom row). For example, if the sensory estimate is weighted 100%, rotation perception would decay during active motion (Figure 9C, bottom, dark blue), inconsistent with experimental results. If the motor prediction is weighted 100%, passive rotations would not be detected at all (Figure 9B,D, light blue). Finally, intermediate solutions (e.g. 50%/50%) would result in undershooting of both the steady state active (Figure 9C) and passive (Figure 9B,D) rotation perception estimates. Note also that, in all cases, the rotation after-effect would be identical during active and passive motion (Figure 9C,D, bottom), in contradiction with experimental findings (Solomon and Cohen, 1992; Guedry and Benson, 1983; Howard et al., 1998).
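The weighting argument can be checked with a toy version of this alternative scheme. The assumptions are illustrative only: a unit constant-velocity rotation, a sensory-only estimate that decays exponentially with a hypothetical 15 s time constant, and a motor prediction that is 1 during active and 0 during passive rotation.

```python
import math


def weighted_estimate(t, active, motor_weight, storage_tau_s=15.0):
    """Weighted average of independent motor and sensory rotation estimates."""
    motor_prediction = 1.0 if active else 0.0
    sensory_estimate = math.exp(-t / storage_tau_s)  # decays during sustained rotation
    return motor_weight * motor_prediction + (1.0 - motor_weight) * sensory_estimate


def summarize(motor_weight):
    """Estimates that matter for the behavioral comparison."""
    return {
        "active_after_60s": weighted_estimate(60.0, True, motor_weight),
        "passive_at_onset": weighted_estimate(0.0, False, motor_weight),
    }


results = {w: summarize(w) for w in (0.0, 0.5, 1.0)}
```

Under these assumptions no single weight gives both a persistent estimate during active rotation and full detection of passive rotation at onset. A weight of 0 lets the active estimate decay, a weight of 1 misses passive rotation, and 0.5 undershoots both.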

Finally, the third alternative scheme (Figure 1—figure supplement 1C), where sensory prediction error is used to cancel the input of a sensory internal model is, in fact, a more complicated version of the Kalman filter. This is because an internal model that processes motor commands to predict sensory signals must necessarily include an internal model of the sensors. Thus, simulations of the model in Figure 1—figure supplement 1C would be identical to the Kalman filter, by merely re-organizing the sequence of operations and uselessly duplicating some of the elements, to ultimately produce the same results.

Discussion

We have tested the hypothesis that the brain uses, during active motion, exactly the same sensory internal model computations already discovered using passive motion stimuli (Mayne, 1974; Oman, 1982; Borah et al., 1988; Merfeld, 1995; Zupan et al., 2002; Laurens, 2006; Laurens and Droulez, 2007; Laurens and Droulez, 2008; Laurens and Angelaki, 2011; Karmali and Merfeld, 2012; Lim et al., 2017). Presented simulations confirm the hypothesis that the same internal model (consisting of forward internal models of the canals, otoliths and neck proprioceptors) can reproduce behavioral and neuronal responses to both active and passive motions. The formalism of the Kalman filter allows predictions of internal variables during both active and passive motions, with a strong focus on sensory error and feedback signals, which we hypothesize are realized in the response patterns of central vestibular neurons.

Perhaps most importantly, this work resolves an apparent paradox in neuronal responses between active and passive movements (Angelaki and Cullen, 2008), by placing them into a unified theoretical framework in which a single internal model tracks head motion based on motor commands and sensory feedback signals. Although particular cell types that encode sensory errors or feedback signals may not modulate during active movements because the corresponding sensory prediction error is negligible, the internal models of canal dynamics and otolith ambiguity operate continuously to generate the correct sensory prediction during both active and passive movements. Thus, the model presented here should eliminate the misinterpretation that vestibular signals are ignored during self-generated motion, and that internal model computations during passive motion are unimportant for everyday life. We hope that this realization will also highlight the relevance and importance of passive motion stimuli, as critical experimental paradigms that can efficiently interrogate the network and unravel computational principles of natural motor activities, which cannot easily be disentangled during active movements.

Summary of the Kalman filter model

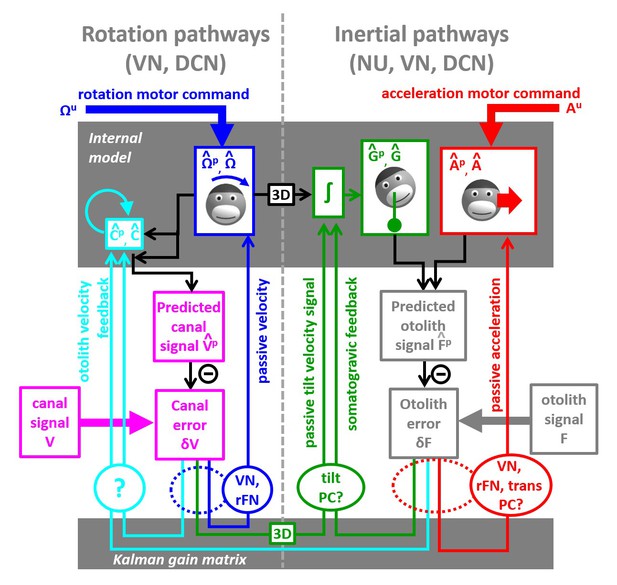

We have developed the first ever model that simulates self-motion estimates during both actively generated and passive head movements. This model, summarized schematically in Figure 10, transforms motor commands and Kalman filter feedback signals into internal estimates of head motion (rotation and translation) and predicted sensory signals. There are two important take-home messages: (1) Because of the physical properties of the two vestibular sense organs, the predicted motion generated from motor commands is not equal to predicted sensory signals (for example, the predicted rotation velocity is processed to account for canal dynamics in Figure 4). Instead, the predicted rotation, tilt and translation signals generated by efference copies of motor commands must be processed by the corresponding forward models of the sensors in order to generate accurate sensory predictions. This important insight about the nature of these internal model computations has not been appreciated by the qualitative schematic diagrams of previous studies. (2) In an environment devoid of externally generated passive motion, motor errors and sensory noise, the resulting sensory predictions would always match sensory afferent signals accurately. In a realistic environment, however, unexpected head motion occurs due to both motor errors and external perturbations (see ‘Role of the vestibular system during active motion: ecological, clinical and fundamental implications’). Sensory vestibular signals are then used to correct internal motion estimates through the computation of sensory errors and their transformation into Kalman feedback signals. Given two sensory errors ( originating from the semicircular canals and originating from the otoliths) and four internal state variables (rotation, internal canal dynamics, tilt and linear acceleration: , , , ), eight feedback signals must be constructed. 
However, in practice, two of these signals have negligible influence for all movements ( feedback to and feedback to ; see Table 2 and Supplementary methods, ‘Kalman Feedback Gains’), thus only six elements are summarized in Figure 10.

Schematic diagram of central vestibular computations.

This diagram is organized to offer a synthetic view of the processing elements, as well as their putative neural correlates. An internal model (top gray box) predicts head motion based on motor commands and receives feedback signals. The internal model computes predicted canal and otolith signals that are compared to actual canal and otolith inputs. The resulting sensory errors are transformed by the Kalman gain matrix into a series of feedback ‘error’ signals. Left: canal error feedback signals; Right: otolith error feedback signals. Rotation signals are spatially transformed (‘3D’ boxes) into tilt velocity signals. Ovals indicate putative neuronal correlates of the feedback signals (VN: vestibular only vestibular nuclei neurons; rFN: rostral fastigial nuclei neurons, PC: Purkinje cells in the caudal vermis, DCN: deep cerebellar nuclei).

The non-negligible feedback signals originating from the canal error are as follows (Figure 10, left):

The feedback to the rotation estimate represents the traditional ‘direct’ vestibular pathway (Raphan et al., 1979; Laurens and Angelaki, 2011). It is responsible for rotation perception during high-frequency (unexpected) vestibular stimulation, and has a gain close to unity.

The feedback to the internal canal dynamics variable feeds into the internal model of the canals, thus allowing compensation for canal dynamics. This pathway corresponds to the ‘velocity storage’ (Raphan et al., 1979; Laurens and Angelaki, 2011). Importantly, the contribution of this signal is significant for movements longer than ~1 s, particularly during high-velocity rotations.

The feedback to tilt converts canal errors into a tilt velocity signal, which is subsequently integrated by the internal model of head tilt.

The non-negligible feedback signals originating from the otolith error are as follows (Figure 10, right):

The feedback to linear acceleration converts unexpected otolith activation into an acceleration signal and is responsible for acceleration perception during passive translations (as well as experimentally generated otolith errors; Merfeld et al., 1999; Laurens et al., 2013a).

The feedback to tilt implements the somatogravic effect, which biases the internal estimate of gravity toward the net otolith signal so as to reduce the otolith error.

The feedback to the rotation estimate plays a role similar to that of the feedback to tilt, that is, to reduce the otolith error, but it acts indirectly by biasing the internal estimate of rotation in a direction which, after integration, drives the internal model of tilt so that it matches the otolith signal (this feedback was called ‘velocity feedback’ in Laurens and Angelaki, 2011). Behavioral studies (and model simulations) indicate that this phenomenon has low-frequency dynamics and results in the ability of otolith signals to estimate rotational velocity (Angelaki and Hess, 1996; Hess and Angelaki, 1993). Lesion studies have demonstrated that this feedback depends on an intact nodulus and ventral uvula, the vermal vestibulo-cerebellum (Angelaki and Hess, 1995a; Angelaki and Hess, 1995b).
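The six pathways listed above can be summarized as the sparsity pattern of a 4 × 2 gain matrix. The sketch below is a bookkeeping aid, not the model's implementation: the state and error names, the `feedback` helper and the unit gains are hypothetical placeholders, and the actual gain values are those reported in Table 2.

```python
import numpy as np

states = ["rotation", "canal_dynamics", "tilt", "linear_acceleration"]
errors = ["canal_error", "otolith_error"]

# True marks a feedback pathway described in the text as non-negligible.
# Rows follow `states`, columns follow `errors`.
non_negligible = np.array([
    [True,  True ],   # rotation: direct pathway; 'velocity feedback'
    [True,  False],   # canal dynamics: velocity storage
    [True,  True ],   # tilt: canal-driven tilt velocity; somatogravic feedback
    [False, True ],   # linear acceleration: otolith-driven acceleration
])

def feedback(gains, sensory_errors):
    """Map the two sensory errors onto the four state variables,
    ignoring the two negligible pathways."""
    return (gains * non_negligible) @ sensory_errors

placeholder_gains = np.ones((4, 2))                 # hypothetical unit gains, for illustration only
canal_only = feedback(placeholder_gains, np.array([1.0, 0.0]))
print(dict(zip(states, canal_only)))
```

With a pure canal error, only the rotation, canal dynamics and tilt entries are non-zero, which mirrors the left half of the schematic.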

The model in Figure 10 is entirely compatible with previous models based on optimal passive self-motion computations (Oman, 1982; Borah et al., 1988; Merfeld, 1995; Laurens, 2006; Laurens and Droulez, 2007; Laurens and Droulez, 2008; Laurens and Angelaki, 2011; Karmali and Merfeld, 2012; Lim et al., 2017; Zupan et al., 2002). The present model is, however, distinct in two very important aspects: First, it takes into account active motor commands and integrates these commands with the vestibular sensory signals. Second, because it is formulated as a Kalman filter, it makes specific predictions about the feedback error signals, which constitute the most important nodes in understanding the neural computations underlying head motion sensation. Indeed, as will be summarized next, the properties of most cell types in the vestibular and cerebellar nuclei, as well as the vestibulo-cerebellum, appear to represent either sensory error or feedback signals.
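Both distinctive aspects can be seen in a deliberately reduced, one-dimensional sketch. It assumes a toy model in which head velocity is the sum of a motor command and an unpredicted component, and in which the sensor reports velocity directly (no canal dynamics). The function `kalman_step` and the variances `q` and `r` are hypothetical and are not the parameters of the full model.

```python
def kalman_step(u, z, q=1.0, r=0.1):
    """One update of a scalar Kalman filter that uses a motor command.

    Toy assumptions: x = u + w with w ~ N(0, q) (unpredicted motion),
    and z = x + v with v ~ N(0, r) (sensory noise).
    Returns (estimate, sensory_error, feedback).
    """
    x_pred = u                    # prediction from the efference copy
    error = z - x_pred            # sensory prediction error
    gain = q / (q + r)            # Kalman gain for this scalar model
    fb = gain * error             # feedback signal
    return x_pred + fb, error, fb

# Active movement: the motor command fully predicts the sensory signal.
est_active, err_active, fb_active = kalman_step(u=50.0, z=50.0)

# Passive movement: the same sensory signal arrives without a motor command.
est_passive, err_passive, fb_passive = kalman_step(u=0.0, z=50.0)

print(est_active, err_active, fb_active)
print(est_passive, err_passive, fb_passive)
```

In this toy case the error and feedback signals vanish during the active movement and are large during the passive one, yet the final estimates are similar. This is the pattern the full model attributes to neurons carrying error or feedback signals as opposed to final motion estimates.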

Vestibular and rostral fastigial neurons encode sensory error or feedback signals during rotation and translation

Multiple studies have reported that vestibular-only (a misnomer for ‘non-eye-movement-sensitive’) neurons in the VN selectively encode passive head rotation (McCrea and Luan, 2003; Roy and Cullen, 2001; 2004; Brooks and Cullen, 2014) or passive translation (Carriot et al., 2013), but suppress this activity during active head movements. In addition, a group of rostral fastigial nucleus neurons (unimodal rFN neurons; Brooks and Cullen, 2013; Brooks et al., 2015) also selectively encodes passive (but not active) rotations. These rotation-responding VN/rFN neurons likely encode either the semicircular canal error itself or its Kalman feedback to the rotation estimate (blue in Figure 10, dashed and solid ovals ‘VN, rFN’, respectively). The translation-responding neurons likely encode either the otolith error or its feedback to the linear acceleration estimate (Figure 10, solid and dashed red lines ‘VN, trans PC’). Because error and feedback signals are proportional to each other in the experimental paradigms considered here, whether VN/rFN neurons encode sensory errors or feedback signals cannot easily be distinguished using vestibular stimuli alone. Nevertheless, it is important to emphasize that, while the large majority of VN and rFN neurons exhibit reduced responses during active head movements, this suppression is rarely complete (McCrea et al., 1999; Roy and Cullen, 2001; Brooks and Cullen, 2013; Carriot et al., 2013). Thus, neuronal responses likely encode mixtures of error/feedback and sensory motion signals (e.g. those conveyed by direct afferent inputs).

During large amplitude passive rotations (Figure 4—figure supplement 3), the rotation estimate persists longer than the vestibular signal (Figure 4, blue; a property called velocity storage). Because the internal estimate is equal to the canal error, this implies that VN neurons (that encode the canal error) should exhibit dynamics that are different from those of canal afferents, having incorporated velocity storage signals. This has indeed been demonstrated in VN neurons during optokinetic stimulation (Figure 4—figure supplement 1; Waespe and Henn, 1977; Yakushin et al., 2017) and rotation about tilted axes (Figure 6—figure supplement 3; Reisine and Raphan, 1992; Yakushin et al., 2017).

Thalamus-projecting VN neurons possibly encode final motion estimates

Based on the work summarized above, the final estimates of rotation (Figure 4G) and translation (Figure 6G), which are the desirable signals to drive both perception and spatial navigation, do not appear to be encoded by most VN/rFN cells. Thus, one may assume that they are reconstructed downstream, perhaps in thalamic (Marlinski and McCrea, 2008; Meng et al., 2007; Meng and Angelaki, 2010) or cortical areas. Interestingly, more than half (57%) of ventral thalamic neurons (Marlinski and McCrea, 2008) and an identical fraction (57%) of VN neurons projecting to the thalamus (Marlinski and McCrea, 2009) respond similarly during passive and actively generated head rotations. The authors emphasized that VN neurons with attenuated responses during actively generated movements constitute only a small fraction (14%) of those projecting to the thalamus. Thus, although abundant in the VN, these passive motion-selective neurons may carry sensory error/feedback signals to the cerebellum, spinal cord or even other VN neurons (e.g. those coding the final estimates; Marlinski and McCrea, 2009). Note that Dale and Cullen, 2016, reported contrasting results where a large majority of ventral thalamus neurons exhibit attenuated responses during active motion. Even if it is not present in the ventral posterior thalamus, the final self-motion estimate should exist in the spatial perception/spatial navigation pathways. Thus, future studies should search for the neural correlates of the final self-motion signal. VN neurons identified physiologically to project to the cervical spinal cord do not modulate during active rotations, so they could encode either passive head rotation or active and passive trunk rotation (McCrea et al., 1999).

Furthermore, the dynamics of the thalamus-projecting VN neurons with similar responses to passive and active stimuli were not measured (Marlinski and McCrea, 2009). Recall that the model predicts that final estimates of rotation differ from canal afferent signals only in their response dynamics (Figure 4, compare panels F and G). It would make functional sense that these VN neurons projecting to the thalamus follow the final estimate dynamics (i.e., they are characterized by a prolonged time constant compared to canal afferents) – and future experiments should investigate this hypothesis.

Rostral fastigial neurons encoding passive trunk rotations

Another class of rFN neurons (and possibly VN neurons projecting to the thalamus; Marlinski and McCrea, 2009, or those projecting to the spinal cord; McCrea et al., 1999) specifically encodes passive trunk velocity in space, independently of head velocity (bimodal neurons; Brooks and Cullen, 2009; 2013; Brooks et al., 2015). These neurons likely encode Kalman feedback signals about trunk velocity (Figure 7, blue). Importantly, these neurons respond equivalently to passive whole trunk rotation when the trunk and the head rotate together (Figure 7A) and to passive trunk rotation when the head is space-fixed (Figure 7C). The first protocol activates the semicircular canals and induces a canal error, while the latter activates neck proprioceptors and generates a proprioceptive error. From a physiological point of view, this indicates that bimodal neurons respond to semicircular canals as well as neck proprioceptors (hence their name). Note that several other studies identified VN (Anastasopoulos and Mergner, 1982), rFN (Kleine et al., 2004) and anterior suprasylvian gyrus (Mergner et al., 1985) neurons that encode trunk velocity during passive motion, but did not test their response to active motion.

The Kalman filter also predicts that neck proprioceptive signals that encode neck position should be transformed into error signals that encode neck velocity. In line with model predictions, bimodal neurons encode velocity signals that originate from neck proprioception during passive sinusoidal (1 Hz, Brooks and Cullen, 2009) and transient (Gaussian velocity profile, Brooks and Cullen, 2013) movements. Remarkably, although short-duration rotation of the trunk while the head is stationary in space leads to a veridical perception of trunk rotation, long duration trunk rotation leads to an attenuation of the perceived trunk rotation and a growing illusion of head rotation in the opposite direction (Mergner et al., 1991). These experimental findings are also predicted by the Kalman filter model (Figure 7—figure supplement 5).

Purkinje cells in the vestibulo-cerebellum encode tilt and acceleration feedback

The simple spike modulation of two distinct types of Purkinje cells in the caudal cerebellar vermis (lobules IX-X, Uvula and Nodulus) encodes tilt (tilt-selective cells) and translation (translation-selective cells) during three-dimensional motion (Yakusheva et al., 2007, 2008, 2013; Laurens et al., 2013a; Laurens et al., 2013b). Therefore, it is possible that tilt- and translation-selective cells encode tilt and acceleration feedback signals (Figure 10, green and red lines, respectively). If so, we hypothesize that their responses are suppressed during active motion (Figures 5 and 6). How Purkinje cells modulate during active motion is currently unknown. However, one study (Lee et al., 2015), performed while rats learned to balance on a swing, indicates that Purkinje cell responses that encode trunk motion are reduced during predictable movements, consistent with the hypothesis that they encode sensory errors or Kalman feedback signals.

Model simulations have also revealed that passive tilt does not induce any significant otolith error (Figure 5J). In contrast, passive tilt elicits a significant canal error (Figure 5K). Thus, we hypothesize that the tilt signal present in the responses of Purkinje cells originates from the canal error feedback onto the tilt internal state variable. If it is indeed a canal, rather than an otolith, error, it should be proportional to tilt velocity instead of tilt position (or linear acceleration). Accordingly, we observed (Laurens et al., 2013b) that tilt-selective Purkinje cell responses were on average close to velocity (average phase lag of 36° during sinusoidal tilt at 0.5 Hz). However, since sinusoidal stimuli are not suited for establishing dynamics (Laurens et al., 2017), further experiments are needed to confirm that tilt-selective Purkinje cells indeed encode tilt velocity.
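To make ‘close to velocity’ concrete, the reported phase can be converted into a time lag and compared with the 90° separation between velocity and position. This worked example assumes that the 36° lag is measured relative to tilt velocity and that the stimulus is a pure sinusoid; the variable names are illustrative.

```python
freq_hz = 0.5            # stimulus frequency reported in the text
phase_lag_deg = 36.0     # average lag, assumed to be relative to tilt velocity

period_s = 1.0 / freq_hz
time_lag_s = phase_lag_deg / 360.0 * period_s

# For a sinusoid, position lags velocity by 90 degrees, so a response that
# lags velocity by 36 degrees still leads position by the remainder.
lead_re_position_deg = 90.0 - phase_lag_deg

print(time_lag_s, lead_re_position_deg)
```

Under these assumptions the response lags tilt velocity by about 0.2 s and leads tilt position by 54°, which places it nearer to velocity than to position on the phase axis.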

Model simulations have also revealed that passive translation, unlike passive tilt, should induce an otolith error. This otolith error also feeds into the tilt internal variable (Figure 10, somatogravic feedback) and is responsible for the illusion of tilt during sustained passive linear acceleration (somatogravic effect; Graybiel, 1952). Therefore, as summarized in Figure 10 (green lines), both canal and otolith errors should feed back onto the tilt internal variable. The canal error should drive modulation during tilt, whereas the otolith error should drive modulation during translation. In support of these predictions, we have demonstrated that tilt-selective Purkinje cells also modulate during translation, with a gain and phase consistent with the simulated otolith-driven feedback (Laurens et al., 2013b). Thus, both of these feedback error signals might be carried by caudal vermis Purkinje cells, and future experiments should address these predictions.

Note that semicircular canal errors must be spatially transformed in order to produce an appropriate tilt feedback. Indeed, converting a rotation into head tilt requires taking into account the angle between the rotation axis and earth-vertical. This transformation is represented by a block marked ‘3D’ in Figure 10 (see also Supplementary methods, ‘Three-Dimensional Kalman filter’). Importantly, we have established (Laurens et al., 2013b) that tilt-selective Purkinje cells encode spatially transformed rotation signals, as predicted by theory. In fact, we have demonstrated that tilt-selective Purkinje cells do not simply modulate during vertical canal stimulation, but also carry the tilt signal during off-vertical axis yaw rotations (Laurens et al., 2013b).
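The spatial transformation can be sketched with the standard rigid-body relation for a space-fixed vector expressed in head coordinates, dG/dt = G × Ω. The axis convention (head z-axis aligned with gravity when upright), the unit gravity vector and the function name `tilt_velocity` are assumptions made for illustration, not the notation of the Supplementary methods.

```python
import numpy as np

def tilt_velocity(gravity_head, omega_head):
    """Rate of change of the gravity vector in head coordinates, dG/dt = G x Omega."""
    return np.cross(gravity_head, omega_head)

yaw = np.array([0.0, 0.0, 1.0])            # rotation about the head z-axis (rad/s)
pitch = np.array([0.0, 1.0, 0.0])          # rotation about the head y-axis (rad/s)

g_upright = np.array([0.0, 0.0, -1.0])     # head upright: gravity along the head z-axis
theta = np.deg2rad(30.0)                   # head tilted 30 deg away from earth-vertical
g_tilted = np.array([np.sin(theta), 0.0, -np.cos(theta)])

print(tilt_velocity(g_upright, yaw))       # yaw about earth-vertical: no tilt signal
print(tilt_velocity(g_upright, pitch))     # pitch while upright: tilt signal
print(tilt_velocity(g_tilted, yaw))        # off-vertical axis yaw: tilt signal
```

In this sketch the same yaw rotation produces no tilt velocity when its axis is aligned with gravity, and a tilt velocity proportional to the sine of the tilt angle when it is not. The example shows why a tilt signal cannot be obtained from vertical canal activation alone.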

In this respect, it is important to emphasize that truly tilt-selective neurons exclusively encode changes in orientation relative to gravity, rather than being generically activated by vertical canal inputs. Thus, it is critical that this distinction is made experimentally using three-dimensional motion (see Laurens et al., 2013b; Laurens and Angelaki, 2015). Whereas 3D rotations have indeed been used to identify tilt-selective Purkinje cells in the vermis (Laurens et al., 2013b; Yakusheva et al., 2007), this is not true for other studies. For example, Siebold et al. (1997, 1999, 2001), Laurens and Angelaki (2015) and Zhou et al. (2006) have reported tilt-modulated cells in the rFN and VN, respectively, but because these neurons were not tested in three dimensions, the signals carried by these neurons remain unclear.