Bayesian inference of kinetic schemes for ion channels by Kalman filtering

Abstract

Inferring adequate kinetic schemes for ion channel gating from ensemble currents is a daunting task due to limited information in the data. We address this problem by using a parallelized Bayesian filter to specify hidden Markov models for current and fluorescence data. We demonstrate the flexibility of this algorithm by including different noise distributions. Our generalized Kalman filter outperforms both a classical Kalman filter and a rate equation approach when applied to patch-clamp data exhibiting realistic open-channel noise. The derived generalization also enables inclusion of orthogonal fluorescence data, making unidentifiable parameters identifiable and increasing the accuracy of the parameter estimates by an order of magnitude. By using Bayesian highest credibility volumes, we found that our approach, in contrast to the rate equation approach, yields a realistic uncertainty quantification. Furthermore, the Bayesian filter delivers negligibly biased estimates for a wider range of data quality. For some data sets, it identifies more parameters than the rate equation approach. These results also demonstrate the power of assessing the validity of algorithms by Bayesian credibility volumes in general. Finally, we show that our Bayesian filter is more robust against errors induced by either analog filtering before analog-to-digital conversion or by limited time resolution of fluorescence data than a rate equation approach.

Editor's evaluation

The authors develop a Bayesian approach to modeling signals arising from ensembles of ion channels that can incorporate multiple simultaneously recorded signals such as fluorescence and ionic current. For simulated data from a simple ion channel model where ligand binding drives pore opening, they show that their approach enhances parameter identifiability and/or estimates of parameter uncertainty over more traditional approaches. The developed approach provides a valuable tool for modeling macroscopic time series data including data with multiple observation channels.

https://doi.org/10.7554/eLife.62714.sa0

Introduction

Ion channels are essential proteins for the homeostasis of an organism. Disturbance of their function by mutations often causes severe diseases, such as epilepsy (Oyrer et al., 2018; Goldschen-Ohm et al., 2010), sudden cardiac death (Clancy and Rudy, 2001), or sick sinus syndrome (Verkerk and Wilders, 2014), indicating a medical need (Goldschen-Ohm et al., 2010) to gain further insight into the biophysics of ion channels. The gating of ion channels is usually interpreted by kinetic schemes which are inferred either from macroscopic currents with rate equations (REs) (Colquhoun and Hawkes, 1995b; Celentano and Hawkes, 2004; Milescu et al., 2005; Stepanyuk et al., 2011; Wang et al., 2012) or from single-channel currents using dwell time distributions (Neher and Sakmann, 1976; Colquhoun et al., 1997a; Horn and Lange, 1983; Qin et al., 1996; Epstein et al., 2016; Siekmann et al., 2016) or hidden Markov models (HMMs) (Chung et al., 1990; Fredkin and Rice, 1992; Qin et al., 2000; Venkataramanan and Sigworth, 2002). An HMM consists of a discrete set of metastable states. Changes of their occupation occur as random events over time. Each state is characterized by transition probabilities, related to transition rates, and a probability distribution of the observed signal (Rabiner, 1989). It is becoming increasingly clear that the use of Bayesian statistics in HMM estimation constitutes a major advantage (Ball et al., 1999; De Gunst et al., 2001; Rosales et al., 2001; Rosales, 2004; Gin et al., 2009; Siekmann et al., 2012; Siekmann et al., 2011; Hines et al., 2015; Sgouralis and Pressé, 2017b; Sgouralis and Pressé, 2017a; Kinz-Thompson and Gonzalez, 2018).
In ensemble patches, simultaneous orthogonal fluorescence measurement of either conformational changes (Zheng and Zagotta, 2000; Taraska and Zagotta, 2007; Taraska et al., 2009; Bruening-Wright et al., 2007; Kalstrup and Blunck, 2013; Kalstrup and Blunck, 2018; Wulf and Pless, 2018) or ligand binding itself (Biskup et al., 2007; Kusch et al., 2010; Kusch et al., 2011; Wu et al., 2011) has increased insight into the complexity of channel activation.

Currently, a Bayesian estimator that can collect information from cross-correlations and time correlations inherent in multi-dimensional signals of ensembles of ion channels is still missing. Traditionally, macroscopic currents are analyzed with solutions of REs which, when fitted to the data, yield a point estimate of the rate matrix or its eigenvalues (Colquhoun et al., 1997a; Sakmann and Neher, 2013; d’Alcantara et al., 2002; Milescu et al., 2005; Wang et al., 2012). The RE approach is based on a deterministic differential equation derived by averaging the chemical master equation (CME) for the underlying kinetic scheme (Kurtz, 1972; Van Kampen, 1992; Jahnke and Huisinga, 2007). Its accuracy can be improved by processing the information contained in the intrinsic noise (stochastic gating and binding) (Milescu et al., 2005; Munsky et al., 2009). Nevertheless, no deterministic approach uses the information of the time- and cross-correlations of the intrinsic noise. These deterministic approaches are asymptotically valid for an infinite number of channels. Thus, a time trace with a finite number of channels contains, strictly speaking, only one independent data point. Previous rigorous attempts to incorporate the autocorrelation of the intrinsic noise of current data into the estimation (Celentano and Hawkes, 2004) suffer from cubic computational complexity (Stepanyuk et al., 2011) in the number of data points, rendering the algorithm non-optimal or even impractical for a Bayesian analysis of larger data sets. To understand this, note that a maximum likelihood (ML) optimization usually takes several orders of magnitude fewer likelihood evaluations to converge than the number of posterior evaluations needed when one samples the posterior. One Monte Carlo iteration (Betancourt, 2017) evaluates the posterior distribution and its derivatives many times to propose one sample from the posterior.
Stepanyuk suggested an algorithm (Stepanyuk et al., 2011; Stepanyuk et al., 2014) which derives from the algorithm of Celentano and Hawkes, 2004 but evaluates the likelihood more quickly. Under certain conditions, Stepanyuk’s algorithm can be faster than the Kalman filter (KF) (Moffatt, 2007). The algorithm by Milescu et al., 2005 achieves its superior computation time efficiency at the cost of ignoring the time correlations of the fluctuations. A further argument for our approach, independent of the Bayesian context, is investigated in this paper: the KF is the minimal variance filter (Anderson and Moore, 2012). Instead of strong analog filtering of currents, which reduces the noise but inevitably distorts the signal (Silberberg and Magleby, 1993), we suggest applying the KF with a higher analyzing frequency to minimally filtered data.

Phenomenological difference between an RE approach and our Bayesian filter

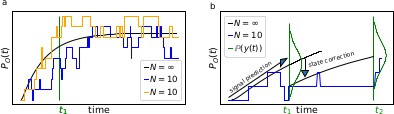

The first-order Markov property of the data requires the algorithm to follow a recursive scheme of prediction of the signal (by the model) and correction (by the data).

(a) Idealized patch-clamp (PC) data in the absence of instrumental noise for either ten (colored) or an infinite number of channels generating the mean time trace (black). The fluctuations with respect to the mean time trace (black) reveal autocorrelation. (b) Conceptual idea of the Kalman filter (KF): the stochastic evolution of the ensemble signal is predicted and the prediction model is updated recursively.

Two major problems for parameter inference for the dynamics of the ion channel ensemble are: (I) currents are only low-dimensional observations (e.g. one dimension for patch-clamp or two for cPCF) of a high-dimensional process (dimension being the number of model states) blurred by noise, and (II) the fluctuations due to the stochastic gating and binding process cause autocorrelation in the signal. Traditional analyses of macroscopic PC data (and also of related fluorescence data) by the RE approach, e.g. Milescu et al., 2005, ignore the long-lasting autocorrelations of the deviations (blue and orange curves in Box 1—figure 1a) from the mean time trace (black) that occur in real data measured from a finite ensemble. To account for the autocorrelation in the signal, an optimal prediction (Box 1—figure 1b) of the future signal distribution should use the measurement from the current time step t1 to update the belief about the underlying hidden ensemble state. Based on stochastic modelling of the time evolution of the channel ensemble, it then predicts the signal distribution at the next time step.

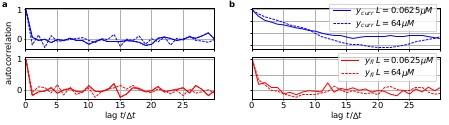

The residuals between model prediction and data reveal long autocorrelations if the analysis algorithm ignores the first-order Markov property.

(a) Autocorrelation of the residuals of the currents (blue) and of the fluorescence (red) for two ligand concentrations after the data have been analyzed with the KF approach. (b) Autocorrelation of the residuals after analyzing with the RE approach.

To demonstrate how differently the two algorithms analyze the data, we compute the autocorrelation of the residuals of the data. After the analysis with either the RE approach or the KF, we can construct from the model, with the mean predicted signal (see Eq. 4 for the definition of the observation matrix) and the predicted standard deviation, the normalized residual time trace of the data: the difference between each data point and the mean predicted signal, divided by the predicted standard deviation.

When filtering (fitting) with the KF (given the true kinetic scheme), one expects to find a white-noise process for the residuals. Plots of the autocorrelation function of both signal components (Box 1—figure 2a) confirm our expectation. The estimated autocorrelation vanishes after one lag time (the interval between sampling points), which means that the residuals are indeed a white-noise process. In contrast, the residuals derived from the RE approach (Box 1—figure 2b) display long-lasting periodic autocorrelations.
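The whiteness check described above can be illustrated with a minimal sketch. It does not use the simulated channel data: a white-noise sequence stands in for residuals of a filter that accounts for the Markov property, and a hypothetical AR(1) sequence (coefficient 0.9) stands in for residuals with long-lasting correlations. The function name `autocorrelation` and all numerical values are assumptions made for illustration.

```python
import random


def autocorrelation(residuals, lag):
    """Sample autocorrelation of a residual trace at a given lag."""
    n = len(residuals)
    mean = sum(residuals) / n
    centered = [r - mean for r in residuals]
    variance = sum(c * c for c in centered) / n
    covariance = sum(centered[t] * centered[t + lag] for t in range(n - lag)) / n
    return covariance / variance


rng = random.Random(0)  # fixed seed for reproducibility
n_points = 5000

# Stand-in for normalized residuals that behave like white noise.
white = [rng.gauss(0.0, 1.0) for _ in range(n_points)]

# Stand-in for residuals with memory: hypothetical AR(1) process.
phi = 0.9
correlated = [0.0]
for _ in range(n_points - 1):
    correlated.append(phi * correlated[-1] + rng.gauss(0.0, 1.0))

# Columns: lag, autocorrelation of white residuals, of correlated residuals.
for lag in (1, 5, 20):
    print(lag, round(autocorrelation(white, lag), 3),
          round(autocorrelation(correlated, lag), 3))
```

For the white sequence the estimate should scatter around zero at every non-zero lag, whereas the AR(1) stand-in decays only slowly with the lag.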

On the one hand, a complete HMM analysis (forward algorithm) would deliver the most exact likelihood of macroscopic data. On the other hand, the computational complexity of the forward algorithm limits this type of analysis in ensemble patches to no more than a few hundred channels per time trace (Moffatt, 2007). To tame the computational complexity (Jahnke and Huisinga, 2007), we approximate the solution of the CME with a Kalman filter (KF), thereby remaining in a stochastic framework (Kalman, 1960). This allows us to explicitly model the time evolution of the first two moments (mean value and covariance matrix) of the probability distribution of the hidden channel states. Notably, for linear (first-order or pseudo-first-order) Gaussian system dynamics, the KF is optimal in producing a minimal prediction error for the mean state. KFs have been used previously in several protein expression studies which also demonstrate the connection of the KF to the linear noise approximation (Komorowski et al., 2009; Finkenstädt et al., 2013; Fearnhead et al., 2014; Folia and Rattray, 2018; Calderazzo et al., 2019; Gopalakrishnan et al., 2011).

Our approach generalizes the work of Moffatt, 2007 by including state-dependent fluctuations such as open-channel noise and Poisson noise in additional fluorescence data. A central technical difficulty which we solved is that, due to the state-dependent noise, the central Bayesian update equation loses its analytical solution. We derived an approximation which is correct for the first two moments of the probability distributions. Stochastic rather than deterministic modeling is generally preferable for small systems or non-linear dynamics (Van Kampen, 1992; Gillespie and Golightly, 2012). However, even with simulated data of unrealistically high numbers of channels per patch (more than several thousands within one patch), the KF outperforms the deterministic approach in estimating the model parameters. Moffatt, 2007 already demonstrated the advantage of the KF to learn absolute rates from time traces at equilibrium. Like all algorithms that estimate the variance and the mean (Milescu et al., 2005), the KF can infer the number of channels for each time trace, the single-channel current and, analogously in optical recordings, the mean number of photons from bound ligands per recorded frame. To select models and to identify parameters, stochastic models are formulated within the framework of Bayesian statistics where parameters are assigned uncertainties by treating them as random variables (Hines, 2015; Ball, 2016). In contrast, previous work on ensemble currents combined the KF only with ML estimation (Moffatt, 2007). Difficulties in treating simple stochastic models by ML approaches in combination with the KF (Auger-Méthé et al., 2016), especially with non-observable dynamics, justify the computational burden of Bayesian statistics. Bayesian statistics has an intuitive way to incorporate soft or hard constraints from diverse sources of prior information. Those sources include mathematical prerequisites, other experiments, simulations or theoretical assumptions.
They are applied as additional model assumptions by a prior probability distribution over the possible parameter space. Hence, knowledge of the model parameters prior to the experiment is correctly accounted for in the analysis of the new data. Alternatively, some of these benefits of prior knowledge can be incorporated by penalized maximum likelihood (Salari et al., 2018; Navarro et al., 2018). Bayesian inference provides modeling tools that outmatch point estimates: First, the Bayesian approach is still applicable in situations where parameters are not identifiable (Hines et al., 2014; Middendorf and Aldrich, 2017b) or posteriors are non-Gaussian, whereas ML fitting ceases to be valid (Calderhead et al., 2013; Watanabe, 2007). Second, a Bayesian approach provides superior model selection tools for singular models such as HMMs or KFs (Gelman et al., 2014). Third, Bayesian statistics has a correct uncertainty quantification (Gillespie and Golightly, 2012) based on the data and the prior for the statistical problem. In contrast, ML or maximum posterior approaches lack uncertainty quantification based on one data set (Joshi et al., 2006). Only under optimal conditions does their uncertainty quantification become equivalent to Bayesian credibility volumes (Jaynes and Kempthorne, 1976). This study focuses on the effects on the posterior due to formulating the likelihood via a KF instead of an RE approach and the benefits of adding a second dimension of observation. We compare the performance of our algorithm against the gold standards in four different aspects: (I) the relative distance of the posterior to the true values, (II) the uncertainty quantification, here in the form of the shape of the posterior, (III) parameter identifiability, and (IV) robustness against typical misspecifications of the likelihood (such as ignoring that currents are filtered or that the integration time of fluorescence data points is finite) of real experimental data.

Results and discussion

Simulation of ligand-gated ion-channel data

Here we treat an exemplary ligand-gated channel with two ligand binding steps and one open-closed isomerization described by an HMM (see Figure 1a). For this model, confocal patch-clamp fluorometry (cPCF) data were simulated: time courses of ligand binding and channel current upon concentration jumps were generated (see Appendix 5 and Materials and methods section). Idealized example data with added white noise are shown in Figure 1b–d. We added realistic instrumental noise to the simulated data (see Appendix 5). A qualitative description of the statistical problem that needs to be addressed when modeling time series data such as the simulated data is outlined in Box 1.

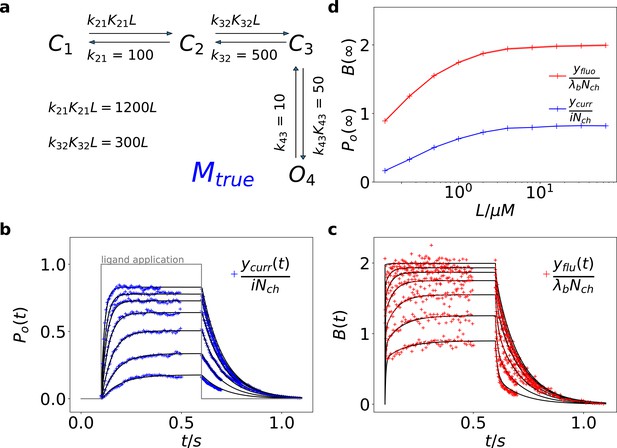

Kinetic scheme and simulated data.

(a) The Markov state model (kinetic scheme) consists of two binding steps and one opening step. The rate matrix is parametrized by the absolute rates , the ratios between on and off rates (i.e. equilibrium constants) and , the ligand concentration in the solution. The units of the rates are and , respectively. The liganded states are C2, C3, O4. The open state O4 conducts a mean single-channel current . Note that the absolute magnitude of the single-channel current is irrelevant for this study; what matters is its relative magnitude compared with and . Simulations were performed with 10 kHz or 100 kHz (for Figures 11 and 12) sampling. The KF analysis frequency fana for cPCF data is in the range of 200-500 Hz, while pure current data are analyzed at 2-5 kHz. (b-c) Normalized time traces of simulated relaxation experiments of ligand concentration jumps with channels, mean photons per bound ligand per frame and single-channel current , open-channel noise with and an instrumental noise with the variance . The current ycurr and fluorescence yflu time courses are calculated from the same simulation. For visualization, the signals are normalized by the respective median estimates of the KF. The black lines are the theoretical open probabilities and the average binding per channel for of the used model. Typically, we used 10 ligand concentrations, which are (0.0625, 0.125, 0.25, 0.5, 1, 2, 4, 8, 16, 64) . (d) Equilibrium binding and open probability as function of the ligand concentration .

Figure 1—source data 1

The example data is provided.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig1-data1-v3.zip

Kalman filter derived from a Bayesian filter

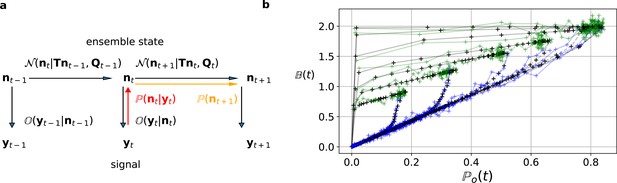

Here and in the Materials and methods section, we derive the mathematical tools to account correctly for the stochastic Markov dynamics of single molecules in the fluctuations of macroscopic signals. The KF is a Bayesian filter (see Materials and methods), that is, a continuous-state HMM with a multivariate normal transition probability (Ghahramani, 1997; Figure 2a). We define the hidden ensemble state vector

which counts the number of channels in each state (see Methods). To make use of the KF, we assume the following general form of the dynamic model: The evolution of is determined by a linear model that is parametrized by the state evolution matrix

where ∼ means sampled from and is a shorthand for the multivariate normal distribution, with the mean μ and the variance-covariance matrix . The state evolution matrix (transition matrix) is related to the rate matrix by the matrix exponential . The mean of the hidden state evolves according to the equation . It is perturbed by normally distributed white process noise with the following properties: The mean value of the noise fulfills and the variance-covariance matrix of the noise is (see Materials and methods Equation 38d, Ball, 2016). In short, Equation 3 defines a Gaussian Markov process.
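As a minimal sketch of the relation between the rate matrix and the state evolution matrix, the following code exponentiates a rate matrix for a linear four-state scheme (C1, C2, C3, O4) and propagates the mean of the hidden state by one sampling interval. The rate values, the sampling interval, the column convention (each column of the rate matrix sums to zero) and the helper names `mat_mul` and `mat_exp` are assumptions made for illustration, not values or code from this study.

```python
def mat_mul(a, b):
    """Product of two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def mat_exp(m, terms=20, squarings=10):
    """Matrix exponential by scaling and squaring with a Taylor series."""
    n = len(m)
    scaled = [[x / 2 ** squarings for x in row] for row in m]
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in mat_mul(term, scaled)]
        result = [[r + t for r, t in zip(r_row, t_row)]
                  for r_row, t_row in zip(result, term)]
    for _ in range(squarings):
        result = mat_mul(result, result)
    return result


# Hypothetical rates in 1/s for C1 <-> C2 <-> C3 <-> O4 at a fixed
# ligand concentration (binding rates already multiplied by concentration).
k12, k21, k23, k32, k34, k43 = 200.0, 50.0, 100.0, 100.0, 400.0, 80.0

# Convention assumed here: entry [i][j] is the rate from state j to state i,
# so each column sums to zero and the mean obeys d<n>/dt = K <n>.
K = [
    [-k12, k21, 0.0, 0.0],
    [k12, -(k21 + k23), k32, 0.0],
    [0.0, k23, -(k32 + k34), k43],
    [0.0, 0.0, k34, -k43],
]

dt = 1e-3  # sampling interval in s (assumed)
T = mat_exp([[x * dt for x in row] for row in K])

mean_now = [1000.0, 0.0, 0.0, 0.0]  # all channels start in C1
mean_next = [sum(T[i][j] * mean_now[j] for j in range(4)) for i in range(4)]
print([round(x, 2) for x in mean_next])
```

Under this convention every column of the resulting transition matrix sums to one, so the total number of channels is conserved by the mean evolution.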

The first-order hidden Markov structure is explicitly used by the Bayesian filter.

The filter can be seen as a continuous-state analog of the forward algorithm. (a) Graphical model of the conditional dependencies of the stochastic process. Horizontal black arrows represent the conditional multivariate normal transition probability of a continuous-state Markov process. Notably, it is which is treated as the Markov state by the KF. The transition matrix and the time-dependent covariance characterise the single-channel dynamics. The vertical black arrows represent the conditional observation distribution . The observation distribution summarizes the noise of the experiment, which in the KF is assumed to be multivariate normal. Given a set of model parameters and a data point , the Bayesian theorem allows calculating the posterior in the correction step (red arrow). The posterior is propagated linearly in time by the model, predicting a state distribution (orange arrow). The propagated posterior predicts, together with the observation distribution, the mean and covariance of the next observation. Thus, it creates a multivariate normal likelihood for each data point in the observation space. (b) Observation space trajectories of the predictions and data of the binding per channel vs. open probability for different ligand concentrations. The curves are normalized by the median estimates of , and and the ratio of open channels which approximates the open probability . The black crosses represent the predicted mean signal , which is calculated by multiplying the observation matrix with the mean predicted state . For clarity, we used the mean value of the posterior of the KF. The green and blue trajectories represent the part of the time traces with after the jump to non-zero ligand concentration and after jumping back to zero ligand concentration in the bulk, respectively.

The observations depend linearly on the hidden state . The linear map is determined by an observation matrix .

The noise of the measurement setup (Appendix 5 and Equation 43) is modeled as a random perturbation of the mean observation vector. The noise fulfills and . Equation 4 defines the state-conditioned observation distribution (Figure 2a). The set of all measurements up to time is defined by . If the system strictly obeys Equation 3 and Equation 4, then the KF is optimal in the sense that it is the minimum variance filter of that system (Anderson and Moore, 2012). If the distributions of ν and ω are not normal, the KF is still the minimum variance filter in the class of all linear filters, but there might be better non-linear filters. In case of colored noise ν and ω, the filtering equations (see Materials and methods) can be reformulated by state augmentation or measurement-time-difference techniques (Chang, 2014). For each element in a sequence of hidden states and for a fixed set of parameters , an algorithm based on a Bayesian filter (Figure 2a) explicitly exploits the conditional dependencies of the assumed Markov process. A Bayesian filter recursively predicts prior distributions for the next

given what is known about due to . The KF as a special Bayesian filter assumes that the transition probability is multivariate normal according to Equation 3

Note that Equation 6 is a central approximation of the KF. While the exact transition distribution of an ensemble of ion channels is the generalized-multinomial distribution (Methods Equation 32), the quality of normal approximations to multinomial (Milescu et al., 2005) or generalized-multinomial (Moffatt, 2007) distributions depends on the number of ion channels in the patch and on the position of the probability vector in the simplex space. The difference between the log-likelihoods of the true generalized-multinomial dynamics and an approximation of the type of Equation 6 scales as (Moffatt, 2007). As a rule of thumb, one should be careful with both algorithms for time traces with . Below or even inside this interval there are better-suited concepts, such as the forward algorithm or even particle filters (Golightly and Wilkinson, 2011; Gillespie and Golightly, 2012), which avoid the normal approximation.

Each prediction of (Equation 6) is followed by a correction step,

which incorporates the current data point into the estimate, based on the Bayesian theorem (Chen, 2003). Additionally, the KF assumes (Anderson and Moore, 2012; Moffatt, 2007) a multivariate normal observation distribution

If the initial prior distribution is multivariate normal, then due to the mathematical properties of normal distributions the prior and posterior in Equation 8 become multivariate normal (Chen, 2003) for each time step. In this case, one can derive algebraic equations for the prediction (Materials and methods Equation 37, Equation 38d) and correction (Materials and methods Equation 58 and Equation 58) of the mean and covariance. The algebraic equations originate from the fact that a normal prior is the conjugate prior for the mean value of a normal likelihood. Due to the recursiveness of its equations, the KF has a time complexity that is linear in the number of data points, allowing a fast algorithm. The denominator of Equation 8 is the normally distributed marginal likelihood for each data point, which constructs by

a product marginal likelihood of normal distributions of the whole time trace of length for the KF. For the derivation of and see Materials and methods (Equation 38d) and Equation 43. is the covariance of the prior distribution over before the KF took into account. The likelihood for the data allows ascribing a probability to the parameters , given the observed data (Methods Equation 20). An illustration of the operation of the KF on the observation space is given in Figure 2b. The predicted mean signal corresponds to binding degree and open probability . These values are plotted as vector trajectories.
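The recursion of prediction, correction and incremental likelihood can be sketched for the simplest possible case, a scalar hidden state with constant additive noise. This is a textbook scalar Kalman filter and not the generalized filter derived in this work. The names `kalman_step` and `kalman_log_likelihood` and the symbols `a` (state evolution), `q` (process noise variance), `h` (observation factor) and `r` (observation noise variance) are assumptions made for illustration.

```python
import math


def kalman_step(mean, var, y, a, q, h, r):
    """One prediction and correction step of a scalar Kalman filter.

    Returns the posterior mean, the posterior variance and the log of the
    normal marginal likelihood of the data point y.
    """
    # Prediction: propagate the previous posterior through the linear model.
    mean_pred = a * mean
    var_pred = a * var * a + q
    # Predicted observation distribution (normal marginal likelihood).
    y_pred = h * mean_pred
    s = h * var_pred * h + r
    log_lik = -0.5 * (math.log(2.0 * math.pi * s) + (y - y_pred) ** 2 / s)
    # Correction: Bayesian update of the state with the data point.
    gain = var_pred * h / s
    mean_post = mean_pred + gain * (y - y_pred)
    var_post = (1.0 - gain * h) * var_pred
    return mean_post, var_post, log_lik


def kalman_log_likelihood(data, mean0, var0, a, q, h, r):
    """Sum of incremental log-likelihoods; the cost is linear in len(data)."""
    mean, var, total = mean0, var0, 0.0
    for y in data:
        mean, var, log_lik = kalman_step(mean, var, y, a, q, h, r)
        total += log_lik
    return total


print(kalman_step(mean=0.0, var=1.0, y=2.0, a=1.0, q=0.0, h=1.0, r=1.0))
```

Because each data point is visited once and only the mean and variance are carried forward, the total log-likelihood is obtained in a single pass over the time trace.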

The standard KF (Moffatt, 2007; Anderson and Moore, 2012; Chen, 2003) has additive constant noise in the observation model. Thus, in this case a constant variance term is added in Equation 9 to the aleatory variance which, as mentioned above, originates (Equation 38d) from the fact that we do not know the true system state . For signals with Poisson-distributed photon counting or open-channel noise, we need to generalize the noise model to account for additional white-noise fluctuations with state-dependent variance. For instance, in single-channel currents additional noise is often observed whose variance is referred to by . In macroscopic currents this additional noise can be modeled by a term , causing state-dependency of our noise model.

The second noise term is defined in terms of the first two moments and . To the best of our knowledge such a state-dependent noise makes the integration of the denominator of Equation 8 (which is also the incremental likelihood) intractable

This is because the state distribution as the prior also influences the variance parameter of the likelihood, which means that the conjugacy property is lost. While a normal distribution is the conjugate prior of the mean of a normal likelihood, it is not the conjugate prior for the variance. However, by applying the theorem of total variance decomposition (Equation 46a), we deduce a normal approximation to Equation 8 and to the related problem of Poisson-distributed noise in fluorescence data (Equation 57, Equation 55a). By computing the mean and the variance or covariance matrix of the signal, we can reformulate the noise model to fit the form of the traditional KF framework. Note that the derived equations for the covariance matrix are still exact for the more general noise model. Mean and covariance just do not form a set of sufficient statistics anymore.
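The role of the total variance decomposition can be checked numerically in a minimal sketch. It assumes a single time point, a binomially distributed number of open channels, and a noise variance that is the sum of a constant instrumental term and an open-channel term proportional to the number of open channels. This functional form and all numerical values are assumptions for illustration and are not taken from the Methods of this study.

```python
import random

rng = random.Random(1)  # fixed seed for reproducibility

# Hypothetical values: channel number, open probability, single-channel
# current, open-channel noise and instrumental noise (standard deviations).
n_channels, p_open = 50, 0.3
i_single, sigma_op, sigma_inst = 1.0, 0.5, 2.0


def simulate_current():
    """Draw one current sample with state-dependent noise variance."""
    n_open = sum(rng.random() < p_open for _ in range(n_channels))
    noise_sd = (sigma_inst ** 2 + sigma_op ** 2 * n_open) ** 0.5
    return i_single * n_open + rng.gauss(0.0, noise_sd)


samples = [simulate_current() for _ in range(20000)]
mean = sum(samples) / len(samples)
empirical_var = sum((y - mean) ** 2 for y in samples) / (len(samples) - 1)

# Law of total variance: Var[y] = E[Var[y | n]] + Var[E[y | n]].
expected_noise_var = sigma_inst ** 2 + sigma_op ** 2 * n_channels * p_open
var_of_mean_signal = i_single ** 2 * n_channels * p_open * (1.0 - p_open)
predicted_var = expected_noise_var + var_of_mean_signal

print(round(empirical_var, 2), round(predicted_var, 2))
```

The first two moments of the signal are reproduced by the decomposition even though the sampled signal is no longer exactly normal, which is the sense in which a moment-matched normal approximation can be used.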

Our derivation is not limited to ligand-gated ion channels. For example, when investigating voltage-gated channels, the corresponding noise model can be easily adapted. This also holds when using the P/n protocol, for which the noise model resembles that of the additional variance in the fluorescence signal. The additional variance is induced because the mean signal from the ligands swimming in the bulk (Materials and methods Equation 43, Appendix 5) is eliminated by subtracting a scaled mean reference signal which itself has an error. This manipulation adds variance to the resulting signal, comparable to the P/n protocol. Other experimental challenges, for example series resistance compensation promoting oscillatory behavior of the amplifier, certainly deserve advanced treatment. Nevertheless, for voltage-clamp experiments with a rate equation approach it has also become clear (Lei et al., 2020) that modeling of the actual experimental limitations, including series resistance, membrane and pipette capacitance, voltage offsets, imperfect compensations by the amplifier, and leak currents, is necessary for consistent kinetic scheme inference.

The Bayesian posterior distribution

encodes all information from model assumptions and experimental data used during model training (see Materials and methods). A full Bayesian inference is usually not an optimization (finding the global maximum or mode of the posterior or likelihood) but calculates all sorts of quantities derived from the posterior distribution such as mean values of any function including the mean value or covariance matrix of the parameters themselves or even the likelihood of the data.

Besides the covariance matrix of the parameters to express parameter uncertainty, the posterior allows one to calculate a credibility volume. The smallest volume that encloses a probability mass of

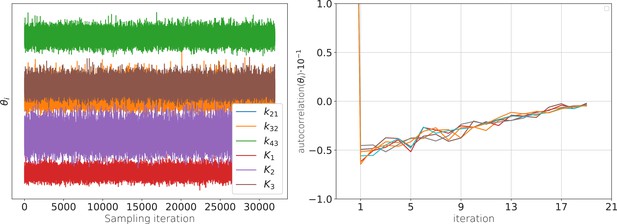

is called the Highest Density Credibility Volume/Interval (HDCV/HDCI). Those credibility volumes should not be confused with confidence volumes, although under certain conditions they can become equivalent. Given that our model sufficiently captures the true process, the true values will be inside that volume with a probability . Unfortunately, there is typically no analytical solution to Equation 12. However, it can be solved numerically with Monte Carlo techniques, which makes it possible to calculate all quantities related to Equation 13 and Equation 14. Our algorithm uses automatic differentiation of the statistical model to sample from the posterior (Appendix 1—figure 1a) via Hamiltonian Monte Carlo (HMC) (Betancourt, 2017), see Appendix 7, as provided by the Stan software (Hoffman and Gelman, 2014; Gelman et al., 2015).
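For a single parameter, a highest density credibility interval can be estimated directly from posterior samples. The following minimal sketch searches for the shortest interval that contains a given fraction of the sorted samples. It covers only the one-dimensional case and is not part of the Stan interface; the function name `highest_density_interval` and the toy sample values are assumptions for illustration.

```python
import math


def highest_density_interval(samples, mass=0.95):
    """Shortest interval containing at least `mass` of the samples."""
    ordered = sorted(samples)
    n = len(ordered)
    n_inside = max(1, math.ceil(mass * n))
    best_low, best_high = ordered[0], ordered[n_inside - 1]
    # Slide a window of n_inside consecutive samples and keep the narrowest.
    for start in range(1, n - n_inside + 1):
        low, high = ordered[start], ordered[start + n_inside - 1]
        if high - low < best_high - best_low:
            best_low, best_high = low, high
    return best_low, best_high


posterior_samples = [0.0, 2.0, 2.5, 3.0, 3.25, 9.0]  # toy values
print(highest_density_interval(posterior_samples, mass=0.5))
```

In practice the input would be the Monte Carlo samples of one marginal posterior; for a credibility volume in several dimensions a density-based criterion is needed instead of a sliding window.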

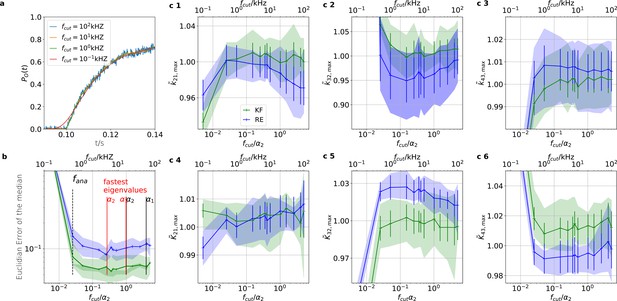

Benchmark for PC data against the gold standard algorithms

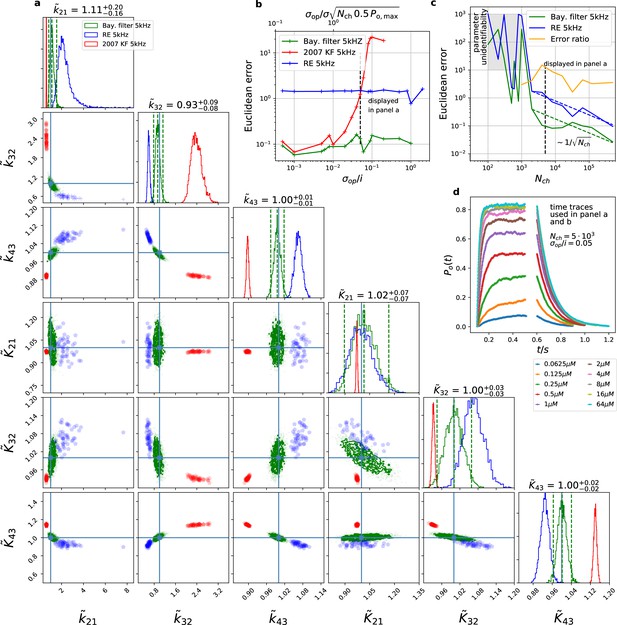

We compare the posterior distribution (Figure 3) of our algorithm against Bayesian versions of the deterministic (Milescu et al., 2005) and stochastic (Moffatt, 2007) algorithms, which we consider as the gold standard algorithms for macroscopic patch-clamp data. Simulated currents of a patch with are shown in Figure 3d. The resulting posteriors (Figure 3a) show that the maxima and mean values of both former algorithms are further away from the true parameter values. For example, the relative errors of the maximum of the posterior are for Milescu et al., 2005 and for Moffatt, 2007. The four other parameters, including the three equilibrium constants, are less problematic as judged by their relative error. Additionally, if one judges the performance not only by the relative distance of the maximum (or some other significant point) of the posterior but considers the spread of the posterior as well, it becomes apparent that the marginal posteriors of both former algorithms fail to cover the true values within at least the reasonable parts of their tails. Accordingly, for maximum likelihood inferences the true value would be far outside the estimated confidence interval. For the RE approach, only the marginal posterior of is nicely centered over the true values, and the marginal of could be considered to cover the true value within a reasonable part of the distribution. Uncertainty quantification is investigated in more detail further down (Figures 4-9). Note that in Figure 3a, parameter unidentifiability by heavy tails or multiple maxima of the posterior distribution or by (anti-)correlation is easily visible as patterns that are not axially symmetric.

The Bayesian filter overcomes the sensitivity to varying (open-channel) noise of the classic Kalman filter and does not show the overconfidence of the RE-approach.

Overall it shows the highest accuracy and the posterior covers the true values. The classical deterministic RE (blue), 2007 Kalman filter (red) and our Bayesian filter (green) are implemented as a full Bayesian version and the obtained posterior distributions are compared. For all PC data sets in the figure the analysing frequencies ranges within 2-5. (a) Posterior of the parameters for the 3 algorithms for the data set displayed in panel d. The blue crosses indicate the true values. All samples are normalized by their true values which is indicated by the ∼ above the parameters. For clarity, we only show a fraction of the samples of the posterior for blue and red. b, Effect of open channel noise: The Euclidean error for all three approaches is plotted vs. (low axis).The upper axis displays the ratio of the ‘typical’ standard deviation of the open channel excess noise of the ensemble of channels to the standard deviation of instrumental noise. c, Influence of patch size: Scaling of the Euclidean error vs. follows indicated by the dashed lines for for the RE and the Bayesian filter approach. The data indicates a constant error ratio (orange) for large . For samples of the posteriors for many data sets suggest an improper posterior. An instrumental noise of and was used. (d) The time traces on which the posteriors of panel a are based (for the ligand concentrations see Figure 1). Panel b used the same data too, but σ and were varied.

Figure 3—source data 1

The data folder includes all 15 sets of time traces.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig3-data1-v3.zip

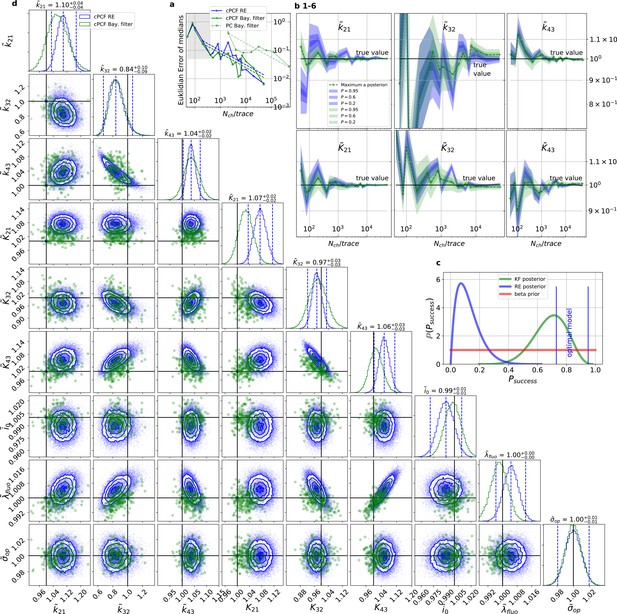

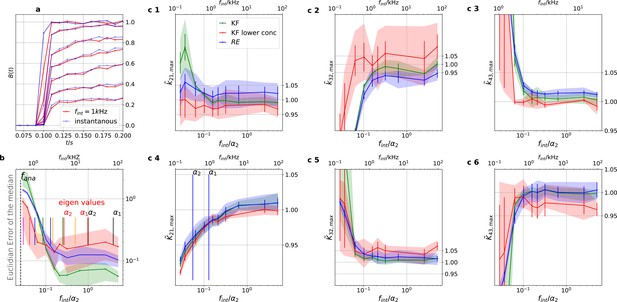

For multidimensional data (cPCF) the RE approach almost approaches the accuracy (Euclidean error) of the Bayesian Filter.

However, only the Bayesian filter covers the true value in a reasonable HDCV while RE based posteriors are too narrow. All samples are normalized by their true values which is indicated by the ∼ above the parameters. (a) Euclidean errors of the maximum for the rate and equilibrium constants obtained by the KF (green) and from the REs (blue) are plotted against for , and . Both algorithms scale like (dashed lines) for larger which is the expected scaling. For smaller (gray range) the error is roughly the same, indicating that limitations of the normal approximation to the multinomial distribution dominate the overall error in this regime. The combination of fluorescence and current data (cPCF) decreases the Euclidean error for both approaches compared to current data alone (PC). (b) HDCI and the mode of the 3 and 3 plotted vs. revealing that the maximum is a consistent estimator (converges in distribution to the true value with increasing data quality). While the 0.95-HDCI of the KF (green) usually includes the true value, the HDCI of the RE (blue) is too narrow and, thus, the real values are frequently not included. (c) Bayesian estimation of the true success probability for the event that all six 0.95-HDCIs include the respective true values at the same time, using a binomial likelihood. Since the data sets have different and the model approximations become better with increasing , we use a cut-off for . (d) Comparison of 1-D and combinations of 2-D marginal posteriors of the parameters of interest for both algorithms calculated from a simulation. Blue lines indicate the true value. In two dimensions the disproportion between the deviation of the mode and the spread of the RE posterior (blue) is worsened, while the KF posterior (green) includes the true values with more reasonable probability mass.

Figure 4—source data 1

6µMol.

Each of the source data folders contains for a specific ligand concentration the time traces of cPCF data for all .

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data1-v3.zip

Figure 4—source data 2

64µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data2-v3.zip

Figure 4—source data 3

32µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data3-v3.zip

Figure 4—source data 4

8µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data4-v3.zip

Figure 4—source data 5

4µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data5-v3.zip

Figure 4—source data 6

1µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data6-v3.zip

Figure 4—source data 7

2µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data7-v3.zip

Figure 4—source data 8

025µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data8-v3.zip

Figure 4—source data 9

05µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data9-v3.zip

Figure 4—source data 10

00625µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data10-v3.zip

Figure 4—source data 11

003125µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data11-v3.zip

Figure 4—source data 12

0125µMol.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig4-data12-v3.zip

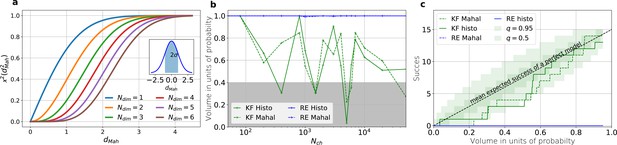

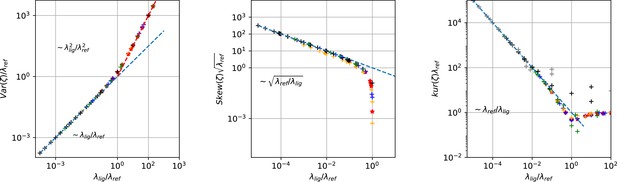

The HDCV of the posterior of the KF follows the requested binomial statistics while the HDCVs of the RE approach are too narrow.

(a) Cumulative χ-square distribution vs. the Mahalanobis distance . The y axis denotes the probability mass which is counted by moving away from the maximum before an ellipsoid with distance is reached. The different colours represent the changes of the cdf with an increasing number of rate parameters. The blue cdf at represents how much probability mass can be found from , see inset. In one dimension, we can expect to find the true value within around the mean with the usual probability of for univariate normally distributed random variables. The six parameters (brown) of the full rate matrix will almost certainly be beyond . The higher the dimension of the space, the less important the maximum of the probability density becomes for the typical set, which is by definition the region where the probability mass resides. The mathematical reason for this is that the probability mass is the integrated product of volume and probability density. (b) The two methods to count volume in units of probability mass for the KF (green) and the RE (blue). The gray area indicates which data sets are considered a success if one chooses to evaluate a probability mass of 0.4 of each posterior around its mode. All data sets in the white area are considered a failure. For the optimistic noise assumptions , and a mean photon count per bound ligand per frame the RE approach (blue) distributes the probability mass such that the HDCV never includes the true rate matrix. From both HDCV estimates of the KF posterior (green curves) include the true value within a reasonable volume and show a similar behaviour. (c) Binomial success statistics of HDCV to cover the true value vs. the expected probability constructed from the data of (b). Calculated for and and and minimal background noise.

Even for the highest tested experimental noise the RE approach does not follow the required binomial statistics, generating an underestimated uncertainty.

(a) Binomial success statistics of HDCV to cover the true value vs. the expected probability. Calculated for and and and a strong background noise. (b) Binomial success statistics of HDCV to cover the true value vs. the expected probability. For and and and a strong background noise. For both algorithms, the adaptation of the sampler of the posterior was more fragile for small , leading to differences in the posterior if the posterior is constructed from different independent sampling chains. Those data sets were then excluded. We assume that these instabilities are induced in both algorithms by the shortcomings of the multivariate normal assumptions. (c) Comparison of the Euclidean error vs. for the pessimistic noise case (solid lines) with Euclidean error for the optimistic noise case (dotted lines).

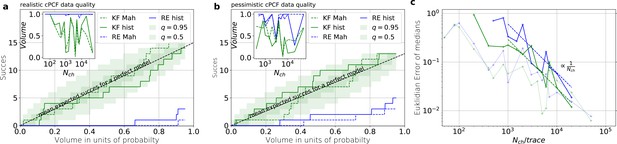

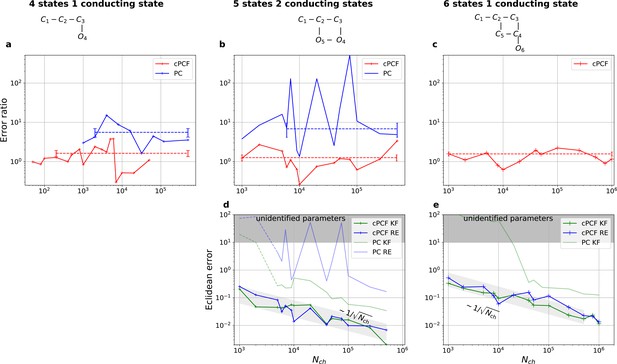

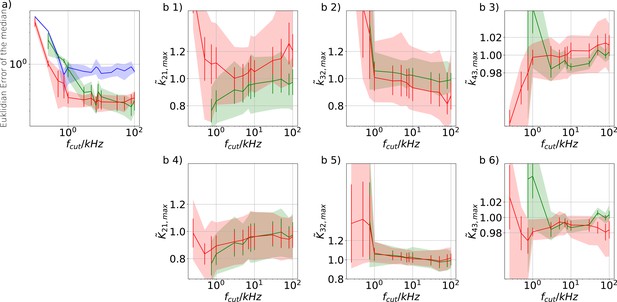

Higher model complexity drastically increases the minimal requirements of the data.

With PC data the RE approach is frequently incapable of identifying all parameters while the Bayesian filter is more robust. cPCF data alleviate the parameter unidentifiabilities for patch sizes for which PC data are insufficient. Each panel column corresponds to a particular true process with increasing complexity from left to right, as indicated by the model schemes on top. Within all kinetic schemes, each transition to the right adds one bound ligand. Each transition to the left is an unbinding step. Vertical transitions are either conformational or opening transitions. Plots in each row share the same y-axis. Each column shares the same abscissa. (a-c) Error ratio for PC data (blue) and cPCF data (red). The dashed lines indicate the mean error ratio under the simplifying assumption that the error ratio does not depend on . The vertical bars are the standard deviations of the mean values. These values were calculated from the Euclidean errors shown in Figures 3c and 4a for a, and panels (d-e), for (b-c), respectively. Results from the KF algorithm (green) and the RE algorithm (blue) are compared for PC (lighter shades) and cPCF (strong lines). The diagonal gray areas indicate a proportionality. For simulating the underlying PC data, we used standard deviations of and and for the cPCF data additionally a ligand of brightness . To facilitate the inference for the two more complex models, we assumed that the experimental noise and the single channel current are well characterized, meaning , and . In the models containing loops (last 2 columns), a prior was used to enforce microscopic-reversibility and set to multiplied by .

Figure 7—source data 1

The folder of the five-state model includes 15 sets of time traces in the interval .

Each of them has 10 ligand concentrations. The number in the file name reports the amount of ion channels in the patch.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig7-data1-v3.zip

Figure 7—source data 2

The folder of the six-state model includes 14 sets of time traces in the interval .

Each of them has 10 ligand concentrations. The number in the file name reports the amount of ion channels in the patch.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig7-data2-v3.zip

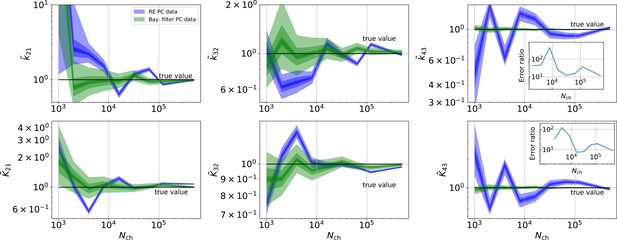

Revisiting the PC data obtained by the 4-state-1-open-state model shows that the KF succeeds in producing realistic uncertainty quantification, while the overconfidence problem (unreliable uncertainty quantification) of the RE approach remains.

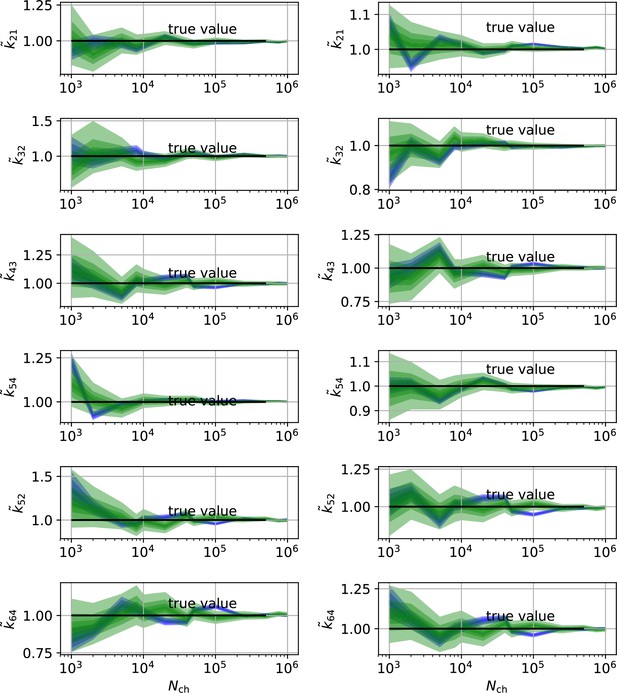

Comparison of a series of HDCIs shown as functions of for each parameter of the rate matrix obtained by the KF (green) and the RE algorithm (blue). The differing shades of green and blue indicate the set of -HDCIs. Only the interval in which all parameters are identified is displayed. The data are taken from the KF vs. RE benchmark of Figures 3c and 7a. The first row corresponds to three rates the second row to the equilibrium constants . All parameters are normalized by their true value. The insets show the error ratios of the respective single parameter estimates. Note that the error ratios on the single-parameter level can even be of the order of magnitude of 10². Thus, they can be much larger than the error ratios calculated from the Euclidean error if the errors of the respective parameters are small compared to other error terms in the Euclidean error (Equation 15).

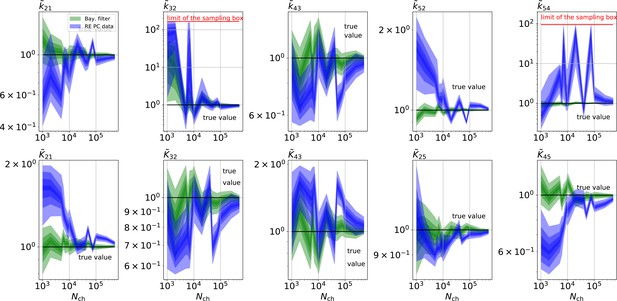

The HDCIs for PC data for a 5-state-2-open-states model show negligible bias for the KF with the true value being included.

In contrast, the HDCI of the RE approach frequently does not include the true value, appears biased in general and frequently leaves certain parameters unidentified. Comparison of a series of -HDCIs as functions of for each parameter of the rate matrix obtained by the KF (green) and the RE algorithm (blue). The HDCIs correspond to the PC data displayed in Figure 7b and d. The first row corresponds to three rates the second row to the equilibrium constants . All parameters are normalized by their true value. is because of the microscopic-reversibility prior a parameter which is strongly dependent on the other rates and ratios. Refer to the caption of Figure 7 for details about the prior that enforces microscopic-reversibility. Thus, the deviations of are inherited from the other parameters. The rate is frequently not identified by the RE approach and only the limits of the sampling box confine the posterior.

To assess the location of the posterior conditioned on , we select the median vector of the marginal posteriors and calculate its Euclidean distance to the true values by:

This defines a single value to judge the overall accuracy of the posterior. Varying reveals the range of the validity (Figure 3b) of the algorithm (red) from Moffatt, 2007. While both stochastic approaches are nearly equivalent for low open-channel noise, the RE (blue) performs consistently poorer. It may seem surprising that even for the two stochastic algorithms start to produce different results. But considering the scaling (Materials and methods Equation 46a) of the total open-channel noise (top axis) from currents of an ensemble patch, one sees that if approaches σ the traditional KF suffers from ignoring state-dependent noise contributions. The lower scale changes with experiments (e.g. and ). In contrast, the upper scale is largely independent of the particular measurements. The two different normalizations indicate that the experimental intuition “Why should I consider the extra noise from the open state of the single channel if only ” is misleading. The small advantage of our algorithm for small over Moffatt, 2007 is due to the fact that we could apply an informative prior in the formulation of the inference problem on by taking advantage of our generalized Bayesian filter (Equation 46a). Further, Figure 3b shows how important it is that the functional form of the likelihood is flexible enough to capture the second-order statistics of the noise in the data.
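As an illustration of this accuracy measure, the following minimal sketch computes the distance of the vector of marginal medians from the true parameter vector. It assumes that each deviation is divided by the corresponding true value, in line with the normalization used in the figures; the exact form of Equation 15 may differ, and the function name and toy numbers are hypothetical.

```python
import math
import statistics


def euclidean_error(posterior_samples, true_values):
    """Distance of the marginal-median vector from the true parameter vector.

    posterior_samples: list of parameter vectors, one per posterior draw.
    true_values: parameter vector used to simulate the data.
    Assumption: each deviation is normalized by the true value.
    """
    n_params = len(true_values)
    # Median of each marginal posterior, taken parameter by parameter
    medians = [statistics.median(draw[i] for draw in posterior_samples)
               for i in range(n_params)]
    return math.sqrt(sum(((m - t) / t) ** 2
                         for m, t in zip(medians, true_values)))


# Hypothetical toy posterior with two rate parameters (not data from the paper)
samples = [[90.0, 11.0], [100.0, 12.0], [110.0, 13.0]]
error = euclidean_error(samples, true_values=[100.0, 10.0])
print(error)
```

Collapsing the posterior to one number in this way is convenient for scaling plots, but it hides which parameter dominates the error, which is why the single-parameter intervals are inspected separately.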

For an increasing data quality, which in our benchmark is an increasing per trace, we show (Figure 3c) that the deterministic RE and our Bayesian filter are consistent estimators, that is, their posterior maxima or medians converge in distribution to the true parameter values for increasing data quality. The scaling of the RE approach (blue) and our Bayesian filter (green) vs. shows that for large both algorithms seem to have a constant error ratio relative to each other. They are both well described by with an error ratio computed from the fit of 4.4. Thus, although our statistical model is singular (meaning that the Fisher information matrix is singular; Watanabe, 2007), its asymptotic learning behaviour is similar to that of a regular model (Figure 4c), which, however, means that the Euclidean errors of the two algorithms remain different even for large . For data with the samples from the posterior typically indicate that the posterior is improper which is defined as

We consider this as the case of unidentified parameters. This data-driven definition differs from the definitions of structural and practical identifiability (Middendorf and Aldrich, 2017a; Middendorf and Aldrich, 2017b) in that the two latter cases are not distinguished. Still, the practical consequence of structural or practical unidentifiability, which is usually an improper posterior, is captured. Cases of structural or practical unidentifiability which lead to a confined region of constant posterior density will be considered identified, as the posterior is still normalizable. Thus, the uncertainty quantification will still be correct, even when this finding is not sufficient to answer the research question at hand.
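The constant error ratio for large patches reported for Figure 3c can be illustrated with a small fit. The sketch below assumes that both error curves follow the regular-model scaling, error = c / sqrt(N) with N the number of channels, and estimates the amplitude c by least squares; the function name and all numbers are hypothetical and are not values from the benchmark.

```python
import math


def fit_inverse_sqrt(n_channels, errors):
    """Least-squares amplitude c for the assumed model error = c / sqrt(N).

    Minimizing sum_i (e_i - c * x_i)^2 with x_i = 1 / sqrt(N_i) gives
    c = sum_i(e_i * x_i) / sum_i(x_i^2), and x_i^2 = 1 / N_i.
    """
    numerator = sum(e / math.sqrt(n) for n, e in zip(n_channels, errors))
    denominator = sum(1.0 / n for n in n_channels)
    return numerator / denominator


# Hypothetical Euclidean errors for large patches (not values from the paper)
n_channels = [1000, 4000, 16000]
err_kf = [0.10, 0.05, 0.025]
err_re = [0.40, 0.20, 0.10]

c_kf = fit_inverse_sqrt(n_channels, err_kf)
c_re = fit_inverse_sqrt(n_channels, err_re)
error_ratio = c_re / c_kf  # constant offset between the two curves
print(error_ratio)
```

Because both curves share the same exponent under this assumption, their ratio does not shrink with N, which is the sense in which the errors of the two algorithms stay different even for large patches.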

Benchmarking for cPCF data against the gold standard algorithm

For the simulated time traces with an optimistically high signal-to-noise assumption, the posteriors of the KF (from here on, KF denotes our Bayesian filter) and of an RE approach (Milescu et al., 2005) are compared for cPCF data (Figure 4a–d). For a brief introduction of the RE approach, see Appendix 8. The failure to analyze PC data with moderate open-channel noise (Moffatt, 2007; Figure 3a) also disqualifies the classical KF with its constant noise variance as a useful algorithm for fluorescence data, because here the Poisson distribution of the signal generates an even stronger state dependency of the signal variance.

By “high signal-to-noise assumption”, we refer to an experimental situation with a standard deviation of the current recordings , a low additional , and a high mean photon rate per bound ligand and frame . Additionally, we assume vanishing fluorescence background noise generated by the ligands in the bulk. The benefit of the high signal-to-noise ratio is that the limitations of the two different approximations to the stochastic process of binding and gating can be investigated without the risk of them being compensated or obscured by the noise from the experimental setup. For these experimental settings (Figure 4a), we calculate the Euclidean distance of the median (Equation 15) for different . For (gray shaded area in Figure 4a), the Euclidean error of both algorithms is roughly the same. On the single-parameter level (Figure 4b), this can be seen as an onset of correlated deviations from the true value for both algorithms. Each marginal posterior has for each a similar deviation in magnitude and direction. That is in particular true for and which dominate Equation 15. In spite of the correlation in direction of the errors of and their magnitude is still smaller for the KF. In summary, this indicates that in this regime the approximations to the involved multinomial distributions fail in a similar manner for both algorithms. This implies that, in this regime, the error induced by the normal approximations (which are used by both the KF and the RE approach) becomes similarly important as the treatment of the autocorrelation of gating and binding. For larger , the Euclidean error of the RE is on average 1.6 times larger than the corresponding error of the posterior mode of the KF, which we deduce by fitting the function . On the one hand, both algorithms are better in approaching the true values than with patch-clamp data alone. 
On the other hand, the smaller error ratio means that adding a second observable constrains the posterior, such that much of the overfitting is prevented for the RE approach. By overfitting, we mean the adaptation of any inference algorithm to the specific details of the used data set due to experimental and intrinsic noise, which is aggravated if too complex kinetic schemes are used. Similarly, Milescu et al., 2005 showed that the overfitting tendency of the RE can be reduced if the autocorrelation of the data is eliminated. The dotted green curve derives from PC data. The Euclidean error is roughly an order of magnitude larger for . Thus, in this regime the cPCF data set is equivalent to 10²-fold more time traces or 10² more in a similar PC data set. For only cPCF establishes parameter identifiability (given a data set of 10 ligand concentrations and no other prior information). In Figure 4b(1-6), we show the 0.95-HDCI (Equation 14) of all parameters and their modes vs. . Even though the Bayesian filter and the RE approach are both consistent estimators, the RE approach covers the true values with its 0.95-HDCI only occasionally. The modeling assumption of the RE approach, which treats each data point as if it did not come from a Markov process but from an individual draw from a multinomial distribution with deterministically evolving mean and variance, makes the parameter estimates overly confident (Figure 4b(1-6)). A likely explanation can be found by analyzing the extreme case where data points are sampled at high frequency relative to the time scales of the channel dynamics. The RE approach treats each data point as a new draw from Equation 67 while in reality the ion channel ensemble had no time to evolve into a new state. In contrast, the KF updates its information about the ensemble state after incorporating the current data point and then predicts from this updated information the generalised multinomial distribution of the next data point. 
For , the marginal posterior of the KF usually contains the true value. Nevertheless, one might detect a bias in both algorithms, in particular (Figure 4b 2,4) for and for , similar to the findings of Moffatt, 2007. A proper investigation of bias can be found in Figures 11 and 12 and in the Appendix. Notably, with the more realistic higher experimental noise level, the bias is hardly or not at all detectable in those tests (consider the unfiltered or infinitely fast integrated data). A plausible explanation is that the bias only occurs (Figure 4b 2,4) because the data are so perfect that the discrete nature of the ensemble dynamics is almost visually detectable, thus deviating from the modeling assumption of multivariate normal distributions.
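The intervals discussed above can be approximated directly from posterior samples. The following minimal sketch returns the shortest interval that contains a given fraction of the samples of one marginal posterior, which coincides with a highest-density interval only if the marginal is unimodal; it is an illustration under that assumption and not the construction of Equation 14. Function names and the toy samples are hypothetical.

```python
import math


def hdci(samples, mass=0.95):
    """Shortest interval containing the fraction `mass` of the samples.

    Assumption: the marginal posterior is unimodal, so that the shortest
    interval is also the highest-density interval.
    """
    ordered = sorted(samples)
    n = len(ordered)
    k = max(1, math.ceil(mass * n))  # number of samples inside the interval
    best_lo, best_hi = ordered[0], ordered[k - 1]
    # Slide a window of k consecutive samples and keep the narrowest one
    for start in range(1, n - k + 1):
        lo, hi = ordered[start], ordered[start + k - 1]
        if hi - lo < best_hi - best_lo:
            best_lo, best_hi = lo, hi
    return best_lo, best_hi


def covers(interval, true_value):
    """True if the interval includes the true parameter value."""
    lo, hi = interval
    return lo <= true_value <= hi


# Hypothetical marginal samples with one far outlier (not data from the paper)
samples = [float(i) for i in range(50)] + [1000.0]
interval = hdci(samples, mass=0.9)
print(interval, covers(interval, 20.0))
```

An overconfident posterior shows up here as an interval that is narrow but fails `covers` for the true value in most simulated data sets.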

To investigate the six one-dimensional 0.95-HDCIs simultaneously, we declare the analysis of a data set as successful if all 0.95-HDCIs include the true values. Otherwise we define it as a failure. This allows us to determine the true probability at which the probability mass of the KF and the RE approach covers the true values in a binomial setting. The left blue vertical line in Figure 4c indicates which is the lower limit and which would be the true success probability for an ideal model whose six 0.95-HDCIs are drawn from . This is the probability of getting 6 successes in 6 trials. The right blue vertical line equals , signifying the upper limit obtained by treating the six HDCIs as being drawn from each, which is a rather loose approximation. All marginal distributions are computed from the same high-dimensional posterior which is formed by one data set for each trial. Thus, the six HDCIs must have success rates between those two extremes if the algorithm creates an accurate posterior. We next combine the binomial likelihood with the conjugate beta prior (Hines et al., 2014) for mathematical convenience. On this occasion, for the sake of the argument, seems sufficient. A prior is a uniform prior on the open interval . The estimated true success rate of the RE approach (blue) is and therefore far away from the success probability an algorithm should have when it is based on an exact likelihood of the data. In contrast, the posterior (green) of the true success probability of the KF resides with a large probability mass between the lower and upper limit of the success probability of an optimal algorithm (given the correct kinetic scheme). As both algorithms use the same prior distribution, the different performance is not induced by the prior.
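The binomial argument can be sketched numerically. The example below assumes that the lower limit corresponds to six independent 0.95 intervals that must all succeed and that the upper limit corresponds to fully dependent intervals; it then performs the conjugate beta update for the success probability. A Beta(1, 1) prior is uniform on (0, 1); the success and failure counts are hypothetical and not taken from the benchmark.

```python
def beta_posterior(successes, failures, a=1.0, b=1.0):
    """Conjugate update: Beta(a, b) prior with binomial data gives Beta(a + s, b + f)."""
    return a + successes, b + failures


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


# Limits for the joint coverage of six 0.95-HDCIs (assumptions stated above)
lower_limit = 0.95 ** 6  # six independent intervals, all must succeed
upper_limit = 0.95       # fully dependent intervals behave like one interval

# Hypothetical counts of data sets whose six HDCIs all covered the truth
a_post, b_post = beta_posterior(successes=12, failures=3)
posterior_mean = beta_mean(a_post, b_post)
print(lower_limit, upper_limit, posterior_mean)
```

A well-calibrated algorithm should place most of the posterior mass of the success probability between the two limits, which is the criterion applied in Figure 4c.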

Exploiting six one-dimensional posterior distributions does not necessarily answer whether the posterior is accurate in 6 dimensions but we can refine the used binomial setting. In Figure 4d, we see that 2-D marginal distributions can, due to their additional degree of freedom, twist around the true value without covering it with an HDCV (Equation 14) of reasonable size while simultaneously the two 1-D marginal distributions do cover it with a reasonable HDCI. In general, the KF posterior distribution has its mode much closer to the true value for various parameter combinations and it seems that the posterior is approximately multivariate normal. Further, we recognize that the probability mass of the reasonably sized HDCV of the KF posterior includes the true values whereas the HDCV from the RE does not. In 6 dimensions we lack visual representations of the posterior. Since we showed that both algorithms are consistent for a given identifiable model, we are looking for a way to ask whether the posterior is accurate (whether the posterior distribution has the right shape). We can answer that question by asking how much probability mass around the mode (or around multiple modes) needs to be counted to construct an HDCV (Equation 14) which includes the true values. Then we can ask for data sets how often did we find the true values inside a volume of a specific probability mass of the posterior distribution

An algorithm which estimates the parameters of the true process should fulfill this property in addition to being consistent. Otherwise credibility volumes or confidence volumes are meaningless. Notably, this is an empirical test of how well the HDCVs of the Bayesian filter and the RE approach satisfy the frequentist coverage property (Rubin and Schenker, 1986). We explain (Appendix 8) in detail how to quantify the overall shape and -dimensional posterior and comment on its geometrical meaning. One way is to use an analytical approximation via the cumulative Chi-squared distribution (Figure 5a and b). The other way is to count the probability mass of -dimensional histogram bins starting with the highest value until the first bin includes the true values (Figure 5b).
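The analytical route via the cumulative Chi-squared distribution can be illustrated as follows. The sketch assumes an approximately multivariate normal posterior, so that the probability mass inside an ellipsoid of a given Mahalanobis distance equals the Chi-squared cdf evaluated at the squared distance, with as many degrees of freedom as parameters. The cdf is computed from the series of the regularized lower incomplete gamma function; function names are hypothetical.

```python
import math


def chi2_cdf(x, dof):
    """Chi-squared cdf via the series of the regularized lower
    incomplete gamma function P(dof / 2, x / 2)."""
    if x <= 0.0:
        return 0.0
    a, z = dof / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 1
    # Sum z^n / (a (a + 1) ... (a + n)) until the terms are negligible
    while abs(term) > 1e-16 * abs(total):
        term *= z / (a + n)
        total += term
        n += 1
    return total * math.exp(-z + a * math.log(z) - math.lgamma(a))


def mass_within(mahalanobis_distance, n_params):
    """Probability mass of a multivariate normal inside the ellipsoid
    with the given Mahalanobis distance from the mode."""
    return chi2_cdf(mahalanobis_distance ** 2, n_params)


one_dim = mass_within(1.0, 1)  # the familiar one-sigma mass
six_dim = mass_within(1.0, 6)  # same distance, six parameters
print(one_dim, six_dim)
```

The rapid drop of the enclosed mass with dimension is the reason why the mode alone says little about where a six-dimensional posterior places its probability.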

Knowing how much volume/probability mass is needed to include the true rate matrix allows us to test whether all HDCVs constructed from the two probability distributions match the binomial distributions of the ideal model. For each data set and for each HDCV of a fixed probability mass, there are two possible outcomes: The true rate matrix is inside or outside of that volume. For a chosen HDCV with a fixed probability volume, as indicated by a gray space in Figure 5b, we count how many times the true matrix is included in the volume of that probability mass for each trial in a fixed number of trials. Since the success is binomially distributed, we plot the expected mean of a perfect model and binomial quantiles and compare them with the success rate found in our test runs (Figure 5c) for both algorithms with both methods to determine the posterior shape. The posterior of the KF distributes the probability mass in a consistent manner such that each volume includes the true rate matrix within the quantile range. In contrast, the RE approach fails for all data sets for all HDCVs (from 0 to 0.95 probability mass) and does not include the true values in one single case. Note that all the binomial trials for each HDCV are made from the same set of data sets, which explains the correlated deviation from the mean. For lower but realistic signal-to-noise ratios, where the fit quality decreases, for example by producing larger errors/wider posterior distributions (Figure 6a), the statistics of the HDCV from the RE approach improve but are still outperformed by the KF. In particular, in our tested case of realistic experimental noise we never find the true values within a 0.65-HDCV if the data are analyzed with an RE approach. Even for the highest noise level (Figure 6b), the probability mass of the KF posterior needed to include the true rate matrix remains almost always smaller than the posterior mass of the RE approach. 
That means that the posterior mass of the KF is distributed much closer to the true value than the posterior mass of the RE. With the KF we find the true rate matrix for one data set in a small volume around the mode. To achieve the same with the RE approach we need at least a probability mass of 0.3.
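The coverage test described above can be sketched in a few lines. For each data set we assume that the probability mass needed to reach the true rate matrix is already known; the data set counts as a success for a given HDCV if that required mass does not exceed the mass of the HDCV. The success count is then compared with binomial quantiles of an ideal model. Function names, the quantile levels and the toy numbers are hypothetical.

```python
import math


def binomial_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p ** i * (1.0 - p) ** (n - i)
               for i in range(k + 1))


def binomial_quantile(q, n, p):
    """Smallest k with P(X <= k) >= q for X ~ Binomial(n, p)."""
    for k in range(n + 1):
        if binomial_cdf(k, n, p) >= q:
            return k
    return n


def coverage_check(required_masses, hdcv_mass, lower_q=0.025, upper_q=0.975):
    """Count data sets whose true value lies inside the HDCV of `hdcv_mass`
    and compare the count with the binomial quantiles of an ideal model."""
    n = len(required_masses)
    successes = sum(m <= hdcv_mass for m in required_masses)
    lo = binomial_quantile(lower_q, n, hdcv_mass)
    hi = binomial_quantile(upper_q, n, hdcv_mass)
    return successes, lo, hi, lo <= successes <= hi


# Hypothetical probability masses needed to include the true rate matrix
well_calibrated = [0.05, 0.15, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75, 0.85, 0.95]
overconfident = [0.97, 0.99, 0.98, 0.999, 0.96, 0.99, 0.97, 0.98, 0.995, 0.99]

print(coverage_check(well_calibrated, 0.5))
print(coverage_check(overconfident, 0.5))
```

Repeating the check over a grid of HDCV masses gives a curve of the kind shown in Figure 5c, with the caveat noted in the text that all points are computed from the same data sets and are therefore correlated.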

In the inset of Figure 6a and b we do not observe a trend, thus no indication that the RE approach has a better performance for large values in this regard. This challenges the common argument that the RE approach should be equivalent to the KF for large because the ratio of mean signal vs. the intrinsic binding and gating noise is so large. Thus, including the autocorrelation into the analysis is important even for unrealistically large . One possible explanation is that both algorithms model a signal-to-noise ratio which scales . From the multinomial distribution both algorithms inherit mean signals which scale and variances which scale in the terms dominating for large similarly with . Thus, identical to the real signal, both algorithms model the scaling of the signal-to-noise ratio . It is plausible that both algorithms remain sensitive to the occurrence of autocorrelation of the noise even for the largest signal-to-noise ratios. In Figure 6c we compare the Euclidean error of the pessimistic high white noise case with an over-optimistic low noise case. We see that when increasing there is a regime where the Euclidean error increases faster than which we indicate with a coarse approximate fit . In that regime two effects happen simultaneously. First, the mean and the intrinsic fluctuations of the signal become more and more dominant over the experimental noise. Second, the standard deviation of intrinsic fluctuations becomes smaller relative to the mean signal. We speculate that together these effects produce a learning rate which is faster than the usual asymptotic learning rate of a regular model but relaxes asymptotically towards .
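The scaling argument can be made concrete with a deliberately simplified two-state example. The sketch assumes independent channels with a fixed open probability, so that the number of open channels is binomial; the mean then grows linearly with the number of channels while the standard deviation grows with its square root. This binomial reduction of the multinomial case, the function name and the numbers are assumptions for illustration only.

```python
import math


def intrinsic_snr(n_channels, p_open):
    """Mean over standard deviation of the open-channel count for
    independent two-state channels (binomial statistics)."""
    mean = n_channels * p_open
    std = math.sqrt(n_channels * p_open * (1.0 - p_open))
    return mean / std


snr_small = intrinsic_snr(1_000, 0.3)
snr_large = intrinsic_snr(100_000, 0.3)

# A 100-fold larger patch raises the intrinsic signal-to-noise ratio 10-fold
print(snr_large / snr_small)
```

The intrinsic fluctuations therefore never vanish relative to each other in the two modeling approaches; they shrink only relative to the mean, which is consistent with the observation that the autocorrelation stays relevant for large patches.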

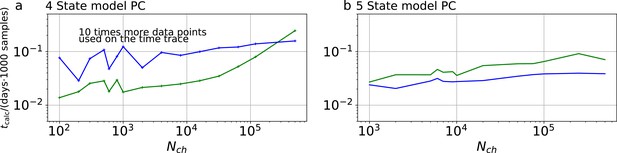

Statistical properties of both algorithms for more complex models

We have seen in Figure 3c and Figure 4a that the RE and the KF algorithm are consistent estimators, while their error ratio (Figure 7a) seems to have no trend to approach 1 with increasing . Adding a second observable increases parameter accuracy and adds identifiability for both algorithms since fewer aspects of the dynamics need to be statistically inferred (Figure 4a). Furthermore, the second observable takes away much of the tendency of the RE approach to overinterpret (overfit) the data (compare Figure 4b 1–6 with Figure 8). This shrinks the error ratio from its value for PC data to smaller values for cPCF data (Figure 7a, red), which are on average still bigger than one, while the Euclidean error is reduced (Figure 4a). If we then keep the amount and quality of the PC/cPCF data but increase the complexity of the model which produced the data (Figure 7b and d) from a four-state to a five-state model (see kinetic schemes above Figure 7a–c), we see that for cPCF data the error ratio stays roughly the same (difference between Figure 7a and b). For PC data instead both algorithms deliver an unidentified k21 for (defined as an improper posterior). For larger the KF always identifies all parameters while the RE fails at to identify k54. Thus, the KF reduces the risk of unidentified parameters. To calculate the mean error ratio, we exclude the values where some of the parameters are unidentified. In total that still amounts to thus the advantage of the KF (given all parameters are identified) might increase with model complexity for PC data. The lowest Euclidean error for this kinetic scheme is obtained for cPCF data analyzed with the KF (Figure 7d). A 6-state-1-open-state model with cPCF data again has an error ratio of the usual scale (Figure 7c). As expected, the Euclidean error continuously increases with model complexity (Figure 7d and e). 
For PC data of the 6-state-1-open-state model even the likelihood of the KF is so weak (Figure 7e) that it delivers unidentified parameters even for and we can detect heavy-tailed distributions up until . Using RE on PC data alone does not lead to parameter identification, thus no error ratio can be calculated.
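The averaging of error ratios with exclusion of unidentified cases can be sketched as follows. The example assumes that a data set with an improper posterior is marked by `None` instead of a Euclidean error, and it reports the mean ratio together with the standard deviation of the mean; the marker convention, function name and all numbers are hypothetical.

```python
import math
import statistics


def mean_error_ratio(err_re, err_kf):
    """Mean RE/KF error ratio over data sets where both posteriors are proper.

    None marks a data set in which some parameter is unidentified.
    Returns the mean ratio and the standard deviation of the mean.
    """
    ratios = [r / k for r, k in zip(err_re, err_kf)
              if r is not None and k is not None]
    mean = statistics.mean(ratios)
    sem = statistics.stdev(ratios) / math.sqrt(len(ratios))
    return mean, sem


# Hypothetical Euclidean errors for four patch sizes (not values from the paper)
err_kf = [None, 0.20, 0.10, 0.05]
err_re = [None, None, 0.45, 0.20]

mean_ratio, sem = mean_error_ratio(err_re, err_kf)
print(mean_ratio, sem)
```

Excluding the unidentified cases favors the RE approach in such a comparison, because the data sets on which it fails entirely do not enter the average.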

Consistent with our findings, fluorescence data by itself should lower the advantage of the KF compared to PC data, simply by signal-to-noise arguments. The stochastic aspect of the ligand binding is usually more dominated by the noise of photon counting and background noise than the stochastic gating in current data is dominated by experimental noise. In terms of uncertainty quantification, the advantage of the KF with cPCF varies with the model complexity (see Appendix 9).

Besides analyzing what causes the changes in the Euclidean error (Figure 7a and b) at the single-parameter level, we now investigate whether the posterior is a proper representation of uncertainty. Thus, we look back at the HDCIs. The HDCIs of the 4-state-1-open-state model (Figure 8) for the PC data from Figure 3 reveal an exacerbated over-confidence problem of the RE approach (blue) compared to cPCF data (Figure 4b 1–6). This underlines our conclusion from Figures 5 and 6 that the Bayesian posterior sampled by the RE approach is misshaped. As a consequence, a confidence volume derived from the curvature at the ML estimate of the RE algorithm understates parameter uncertainty. A possible way for ML methods to derive correct uncertainty quantification is by using bootstrapping methods (Joshi et al., 2006). Furthermore, the error ratios of the deviations of each single parameter from its true value in the last column strongly increased in magnitude (insets of Figure 8). Even error ratios of are possible. Note that the way we defined Equation 15 suppresses the influence of the smaller parameter errors in the overall error ratio. Thus the advantage (error ratio) of the KF over the RE approach for a single parameter can be much larger or lower compared to the error ratio derived from the Euclidean error if the respective parameter contributes less to the Euclidean error. The posterior of the KF (green) seems to be unbiased after the transition into the regime where all parameters are identified. Similarly, for the RE algorithm there is no obvious bias in the inference. If we use the RE algorithm and change from the four-state to the five-state model (PC data from Figure 7b), bias occurs (Figure 9) in many inferred parameters, even for the highest investigated. Milescu et al., 2005 showed that one reason for the biased inference of the RE approach is its neglect of the autocorrelation of the intrinsic noise. 
We add here that the bias problem clearly aggravates with an increased model complexity. It is even present in unrealistically large patches which in principle could be generated by summing up 102 time traces with . In contrast, the KF algorithm reveals that its parameter inference is either unbiased or at least much less biased in the displayed regime. Furthermore, for both algorithms the position of the HDCI relative to the true value is for some parameters highly correlated, which corresponds to the correlation between optima of the ML method of Milescu et al., 2005 ; Moffatt, 2007.
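
As an illustration of how an HDCI can be read off from posterior samples, the following minimal sketch computes the shortest interval that contains a given probability mass. It assumes a unimodal one-dimensional marginal posterior; the function name `hdci`, the log-normal test samples and the reference value 1.0 are hypothetical choices for this example and are not taken from the analysis pipeline of this paper.

```python
import numpy as np

def hdci(samples, mass=0.95):
    """Shortest interval holding `mass` of the samples (assumes a unimodal marginal)."""
    x = np.sort(np.asarray(samples, dtype=float))
    n = x.size
    k = int(np.ceil(mass * n))            # number of samples inside the interval
    if k < 1 or k > n:
        raise ValueError("mass must lie in (0, 1]")
    # Width of every window of k consecutive sorted samples.
    widths = x[k - 1:] - x[: n - k + 1]
    i = int(np.argmin(widths))            # index of the narrowest window
    return x[i], x[i + k - 1]

rng = np.random.default_rng(0)
# Hypothetical skewed posterior samples of a rate (not data from this study).
draws = rng.lognormal(mean=0.0, sigma=0.5, size=20000)
lo, hi = hdci(draws, 0.95)
covers_truth = bool(lo <= 1.0 <= hi)      # 1.0 plays the role of the true value
```

For a skewed posterior such as this one, the interval returned by `hdci` is shorter than the equal-tailed interval with the same probability mass, which is why the position of the HDCI relative to the true value is informative about over-confidence.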

As a side note, unbiased parameter estimates are a highly desirable feature of an inference algorithm. For example, with a bias in the inference, repeated experiments do not lead to the true value if the arithmetic mean of the parameter inferences is taken. With bias, even bootstrapping methods fail to produce reliable uncertainty quantification. Due to the variation of the data, the k54 parameter is either identified in some neighbourhood of the true value or completely unidentified (Figure 9) if the RE algorithm is used. The unidentified k54 occurs even for high-quality data such as . Only because of the nonphysical prior (Figure 9) of k54, induced by the limits of the sampling box of the sampling algorithm, does the posterior appear to be proper; in fact, the parameter is either unidentified or more than two orders of magnitude away from the true value. For the same data, using the KF did not result in any unidentified parameters. Note that comparable inference pathologies, such as multimodal distributions of inferred parameters, were also reported for the maximum likelihood RE algorithm for low-quality PC data or too simple stimulation protocols (Milescu et al., 2005).
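
The statement that averaging over repeated experiments does not remove a bias can be illustrated with a toy example that is unrelated to the RE or KF algorithms: the ML estimate of an exponential rate from a few waiting times, for which the expectation is known to be the true rate multiplied by n/(n-1). All names and numbers below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
k_true = 2.0            # hypothetical true rate
n_per_experiment = 5    # few observations per experiment
n_experiments = 200_000

# Each row is one experiment: n exponential waiting times with rate k_true.
t = rng.exponential(scale=1.0 / k_true, size=(n_experiments, n_per_experiment))
k_hat = 1.0 / t.mean(axis=1)        # ML estimate per experiment, biased for small n

mean_of_estimates = k_hat.mean()    # arithmetic mean over repeated experiments
# Known expectation of this estimator: k_true * n / (n - 1).
expected_biased_limit = k_true * n_per_experiment / (n_per_experiment - 1)
```

With more repetitions, `mean_of_estimates` settles at `expected_biased_limit` rather than at `k_true`; only a larger amount of data per experiment, or an unbiased estimator, closes the gap.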

In conclusion, the two different perspectives on parameter uncertainty, on the one hand the distribution of ML estimates due to the randomness of the data (Milescu et al., 2005; Moffatt, 2007) and on the other hand the Bayesian posterior distribution, lose their tight (and necessary) connection if the RE algorithm is used. Thus, the KF also makes ML inference of the rate matrix more robust. Our findings are consistent with the findings for gene regulatory networks (Gillespie and Golightly, 2012), which show that RE approaches deliver a too narrow posterior, in contrast to stochastic approximations, which deliver an acceptable posterior compared to the true posterior (defined by a particle filter algorithm). On the data side of the inference problem, adding cPCF data eliminates the bias, reduces the variance of the position of the HDCI and eliminates unidentified parameters (Appendix 9—figures 1 and 2) for both investigated algorithms. This advantage increases with model complexity.

For the five-state and six-state models, we applied microscopic reversibility (Colquhoun et al., 2004). We enforced it by a hierarchical prior distribution (Materials and methods, Equation 60) whose parameters can be chosen such that they allow only arbitrarily small violations of microscopic reversibility. However, the prior distribution can also be used to enforce a softer regularization around microscopic reversibility. Thus, we can turn the usually strictly applied algebraic constraint (Salari et al., 2018) of microscopic reversibility into a constraint with scalable softness. In that way, we can model the lack of information about whether microscopic reversibility is exactly fulfilled (Colquhoun et al., 2004) by the given ion channel, instead of imposing the strict constraint on the model.
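
The idea of a constraint with scalable softness can be sketched as follows. This is not the hierarchical prior of Equation 60; it is a simplified stand-in that assumes a single Gaussian prior on the logarithm of the ratio between the clockwise and counter-clockwise rate products of one cycle. The function name, the width parameter `sigma` and all rate values are hypothetical.

```python
import numpy as np

def log_prior_cycle(rates_cw, rates_ccw, sigma):
    """Gaussian log-prior (up to an additive constant) on the log ratio of the
    clockwise and counter-clockwise rate products of one cycle.
    sigma -> 0 approaches strict microscopic reversibility."""
    log_ratio = np.sum(np.log(rates_cw)) - np.sum(np.log(rates_ccw))
    return -0.5 * (log_ratio / sigma) ** 2 - np.log(sigma)

# Hypothetical rates around a four-state cycle.
cw = [10.0, 5.0, 2.0, 1.0]        # product 100
ccw_ok = [4.0, 5.0, 5.0, 1.0]     # product 100, cycle is reversible
ccw_bad = [8.0, 5.0, 5.0, 1.0]    # product 200, cycle violates reversibility

# Log-prior penalty for the violation under a tight and a soft constraint.
penalty_tight = log_prior_cycle(cw, ccw_ok, 0.01) - log_prior_cycle(cw, ccw_bad, 0.01)
penalty_soft = log_prior_cycle(cw, ccw_ok, 1.0) - log_prior_cycle(cw, ccw_bad, 1.0)
```

The same factor-of-two violation is penalized heavily for a small `sigma` and only mildly for a large one, which is the sense in which the width of the prior scales the softness of the constraint.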

Prior critique and model complexity

In the Bayesian framework, the likelihood of the data and the prior generate the posterior. Thus, the performance of both algorithms can be influenced by appropriate prior distributions. We used a uniform prior over the rate matrix, which is not optimal. Note that uniform priors are widely used for several reasons. They appear to be unbiased and are assumed to be a ‘no prior’ option (which they are not). The assumption of unbiasedness holds for location parameters such as mean values. In contrast, for other parameters, such as scaling parameters like rates or variances, a uniform prior adds bias to the inference towards faster rates (Zwickl and Holder, 2004). We suspect that, for the PC data, even in the simplest model discussed here, the lower data quality limit below which we detected unidentified parameters (improper posteriors) is caused by the uniform prior. This lower limit for the KF also increases with the complexity of the model from for the four-state model to for the 6-state-1-open-state model. Note that it is hardly possible to fit the 6-state-1-open-state model with the RE approach for the same amount of PC data. We observe that cPCF data ease this problem because the likelihood becomes more concentrated for all parameters, so that the likelihood dominates the uniform prior. Nevertheless, for most parts of the paper we used a uniform prior over the rates and equilibrium constants to be comparable with the usual default method, a plain ML. This choice influences our results in data regimes in which the data are not strong enough to dominate the bias from the uniform prior. Thus, both algorithms would perform better with smarter informative, or at least unbiased, prior choices for the rate matrix.
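
Why a uniform prior over a rate favours fast rates can be seen by counting prior mass per decade. The sketch below compares a prior that is uniform in the rate with one that is uniform in the logarithm of the rate. The limits of the sampling box are hypothetical and are not the limits used in this study.

```python
import numpy as np

lower, upper = 1e-2, 1e4          # hypothetical sampling-box limits for a rate
edges = np.logspace(-2, 4, 7)     # decade boundaries from 1e-2 to 1e4

# Prior mass per decade under a prior that is uniform in the rate itself.
mass_uniform = np.diff(edges) / (upper - lower)
# Prior mass per decade under a prior that is uniform in log(rate).
mass_log_uniform = np.diff(np.log(edges)) / np.log(upper / lower)

for left, mu, ml in zip(edges[:-1], mass_uniform, mass_log_uniform):
    print(f"decade starting at {left:8.2e}: uniform {mu:.6f}, log-uniform {ml:.6f}")
```

Under the uniform prior, about nine tenths of the prior mass lies in the fastest decade, whereas the log-uniform prior assigns equal mass to every decade. Weak data therefore cannot prevent the posterior from drifting towards the upper limit of the box.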

In principle, to rule out an influence of the prior, unbiased priors should be used for the rates. The standard concept for unbiased, least informative priors is to construct a Jeffreys prior (Jeffreys, 1946) for the rate matrix, which is, however, beyond the scope of this paper.
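
For orientation, the general definition of the Jeffreys prior and its form for the simplest scale parameter are given below. The symbols θ (parameter vector), y (data) and I (Fisher information) are notation introduced here for illustration. The example is a single exponential waiting time with rate k, not the full rate matrix of a kinetic scheme.

```latex
% General definition: the prior is proportional to the square root of the
% determinant of the Fisher information matrix.
p(\theta) \propto \sqrt{\det I(\theta)}, \qquad
I(\theta)_{ij} = -\,\mathbb{E}\!\left[
  \frac{\partial^2 \log p(y \mid \theta)}{\partial\theta_i\,\partial\theta_j}
\right]

% Example: one exponential waiting time t with rate k, p(t | k) = k e^{-k t}.
\log p(t \mid k) = \log k - k t
\;\Rightarrow\;
I(k) = \frac{1}{k^{2}}
\;\Rightarrow\;
p(k) \propto \frac{1}{k}
```

The resulting prior is uniform in log k, in contrast to a prior that is uniform in k.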

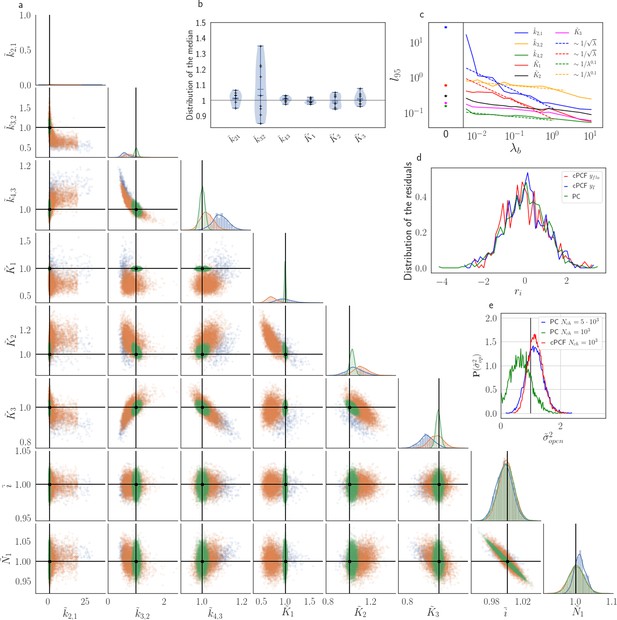

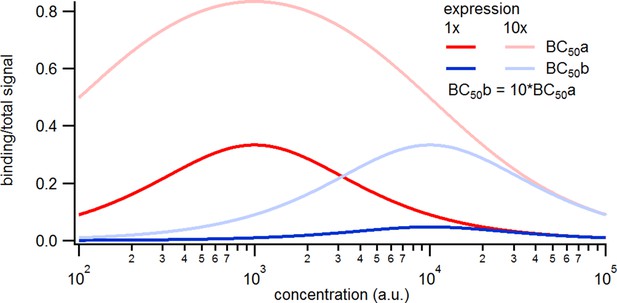

The influence of the brightness of the ligands of cPCF data on the inference

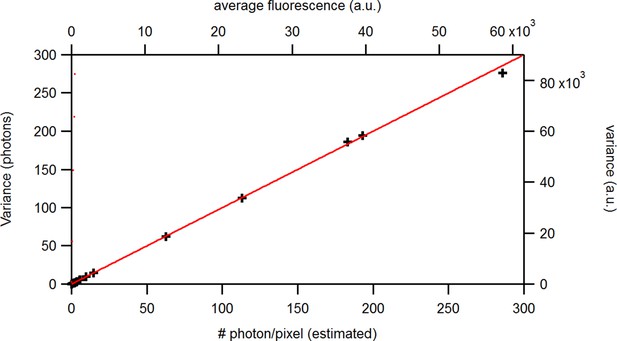

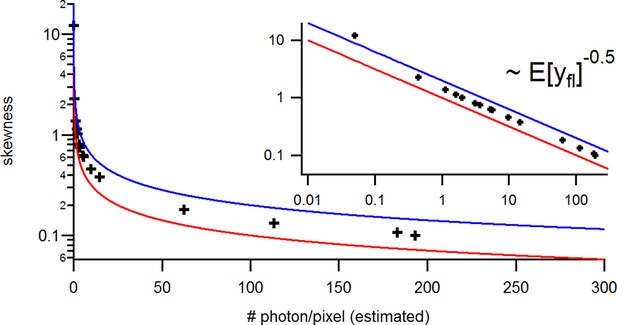

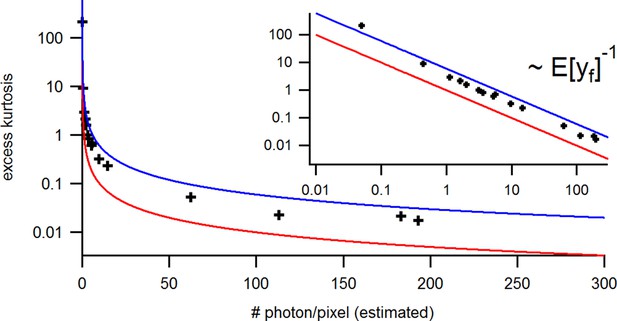

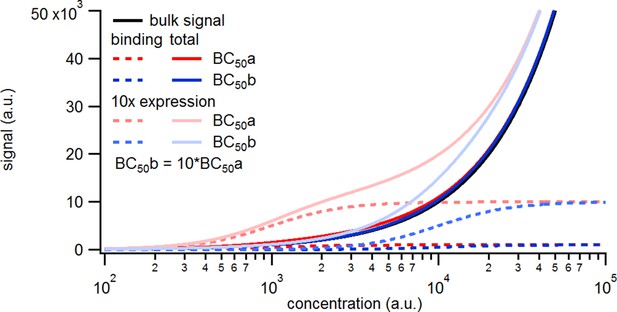

To evaluate the advantage of cPCF data (Biskup et al., 2007) with respect to PC data only (Figure 10), we compare different types of ligands: idealized ligands with brightness , emitting light only when bound to the channels; ‘real’ ligands, which also produce background fluorescence when diffusing in the bath solution (Appendix 5); and current data alone. For datasets including fluorescence, the increased precision for the dissociation rate of the first ligand, , is so strong that the variance of the posterior nearly vanishes in the combined plot with the current data (nearly all probability mass is concentrated in a single point in Figure 10a). The effect on the error of the equilibrium constants is less strong. Additionally, the bias is reduced and even the estimation of is improved. The brighter the ligands are, the more the posterior of the rates decorrelates, in particular (Figure 10a). All median estimates of nine different cPCF data sets (Figure 10b) differ by less than a factor of 1.1 from the true parameter except , which does not profit as much from the fluorescence data as (Figure 10c). The 95th percentiles, l95, of and follow . Thus, with increasing magnitude of ligand brightness λ, the estimation of becomes increasingly better compared to that of (Figure 10c). The posterior of the binding and unbinding rates of the first ligand contracts with increasing . The l95 percentiles of other parameters exhibit a weaker dependency on the brightness (). For photons per bound ligand and frame, which corresponds to a maximum mean signal of 20 photons per frame, the normal approximation to the Poisson noise hardly captures the asymmetry of photon counting noise included in the time traces. Nevertheless, l95 decreases about ten times when cPCF data are used (Figure 10c). The estimated variance of

with the mean predicted signal , for PC or cPCF data is (Figure 10d), which means that the modeling predicts the stochastic process correctly up to the variance of the signal. Note that the mean value and covariance of the signal and the state form sufficient statistics of the process, since all involved distributions are approximately multivariate normal. The fat tails and skewness of and arise because the true model is too flexible for current data without further prior information. The KF allows the variance (Figure 10e) of the open-channel current noise to be determined for . Adding fluorescence data has roughly the same effect on the estimation of as using five times more ion channels to estimate .
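
The asymmetry of photon counting noise at a mean signal of 20 photons per frame can be made explicit by comparing exact Poisson tail probabilities with those of the matching normal approximation. The sketch below is illustrative only; the helper names and the choice of tails about three standard deviations from the mean are assumptions for this example.

```python
import math

def poisson_cdf(k, mean):
    """P(X <= k) for a Poisson variable, by direct summation of the pmf."""
    return sum(math.exp(i * math.log(mean) - mean - math.lgamma(i + 1))
               for i in range(k + 1))

def normal_upper_tail(x, mean, sd):
    """P(Z > x) for a normal variable with the given mean and sd."""
    return 0.5 * math.erfc((x - mean) / (sd * math.sqrt(2.0)))

mean_photons = 20.0                       # maximum mean signal quoted in the text
sd = math.sqrt(mean_photons)              # Poisson standard deviation
skewness = 1.0 / math.sqrt(mean_photons)  # exact Poisson skewness

# Tails about three standard deviations away from the mean.
upper_exact = 1.0 - poisson_cdf(33, mean_photons)   # P(X >= 34)
lower_exact = poisson_cdf(6, mean_photons)          # P(X <= 6)
# The continuity-corrected normal approximation has the same mass in both tails.
normal_tail = normal_upper_tail(33.5, mean_photons, sd)
```

The exact upper tail is heavier and the exact lower tail is lighter than the symmetric normal tail, which is the asymmetry that the normal approximation cannot capture at such low photon counts.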

The benchmark of the KF for PC versus cPCF data with ligands of different brightness shows that even a weak fluorescence binding signal can add enough information to identify previously unidentified parameters.

(a) Posteriors of PC data (blue), cPCF data with (orange) and cPCF data with (green). For the data set with , we additionally accounted for the superimposing fluorescence of unbound ligands in solution. In all cases . The black lines represent the true values of the simulated data. The posteriors for cPCF are centered around the true values, which are hardly visible on the scale of the posterior for the PC data. The solid lines on the diagonal are kernel estimates of the probability density. (b) Accuracy and precision of the median estimates visualized by a violin plot for the parameters of the rate matrix for 5 different data sets. Four of the five data sets are used a second time with different instrumental noise, with and superimposing bulk signal. The blue lines represent the median, mean and the maximal and minimal extreme value. (c) The 95th percentile of the marginalized posteriors vs. normalized by the true value of each parameter. A regime with is shown for and K1, while other parameters show a weaker dependency on the ligand brightness. (d) Histograms of the residuals of cPCF with data and PC data. The randomness of the normalized residuals of the cPCF or PC data is well described by . The estimated variance is . Note that the fluorescence signal per frame is very low, such that the normal approximation to Poisson counting statistics does not hold. (e) Posterior of the open-channel noise for PC data with (green) and (blue) as well as for cPCF data with (red) with . We assumed as prior for the instrumental variance .


Figure 10—source data 1

Five different sets of time traces of panel b.

All instrumental noise is already added.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig10-data1-v3.zip


Figure 10—source data 2

Eighteen sets of time traces of panel c.

The number in the file name indicates the brightness.

- https://cdn.elifesciences.org/articles/62714/elife-62714-fig10-data2-v3.zip

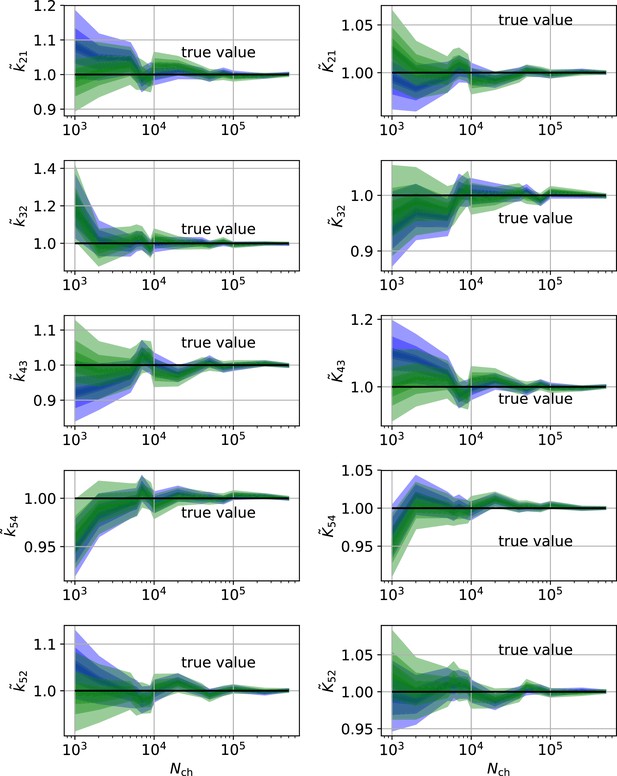

Sensitivity towards filtering before the analog-to-digital conversion of the signal