Trading mental effort for confidence in the metacognitive control of value-based decision-making

Abstract

Why do we sometimes opt for actions or items that we do not value the most? Under current neurocomputational theories, such preference reversals are typically interpreted in terms of errors that arise from the unreliable signaling of value to brain decision systems. But, an alternative explanation is that people may change their mind because they are reassessing the value of alternative options while pondering the decision. So, why do we carefully ponder some decisions, but not others? In this work, we derive a computational model of the metacognitive control of decisions or MCD. In brief, we assume that fast and automatic processes first provide initial (and largely uncertain) representations of options' values, yielding prior estimates of decision difficulty. These uncertain value representations are then refined by deploying cognitive (e.g., attentional, mnesic) resources, the allocation of which is controlled by an effort-confidence tradeoff. Importantly, the anticipated benefit of allocating resources varies in a decision-by-decision manner according to the prior estimate of decision difficulty. The ensuing MCD model predicts response time, subjective feeling of effort, choice confidence, changes of mind, as well as choice-induced preference change and certainty gain. We test these predictions in a systematic manner, using a dedicated behavioral paradigm. Our results provide a quantitative link between mental effort, choice confidence, and preference reversals, which could inform interpretations of related neuroimaging findings.

Introduction

Why do we carefully ponder some decisions, but not others? Decisions permeate every aspect of our lives – what to eat, where to live, whom to date, etc. – but the amount of effort that we put into different decisions varies tremendously. Rather than processing all decision-relevant information, we often rely on fast habitual and/or intuitive decision policies, which can lead to irrational biases and errors (Kahneman et al., 1982). For example, snap judgments about others are prone to unconscious stereotyping, which often has enduring and detrimental consequences (Greenwald and Banaji, 1995). Yet we don't always follow the fast but negligent lead of habits or intuitions. So, what determines how much time and effort we invest when making decisions?

Biased and/or inaccurate decisions can be triggered by psychobiological determinants such as stress (Porcelli and Delgado, 2009; Porcelli et al., 2012), emotions (Harlé and Sanfey, 2007; De Martino et al., 2006; Sokol-Hessner et al., 2013), or fatigue (Blain et al., 2016). But, in fact, they also arise in the absence of such contextual factors. That is why they are sometimes viewed as the outcome of inherent neurocognitive limitations on the brain's decision processes, e.g., bounded attentional and/or mnemonic capacity (Giguère and Love, 2013; Lim et al., 2011; Marois and Ivanoff, 2005), unreliable neural representations of decision-relevant information (Drugowitsch et al., 2016; Wang and Busemeyer, 2016; Wyart and Koechlin, 2016), or physiologically constrained neural information transmission (Louie and Glimcher, 2012; Polanía et al., 2019). However, an alternative perspective is that the brain has a preference for efficiency over accuracy (Thorngate, 1980). For example, when making perceptual or motor decisions, people frequently trade accuracy for speed, even when time constraints are not tight (Heitz, 2014; Palmer et al., 2005). Related neural and behavioral data are best explained by ‘accumulation-to-bound’ process models, in which a decision is emitted when the accumulated perceptual evidence reaches a bound (Gold and Shadlen, 2007; O'Connell et al., 2012; Ratcliff and McKoon, 2008; Ratcliff et al., 2016). Further computational work demonstrated that if the bound is properly set, these models actually implement an optimal solution to speed-accuracy tradeoff problems (Ditterich, 2006; Drugowitsch et al., 2012). From a theoretical standpoint, this implies that accumulation-to-bound policies can be viewed as an evolutionary adaptation, in response to selective pressure that favors efficiency (Pirrone et al., 2014).

This line of reasoning, however, is not trivial to generalize to value-based decision-making, for which objective accuracy remains an elusive notion (Dutilh and Rieskamp, 2016; Rangel et al., 2008). This is because, in contrast to evidence-based (e.g., perceptual) decisions, there are no right or wrong value-based decisions. Nevertheless, people still make choices that deviate from subjective reports of value, with a rate that decreases with value contrast. From the perspective of accumulation-to-bound models, these preference reversals count as errors and arise from the unreliable signaling of value to decision systems in the brain (Lim et al., 2013). That value-based variants of accumulation-to-bound models are able to capture the neural and behavioral effects of, e.g., overt attention (Krajbich et al., 2010; Lim et al., 2011), external time pressure (Milosavljevic et al., 2010), confidence (De Martino et al., 2013), or default preferences (Lopez-Persem et al., 2016) lends empirical support to this type of interpretation. Further credit also comes from theoretical studies showing that these process models, under some simplifying assumptions, optimally solve the problem of efficient value comparison (Tajima et al., 2016; Tajima et al., 2019). However, they do not solve the issue of adjusting the amount of effort to invest in reassessing an uncertain prior preference with yet-unprocessed value-relevant information. Here, we propose an alternative computational model of value-based decision-making that suggests that mental effort is optimally traded against choice confidence, given how value representations are modified while pondering decisions (Slovic, 1995; Tversky and Thaler, 1990; Warren et al., 2011).

We start from the premise that the brain generates representations of options' value in a quick and automatic manner, even before attention is engaged for comparing option values (Lebreton et al., 2009). The brain also encodes the certainty of such value estimates (Lebreton et al., 2015), from which a priori feelings of choice difficulty and confidence could, in principle, be derived. Importantly, people are reluctant to make a choice that they are not confident about (De Martino et al., 2013). Thus, when faced with a difficult decision, people should reassess option values until they reach a satisfactory level of confidence about their preference. This effortful mental deliberation would engage neurocognitive resources, such as attention and memory, in order to process value-relevant information. In line with recent proposals regarding the strategic deployment of cognitive control (Musslick et al., 2015; Shenhav et al., 2013), we assume that the amount of allocated resources optimizes a tradeoff between expected effort cost and confidence gain. The main issue here is that the impact of yet-unprocessed information on value representations is a priori unknown. Critically, we show how the system can anticipate the expected benefit of allocating resources before having processed value-relevant information. The ensuing metacognitive control of decisions or MCD thus adjusts mental effort on a decision-by-decision basis, according to prior decision difficulty and importance (Figure 1).

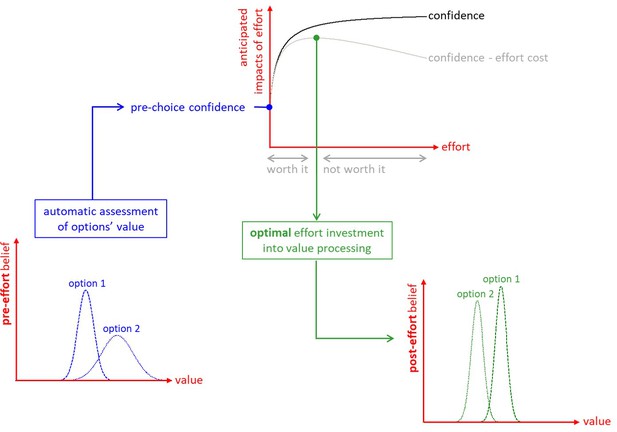

The metacognitive control of decisions.

First, automatic processes provide a ‘pre-effort’ belief about option values. This belief is probabilistic, in the sense that it captures an uncertain prediction regarding the to-be-experienced value of a given option. This pre-effort belief serves to identify the anticipated impact of investing costly cognitive resources (i.e., effort) in the decision. In particular, investing effort is expected to increase decision confidence beyond its pre-effort level. But how much effort it should be worth investing depends upon the balance between expected confidence gain and effort costs. The system then allocates resources into value-relevant information processing up until the optimal effort investment is reached. At this point, a decision is triggered based on the current post-effort belief about option values (in this example, the system has changed its mind, i.e., its preference has reversed). Note: we refer to the ensuing increase in the value difference between chosen and unchosen items as the ‘spreading of alternatives’ (cf. Materials and methods section).

As we will see, the MCD model makes clear quantitative predictions about several key decision variables (cf. Model section below). We test these predictions by asking participants to report their judgments about each item's subjective value and their subjective certainty about their value judgments, both before and after choosing between pairs of the items. Note that we also measure choice confidence, response time, and subjective effort for each decision.

The objective of this work is to show how most non-trivial properties of value-based decision-making can be explained with a minimal (and mutually consistent) set of assumptions. The MCD model predicts response time, subjective effort, choice confidence, probability of changing one's mind, as well as choice-induced preference change and certainty gain, out of two properties of pre-choice value representations, namely value ratings and value certainty ratings. Relevant details regarding the model derivations, as well as the decision-making paradigm we designed to evaluate those predictions, can be found in the Model and Methods sections below. In the subsequent section, we present our main dual computational/behavioral results. Finally, we discuss our results in light of the existing literature on value-based decision-making.

The MCD model

In what follows, we derive a computational model of the metacognitive control of decisions or MCD. In brief, we assume that the amount of cognitive resources that is deployed during a decision is controlled by an effort-confidence tradeoff. Critically, this tradeoff relies on a prospective anticipation of how these resources will perturb the internal representations of subjective values. As we will see, the MCD model eventually predicts how cognitive effort expenditure depends upon prior estimates of decision difficulty, and what impact this will have on post-choice value representations.

Deriving the expected value of decision control

Let be the amount of cognitive (e.g., executive, mnemonic, or attentional) resources that serve to process value-relevant information. Allocating these resources will be associated with both a benefit , and a cost . As we will see, both are increasing functions of : derives from the refinement of internal representations of subjective values of alternative options or actions that compose the choice set, and quantifies how aversive engaging cognitive resources are (mental effort). In line with the framework of expected value of control or EVC (Musslick et al., 2015; Shenhav et al., 2013), we assume that the brain chooses to allocate the amount of resources that optimizes the following cost–benefit trade-off:

where the expectation accounts for predictable stochastic influences that ensue from allocating resources (this will be clarified below). Note that the benefit term is the (weighted) choice confidence :

where the weight is analogous to a reward and quantifies the importance of making a confident decision (see below). As we will see, plays a pivotal role in the model, in that it captures the efficacy of allocating resources for processing value-relevant information. So, how do we define choice confidence?

We assume that decision makers may be unsure about how much they like/want the alternative options that compose the choice set. In other words, the internal representations of values of alternative options are probabilistic. Such a probabilistic representation of value can be understood in terms of, for example, an uncertain prediction regarding the to-be-experienced value of a given option. Without loss of generality, the probabilistic representation of option value takes the form of Gaussian probability density functions, as follows:

where and are the mode and the variance of the probabilistic value representation, respectively (and indexes alternative options in the choice set).

This allows us to define choice confidence as the probability that the (predicted) experienced value of the (to be) chosen item is higher than that of the (to be) unchosen item:

where is the standard sigmoid mapping. Here the second line derives from assuming that the choice follows the sign of the preference , and the last line derives from a moment-matching approximation to the Gaussian cumulative density function (Daunizeau, 2017a).

As stated in the Introduction section, we assume that the brain valuation system automatically generates uncertain estimates of options' value (Lebreton et al., 2009; Lebreton et al., 2015), before cognitive effort is invested in decision-making. In what follows, and are the mode and variance of the ensuing prior value representations (we treat them as inputs to the MCD model). We also assume that these prior representations neglect existing value-relevant information that would require cognitive effort to be retrieved and processed (Lopez-Persem et al., 2016).

Now, how does the system anticipate the benefit of allocating resources to the decision process? Recall that the purpose of allocating resources is to process (yet unavailable) value-relevant information. The critical issue is thus to predict how both the uncertainty and the modes of value representations will eventually change, before having actually allocated the resources (i.e., without having processed the information). In brief, allocating resources essentially has two impacts: (i) it decreases the uncertainty , and (ii) it perturbs the modes in a stochastic manner.

The former impact derives from assuming that the amount of information that will be processed increases with the amount of allocated resources. Here, this implies that the variance of a given probabilistic value representation decreases in proportion to the amount of allocated effort, that is:

where is the prior variance of the representation (before any effort has been allocated), and controls the efficacy with which resources increase the precision of the value representation. Formally speaking, Equation 5 has the form of a Bayesian update of the belief's precision in a Gaussian-likelihood model, where the precision of the likelihood term is . More precisely, is the precision increase that follows from allocating a unitary amount of resources . In what follows, we will refer to as the ‘type #1 effort efficacy’.

The latter impact follows from acknowledging the fact that the system cannot know how processing more value-relevant information will affect its preference before having allocated the corresponding resources. Let be the change in the position of the mode of the ith value representation, having allocated an amount of resources. The direction of the mode's perturbation cannot be predicted because it is tied to the information that would be processed. However, a tenable assumption is to consider that the magnitude of the perturbation increases with the amount of information that will be processed. This reduces to stating that the variance of increases in proportion to , that is:

where is the mode of the value representation before any effort has been allocated, and controls the relationship between the amount of allocated resources and the variance of the perturbation term . The higher the parameter , the greater the expected perturbation of the mode for a given amount of allocated resources. In what follows, we will refer to as the ‘type #2 effort efficacy’. Note that Equation 6 treats the impact of future information processing as a non-specific random perturbation on the mode of the prior value representation. Our justification for this assumption is twofold: (i) it is simple, and (ii) and it captures the idea that the MCD controller does not know how the allocated resources will be used (here, by the value-based decision system downstream). We will see that, in spite of this, the MCD controller can still make quantitative predictions regarding the expected benefit of allocating resources.

Taken together, Equations 5 and 6 imply that predicting the net effect of allocating resources onto choice confidence is not trivial. On one hand, allocating effort will increase the precision of value representations (cf. Equation 5), which mechanically increases choice confidence, all other things being equal. On the other hand, allocating effort can either increase or decrease the absolute difference between the modes. This, in fact, depends upon the sign of the perturbation terms , which are not known in advance. Having said this, it is possible to derive the expected absolute difference between the modes that would follow from allocating an amount of resources:

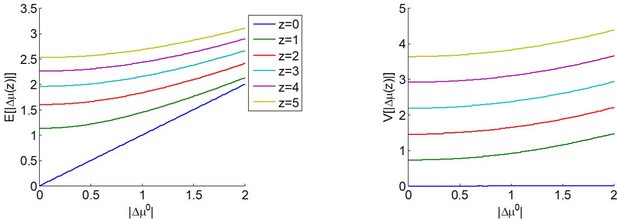

where we have used the expression for the first-order moment of the so-called 'folded normal distribution', and the second term in the right-hand side of Equation 7 derives from the same moment-matching approximation to the Gaussian cumulative density function as above. The expected absolute means' difference depends upon both the absolute prior mean difference and the amount of allocated resources . This is depicted in Figure 2.

The expected impact of allocated resources onto value representations.

Left panel: the expected absolute mean difference (y-axis) is plotted as a function of the absolute prior mean difference (x-axis) for different amounts of allocated resources (color code), having set type #2 effort efficacy to unity (i.e. ). Right panel: Variance of the absolute mean difference; same format.

One can see that is always greater than 0 and increases with (and if , then ). In other words, allocating resources is expected to increase the value difference, despite the fact that the impact of the perturbation term can go either way. In addition, the expected gain in value difference afforded by allocating resources decreases with the absolute prior means' difference.

Similarly, the variance of the absolute means' difference is derived from the expression of the second-order moment of the corresponding folded normal distribution:

One can see in Figure 2 that increases with the amount of allocated resources (but if , then ).

Knowing the moments of the distribution of now enables us to derive the expected confidence level that would result from allocating the amount of resource :

where we have assumed, for the sake of conciseness, that both prior value representations are similarly uncertain (i.e., ). It turns out that the expected choice confidence always increase with , irrespective of the efficacy parameters, as long as or . These, however, control the magnitude of the confidence gain that can be expected from allocating an amount of resources. Equation 9 is important, because it quantifies the expected benefit of resource allocation, before having processed the ensuing value-relevant information. More details regarding the accuracy of Equation 9 can be found in section 1 of the Appendix. In addition, section 2 of the Appendix summarizes the dependence of MCD-optimal choice confidence on and .

To complete the cost–benefit model, we simply assume that the cost of allocating resources to the decision process linearly scales with the amount of resources, that is:

where determines the effort cost of allocating a unitary amount of resources . In what follows, we will refer to as the ‘effort unitary cost’. We note that weak nonlinearities in the cost function (e.g., quadratic terms) would not qualitatively change the model predictions.

In brief, the MCD-optimal resource allocation is simply given by:

which does not have any closed-form analytic solution. Nevertheless, it can easily be identified numerically, having replaced Equations 7–9 into Equation 11. We refer the readers interested in the impact of model parameters on the MCD-optimal control to section 2 of the Appendix.

At this point, we do not specify how Equation 11 is solved by neural networks in the brain. Many alternatives are possible, from gradient ascent (Seung, 2003) to winner-take-all competition of candidate solutions (Mao and Massaquoi, 2007). We will also comment on the specific issue of prospective (offline) versus reactive (online) MCD processes in the Discussion section.

Note: implicit in the above model derivation is the assumption that the allocation of resources is similar for both alternative options in the choice set (i.e. ). This simplifying assumption is justified by eye-tracking data (cf. section 8 of the Appendix).

Corollary predictions of the MCD model

In the previous section, we derived the MCD-optimal resource allocation , which effectively best balances the expected choice confidence with the expected effort costs, given the predictable impact of stochastic perturbations that arise from processing value-relevant information. This quantitative prediction is effectively shown in Figures 5 and 6 of the Results section below, as a function of (empirical proxies for) the prior absolute difference between modes and the prior certainty of value representations. But, this resource allocation mechanism has a few interesting corollary implications.

To begin with, note that knowing enables us to predict what confidence level the system should eventually reach. In fact, one can define the MCD-optimal confidence level as the expected confidence evaluated at the MCD-optimal amount of allocated resources, that is, . This is important, because it implies that the model can predict both the effort the system will invest and its associated confidence, on a decision-by-decision basis. The impact of the efficacy parameters on this quantitative prediction is detailed in section 2 of the Appendix.

Additionally, determines the expected improvement in the certainty of value representations (hereafter: the ‘certainty gain’), which trivially relates to type #2 efficacy, that is: . This also means that, under the MCD model, no choice-induced value certainty gain can be expected when .

Similarly, one can predict the MCD-optimal probability of changing one's mind. Recall that the probability of changing one's mind depends on the amount of allocated resources , that is:

One can see that the MCD-optimal probability of changing one's mind is a simple monotonic function of the allocated effort . Importantly, when . This implies that MCD agents do not change their minds when effort cannot change the relative position of the modes of the options’ value representations (irrespective of type #1 effort efficacy). In retrospect, this is critical, because there should be no incentive to invest resources in deliberation if it would not be possible to change one’s pre-deliberation preference.

Lastly, we can predict the magnitude of choice-induced preference change, that is, how value representations are supposed to spread apart during the decision. Such an effect is typically measured in terms of the so-called ‘spreading of alternatives’ or SoA, which is defined as follows:

where is the cumulative perturbation term of the modes' difference. Taking the expectation of the right-hand term of Equation 13 under the distribution of and evaluating it at now yields the MCD-optimal spreading of alternatives :

where the last line derives from the expression of the first-order moment of the truncated Gaussian distribution. Note that the expected preference change also increases monotonically with the allocated effort . Here again, under the MCD model, no preference change can be expected when .

We note that all of these corollary predictions essentially capture choice-induced modifications of value representations. This is why we will refer to choice confidence, value certainty gain, change of mind, and spreading of alternatives as ‘decision-related’ variables.

Correspondence between model variables and empirical measures

In summary, the MCD model predicts cognitive effort (or, more properly, the amount of allocated resources) and decision-related variables, given the prior absolute difference between modes and the prior certainty of value representations. In other words, the inputs to the MCD model are the prior moments of value representations, whose trial-by-trial variations determine variations in model predictions. Here, we simply assume that pre-choice value and value certainty ratings provide us with an approximation of these prior moments. More precisely, we use ΔVR0 and VCR0 (cf. section 3.3 below) as empirical proxies for and , respectively. Accordingly, we consider post-choice value and value certainty ratings as empirical proxies for the posterior mean and precision of value representations, at the time when the decision was triggered (i.e., after having invested the effort ). Similarly, we match expected choice confidence (i.e., after having invested the effort ) with empirical choice confidence.

Note that the MCD model does not specify what the allocated resources are. In principle, both mnesic and attentional resources may be engaged when processing value-relevant information. Nevertheless, what really matters is assessing the magnitude of decision-related effort. We think of as the cumulative engagement of neurocognitive resources, which varies both in terms of duration and intensity. Empirically, we relate to two different ‘effort-related’ empirical measures, namely subjective feeling of effort and response time. The former relies on the subjective cost incurred when deploying neurocognitive resources, which would be signaled by experiencing mental effort. The latter makes sense if one thinks of response time in terms of effort duration. Although it is a more objective measurement than subjective rating of effort, response time only approximates if effort intensity shows relatively small variations. We will comment on this in the Discussion section.

Finally, the MCD model is also agnostic about the definition of ‘decision importance’, that is, the weight in Equation 2. In this work, we simply investigate the effect of decision importance by comparing subjective effort and response time in ‘neutral’ versus ‘consequential’ decisions (cf. section 'Task conditions' below). We will also comment on this in the Discussion section.

Materials and methods

Participants

Participants for our study were recruited from the RISC (Relais d’Information sur les Sciences de la Cognition) subject pool through the ICM (Institut du Cerveau et de la Moelle – Paris Brain Institute). All participants were native French speakers, with no reported history of psychiatric or neurological illness. A total of 41 people (28 female; age: mean = 28, SD = 5, min = 20, max = 40) participated in this study. The experiment lasted approximately 2 hr, and participants were paid a flat rate of 20€ as compensation for their time, plus a bonus, which was given to participants to compensate for potential financial losses in the ‘penalized’ trials (see below). More precisely, in ‘penalized’ trials, participants lost 0.20€ (out of a 5€ bonus) for each second that they took to make their choice. This resulted in an average 4€ bonus (across participants). One group of 11 participants was excluded from the cross-condition analysis only (see below) due to technical issues.

Materials

Written instructions provided detailed information about the sequence of tasks within the experiment, the mechanics of how participants would perform the tasks, and images illustrating what a typical screen within each task section would look like. The experiment was developed using Matlab and PsychToolbox, and was conducted entirely in French. The stimuli for this experiment were 148 digital images, each representing a distinct food item (50 fruits, 50 vegetables, and 48 various snack items including nuts, meats, and cheeses). Food items were selected such that most items would be well known to most participants.

Eye gaze position and pupil size were continuously recorded throughout the duration of the experiment using The Eye Tribe eye-tracking devices. Participants’ head positions were fixed using stationary chinrests. In case of incidental movements, we corrected the pupil size data for distance to screen, separately for each eye.

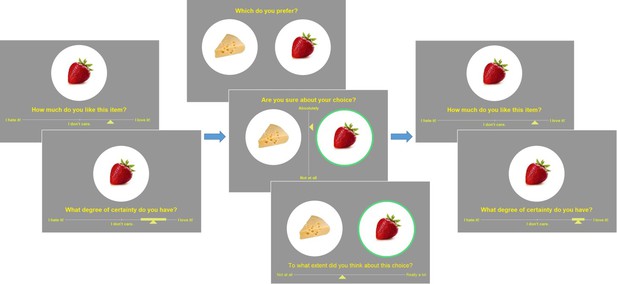

Task design

Prior to commencing the testing session of the experiment, participants underwent a brief training session. The training tasks were identical to the experimental tasks, although different stimuli were used (beverages). The experiment itself began with an initial section where all individual items were displayed in a random sequence for 1.5 s each, in order to familiarize the participants with the set of options they would later be considering and form an impression of the range of subjective value for the set. The main experiment was divided into three sections, following the classic Free-Choice Paradigm protocol (e.g., Izuma and Murayama, 2013): pre-choice item ratings, choice, and post-choice item ratings. There was no time limit for the overall experiment, nor for the different sections, nor for the individual trials. The item rating and choice sections are described below (see Figure 3).

Experimental design.

Left: pre-choice item rating session: participants are asked to rate how much they like each food item and how certain they are about it (value certainty rating). Center: choice session: participants are asked to choose between two food items, to rate how confident they are about their choice, and to report the feeling of effort associated with the decision. Right: post-choice item rating session (same as pre-choice item rating session).

Item rating (same for pre-choice and post-choice sessions)

Request a detailed protocolParticipants were asked to rate the entire set of items in terms of how much they liked each item. The items were presented one at a time in a random sequence (pseudo-randomized across participants). At the onset of each trial, a fixation cross appeared at the center of the screen for 750 ms. Next, a solitary image of a food item appeared at the center of the screen. Participants had to respond to the question, ‘How much do you like this item?’ using a horizontal slider scale (from ‘I hate it!’ to ‘I love it!”) to indicate their value rating for the item. The middle of the scale was the point of neutrality (‘I don’t care about it.”). Hereafter, we refer to the reported value as the ‘pre-choice value rating’. Participants then had to respond to the question, ‘What degree of certainty do you have?’ (about the item’s value) by expanding a solid bar symmetrically around the cursor of the value slider scale to indicate the range of possible value ratings that would be compatible with their subjective feeling. We measured participants' certainty about value rating in terms of the percentage of the value scale that is not occupied by the reported range of compatible value ratings. We refer to this as the ‘pre-choice value certainty rating’. At that time, the next trial began.

Note

Request a detailed protocolIn the Results section below, ΔVR0 is the difference between pre-choice value ratings of items composing a choice set. Similarly, VCR0 is the average pre-choice value certainty ratings across items composing a choice set. Both value and value certainty rating scales range from 0 to 1 (but participants were unaware of the quantitative units of the scales).

Choice

Request a detailed protocolParticipants were asked to choose between pairs of items in terms of which item they preferred. The entire set of items was presented one pair at a time in a random sequence. Each item appeared in only one pair. At the onset of each trial, a fixation cross appeared at the center of the screen for 750 ms. Next, two images of snack items appeared on the screen: one toward the left and one toward the right. Participants had to respond to the question, ‘Which do you prefer?’ using the left or right arrow key. We measured response time in terms of the delay between the stimulus onset and the response. Participants then had to respond to the question, ‘Are you sure about your choice?’ using a vertical slider scale (from ‘Not at all!’ to ‘Absolutely!'). We refer to this as the report of choice confidence. Finally, participants had to respond to the question, ‘To what extent did you think about this choice?’ using a horizontal slider scale (from ‘Not at all!’ to ‘Really a lot!”). We refer to this as the report of subjective effort. At that time, the next trial began.

Task conditions

Request a detailed protocolWe partitioned the task trials into three conditions, which were designed to test the following two predictions of the MCD model: all else equal, effort should increase with decision importance and decrease with related costs. We aimed to check the former prediction by asking participants to make some decisions where they knew that the choice would be real, that is, they would actually have to eat the chosen food item at the end of the experiment. We refer to these trials as ‘consequential’ decisions. To check the latter prediction, we imposed a financial penalty that increased with response time. More precisely, participants were instructed that they would lose 0.20€ (out of a 5€ bonus) for each second that they would take to make their choice. The choice section of the experiment was composed of 60 ‘neutral’ trials, 7 ‘consequential’ trials, and 7 ‘penalized’ trials, which were randomly intermixed. Instructions for both ‘consequential’ and ‘penalized’ decisions were repeated at each relevant trial, immediately prior to the presentation of the choice items.

Probabilistic model fit

Request a detailed protocolThe MCD model predicts trial-by-trial variations in the probability of changing one’s mind, choice confidence, spreading of alternatives, certainty gain, response time, and subjective effort ratings (MCD outputs) from trial-by-trial variations in value rating difference ΔVR0 and mean value certainty rating VCR0 (MCD inputs). Together, three unknown parameters control the quantitative relationship between MCD inputs and outputs: the effort unitary cost , type #1 effort efficacy , and type #2 effort efficacy . However, additional parameters are required to capture variations induced by experimental conditions. Recall that we expect ‘consequential’ decisions to be more important than ‘neutral’ ones, and ‘penalized’ decisions effectively include an extraneous cost-of-time term. One can model the former condition effect by making (cf. Equation 2) sensitive to whether the decision is consequential or not. We proxy the latter condition effect by making the effort unitary cost a function of whether the decision is penalized (where effort induces both intrinsic and extrinsic costs) or not (intrinsic effort cost only). This means that condition effects require one additional parameter each.

In principle, all of these parameters may vary across people, thereby capturing idiosyncrasies in people’s (meta-)cognitive apparatus. However, in addition to estimating these five parameters, fitting the MCD model to each participant’s data also requires a rescaling of the model’s output variables. This is because there is no reason to expect the empirical measure of these variables to match their theoretical scale. We thus inserted two additional nuisance parameters per output MCD variable, which operate a linear rescaling (affine transformation, with a positive constraint on slope parameters). Importantly, these nuisance parameters cannot change the relationship between MCD inputs and outputs. In other terms, the MCD model really has only five degrees of freedom.

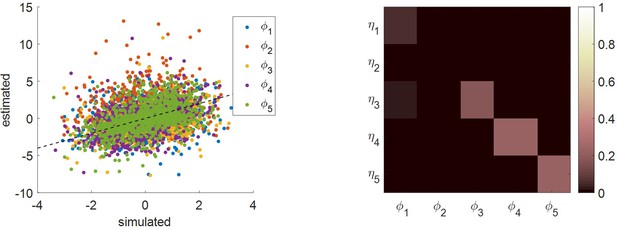

For each subject, we fit all MCD dependent variables concurrently with a single set of MCD parameters. Within-subject probabilistic parameter estimation was performed using the variational Laplace approach (Daunizeau, 2017b; Friston et al., 2007), which is made available from the VBA toolbox (Daunizeau et al., 2014). We refer the reader interested in the mathematical details of within-subject MCD parameter estimation to the section 3 of the Appendix (this also includes a parameter recovery analysis). In what follows, we compare empirical data to MCD-fitted dependent variables (when binned according to ΔVR0 and VCR0). We refer to the latter as ‘postdictions’, in the sense that they derive from a posterior predictive density that is conditional on the corresponding data.

We also fit the MCD model on reduced subsets of dependent variables (e.g., only ‘effort-related’ variables), and report proper out-of-sample predictions of data that were not used for parameter estimation (e.g., ‘decision-related’ variables). We note that this is a strong test of the model, since it does not rely on any train/test partition of the predicted variable (see next section below).

Results

Here, we test the predictions of the MCD model. We note that basic descriptive statistics of our data, including measures of test–retest reliability and replications of previously reported effects on confidence in value-based choices (De Martino et al., 2013), are appended in sections 5–7 of the Appendix.

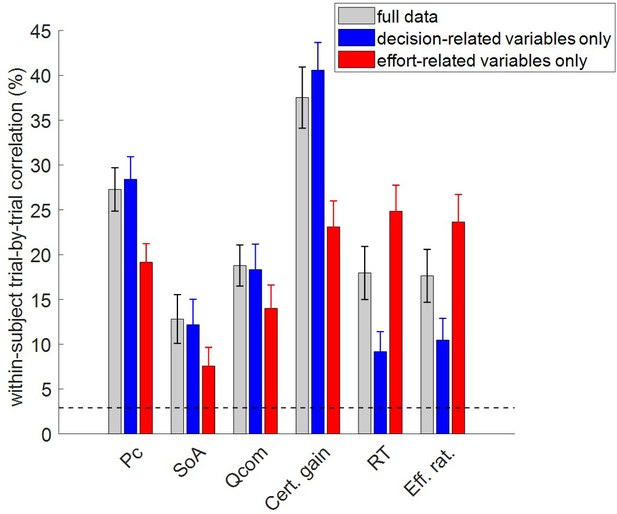

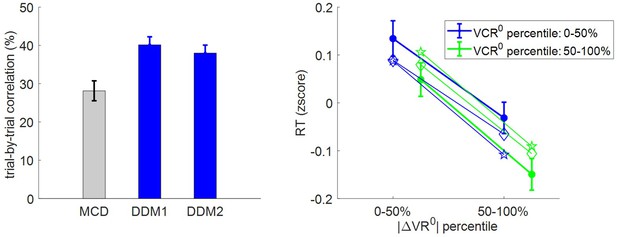

Within-subject model fit accuracy and out-of-sample predictions

To capture idiosyncrasies in participants’ metacognitive control of decisions, the MCD model was fitted to subject-specific trial-by-trial data, where all MCD outputs (namely change of mind, choice confidence, spreading of alternatives, value certainty gain, response time, and subjective effort ratings) were considered together. In the next section, we present summary statistics at the group level, which validate the predictions that can be derived from the MCD model, when fitted to all dependent variables. But can we provide even stronger evidence that the MCD model is capable of predicting all dependent variables at once? In particular, can the model make out-of-sample predictions regarding effort-related variables (i.e., RT and subjective effort ratings) given decision-related variables (i.e., choice confidence, change of mind, spreading of alternatives, and certainty gain), and vice versa?

To address this question, we performed two partial model fits: (i) with decision-related variables only, and (ii) with effort-related variables only. In both cases, out-of-sample predictions for the remaining dependent variables were obtained directly from within-subject parameter estimates. For each subject, we then estimated the cross-trial correlation between each pair of observed and predicted variables. Figure 4 reports the ensuing group-average correlations, for each dependent variable and each model fit. In this context, the predictions derived when fitting the full dataset only serve as a reference point for evaluating the accuracy of out-of-sample predictions. For completeness, we also show chance-level prediction accuracy (i.e. the 95% percentile of group average correlations between observed and predicted variables under the null).

Accuracy of model postdictions and out-of-sample predictions.

The mean within-subject (across-trial) correlation between observed and predicted/postdicted data (y-axis) is plotted for each variable (x-axis, from left to right: choice confidence, spreading of alternatives, change of mind, certainty gain, RT and subjective effort ratings), and each fitting procedure (gray: full data fit, blue: decision-related variables only, and red: effort-related variables only). Error bars depict standard error of the mean, and the horizontal dashed black line shows chance-level prediction accuracy.

In what follows, we refer to model predictions on dependent variables that were actually fitted by the model as ‘postdictions’ (full data fits: all dependent variables, partial model fits: variables included in the fit). As one would expect, the accuracy of postdictions is typically higher than that of out-of-sample predictions. Slightly more interesting, perhaps, is the fact that the accuracy of model predictions/postdictions depends upon which output variable is considered. For example, choice confidence is always better predicted/postdicted than spreading of alternatives. This is most likely because the latter data has lower reliability.

But the main result of this analysis is the fact that out-of-sample predictions of dependent variables perform systematically better than chance. In fact, all across-trial correlations between observed and predicted (out-of-sample) data were statistically significant at the group-level (all p<10−3). In particular, this implies that the MCD model makes accurate out-of-sample predictions regarding effort-related variables given decision-related variables, and reciprocally.

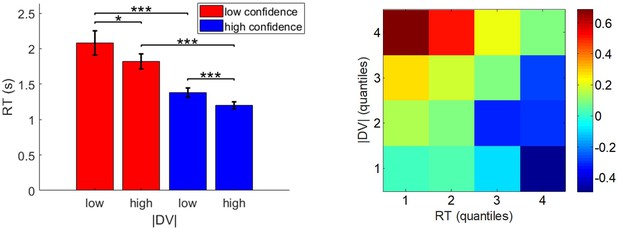

Predicting effort-related variables

In what follows, we inspect the three-way relationships between pre-choice value and value certainty ratings and each effort-related variable: namely, RT and subjective effort rating. The former can be thought of as a proxy for the duration of resource allocation, whereas the latter is a metacognitive readout of resource allocation cost. Unless stated otherwise, we will focus on both the absolute difference between pre-choice value ratings (hereafter: |ΔVR0|) and the mean pre-choice value certainty rating across paired choice items (hereafter: VCR0). Under the MCD model, increasing |ΔVR0| and/or VCR0 will decrease the demand for effort, which should result in smaller expected RT and subjective effort rating. We will now summarize the empirical data and highlight the corresponding quantitative MCD model postdictions and out-of-sample predictions (here: predictions are derived from model fits on decision-related variables only, that is, all dependent variables except RT and subjective effort rating).

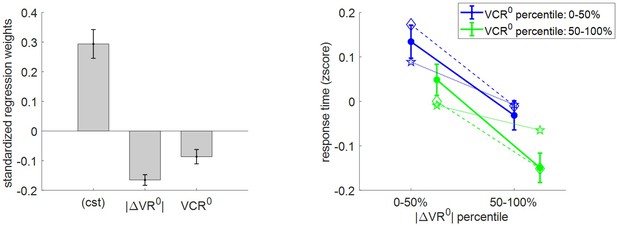

First, we checked how RT relates to pre-choice value and value certainty ratings. For each subject, we regressed (log-) RT data against |ΔVR0| and VCR0, and then performed a group-level random-effect analysis on regression weights. The results of this model-free analysis provide a qualitative summary of the impact of trial-by-trial variations in pre-choice value representations on RT. We also compare RT data with both MCD model postdictions (full data fit) and out-of-sample predictions. In addition to summarizing the results of the model-free analysis, Figure 5 shows the empirical, predicted, and postdicted RT data, when median-split (within subjects) according to both |ΔVR0| and VCR0.

Three-way relationship between RT, value, and value certainty.

Left panel: Mean standardized regression weights for |ΔVR0| and VCR0 on log-RT (cst is the constant term); error bars represent s.e.m. Right panel: Mean z-scored log-RT (y-axis) is shown as a function of |ΔVR0| (x-axis) and VCR0 (color code: blue = 0–50% lower quantile, green = 50–100% upper quantile); solid lines indicate empirical data (error bars represent s.e.m.), star-dotted lines show out-of-sample predictions and diamond-dashed lines represent model postdictions.

One can see that RT data behave as expected under the MCD model, that is, RT decreases when |ΔVR0|and/or VCR0 increases. The random effect analysis shows that both variables have a significant negative effect at the group level (|ΔVR0|: mean standardized regression weight = −0.16, s.e.m. = 0.02, p<10−3; VCR0: mean standardized regression weight = −0.08, s.e.m. = 0.02, p<10−3; one-sided t-tests). Moreover, MCD postdictions are remarkably accurate at capturing the effect of both |ΔVR0|and VCR0 variables in a quantitative manner. Although MCD out-of-sample predictions are also very accurate, they tend to slightly underestimate the quantitative effect of |ΔVR0|. This is because this effect is typically less pronounced in decision-related variables than in effort-related variables (see below), which then yield MCD parameter estimates that eventually attenuate the impact of |ΔVR0| on effort.

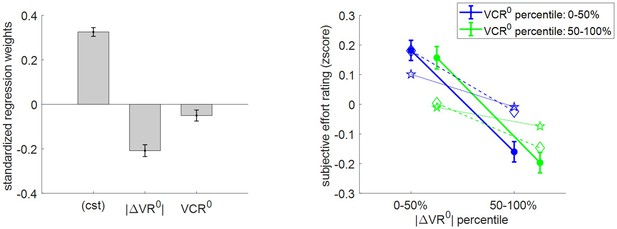

Second, we checked how subjective effort ratings relate to pre-choice value and value certainty ratings. We performed the same analysis as above, the results of which are summarized in Figure 6.

Three-way relationship between subjective effort rating, value, and value certainty.

Same format as Figure 5.

Here as well, subjective effort rating data behave as expected under the MCD model, that is, subjective effort decreases when |ΔVR0| and/or VCR0 increases. The random effect analysis shows that both variables have a significant negative effect at the group level (|ΔVR0|: mean standardized regression weight = −0.21, s.e.m. = 0.03, p<10−3; VCR0: mean regression weight = −0.05, s.e.m. = 0.02, p=0.027; one-sided t-tests). One can see that MCD postdictions and out-of-sample predictions accurately capture the effect of both |ΔVR0|and VCR0 variables. More quantitatively, we note that MCD postdictions slightly overestimate the effect VCR0, whereas out-of-sample predictions also tend to underestimate the effect of |ΔVR0|.

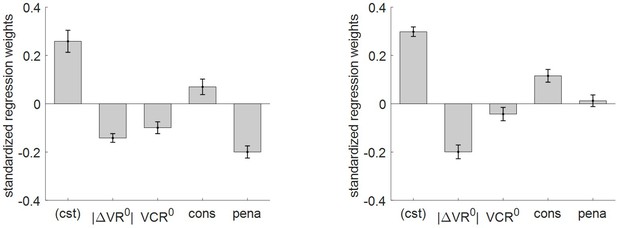

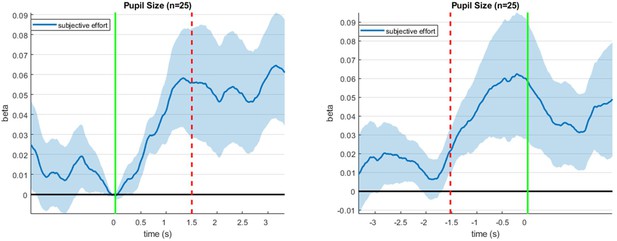

At this point, we note that the MCD model makes two additional predictions regarding effort-related variables, which relate to our task conditions. In brief, all else equal, effort should increase in ‘consequential’ trials, while it should decrease in ‘penalized’ trials. To test these predictions, we modified the model-free regression analysis of RT and subjective effort ratings by including two additional subject-level regressors, encoding consequential and penalized trials, respectively. Figure 7 shows the ensuing augmented set of standardized regression weights for both RT and subjective effort ratings.

Impact of consequential and penalized conditions on effort-related variables.

Left panel: log-RT: mean standardized regression weights (same format as Figure 4 – left panel, cons = ‘consequential’ condition, pena = ‘penalized’ condition). Right panel: subjective effort ratings: same format as left panel.

First, we note that accounting for task conditions does not modify the statistical significance of the impact of |ΔVR0| and VCR0 on effort-related variables, except for the effect of VCR0 on subjective effort ratings (p=0.09, one-sided t-test). Second, one can see that the impact of ‘consequential’ and ‘penalized’ conditions on effort-related variables globally conforms to MCD predictions. More precisely, both RT and subjective effort ratings were significantly higher for ‘consequential’ decisions than for ‘neutral’ decisions (log-RT: mean standardized regression weight = 0.07, s.e.m. = 0.03, p=0.036; effort ratings: mean standardized regression weight = 0.12, s.e.m. = 0.03, p<10−3; one-sided t-tests). In addition, response times are significantly faster for ‘penalized’ than for ‘neutral’ decisions (mean standardized regression weight = −0.26, s.e.m. = 0.03, p<10−3; one-sided t-test). However, the difference in subjective effort ratings between ‘neutral’ and ‘penalized’ decisions does not reach statistical significance (mean effort difference = 0.012, s.e.m. = 0.024, p=0.66; two-sided t-test). We will comment on this in the Discussion section.

Predicting decision-related variables

Under the MCD model, ‘decision-related’ dependent variables (i.e., choice confidence, change of mind, spreading of alternatives, and value certainty gain) are determined by the amount of resources allocated to the decision. However, their relationship to features of prior value representation is not trivial (see section 2 of the Appendix for the specific case of choice confidence). For this reason, we will recapitulate the qualitative MCD prediction that can be made about each of them, prior to summarizing the empirical data and its corresponding postdictions and out-of-sample predictions. Note that here, the latter are obtained from a model fit on effort-related variables only.

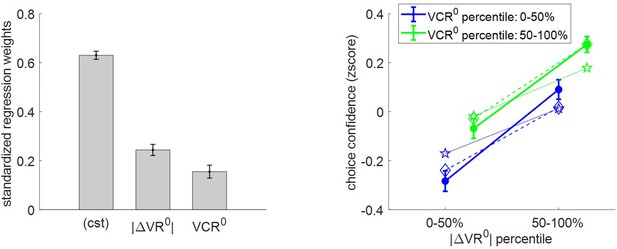

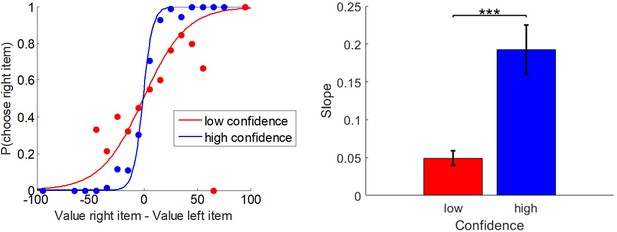

First, we checked how choice confidence relates to |ΔVR0| and VCR0. Under the MCD model, choice confidence reflects the discriminability of the options’ value representations after optimal resource allocation. Recall that more resources are allocated to the decision when either |ΔVR0| or VCR0 decreases. However, under moderate effort efficacies, this does not overcompensate decision difficulty, and thus choice confidence should decrease. As with effort-related variables, we regressed trial-by-trial confidence data against |ΔVR0| and VCR0, and then performed a group-level random-effect analysis on regression weights. The results of this analysis, as well as the comparison between empirical, predicted, and postdicted confidence data are shown in Figure 8.

Three-way relationship between choice confidence, value, and value certainty.

Same format as Figure 5.

The results of the group-level random effect analysis confirm our qualitative predictions. In brief, both |ΔVR0| (mean standardized regression weight = 0.25, s.e.m. = 0.02, p<10−3; one-sided t-test) and VCR0 (mean standardized regression weight = 0.16, s.e.m. = 0.03, p<10−3; one-sided t-test) have a significant positive impact on choice confidence. Here again, MCD postdictions and out-of-sample predictions are remarkably accurate at capturing the effect of both |ΔVR0|and VCR0 variables (though predictions slightly underestimate the effect of |ΔVR0|).

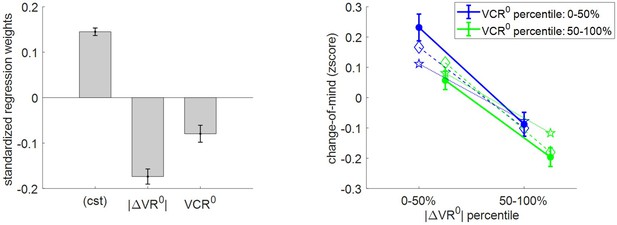

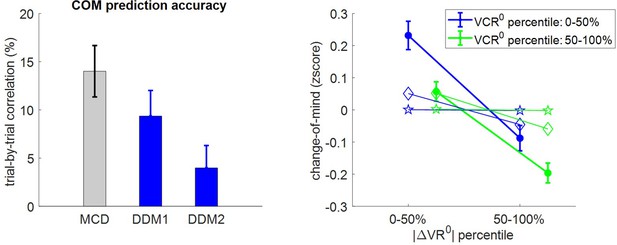

Second, we checked how change of mind relates to |ΔVR0| and VCR0. Note that we define a change of mind according to two criteria: (i) the choice is incongruent with the prior preference inferred from the pre-choice value ratings, and (ii) the choice is congruent with the posterior preference inferred from post-choice value ratings. The latter criterion distinguishes a change of mind from a mere ‘error’, which may arise from attentional and/or motor lapses. Under the MCD model, we expect no change of mind unless type #2 efficacy . In addition, the rate of change of mind should decrease when either |ΔVR0| or VCR0 increases. This is because increasing |ΔVR0| and/or VCR0 will decrease the demand for effort, which implies that the probability of reversing the prior preference will be smaller. Figure 9 shows the corresponding model predictions/postdictions and summarizes the corresponding empirical data.

Three-way relationship between change of mind, value, and value certainty.

Same format as Figure 5.

Note that, on average, the rate of change of mind reaches about 14.5% (s.e.m. = 0.008, p<10−3, one-sided t-test), which is significantly higher than the rate of ‘error’ (mean rate difference = 2.3%, s.e.m. = 0.01, p=0.032; two-sided t-test). The results of the group-level random effect analysis confirm our qualitative MCD predictions. In brief, both |ΔVR0| (mean standardized regression weight = −0.17, s.e.m. = 0.02, p<10−3; one-sided t-test) and VCR0 (mean standardized regression weight = −0.08, s.e.m. = 0.03, p<10−3; one-sided t-test) have a significant negative impact on the rate of change of mind. Again, MCD postdictions and out-of-sample predictions are remarkably accurate at capturing the effect of both |ΔVR0|and VCR0 variables (though predictions slightly underestimate the effect of |ΔVR0|).

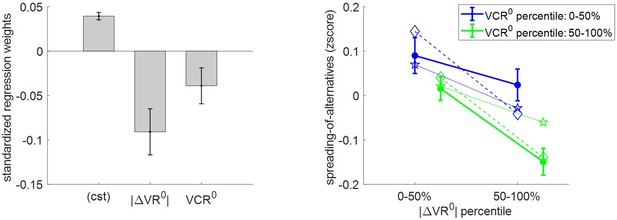

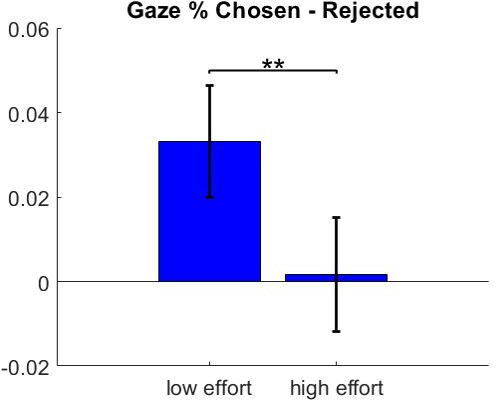

Third, we checked how spreading of alternatives relates to |ΔVR0| and VCR0. Recall that spreading of alternatives measures the magnitude of choice-induced preference change. Under the MCD model, the reported value of alternative options cannot spread apart unless type #2 efficacy . In addition, and as with change of mind, spreading of alternatives should globally follow the optimal effort allocation, that is, it should decrease when |ΔVR0| and/or VCR0 increase. Figure 10 shows the corresponding model predictions/postdictions and summarizes the corresponding empirical data.

Three-way relationship between spreading of alternatives, value, and value certainty.

Same format as Figure 5.

One can see that there is a significant positive spreading of alternatives (mean = 0.04 A.U., s.e.m. = 0.004, p<10−3, one-sided t-test). This is reassuring, because it dismisses the possibility that (which would mean that effort does not perturb the mode of value representations). In addition, the results of the group-level random effect analysis confirm that both |ΔVR0| (mean standardized regression weight = −0.09, s.e.m. = 0.03, p=0.001; one-sided t-test) and VCR0 (mean standardized regression weight = −0.04, s.e.m. = 0.02, p=0.03; one-sided t-test) have a significant negative impact on spreading of alternatives. Note that this replicates previous findings on choice-induced preference change (Lee and Coricelli, 2020; Lee and Daunizeau, 2020). Finally, MCD postdictions and out-of-sample predictions accurately capture the effect of both |ΔVR0| and VCR0 variables in a quantitative manner (though predictions slightly underestimate the effect of |ΔVR0|).

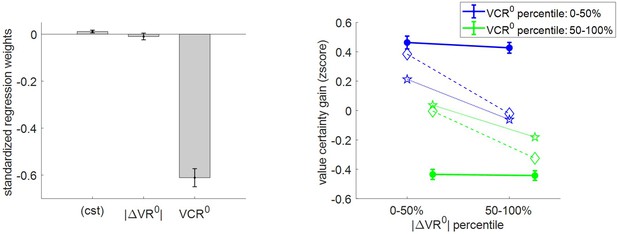

Fourth, we checked how |ΔVR0| and VCR0 impact value certainty gain. Under the MCD model, the certainty of value representations cannot improve unless type #1 efficacy . In addition, value certainty gain should globally follow the optimal effort allocation, i.e., it should decrease when |ΔVR0| and/or VCR0 increase. Figure 11 shows the corresponding model predictions/postdictions and summarizes the corresponding empirical data.

Three-way relationship between value certainty gain, value, and value certainty.

Same format as Figure 5.

Importantly, there is a small but significantly positive certainty gain (mean = 0.11 A.U., s.e.m. = 0.06, p=0.027, one-sided t-test). This is reassuring, because it dismisses the possibility that (which would mean that effort does not increase the precision of value representation). This time, the results of the group-level random effect analysis only partially confirm our qualitative MCD predictions. In brief, although VCR0 has a very strong negative impact on certainty gain (mean standardized regression weight = −0.61, s.e.m. = 0.04, p<10−3; one-sided t-test), the effect of |ΔVR0| does not reach statistical significance (mean standardized regression weight = −0.009, s.e.m. = 0.01, p=0.35; one-sided t-test). We note that a simple regression-to-the-mean artifact (Stigler, 1997) likely inflates the observed negative correlation between VCR0 and certainty gain, beyond what would be predicted under the MCD model. Accordingly, both MCD postdictions and out-of-sample predictions clearly underestimate the effect of VCR0 (and overestimate the effect of |ΔVR0|).

Discussion

In this work, we have presented a novel computational model of decision-making that explains the intricate relationships between effort-related variables (response time, subjective effort) and decision-related variables (choice confidence, change of mind, spreading of alternatives, and choice-induced value certainty gain). This model assumes that deciding between alternative options whose values are uncertain induces a demand for allocating cognitive resources to value-relevant information processing. Cognitive resource allocation then optimally trades mental effort for confidence, given the prior discriminability of the value representations.

Such metacognitive control of decisions or MCD provides an alternative theoretical framework to accumulation-to-bound models of decision-making, e.g., drift-diffusion models or DDMs (Milosavljevic et al., 2010; Ratcliff et al., 2016; Tajima et al., 2016). Recall that DDMs assume that decisions are triggered once the noisy evidence in favor of a particular option has reached a predefined bound. Standard DDM variants make quantitative predictions regarding both response times and decision outcomes, but are agnostic about choice confidence, spreading of alternatives, value certainty gain, and/or subjective effort ratings. We note that simple DDMs are significantly less accurate than MCD at making out-of-sample predictions on dependent variables common to both models (e.g., change of mind). We refer the reader interested in the details of the MCD–DDM comparison to section 9 of the Appendix.

But how do MCD and accumulation-to-bound models really differ? For example, if the DDM can be understood as an optimal policy for value-based decision-making (Tajima et al., 2016), then how can these two frameworks both be optimal? The answer lies in the distinct computational problems that they solve. The MCD solves the problem of finding the optimal amount of effort to invest under the possibility that yet-unprocessed value-relevant information might change the decision maker’s mind. In fact, this resource allocation problem would be vacuous, would it not be possible to reassess preferences during the decision process. In contrast, the DDM provides an optimal solution to the problem of efficiently comparing option values, which may be unreliably signaled, but remain nonetheless stationary. Of course, the DDM decision variable (i.e., the ‘evidence’ for a given choice option over the alternative) may fluctuate, e.g. it may first drift toward the upper bound, but then eventually reach the lower bound. This is the typical DDM’s explanation for why people change their mind over the course of deliberation (Kiani et al., 2014; Resulaj et al., 2009). But, critically, these fluctuations are not caused by changes in the underlying value signal (i.e., the DDM’s drift term). Rather, the fluctuations are driven by neural noise that corrupts the value signals (i.e., the DDM’s diffusion term). This is why the DDM cannot predict choice-induced preference changes, or changes in options’ values more generally. This distinction between MCD and DDM extends to other types of accumulation-to-bound models, including race models (De Martino et al., 2013; Tajima et al., 2019). We note that either of these models (DDM or race) could be equipped with pre-choice value priors (initial bias), and then driven with ‘true’ values (drift term) derived from post-choice ratings. But then, simulating these models would require both pre-choice and post-choice ratings, which implies that choice-induced preference changes cannot be predicted from pre-choice ratings using a DDM. In contrast, the MCD model assumes that the value representations themselves are modified during the decision process, in proportion to the effort expenditure. Now the latter is maximal when prior value difference is minimal, at least when type #2 efficacy dominates (γ-effect, see section 2 of the Appendix). In turn, the MCD model predicts that the magnitude of (choice-induced) value spreading should decrease when the prior value difference increases (cf. Equation 14). Together with (choice-induced) value certainty gain, this quantitative prediction is unique to the MCD framework, and cannot be derived from existing variants of DDM.

As a side note, the cognitive essence of spreading of alternatives has been debated for decades. Its typical interpretation is that of ‘cognitive dissonance’ reduction: if people feel uneasy about their choice, they later convince themselves that the chosen (rejected) item was actually better (worse) than they originally thought (Bem, 1967; Harmon-Jones et al., 2009; Izuma and Murayama, 2013). In contrast, the MCD framework would rather suggest that people tend to reassess value representations until they reach a satisfactory level of confidence prior to committing to their choice. Interestingly, recent neuroimaging studies have shown that spreading of alternatives can be predicted from brain activity measured during the decision (Colosio et al., 2017; Jarcho et al., 2011; Kitayama et al., 2013; van Veen et al., 2009, Voigt et al., 2019). This is evidence against the idea that spreading of alternatives only occurs after the choice has been made. In addition, key regions of the brain’s valuation and cognitive control systems are involved, including: the right inferior frontal gyrus, the ventral striatum, the anterior insula, and the anterior cingulate cortex (ACC). This further corroborates the MCD interpretation, under the assumption that the ACC is involved in controlling the allocation of cognitive effort (Musslick et al., 2015; Shenhav et al., 2013). Having said this, both MCD and cognitive dissonance reduction mechanisms may contribute to spreading of alternatives, on top of its known statistical artifact component (Chen and Risen, 2010). The latter is a consequence of the fact that pre-choice value ratings may be unreliable and is known to produce an apparent spreading of alternatives that decreases with pre-choice value difference (Izuma and Murayama, 2013). Although this pattern is compatible with our results, the underlying statistical confound is unlikely to drive our results. The reason is twofold. First, effort-related variables yield accurate within-subject out-of-sample predictions about spreading of alternatives (cf. Figure 10). Second, we have already shown that the effect of pre-choice value difference on spreading of alternatives is higher here than in a control condition where the choice is made after both rating sessions (Lee and Daunizeau, 2020).

A central tenet of the MCD model is that involving cognitive resources in value-related information processing is costly, which calls for an efficient resource allocation mechanism. A related notion is that information processing resources may be limited, in particular: value-encoding neurons may have a bounded firing range (Louie and Glimcher, 2012). In turn, ‘efficient coding’ theory assumes that the brain has evolved adaptive neural codes that optimally account for such capacity limitations (Barlow, 1961; Laughlin, 1981). In our context, efficient coding implies that value-encoding neurons should optimally adapt their firing range to the prior history of experienced values (Polanía et al., 2019). When augmented with a Bayesian model of neural encoding/decoding (Wei and Stocker, 2015), this idea was successful in explaining the non-trivial relationship between choice consistency and the distribution of subjective value ratings. Both MCD and efficient coding frameworks assume that value representations are uncertain, which stresses the importance of metacognitive processes in decision-making control (Fleming and Daw, 2017). However, they differ in how they operationalize the notion of efficiency. In efficient coding, the system is ‘efficient’ in the sense that it changes the physiological properties of value-encoding neurons to minimize the information loss that results from their limited firing range. In MCD, the system is ‘efficient’ in the sense that it allocates the amount of resources that optimally trades effort cost against choice confidence. These two perspectives may not be easy to reconcile. A possibility is to consider, for example, energy-efficient population codes (Hiratani and Latham, 2020; Yu et al., 2016), which would tune the amount of neural resources involved in representing value to optimally trade information loss against energetic costs.

Now, let us highlight that the MCD model offers a plausible alternative interpretation for the two main reported neuroimaging findings regarding confidence in value-based choices (De Martino et al., 2013). First, the ventromedial prefrontal cortex or vmPFC was found to respond positively to both value difference (i.e., ΔVR0) and choice confidence. Second, the right rostrolateral prefrontal cortex or rRLPFC was more active during low-confidence versus high-confidence choices. These findings were originally interpreted through a so-called ‘race model’, in which a decision is triggered whenever the first of option-specific value accumulators reaches a bound. Under this model, choice confidence is defined as the final gap between the two value accumulators. We note that this scenario predicts the same three-way relationship between response time, choice outcome, and choice confidence as the MCD model (see section 7 of the Appendix). In brief, rRLPFC was thought to perform a readout of choice confidence (for the purpose of subjective metacognitive report) from the racing value accumulators hosted in the vmPFC. Under the MCD framework, the contribution of the vmPFC to value-based decision control might rather be to construct item values, and to anticipate and monitor the benefit of effort investment (i.e., confidence). This would be consistent with recent fMRI studies suggesting that vmPFC confidence computations signal the attainment of task goals (Hebscher and Gilboa, 2016; Lebreton et al., 2015). Now, recall that the MCD model predicts that confidence and effort should be anti-correlated. Thus, the puzzling negative correlation between choice confidence and rRLPFC activity could be simply explained under the assumption that rRLPFC provides the neurocognitive resources that are instrumental for processing value-relevant information during decisions (and/or to compare item values). This resonates with the known involvement of rRLPFC in reasoning (Desrochers et al., 2015; Dumontheil, 2014) or memory retrieval (Benoit et al., 2012; Westphal et al., 2019).

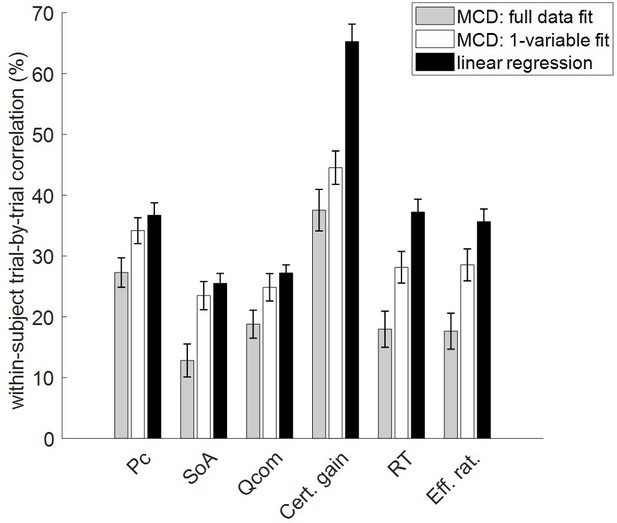

At this point, we note that the current MCD model clearly has limited predictive power. Arguably, this limitation is partly due to the imperfect reliability of the data, and to the fact that MCD does not model all decision-relevant processes. In addition, assigning variations in many effort- and/or decision-related variables to a unique mechanism with few degrees of freedom necessarily restricts the model’s expected predictive power. Nevertheless, the MCD model may also not yield a sufficiently tight approximation to the mechanism that it focuses on. In turn, it may unavoidably distort the impact of prior value representations and other decision input variables. The fact that it can only explain 81% of the variability in dependent variables that can be captured using simple linear regressions against ΔVR0 and VCR0 (see section 11 of the Appendix) supports this notion. A likely explanation here is that the MCD model includes constraints that prevent it from matching the model-free postdiction accuracy level. In turn, one may want to extend the MCD model with the aim of relaxing these constraints. For example, one may allow for deviations from the optimal resource allocation framework, e.g., by considering candidate systematic biases whose magnitudes would be controlled by specific additional parameters. Having said this, some of these constraints may be necessary, in the sense that they derive from the modeling assumptions that enable the MCD model to provide a unified explanation for all dependent variables (and thus make out-of-sample predictions). What follows is a discussion of what we perceive as the main limitations of the current MCD model, and the directions of improvement they suggest.

First, we did not specify what determines decision ‘importance’, which effectively acts as a weight for confidence against effort costs (cf. in Equation 2 of the Model section). We know from the comparison of ‘consequential’ and ‘neutral’ choices that increasing decision importance eventually increases effort, as predicted by the MCD model. However, decision importance may have many determinants, such as, for example, the commitment duration of the decision (e.g., life partner choices), the breadth of its repercussions (e.g., political decisions), or its instrumentality with respect to the achievement of superordinate goals (e.g., moral decisions). How these determinants are combined and/or moderated by the decision context is virtually unknown (Locke and Latham, 2002; Locke and Latham, 2006). In addition, decision importance may also be influenced by the prior (intuitive/emotional/habitual) appraisal of choice options. For example, we found that, all else equal, people spent more time and effort deciding between two disliked items than between two liked items (results not shown). This reproduces recent results regarding the evaluation of choice sets (Shenhav and Karmarkar, 2019). One may also argue that people should care less about decisions between items that have similar values (Oud et al., 2016). In other terms, decision importance would be an increasing function of the absolute difference in pre-choice value ratings. However, this would predict that people invest fewer resources when deciding between items of similar pre-choice values, which directly contradicts our results (cf. Figures 5 and 6). Importantly, options with similar values may still be very different from each other, when decomposed on some value-relevant feature space. For example, although two food items may be similarly liked and/or wanted, they may be very different in terms of, e.g., tastiness and healthiness, which would induce some form of decision conflict (Hare et al., 2009). In such a context, making a decision effectively implies committing to a preference about feature dimensions. This may be deemed to be consequential, when contrasted with choices between items that are similar in all regards. In turn, decision importance may rather be a function of options’ feature conflict. In principle, this alternative possibility is compatible with our results, under the assumption that options’ feature conflict is approximately orthogonal to pre-choice value difference. Considering how decision importance varies with feature conflict may significantly improve the amount of explained trial-by-trial variability in the model’s dependent variables. We note that the brain’s quick/automatic assessment of option features may also be the main determinant of the prior value representations that eventually serve to compute the MCD-optimal resource allocation. Probing these computational assumptions will be the focus of forthcoming publications.

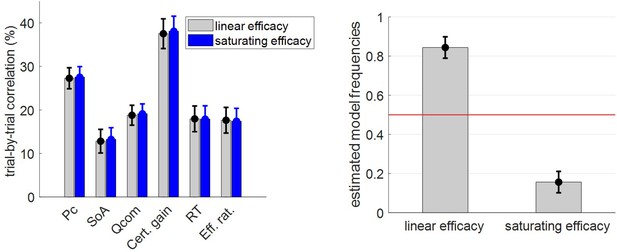

Second, our current version of the MCD model relies on a simple variant of resource costs and efficacies. One may thus wonder how sensitive model predictions are to these assumptions. For example, one may expect that type #2 efficacy saturates, i.e. that the magnitude of the perturbation to the modes of the value representations eventually reaches a plateau instead of growing linearly with (cf. Equation 6). We thus implemented and tested such a model variant. We report the results of this analysis in section 10 of the Appendix. In brief, a saturating type #2 efficacy brings no additional explanatory power for the model’s dependent variables. Similarly, rendering the cost term nonlinear (e.g., quadratic) does not change the qualitative nature of the MCD predictions. More problematic, perhaps, is the fact that we did not consider distinct types of effort, which could, in principle, be associated with different costs and/or efficacies. For example, the efficacy of allocating attention may depend upon which option is considered. In turn, the brain may dynamically refocus its attention on maximally uncertain options when prospective information gains exceed switch costs (Callaway et al., 2021; Jang et al., 2021). Such optimal adjustment of divided attention might eventually explain systematic decision biases and shortened response times for ‘default’ choices (Lopez-Persem et al., 2016). Another possibility is that effort might be optimized along two canonical dimensions, namely duration and intensity. The former dimension essentially justifies the fact that we used RT as a proxy for the amount of allocated resources. This is because, if effort intensity stays constant, then longer RT essentially signals greater resource expenditure. In fact, as is evident from the comparison between ‘penalized’ and ‘neutral’ choices, imposing an external penalty cost on RT reduces, as expected, the ensuing effort duration. More generally, however, the dual optimization of effort dimensions might render the relationship between effort and RT more complex. For example, beyond memory span or attentional load, effort intensity could be related to processing speed. This would explain why, although ‘penalized’ choices are made much faster than ‘neutral’ choices, the associated subjective feeling of effort is not as strongly impacted as RT (cf. Figure 7). In any case, the relationship between effort and RT might depend upon the relative costs and/or efficacies of effort duration and intensity, which might themselves be partially driven by external availability constraints (cf. time pressure or multitasking). We note that the essential nature of the cost of mental effort in cognitive tasks (e.g., neurophysiological cost, interferences cost, or opportunity cost) is still a matter of intense debate (Kurzban et al., 2013; Musslick et al., 2015; Ozcimder et al., 2017). Progress toward addressing this issue will be highly relevant for future extensions of the MCD model.

Third, we did not consider the issue of identifying plausible neuro-computational implementations of MCD. This issue is tightly linked to the previous one, in that distinct cost types would likely impose different constraints on candidate neural network architectures (Feng et al., 2014; Petri et al., 2017). For example, underlying brain circuits are likely to operate MCD in a more reactive manner, eventually adjusting resource allocation from the continuous monitoring of relevant decision variables (e.g., experienced costs and benefits). Such a reactive process contrasts with our current, prospective-only variant of MCD, which sets resource allocation based on anticipated costs and benefits. We already checked that simple reactive scenarios, where the decision is triggered whenever the online monitoring of effort or confidence reaches the optimal threshold, make predictions qualitatively similar to those we have presented here. We tend to think, however, that such reactive processes should be based on a dynamic programming perspective on MCD, as was already done for the problem of optimal efficient value comparison (Tajima et al., 2016; Tajima et al., 2019). We will pursue this and related neuro-computational issues in subsequent publications.

Code availability

The computer code and algorithms that support the findings of this study will soon be made available from the open academic freeware VBA (http://mbb-team.github.io/VBA-toolbox/). Until then, they are available from the corresponding author upon reasonable request.

Ethical compliance

This study complies with all relevant ethical regulations and received formal approval from the INSERM Ethics Committee (CEEI-IRB00003888, decision no 16–333). In particular, in accordance with the Helsinki declaration, all participants gave written informed consent prior to commencing the experiment, which included consent to disseminate the results of the study via publication.

Appendix 1

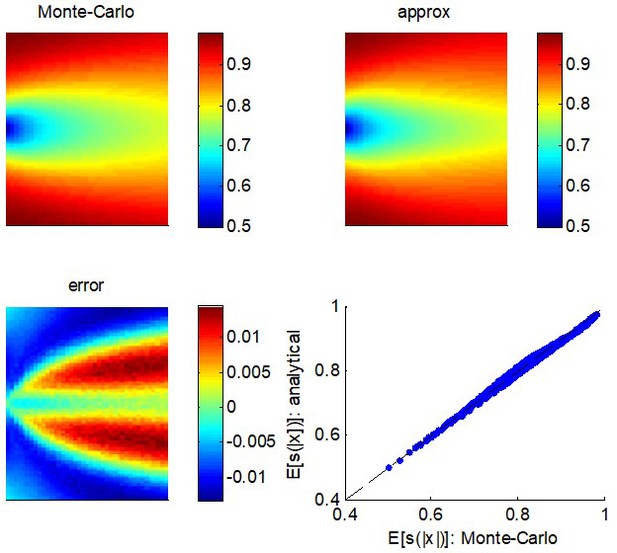

1. On the approximation accuracy of the expected confidence gain

The MCD model relies on the system's ability to anticipate the benefit of allocating resources to the decision process. Given the mathematical expression of choice confidence (Equation 4 in the main text), this reduces to finding an analytical approximation to the following expression:

where is the sigmoid mapping, is an arbitrary constant, and the expectation is taken under the Gaussian distribution of , whose mean and variance are µ and , respectively.

Note that the absolute value mapping follows a folded normal distribution, whose first two moments and have known expressions:

where the first line relies on a moment-matching approximation to the cumulative normal distribution function (Daunizeau, 2017a). This allows us to derive the following analytical approximation to Equation A1:

where setting makes this approximation tight (Daunizeau, 2017a).

The quality of this approximation can be evaluated by drawing samples of , and comparing the Monte-Carlo average of with the expression given in Equation A3. This is summarized in Appendix 1—figure 1, where the range of variation for the moments of was set as follows: and .

Quality of the analytical approximation to .

Upper left panel: the Monte-Carlo estimate of (color-coded) is shown as a function of both the mean (y-axis) and the variance (x-axis) of the parent process . Upper right panel: analytic approximation to as given by Equation A3 (same format). Lower left panel: the error, that is, the difference between the Monte-Carlo and the analytic approximation (same format). Lower right panel: the analytic approximation (y-axis) is plotted as a function of the Monte-Carlo estimate (x-axis) for each pair of moments of the parent distribution.

One can see that the error rarely exceeds 5%, across the whole range of moments of the parent distribution. This is how tight the analytic approximation of the expected confidence gain (Equation 9 in the main text) is.

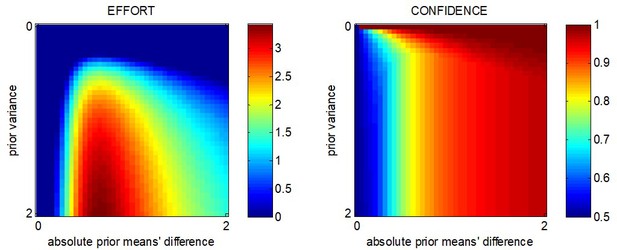

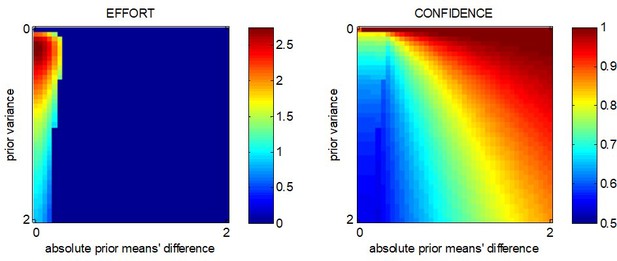

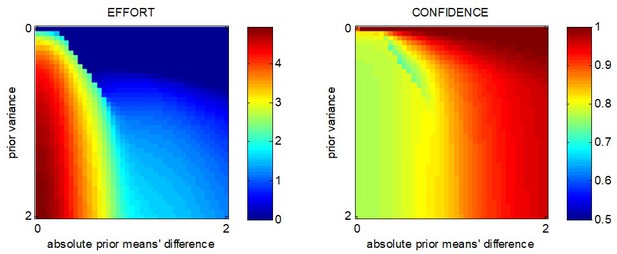

2. On the impact of model parameters for the MCD model

To begin with, note that the properties of the metacognitive control of decisions (in terms of effort allocation and/or confidence) actually depend on the demand for resources, which is itself determined by prior value representations (or, more properly, by the prior uncertainty and the absolute means' difference ). Now, the way the MCD-optimal control responds to the resource demand is determined by effort efficacy and unitary cost parameters. In addition, MCD-optimal confidence may not trivially follow resource allocation, because it may be overcompensated by choice difficulty.

First, recall that the amount of allocated resources maximizes the EVC:

where is given in Equation 9 in the main text. According to the implicit function theorem, the derivatives of w.r.t. and are given by Gould et al., 2016:

The double derivatives in Equations A5 are not trivial to obtain.

First, the gradient of choice confidence w.r.t. writes:

where is given by:

Note that the gradient in Equation A6 can be obtained analytically from Equation 7 in the main text. However, we refrain from doing this, because it is clear that deriving the right-hand term of Equation A6 w.r.t. both and will not bring any simple insight regarding the impact of onto .

Also, although the gradient of choice confidence wr.t. takes a much more concise form:

it still remains tedious to derive its expression with respect to both and . This is why we opt for separating the respective effects of type #1 and type #2 efficacies.

First, let us ask what would be the MCD-optimal effort and confidence when , that is, if the only effect of allocating resources is to increase the precision of value representations. We call this the 'β-effect'. In this case, and irrespective of . This greatly simplifies Equations A6–A8:

Inserting Equation A9 back into Equation A5 now yields:

Now the sign of the gradients of w.r.t. and are driven by the numerators of Equation A10 because all partial derivatives of have unambiguous signs:

Replacing the expression for in Equation A11 into Equation A10 now yields: